This section is aimed at a concise introduction to the history of Mancala games and one of their recent forms—Kalah. As the primary goal of the section, selected significant research results on playing and solving Kalah or some rules-related Mancala versions are reviewed, which gives rise to the collateral motivation of this paper.

2.2. Mancala Research Survey

As early as in 1964, Russell [

49,

50] presented two studies on playing Kalah on the computer. A partial self-learning computer program to demonstrate the game was developed by Bell in 1968 [

48]. Slagle and Dixon [

51] programmed several board-games, including Kalah, to illustrate and test their M&N algorithm two years later. From that time, no relevant result has been published until the end of the millennium. However, some end-game databases were built for Awari [

24], the rules of which are close to Kalah. The end-game database indicates whether a player wins, losses, or draws from any possible game position for any allowed move. The size of such a database depends on all possible positions expressing a particular seed distribution over the pits of the board. If

m is the number of pits per side (except for the stores) and

N stands for the total number of initial seeds, then the total number

p(

m,

N) of such positions reads [

26,

34].

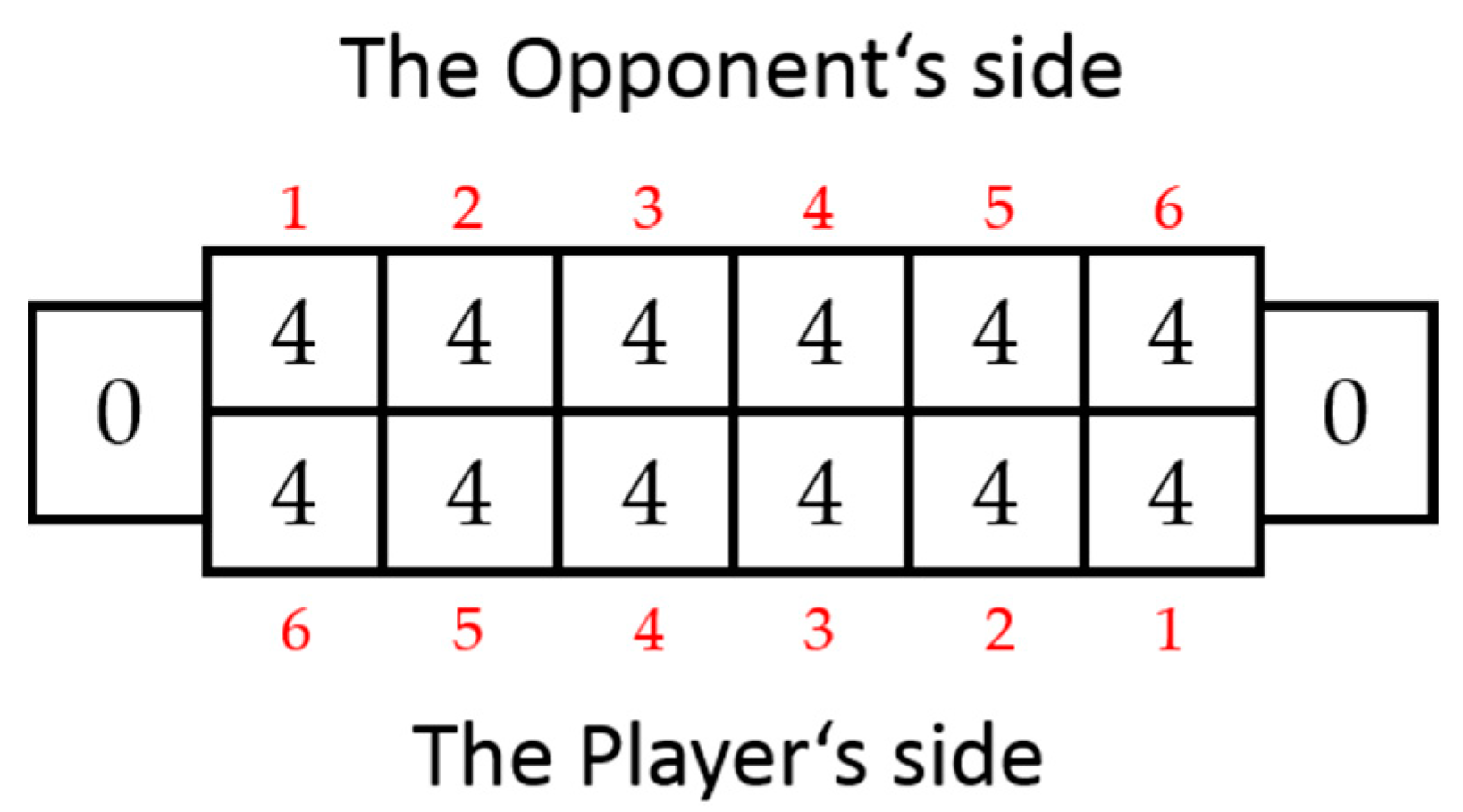

where the information about which player moves next is included. Since seeds captured into stores cannot re-enter the game, the number of active seeds decreases during the play; hence, the number of possible positions decreases as well. Therefore, Irving et al. [

29] determined the formula for the set size

s(

m,

Na) of all configurations with the number

Na of active seeds, regardless who is playing next, as follows

where configurations with no seeds on one of the board sizes are left out. The values of both

p(

m,

N) and

s(

m,

Na) increase rapidly with respect to the parameter values. The standard game with

m = 6,

N = 48 (i.e., four seeds per pit) yields

p = 1.313 × 10

13 and, for instance, if

N = 24, then

s = 1.25 × 10

9. However, only about 5% of all these positions can appear in a real game [

29,

34]. Romein and Bal [

35] solved Awari for the perfect play of both players. They used a large computing cluster and implemented a parallel search algorithm that determined the results for almost 9 × 10

9 positions in a 178-gigabyte database.

Regarding the solution of Kalah, the work of Irving et al. [

29] represents the fundamental contribution. The full-game databases were constructed (via the full game-tree search and the backward evaluation) for smaller instances of Kalah, up to

m = 4,

n = 3 where

n is the number of seeds per pit at the very beginning of the game. It represents the so-called strong solution, i.e., a strategy is known to achieve the game-theoretic value of all (not only the initial) possible board positions [

31]. This value means the outcome of the game when both the players behave optimally (the so-called perfect play), i.e., whether the game is won, drawn, or lost for the first player [

37]. A so-called weak solution for

m = 4 to 6,

n = 1 to 6 (except for

m =

n = 6) in the form of an advanced game-tree search was found in [

29] as well. This searching algorithm applies MTD(

f) [

52] (i.e., an improved alpha-beta pruning) with iterative deepening, including futility pruning [

53], transposition tables, a move-ordering, and an end-game database (with

m = 6,

Na ≤ 30). Note that the weak solution means that a strategy is known to achieve the game-theoretic value of the game from the initial position [

31,

54]. Smith [

36] in his survey on the use of dynamic programming for board games referred to the advantages and disadvantages of the above-introduced works [

29,

35]. A detailed analysis of the game with the combinations

m = 1 with 1 ≤

n ≤ 300 and 1 ≤

m ≤ 4 with

n = 1, and the eventual numerical observations were reported in [

32]. Pok and Tay [

55] proved that every game starting with

m = 1 and 11…11 (in ternary notation) seeds for each player yields a loss for the first player. Moreover, they proposed a modification of Kalah.

Results of a brute-force computer programming endeavor by M. Rawlings are referenced in [

56,

57]. It is reported therein that end-game databases for

m = 6,

n = 4 to 6 were computed within the years 2015 and 2018. In contrast to [

29], each of the initial moves with the further perfect play was analyzed. Besides, they constructed end-game databases, including the perfect play results for

m = 6,

Na up to 35; i.e., Equation (2) gives

s = 52.24 × 10

9 possible positions.

An excellent survey by Herik et al. [

37] analyzed several two-player board games in terms of used brute-force and knowledge-based methods. Regarding the latter ones, the authors discussed two algorithms. First, the threat-space search [

31] that investigates whether a win can be forced by a sequence of threats (to which the opponent has only a limited set of replies at any time). Second, the proof-number search [

58] where the cost function is given by the minimum number of nodes that have to be expanded to prove the goal. In this survey, it was also questioned whether the knowledge obtained from solved games be translated into rules and strategies which human beings can assimilate and whether these rules are ad-hoc or generic for various games and their complexity. The authors concluded that the answers are dependent on the capacity of the human brain, and the rules are ad-hoc and hardly intelligible to human experts.

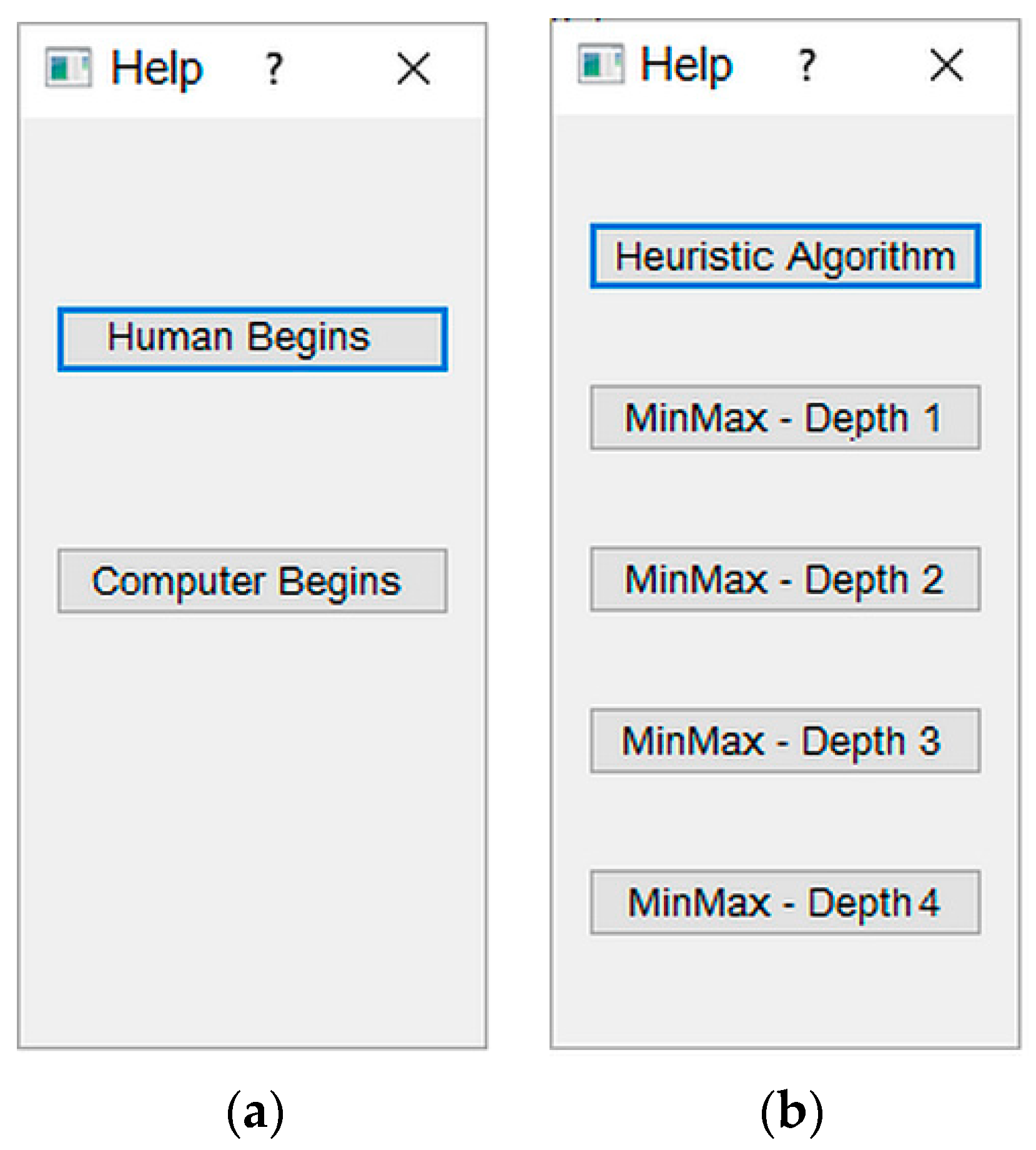

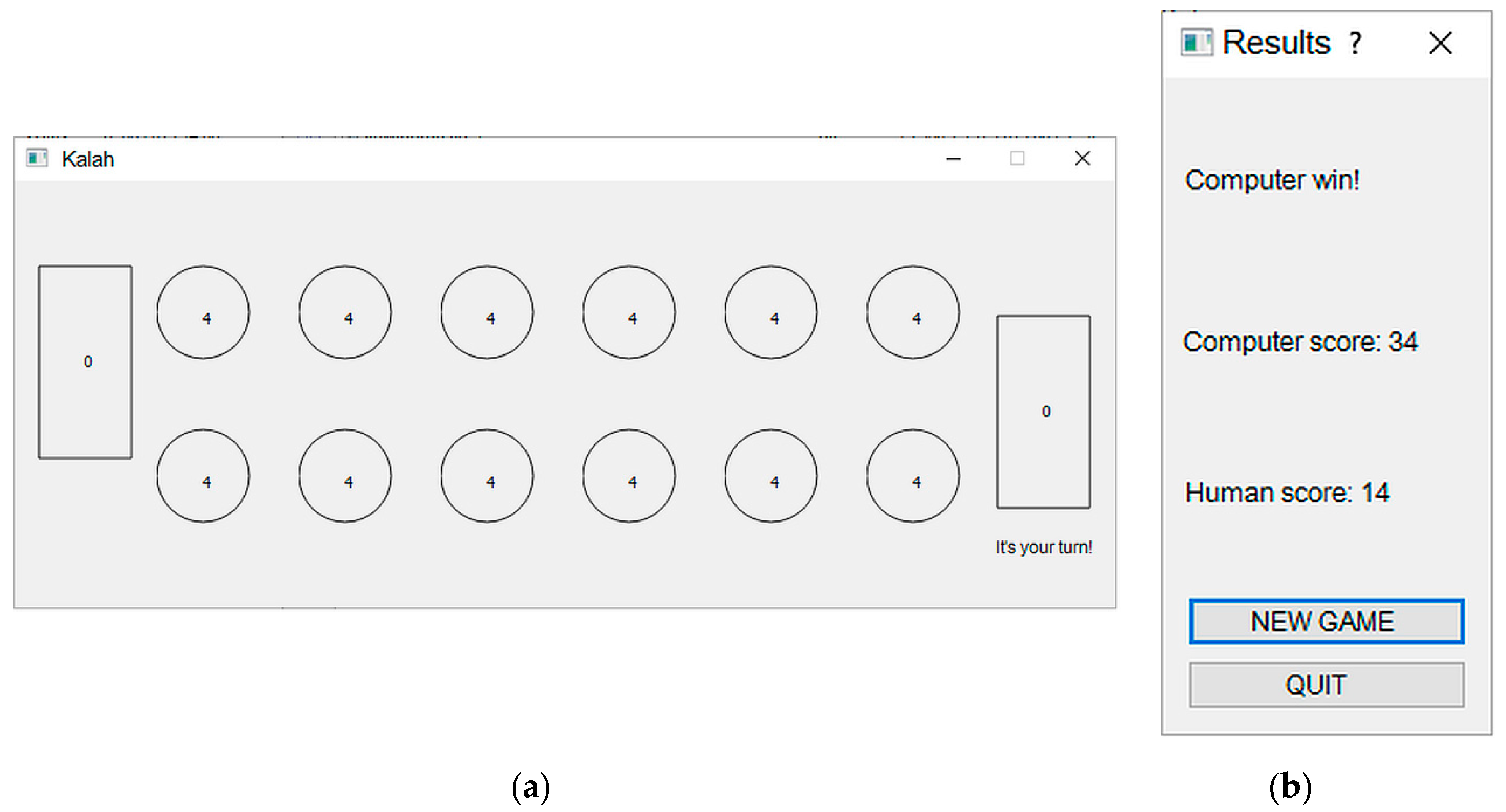

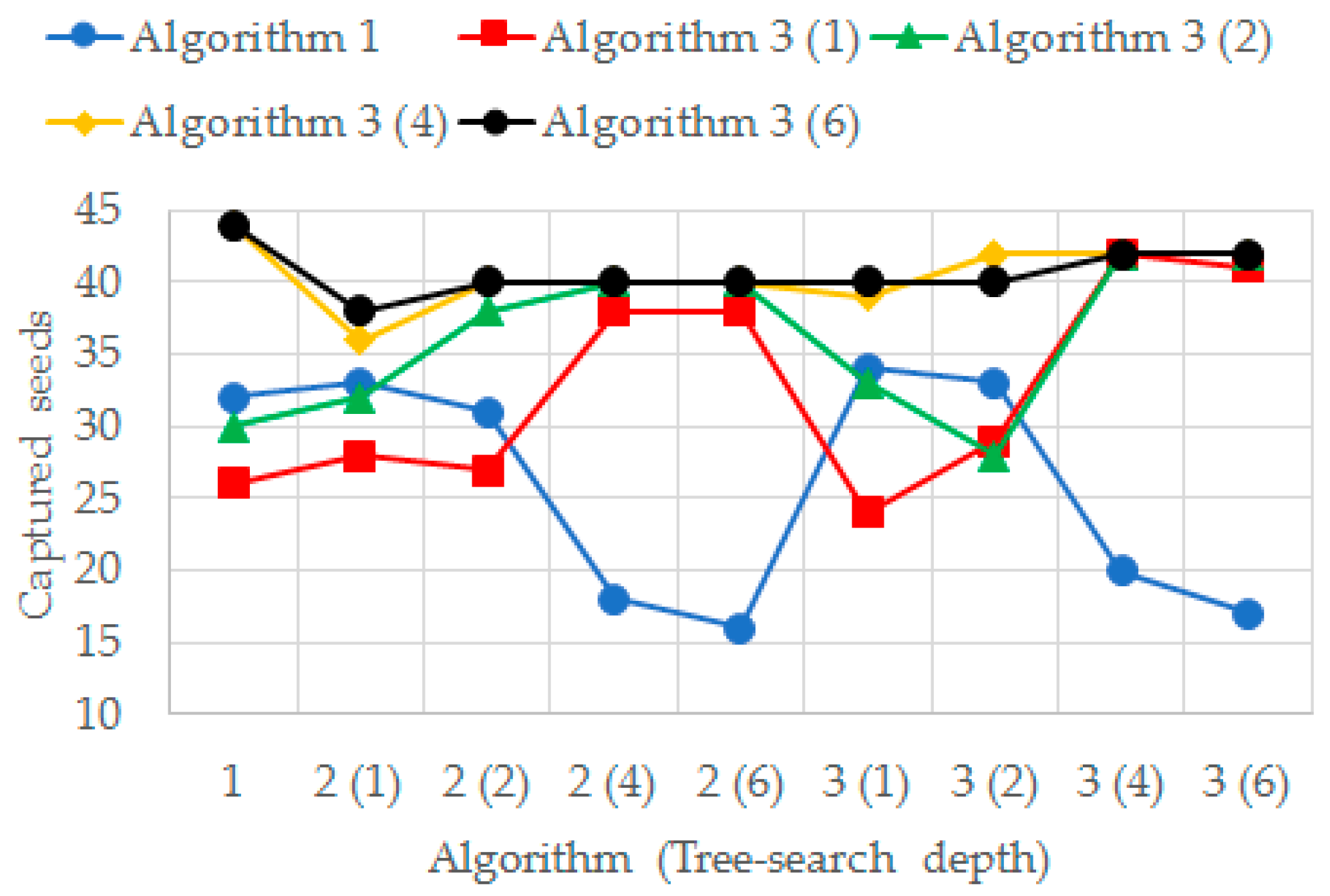

Tree-search algorithms have become popular in the reign of board games. In contrast to brute-force full-game databases, they provide faster searching for a suitable move strategy and usually adopt some artificial intelligence (AI) or heuristic approaches [

59]. Usually, it is supposed that both the players behave optimally—in such a case, the standard mini–max tree-searching algorithm is used to get the evaluation function maximum value for one player and the minimum for the opponent in every single depth of the game tree. In [

60], an overview of popular and effective enhancements for board game using Monte Carlo tree search (MCTS) agents is given. The MCTS is based on a randomized exploration of the search space [

61]. Ramanujan [

62] studied, inter alia, the efficiency of a family of mini–max methods, and the Upper Confidence bounds applied to Trees (UCT) [

63], an advanced sampling-based planning approach, used to general Mancala games. They claimed that both the UCT and mini–max produce players that are competitive with nearly no enhancements to the basic algorithms in Mancala. A combination of the mini–max game-tree search and heuristics was proposed in [

38]. Even if used for a Domineering board when perfect solving (defined as solving without any search), Uiterwijk [

40,

64] discussed the advantage of a heuristic that might be very useful in Kalah as well—safe moves. A safe move cannot be prevented by the opponent. In his bachelor’s thesis, Berkman [

65] summarized and benchmarked several advanced mini–max techniques for Toguz kumalak (a Mancala game close to Kalah), such as alpha–beta pruning, iterative deepening, hash functions, the MCTS, and transpositions tables. An excellent, thorough, and extensive analysis and benchmark of AI game-tree algorithms for several board games (including Kalah) were provided in [

26]. Compared to the preceding referenced source, the greedy algorithm and advanced heuristic mini–max [

38] (that uses a more refined heuristic function) were applied in addition. The results proved the advantage of the heuristic approach. Perlinski [

66] proposed a faster search by using the so-called partial board tree search that involves dividing the game board into smaller parts. In [

56], several Kalah aspects and tactics by Chris Bandy are referred. Note that some of the above-mentioned heuristic approaches are introduced in more detail in

Section 3.2.

Akinyemi et al. [

67] proposed a hybrid combination of the mini–max and a machine-learning-based refinement applying the perceptron algorithm [

68] for the Ayo/Awari game. They used “priority moves” that give the player a better advantage; however, their meaning remains unclear due to a poorly detailed description. Oon and Lim [

69] used the co-evolution of artificial neural networks on Kalah, where the input layer represented the current board position, and each input was an integer equal to the number of seeds in the corresponding pit. The so-called opponent-model search [

70] for board games (namely, the Bao game was used as a testbed) was investigated in detail in [

71]. This game-tree search algorithm uses a player’s hypothesized model of the opponent moves in order to exploit weak points in their strategy; it assumes that the opponent uses the mini–max algorithm, and their evaluation function is worse than the player’s one. Birrell [

72] investigated and discussed the results of applying reinforcement learning to train an agent to play an (unspecified) Mancala game with the rules almost identical to Kalah. Here, the agent saves the board state to a stack at the start of each turn. At the end of the game, it goes through each board state and adjusts the weight of the move according to the agent’s success. Training sets begin with an untrained agent that chooses each move randomly. Yet, only one-step ahead moves were evaluated in [

72].

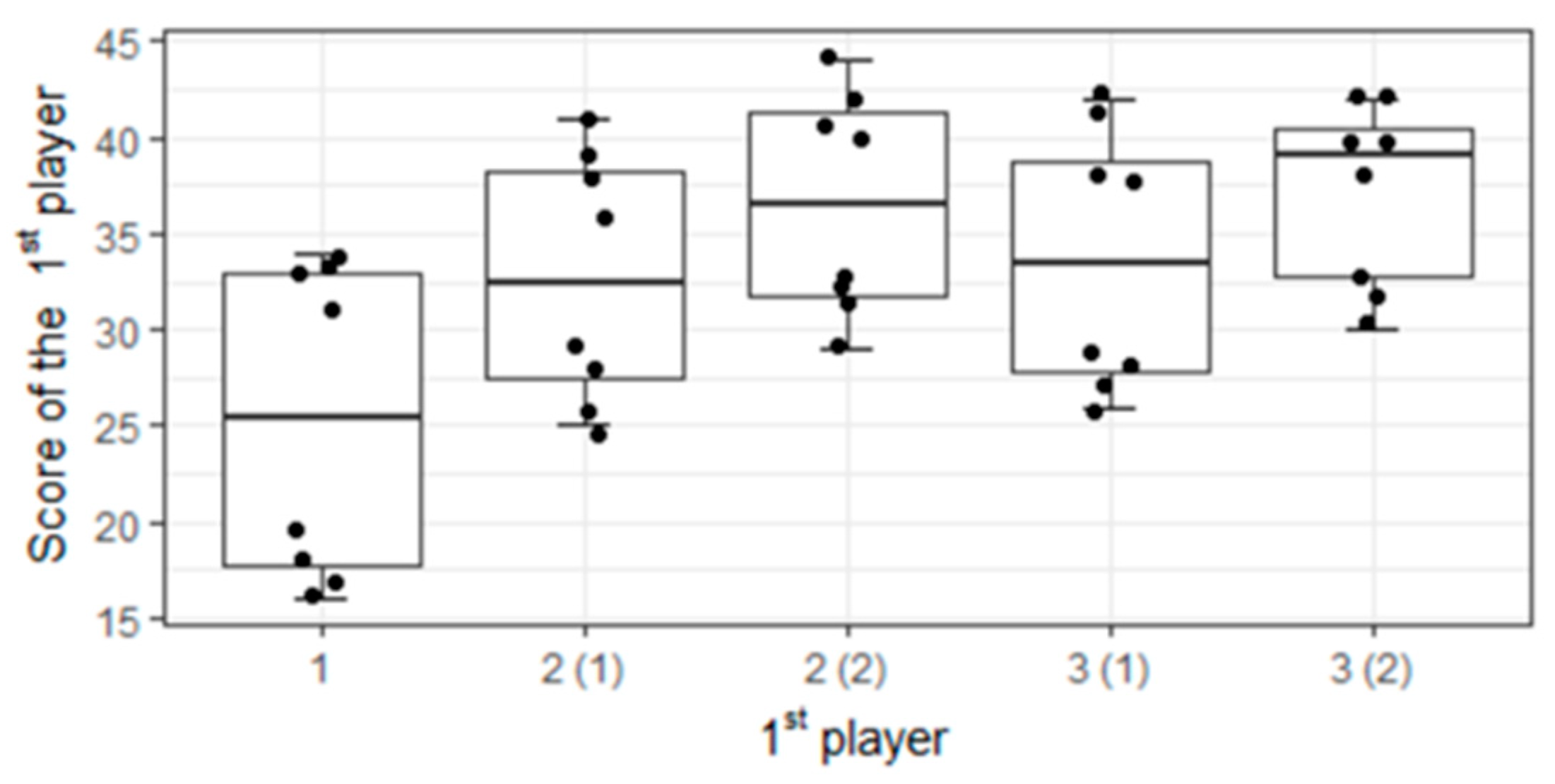

Some scholars discussed the advantage of the first turn yielding the winning end of the game. Donkers et al. [

73] proved the winning opening for the Dakon game, the rules of which are close to Kalah. Irving et al. [

29] computed that higher values of

m and

n (

m +

n > 6) yield the advantage of the first player when perfect playing; however, this rule has exceptions (e.g.,

m = 3,

n = 6 results in a loss for the first player). They particularly proved a win by 10 for the first player with a perfect play for the standard game (

m = 6,

n = 4). Carstensen [

74] filled in the gap of [

29] and proved the first-player win for

m =

n = 6; this win is by 2 [

56]. In [

32], a summarizing table of the first player’s wins/draws/losses for 2 ≤

m ≤ 6 and 1 ≤

n ≤ 10 (except for some higher values of the sum

m +

n) is displayed for a specific simple heuristic strategy (see

Section 3.2). Rovaris [

26] referred to a slight bias towards the first player (48.6% vs. 44.9%) when combating several strategies randomly. They believed that it is because the player going first is the first one able to gain multiple moves. Herik et al. [

37] found that there is a clear correlation between the first player’s initiative and the necessary effort to solve the game. In [

72], it was initially supposed that the first player could win 70% of all games; however, no significant advantage of the first player was eventually approved for a trained agent.

Another problem is to propose a suitable and meaningful position evaluation, i.e., to determine the cost function. Donkers et al. [

73] defined three different types of evaluation functions expressing prediction of the game-theoretic value, probability of winning, and profitability of the current position. A cost function in the form of a weighted combination of six heuristics (strategies) of the player and the opponent was proposed in [

38]. In [

65], the cost function is simply given by the number of stored seeds, the weights of which are set by a genetic algorithm. Note that the use of genetic programming when playing Mancala games was discussed in [

75].

Only a few research results were dedicated to a rigorous mathematical analysis of the game based on the combinatorial theory. Broline and Loeb [

76] studied a certain end-game correspondence between a Mancala game Ayo (Awale) and the solitaire game Tchoukaillon. In particular, they analyzed the possibility of repeated moves (i.e., when the last seed ends in the player’s kalaha). There exist schemes that enable to clear a particular number of pits by these moves there. It was proved that the minimum number of seeds required for such a clearance scheme for

m pits is asymptotically approximated by

π2/

m. An extension of a novel method for constructing move vectors in Tchoukaillon to Mancala games was proposed in [

77]. Musesti et al. [

78] derived the lower and upper bounds required to reach periodicity in Oware. However, these results are purely theoretical and hardly applicable when practicing the game.

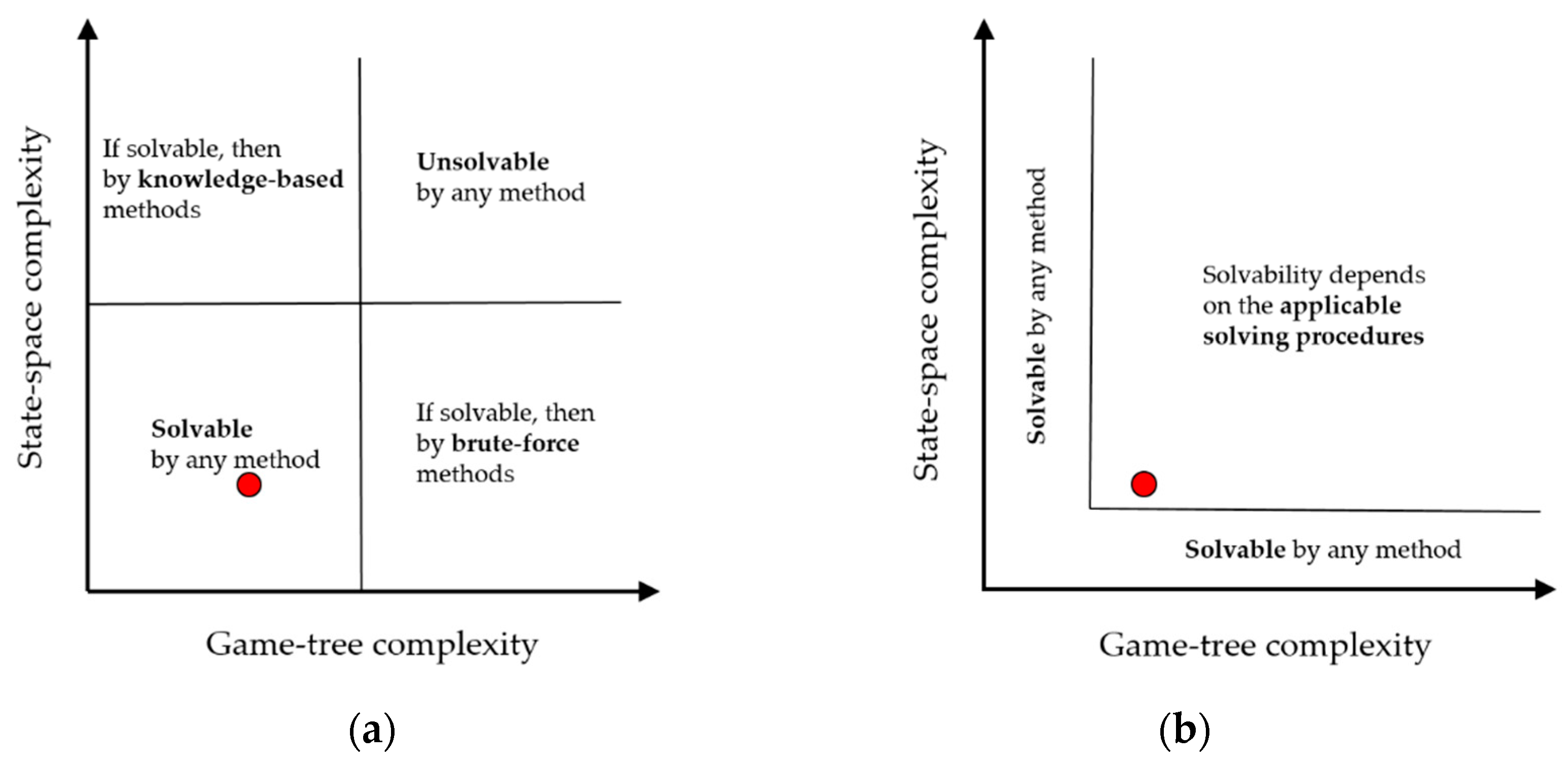

Kalah has relatively low state-space and game-tree complexity, these are 10

13 and 10

18, respectively [

54]. For instance, Chess has 10

43 and 10

123, respectively. Besides these two complexity measures, an alternative concept (based on the games solved until the date) to determine the solvability of two-player games was introduced in [

38], see

Figure 2. The authors pointed out that, e.g., Qubic game was solved in 1980 while Awari in 2003—but the former one has the lower state-space and the game-tree complexity than the latter one. They introduced the notion of the solution size that refers to the number of positions for which the optimal move or strategy must be stored in a certificate proving the solvability of a game. This notion extends the decision complexity expressing the number of decisions that are required to store a solution [

31]. It holds that the solution size is smaller or equal to the decision complexity. The Awari game has a very high solution size of almost 9 × 10

11 [

35,

38], and it is expected that Kalah has a similar one.

Five categories/techniques (retrograde analysis, transposition tables, game-tree search procedures, winning threat-sequence methods, and winning pattern procedures) were discussed to reduce the solution size, and the retrograde analysis [

79] (starting at the terminal position and working backward) combined with the end-game databases was identified as a suitable solving procedure for games with large size. However, it represents a brute-force method that is suitable for solving the game, not for real-time playing, as discussed below.