Abstract

Meme image sentiment analysis is the task of examining public opinion based on meme images posted on social media. In various fields, stakeholders need to determine the sentiment of memes quickly and accurately from large amounts of available data. Because sentiment classification on human face datasets yields higher accuracy than on meme images, innovation in image pre-processing is needed to improve performance metrics, especially accuracy, for meme image sentiment classification. This research aims to develop a sentiment analysis model for meme images based on key points. The analyzed meme images contain human faces, and the facial features extracted using key points are the eyebrows, eyes, and mouth. In the proposed method, the key points of these facial features are represented as graphs, specifically directed graphs, weighted graphs, or weighted directed graphs. These graph representations are then used to build a sentiment analysis model based on a Deep Neural Network (DNN) with three hidden layers (i = 64, j = 64, and k = 90 neurons). The contributions of this study are developing a human facial sentiment detection model using key points, representing key points as various graphs, and constructing a meme dataset with Indonesian text. The proposed model is evaluated using accuracy, precision, recall, and F1-score, and a comparative analysis is conducted against existing approaches. The experimental results show that the proposed model using the directed graph representation of key points obtained the highest accuracy (83%) and F1-score (81%).

1. Introduction

A meme is a rapidly spreading idea, behavior, or style, generally in the form of an image taken from TV shows, movies, or user-created visuals and accompanied by witty text [1]. Memes are typically created intentionally to express opinions, ideas, and emotions about a topic trending on social media, and they tend to appear when a particular topic gains widespread attention, a phenomenon amplified by the growing number of social media users. The increase in meme creation and distribution has prompted the application of sentiment analysis, an analytical method that can be used to quickly study public opinion toward digitally circulating memes [2]. Through sentiment analysis, the prevailing public mood can be discerned and future trends anticipated [3]. This method has found applications in various domains, including politics [4,5], tourism [6,7], government intelligence [8], and academia [9]. Meme images may or may not contain human faces. For meme images that do contain human faces, sentiment analysis can draw on facial expression recognition (FER) images, which contain only a human face and show various facial expressions depicting different emotions.

Sentiment analysis using datasets of human facial images that are not memes has been conducted in [10,11,12,13,14,15], with reported accuracies above 90%. These studies used publicly available datasets such as Facial Expression Recognition (FER2013), Japanese Female Facial Expression (JAFFE), and/or Extended Cohn-Kanade (CK+). The RAF-DB dataset was also used in [16,17] for robust facial emotion recognition. The primary objective of these studies was to categorize facial expressions into distinct emotions, including happiness, sadness, anger, and neutral. Deep learning was the most common approach to facial expression classification in these studies.

Despite extensive research on sentiment analysis, the number of studies that focus on memes is still limited [18]. Sentiment analysis has been frequently used to classify public comments/reviews regarding products, services, policies, and other subjects. Previous studies that have carried out sentiment analysis of memes using meme datasets include hateful meme classification [19,20], sentiment analysis-based emotion detection [21], sarcasm detection [18,22], and identification of offensive content within memes [23]. All these studies focus on meme datasets that contain non-Indonesian text, indicating the scarcity of research on sentiment analysis using meme datasets that contain Indonesian text.

Previous studies have also carried out sentiment analysis on image-based memes. Prakash and Aloysius [21] performed sentiment analysis on meme images based on facial expressions using a CNN architecture: the emotions corresponding to the facial expressions in the meme images are identified by the proposed CNN, and the meme images are then categorized into three polarities, namely positive, negative, and neutral, based on the identified emotion. Meanwhile, Kumar et al. [24] applied Bag of Visual Words (BoVW) with an SVM to classify meme images into five polarities, namely highly positive, positive, neutral, negative, and highly negative, achieving an accuracy of 75.4%. Furthermore, Elahi et al. [25] conducted sentiment analysis on Bengali memes using a multimodal approach; their method achieved an accuracy of 73% on images.

Various techniques have been used to recognize the features of objects in images, including key points. Arwoko et al. [26] used key points to recognize finger gestures representing the numbers 1 through 5, and their Deep Neural Network (DNN) model achieved an accuracy of 99%. Hangaragi et al. [27] used facial points (key points) to locate the eyes, nose, mouth, and outer facial lines, which were then used to detect human faces; their DNN-based facial recognition system achieved an accuracy of 94.23%. These studies [26,27] show that key point feature extraction combined with DNN classification can produce high accuracy. Therefore, in this research, key points are used for feature extraction from meme images, and a DNN is used as one of the classification models. Key points allow the facial features needed for image sentiment classification to be extracted. This differs from the research by Aggarwal et al. [19] and Rosid et al. [22], in which sentiment classification used all the features within the image.

This study aims to develop a sentiment analysis model for meme images based on key points [27], using a dataset of meme images with Indonesian text collected from digital media. The meme images analyzed in this study contain human faces, and sentiment analysis is based on key points that represent facial features such as the eyebrows, eyes, and mouth. In meme image classification, key points can thus focus the analysis on the facial features that matter for sentiment. These key points are represented in several forms: (x, y) coordinates, vectors centered on one point [26], and the proposed graph representations, namely the directed graph, weighted graph, and weighted directed graph. Graph representations can make classification easier, especially for complex systems [28].

DNN models were fine-tuned and used to determine the sentiment polarity of the meme images, with the aim of achieving optimal accuracy. The use of key points in image sentiment analysis is still not widely developed; sentiment analysis is usually performed on images processed directly with deep learning methods. Arwoko et al. [26] used key points to recognize hand gestures indicating the numbers 1 to 5 and obtained an accuracy of 98.5%. Because the accuracy reported by Kumar et al. [24] for meme images using machine learning methods is still around 75.4%, further innovation is needed. With key points, detection can be restricted to the required areas (corners, edges, unique patterns), so the model does not process the entire image; this can speed up processing, reduce the computing load, and save memory. Therefore, the contributions of this study are as follows: (1) developing a human facial sentiment detection model using key points, (2) representing key points as various graphs, and (3) constructing a meme dataset with Indonesian text.

The subsequent sections of the paper are organized as follows: Section 2 describes related work on sentiment analysis, Section 3 details the methodology of this study, Section 4 elaborates on the experiments and the comparative performance analysis of the proposed model, Section 5 discusses the experimental results, and Section 6 presents the conclusion and future work.

2. Related Work

Previous work on image sentiment analysis has used several kinds of image data. Image datasets were used for human facial emotion classification [9,10,11,12,13,14] and for building a presence system based on facial data [22]. Meena et al. [10] analyzed the sentiment of non-meme images of human faces using a hybrid CNN-InceptionV3 model on the public Facial Expression Recognition (FER2013), Japanese Female Facial Expression (JAFFE), and Extended Cohn-Kanade (CK+) datasets. The same datasets were analyzed by Meena et al. [11] to detect facial sentiment using VGG19; on the CK+ and JAFFE datasets, VGG19 produced better accuracy than InceptionV3. Moung et al. [12] compared the performance of ResNet50, InceptionV3, and CNN models for image sentiment analysis on the FER2013 dataset, and ResNet50 obtained the highest accuracy.

Prior research on multimodal sentiment analysis has used the Contrastive Language-Image Pre-training (CLIP) model, which connects text and image data to produce a unified representation of both modalities. Liang et al. [29] used a CLIP-based multimodal feature extraction model to extract aligned features from different modalities, preventing large feature discrepancies caused by data heterogeneity. Their experimental results showed classification accuracy improvements of 9.57%, 3.87%, 3.63%, 3.14%, 0.77%, and 0.28% on six classification tasks compared with baseline models. Yu et al. [30] proposed a cross-modal sentiment model based on CLIP image-text attention interaction, which uses pre-trained ResNet50 and RoBERTa to extract primary image and text features; the model achieved accuracy rates of 75.38% and 73.95%.

Previous studies have also performed sentiment analysis on multimodal meme datasets to identify offensive content [19] and to analyze sentiment based on facial expressions [18,20,31], while text meme datasets have been used for sentiment analysis [32] and sarcasm detection [33]. Research on sentiment analysis using text meme datasets has been carried out in various fields, including economics [34,35], tourism [36], academics [37], and customer satisfaction [38], and almost all of these studies use datasets from Twitter. Various machine learning and deep learning methods were used for different tasks, and deep learning methods produced accuracies above 85%.

Several prior studies have focused on conducting sentiment analysis of multimodal memes. Kumar et al. [24] aimed to analyze fine-grained sentiment from multimodal memes. The polarity of sentiment was categorized into five classes, namely very positive, positive, neutral, negative, and very negative. This study used a hybrid deep learning model, namely ConvNet-SVMBoVW, that was based on CNN and SVM. The proposed model achieved an accuracy of 75.4%. Kumar and Garg [31] conducted multimodal meme sentiment analysis, focusing on meme images containing text. The sentiment score of images is calculated using SentiBank, a visual sentiment ontology, and R-CNN. Text sentiment score was calculated using a hybrid of context-aware lexicon and ensemble learning. Finally, the polarity of the multimodal memes was determined by aggregating the scores of image sentiment and text sentiment. Evaluation was carried out using randomly selected multimodal tweets related to the Indian Criminal Court verdict against the LGBT community. The proposed model achieved an accuracy of 91.32% for multimodal sentiment analysis.

Several previous studies have focused on sentiment analysis based on facial expressions. Aksoy and Güney [14] explored facial-expression-based sentiment analysis using a dataset of non-meme human facial images, classifying facial expressions into seven groups, namely happy, sad, surprised, disgusted, angry, afraid, and neutral. Several public datasets were used, including Facial Expression Recognition (FER2013), Japanese Female Facial Expression (JAFFE), and Extended Cohn-Kanade (CK+). The experimental results indicate that the highest accuracy was obtained using Histograms of Oriented Gradients (HOG) for feature extraction and ResNet for classification. Sentiment analysis based on facial expression also requires considering many facial features; Zhang [39] stated that in a meme image, there is a relationship between the object, emotion, and sentiment.

Datasets of human face images differ slightly from meme image datasets. In human face datasets, facial expressions are shown from several different angles of the face, whereas the meme images used in this study are images in which a person's face is visible, and that face may occasionally appear small. Individual tests of the differences between sentiment groups (α = 5%) showed that 88.4% of the features differed between sentiment groups in the face dataset (FER2013), whereas only 53% did in the meme dataset. As a result, sentiment analysis on the human face dataset tends to produce better accuracy than sentiment analysis on the meme dataset.

In this research, sentiment analysis is carried out by extracting facial features within meme images using key points. The key points indicating eyes, eyebrows, and mouth are represented in five forms, namely (x, y) coordinates, vectors centered on one point, directed graph, weighted graph, and weighted directed graph. Model evaluation is carried out using several evaluation metrics, namely accuracy, recall, precision, and F1-score.

3. Methodology

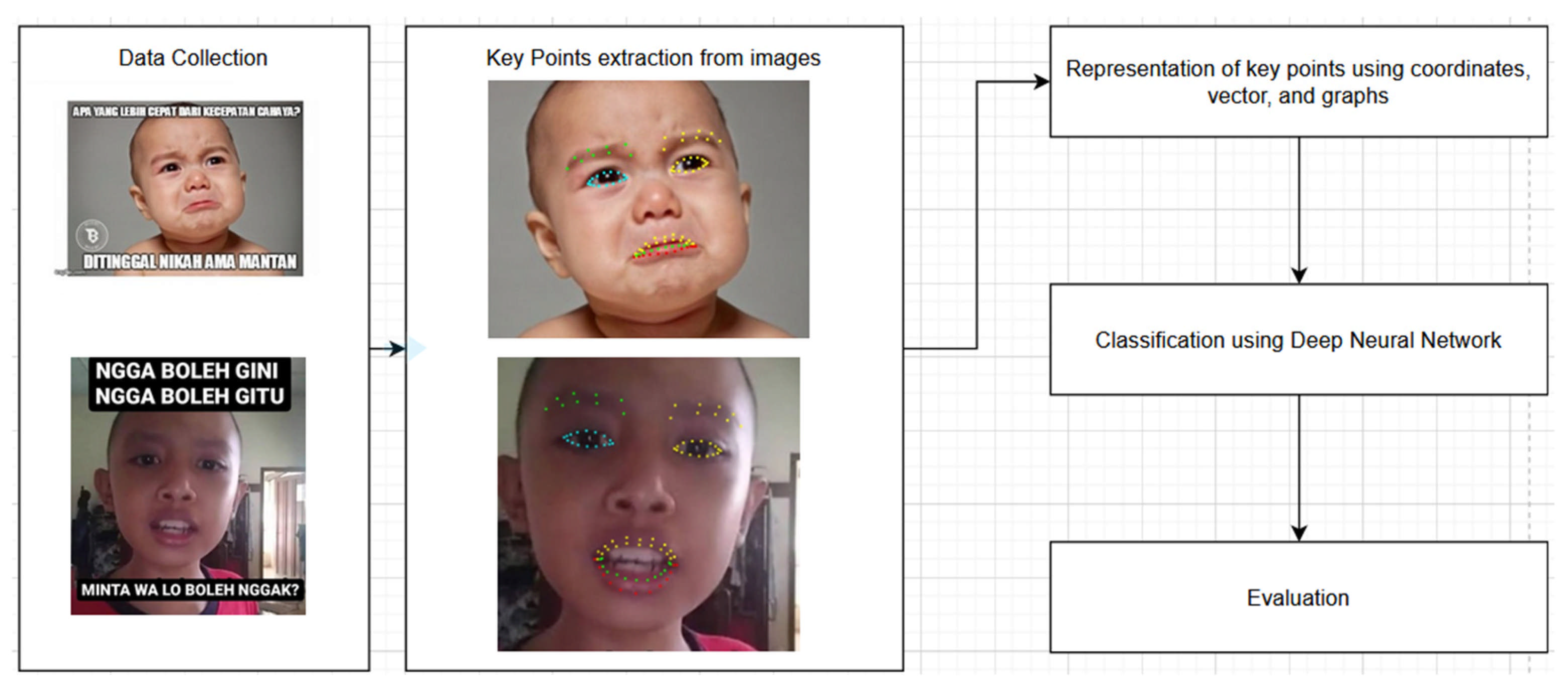

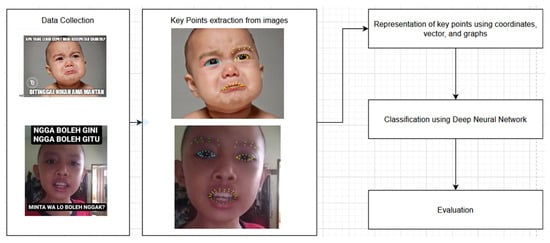

This study was conducted in five stages, namely (1) data collection, (2) key points extraction from images, (3) key points representation using coordinates, vectors, and graphs, (4) classification, and (5) evaluation of results. Figure 1 shows the flow diagram of the five stages in this study.

Figure 1.

Diagram of the research method.

3.1. Data Collection

The dataset used in this study consists of meme images containing Indonesian text gathered from digital media, including Instagram, Facebook, Twitter, Kompasiana.com, detik.com, Liputan6.com, and several blogs. Meme images were collected manually between September 2021 and May 2024 using keywords such as ‘mudik’ (homecoming), ‘nikah’ (marriage), ‘17 Agustus’ (17 August, Indonesian Independence Day), ‘Idul Fitri’ (Eid al-Fitr), ‘THR’ (religious holiday allowance), ‘liburan’ (holiday), ‘Kaesang’, ‘piala dunia’ (World Cup), ‘Mandalika’, ‘politik’ (politics), ‘natal’ (Christmas), ‘tahun baru’ (New Year), and ‘timnas’ (national team).

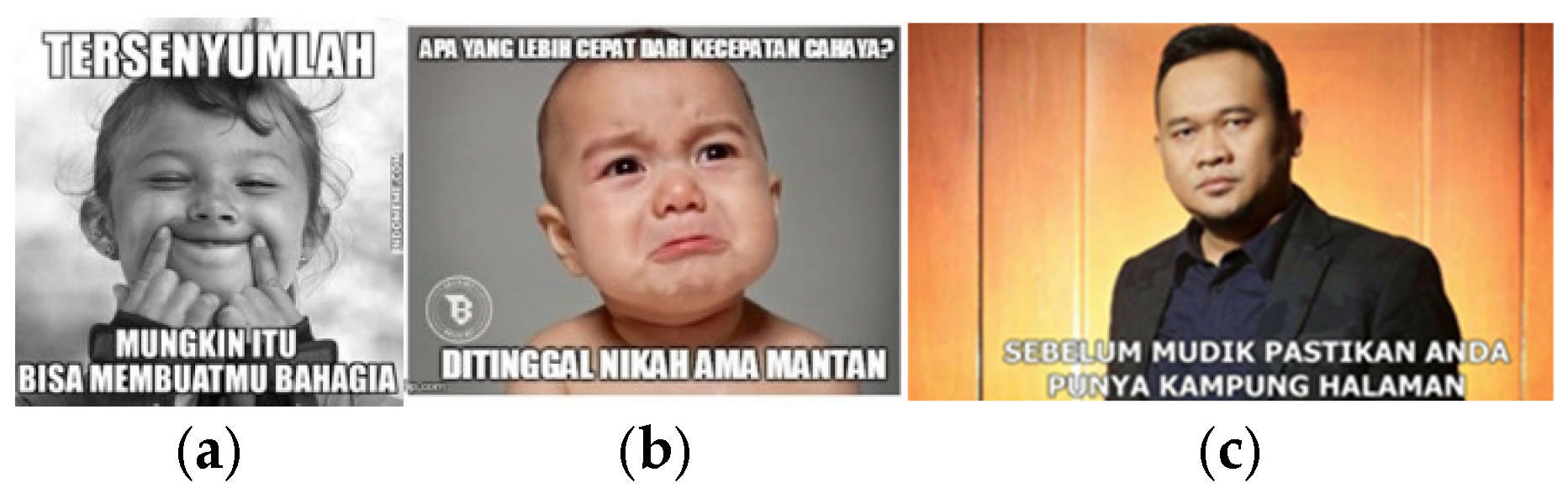

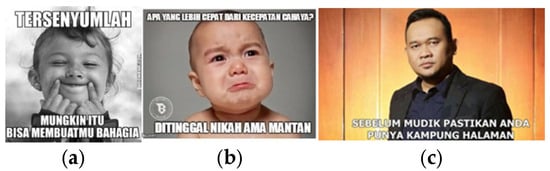

The collected memes contain human faces in which the shapes of the eyebrows, eyes, and mouth are visible. The face in each image must be frontal or at a slight angle, because the system cannot recognize side-view faces. In total, 2200 meme images were collected. Figure 2a shows a smiling person, which is recognized as expressing positive sentiment [12]; the text in the image, “Tersenyumlah, mungkin itu bisa membuatmu bahagia” (“Smile, maybe it will make you happy”), also expresses positive sentiment. Figure 2b shows a sad, crying person, which is recognized as expressing negative sentiment [12]; the text in this meme, “Apa yang lebih cepat dari kecepatan cahaya? Ditinggal nikah ama mantan” (“What is faster than the speed of light? Being left by your ex-partner to get married”), also expresses negative sentiment. Meanwhile, Figure 2c shows a person with no expression, which is categorized as neutral.

Figure 2.

Meme images labeled as (a) Positive, (b) Negative, and (c) Neutral.

In general, facial expressions can be categorized into three polarities, namely positive (happy and surprised), negative (angry, disgusted, fearful, and sad), and neutral [12]. A happy expression is characterized by wrinkled eyes, lip corners pulled upwards, and a smile. A surprised expression can be identified by raised eyebrows, a slightly opened mouth, and wide eyes. An angry expression is shown by sharp eyes, furrowed eyebrows, flared nostrils, and narrowed lips. Disgust can be identified by eyes contracted in the upper area and slightly raised lips. Fear is characterized by simultaneously raised eyebrows, raised upper eyelids, tense lower eyelids, and lips stretched horizontally towards the ears. When a person is sad, the upper eyelids droop, the eyes become unfocused, and the corners of the lips drop slightly [40]. Labeling was carried out by two annotators who are experts in facial expressions, and meme images that received different labels from the two annotators were discarded.
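The polarity grouping and the agreement rule above can be written down as a small lookup table and filter. This is a minimal sketch: the image ids are hypothetical, the numeric codes follow the 0/1/2 convention used in Table 1, and it assumes each annotator assigns a polarity label directly.

```python
# Polarity groups from Section 3.1; codes follow Table 1 (0 = negative, 1 = neutral, 2 = positive).
EMOTION_TO_POLARITY = {
    "happy": "positive",
    "surprised": "positive",
    "angry": "negative",
    "disgusted": "negative",
    "fearful": "negative",
    "sad": "negative",
    "neutral": "neutral",
}
POLARITY_TO_CODE = {"negative": 0, "neutral": 1, "positive": 2}


def merge_annotations(labels_a, labels_b):
    """Keep only images on which both annotators chose the same polarity.

    labels_a, labels_b: dicts mapping a (hypothetical) image id to a polarity string.
    Returns a dict mapping each agreed image id to its integer class code.
    """
    agreed = {}
    for image_id, polarity in labels_a.items():
        if labels_b.get(image_id) == polarity:
            agreed[image_id] = POLARITY_TO_CODE[polarity]
    return agreed


if __name__ == "__main__":
    annotator_a = {"m1": "positive", "m2": "negative", "m3": "neutral"}
    annotator_b = {"m1": "positive", "m2": "neutral", "m3": "neutral"}
    print(merge_annotations(annotator_a, annotator_b))  # {'m1': 2, 'm3': 1}
```

Images with conflicting labels (here `m2`) simply drop out, mirroring the rule that disputed memes are not used.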

Data resulting from key point representation in the form of coordinates, vectors, or graphs are subjected to statistical tests, using multivariate analysis, to determine whether the feature values for positive, negative, and neutral data are significantly different.

3.2. Key Points Extraction from Images

Facial key points are the vital areas of a face from which a person's facial expression and emotion can be evaluated. Hangaragi et al. [27] developed a face detection and recognition model using key points representing the eyes, mouth, and outermost facial lines. Facial expressions that reflect emotions can be characterized by various parts of the face, including the eyes, eyebrows, and mouth [41]. In this study, the analyzed facial features are the key points representing the eyebrows, eyes, and lip lines. Key points are extracted using Python and the MediaPipe library, which represents a face with 468 points. Of these, 18 key points describe the eyebrow lines, 32 the eyes, and 47 the lips, so each image is analyzed using 97 key points.
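A minimal sketch of the extraction step, assuming the legacy `mediapipe.solutions.face_mesh` Python API (which returns 468 normalized landmarks when landmark refinement is off) and OpenCV for image loading. The paper does not list which of the 468 indices form the 18 eyebrow, 32 eye, and 47 lip key points, so `FEATURE_INDICES` below is a hypothetical placeholder that only reproduces those counts.

```python
import numpy as np


def detect_landmarks(image_path):
    """Return a (468, 2) array of normalized (x, y) Face Mesh landmarks, or None.

    Uses the legacy MediaPipe Face Mesh solution. The imports are local so the
    selection helper below can be used and tested without MediaPipe or OpenCV.
    """
    import cv2
    import mediapipe as mp

    image = cv2.imread(image_path)
    if image is None:
        return None  # unreadable file
    with mp.solutions.face_mesh.FaceMesh(static_image_mode=True, max_num_faces=1) as face_mesh:
        result = face_mesh.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    if not result.multi_face_landmarks:
        return None  # no face found, e.g. a side-view face
    return np.array([[p.x, p.y] for p in result.multi_face_landmarks[0].landmark])


def select_keypoints(landmarks, index_groups):
    """Gather the landmarks of each facial feature, in a fixed feature order."""
    indices = [i for group in index_groups.values() for i in group]
    return np.asarray(landmarks)[indices]


# HYPOTHETICAL index lists: the exact MediaPipe indices used in the study are
# not given, so these placeholders only reproduce the 18 + 32 + 47 = 97 counts.
FEATURE_INDICES = {
    "eyebrows": list(range(0, 18)),
    "eyes": list(range(18, 50)),
    "lips": list(range(50, 97)),
}
```

Returning `None` when no face is found is one way to flag the "unrecognized" images that are later discarded.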

3.3. Key Points Representation

The key points derived from meme images are represented in five forms, namely (x, y) coordinates, vectors centered on one point, directed graph, weighted graph, and weighted directed graph. All five representations are numeric and describe positions, vectors, or angles.

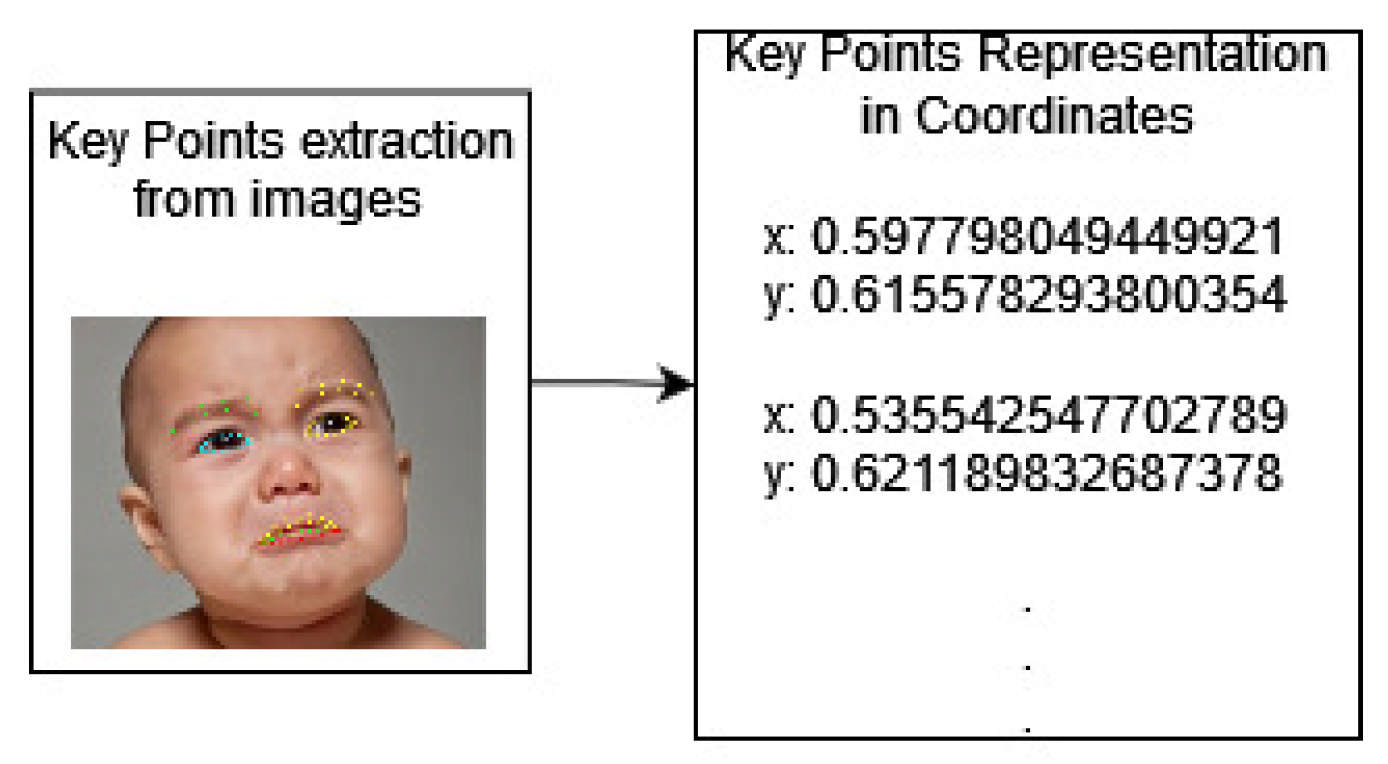

In the first representation, (x, y) coordinates, the position of each key point is expressed by its x and y coordinates. One eye in a meme image is represented by 16 key points, which yields 32 coordinate values. The coordinate representation of a meme image is thus a set of (x, y) coordinates describing positive, negative, or neutral sentiment. Table 1 shows examples of three key points represented as coordinates, each indicating a specific sentiment; data such as these are classified using the DNN. Sentiment labels 0, 1, and 2 indicate negative, neutral, and positive sentiment, respectively.

Table 1.

Example of 3 key points from eye features indicating different sentiment.

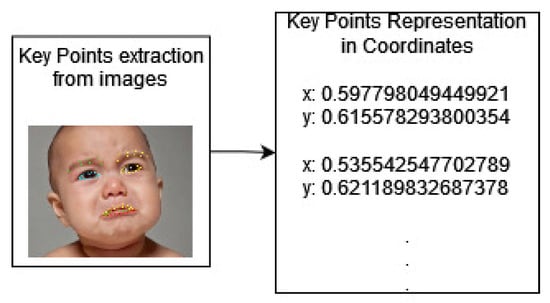

If there are n meme images and each image is represented by 97 key points, then 194n coordinate values are obtained (97 × 2 per image). The coordinate representation of the key points is classified by a machine learning model into three categories, namely positive, negative, or neutral. This process is illustrated in Figure 3.
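The coordinate representation amounts to flattening each image's key points and stacking the images into a feature matrix. A sketch, assuming key points arrive as a (97, 2) array per image; the function names are illustrative, not taken from the study's code.

```python
import numpy as np


def coordinate_features(keypoints):
    """Flatten a (97, 2) key point array into 194 values: x1, y1, x2, y2, ..."""
    return np.asarray(keypoints, dtype=float).reshape(-1)


def build_coordinate_dataset(keypoint_arrays, labels):
    """Stack n images into an (n, 194) feature matrix X and a label vector y."""
    X = np.stack([coordinate_features(k) for k in keypoint_arrays])
    y = np.asarray(labels, dtype=int)  # 0 = negative, 1 = neutral, 2 = positive
    return X, y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    images = [rng.random((97, 2)) for _ in range(5)]  # stand-in key points
    X, y = build_coordinate_dataset(images, [0, 1, 2, 2, 0])
    print(X.shape, X.size)  # (5, 194) 970
```

With n = 5 images the matrix holds 194 × 5 = 970 values, matching the 194n count above.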

Figure 3.

Key points representation in coordinates.

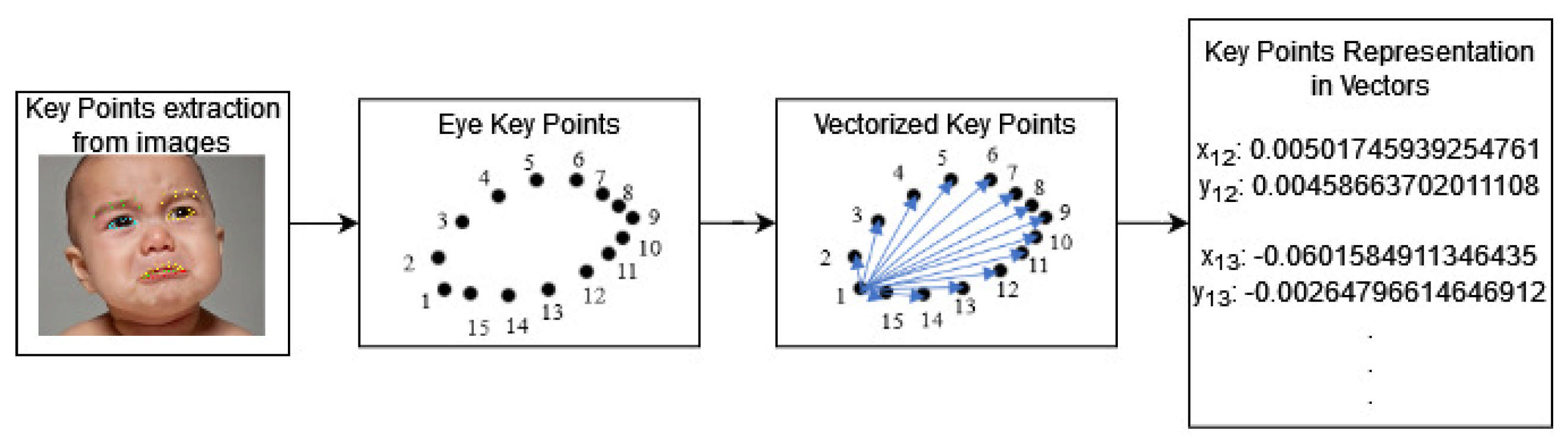

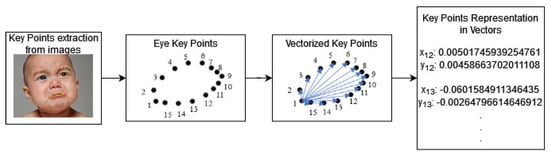

In the second representation, vectors centered on one point, all facial key points are labeled and converted into vectors directed toward a central point [26]. For example, the vector set $V$ representing the eye position comprises the vectors from all other key points toward the central point:

$$V = \{\vec{v}_2, \vec{v}_3, \ldots, \vec{v}_n\}$$

Subsequently, vector V is normalized as follows:

The normalized vectors are then classified using a machine learning model. Figure 4 shows the classification process using key points in the form of vectors. For example, if an eye feature has n key points $p_i = (x_i, y_i)$ and key point 1 is used as the reference point, then (n − 1) vectors are obtained. For $i = 2, \ldots, n$, the vectors are calculated as follows:

$$\vec{v}_i = p_1 - p_i = (x_1 - x_i,\; y_1 - y_i)$$

Figure 4.

Eye key points representation in vectors.

The resulting vector set is normalized using Equation (2). The normalized data is used for sentiment classification using DNN.
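The vector representation can be sketched as follows. The direction v_i = p_1 - p_i follows the wording "from all points towards the center", with key point 1 as the center; this sign convention is an assumption. Equation (2) is not reproduced in this text, so the sketch substitutes scaling by the largest absolute component, one common choice for MediaPipe key point features; treat it as a stand-in, not the paper's formula.

```python
import numpy as np


def reference_vectors(points):
    """Vectors from key points 2..n toward reference key point 1: v_i = p_1 - p_i."""
    points = np.asarray(points, dtype=float)
    return points[0] - points[1:]


def normalize_max_abs(vectors):
    """ASSUMED normalization: divide every component by the largest absolute component.

    Equation (2) of the paper is not reproduced here; this is a stand-in.
    """
    flat = np.asarray(vectors, dtype=float).reshape(-1)
    scale = np.max(np.abs(flat))
    return flat / scale if scale > 0 else flat


if __name__ == "__main__":
    eye = [[1.0, 1.0], [3.0, 1.0], [1.0, 5.0]]  # toy key points, not real data
    print(normalize_max_abs(reference_vectors(eye)))
```

Scaling by one per-image factor keeps the shape of the vector fan while removing the effect of face size in the image.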

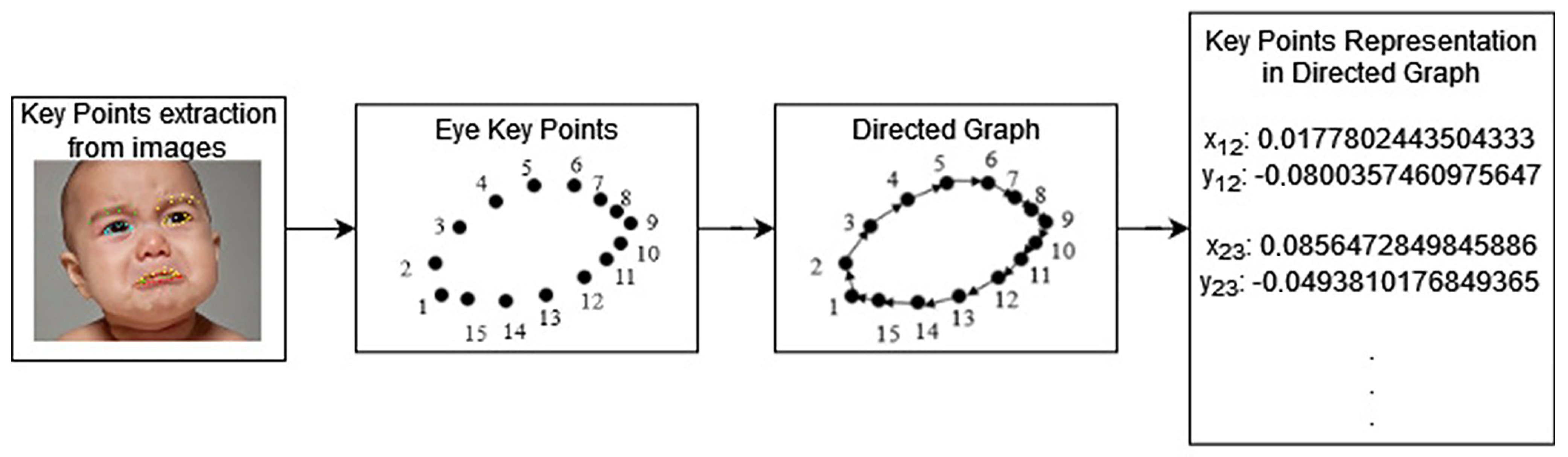

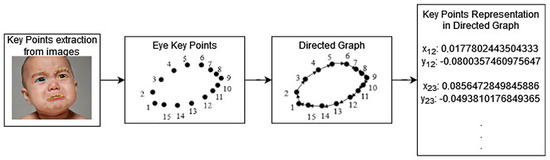

Key points are also represented as a directed graph, in which the vertices are connected by directed edges. Key points are the vertices of the graph, each labeled with a number, and edges connect adjacent vertices: two consecutively labeled vertices are connected by an edge directed from the smaller label to the larger one. As an example, Figure 5 shows key points represented as a directed graph with vertices labeled 1 to 15. The edge connecting the consecutively labeled key points $p_i = (x_i, y_i)$ and $p_{i+1} = (x_{i+1}, y_{i+1})$ is termed $\vec{e}_i$, a vector with coordinates $(x_{i+1} - x_i,\; y_{i+1} - y_i)$. In a meme, an eye feature is represented by several key points, and every two adjacent key points are connected by a directed edge, forming a directed graph. The directed graph data are therefore the edge coordinates $(x_{i+1} - x_i,\; y_{i+1} - y_i)$, and this representation is then classified using machine learning methods.

Figure 5.

Eye key points representation in directed graph.
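A sketch of the directed-graph features, assuming each facial feature's key points are already ordered by their labels so that every edge runs from label i to label i + 1. The `chains` dictionary and its feature names are hypothetical; how the study orders and groups the 97 key points is not specified here.

```python
import numpy as np


def directed_edges(points):
    """Edge vectors e_i = p_{i+1} - p_i, directed from the smaller to the larger label."""
    return np.diff(np.asarray(points, dtype=float), axis=0)


def directed_graph_features(chains):
    """Concatenate the flattened edge vectors of each feature's key point chain.

    chains: dict mapping a feature name to an (n_k, 2) array of ordered key points.
    """
    return np.concatenate([directed_edges(p).reshape(-1) for p in chains.values()])


if __name__ == "__main__":
    eye = np.array([[0.0, 0.0], [1.0, 2.0], [4.0, 2.0]])  # toy chain of 3 key points
    print(directed_edges(eye))  # two edges: (1, 2) and (3, 0)
```

A chain of n key points yields n - 1 edges, so each feature contributes 2(n - 1) values.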

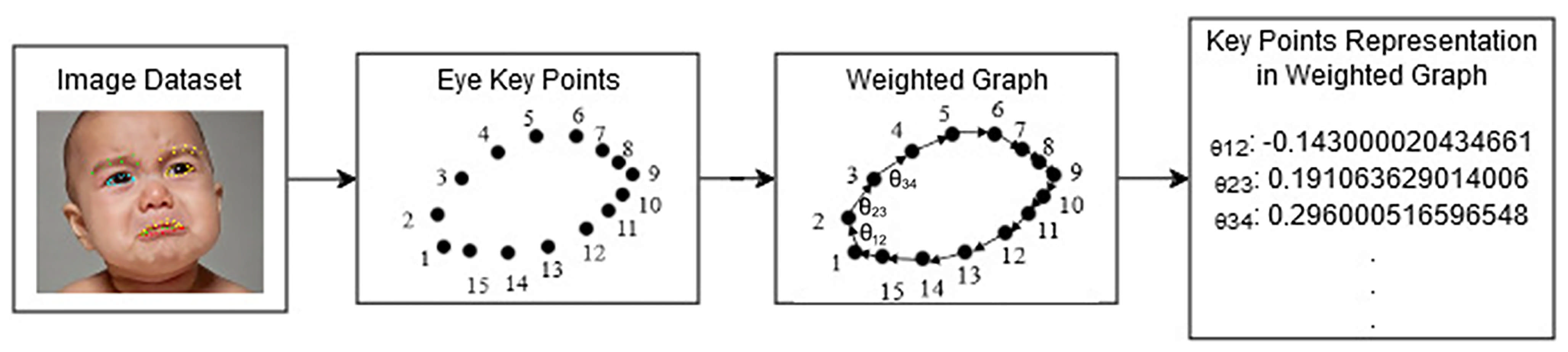

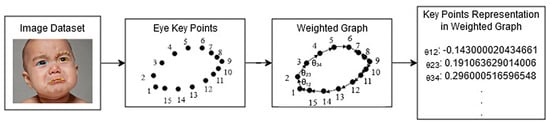

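Furthermore, Figure 6 shows an example of key points represented as a weighted graph, in which the weight of an edge indicates the angle between two key points. The angle $\theta_i$ between the consecutively labeled key points $p_i$ and $p_{i+1}$, which are connected by an edge and have vector coordinates $(x_i, y_i)$ and $(x_{i+1}, y_{i+1})$, is calculated as follows:

$$\theta_i = \arccos\left(\frac{x_i x_{i+1} + y_i y_{i+1}}{\sqrt{x_i^2 + y_i^2}\,\sqrt{x_{i+1}^2 + y_{i+1}^2}}\right)$$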

Figure 6.

Eye key points representation in weighted graph.
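A sketch of the edge weights, assuming the weight is the angle between the position vectors (x_i, y_i) and (x_{i+1}, y_{i+1}) of two consecutive key points, computed from their dot product. The angle unit (degrees by default here) and the small guard against zero-length vectors are assumptions.

```python
import numpy as np


def edge_angles(points, degrees=True):
    """Angle between the position vectors of consecutive key points p_i and p_{i+1}.

    ASSUMPTION: the weight is the angle between (x_i, y_i) and (x_{i+1}, y_{i+1}).
    """
    points = np.asarray(points, dtype=float)
    a, b = points[:-1], points[1:]
    norms = np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1)
    cos_theta = np.sum(a * b, axis=1) / np.maximum(norms, 1e-12)  # guard against zero vectors
    theta = np.arccos(np.clip(cos_theta, -1.0, 1.0))  # clip rounding error outside [-1, 1]
    return np.degrees(theta) if degrees else theta


if __name__ == "__main__":
    print(edge_angles([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]))  # two right angles
```

Clipping the cosine matters in practice: floating-point rounding can push it slightly past 1 for nearly parallel vectors, which would make `arccos` return NaN.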

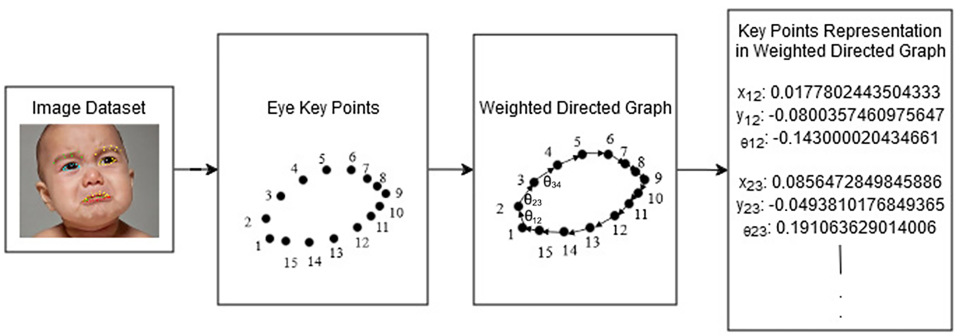

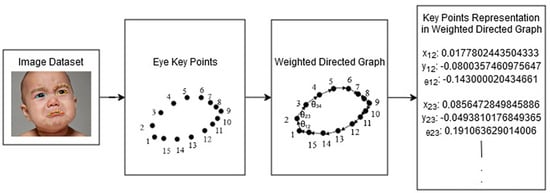

Figure 7 shows the representation of key points as a weighted directed graph. This representation is a combination of the directed graph and weighted graph representation of key points previously detailed.

Figure 7.

Eye key points representation in weighted directed graph.
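The combined representation can be sketched by attaching each edge's angle weight to its direction vector. How the study actually merges the two representations is not specified here; concatenating (dx, dy, angle) per edge is an assumption, as is the angle definition (the angle between the position vectors of consecutive key points).

```python
import numpy as np


def weighted_directed_features(points):
    """Per edge: (dx, dy) from the directed graph plus its angle weight.

    ASSUMPTION: the two representations are combined by concatenating, for each
    edge, the directed-edge vector and the edge angle (in degrees).
    """
    points = np.asarray(points, dtype=float)
    edges = np.diff(points, axis=0)  # directed part: p_{i+1} - p_i
    a, b = points[:-1], points[1:]
    norms = np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1)
    cos_theta = np.sum(a * b, axis=1) / np.maximum(norms, 1e-12)
    angles = np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))  # weighted part
    return np.column_stack([edges, angles]).reshape(-1)


if __name__ == "__main__":
    print(weighted_directed_features([[1.0, 0.0], [0.0, 1.0]]))  # one edge: dx, dy, angle
```

For a chain of n key points this gives 3(n - 1) values per feature.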

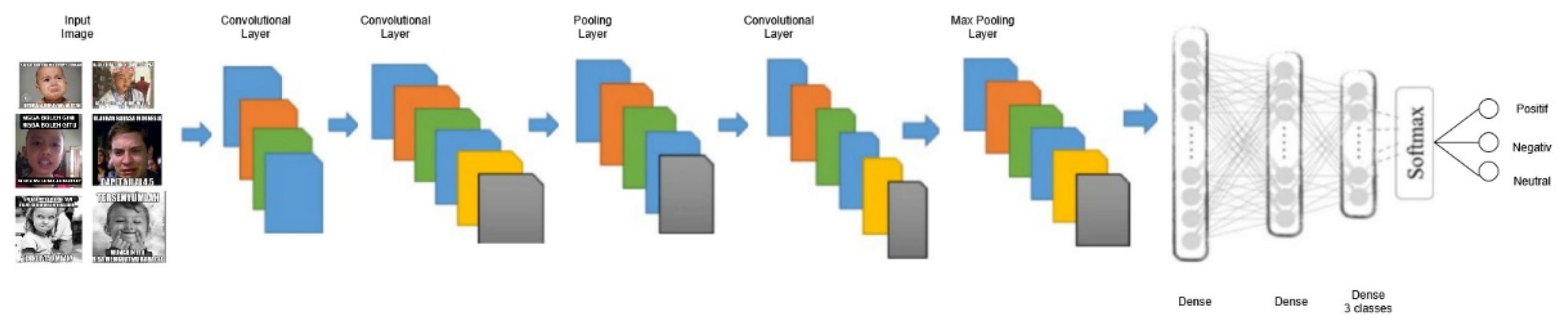

3.4. Classification Model

The classification model is a Deep Neural Network (DNN) with three hidden layers; its architecture is shown in Figure 8. The input meme image is processed by the MediaPipe library (Figure 8) to obtain key points. MediaPipe is used because only the essential features of the facial region are needed for classification. The key points are converted into features according to the representation used, and these features are classified by the DNN into positive, negative, or neutral sentiment.

Figure 8.

The proposed model.

This model is applied to each key point representation, namely (x, y) coordinates, vectors centered on one point, directed graph, weighted graph, and weighted directed graph. For each representation, the model is trained over several trials until a small error is obtained. To obtain the best model, the DNN hyperparameters are tuned, and the tuning result for one key point representation is reused to build the models for the other representations. The best model is then used for sentiment analysis, and its performance is measured using several metrics. This is done for all key point representations.
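A sketch of the classifier configuration, using scikit-learn's `MLPClassifier` as a stand-in for the study's DNN (the framework, activation, optimizer, and epoch count used in the paper are not stated here). It keeps the three hidden layers of 64, 64, and 90 units reported in the abstract and the 90/10 train/test split described in Section 3.5; the synthetic data are placeholders for real key point features.

```python
import numpy as np
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier


def train_dnn(X, y, seed=0):
    """Train a 64-64-90 MLP on a 90/10 split and return (model, test accuracy).

    Stand-in: scikit-learn's MLPClassifier, not necessarily the study's framework.
    """
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.1, random_state=seed)
    model = MLPClassifier(hidden_layer_sizes=(64, 64, 90), max_iter=500,
                          random_state=seed)
    model.fit(X_train, y_train)
    return model, accuracy_score(y_test, model.predict(X_test))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(300, 20))         # synthetic stand-in features
    y = np.digitize(X[:, 0], [-0.5, 0.5])  # three synthetic classes 0/1/2
    model, acc = train_dnn(X, y)
    print(f"test accuracy: {acc:.2f}")
```

Tuning would vary arguments such as `hidden_layer_sizes` or `max_iter`; per the text, the setting found for one representation is reused for the others.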

The best classification result, obtained with the directed graph key point representation and the DNN, is compared with the results of other models, namely Logistic Regression (LR), Random Forest (RF), Decision Tree (DT), and Support Vector Machine (SVM), to determine whether these models combined with the directed graph representation can outperform the DNN. In addition, to further evaluate the proposed graph representation, the sentiment of the meme images was also determined with several deep learning models that do not use the graph representation of key points, namely CNN, MobileNet, VGG16, ResNet50, Xception, and InceptionV3. The evaluation results were also compared with the results of previous research.
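The baseline comparison can be outlined with scikit-learn by fitting all four models on the same split of the directed-graph features. Default hyperparameters are an assumption (the study's settings for LR, RF, DT, and SVM are not given here), and macro-averaged F1 is one reasonable choice for three classes.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def compare_models(X, y, seed=0):
    """Fit LR, RF, DT, and SVM on the same 90/10 split; return accuracy and macro F1."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.1, random_state=seed)
    models = {
        "LR": LogisticRegression(max_iter=1000),
        "RF": RandomForestClassifier(random_state=seed),
        "DT": DecisionTreeClassifier(random_state=seed),
        "SVM": SVC(),
    }
    scores = {}
    for name, model in models.items():
        predictions = model.fit(X_train, y_train).predict(X_test)
        scores[name] = {
            "accuracy": accuracy_score(y_test, predictions),
            "macro_f1": f1_score(y_test, predictions, average="macro", zero_division=0),
        }
    return scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(300, 10))         # synthetic stand-in features
    y = np.digitize(X[:, 0], [-0.5, 0.5])  # three synthetic classes
    for name, s in compare_models(X, y).items():
        print(name, round(s["accuracy"], 2), round(s["macro_f1"], 2))
```

Using one fixed split for every model keeps the comparison fair, since all models see exactly the same test samples.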

3.5. Evaluation

An evaluation was carried out to determine the optimal proposed model for sentiment analysis of the meme images. The dataset was divided into training (90%) and testing (10%) data. Performance evaluation was conducted for all the key point representations. Several evaluation metrics were used, namely accuracy, precision, recall, and F1-score. In the evaluation, it was shown that the resulting accuracy on the meme dataset was not as good as that on the human face dataset.

4. Experiments

This section provides an in-depth analysis of the performance of the proposed model. The efficacy of the proposed model in carrying out sentiment analysis of meme images is detailed and measured using several evaluation metrics. This is followed by a comparative analysis, in which the performance of the proposed model is compared to that of baseline models and models described in previous research.

4.1. Dataset

In total, 2200 meme images were collected and labeled by two annotators. The reliability of the labeling process was assessed with the multiclass Kappa coefficient, which yielded 0.36, indicating fair agreement [42]. To keep only consistently labeled data, images that the two annotators labeled differently were excluded, leaving 676 meme images. During initial data processing in Python 3, 32 unrecognized images were discarded, resulting in 644 meme images for further analysis. The class distribution of the dataset is 35.1% positive, 47.7% negative, and 17.2% neutral.
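For reference, a minimal Cohen's kappa computation, assuming that the "multiclass Kappa coefficient" refers to this standard two-annotator statistic and that labels are coded 0 (negative), 1 (neutral), and 2 (positive). It compares observed agreement with the agreement expected by chance from each annotator's label distribution.

```python
import numpy as np


def cohen_kappa(labels_a, labels_b, n_classes=3):
    """Cohen's kappa for two annotators with integer labels 0..n_classes-1."""
    confusion = np.zeros((n_classes, n_classes))
    for a, b in zip(labels_a, labels_b):
        confusion[a, b] += 1
    n = confusion.sum()
    p_observed = np.trace(confusion) / n
    # Chance agreement from each annotator's marginal label distribution.
    p_expected = np.sum(confusion.sum(axis=1) * confusion.sum(axis=0)) / n**2
    return (p_observed - p_expected) / (1.0 - p_expected)


if __name__ == "__main__":
    annotator_a = [0, 0, 1, 2, 2, 0]  # toy labels, not the study's data
    annotator_b = [0, 1, 1, 2, 0, 0]
    print(round(cohen_kappa(annotator_a, annotator_b), 3))
```

Kappa is 1 for perfect agreement and 0 when agreement is no better than chance, which is why a raw agreement rate alone would overstate reliability.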

To determine whether the feature values differ among the negative, neutral, and positive groups, the means of the three groups were compared. Table 2 shows the results of statistical testing for the weighted graph data. With α = 5% and the null hypothesis H0 that the means of all groups are equal, a Sig value < 0.05 was obtained, so H0 was rejected, indicating that the mean values of the sentiment groups differ. The same result was obtained for the directed graph data: Table 3 shows that for α = 5%, F-value = 5.821 and F-table = 2.372, and since F-value > F-table, the mean values of the sentiment groups differ.
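The F-value versus F-table comparison can be illustrated for a single feature with a one-way ANOVA. This is a univariate sketch only: Table 3 reports a multivariate test, and the toy values are not the study's data. `scipy.stats.f.ppf` supplies the critical value.

```python
import numpy as np
from scipy import stats


def one_way_anova(groups, alpha=0.05):
    """F-value of a one-way ANOVA and the critical F-table value at level alpha."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    f_value = (ss_between / (k - 1)) / (ss_within / (n - k))
    f_table = stats.f.ppf(1 - alpha, k - 1, n - k)  # reject H0 if f_value > f_table
    return f_value, f_table


if __name__ == "__main__":
    negative, neutral, positive = [1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]
    f_value, f_table = one_way_anova([negative, neutral, positive])
    print(f"F = {f_value:.3f}, F-table = {f_table:.3f}, reject H0: {f_value > f_table}")
```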

Table 2.

Statistics result of representation in weighted graph.

Table 3.

Multivariate analysis of variance of representation in directed graph.

4.2. Performance Evaluation

We evaluated the proposed model on meme images collected from several social media platforms, including Twitter, Facebook, and Instagram. Sentiment analysis performance was evaluated using four metrics commonly used in image classification, namely precision, recall, F1-score, and accuracy. Table 4 shows the performance of the proposed model with the DNN architecture. These metrics are based on four outcomes of the classification process, namely True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN). Because there are three sentiment classes, these outcomes are counted for each class in turn: TP is the number of samples of that class correctly predicted as that class, FP the number of samples of other classes incorrectly predicted as that class, TN the number of samples of other classes correctly predicted as not belonging to it, and FN the number of samples of that class incorrectly predicted as another class. The evaluation metrics are calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
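The metrics can be computed per class from a confusion matrix using one-vs-rest counts. A sketch, assuming rows are true classes and columns are predicted classes; accuracy is taken here as the overall share of correct predictions (the usual multiclass reading), and any averaging across classes (macro or weighted) is left to the caller, since the text does not specify it.

```python
import numpy as np


def per_class_metrics(confusion):
    """Precision, recall, and F1 per class, plus overall accuracy, from a confusion matrix.

    Rows are true classes and columns are predicted classes (one-vs-rest counts).
    """
    cm = np.asarray(confusion, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as the class but belonging to another
    fn = cm.sum(axis=1) - tp  # belonging to the class but predicted as another
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()  # overall share of correctly classified samples
    return precision, recall, f1, accuracy


if __name__ == "__main__":
    toy = [[3, 1], [2, 4]]  # toy two-class matrix, not the study's Table 5
    print(per_class_metrics(toy))
```

Per-class recall is what Table 5's percentages describe (for example, the share of negative memes correctly classified), while a class that is never predicted gets a precision of 0 rather than a division error.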

Based on Table 4, the directed graph representation of key points resulted in the best accuracy among the representations. Table 5 shows that the DNN model correctly classified 97.37% of the meme images with negative sentiment, 27.27% of those with neutral sentiment, and 82.35% of those with positive sentiment. Across the entire test set, correctly predicted neutral images account for only 4.55% of the samples. Most of the meme images with neutral sentiment were predicted to have negative sentiment (71.21%). The dataset is dominated by the negative class, and class imbalance can significantly affect machine learning classification because the model becomes biased toward the majority class, treating majority-class training samples as more important, while minority-class data may even be regarded as noise [43].

Table 4.

Performance of the proposed model.

Table 5.

Confusion matrix for directed graph.

The sentiment accuracy obtained is still lower than that achieved on the human face dataset (FER2013). Therefore, statistical testing was carried out to examine the differences between sentiment groups for each feature of the directed graph representation. A MANOVA test (Wilks’ Lambda) yielded a significant p-value for both the meme and human face datasets. Further individual tests on the 181 features showed that 88.4% of the features differed between sentiment groups in the human face dataset, compared with only 53% in the meme dataset. Because far more features separate the sentiment groups in the human face dataset, its evaluation results were better than those of the meme dataset.
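Both analyses can be sketched with NumPy and SciPy: Wilks' Lambda from the within-group (W) and between-group (B) scatter matrices, and the share of features whose individual one-way ANOVA is significant at α = 5%. Using one-way ANOVA for the individual tests is an assumption, since the text does not name the per-feature test, and the p-value for Wilks' Lambda (normally from an F or chi-square approximation) is omitted here.

```python
import numpy as np
from scipy import stats


def wilks_lambda(X, y):
    """Wilks' Lambda = det(W) / det(W + B) for a one-way MANOVA (smaller = more separated)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    grand_mean = X.mean(axis=0)
    p = X.shape[1]
    W = np.zeros((p, p))  # within-group sums of squares and cross-products
    B = np.zeros((p, p))  # between-group sums of squares and cross-products
    for label in np.unique(y):
        Xg = X[y == label]
        centered = Xg - Xg.mean(axis=0)
        W += centered.T @ centered
        diff = (Xg.mean(axis=0) - grand_mean)[:, None]
        B += len(Xg) * (diff @ diff.T)
    # Log-determinants avoid overflow/underflow when there are many features.
    _, logdet_w = np.linalg.slogdet(W)
    _, logdet_t = np.linalg.slogdet(W + B)
    return float(np.exp(logdet_w - logdet_t))


def fraction_significant(X, y, alpha=0.05):
    """Share of features whose individual one-way ANOVA p-value is below alpha."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    groups = [X[y == label] for label in np.unique(y)]
    pvalues = [stats.f_oneway(*(g[:, j] for g in groups)).pvalue for j in range(X.shape[1])]
    return float(np.mean(np.asarray(pvalues) < alpha))
```

Comparing `fraction_significant` across the two datasets corresponds to the 88.4% versus 53% contrast reported above.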

The confusion matrix in Table 5 shows that most samples with neutral sentiment were predicted as negative, and no negative or positive samples were predicted as neutral. This is likely due to the relatively small number of neutral samples compared with the other classes.

Figure 9 shows the accuracy and loss curves over 100 epochs. The accuracy curve becomes stable at around the 80th epoch. The training loss curve stabilizes at around the 60th epoch, whereas the testing loss curve fluctuates until the 100th epoch, although the fluctuation is not large.

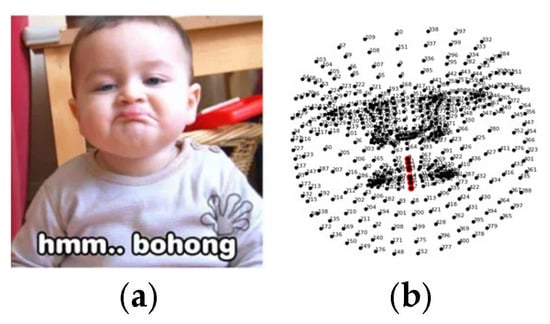

Figure 9.

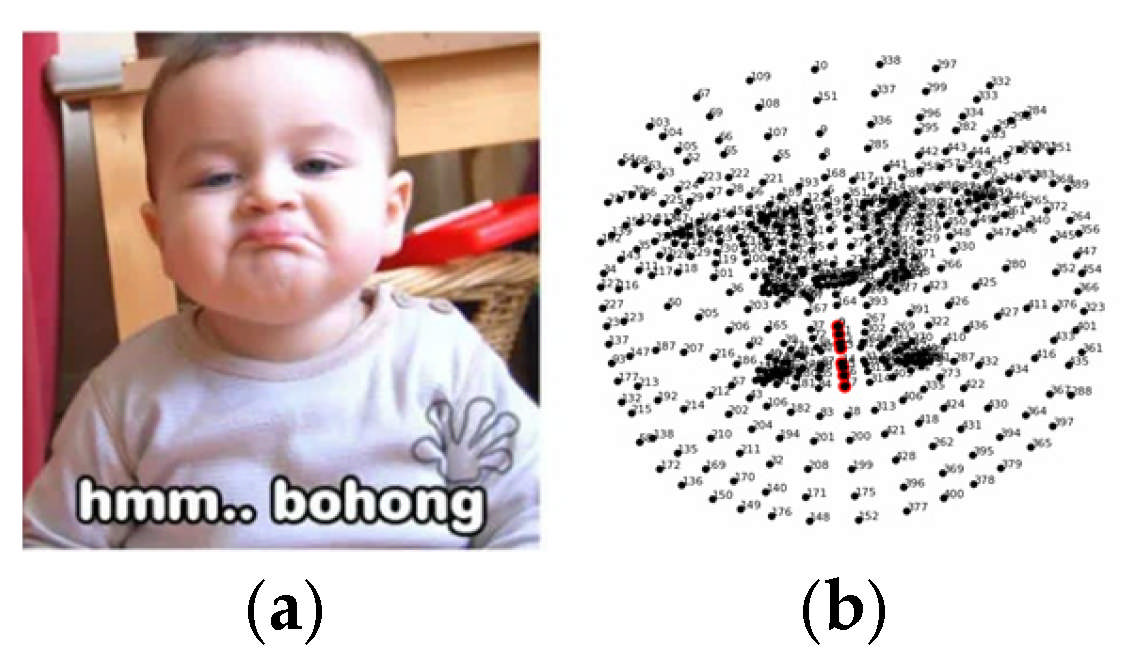

Example of key point errors from MediaPipe: (a) meme image, (b) key points.

4.3. Comparison with Other Models

Based on the results in Table 4, the DNN model obtained the best performance with the directed graph and weighted directed graph key point representations. To further evaluate the DNN, a comparative analysis was carried out using other models with the directed graph key point representation, namely DT, LR, RF, and SVM. The performance results of all methods are shown in Table 6.

Table 6.

Performance comparison of several models with directed graph representation.

Based on Table 6, the DNN model obtained the best performance in terms of accuracy, precision, recall and F1-score compared to the other models. SVM produced the lowest performance.

4.4. Comparison with Previous Research

In this section, the performance of the DNN model with the directed graph key point representation was compared with the results reported in [24,25]. Both studies used meme datasets, and their results are taken from the experiments presented in [24,25]. In addition, the meme image dataset in this study was evaluated using MobileNet, VGG16, ResNet50, Xception, and InceptionV3 models without key points. The performance evaluation results of these models are presented in Table 7. As Table 7 shows, the best result in previous research was obtained by Kumar et al. [24] using Bag of Visual Words and SVM, with an accuracy, precision, and recall of 0.75, 0.75, and 0.79, respectively.

Table 7.

Performance comparison of several models.

The DNN model using the directed graph key point representation outperformed all the previous models and the baseline deep learning models. In particular, it outperformed the method by Kumar et al. [24] by approximately 8 percentage points in accuracy (0.83 versus 0.75). This improvement is mainly attributed to the use of key points, which distinguishes the proposed method from the previous models: key points enable the model to focus on the eyes, eyebrows, and mouth, the facial features selected for analysis.

5. Discussion

The results presented in the previous section indicate that the DNN model with the directed graph key point representation achieved better performance than the baseline models and the other deep learning models. This section investigates how several factors affect the ability of the model to determine the sentiment of meme images.

There is a significant difference between the accuracy of the DNN model with the directed graph key point representation on meme images and its accuracy on non-meme images: the sentiment accuracy achieved on meme images was not as high as that achieved on publicly available human face images. The two kinds of dataset differ in several ways. The publicly available human facial image datasets (FER2013, JAFFE, CK+) are facial expression datasets built specifically to show happy, sad, angry, neutral, or other facial expressions, so the expressions can be clearly differentiated. Meme images are different. In the meme dataset, facial expressions are labeled by annotators who are facial expression experts, and some meme images admit different interpretations, so different experts labeled the same image differently. To reduce the bias caused by such labeling differences, only meme images that received the same label from the annotators were included in the dataset.
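The filtering rule described above can be sketched as follows, assuming the annotations are stored as a mapping from a hypothetical image identifier to the list of labels given by each annotator. Only images with unanimous labels are kept, and the fraction retained is reported as a simple agreement rate; the data layout and names are illustrative.

```python
# Keep only images on which all annotators agree (hypothetical data layout).


def filter_unanimous(annotations):
    """annotations: {image_id: [label per annotator]}.
    Returns ({image_id: agreed label}, fraction of images kept)."""
    kept = {image_id: labels[0]
            for image_id, labels in annotations.items()
            if labels and len(set(labels)) == 1}
    rate = len(kept) / len(annotations) if annotations else 0.0
    return kept, rate


# Hypothetical labels from two annotators.
annotations = {
    "meme_001": ["negative", "negative"],
    "meme_002": ["neutral", "negative"],   # disagreement -> excluded
    "meme_003": ["positive", "positive"],
    "meme_004": ["positive", "neutral"],   # disagreement -> excluded
}
dataset, agreement = filter_unanimous(annotations)
print(dataset)    # {'meme_001': 'negative', 'meme_003': 'positive'}
print(agreement)  # 0.5
```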

Compared with previous studies on meme datasets, the results of this study show an increase in accuracy. Kumar’s study used a hybrid CNN-SVM and achieved an accuracy of 75.4%, classifying the images directly with a CNN without any special treatment of the images. In contrast, the proposed method extracts key points from each image, represents them as a directed graph, and classifies these directed graph features into positive, negative, or neutral sentiment. This method yields an accuracy approximately 8 percentage points higher than Kumar’s.
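The size of the improvement depends on whether it is expressed in absolute or relative terms. Using the proposed model’s accuracy of 0.83 and the 75.4% reported for Kumar’s study, the gain is about 7.6 percentage points (rounded to 8 in the text), or roughly 10% relative to Kumar’s accuracy:

```python
# Absolute vs. relative accuracy gain, using the figures quoted in the text.
proposed_accuracy = 0.83  # proposed DNN with directed graph key points
kumar_accuracy = 0.754    # Kumar's study, as reported above

absolute_gain = proposed_accuracy - kumar_accuracy
relative_gain = absolute_gain / kumar_accuracy
print(f"absolute gain: {absolute_gain * 100:.1f} percentage points")  # 7.6
print(f"relative gain: {relative_gain * 100:.1f}%")                   # 10.1%
```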

The use of the MediaPipe library to determine key points from images considerably aids the classification process. The key points produced by MediaPipe are more precise than those produced by the OpenPose library. MediaPipe represents the face with 468 key points, whereas OpenPose uses 70. Even though MediaPipe provides many more key points, it fails to detect the mouth correctly in some images where the mouth shape is extreme. Figure 9 shows a mouth expression for which the extracted key points do not match the expression in the original image. Such inaccuracies in describing expressions can cause sentiment classification errors: in Figure 9, the annotator labeled the image as negative, whereas the system classified it as neutral.

Key points are represented in five forms: (x, y) coordinates, vectors centered on one point, a directed graph, a weighted graph, and a weighted directed graph. Based on the experimental results, the (x, y) coordinate representation of key points resulted in the lowest accuracy. This is because absolute coordinates depend on the position of the key points in the image. The representation using vectors centered on one point produced higher accuracy than the (x, y) coordinate representation. In this representation, each key point is expressed in coordinates relative to the point chosen as the centroid of the key points of each eye, eyebrow, and mouth shape. Expressed in relative coordinates, the vector-based key point data reveal the distinctive characteristics of each facial feature for a specific sentiment, which results in better accuracy than the (x, y) coordinate representation.
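A minimal sketch of the centroid-centered representation, assuming each facial feature is given as a list of (x, y) pixel coordinates and that its centroid is the arithmetic mean of those points (the paper does not state how the centroid is chosen). The final check shows why relative coordinates help: translating the whole face leaves the vectors unchanged, whereas raw (x, y) coordinates would change.

```python
# Centroid-centred key point vectors for one facial feature.
# Assumption: the centroid is the mean of the feature's key points.


def to_centroid_vectors(points):
    """Map [(x, y), ...] to coordinates relative to the points' centroid."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return [(x - cx, y - cy) for x, y in points]


# Hypothetical mouth key points in pixel coordinates.
mouth = [(100, 200), (120, 210), (140, 200), (120, 190)]
shifted = [(x + 30, y - 15) for x, y in mouth]  # same mouth, moved in the image

print(to_centroid_vectors(mouth))  # [(-20.0, 0.0), (0.0, 10.0), (20.0, 0.0), (0.0, -10.0)]
print(to_centroid_vectors(mouth) == to_centroid_vectors(shifted))  # True
```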

Better accuracy is obtained when key points are represented as graphs. In the directed graph representation, every pair of adjacent key points is expressed as a vector, so the data consist of the relative coordinates of consecutive key points. In the weighted graph representation, the weight is the angle between two key points. The experimental results show that the directed graph representation produced better accuracy than the weighted graph representation. Although the weighted directed graph representation produced almost the same accuracy as the directed graph representation, its learning process took longer.
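The three graph representations can be sketched for a single facial feature as follows. Assumptions: the key points are ordered along the contour and joined as an open chain of consecutive pairs, and the "angle between two key points" is taken as the orientation of the segment joining them relative to the x axis, computed with atan2 in degrees. In image coordinates, where y grows downward, the sign of this angle is mirrored relative to a standard Cartesian frame.

```python
import math

# Graph-based features for one facial feature's ordered key points.
# Assumptions: consecutive key points form an open chain of edges, and the
# edge weight is the segment's orientation (degrees) measured with atan2.


def directed_edges(points):
    """Directed graph: each edge is the (dx, dy) vector between consecutive key points."""
    return [(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in zip(points, points[1:])]


def edge_angles(points):
    """Weighted graph: each edge weight is the angle of the segment, in degrees."""
    return [math.degrees(math.atan2(dy, dx)) for dx, dy in directed_edges(points)]


def weighted_directed_edges(points):
    """Weighted directed graph: direction vector plus angle weight per edge."""
    return [(dx, dy, math.degrees(math.atan2(dy, dx)))
            for dx, dy in directed_edges(points)]


# Hypothetical eyebrow key points (x, y).
eyebrow = [(0, 0), (10, 0), (20, 10), (30, 10)]
print(directed_edges(eyebrow))  # [(10, 0), (10, 10), (10, 0)]
print(edge_angles(eyebrow))     # approximately [0.0, 45.0, 0.0]
```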

The performance of the DNN model with the directed graph representation is also compared with that of other models using the same directed graph representation. The purpose of this comparison is to determine whether the directed graph representation can also improve the accuracy of other models.

Initially, the parameter values were chosen based on the test results in [22], namely three hidden layers of sizes i = 13, j = 13, and k = 14, with activation = relu and solver = adam. Parameter tuning was then carried out to obtain optimal results, using the following settings:

- Size of each hidden layer: 10 to 100;

- Activation: tanh, relu, and logistic;

- Solver: SGD, adam and L-BFGS;

- Learning rate: constant, adaptive.
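The search space listed above can be enumerated as a parameter grid. The parameter names and values mirror the `hidden_layer_sizes`, `activation`, `solver`, and `learning_rate` arguments of scikit-learn’s `MLPClassifier`, although the paper does not say which library was used. The step of 10 for the layer sizes is an assumption; since the reported best sizes (64, 64, 90) are not all multiples of 10, the actual search evidently sampled sizes differently.

```python
from itertools import product

# Search space from the list above. The step of 10 for layer sizes is an
# assumption; names/values mirror scikit-learn's MLPClassifier arguments.
LAYER_SIZES = range(10, 101, 10)
ACTIVATIONS = ["tanh", "relu", "logistic"]
SOLVERS = ["sgd", "adam", "lbfgs"]
LEARNING_RATES = ["constant", "adaptive"]


def parameter_grid():
    """Yield one candidate configuration per combination of settings."""
    for i, j, k, activation, solver, learning_rate in product(
            LAYER_SIZES, LAYER_SIZES, LAYER_SIZES,
            ACTIVATIONS, SOLVERS, LEARNING_RATES):
        yield {
            "hidden_layer_sizes": (i, j, k),
            "activation": activation,
            "solver": solver,
            "learning_rate": learning_rate,
        }


grid = list(parameter_grid())
print(len(grid))  # 10**3 * 3 * 3 * 2 = 18000
```

In scikit-learn, `learning_rate` only takes effect when `solver='sgd'`, so an exhaustive grid like this one contains redundant combinations for the `adam` and `lbfgs` solvers.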

Parameter tuning increased accuracy by 1.1–3.0%. The best accuracy was obtained with the following parameters:

- Hidden layer: i = 64, j = 64, k = 90;

- Activation: tanh;

- Solver: SGD;

- Learning rate: adaptive.

6. Conclusions

In this study, a model for sentiment analysis of meme images is proposed. The proposed method uses a DNN architecture to classify the meme images. Facial features such as the eyes, eyebrows, and mouth are extracted using key points, and the key points of each facial feature are then represented in various forms, namely (x, y) coordinates, vectors centered on a single point, a directed graph, a weighted graph, and a weighted directed graph. The weight of the graph is the angle between two adjacent key points, and the direction gives the relative coordinates of the two adjacent key points. These representations are used to identify the characteristics of each sentiment polarity in the meme images. Statistical testing was conducted to determine whether the sentiment group data differed significantly, and it showed that the mean values of the sentiment groups differed. Sentiment analysis was then carried out on the dataset using the proposed method, and its classification results were compared with those of other models. The experimental results indicate that the directed graph representation yielded the best performance, with an accuracy, recall, precision, and F1 score of 0.83, 0.83, 0.86, and 0.81, respectively. These best results were obtained by tuning several parameters, namely the hidden layer sizes, activation function, solver, and learning rate.

Future work could improve the performance of the proposed method for meme image sentiment analysis by modifying the architecture, for instance, by improving how key points are represented from images. In addition, the use of key points for image classification is not limited to human faces; key points can also be used for non-human images (e.g., animals), non-face images (e.g., hands), or other images. To make better use of the data, further research could improve dataset efficiency by adding a third annotator, so that image labels are handled by three annotators, and by performing data augmentation (vertical flip).

Author Contributions

Conceptualization, E.A., A.S. and D.O.S.; methodology, E.A., A.S. and D.O.S.; software, E.A.; validation, E.A.; formal analysis, A.S. and D.O.S.; investigation, E.A., A.S. and D.O.S.; resources, E.A.; data curation, E.A. and A.S.; writing—original draft preparation, E.A.; writing—review and editing, A.S. and D.O.S.; visualization, E.A.; supervision, A.S. and D.O.S.; project administration, A.S.; funding acquisition, A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Institut Teknologi Sepuluh Nopember, Surabaya, Indonesia and Universitas Surabaya under Grant Pakerti ITS-Ubaya-no 1777/PKS/ITS/2023.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in FigShare at doi: 10.6084/m9.figshare.28917455.

Acknowledgments

We also want to thank Institut Teknologi Sepuluh Nopember and Universitas Surabaya for funding this research under Grant Pakerti ITS-Ubaya.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tesaurus Meme. Available online: https://kbbi.kemdikbud.go.id/ (accessed on 13 July 2021).

- Mertiya, M.; Singh, A. Combining naive bayes and adjective analysis for sentiment detection on Twitter. In Proceedings of the 2016 International Conference on Inventive Computation Technologies, Coimbatore, India, 26–27 August 2016; Volume 2, pp. 1–6.

- Pozzi, F.A.; Fersini, E.; Messina, E.; Liu, B. Challenges of Sentiment Analysis in Social Networks: An Overview; Elsevier Inc.: Amsterdam, The Netherlands, 2017; ISBN 9780128044384.

- D’Andrea, A.; Ferri, F.; Grifoni, P.; Guzzo, T. Approaches, Tools and Applications for Sentiment Analysis Implementation. Int. J. Comput. Appl. 2015, 125, 26–33.

- Chaudhry, H.N.; Javed, Y.; Kulsoom, F.; Mehmood, Z.; Khan, Z.I.; Shoaib, U.; Janjua, S.H. Sentiment analysis of before and after elections: Twitter data of U.S. election 2020. Electronics 2021, 10, 2082.

- Hashim, R.; Omar, B.; Saeed, N.; Ba-Anqud, A.; Al-Samarraie, H. The Application of Sentiment Analysis in Tourism Research: A Brief Review. IJBTS Int. J. Bus. Tour. Appl. Sci. 2020, 8, 51–60.

- Hemamalini, U.; Perumal, S. Literature review on sentiment analysis. Int. J. Sci. Technol. Res. 2020, 9, 2009–2013.

- Kumar, A.; Sharma, A. Systematic Literature Review on Opinion Mining of Big Data for Government Intelligence. Webology 2017, 14, 6–47.

- Almosawi, M.M.; Mahmood, S.A. Lexicon-Based Approach for Sentiment Analysis to Student Feedback. Webology 2022, 19, 6971–6989.

- Meena, G.; Mohbey, K.K.; Kumar, S. Sentiment analysis on images using convolutional neural networks based Inception-V3 transfer learning approach. Int. J. Inf. Manag. Data Insights 2023, 3, 100174.

- Meena, G.; Mohbey, K.K.; Indian, A.; Kumar, S. Sentiment Analysis from Images using VGG19 based Transfer Learning Approach. Procedia Comput. Sci. 2022, 204, 411–418.

- Moung, E.G.; Wooi, C.C.; Sufian, M.M.; On, C.K.; Dargham, J.A. Ensemble-based face expression recognition approach for image sentiment analysis. Int. J. Electr. Comput. Eng. 2022, 12, 2588–2600.

- Patel, K.; Mehta, D.; Mistry, C.; Gupta, R.; Tanwar, S.; Kumar, N.; Alazab, M. Facial Sentiment Analysis Using AI Techniques: State-of-the-Art, Taxonomies, and Challenges. IEEE Access 2020, 8, 90495–90519.

- Aksoy, O.E.; Güney, S. Sentiment Analysis from Face Expressions Based on Image Processing Using Deep Learning Methods. J. Adv. Res. Nat. Appl. Sci. 2022, 8, 736–752.

- de Paula, D.; Alexandre, L.A. Facial Emotion Recognition for Sentiment Analysis of Social Media Data. In Proceedings of the 10th Iberian Conference, IbPRIA 2022, Aveiro, Portugal, 4–6 May 2022; Volume 13256, pp. 207–217.

- Gan, Y.; Xu, L.; Song, S.; Tao, X. Context transformer with multiscale fusion for robust facial emotion recognition. Pattern Recognit. 2025, 167, 111720.

- So, J.; Han, Y. Facial Landmark-Driven Keypoint Feature Extraction for Robust Facial Expression Recognition. Sensors 2025, 25, 3762.

- Avvaru, A.; Vobilisetty, S. BERT at SemEval-2020 Task 8: Using BERT to analyse meme emotions. In Proceedings of the Fourteenth Workshop on Semantic Evaluation, Barcelona, Spain, 12–13 December 2020; pp. 1094–1099.

- Aggarwal, A.; Sharma, V.; Trivedi, A.; Yadav, M.; Agrawal, C.; Singh, D.; Mishra, V.; Gritli, H. Two-Way Feature Extraction Using Sequential and Multimodal Approach for Hateful Meme Classification. Complexity 2021, 2021, 510253.

- Thapa, S.; Shah, A.; Jafri, F.; Naseem, U.; Razzak, I. A Multi-Modal Dataset for Hate Speech Detection on Social Media: Case-study of Russia-Ukraine Conflict. In Proceedings of the 5th Workshop on Challenges and Applications of Automated Extraction of Socio-political Events from Text (CASE), Abu Dhabi, United Arab Emirates, 7–December 2022; pp. 1–6.

- Prakash, T.N.; Aloysius, A. Hybrid Approaches Based Emotion Detection in Memes Sentiment Analysis. Int. J. Eng. Res. Technol. 2021, 14, 151–155.

- Rosid, M.A.; Siahaan, D.; Saikhu, A. Sarcasm Detection in Indonesian-English Code-Mixed Text Using Multihead Attention-Based Convolutional and Bi-Directional GRU. IEEE Access 2024, 12, 137063–137079.

- Suryawanshi, S.; Chakravarthi, B.R.; Arcan, M.; Buitelaar, P. Multimodal Meme Dataset (MultiOFF) for Identifying Offensive Content in Image and Text. In Proceedings of the Second Workshop on Trolling, Aggression and Cyberbullying, Marseille, France, 11–16 May 2020; pp. 32–41.

- Kumar, A.; Srinivasan, K.; Cheng, W.H.; Zomaya, A.Y. Hybrid context enriched deep learning model for fine-grained sentiment analysis in textual and visual semiotic modality social data. Inf. Process. Manag. 2020, 57, 102141.

- Elahi, K.T.; Rahman, T.B.; Shahriar, S.; Sarker, S. Explainable Multimodal Sentiment Analysis on Bengali Memes. In Proceedings of the 26th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 13–15 December 2023; pp. 1–6.

- Arwoko, H.; Yuniarno, E.M.; Purnomo, M.H. Hand Gesture Recognition Based on Keypoint Vector. In Proceedings of the IES 2022 International Electronics Symposium: Energy Development for Climate Change Solution and Clean Energy Transition, Surabaya, Indonesia, 9–11 August 2022; pp. 530–533.

- Hangaragi, S.; Singh, T.; N, N. Face Detection and Recognition Using Face Mesh and Deep Neural Network. Procedia Comput. Sci. 2023, 218, 741–749.

- Albadani, B.; Shi, R.; Dong, J.; Al-Sabri, R.; Moctard, O.B. Transformer-Based Graph Convolutional Network for Sentiment Analysis. Appl. Sci. 2022, 12, 1316.

- Liang, Y.; Han, D.; He, Z.; Kong, B.; Wen, S. SceEmoNet: A Sentiment Analysis Model with Scene Construction Capability. Appl. Sci. 2025, 15, 8588.

- Lu, X.; Ni, Y.; Ding, Z. Cross-Modal Sentiment Analysis Based on CLIP Image-Text Attention Interaction. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 895–903.

- Kumar, A.; Garg, G. Sentiment analysis of multimodal twitter data. Multimed. Tools Appl. 2019, 78, 24103–24119.

- Asmawati, E.; Saikhu, A.; Siahaan, D. Sentiment Analysis of Text Memes: A Comparison among Supervised Machine Learning Methods. In Proceedings of the 9th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Jakarta, Indonesia, 6–7 October 2022; pp. 349–354.

- Rosid, M.A.; Siahaan, D.; Saikhu, A. Improving Sarcasm Detection in Mash-Up Language Through Hybrid Pretrained Word Embedding. In Proceedings of the IEEE 8th International Conference on Software Engineering and Computer Systems (ICSECS), Penang, Malaysia, 25–27 August 2023; pp. 58–63.

- Nguyen, B.H.; Huynh, V.N. Textual analysis and corporate bankruptcy: A financial dictionary-based sentiment approach. J. Oper. Res. Soc. 2022, 73, 102–121.

- Ghobakhloo, M.; Ghobakhloo, M. Design of a personalized recommender system using sentiment analysis in social media (case study: Banking system). Soc. Netw. Anal. Min. 2022, 12, 1–16.

- George, O.A.; Ramos, C.M.Q. Sentiment analysis applied to tourism: Exploring tourist-generated content in the case of a wellness tourism destination. Int. J. Spa Wellness 2024, 7, 139–161.

- Yan, W.; Zhou, L.; Qian, Z.; Xiao, L.; Zhu, H. Sentiment Analysis of Student Texts Using the CNN-BiGRU-AT Model. Sci. Program. 2021, 2021, 8405623.

- Andrian, B.; Simanungkalit, T.; Budi, I.; Wicaksono, A.F. Sentiment Analysis on Customer Satisfaction of Digital Banking in Indonesia. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 466–473.

- Zhang, J.; Liu, J.; Ding, W.; Wang, Z. Object aroused emotion analysis network for image sentiment analysis. Knowl.-Based Syst. 2024, 286, 111429.

- Ekman, P. Membaca Emosi Orang; Think Jogjakarta: Yogyakarta, Indonesia, 2003; Available online: https://dlibrary.ittelkom-pwt.ac.id/index.php?p=show_detail&id=1896&keywords= (accessed on 13 July 2021).

- Guermazi, R.; Abdallah, T.B.; Hammami, M. Facial micro-expression recognition based on accordion spatio-temporal representation and random forests. J. Vis. Commun. Image Represent. 2021, 79, 103183.

- Cruz, R.F.; Koch, S.C. Issues of validity and reliability in the Use of Movement Observations and Scales. In Dance/Movement Therapists in Action: A Working Guide to Research Options; Cruz, R.F., Berrol, C., Eds.; Charles C Thomas: Springfield, IL, USA, 2004; pp. 45–68.

- Soon, H.F.; Amir, A.; Azemi, S.N. An Analysis of Multiclass Imbalanced Data Problem in Machine Learning for Network Attack Detections. J. Phys. Conf. Ser. 2021, 1755, 012030.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).