Research Trends in the Use of Machine Learning Applied in Mobile Networks: A Bibliometric Approach and Research Agenda

Abstract

1. Introduction

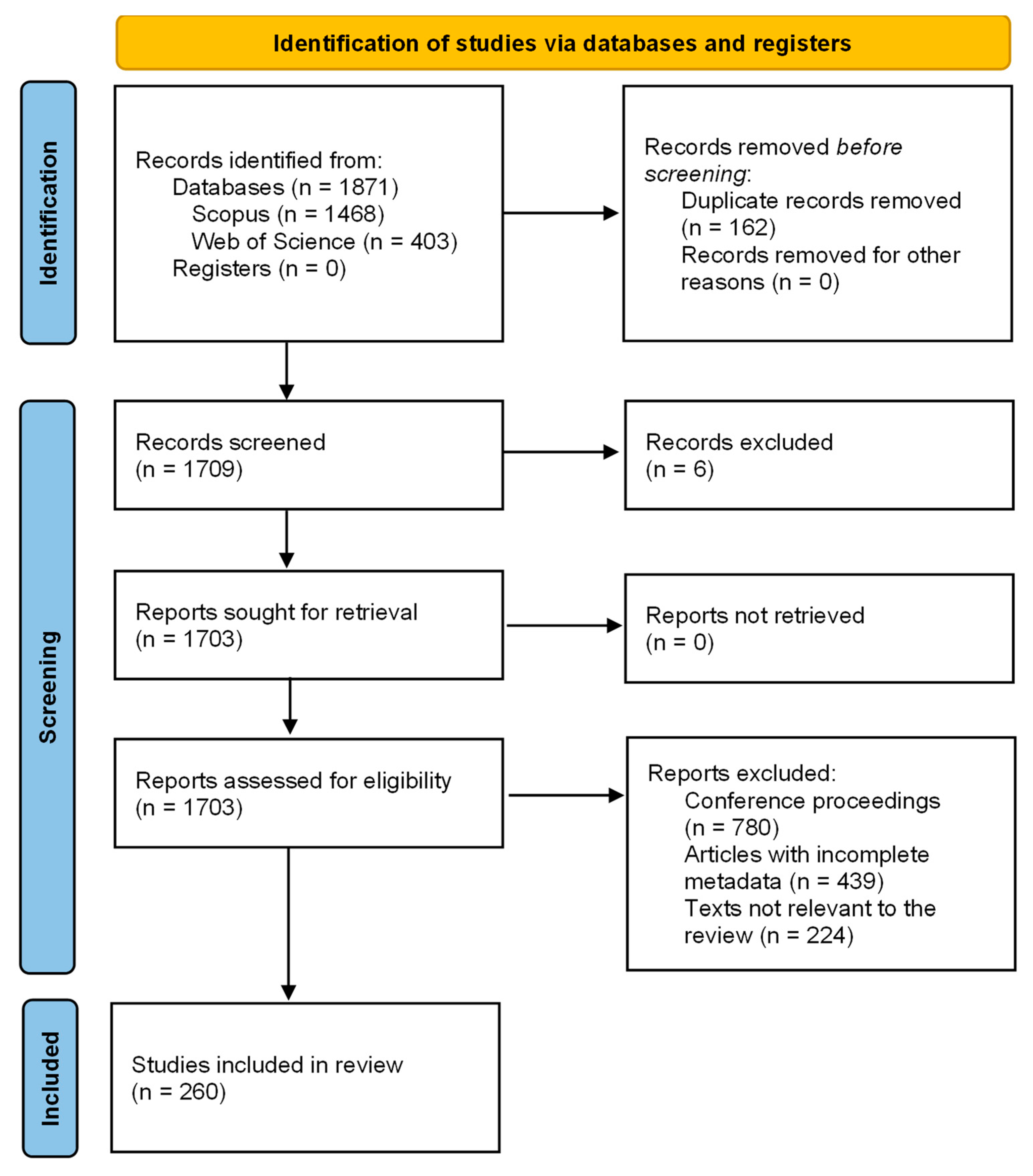

2. Materials and Methods

2.1. Eligibility Criteria

2.1.1. Inclusion Criteria

2.1.2. Exclusion Criteria

2.2. Information Sources

2.3. Search Strategy

2.4. Study Record

2.4.1. Data Management

2.4.2. Selection Process

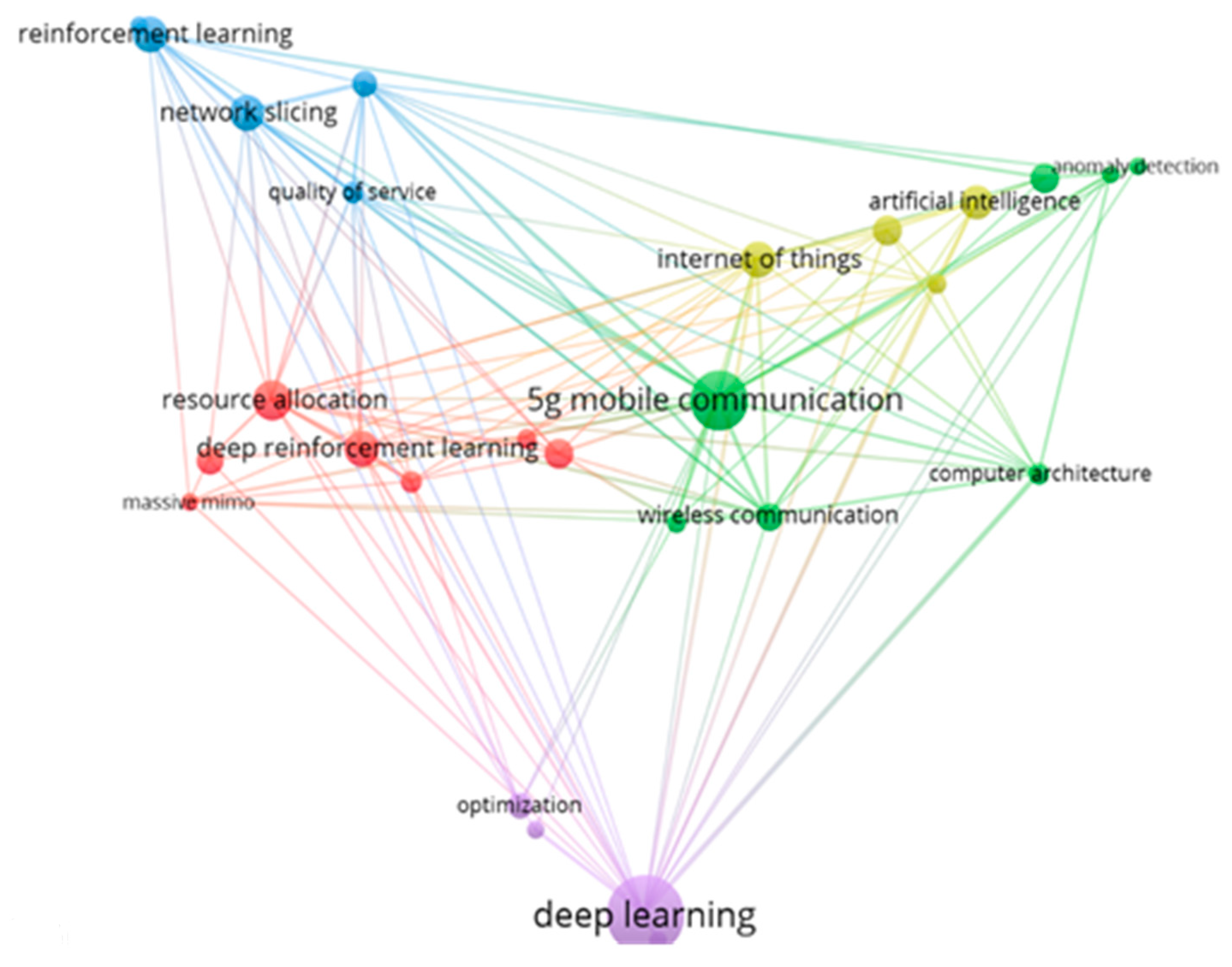

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Aryal, B.; Abbas, R.; Collings, I.B. SDN enabled DDoS attack detection and mitigation for 5G networks. J. Commun. 2021, 16, 267–275.

- Abdulqadder, I.H.; Zhou, S.; Zou, D.; Aziz, I.T.; Akber, S.M.A. Multi-layered intrusion detection and prevention in the SDN/NFV enabled cloud of 5G networks using AI-based defense mechanisms. Comput. Netw. 2020, 179, 107364.

- Abusubaih, M. Intelligent wireless networks: Challenges and future research topics. J. Netw. Syst. Manag. 2022, 30, 18.

- Jagannath, A.; Jagannath, J. Multi-task learning approach for modulation and wireless signal classification for 5G and beyond: Edge deployment via model compression. Phys. Commun. 2022, 54, 101793.

- Thang, V.V.; Pashchenko, F.F. Multistage system-based machine learning techniques for intrusion detection in WiFi network. J. Comput. Netw. Commun. 2019, 2019, 4708201.

- Thantharate, A.; Beard, C. ADAPTIVE6G: Adaptive resource management for network slicing architectures in current 5G and future 6G systems. J. Netw. Syst. Manag. 2022, 31, 9.

- Zhang, Z.; Xu, X.; Xiao, F. 5GMEC-DP: Differentially private protection of trajectory data based on 5G-based mobile edge computing. Comput. Netw. 2022, 218, 109376.

- Zhao, Y.; Li, Y.; Zhang, X.; Geng, G.; Zhang, W.; Sun, Y. A survey of networking applications applying the software defined networking concept based on machine learning. IEEE Access 2019, 7, 95397–95417.

- Dharmani, P.; Das, S.; Prashar, S. A bibliometric analysis of creative industries: Current trends and future directions. J. Bus. Res. 2021, 135, 252–267.

- Estarli, M.; Aguilar Barrera, E.S.; Martínez-Rodríguez, R.; Baladia, E.; Agüero, S.D.; Camacho, S.; Buhring, K.; Herrero-López, A.; Gil-González, D.M. Ítems de referencia para publicar protocolos de revisiones sistemáticas y metaanálisis: Declaración PRISMA-P 2015 [Reference items for publishing protocols of systematic reviews and meta-analyses: The PRISMA-P 2015 statement]. Rev. Esp. Nutr. Hum. Diet. 2016, 20, 148–160.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Moher, D. Updating guidance for reporting systematic reviews: Development of the PRISMA 2020 statement. J. Clin. Epidemiol. 2021, 134, 103–112.

- Pranckutė, R. Web of Science (WoS) and Scopus: The titans of bibliographic information in today’s academic world. Publications 2021, 9, 12.

- Zhang, C.; Patras, P.; Haddadi, H. Deep learning in mobile and wireless networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

- Björnson, E.; Sanguinetti, L.; Wymeersch, H.; Hoydis, J.; Marzetta, T.L. Massive MIMO is a reality—What is next? Five promising research directions for antenna arrays. Digit. Signal Process. 2019, 94, 3–20.

- Pham, Q.V.; Fang, F.; Ha, V.N.; Piran, M.J.; Le, M.; Le, L.B.; Hwang, W.J.; Ding, Z. A survey of multi-access edge computing in 5G and beyond: Fundamentals, technology integration, and state-of-the-art. IEEE Access 2020, 8, 116974–117017.

- Sharma, S.K.; Wang, X. Toward massive machine type communications in ultra-dense cellular IoT networks: Current issues and machine learning-assisted solutions. IEEE Commun. Surv. Tutor. 2020, 22, 426–471.

- Jiang, W.; Han, B.; Habibi, M.A.; Schotten, H.D. The road towards 6G: A comprehensive survey. IEEE Open J. Commun. Soc. 2021, 2, 334–366.

- Shajin, F.H.; Rajesh, P. FPGA realization of a reversible data hiding scheme for 5G MIMO-OFDM system by chaotic key generation-based Paillier cryptography along with LDPC and its side channel estimation using machine learning technique. J. Circuits Syst. Comput. 2022, 31, 2250093.

- Luong, N.C.; Hoang, D.T.; Gong, S.; Niyato, D.; Wang, P.; Liang, Y.C.; Kim, D.I. Applications of deep reinforcement learning in communications and networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 3133–3174.

- Xu, H.; Yu, W.; Griffith, D.; Golmie, N. A survey on industrial internet of things: A cyber-physical systems perspective. IEEE Access 2018, 6, 78238–78259.

- Dai, Y.; Xu, D.; Maharjan, S.; Chen, Z.; He, Q.; Zhang, Y. Blockchain and deep reinforcement learning empowered intelligent 5G beyond. IEEE Netw. 2019, 33, 10–17.

- Moysen, J.; Giupponi, L. From 4G to 5G: Self-organized network management meets machine learning. Comput. Commun. 2018, 129, 248–268.

- Mozaffari, M.; Kasgari, A.T.Z.; Saad, W.; Bennis, M.; Debbah, M. Beyond 5G with UAVs: Foundations of a 3D wireless cellular network. IEEE Trans. Wirel. Commun. 2018, 18, 357–372.

- Baştuğ, E.; Bennis, M.; Zeydan, E.; Kader, M.A.; Karatepe, I.A.; Er, A.S.; Debbah, M. Big data meets telcos: A proactive caching perspective. J. Commun. Netw. 2015, 17, 549–557.

- Chaccour, C.; Soorki, M.N.; Saad, W.; Bennis, M.; Popovski, P.; Debbah, M. Seven defining features of terahertz (THz) wireless systems: A fellowship of communication and sensing. IEEE Commun. Surv. Tutor. 2022, 24, 967–993.

- Xie, J.; Yu, F.R.; Huang, T.; Xie, R.; Liu, J.; Wang, C.; Liu, Y. A survey of machine learning techniques applied to software defined networking (SDN): Research issues and challenges. IEEE Commun. Surv. Tutor. 2018, 21, 393–430.

- Xu, Y.; Yin, F.; Xu, W.; Lin, J.; Cui, S. Wireless traffic prediction with scalable Gaussian process: Framework, algorithms, and verification. IEEE J. Sel. Areas Commun. 2019, 37, 1291–1306.

- Liu, Y.; Zhang, S.; Mu, X.; Ding, Z.; Schober, R.; Al-Dhahir, N.; Hossain, E.; Shen, X. Evolution of NOMA toward next generation multiple access (NGMA) for 6G. IEEE J. Sel. Areas Commun. 2022, 40, 1037–1071.

- Zhang, J.; Xu, X.; Zhang, K.; Zhang, B.; Tao, X.; Zhang, P. Machine learning based flexible transmission time interval scheduling for eMBB and uRLLC coexistence scenario. IEEE Access 2019, 7, 65811–65820.

- Park, J.; Samarakoon, S.; Bennis, M.; Debbah, M. Wireless network intelligence at the edge. Proc. IEEE 2019, 107, 2204–2239.

- Guo, F.; Yu, F.R.; Zhang, H.; Li, X.; Ji, H.; Leung, V.C.M. Enabling massive IoT toward 6G: A comprehensive survey. IEEE Internet Things J. 2021, 8, 11891–11915.

- Lopez-Perez, D.; De Domenico, A.; Piovesan, N.; Xinli, G.; Bao, H.; Qitao, S.; Debbah, M. A survey on 5G radio access network energy efficiency: Massive MIMO, lean carrier design, sleep modes, and machine learning. IEEE Commun. Surv. Tutor. 2022, 24, 653–697.

- Vukobratovic, D.; Jakovetic, D.; Skachek, V.; Bajovic, D.; Sejdinovic, D.; Karabulut Kurt, G.; Hollanti, C.; Fischer, I. CONDENSE: A reconfigurable knowledge acquisition architecture for future 5G IoT. IEEE Access 2016, 4, 3360–3378.

- Yahia, I.G.B.; Bendriss, J.; Samba, A.; Dooze, P. CogNitive 5G networks: Comprehensive operator use cases with machine learning for management operations. In Proceedings of the 2017 20th Conference on Innovations in Clouds, Internet and Networks (ICIN), Paris, France, 7–9 March 2017; IEEE: Paris, France, 2017; pp. 252–259.

- Bi, Q. Ten trends in the cellular industry and an outlook on 6G. IEEE Commun. Mag. 2019, 57, 31–36.

- Kazi, B.U.; Wainer, G.A. Next generation wireless cellular networks: Ultra-dense multi-tier and multi-cell cooperation perspective. Wirel. Netw. 2018, 25, 2041–2064.

- Faisal, K.M.; Choi, W. Machine learning approaches for reconfigurable intelligent surfaces: A survey. IEEE Access 2022, 10, 27343–27367.

- Gavrilovska, L.; Rakovic, V.; Denkovski, D. From cloud RAN to open RAN. Wirel. Pers. Commun. 2020, 113, 1523–1539.

- Mahmood, M.R.; Matin, M.A.; Sarigiannidis, P.; Goudos, S.K. A comprehensive review on artificial intelligence/machine learning algorithms for empowering the future IoT toward 6G era. IEEE Access 2022, 10, 87535–87562.

- Lim, W.Y.B.; Xiong, Z.; Miao, C.; Niyato, D.; Yang, Q.; Leung, C.; Poor, H.V. Hierarchical incentive mechanism design for federated machine learning in mobile networks. IEEE Internet Things J. 2020, 7, 9575–9588.

- Mao, Q.; Hu, F.; Hao, Q. Deep learning for intelligent wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2018, 20, 2595–2621.

- Viswanathan, H.; Mogensen, P.E. Communications in the 6G era. IEEE Access 2020, 8, 57063–57074.

- Wild, T.; Braun, V.; Viswanathan, H. Joint design of communication and sensing for beyond 5G and 6G systems. IEEE Access 2021, 9, 30845–30857.

- Elijah, O.; Rahim, S.K.A.; New, W.K.; Leow, C.Y.; Cumanan, K.; Geok, T.K. Intelligent massive MIMO systems for beyond 5G networks: An overview and future trends. IEEE Access 2022, 10, 102532–102563.

- Kato, N.; Fadlullah, Z.M.; Mao, B.; Tang, F.; Akashi, O.; Inoue, T.; Mizutani, K. The deep learning vision for heterogeneous network traffic control: Proposal, challenges, and future perspective. IEEE Wirel. Commun. 2017, 24, 146–153.

- Mao, B.; Fadlullah, Z.M.; Tang, F.; Kato, N.; Akashi, O.; Inoue, T.; Mizutani, K. Routing or computing? The paradigm shift towards intelligent computer network packet transmission based on deep learning. IEEE Trans. Comput. 2017, 66, 1946–1960.

- Zhou, Y.; Fadlullah, Z.M.; Mao, B.; Kato, N. A deep-learning-based radio resource assignment technique for 5G ultra dense networks. IEEE Netw. 2018, 32, 28–34.

- Lu, Y.; Wang, J.; Liu, M.; Zhang, K.; Gui, G.; Ohtsuki, T.; Adachi, F. Semi-supervised machine learning aided anomaly detection method in cellular networks. IEEE Trans. Veh. Technol. 2020, 69, 8459–8467.

- Kamruzzaman, M.M.; Alruwaili, O. AI-based computer vision using deep learning in 6G wireless networks. Comput. Electr. Eng. 2022, 102, 108233.

- Bagchi, S.; Abdelzaher, T.F.; Govindan, R.; Shenoy, P.; Atrey, A.; Ghosh, P.; Xu, R. New frontiers in IoT: Networking, systems, reliability, and security challenges. IEEE Internet Things J. 2020, 7, 11330–11346.

- Savazzi, S.; Rampa, V.; Kianoush, S.; Bennis, M. An energy and carbon footprint analysis of distributed and federated learning. IEEE Trans. Green Commun. Netw. 2022, 1, 248–264.

- Letaief, K.B.; Chen, W.; Shi, Y.; Zhang, J.; Zhang, Y.J.A. The roadmap to 6G: AI empowered wireless networks. IEEE Commun. Mag. 2019, 57, 84–90.

- Maraqa, O.; Rajasekaran, A.S.; Al-Ahmadi, S.; Yanikomeroglu, H.; Sait, S.M. A survey of rate-optimal power domain NOMA with enabling technologies of future wireless networks. IEEE Commun. Surv. Tutor. 2020, 22, 2192–2235.

- Vaezi, M.; Schober, R.; Ding, Z.; Poor, H.V. Non-orthogonal multiple access: Common myths and critical questions. IEEE Wirel. Commun. 2019, 26, 174–180.

- Chen, Y.; Bayesteh, A.; Wu, Y.; Ren, B.; Kang, S.; Sun, S.; Xiong, Q.; Qian, C.; Yu, B.; Ding, Z.; et al. Toward the standardization of non-orthogonal multiple access for next generation wireless networks. IEEE Commun. Mag. 2018, 56, 19–27.

- Mach, P.; Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tutor. 2017, 19, 1628–1656.

- Comșa, I.S.; Trestian, R.; Muntean, G.M.; Ghinea, G. 5MART: A 5G SMART scheduling framework for optimizing QoS through reinforcement learning. IEEE Trans. Netw. Serv. Manag. 2020, 17, 1110–1124.

- Shang, X.; Huang, Y.; Liu, Z.; Yang, Y. NVM-enhanced machine learning inference in 6G edge computing. IEEE Trans. Netw. Sci. Eng. 2021, 1, Early Access.

- Kairouz, P.; McMahan, H.B.; et al. Advances and open problems in federated learning. Found. Trends® Mach. Learn. 2021, 14, 1–210.

- Haneda, E.M.K.; Nguyen, S.L.H.; Karttunen, A.; Järveläinen, J.; Bamba, A.; D’Errico, R.; Medbo, J.-N.; Undi, F.; Jaeckel, S.; Iqbal, N.; et al. Millimetre-Wave Based Mobile Radio Access Network for Fifth Generation Integrated Communications (mmMAGIC); European Commission: Brussels, Belgium, 2017; pp. 1–13.

- Mazin, A.; Elkourdi, M.; Gitlin, R.D. Accelerating beam sweeping in mmwave standalone 5G new radios using recurrent neural networks. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; IEEE: Chicago, IL, USA, 2018; pp. 1–4.

- Aqdus, A.; Amin, R.; Ramzan, S.; Alshamrani, S.S.S.; Alshehri, A.; El-kenawy, E.S.M. Detection collision flows in SDN based 5G using machine learning algorithms. Comput. Mater. Contin. 2023, 74, 1413–1435.

- Cao, B.; Fan, J.; Yuan, M.; Li, Y. Toward accurate energy-efficient cellular network: Switching off excessive carriers based on traffic profiling. In Proceedings of the 31st Annual ACM Symposium on Applied Computing, Pisa, Italy, 4–8 April 2016; ACM: New York, NY, USA, 2016; pp. 546–551.

- Kao, W.C.; Zhan, S.Q.; Lee, T.S. AI-aided 3-D beamforming for millimeter wave communications. In Proceedings of the 2018 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Okinawa, Japan, 27–30 November 2018; IEEE: Ishigaki, Japan, 2018; pp. 278–283.

- Sun, G.; Zemuy, G.T.; Xiong, K. Dynamic reservation and deep reinforcement learning based autonomous resource management for wireless virtual networks. In Proceedings of the 2018 IEEE 37th International Performance Computing and Communications Conference (IPCCC), Orlando, FL, USA, 17–19 November 2018; IEEE: Orlando, FL, USA, 2018; pp. 1–4.

- Kader, M.A.; Bastug, E.; Bennis, M.; Zeydan, E.; Karatepe, A.; Er, A.S.; Debbah, M. Leveraging big data analytics for cache-enabled wireless networks. In Proceedings of the 2015 IEEE Globecom Workshops (GC Wkshps), San Diego, CA, USA, 6–10 December 2015; IEEE: San Diego, CA, USA, 2015; pp. 1–6.

- Sundsøy, P.; Bjelland, J.; Iqbal, A.M.; Pentland, A.S.; de Montjoye, Y.A. Big data-driven marketing: How machine learning outperforms marketers’ gut-feeling. In Social Computing, Behavioral-Cultural Modeling and Prediction; Kennedy, W.G., Agarwal, N., Yang, S.J., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 367–374.

- Fang, H.; Wang, X.; Tomasin, S. Machine learning for intelligent authentication in 5G and beyond wireless networks. IEEE Wirel. Commun. 2019, 26, 55–61.

- Chen, G.; Zhang, X.; Shen, F.; Zeng, Q. Two tier slicing resource allocation algorithm based on deep reinforcement learning and joint bidding in wireless access networks. Sensors 2022, 22, 3495.

- Rodrigues, T.K.; Kato, N. Network slicing with centralized and distributed reinforcement learning for combined satellite/ground networks in a 6G environment. IEEE Wirel. Commun. 2022, 29, 104–110.

- Nomikos, N.; Zoupanos, S.; Charalambous, T.; Krikidis, I. A survey on reinforcement learning-aided caching in heterogeneous mobile edge networks. IEEE Access 2022, 10, 4380–4413.

- Narmanlioglu, O.; Zeydan, E. Learning in SDN-based multi-tenant cellular networks: A game-theoretic perspective. In Proceedings of the 2017 IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Lisbon, Portugal, 8–12 May 2017; IEEE: Lisbon, Portugal, 2017; pp. 929–934.

- Le, L.V.; Lin, B.S.P.; Tung, L.P.; Sinh, D. SDN/NFV, machine learning, and big data driven network slicing for 5G. In Proceedings of the 2018 IEEE 5G World Forum (5GWF), Santa Clara, CA, USA, 9–11 July 2018; IEEE: Silicon Valley, CA, USA, 2018; pp. 20–25.

- Nakao, A.; Du, P. Toward in-network deep machine learning for identifying mobile applications and enabling application specific network slicing. IEICE Trans. Commun. 2018, E101.B, 1536–1543.

- Upadhyay, D.; Tiwari, P.; Mohd, N.; Pant, B. A machine learning approach in 5G user prediction. In ICT with Intelligent Applications. Smart Innovation, Systems and Technologies; Choudrie, J., Mahalle, P., Perumal, T., Joshi, A., Eds.; Springer: Singapore, 2023; pp. 643–652.

- Paropkari, R.A.; Thantharate, A.; Beard, C. Deep-mobility: A deep learning approach for an efficient and reliable 5G handover. In Proceedings of the 2022 International Conference on Wireless Communications Signal Processing and Networking (WiSPNET), Chennai, India, 24–26 March 2022; IEEE: Chennai, India, 2022; pp. 244–250.

- Hua, Y.; Li, R.; Zhao, Z.; Chen, X.; Zhang, H. GAN-powered deep distributional reinforcement learning for resource management in network slicing. IEEE J. Sel. Areas Commun. 2020, 38, 334–349.

- Rahman, A.; Hasan, K.; Kundu, D.; Islam, M.J.; Debnath, T.; Band, S.S.; Kumar, N. On the ICN-IoT with federated learning integration of communication: Concepts, security-privacy issues, applications, and future perspectives. Future Gener. Comput. Syst. 2023, 138, 61–88.

- Koudouridis, G.P.; He, Q.; Dán, G. An architecture and performance evaluation framework for artificial intelligence solutions in beyond 5G radio access networks. EURASIP J. Wirel. Commun. Netw. 2022, 2022, 94.

- Dubreil, H.; Altman, Z.; Diascorn, V.; Picard, J.; Clerc, M. Particle swarm optimization of fuzzy logic controller for high quality RRM auto-tuning of UMTS networks. In Proceedings of the 2005 IEEE 61st Vehicular Technology Conference, Stockholm, Sweden, 30 May 2005–1 June 2005; IEEE: Stockholm, Sweden, 2005; pp. 1865–1869.

- Nasri, R.; Altman, Z.; Dubreil, H. Fuzzy-Q-learning-based autonomic management of macro-diversity algorithm in UMTS networks. Ann. Telecommun. 2006, 61, 1119–1135.

- Das, S.; Pourzandi, M.; Debbabi, M. On SPIM detection in LTE networks. In Proceedings of the 2012 25th IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Montreal, QC, Canada, 29 April–2 May 2012; IEEE: Montreal, QC, Canada, 2012; pp. 1–4.

- Balachandran, A.; Aggarwal, V.; Halepovic, E.; Pang, J.; Seshan, S.; Venkataraman, S.; Yan, H. Modeling web quality-of-experience on cellular networks. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014; ACM: New York, NY, USA, 2014; pp. 213–224.

- Mason, F.; Nencioni, G.; Zanella, A. A multi-agent reinforcement learning architecture for network slicing orchestration. In Proceedings of the 2021 19th Mediterranean Communication and Computer Networking Conference (MedComNet), Ibiza, Spain, 15–17 June 2021; IEEE: Ibiza, Spain, 2021; pp. 1–8.

- Tomoskozi, M.; Seeling, P.; Ekler, P.; Fitzek, F.H.P. Efficiency gain for RoHC compressor implementations with dynamic configuration. In Proceedings of the 2016 IEEE 84th Vehicular Technology Conference (VTC-Fall), Montreal, QC, Canada, 18–21 September 2016; IEEE: Montreal, QC, Canada, 2016; pp. 1–5.

- Mwanje, S.; Decarreau, G.; Mannweiler, C.; Naseer-ul-Islam, M.; Schmelz, L.C. Network management automation in 5G: Challenges and opportunities. In Proceedings of the 2016 IEEE 27th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Valencia, Spain, 4–8 September 2016; IEEE: Valencia, Spain, 2016; pp. 1–6.

- Jiang, W.; Strufe, M.; Schotten, H.D. Autonomic network management for software-defined and virtualized 5G systems. In Proceedings of the European Wireless 2017-23rd European Wireless Conference, Dresden, Germany, 17–19 May 2017; IEEE: Dresden, Germany, 2017; pp. 1–6.

- Perez, J.S.; Jayaweera, S.K.; Lane, S. Machine learning aided cognitive RAT selection for 5G heterogeneous networks. In Proceedings of the 2017 IEEE International Black Sea Conference on Communications and Networking (BlackSeaCom), Istanbul, Turkey, 5–8 June 2017; IEEE: Istanbul, Turkey, 2017; pp. 1–5.

- Sun, D.; Willmann, S. Deep learning-based dependability assessment method for industrial wireless network. IFAC-PapersOnLine 2019, 52, 219–224.

- Mughees, A.; Tahir, M.; Sheikh, M.A.; Ahad, A. Towards energy efficient 5G networks using machine learning: Taxonomy, research challenges, and future research directions. IEEE Access 2020, 8, 187498–187522.

- Singh, M. Integrating artificial intelligence and 5G in the era of next-generation computing. In Proceedings of the 2021 2nd International Conference on Computational Methods in Science & Technology (ICCMST), Mohali, India, 17–18 December 2021; IEEE: Mohali, India, 2021; pp. 24–29.

- Kumar, R.; Sinwar, D.; Pandey, A.; Tadele, T.; Singh, V.; Raghuwanshi, G. IoT enabled technologies in smart farming and challenges for adoption. In Internet of Things and Analytics for Agriculture, Volume 3. Studies in Big Data; Pattnaik, P.K., Kumar, R., Pal, S., Eds.; Springer: Singapore, 2021; pp. 141–164.

- Kirsur, S.M.; Dakshayini, M.; Gowri, M. An effective eye-blink-based cyber secure PIN password authentication system. In Computational Intelligence and Data Analytics. Lecture Notes on Data Engineering and Communications Technologies; Buyya, R., Hernandez, S.M., Kovvur, R.M.R., Sarma, T.H., Eds.; Springer: Singapore, 2022; pp. 89–99.

- Parera, C.; Redondi, A.E.C.; Cesana, M.; Liao, Q.; Malanchini, I. Transfer learning for channel quality prediction. In Proceedings of the 2019 IEEE International Symposium on Measurements & Networking (M&N), Catania, Italy, 8–10 July 2019; IEEE: Catania, Italy, 2019; pp. 1–6.

- Klaine, P.V.; Imran, M.A.; Onireti, O.; Souza, R.D. A survey of machine learning techniques applied to self-organizing cellular networks. IEEE Commun. Surv. Tutor. 2017, 19, 2392–2431.

- Nawaz, S.J.; Sharma, S.K.; Wyne, S.; Patwary, M.N.; Asaduzzaman, M. Quantum machine learning for 6G communication networks: State-of-the-art and vision for the future. IEEE Access 2019, 7, 46317–46350.

| Abbreviation | Meaning |
|---|---|
| 3G | Third generation |
| 5G | Fifth generation |
| 6G | Sixth generation |
| AI | Artificial intelligence |
| ANNs | Artificial neural networks |
| B5G | Beyond 5G |
| DL | Deep learning |
| DRL | Deep reinforcement learning |
| FFNN | Feedforward neural network |
| FL | Federated learning |
| HetNets | Heterogeneous networks |
| IoT | Internet of Things |
| KPIs | Key performance indicators |
| LSTM | Long short-term memory |
| MIMO | Multiple-input multiple-output |
| ML | Machine learning |
| mmWave | Millimeter wave |
| NFV | Network function virtualization |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| QC | Quantum computing |
| QML | Quantum machine learning |
| RAN | Radio access network |
| RIS | Reconfigurable intelligent surfaces |
| RNNs | Recurrent neural networks |
| SDNs | Software-defined networks |
| THz | Terahertz |
| UAVs | Unmanned aerial vehicles |

| No. | Reference | Main Contribution | Limitations | Methodology | Times Cited | Technique | Technique Approach | Application |
|---|---|---|---|---|---|---|---|---|
| 1 | [13] | Based on an exhaustive literature review, the authors present different options for adapting deep learning models to mobile networks in general and highlight the open problems still to be solved, thereby opening avenues for in-depth research on mobile networks and machine learning. | Although the work focuses on deep learning, other machine learning techniques used in wireless networks could be compared. | Literature Review | 825 | Deep Learning | Deep Learning-Driven Network-Level Mobile Data Analysis; Deep Learning-Driven App-Level Mobile Data Analysis; Deep Learning-Driven User Mobility Analysis; Deep Learning-Driven User Localization; Deep Learning-Driven Wireless Sensor Networks; Deep Learning-Driven Network Control; Deep Learning-Driven Network Security; Deep Learning-Driven Signal Processing; Emerging Deep Learning-Driven Mobile Network Applications | Wireless Networks |
| 2 | [19] | The authors present a detailed review of DRL approaches proposed to address emerging problems in communication networks, such as dynamic network access, data rate control, wireless caching, data offloading, network security and connectivity preservation. They also present DRL applications for traffic routing, resource sharing and data collection. | Although the work focuses on deep reinforcement learning, other machine learning techniques used in wireless networks could be compared. | Literature Review | 806 | Deep Reinforcement Learning | Deep Deterministic Policy Gradient Q-Learning for Continuous Action; Deep Recurrent Q-Learning for POMDPs; Deep SARSA Learning; Deep Q-Learning for Markov Games | Communication Networks |
| 3 | [14] | The authors present five lines of future research related to massive MIMO, digital beamforming and/or antenna arrays: extremely large aperture arrays, holographic massive MIMO, six-dimensional positioning, large-scale MIMO radar and intelligent massive MIMO. | Although the authors open avenues for future research on antenna arrays and massive MIMO, they do not consider multiple antenna array options or compare MIMO with other techniques. Moreover, although they discuss the next generation of communications, they devote only a small space to 6G. | Literature Review | 362 | Machine Learning | Reinforcement Learning | Antenna Arrays |
| 4 | [15] | In this review article, the authors describe up-to-date research on integrating multi-access edge computing with the new technologies to be deployed in 5G. | While the authors provide a complete framework of multi-access edge computing features, they focus on applications, requirements and features, leaving less room for the machine learning techniques applied in current research. | Literature Review | 354 | Machine Learning | Unsupervised Learning; Supervised Learning; Reinforcement Learning | 5G Network |
| 5 | [17] | The authors present an overview of the sixth-generation (6G) system covering usage scenarios, requirements, key performance indicators (KPIs), architecture and enabling technologies, based on mobile traffic projections to 2030. | While the authors provide a complete framework of the features and a strategic path for 6G, starting from possible applications, use cases and scenarios, only a small part is devoted to possible machine learning techniques and their comparison in 6G. | Literature Review | 323 | Artificial Intelligence | Blockchain; Digital Twins; Intelligent Edge Computing | 6G Network |
| 6 | [20] | The authors identify current challenges and the future research needed in control systems, networks and computing, as well as for the adoption of machine learning in an industrial IoT (I-IoT) context. | This article focuses on the architectural characteristics required for IoT, taking into account the potentially high traffic demand of connecting these devices. However, it does not focus on machine learning techniques that could improve the management of the networks that connect IoT devices. | Literature Review | 315 | Machine Learning | Unsupervised Learning; Supervised Learning; Reinforcement Learning | IoT |
| 7 | [95] | The authors give a general description of the most common machine learning techniques applied to cellular networks, classifying the ML solutions according to their use. | Different machine learning techniques are discussed; however, deep learning is mentioned only at the end, without exploring its possible applications. | Literature Review | 299 | Machine Learning | Supervised Learning (k-Nearest Neighbor; Neural Networks; Bayes’ Theory; Support Vector Machine; Decision Trees); Unsupervised Learning (Anomaly Detectors; Self-Organizing Maps; K-Means); Reinforcement Learning | Cellular Networks |
| 8 | [30] | The authors describe the potential of machine learning (ML) for the architectural deployment of 5G, presenting case studies and applications that demonstrate the potential of edge ML in 5G. | The authors focus on edge ML, so the study provides an overview of future research on edge ML without considering other architectures for wireless networks, although they reflect on different types of wireless networks. | Literature Review | 269 | Machine Learning | Supervised Learning (k-Nearest Neighbor; Neural Networks; Bayes’ Theory; Support Vector Machine; Decision Trees); Unsupervised Learning (Anomaly Detectors; Self-Organizing Maps; K-Means); Reinforcement Learning | Wireless Network |
| 9 | [96] | The authors describe possible enablers of 6G networks from the theoretical domains of machine learning (ML), quantum computing (QC) and quantum ML (QML), and they outline the challenges, benefits and uses of these techniques for applications in beyond-5G networks. | This work focuses more on the future of communication networks based on quantum computing techniques; therefore, less emphasis is placed on recent use cases of machine learning applied to mobile networks. | Literature Review | 269 | Quantum Machine Learning | Supervised Learning; Semi-supervised and Unsupervised Learning; Reinforcement Learning; Genetic Programming; Learning Requirements and Capability; Deep Neural Networks; Deep Transfer Learning; Deep Unfolding; Deep Learning for Cognitive Communications | Beyond 5G |
| 10 | [23] | The authors propose a 3D cellular architecture for drone base station network planning and minimum-latency cell association for drone user equipment, using a tractable method based on truncated octahedral shapes that provides complete coverage of a given space with a minimum number of drone base stations. | The research is based solely on a proposal for drones, which could be replicated for other unmanned aerial vehicles; however, it does not consider other mobile equipment. | Kernel density estimation | 266 | Machine Learning |  | 3D Wireless Cellular Network |
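
The last row of the table lists kernel density estimation (KDE) as the methodology of the 3D drone cellular network study. As an illustration of the technique only, and not a reproduction of that study, the following Python sketch estimates a spatial density over three-dimensional user positions: the estimate at a query point is the average of isotropic Gaussian kernels, each with standard deviation h (the bandwidth), centred on an observed position. The function name `gaussian_kde_3d`, the fixed bandwidth and the sample coordinates are hypothetical choices made for this example.

```python
import math
from typing import Callable, Sequence, Tuple

# Hypothetical representation of a position: (x, y, altitude), e.g. in metres.
Point3D = Tuple[float, float, float]


def gaussian_kde_3d(samples: Sequence[Point3D], bandwidth: float) -> Callable[[Point3D], float]:
    """Build a 3D kernel density estimate with an isotropic Gaussian kernel.

    f(x) = (1 / n) * sum_i N(x; s_i, h^2 I), where h is `bandwidth`.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")

    n = len(samples)
    two_h2 = 2.0 * bandwidth ** 2
    # Normalizing constant of a 3D isotropic Gaussian: (2 * pi * h^2) ** (-3/2).
    norm = (math.pi * two_h2) ** -1.5

    def density(x: Point3D) -> float:
        total = 0.0
        for s in samples:
            # Squared Euclidean distance between the query point and sample s.
            d2 = sum((xi - si) ** 2 for xi, si in zip(x, s))
            total += math.exp(-d2 / two_h2)
        return norm * total / n

    return density


if __name__ == "__main__":
    # Hypothetical drone-UE positions: two clustered points and one outlier.
    positions = [(10.0, 20.0, 100.0), (12.0, 18.0, 105.0), (300.0, 250.0, 80.0)]
    f = gaussian_kde_3d(positions, bandwidth=15.0)
    # The density is higher inside the cluster than in the empty space between points.
    for query in [(11.0, 19.0, 102.0), (150.0, 150.0, 90.0)]:
        print(query, f"{f(query):.3e}")
```

The bandwidth controls the smoothing: a small h produces a spiky estimate that follows individual samples, while a large h merges nearby clusters. Rather than fixing h by hand, library routines such as `scipy.stats.gaussian_kde` choose it with a rule of thumb (Scott's rule by default).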

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

García-Pineda, V.; Valencia-Arias, A.; Patiño-Vanegas, J.C.; Flores Cueto, J.J.; Arango-Botero, D.; Rojas Coronel, A.M.; Rodríguez-Correa, P.A. Research Trends in the Use of Machine Learning Applied in Mobile Networks: A Bibliometric Approach and Research Agenda. Informatics 2023, 10, 73. https://doi.org/10.3390/informatics10030073
