1. Introduction

Neural networks have achieved unprecedented performance in almost every area where machine learning is applicable [1,2,3]. Throughout its history, computer science has had several turning points with groundbreaking consequences that unleashed the power of neural networks. To name a few, one might regard the chain rule backpropagation [4], the invention of convolutional layers [5] and recurrent models [4], the advent of low-cost specialized parallel hardware (mostly GPUs) [6], and the exponential growth of available training data as some of the most important factors behind today’s success of neural networks.

Ironically, despite our understanding of every atomic element of a neural network and our capability to successfully train it, it is still difficult with today’s formalism to understand what makes neural networks so effective. As neural nets increase in size, the combinatorics between their weights and activation functions makes it impossible (at least today) to formally answer questions such as: (i) why neural networks (almost) always converge towards a global minimum regardless of their initialization, the data they are trained on and the associated loss function; (ii) what the true capacity of a neural net is; and (iii) what the true generalization capabilities of a neural net are.

One may hypothesize that the limited understanding of these fundamental concepts derives from the more or less formal representation that we have of these machines. Since the 1980s, neural nets have mostly been represented in two ways: (i) a cascade of non-linear atomic operations (be it a series of neurons with their activation functions, layers, convolution blocks, etc.), often represented graphically (e.g., Figure 3 by He et al. [7]), and (ii) a point in an N-dimensional Euclidean space (where N is the number of weights in the network) lying on the slope of a loss landscape that an optimizer ought to climb down [8].

In this work, we propose a fundamentally different way to represent neural networks. Based on quiver representation theory, we provide a new mathematical footing to represent neural networks as well as the data they process. We show that this mathematical representation is by no means an approximation of what neural networks are, as it tightly matches reality.

In this paper, we do not focus on how neural networks learn, but rather on the intrinsic properties of their architectures and of their forward pass of data, thereby providing new insights into how to understand neural networks. Our mathematical formulation accounts for the wide variety of architectures, usages and behaviors of today’s neural networks. For this, we study the combinatorial and algebraic nature of neural networks by using ideas coming from the mathematical theory of quiver representations [9,10]. Although this paper focuses on feed-forward networks, a combinatorial argument on recurrent neural networks can be made to apply our results to them: the cycles in recurrent neural networks are only applied a finite number of times, and once unraveled they combinatorially become networks that feed information in a single direction with shared weights [11].

This paper is based on two observations that expose the algebraic nature of neural networks and how it is related to quiver representations:

1. When computing a prediction, neural networks are quiver representations together with activation functions.
2. The forward pass of data through the network is encoded as quiver representations.

Everything else in this work is a mathematical consequence of these two observations. Our main contributions can be summarized by the following six items:

We provide the first explicit link between representations of quivers and neural networks.

We show that quiver representations naturally adapt to common neural network concepts such as fully connected layers, convolution operations, residual connections, batch normalization, pooling operations, and any feed-forward architecture, since this is a universal description of neural networks.

We prove that algebraic isomorphisms of neural networks preserve the network function and obtain, as a corollary, that ReLU networks are positive scale invariant [12,13,14].

We present the theoretical interpretation of data in terms of the architecture of the neural network and of quiver representations.

We mathematically formalize a modified version of the manifold hypothesis [3,11] in terms of the combinatorial architecture of the network.

We provide constructions and results supporting existing intuitions in deep learning while discarding others, and bring new concepts to the table.

2. Previous Work

In the theoretical description of the deep neural optimization paradigm given by Choromanska et al. [15], the authors underline that “clearly the model (neural net) contains several dependencies as one input is associated with many paths in the network. That poses a major theoretical problem in analyzing these models as it is unclear how to account for these dependencies”. Interestingly, this is exactly what quiver representations are about [9,10,16].

While, as far as we know, quiver representation theory has never been used to study neural networks, some authors have nonetheless used a subset of it, sometimes unknowingly. This is the case of the so-called positive scale invariance of ReLU networks, which Dinh et al. [12] used to mathematically prove that most notions of loss flatness cannot be used directly to explain generalization. This property of ReLU networks has also been used by Neyshabur et al. [14] to improve the optimization of ReLU networks. In their paper, they propose Path-SGD (a variant of stochastic gradient descent), which is an approximate gradient descent method with respect to a path-wise regularizer. Furthermore, Meng et al. [13] defined a space where points are ReLU networks with the same network function, which they use to find better gradient descent paths. In this paper (cf. Theorem 1 and Corollary 2), we prove that positive scale invariance of ReLU networks is a property derived from the representation theory of neural networks that we present in the following sections. We interpret these results as evidence of the algebraic nature of neural networks, as they exactly match the basic definitions of representation theory (i.e., quiver representations and morphisms of quiver representations).

Wood and Shawe-Taylor [17] used group representation theory to account for symmetries in the layers of a neural network. Our mathematical approach is different, since quiver representations are representations of algebras [9] and not of groups. Besides, Wood and Shawe-Taylor [17] present architectures that match mathematical objects with nice properties, while we define the objects that model the computations of the neural network. We prove that quiver representations are more suited to study networks due to their combinatorial and algebraic nature.

Healy and Caudell [18] mathematically represent neural networks by objects called categories. However, as mentioned by the authors, their representation is an approximation of what neural nets are, as it does not account for each of their atomic elements. In contrast, our quiver representation approach includes every computation involved in a neural network, be it a neural operation (i.e., dot product + activation function), layer operations (fully connected, convolutional, pooling) or batch normalization. As such, our representation is a universal description of neural networks, i.e., the results and consequences of this paper apply to all neural networks.

Quiver representations have been used to find lower-dimensional subspace structures of datasets [19], without, however, any relation to neural networks. Our interpretation of data is orthogonal to this one, since we look at how neural networks interpret the data in terms of every single computation they perform.

Following the discussion by S. Arora in his 2018 ICML tutorial [20] on the characteristics of a theory for deep learning, our goal is to provide a theoretical footing that can validate and formalize certain intuitions about deep neural nets and lead to new insights and new concepts. One such intuition is related to feature map visualization. It is well known that feature maps can be visualized as images showing the input signal characteristics, thus providing intuitions on the behavior of the network and its impact on an image [21,22]. This notion is strongly supported by our findings. Namely, our data representation introduced in Section 6 is a thin quiver representation that contains the network features (i.e., neuron outputs or feature maps) induced by the data. Said otherwise, our data representation includes both the network structure and the neurons’ inputs and outputs induced by a forward pass of a single data sample (see Equation (5) on page 19 and the proof of Theorem 2). Our data quiver representations contain every feature map during a forward pass of data, and so they are aligned with the notion of representations in representation learning [3,11,23].

We show in Section 7 that our data representations lie in a so-called moduli space. Interestingly, the dimension of the moduli space is the same value that was computed by Zheng et al. [24] and used to measure the capacity of ReLU networks. They empirically confirmed that the dimension of the moduli space is directly linked to generalization. Our results suggest that the findings mentioned above can be generalized to any neural network via representation theory.

The moduli space also formalizes a modified version of the manifold hypothesis for the data (see [3], Chapter 5.11.3). This hypothesis states that high-dimensional data (typically images and text) live on a thin and yet convoluted manifold in their original space. We show that this data manifold can be mapped to the moduli space while carrying the feature maps induced by the data, and is thereby related to notions appearing in manifold learning [11,23]. Our results, therefore, create a new bridge between the mathematical study of these moduli spaces [25,26,27] and the study of the training dynamics of neural networks inside these moduli spaces.

Naive pruning of neural networks [28], where the smallest weights get pruned, is also explained by our interpretation of the data and the moduli space (see Consequence 4 in Section 7.1.2), since the coordinates of the data quiver representations inside the moduli space are given as a function of the weights of the network and the activation outputs of each neuron on a forward pass (cf. Equation (5) on page 19).

There exist empirical results where, up to certain restrictions, the activation functions can be learned [29], and our interpretation of the data supports why this is a good idea in terms of the moduli space. For further details, see Section 7.2.3.

3. Preliminaries of Quiver Representations

Before we show how neural networks are related to quiver representations, we start by defining the basic concepts of quiver representation theory [9,10,16]. The reader can find a glossary with all the definitions introduced in this and the following sections at the end of this paper.

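Definition 1 ([9], Chapter 2). A quiver $Q$ is given by a tuple $(\mathcal{V}, \mathcal{E}, s, t)$, where $(\mathcal{V}, \mathcal{E})$ is an oriented graph with a set of vertices $\mathcal{V}$ and a set of oriented edges $\mathcal{E}$, and $s, t : \mathcal{E} \to \mathcal{V}$ are maps that send $\epsilon \in \mathcal{E}$ to its source vertex $s(\epsilon) \in \mathcal{V}$ and target vertex $t(\epsilon) \in \mathcal{V}$, respectively. Throughout the present paper, we work only with quivers whose sets of edges and vertices are finite.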

Definition 2 ([

9] (Chapter 2))

. A source vertex of a quiver Q is a vertex such that there are no oriented edges with target . A sink vertex of a quiver Q is a vertex such that there are no oriented edges with source . A loop in a quiver Q is an oriented edge ϵ such that . Definition 3 ([

9] (Chapter 3))

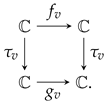

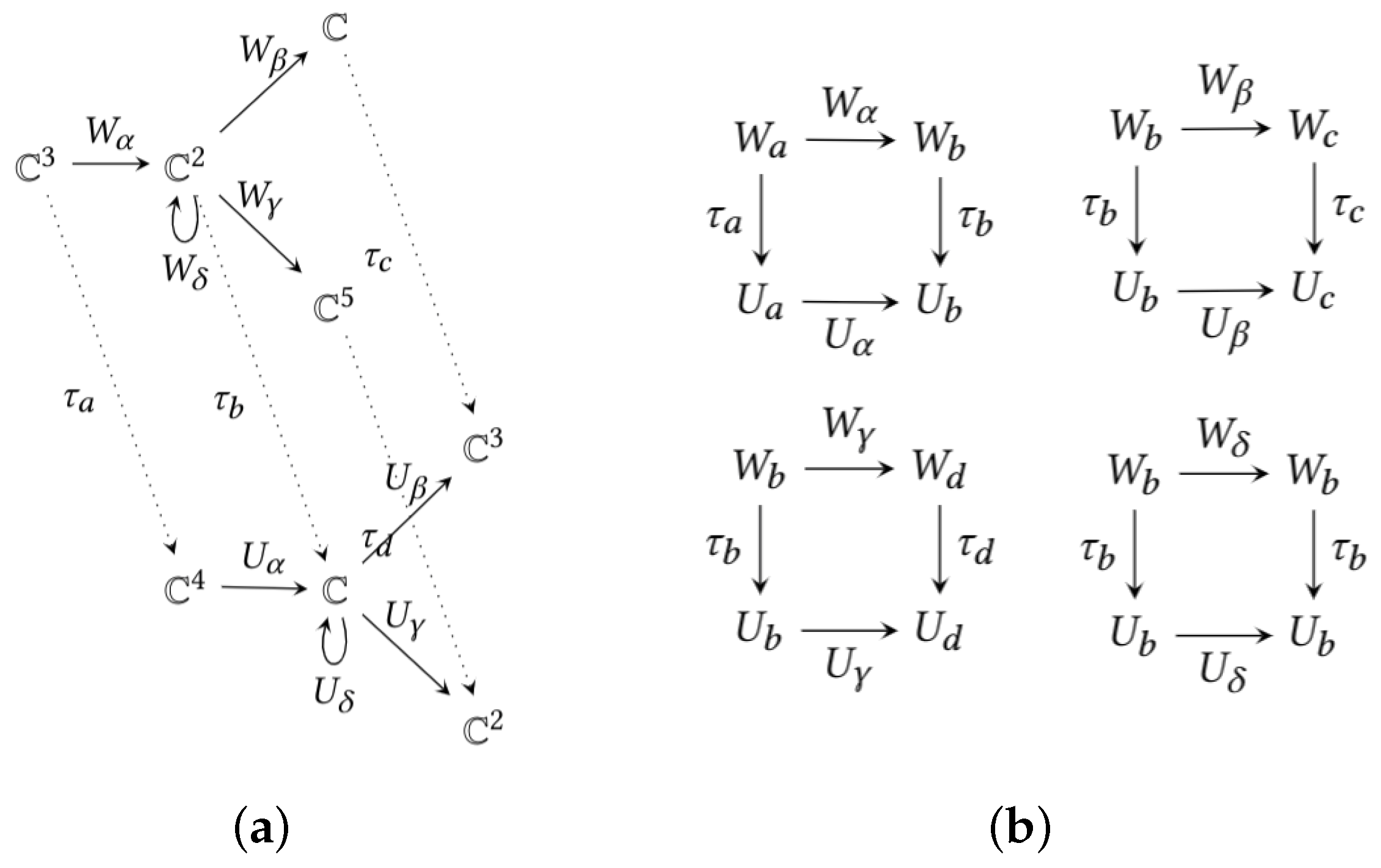

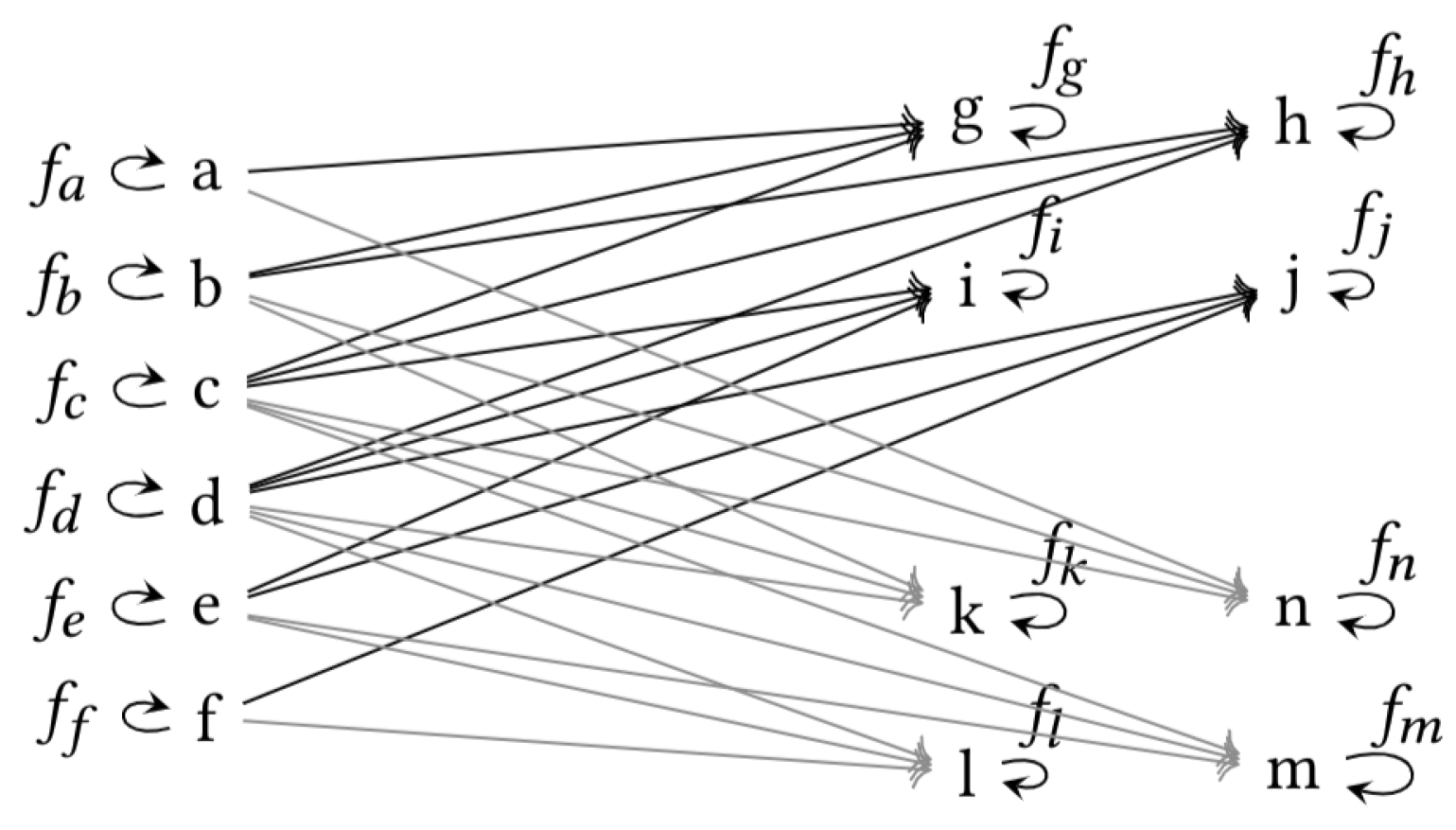

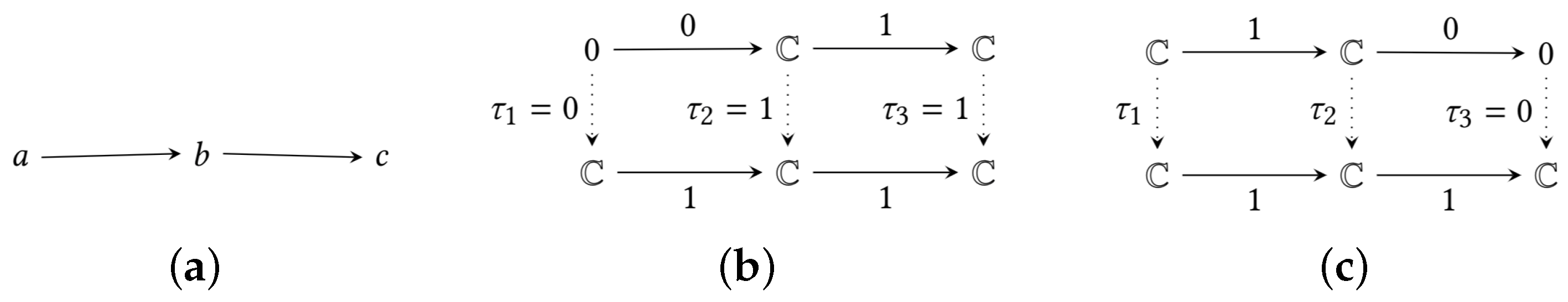

. If Q is a quiver, a quiver representation of Q is given by a pair of setswhere the ’s are vector spaces indexed by the vertices of Q, and the ’s are linear maps indexed by the oriented edges of Q, such that for every edge Figure 1a illustrates a quiver

Q while

Figure 1b,c are two quiver representations of

Q.

Definition 4 ([9], Chapter 3). Let Q be a quiver and let W and U be two representations of Q. A morphism of representations $\tau : W \to U$ is a set of linear maps $\tau = (\tau_v)_{v \in \mathcal{V}}$ indexed by the vertices of Q, where $\tau_v : W_v \to U_v$ is a linear map such that $\tau_{t(\epsilon)} W_\epsilon = U_\epsilon \tau_{s(\epsilon)}$ for every $\epsilon \in \mathcal{E}$.

To illustrate this definition, one may consider the quiver Q and its representations W and U of Figure 1. The morphism between W and U via the linear maps $\tau_v$ is pictured in Figure 2a. As shown, each $\tau_v$ is a matrix that transforms the vector space of vertex v of W into the vector space of vertex v of U.
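As a concrete check of Definition 4, the following minimal sketch tests whether a family of maps $\tau_v$ satisfies $\tau_{t(\epsilon)} W_\epsilon = U_\epsilon \tau_{s(\epsilon)}$ on every edge. It assumes matrices stored as nested Python lists and a hypothetical two-vertex quiver $1 \to 2$ with $W_1 = \mathbb{C}^2$ and $W_2 = \mathbb{C}^1$; the helper names `matmul` and `is_morphism` are illustrative, not from the paper.

```python
# Minimal sketch: check the morphism condition tau_{t(e)} W_e == U_e tau_{s(e)}
# on every edge of a quiver. Matrices are nested lists (rows of numbers).

def matmul(A, B):
    """Multiply two matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def is_morphism(edges, W, U, tau, tol=1e-9):
    """edges: dict edge -> (source, target); W, U: dict edge -> matrix;
    tau: dict vertex -> matrix. True if every square commutes."""
    for e, (s, t) in edges.items():
        left = matmul(tau[t], W[e])    # tau_{t(e)} W_e
        right = matmul(U[e], tau[s])   # U_e tau_{s(e)}
        for row_l, row_r in zip(left, right):
            if any(abs(a - b) > tol for a, b in zip(row_l, row_r)):
                return False
    return True

# Hypothetical quiver 1 -> 2 with W_1 = C^2 and W_2 = C^1.
edges = {"e": (1, 2)}
W = {"e": [[1.0, 2.0]]}                       # 1x2 matrix: C^2 -> C^1
tau = {1: [[2.0, 0.0], [0.0, 1.0]], 2: [[3.0]]}
U = {"e": [[1.5, 6.0]]}                       # chosen as tau_2 W_e tau_1^{-1}
print(is_morphism(edges, W, U, tau))          # True
```

Replacing `U` by a representation for which some square fails to commute (for instance `{"e": [[1.0, 2.0]]}`) makes `is_morphism` return `False`.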

Definition 5. Let Q be a quiver and let W and U be two representations of Q. If there is a morphism of representations $\tau : W \to U$ where each $\tau_v$ is an invertible linear map, then W and U are said to be isomorphic representations.

The previous definition is equivalent to the usual categorical definition of isomorphism; see [9] (Chapter 3). Namely, a morphism of representations $\tau : W \to U$ is an isomorphism if there exists a morphism of representations $\eta : U \to W$ such that $\eta \circ \tau = \mathrm{id}_W$ and $\tau \circ \eta = \mathrm{id}_U$. Observe here that the composition of morphisms is defined as a coordinate-wise composition, indexed by the vertices of the quiver.

In Section 4, we will be working with a particular type of quiver representations, where the vector space of each vertex is one-dimensional. These 1D representations are called thin representations, and the morphisms of representations between thin representations are easily described.

Definition 6. A thin representation of a quiver Q is a quiver representation W such that $W_v = \mathbb{C}$ for all $v \in \mathcal{V}$.

If W is a thin representation of Q, then every linear map $W_\epsilon$ is a $1 \times 1$ matrix, so $W_\epsilon$ is given by multiplication with a fixed complex number. We may and will identify every linear map between one-dimensional spaces with the number whose multiplication defines it.

Before we move on to neural networks, we will introduce the notions of a group and of a group action.

Definition 7 ([30], Chapter 1). A non-empty set G is called a group if there exists a function $\cdot : G \times G \to G$, called the product of the group and denoted $a \cdot b$, such that:

$(a \cdot b) \cdot c = a \cdot (b \cdot c)$ for all $a, b, c \in G$.

There exists an element $e \in G$ such that $e \cdot a = a \cdot e = a$ for all $a \in G$, called the identity of G.

For each $a \in G$ there exists $a^{-1} \in G$ such that $a \cdot a^{-1} = a^{-1} \cdot a = e$.

For example, the set of non-zero complex numbers $\mathbb{C}^*$ (and also the non-zero real numbers $\mathbb{R}^*$) with the usual multiplication operation forms a group. Usually, one does not write the product of the group as a dot and just concatenates the elements to denote multiplication, $ab = a \cdot b$, as for the product of numbers.

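Definition 8 ([30], Chapter 3). Let G be a group and let X be a set. We say that there is an action of G on X if there exists a map $\cdot : G \times X \to X$ such that:

$e \cdot x = x$ for all $x \in X$, where $e \in G$ is the identity.

$a \cdot (b \cdot x) = (ab) \cdot x$, for all $a, b \in G$ and all $x \in X$.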

In our case, G will be a group indexed by the vertices of Q, and the set X will be the set of thin quiver representations of Q.

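Let W be a thin representation of a quiver Q. Given a choice of invertible (non-zero) linear maps $\tau_v : \mathbb{C} \to \mathbb{C}$ for every $v \in \mathcal{V}$, we are going to construct a thin representation U such that $\tau = (\tau_v)_{v \in \mathcal{V}} : W \to U$ is an isomorphism of representations. Since U is thin, we have that $U_v = \mathbb{C}$ for all $v \in \mathcal{V}$. Let $\epsilon : s(\epsilon) \to t(\epsilon)$ be an edge of $Q$; we define the group action as follows,
$$U_\epsilon = \tau_{t(\epsilon)} W_\epsilon \tau_{s(\epsilon)}^{-1}. \qquad (1)$$
Thus, for every edge $\epsilon \in \mathcal{E}$ we get a commutative diagram, i.e., $\tau_{t(\epsilon)} W_\epsilon = U_\epsilon \tau_{s(\epsilon)}$.

The construction of the thin representation U from the thin representation W and the choice of invertible linear maps $\tau_v$ defines an action of a group on thin representations. The set of all possible isomorphisms of thin representations of Q forms such a group.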

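Definition 9. The change of basis group of thin representations over a quiver Q is
$$G = \prod_{v \in \mathcal{V}} \mathbb{C}^*,$$
where $\mathbb{C}^*$ denotes the multiplicative group of non-zero complex numbers. That is, the elements of G are vectors of non-zero complex numbers $\tau = (\tau_v)_{v \in \mathcal{V}}$ indexed by the set $\mathcal{V}$ of vertices of Q, and the group operation between two elements $\tau = (\tau_v)_{v \in \mathcal{V}}$ and $\sigma = (\sigma_v)_{v \in \mathcal{V}}$ is by definition $\tau\sigma = (\tau_v \sigma_v)_{v \in \mathcal{V}}$. We use the action notation for the action of the group G on thin representations. Namely, for $\tau \in G$ of the form $\tau = (\tau_v)_{v \in \mathcal{V}}$ and a thin representation W of Q, the thin representation U constructed above is denoted $U = \tau \cdot W$.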
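For thin representations every map is a single complex number, so the action $(\tau \cdot W)_\epsilon = \tau_{t(\epsilon)} W_\epsilon \tau_{s(\epsilon)}^{-1}$ and the coordinate-wise group product of Definition 9 reduce to scalar arithmetic. The minimal sketch below, using a hypothetical path quiver $i \to h \to o$ and illustrative helper names `act` and `compose`, applies the action and checks numerically both the commutativity condition and the action axiom $\sigma \cdot (\tau \cdot W) = (\sigma\tau) \cdot W$.

```python
# Minimal sketch: change-of-basis action on thin representations (each weight is
# a single complex number) and the coordinate-wise group product of Definition 9.

def act(tau, edges, W):
    """Return tau . W with (tau . W)_e = tau[t(e)] * W[e] / tau[s(e)]."""
    return {e: tau[t] * W[e] / tau[s] for e, (s, t) in edges.items()}

def compose(sigma, tau):
    """Group product sigma * tau in G: multiply coordinate by coordinate."""
    return {v: sigma[v] * tau[v] for v in tau}

# Hypothetical path quiver i -> h -> o with two edges.
edges = {"a": ("i", "h"), "b": ("h", "o")}
W = {"a": 2 + 0j, "b": -1 + 1j}
tau = {"i": 1, "h": 2j, "o": 1}
sigma = {"i": 1, "h": 0.5, "o": 3}

U = act(tau, edges, W)
# tau is an isomorphism W -> U: every square commutes.
print(all(abs(tau[t] * W[e] - U[e] * tau[s]) < 1e-12
          for e, (s, t) in edges.items()))              # True
# Action axiom: sigma . (tau . W) equals (sigma tau) . W.
lhs = act(sigma, edges, U)
rhs = act(compose(sigma, tau), edges, W)
print(all(abs(lhs[e] - rhs[e]) < 1e-12 for e in edges))  # True
```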

4. Neural Networks

In this section, we connect the dots between neural networks and the basic definitions of quiver representation theory that we presented before. However, before we do so, let us mention that, since the vector space of each vertex of a quiver representation is defined over the complex numbers, the weights of the neural networks that we are about to present will also be complex numbers. Despite some papers on complex neural networks [31], this approach may seem unorthodox. However, the use of complex numbers is a mathematical prerequisite for the notion of moduli space that we will introduce in Section 7. Observe also that this does not mean that in practice neural networks should be based on complex numbers. It only means that neural networks in practice, which are based upon real numbers, trivially satisfy the condition of being complex neural networks, and therefore the mathematics derived from using complex numbers applies to neural networks over real numbers.

For the rest of this paper, we will focus on a special type of quiver Q that we call a network quiver. A network quiver Q has no oriented cycles other than loops. Moreover, a subset of d source vertices of Q are called the input vertices. The source vertices that are not input vertices are called bias vertices. Let k be the number of sinks of Q; we call these the output vertices. All other vertices of Q are called hidden vertices.

Definition 10. A quiver Q is arranged by layers if it can be drawn from left to right arranging its vertices in columns such that:

There are no oriented edges from vertices on the right to vertices on the left.

There are no oriented edges between vertices in the same column, other than loops and edges from bias vertices.

The first layer on the left, called the input layer, will be formed by the d input vertices. The last layer on the right, called the output layer, will be formed by the k output vertices. The layers that are neither input nor output layers are called hidden layers. We enumerate the hidden layers from left to right as 1st hidden layer, 2nd hidden layer, 3rd hidden layer, and so on.

From now on Q will always denote a quiver with d input vertices and k output vertices.

Definition 11. A network quiver Q is a quiver arranged by layers such that:

1. There are no loops on source (i.e., input and bias) or sink vertices;
2. There is exactly one loop on each hidden vertex.

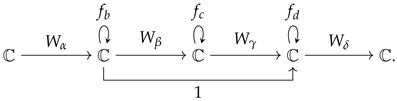

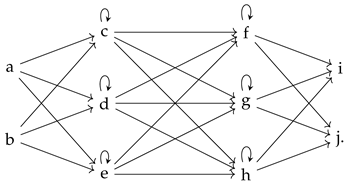

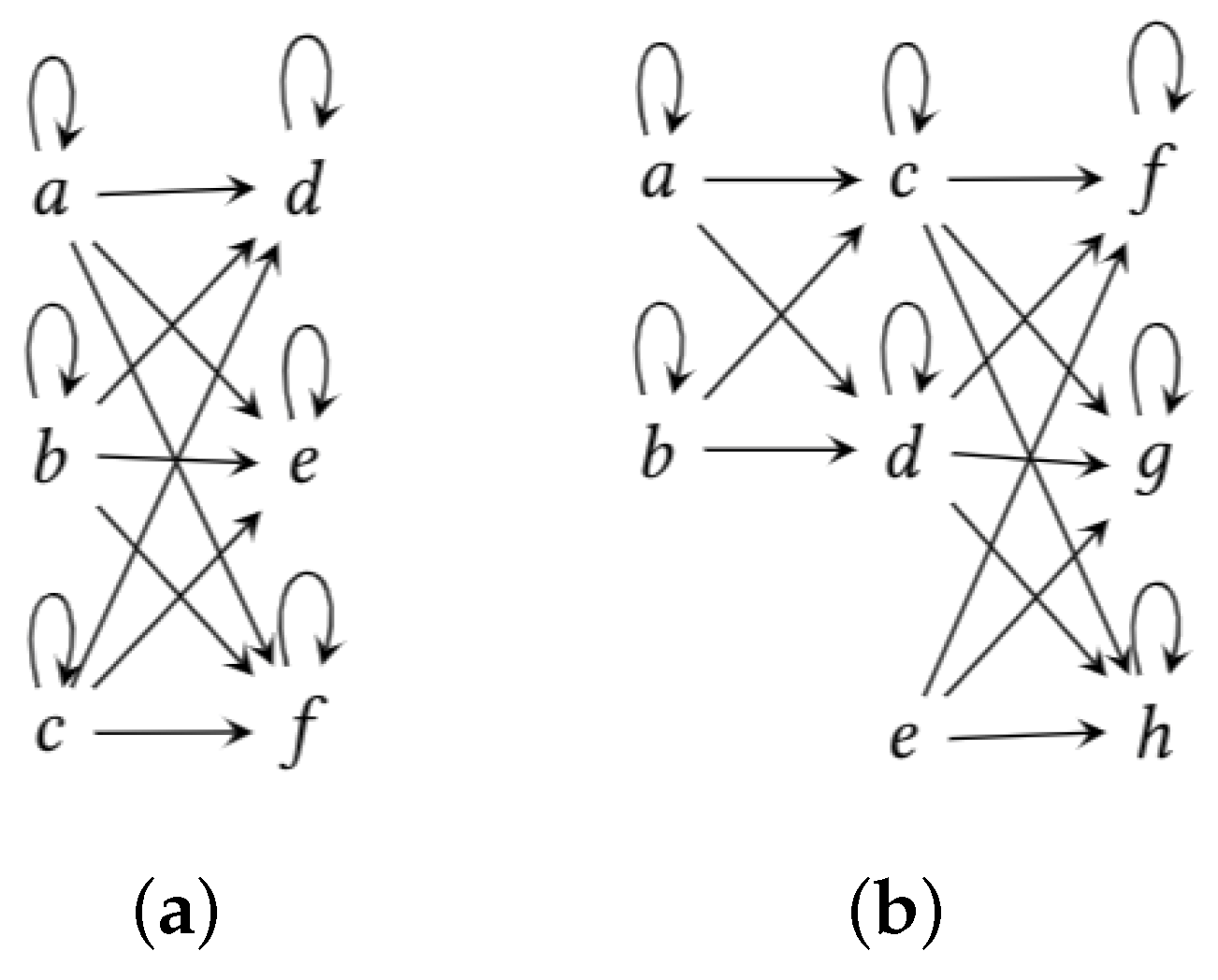

An example of a network quiver can be found in Figure 3a.

Definition 12. The delooped quiver of Q is the quiver obtained by removing all loops of Q. We denote it by $\delta Q$.

When a neural network computes a forward pass (be it a multilayer perceptron, a convolutional neural network or even a randomly wired neural network [32]), the weight between two neurons is used to multiply the output signal of the first neuron, and the result is fed to the second neuron. Since multiplying by a number (the weight) is a linear map, a weight is used as a linear map between two 1D vector spaces during inference. Therefore, the weights of a neural network define a thin quiver representation of the delooped quiver $\delta Q$ of its network quiver Q every time it computes a prediction.

When a neural network computes a forward pass, we get a combination of two things:

1. A thin quiver representation.
2. Activation functions.

Definition 13. An activation function is a one-variable non-linear function that is differentiable except on a set of measure zero.

Remark 1. An activation function can, in principle, be linear. Nevertheless, neural network learning occurs with all its benefits only in the case where activation functions are fundamentally non-linear. Here, we want to provide a universal language for neural networks, so we will work with neural networks with non-linear activation functions, unless explicitly stated otherwise, for example as in our data representations in Section 6. We will encode the point-wise usage of activation functions as maps assigned to the loops of a network quiver.

Definition 14. A neural network over a network quiver Q is a pair $(W, f)$, where W is a thin representation of the delooped quiver $\delta Q$ and $f = (f_v)_v$, indexed by the hidden vertices v of Q, are activation functions assigned to the loops of Q.

An example of a neural network $(W, f)$ over a network quiver Q can be seen in Figure 3b. The words neuron and unit refer to the combinatorics of a vertex together with its activation function in a neural network over a network quiver. The weights of a neural network $(W, f)$ are the complex numbers defining the maps $W_\epsilon$ for all $\epsilon \in \mathcal{E}$.

When computing a prediction, we have to take into account two things:

Once a network quiver and a neural network are chosen, a decision has to be made on how to compute with the network. For example, a hidden neuron may compute an inner product of its inputs followed by the activation function, but others, like max-pooling, output the maximum of the input values. We account for this by specifying in the next definition how every type of vertex is used to compute.

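Definition 15. Let $(W, f)$ be a neural network over a network quiver Q and let $x \in \mathbb{C}^d$ be an input vector of the network. Denote by $\zeta_v$ the set of edges of Q with target v. The activation output of the vertex $v \in \mathcal{V}$ with respect to x after applying a forward pass is denoted $a(W,f)_v(x)$ and is computed as follows:

If $v \in \mathcal{V}$ is an input vertex, then $a(W,f)_v(x) = x_v$;

If $v \in \mathcal{V}$ is a bias vertex, then $a(W,f)_v(x) = 1$;

If $v \in \mathcal{V}$ is a hidden vertex, then $a(W,f)_v(x) = f_v\big(\sum_{\epsilon \in \zeta_v} W_\epsilon\, a(W,f)_{s(\epsilon)}(x)\big)$;

If $v \in \mathcal{V}$ is an output vertex, then $a(W,f)_v(x) = \sum_{\epsilon \in \zeta_v} W_\epsilon\, a(W,f)_{s(\epsilon)}(x)$;

If $v \in \mathcal{V}$ is a max-pooling vertex, then $a(W,f)_v(x) = \max_{\epsilon} \mathrm{Re}\big(W_\epsilon\, a(W,f)_{s(\epsilon)}(x)\big)$, where $\mathrm{Re}$ denotes the real part of a complex number, and the maximum is taken over all $\epsilon \in \mathcal{E}$ such that $t(\epsilon) = v$.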

We will see in the next section why average pooling vertices do not require a different computation rule: average pooling can be written in terms of these same rules.

The previous definition is equivalent to the basic operations of a neural net, which are affine transformations followed by point-wise non-linear activation functions; see Appendix A, where we clarify this with an example. The advantage of using the combinatorial expression of Definition 15 is twofold: (i) it allows one to represent any architecture, even randomly wired neural networks [32], and (ii) it simplifies the notation in proofs concerning the network function.
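To see the neuron-wise rules of Definition 15 side by side with the usual layer-wise view, here is a minimal sketch on a hypothetical network with two inputs, one bias vertex, two ReLU hidden neurons and one output. Vertices are assumed to be listed in a left-to-right (topological) order, edges are given without loops, and the toy weights are made up for illustration; the max-pooling branch follows the real-part rule of Definition 15.

```python
# Minimal sketch of Definition 15: neuron-wise forward pass on a delooped network
# quiver, compared with the usual layer-wise "affine map + ReLU" computation.

def forward(order, edges, kinds, W, f, x):
    """Return the activation outputs a(W,f)_v(x) for every vertex v.
    order: vertices listed left to right (a topological order);
    edges: list of (name, source, target) without loops;
    kinds: vertex -> 'input' | 'bias' | 'hidden' | 'output' | 'maxpool'."""
    a = {}
    for v in order:
        zeta = [(e, s) for e, s, t in edges if t == v]   # edges with target v
        if kinds[v] == "input":
            a[v] = x[v]
        elif kinds[v] == "bias":
            a[v] = 1.0
        elif kinds[v] == "maxpool":
            a[v] = max((W[e] * a[s]).real for e, s in zeta)
        else:
            pre = sum(W[e] * a[s] for e, s in zeta)
            a[v] = f[v](pre) if kinds[v] == "hidden" else pre
    return a

def relu(z):
    return max(z, 0.0)

order = ["x1", "x2", "b", "h1", "h2", "y"]
kinds = {"x1": "input", "x2": "input", "b": "bias",
         "h1": "hidden", "h2": "hidden", "y": "output"}
edges = [("e1", "x1", "h1"), ("e2", "x2", "h1"), ("e3", "b", "h1"),
         ("e4", "x1", "h2"), ("e5", "x2", "h2"), ("e6", "b", "h2"),
         ("e7", "h1", "y"), ("e8", "h2", "y")]
W = {"e1": 1.0, "e2": -2.0, "e3": 0.5, "e4": 3.0, "e5": 1.0, "e6": -1.0,
     "e7": 2.0, "e8": -1.0}
f = {"h1": relu, "h2": relu}
x = {"x1": 1.0, "x2": 0.25}
a = forward(order, edges, kinds, W, f, x)

# Layer-wise view of the same network: y = W2 relu(W1 x + b1).
W1, b1, W2 = [[1.0, -2.0], [3.0, 1.0]], [0.5, -1.0], [2.0, -1.0]
h = [relu(r[0] * x["x1"] + r[1] * x["x2"] + bias) for r, bias in zip(W1, b1)]
y = sum(w * hv for w, hv in zip(W2, h))
print(a["y"], y)   # -0.25 -0.25
```

The neuron-wise loop never forms a weight matrix, which is what lets the same rules describe arbitrary wirings; the layer-wise version is simply the same sums grouped by layer.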

For our purposes, it is convenient to consider no activation functions on the output vertices. This is consistent with current deep learning practices, as one can consider the activation functions of the output neurons to be part of the loss function (like softmax + cross-entropy, or as done by Dinh et al. [12]).

Definition 16. Let $(W, f)$ be a neural network over a network quiver Q. The network function of the neural network is the function
$$\Psi(W, f) : \mathbb{C}^d \to \mathbb{C}^k,$$
where the coordinates of $\Psi(W, f)(x)$ are the activation outputs of the output vertices of $(W, f)$ (often called the “score” of the neural net) with respect to an input vector $x \in \mathbb{C}^d$. The only difference in our approach is the combinatorial expression of Definition 15, which can be seen as a neuron-wise computation that, in practice, is performed by layers for implementation purposes. These expressions will be useful to prove our more general results.

We now extend the notion of isomorphism of quiver representations to isomorphism of neural networks. For this, we have to take into account that isomorphisms of quiver representations carry the commutative diagram conditions given by all the edges in the quiver, as shown in Figure 2. For neural networks, the activation functions are non-linear, but this does not prevent us from putting a commutative diagram condition on activation functions as well. Therefore, an isomorphism of quiver representations acts on a neural network in the sense of the following definition.

Definition 17. Let $(W, f)$ and $(V, g)$ be neural networks over the same network quiver Q. A morphism of neural networks $\tau : (W, f) \to (V, g)$ is a morphism of thin quiver representations $\tau : W \to V$ such that $\tau_v = 1$ for every vertex $v \in \mathcal{V}$ that is not a hidden vertex, and for every hidden vertex v the diagram formed by $f_v$, $g_v$ and $\tau_v$ is commutative, i.e., $\tau_v \circ f_v = g_v \circ \tau_v$. A morphism of neural networks $\tau$ is an isomorphism of neural networks if $\tau : W \to V$ is an isomorphism of quiver representations. We say that two neural networks over Q are isomorphic if there exists an isomorphism of neural networks between them.

Remark 2. The terms ‘network morphism’ [33], ‘isomorphic neural network’ and ‘isomorphic network structures’ [34,35] have already been used in different senses. In this work, we will not refer to any of those terms.

Definition 18. The hidden quiver of Q, denoted by $\gamma Q$, is given by the hidden vertices of Q and all the oriented edges between hidden vertices of Q that are not loops.

Said otherwise, $\gamma Q$ is the same as the delooped quiver $\delta Q$ but without the source and sink vertices.
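The vertex types of a network quiver, the delooped quiver $\delta Q$ and the hidden quiver $\gamma Q$ can all be computed mechanically from an edge list. The minimal sketch below assumes a hypothetical six-vertex network quiver given as `(name, source, target)` triples, with the input vertices supplied explicitly; it classifies vertices using non-loop edges only (valid because Definition 11 forbids loops on sources and sinks) and does not check the arranged-by-layers condition.

```python
# Minimal sketch: vertex types of a network quiver, its delooped quiver
# (Definition 12), its hidden quiver (Definition 18) and the loop condition of
# Definition 11. Edges are (name, source, target) triples.

def classify(vertices, edges, inputs):
    """Label each vertex as 'input', 'bias', 'hidden' or 'output'."""
    non_loops = [(s, t) for _, s, t in edges if s != t]
    has_incoming = {t for _, t in non_loops}
    has_outgoing = {s for s, _ in non_loops}
    kinds = {}
    for v in vertices:
        if v not in has_incoming:        # source vertex
            kinds[v] = "input" if v in inputs else "bias"
        elif v not in has_outgoing:      # sink vertex
            kinds[v] = "output"
        else:
            kinds[v] = "hidden"
    return kinds

def delooped(edges):
    """Remove every loop (edges whose source equals their target)."""
    return [e for e in edges if e[1] != e[2]]

def hidden_quiver(edges, kinds):
    """Keep the non-loop edges whose endpoints are both hidden vertices."""
    return [e for e in delooped(edges)
            if kinds[e[1]] == "hidden" and kinds[e[2]] == "hidden"]

def loops_ok(edges, kinds):
    """Exactly one loop on each hidden vertex and none elsewhere."""
    count = {v: 0 for v in kinds}
    for _, s, t in edges:
        if s == t:
            count[s] += 1
    return all(count[v] == (1 if kinds[v] == "hidden" else 0) for v in kinds)

vertices = ["x1", "x2", "b", "h1", "h2", "y"]
edges = [("e1", "x1", "h1"), ("e2", "x2", "h1"), ("e3", "b", "h1"),
         ("e4", "h1", "h2"), ("e5", "b", "h2"), ("e6", "h2", "y"),
         ("l1", "h1", "h1"), ("l2", "h2", "h2")]   # one loop per hidden vertex
kinds = classify(vertices, edges, inputs={"x1", "x2"})
print(hidden_quiver(edges, kinds))   # [('e4', 'h1', 'h2')]
print(loops_ok(edges, kinds))        # True
```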

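Definition 19. The group of change of basis for neural networks is denoted as
$$\mathcal{G} = \prod_{v \in \gamma Q} \mathbb{C}^*,$$
where the product runs over the hidden vertices of Q. An element of the change of basis group $\mathcal{G}$ is called a change of basis of the neural network $(W, f)$.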

Note that this group has as many factors as hidden vertices of Q. Given an element $\tau \in \mathcal{G}$, we can induce $\tau \in G$, where G is the change of basis group of thin representations over the delooped quiver $\delta Q$. We do this by assigning $\tau_v = 1$ for every vertex v that is not a hidden vertex. Therefore, we will simply write $\tau$ for elements of $\mathcal{G}$ considered as elements of G.

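The action of the group $\mathcal{G}$ on a neural network $(W, f)$ is defined on a given element $\tau \in \mathcal{G}$ and a neural network $(W, f)$ by
$$\tau \cdot (W, f) = (\tau \cdot W, \tau \cdot f),$$
where $\tau \cdot W$ is the thin representation such that for each edge $\epsilon \in \mathcal{E}$, the linear map $(\tau \cdot W)_\epsilon = \tau_{t(\epsilon)} W_\epsilon \tau_{s(\epsilon)}^{-1}$ follows the group action of Equation (1), and the activation $(\tau \cdot f)_v$ on the hidden vertex v is given by
$$(\tau \cdot f)_v(z) = \tau_v f_v(\tau_v^{-1} z) \quad \text{for all } z \in \mathbb{C}. \qquad (2)$$
Observe that $\tau \cdot (W, f)$ is a neural network such that $\tau : (W, f) \to \tau \cdot (W, f)$ is an isomorphism of neural networks. This leads us to the following theorem, which is an important cornerstone of our paper. Please refer to Appendix A for an illustration of this proof.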

Theorem 1. If $\tau : (W, f) \to (V, g)$ is an isomorphism of neural networks, then $\Psi(W, f) = \Psi(V, g)$.

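Proof. Let $\tau : (W, f) \to (V, g)$ be an isomorphism of neural networks over Q and $\epsilon \in \mathcal{E}$ an oriented edge of Q. Considering the group action of Equation (1), if $s(\epsilon)$ and $t(\epsilon)$ are hidden vertices, then $V_\epsilon = \tau_{t(\epsilon)} W_\epsilon \tau_{s(\epsilon)}^{-1}$. However, if $s(\epsilon)$ is a source vertex, then $\tau_{s(\epsilon)} = 1$ and $V_\epsilon = \tau_{t(\epsilon)} W_\epsilon$. Additionally, if $t(\epsilon)$ is an output vertex, then $\tau_{t(\epsilon)} = 1$ and $V_\epsilon = W_\epsilon \tau_{s(\epsilon)}^{-1}$. Furthermore, for every hidden vertex v we get the activation function $g_v(z) = \tau_v f_v(\tau_v^{-1} z)$ for all $z \in \mathbb{C}$.

We proceed with a forward pass to compare the activation outputs of both neural networks with respect to the same input vector. Let $x \in \mathbb{C}^d$ be the input vector of the networks. For every source vertex v we have
$$a(V,g)_v(x) = a(W,f)_v(x). \qquad (3)$$
Now let v be a vertex in the first hidden layer and $\zeta_v$ the set of edges between the source vertices and v. The activation output of v in $(W, f)$ is
$$a(W,f)_v(x) = f_v\Big(\sum_{\epsilon \in \zeta_v} W_\epsilon\, a(W,f)_{s(\epsilon)}(x)\Big).$$
As an illustration, if $(W, f)$ is the neural network of Figure 3, the source vertices, the vertices of the first hidden layer and the weights $W_\epsilon$, $\epsilon \in \zeta_v$, appearing in the previous equation can all be read off the figure. We now calculate in $(V, g)$ the activation output of the same vertex v. Since $\tau_{s(\epsilon)} = 1$ for every $\epsilon \in \zeta_v$, we have $V_\epsilon = \tau_v W_\epsilon$ and $g_v(z) = \tau_v f_v(\tau_v^{-1} z)$; and since $s(\epsilon)$ is a source vertex, it follows from Equation (3) that $a(V,g)_{s(\epsilon)}(x) = a(W,f)_{s(\epsilon)}(x)$ and
$$a(V,g)_v(x) = g_v\Big(\sum_{\epsilon \in \zeta_v} \tau_v W_\epsilon\, a(W,f)_{s(\epsilon)}(x)\Big) = \tau_v f_v\Big(\sum_{\epsilon \in \zeta_v} W_\epsilon\, a(W,f)_{s(\epsilon)}(x)\Big) = \tau_v\, a(W,f)_v(x).$$
Assume now that v is in the second hidden layer (e.g., vertex g or h in Figure 3). The activation output of v in $(V, g)$ is
$$a(V,g)_v(x) = g_v\Big(\sum_{\epsilon \in \zeta_v} V_\epsilon\, a(V,g)_{s(\epsilon)}(x)\Big),$$
and since $a(V,g)_{s(\epsilon)}(x) = \tau_{s(\epsilon)}\, a(W,f)_{s(\epsilon)}(x)$ from the equation above (with $\tau_{s(\epsilon)} = 1$ when $s(\epsilon)$ is a bias vertex), then
$$a(V,g)_v(x) = g_v\Big(\sum_{\epsilon \in \zeta_v} \tau_v W_\epsilon \tau_{s(\epsilon)}^{-1}\, \tau_{s(\epsilon)}\, a(W,f)_{s(\epsilon)}(x)\Big) = \tau_v\, a(W,f)_v(x).$$
Inductively, we obtain that $a(V,g)_v(x) = \tau_v\, a(W,f)_v(x)$ for every vertex $v \in \mathcal{V}$. Finally, the coordinates of $\Psi(V,g)(x)$ are the activation outputs of $(V, g)$ on the output vertices, and analogously for $\Psi(W,f)(x)$. Since $\tau_v = 1$ for every output vertex v, we obtain
$$\Psi(V,g)(x) = \Psi(W,f)(x) \quad \text{for all } x \in \mathbb{C}^d,$$
which proves that an isomorphism between two neural networks $(W, f)$ and $(V, g)$ preserves the network function. □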
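Theorem 1 can be checked numerically. The minimal sketch below builds a hypothetical complex-valued network (two inputs, three hidden neurons with complex `tanh` activations, one output), applies an arbitrary change of basis $\tau$ through Equations (1) and (2), and compares both forward passes; it also checks the intermediate identity $a(V,g)_v(x) = \tau_v\, a(W,f)_v(x)$ from the proof. The weights, $\tau$ values and the choice of `cmath.tanh` are assumptions made only for illustration.

```python
import cmath

# Minimal sketch: numerical check of Theorem 1 on a small complex network.
order = ["i1", "i2", "h1", "h2", "h3", "o"]
kinds = {"i1": "input", "i2": "input", "h1": "hidden", "h2": "hidden",
         "h3": "hidden", "o": "output"}
edges = [("a", "i1", "h1"), ("b", "i2", "h1"), ("c", "i2", "h2"),
         ("d", "h1", "h3"), ("e", "h2", "h3"), ("k", "h3", "o")]

def forward(W, f, x):
    """Activation outputs following Definition 15 (no bias, no max-pooling)."""
    a = {}
    for v in order:
        if kinds[v] == "input":
            a[v] = x[v]
            continue
        pre = sum(W[e] * a[s] for e, s, t in edges if t == v)
        a[v] = f[v](pre) if kinds[v] == "hidden" else pre
    return a

def act(tau, W, f):
    """Return tau . (W, f): Equation (1) on weights, Equation (2) on activations.
    tau holds one non-zero complex number per hidden vertex; tau_v = 1 elsewhere."""
    def scale(v):
        return tau.get(v, 1)
    V = {e: scale(tgt) * W[e] / scale(src) for e, src, tgt in edges}
    g = {v: (lambda z, fv=f[v], tv=tau[v]: tv * fv(z / tv)) for v in f}
    return V, g

W = {"a": 0.3 + 0.1j, "b": -0.7, "c": 1.2j, "d": 0.5, "e": -0.4 + 0.2j, "k": 1.1}
f = {v: cmath.tanh for v in ("h1", "h2", "h3")}
tau = {"h1": 2 - 1j, "h2": -0.5, "h3": 3j}   # arbitrary non-zero complex numbers
V, g = act(tau, W, f)

x = {"i1": 0.8, "i2": -1.3}
aW, aV = forward(W, f, x), forward(V, g, x)
print(abs(aW["o"] - aV["o"]) < 1e-9)                          # True
print(all(abs(aV[v] - tau[v] * aW[v]) < 1e-9 for v in tau))   # True
```

The default arguments `fv=f[v], tv=tau[v]` freeze each neuron's own activation and scaling factor inside its lambda; without them every lambda would see the last loop values.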

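Remark 3. Max-pooling represents a different operation to obtain the activation output of neurons. After applying an isomorphism τ to a neural network $(W, f)$ where the vertex $v \in \mathcal{V}$ is a max-pooling vertex, we obtain an isomorphic neural network $(V, g)$ whose activation output on vertex v is given by the following formula:
$$a(V,g)_v(x) = \max_{\epsilon} \mathrm{Re}\big(V_\epsilon\, a(V,g)_{s(\epsilon)}(x)\big),$$
and
$$V_\epsilon\, a(V,g)_{s(\epsilon)}(x) = \tau_v W_\epsilon \tau_{s(\epsilon)}^{-1}\, \tau_{s(\epsilon)}\, a(W,f)_{s(\epsilon)}(x) = \tau_v W_\epsilon\, a(W,f)_{s(\epsilon)}(x),$$
which is the main argument in the proof of the previous theorem, so the result applies to max-pooling. Note also that max-pooling vertices are positive scale invariant.

4.1. Consequences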

Representing a neural network over a network quiver Q by a pair $(W, f)$, together with Theorem 1, has two consequences for neural networks.

4.1.1. Consequence 1

Corollary 1. There are infinitely many neural networks with the same network function, independently of the architecture and the activation functions.

If each neuron of a neural network is assigned a change of basis value $\tau_v \in \mathbb{C}^*$, its weights W can be transformed to another set of weights V following the group action of Equation (1). Similarly, the activation functions f of that network can be transformed to other ones g following the group action of Equation (2). For example, if f is ReLU and $\tau_v$ is a negative real value, then g becomes an inverted-flipped ReLU function, i.e., $g_v(x) = -\mathrm{ReLU}(-x)$. From the usual neural network representation standpoint, the two neural networks $(W, f)$ and $(V, g)$ are different, as their activation functions f and g are different and their weights W and V are different. Nonetheless, their function (i.e., the output of the networks given some input vector x) is rigorously identical. This is true regardless of the structure of the neural network, its activation functions and weight vector W.

Said otherwise, Theorem 1 implies that there is not a unique neural network with a given network function, and that an infinite number of other neural networks with different weights and different activation functions have the same network function; these other neural networks may be obtained with the change of basis group $\mathcal{G}$.
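The ReLU example of Consequence 1 is easy to verify directly from Equation (2): for any negative $\tau_v$, the transformed activation $\tau_v \mathrm{ReLU}(\tau_v^{-1} x)$ coincides with $-\mathrm{ReLU}(-x) = \min(x, 0)$. The minimal sketch below checks this on a few sample points for a few hypothetical negative values of $\tau_v$; the helper name `transform` is illustrative.

```python
def relu(z):
    return max(z, 0.0)

def transform(f, tau):
    """Transformed activation (tau . f)(z) = tau * f(z / tau), as in Equation (2)."""
    return lambda z: tau * f(z / tau)

samples = [-3.0, -0.5, 0.0, 0.7, 4.0]
for tau in (-0.1, -1.0, -2.5):        # hypothetical negative change-of-basis values
    g = transform(relu, tau)
    # g coincides with the inverted-flipped ReLU z -> -relu(-z) = min(z, 0).
    print(all(abs(g(z) - (-relu(-z))) < 1e-12 for z in samples))   # True
```

Because the result does not depend on the magnitude of $\tau_v$, every negative change of basis on a ReLU neuron produces the same activation $\min(x, 0)$, while the weights on its incoming and outgoing edges change according to Equation (1).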

4.1.2. Consequence 2

A weak version of Theorem 1 proves a property of ReLU networks known as positive scale invariance or positive homogeneity [12,13,36,37,38]. Positive scale invariance is a property of ReLU non-linearities, where the network function remains unchanged if we (for example) multiply the weights in one layer of a network by a positive factor, and divide the weights on the next layer by that same positive factor. Even more, this can be done on a per-neuron basis: one can assign a positive factor $r > 0$ to a neuron, multiply every weight that points to that neuron by r, and divide every weight that starts on that neuron by r.

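Corollary 2 (Positive Scale Invariance of ReLU Networks). Let $(W, f)$ be a neural network over Q over the real numbers, where f is the ReLU activation function. Let $\tau = (\tau_v)_{v \in \mathcal{V}}$, where $\tau_v = 1$ if v is not a hidden vertex, and $\tau_v > 0$ for any other v. Then
$$\tau \cdot (W, f) = (\tau \cdot W, f).$$
As a consequence, $(W, f)$ and $(\tau \cdot W, f)$ are isomorphic neural networks. In particular, they have the same network function, $\Psi(W, f) = \Psi(\tau \cdot W, f)$.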

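Proof. Recall that $\tau \cdot (W, f) = (\tau \cdot W, \tau \cdot f)$. Since ReLU satisfies $\mathrm{ReLU}(rx) = r\,\mathrm{ReLU}(x)$ for all x and all $r > 0$, and since $(\tau \cdot f)_v$ corresponds to $\tau_v f_v(\tau_v^{-1} z)$ at each vertex v as mentioned in Equation (2), we get that $(\tau \cdot f)_v(z) = \tau_v \tau_v^{-1} f_v(z) = f_v(z)$ for each vertex v and thus $\tau \cdot f = f$. Finally, $\Psi(W, f) = \Psi(\tau \cdot (W, f)) = \Psi(\tau \cdot W, f)$. □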
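Corollary 2 can be illustrated on a standard one-hidden-layer ReLU network written layer-wise. The minimal sketch below assumes hypothetical weights and one positive factor $r_v$ per hidden neuron: the incoming weights of neuron v (including its bias edge, whose source is a bias vertex with $\tau = 1$) are multiplied by $r_v$ and its outgoing weights are divided by $r_v$, which is exactly $\tau \cdot W$ from Equation (1); the activations are left untouched.

```python
def relu(z):
    return max(z, 0.0)

def network(W1, b1, W2, x):
    """One hidden ReLU layer with bias, linear output: y = W2 relu(W1 x + b1)."""
    h = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * hv for w, hv in zip(row, h)) for row in W2]

def rescale(W1, b1, W2, r):
    """Apply tau_v = r[v] > 0 to hidden neuron v: multiply its incoming weights
    (bias edge included) by r[v] and divide its outgoing weights by r[v]."""
    W1s = [[w * rv for w in row] for row, rv in zip(W1, r)]
    b1s = [b * rv for b, rv in zip(b1, r)]
    W2s = [[w / rv for w, rv in zip(row, r)] for row in W2]
    return W1s, b1s, W2s

W1 = [[1.0, -2.0], [0.5, 3.0], [-1.0, 1.0]]
b1 = [0.1, -0.2, 0.3]
W2 = [[1.0, -1.0, 2.0]]
r = [4.0, 0.25, 10.0]                 # hypothetical positive factors, one per neuron

x = [-1.0, 2.0]
y = network(W1, b1, W2, x)
y_scaled = network(*rescale(W1, b1, W2, r), x)
print(all(abs(a - b) < 1e-9 for a, b in zip(y, y_scaled)))   # True
```

With a negative factor the same rescaling would generally change the output, because ReLU is only positively homogeneous; that case needs the modified activation of Equation (2).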

We stress that this known result is a consequence of neural networks being pairs $(W, f)$ whose structure is governed by representation theory, and therefore exposes the algebraic and combinatorial nature of neural networks.

5. Architecture

In this section, we first outline the different types of architectures that we consider. We also show how the commonly used layers for neural networks translate into quiver representations. Finally, we will present in detail how an isomorphism of neural networks can be chosen so that the structure of the weights gets preserved.

5.1. Types of Architectures

Definition 20 ([3], p. 193). The architecture of a neural network refers to its structure, which accounts for how many units (neurons) it has and how these units are connected together. For our purposes, we distinguish three types of architectures: combinatorial architecture, weight architecture and activation architecture.

Definition 21. The combinatorial architecture of a neural network is its network quiver. The weight architecture is given by constraints on how the weights are chosen, and the activation architecture is the set of activation functions assigned to the loops of the network quiver.

If we consider the neural network of Figure 3, the combinatorial architecture specifies how the vertices are connected together, the weight architecture specifies how the weights $W_\epsilon$ are assigned, and the activation architecture deals with the activation functions $f_v$.

Two neural networks may differ in their combinatorial, weight and activation architectures, as is the case, for example, for ResNet [7] and VGGNet [39]. Neural network layers may have the same combinatorial architecture but different activation and weight architectures. This is the case, for example, for a mean pooling layer and a convolution layer. While both encode a convolution (same combinatorial architecture), they have different activation architectures (as opposed to conv layers, mean pooling has no activation function) and different weight architectures, since the mean pooling weights are fixed, whereas on conv layers they are shared across the positions of each filter. This is what we mean by “constraints” on how the weights are chosen: weights in conv layers and mean pooling layers are not chosen freely, as they are in fully connected layers. Overall, two neural networks have globally the same architecture if and only if they share the same combinatorial, weight and activation architectures.

Additionally, isomorphic neural networks always have the same combinatorial architecture, since isomorphisms of neural networks are defined over the same network quiver. However, an isomorphism of neural networks may or may not change the weight and the activation architectures. We will return to this point at the end of this section.

5.2. Neural Network Layers

Here, we look at how fully-connected layers, convolutional layers, pooling layers, batch normalization layers and residual connections are related to the quiver representation language.

Let

be the set of vertices on the

j-th hidden layer of

Q. A

fully connected layer is a hidden layer

where all vertices on the previous layer are connected to all vertices in

. A

fully connected layer with bias is a hidden layer

that puts constraints on the previous layer

such that the non-bias vertices of

are fully connected with the non-bias vertices of layer

. A fully connected layer has no constraints on its weight and activation architecture but impose that the bias vertex has no activation function and not connected with the vertex of the previous layer. The reader can find an illustration of this in

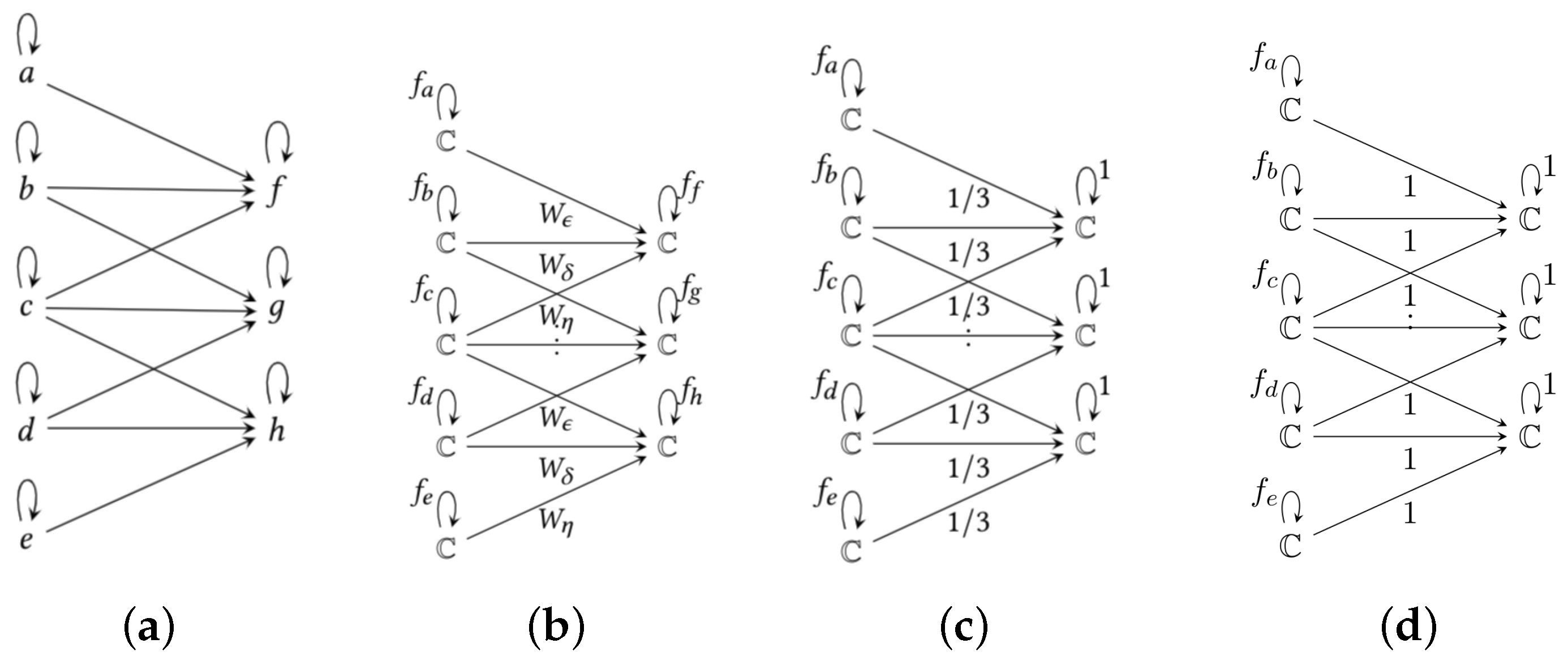

Figure 4.
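The bias vertex convention can be checked on a toy example. In this hedged Python sketch (the function names are hypothetical), a fully connected layer with bias is computed once as the familiar affine map and once on the quiver, where the previous layer is extended by a bias vertex whose output is the constant 1 and whose outgoing edge weights are the biases.

```python
def dense_affine(W, b, x):
    """Familiar fully connected layer with bias (pre-activations): W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def dense_with_bias_vertex(W, b, x):
    """Same layer on the quiver: the previous layer gets one extra bias vertex that
    has no incoming edges and no activation, so its output is always 1; the edge
    from the bias vertex to neuron i carries the weight b[i]."""
    x_ext = list(x) + [1.0]                               # output of the bias vertex
    W_ext = [list(row) + [bi] for row, bi in zip(W, b)]   # bias-edge weights as a last column
    return [sum(w * xi for w, xi in zip(row, x_ext)) for row in W_ext]

W = [[1.0, 2.0], [-1.0, 0.5]]
b = [0.5, -2.0]
x = [3.0, 4.0]
print(dense_affine(W, b, x))            # [11.5, -3.0]
print(dense_with_bias_vertex(W, b, x))  # [11.5, -3.0]
```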

A convolutional layer is a hidden layer whose vertices are separated into channels (or feature maps). The weights are typically organized in filters $(F_i)_{i=1}^{n}$, and each $F_i$ is a tensor made of channels. By “channels”, we mean that the shape of, for example, a 2D convolution is given by $w \times h \times c$, where $w$ is the width, $h$ is the height and $c$ is the number of channels on the previous layer. A “filter” is given by the weights and edges on a conv layer whose target lies in the same channel.

As opposed to fully connected layers, convolutional layers have constraints. One of them is that convolutional layers should be partitioned into channels of the same cardinality. Each filter $F_i$ produces a channel on the layer $V_j$ by a convolution of $V_{j-1}$ with the filter $F_i$. Moreover, a convolution operation has a stride and may use padding.

A convolutional layer also has constraints on its combinatorial and weight architecture. First, each vertex $v \in V_j$ is connected to a subset of the vertices in the previous layer, namely those “in front” of which it is located. The combinatorial architecture of a conv layer for one feature map is illustrated in Figure 5a. Second, the weight architecture requires that the weights of each filter repeat at every position of the sliding convolutional window. In other words, the weights of the edges on a conv layer must be shared across all positions of the window, as in Figure 5b.
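To make the two constraints concrete, here is a minimal Python sketch for a single-channel 1D convolution without padding (a simplifying assumption; the 2D multi-channel case only adds indices). The combinatorial architecture is the edge list, in which each output vertex is linked to the window of input vertices in front of it, and the weight architecture is the rule that an edge at offset `j` inside its window always carries `kernel[j]`. The function names are illustrative.

```python
def conv1d_direct(x, kernel, stride=1):
    """Reference 1D cross-correlation (what deep learning libraries call convolution), no padding."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return [sum(kernel[j] * x[i * stride + j] for j in range(k)) for i in range(n_out)]

def conv1d_quiver_edges(n_in, kernel_size, stride=1):
    """Combinatorial architecture: output vertex i only receives edges from the input
    vertices i*stride, ..., i*stride + kernel_size - 1 located 'in front' of it.
    Each edge is stored as (source, target, offset j inside the window)."""
    n_out = (n_in - kernel_size) // stride + 1
    return n_out, [(i * stride + j, i, j) for i in range(n_out) for j in range(kernel_size)]

def evaluate_on_quiver(x, kernel, n_out, edges):
    """Weight architecture: the edge at offset j carries kernel[j] for every window position,
    so the same few weights are shared by all edges of the layer."""
    out = [0.0] * n_out
    for src, tgt, j in edges:
        out[tgt] += kernel[j] * x[src]
    return out

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
kernel = [1.0, 0.0, -1.0]
n_out, edges = conv1d_quiver_edges(len(x), len(kernel), stride=1)
print(evaluate_on_quiver(x, kernel, n_out, edges))  # [-2.0, -2.0, -2.0, -2.0]
print(conv1d_direct(x, kernel, stride=1))           # [-2.0, -2.0, -2.0, -2.0]
```

The layer has 12 edges but only 3 distinct weights, which is exactly the constraint that distinguishes it from a fully connected layer.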

A conv layer with bias is a hidden layer $V_j$ partitioned into channels, where each channel is obtained by convolution of $V_{j-1}$ with each filter $F_i$, $i = 1,\dots,n$, plus one bias vertex in layer $V_{j-1}$ that is connected to every vertex on every channel of $V_j$. The weights of the edges starting on the bias vertex should repeat within the same channel. Again, bias vertices do not have an activation function and are not connected to neurons of the previous layer.

The combinatorial architecture of a pooling layer is the same as that of a conv layer, see Figure 5a. However, since the purpose of that operation is usually to reduce the size of the previous layer, its weights are fixed and not trainable. Thus, pooling layers have a different weight architecture than conv layers. Average pooling fixes the weights in a layer to $1/n$, where $n$ is the size of the pooling window, while max-pooling fixes the weights in a layer to 1 and outputs the maximum over each window in the previous layer. Additionally, the activation function of an average or max-pooling layer is the identity function. This can be seen in Figure 5c,d.
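A short Python sketch, assuming a 1D input and a window size and stride chosen for illustration, shows average pooling as a layer with conv-like connectivity, fixed edge weights $1/n$ and identity activation. Max-pooling is included only for comparison: taking the maximum over a window is not a weighted sum with fixed weights, which is the extra operation mentioned in Remark 4.

```python
def avg_pool1d_as_layer(x, window, stride):
    """Average pooling as a quiver layer: same edges as a conv layer with kernel size
    `window`, every edge weight fixed (not trainable) to 1/window, identity activation."""
    fixed_weight = 1.0 / window
    n_out = (len(x) - window) // stride + 1
    return [sum(fixed_weight * x[i * stride + j] for j in range(window)) for i in range(n_out)]

def max_pool1d(x, window, stride):
    """Max-pooling for comparison: the maximum over each window is not a weighted sum."""
    n_out = (len(x) - window) // stride + 1
    return [max(x[i * stride:i * stride + window]) for i in range(n_out)]

x = [1.0, 3.0, 5.0, 7.0]
print(avg_pool1d_as_layer(x, window=2, stride=2))  # [2.0, 6.0]
print(max_pool1d(x, window=2, stride=2))           # [3.0, 7.0]
```

Because average pooling is linear in its input, it composes with the rest of the quiver representation machinery, whereas max-pooling does not.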

Remark 4. Max-pooling layers are compatible with our constructions, but they force us to consider another operation in the neuron, as was noted in Definition 15.

It is known that max-pooling layers give a small amount of translation invariance at each level, since the precise location of the most active feature detector is discarded, and this has raised doubts about the use of max-pooling layers, see [40,41]. An alternative is the use of attention-based pooling [42], which is a global-average pooling. Our interpretation provides a framework that explains why these doubts exist: max-pooling layers break the algebraic structure of the computations of a neural network. In contrast, average pooling layers, and therefore global-average pooling layers, are fully consistent with our results, since they are given by fixed weights for any input vector and do not require specifying another operation.

Batch normalization layers [43] require specifications of the three types of architecture. Their combinatorial architecture is given by two identical consecutive hidden layers where each neuron on the first is connected to only one neuron on the second, and there is one bias vertex in each layer. The weight architecture is given by the batch norm operation, which is

$$\frac{x - \mu}{\sigma}\,\gamma + \beta,$$

where $\mu$ is the mean of a batch and $\sigma^2$ its variance, and $\gamma$ and $\beta$ are learnable parameters. The activation architecture is given by two identity activations. This can be seen in Figure 6.
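One way to distribute the batch norm operation over the two hidden layers is sketched below in Python. The split used here (first layer: edge weight $1/\sigma$ and bias-edge weight $-\mu/\sigma$; second layer: edge weight $\gamma$ and bias-edge weight $\beta$) is an assumption for illustration and may differ from the exact weight placement in Figure 6. The small constant that libraries usually add to the variance is omitted to match the formula above.

```python
import math

def batch_norm_direct(z, mu, var, gamma, beta):
    """Inference-time batch norm for one neuron: (z - mu) / sigma * gamma + beta, sigma = sqrt(var)."""
    return (z - mu) / math.sqrt(var) * gamma + beta

def batch_norm_two_layers(z, mu, var, gamma, beta):
    """Same map on the quiver: two consecutive hidden layers, each neuron linked to exactly
    one neuron of the next layer, a bias vertex (output 1) feeding each layer, identity activations."""
    sigma = math.sqrt(var)
    h = (1.0 / sigma) * z + (-mu / sigma) * 1.0   # first layer: weight 1/sigma, bias weight -mu/sigma
    return gamma * h + beta * 1.0                 # second layer: weight gamma, bias weight beta

for z in (-2.0, 0.0, 0.7, 3.5):
    print(batch_norm_direct(z, 1.0, 4.0, 0.5, 0.1), batch_norm_two_layers(z, 1.0, 4.0, 0.5, 0.1))
```

Both columns agree up to floating-point rounding, and every weight in the two-layer version is fixed once $\mu$, $\sigma$, $\gamma$ and $\beta$ are known.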

Remark 5. The weights μ and σ are not determined until the network is fed with a batch of data. However, at test time, μ and σ are set to the overall mean and standard deviation computed across the training data set and thus become ordinary weights. This does not mean that the architecture of the network depends on the input vector, but that these particular weights are chosen by obtaining the mean and variance from the data.

The combinatorial architecture of a residual connection [7] requires the existence of edges in $Q$ that jump over one or more layers. Their weight architecture forces the weights chosen for those edges to always be equal to 1. We refer to Figure 7 for an illustration of the architecture of a residual connection.

5.3. Architecture Preserved by Isomorphisms

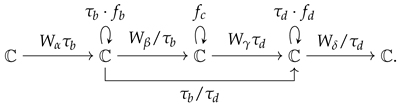

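Two isomorphic neural networks can have different weight architectures. Let us illustrate this with a residual connection. Let $Q$ be a network quiver containing a skip edge $\epsilon$ that jumps over one or more layers, and let $(W,f)$ be a neural network over $Q$ with $W_\epsilon = 1$, so that $\epsilon$ is a residual connection. Given non-zero numbers $\tau_v$ for the hidden vertices $v$ of $Q$ (and $\tau_v = 1$ for every other vertex), we define a change of basis $\tau$ of the neural network. After applying the action of $\tau$ we obtain the isomorphic neural network $\tau\cdot(W,f)$, whose weight on the skip edge is $(\tau\cdot W)_\epsilon = \tau_{t(\epsilon)}\,\tau_{s(\epsilon)}^{-1}$.

The neural networks $(W,f)$ and $\tau\cdot(W,f)$ are isomorphic and therefore they have the same network function by Theorem 1. However, the neural network $(W,f)$ has a residual connection, while $\tau\cdot(W,f)$ does not when $\tau_{t(\epsilon)} \neq \tau_{s(\epsilon)}$, since the weight on the skip connection is then not equal to 1. Nevertheless, if we take $\tau_{t(\epsilon)} = \tau_{s(\epsilon)}$, then the change of basis produces an isomorphic neural network with a residual connection, and therefore both neural networks $(W,f)$ and $\tau\cdot(W,f)$ have the same weight architecture.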
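The following Python sketch illustrates this on a hypothetical toy quiver (input `x`, hidden vertices `u`, `m`, `w`, output `y`, and a skip edge `u -> w` jumping over `m`). It implements the action $W_\epsilon \mapsto \tau_{t(\epsilon)}W_\epsilon\tau_{s(\epsilon)}^{-1}$ and $f_v \mapsto \tau_v f_v(\tau_v^{-1}\,\cdot\,)$, then checks that the network function is unchanged while the skip weight becomes $\tau_w/\tau_u$. Vertex names, weights and factors are made up for the example.

```python
def relu(z):
    return max(0.0, z)

# Hypothetical toy network quiver, vertices listed in topological order.
VERTICES = ["x", "u", "m", "w", "y"]
HIDDEN = {"u", "m", "w"}
EDGES = [("x", "u"), ("u", "m"), ("m", "w"), ("u", "w"), ("w", "y")]  # ("u", "w") is the skip edge

def network_function(weights, act, x_value):
    """Forward pass: the input vertex outputs x_value, hidden vertices apply their
    activation to the pre-activation, and the output vertex has no activation."""
    out = {"x": x_value}
    for v in VERTICES[1:]:
        pre = sum(weights[(s, t)] * out[s] for (s, t) in EDGES if t == v)
        out[v] = act[v](pre) if v in HIDDEN else pre
    return out["y"]

def rescaled(f, c):
    """Action of a factor c on an activation function: z -> c * f(z / c)."""
    return lambda z: c * f(z / c)

def change_of_basis(weights, act, tau):
    """Action of tau (tau_v = 1 on non-hidden vertices): W_e -> tau_t * W_e / tau_s."""
    new_weights = {(s, t): tau.get(t, 1.0) * w / tau.get(s, 1.0) for (s, t), w in weights.items()}
    new_act = {v: rescaled(f, tau.get(v, 1.0)) for v, f in act.items()}
    return new_weights, new_act

weights = {("x", "u"): 0.7, ("u", "m"): 1.3, ("m", "w"): 0.4, ("u", "w"): 1.0, ("w", "y"): 2.0}
act = {v: relu for v in HIDDEN}

tau = {"u": 2.0, "m": -0.5, "w": 3.0}
w_iso, act_iso = change_of_basis(weights, act, tau)
print(w_iso[("u", "w")])  # 1.5 = tau_w / tau_u, so the skip edge is no longer a residual connection

tau_res = {"u": 2.0, "m": -0.5, "w": 2.0}  # equal factors at both ends of the skip edge
w_res, act_res = change_of_basis(weights, act, tau_res)
print(w_res[("u", "w")])  # 1.0, the residual connection is preserved
```

In both cases `network_function` returns the same values as for the original weights, as Theorem 1 predicts.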

The same phenomenon as for residual connections happens for convolutions, where one has to choose a specific kind of isomorphism to preserve the weight architecture, as shown in Figure 8. Isomorphisms of neural networks preserve the combinatorial architecture, but not necessarily the weight architecture or the activation architecture.

Remark 6. With the constructions given above, any architecture, such as multilayer perceptrons, VGGNet, ResNet, DenseNet, and so on, can be written down as a neural network over a network quiver in the sense of Definition 14.

5.4. Consequences

As in the previous section, expressing neural network layers through the basic definitions of quiver representation theory has some consequences. Let us mention two.

5.4.1. Consequence 1

The first consequence derives from the isomorphism of residual layers. Meng et al. [13] claim that there is no positive scale invariance across residual blocks. However, the quiver representation language allows us to prove that there is in fact positive scale invariance across residual blocks for ReLU networks. Therefore, isomorphisms reveal that neural networks have far more symmetries than was previously known, as noted in Section 5.3, which can be written as follows:

Corollary 3. There is invariance across residual blocks under isomorphisms of neural networks.

5.4.2. Consequence 2

The second consequence is related to the existence of isomorphisms that preserve the weight architecture but not the activation architecture. As in Figure 8, a change of basis $\tau \in G$ that preserves the weight architecture of this convolutional layer has to be constant on each channel, that is, it must assign the same non-zero factor to every vertex of a given channel. This is what Meng et al. [13] do for the particular case of ReLU networks and positive changes of basis (they consider the action of a group of positive rescalings on neural networks). Note that if the change of basis is not chosen in this way, the isomorphism will produce a layer whose weights are no longer repeated across the positions of each convolutional filter, and therefore the resulting operation will not be a convolution with respect to the same filter. While positive scale invariance of ReLU networks is a special kind of invariance under isomorphisms of neural networks that preserve both the weight and the activation architecture, we may generalize this notion by allowing isomorphisms to change the activation architecture while preserving the weight architecture.
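A minimal Python check of this claim for one conv layer, under simplifying assumptions (one input channel, one output channel, 1D, no padding, previous layer left unscaled, and the compensating division on the next layer's weights not shown): scaling every vertex of the channel by the same factor keeps a single shared filter, while scaling the vertices of the channel differently does not. The function names are illustrative.

```python
def conv_edges(n_in, kernel_size, stride=1):
    """Edges (source, target, offset j in the window) of a single-channel 1D conv layer, no padding."""
    n_out = (n_in - kernel_size) // stride + 1
    return [(i * stride + j, i, j) for i in range(n_out) for j in range(kernel_size)]

def act_on_conv_layer(kernel, edges, tau_out):
    """Change of basis on the conv-layer vertices only (previous layer keeps factor 1):
    the weight on edge (src -> tgt) becomes tau_out[tgt] * kernel[j]."""
    return {(s, t): tau_out[t] * kernel[j] for (s, t, j) in edges}

def is_single_filter(new_weights, edges, tol=1e-12):
    """True if all edges with the same offset j still carry the same weight,
    i.e. the layer is still a convolution with one shared filter."""
    shared = {}
    for (s, t, j) in edges:
        w = new_weights[(s, t)]
        if abs(shared.setdefault(j, w) - w) > tol:
            return False
    return True

kernel = [1.0, -2.0, 0.5]                  # non-zero entries, so differing factors are detected
edges = conv_edges(n_in=6, kernel_size=3)  # 4 vertices in the conv channel
print(is_single_filter(act_on_conv_layer(kernel, edges, [3.0, 3.0, 3.0, 3.0]), edges))  # True
print(is_single_filter(act_on_conv_layer(kernel, edges, [1.0, 2.0, 1.0, 1.0]), edges))  # False
```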

Definition 22. Let $(W,f)$ be a neural network and let $\tau \in G$ be an element of the group of change of basis of neural networks such that the isomorphic neural network $\tau\cdot(W,f)$ has the same weight architecture as $(W,f)$. The teleportation of the neural network $(W,f)$ with respect to $\tau$ is the neural network $\tau\cdot(W,f)$.

Since teleportation preserves the weight architecture, it follows that the teleportation of a conv layer is a conv layer, the teleportation of a pooling layer is a pooling layer, the teleportation of a batch norm layer is a batch norm layer, and the teleportation of a residual block is a residual block. Teleportation produces a neural network with the same combinatorial architecture, weight architecture and network function, while it may change the activation architecture. For example, consider a neural network with ReLU activations and a real change of basis. Since ReLU is positive scale invariant, any positive change of basis will leave ReLU invariant. On the other hand, for a negative factor $\tau_v < 0$ the activation function at $v$ changes to $z \mapsto \tau_v\,\mathrm{ReLU}(\tau_v^{-1}z) = \min(z, 0)$, and therefore the weight optimization landscape also changes. This implies that teleportation may change the optimization problem by changing the activation functions while preserving the network function, and the network gets “teleported” either to another place in the same loss landscape (if the activation functions are not changed) or to a completely different loss landscape (if the activation functions are changed).
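The following Python sketch, with hypothetical weights, teleports a one-hidden-layer ReLU network using a real change of basis with one negative factor. Fully connected layers impose no constraint on their weights, so any change of basis preserves their weight architecture. The neuron with the negative factor ends up with the activation $z \mapsto \tau\,\mathrm{ReLU}(z/\tau) = \min(z, 0)$, while the network function is unchanged.

```python
def relu(z):
    return max(0.0, z)

def rescaled(f, c):
    """Action of a factor c on an activation function: z -> c * f(z / c)."""
    return lambda z: c * f(z / c)

def forward(W1, b1, acts, W2, x):
    """Hidden neuron i uses its own activation acts[i]; the output layer is linear."""
    hidden = [f(sum(w * xi for w, xi in zip(row, x)) + b) for row, b, f in zip(W1, b1, acts)]
    return [sum(w * h for w, h in zip(row, hidden)) for row in W2]

def teleport(W1, b1, acts, W2, tau):
    """Change of basis with factor tau[i] != 0 on hidden neuron i: incoming weights
    (and bias-edge weights) times tau[i], outgoing weights divided by tau[i],
    activation f_i replaced by z -> tau[i] * f_i(z / tau[i])."""
    W1t = [[w * t for w in row] for row, t in zip(W1, tau)]
    b1t = [b * t for b, t in zip(b1, tau)]
    actst = [rescaled(f, t) for f, t in zip(acts, tau)]
    W2t = [[w / t for w, t in zip(row, tau)] for row in W2]
    return W1t, b1t, actst, W2t

W1 = [[1.0, -1.0], [0.5, 2.0]]
b1 = [0.1, -0.3]
W2 = [[1.0, -2.0]]
acts = [relu, relu]
tau = [-1.5, 2.0]  # negative factor on the first hidden neuron

W1t, b1t, actst, W2t = teleport(W1, b1, acts, W2, tau)
for x in ([0.3, -0.8], [-1.0, 0.4], [2.0, 1.0]):
    print(forward(W1, b1, acts, W2, x), forward(W1t, b1t, actst, W2t, x))  # equal up to rounding
print([actst[0](z) == min(z, 0.0) for z in (-3.0, 2.0)])  # [True, True]
```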

6. Data Representations

In machine learning, a data sample is usually represented by a vector, a matrix or a tensor containing a series of observed variables. However, one may view data from a different perspective, namely the neuron outputs obtained after a forward pass, also known as “feature maps” for conv nets [3]. This has been done in the past to visualize what neurons have learned [11,21,23].

In this section, we propose a mathematical description of the data in terms of the architecture of the neural network, i.e., the neuron values obtained after a forward pass. We shall prove that doing so allows us to represent data by a quiver representation. Our approach is different from representation learning [3] (p. 4) because we do not focus on how the representations are learned but rather on how the representations of the data are encoded by the forward pass of the neural network.

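Definition 23. A labeled data set is given by a finite set of pairs $D = \{(x_i, t_i)\}_{i=1}^{n}$ such that $x_i \in \mathbb{C}^d$ is a data vector (it could also be a matrix or a tensor) and $t_i$ is a target. We can have $t_i \in \mathbb{C}^k$ for a regression and $t_i$ a class label from a finite set of classes for a classification.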

Let $(W,f)$ be a neural network over a network quiver $Q$ and let $(x,t)$ be a sample of a data set $D$. When the network processes the input $x$, the vector $x$ percolates through the edges and the vertices from the input to the output of the network. As mentioned before, this results in neuron values (or feature maps) that one can visualize [21]. On their own, the neuron values do not form a quiver representation. However, one can combine these neuron values with their pre-activations and the network weights to obtain a thin quiver representation. Since that representation derives from the forward pass of $x$, it is specific to it. We evaluate the activation functions in each neuron and then use them to construct a quiver representation for a given input. We stress that this process does not ignore the very important non-linearity of the activation functions, so no information about the forward pass is lost in this interpretation.

Remark 7. Every thin quiver representation $V$ of the delooped quiver $Q^\circ$ defines a neural network over the network quiver $Q$ with identity activations, which we denote $(V,\mathrm{id})$. We do not claim that using identity activation functions in a neural network will give good results in usual deep learning practice. This is only a theoretical trick to manipulate the underlying algebraic objects we have constructed. As such, we will identify thin quiver representations $V$ with neural networks with identity activation functions $(V,\mathrm{id})$.

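Our data representation for $x$ is a thin representation that we call $W^f_x$, with identity activations, whose network function, when fed with the input vector of ones $\mathbf{1}_d = (1,\dots,1) \in \mathbb{C}^d$, satisfies

$$\Psi(W^f_x,\mathrm{id})(\mathbf{1}_d) = \Psi(W,f)(x),$$

where $\Psi(W,f)(x)$ is the score of the network $(W,f)$ after a forward pass of $x$.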

Recovering $W^f_x$ given the forward pass of $x$ through $(W,f)$ is illustrated in Figure 9a,b. Let us keep track of the computations of the network in the thin quiver representation $W^f_x$, and remember that at the end we want the output of the neural network $(W^f_x,\mathrm{id})$, when fed with the input vector $\mathbf{1}_d$, to be equal to $\Psi(W,f)(x)$.

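If $\epsilon$ is an oriented edge such that $s(\epsilon)$ is a bias vertex, then the computations of the weight corresponding to $\epsilon$ get encoded as $(W^f_x)_\epsilon = W_\epsilon$. If, on the other hand, $s(\epsilon)$ is an input vertex, then the computations of the weights on the first layer get encoded as $(W^f_x)_\epsilon = W_\epsilon\,x_{s(\epsilon)}$, see Figure 9b.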

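On the second and subsequent layers of the network $(W,f)$ we encounter activation functions. Additionally, the weight corresponding to an oriented edge $\epsilon$ in $W^f_x$ will have to cancel the unnecessary computations coming from the previous layer. That is, $(W^f_x)_\epsilon$ has to be equal to $W_\epsilon$ times the activation output of the vertex $s(\epsilon)$ divided by the pre-activation of $s(\epsilon)$. Overall, $W^f_x$ is defined as

$$(W^f_x)_\epsilon = \begin{cases} W_\epsilon\, x_{s(\epsilon)} & \text{if } s(\epsilon) \text{ is an input vertex},\\[4pt] W_\epsilon & \text{if } s(\epsilon) \text{ is a bias vertex},\\[4pt] W_\epsilon\,\dfrac{a(W,f)_{s(\epsilon)}(x)}{\sum_{\beta\in\zeta_{s(\epsilon)}} W_\beta\, a(W,f)_{s(\beta)}(x)} & \text{if } s(\epsilon) \text{ is a hidden vertex},\end{cases} \tag{5}$$

where $a(W,f)_v(x)$ denotes the activation output of vertex $v$ when $(W,f)$ is fed with $x$ (equal to $x_v$ at an input vertex $v$ and to 1 at a bias vertex), and $\zeta_{s(\epsilon)}$ is the set of oriented edges of $Q$ with target $s(\epsilon)$. In the case where the activation function is ReLU, for an oriented edge $\epsilon$ such that $s(\epsilon)$ is a hidden vertex, either $(W^f_x)_\epsilon = 0$ or $(W^f_x)_\epsilon = W_\epsilon$.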

Remark 8. Observe that the denominator is the pre-activation of vertex $s(\epsilon)$ and can be equal to zero. However, the set where this happens is of measure zero. Even in the case that it turns out to be exactly zero, one can add a small number $\eta$ to make it non-zero and then consider $\eta$ as the pre-activation of that corresponding neuron, see Figure 9c. Therefore, we will assume, without loss of generality, that pre-activations of neurons are always non-zero.

The quiver representation $W^f_x$ of the delooped quiver $Q^\circ$ accounts for the combinatorics of the history of all the computations that the neural network $(W,f)$ performs on a forward pass given the input $x$. The main property of the quiver representation $W^f_x$ is given by the following result. A small example of the computation of $W^f_x$ and a view into how the next theorem works can be found in Appendix B.

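Theorem 2. Let $(W,f)$ be a neural network over $Q$, let $x \in \mathbb{C}^d$ be a data sample for $(W,f)$ and consider the induced thin quiver representation $W^f_x$ of $Q^\circ$. The network function of the neural network $(W^f_x,\mathrm{id})$ satisfies

$$\Psi(W^f_x,\mathrm{id})(\mathbf{1}_d) = \Psi(W,f)(x), \qquad \text{where } \mathbf{1}_d = (1,\dots,1) \in \mathbb{C}^d.$$

Proof. The two neural networks have different input vectors, namely $\mathbf{1}_d$ for $(W^f_x,\mathrm{id})$ and $x$ for $(W,f)$. If $v$ is a source vertex, by definition $a(W^f_x,\mathrm{id})_v(\mathbf{1}_d) = 1$. We will show that in the other layers, the activation output of a vertex in $(W^f_x,\mathrm{id})$ is equal to the pre-activation of $(W,f)$ in that same vertex. Assume that $v$ is in the first hidden layer, let $\zeta^b_v$ be the set of oriented edges of $Q$ with target $v$ and source a bias vertex, and let $\zeta^i_v$ be the set of oriented edges of $Q$ with target $v$ and source an input vertex. Then, for every $\epsilon \in \zeta^i_v$, we have that $(W^f_x)_\epsilon = W_\epsilon\, x_{s(\epsilon)}$, and therefore

$$a(W^f_x,\mathrm{id})_v(\mathbf{1}_d) = \sum_{\epsilon\in\zeta^b_v} W_\epsilon + \sum_{\epsilon\in\zeta^i_v} W_\epsilon\, x_{s(\epsilon)},$$

which is the pre-activation of vertex $v$ in $(W,f)$, i.e., $a(W^f_x,\mathrm{id})_v(\mathbf{1}_d) = \sum_{\epsilon\in\zeta_v} W_\epsilon\, a(W,f)_{s(\epsilon)}(x)$. If $v$ is in the second hidden layer, then for every $\epsilon \in \zeta_v$ whose source is a hidden vertex,

$$(W^f_x)_\epsilon = W_\epsilon\,\frac{a(W,f)_{s(\epsilon)}(x)}{a(W^f_x,\mathrm{id})_{s(\epsilon)}(\mathbf{1}_d)},$$

since, by the above formula, $a(W^f_x,\mathrm{id})_{s(\epsilon)}(\mathbf{1}_d)$ is the pre-activation of vertex $s(\epsilon)$ in $(W,f)$. We get that $(W^f_x)_\epsilon\, a(W^f_x,\mathrm{id})_{s(\epsilon)}(\mathbf{1}_d) = W_\epsilon\, a(W,f)_{s(\epsilon)}(x)$, which also holds trivially for edges whose source is a bias vertex, and then

$$a(W^f_x,\mathrm{id})_v(\mathbf{1}_d) = \sum_{\epsilon\in\zeta_v} (W^f_x)_\epsilon\, a(W^f_x,\mathrm{id})_{s(\epsilon)}(\mathbf{1}_d) = \sum_{\epsilon\in\zeta_v} W_\epsilon\, a(W,f)_{s(\epsilon)}(x),$$

which is the pre-activation of vertex $v$ in $(W,f)$ when fed with the input vector $x$. An induction argument gives the desired result, since the output layer has no activation function, and the coordinates of $\Psi(W^f_x,\mathrm{id})(\mathbf{1}_d)$ and $\Psi(W,f)(x)$ are the values of the output vertices. □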
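The construction in Equation (5) and the statement of Theorem 2 can be checked numerically. The Python sketch below uses a hypothetical toy network quiver with two input vertices, two bias vertices, a ReLU hidden layer, a tanh hidden vertex and one output; it builds $W^f_x$ edge by edge and compares the output of $(W^f_x,\mathrm{id})$ on the all-ones input with the output of $(W,f)$ on $x$. Pre-activations are assumed non-zero, as in Remark 8, and all names and numbers are illustrative.

```python
import math

def relu(z):
    return max(0.0, z)

# Hypothetical toy network quiver; dicts keep insertion order, which is a topological order.
KIND = {"x1": "input", "x2": "input", "b0": "bias",
        "h1": "hidden", "h2": "hidden", "b1": "bias",
        "g1": "hidden", "y": "output"}
W = {("x1", "h1"): 0.8, ("x2", "h1"): -0.5, ("b0", "h1"): 0.1,
     ("x1", "h2"): -1.2, ("x2", "h2"): 0.9, ("b0", "h2"): 0.3,
     ("h1", "g1"): 1.5, ("h2", "g1"): -0.7, ("b1", "g1"): 0.2,
     ("g1", "y"): 2.0, ("h1", "y"): -0.4}
F = {"h1": relu, "h2": relu, "g1": math.tanh}

def forward(weights, acts, x):
    """Return (pre-activations, activation outputs) of every vertex for the input x."""
    pre, out = {}, {}
    inputs = iter(x)
    for v, kind in KIND.items():
        if kind == "input":
            out[v] = next(inputs)
        elif kind == "bias":
            out[v] = 1.0
        else:
            pre[v] = sum(w * out[s] for (s, t), w in weights.items() if t == v)
            out[v] = acts[v](pre[v]) if kind == "hidden" else pre[v]
    return pre, out

def data_representation(weights, acts, x):
    """Thin quiver representation W^f_x of Equation (5); assumes non-zero pre-activations."""
    pre, out = forward(weights, acts, x)
    Wx = {}
    for (s, t), w in weights.items():
        if KIND[s] == "input":
            Wx[(s, t)] = w * out[s]              # W_e * x_{s(e)}
        elif KIND[s] == "bias":
            Wx[(s, t)] = w                       # W_e
        else:
            Wx[(s, t)] = w * out[s] / pre[s]     # W_e * activation / pre-activation of s(e)
    return Wx

identity = {v: (lambda z: z) for v in F}
x = [0.6, -1.1]
Wx = data_representation(W, F, x)
_, out = forward(W, F, x)
_, out_ones = forward(Wx, identity, [1.0, 1.0])
print(out["y"], out_ones["y"])  # equal up to floating-point rounding (Theorem 2)
```

For this input the ReLU neuron `h2` is inactive, so every edge leaving it gets weight 0 in $W^f_x$, while edges leaving the active ReLU neuron `h1` keep their original weights, in line with the ReLU case discussed after Equation (5).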

6.1. Consequences

Interpreting data as quiver representations has several consequences.

6.1.1. Consequence 1

The combinatorial architectures of $(W,f)$ and of $(W^f_x,\mathrm{id})$ are equal, and the weight architecture of $(W^f_x,\mathrm{id})$ is determined by both the weight and activation architectures of the neural network $(W,f)$ when it is fed the input vector $x$. This means that even though the network function is non-linear because of the activation functions, all computations of the forward pass of a network on a given input vector can be arranged into a linear object (the quiver representation $W^f_x$), while preserving the output of the network, by Theorem 2.

Even more, feature maps and outputs of hidden neurons can be recovered completely from the quiver representations $W^f_x$, which implies that the notion [11,23] of representation created by a neural network in deep learning is a mathematical consequence of understanding data as quiver representations.

It is well known that feature maps can be visualized as images showing the input signal characteristics, thus providing intuitions on the behavior of the network and its impact on an image [11,21,22,23]. This notion is implied by our findings, as our thin quiver representations of data $W^f_x$ include both the network structure and the feature maps induced by the data, expressed by the formula

$$a(W,f)_v(x) = f_v\big(a(W^f_x,\mathrm{id})_v(\mathbf{1}_d)\big) \quad \text{for every hidden vertex } v,$$

see Equation (5) and the proof of Theorem 2.

In practice, there is no point in computing the quiver representation only to recover the outputs of hidden neurons, which are computed more efficiently directly from the forward pass of the data. Nevertheless, the outputs of hidden neurons are obtained from the quiver representations by forgetting algebraic structure, more specifically by forgetting pieces of the quiver, which is formalized by the notion of forgetful functors in representation theory. All this implies that the notion of representation in deep learning is obtained from the quiver representations by losing information about the computations of the neural network.

As such, using a thin quiver representation opens the door to a formal (and less intuitive) way to understand the interaction between data and the structure of a network, that takes into account all the combinatorics of the network and not only the activation outputs of the neurons, as it is currently understood.

6.1.2. Consequence 2

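Corollary 4. Let $x$ and $y$ be data samples for $(W,f)$. If the quiver representations $W^f_x$ and $W^f_y$ are isomorphic via $G$, then $\Psi(W,f)(x) = \Psi(W,f)(y)$.

Proof. The neural networks $(W^f_x,\mathrm{id})$ and $(W^f_y,\mathrm{id})$ are isomorphic if and only if the quiver representations $W^f_x$ and $W^f_y$ are isomorphic via $G$. By the last theorem and the fact that isomorphic neural networks have the same network function (Theorem 1), we obtain

$$\Psi(W,f)(x) = \Psi(W^f_x,\mathrm{id})(\mathbf{1}_d) = \Psi(W^f_y,\mathrm{id})(\mathbf{1}_d) = \Psi(W,f)(y).$$

□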

By this corollary and the invariance of the network function under the action of the group $G$ (Theorem 1), we obtain that the neural network $(W,f)$ represents the data $x$ and the output $\Psi(W,f)(x)$ as the isomorphism classes $[W^f_x]$ of thin quiver representations under the action of the change of basis group $G$ of neural networks. This motivates the construction of a space whose points are isomorphism classes of quiver representations, which is exactly the construction of the “moduli space” presented in the next section.

6.2. Induced Inquiry for Future Research

The language of quiver representations applied to neural networks brings new perspectives on their behavior and thus is likely to open doors for future works. Here is one inquiry for the future.

If a data sample $x$ is represented by a thin quiver representation $W^f_x$, one can generate an infinite number of new data representations $\tau\cdot W^f_x$, for $\tau \in G$, which all have the same network output, by applying an isomorphism given by $\tau$ using Equation (1), and then constructing an input from it that produces such an isomorphic quiver representation. Doing so could have important implications in the field of adversarial attacks and network fooling [44], where one could generate fake data at will which, when fed to a network, all have exactly the same output as the original data $x$. This will require the construction of a map from quiver representations to the input space, which could be done by using tools from algebraic geometry to find sections of the map $x \mapsto [W^f_x]$, for which the construction of the moduli space in the next section is necessary, but not sufficient. This leads us to propose the following question for future research:

Following the same logic, one could use this for data augmentation. Starting from an annotated data set $D = \{(x_i, t_i)\}_{i=1}^{n}$, one could represent each data sample by a thin quiver representation $W^f_{x_i}$, apply an arbitrary number of isomorphisms $\tau\cdot W^f_{x_i}$, $\tau \in G$, to it, and then convert these representations back to the input data space.
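The first half of this idea, generating new representations $\tau\cdot W^f_x$ with the same output, is easy to sketch; mapping them back to the input space is the open part and is not attempted here. In the Python sketch below, `V` is a hypothetical thin representation standing in for some $W^f_x$ (its numbers are made up), the random factors are drawn for illustration, and identity activations are left unchanged by the action since $\tau_v(\tau_v^{-1}z) = z$.

```python
import random

# Hypothetical thin representation V of a small delooped quiver (edge -> weight).
ORDER = ["i1", "i2", "a", "b", "c", "o"]   # topological order
INPUTS = {"i1", "i2"}                       # fed with the all-ones vector
HIDDEN = ["a", "b", "c"]
V = {("i1", "a"): 0.48, ("i2", "a"): 0.55, ("i1", "b"): -0.72, ("i2", "b"): -0.99,
     ("a", "c"): 1.5, ("b", "c"): 0.25, ("c", "o"): 1.01, ("a", "o"): -0.4}

def output_on_ones(rep):
    """Network function of (rep, id) at the all-ones input; 'o' is the output vertex."""
    out = {}
    for v in ORDER:
        out[v] = 1.0 if v in INPUTS else sum(w * out[s] for (s, t), w in rep.items() if t == v)
    return out["o"]

def act(tau, rep):
    """(tau . rep)_e = tau_{t(e)} * rep_e / tau_{s(e)}, with tau_v = 1 off the hidden vertices."""
    return {(s, t): tau.get(t, 1.0) * w / tau.get(s, 1.0) for (s, t), w in rep.items()}

random.seed(1)
augmented = []
for _ in range(5):
    # a random element of the change of basis group: non-zero factor with random sign
    tau = {v: random.choice([-1.0, 1.0]) * random.uniform(0.1, 10.0) for v in HIDDEN}
    augmented.append(act(tau, V))

print(output_on_ones(V), [output_on_ones(rep) for rep in augmented])  # same value, up to rounding
```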

7. The Moduli Space of a Neural Network

In this section, we propose a modified version of the manifold hypothesis of Goodfellow et al. [3] (Section 5.11.3). The original manifold hypothesis claims that the data lie in a low-dimensional manifold inside the input space. We will provide an explicit map from the input space to the moduli space of a neural network with which the data manifold can be translated to the moduli space. This will allow us to apply the mathematical theory of quiver moduli spaces [25,26,27] to manifold learning, representation learning and the dynamics of neural network learning [11,23].

Remark 9. Throughout this section, we assume that all the weights of a neural network and of the induced data representations are non-zero. This can be assumed since the set where some of the weights are zero is of measure zero, and even in the case where a weight is exactly zero we can add a small number to it to make it non-zero and at the same time imperceptible to the computations of any computer, for example, a number much smaller than the machine epsilon.

In order to formalize our manifold hypothesis, we will attach an explicit geometrical object to every neural network over a network quiver $Q$ that will contain the isomorphism classes of the data quiver representations induced by any kind of data set $D$. This geometrical object is called the moduli space. The moduli space only depends on the combinatorial architecture of the neural network, while the activation and weight architectures of the neural network determine how the isomorphism classes of the data quiver representations are distributed inside the moduli space.

The mathematical objects required to formalize our manifold hypothesis are known as framed quiver representations. We will follow Reineke [25] for the construction of framed quiver representations in our particular case of thin representations. Recall that the hidden quiver $\widetilde Q$ of a network quiver $Q$ is the sub-quiver of the delooped quiver $Q^\circ$ formed by the hidden vertices $\widetilde V$ and the oriented edges $\widetilde E$ between hidden vertices. Every thin representation of the delooped quiver $Q^\circ$ induces a thin representation of the hidden quiver $\widetilde Q$ by forgetting the oriented edges whose source is an input (or bias) vertex or whose target is an output vertex.

Definition 24. We call input vertices of $\widetilde Q$ the vertices of $\widetilde Q$ that are connected to the input vertices of $Q$, and we call output vertices of $\widetilde Q$ the vertices of $\widetilde Q$ that are connected to the output vertices of $Q$.

Observe that the input vertices of the hidden quiver need not all be source vertices, since in the neural network we allow oriented edges from the input layer to deeper layers. Dually, the output vertices of the hidden quiver need not all be sink vertices, since in the neural network we allow oriented edges from any layer to the output layer.

Remark 10. For the sake of simplicity, we will assume that there are no bias vertices in the quiver Q. If there are bias vertices in Q, we can consider them as part of the input layer in such a way that every input vector needs to be extended to a vector with its last b coordinates all equal to 1, where b is the number of bias vertices. All the quiver representation theoretic arguments made in this section are therefore valid also for neural networks with bias vertices under these considerations. This also has to do with the fact that the group of change of basis of neural networks has no factor corresponding to bias vertices, as the hidden quiver is obtained by removing all source vertices, not only input vertices.

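Let $\widetilde W$ be a thin representation of $\widetilde Q$. We fix once and for all a family of vector spaces $\{F^{\mathrm{out}}_v\}_{v\in\widetilde V}$ indexed by the vertices of $\widetilde Q$, given by $F^{\mathrm{out}}_v = \mathbb{C}^k$ when $v$ is an output vertex of $\widetilde Q$ and $F^{\mathrm{out}}_v = 0$ for any other $v \in \widetilde V$, where $k$ is the number of output vertices of $Q$.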

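Definition 25 ([25]). A choice of a thin representation $\widetilde W$ of the hidden quiver and a map $h_v: \widetilde W_v \to F^{\mathrm{out}}_v$ for each $v \in \widetilde V$ determines a pair $(\widetilde W, h)$, where $h = \{h_v\}_{v\in\widetilde V}$, that is known as a framed quiver representation of $\widetilde Q$ by the family of vector spaces $\{F^{\mathrm{out}}_v\}_{v\in\widetilde V}$. We can see that $h_v$ is equal to the zero map when $v$ is not an output vertex of $\widetilde Q$, and $h_v: \mathbb{C}\to\mathbb{C}^k$ for every output vertex $v$ of $\widetilde Q$.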

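Dually, we can fix a family of vector spaces $\{F^{\mathrm{in}}_v\}_{v\in\widetilde V}$ indexed by $\widetilde V$ and given by $F^{\mathrm{in}}_v = \mathbb{C}^d$ when $v$ is an input vertex of $\widetilde Q$ and $F^{\mathrm{in}}_v = 0$ for any other $v \in \widetilde V$, where $d$ is the number of input vertices of $Q$.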

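Definition 26 ([25]). A choice of a thin representation $\widetilde W$ of the hidden quiver and a map $\ell_v: F^{\mathrm{in}}_v \to \widetilde W_v$ for each $v \in \widetilde V$ determines a pair $(\widetilde W, \ell)$, where $\ell = \{\ell_v\}_{v\in\widetilde V}$, that is known as a co-framed quiver representation of $\widetilde Q$ by the family of vector spaces $\{F^{\mathrm{in}}_v\}_{v\in\widetilde V}$. We can see that $\ell_v$ is the zero map when $v$ is not an input vertex of $\widetilde Q$, and $\ell_v: \mathbb{C}^d\to\mathbb{C}$ for every input vertex $v$ of $\widetilde Q$.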

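Definition 27. A double-framed thin quiver representation is a triple $(\widetilde W, h, \ell)$ where $\widetilde W$ is a thin quiver representation of the hidden quiver, $(\widetilde W, h)$ is a framed representation of $\widetilde Q$ and $(\widetilde W, \ell)$ is a co-framed representation of $\widetilde Q$.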

Remark 11. In representation theory, one does either a framing or a co-framing, and chooses a stability condition for each one. In our case, we will do both at the same time, and use the definition of stability given by [25] for framed representations, together with its dual notion of stability for co-framed representations.

Definition 28. The group of change of basis of double-framed thin quiver representations is the same as the group $G$ of change of basis of neural networks.

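The action of $G$ on double-framed quiver representations is given, for $\tau \in G$, by

$$\tau\cdot(\widetilde W, h, \ell) = (\tau\cdot\widetilde W,\ \tau\cdot h,\ \tau\cdot\ell),$$

where each component of $\tau\cdot h$ is given by $(\tau\cdot h)_v = h_v\,\tau_v^{-1}$, if we express $h = \{h_v\}_{v\in\widetilde V}$, and each component of $\tau\cdot\ell$ is given by $(\tau\cdot\ell)_v = \tau_v\,\ell_v$, if we express $\ell = \{\ell_v\}_{v\in\widetilde V}$. Every double-framed thin quiver representation of $\widetilde Q$ isomorphic to $(\widetilde W, h, \ell)$ is of the form $\tau\cdot(\widetilde W, h, \ell)$ for some $\tau \in G$. In the following theorem, we show that instead of studying the isomorphism classes $[W^f_x]$ of the thin quiver representations of the delooped quiver $Q^\circ$ induced by the data, we can study the isomorphism classes of double-framed thin quiver representations of the hidden quiver.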

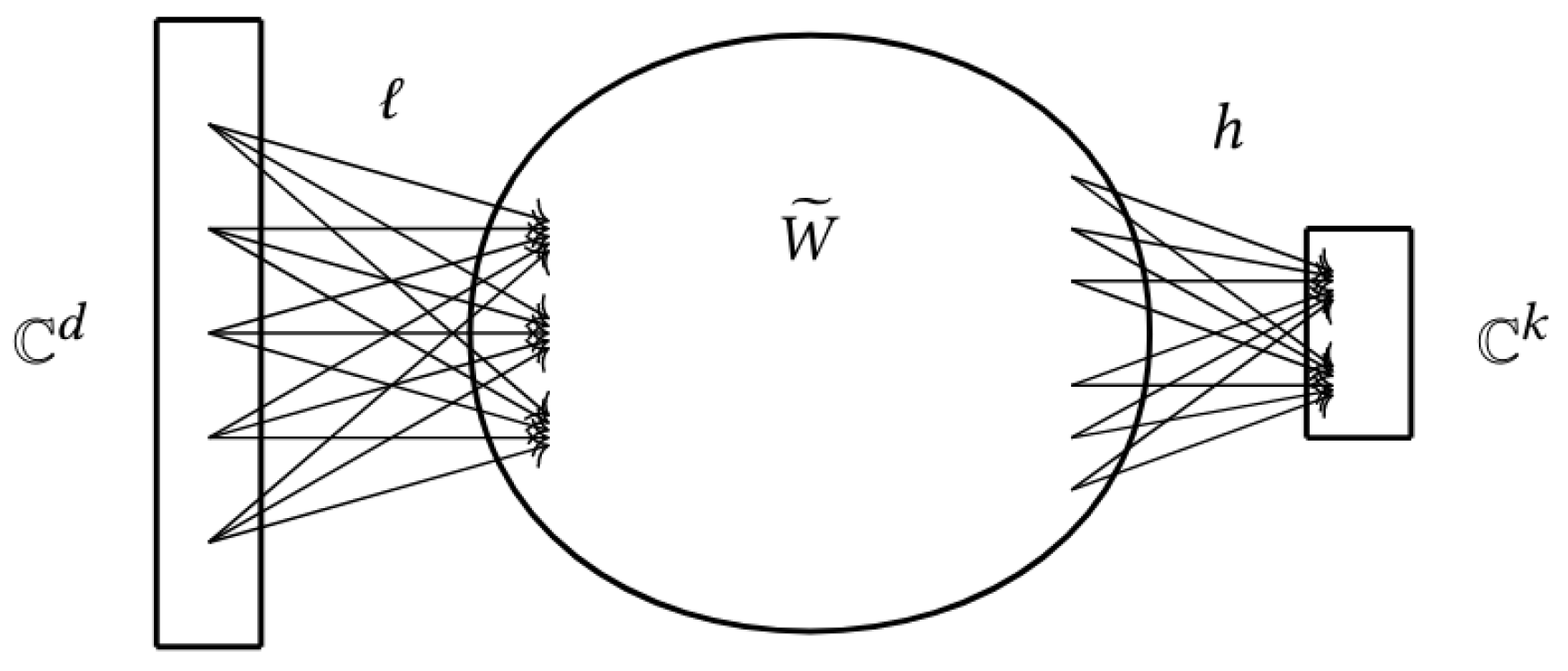

Theorem 3. There exists a bijective correspondence between the set of isomorphism classes $[W]$ via $G$ of thin representations over the delooped quiver $Q^\circ$ and the set of isomorphism classes $[(\widetilde W, h, \ell)]$ of double-framed thin quiver representations of $\widetilde Q$.

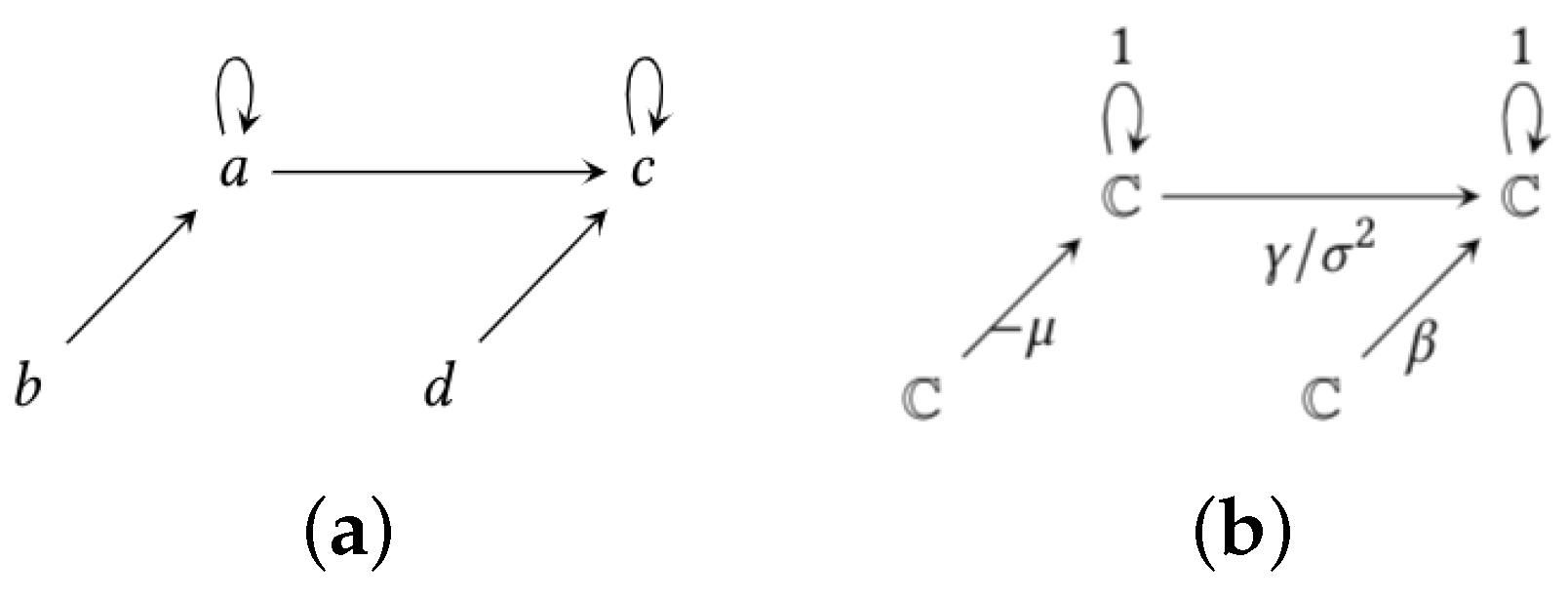

Proof. The correspondence between isomorphism classes is due to the equality of the groups of change of basis for neural networks and for double-framed thin quiver representations, since the isomorphism classes are given by the action of the same group. A thin representation $W$ of the delooped quiver induces a thin representation $\widetilde W$ of the hidden quiver $\widetilde Q$ by forgetting the input and output layers of $Q$. Moreover, if we consider the input vertices of $Q$ as the coordinates of $\mathbb{C}^d$ and the output vertices of $Q$ as the coordinates of $\mathbb{C}^k$, then the weights starting on input vertices of $Q$ define the map $\ell$, while the weights ending on output vertices of $Q$ define the map $h$. This can be seen in Figure 10. Conversely, given a double-framed thin quiver representation $(\widetilde W, h, \ell)$, the entries of $\ell$ (resp., $h$) are the weights of a thin representation $W$ starting (resp., ending) on input (resp., output) vertices, while $\widetilde W$ defines the hidden weights of $W$. □
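The correspondence in the proof can be sketched in Python for a toy quiver (vertex names, weights and factors below are hypothetical). `split` reads off $(\widetilde W, h, \ell)$ from a thin representation $W$ of the delooped quiver, storing $\ell_v$ as the row of weights from the $d$ input vertices to $v$ and $h_v$ as the column of weights from $v$ to the $k$ output vertices. The check confirms that splitting $\tau\cdot W$ gives the same triple as acting on $(\widetilde W, h, \ell)$ with $(\tau\cdot h)_v = h_v\tau_v^{-1}$ and $(\tau\cdot\ell)_v = \tau_v\ell_v$.

```python
INPUTS, HIDDEN, OUTPUTS = ["i1", "i2"], ["a", "b", "c"], ["o1", "o2"]

def act_on_rep(tau, rep):
    """(tau . W)_e = tau_{t(e)} W_e / tau_{s(e)}, with tau_v = 1 on input and output vertices."""
    return {(s, t): tau.get(t, 1.0) * w / tau.get(s, 1.0) for (s, t), w in rep.items()}

def split(W):
    """W on the delooped quiver -> (W_hidden, h, l); absent edges give zero entries."""
    W_hidden = {(s, t): w for (s, t), w in W.items() if s in HIDDEN and t in HIDDEN}
    l = {v: [W.get((i, v), 0.0) for i in INPUTS] for v in HIDDEN}   # l_v : C^d -> C
    h = {v: [W.get((v, o), 0.0) for o in OUTPUTS] for v in HIDDEN}  # h_v : C -> C^k
    return W_hidden, h, l

def act_on_triple(tau, W_hidden, h, l):
    """Action on double-framed representations: (tau . W_hidden, h_v / tau_v, tau_v * l_v)."""
    return (act_on_rep(tau, W_hidden),
            {v: [entry / tau[v] for entry in hv] for v, hv in h.items()},
            {v: [tau[v] * entry for entry in lv] for v, lv in l.items()})

W = {("i1", "a"): 0.8, ("i2", "a"): -0.5, ("i2", "b"): 1.1, ("a", "c"): 1.5,
     ("b", "c"): -0.7, ("c", "o1"): 2.0, ("c", "o2"): -1.0, ("a", "o1"): 0.3}
tau = {"a": 2.0, "b": -0.5, "c": 4.0}

lhs = split(act_on_rep(tau, W))
rhs = act_on_triple(tau, *split(W))
print(lhs == rhs)  # True
```

Here `c` is not an input vertex of the hidden quiver, so $\ell_c$ is the zero row, and `b` is not an output vertex, so $h_b$ is the zero column.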

From now on, we will identify a double-framed thin quiver representation $(\widetilde W, h, \ell)$ with the thin representation $W$ of the delooped quiver $Q^\circ$ defined by $(\widetilde W, h, \ell)$ as in the proof of the last theorem. We will also identify the isomorphism classes

$$[W] = [(\widetilde W, h, \ell)],$$

where the symbol on the left means the isomorphism class of the thin representation $W$ under the action of $G$, and the one on the right is the isomorphism class of the double-framed thin quiver representation $(\widetilde W, h, \ell)$.

One would like to study the space of all isomorphism classes of double-framed thin representations of the delooped quiver. However, it is well known that this space does not have a good topology [45]. Therefore, one considers the space of isomorphism classes of stable double-framed thin quiver representations instead of all quiver representations, which can be shown to have a much richer topological and geometrical structure. In order to be stable, a representation has to satisfy a stability condition that is given in terms of its sub-representations. We will prove that the data representations $W^f_x$ are stable in this sense, and to do so we now introduce the necessary definitions.

Definition 29 ([10] (p. 14)). Let $W$ be a thin representation of the delooped quiver $Q^\circ$ of a network quiver $Q$. A sub-representation of $W$ is a representation $U$ of $Q^\circ$ such that there is a morphism of representations $\iota: U \to W$ where each map $\iota_v: U_v \to W_v$ is injective.

Definition 30. The zero representation of $Q$ is the representation denoted $0$ where every vector space assigned to every vertex is the zero vector space, and therefore every linear map in it is also zero.

Note that if $U$ is a quiver representation, then the zero representation $0$ is a sub-representation of $U$, since the map $0 \to U_v$ is injective for every vertex $v$.

We can see from Figure 11 that the combinatorics of the quiver are related to the existence of sub-representations. Therefore, we now explain how to use the combinatorics of the quiver to prove stability of our data representations $W^f_x$.

Given a double-framed thin quiver representation $(\ell, \tilde{W}, h)$, the image of the map $\ell$ lies inside the representation $\tilde{W}$. The map $\ell$ is given by a family of maps indexed by the set $\tilde{V}$ of vertices of the hidden quiver $\tilde{Q}$, namely, $\ell = (\ell_v)_{v \in \tilde{V}}$. Recall that $\ell_v = 0$ if $v$ is not an input vertex of the hidden quiver $\tilde{Q}$, and $\ell_v\colon \mathbb{C}^d \to \mathbb{C}$ when $v$ is an input vertex of $\tilde{Q}$. The image of $\ell$ is by definition a family of vector spaces indexed by the vertices of the hidden quiver $\tilde{Q}$, given by

$$\operatorname{Im}(\ell) = \big(\operatorname{Im}(\ell_v)\big)_{v \in \tilde{V}}.$$

By definition, $\operatorname{Im}(\ell_v) \subseteq \tilde{W}_v$ for every $v \in \tilde{V}$. Recall that we interpret the data quiver representations $W^f_x$ as double-framed representations, and that $W^f_x$ respects the output of the network $(W, f)$ when it is fed the input vector $x$. According to Equation (5), the weights in the input layer of $W^f_x$ are given in terms of the weights in the input layer of $W$ and the input vector $x$. Therefore, some of the weights in the input layer of $W^f_x$ vanish only on a set of measure zero, so we can assume, without loss of generality, that the weights in the input layer of $W^f_x$ are all non-zero.
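The following minimal sketch illustrates the two facts used above for a bias-free network with one hidden layer and ReLU activations. It assumes that Equation (5) assigns to an input-layer edge $\epsilon$ the weight $W_\epsilon x_{s(\epsilon)}$ and to a hidden-to-output edge the weight $W_\epsilon f(a_{s(\epsilon)})/a_{s(\epsilon)}$, where $a_v$ is the pre-activation of $v$. This is our reading of Equation (5), and the helper names are hypothetical. The sketch checks that evaluating the data representation with identity activations on the all-ones vector reproduces the network output, and that the input-layer weights of $W^f_x$ are non-zero for a generic input.

```python
import random

def relu(a):
    return max(0.0, a)

def network_output(W1, W2, f, x):
    """Psi(W, f)(x) for a bias-free one-hidden-layer network (no output activation)."""
    hidden = [f(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    return [sum(w * hv for w, hv in zip(row, hidden)) for row in W2]

def data_representation(W1, W2, f, x):
    """Hypothetical helper: weights of W^f_x under our reading of Equation (5)."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in W1]  # pre-activations a_v(W, x)
    A = [[w * xi for w, xi in zip(row, x)] for row in W1]       # input layer: W_e * x_{s(e)}
    # Hidden-to-output layer: W_e * f(a) / a. We assume the ratio is 0 when a == 0,
    # which does not change the output whenever f(0) == 0 (e.g. ReLU).
    ratio = [f(a) / a if a != 0 else 0.0 for a in pre]
    B = [[w * r for w, r in zip(row, ratio)] for row in W2]
    return A, B

def linear_output_on_ones(A, B):
    """Evaluate the representation with identity activations on (1, ..., 1)."""
    hidden = [sum(row) for row in A]
    return [sum(w * hv for w, hv in zip(row, hidden)) for row in B]

random.seed(0)
d, n, k = 3, 4, 2
W1 = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
W2 = [[random.gauss(0, 1) for _ in range(n)] for _ in range(k)]
x = [random.gauss(0, 1) for _ in range(d)]

A, B = data_representation(W1, W2, relu, x)
direct = network_output(W1, W2, relu, x)
via_rep = linear_output_on_ones(A, B)
print(max(abs(p - q) for p, q in zip(direct, via_rep)))  # should be ~0
print(all(a != 0 for row in A for a in row))  # generic input: no zero input-layer weight
```

Since an input-layer weight $W_\epsilon x_{s(\epsilon)}$ vanishes only when $W_\epsilon = 0$ or $x_{s(\epsilon)} = 0$, a randomly drawn input almost surely gives non-zero input-layer weights.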

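Dually, the kernel of the map $h$ lies inside the representation $\tilde{W}$. The map $h$ is given by a family of maps indexed by the vertices of the hidden quiver $\tilde{Q}$, namely, $h = (h_v)_{v \in \tilde{V}}$. Recall that $h_v = 0$ if $v$ is not an output vertex of the hidden quiver $\tilde{Q}$, and $h_v\colon \mathbb{C} \to \mathbb{C}^k$ when $v$ is an output vertex of $\tilde{Q}$. Therefore, the kernel of $h$ is by definition a family of vector spaces indexed by the vertices of the hidden quiver $\tilde{Q}$. That is,

$$\ker(h) = \big(\ker(h_v)\big)_{v \in \tilde{V}}.$$

By definition, $\ker(h_v) \subseteq \tilde{W}_v$ for every $v \in \tilde{V}$. For the data quiver representation $W^f_x$, the coordinates of $h_v$ at an output vertex $v$ of $\tilde{Q}$ are the weights of $W^f_x$ on the edges from $v$ to the output vertices of the network. The set where all of these coordinates are equal to zero is of measure zero. Even when they are exactly zero, we can add a very small number to every coordinate of $h_v$ to make it non-zero without significantly changing the output of the network. Thus, we can assume, without loss of generality, that the maps $h_v$ are non-zero for every output vertex $v$ of $\tilde{Q}$.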

Definition 31. A double-framed thin quiver representation $(\ell, \tilde{W}, h)$ is stable if the following two conditions are satisfied:

1. The only sub-representation $U$ of $\tilde{W}$ which is contained in $\ker(h)$ is the zero sub-representation, and
2. The only sub-representation $U$ of $\tilde{W}$ that contains $\operatorname{Im}(\ell)$ is $\tilde{W}$ itself.

Theorem 4. Let $(W, f)$ be a neural network and let $x \in \mathbb{R}^d$ be a data sample for $(W, f)$. Then the double-framed thin quiver representation $(\ell, \tilde{W}^f_x, h)$ is stable.

Proof. We express $W^f_x$ as $(\ell, \tilde{W}^f_x, h)$, as in Theorem 3. As explained before Definition 31, we can assume, without loss of generality, that for every input vertex $v$ of $\tilde{Q}$ the map $\ell_v$ is non-zero, and that for every output vertex $v$ of $\tilde{Q}$ the map $h_v$ is non-zero.

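We have that $h_v\colon \mathbb{C} \to \mathbb{C}^k$ is a linear map, so its kernel is either $0$ or $\mathbb{C}$. However, $\ker(h_v) = \mathbb{C}$ if and only if $h_v = 0$, and since $h_v \neq 0$ we get that $\ker(h_v) = 0$ for every output vertex $v$ of $\tilde{Q}$. As in Figure 11, the combinatorics of quiver representations imply that the zero representation is the only sub-representation of $\tilde{W}^f_x$ whose factors at all the output vertices of $\tilde{Q}$ are zero: if $U_v \neq 0$ for some vertex $v$, then following a path of edges with non-zero weights from $v$ to an output vertex $w$ forces $U_w \neq 0$. Since the combinatorics of network quivers forces a sub-representation contained in $\ker(h)$ to be the zero sub-representation, we obtain the first condition for stability of double-framed thin quiver representations.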

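Dually, we have that $\ell_v\colon \mathbb{C}^d \to \mathbb{C}$ is a linear map, so its image is either $0$ or $\mathbb{C}$. However, $\operatorname{Im}(\ell_v) = 0$ if and only if $\ell_v = 0$, and since $\ell_v \neq 0$ we get that $\operatorname{Im}(\ell_v) = \mathbb{C}$ for every input vertex $v$ of $\tilde{Q}$. As in Figure 11, since every vertex of $\tilde{Q}$ is reached from an input vertex of $\tilde{Q}$ by a path of edges with non-zero weights, there is no sub-representation of $\tilde{W}^f_x$ that contains $\operatorname{Im}(\ell)$ other than $\tilde{W}^f_x$ itself. This is the second condition for stability.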

Thus, $(\ell, \tilde{W}^f_x, h)$ is a stable double-framed thin quiver representation of the hidden quiver $\tilde{Q}$. □
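For a thin representation, the combinatorial argument of the proof can be checked mechanically on small examples. A sub-representation $U$ has $U_v \in \{0, \mathbb{C}\}$, so it is determined by the set $S$ of vertices with $U_v = \mathbb{C}$, and the morphism condition says that $S$ must be closed along edges with non-zero weight. The sketch below is a brute-force check under these assumptions, with hypothetical function names. It enumerates all such sets and tests the two conditions of Definition 31. It is exponential in the number of vertices and only meant for toy hidden quivers.

```python
from itertools import combinations

def sub_representations(vertices, edges):
    """Yield the vertex sets S (U_v = C for v in S, U_v = 0 otherwise) of the
    sub-representations of a thin representation: S must be closed along every
    edge (s, t) whose weight is non-zero."""
    for r in range(len(vertices) + 1):
        for subset in combinations(vertices, r):
            S = set(subset)
            if all(t in S for (s, t), w in edges.items() if w != 0 and s in S):
                yield S

def is_stable(vertices, edges, ell, h):
    """ell[v] and h[v] are the framing maps at v as tuples of numbers
    (missing if v is not an input/output vertex of the hidden quiver)."""
    ell_nonzero = {v for v in vertices if any(c != 0 for c in ell.get(v, ()))}
    h_nonzero = {v for v in vertices if any(c != 0 for c in h.get(v, ()))}
    for S in sub_representations(vertices, edges):
        # Condition 1: U inside ker(h) means S avoids every v with h_v != 0.
        if S and not (S & h_nonzero):
            return False
        # Condition 2: U containing Im(ell) means S contains every v with ell_v != 0.
        if ell_nonzero <= S and S != set(vertices):
            return False
    return True

# Toy hidden quiver: two hidden layers with two neurons each, fully connected.
V = ["a1", "a2", "b1", "b2"]
E = {(a, b): 0.5 for a in ["a1", "a2"] for b in ["b1", "b2"]}
ell = {"a1": (1.0, -2.0, 0.3), "a2": (0.7, 0.1, -1.1)}  # framing from C^3
h = {"b1": (0.4, 0.9), "b2": (-1.3, 0.2)}                # framing to C^2

print(is_stable(V, E, ell, h))
print(is_stable(V, E, ell, {"b1": (0, 0), "b2": (0, 0)}))
print(is_stable(V, E, {"a1": (0, 0, 0), "a2": ell["a2"]}, h))
```

In this example the representation is stable, while stability fails when every $h_v$ vanishes or when $\ell_{a_1}$ vanishes. This is why the proof first reduces to the case where these framing maps are non-zero.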

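Denote by ${}_d\mathcal{R}_k(\tilde{Q})$ the space of all double-framed thin quiver representations of $\tilde{Q}$.

Definition 32. The moduli space of stable double-framed thin quiver representations of $\tilde{Q}$ is by definition

$${}_d\mathcal{M}_k(\tilde{Q}) := \big\{ [V] : V \in {}_d\mathcal{R}_k(\tilde{Q}) \text{ is stable} \big\}.$$

Note that the moduli space depends on the hidden quiver $\tilde{Q}$ and on the chosen vector spaces from which one double-frames the thin representations.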

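Given a neural network $(W, f)$ with input vectors $x \in \mathbb{R}^d$, we can define a map

$$\varphi(W, f)\colon \mathbb{R}^d \to {}_d\mathcal{R}_k(\tilde{Q}), \qquad x \mapsto W^f_x.$$

By the last theorem, in the case where all the weights of $W^f_x$ are non-zero, this map takes values in the stable representations. Hence, after passing to isomorphism classes, it takes values in the moduli space ${}_d\mathcal{M}_k(\tilde{Q})$, which parametrizes isomorphism classes of stable double-framed thin quiver representations.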

Remark 12. For ReLU activations one can produce representations with some weights $(W^f_x)_\epsilon = 0$. However, these representations can be approximated arbitrarily well by representations with non-zero weights. In any case, the map $\varphi(W, f)$ with values in ${}_d\mathcal{R}_k(\tilde{Q})$ still decomposes the network function as in Consequence 1 below.

The following result is a particular case of Nakajima's theorem [45], generalized to double-framings and restricted to thin representations, combined with Reineke's calculation [25] of the dimension of framed quiver moduli spaces, adjusted for double-framings (see Appendix C for details about the computation of this dimension).

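Theorem 5. Let $Q$ be a network quiver. There exists a geometric quotient ${}_d\mathcal{M}_k(\tilde{Q})$ of the stable double-framed thin quiver representations by the action of the change of basis group $G$, called the moduli space of stable double-framed thin quiver representations of $\tilde{Q}$. Moreover, ${}_d\mathcal{M}_k(\tilde{Q})$ is non-empty and its complex dimension is

$$\dim_{\mathbb{C}} {}_d\mathcal{M}_k(\tilde{Q}) = \#E^\circ - \#\tilde{V},$$

where $E^\circ$ is the set of edges of the delooped quiver $Q^\circ$ and $\tilde{V}$ is the set of hidden vertices. In short, the dimension of the moduli space of the hidden quiver equals the number of edges of $Q^\circ$ minus the number of hidden vertices.

Remark 13. The mathematical existence of the moduli space [25,45] depends on two facts: (i) the neural networks and the data may be built upon the real numbers, but we consider them over the complex numbers; and (ii) the change of basis group of neural networks is the change of basis group of thin quiver representations of $\tilde{Q}$, which is a reductive group.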
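As a worked instance of the dimension count, consider a fully connected, bias-free layered network with layer widths $n_0 = d, n_1, \dots, n_L, n_{L+1} = k$. Assuming that each weight corresponds to exactly one non-loop edge and that the hidden vertices are the hidden neurons, the count reads $\sum_{i=0}^{L} n_i n_{i+1} - \sum_{i=1}^{L} n_i$. Heuristically, each hidden vertex contributes one $\mathbb{C}^*$ of rescalings that is quotiented out. The helper below is a hypothetical illustration of this arithmetic, not code from this work.

```python
def moduli_dimension_layered(widths):
    """Hypothetical helper for a fully connected, bias-free layered network.

    widths = [d, n_1, ..., n_L, k]. Counts one non-loop edge per weight and
    subtracts the number of hidden vertices, following the count in Theorem 5.
    """
    num_edges = sum(a * b for a, b in zip(widths, widths[1:]))
    num_hidden = sum(widths[1:-1])
    return num_edges - num_hidden

print(moduli_dimension_layered([3, 4, 2]))       # 3*4 + 4*2 - 4 = 16
print(moduli_dimension_layered([784, 100, 10]))  # 78400 + 1000 - 100 = 79300
```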

One may try to study instead the space whose points are isomorphism classes under the action of the subgroup $H$ of the change of basis group $G$ whose action preserves both the weight and the activation architectures. By doing so, we obtain a group $H$ that is not reductive, which gets in the way of the construction (and therefore of the existence) of the moduli space. This happens even in the case of ReLU activations.

Finally, let us underline that the map $\varphi(W, f)$ from the input space to the representation space (i) takes values in the moduli space when all weights of the representations are non-zero, and (ii) may or may not be injective. Even if $\varphi(W, f)$ is not injective, all the results in this work still hold. The most important implication of the existence of the map $\varphi(W, f)$ is Consequence 1 below, which does not depend on $\varphi(W, f)$ being injective.

7.1. Consequences

The existence of the moduli space of a neural network has the following consequences.

7.1.1. Consequence 1

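The moduli space ${}_d\mathcal{M}_k(\tilde{Q})$, as a set, is given by

$${}_d\mathcal{M}_k(\tilde{Q}) = \big\{ [V] : V \in {}_d\mathcal{R}_k(\tilde{Q}) \text{ is stable} \big\}.$$

That is, the points of the moduli space are the isomorphism classes of (stable) double-framed thin quiver representations of $\tilde{Q}$ under the action of the change of basis group $G$ of neural networks. Given any point $[V]$ in the moduli space, we can define

$$\hat{\Psi}([V]) := \Psi(V)(1, \dots, 1),$$

where $\Psi(V)$ is the network function of $V$ with identity activations. This is well defined since the network function is invariant under isomorphisms, and it gives a map

$$\hat{\Psi}\colon {}_d\mathcal{M}_k(\tilde{Q}) \to \mathbb{C}^k.$$

Furthermore, given a neural network $(W, f)$, we define a map $\varphi(W, f)\colon \mathbb{R}^d \to {}_d\mathcal{M}_k(\tilde{Q})$ by

$$\varphi(W, f)(x) := [W^f_x].$$

Corollary 5. The network function of any neural network $(W, f)$ decomposes as

$$\Psi(W, f) = \hat{\Psi} \circ \varphi(W, f).$$

Proof. This is a consequence of Theorem 2, since for any $x \in \mathbb{R}^d$ we have

$$\hat{\Psi}\big(\varphi(W, f)(x)\big) = \hat{\Psi}\big([W^f_x]\big) = \Psi(W^f_x)(1, \dots, 1) = \Psi(W, f)(x).$$

□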
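The decomposition rests on the fact that the network function is constant on isomorphism classes. The minimal sketch below checks this numerically for a thin representation of a one-hidden-layer quiver. A change of basis assigns a non-zero scalar $g_v$ to each hidden vertex $v$, multiplying the weights of edges entering $v$ by $g_v$ and those leaving $v$ by $g_v^{-1}$, while input and output vertices are left fixed. The output is evaluated with identity activations on the all-ones vector, which is our reading of how a point of the moduli space is turned into an output. The weights are random stand-ins rather than a particular $W^f_x$, and the function names are hypothetical.

```python
import random

def act_by_change_of_basis(A, B, g):
    """Apply g = (g_v) at the hidden vertices: edges into hidden vertex j are
    multiplied by g[j], edges out of it by 1 / g[j]."""
    A_new = [[g[j] * w for w in row] for j, row in enumerate(A)]
    B_new = [[w / g[j] for j, w in enumerate(row)] for row in B]
    return A_new, B_new

def linear_output_on_ones(A, B):
    """Identity activations, all-ones input vector."""
    hidden = [sum(row) for row in A]
    return [sum(w * hv for w, hv in zip(row, hidden)) for row in B]

random.seed(1)
d, n, k = 3, 4, 2
A = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]  # input -> hidden weights
B = [[random.gauss(0, 1) for _ in range(n)] for _ in range(k)]  # hidden -> output weights
g = [random.choice([-1, 1]) * random.uniform(0.1, 10.0) for _ in range(n)]  # non-zero scalars

before = linear_output_on_ones(A, B)
after = linear_output_on_ones(*act_by_change_of_basis(A, B, g))
diff = max(abs(p - q) for p, q in zip(before, after))
print(diff)  # should be ~0 up to floating-point rounding
```

The scalars cancel because each hidden vertex contributes $g_v$ on the way in and $g_v^{-1}$ on the way out, so the output only sees the isomorphism class.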

This implies that any decision of any neural network passes through the moduli space (and the representation space), and this fact is independent of the architecture, the activation function, the data and the task.

7.1.2. Consequence 2

Let $(W, f)$ be a neural network over $Q$ and let $x \in \mathbb{R}^d$ be a data sample. If $(W^f_x)_\epsilon = 0$ for some edge $\epsilon$, then any other quiver representation $V$ of the delooped quiver $Q^\circ$ that is isomorphic to $W^f_x$ has $V_\epsilon = 0$, because a change of basis multiplies each weight by a non-zero scalar. Therefore, suppose that in a dataset the majority of samples $x$ are such that, for a specific edge $\epsilon \in Q^\circ$, the corresponding weight $(W^f_x)_\epsilon$ is zero. Then the coordinates of $\varphi(W, f)(x)$ inside the moduli space corresponding to $\epsilon$ are not used for computations. A projection of those coordinates to zero therefore corresponds to the notion of pruning of neural networks, that is, forcing the smaller weights of a network to zero [28]. From Equation (5), we can see that this interpretation of the data explains why naive pruning works. Namely, if one of the weights $W_\epsilon$ of the neural network $(W, f)$ is small, then so is the corresponding weight $(W^f_x)_\epsilon$ of $W^f_x$ for any input $x$. Since the coordinates of $\varphi(W, f)(x)$ are given in terms of the weights of $W^f_x$, Equation (5) and the previous consequence show that a small weight of $(W, f)$ sends inputs $x$ to representations $W^f_x$ with some coordinates close to zero in the moduli space. If this happens for a large proportion of the samples in the dataset, then the network $(W, f)$ is not using all of the coordinates in the moduli space to represent its data in the form of the map $\varphi(W, f)$.
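Because a change of basis multiplies every weight by a non-zero scalar, the zero pattern of $W^f_x$ is an invariant of its isomorphism class. The fraction of samples for which a given weight vanishes is therefore a well-defined quantity to inspect. The sketch below estimates this fraction for the hidden-to-output edges of a toy ReLU network. It again assumes our reading of Equation (5), namely the weight $W_\epsilon f(a)/a$ with the ratio set to $0$ when $a = 0$. The network, the data and the helper names are hypothetical. One hidden neuron is forced to be inactive on non-negative inputs, so the edges leaving it are zero for every sample and are natural pruning candidates in the sense of this consequence.

```python
import random

def relu(a):
    return max(0.0, a)

def output_layer_of_data_rep(W1, W2, f, x):
    """Hidden-to-output weights of W^f_x under our reading of Equation (5):
    W_e * f(a) / a, with the ratio taken as 0 when a == 0 (assumed convention)."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    ratio = [f(a) / a if a != 0 else 0.0 for a in pre]
    return [[w * r for w, r in zip(row, ratio)] for row in W2]

def zero_fraction_per_edge(W1, W2, f, dataset):
    """Fraction of samples x for which each hidden-to-output edge of W^f_x is zero."""
    k, n = len(W2), len(W2[0])
    counts = [[0] * n for _ in range(k)]
    for x in dataset:
        B = output_layer_of_data_rep(W1, W2, f, x)
        for o in range(k):
            for j in range(n):
                if B[o][j] == 0.0:
                    counts[o][j] += 1
    return [[c / len(dataset) for c in row] for row in counts]

random.seed(2)
d, n, k = 3, 5, 2
W1 = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
W1[0] = [-abs(w) for w in W1[0]]  # hidden neuron 0 is inactive on non-negative inputs
W2 = [[random.gauss(0, 1) for _ in range(n)] for _ in range(k)]
dataset = [[random.uniform(0, 1) for _ in range(d)] for _ in range(200)]

frac = zero_fraction_per_edge(W1, W2, relu, dataset)
print([row[0] for row in frac])  # [1.0, 1.0]: edges out of neuron 0 are always zero
```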

7.1.3. Consequence 3

Let $\mathcal{D} \subseteq \mathbb{R}^d$ be the data manifold in the input space of a neural network $(W, f)$. The map $\varphi(W, f)$ takes $\mathcal{D}$ to $\varphi(W, f)(\mathcal{D})$. The subset $\varphi(W, f)(\mathcal{D})$ generates a sub-manifold of the moduli space (as is well known in topology [46]) that parametrizes all possible outputs that the neural network $(W, f)$ can produce from inputs on the data manifold $\mathcal{D}$. This means that the geometry of the data manifold $\mathcal{D}$ has been translated into the moduli space ${}_d\mathcal{M}_k(\tilde{Q})$. Due to the universality of the description of neural networks we have provided, the mathematical knowledge [25,26,27] that we have of the geometry of the moduli spaces ${}_d\mathcal{M}_k(\tilde{Q})$ can then be used to understand the dynamics of neural network training.

7.2. Induced Inquiries for Future Research

7.2.1. Inquiry 1

Following Consequence 1, one would like to look for correlations between the dimension of the moduli space and properties of neural networks. The dimension of the moduli space is equal to the number of basis paths in ReLU networks found by Zheng et al. [24], who give empirical evidence that it is a good measure for generalization. This number was also obtained as the rank of a structure matrix for paths in a ReLU network [13]; however, the authors put restrictions on the architecture of the network to compute it. As we noted before, the network function of any neural network passes through the moduli space, where the data quiver representations $W^f_x$ lie, so the dimension of the moduli space could be used to quantify the capacity of neural networks in general.

7.2.2. Inquiry 2

We can use the moduli space to formulate what training does to the data quiver representations. Training a neural network through gradient descent generates an iterative sequence of neural networks

$$(W_1, f), (W_2, f), \dots, (W_m, f),$$

where $m$ is the total number of training iterations. For each gradient descent iteration $i = 1, \dots, m$ we have a map

$$\varphi(W_i, f)\colon \mathbb{R}^d \to {}_d\mathcal{M}_k(\tilde{Q}).$$

The moduli space is given only in terms of the combinatorial architecture of the neural network, while the weight and activation architectures determine, through Equation (5), how the points $\varphi(W_i, f)(x)$ are distributed inside the moduli space ${}_d\mathcal{M}_k(\tilde{Q})$. Since training changes the weights and not (always) the network quiver (except, of course, in neural architecture search), each training step defines a different map $\varphi(W_i, f)$. Therefore, the image $\varphi(W_i, f)(\mathcal{D})$ of the data manifold $\mathcal{D}$ changes its shape inside the moduli space ${}_d\mathcal{M}_k(\tilde{Q})$ during training.

A training of a neural network, which is a sequence of neural networks $(W_1, f) \to (W_2, f) \to \cdots \to (W_m, f)$, can be thought of as first adjusting the image $\varphi(W_1, f)(\mathcal{D})$ of the data manifold $\mathcal{D}$ into $\varphi(W_2, f)(\mathcal{D})$, then $\varphi(W_2, f)(\mathcal{D})$ into $\varphi(W_3, f)(\mathcal{D})$, and so on. This is a new way of representing the training of neural networks that works universally for any neural network, which leads to the following question:

“Can training dynamics be made more explicit in these moduli spaces in such a way that allows proving more precise convergence theorems than those currently known?”

7.2.3. Inquiry 3

A training of the form $(W_1, f) \to (W_2, f) \to \cdots \to (W_m, f)$ only changes the weights of the neural network. As we can see, our data quiver representations depend on both the weights and the activations. A usual training therefore does not fully exploit the fact that the data quiver representations are mapped via $\varphi(W, f)$ to the moduli space. Learning the activation functions, as done by Goyal et al. [29], produces a training of the form $(W_1, f_1) \to (W_2, f_2) \to \cdots \to (W_m, f_m)$. This allows the maps $\varphi(W_i, f_i)$ to explore the moduli space more freely than when only the weights are learned. Our results imply that a training that changes (and does not necessarily learn) the activation functions can explore more of the moduli space, due to the dependence of the map $\varphi(W, f)$ on the activation functions. One would like to see whether this can actually improve the training of neural networks, and this is what the experiments of Goyal et al. [29] indicate. Therefore, the following question arises naturally:

“Can neural network learning be improved by changing activation functions during training?”
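The dependence of $W^f_x$ on the activation functions can be seen on a toy example: with the weights and the input held fixed, changing the activation moves the data representation. The sketch below uses a leaky ReLU with negative slope $\alpha$, which is our choice of activation family for illustration and not the learnable activations of Goyal et al. [29]. It again assumes our reading of Equation (5) for the hidden-to-output weights, $W_\epsilon f(a)/a$. The names are hypothetical.

```python
def leaky_relu(alpha):
    """Leaky ReLU with slope alpha on negative inputs (alpha = 0 gives ReLU)."""
    return lambda a: a if a > 0 else alpha * a

def output_layer_of_data_rep(W1, W2, f, x):
    """Hidden-to-output weights W_e * f(a) / a of W^f_x (assumed reading of
    Equation (5)); the ratio is taken as 0 when a == 0."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    ratio = [f(a) / a if a != 0 else 0.0 for a in pre]
    return [[w * r for w, r in zip(row, ratio)] for row in W2]

W1 = [[1.0, -2.0], [0.5, 0.5]]  # fixed weights: input -> hidden
W2 = [[1.0, 1.0]]               # fixed weights: hidden -> output
x = [1.0, 1.0]                  # pre-activations: -1.0 (inactive) and 1.0 (active)

for alpha in (0.0, 0.1, 0.5):
    # Only the activation changes, yet the weight on the inactive neuron's edge moves.
    print(alpha, output_layer_of_data_rep(W1, W2, leaky_relu(alpha), x))
```

With $\alpha = 0$ the edge leaving the inactive neuron has weight $0$, and with $\alpha = 0.5$ it has weight $0.5$. The same network weights therefore yield different data representations, and hence, generically, different points of the moduli space.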