Multi-Drone 3D Building Reconstruction Method

Abstract

1. Introduction

2. State of the Art

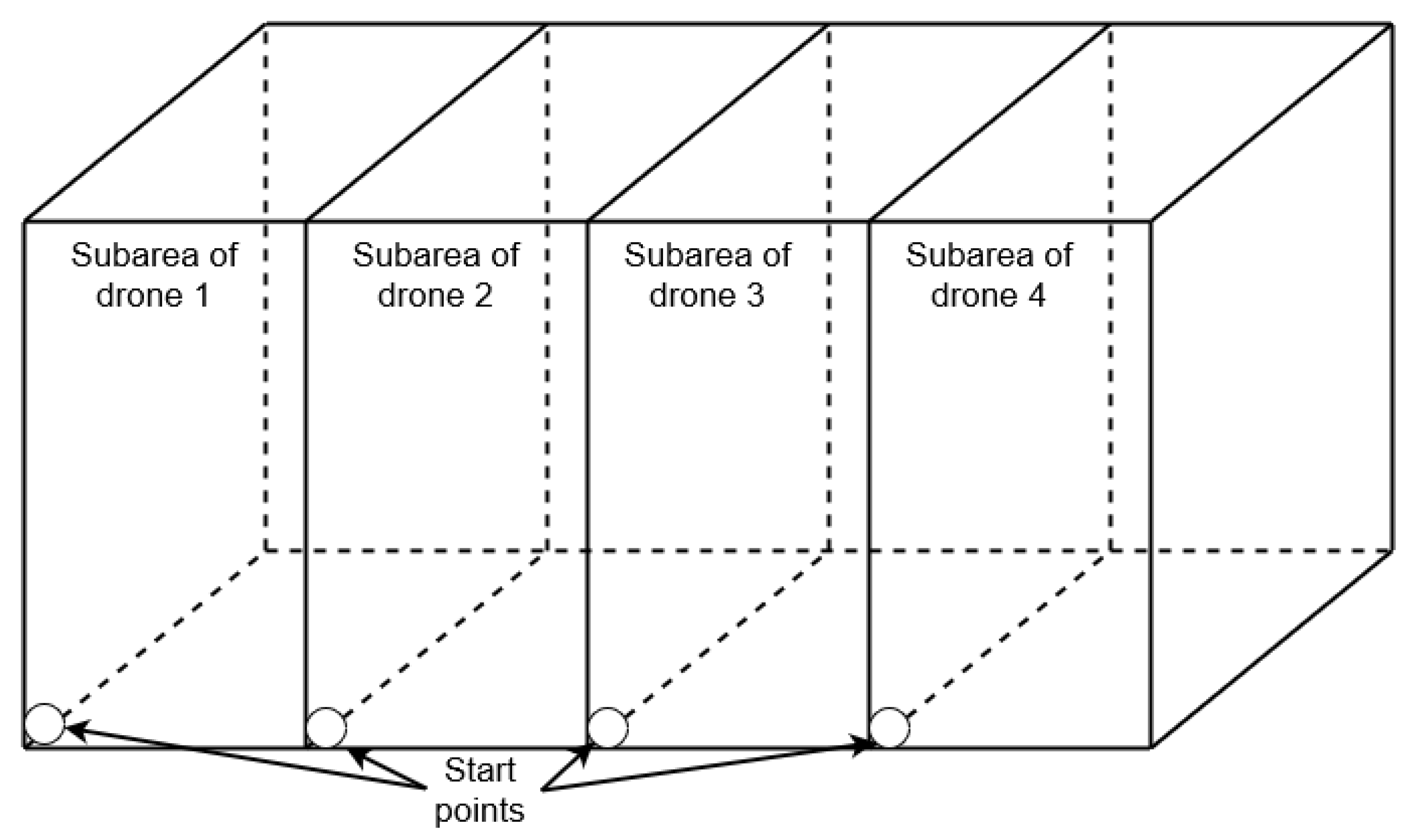

- Workload distribution among drones;

- Localization and control of each drone;

- 3D scanning using only simple drone sensors;

- Avoiding any additional hardware apart from drones and a control server;

- Automation of the whole process.

- Mention scanning large objects;

- Mention the usage of several drones;

- Describe industrial or research reconstruction solutions.

- What multiagent approach is used? This question includes subquestions about workload distribution, trajectory planning, collision avoidance, accuracy, and the speed of the whole process;

- What localization approach is used?

- What 3D reconstruction approach is used?

- What is the level of solution automation? This question includes subquestions about prerequisites (including preconfiguration, calibration, and building and training models), the need for human attention during the process, and the ability to customize the solution for different objects and environments;

- What limitations does a solution have? This question addresses the usage of any additional hardware or external spatial markup.

2.1. What Multiagent Approach Is Used?

2.2. What Localization Approach Is Used?

2.3. What 3D Reconstruction Approach Is Used?

2.4. What Is the Level of Solution Automation?

2.5. What Are the Limitations of a Solution?

2.6. Conclusions

- The solution should use a homogeneous architecture with centralized control in order to support low-cost drones;

- The solution should provide a two-step 3D reconstruction process (online and offline);

- The online 3D reconstruction process should offer a reasonable trade-off between 3D map accuracy and computational complexity;

- The offline 3D reconstruction process should use photogrammetry because it scales with the number of drones and the volume of input data;

- Because the method targets low-cost drones, RGB images should be the main data source: an RGB camera is available on most platforms, whereas more complex sensors (LiDAR, RGB-D, GPS) are not.

3. The Essence of the Proposed Method

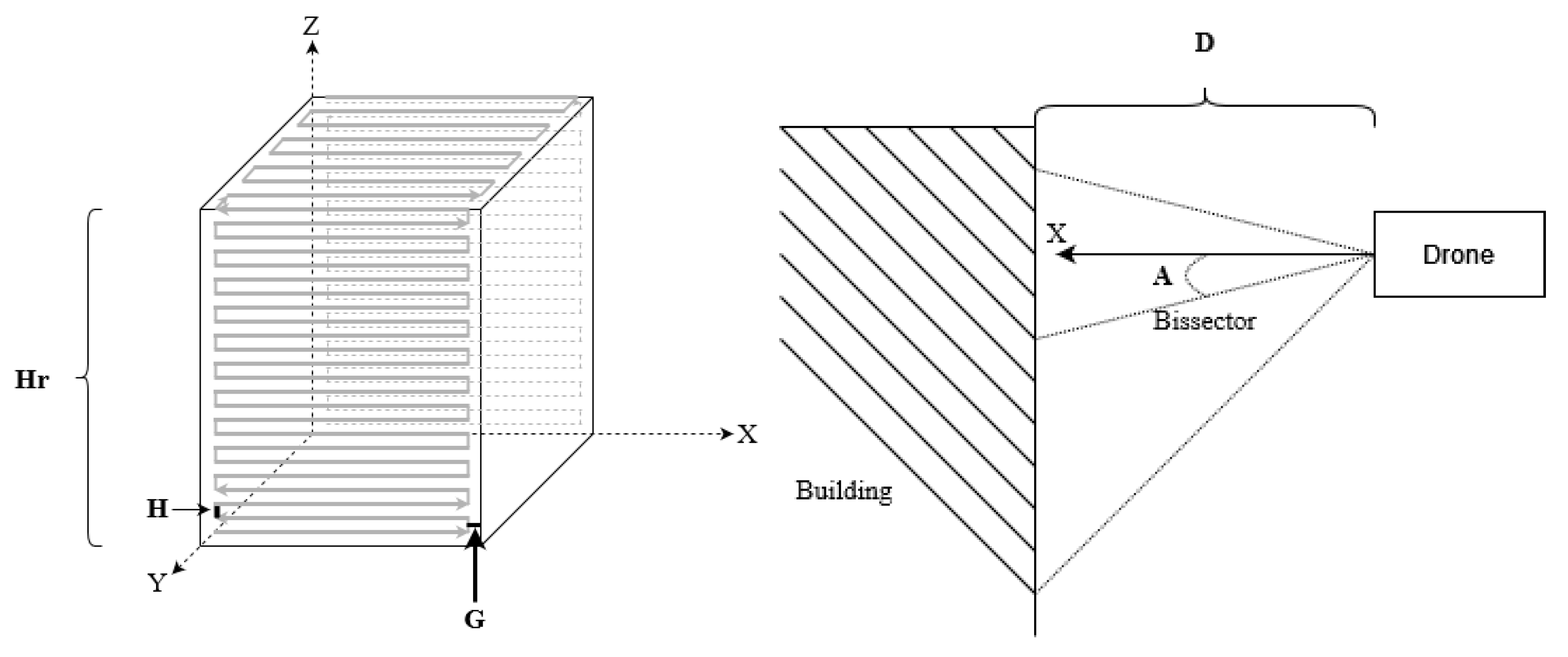

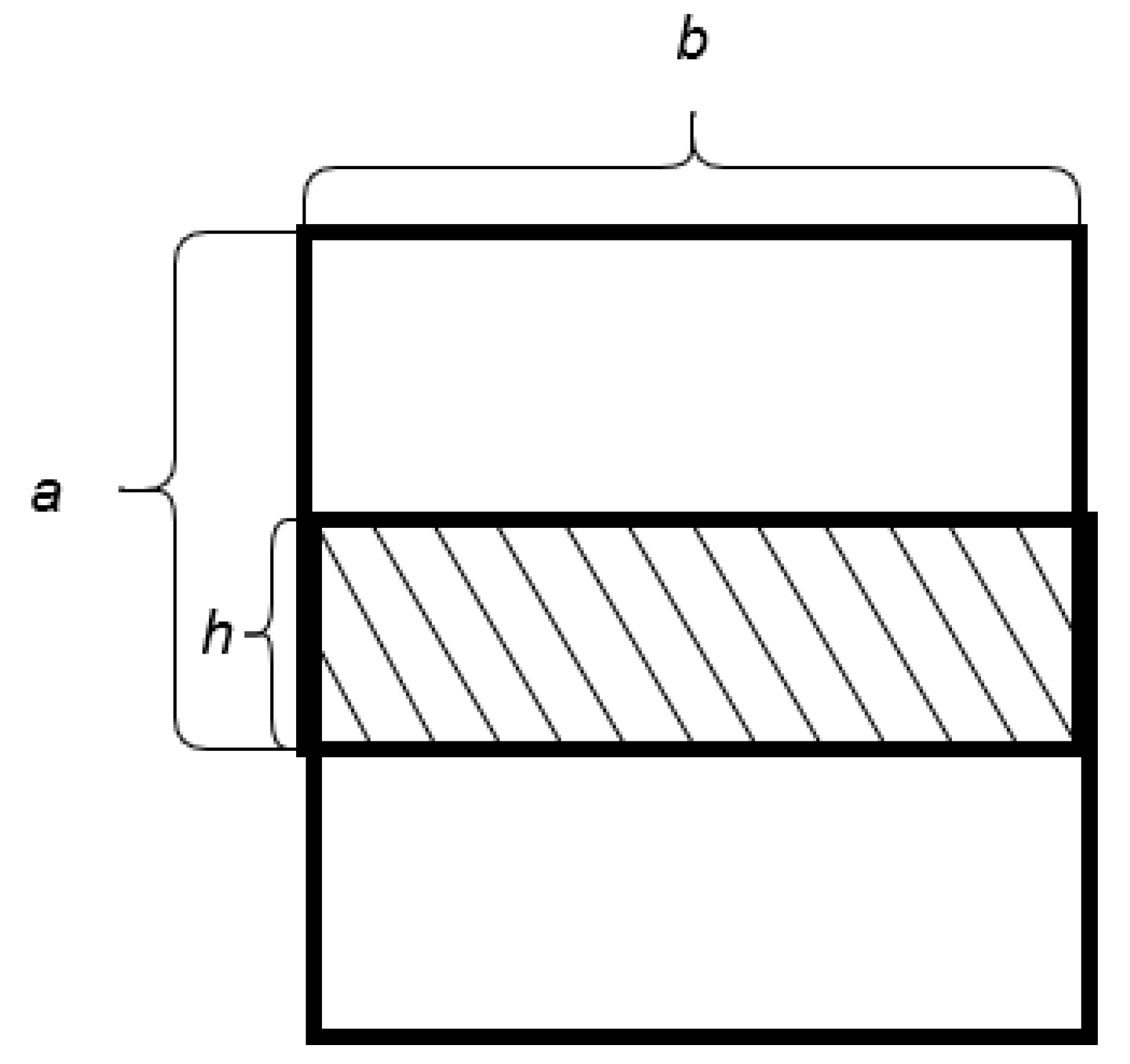

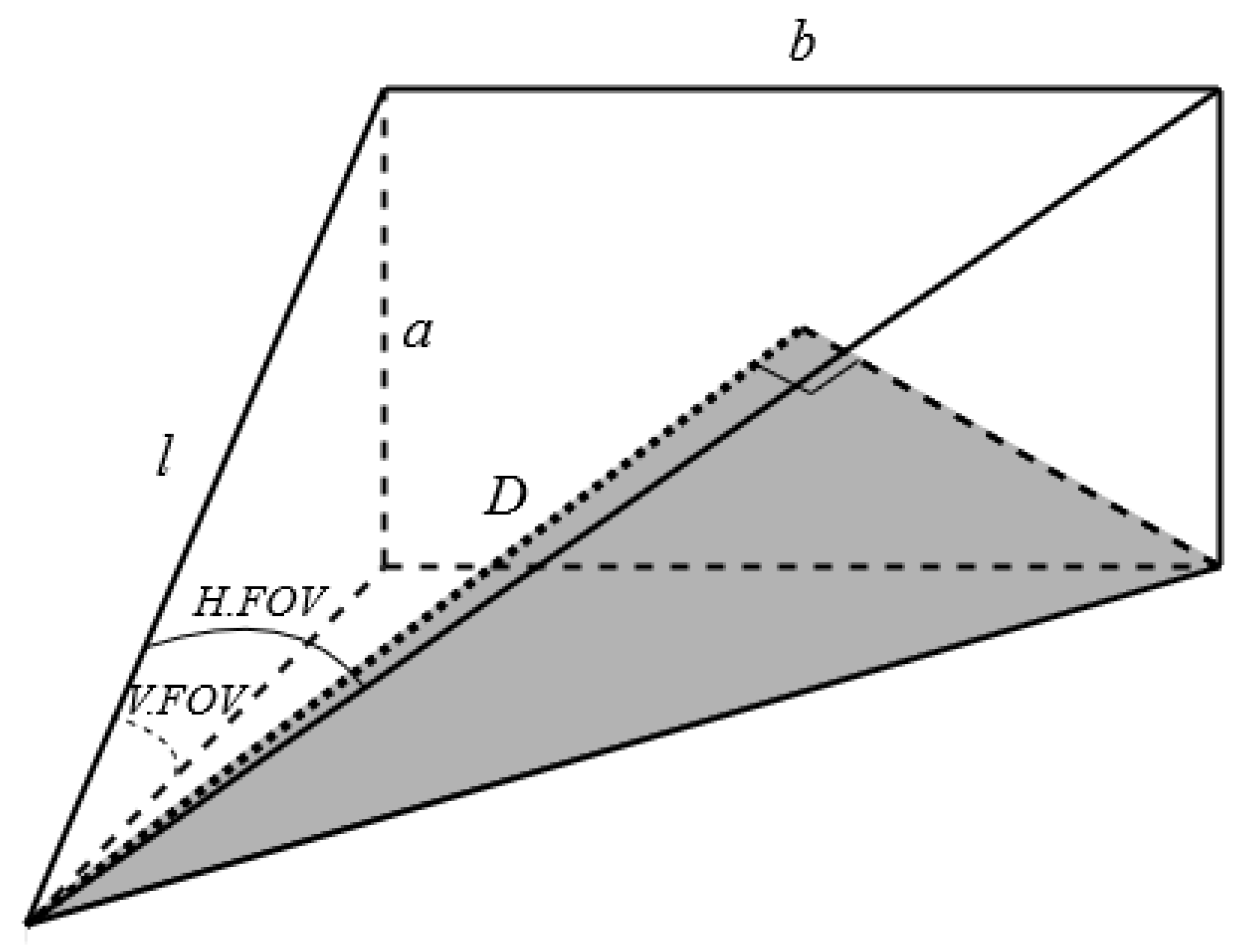

3.1. Trajectory Building

3.2. The Localization Approach

3.3. 3D Reconstruction Approach for Offline Map

3.4. Method Scalability and Adaptation

- Divide the subarea approximately into three parts: the front side, the roof, and the back side of the building;

- Divide the front-side and back-side parts evenly and horizontally in the ZX plane into subsubareas (division lines are parallel to the X axis);

- Divide the roof part evenly and vertically in the XY plane into subsubareas (division lines are parallel to the Y axis).
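The division steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes the building is an axis-aligned box with X along the facade, Y across the building, and Z pointing up, and the per-part drone counts (`n_front`, `n_roof`, `n_back`), the `Rect` type, and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned subsubarea: (u0, v0) is the lower corner, (u1, v1) the upper corner.

    Hypothetical convention: for facades u = X and v = Z; for the roof u = X and v = Y.
    """
    part: str
    u0: float
    v0: float
    u1: float
    v1: float


def split_facade(part: str, x_len: float, z_len: float, n: int) -> list[Rect]:
    """Split a facade (ZX plane) into n equal horizontal strips; division lines parallel to X."""
    step = z_len / n
    return [Rect(part, 0.0, i * step, x_len, (i + 1) * step) for i in range(n)]


def split_roof(x_len: float, y_len: float, n: int) -> list[Rect]:
    """Split the roof (XY plane) into n equal strips; division lines parallel to Y."""
    step = x_len / n
    return [Rect("roof", i * step, 0.0, (i + 1) * step, y_len) for i in range(n)]


def divide_building(x_len: float, y_len: float, z_len: float,
                    n_front: int, n_roof: int, n_back: int) -> dict[str, list[Rect]]:
    """Divide a box-shaped building into front, roof, and back subsubareas, one per drone."""
    if min(n_front, n_roof, n_back) < 1:
        raise ValueError("each part needs at least one drone")
    return {
        "front": split_facade("front", x_len, z_len, n_front),
        "roof": split_roof(x_len, y_len, n_roof),
        "back": split_facade("back", x_len, z_len, n_back),
    }
```

For example, `divide_building(60.0, 40.0, 30.0, 2, 1, 2)` yields two 60 × 15 strips on each facade and one 60 × 40 roof strip, that is, five subsubareas for five drones. The lower corner `(u0, v0)` of each `Rect` corresponds to the bottom-left (facade) or front-left (roof) starting point under the assumed axis orientation.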

- Each drone is assigned to a particular subsubarea;

- The system calculates deploying trajectories for each drone from the base to the approximate starting point of a subsubarea (bottom-left corner for frontside and backside subsubareas, front-left corner for roof subsubareas);

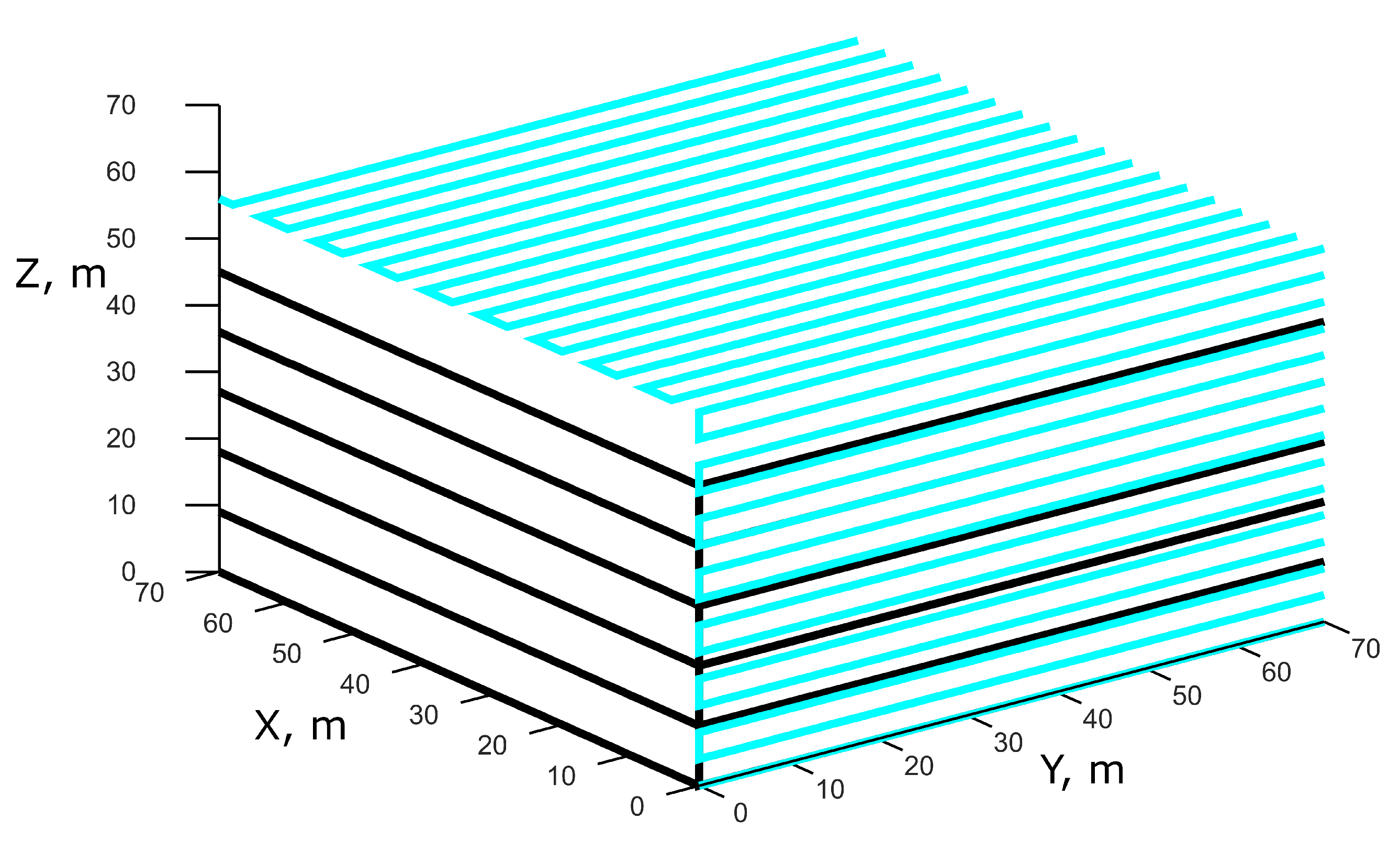

- Each drone on frontside and backside subsubareas starts scanning using a snake pattern moving from left to right and from down to up;

- Each drone on roof subsubareas starts scanning using a snake pattern moving from left to right and from front to back;

- After completing the subsubarea drone returns to the base.
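The snake (boustrophedon) scan of a single subsubarea described above can be sketched as below. The sketch assumes a flat rectangular subsubarea given in 2D coordinates (u, v), where u runs left to right and v runs bottom to top on a facade or front to back on the roof, and a hypothetical pass spacing `spacing` chosen by the operator. Keeping a constant distance to the wall is left to the flight controller and is not modelled here.

```python
import math


def snake_waypoints(u0: float, v0: float, u1: float, v1: float,
                    spacing: float) -> list[tuple[float, float]]:
    """Turn points of a snake (boustrophedon) path over the rectangle [u0, u1] x [v0, v1].

    The first pass runs left to right (u0 -> u1) near v0; each following pass moves
    one band up (or back, on the roof) and reverses direction.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    # Use enough passes that adjacent passes are at most `spacing` apart.
    n_rows = max(1, math.ceil((v1 - v0) / spacing))
    band = (v1 - v0) / n_rows
    points = []
    for k in range(n_rows):
        v = v0 + (k + 0.5) * band  # centre each pass within its band
        if k % 2 == 0:
            points += [(u0, v), (u1, v)]  # left to right
        else:
            points += [(u1, v), (u0, v)]  # right to left
    return points


def path_length(points: list[tuple[float, float]]) -> float:
    """Total length of the polyline through the given points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))
```

Centring each pass within its band keeps the outermost passes half a spacing away from the border, so the first waypoint lies close to the bottom-left (or front-left) corner that the deployment trajectory targets. For a 60 × 15 strip with a 5 m spacing, the path has three passes and a length of 190 m.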

4. Evaluation

4.1. Trajectory Benefits

- S: the distance the drone can fly;

- v: the average speed of the drone;

- : the estimated time resource of the battery.
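Assuming the three quantities above are related by the usual relation distance = speed × time, the distance resource S of a drone and a simple feasibility check for a planned route can be sketched as follows. The name `T` for the battery time resource, the units (metres and seconds), and the split of a route into deployment, scanning, and return legs are assumptions of this sketch.

```python
def distance_resource(v_mps: float, t_s: float) -> float:
    """S = v * T: distance a drone can fly at average speed v during battery time T."""
    if v_mps < 0 or t_s < 0:
        raise ValueError("speed and time must be non-negative")
    return v_mps * t_s


def route_fits(deploy_m: float, scan_m: float, return_m: float,
               v_mps: float, t_s: float) -> bool:
    """Hypothetical check: do the deployment, scanning, and return legs fit within S?"""
    return deploy_m + scan_m + return_m <= distance_resource(v_mps, t_s)
```

For instance, an average speed of 2 m/s over 600 s gives S = 1200 m; a route of 100 m + 900 m + 100 m fits, while 200 m + 900 m + 200 m does not.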

- N: the number of spiral turns in a vertical plane;

- M: the number of spiral turns in a horizontal plane;

- : the width of the drone’s subarea;

- : the height of the building;

- y: the width of the building.
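The exact trajectory-length formula built from these symbols is not reproduced here. As a rough, hypothetical illustration of how such quantities combine, the sketch below treats each turn as one straight pass and models a pattern as a number of equal passes plus the total distance travelled between them. It applies this to a facade (N passes across the subarea width while climbing the building height) and to the roof (M passes across the subarea width while crossing the building width y). The names `x_sub` and `z_height` for the subarea width and the building height are chosen for this sketch only.

```python
def pass_pattern_length(pass_len: float, extent: float, passes: int) -> float:
    """Idealized path length: `passes` straight passes of `pass_len`, plus `extent`
    in total for the moves between consecutive passes (none if there is one pass)."""
    if passes < 1:
        raise ValueError("at least one pass is required")
    return passes * pass_len + (extent if passes > 1 else 0.0)


def subarea_path_length(x_sub: float, z_height: float, y_width: float,
                        n_vertical: int, m_horizontal: int) -> float:
    """Hypothetical total length for one drone's facade and roof passes."""
    # Vertical plane: N passes across the subarea width, climbing the building height.
    facade = pass_pattern_length(x_sub, z_height, n_vertical)
    # Horizontal plane: M passes across the subarea width, crossing the building width.
    roof = pass_pattern_length(x_sub, y_width, m_horizontal)
    return facade + roof
```

Comparing such an estimate with the distance resource S indicates whether a single drone can cover its subarea on one battery charge.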

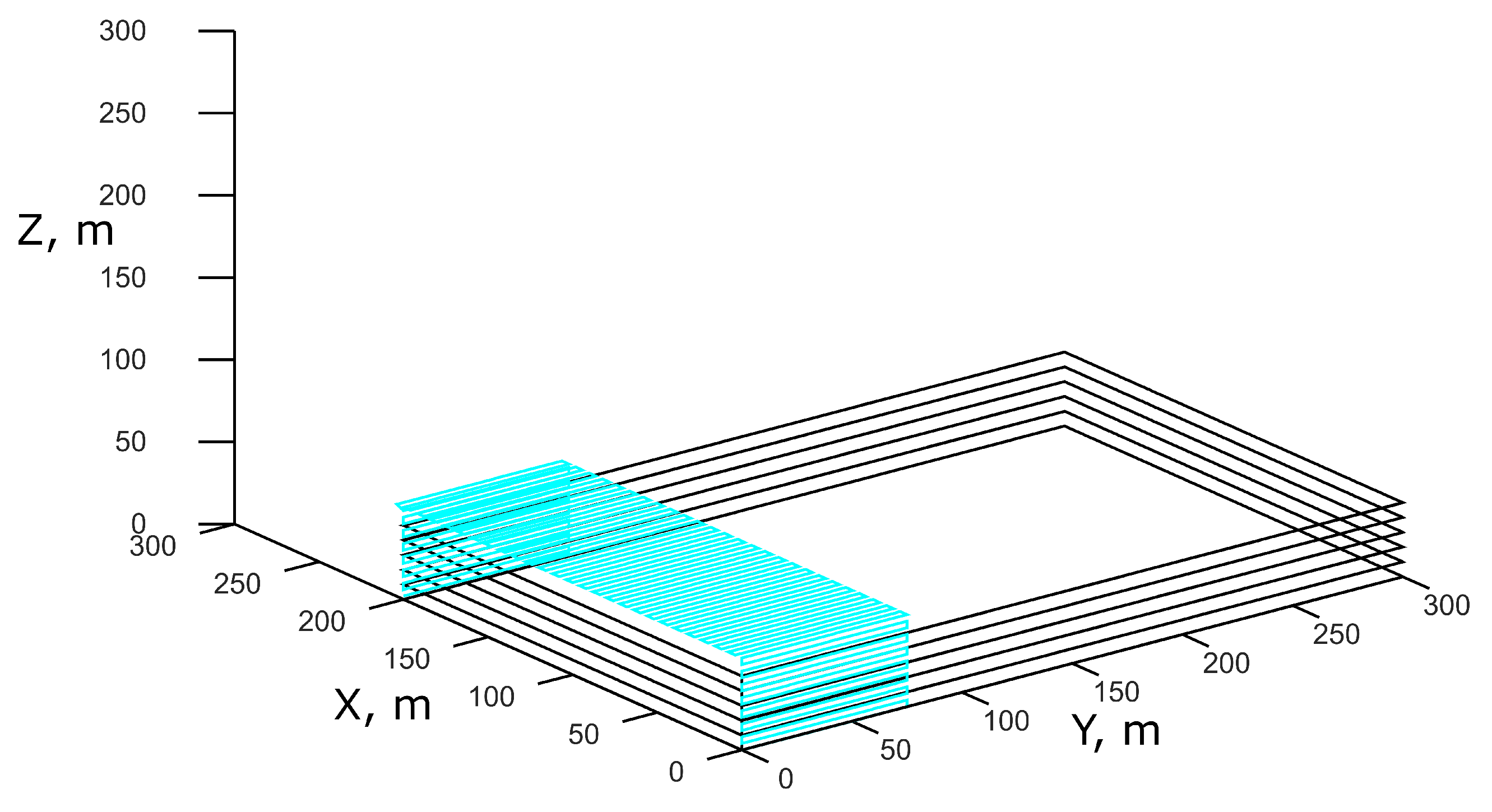

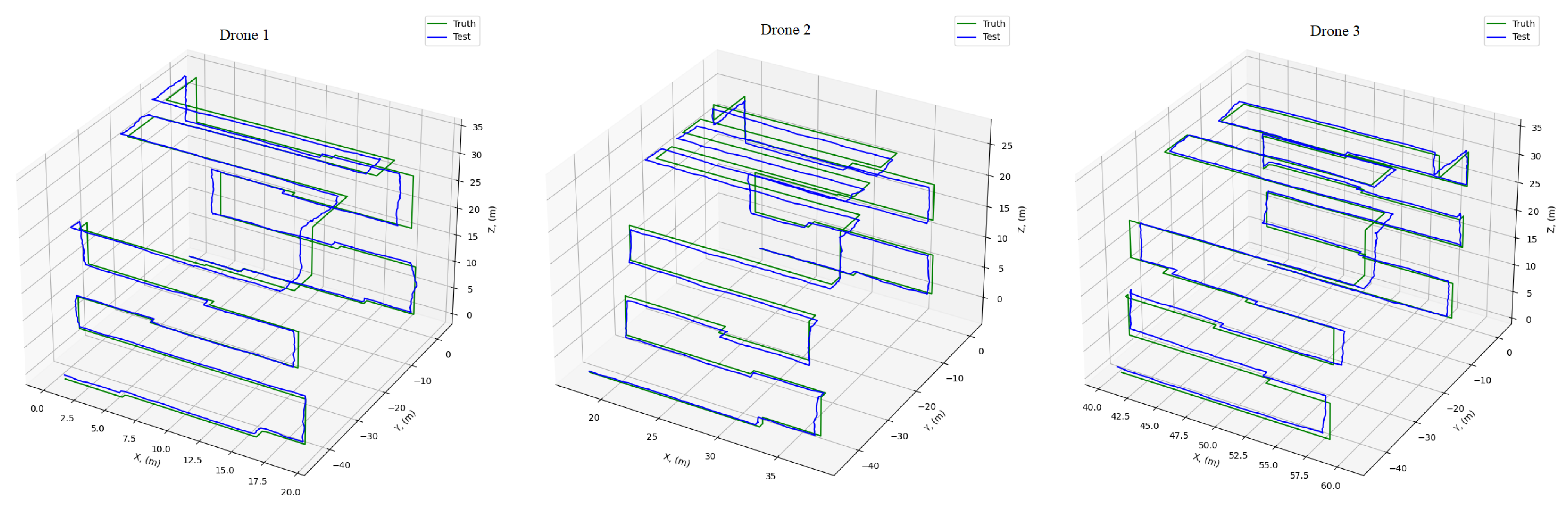

4.2. Experiments in a Simulation

- Given the rough boundaries of the building (60 × 40 × 30), the area was divided into three parts, one for each drone;

- The trajectory for each drone was constructed independently. The starting point of each drone was set manually according to the division into subareas;

- Each drone follows its trajectory, keeping a constant distance to the building. If the distance changes (e.g., because of an uneven building wall), the drone’s trajectory is updated to maintain that distance;

- After reaching the end of its trajectory, the drone returns to its starting point;

- The captured images are passed to Meshroom [24] to construct a 3D model of the building.
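The simulation results report the mean absolute error (MAE) and the maximum error of each drone’s trajectory. A minimal sketch of these two metrics follows. It assumes that the planned and the actually flown trajectories are sampled at the same time steps and that the per-sample error is the Euclidean distance between corresponding 3D points; the paper’s exact error definition may differ.

```python
import math
from typing import Sequence

Point = tuple[float, float, float]


def trajectory_errors(planned: Sequence[Point],
                      actual: Sequence[Point]) -> tuple[float, float]:
    """Return (MAE, max error) of per-sample Euclidean distances between two trajectories."""
    if not planned or len(planned) != len(actual):
        raise ValueError("trajectories must be non-empty and sampled at the same steps")
    errors = [math.dist(p, a) for p, a in zip(planned, actual)]
    return sum(errors) / len(errors), max(errors)
```

The MAE summarizes how closely a drone follows its path overall, while the maximum error shows the worst single deviation, which matters for collision margins near the building.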

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Drone Services Market by Type, Application, Industry, Solution, and Region—Global Forecast to 2026. Available online: https://www.marketsandmarkets.com/Market-Reports/drone-services-market-80726041.html (accessed on 9 October 2021).

- Drone Data Services Market Size, Share & Trends Analysis Report by Service Type, by Platform, by End-Use, by Region, and Segment Forecasts, 2018–2025. Available online: https://www.grandviewresearch.com/industry-analysis/drone-data-services-market (accessed on 9 October 2021).

- An, Q.; Shen, Y. On the performance analysis of active visual 3d reconstruction in multi-agent networks. In Proceedings of the 2019 11th International Conference on Wireless Communications and Signal Processing (WCSP), Xi’an, China, 23–25 October 2019; pp. 1–5.

- Aydın, M.; Bostancı, E.; Güzel, M.S.; Kanwal, N. Multiagent Systems for 3D Reconstruction Applications. Multi Agent Syst. Strateg. Appl. 2020, 25.

- Meng, W.; Yang, Q.; Sarangapani, J.; Sun, Y. Distributed control of nonlinear multiagent systems with asymptotic consensus. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 749–757.

- Daftry, S.; Hoppe, C.; Bischof, H. Building with drones: Accurate 3D facade reconstruction using MAVs. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3487–3494.

- Ahmad, F.; Shin, C.; Chai, E.; Sundaresan, K.; Govindan, R. ARES: Accurate, Autonomous, Near Real-time 3D Reconstruction using Drones. arXiv 2021, arXiv:2104.08634.

- Nair, S.; Ramachandran, A.; Kundzicz, P. Annotated reconstruction of 3D spaces using drones. In Proceedings of the 2017 IEEE MIT Undergraduate Research Technology Conference (URTC), Cambridge, MA, USA, 3–5 November 2017; pp. 1–5.

- Anwar, N.; Izhar, M.A.; Najam, F.A. Construction monitoring and reporting using drones and unmanned aerial vehicles (UAVs). In Proceedings of the Tenth International Conference on Construction in the 21st Century (CITC-10), Colombo, Sri Lanka, 2–4 July 2018; pp. 2–4.

- McAlinden, R.; Suma, E.; Grechkin, T.; Enloe, M. Procedural reconstruction of simulation terrain using drones. In Proceedings of the Interservice/Industry Training, Simulation, and Education Conference (I/ITSEC), Orlando, FL, USA, 30 November–4 December 2015; pp. 1–12.

- Minos-Stensrud, M.; Haakstad, O.H.; Sakseid, O.; Westby, B.; Alcocer, A. Towards Automated 3D reconstruction in SME factories and Digital Twin Model generation. In Proceedings of the 2018 18th International Conference on Control, Automation and Systems (ICCAS), PyeongChang, Korea, 17–20 October 2018; pp. 1777–1781.

- Peralta, D.; Casimiro, J.; Nilles, A.M.; Aguilar, J.A.; Atienza, R.; Cajote, R. Next-best view policy for 3D reconstruction. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 558–573.

- Milani, S.; Memo, A. Impact of drone swarm formations in 3D scene reconstruction. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2598–2602.

- Renwick, J.D.; Klein, L.J.; Hamann, H.F. Drone-based reconstruction for 3D geospatial data processing. In Proceedings of the 2016 IEEE 3rd World Forum on Internet of Things (WF-IoT), Reston, VA, USA, 12–14 December 2016; pp. 729–734.

- Zhang, G.; Shang, B.; Chen, Y.; Moyes, H. SmartCaveDrone: 3D cave mapping using UAVs as robotic co-archaeologists. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1052–1057.

- Rakha, T.; Gorodetsky, A. Review of Unmanned Aerial System (UAS) applications in the built environment: Towards automated building inspection procedures using drones. Autom. Construct. 2018, 93, 252–264.

- Mentasti, S.; Pedersini, F. Controlling the flight of a drone and its camera for 3D reconstruction of large objects. Sensors 2019, 19, 2333.

- Shang, Z.; Shen, Z. Real-time 3D reconstruction on construction site using visual SLAM and UAV. arXiv 2017, arXiv:1712.07122.

- Huang, F.; Yang, H.; Tan, X.; Peng, S.; Tao, J.; Peng, S. Fast Reconstruction of 3D Point Cloud Model Using Visual SLAM on Embedded UAV Development Platform. Remote Sens. 2020, 12, 3308.

- Viswanathan, D.G. Features from accelerated segment test (FAST). In Proceedings of the 10th Workshop on Image Analysis for Multimedia Interactive Services, London, UK, 6–8 May 2009; pp. 6–8.

- OpenCV 3.0 Based Algorithm of Visual Odometry. Available online: https://github.com/avisingh599/mono-vo (accessed on 9 October 2021).

- DJI Tello Drone. Available online: https://www.ryzerobotics.com/tello (accessed on 9 October 2021).

- Unreal Engine 3D Creation Tool. Available online: https://www.unrealengine.com/en-US/ (accessed on 9 October 2021).

- AliceVision. Meshroom: A 3D Reconstruction Software. Available online: https://github.com/alicevision/meshroom (accessed on 9 October 2021).

| | Drone 1 | Drone 2 | Drone 3 |
|---|---|---|---|
| MAE of trajectory, m | | | |
| Max error of trajectory, m | 0.70 | 0.74 | |

| X, m | Y, m | Z, m | Number of Drones | Trajectory Resource, m | Distance to the Building, m | Overlap of Images | Expected Quality of Photogrammetry |
|---|---|---|---|---|---|---|---|
| 100 | 50 | 30 | 1 | 1200 | 15 | 0.45 | average |
| 100 | 50 | 30 | 2 | 1200 | 5 | 0.17 | low |
| 100 | 50 | 30 | 2 | 1200 | 10 | 0.59 | high |
| 100 | 50 | 30 | 2 | 2400 | 5 | 0.61 | high |
| 100 | 50 | 30 | 2 | 2400 | 10 | 0.80 | high |
| 100 | 50 | 30 | 3 | 1200 | 5 | 0.45 | average |
| 100 | 50 | 30 | 3 | 1200 | 10 | 0.72 | high |
| 100 | 50 | 30 | 3 | 2400 | 5 | 0.74 | high |
| 100 | 50 | 30 | 3 | 2400 | 10 | 0.87 | high |
| 200 | 100 | 50 | 2 | 2400 | 10 | 0.20 | low |
| 200 | 100 | 50 | 3 | 1200 | 5 | 0 | fail |
| 200 | 100 | 50 | 3 | 1200 | 10 | 0 | fail |
| 200 | 100 | 50 | 3 | 2400 | 5 | 0 | fail |
| 200 | 100 | 50 | 3 | 2400 | 10 | 0.47 | average |
| 200 | 100 | 50 | 4 | 1200 | 5 | 0 | fail |
| 200 | 100 | 50 | 4 | 1200 | 10 | 0.13 | low |
| 200 | 100 | 50 | 4 | 2400 | 5 | 0.20 | low |
| 200 | 100 | 50 | 4 | 2400 | 10 | 0.60 | high |
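The overlap of images depends on how far apart consecutive images are taken relative to the area each image covers. Under a simple pinhole-camera assumption with the camera facing the wall, the footprint width at distance d with horizontal field of view θ is 2·d·tan(θ/2), and the overlap between two images taken b metres apart is 1 - b / footprint (clipped at zero). The sketch below computes this; the field of view and baseline values are hypothetical, and the sketch is not claimed to reproduce the values in the table.

```python
import math


def footprint_width(distance_m: float, fov_deg: float) -> float:
    """Width of wall covered by one image (pinhole camera facing the wall)."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def image_overlap(distance_m: float, fov_deg: float, baseline_m: float) -> float:
    """Fraction of footprint shared by two images taken baseline_m apart (0 if disjoint)."""
    width = footprint_width(distance_m, fov_deg)
    return max(0.0, 1.0 - baseline_m / width)
```

With a hypothetical 90° field of view, a camera 10 m from the wall covers about 20 m, so images taken 5 m apart overlap by about 75 %. Because the footprint grows linearly with the distance, a larger distance to the building raises the overlap for the same spacing, which matches the direction of the difference between the 5 m and 10 m rows in the table, although those values also depend on factors this sketch does not model.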

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Filatov, A.; Zaslavskiy, M.; Krinkin, K. Multi-Drone 3D Building Reconstruction Method. Mathematics 2021, 9, 3033. https://doi.org/10.3390/math9233033
