Abstract

We consider biclustering, which clusters both samples and features, and propose efficient convex biclustering procedures. The convex biclustering algorithm (COBRA) solves two standard convex clustering problems in each iteration, each of which involves optimizing a non-differentiable function. We instead convert the original optimization problem into a differentiable one and improve another approach based on the augmented Lagrangian method (ALM). Our proposed method combines the basic procedures of the ALM with an accelerated gradient descent method (Nesterov’s accelerated gradient method), which attains an $O(1/k^2)$ convergence rate. It uses only first-order gradient information, and its efficiency is not much affected by the tuning parameter $\gamma$. This advantage allows users to quickly iterate over various values of $\gamma$ and explore the resulting changes in the biclustering solutions. The numerical experiments demonstrate that our proposed method has high accuracy and is much faster than the currently known algorithms, even for large-scale problems.

1. Introduction

By clustering, such as k-means clustering [1] and hierarchical clustering [2,3], we usually mean dividing N samples, each consisting of p covariate values, into several categories.

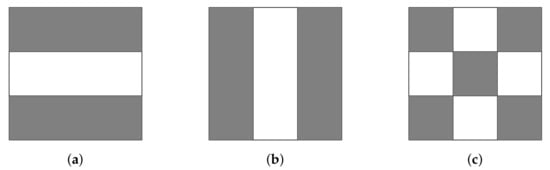

In this paper, we consider biclustering [4], an extension of clustering. In biclustering, we divide both the N samples and the p features simultaneously based on the data. Given a data matrix $X \in \mathbb{R}^{N\times p}$, the rows and columns within a shared group exhibit similar characteristics. For example, given a gene expression data matrix with genes as columns and samples as rows, biclustering detects submatrices that represent the cooperative behavior of a group of genes corresponding to a group of samples [5]. Figure 1 illustrates the intuitive difference between standard clustering and biclustering. In recent years, biclustering has become a ubiquitous data-mining technique with varied applications, such as text mining, recommendation systems, and bioinformatics. Comprehensive surveys of biclustering are given in [6,7,8].

Figure 1.

While standard clustering divides either the rows or the columns, biclustering divides both. (a) Row clustering; (b) column clustering; (c) biclustering.

However, as noted in [6], biclustering is an NP-hard problem. Thus, the results of practical algorithms may vary significantly with different initializations. Moreover, some conventional biclustering models suffer from poor performance due to non-convexity, which may lead to locally optimal solutions. To avoid this problem, Chi et al. [9] proposed convex biclustering, which reformulates the problem as a convex optimization problem using the concept of the fused lasso [10].

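In convex clustering [9,11,12,13], given a data matrix $X \in \mathbb{R}^{N\times p}$ and a tuning parameter $\gamma \ge 0$, we compute a matrix U of the same size as X. Let $X_{i\cdot}$ and $U_{i\cdot}$ be the i-th rows of X and U, let $X_{\cdot j}$ and $U_{\cdot j}$ be the j-th columns of X and U, let $\|\cdot\|_F^2$ denote the sum of squares of the elements of a matrix, and let $\|\cdot\|_2$ denote the $\ell_2$ norm of p- and N-dimensional vectors. Convex clustering finds U that minimizes a weighted sum of $\|X - U\|_F^2$ and $\sum_{i<j}\|U_{i\cdot} - U_{j\cdot}\|_2$ (it may also be formulated as minimizing a weighted sum of $\|X - U\|_F^2$ and $\sum_{i<j}\|U_{\cdot i} - U_{\cdot j}\|_2$). If $U_{i\cdot}$ and $U_{j\cdot}$ coincide, then $X_{i\cdot}$ and $X_{j\cdot}$ are in the same group w.r.t. the rows. On the other hand, convex biclustering finds U that minimizes a weighted sum of $\|X - U\|_F^2$, $\sum_{i<j}\|U_{i\cdot} - U_{j\cdot}\|_2$, and $\sum_{i<j}\|U_{\cdot i} - U_{\cdot j}\|_2$.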

Convex biclustering achieves checkerboard-like biclusters by penalizing both the rows and the columns of U. When $\gamma$ (the tuning parameter for the row and column penalties) is zero, the solution is $U = X$, so each $x_{ij}$ occupies a unique bicluster. As $\gamma$ increases, the biclusters begin to fuse. For sufficiently large $\gamma$, all $u_{ij}$ merge into a single bicluster, $u_{ij} = \bar{x}$, where $\bar{x}$ is the mean of all entries of X. The convex formulation guarantees a globally optimal solution and has shown superior performance to competing approaches. Chi et al. [9] reported that convex biclustering performs better than the dynamic tree-cutting algorithm [14] and the sparse biclustering algorithm [15] in their experiments.

Nevertheless, despite these advantages, convex biclustering has not yet gained widespread popularity because of its intensive computation. On the one hand, the main challenge in solving the optimization problem lies in the two fused penalty terms, which are neither decomposable nor differentiable. Many splitting methods for indecomposable problems are complicated and create many subproblems to solve, and techniques for non-differentiable problems, such as the subgradient method, are slow to converge [16,17]. Moreover, it is difficult to find the optimal tuning parameter $\gamma$, because we need to solve the optimization problem for a sequence of $\gamma$ values and select the one that meets the specific needs of the researcher. Hence, we need a fast way to solve the problem over a sequence of $\gamma$ values. On the other hand, as the volume and complexity of data grow with the increasing demand for biclustering, convex biclustering is faced with large-scale data. For these reasons, an efficient algorithm for the convex biclustering problem is needed.

There are only a few algorithms for solving this problem in the literature. Chi et al. [9] proposed the convex biclustering algorithm (COBRA), which uses a Dykstra-like proximal algorithm [18] to solve the convex biclustering problem. Weylandt [19] proposed using the alternating direction method of multipliers (ADMM) [20,21] and its variant, generalized ADMM [22], to solve the problem.

However, COBRA yields subproblems, including the convex clustering problem, that require expensive computations for large-scale problems because of their high per-iteration cost [23,24]. Essentially, COBRA is a splitting method that handles the terms of a composite optimization problem containing three terms separately. Additionally, it is sensitive to the tuning parameter $\gamma$. Therefore, obtaining solutions over a wide range of $\gamma$ is time-consuming, which is impractical for broad applications and for the different demands of users. ADMM generally solves the problem by breaking it into smaller pieces and updating the variables alternately, but it also introduces several subproblems that may be costly. More specifically, the ADMM proposed by Weylandt [19] requires solving a Sylvester equation when updating the variable U. Solving the Sylvester equation with the numerical method of [25] requires Schur decompositions, which is complicated and time-consuming. Additionally, ADMM is known to exhibit $O(1/k)$ convergence in general, where k is the number of iterations [26]. It often takes a long time to achieve relatively high precision [27], which is unacceptable in applications that demand high accuracy. For example, gene expression data contain a huge amount of information (the feature dimension usually exceeds 1000), and COBRA and ADMM do not scale well to such large-scale problems. Overall, these algorithms perform poorly on large-scale problems, which motivates us to combine existing algorithms to solve the convex biclustering problem efficiently, as in [28].

This paper proposes an efficient algorithm with simple subproblems and a fast convergence rate for the convex biclustering problem. Rather than updating each variable alternately as in ADMM, we use the augmented Lagrangian method (ALM) to update the primal variables simultaneously. In this way, we can transform the optimization problem into a differentiable one, solve it with an efficient gradient method, and further simplify the subproblems. Our method is motivated by [29], in which the authors presented a way to convert the augmented Lagrangian function into a composite optimization problem that can be solved by the proximal gradient method [30]. Applying this process twice to handle the row and column fused penalties, we obtain a differentiable problem from the augmented Lagrangian function. We then apply Nesterov’s accelerated gradient method, which has an $O(1/k^2)$ global convergence rate, to solve the differentiable problem.

Our main contributions are as follows:

- We propose an efficient algorithm to solve the convex biclustering model for large-scale N and p. The algorithm is a first-order method with simple subproblems. It only requires calculating the matrix multiplications and simple proximal operators, while the ADMM approaches require matrix inversion.

- Our proposed method requires much less computation than the existing approaches, even when the tuning parameter $\gamma$ is large, which makes it easier to obtain biclustering results for several values of $\gamma$.

The remainder of this paper is organized as follows. In Section 2, we provide the preliminaries used in the paper and introduce the convex biclustering problem. In Section 3, we present our proposed algorithm for solving the convex biclustering model. In Section 4, we conduct numerical experiments to evaluate its performance, and Section 5 concludes with a discussion.

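Notation: In this paper, we use $\|x\|_q = (\sum_{i=1}^n |x_i|^q)^{1/q}$ to denote the $\ell_q$ norm of a vector $x \in \mathbb{R}^n$ for $1 \le q < \infty$, and $\|x\|_\infty = \max_i |x_i|$. For a matrix $A \in \mathbb{R}^{m\times n}$, we use $\|A\|_F$ to denote the Frobenius norm and $\|A\|_2$ to denote the spectral norm, and we write $\|A\| = \|A\|_2$ if not specified.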

2. Preliminaries

In this section, we provide the background needed to understand the proposed method in Section 3. In particular, we introduce ADMM, ALM, Nesterov’s accelerated gradient method (NAGM), and convex biclustering.

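We say that a differentiable function $f: \mathbb{R}^n \to \mathbb{R}$ has a Lipschitz-continuous gradient if there exists $L > 0$ (Lipschitz constant) such that

$$\|\nabla f(x) - \nabla f(y)\|_2 \le L\|x - y\|_2, \quad \forall x, y \in \mathbb{R}^n. \quad (1)$$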

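We define the conjugate $f^*$ of a function $f: \mathbb{R}^n \to \mathbb{R}\cup\{\infty\}$ by

$$f^*(y) = \sup_{x \in \mathrm{dom}\, f}\{\langle x, y\rangle - f(x)\},$$

where $\mathrm{dom}\, f$ is the domain of f; $f^*$ is closed (the set $\{y : f^*(y) \le \alpha\}$ is closed for any $\alpha \in \mathbb{R}$) and convex. It is known that $f^{**} = f$ when f is closed and convex, and that Moreau’s decomposition [31] is available. Let f be closed and convex. For any $x \in \mathbb{R}^n$ and $\sigma > 0$, we have

$$x = \mathrm{prox}_{\sigma f}(x) + \sigma\,\mathrm{prox}_{\sigma^{-1} f^*}(x/\sigma), \quad (2)$$

where prox is the proximal operator defined by

$$\mathrm{prox}_{\sigma f}(x) = \arg\min_{u\in\mathbb{R}^n}\left\{f(u) + \frac{1}{2\sigma}\|u - x\|_2^2\right\}. \quad (3)$$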
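Moreau’s decomposition (2) is easy to check numerically. The following minimal sketch assumes NumPy and takes $f = c\|\cdot\|_2$, whose proximal operator is block soft-thresholding and whose conjugate is the indicator of the $\ell_2$ ball of radius c, so that the proximal operator of any positive multiple of $f^*$ is the projection onto that ball. The helper names `prox_l2` and `proj_l2_ball` are hypothetical.

```python
import numpy as np

def prox_l2(x, t):
    """prox of t*||.||_2: block soft-thresholding with threshold t."""
    n = np.linalg.norm(x)
    return np.zeros_like(x) if n <= t else (1.0 - t / n) * x

def proj_l2_ball(x, r):
    """Euclidean projection onto {y : ||y||_2 <= r}."""
    n = np.linalg.norm(x)
    return x if n <= r else (r / n) * x

# f = c*||.||_2, hence f* is the indicator of the ball of radius c, and
# prox_{f*/sigma} is the projection onto that ball for every sigma > 0.
rng = np.random.default_rng(0)
c, sigma = 0.7, 2.0
x = rng.normal(size=5)
recombined = prox_l2(x, sigma * c) + sigma * proj_l2_ball(x / sigma, c)
print(np.allclose(recombined, x))  # True
```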

2.1. ADMM and ALM

In this subsection, we introduce the general optimization procedures of ADMM and ALM.

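Let $f, g: \mathbb{R}^n \to \mathbb{R}$ and $h: \mathbb{R}^m \to \mathbb{R}$ be convex. Assume that f is differentiable, and g and h are not necessarily differentiable. We consider the following optimization problem:

$$\min_{x, y}\; f(x) + g(x) + h(y) \quad \text{subject to} \quad Ax = y, \quad (4)$$

with variables $x \in \mathbb{R}^n$ and $y \in \mathbb{R}^m$, and matrix $A \in \mathbb{R}^{m\times n}$. To this end, we define the augmented Lagrangian function as follows:

$$L_\nu(x, y, \lambda) = f(x) + g(x) + h(y) + \langle\lambda, Ax - y\rangle + \frac{\nu}{2}\|Ax - y\|_2^2, \quad (5)$$

where $\nu > 0$ is an augmented Lagrangian parameter, and $\lambda \in \mathbb{R}^m$ is the vector of Lagrange multipliers.

ADMM is a general procedure to find the solution to problem (4) by iterating

$$x^{k+1} = \arg\min_x L_\nu(x, y^k, \lambda^k), \quad y^{k+1} = \arg\min_y L_\nu(x^{k+1}, y, \lambda^k), \quad \lambda^{k+1} = \lambda^k + \nu(Ax^{k+1} - y^{k+1}),$$

given the initial values $y^0$ and $\lambda^0$.

By the ALM [32,33,34], we mean minimizing the augmented Lagrangian function (5) w.r.t. the variables x and y simultaneously for a given value of $\lambda$, i.e., we iterate the following steps:

$$(x^{k+1}, y^{k+1}) = \arg\min_{x, y} L_\nu(x, y, \lambda^k), \qquad \lambda^{k+1} = \lambda^k + \nu(Ax^{k+1} - y^{k+1}). \quad (6)$$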

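Shimmura and Suzuki [29] considered the minimization of the function

$$\phi_\nu(x; \lambda) = \min_{y \in \mathbb{R}^m}\left\{h(y) + \langle\lambda, Ax - y\rangle + \frac{\nu}{2}\|Ax - y\|_2^2\right\}, \quad (7)$$

together with f and the non-differentiable function g over $x \in \mathbb{R}^n$, replacing the joint minimization over x and y in (6).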

Lemma 1

([29], Theorem 1). The function $\phi_\nu(\cdot\,; \lambda)$ is differentiable, and its gradient is

$$\nabla_x \phi_\nu(x; \lambda) = A^\top \mathrm{prox}_{\nu h^*}(\lambda + \nu Ax).$$

By Lemma 1, the minimization in (6) can be regarded as a composite optimization problem with the differentiable function $f(x) + \phi_\nu(x; \lambda^k)$ and the non-differentiable function $g(x)$. Therefore, the variable x can be updated by a proximal gradient method, such as the fast iterative shrinkage-thresholding algorithm (FISTA) [30].
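Lemma 1 can also be checked by finite differences. The sketch below is our own illustration rather than code from [29]; it assumes NumPy and $h = c\|\cdot\|_2$, evaluates $\phi_\nu(x;\lambda)$ in (7) at its minimizer $y^* = \mathrm{prox}_{h/\nu}(Ax + \lambda/\nu)$, and compares central differences with $A^\top\mathrm{prox}_{\nu h^*}(\lambda + \nu Ax)$, where $\mathrm{prox}_{\nu h^*}$ reduces to the projection onto the ball of radius c. All function names and test values are hypothetical.

```python
import numpy as np

def soft_l2(z, t):
    """prox of t*||.||_2 (block soft-thresholding)."""
    n = np.linalg.norm(z)
    return np.zeros_like(z) if n <= t else (1.0 - t / n) * z

def phi(x, A, lam, nu, c):
    """phi_nu(x; lam) of (7) with h(y) = c*||y||_2, evaluated at its minimizer."""
    Ax = A @ x
    y = soft_l2(Ax + lam / nu, c / nu)      # y* = prox_{h/nu}(Ax + lam/nu)
    r = Ax - y
    return c * np.linalg.norm(y) + lam @ r + 0.5 * nu * (r @ r)

def grad_phi(x, A, lam, nu, c):
    """Lemma 1: A^T prox_{nu h*}(lam + nu A x); prox_{nu h*} is a ball projection."""
    z = lam + nu * (A @ x)
    n = np.linalg.norm(z)
    return A.T @ (z if n <= c else (c / n) * z)

rng = np.random.default_rng(1)
A, x, lam = rng.normal(size=(4, 3)), rng.normal(size=3), rng.normal(size=4)
nu, c, eps = 1.5, 0.8, 1e-6
fd = np.array([(phi(x + eps * e, A, lam, nu, c) - phi(x - eps * e, A, lam, nu, c)) / (2 * eps)
               for e in np.eye(3)])
print(np.allclose(fd, grad_phi(x, A, lam, nu, c), atol=1e-4))
```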

2.2. Nesterov’s Accelerated Gradient Method

Nesterov [35] proposed a variant of the gradient descent method for functions with Lipschitz-continuous gradients. It has an $O(1/k^2)$ convergence rate, whereas the traditional gradient descent method has $O(1/k)$ [35]. For the minimization of a convex and differentiable function $F: \mathbb{R}^n \to \mathbb{R}$ whose gradient $\nabla F$ has Lipschitz constant L in (1), NAGM is described in Algorithm 1.

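Algorithm 1 NAGM.

Input: Lipschitz constant L, initial value $x_0 \in \mathbb{R}^n$, $y_1 = x_0$, $t_1 = 1$.

While (until convergence) do

Step 1: $x_k = y_k - \frac{1}{L}\nabla F(y_k)$

Step 2: $t_{k+1} = \frac{1 + \sqrt{1 + 4t_k^2}}{2}$

Step 3: $y_{k+1} = x_k + \frac{t_k - 1}{t_{k+1}}(x_k - x_{k-1})$

End while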

Algorithm 1 replaces plain gradient descent with Steps 1 to 3: Step 1 executes a gradient step to obtain $x_k$ from $y_k$, Steps 2 and 3 compute the new point $y_{k+1}$ from $x_k$ and $x_{k-1}$, and the procedure then returns to the gradient step in Step 1. NAGM assumes that F is differentiable, whereas FISTA [30], an accelerated version of ISTA [30], handles non-differentiable F by proximal gradient steps (see Table 1).
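A direct transcription of Algorithm 1 might look as follows. This is a sketch assuming NumPy; the function name `nagm`, the stopping rule based on the change in $x_k$, and the quadratic test problem are our own choices, not part of the method description.

```python
import numpy as np

def nagm(grad, L, x0, max_iter=10_000, tol=1e-10):
    """Algorithm 1 (NAGM) with constant step size 1/L.

    grad: callable returning the gradient of F; L: Lipschitz constant of grad.
    Stops when the relative change in x_k falls below tol (an assumed criterion).
    """
    x_prev = np.array(x0, dtype=float)    # x_{k-1}, initially x_0
    y = x_prev.copy()                     # y_1 = x_0
    t = 1.0                               # t_1 = 1
    for _ in range(max_iter):
        x = y - grad(y) / L                                   # Step 1: gradient step at y_k
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0     # Step 2
        y = x + ((t - 1.0) / t_next) * (x - x_prev)           # Step 3: extrapolation
        converged = np.linalg.norm(x - x_prev) <= tol * max(1.0, np.linalg.norm(x_prev))
        x_prev, t = x, t_next
        if converged:
            break
    return x_prev

# Usage on a strongly convex quadratic F(x) = 0.5 x^T Q x - b^T x,
# whose gradient has Lipschitz constant lambda_max(Q).
rng = np.random.default_rng(0)
M = rng.normal(size=(20, 5))
Q, b = M.T @ M + np.eye(5), rng.normal(size=5)
x_hat = nagm(lambda x: Q @ x - b, np.linalg.eigvalsh(Q)[-1], np.zeros(5))
```

Because the step size is fixed at 1/L, only an upper bound on the Lipschitz constant is needed, and no line search is performed.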

Table 1.

Gradient descent and its modifications.

2.3. Convex Biclustering

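We consider the convex biclustering problem in a general setting. Suppose we have a data matrix $X \in \mathbb{R}^{N\times p}$ consisting of N observations $X_{1\cdot}, \dots, X_{N\cdot}$ w.r.t. p features $X_{\cdot 1}, \dots, X_{\cdot p}$. Our task is to assign each observation to one of the non-overlapping row clusters $\mathcal{R}_1, \dots, \mathcal{R}_R$ and each feature to one of the non-overlapping column clusters $\mathcal{C}_1, \dots, \mathcal{C}_K$. We assume that the clusters $\{\mathcal{R}_r\}$ and $\{\mathcal{C}_c\}$ and the values of R and K are not known a priori.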

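More precisely, the convex biclustering problem in this paper is formulated as follows:

$$\min_{U \in \mathbb{R}^{N\times p}} \frac{1}{2}\|X - U\|_F^2 + \gamma\left(\sum_{i<j} w_{ij}\|U_{i\cdot} - U_{j\cdot}\|_2 + \sum_{i<j}\tilde{w}_{ij}\|U_{\cdot i} - U_{\cdot j}\|_2\right), \quad (8)$$

where $U_{i\cdot}$ and $U_{\cdot j}$ are the i-th row and j-th column of U, and $w_{ij}, \tilde{w}_{ij} \ge 0$ are the row and column weights. Chi et al. [9] suggested the following choice of weights:

$$w_{ij} = \iota^k_{ij}\exp\left(-\phi\|X_{i\cdot} - X_{j\cdot}\|_2^2\right)$$

and

$$\tilde{w}_{ij} = \tilde{\iota}^k_{ij}\exp\left(-\phi\|X_{\cdot i} - X_{\cdot j}\|_2^2\right),$$

where $\iota^k_{ij}$ is 1 if j belongs to the k-nearest neighbors of i and 0 otherwise, $\tilde{\iota}^k_{ij}$ is defined similarly for the columns (the parameter k must be specified beforehand), $\phi \ge 0$ is a constant, and $X_{i\cdot}$ and $X_{\cdot j}$ are the i-th row and j-th column of X. They further suggested rescaling the row and column weights by constants so that the sums $\sum_{i<j} w_{ij}$ and $\sum_{i<j}\tilde{w}_{ij}$ take prescribed values [9].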
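The weight construction can be sketched as follows, assuming NumPy. The kernel constant `phi`, the symmetrization of the k-nearest-neighbour indicator (a pair is kept if either point is among the other's k nearest neighbours), and the omission of the normalizing constants are assumptions made for illustration; see [9] for the recommended choices.

```python
import numpy as np

def knn_gaussian_weights(X, k, phi):
    """Row weights w_ij = iota^k_ij * exp(-phi * ||X_i. - X_j.||_2^2) for i < j.

    iota^k_ij = 1 if j is among the k nearest neighbours of i or vice versa
    (symmetrization is an assumption). Normalizing constants are omitted.
    """
    N = X.shape[0]
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)   # squared distances
    knn = np.zeros((N, N), dtype=bool)
    for i in range(N):
        nbrs = [j for j in np.argsort(sq[i]) if j != i][:k]      # exclude i itself
        knn[i, nbrs] = True
    knn |= knn.T
    return {(i, j): float(np.exp(-phi * sq[i, j]))
            for i in range(N) for j in range(i + 1, N) if knn[i, j]}
```

Column weights can be obtained by applying the same function to `X.T`; only pairs with positive weight are returned, which matches how the edge sets are used in Section 3.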

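Chi et al. [9] proposed COBRA to solve problem (8). Essentially, COBRA alternately solves the standard convex clustering problems for the rows and the columns,

$$\min_{U} \frac{1}{2}\|Y - U\|_F^2 + \gamma\sum_{i<j} w_{ij}\|U_{i\cdot} - U_{j\cdot}\|_2 \quad (9)$$

and

$$\min_{U} \frac{1}{2}\|Y' - U\|_F^2 + \gamma\sum_{i<j}\tilde{w}_{ij}\|U_{\cdot i} - U_{\cdot j}\|_2, \quad (10)$$

where Y and Y' are the intermediate matrices passed between the two problems by the Dykstra-like iteration, until the solution converges. However, both problems contain a non-differentiable norm term, and solving the convex clustering problems (9) and (10) takes much time [23]. Later, the ADMM-based approaches, which alternate between variables, outperformed COBRA for large $\gamma$ [19].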

3. The Proposed Method and Theoretical Analysis

In this section, we first show that, after two auxiliary variables are introduced, all terms in the augmented Lagrangian function of (8) can be made differentiable w.r.t. U. Therefore, we use NAGM rather than FISTA, which was used in [29] because the objective there contains a non-differentiable term.

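To make the notation clear, we formulate problem (8) in another way. Let $\mathcal{E}_1$ and $\mathcal{E}_2$ be the sets $\{(i, j) : w_{ij} > 0,\ i < j\}$ and $\{(i, j) : \tilde{w}_{ij} > 0,\ i < j\}$, respectively, and denote the cardinality of a set S by $|S|$. Indexing the l-th pair in $\mathcal{E}_1$ (or $\mathcal{E}_2$) by $(i_l, j_l)$ and writing $w_l = w_{i_l j_l}$ and $\tilde{w}_l = \tilde{w}_{i_l j_l}$, we define the matrices $C \in \mathbb{R}^{|\mathcal{E}_1|\times N}$ and $D \in \mathbb{R}^{p\times|\mathcal{E}_2|}$ by

$$C_{lk} = \begin{cases} 1, & k = i_l,\\ -1, & k = j_l,\\ 0, & \text{otherwise}, \end{cases} \qquad (i_l, j_l) \in \mathcal{E}_1,$$

and

$$D_{kl} = \begin{cases} 1, & k = i_l,\\ -1, & k = j_l,\\ 0, & \text{otherwise}, \end{cases} \qquad (i_l, j_l) \in \mathcal{E}_2,$$

respectively, so that the l-th row of CU is $U_{i_l\cdot} - U_{j_l\cdot}$ and the l-th column of UD is $U_{\cdot i_l} - U_{\cdot j_l}$.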
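For concreteness, the sketch below builds C and D from lists of index pairs, assuming NumPy and the +1/−1 sign convention above (either sign convention gives the same penalty, because only norms of differences enter the objective). The helper names are hypothetical, and complete graphs are used only for illustration.

```python
import itertools
import numpy as np

def difference_matrix_rows(n, edges):
    """C with one row per pair (i, j): +1 in column i and -1 in column j."""
    C = np.zeros((len(edges), n))
    for l, (i, j) in enumerate(edges):
        C[l, i], C[l, j] = 1.0, -1.0
    return C

def difference_matrix_cols(n, edges):
    """D is the transpose of the row version, so column l of U @ D is U[:, i] - U[:, j]."""
    return difference_matrix_rows(n, edges).T

N, p = 4, 3
E1 = list(itertools.combinations(range(N), 2))   # all row pairs i < j (complete graph)
E2 = list(itertools.combinations(range(p), 2))   # all column pairs i < j
C, D = difference_matrix_rows(N, E1), difference_matrix_cols(p, E2)
U = np.arange(N * p, dtype=float).reshape(N, p)
print((C @ U)[0], U[0] - U[1])   # both [-3. -3. -3.]
```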

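Then, the optimization problem (8) can be reformulated as follows:

$$\min_{U} \frac{1}{2}\|X - U\|_F^2 + \gamma\sum_{l=1}^{|\mathcal{E}_1|} w_l\|(CU)_{l\cdot}\|_2 + \gamma\sum_{l=1}^{|\mathcal{E}_2|}\tilde{w}_l\|(UD)_{\cdot l}\|_2. \quad (11)$$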

3.1. The ALM Formulation

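To implement the ALM, we further convert problem (11) into the following constrained optimization problem by introducing the auxiliary variables $V \in \mathbb{R}^{|\mathcal{E}_1|\times p}$ and $Z \in \mathbb{R}^{N\times|\mathcal{E}_2|}$:

$$\min_{U, V, Z} \frac{1}{2}\|X - U\|_F^2 + \gamma\sum_{l=1}^{|\mathcal{E}_1|} w_l\|V_{l\cdot}\|_2 + \gamma\sum_{l=1}^{|\mathcal{E}_2|}\tilde{w}_l\|Z_{\cdot l}\|_2 \quad \text{subject to} \quad CU = V,\ UD = Z, \quad (12)$$

where $V_{l\cdot}$ and $Z_{\cdot l}$ are the l-th row of V and the l-th column of Z, respectively. If we introduce the functions

$$f(U) = \frac{1}{2}\|X - U\|_F^2, \quad h_1(V) = \gamma\sum_{l=1}^{|\mathcal{E}_1|} w_l\|V_{l\cdot}\|_2, \quad h_2(Z) = \gamma\sum_{l=1}^{|\mathcal{E}_2|}\tilde{w}_l\|Z_{\cdot l}\|_2, \quad (13)$$

then problem (12) becomes

$$\min_{U, V, Z} f(U) + h_1(V) + h_2(Z) \quad \text{subject to} \quad CU = V,\ UD = Z. \quad (14)$$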

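The augmented Lagrangian function of problem (12) is given by

$$L_\nu(U, V, Z, \Lambda_1, \Lambda_2) = f(U) + h_1(V) + h_2(Z) + \sum_{l=1}^{|\mathcal{E}_1|}\left(\langle\Lambda_{1,l\cdot}, (CU)_{l\cdot} - V_{l\cdot}\rangle + \frac{\nu}{2}\|(CU)_{l\cdot} - V_{l\cdot}\|_2^2\right) + \sum_{l=1}^{|\mathcal{E}_2|}\left(\langle\Lambda_{2,\cdot l}, (UD)_{\cdot l} - Z_{\cdot l}\rangle + \frac{\nu}{2}\|(UD)_{\cdot l} - Z_{\cdot l}\|_2^2\right),$$

where $\nu > 0$ is an augmented Lagrangian penalty, $\Lambda_1 \in \mathbb{R}^{|\mathcal{E}_1|\times p}$ and $\Lambda_2 \in \mathbb{R}^{N\times|\mathcal{E}_2|}$ are Lagrange multipliers, and $\Lambda_{1,l\cdot}$ and $\Lambda_{2,\cdot l}$ are the l-th row of $\Lambda_1$ and the l-th column of $\Lambda_2$, respectively.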

3.2. The Proposed Method

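We construct our proposed method by repeatedly minimizing the augmented Lagrangian function of (12), written $L_\nu(U, V, Z, \Lambda_1, \Lambda_2)$, w.r.t. (U, V, Z) and then updating the Lagrange multipliers:

$$(U^{k+1}, V^{k+1}, Z^{k+1}) = \arg\min_{U, V, Z} L_\nu(U, V, Z, \Lambda_1^k, \Lambda_2^k), \quad (15)$$

$$\Lambda_1^{k+1} = \Lambda_1^k + \nu(CU^{k+1} - V^{k+1}), \quad (16)$$

$$\Lambda_2^{k+1} = \Lambda_2^k + \nu(U^{k+1}D - Z^{k+1}). \quad (17)$$

The whole procedure is summarized in Algorithm 2.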

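Algorithm 2 Proposed method.

Input: Data X, matrices C and D, Lipschitz constant L calculated by (27), penalties $\gamma$ and $\nu$, initial values $U^0$, $\Lambda_1^0$, $\Lambda_2^0$.

While (until convergence) do

Step 1: update $U^{k+1}$ by solving (19) with NAGM (Algorithm 1) using step size $1/L$

Step 2: update $\Lambda_1^{k+1}$ row by row by (22)

Step 3: update $\Lambda_2^{k+1}$ column by column by the column-wise analogue of (22)

End while

Output: optimal solution to problem (8), $U^* = U^{k+1}$.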

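Step 1: update U. In the U-update, we define the following function based on (14):

$$F(U; \Lambda_1, \Lambda_2) = f(U) + \min_{V}\left\{h_1(V) + \langle\Lambda_1, CU - V\rangle + \frac{\nu}{2}\|CU - V\|_F^2\right\} + \min_{Z}\left\{h_2(Z) + \langle\Lambda_2, UD - Z\rangle + \frac{\nu}{2}\|UD - Z\|_F^2\right\}, \quad (18)$$

where $\langle A, B\rangle = \mathrm{tr}(A^\top B)$. Then the update of U in (15) can be written as

$$U^{k+1} = \arg\min_{U} F(U; \Lambda_1^k, \Lambda_2^k). \quad (19)$$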

Since each minimization in (18) has the form (7), the function (18) is differentiable by Lemma 1, and we obtain the following proposition.

Proposition 1.

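The function $F(U; \Lambda_1, \Lambda_2)$ is differentiable with respect to U, and

$$\nabla_U F(U; \Lambda_1, \Lambda_2) = U - X + C^\top\mathrm{prox}_{\nu h_1^*}(\Lambda_1 + \nu CU) + \mathrm{prox}_{\nu h_2^*}(\Lambda_2 + \nu UD)D^\top.$$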

For the proof, see Appendix A.1.

With Proposition 1, we can use NAGM (Algorithm 1) to update U by solving the differentiable optimization problem (19).
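Proposition 1 can be sanity-checked numerically. The following sketch assumes NumPy, complete row and column graphs, and random weights, multipliers, and data (all hypothetical). It evaluates F in (18) by plugging in the row-wise minimizers and compares a central finite-difference directional derivative with the gradient formula; the column terms are handled by transposing, so that the columns of UD become rows.

```python
import itertools
import numpy as np

def proj_rows(M, radii):
    """Project each row M[l] onto the l2 ball of radius radii[l]."""
    n = np.linalg.norm(M, axis=1)
    return M * np.minimum(1.0, radii / np.maximum(n, 1e-12))[:, None]

def phi_rows(M, Lam, r, nu):
    """min_V sum_l r_l ||V_l||_2 + <Lam, M - V> + nu/2 ||M - V||_F^2, at its minimizer."""
    Zs = M + Lam / nu
    V = Zs - proj_rows(Zs, r / nu)      # row-wise prox of (r_l/nu)||.||_2 via Moreau
    R = M - V
    return np.sum(r * np.linalg.norm(V, axis=1)) + np.sum(Lam * R) + 0.5 * nu * np.sum(R ** 2)

def F_value(U, X, C, D, Lam1, Lam2, r1, r2, nu):
    """F(U; Lam1, Lam2) of (18); column terms are handled through transposes."""
    return (0.5 * np.sum((X - U) ** 2) + phi_rows(C @ U, Lam1, r1, nu)
            + phi_rows((U @ D).T, Lam2.T, r2, nu))

def grad_F(U, X, C, D, Lam1, Lam2, r1, r2, nu):
    """Proposition 1: U - X + C^T P(Lam1 + nu C U) + P(Lam2 + nu U D) D^T."""
    P1 = proj_rows(Lam1 + nu * (C @ U), r1)
    P2 = proj_rows((Lam2 + nu * (U @ D)).T, r2).T   # column-wise projection
    return U - X + C.T @ P1 + P2 @ D.T

rng = np.random.default_rng(2)
N, p, nu, gamma = 5, 4, 1.3, 0.6
E1 = list(itertools.combinations(range(N), 2))
E2 = list(itertools.combinations(range(p), 2))
C, D = np.zeros((len(E1), N)), np.zeros((p, len(E2)))
for l, (i, j) in enumerate(E1):
    C[l, i], C[l, j] = 1.0, -1.0
for l, (i, j) in enumerate(E2):
    D[i, l], D[j, l] = 1.0, -1.0
r1 = gamma * rng.uniform(0.5, 1.5, len(E1))          # radii gamma * w_l
r2 = gamma * rng.uniform(0.5, 1.5, len(E2))
X, U, E = (rng.normal(size=(N, p)) for _ in range(3))
Lam1, Lam2 = rng.normal(size=(len(E1), p)), rng.normal(size=(N, len(E2)))
args = (X, C, D, Lam1, Lam2, r1, r2, nu)
eps = 1e-6
fd = (F_value(U + eps * E, *args) - F_value(U - eps * E, *args)) / (2 * eps)
analytic = np.sum(grad_F(U, *args) * E)             # <grad F(U), E>
```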

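Step 2: update V and $\Lambda_1$. By step (15) of the ALM procedure, we must minimize the terms in (18) corresponding to each row vector $V_{l\cdot}$, i.e.,

$$V^{k+1}_{l\cdot} = \arg\min_{v \in \mathbb{R}^p}\left\{h_{1,l}(v) + \langle\Lambda^k_{1,l\cdot}, (CU^{k+1})_{l\cdot} - v\rangle + \frac{\nu}{2}\|(CU^{k+1})_{l\cdot} - v\|_2^2\right\} = \mathrm{prox}_{h_{1,l}/\nu}\left((CU^{k+1})_{l\cdot} + \Lambda^k_{1,l\cdot}/\nu\right), \quad (20)$$

where $h_{1,l}(v) = \gamma w_l\|v\|_2$ denotes the l-th term in $h_1$.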

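We substitute the optimal $V^{k+1}_{l\cdot}$ of the k-th iteration into step (16),

$$\Lambda^{k+1}_{1,l\cdot} = \Lambda^k_{1,l\cdot} + \nu\left((CU^{k+1})_{l\cdot} - V^{k+1}_{l\cdot}\right),$$

to obtain

$$\Lambda^{k+1}_{1,l\cdot} = \Lambda^k_{1,l\cdot} + \nu(CU^{k+1})_{l\cdot} - \nu\,\mathrm{prox}_{h_{1,l}/\nu}\left((CU^{k+1})_{l\cdot} + \Lambda^k_{1,l\cdot}/\nu\right). \quad (21)$$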

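By Moreau’s decomposition (2) with $\sigma = 1/\nu$, we further simplify update (21) as follows:

$$\Lambda^{k+1}_{1,l\cdot} = \mathrm{prox}_{\nu h_{1,l}^*}\left(\Lambda^k_{1,l\cdot} + \nu(CU^{k+1})_{l\cdot}\right), \quad (22)$$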

which means that the updates of V and $\Lambda_1$ merge into the single update (22). Hence, we no longer need to store and compute the variable V in the ALM updates, which reduces the computational cost.

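In update (22), the conjugate of the norm term $h_{1,l}$ is an indicator function ([36], Example 3.26):

$$h_{1,l}^*(y) = \begin{cases} 0, & \|y\|_2 \le \gamma w_l,\\ \infty, & \text{otherwise}. \end{cases} \quad (23)$$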

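Moreover, the proximal operator of the indicator function in (22) is a projection ([37], Theorem 6.24):

$$\Lambda^{k+1}_{1,l\cdot} = P_{B_l}\left(\Lambda^k_{1,l\cdot} + \nu(CU^{k+1})_{l\cdot}\right), \quad (24)$$

where $B_l = \{y \in \mathbb{R}^p : \|y\|_2 \le \gamma w_l\}$, and the operator $P_{B_l}$ denotes the projection onto the ball $B_l$. It solves the problem $\min_{y \in B_l}\|y - z\|_2$, i.e.,

$$P_{B_l}(z) = \begin{cases} z, & \|z\|_2 \le \gamma w_l,\\ \gamma w_l\, z/\|z\|_2, & \text{otherwise}. \end{cases}$$

This projection requires $O(p)$ operations for a p-dimensional vector z.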

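Step 3: update Z and $\Lambda_2$. Similarly, we can derive the following equation:

$$Z^{k+1}_{\cdot l} = \mathrm{prox}_{h_{2,l}/\nu}\left((U^{k+1}D)_{\cdot l} + \Lambda^k_{2,\cdot l}/\nu\right),$$

where $h_{2,l}(z) = \gamma\tilde{w}_l\|z\|_2$. Then, the dual variable update becomes

$$\Lambda^{k+1}_{2,\cdot l} = \mathrm{prox}_{\nu h_{2,l}^*}\left(\Lambda^k_{2,\cdot l} + \nu(U^{k+1}D)_{\cdot l}\right). \quad (25)$$

If we write it in terms of the projection operator, it becomes

$$\Lambda^{k+1}_{2,\cdot l} = P_{\tilde{B}_l}\left(\Lambda^k_{2,\cdot l} + \nu(U^{k+1}D)_{\cdot l}\right), \quad (26)$$

where $\tilde{B}_l = \{y \in \mathbb{R}^N : \|y\|_2 \le \gamma\tilde{w}_l\}$.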
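The equivalence between the two-step update (20)–(21) and the single projection (24) can be seen numerically. The minimal sketch below assumes NumPy; `radius` plays the role of $\gamma w_l$, and the vectors `lam` and `cu` stand in for $\Lambda^k_{1,l\cdot}$ and $(CU^{k+1})_{l\cdot}$. The function names are hypothetical.

```python
import numpy as np

def block_soft(z, t):
    """prox of t*||.||_2."""
    n = np.linalg.norm(z)
    return np.zeros_like(z) if n <= t else (1.0 - t / n) * z

def proj_ball(z, r):
    """Projection onto the l2 ball of radius r, O(len(z)) operations."""
    n = np.linalg.norm(z)
    return z if n <= r else (r / n) * z

def dual_update_two_step(lam, cu, nu, radius):
    """(20) then (21): form V_l explicitly, then update the multiplier."""
    v = block_soft(cu + lam / nu, radius / nu)
    return lam + nu * (cu - v)

def dual_update_projection(lam, cu, nu, radius):
    """(24): a single projection; V_l is never formed."""
    return proj_ball(lam + nu * cu, radius)

rng = np.random.default_rng(3)
lam, cu = rng.normal(size=6), rng.normal(size=6)
print(np.allclose(dual_update_two_step(lam, cu, 0.8, 0.5),
                  dual_update_projection(lam, cu, 0.8, 0.5)))  # True
```

The same identity applies column by column to $\Lambda_2$, with balls in $\mathbb{R}^N$ of radius $\gamma\tilde{w}_l$.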

Our proposed method uses only first-order information. In each iteration, we only need to calculate the gradient of the function F and the proximal operators, which reduce to projections onto $\ell_2$ balls.
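Putting the pieces together, a compact sketch of Algorithm 2 is given below. It is an illustrative NumPy implementation under our own assumptions, not the authors’ Rcpp code: the weights are passed as dictionaries over index pairs, the initial values are $U^0 = X$ and $\Lambda_1^0 = \Lambda_2^0 = 0$, and the default $\nu = 1$, the iteration limits, and the stopping rules are arbitrary choices. The name `convex_bicluster_alm` is hypothetical.

```python
import itertools
import numpy as np

def incidence(n, edges):
    """One row per pair (i, j): +1 at i and -1 at j (sign convention assumed)."""
    M = np.zeros((len(edges), n))
    for l, (i, j) in enumerate(edges):
        M[l, i], M[l, j] = 1.0, -1.0
    return M

def proj_rows(M, radii):
    """Row-wise projection onto l2 balls with the given radii."""
    n = np.linalg.norm(M, axis=1)
    return M * np.minimum(1.0, radii / np.maximum(n, 1e-12))[:, None]

def convex_bicluster_alm(X, w_row, w_col, gamma, nu=1.0,
                         max_outer=500, max_inner=5000, tol=1e-9):
    """ALM outer loop with NAGM inner solves for problem (8) (illustrative sketch).

    w_row / w_col: dicts {(i, j): weight} over row pairs and column pairs.
    """
    N, p = X.shape
    E1, E2 = list(w_row), list(w_col)
    C, D = incidence(N, E1), incidence(p, E2).T
    r1 = gamma * np.array([w_row[e] for e in E1])     # ball radii gamma * w_l
    r2 = gamma * np.array([w_col[e] for e in E2])
    # Proposition 2: L = 1 + nu * lambda_max(C^T C) + nu * lambda_max(D^T D).
    L = 1.0 + nu * (np.linalg.eigvalsh(C.T @ C)[-1] + np.linalg.eigvalsh(D.T @ D)[-1])
    U = X.copy()
    Lam1, Lam2 = np.zeros((len(E1), p)), np.zeros((N, len(E2)))

    def grad(V):                                      # Proposition 1
        P1 = proj_rows(Lam1 + nu * (C @ V), r1)
        P2 = proj_rows((Lam2 + nu * (V @ D)).T, r2).T
        return V - X + C.T @ P1 + P2 @ D.T

    for _ in range(max_outer):
        # Step 1: NAGM (Algorithm 1) on F(.; Lam1, Lam2), warm-started at U.
        x_prev, y, t = U.copy(), U.copy(), 1.0
        for _ in range(max_inner):
            x = y - grad(y) / L
            t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
            y = x + ((t - 1.0) / t_next) * (x - x_prev)
            small = np.linalg.norm(x - x_prev) <= tol
            x_prev, t = x, t_next
            if small:
                break
        U_new = x_prev
        # Steps 2-3: multiplier updates as row/column projections; V and Z are never stored.
        Lam1 = proj_rows(Lam1 + nu * (C @ U_new), r1)
        Lam2 = proj_rows((Lam2 + nu * (U_new @ D)).T, r2).T
        change = np.linalg.norm(U_new - U) / max(1.0, np.linalg.norm(U))
        U = U_new
        if change <= tol:
            break
    return U
```

For example, with unit weights on complete row and column graphs, `convex_bicluster_alm(X, w_row, w_col, gamma=0.0)` returns X itself, while a very large $\gamma$ fuses all entries into the overall mean of X, matching the two extremes described in Section 1.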

3.3. Lipschitz Constant and Convergence Rate

By the following lemma, if we choose 1/L as the step size in each NAGM iteration, the objective error decreases at the rate $O(1/k^2)$.

Lemma 2

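([30,35,38]). Let $\{x_k\}$ be the sequence generated by Algorithm 1 with initial value $x_0$, and let $x^*$ be a minimizer of F. If we take the step size 1/L, then for any $k \ge 1$ we have

$$F(x_k) - F(x^*) \le \frac{2L\|x_0 - x^*\|_2^2}{(k+1)^2}.$$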

To examine the performance of the proposed method, we derive the Lipschitz constant L of $\nabla_U F$ in the following proposition.

Proposition 2.

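The Lipschitz constant of $\nabla_U F$ is upper bounded by

$$L = 1 + \nu\lambda_{\max}(C^\top C) + \nu\lambda_{\max}(D^\top D), \quad (27)$$

where $\lambda_{\max}(\cdot)$ denotes the maximum eigenvalue of the corresponding matrix.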

Proof.

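By definition (1) and Proposition 1, for any $U, U' \in \mathbb{R}^{N\times p}$ we have

$$\|\nabla_U F(U) - \nabla_U F(U')\|_F \le \|U - U'\|_F + \left\|C^\top\left(\mathrm{prox}_{\nu h_1^*}(\Lambda_1 + \nu CU) - \mathrm{prox}_{\nu h_1^*}(\Lambda_1 + \nu CU')\right)\right\|_F + \left\|\left(\mathrm{prox}_{\nu h_2^*}(\Lambda_2 + \nu UD) - \mathrm{prox}_{\nu h_2^*}(\Lambda_2 + \nu U'D)\right)D^\top\right\|_F.$$

By the definition of the matrix 2-norm and the nonexpansiveness of the proximal operators ([39], Lemma 2.4), we obtain

$$\|\nabla_U F(U) - \nabla_U F(U')\|_F \le \left(1 + \nu\|C\|_2^2 + \nu\|D\|_2^2\right)\|U - U'\|_F = \left(1 + \nu\lambda_{\max}(C^\top C) + \nu\lambda_{\max}(D^\top D)\right)\|U - U'\|_F.$$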

□
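Bound (27) is straightforward to evaluate. Under the assumption of complete row and column graphs (the experiments use k-nearest-neighbour graphs instead), $C^\top C = N I_N - \mathbf{1}\mathbf{1}^\top$ is a graph Laplacian with largest eigenvalue N, and likewise for the columns, so (27) reduces to $1 + \nu(N + p)$. The sketch below, assuming NumPy and hypothetical helper names, checks this.

```python
import itertools
import numpy as np

def incidence(n, edges):
    """One row per pair (i, j): +1 at i and -1 at j."""
    M = np.zeros((len(edges), n))
    for l, (i, j) in enumerate(edges):
        M[l, i], M[l, j] = 1.0, -1.0
    return M

def lipschitz_bound(C, D, nu):
    """Proposition 2: L = 1 + nu * lambda_max(C^T C) + nu * lambda_max(D^T D)."""
    # eigvalsh returns eigenvalues in ascending order, so [-1] is the largest.
    return 1.0 + nu * (np.linalg.eigvalsh(C.T @ C)[-1] + np.linalg.eigvalsh(D.T @ D)[-1])

N, p, nu = 6, 4, 2.0
C = incidence(N, list(itertools.combinations(range(N), 2)))      # complete row graph
D = incidence(p, list(itertools.combinations(range(p), 2))).T    # complete column graph
print(round(lipschitz_bound(C, D, nu), 6))   # 21.0 = 1 + nu * (N + p)
```

For sparser graphs, the largest Laplacian eigenvalue is at most twice the maximum degree, so k-nearest-neighbour weights typically give a smaller L and hence a larger step size 1/L.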

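Finally, we note that, in terms of time complexity, the proposed method is less sensitive to the value of $\gamma$ than the conventional methods. In fact, $\gamma$ affects the proposed method only through the functions $h_1^*$ and $h_2^*$, which take the value 0 or ∞ depending on whether $\Lambda_1$ and $\Lambda_2$ lie in the balls of radius $\gamma w_l$ and $\gamma\tilde{w}_l$ (see (23)); that is, $\gamma$ only changes the radii of the projections.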

On the other hand, COBRA solves two optimization problems in each iteration, and the ADMM-based methods need to solve the Sylvester equation, and the computational costs of all of these methods are strongly affected by the value of $\gamma$.

4. Experiments

In this section, we show the performance of the proposed approach for estimating and assessing the biclusters by conducting experiments on both synthetic and real datasets. We executed the following algorithms:

- COBRA: Dykstra-like proximal algorithm proposed by Chi et al. [9].

- ADMM: the ADMM proposed by Weylandt [19].

- G-ADMM (generalized ADMM): the modified ADMM presented by Weylandt [19].

- Proposed method: the proposed algorithm shown in Algorithm 2.

All algorithms were implemented with Rcpp and run on a MacBook Air with a 1.6 GHz Intel Core i5 and 8 GB of memory. We recorded the wall-clock times of the four algorithms.

4.1. Artificial Data Analysis

We evaluate the performance of the proposed method on synthetic data in terms of the number of iterations, the execution time, and the clustering quality.

We generate artificial data with a checkerboard bicluster structure, similarly to [9]. We simulate $x_{ij} \sim N(\mu_{rc}, \sigma^2)$ (i.i.d.), where the indices r and c range over the row clusters $\{1, \dots, R\}$ and the column clusters $\{1, \dots, K\}$, respectively, so the number of biclusters is $M = RK$. Each $x_{ij}$ is randomly assigned to one of those M biclusters. The mean $\mu_{rc}$ is chosen uniformly from an equally spaced sequence, and $\sigma$ is set to two values corresponding to different noise levels.
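A generator for checkerboard data of this kind might look as follows. This is a sketch under stated assumptions, using NumPy: row and column cluster memberships are drawn uniformly at random (so each entry's bicluster is determined by its row and column), and the grid of means `mu_grid` and the noise level `sigma` are placeholders to be set by the user. The function name is hypothetical.

```python
import numpy as np

def checkerboard_data(N, p, R, K, sigma, mu_grid, rng):
    """x_ij ~ N(mu_rc, sigma^2) i.i.d., rows in R clusters and columns in K clusters.

    Memberships are drawn uniformly at random (assumption); one mean per bicluster
    is drawn from mu_grid, giving M = R * K biclusters.
    """
    row_lab = rng.integers(R, size=N)
    col_lab = rng.integers(K, size=p)
    mu = rng.choice(mu_grid, size=(R, K))                # mu_rc for each bicluster
    X = mu[row_lab][:, col_lab] + sigma * rng.normal(size=(N, p))
    return X, row_lab, col_lab

rng = np.random.default_rng(0)
X, row_lab, col_lab = checkerboard_data(100, 40, 4, 2, 1.0, np.linspace(-6, 6, 13), rng)
```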

In our experiments, we consider the following stopping criteria for the four algorithms.

- Relative error:

- Objective function error:

where $\epsilon$ is a given accuracy tolerance. We terminate an algorithm when the above error is smaller than $\epsilon$ or when the number of iterations exceeds 10,000. We use the relative error for the time comparisons and the quality assessment, and the objective function error for the convergence rate analysis.

4.1.1. Comparisons

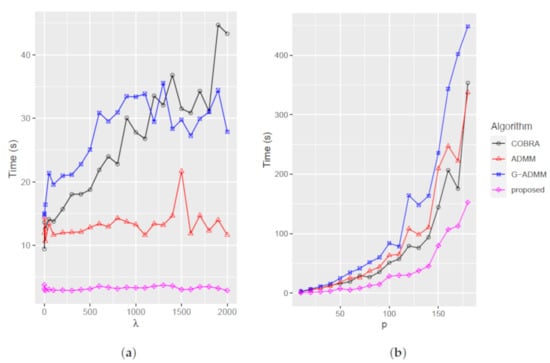

We vary the size $N \times p$ of the data matrix X and the tuning parameter $\gamma$ to compare the performance of the four algorithms. First, we compare the execution time for different $\gamma$, ranging from 1 to 2000, with the other parameters fixed. The results are shown in Figure 2a.

Figure 2.

Execution time for various $\gamma$ and p. (a) Different $\gamma$; (b) different p.

From Figure 2a, we observe that the execution times of COBRA and G-ADMM increase rapidly as $\gamma$ increases, and the execution time of COBRA is the largest for large $\gamma$. Therefore, COBRA takes a long time to visualize the whole fusion process, particularly the single-bicluster case. Our proposed method significantly outperforms the other three algorithms and remains stable over a wide range of $\gamma$. Because of its low computational time, our proposed method is preferable for visualizing the biclusters over various values of $\gamma$.

Next, we compare the execution time for different p (from 1 to 200), with the numbers of row and column clusters fixed. Figure 2b shows that the execution times of all algorithms increase as p grows and that the proposed method performs better than the other three. In particular, ADMM and G-ADMM suffer as the feature dimension p grows, and their computation time increases dramatically.

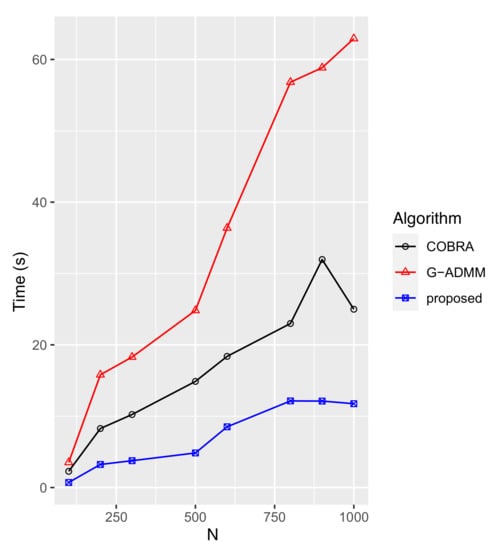

Then, we vary the sample size N from 100 to 1000, with the feature dimension p and the other parameters fixed. Figure 3 shows the execution times of three algorithms (COBRA, G-ADMM, and the proposed method) for each N.

Figure 3.

Execution times for each N.

The curves reveal that the larger the sample size N, the more time the algorithms require. Moreover, ADMM takes more than 500 s, and up to around 1800 s, as N grows, which is much longer than the other three algorithms; thus, we omit ADMM from the figure. In contrast, our proposed method takes only around 10 s even for large N, about one-sixth of the time required by G-ADMM.

4.1.2. Assessment

We evaluate the clustering quality by a widely used criterion, the Rand index (RI) [40]. RI ranges from 0 to 1; a higher value indicates better agreement with the true labels, and 1 indicates perfect agreement. Note that the true bicluster labels are known from the data generation procedure. We generate data matrices at two noise levels, low and high, and compare the clustering quality of our proposed method with that of ADMM, G-ADMM, and COBRA under six settings (Settings 1–6) with different bicluster structures.
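For reference, a Rand index computation is sketched below, assuming NumPy. How bicluster labels enter the index is our assumption: each entry $x_{ij}$ is labelled by its (row cluster, column cluster) pair, and RI is computed over all $Np$ entries via a contingency table, which avoids enumerating all pairs of entries explicitly. Function names are hypothetical.

```python
import numpy as np

def pairs(x):
    """Number of unordered pairs, x choose 2, elementwise."""
    x = np.asarray(x, dtype=float)
    return x * (x - 1.0) / 2.0

def rand_index(labels_true, labels_pred):
    """Rand index computed from the contingency table of the two labelings."""
    _, t = np.unique(np.asarray(labels_true).ravel(), return_inverse=True)
    _, q = np.unique(np.asarray(labels_pred).ravel(), return_inverse=True)
    table = np.zeros((t.max() + 1, q.max() + 1))
    np.add.at(table, (t, q), 1.0)
    total = pairs(t.size)
    together_both = pairs(table).sum()              # pairs grouped together in both
    together_true = pairs(table.sum(axis=1)).sum()
    together_pred = pairs(table.sum(axis=0)).sum()
    return float((total + 2.0 * together_both - together_true - together_pred) / total)

def bicluster_labels(row_lab, col_lab):
    """Entry (i, j) gets one integer label per (row cluster, column cluster) pair."""
    row_lab, col_lab = np.asarray(row_lab), np.asarray(col_lab)
    return (row_lab[:, None] * (col_lab.max() + 1) + col_lab[None, :]).ravel()
```

The index counts agreeing pairs (together in both labelings or apart in both) over all pairs, so relabelling clusters does not change it.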

Table 2 presents the results. As the tuning parameter $\gamma$ increases, the biclusters tend to fuse, which reduces the influence of noise in the raw data. However, for extremely large $\gamma$, such as 10,000, the biclusters may be over-smoothed and the Rand index decreases, as in Setting 1. The Rand indices of COBRA, ADMM, and our proposed method are similar in most cases because all of these algorithms solve the same model. In contrast, G-ADMM performs worst because of its slow convergence, and it does not converge well when $\gamma$ is large (e.g., $\gamma$ = 10,000). Overall, the results in Table 2 show that our proposed method achieves high accuracy and stability from low to high noise levels.

Table 2.

Assessment result.

4.2. Real Data Analysis

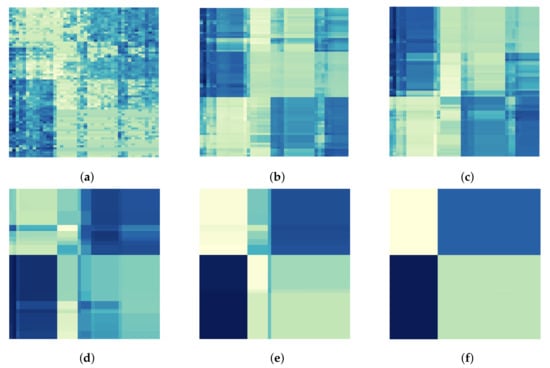

In this subsection, we use three real datasets to demonstrate the performance of our proposed method.

First, we use the presidential speeches dataset preprocessed by Weylandt et al. [41], which contains 75 high-frequency words taken from significant speeches of the 44 U.S. presidents up to around 2018. Figure 4 shows heatmaps for a wide range of tuning parameters $\gamma$ to exhibit the fusion process of the biclusters. We set the tolerance $\epsilon$ and use the relative-error stopping criterion described in Section 4.1. The columns represent presidents, and the rows represent words. For small $\gamma$, the heatmap is disordered, and there are no distinct subgroups. As $\gamma$ increases, the biclusters begin to merge, and we can identify the common vocabulary used in groups of presidents’ speeches. Moreover, as shown in Figure 4f, the heatmap clearly shows four biclusters, with two subgroups of presidents and two subgroups of words, when $\gamma$ = 30,000.

Figure 4.

Heatmaps produced by the proposed method on the presidential speeches dataset for a wide range of $\gamma$. (a)–(d) Smaller values of $\gamma$; (e) $\gamma$ = 15,000; (f) $\gamma$ = 30,000.

Second, we compare the computational time of the four algorithms on two real datasets. One is The Cancer Genome Atlas (TCGA) dataset [42], which contains 438 breast cancer patients (samples) and 353 genes (features); the other is the diffuse large-B-cell lymphoma (DLBCL) dataset [43], with 3795 genes and 58 patient samples. Of the 58 DLBCL samples, 32 are from cured patients and 26 are from sick individuals. From the DLBCL dataset, we extract the 500 genes with the highest variances.

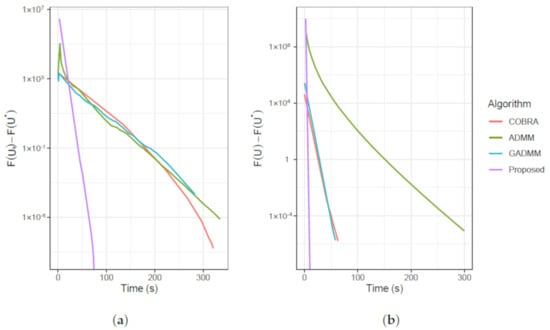

Figure 5a,b shows the elapsed-time comparison. We observe that our proposed approach outperforms the other three methods, whereas ADMM performs worst on the DLBCL dataset and, for some tolerance levels, on the TCGA dataset.

Figure 5.

Plot of the tolerance $\epsilon$ vs. the elapsed time. (a) TCGA; (b) DLBCL.

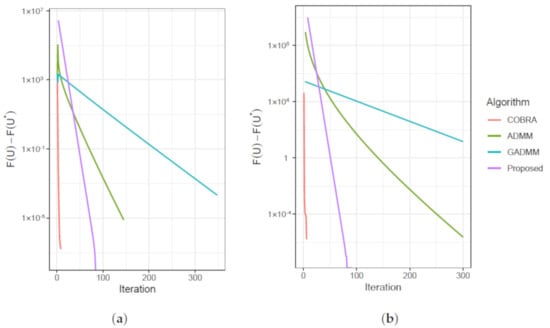

Lastly, we compare the number of iterations required to achieve a specified tolerance $\epsilon$ on the TCGA and DLBCL datasets. Figure 6a,b shows that COBRA converges in the fewest iterations, whereas generalized ADMM is the slowest to converge. Our proposed method is competitive in terms of convergence rate.

Figure 6.

Plot of the tolerance $\epsilon$ vs. the number of iterations. (a) TCGA; (b) DLBCL.

Overall, from the above experiment results of the artificial and real datasets, our proposed method has superior computational performance with high accuracy.

5. Discussion

We proposed a method for solving the convex biclustering problem and found that it outperforms conventional algorithms, such as COBRA and the ADMM-based procedures, in terms of efficiency. Our method is more efficient than COBRA because the latter must solve two optimization problems containing non-differentiable fused terms in each cycle. It also performs better than the ADMM-based procedures because it builds on [29] and uses NAGM to update the variable U, whereas ADMM spends much more time computing matrix inverses. Moreover, our method remains stable as the tuning parameter $\gamma$ varies, which makes it convenient to find the optimal $\gamma$ and to visualize how the heatmaps change over a wide range of $\gamma$.

As for further improvements, ADMM could be used as a warm-start strategy to select an initial value for our proposed method. Moreover, in view of the fusion process of the heatmaps in Figure 4, it would be useful to derive the range of tuning parameters that yields non-trivial solutions of convex biclustering with more than one bicluster. The proposed method could also be extended to other clustering problems, such as the sparse singular value decomposition model [44] and the integrative generalized convex clustering model [45].

Author Contributions

Conceptualization, J.C. and J.S.; methodology, J.C. and J.S.; software, J.C.; formal analysis, J.C. and J.S.; investigation, J.C.; resources, J.C.; data curation, J.C.; writing—original draft preparation, J.C. and J.S.; writing—review and editing, J.C. and J.S.; visualization, J.C.; supervision, J.S.; project administration, J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Grant-in-Aid for Scientific Research (KAKENHI) C, Grant number: 18K11192.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this paper. Presidential speeches dataset: https://www.presidency.ucsb.edu (accessed date 18 October 2021); DLBCL dataset: http://portals.broadinstitute.org/cgi-bin/cancer/datasets.cgi (accessed date 18 October 2021).

Acknowledgments

The authors would like to thank Ryosuke Shimmura for helpful discussions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ADMM | alternating direction method of multipliers |
| ALM | augmented Lagrangian method |
| COBRA | convex biclustering algorithm |
| DLBCL | diffuse large-B-cell lymphoma |
| FISTA | fast iterative shrinkage-thresholding algorithm |
| ISTA | iterative shrinkage-thresholding algorithm |
| NAGM | Nesterov’s accelerated gradient method |
| RI | Rand index |
| TCGA | The Cancer Genome Atlas |

Appendix A

Appendix A.1. Proof of Proposition 1

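First, we define the following two functions:

$$\psi_1(V) = h_1(V) + \frac{\nu}{2}\|V\|_F^2$$

and

$$\psi_2(Z) = h_2(Z) + \frac{\nu}{2}\|Z\|_F^2.$$

By the definition of F in (18), expanding the squared norms gives

$$F(U; \Lambda_1, \Lambda_2) = f(U) + \langle\Lambda_1, CU\rangle + \frac{\nu}{2}\|CU\|_F^2 - \psi_1^*(\Lambda_1 + \nu CU) + \langle\Lambda_2, UD\rangle + \frac{\nu}{2}\|UD\|_F^2 - \psi_2^*(\Lambda_2 + \nu UD).$$

By Theorem 26.3 in [33], if a function $\psi$ is closed and strongly convex, then its conjugate $\psi^*$ is differentiable, and

$$\nabla\psi^*(Y) = \arg\max_{V}\{\langle V, Y\rangle - \psi(V)\}.$$

Hence, since $\psi_1$ and $\psi_2$ are closed and $\nu$-strongly convex, we can derive the following equations:

$$\nabla\psi_1^*(Y) = \arg\min_{V}\left\{h_1(V) + \frac{\nu}{2}\|V - Y/\nu\|_F^2\right\} = \mathrm{prox}_{h_1/\nu}(Y/\nu), \qquad \nabla\psi_2^*(Y) = \mathrm{prox}_{h_2/\nu}(Y/\nu).$$

Then, we obtain

$$\nabla_U\left[\langle\Lambda_1, CU\rangle + \frac{\nu}{2}\|CU\|_F^2 - \psi_1^*(\Lambda_1 + \nu CU)\right] = C^\top\left(\Lambda_1 + \nu CU - \nu\,\mathrm{prox}_{h_1/\nu}(CU + \Lambda_1/\nu)\right)$$

and, similarly,

$$\nabla_U\left[\langle\Lambda_2, UD\rangle + \frac{\nu}{2}\|UD\|_F^2 - \psi_2^*(\Lambda_2 + \nu UD)\right] = \left(\Lambda_2 + \nu UD - \nu\,\mathrm{prox}_{h_2/\nu}(UD + \Lambda_2/\nu)\right)D^\top.$$

Next, taking the derivative of F w.r.t. U, we have

$$\nabla_U F(U; \Lambda_1, \Lambda_2) = U - X + C^\top\left(\Lambda_1 + \nu CU - \nu\,\mathrm{prox}_{h_1/\nu}(CU + \Lambda_1/\nu)\right) + \left(\Lambda_2 + \nu UD - \nu\,\mathrm{prox}_{h_2/\nu}(UD + \Lambda_2/\nu)\right)D^\top = U - X + C^\top\mathrm{prox}_{\nu h_1^*}(\Lambda_1 + \nu CU) + \mathrm{prox}_{\nu h_2^*}(\Lambda_2 + \nu UD)D^\top,$$

where we obtain the last equation by Moreau’s decomposition (2) with $\sigma = 1/\nu$.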

References

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A k-means clustering algorithm. J. R. Stat. Soc. Ser. (Appl. Stat.) 1979, 28, 100–108. [Google Scholar] [CrossRef]

- Johnson, S.C. Hierarchical clustering schemes. Psychometrika 1967, 32, 241–254. [Google Scholar] [CrossRef] [PubMed]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Hartigan, J.A. Direct clustering of a data matrix. J. Am. Stat. Assoc. 1972, 67, 123–129. [Google Scholar] [CrossRef]

- Cheng, Y.; Church, G.M. Biclustering of expression data. ISMB Int. Conf. Intell. Syst. Mol. Biol. 2000, 8, 93–103. [Google Scholar]

- Tanay, A.; Sharan, R.; Shamir, R. Discovering statistically significant biclusters in gene expression data. Bioinformatics 2002, 18, S136–S144. [Google Scholar] [CrossRef] [Green Version]

- Prelić, A.; Bleuler, S.; Zimmermann, P.; Wille, A.; Bühlmann, P.; Gruissem, W.; Hennig, L.; Thiele, L.; Zitzler, E. A systematic comparison and evaluation of biclustering methods for gene expression data. Bioinformatics 2006, 22, 1122–1129. [Google Scholar] [CrossRef] [PubMed]

- Madeira, S.C.; Oliveira, A.L. Biclustering algorithms for biological data analysis: A survey. IEEE/ACM Trans. Comput. Biol. Bioinform. 2004, 1, 24–45. [Google Scholar] [CrossRef]

- Chi, E.C.; Allen, G.I.; Baraniuk, R.G. Convex biclustering. Biometrics 2017, 73, 10–19. [Google Scholar] [CrossRef]

- Tibshirani, R.; Saunders, M.; Rosset, S.; Zhu, J.; Knight, K. Sparsity and smoothness via the fused lasso. J. R. Stat. Soc. Ser. (Stat. Methodol.) 2005, 67, 91–108. [Google Scholar] [CrossRef] [Green Version]

- Hocking, T.D.; Joulin, A.; Bach, F.; Vert, J.P. Clusterpath an algorithm for clustering using convex fusion penalties. In Proceedings of the 28th International Conference on Machine Learning, ICML 2011, Bellevue, DC, USA, 28 June–2 July 2011; p. 1. [Google Scholar]

- Lindsten, F.; Ohlsson, H.; Ljung, L. Clustering using sum-of-norms regularization: With application to particle filter output computation. In Proceedings of the 2011 IEEE Statistical Signal Processing Workshop (SSP), Nice, France, 28–30 June 2011; pp. 201–204. [Google Scholar]

- Pelckmans, K.; De Brabanter, J.; Suykens, J.A.; De Moor, B. Convex clustering shrinkage. In Proceedings of the PASCALWorkshop on Statistics and Optimization of Clustering Workshop, London, UK, 4–5 July 2005. [Google Scholar]

- Langfelder, P.; Horvath, S. WGCNA: An R package for weighted correlation network analysis. BMC Bioinform. 2008, 9, 1–13.

- Tan, K.M.; Witten, D.M. Sparse biclustering of transposable data. J. Comput. Graph. Stat. 2014, 23, 985–1008.

- Shor, N.Z. Minimization Methods for Non-Differentiable Functions; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 3.

- Boyd, S.; Xiao, L.; Mutapcic, A. Subgradient methods. Lecture Notes for EE392o, Stanford University, Autumn Quarter 2003–2004.

- Bauschke, H.H.; Combettes, P.L. A Dykstra-like algorithm for two monotone operators. Pac. J. Optim. 2008, 4, 383–391.

- Weylandt, M. Splitting methods for convex bi-clustering and co-clustering. In Proceedings of the 2019 IEEE Data Science Workshop (DSW), Minneapolis, MN, USA, 2–5 June 2019; pp. 237–242.

- Glowinski, R.; Marroco, A. Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité d’une classe de problèmes de Dirichlet non linéaires. ESAIM Math. Model. Numer. Anal.-Modélisation Mathématique Anal. Numérique 1975, 9, 41–76.

- Gabay, D.; Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 1976, 2, 17–40.

- Deng, W.; Yin, W. On the global and linear convergence of the generalized alternating direction method of multipliers. J. Sci. Comput. 2016, 66, 889–916.

- Chi, E.C.; Lange, K. Splitting methods for convex clustering. J. Comput. Graph. Stat. 2015, 24, 994–1013.

- Suzuki, J. Sparse Estimation with Math and R: 100 Exercises for Building Logic; Springer: Berlin/Heidelberg, Germany, 2021.

- Bartels, R.H.; Stewart, G.W. Solution of the matrix equation AX + XB = C [F4]. Commun. ACM 1972, 15, 820–826.

- Goldstein, T.; O’Donoghue, B.; Setzer, S.; Baraniuk, R. Fast alternating direction optimization methods. SIAM J. Imaging Sci. 2014, 7, 1588–1623.

- Boyd, S.; Parikh, N.; Chu, E. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Publishers Inc.: Boston, MA, USA, 2011.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Shimmura, R.; Suzuki, J. Converting ADMM to a Proximal Gradient for Convex Optimization Problems. arXiv 2021, arXiv:2104.10911.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Moreau, J.J. Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. France 1965, 93, 273–299.

- Hestenes, M.R. Multiplier and gradient methods. J. Optim. Theory Appl. 1969, 4, 303–320.

- Rockafellar, R.T. The multiplier method of Hestenes and Powell applied to convex programming. J. Optim. Theory Appl. 1973, 12, 555–562.

- Nocedal, J.; Wright, S. Numerical Optimization; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

- Nesterov, Y.E. A method for solving the convex programming problem with convergence rate O(1/k²). Dokl. Akad. Nauk SSSR 1983, 269, 543–547.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Beck, A. First-Order Methods in Optimization; SIAM: Philadelphia, PA, USA, 2017.

- Nemirovski, A.; Yudin, D. Problem Complexity and Method Efficiency in Optimization; John Wiley: Hoboken, NJ, USA, 1983.

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Rand, W.M. Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 1971, 66, 846–850.

- Weylandt, M.; Nagorski, J.; Allen, G.I. Dynamic visualization and fast computation for convex clustering via algorithmic regularization. J. Comput. Graph. Stat. 2020, 29, 87–96.

- Koboldt, D.; Fulton, R.; McLellan, M.; Schmidt, H.; Kalicki-Veizer, J.; McMichael, J.; Fulton, L.; Dooling, D.; Ding, L.; Mardis, E.; et al. Comprehensive molecular portraits of human breast tumours. Nature 2012, 490, 61–70.

- Rosenwald, A.; Wright, G.; Chan, W.C.; Connors, J.M.; Campo, E.; Fisher, R.I.; Gascoyne, R.D.; Muller-Hermelink, H.K.; Smeland, E.B.; Giltnane, J.M.; et al. The use of molecular profiling to predict survival after chemotherapy for diffuse large-B-cell lymphoma. N. Engl. J. Med. 2002, 346, 1937–1947.

- Lee, M.; Shen, H.; Huang, J.Z.; Marron, J. Biclustering via sparse singular value decomposition. Biometrics 2010, 66, 1087–1095.

- Wang, M.; Allen, G.I. Integrative generalized convex clustering optimization and feature selection for mixed multi-view data. J. Mach. Learn. Res. 2021, 22, 1–73.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).