3.1. Estimating Dynamic Factor Models Using Bayesian MCMC

In this section, we first introduce our method of implementing the unbalanced data into our model framework naturally. Then, we finish our model specification by assigning priors in Bayesian Framework. Finally, the MCMC procedure is discussed in detail.

As discussed in

Section 2, macroeconomic series are released with diverse lags in real time. Thus, a difficulty in real-time nowcasting is to deal with unbalanced data. In this section, we develop a computational Bayesian MCMC approach that can tackle this issue naturally.

To deal with the missing data in

at the end of the sample, we introduce the

indicator matrix

by deleting the

ith row from the identity matrix

if

. For the example discussed in

Section 2, at the third releasing date

in month

T,

. Therefore, removing the fifth and sixth row of

gives us

Similarly, for the index set

, deleting the last four rows of

leads to

Then, we can simply rewrite as

To better derive the posterior distributions, we express the dynamic of

in Equation (

1) as:

where

,

is a

vector representing the

ith row of

,

, and the symbol ⊗ denotes the Kronecker product. Thus, for the

qth releasing date in month

T, the conditional density for

is

the conditional density of

is

and the conditional density of

is

for

. In this way, the unbalanced structure of the data is built into our model framework through this indicator matrix

.

Let denote all parameters to be estimated. Suppose we are at releasing date q in month T of quarter , our task is to use observations and to estimate parameters and latent factors , then conduct the nowcast for .

The joint posterior distribution

can be written as a product of individual conditionals,

where

,

,

, and

can be derived according to Equations (

7)–(

9), respectively.

is the prior distribution for the parameter set

.

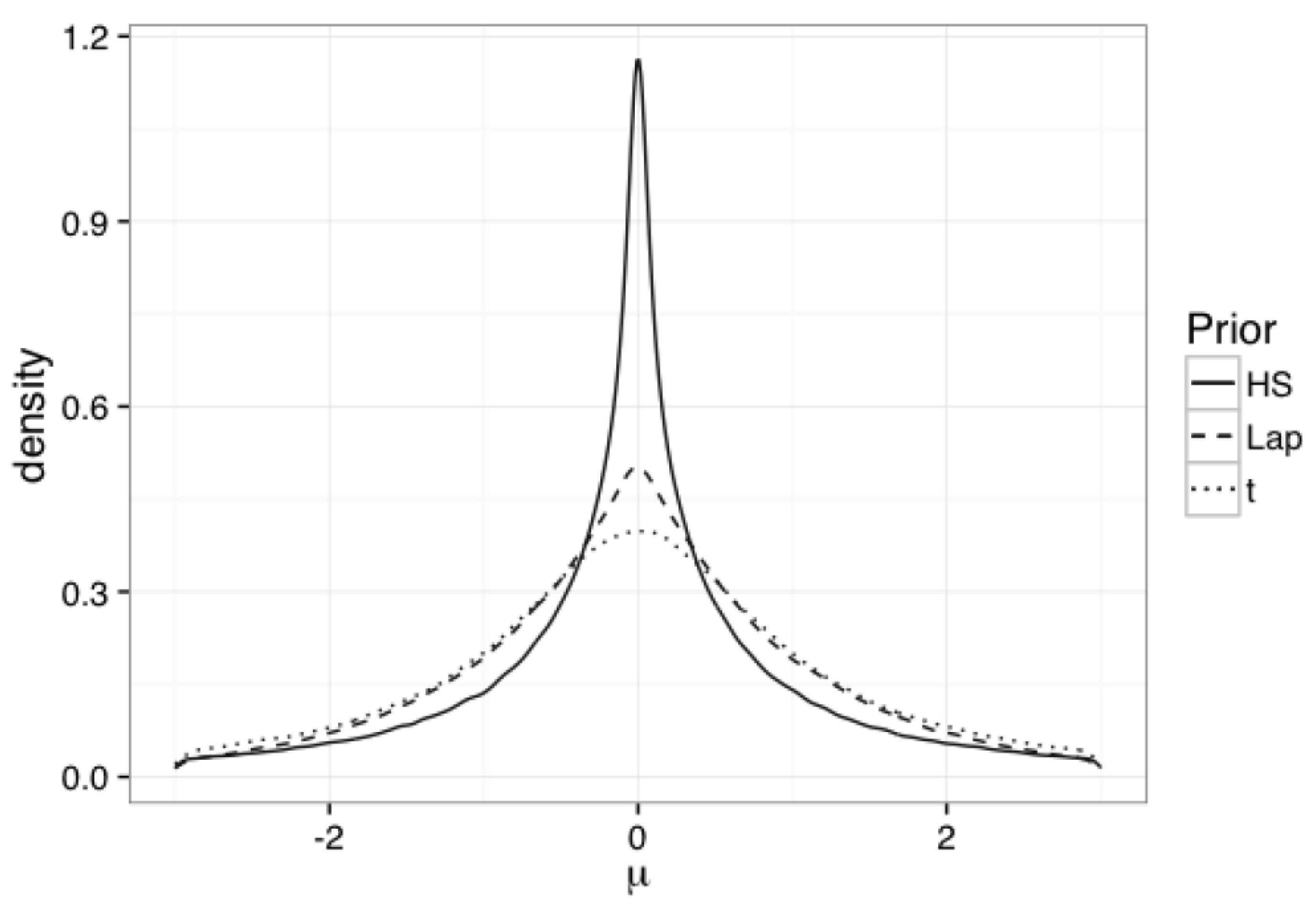

We finish the model specification by assigning prior distributions in Bayesian framework. We set prior for

as

. The prior for

is defined as

. This prior on

, along with two restrictions we set in

Section 2 (

and

for

), satisfy the identification assumptions in Stock and Watson [

16]. The prior for

is defined as

, where

is a scalar and pre-specified to be

so that the expectation of

is

. The prior for

is the standard normal truncated at

, that is: for

where

and

are PDF and CDF for standard normal distribution. Then,

. The priors for the diagonal elements of

are defined as

for

, where

and

are scalars and pre-specified to be 2 and

, accordingly. Then,

. The prior for

is

. The prior for

is set to be

for

(

is set to be 1). As discussed in

Section 2.2, these prior specifications of

and

imply a horseshoe shrinkage on the coefficients

’s. The prior for

is

, where

and

are scalars and pre-specified to be 4 and

, accordingly, to provide a reasonable mean and variance of

.

All priors are assumed to be independent. Based on the derived complete conditional posterior distributions for each parameter and latent variable, we obtain posterior samples using Metropolis–Hastings within Gibbs sampling since some conditional posterior distributions do not have closed forms. In estimation, we use the means of posterior samples as estimates for parameters and latent factors. Complete conditional posterior distributions for all model parameters and latent factors are provided in

Appendix A.

3.2. Nowcasting Formulas

In this section, nowcasting formulas are provided. Suppose we are at

, the

qth (

) releasing date in month

T. As discussed in

Section 3.1, the available information are

and

, here

T can be the first (

), second (

), or third (

) month of the quarter

. Our goal is to nowcast GDP

. Let

,

,

(

),

,

,

, and

(

) be the

gth posterior draws for parameters and latent factors after the burn-in period, where

. We nowcast

using the following formulas.

When

, the nowcast of

using BAY is given by:

When

, the nowcast of

using BAY is given by:

When

, the nowcast of

using BAY is given by:

Note that for some releasing dates, if , meaning that no monthly series are available at releasing date , then posterior samples cannot be generated. As a solution, we use to replace in nowcasting equations. All of the parameter and factor estimations are updated in every single release within a month. Then, is re-produced for each release date.