1. Introduction

As an important branch of computational intelligence, swarm intelligence (SI) [1] provides a competitive approach to large-scale, nonlinear, and complex problems, and has become an important research direction in artificial intelligence. In an SI model, simple individuals together constitute an organic whole by simulating the behavior of natural biological groups. Although each individual is very simple, the group shows complex emergent behavior. In particular, SI does not require prior knowledge of the problem and is inherently parallel, so it has significant advantages on problems that are difficult to solve with traditional optimization algorithms. As research has deepened, more and more swarm intelligence algorithms have been proposed, such as ant colony optimization (ACO) [2], particle swarm optimization (PSO) [3], the artificial bee colony algorithm (ABC) [4], the firefly algorithm (FA) [5], cuckoo search (CS) [6], the krill herd algorithm [7], monarch butterfly optimization (MBO) [8], and the moth search algorithm [9].

ACO, one of the typical SI algorithms, was first proposed by Marco Dorigo [2] based on the observation of the group behavior of ants in nature. While searching for food, ants release pheromones on the paths they pass through. These pheromones can be detected by other ants and affect their subsequent path choices. Generally, the shorter the path is, the more intense its pheromone will be, which means the shortest path will be chosen with the highest probability. The pheromone on other paths evaporates with time. Therefore, given enough time, the optimal path will carry the most concentrated pheromone. In this way, the ants eventually find the shortest path from their nest to the food source.
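To make the positive feedback concrete, the following minimal Python sketch computes next-city probabilities with the standard Ant System random-proportional rule, in which the probability of moving from city i to city j is proportional to τ_ij^α · (1/d_ij)^β. The function name `transition_probabilities`, the parameter defaults, and the toy matrices are illustrative assumptions, not values from this paper.

```python
def transition_probabilities(current, unvisited, tau, dist, alpha=1.0, beta=2.0):
    """Return {city: probability} for the next move from `current`.

    tau[i][j]  : pheromone on edge (i, j)
    dist[i][j] : distance between cities i and j (heuristic is 1 / distance)
    alpha, beta: illustrative weights for pheromone and heuristic information
    """
    weights = {
        j: (tau[current][j] ** alpha) * ((1.0 / dist[current][j]) ** beta)
        for j in unvisited
    }
    total = sum(weights.values())
    return {j: w / total for j, w in weights.items()}


# Toy instance with three cities (hypothetical data).
tau = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
dist = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.5], [2.0, 1.5, 0.0]]

# With equal pheromone, the closer city 1 is preferred over city 2.
probs_equal = transition_probabilities(0, [1, 2], tau, dist)

# Extra pheromone on edge (0, 2) can offset its longer distance.
tau[0][2] = 4.0
probs_boosted = transition_probabilities(0, [1, 2], tau, dist)
```

With equal pheromone the closer city dominates; once edge (0, 2) carries four times the pheromone, the two options become equally likely, which is the mechanism by which frequently reinforced paths attract later ants.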

ACO offers reasonable robustness, distributed parallel computation, and easy combination with other algorithms. It has been successfully applied in many fields, including the traveling salesman problem (TSP) [10,11], the satellite control resource scheduling problem [12], the knapsack problem [13,14], the vehicle routing problem [15,16], and continuous function optimization [17,18,19]. However, conventional ACO is still far from perfect due to issues such as premature convergence and long search time [20].

Many scholars have made substantial contributions to improving ACO, mainly from two perspectives: model modification and algorithm combination. For example, in the line of model improvement, the ant colony system (ACS) [21] employs a pseudo-random proportional rule, which leads to faster convergence. In ACS, only the pheromone of the optimal path is increased after each iteration. To prevent premature convergence caused by excessive pheromone concentration on some paths, the max-min ant system (MMAS) modifies AS with three main pheromone strategies [22]: limitation, maximum initialization, and updating rules. To avoid blind search in the early stage, an improved ACO algorithm that allocates the initial pheromone unequally is proposed in [23]. Its path selection is based on the pseudo-random state transition rule, with the probability determined by the number of iterations and the optimal solution. By introducing a penalty function into pheromone updating, a novel ACO algorithm is presented in [24] to improve solution accuracy.

Several approaches have also been proposed for the other primary kind of modification to the original ACO, algorithm combination. A multi-type ant system (MTAS) [25] combines ACS and MMAS, inheriting advantages from both algorithms. Combining particle swarm optimization (PSO) with ACO, a new ant colony algorithm named PS-ACO was proposed in [26]. PS-ACO employs the pheromone updating rules of ACO and the search mechanisms of PSO simultaneously to maintain the trade-off between exploitation and exploration. A multi-objective evolutionary algorithm based on decomposition is combined with ACO in MOEA/D-ACO [27], which decomposes a multi-objective optimization problem into a series of single-objective optimization problems. Executing ACO in combination with a genetic algorithm (GA), a new hybrid algorithm is proposed in [28]. By embedding GA into ACO, this method improves the convergence speed of ACO and the search ability of GA.

Besides the above primary improvement strategies of model modification and algorithm combination, approaches based on machine learning have also been proposed in recent decades [29]. On the one hand, swarm intelligence can be used to solve the optimization problems in deep learning. In deep neural networks such as the convolutional neural network (CNN), the optimization of hyperparameters is an NP-hard problem, and SI methods are well suited to this kind of problem. PSO, CS, and FA were employed to select CNN dropout parameters in [30]. A hybridized algorithm [31] combining the original MBO with ABC and FA was proposed to optimize CNN hyperparameters. On the other hand, ideas from machine learning can be used to improve the performance of SI. For example, information feedback models are used to enhance the ability of algorithms [32,33,34]. In addition, opposition-based learning (OBL) [35], which was first proposed by Tizhoosh, is a well-known technique. Its main idea is to calculate all the opposite solutions after the current iteration, and then to select the best solutions among the generated solutions and their opposite solutions for the next round of iteration. OBL has been widely adopted in SI, including ABC [36], differential evolution (DE) [37,38,39], and PSO [40,41], with reasonable performance.
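For continuous problems, the opposite of a point x in the box [a, b] is commonly defined component-wise as a + b − x. The minimal Python sketch below illustrates this definition and the selection step described above for a minimization problem. The function names `opposite_point` and `obl_select`, the sphere objective, and the bounds are illustrative assumptions.

```python
def opposite_point(x, lower, upper):
    """Component-wise opposite of x within the box [lower, upper]."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]


def obl_select(population, lower, upper, fitness):
    """Keep the best len(population) candidates among the current
    solutions and their opposites (smaller fitness is better)."""
    opposites = [opposite_point(x, lower, upper) for x in population]
    candidates = population + opposites
    return sorted(candidates, key=fitness)[: len(population)]


def sphere(x):
    """Toy objective: sum of squares."""
    return sum(v * v for v in x)


lower, upper = [0.0, 0.0], [10.0, 10.0]
population = [[9.0, 8.0], [2.0, 1.0]]

opp = opposite_point(population[0], lower, upper)        # [1.0, 2.0]
next_population = obl_select(population, lower, upper, sphere)
```

Here the poor solution [9, 8] is replaced by its opposite [1, 2], which is how OBL can move part of the population toward a better region without additional random sampling.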

Since opposite solutions to continuous problems are convenient to construct, OBL has been applied to continuous problems more commonly than to discrete problems, as in the works above. As an example for discrete problems, OBL is combined with ACS and applied to the TSP in [42] to obtain better solutions. The solution construction phase and the pheromone updating phase of ACS are the primary foci of this hybrid approach. Besides the TSP, the graph coloring problem is also treated as a discrete optimization problem in [43], where an improved DE algorithm based on OBL is proposed that introduces two different methods of opposition. In [44], a pretreatment step was added in the initial stage when the two-membered evolution strategy was used to solve the total rotation minimization problem. The opposite solutions generated by OBL are compared with the randomly generated initial solutions, and the better solution is selected for the subsequent optimization process.

Inspired by the idea of OBL, this paper proposes a series of methods that focus on opposite solution construction and the pheromone updating rule. Aiming to solve the TSP, the proposed methods introduce OBL into ACO so that ACO is no longer confined to locally optimal solutions, avoids premature convergence, and achieves better performance.

The rest of this paper is organized as follows. In Section 2, the background knowledge of ACO and OBL is briefly reviewed. In Section 3, the opposition-based extensions to ACO are presented. In Section 4, the effectiveness of the improvement is verified through experiments. Section 5 presents the conclusions of this paper.

3. Opposition-Based ACO

This section presents the method of constructing the opposite path based on OBL. To make use of the opposite path information, three frameworks of opposition-based ACO algorithms, ACO-Index, ACO-MaxIt, and ACO-Rand, are also proposed. For consistency, each construction method is presented together with the specific algorithm that uses it. Details are given in the following subsections.

3.1. ACO-Index

According to the definition given in Equation (7), the same route may lead to different opposite paths. Taking a TSP of six cities as an example, path

and path

are the same path; however, their opposite paths,

and

, are different.

In addition, unlike in DE, the initialization procedure of ACO is not random but is closer to a greedy algorithm, which tends to select a closer city according to the state transition rule. Therefore, opposite paths are generally longer than the original ones and cannot be used directly for pheromone updating. To overcome these shortcomings, a novel ACO algorithm, namely ACO-Index, is proposed based on a modified strategy of opposite path construction.

Opposite path construction consists of two steps: path sorting and determination of the opposite path. Suppose the number of cities n is even. During path sorting, put the path P back into a cycle and appoint a particular city A as the starting city with index 1. The rest of the cities are then given indices according to their positions in this cycle. In this way, we obtain the indices .

During the second step, indices of the opposite path

should be found through Equation (7) and the opposite path

can be found based on the indices

appointed previously.

Moreover, when the number of cities

n is odd, an auxiliary index should be added to the end of the indices

, and we could get

. According to Equation (7), we could get the opposite indices

, and its last index is the auxiliary index itself. Remove the last index, and we can get

. Then, decide the opposite path

according to the opposite indices

.

In this way, different paths that share the same cycle route have the same opposite path. Pseudocode for opposite path construction is given in Algorithm 1.

| Algorithm 1 Constructing the opposite path |

- Input: original path P
- 1: Put the path back into a circle
- 2: Appoint a specific city A with index 1
- 3: Appoint other cities in this circle with indices 2, 3, ⋯, and get the indices
- 4: if n is even
- 5: Calculate the opposite indices according to Equation (7)
- 6: else
- 7: Add an auxiliary index at the end of the indices and get the extended indices
- 8: Calculate the opposite indices according to Equation (7)
- 9: Delete the final index from the opposite indices
- 10: endif
- 11: Calculate the opposite path based on the opposite indices
- Output: opposite path
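A minimal Python sketch of Algorithm 1 follows. Equation (7) is not reproduced in this section, so the sketch assumes it is the interleaving rule that maps the indices [1, 2, ..., n] to [1, 1 + n/2, 2, 2 + n/2, ..., n/2, n] for even n. Under this assumption the auxiliary index ends up last for odd n, which agrees with the description above. The function names `interleave_opposite` and `opposite_path` are illustrative.

```python
def interleave_opposite(indices):
    """ASSUMED form of Equation (7) for an even-length index list:
    [1, 2, ..., n] -> [1, 1 + n/2, 2, 2 + n/2, ..., n/2, n]."""
    half = len(indices) // 2
    result = []
    for k in range(half):
        result.append(indices[k])
        result.append(indices[k + half])
    return result


def opposite_path(path, start_city):
    """Sketch of Algorithm 1: opposite path of a closed tour."""
    n = len(path)
    # Steps 1-3: treat the path as a cycle and rotate it so that
    # start_city has index 1; position i (0-based) holds index i + 1.
    s = path.index(start_city)
    cycle = path[s:] + path[:s]
    indices = list(range(1, n + 1))
    # Steps 4-10: for odd n, append an auxiliary index first.
    if n % 2 == 1:
        indices.append(n + 1)
    opp_indices = interleave_opposite(indices)
    if n % 2 == 1:
        opp_indices = opp_indices[:-1]  # the auxiliary index lands last
    # Step 11: map the opposite indices back to cities.
    return [cycle[i - 1] for i in opp_indices]


# Two rotations of the same six-city cycle give the same opposite path.
opp_a = opposite_path([1, 2, 3, 4, 5, 6], start_city=1)
opp_b = opposite_path([3, 4, 5, 6, 1, 2], start_city=1)
# Odd number of cities.
opp_odd = opposite_path([1, 2, 3, 4, 5], start_city=1)
```

Fixing the starting city before indexing is what makes the result independent of where the stored path happens to begin.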

Although some paths may be longer than the optimal path, they still contain useful information, which motivates using them to modify the pheromone. For ACO algorithms, if the number of ants is m, the number of paths should also be m for pheromone updating. In the proposed ACO-Index, the top

shortest original paths and the top

shortest opposite paths will be chosen to form the m paths. Algorithm 2 presents the pseudocode for pheromone updating.

| Algorithm 2 Updating pheromone |

- Input: original paths and opposite paths
- 1: Sort original paths and opposite paths by length
- 2: Select the top shortest paths and the top shortest opposite paths
- 3: Construct new paths
- 4: Update pheromone according to Equation (2)
- Output: Pheromone trail in each path
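The selection and update steps of Algorithm 2 can be sketched in Python as follows. Two points are assumptions rather than details from this section: the numbers of original and opposite paths to keep are passed in as the hypothetical parameters `k_orig` and `k_opp`, and Equation (2) is assumed to be the standard Ant System update (evaporation by a factor 1 − ρ, then a deposit of Q / L on every edge of a tour of length L).

```python
import math


def tour_length(path, dist):
    """Length of the closed tour that visits `path` in order."""
    return sum(dist[path[i]][path[(i + 1) % len(path)]] for i in range(len(path)))


def select_paths(originals, opposites, k_orig, k_opp, dist):
    """Steps 1-3: keep the k_orig shortest original paths and the
    k_opp shortest opposite paths (k_orig, k_opp are hypothetical)."""
    def key(p):
        return tour_length(p, dist)
    return sorted(originals, key=key)[:k_orig] + sorted(opposites, key=key)[:k_opp]


def update_pheromone(tau, paths, dist, rho=0.5, Q=1.0):
    """Step 4, ASSUMING the standard Ant System rule for a symmetric TSP."""
    n = len(tau)
    for i in range(n):
        for j in range(n):
            tau[i][j] *= 1.0 - rho            # evaporation
    for p in paths:
        delta = Q / tour_length(p, dist)      # shorter tours deposit more
        for a, b in zip(p, p[1:] + p[:1]):
            tau[a][b] += delta
            tau[b][a] += delta
    return tau


# Four cities on a unit square (hypothetical data).
s = math.sqrt(2.0)
dist = [[0, 1, s, 1], [1, 0, 1, s], [s, 1, 0, 1], [1, s, 1, 0]]
originals = [[0, 1, 2, 3], [0, 2, 1, 3]]
opposites = [[0, 2, 1, 3]]

chosen = select_paths(originals, opposites, k_orig=1, k_opp=1, dist=dist)
tau = update_pheromone([[1.0] * 4 for _ in range(4)], chosen, dist)
```

Because the deposit is inversely proportional to tour length, a long opposite path still contributes pheromone, but less than a short original path.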

Algorithm 3 shows the pseudocode for the primary steps of ACO-Index for total iterations

.

| Algorithm 3 ACO-Index algorithm |

- Input: parameters: m, n, , , , Q, , ,
- 1: Initialize pheromone and heuristic information
- 2: for iteration index do
- 3: for to m do
- 4: Construct paths according to Equation (1)
- 5: Construct opposite paths through Algorithm 1
- 6: endfor
- 7: Update pheromone according to Algorithm 2
- 8: endfor
- Output: the optimal path

3.2. ACO-MaxIt

Although ACO-Index modifies ACO with a better path construction strategy, it inherits a similar opposite path generation method from [43]. In this section, a novel opposite path generation method, together with a novel pheromone updating rule, is proposed as an improved ACO algorithm, named ACO-MaxIt, which is described in detail as follows.

The mirror point

M is defined by

where

denotes the ceiling operator.

Considering the case when

n is odd, the opposite city

for the current city

C could be defined as follows:

Considering the case when

n is even, the opposite city

for the current city

C could be defined as follows

The pseudocode for opposite path construction is shown in Algorithm 4.

| Algorithm 4 Constructing the opposite path based on the mirror point |

- Input: original path
- 1: Decide mirror point M, according to Equation (8)
- 2: for to n do
- 3: Calculate through Equation (9) or Equation (10) according to the parity of n
- 4: endfor
- Output: opposite path

The pheromone update process consists of two stages. For the first stage, when

and

, opposite paths will be decided through Algorithm 4. Meanwhile, the pheromone will be updated according to Algorithm 2. In the later stage, when

, no more opposite paths are calculated, and pheromones are updated according to Equation (2).

The pseudocode of ACO-MaxIt is presented in Algorithm 5.

| Algorithm 5 ACO-MaxIt algorithm |

- Input: parameters: m, n, , , , Q, g, , ,
- 1: Initialize pheromone and heuristic information
- 2: for iteration index do
- 3: for to m do
- 4: Construct paths according to Equation (1)
- 5: endfor
- 6: if then
- 7: Construct opposite paths according to Algorithm 4
- 8: Update pheromone according to Algorithm 2
- 9: else
- 10: Update pheromone according to Equation (2)
- 11: endif
- 12: endfor
- Output: the optimal solution

3.3. ACO-Rand

In the pheromone updating stage of ACO-Index or ACO-MaxIt, when to calculate the opposite paths is decided empirically. Therefore, in this section, another pheromone updating strategy is presented. The resulting algorithm is named ACO-Rand, since whether or not to construct the opposite paths is decided by two random variables.

The whole procedure of ACO-Rand is much like that of ACO-MaxIt; however, two random variables

and

R are introduced.

is chosen randomly but fixed once generated, and

R is randomly selected during each iteration. The pseudocode of ACO-Rand is given in Algorithm 6.

| Algorithm 6 ACO-Rand algorithm |

- Input: parameters: m, n, , , , Q, , , ,
- 1: Initialize pheromone and heuristic information
- 2: for iteration index do
- 3: for to m do
- 4: Construct paths according to Equation (1)
- 5: endfor
- 6: Generate a random variable R
- 7: if then
- 8: Construct opposite paths according to Algorithm 4
- 9: Update pheromone according to Algorithm 2
- 10: else
- 11: Update pheromone according to Equation (2)
- 12: endif
- 13: endfor
- Output: the optimal solution
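ACO-MaxIt and ACO-Rand differ mainly in when opposite paths enter the pheromone update. The Python sketch below isolates that decision as a per-iteration gate. It is only an illustration under stated assumptions: the exact conditions in Algorithms 5 and 6 are not recoverable from the text here, so the early-stage threshold `n_early` and the comparison `r < r0` (including its direction) are hypothetical choices, as are all function names.

```python
import random


def maxit_gate(iteration, n_early):
    """ACO-MaxIt-style gate: use opposite paths only in the early stage.
    `n_early` is a HYPOTHETICAL iteration threshold."""
    return iteration <= n_early


def make_rand_gate(rng):
    """ACO-Rand-style gate driven by two random variables."""
    r0 = rng.random()              # drawn once, then kept fixed

    def gate(iteration):
        r = rng.random()           # drawn anew in every iteration
        return r < r0              # ASSUMPTION: direction of the comparison

    return gate, r0


def schedule(n_iterations, gate):
    """True where an iteration would use opposite paths (Algorithm 2),
    False where it would fall back to the basic update."""
    return [gate(it) for it in range(1, n_iterations + 1)]


maxit_flags = schedule(5, lambda it: maxit_gate(it, n_early=2))

rng = random.Random(0)             # seeded for reproducibility
rand_gate, r0 = make_rand_gate(rng)
rand_flags = schedule(100, rand_gate)
```

Under these assumptions, the MaxIt-style gate yields a single contiguous early phase, whereas the Rand-style gate spreads opposite-path iterations over the whole run with a frequency governed by the fixed variable.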

3.4. Time Complexity Analysis

The main steps of the three improved ant colony algorithms are initialization, solution construction, and pheromone updating. The time complexity of initialization is . The time complexity of constructing the solution is . The time complexity of pheromone updating is . In addition, the time complexity of constructing and sorting the opposite solutions is . Therefore, the overall complexity of each algorithm is . This is the same time complexity as that of the basic ant colony algorithm, so the improved algorithms do not significantly increase the running time.

4. Experiments and Results

AS and PS-ACO are employed as the base ACO algorithms to verify the feasibility of the three opposition-based ACO frameworks. The experiments were performed in the following hardware and software environment: the CPU is a Core i5 at 2.9 GHz, the RAM is 16 GB, and the operating system is Windows 10. The TSP examples are taken from TSPLIB (http://comopt.ifi.uni-heidelberg.de/software/TSPLIB95/tsp/).

4.1. Parameter Setting

In the following experiments, the parameters are set as , , , , , , , , for ACO-MaxIt, while for ACO-Rand. Each example is run 20 times independently. The minimum solution , maximum solution , average solution , standard deviation , and average runtime over the 20 runs are given in the tables, where the minimum solution , maximum solution , and average solution are reported as percentage deviations from the known optimal solution. The minimum value in each result is shown in bold in the tables.
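The percentage deviation can be computed as in the following Python sketch, which assumes the usual definition 100 · (L − L_opt) / L_opt for a tour length L and a known optimum L_opt. The function names and the sample tour lengths are hypothetical and are not results from this paper.

```python
def deviation_percent(length, optimum):
    """Percentage deviation of a tour length from the known optimum."""
    return 100.0 * (length - optimum) / optimum


def summarize(lengths, optimum):
    """Minimum, maximum, and average percentage deviation over several runs."""
    deviations = [deviation_percent(value, optimum) for value in lengths]
    return {
        "min": min(deviations),
        "max": max(deviations),
        "avg": sum(deviations) / len(deviations),
    }


# Hypothetical tour lengths from three runs on an instance with optimum 100.
summary = summarize([110.0, 100.0, 120.0], optimum=100.0)
```

Reporting deviations rather than raw lengths makes results comparable across instances whose optimal tour lengths differ by orders of magnitude.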

4.2. Experimental Results Comparison Based on AS

First, we apply the three opposition-based frameworks to AS, yielding AS-Index, AS-MaxIt, and AS-Rand, to verify the effectiveness of the improved algorithms. Twenty-six TSP examples are divided into three main categories according to the number of cities: small-scale, medium-scale, and large-scale.

Small-scale city example sets are selected from TSPLIB, including eil51, st70, pr76, kroA100, eil101, bier127, pr136, pr152, u159, and rat195. The results are shown in Table 1.

From Table 1, the proposed AS-Index, AS-MaxIt, and AS-Rand show superior performance over AS for the examples st70, kroA100, eil101, bier127, pr136, and u159. For the other examples, the proposed algorithms outperform AS in general, except for eil51. Meanwhile, stability as measured by standard deviation is not the primary concern when evaluating an algorithm. Among the three proposed algorithms, AS-MaxIt shows superior performance in most cases.

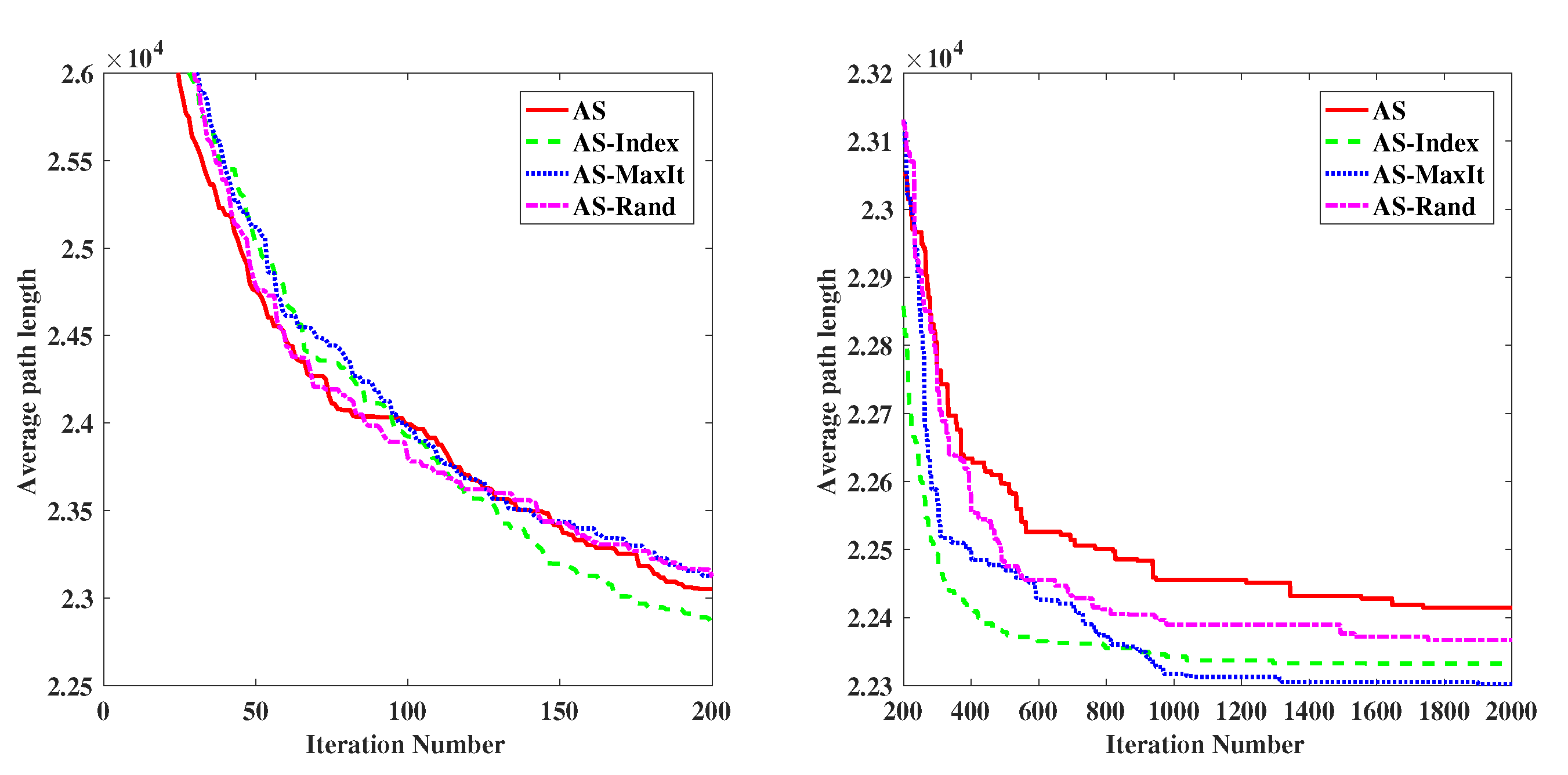

To show more details of the evolutionary process, curves for different stages are given in Figure 1 based on the case of kroA100.

According to Figure 1, AS converges faster than the three proposed methods in early iterations, while AS-Index, AS-MaxIt, and AS-Rand all surpass AS in average path length in later iterations. Meanwhile, AS-MaxIt performs best among all these algorithms, which is consistent with the results in Table 1.

In the early stage, the opposite path information introduced by OBL has a negative impact on the convergence speed of all three proposed algorithms; however, it provides extra information that improves accuracy in the later stage. This is because introducing the extra information of opposite paths helps to increase the diversity of the population, which balances the exploration and exploitation of the solution space.

Medium-scale city example sets are selected from TSPLIB, including kroA200, ts225, tsp225, pr226, pr299, lin318, fl417, pr439, pcb442, and d493. The results are shown in Table 2.

From Table 2, it can be seen that the proposed algorithms outperform AS in all cases except ts225. Among the three algorithms, AS-Index and AS-MaxIt perform similarly and are generally better than AS-Rand. These results show that, with the help of extra information from opposite paths, all three proposed methods improve the solution accuracy of the original AS.

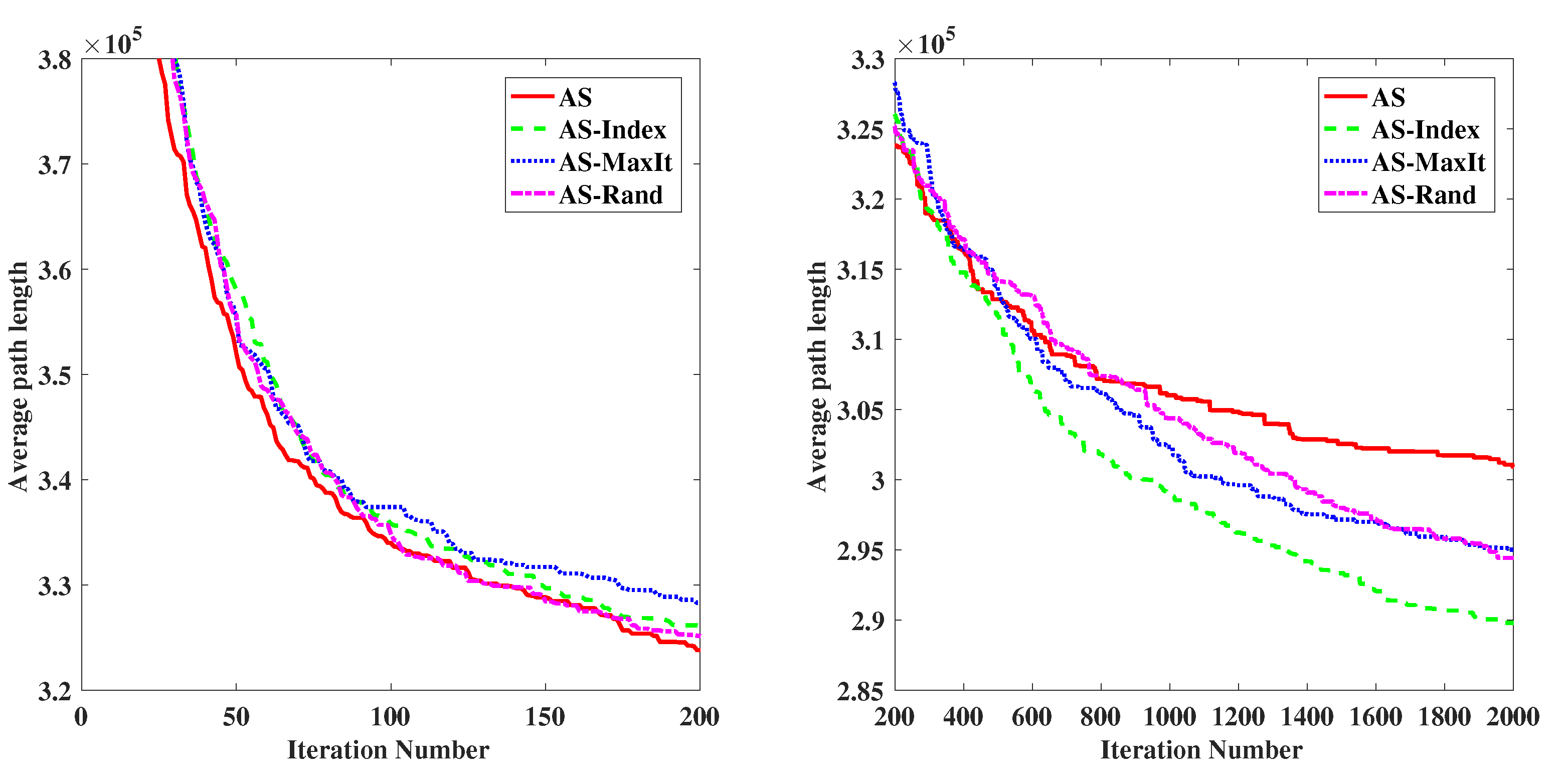

Taking fl417 as an example, detailed evolutionary curves for different iteration stages are given in Figure 2. According to Figure 2, AS again converges faster than the three proposed methods in early iterations, for example before 1000 iterations. In later iterations, however, the three proposed methods all surpass AS in average path length. This further validates the conclusions obtained from the analysis of Figure 1.

Large-scale city example sets are selected from TSPLIB, including att532, rat575, d657, u724, vm1084, and rl1304. The results are shown in Table 3.

Table 3 shows that AS-Index clearly outperforms all the other algorithms, which suggests that the advantages of AS-Index emerge as the scale of the example increases.

Taking vm1084 as an example, detailed evolutionary curves for different iteration stages are given in Figure 3. According to Figure 3, AS still converges faster than the three proposed methods in early iterations, but AS-Index outperforms all the others in the end.

Based on all the tables and figures, in most scenarios at least one of AS-Index, AS-MaxIt, and AS-Rand outperforms AS in average path length. For small-scale examples, AS-MaxIt shows better performance, while, for medium-scale cases, AS-Index and AS-MaxIt perform similarly and better than the others. For large-scale city sets, AS-Index is the best algorithm, while AS-Rand ranks in the middle in most of the figures, which indicates its stability to some extent. Therefore, it can be concluded that introducing OBL into AS provides more information, namely better exploration capability, which explains the superiority of the proposed methods over the original AS. By comparing the running times in Table 1, Table 2, and Table 3, we can also see that the running time of the three improved algorithms is not significantly increased compared with AS. This also agrees with our previous discussion of time complexity.

4.3. Experimental Results Comparison Based on PS-ACO

To further verify the effectiveness of the proposed frameworks, we also apply them to PS-ACO, yielding PS-ACO-Index, PS-ACO-MaxIt, and PS-ACO-Rand. The number of ants is 50, and the other parameters are the same as in [26]. Twelve TSP examples are used: eil51, st70, kroA100, pr136, u159, rat195, tsp225, pr299, lin318, fl417, att532, and d657. The results are given in Table 4.

From Table 4, the proposed PS-ACO-Index, PS-ACO-MaxIt, and PS-ACO-Rand show superior performance over PS-ACO for the examples eil51, st70, rat195, tsp225, and pr299. For the other examples, the proposed algorithms outperform PS-ACO in general, except for lin318 and fl417. Among the three proposed algorithms, PS-ACO-Rand shows superior performance in most cases. By comparing the running times, we can also see that the running time of the three improved algorithms is not significantly increased compared with PS-ACO.

5. Conclusions

Swarm optimization algorithms based on OBL show advantages on continuous optimization problems. However, only a few approaches have been proposed for discrete optimization problems, and the difficulty of constructing opposite solutions is considered one of the main reasons. To address this problem, two different strategies for constructing opposite paths, direction and indirection, are presented in this paper. The indirection strategy does not use the order of cities in the current solution directly; instead, it studies the positions, denoted as indices, of the cities rearranged in a circle, and then calculates the opposite indices. The direction strategy applies the opposite operation directly to the cities in each path.

To use the information of the opposite path, three different frameworks of opposition-based ACO, called ACO-Index, ACO-MaxIt, and ACO-Rand, are also proposed. In all three frameworks, every ant contributes a pheromone increment. Among the three proposed algorithms, ACO-Index employs the indirection strategy to construct the opposite path and introduces it into pheromone updating. ACO-MaxIt employs the direction strategy to obtain the opposite path but only adopts it in the early updating period. ACO-Rand constructs the opposite path in the same way as ACO-MaxIt but employs it throughout the pheromone updating stage. To verify the effectiveness of the improvement strategy, AS and PS-ACO are each used in the three frameworks. Experiments demonstrate that all three methods, AS-Index, AS-MaxIt, and AS-Rand, outperform the original AS on small-scale and medium-scale city sets, while AS-Index performs best on large-scale city sets. The three improved PS-ACO algorithms also show good performance.

The opposite path construction described in this paper is only suitable for the symmetric TSP, mainly because the path (solution) of the problem is treated as an arrangement without considering direction. For the asymmetric TSP, the method needs to be modified. For a more general combinatorial optimization problem, it is necessary to restudy how to construct the opposite solution according to the characteristics of the problem. Therefore, our current method of constructing opposite solutions is not universal, which is one of the limitations of this study. In addition, the improved algorithms require all ants to participate in pheromone updating in order to use the information of the opposite paths. Many current algorithms, however, use only the best ant to update the pheromone, so the method in this paper has some limitations when it is extended to more ant colony algorithms. Nevertheless, we find that applying opposition-based learning to combinatorial optimization problems is effective. Therefore, we will carry out our future research from two aspects. On the one hand, we plan to continue studying more general methods of constructing opposite solutions for combinatorial optimization problems, so as to improve generality, and to apply them to practical problems such as path optimization to further expand the scope of application. On the other hand, since applying OBL to more widely used algorithms is also an interesting and promising topic, we plan to study more effective use of the opposite solution and extend it to more widely used ACO variants, such as MMAS and ACS, and even to other optimization algorithms such as PSO and ABC, to solve more combinatorial optimization problems more effectively.