On a Bi-Parametric Family of Fourth Order Composite Newton–Jarratt Methods for Nonlinear Systems

Abstract

1. Introduction

2. Development of Method

- (i)

- ,

- (ii)

- , ∀ permutation of .

- (a)

- ,

- (b)

- .

- (i)

- The set of values yields the fourth-order generalized Jarratt’s method (see [11]), which from now on is denoted by JM.

- (ii)

- When and , we obtain the following method, which is denoted as MI.

- (iii)

- For and , we obtain the method that, from now on, is denoted by MII.

- (iv)

- For and , we obtain the following method, which is denoted as MIII.

- (v)

- For and , the following method is obtained, which is denoted as MIV.
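The displayed formulas of the family and of its members JM and MI–MIV did not survive extraction, so they are not reproduced here. For orientation only, the following is a minimal Python sketch (NumPy assumed) of Newton's method (NM) and of the classical two-step fourth-order Jarratt scheme in the form commonly used for systems, y = x − (2/3)F′(x)⁻¹F(x), x_new = x − (1/2)[3F′(y) − F′(x)]⁻¹[3F′(y) + F′(x)]F′(x)⁻¹F(x), which extends Jarratt's scalar method [12]. It is not the paper's bi-parametric family, and the helper names (`newton_step`, `jarratt_step`, `iterate`) and the test system F are hypothetical.

```python
import numpy as np


def newton_step(F, J, x):
    """One Newton step (NM): x - J(x)^{-1} F(x)."""
    return x - np.linalg.solve(J(x), F(x))


def jarratt_step(F, J, x):
    """One step of the classical multidimensional Jarratt scheme:
    y     = x - (2/3) J(x)^{-1} F(x)
    x_new = x - (1/2) [3 J(y) - J(x)]^{-1} [3 J(y) + J(x)] J(x)^{-1} F(x)
    """
    Jx = J(x)
    u = np.linalg.solve(Jx, F(x))        # Newton correction J(x)^{-1} F(x)
    y = x - (2.0 / 3.0) * u
    Jy = J(y)
    rhs = (3.0 * Jy + Jx) @ u
    return x - 0.5 * np.linalg.solve(3.0 * Jy - Jx, rhs)


def iterate(step, F, J, x0, tol=1e-12, max_iter=50):
    """Apply `step` until the max-norm of the update drops below tol.
    Returns the final iterate and the number of steps taken."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        x_new = step(F, J, x)
        if np.linalg.norm(x_new - x, np.inf) < tol:
            return x_new, k
        x = x_new
    return x, max_iter


# Hypothetical test system (not from the paper): the circle x1^2 + x2^2 = 4
# intersected with the line x1 = x2; one root is (sqrt(2), sqrt(2)).
def F(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]])


def J(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


x_nm, k_nm = iterate(newton_step, F, J, [1.0, 0.5])
x_jm, k_jm = iterate(jarratt_step, F, J, [1.0, 0.5])
print("Newton :", x_nm, k_nm)
print("Jarratt:", x_jm, k_jm)
```

Each Jarratt step costs one extra Jacobian evaluation and one extra linear solve compared with a Newton step; on this toy system it reaches the stopping tolerance in fewer iterations, as expected for fourth versus second order.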

3. Local Convergence in Banach Space

- (a1)

- Let be continuously Fréchet-differentiable. Suppose there exists such that and .

- (a2)

- There exists a function continuous and nondecreasing, satisfying such that for each . Set , where is given in (24).

- (a3)

- There exist functions , continuous and nondecreasing, satisfying such that for each and

- (a4)

- (a5)

- There exists such that Set .

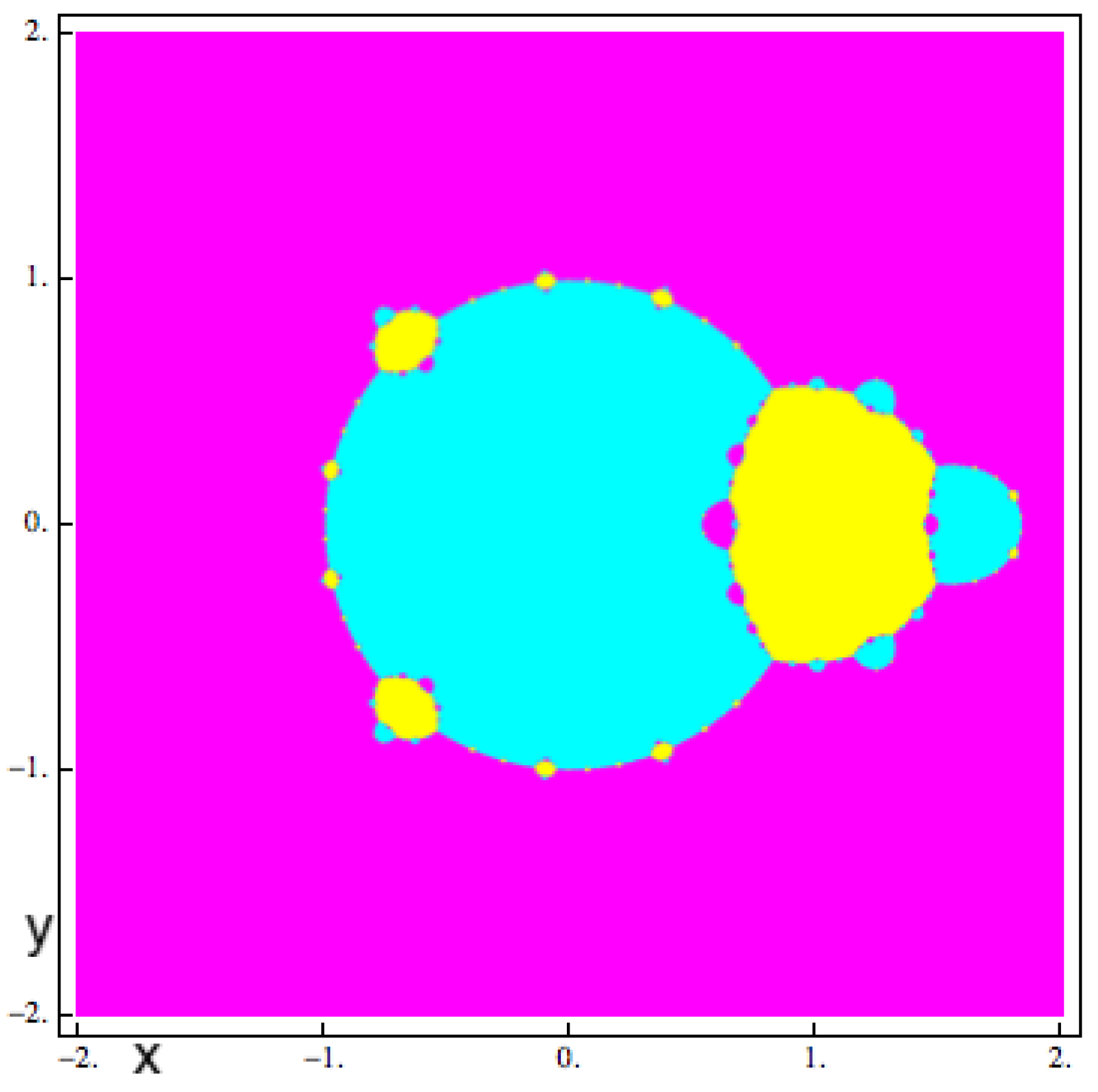

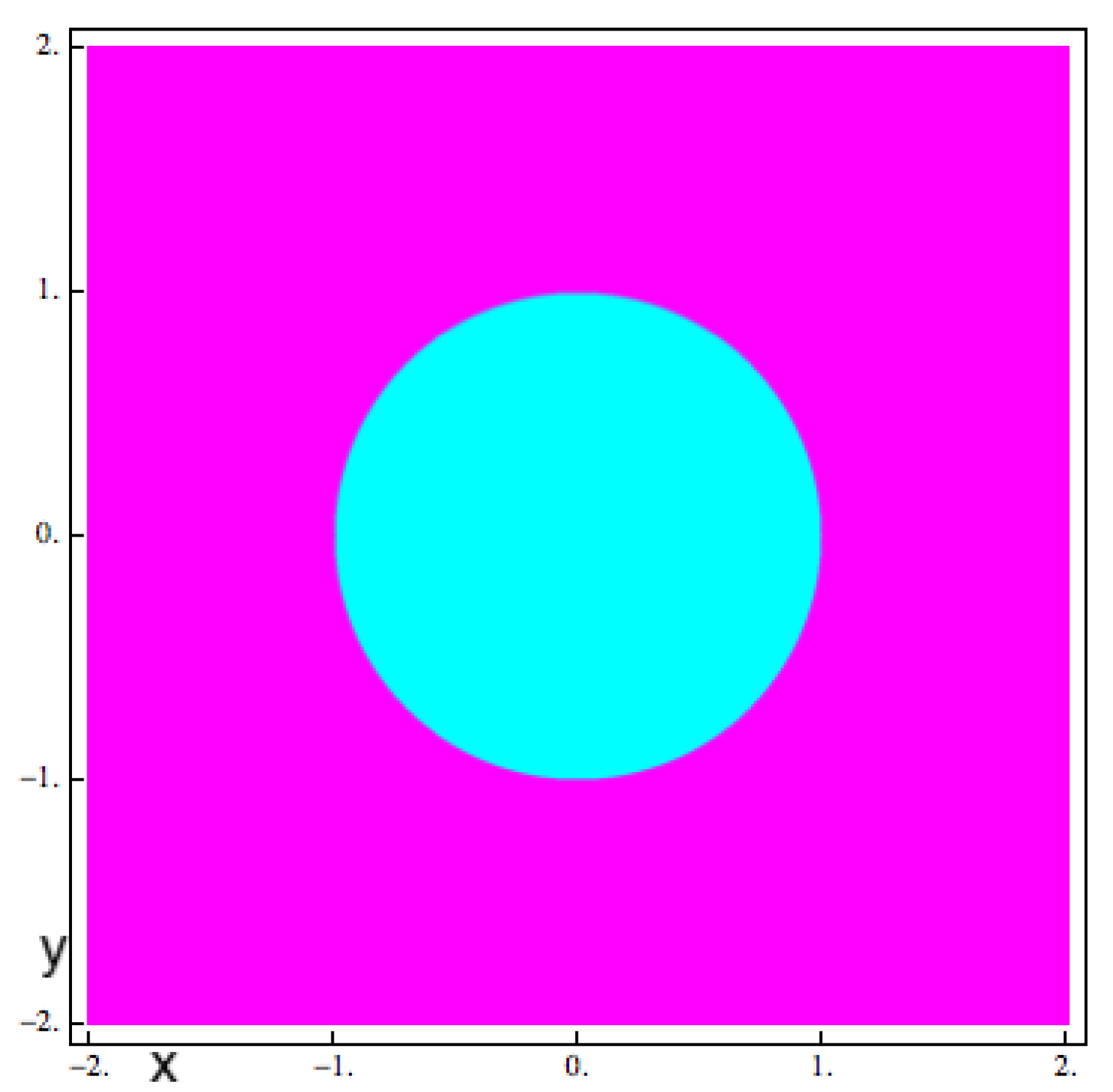
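The formulas in (a1)–(a5) were lost in extraction, so the paper's own radius functions cannot be restored here. Ball-convergence results of this kind typically define the radius of convergence as the smallest positive zero of g(t) − 1 for suitable functions g built from w0 and w. The sketch below illustrates only that computation, in the simplest case of Newton's method with the assumed Lipschitz-type choices w0(t) = L0·t and w(t) = L·t, where g(t) = L·t/(2(1 − L0·t)) and the radius has the known closed form 2/(2L0 + L). The constants and the function names `g_newton` and `convergence_radius` are hypothetical.

```python
def g_newton(t, L0, L):
    """Illustrative g-function for Newton's method under the assumed
    choices w0(t) = L0*t and w(t) = L*t:  g(t) = L t / (2 (1 - L0 t)),
    defined for 0 <= t < 1/L0."""
    return L * t / (2.0 * (1.0 - L0 * t))


def convergence_radius(g, upper, iters=200):
    """Smallest zero of g(t) - 1 on (0, upper) by bisection.
    Assumes g is continuous and increasing with g(0) = 0 and
    g(t) -> inf as t -> upper; g is only evaluated at interior midpoints."""
    lo, hi = 0.0, upper
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid) < 1.0:
            lo = mid
        else:
            hi = mid
    return lo


L0, L = 1.0, 2.0  # hypothetical Lipschitz-type constants
r = convergence_radius(lambda t: g_newton(t, L0, L), 1.0 / L0)
print(r, 2.0 / (2.0 * L0 + L))  # bisection result vs closed form 2/(2 L0 + L)
```

Since L0 ≤ L in practice, this radius is never smaller than the radius 2/(3L) obtained from the Lipschitz constant L alone.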

4. Dynamical Study

4.1. Fixed Points and Stability

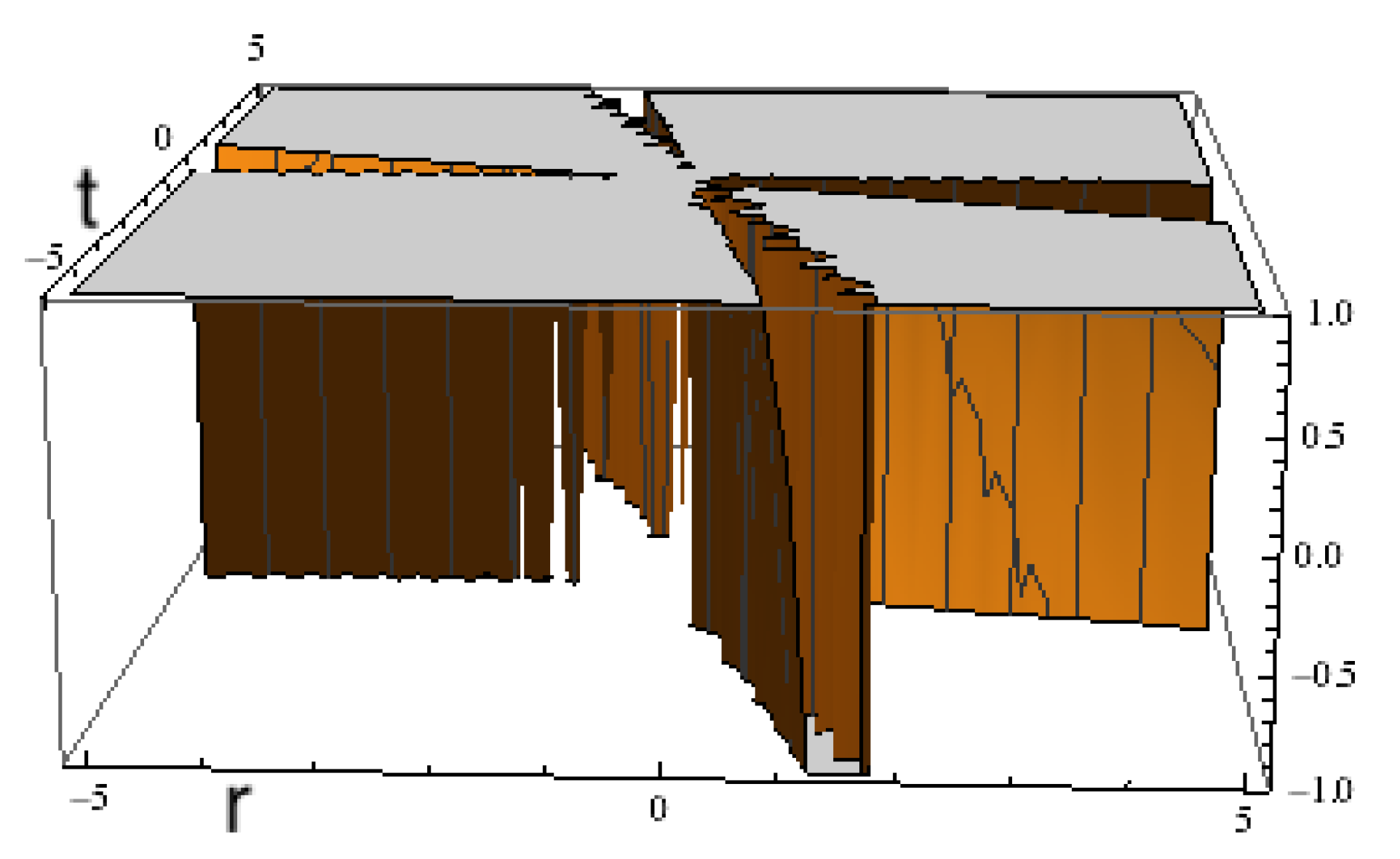

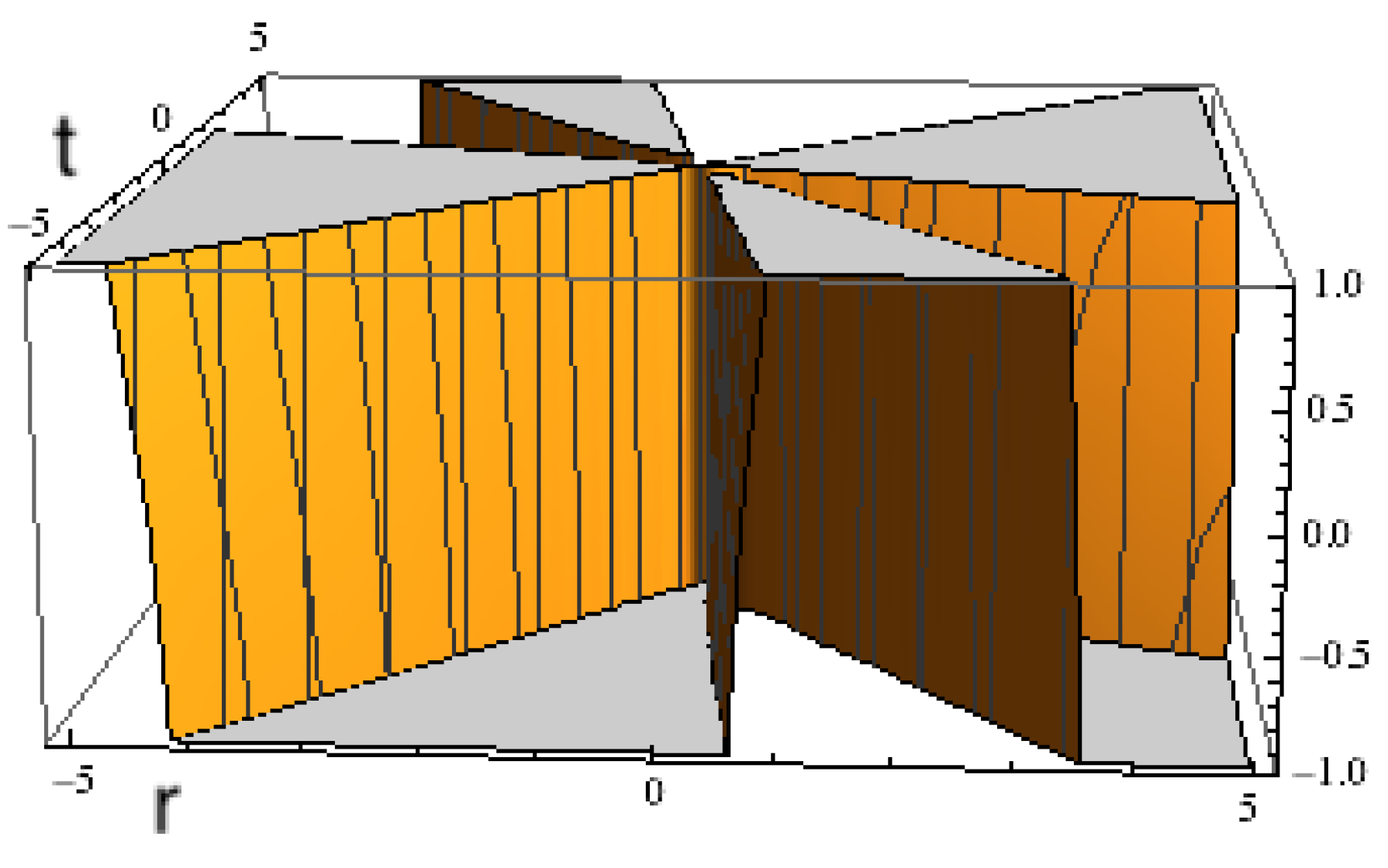

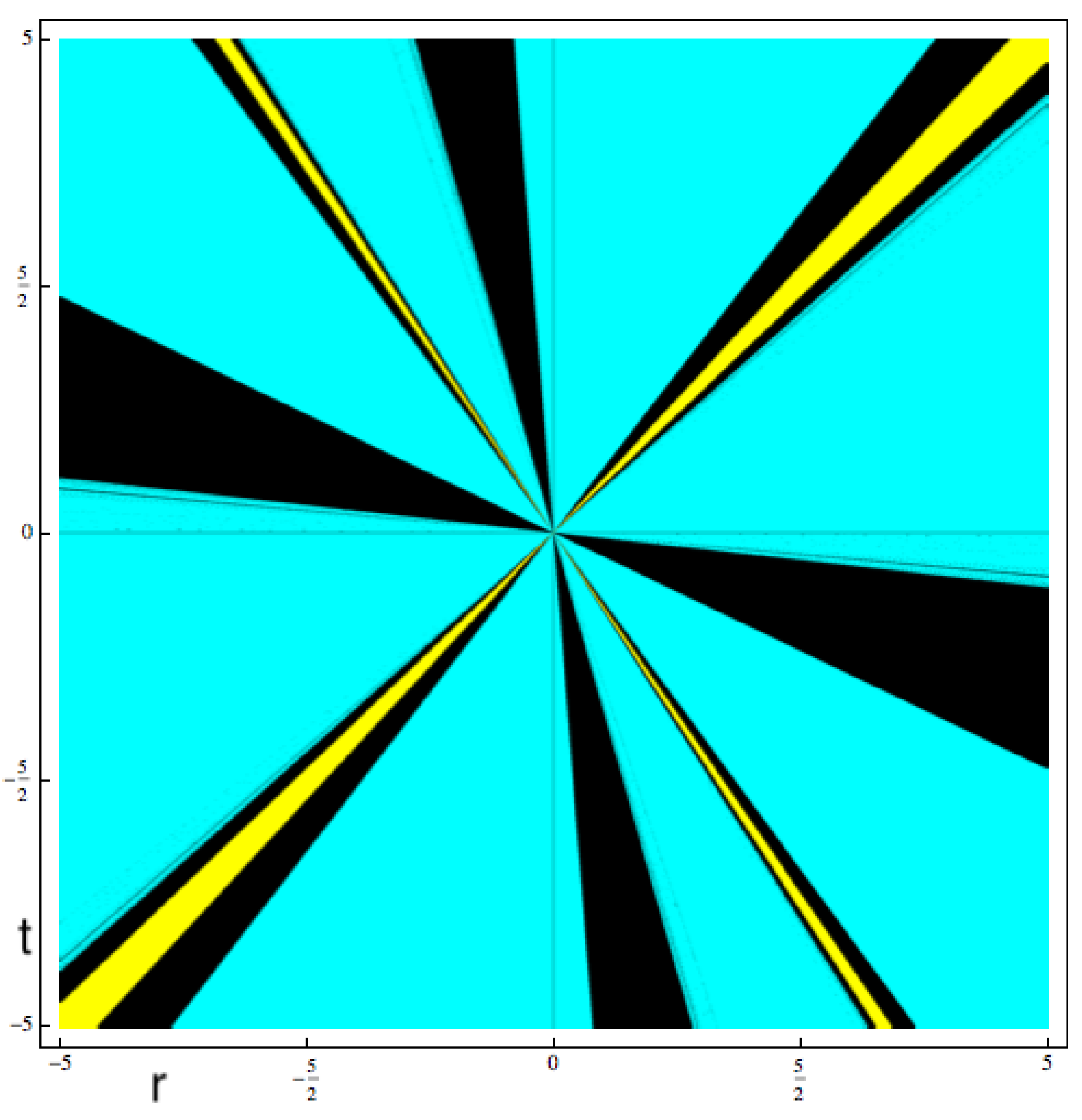

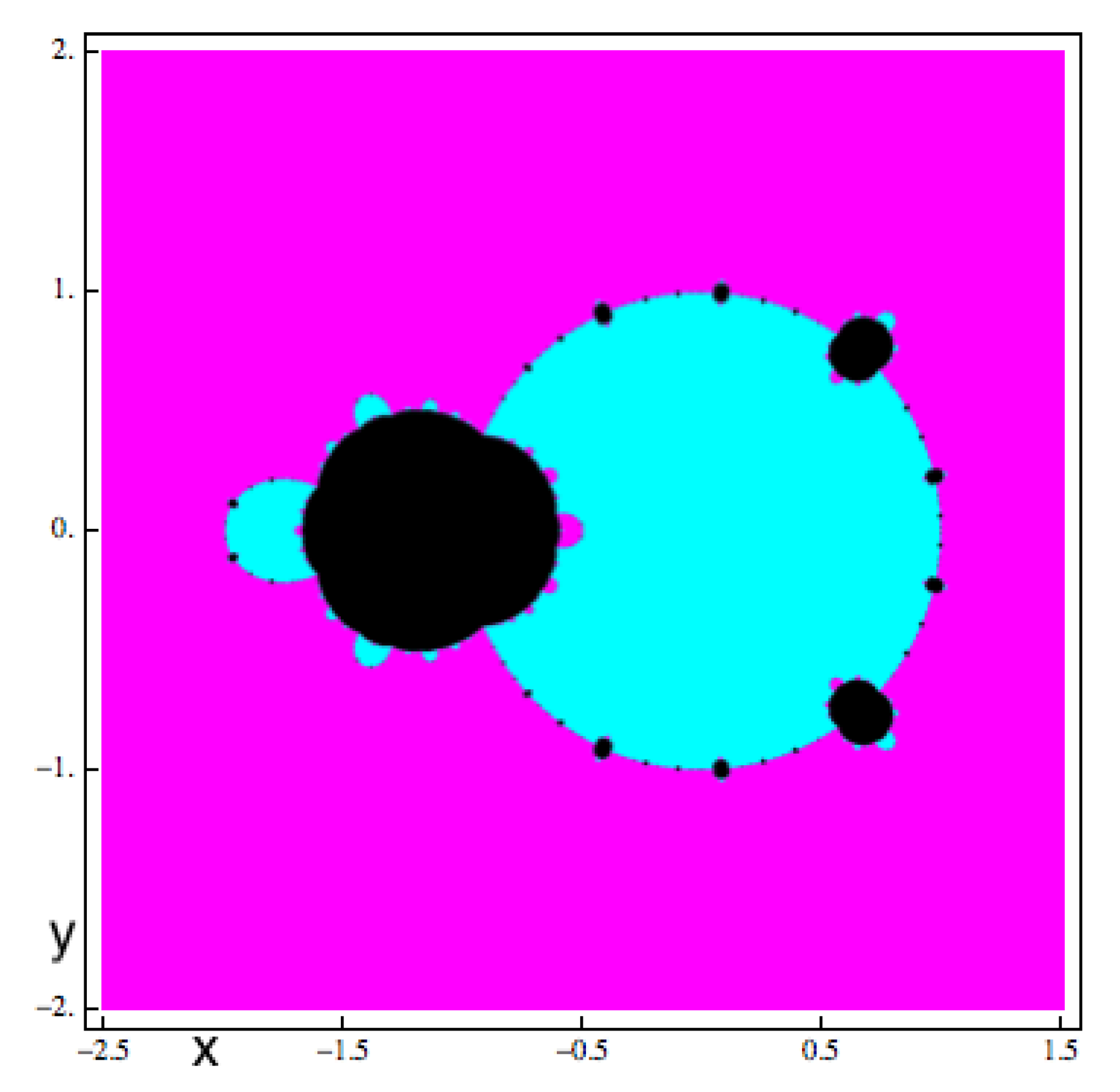
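The stability of a fixed point z* of a rational operator R is read off from |R′(z*)|: superattracting if R′(z*) = 0, attracting if |R′(z*)| < 1, repelling if |R′(z*)| > 1 and neutral if |R′(z*)| = 1. The paper's rational operators for JM and MI–MIV are not recoverable from this text, so the following minimal NumPy sketch applies the test to Newton's method on a quadratic, both directly on p(z) = z² − 1 and after conjugation by the Möbius map h(z) = (z − a)/(z − b), which turns Newton's operator into R(z) = z². The function names are hypothetical, operators are passed as numerator and denominator coefficient lists (lowest degree first), and the point at infinity is not examined.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def fixed_points(num, den):
    """Finite fixed points of R = num/den (coefficients low -> high degree):
    the roots of num(z) - z * den(z)."""
    return P.polyroots(P.polysub(num, P.polymulx(den)))


def derivative(num, den, z):
    """R'(z) by the quotient rule: (num' den - num den') / den^2."""
    d = P.polyval(z, den)
    return (P.polyval(z, P.polyder(num)) * d
            - P.polyval(z, num) * P.polyval(z, P.polyder(den))) / d ** 2


def classify(num, den, tol=1e-10):
    """Return (z*, |R'(z*)|, kind) for every finite fixed point."""
    out = []
    for z in fixed_points(num, den):
        m = abs(derivative(num, den, z))
        if m < tol:
            kind = "superattracting"
        elif m < 1.0 - tol:
            kind = "attracting"
        elif m > 1.0 + tol:
            kind = "repelling"
        else:
            kind = "neutral"
        out.append((z, m, kind))
    return out


# Newton's operator on a quadratic, conjugated by h(z) = (z - a)/(z - b): R(z) = z^2.
conj = classify([0.0, 0.0, 1.0], [1.0])
# Newton's operator on p(z) = z^2 - 1 without conjugation: N(z) = (z^2 + 1) / (2 z).
plain = classify([1.0, 0.0, 1.0], [0.0, 2.0])
print(conj)
print(plain)
```

For R(z) = z² the fixed point 0 (image of the root a) is superattracting, while z = 1 (image of ∞) is a repelling strange fixed point with R′(1) = 2.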

4.2. Critical Points and Parameter Spaces

- (a)

- If or or , then .

- (b)

- If or or , then .

- (c)

- For other values of r and t, the family has two free critical points.
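Critical points of a rational operator R = N/D are the zeros of N′D − ND′; the free ones are those that do not coincide with the roots of the polynomial. Because every attracting cycle attracts at least one critical point, it is the free critical points that are tracked in parameter spaces. As a minimal sketch under a hypothetical coefficient-list convention (lowest degree first) and with hypothetical function names, the code below computes them for Newton's operator on p(z) = z² − 1, not for the paper's operators; Newton's operator has no free critical points here, in contrast with the two free critical points reported above for the family.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def critical_points(num, den):
    """Finite critical points of R = num/den: zeros of num' den - num den'."""
    w = P.polysub(P.polymul(P.polyder(num), den),
                  P.polymul(num, P.polyder(den)))
    return P.polyroots(w)


def free_critical_points(num, den, roots, tol=1e-8):
    """Critical points that do not coincide with any root of the polynomial."""
    return [c for c in critical_points(num, den)
            if all(abs(c - r) > tol for r in roots)]


# Newton's operator for p(z) = z^2 - 1: N(z) = (z^2 + 1) / (2 z).
num, den = [1.0, 0.0, 1.0], [0.0, 2.0]
print(critical_points(num, den))
print(free_critical_points(num, den, roots=[1.0, -1.0]))
```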

5. Numerical Examples

6. Applications

- m – Dimension of the considered system of equations.

- n – Required number of iterations.

- Error of the approximation to the corresponding solution of the above problems, wherein means .

- ACOC – Approximate computational order of convergence, calculated by Formula (57).
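Formula (57) did not survive extraction. A minimal sketch, assuming it is the usual definition of ACOC, ρ_k ≈ ln(‖x^(k+1) − x^(k)‖/‖x^(k) − x^(k−1)‖) / ln(‖x^(k) − x^(k−1)‖/‖x^(k−1) − x^(k−2)‖), evaluated here in the max norm; the function name `acoc` and the synthetic test sequence are hypothetical.

```python
import numpy as np


def acoc(iterates):
    """Approximate computational order of convergence.

    rho_k = ln(d_k / d_{k-1}) / ln(d_{k-1} / d_{k-2}),
    with d_k = ||x^(k+1) - x^(k)||_inf, returned for k = 2, ..., len(iterates) - 2.
    """
    xs = [np.atleast_1d(np.asarray(x, dtype=float)) for x in iterates]
    d = [np.linalg.norm(xs[k + 1] - xs[k], np.inf) for k in range(len(xs) - 1)]
    return [np.log(d[k] / d[k - 1]) / np.log(d[k - 1] / d[k - 2])
            for k in range(2, len(d))]


# Synthetic scalar sequence with e_{k+1} = e_k^4 (order four by construction).
seq = [0.5]
for _ in range(4):
    seq.append(seq[-1] ** 4)
print(acoc(seq))
```

In double precision only a few iterates of a fourth-order method remain informative before the differences reach rounding level, so the estimate is taken from the last iterates that are still well above machine precision.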

7. Conclusions

Author Contributions

Conflicts of Interest

References

- Argyros, I.K. Convergence and Applications of Newton-Type Iterations; Springer: New York, NY, USA, 2008.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- Ostrowski, A.M. Solutions of Equations and System of Equations; Academic Press: New York, NY, USA, 1966.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method for functions of several variables. Appl. Math. Comput. 2006, 183, 199–208.

- Frontini, M.; Sormani, E. Third-order methods from quadrature formulae for solving systems of nonlinear equations. Appl. Math. Comput. 2004, 149, 771–782.

- Grau-Sánchez, M.; Grau, Á.; Noguera, M. On the computational efficiency index and some iterative methods for solving systems of nonlinear equations. J. Comput. Appl. Math. 2011, 236, 1259–1266.

- Homeier, H.H.H. A modified Newton method with cubic convergence: The multivariate case. J. Comput. Appl. Math. 2004, 169, 161–169.

- Noor, M.A.; Waseem, M. Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 2009, 57, 101–106.

- Darvishi, M.T.; Barati, A. A third-order Newton-type method to solve systems of nonlinear equations. Appl. Math. Comput. 2007, 187, 630–635.

- Babajee, D.K.R.; Cordero, A.; Soleymani, F.; Torregrosa, J.R. On a novel fourth-order algorithm for solving systems of nonlinear equations. J. Appl. Math. 2012, 2012, 165452.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. A modified Newton–Jarratt’s composition. Numer. Algor. 2010, 55, 87–99.

- Jarratt, P. Some fourth order multipoint iterative methods for solving equations. Math. Comput. 1966, 20, 434–437.

- Cordero, A.; Martínez, E.; Torregrosa, J.R. Iterative methods of order four and five for systems of nonlinear equations. J. Comput. Appl. Math. 2009, 231, 541–551.

- Darvishi, M.T.; Barati, A. A fourth-order method from quadrature formulae to solve systems of nonlinear equations. Appl. Math. Comput. 2007, 188, 257–261.

- Grau-Sánchez, M.; Grau, Á.; Noguera, M. Ostrowski type methods for solving systems of nonlinear equations. Appl. Math. Comput. 2011, 218, 2377–2385.

- Grau, M.; Díaz-Barrero, J.L. A technique to composite a modified Newton’s method for solving nonlinear equations. arXiv 2011, arXiv:1106.0996.

- Hueso, J.L.; Martínez, E.; Teruel, C. Convergence, efficiency and dynamics of new fourth and sixth order families of iterative methods for nonlinear systems. J. Comput. Appl. Math. 2015, 275, 412–420.

- Noor, K.I.; Noor, M.A. Iterative methods with fourth-order convergence for nonlinear equations. Appl. Math. Comput. 2007, 189, 221–227.

- Sharma, J.R.; Arora, H. Efficient Jarratt-like methods for solving systems of nonlinear equations. Calcolo 2014, 51, 193–210.

- Artidiello, S.; Cordero, A.; Torregrosa, J.R.; Vassileva, M.P. Design and multidimensional extension of iterative methods for solving nonlinear problems. Appl. Math. Comput. 2017, 293, 194–203.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 2012, 25, 2369–2374.

- Montazeri, H.; Soleymani, F.; Shateyi, S.; Motsa, S.S. On a new method for computing the numerical solution of systems of nonlinear equations. J. Appl. Math. 2012, 2012, 751975.

- Sharma, J.R.; Sharma, R.; Kalra, N. A novel family of composite Newton–Traub methods for solving systems of nonlinear equations. Appl. Math. Comput. 2015, 269, 520–535.

- Abbasbandy, S.; Bakhtiari, P.; Cordero, A.; Torregrosa, J.R.; Lotfi, T. New efficient methods for solving nonlinear systems of equations with arbitrary even order. Appl. Math. Comput. 2016, 287–288, 94–103.

- Argyros, I.K.; Magreñán, Á.A. On the convergence of an optimal fourth-order family of methods and its dynamics. Appl. Math. Comput. 2015, 252, 336–346.

- Argyros, I.K.; Magreñán, Á.A. A study on the local convergence and the dynamics of Chebyshev-Halley-type methods free from second derivative. Numer. Algor. 2016, 71, 1–23.

- Magreñán, Á.A. A new tool to study real dynamics: The convergence plane. Appl. Math. Comput. 2014, 248, 215–224.

- Varona, J.L. Graphic and numerical comparison between iterative methods. Math. Intell. 2002, 24, 37–46.

- van Sosin, B.; Elber, G. Solving piecewise polynomial constraint systems with decomposition and a subdivision-based solver. Comput. Aided Design 2017, 90, 37–47.

- Aizenshtein, M.; Bartoň, M.; Elber, G. Global solutions of well-constrained transcendental systems using expression trees and a single solution test. Comput. Aided Geom. Design 2012, 29, 265–279.

- Bartoň, M. Solving polynomial systems using no-root elimination blending schemes. Comput. Aided Design 2011, 43, 1870–1878.

- Argyros, I.K.; Sharma, J.R.; Kumar, D. Ball convergence of the Newton–Gauss method in Banach space. SeMA 2017, 74, 429–439.

- Wolfram, S. The Mathematica Book, 5th ed.; Wolfram Media: Champaign, IL, USA, 2003.

- Bargiacchi-Soula, S.; Fehrenbach, J.; Masmoudi, M. From linear to nonlinear large scale systems. SIAM J. Matrix Anal. Appl. 2010, 31, 1552–1569.

- Sharma, J.R.; Arora, H. A novel derivative free algorithm with seventh order convergence for solving systems of nonlinear equations. Numer. Algor. 2014, 67, 917–933.

| Parameter | NM | JM | MI | MII | MIII | MIV |
|---|---|---|---|---|---|---|
| - | - | - | - | - | ||
| - | ||||||
| - | ||||||
| - |

| Parameter | NM | JM | MI | MII | MIII | MIV |
|---|---|---|---|---|---|---|
| - | - | - | - | - | ||
| - | ||||||
| - | ||||||
| - |

| Parameter | NM | JM | MI | MII | MIII | MIV |
|---|---|---|---|---|---|---|
| - | - | - | - | - | ||
| - | ||||||
| - | ||||||
| - |

| Methods | BM | CM | DBM | HM | NNM | SAM | JM | MI | MII | MIII | MIV |
|---|---|---|---|---|---|---|---|---|---|---|---|
| m = 10 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| ACOC | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.000 | 4.000 |
| m = 50 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| ACOC | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.000 | 4.000 |
| m = 100 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| ACOC | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.000 | 4.000 |
| m = 200 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| ACOC | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.000 | 4.000 |
| m = 500 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| ACOC | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.001 | 4.000 | 4.000 |

| Methods | BM | CM | DBM | HM | NNM | SAM | JM | MI | MII | MIII | MIV |
|---|---|---|---|---|---|---|---|---|---|---|---|
| m = 10 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 6 | 5 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 |
| m = 50 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 6 | 5 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 |
| m = 100 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 6 | 5 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 |
| m = 200 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 6 | 5 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 |
| m = 500 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 6 | 5 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 |

| Methods | BM | CM | DBM | HM | NNM | SAM | JM | MI | MII | MIII | MIV |
|---|---|---|---|---|---|---|---|---|---|---|---|
| m = 10 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 4 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 3.993 | 3.999 |
| m = 50 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 4 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 3.993 | 3.999 |
| m = 100 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 4 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 3.993 | 3.999 |
| m = 200 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 4 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 3.993 | 3.999 |
| m = 500 | |||||||||||
| n | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 4 |
| ACOC | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 4.000 | 3.993 | 3.999 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sharma, J.R.; Kumar, D.; Argyros, I.K.; Magreñán, Á.A. On a Bi-Parametric Family of Fourth Order Composite Newton–Jarratt Methods for Nonlinear Systems. Mathematics 2019, 7, 492. https://doi.org/10.3390/math7060492