Abstract

A quadratic programming problem with a positive definite Hessian subject to box constraints is solved using an active-set approach. Convex quadratic programming (QP) problems with box constraints appear quite frequently in various real-world applications. The proposed method employs an active-set strategy with Lagrange multipliers, demonstrating rapid convergence. At each iteration, the algorithm modifies both the minimization parameters in the primal space and the Lagrange multipliers in the dual space. The algorithm is particularly well suited for machine learning, scientific computing, and engineering applications that require solving box-constrained QP subproblems efficiently. Key use cases include Support Vector Machines (SVMs), reinforcement learning, portfolio optimization, and trust-region methods in non-linear programming. Extensive numerical experiments demonstrate the method’s superior performance in handling large-scale problems, making it an ideal choice for contemporary optimization tasks. To encourage and facilitate its adoption, the implementation is available in multiple programming languages, ensuring easy integration into existing optimization frameworks.

Keywords:

convex quadratic programming; machine learning; optimization; active set; Lagrange multipliers; practical applications

MSC:

65K10

1. Introduction

Convex quadratic programming (QP) refers to optimization problems in which a quadratic objective function is minimized subject to linear constraints. In the convex case, the quadratic cost matrix is positive semidefinite, ensuring a unimodal (bowl-shaped) objective so that any local minimum is global. The convex quadratic programming problem with simple bounds is stated as:

$$\min_{x \in \mathbb{R}^N} \; \frac{1}{2} x^T B x + x^T d \quad \text{subject to} \quad a_i \le x_i \le b_i, \quad i = 1, \dots, N, \tag{1}$$

where $x, d \in \mathbb{R}^N$ and $B$ is a symmetric, positive definite $N \times N$ matrix. In this paper, we propose a novel active-set strategy for solving problems of the form in Equation (1) that, in each step, solves a linear system and updates the active set and the Lagrange multipliers accordingly.

This type of problem minimizing a convex quadratic function subject to bound constraints appears quite frequently in scientific applications as well as in parts of generic optimization algorithms. Many non-linear optimization techniques are based on solving quadratic model subproblems [1,2,3,4].

While much of the foundational work on convex quadratic programming with box constraints originates from earlier research, there has also been a growing body of recent literature proposing modifications and new approaches targeting higher efficiency [5,6,7,8].

Portfolio optimization problems often use QP to allocate assets efficiently [9,10,11,12]. In Markowitz’s classic mean-variance portfolio model, the objective is quadratic (portfolio risk) and constraints ensure that the allocation weights sum to a budget [10]. If short-selling is disallowed (no negative weights), this imposes bound constraints $x_i \ge 0$ on the weights, rendering the model a convex QP. Such QP formulations are central in financial risk management and asset allocation.

Support Vector Machines (SVMs) for classification and regression are trained by solving a convex QP that maximizes the margin between classes while penalizing errors. The SVM optimization typically includes linear constraints and bound constraints on the variables of the dual formulation (e.g., $0 \le \alpha_i \le C$) [13,14,15,16]. Specialized solvers like the sequential minimal optimization (SMO) algorithm exploit this structure by breaking the problem into small QP subproblems. Similarly, in data-fitting applications, non-negative least squares (NNLS) problems (which minimize a sum of squared errors with $x \ge 0$) are convex QPs with simple bounds. NNLS [17,18] and its generalization to bounded-variable least squares (constraining $l \le x \le u$) are widely used in machine learning and signal processing.

Many engineering optimization tasks are naturally cast as QPs with bounded controls or resources. For instance, in model predictive control (MPC) [19,20]—a technique for controlling processes and robots—the controller solves a QP at each time step to minimize a quadratic performance index, subject to constraints like actuator limits and state bounds. These actuator limits are simple bound constraints on the control variables. Likewise, energy management systems, network flow optimization, and other resource allocation problems often involve quadratic cost functions (e.g., minimizing power loss or deviation from a target) with variables constrained by minimum/maximum operating levels.

The optimization of radiation intensity in oncology treatment [21] is a critical aspect of radiotherapy planning, aiming to maximize the dose delivered to tumor regions while minimizing exposure to surrounding healthy tissues. These methods are commonly formulated as quadratic optimization problems, where the objective function represents the trade-off between dose conformity and tissue sparing. The quadratic nature arises from the squared deviation of the delivered dose from the prescribed dose, ensuring a smooth and controlled distribution of radiation. Constraints are incorporated to enforce clinical requirements such as maximum allowable dose limits for organs at risk and minimum dose thresholds for tumor coverage. Various numerical techniques, including gradient-based algorithms and interior-point methods, are employed to efficiently solve these optimization problems, ensuring precise and effective treatment planning.

For the problem in Equation (1), two major strategies exist in the literature, both of which require feasible steps to be taken. The first one is the active-set strategy [2,3], which generates iterates on a face of the feasible box, never violating the primary constraints on the variables. Active-set algorithms work by iteratively guessing which constraints are “active” and which are not. They temporarily treat the active constraints as equalities, solve the resulting reduced QP, and then check the optimality conditions (Karush–Kuhn–Tucker conditions) to adjust the active set.

The basic disadvantage of this approach, especially in the large-scale case, is that constraints are added or removed one at a time, thus requiring a number of iterations proportional to the problem size. To overcome this, gradient projection methods [22,23] were proposed. In that framework, the active set algorithm is allowed to add or remove many constraints per iteration.

The second strategy treats the inequality constraints using interior-point techniques. In brief, an interior-point algorithm consists of a series of parametrized barrier functions which are minimized using Newton’s method. The major computational cost is due to the solution of a linear system, which provides a feasible search direction. Modern primal–dual interior-point algorithms are known for their polynomial-time complexity and strong practical performance on large-scale problems. In contrast to active-set methods (which pivot along constraint boundaries), interior-point methods move through the interior and typically require fewer iterations (albeit with more computation per iteration).

In this paper, we investigate a series of convex quadratic test problems. We recognize that bound constraints are a very special case of linear inequalities, which may in general have the form $Ax \ge b$, with A being an $m \times N$ matrix and b a vector in $\mathbb{R}^m$. Our investigation is also motivated by the fact that in the convex case, every problem subject to inequality constraints can be transformed to a bound-constrained one, using duality [24,25]:

It is important to note, however, that the dual transformation from a general inequality-constrained convex quadratic program to a bound-constrained dual problem does not always result in a positive definite dual Hessian. Positive definiteness of the dual Hessian is guaranteed only when the matrix A has full row rank and the original Hessian matrix B is strictly positive definite. If A is rank-deficient or if B is only positive semi-definite, the resulting matrix may become singular or indefinite, which can negatively affect the stability and solvability of the dual problem.
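As a sketch of the dual transformation discussed above, assume the general problem is written as minimizing $\frac{1}{2} x^T B x + x^T d$ subject to $Ax \ge c$. The symbols $d$, $c$ (the right-hand side, written as $c$ here to keep it distinct from any upper bounds), $y$ (the multipliers), and the direction of the inequality are assumptions made for illustration. Eliminating $x$ from the Lagrangian leaves a QP in $y$ whose only constraints are nonnegativity bounds:

```latex
\begin{aligned}
\mathcal{L}(x, y) &= \tfrac{1}{2} x^T B x + x^T d - y^T (A x - c), \qquad y \ge 0, \\
\nabla_x \mathcal{L} = 0 \;&\Rightarrow\; x = B^{-1} (A^T y - d), \\
\min_{y \ge 0} \;& \tfrac{1}{2}\, y^T \left( A B^{-1} A^T \right) y - y^T \left( A B^{-1} d + c \right),
\end{aligned}
```

where an additive constant $\frac{1}{2} d^T B^{-1} d$ has been dropped. Under these assumptions the dual Hessian is $A B^{-1} A^T$, which is positive definite when $B$ is positive definite and $A$ has full row rank, in line with the remark above.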

Our proposed approach to solving the problem in Equation (1) is an active-set algorithm that, unlike traditional methods, does not require strictly feasible or descent directions at each iteration. While an initial version of the algorithm was briefly introduced in [26], this paper presents extended results and a detailed comparison. We provide a step-by-step description of the algorithm, including the Lagrange multiplier updates, dual feasibility checks, and implementation insights. Furthermore, we demonstrate the algorithm’s broad applicability and its consistently rapid convergence through extensive experiments on a diverse set of benchmark problems, including synthetic, structured, and real-world optimization tasks. Comparative results and a ranking-based evaluation confirm the robustness and efficiency of our method across a host of problems of varying dimensions and conditioning.

The paper is organized as follows. The proposed algorithm is described in detail in Section 2. In Section 3, we briefly present three quadratic programming codes that were compared against our method on five different problem types, which are described in Section 4. Finally, in Section 5.6, a new trust-region-like method is proposed which takes full advantage of our quadratic programming algorithm.

2. Solving the Quadratic Problem

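For the problem in Equation (1), we introduce Lagrange multipliers $\lambda$ (for the lower bounds $a$) and $\mu$ (for the upper bounds $b$) in order to construct the associated Lagrangian:

$$\mathcal{L}(x, \lambda, \mu) = \frac{1}{2} x^T B x + x^T d - \lambda^T (x - a) - \mu^T (b - x).$$

The necessary optimality conditions (KKT conditions) at the minimum require that:

$$\begin{aligned}
B x + d - \lambda + \mu &= 0, && \text{(4a)} \\
a_i \le x_i &\le b_i, && \text{(4b)} \\
\lambda_i &\ge 0, && \text{(4c)} \\
\mu_i &\ge 0, && \text{(4d)} \\
\lambda_i (x_i - a_i) &= 0, && \text{(4e)} \\
\mu_i (b_i - x_i) &= 0, && \text{(4f)}
\end{aligned}$$

for $i = 1, \dots, N$. A solution to all the equations of the above system (4) can be obtained through an active-set strategy sketched in the following steps: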

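- At the initial iteration, we set the Lagrange multipliers $\lambda$ and $\mu$ to zero and compute the Newton point $x = -B^{-1} d$. If $x$ is feasible, it is accepted as the optimal solution.

- At each iteration k, we define three disjoint index sets:

- (a) L: indices where the lower bound is active or violated (Equation (4b)); U: indices where the upper bound is active or violated (Equation (4b)); S: the remaining indices.

- (b) For each $i \in L$, the value $x_i$ is set to the lower bound $a_i$, satisfying primal feasibility (Equation (4b)), and $\mu_i$ is set to zero, so that the complementarity conditions (Equations (4e) and (4f)) are satisfied.

- (c) For each $i \in U$, the value $x_i$ is set to the upper bound $b_i$, satisfying primal feasibility (Equation (4b)), and $\lambda_i$ is set to zero, so that the complementarity conditions (Equations (4e) and (4f)) are again satisfied.

- (d) For each $i \in S$, both $\lambda_i$ and $\mu_i$ are set to zero, which also satisfies the complementarity conditions.

- (e) The rest of the N unknowns, namely the $x_i$ for $i \in S$, the $\lambda_i$ for $i \in L$, and the $\mu_i$ for $i \in U$, are computed by solving the stationarity condition after some rearrangement:

$$B x + d = \lambda - \mu.$$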

The BoxCQP (abbreviation for box-constrained quadratic programming) algorithm is formally presented below:

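The solution of the linear system in Step 3 of Algorithm 1 needs further consideration. Let us rewrite the system in a component-wise fashion:

$$\sum_{j=1}^{N} B_{ij} x_j + d_i = \lambda_i - \mu_i, \quad i = 1, \dots, N. \tag{5}$$

Since $\lambda_i = \mu_i = 0$ for all $i \in S$, we have that $\sum_{j} B_{ij} x_j + d_i = 0$ for these indices; hence, we can calculate $x_i$, $i \in S$, by splitting the sum in Equation (5) and taking into account Step 2 of the algorithm, i.e.:

$$\sum_{j \in S} B_{ij} x_j = -d_i - \sum_{j \in L} B_{ij} a_j - \sum_{j \in U} B_{ij} b_j, \quad \forall i \in S.$$

The submatrix $B_{SS}$ is positive definite, as can be readily verified, given that the full matrix B is. The calculation of $\lambda_i$, $i \in L$, and of $\mu_i$, $i \in U$, is straightforward and is given by:

$$\lambda_i = \sum_{j=1}^{N} B_{ij} x_j + d_i, \quad \forall i \in L, \qquad \mu_i = -\sum_{j=1}^{N} B_{ij} x_j - d_i, \quad \forall i \in U.$$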

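| Algorithm 1 BoxCQP |
| Initially set: $k = 0$, $\lambda^{(0)} = \mu^{(0)} = 0$, and $x^{(0)} = -B^{-1} d$. If $x^{(0)}$ is feasible, Stop, the solution is: $x^{*} = x^{(0)}$. At iteration k, the quantities $x^{(k)}, \lambda^{(k)}, \mu^{(k)}$ are available. |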
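The iteration described in this section can be sketched in a few lines of dependency-free Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: the names `boxcqp` and `solve_spd`, the symbols `d`, `a`, `b`, `lam`, `mu`, the tie-breaking rule for indices sitting exactly on a bound, and the tolerance `tol` are all assumptions introduced here.

```python
def solve_spd(A, rhs):
    """Solve A z = rhs for a symmetric positive definite A via Cholesky (A = G G^T)."""
    n = len(A)
    G = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(G[i][k] * G[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                G[i][j] = s ** 0.5
            else:
                G[i][j] = s / G[j][j]
    y = [0.0] * n
    for i in range(n):  # forward substitution: G y = rhs
        y[i] = (rhs[i] - sum(G[i][k] * y[k] for k in range(i))) / G[i][i]
    z = [0.0] * n
    for i in reversed(range(n)):  # back substitution: G^T z = y
        z[i] = (y[i] - sum(G[k][i] * z[k] for k in range(i + 1, n))) / G[i][i]
    return z


def boxcqp(B, d, a, b, max_iter=100, tol=1e-12):
    """Minimize 0.5 x^T B x + x^T d subject to a <= x <= b (sketch)."""
    n = len(d)
    lam, mu = [0.0] * n, [0.0] * n
    x = solve_spd(B, [-di for di in d])  # Newton point
    if all(a[i] <= x[i] <= b[i] for i in range(n)):
        return x, lam, mu
    for _ in range(max_iter):
        # Step 1: classify indices (assumed tie-breaking via multiplier sign).
        L = [i for i in range(n)
             if x[i] < a[i] or (x[i] == a[i] and lam[i] >= 0.0)]
        U = [i for i in range(n) if i not in L
             and (x[i] > b[i] or (x[i] == b[i] and mu[i] >= 0.0))]
        S = [i for i in range(n) if i not in L and i not in U]
        # Step 2: fix variables on their bounds and zero the inactive multipliers.
        for i in L:
            x[i], mu[i] = a[i], 0.0
        for i in U:
            x[i], lam[i] = b[i], 0.0
        for i in S:
            lam[i] = mu[i] = 0.0
        # Step 3: solve B_SS x_S = -d_S - B_SL a_L - B_SU b_U, then read off multipliers.
        if S:
            rhs = [-d[i] - sum(B[i][j] * x[j] for j in L + U) for i in S]
            x_S = solve_spd([[B[i][j] for j in S] for i in S], rhs)
            for i, xi in zip(S, x_S):
                x[i] = xi
        grad = [sum(B[i][j] * x[j] for j in range(n)) + d[i] for i in range(n)]
        for i in L:
            lam[i] = grad[i]
        for i in U:
            mu[i] = -grad[i]
        # Step 4: stop when the remaining feasibility conditions hold.
        if (all(a[i] - tol <= x[i] <= b[i] + tol for i in S)
                and all(lam[i] >= -tol for i in L)
                and all(mu[i] >= -tol for i in U)):
            return x, lam, mu
    raise RuntimeError("no convergence within max_iter")


# Small illustrative problem: the Newton point (2/3, 11/3) violates the upper bound on x[1].
x, lam, mu = boxcqp([[2.0, 1.0], [1.0, 2.0]], [-5.0, -8.0], [0.0, 0.0], [3.0, 3.0])
```

In this example the second variable is moved to its upper bound in the first iteration, the first variable is recomputed from the reduced one-dimensional system, and the upper-bound multiplier comes out positive, so the sketch stops after a single pass.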

The convergence analysis along the lines of Kunisch and Rendl [27] is applicable to our method as well. Hungerländer and Rendl [5] have shown that when the Hessian B is positive definite, a solution exists, and have developed a procedure leading to a convergence proof. One may also apply their scheme to prove the convergence of the presented algorithm. However, it is lengthy and complicated, and therefore we preferred to present extended numerical evidence instead. We numerically tested cases with thousands of variables and a wide range for the condition number of B from 1 to . When B becomes nearly singular, cycling occurs, as expected (note that in such a case the linear system is ill-conditioned). At this point, ad hoc corrective measures may be taken.

The main computational task of the algorithm above is the solution of the linear system in Step 3. We have implemented three variants that differ in the way the linear system is solved. In Variant 1, at every iteration, we use a Cholesky decomposition. In Variant 2, we employ a conjugate gradient iterative method [28,29] throughout. In Variant 3, at the first iteration, where we need to solve the full system, we use a few iterations of the conjugate gradient scheme and subsequently switch to the $LDL^T$ decomposition.
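To make the iterative option concrete, the following is a minimal textbook conjugate gradient routine for a symmetric positive definite system, written in plain Python. It is a sketch, not the code used in the experiments: the function name, the unpreconditioned form, the stopping tolerance, and the optional starting point `x0` are assumptions. Capping `max_iter` at a small value mimics the idea of taking only "a few iterations" before handing over to a direct factorization.

```python
def conjugate_gradient(A, rhs, x0=None, tol=1e-10, max_iter=None):
    """Approximately solve A x = rhs for symmetric positive definite A."""
    n = len(rhs)
    x = list(x0) if x0 is not None else [0.0] * n
    # Initial residual r = rhs - A x and first search direction.
    r = [rhs[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
    p = list(r)
    rs_old = sum(ri * ri for ri in r)
    for _ in range(max_iter if max_iter is not None else 10 * n):
        if rs_old ** 0.5 <= tol:  # residual norm small enough
            break
        Ap = [sum(A[i][j] * p[j] for j in range(n)) for i in range(n)]
        alpha = rs_old / sum(pi * api for pi, api in zip(p, Ap))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = sum(ri * ri for ri in r)
        # New direction: residual plus a multiple of the previous direction.
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


# Illustrative 2x2 system whose exact solution is (1/11, 7/11).
solution = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
```

Each pass costs one matrix-vector product, which is why an iterative solve can be attractive when the free set S is large, whereas the number of passes needed grows with the condition number of the system.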

Table 1 provides a summary of the KKT conditions that are assured to be met at each consecutive iteration, classified according to the respective index sets (L, S, U). Most of the six KKT conditions are persistently adhered to throughout the procedure. Nonetheless, certain indices might momentarily breach conditions such as primal feasibility (4b), lower-bound dual feasibility (4c), or upper-bound dual feasibility (4d). Crucially, the number of discrepancies involving either Lagrange multipliers or primal variables remains below the problem dimension N. This table outlines the dynamic modification of the primal and dual variables and demonstrates their changing relationship with the KKT conditions as the algorithm progresses.

Table 1.

BoxCQP consecutive iterations and KKT.
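The conditions tracked in Table 1 can be monitored numerically with a small helper that reports the largest violation of each KKT condition for a given iterate. The sketch below is illustrative only; the function name, the dictionary keys, and the symbols `d`, `a`, `b`, `lam`, `mu` (linear term, lower and upper bounds, and the two multiplier vectors) are assumptions.

```python
def kkt_violations(B, d, a, b, x, lam, mu):
    """Return the largest violation of each KKT condition (0.0 means satisfied)."""
    n = len(x)
    grad = [sum(B[i][j] * x[j] for j in range(n)) + d[i] for i in range(n)]
    return {
        # Stationarity: B x + d - lam + mu = 0
        "stationarity": max(abs(grad[i] - lam[i] + mu[i]) for i in range(n)),
        # Primal feasibility: a <= x <= b
        "primal": max(max(a[i] - x[i], x[i] - b[i], 0.0) for i in range(n)),
        # Dual feasibility for lower- and upper-bound multipliers
        "dual_lower": max(max(-lam[i], 0.0) for i in range(n)),
        "dual_upper": max(max(-mu[i], 0.0) for i in range(n)),
        # Complementarity for lower and upper bounds
        "compl_lower": max(abs(lam[i] * (x[i] - a[i])) for i in range(n)),
        "compl_upper": max(abs(mu[i] * (b[i] - x[i])) for i in range(n)),
    }


B = [[2.0, 1.0], [1.0, 2.0]]
d = [-5.0, -8.0]
a, b = [0.0, 0.0], [3.0, 3.0]
# A point with x[1] on its upper bound and a positive upper-bound multiplier.
report = kkt_violations(B, d, a, b, x=[1.0, 3.0], lam=[0.0, 0.0], mu=[0.0, 1.0])
```

For an intermediate iterate, only the primal and dual feasibility entries are expected to be nonzero, which matches the observation that the remaining conditions hold by construction.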

Experimental Convergence Analysis: Controlled Indefiniteness

To assess the robustness of the BoxCQP algorithm beyond its intended scope, we designed a controlled experiment that systematically introduces indefiniteness into the quadratic term of the objective function. We argue that the BoxCQP algorithm converges for strictly positive definite matrices B. Although a theoretical convergence proof is possible following the approach of [5], it is also important to investigate the behavior of the algorithm empirically. To examine its behavior under near-indefinite and indefinite scenarios, we generated perturbed matrices that violate this assumption in a controlled way.

The experimental procedure described next was repeated for 100 random instances for every dimension setting:

- Step 1: Matrix and Vector Generation. For a given problem dimension $N$, we generated a random positive definite matrix $B$, as well as random vectors $d$, $a$, and $b$, defining the box-constrained quadratic programming problem of Equation (1).

- Step 2: Controlled Perturbation. We applied a Cholesky decomposition $B = LL^T$. Then, approximately 20% of the diagonal entries of L were selectively modified by replacing them with values drawn from a fixed set of perturbation levels. This range includes small negative, zero, and small positive values, effectively creating a smooth spectrum from definiteness to indefiniteness. The modified lower-triangular matrix was then used to reconstruct the perturbed matrix, which served as the input matrix for BoxCQP.

- Step 3: Solver Execution. Each problem instance was solved using the BoxCQP algorithm with a maximum limit of 100 iterations. For each configuration, we recorded the number of iterations required to converge, or marked the instance as failed if convergence was not reached within the iteration limit.

- Step 4: Evaluation Metrics. For each perturbation level and each dimension, we measure:

- The mean number of iterations required to reach convergence;

- The failure count, i.e., the number of cases out of 100 in which the algorithm did not converge;

- The average condition number of the resulting perturbed matrix, measured as the ratio of its largest to smallest eigenvalue.
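The matrix generation and perturbation steps above can be sketched as follows. This is a hedged illustration rather than the experimental code: the generator `random_spd`, the helper names, the seed, and the value `1e-3` are hypothetical, and the sketch assumes that the perturbed matrix is rebuilt as the product of the modified factor with its transpose.

```python
import random


def cholesky(A):
    """Return lower-triangular G with A = G G^T (A must be positive definite)."""
    n = len(A)
    G = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(G[i][k] * G[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                G[i][j] = s ** 0.5
            else:
                G[i][j] = s / G[j][j]
    return G


def random_spd(n, rng):
    """Hypothetical generator: M M^T + n I is symmetric positive definite."""
    M = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    return [[sum(M[i][k] * M[j][k] for k in range(n)) + (n if i == j else 0.0)
             for j in range(n)] for i in range(n)]


def perturb_factor(G, value, rng, fraction=0.2):
    """Copy G and overwrite about `fraction` of its diagonal entries with `value`."""
    n = len(G)
    chosen = rng.sample(range(n), max(1, round(fraction * n)))
    G_mod = [row[:] for row in G]
    for i in chosen:
        G_mod[i][i] = value
    return G_mod, sorted(chosen)


def rebuild(G):
    """Form G G^T from a lower-triangular factor (assumed reconstruction)."""
    n = len(G)
    return [[sum(G[i][k] * G[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]


rng = random.Random(0)                       # fixed seed for repeatability
B = random_spd(10, rng)
G_mod, chosen = perturb_factor(cholesky(B), value=1e-3, rng=rng)  # illustrative value
B_perturbed = rebuild(G_mod)
```

Looping this over the perturbation levels, the problem dimensions, and 100 random seeds, and feeding each `B_perturbed` to the solver with an iteration cap of 100, would reproduce the structure of the experiment described in Steps 3 and 4.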

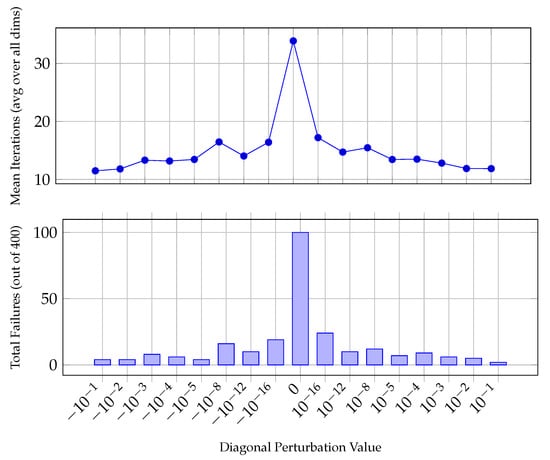

This procedure allowed us to systematically investigate how BoxCQP performs as the definiteness of the matrix degrades, and to associate failure patterns and convergence delays with condition number growth and specific types of matrix perturbations. The results are shown in Table 2 and graphically in Figure 1.

Table 2.

BoxCQP performance under controlled indefiniteness: mean iterations, failure counts, and condition numbers (100 runs).

Figure 1.

BoxCQP convergence vs. perturbation level.

The experimental evaluation of the BoxCQP algorithm under controlled indefiniteness reveals a strong dependence of convergence behavior on the condition number of the modified matrix B. As expected, the algorithm demonstrates robust performance in well-conditioned scenarios, particularly when the smallest diagonal entry remains significantly positive (e.g., or ). In these cases, the mean iteration counts remain low and convergence failures are rare or nonexistent across all dimensions tested.

However, as the matrix becomes increasingly ill-conditioned—especially around diagonal perturbations close to zero or slightly negative—the condition number grows rapidly, often exceeding , and in extreme cases (e.g., with zero diagonal values), becomes infinite. These configurations correspond to a substantial rise in both iteration count and failure rates. For instance, when the diagonal includes zero, the algorithm fails to converge in up to 28% of the runs for , and similar behavior is observed for higher dimensions. Even for small negative values (e.g., or ), the algorithm begins to exhibit instability as the condition number increases.

Interestingly, there appears to be a zone of tolerance: small perturbations toward indefiniteness (especially around to ) do not always lead to immediate failure. Instead, BoxCQP still converges in many cases, albeit with increased iteration counts. This suggests some resilience of the solver near the boundary of positive definiteness. Nevertheless, the results clearly highlight the algorithm’s sensitivity to definiteness and condition number, emphasizing the importance of matrix conditioning in practical applications. Incorporating condition number estimation or preconditioning strategies could enhance solver stability and broaden the range of problems to which BoxCQP can be reliably applied.

As a closing remark, we should point out that out of the total 6400 experiments (16 perturbation levels × 100 runs × 4 dimension settings), the BoxCQP algorithm successfully converged in 6146, i.e., approximately 96%, of the cases. The remaining cases (approximately 4%) correspond to indefinite Hessians.

3. State-of-the-Art Convex Quadratic Programming Solutions

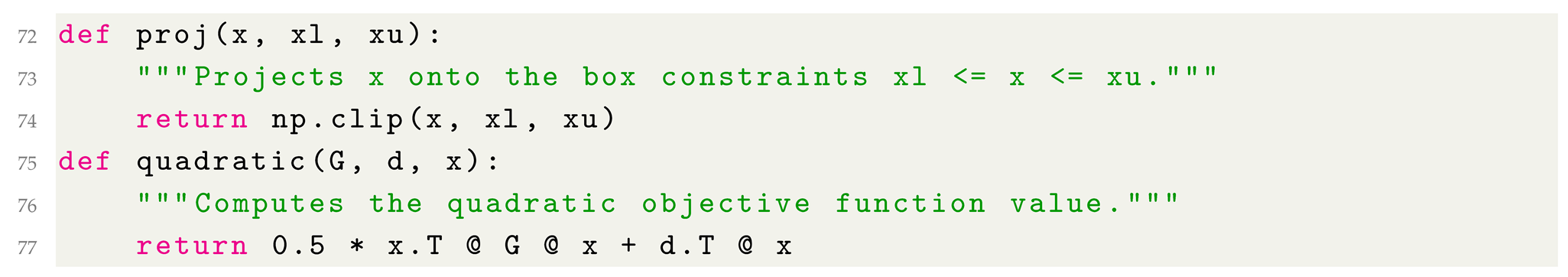

There exist several quadratic programming codes in the literature. We have chosen to compare with three of them, specifically QPBOX, QLD, and QUACAN. These codes share several common features, so that the comparison is both meaningful and fair. All codes are written in the same language (FORTRAN 77), so that differences due to language overhead are eliminated. Also, they are written by leading experts in the field of quadratic programming, which speaks for their quality. Notice also that all codes are specialized for quadratic programming rather than being general-purpose optimizers, and that they were distributed freely through the World Wide Web at the time of writing.

3.1. QPBOX

QPBOX [30] is a Fortran 77 package for box-constrained quadratic programs developed at IMM, Technical University of Denmark. The bound-constrained quadratic program is solved via a dual problem, which is the minimization of an unbounded, piecewise quadratic function. The dual problem involves a lower bound on the smallest eigenvalue of a symmetric, positive definite matrix, and is solved by Newton iteration with line search.

3.2. QLD

This code [31] is available due to K. Schittkowski of the University of Bayreuth, Germany, and is a modification of routines due to M. J. D. Powell at the University of Cambridge. It is essentially an active-set, interior-point method and also supports general linear constraints.

3.3. QUACAN

This algorithm combines conjugate gradients with gradient projection techniques, as in the algorithm of Moré and Toraldo [32]. A new strategy for the decision of leaving the current face is introduced, making it possible to obtain finite convergence even for a singular Hessian and in the presence of dual degeneracy. QUACAN [33] is specialized for convex problems subject to simple bounds.

4. Experimental Results—Fortran Implementation

To verify the effectiveness of the proposed approach, we experimented with five different problem types and measured CPU times to make a comparison possible. We implemented BoxCQP in Fortran 77, compiled it with the GNU gfortran compiler, and ran it on a recent Intel processor under a Linux operating system.

In the subsections that follow, we describe in brief the different test problems used for the experiments, and report our results.

4.1. Random Problems

The first set of experiments treats randomly generated problems. We generate problems following the general guidelines of [32]. Specific details about creating these random problems are provided in Appendix A.1.

For every random problem class, we have created Hessian matrices with three different condition numbers:

- Using = 0.1 and hence, ;

- Using = 1 and hence, ;

- Using = 5 and hence, .

The results for the three variants of BoxCQP against the other quadratic codes for the classes (a), (b), and (c) and for three different condition numbers are shown in Table 3, Table 4, and Table 5, respectively. In each table, alongside the execution times of the competing solvers, we include additional columns presenting their rankings for the specific case. These rankings serve to facilitate a more general interpretation and comparison of the results.
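As an illustration of how such ranking columns can be derived from execution times, the sketch below ranks solvers within each case (1 = fastest) and averages the ranks over several cases. The function names, the convention that a non-converged run (`None`) ranks last, and the timing values in the example are hypothetical; they are not taken from the tables.

```python
def rank_solvers(times):
    """Map solver name -> rank (1 = fastest); None (no convergence) ranks last."""
    order = sorted(times, key=lambda s: (times[s] is None, times[s] or 0.0))
    return {solver: rank for rank, solver in enumerate(order, start=1)}


def mean_ranks(cases):
    """Average each solver's rank over a list of {solver: time} dictionaries."""
    totals = {}
    for times in cases:
        for solver, rank in rank_solvers(times).items():
            totals[solver] = totals.get(solver, 0) + rank
    return {solver: totals[solver] / len(cases) for solver in totals}


# Hypothetical timings in seconds for two test cases (illustrative values only).
cases = [
    {"Variant 1": 3.0, "Variant 2": 1.0, "QLD": 2.0, "QPBOX": None},
    {"Variant 1": 1.5, "Variant 2": 2.5, "QLD": 2.0, "QPBOX": None},
]
summary = mean_ranks(cases)
```

Averaging ranks rather than raw times keeps a single very slow run from dominating the comparison, at the cost of discarding the size of the gaps between solvers.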

Table 3.

Random table results; , .

The results presented in Table 3 show that across all conditioning levels, computational time increases with problem size, as expected. Variant 1 ($LDL^T$ decomposition) performs well for small-to-moderate problem sizes but suffers from scalability issues as the problem dimension increases, particularly in ill-conditioned cases where factorization becomes computationally expensive. In contrast, Variant 2 (conjugate gradient) exhibits greater robustness for ill-conditioned problems but is slower than direct decomposition for well-conditioned cases. The hybrid Variant 3 provides the best overall performance, maintaining lower runtimes across different problem sizes and conditioning levels.

Comparing BoxCQP to the competing solvers, QUACAN performs well on small problems but becomes inefficient for large-scale and ill-conditioned cases. QPBOX and QLD exhibit competitive runtimes, with QLD showing the best efficiency in large, ill-conditioned scenarios. BoxCQP Variants 2 and 3 consistently outperform QUACAN, making them preferable for difficult optimization problems. In this case, BoxCQP Variant 3 emerges as the most effective approach, striking a balance between computational efficiency and robustness to ill-conditioning.

Considering the results presented in Table 4 and Table 5, we can see that for the most ill-conditioned cases, Variant 1 ($LDL^T$) becomes prohibitively expensive, and the variants using iterative solvers (Variants 2 and 3) become more favorable. For the well-conditioned cases, the iterative BoxCQP variants also outperform the $LDL^T$ one. Among the other solvers, QUACAN struggles significantly with ill-conditioned problems and large problem sizes, often displaying dramatically increased runtime, particularly for . QPBOX and QLD scale more effectively, with QLD consistently outperforming QUACAN for all problem sizes. However, BoxCQP Variants 2 and 3 maintain superior performance over QUACAN and, in all cases, are better than QPBOX and QLD.

Table 4.

Random table results; , .

Table 5.

Random table results; , .

Overall, the results confirm that when most variables reside on the bounds, BoxCQP Variant 3 is the most practical choice, as it combines the advantages of both $LDL^T$ and CG, adapting well to different problem conditions. Variant 1 remains suitable for well-conditioned small problems, whereas Variant 2 is preferable for handling ill-conditioned, large-scale problems.

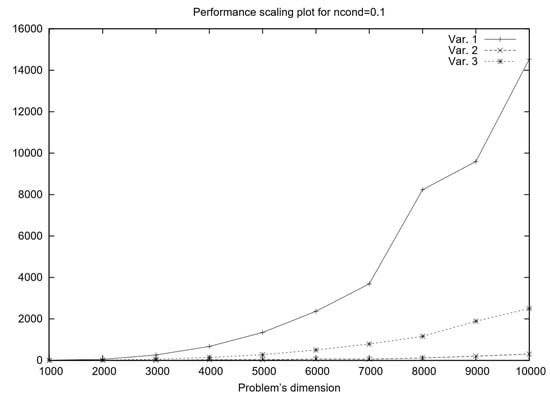

The performance scaling plot in Figure 2 compares the runtime of three algorithmic variants for solving well-conditioned problems, where half of the variables are fixed on bounds. The primary computational task in the algorithm is solving a linear system at each iteration, and the three variants differ in their approach to this task. We can infer that for small problem sizes, the differences between the three variants are relatively minor. However, as the problem size grows, Variant 1 ($LDL^T$ decomposition at every iteration) exhibits the steepest runtime increase due to the high cost of repeated factorizations. Meanwhile, Variant 2 (CG throughout) shows much better scalability, with its runtime growing at a slower rate, making it preferable for large-scale problems. Variant 3 (hybrid) likely demonstrates an intermediate performance profile, outperforming Variant 1 in terms of efficiency while maintaining better numerical robustness than Variant 2.

Figure 2.

BoxCQP variants’ scaling results for .

In summary, if computational speed is the primary concern, Variant 2 (CG) is the most scalable option, particularly for large problem sizes. If numerical stability and accuracy are the priority, Variant 1 ($LDL^T$) is the most robust but at the cost of significantly higher computation time. Variant 3 (hybrid) emerges as an optimal middle-ground approach, balancing efficiency and stability by combining iterative and direct methods. The performance-scaling trends confirm these expectations, with Variant 1 becoming increasingly costly, Variant 2 scaling efficiently, and Variant 3 offering a competitive compromise.

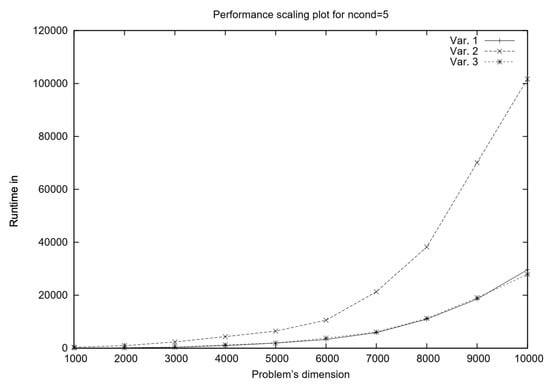

For ill-conditioned problems (see Figure 3), the performance-scaling behavior of the three algorithmic variants shifts significantly compared to well-conditioned problems. In such cases, the system matrix exhibits a high condition number, which impacts direct and iterative solution methods differently.

Figure 3.

BoxCQP variants’ scaling results for .

From the runtime trends observed in Figure 3, it appears that Variant 1 ($LDL^T$) performs better than in the well-conditioned scenario. This is likely due to the fact that the $LDL^T$ decomposition, being a direct method, remains robust even when the system matrix is poorly conditioned. Unlike iterative methods, which suffer from slow convergence or numerical instability when the condition number is large, $LDL^T$ maintains accuracy at the cost of higher computational effort per iteration. However, in ill-conditioned problems, iterative solvers such as the conjugate gradient (CG) method (used in Variant 2) may require significantly more iterations to converge, making them less efficient overall. This explains why Variant 1, despite its higher theoretical complexity, outperforms the other two methods in this setting.

Variant 3 balances these trade-offs effectively by leveraging the fast initial approximations of CG while maintaining the stability of $LDL^T$ in subsequent iterations. While CG may struggle with slow convergence in ill-conditioned problems, using it only in the first iteration can still provide a useful initial guess that reduces the effort needed for the $LDL^T$ decomposition later on. This reduces the total computational burden of $LDL^T$ while still ensuring that subsequent iterations remain numerically stable.

4.2. Circus-Tent Problem

The circus-tent problem serves as a foundational case that highlights how mathematical optimization techniques can be applied to real-world engineering challenges. Its formulation as a box-constrained quadratic programming problem is described in detail in Appendix A.2. Table 6 presents execution times for different solution approaches to the circus-tent problem across increasing problem sizes, measured in seconds. As the problem size grows, Variant 1 becomes increasingly expensive due to the repeated direct factorization, reaching 1333.51 s for . Variant 2, relying entirely on the iterative CG method, exhibits significantly better scalability, with execution time increasing more gradually to s at . Variant 3, which blends iterative and direct methods, initially performs better than Variant 1 but eventually shows similar growth in execution time, reaching s for the largest problem size. Among the solvers compared, QUACAN successfully solves only the smallest instance in negligible time but fails for larger cases. QPBOX is unable to solve any instance, as indicated by the NC values across all entries. QLD, however, demonstrates competitive performance, outperforming Variant 1 and Variant 3 for larger problems, reaching s for .

Table 6.

Results for circus-tent problem case (single-thread execution time in seconds; NC stands for no convergence).

From these results, Variant 2 (conjugate gradient method) proves to be the most efficient and scalable, particularly for larger problem sizes, making it the preferred choice for large-scale computations. Variant 3 (hybrid approach) offers a reasonable compromise between iterative and direct methods, performing well for small-to-medium problems before exhibiting similar computational demands to Variant 1. Variant 1 ($LDL^T$ decomposition), while accurate, is computationally expensive and less suitable for large-scale applications. Among the solvers, QLD remains the most competitive alternative, performing consistently better than the $LDL^T$-based methods for larger problem sizes.

4.3. Biharmonic Equation Problem

The biharmonic equation arises in elasticity theory and describes the small vertical deformations of a thin elastic membrane. In this work, we consider an elastic membrane clamped on a rectangular boundary and subject to a vertical force while being constrained to remain below a given obstacle. This leads to a constrained variational problem that can be formulated as a convex quadratic programming (QP) problem with bound constraints. For more details about the formulation, see Appendix A.3.

Table 7 presents execution times (in seconds) for different numerical methods applied to the biharmonic equation problem across increasing problem sizes. As the problem size increases, Variant 1 exhibits the steepest growth in execution time, reaching s for the largest case (). Variant 2, which leverages iterative solvers, scales better, achieving a significantly lower execution time of s for the same problem size. Variant 3 strikes a balance between direct and iterative methods, showing better performance than Variant 1 but remaining slightly less efficient than Variant 2, with s for the case .

Table 7.

Results for the biharmonic equation case (single-thread execution time in seconds).

Among the competing solvers, QLD demonstrates superior performance compared to QUACAN and QPBOX, solving the largest problem in 1837.66 s. By contrast, QUACAN and QPBOX exhibit considerably worse scalability, with times of 8282.04 s and 3067.21 s, respectively, for . QUACAN is effective for smaller problems but becomes very inefficient as the problem size grows, whereas QPBOX follows a similar pattern, though it performs somewhat better. The findings emphasize that Variant 2 is the most scalable method, making it the optimal choice for large-scale biharmonic problems. Additionally, all BoxCQP variants outperform the competition in this scenario.

4.4. Intensity-Modulated Radiation Therapy

Intensity-Modulated Radiation Therapy (IMRT) is an advanced radiotherapy technique that optimizes the spatial distribution of radiation to maximize tumor control while minimizing damage to surrounding healthy tissues and vital organs. The goal is to deliver a precisely calculated radiation dose that conforms to the tumor shape, reducing side effects and improving treatment effectiveness.

This problem is typically formulated as a quadratic programming (QP) task, where the objective is to determine the optimal fluence intensity profile for a given set of beam configurations. The radiation dose distribution can be represented as a linear combination of beamlet intensities, allowing for a mathematical optimization approach. Given a set of desired dose levels, the optimal beamlet intensities are computed by solving a quadratic objective function that minimizes the difference between the prescribed and delivered doses. The optimization constraints include dose limits for critical organs and physical feasibility conditions. In Appendix A.4, we present the derivation of the formulation in some detail.

In practical applications, inverse treatment planning in IMRT requires solving a quadratic optimization problem of the form shown in Equation (9) multiple times, as beam configurations are iteratively adjusted to meet clinical constraints. Since the process involves large-scale quadratic systems, efficient solvers are essential to ensure fast and accurate treatment planning.

Table 8 showcases findings derived from actual data generously shared by S. Breedveld [34]. In this scenario, seven beams are integrated, leading to a quadratic problem comprising 2342 parameters. In this instance, Variants 2 and 3 exhibit superior performance, outperforming their counterparts by a factor of two.

Table 8.

Results for the Intensity-Modulated Radiation Therapy case (single-thread execution time in seconds).

4.5. Support Vector Classification

To create the problem for the case of Support Vector Classification, we used the CLOUDS dataset [35], a well-established two-dimensional, two-class classification task. The specifics of the quadratic programming formulation are provided in Appendix A.5. We ran different experiments for an increasing number of CLOUDS datapoints. From Table 9, we can see that Variant 1 experiences significant growth in execution time, reaching s for , making it the least efficient among the three variants. In contrast, Variant 2 scales much better due to its reliance on iterative methods, requiring s for the largest problem. Variant 3, which combines both iterative and direct methods, performs better than Variant 1 for larger problems, reducing execution time to s at.

When comparing solver performance, QUACAN, QPBOX, and QLD also display increasing execution times as problem sizes grow. QUACAN shows relatively poor scalability, requiring s for , making it the slowest solver in the test. QPBOX, while performing better, still struggles with larger problem sizes, reaching s at . QLD completes the largest problem in s, yet is still significantly slower than Variants 2 and 3.

These results highlight that Variant 2 (conjugate gradient method) is the most scalable approach, making it the preferred choice for large-scale SVM problems. Variant 3 (hybrid approach) still offers the best balance between iterative and direct solvers. Therefore, the findings suggest that a combination of CG-based techniques is optimal for solving large-scale SVM optimization problems efficiently.

Table 9.

Results for SVM training (single-thread execution time in seconds).

4.6. Summarizing Fortran Experimental Results

The performance of six solvers, namely the three BoxCQP variants (Variant 1, Variant 2, and Variant 3) and QUACAN, QPBOX, and QLD, was evaluated across a diverse set of convex quadratic programming problems.

Each case revealed different characteristics of the solver behavior. In the random problem set, Variant 3 and Variant 2 consistently achieved the lowest average rankings ( and , respectively, on all 90 cases), indicating robust and efficient performance, especially under varying condition numbers. QUACAN followed with an average rank of , while QPBOX and QLD were generally slower ( and , respectively), reflecting their higher computational overheads.

In the context of the biharmonic scenario, characterized by structured sparse problems, Variant 3 emerged as the leader, boasting the top average rank (), with Variant 2 not far behind (). A similar pattern occurred in the circus-tent problem, where Variant 2 was clearly the standout performer, achieving the highest average rank (), while QLD followed in second place (), demonstrating its effectiveness for structured geometric problems when feasible. For SVM classification tasks, Variant 2 excelled, with an average rank of , and was trailed by Variant 3 and Variant 1. Meanwhile, QLD and QPBOX showed inferior performance, especially with large datasets, due to scaling challenges. In the singular IMRT instance, Variant 2 along with Variant 3 were rated highest, emphasizing their competitiveness even in substantial real-world applications.

In Table 10 we present aggregated ranking results for all the test problem cases. When evaluating all problems together, the aggregate average ranking supports the prior findings. Variant 3 and Variant 2 secured top overall rankings of and , respectively, with Variant 1 following at . Among the other solvers, QUACAN occupied a central rank of , while QPBOX and QLD frequently ranked lower at and , respectively. These outcomes highlight the robustness and versatility of the proposed BoxCQP variants, particularly Variants 2 and 3, which effectively balance speed and dependability across diverse problem categories.

Table 10.

Aggregated results for all cases (average ranking).

5. Experimental Results—Python Implementation

Porting code from Fortran 77 (F77) and MATLAB R2023b to Python 3.8 offers significant advantages in terms of accessibility, reproducibility, and community engagement, making it highly beneficial for exposure in the scientific computing community. Python’s open-source nature, extensive ecosystem, interoperability, and modern programming features make it an ideal platform for sharing, optimizing, and scaling scientific applications. In this section, we present some experimental results from the Python implementation of BoxCQP.

5.1. Linear Least Squares with Bound Constraints

Linear least squares with bound constraints (LLSBC) is a convex optimization problem that bridges classical linear algebra and modern optimization theory. By imposing simple bound constraints on a linear least-squares objective, we obtain a quadratic program (QP) that is guaranteed to be convex.

In practice, adding bound constraints to a least-squares problem is hugely important because it lets us incorporate prior knowledge or physical limitations into the solution. Ordinary linear least squares (OLS) may produce solutions that are mathematically optimal but physically impossible or undesirable (e.g., negative values for inherently non-negative quantities, or parameters outside a feasible range). LLSBC addresses this by enforcing simple “box” constraints ($\ell \le x \le u$) on the solution vector. This leads to more realistic and interpretable models in many fields. For instance, in machine learning and statistics, it is often unreasonable for certain coefficients to be negative or to exceed certain values (consider probabilities, ages, or concentrations). By constraining coefficients to be non-negative or within a plausible range, we guarantee that the model’s outputs make sense (e.g., predicted prices or counts cannot be negative).

In engineering and the sciences, bound constraints allow us to respect physical laws or design limits—for example, in control systems, we might require gain parameters to remain within stable ranges, or in curve fitting, we might enforce that a response is non-decreasing with an input. In signal processing and image processing, constraints like non-negativity (pixel intensities, power spectra) or monotonicity can significantly improve solutions by reducing noise artifacts and preventing unphysical oscillations. Overall, LLSBC is interesting because it enhances the least-squares approach with robustness and domain knowledge, making the solutions applicable in real-world scenarios where unconstrained solutions would fail. It strikes a useful balance: retaining the computational efficiency and well-understood nature of least squares, while adding just enough constraints to capture practical requirements.

Many regression and estimation tasks in machine learning benefit from bound constraints. A notable example is non-negative least squares (NNLS), where we require $x_i \ge 0$ for all i. This is used when model coefficients represent quantities that cannot go below zero. For instance, when fitting a model to predict prices, ages, or counts, allowing negative coefficients or predictions is not meaningful. Imposing non-negativity yields more sensible models and can also have a regularizing effect (often promoting sparsity in the solution, similar to an $\ell_1$ penalty). NNLS is widely used as a subroutine in matrix factorization problems like PARAFAC and non-negative matrix factorization (NMF), where one alternates solving least-squares subproblems under non-negativity constraints. This helps extract interpretable features (e.g., in text mining, image analysis, or clustering) because each factor is constrained to contribute additively (no negative cancellations). Another application is isotonic regression, which is a least-squares problem with a monotonicity constraint ($x_1 \le x_2 \le \dots \le x_n$). The ordering constraints are linear inequalities between variables rather than simple bounds, but the problem can be brought into LLSBC form by a change of variables to the successive increments $d_i = x_i - x_{i-1}$, which must be non-negative.
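The reduction of isotonic regression to a bound-constrained least-squares problem can be illustrated with a short sketch. It is not part of the BoxCQP code: the helper name `isotonic_via_bounds` and the toy data are hypothetical, and SciPy's `lsq_linear` is used only as a readily available bounded least-squares solver. The fitted values are written as cumulative sums of increments, so monotonicity becomes a non-negativity bound on every increment except the first.

```python
import numpy as np
from scipy.optimize import lsq_linear


def isotonic_via_bounds(y):
    """Fit a non-decreasing sequence to y by bounded least squares.

    Hypothetical helper: x = L @ d, where d holds the increments and
    L is the lower-triangular matrix of ones (cumulative sums).
    """
    n = y.size
    L = np.tril(np.ones((n, n)))
    lb = np.zeros(n)
    lb[0] = -np.inf            # the first value is free
    ub = np.full(n, np.inf)    # increments have no upper bound
    res = lsq_linear(L, y, bounds=(lb, ub), method="bvls")
    return L @ res.x


# Toy data (illustrative only): the pair (3, 2) violates monotonicity.
y = np.array([1.0, 3.0, 2.0, 4.0])
x_iso = isotonic_via_bounds(y)
```

For this toy input the violating pair is pooled to its mean, which is the behaviour expected from isotonic regression.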

5.2. Problem Definition (Bounded Least Squares)

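The bounded least-squares problem is stated as:

$$\min_{x \in \mathbb{R}^n} \; \frac{1}{2}\|Ax - b\|_2^2 \quad \text{subject to} \quad \ell \le x \le u, \tag{10}$$

where:

- A is an $m \times n$ design matrix;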

- b is an m-vector of observations;

- ℓ and u are n-vectors (or scalars) defining lower and upper bounds.

5.3. Expanding the Objective Function

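Writing the least-squares objective as $f(x) = \frac{1}{2}(Ax - b)^\top (Ax - b)$ and expanding this quadratic term:

$$f(x) = \frac{1}{2} x^\top A^\top A x - (A^\top b)^\top x + \frac{1}{2} b^\top b.$$

Ignoring the constant term $\frac{1}{2} b^\top b$ (since it does not affect the minimizer), we obtain the standard convex quadratic program (QP) form:

$$\min_{x} \; \frac{1}{2} x^\top A^\top A x - (A^\top b)^\top x \quad \text{subject to} \quad \ell \le x \le u.$$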

5.4. Standard Quadratic Programming Representation

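Rewriting the problem in standard QP form:

$$\min_{x} \; \frac{1}{2} x^\top Q x + c^\top x \quad \text{subject to} \quad \ell \le x \le u, \tag{14}$$

where:

$$Q = A^\top A, \tag{15}$$

$$c = -A^\top b. \tag{16}$$

This formulation ensures that the problem is a convex QP since Q is positive semidefinite (or positive definite if A has full column rank).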
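As a minimal numerical check of this conversion, the sketch below builds the QP data from random least-squares data and compares the two objectives. It assumes the objective carries the factor 1/2, so that Q is the Gram matrix of A; the name `c` for the linear term and the helper names are chosen here for illustration only.

```python
import numpy as np


def lsq_to_box_qp(A, b):
    """Return (Q, c) such that
    0.5*||A x - b||^2 = 0.5*x'Qx + c'x + 0.5*b'b  (1/2 scaling assumed)."""
    Q = A.T @ A
    c = -A.T @ b
    return Q, c


def lsq_objective(A, b, x):
    r = A @ x - b
    return 0.5 * r @ r


def qp_objective(Q, c, x):
    return 0.5 * x @ Q @ x + c @ x


rng = np.random.default_rng(0)
A = rng.standard_normal((20, 5))   # illustrative sizes
b = rng.standard_normal(20)
Q, c = lsq_to_box_qp(A, b)

x = rng.standard_normal(5)
# The two objectives differ only by the constant 0.5*b'b.
gap = lsq_objective(A, b, x) - qp_objective(Q, c, x)
```

Because the gap is independent of x, both problems share the same minimizer under the same bounds.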

Experimental Setup

For comparison, we utilize SciPy, a robust open-source Python library for scientific computing and optimization, offering efficient numerical methods for linear algebra, optimization, signal processing, and statistical analysis. Specifically, the scipy.optimize.lsq_linear function is employed to solve bounded linear least-squares problems of the form of Equation (10). The solution is obtained using two distinct approaches: Trust-Region Reflective (TRF) and Bounded Variable Least Squares (BVLS) algorithms.

The TRF (Trust-Region Reflective) algorithm [36] is a subspace trust-region method that is particularly effective for solving large-scale least-squares problems. It operates by iteratively refining the solution within a trust region, ensuring that the step size remains appropriate to maintain stability. As adapted in SciPy for linear least squares, it behaves like an interior-point method: the iterates are kept strictly within the bounds, and the required number of iterations depends only weakly on the number of variables. Trust-region methods are robust, particularly when dealing with ill-conditioned problems, as they naturally handle numerical instabilities and provide controlled step updates.

The BVLS (Bounded Variable Least Squares) algorithm [37] is an active-set method that explicitly enforces bound constraints at each iteration. Unlike TRF, which works in a trust-region framework, BVLS solves a sequence of unconstrained least-squares problems over the currently free variables while ensuring that the solution stays within the prescribed bounds. Variables are either held at their bounds or released according to the sign of the gradient, leading to the efficient handling of constraints. This method is particularly useful when strict bound enforcement is crucial, as it prevents overshooting beyond limits at any step.
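For readers who wish to reproduce the baseline, the following sketch calls `scipy.optimize.lsq_linear` with both methods on a small random instance. The sizes, the random seed, and the bounds are hypothetical and are not the settings used for Table 11.

```python
import numpy as np
from scipy.optimize import lsq_linear

rng = np.random.default_rng(1)
m, n = 200, 30                      # illustrative sizes only
A = rng.standard_normal((m, n))
b = rng.standard_normal(m)
lb = -0.1 * np.ones(n)              # hypothetical box bounds
ub = 0.1 * np.ones(n)

# Same problem, two algorithms.
res_trf = lsq_linear(A, b, bounds=(lb, ub), method="trf")
res_bvls = lsq_linear(A, b, bounds=(lb, ub), method="bvls")

# res.cost holds 0.5*||A x - b||^2 at the returned solution.
cost_trf, cost_bvls = res_trf.cost, res_bvls.cost
```

Both calls solve the same convex problem, so the two costs should agree up to the solver tolerances; only the path to the solution differs.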

Our Python implementation is presented in Listing A2 and directly solves the problem in Equation (14). Note that the reported timings include the matrix products in Equations (15) and (16).

In Table 11 we present execution times (in seconds) for solving random linear least squares (LSQ) problems with bound constraints using three different methods: Variant 1, lsq_linear-TRF, and lsq_linear-BVLS. The problem sizes n vary in terms of the number of observations m. Variant 1 consistently outperforms both lsq_linear-TRF and lsq_linear-BVLS in terms of execution time. For smaller problem sizes, (such as and ), Variant 1 completes the computation in s, whereas lsq_linear-TRF and lsq_linear-BVLS require s and s, respectively. As the problem size increases, the execution time of Variant 1 scales more efficiently compared to the other two methods. For example, for dimension (, ), Variant 1 takes s, whereas lsq_linear-TRF and lsq_linear-BVLS require s and s, respectively. This significant difference highlights the computational efficiency of Variant 1, particularly for large-scale problems.

Table 11.

Linear least-squares random cases (single-thread execution time in seconds).

5.5. 225-Asset Portfolio Optimization Problem

The 225-Asset problem refers to a large-scale quadratic programming formulation used in portfolio optimization, where the objective is to minimize the portfolio’s risk (variance) subject to a set of linear constraints. The problem involves a universe of 225 assets and is based on a classic mean-variance optimization model proposed by Markowitz [38].

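Let $w \in \mathbb{R}^{n}$, with $n = 225$, be the portfolio weights vector, where each $w_i$ represents the fraction of the total investment allocated to asset i. The problem is formulated as:

$$\min_{w} \; \frac{1}{2} w^\top \Sigma w \quad \text{subject to} \quad \mu^\top w \ge r_{\min}, \quad \mathbf{1}^\top w = 1, \quad w \ge 0,$$

where

- $\Sigma$ is the positive definite covariance matrix of asset returns.

- $\mu$ is the expected return vector.

- $r_{\min}$ is the minimum required portfolio return.

- $\mathbf{1}$ is the vector of ones (to enforce full investment).

The constraints ensure:

- A minimum return threshold is met ($\mu^\top w \ge r_{\min}$).

- The portfolio is fully invested ($\mathbf{1}^\top w = 1$).

- No short selling is allowed ($w \ge 0$).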

This problem is often used as a benchmark in quadratic programming solvers because of its size (225 variables and several hundred constraints) and its relevance in financial optimization. It tests a solver’s ability to handle large, sparse quadratic programs with both inequality and equality constraints in practical applications. To express this problem in the standard quadratic programming form with inequality constraints of the form seen in Equation (2), we perform the following transformations:

- Set , and . There is no linear cost term in the mean-variance objective, only the quadratic risk term.

- Encode the return constraint as one row in A and b:,

- Convert the equality constraint into two inequalities:and . These become rows in A:, ;,

- Express the no short-selling constraint as , the identity matrix, and

Putting all constraints together:

This formulation is now fully compatible with solvers that accept inequality-only quadratic programs, such as those in the form of Equation (2).
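The stacking of the three constraint groups can be sketched as follows. The sketch assumes the inequality convention $G w \le h$, which may differ in sign from the convention of Equation (2); the function name, the symbols `mu` and `r_min`, and the three-asset data are hypothetical.

```python
import numpy as np


def stack_portfolio_constraints(mu, r_min):
    """Build (G, h) so that G @ w <= h encodes
    mu'w >= r_min,  1'w = 1 (as two inequalities),  w >= 0."""
    n = mu.size
    ones = np.ones((1, n))
    G = np.vstack([
        -mu.reshape(1, n),   # -mu'w <= -r_min   (return threshold)
        ones,                #  1'w  <=  1       (full investment, upper)
        -ones,               # -1'w  <= -1       (full investment, lower)
        -np.eye(n),          # -w    <=  0       (no short selling)
    ])
    h = np.concatenate([[-r_min], [1.0], [-1.0], np.zeros(n)])
    return G, h


mu = np.array([0.05, 0.10, 0.15])      # hypothetical expected returns
G, h = stack_portfolio_constraints(mu, r_min=0.08)

w_ok = np.array([0.2, 0.5, 0.3])       # expected return 0.105
w_bad = np.array([0.9, 0.1, 0.0])      # expected return 0.055, too low
feasible_ok = bool(np.all(G @ w_ok <= h + 1e-12))
feasible_bad = bool(np.all(G @ w_bad <= h + 1e-12))
```

For n assets this yields n + 3 inequality rows. If the target solver expects constraints of the form "greater than or equal", both G and h are simply negated.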

To benchmark the Python implementation of BoxCQP, we compared its performance against two well-established quadratic programming solvers available through the qpsolvers Python interface: quadprog and osqp [39,40]. The quadprog solver is based on the Goldfarb–Idnani active-set method, which is particularly suitable for small- to medium-scale convex QP problems with dense Hessians. In contrast, osqp (Operator Splitting Quadratic Program) is a modern, operator-splitting-based solver that handles large-scale problems efficiently, even when the matrices involved are sparse or poorly conditioned. In Table 12, we present some preliminary results comparing BoxCQP to these competing methods.

Table 12.

225-Asset portfolio optimization (single-thread execution time in seconds).

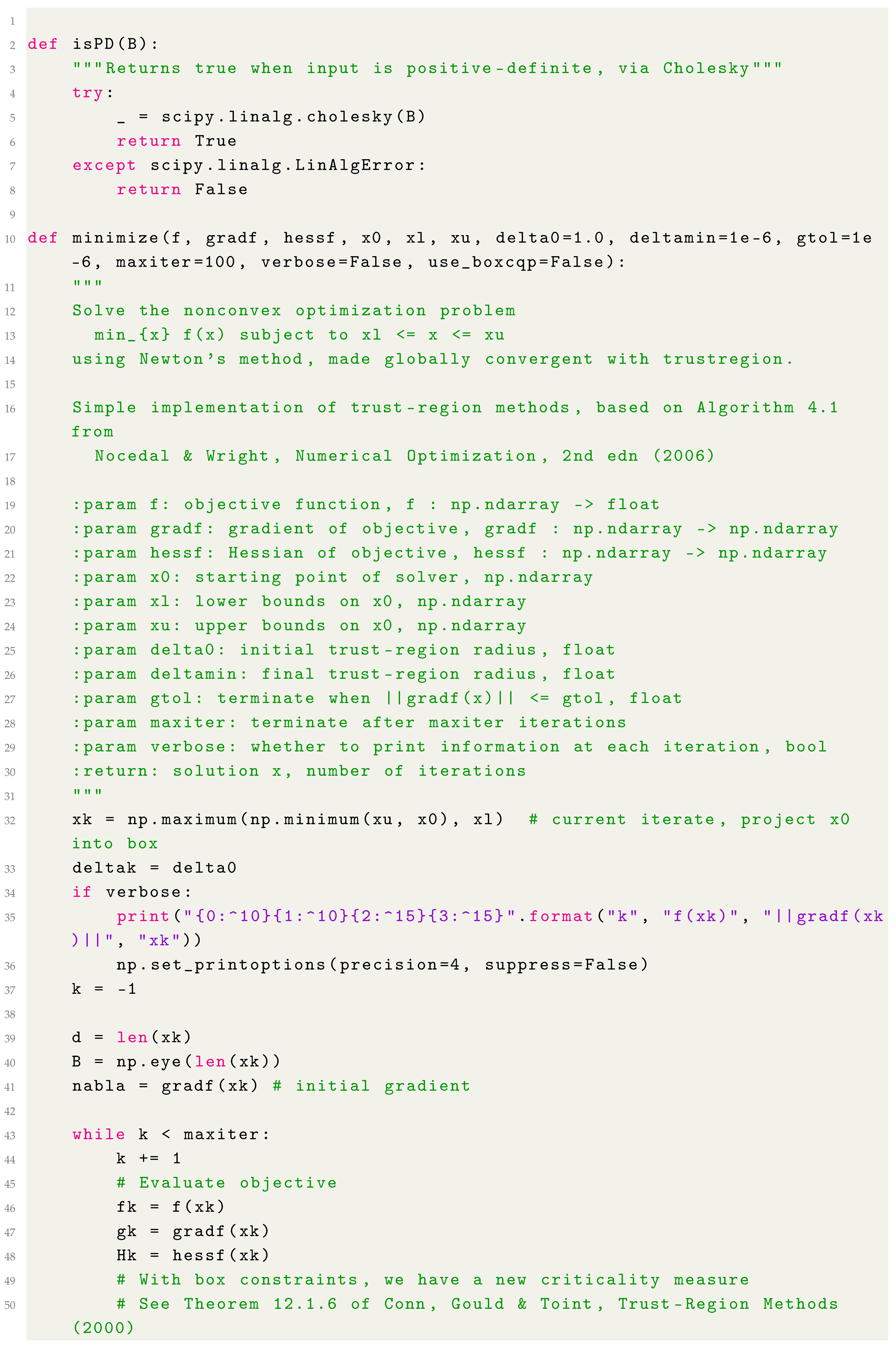

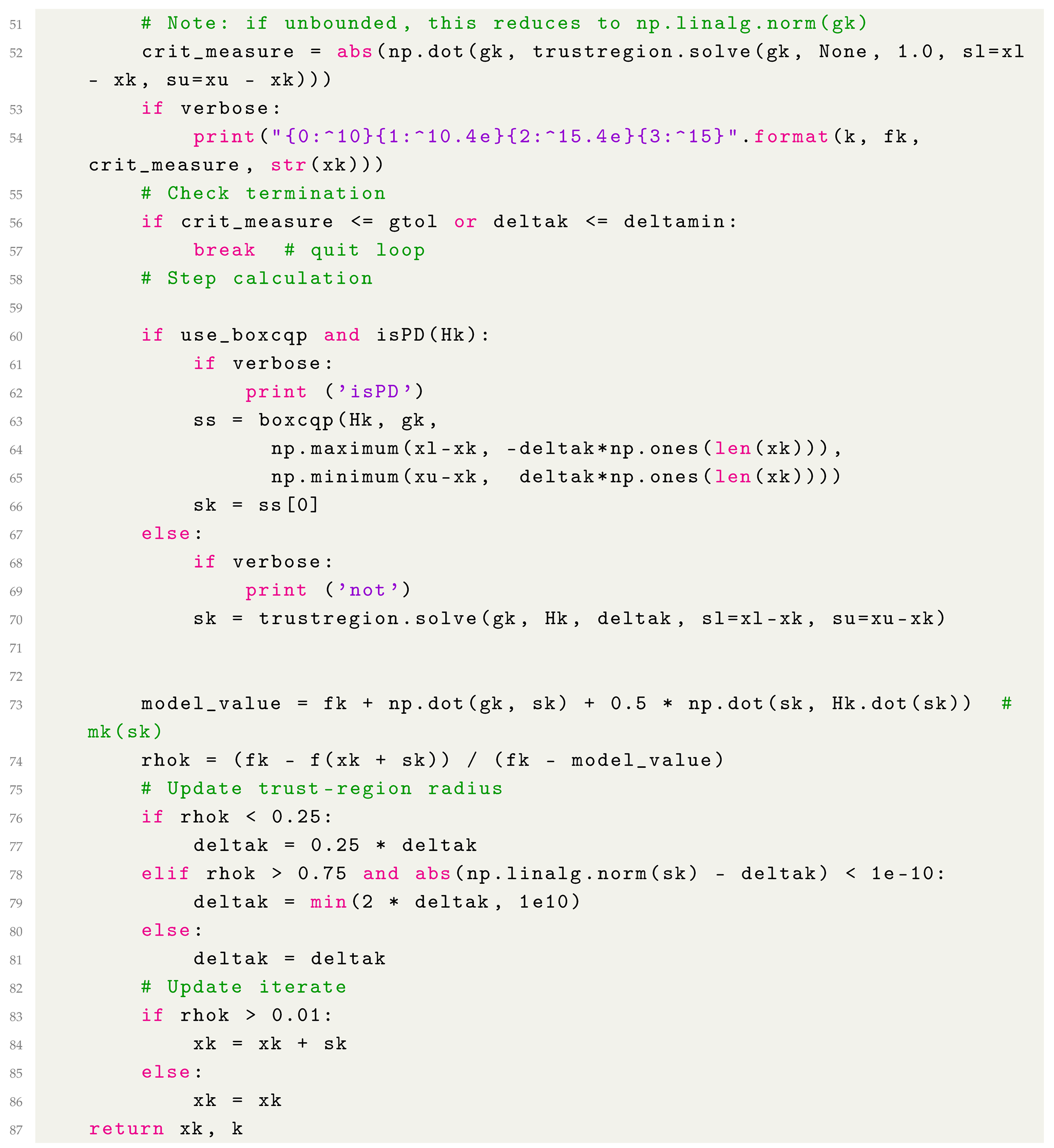

5.6. Bound-Constrained Non-Linear Optimization

We introduce a trust-region method for non-linear optimization with bound constraints, where the trust region is defined as a hyperbox, differing from the conventional hypersphere or hyperellipsoid approaches. The rectangular trust region is particularly well suited for problems with bound constraints, as it maintains its geometric structure even when intersecting the feasible region.

Trust-region methods fall in the category of sequential quadratic programming [41,42]. These algorithms are iterative and the objective function $f$ (assumed to be twice continuously differentiable) is approximated in a proper neighborhood of the current iterate (the trust region), using a quadratic model. Namely, at the $k$-th iteration, the model is given by:

$$m_k(s) = f(x_k) + g_k^\top s + \frac{1}{2} s^\top B_k s,$$

where $g_k = \nabla f(x_k)$ and $B_k$, in the case of Newton’s method, is a positive definite modification of the Hessian, while in the case of quasi-Newton methods, it is a positive definite matrix produced by the relevant update.

The trust region may be defined by:

$$\mathcal{T}_k = \{ x \in \mathbb{R}^n : \|x - x_k\| \le \Delta_k \},$$

where $\Delta_k > 0$ is the trust-region radius. It is obvious that different choices for the norm lead to different trust-region shapes. The Euclidean norm corresponds to a hypersphere, while the $\ell_\infty$ norm defines a hyperbox.

Given the model and the trust region, we seek a step $s_k$, with $x_k + s_k \in \mathcal{T}_k$, that minimizes $m_k(s)$. We compare the actual reduction $f(x_k) - f(x_k + s_k)$ to the model reduction $m_k(0) - m_k(s_k)$. If they agree to a certain extent, the step is accepted and the trust region is either expanded or remains the same. Otherwise, the step is rejected and the trust region is contracted. The basic trust-region algorithm is sketched in Algorithm 2.

| Algorithm 2 Basic trust region |

|
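The accept/reject logic described above can be sketched in a few lines of Python. This is an illustration only and not the content of Algorithm 2: the thresholds `eta` and `0.75` and the factors `shrink` and `grow` are common textbook choices assumed here, and the function names are hypothetical.

```python
import numpy as np


def model_value(f0, g, B, s):
    """Quadratic model m(s) = f0 + g's + 0.5*s'Bs."""
    return f0 + g @ s + 0.5 * s @ B @ s


def trust_region_update(f, x, s, g, B, delta,
                        eta=0.1, shrink=0.5, grow=2.0):
    """Accept or reject the trial step s and update the radius.

    eta, shrink, grow are illustrative constants (assumptions).
    """
    f0 = f(x)
    actual = f0 - f(x + s)                      # actual reduction
    predicted = f0 - model_value(f0, g, B, s)   # model reduction
    rho = actual / predicted if predicted > 0 else -np.inf
    if rho >= eta:                              # sufficient agreement
        x_new = x + s
        delta_new = grow * delta if rho > 0.75 else delta
    else:                                       # poor agreement
        x_new = x
        delta_new = shrink * delta
    return x_new, delta_new, rho


# Toy check on f(x) = 0.5*||x||^2, where the model is exact.
f = lambda z: 0.5 * z @ z
x0 = np.array([1.0, 1.0])
g0, B0 = x0.copy(), np.eye(2)
x_acc, d_acc, rho_acc = trust_region_update(
    f, x0, np.array([-0.5, -0.5]), g0, B0, delta=1.0)
x_rej, d_rej, rho_rej = trust_region_update(
    f, x0, np.array([3.0, 3.0]), g0, B0, delta=1.0)
```

In the toy check the first step reduces the function exactly as the model predicts and is accepted with an enlarged radius, while the second step increases the model value and is rejected with a reduced radius.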

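Consider the bound-constrained problem:

$$\min_{x} \; f(x) \quad \text{subject to} \quad \ell \le x \le u.$$

The unconstrained case is obtained by letting $\ell_i \to -\infty$ and $u_i \to +\infty$.

Let $x_k$ be the k-th iterate of the trust-region algorithm. With the $\ell_\infty$ norm, the trust region around $x_k$ is the hyperbox $-\Delta_k \le s_i \le \Delta_k$ in terms of the step $s = x - x_k$, and its intersection with the feasible region is again a hyperbox.

Hence, step 3 of Algorithm 2 becomes:

$$\min_{s} \; m_k(s) \quad \text{subject to} \quad \max(\ell_i - x_{k,i}, -\Delta_k) \le s_i \le \min(u_i - x_{k,i}, \Delta_k), \quad i = 1, \dots, n,$$

where $m_k$ is the quadratic model of the objective at $x_k$ and $\Delta_k$ is the trust-region radius.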
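The bounds of the resulting box-constrained subproblem follow directly from intersecting the trust box with the feasible box. A minimal sketch, with a hypothetical function name and toy values, is:

```python
import numpy as np


def step_box(x, lower, upper, delta):
    """Bounds on the step s so that x + s stays inside both the
    feasible box [lower, upper] and the trust box of half-width delta."""
    lo = np.maximum(lower - x, -delta)
    hi = np.minimum(upper - x, delta)
    return lo, hi


# Toy example: the first variable is unbounded, the second lives in [0, 1].
x = np.array([0.0, 0.9])
lower = np.array([-np.inf, 0.0])
upper = np.array([np.inf, 1.0])
lo, hi = step_box(x, lower, upper, delta=0.5)
```

For the unbounded variable the step is limited only by the trust-region radius, whereas for the second variable the upper limit comes from the nearby bound. The pair `(lo, hi)` together with the model's gradient and a positive definite Hessian approximation is exactly the kind of data a box-constrained QP solver takes as input.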

We have developed a hybrid trust-region algorithm that uses the Hessian matrix to determine the appropriate optimization approach. When the Hessian is positive definite, the algorithm solves the box-constrained quadratic subproblem (see Equation (20)). However, if the Hessian is indefinite, we employ the classical method described in [41] to ensure stability and convergence. It is important to note that in the vicinity of a local minimum, the Hessian matrix is typically positive definite, reinforcing the effectiveness of this approach. The complete implementation of the algorithm is provided in Listing A3 (Python TrustBox Implementation). Preliminary results on the well-known Rosenbrock function, with random settings (bounded and unbounded), showed that (a) the Hessian is positive definite in half of the iterations and (b) we achieve a reduction in the total number of iterations.

6. Conclusions

We have presented an active-set algorithm for solving bound-constrained convex quadratic problems, leveraging an approach that dynamically updates both the primal and dual variables at each iteration. The algorithm efficiently determines the active set, allowing for systematic modifications that guide the solution toward feasibility and optimality while maintaining computational efficiency. This approach ensures robust convergence properties and significantly improves the solver’s performance, particularly in handling large-scale problems where traditional quadratic programming methods may struggle.

Extensive experimental testing has demonstrated the superior performance of our approach compared to several well-established quadratic programming solvers. Across a variety of problem sizes and structures, the proposed algorithm exhibited faster execution times, improved numerical stability, and enhanced scalability, making it a compelling alternative for applications requiring bound-constrained optimization. The method performed particularly well in large-scale settings, where efficiently handling constraints is crucial for reducing computational overhead.

Additionally, a trust-region method for non-linear objective functions has emerged as a natural extension of our active-set framework. By integrating the proposed algorithm into the subproblem solver, the trust-region approach is capable of efficiently handling both unconstrained and bound-constrained optimization problems. The flexibility of this integration allows for enhanced adaptability in solving non-linear problems where traditional approaches may fail due to instability or converge slowly.

Author Contributions

Conceptualization, I.E.L.; Software, K.V.; Validation, K.V. and I.E.L.; Writing—original draft, K.V.; Writing—review & editing, I.E.L.; Visualization, K.V.; Supervision, I.E.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Problem Descriptions

Appendix A.1. Random Problems

The Hessian matrices B have the form:

where

where is a positive real number controlling the condition number of B

and Z is a Householder matrix:

The vectors and b are created by the following procedure, which is controlled by two real numbers in , namely and . The algorithmic steps for the random problem creation are shown in Algorithm A1.

| Algorithm A1 Random problem creation |

|

We have created three classes of random problems:

- Problems for which the solution has approximately 50% of the variables on the bounds, with equal probability to be either on the lower or on the upper bound (, ).

- Problems for which the solution has approximately 90% of the variables on the bounds, with equal probability to be on either the lower or on the upper bound (, ).

- Problems for which the solution has approximately 10% of the variables on the bounds, with equal probability to be either on the lower or on the upper bound (, ).
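The Hessian construction described at the start of this appendix (a diagonal matrix that fixes the spectrum, rotated by a Householder matrix) can be sketched as below. The exact diagonal entries used in the experiments are not reproduced here: the log-spaced spectrum, the parameter name `ncond`, and the function name are assumptions made for illustration.

```python
import numpy as np


def random_hessian(n, ncond, rng):
    """Return a symmetric positive definite B = Z D Z' and its spectrum d.

    Assumption: eigenvalues are log-spaced in [1, 10**ncond], so the
    condition number of B is 10**ncond.
    """
    d = 10.0 ** (ncond * np.arange(n) / (n - 1))
    v = rng.standard_normal(n)
    # Householder matrix: symmetric and orthogonal.
    Z = np.eye(n) - 2.0 * np.outer(v, v) / (v @ v)
    B = Z @ np.diag(d) @ Z.T
    return B, d


rng = np.random.default_rng(42)
B, d = random_hessian(50, ncond=3, rng=rng)
eigs = np.linalg.eigvalsh(B)
```

Since the Householder matrix is orthogonal, the eigenvalues of B coincide with the chosen diagonal entries, which gives direct control over the conditioning of the test problem.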

Appendix A.2. Circus Tent Problem

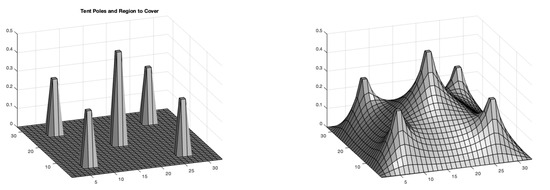

The circus-tent problem is a well-known example in optimization, taken from Matlab’s optimization package, demonstrating large-scale quadratic programming (QP) with simple bounds. In this problem, the objective is to determine the equilibrium shape of an elastic tent supported by five poles over a square lot, while minimizing the system’s potential energy under constraints imposed by the poles and the ground. The problem is formulated as a convex quadratic optimization task, where the quadratic objective function represents the elastic properties of the tent material, and the constraints enforce lower bounds set by the support poles and the ground surface [44].

Beyond this specific example, similar structural optimization problems can be posed as convex quadratic programming formulations in various areas of engineering and applied mathematics. For instance, in elastic membrane modeling, finding the equilibrium shape of a membrane under constraints involves minimizing the Dirichlet energy, which represents the elastic potential energy and leads to a quadratic objective function with bound constraints [45,46,47]. This formulation is particularly relevant in material science and computational physics, where deformable surfaces need to be optimized under fixed constraints.

Another key application is structural design optimization, where engineers optimize material distribution in load-bearing structures to achieve maximum efficiency while adhering to displacement and stress constraints. This optimization framework is used in architectural engineering, aerospace, and mechanical design, where lightweight yet stable structures are crucial [48]. Additionally, mechanical equilibrium analysis often involves minimizing elastic potential energy in mechanical systems with deformable components, leading to convex QP formulations [24].

These examples illustrate the broad applicability of convex quadratic programming with box constraints in structural optimization, where the goal is to determine equilibrium configurations that minimize energy functions while satisfying physical constraints.

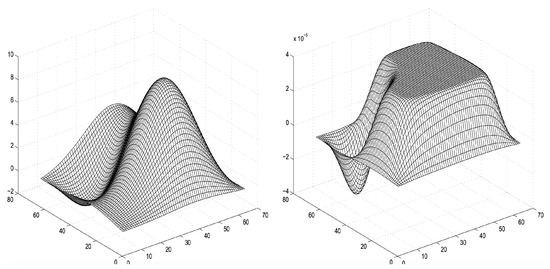

Figure A1.

Circus-tent problem.

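As we can see on the left side of Figure A1, the problem has only lower bounds imposed by the five poles and the ground. The surface formed by the elastic tent is determined by solving the bound-constrained optimization problem:

$$\min_{x} \; f(x) = \frac{1}{2} x^\top H x + c^\top x \quad \text{subject to} \quad x \ge \ell,$$

where $f(x)$ corresponds to the energy function, $c$ is the vector of its linear term, $\ell$ collects the lower bounds, and H is a 5-point finite difference Laplacian over a square grid.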
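A small dense version of the 5-point finite-difference Laplacian can be assembled with Kronecker products, as sketched below. Unit grid spacing and homogeneous Dirichlet boundary treatment are assumed, the function name is hypothetical, and a practical implementation would use a sparse format instead of dense arrays.

```python
import numpy as np


def laplacian_5pt(nx, ny):
    """Dense 5-point (negative) Laplacian on an nx-by-ny grid of
    interior nodes, unit spacing, Dirichlet boundary (assumptions)."""
    def second_diff(n):
        # 1-D stencil [-1, 2, -1]
        return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

    return (np.kron(np.eye(ny), second_diff(nx))
            + np.kron(second_diff(ny), np.eye(nx)))


H = laplacian_5pt(4, 3)
min_eig = np.linalg.eigvalsh(H).min()
```

The matrix has 4 on the diagonal and -1 for each grid neighbour, and it is symmetric positive definite, which is what makes the tent problem a strictly convex QP.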

Appendix A.3. Biharmonic Equation Problem

The mathematical formulation follows standard texts in elasticity theory and variational methods [49,50,51,52,53].

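Let $\Omega \subset \mathbb{R}^2$ be a rectangular domain, and let $u(x, y)$ represent the vertical displacement of the membrane. The governing equation for the biharmonic problem is given by:

$$\Delta^2 u = f \quad \text{in } \Omega,$$

where $\Delta^2$ is the biharmonic operator, defined as $\Delta^2 u = \Delta(\Delta u)$, and f is the external vertical force applied to the membrane [53,54]. The boundary conditions for a clamped membrane are:

$$u = 0, \qquad \frac{\partial u}{\partial n} = 0 \quad \text{on } \partial\Omega.$$

This ensures that the membrane is fixed along the boundary and cannot rotate. Additionally, the membrane is constrained to remain below a given obstacle function $\psi(x, y)$, which introduces a bound constraint:

$$u(x, y) \le \psi(x, y) \quad \text{in } \Omega.$$

Multiplying the biharmonic equation by a test function v and integrating over $\Omega$ gives the weak form:

$$\int_{\Omega} \Delta u \, \Delta v \, d\Omega = \int_{\Omega} f \, v \, d\Omega \quad \text{for all } v \in H_0^2(\Omega),$$

where $H_0^2(\Omega)$ is the Sobolev space of functions with square-integrable second derivatives, satisfying the clamped boundary conditions [51]. The variational inequality formulation, incorporating the bound constraint $u \le \psi$, is given by:

$$\int_{\Omega} \Delta u \, \Delta (v - u) \, d\Omega \ge \int_{\Omega} f \, (v - u) \, d\Omega \quad \text{for all } v \in H_0^2(\Omega) \text{ with } v \le \psi.$$

This ensures that the solution u remains feasible under the obstacle constraint [55]. Using finite-element discretization, we approximate u in a finite-dimensional subspace $V_h \subset H_0^2(\Omega)$, where $V_h$ is spanned by basis functions $\varphi_i$, $i = 1, \dots, N$. We then write:

$$u_h(x, y) = \sum_{i=1}^{N} U_i \, \varphi_i(x, y).$$

The discretized bilinear form associated with the biharmonic operator leads to a symmetric positive semidefinite stiffness matrix K, giving the system:

$$K U = F,$$

where $U$ is the vector of nodal values and F is the discrete load vector. Enforcing the bound constraint at each node, we obtain the quadratic programming problem:

$$\min_{U} \; \frac{1}{2} U^\top K U - F^\top U \quad \text{subject to} \quad U_i \le \psi_i, \quad i = 1, \dots, N,$$

where $\psi_i$ is the value of the obstacle function at node i.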

This is a convex quadratic program with bound constraints. We see an example in Figure A2 of a membrane under the influence of a vertical force. The function describing the force is given by:

and

Figure A2.

The force is presented on the left, and the deformation on the right.

Appendix A.4. Intensity-Modulated Radiation Therapy

The dose distribution is expressed as a linear combination of fluence elements, allowing the dose calculation to be formulated in terms of a matrix–vector representation form [56] as

where represents the vector of dose distributions, indicating the dose for every voxel in the patient, H refers to the dose deposition matrix, combining the distribution vectors of all beamlets, and denotes the fluence vector containing the beamlet weights. In this study, the computation algorithm for H follows the method described in [57], employing a scatter radius of 3 cm. The quadratic objective function applied consists of two terms:

The first term represents a commonly used quadratic dose objective that has been adapted to include voxel-specific importance factors. Here, represents the dose distribution resulting from the fluence vector , while stands for the dose objective associated with voxels within the volume v. Each volume v is assigned an overall importance factor along with a set of voxel-specific importance factors denoted by . The tilde denotes the diagonal matrix form of the coefficient vector . This method considers a coefficient vector whose dimension equals the total number of patient voxels, but only some coefficients are non-zero, which is determined by the implementation of voxel-specific importance factors, with a maximum number of non-zero coefficients in being equal to the number of voxels in volume v.

The second term in Equation (A14) is the smoothing term, regulated by a smoothing factor . This term encourages the fluence to be smooth. Inspired by [58], the second derivative of the fluence was used as an indicator for smoothness. If the second derivative equals zero, the fluence is linear (linearly increasing or decreasing, like a wedge or constant).

For a two-dimensional fluence, the Laplacian of the fluence can be discretized using standard difference formulae for a fluence element . With resolutions h and k of the fluence in the x- and y-direction, respectively, we have

The ideal case for a smooth fluence is when . We choose to keep the denominator so the smoothing factor is independent of the fluence grid size. The discretization can be written in a matrix M, such that .
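The discretized Laplacian can be assembled into a matrix acting on the flattened fluence, as in the sketch below. Only interior fluence elements receive a row, which is an assumption made here because the boundary treatment is not specified; the function name and the grid sizes are hypothetical.

```python
import numpy as np


def fluence_laplacian_matrix(nx, ny, h, k):
    """Second-difference Laplacian for a fluence stored row-major with
    shape (ny, nx); element (i, j) sits at flat index j*nx + i.
    One row per interior element (assumption)."""
    rows = []
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            row = np.zeros(nx * ny)
            p = j * nx + i
            row[p] = -2.0 / h**2 - 2.0 / k**2
            row[p - 1] = row[p + 1] = 1.0 / h**2     # x-neighbours
            row[p - nx] = row[p + nx] = 1.0 / k**2   # y-neighbours
            rows.append(row)
    return np.array(rows)


nx, ny, h, k = 5, 4, 0.5, 1.0                 # illustrative grid
M = fluence_laplacian_matrix(nx, ny, h, k)

ii, jj = np.meshgrid(np.arange(nx), np.arange(ny))
X, Y = h * ii, k * jj
wedge = (2.0 + 3.0 * X - Y).ravel()           # linear (wedge-like) fluence
curved = (X**2).ravel()                       # non-linear fluence
lap_wedge = M @ wedge
lap_curved = M @ curved
```

A linear fluence is mapped to zero, so it incurs no smoothing penalty, while the quadratic profile yields its constant second derivative at every interior element.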

The scalar c in Equation (A16) can be neglected for minimization of . Matrix A is symmetric and positive definite.

Appendix A.5. Support Vector Classification

In this problem case, we are going to deal with the two-dimensional classification problem, to separate two classes using a hyperplane , which is determined from available examples:

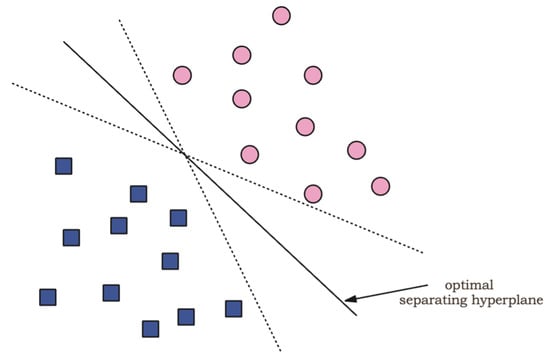

Furthermore, it is desirable to produce a classifier that will work well on unseen examples, i.e., it will generalize well. Consider the example in Figure A3. There are many possible linear classifiers that can separate the data, but there is only one that maximizes the distance to the nearest data point of each class. This classifier is termed the optimal separating hyperplane and, intuitively, one would expect it to generalize best.

Figure A3.

Maximum distance classifier.

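The formulation of the maximum distance linear classifier (if we omit the constant term b of the hyperplane equation, also known as the explicit bias) is a convex quadratic problem with simple bounds on the variables [14,59,60,61]. The resulting problem has the form:

$$\min_{\alpha} \; \frac{1}{2} \alpha^\top Q \alpha - e^\top \alpha \quad \text{subject to} \quad 0 \le \alpha_i \le C, \quad i = 1, \dots, l, \tag{A20}$$

where $e = (1, \dots, 1)^\top$ and $Q_{ij} = y_i y_j K(x_i, x_j)$ with $i, j = 1, \dots, l$, and $K(\cdot, \cdot)$ is the kernel function performing the non-linear mapping into the feature space. The parameters $\alpha_i$ are Lagrange multipliers of an original quadratic problem, that define the separating hyperplane using the relation:

$$w = \sum_{i=1}^{l} \alpha_i y_i \, \phi(x_i),$$

where $\phi$ is the feature map associated with the kernel, $K(x_i, x_j) = \phi(x_i)^\top \phi(x_j)$.

Hence, the separating surface is given by:

$$f(x) = \operatorname{sign}\Big( \sum_{i=1}^{l} \alpha_i y_i K(x_i, x) \Big).$$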

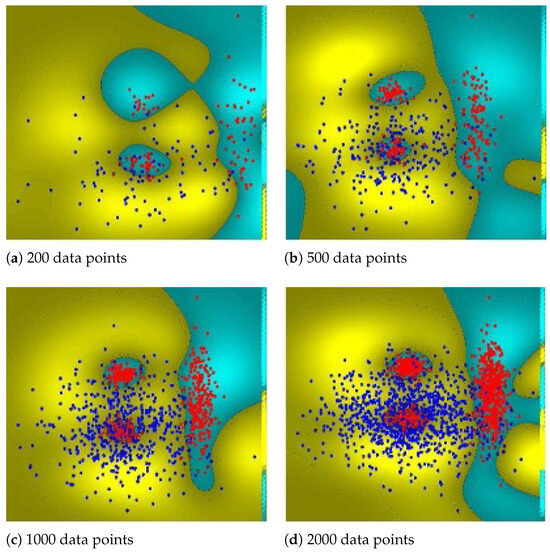

In our study, we used the two-dimensional CLOUDS dataset [35], involving two distinct classes. We formulated the problem outlined in Equation (A20) by employing an RBF Kernel function defined as , with C set to 100. The methodology for our experiments included the following steps:

- We first extracted l examples from the dataset to create the training set, leaving the remaining (5000 − l) examples for the test set.

- Next, we constructed the matrix Q corresponding to the problem in Equation (A20).

- Each solver was then applied to generate the separating surfaces and determine test-set errors.

Notably, due to a high condition number in matrix Q, the problem became ill-conditioned, which we mitigated by adding a small positive value to the main diagonal of Q. The classification surfaces achieved for l = 200, 500, 1000, and 2000 training examples from the CLOUDS dataset are depicted in Figure A4.
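The construction of the matrix Q, including the diagonal shift, can be sketched as follows. The kernel width parameter `gamma`, the shift value, and the synthetic data are assumptions made for illustration; they are not the settings used in the experiments, and the function names are hypothetical.

```python
import numpy as np


def rbf_kernel(X, gamma):
    """K[i, j] = exp(-gamma * ||x_i - x_j||^2); gamma is an assumed
    parameterization of the RBF width."""
    sq = np.sum(X**2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(d2, 0.0))


def svm_hessian(X, y, gamma, shift):
    """Q[i, j] = y_i * y_j * K(x_i, x_j), plus a small diagonal shift
    that improves the conditioning of Q."""
    K = rbf_kernel(X, gamma)
    Q = (y[:, None] * y[None, :]) * K
    return Q + shift * np.eye(len(y))


rng = np.random.default_rng(7)
X = rng.standard_normal((50, 2))              # synthetic 2-D points
y = np.where(rng.random(50) < 0.5, -1.0, 1.0)  # synthetic labels
Q = svm_hessian(X, y, gamma=1.0, shift=1e-6)   # shift value is illustrative
min_eig = np.linalg.eigvalsh(Q).min()
```

With the shift in place, Q is strictly positive definite, and the box bounds of the resulting QP are 0 and C for every variable.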

The classification surfaces of the 2D Support Vector Machine (SVM) shown in the images evolve as the number of training data points increases. In (a) 200 data points, the decision boundary is highly irregular and appears to overfit the sparse dataset, struggling to generalize well across the feature space. There are regions with disconnected decision surfaces, indicating a lack of sufficient training samples to capture the underlying data distribution effectively.

As the number of points increases in (b) 500 data points, the decision boundary becomes more refined, though it still exhibits some irregularities, particularly in regions with complex data distributions. The increased density of support vectors (marked points along the boundary) suggests that the classifier is still adjusting to local variations. By (c) 1000 data points, the classification surface becomes smoother, demonstrating improved generalization. The previously disconnected boundary regions start forming a more coherent separation, reducing excessive curvature in low-density areas. Finally, in (d) 2000 data points, the decision surface stabilizes, capturing the overall structure of the dataset more effectively. The regions corresponding to different classes are now more clearly separated, and the classifier exhibits better robustness against noise and outliers.

Figure A4.

Four instances of classification problems used in this study.

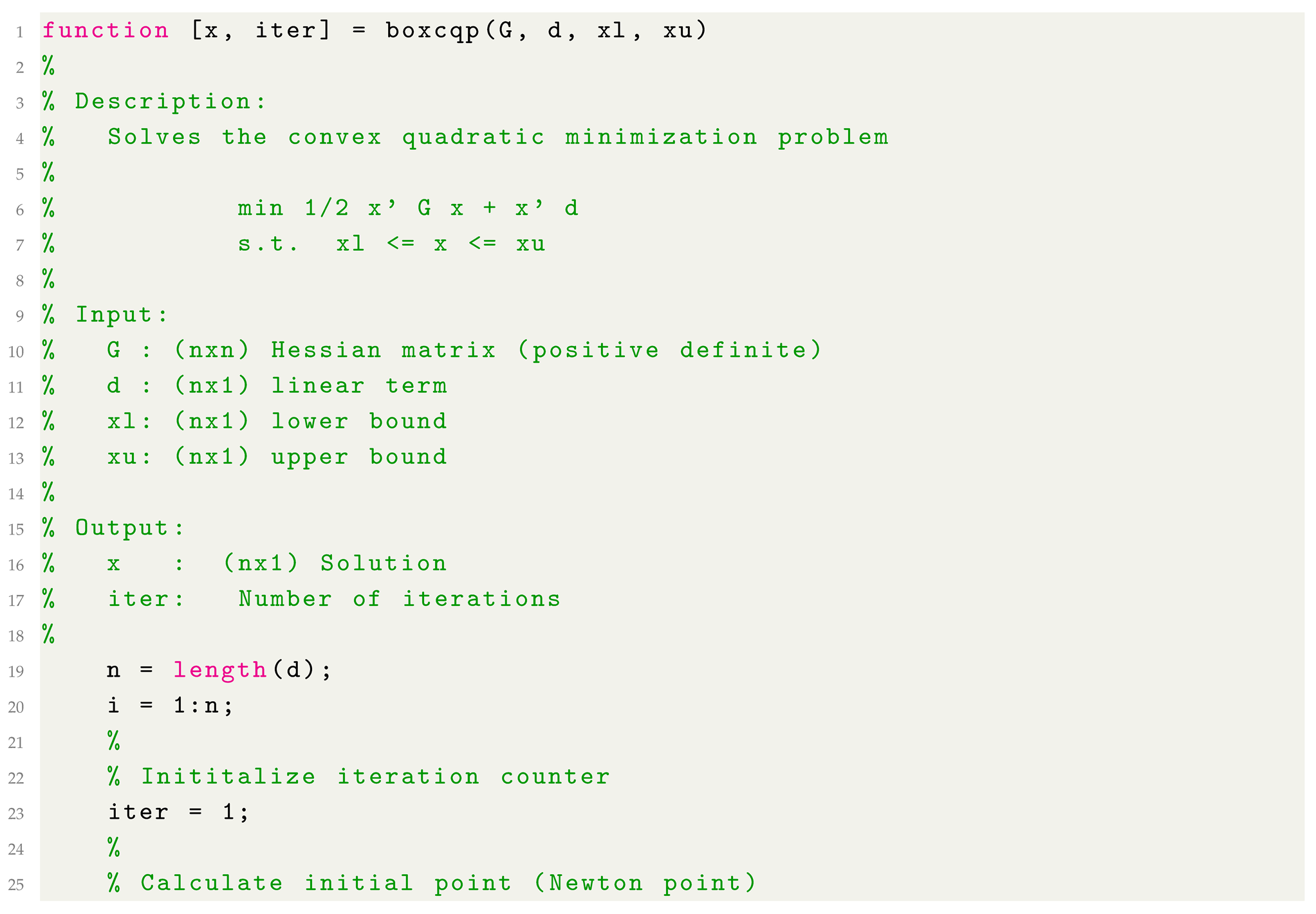

Appendix B. The Code

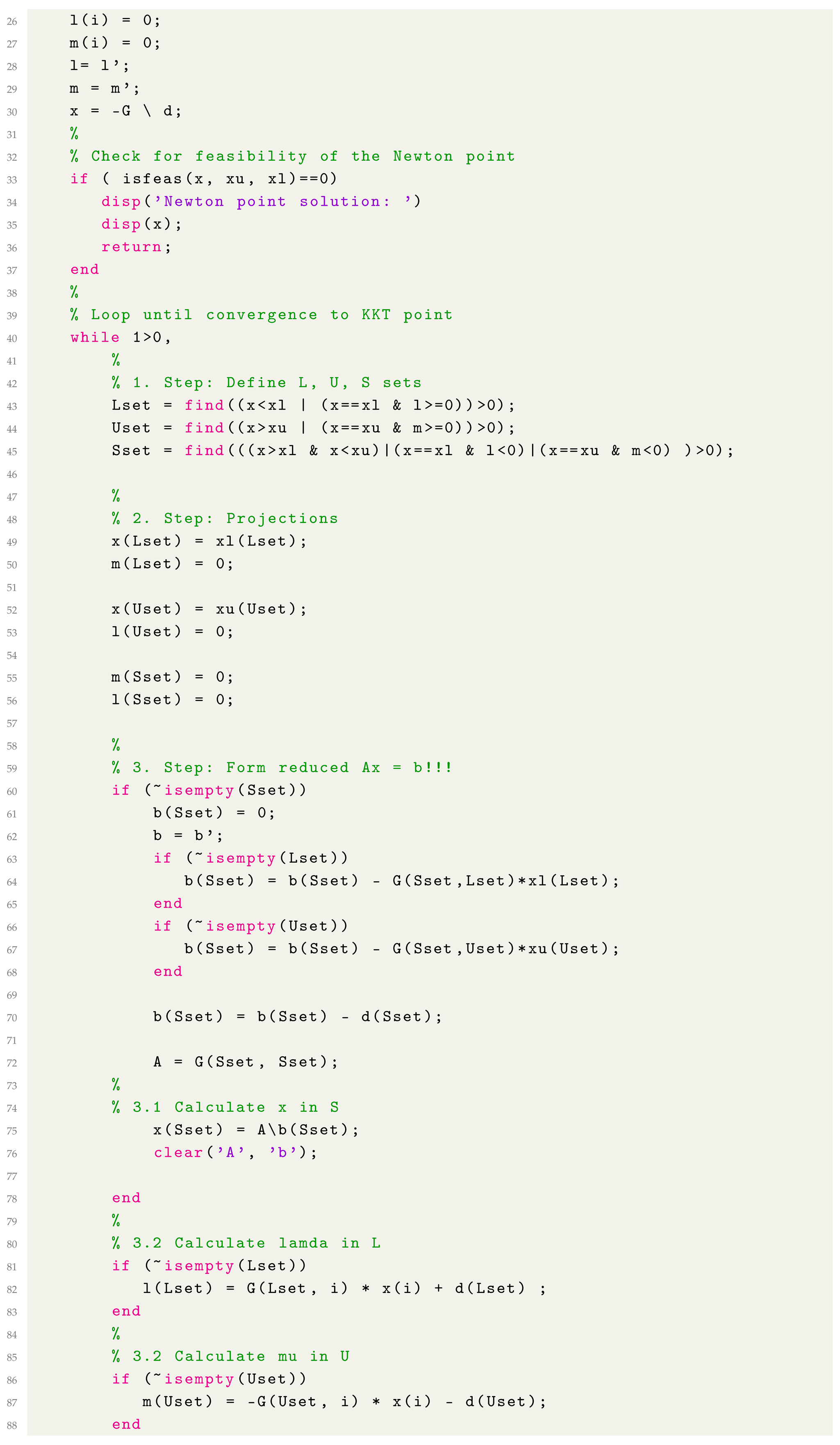

We present the Matlab version of the proposed quadratic programming code BoxCQP.

| Listing A1. Matlab implementation. |

|

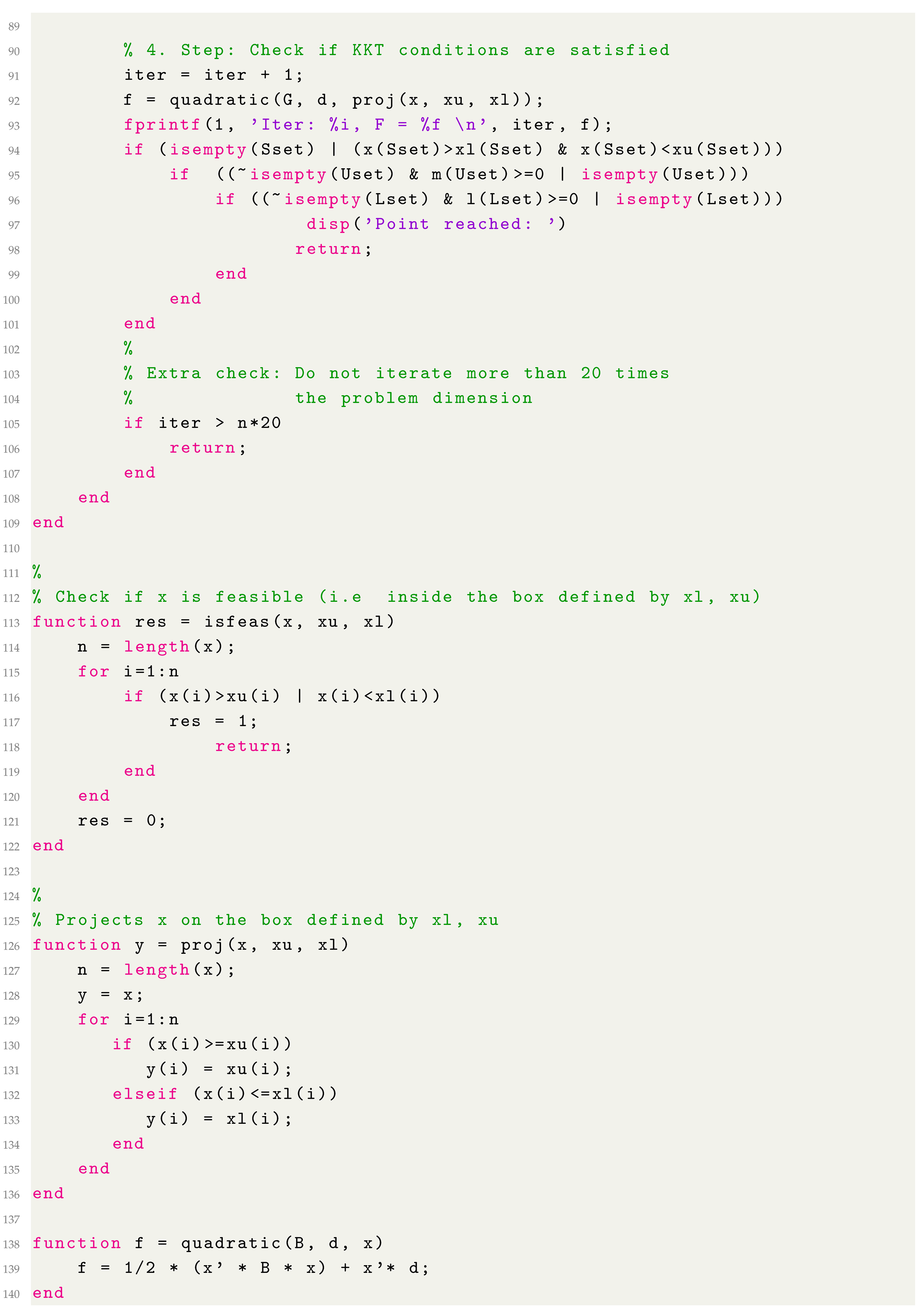

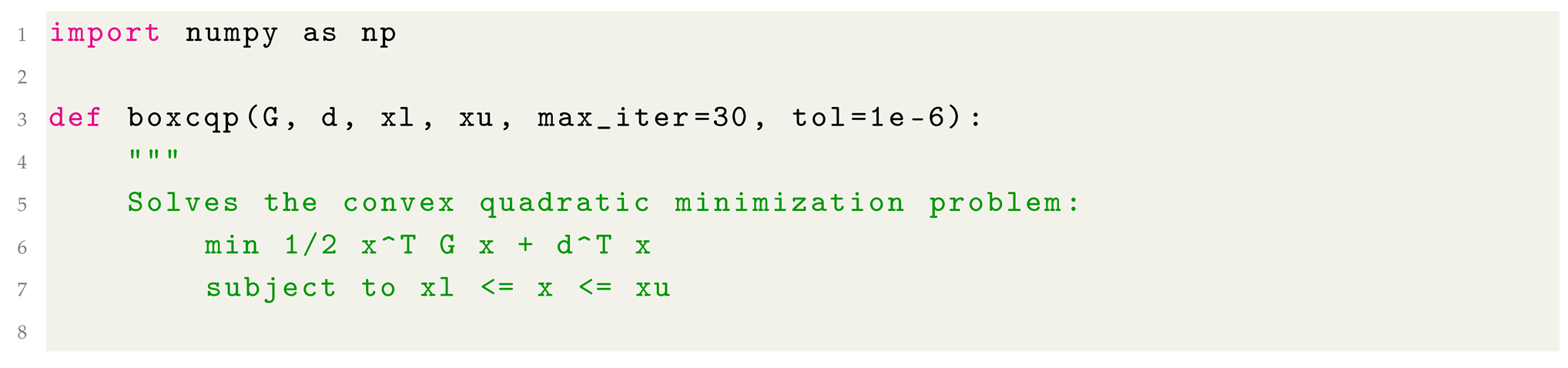

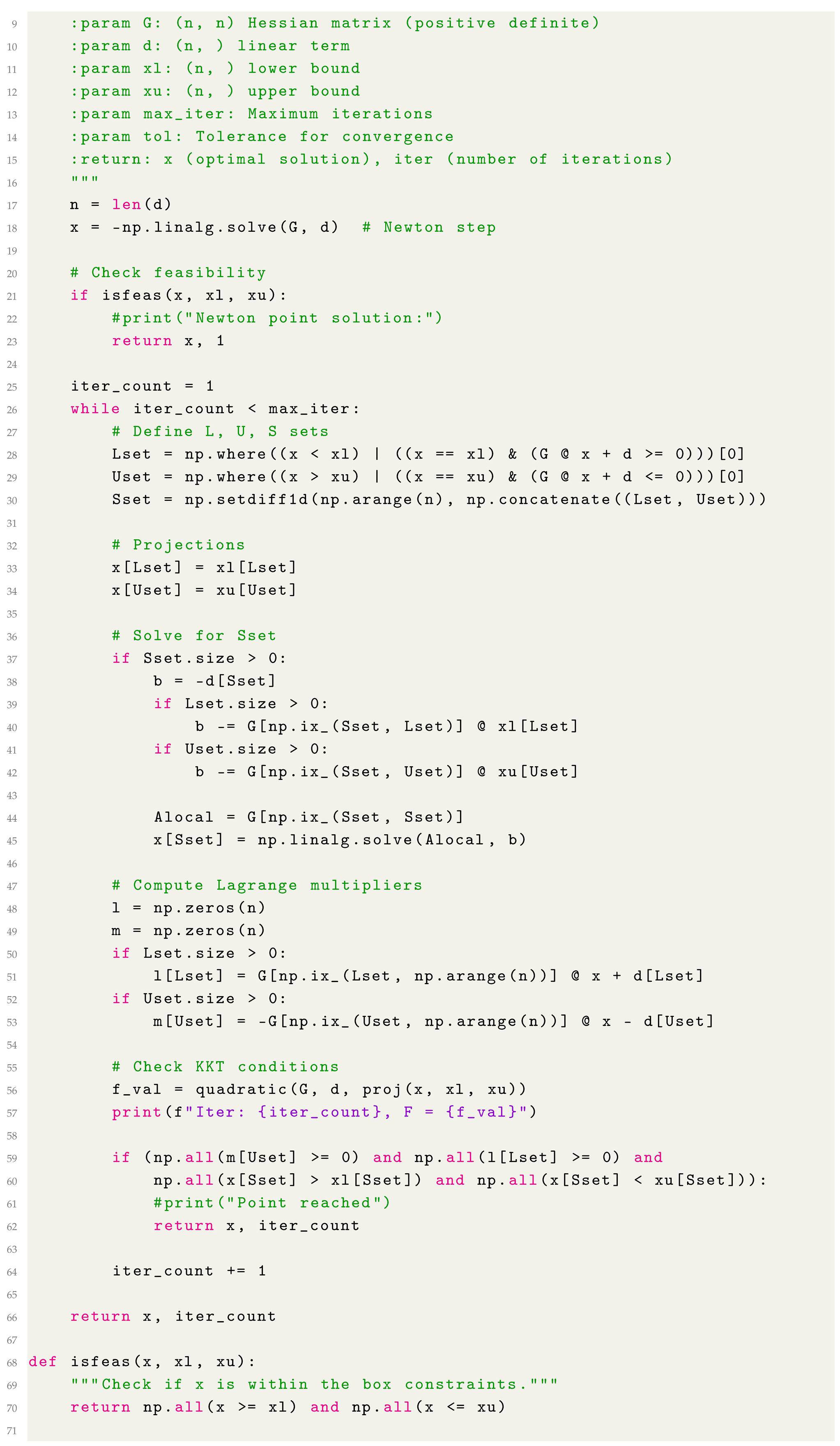

We present the Python version of the proposed quadratic programming code BoxCQP.

| Listing A2. Python implementation. |

|

| Listing A3. Python TrustBox Implementation. |

|


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).