Abstract

This paper introduces Harmonizer, a universal framework designed for tokenizing heterogeneous input signals, including text, audio, and video, to enable seamless integration into multimodal large language models (LLMs). Harmonizer employs a unified approach to convert diverse, non-linguistic signals into discrete tokens via its FusionQuantizer architecture, built on FluxFormer, to efficiently capture essential signal features while minimizing complexity. We enhance features through STFT-based spectral decomposition, Hilbert transform analytic signal extraction, and SCLAHE spectrogram contrast optimization, and train using a composite loss function to produce reliable embeddings and construct a robust vector vocabulary. Experimental validation on music datasets such as E-GMD v1.0.0, Maestro v3.0.0, and GTZAN demonstrates high fidelity across 288 s of vocal signals (MSE = 0.0037, CC = 0.9282, Cosine Sim. = 0.9278, DTW = 12.12, MFCC Sim. = 0.9997, Spectral Conv. = 0.2485). Preliminary tests on text reconstruction and UCF-101 video clips further confirm Harmonizer’s applicability across discrete and spatiotemporal modalities. Rooted in the universality of wave phenomena and Fourier theory, Harmonizer offers a physics-inspired, modality-agnostic fusion mechanism via wave superposition and interference principles. In summary, Harmonizer integrates natural language processing and signal processing into a coherent tokenization paradigm for efficient, interpretable multimodal learning.

MSC:

68T07

1. Introduction

Large language models (LLMs) have successfully processed natural language [1,2,3,4], but integrating multimodal inputs requires robust tokenization strategies [5,6,7]. However, existing approaches struggle to align modalities such as text, audio, video, and sensor data into a coherent format that LLMs can effectively process [8]. As a remedy, this paper proposes Harmonizer, a universal signal tokenization framework that enables seamless tokenization of diverse input signals for multimodal LLMs. Employing a unified tokenization strategy ensures a consistent and efficient representation of multimodal data [9,10,11], thus enhancing the adaptability and scalability of LLMs across various domains and applications [12,13,14].

The implementation of Harmonizer faces several critical technical challenges. First, it must address the heterogeneity of input signals, handling differences in representation (e.g., audio waveforms versus image pixels) [15,16]. Second, temporal and spatial resolution pose challenges as sequences often vary in length and resolution [17]. Third, scalability is essential to maintain efficiency as both the number of modalities and the complexity of data increase [18,19]. Finally, preserving contextual semantics ensures accurate integration of cross-modal information (e.g., audio-text alignment) for coherent multimodal reasoning and inference [20]. In NLP, tokenization involves breaking text into smaller units (e.g., words or subwords) [21,22,23] and mapping them to numerical representations using a predefined vocabulary that captures the syntactic and semantic essence of the language [24]. These standardized representations enable LLMs to learn contextual relationships and nuances [25,26]. (In subsequent mentions, we refer to large language models (LLMs) and natural language processing (NLP) only by their abbreviations.)

However, applying these well-established principles to signal processing introduces a different set of challenges [27]. Unlike textual data, which inherently consist of discrete units that can be directly mapped to tokens, signals, such as audio, radio, medical, or sensor data, do not naturally possess a vocabulary [28]. Signals are continuous, time-varying data forms composed of an infinite range of values over time or frequency [29], representing physical quantities such as sound pressure, electromagnetic fields, or electrical activity [30]. Consequently, there is no direct equivalent to a “word” or “sentence” in signal data, making traditional NLP tokenization techniques unsuitable [31]. This absence of an intrinsic vocabulary creates a critical gap that requires an innovative approach to effective signal representation [32,33,34,35]. At its core, Harmonizer is grounded in fundamental wave-based principles. Continuous signals—be they acoustic, electromagnetic, or sensor readings [36,37,38,39,40,41]—encode information via smooth variations in amplitude, frequency, and phase [42,43,44]. Fourier analysis guarantees any such signal admits a complete sinusoidal decomposition, furnishing a universal feature basis [45]. Critically, wave superposition and interference allow multiple streams to combine instantaneously and losslessly—no hand-crafted alignment is required—paving the way for robust, real-time fusion across modalities [46,47,48,49]. By leveraging these natural properties, our framework unifies preprocessing, quantization, and tokenization under a single, physically motivated paradigm.

Previous works in signal processing and NLP have laid the foundation for this study. Early research in quantization, such as the Lloyd–Max quantization algorithm [50], established efficient scalar quantization by minimizing the mean squared error between original and quantized signals [51,52]. Later, vector quantization techniques exploited correlations within signal data to produce compact and representative encodings [53,54]. In parallel, NLP saw the emergence of effective tokenization methods, including subword tokenization [5] and byte-pair encoding (BPE) [55] that decompose words into smaller units to improve model generalization [7,56].

To bridge the gap between continuous signal data and the discrete token representations required by machine learning models, we leverage advanced vector quantization techniques to create a meaningful vocabulary for signals [57]. Analogous feature-space augmentation and self-supervised loss strategies—exemplified by PatchUp within a metric-learning framework—have proven to substantially improve embedding diversity and generalization, and can be directly applied to enrich the tokenization of continuous modalities such as audio and video signals [58,59]. At its core, the model employs a quantization vector that compresses signals while preserving essential characteristics and integrates them into a FusionQuantizer architecture built on a FluxFormer-like backbone [60,61]. In addition, advanced preprocessing techniques, including Short-Time Fourier Transform (STFT) [62], Hilbert Transform [63], and Spectrogram Contrast Limited Adaptive Histogram Equalization (SCLAHE) [64], are used to extract rich and nuanced representations from signals. An innovative multi-objective loss function further optimizes the quality of the generated embedding vectors, ensuring that the input data are effectively represented [65,66].

The exponential growth of signal data in fields such as telecommunications, medical diagnostics, multimedia applications, and the Internet of Things (IoT) has driven the need for more efficient methods of signal representation, compression, and analysis [67,68]. Traditional approaches often depend on domain-specific knowledge and lack standardized methodologies for tokenizing signals [69,70], highlighting a critical gap [17]. Addressing this gap requires new frameworks that bridge signal processing and machine learning to enable more universal and efficient representation techniques [71,72,73].

Recent advancements in transformer architectures have significantly improved the processing of multimodal data [74]. Models like Perceiver and Perceiver IO demonstrate the ability to handle various input types, including images, audio, and point clouds, using a unified attention mechanism [75,76]. These models employ latent bottleneck attention to efficiently process high-dimensional data, a technique that has inspired frameworks like Harmonizer to develop modality-agnostic tokenization strategies [77]. Such strategies are crucial for converting diverse input signals into coherent token sequences suitable for large language models (LLMs) [14,78]. In self-supervised learning, models such as wav2vec 2.0 show that pre-training on raw audio can yield robust speech representations without labeled data [79,80]. Likewise, BEiT applies masked image modeling to train vision transformers through the reconstruction of masked patches [81]. These examples highlight the potential of tokenization schemes that align well with unsupervised and pretext tasks in different modalities [82].

Contrastive learning further increases the effectiveness of multimodal tokenization [83]. CLIP, for example, uses a contrastive objective to align the image and text embeddings, enabling a better cross-modal understanding [84,85]. Integrating such techniques into tokenization frameworks ensures that generated tokens are both syntactically consistent and semantically informative, which improves the model’s ability to relate information across modalities [83,86]. Moreover, models like VATT show that learning from raw video, audio, and text data is possible using convolution-free transformers [87]. These models rely on multimodal contrastive loss functions to extract meaningful representations for downstream tasks [88,89]. Unified processing through consistent multimodal tokenization is the key to achieving this level of performance [90]. The evolution of these models underscores the central role of robust tokenization in multimodal learning [90,91]. Using such innovations, frameworks like Harmonizer can generate more efficient, generalizable, and semantically rich representations, improving adaptability across applications [92].

Transformer-based multimodal models, such as the multimodal transformer and the joint multimodal transformer, further illustrate the power of unified attention mechanisms [93,94]. These architectures often use modality-specific encoders followed by cross-modal attention layers to model complex relationships between inputs [95]. This approach proves especially useful in emotion recognition and sentiment analysis, where the fusion of text, audio, and visual cues is essential [96]. Innovations like dual-level feature restoration, as implemented in the Efficient Multimodal Transformer, enhance the tokenization process by preserving fine-grained details, leading to better performance in complex tasks [91,97]. Self-supervised learning (SSL) has emerged as a powerful method to extract representations from unlabeled multimodal data [98,99]. Frameworks like Self-Supervised MultiModal Versatile Networks (MMVs) and Modality-Agnostic Transformer-based Self-Supervised Learning (MATS2L) show how tokenization approaches tailored for SSL can capture both modality-specific and cross-modal features [100,101]. These systems utilize tasks such as masked token prediction and contrastive learning to develop meaningful token representations [102].

In medical imaging, self-supervised multimodal tokenization has enabled the extraction of high-quality features from MRI and CT data, thus improving diagnostics [103,104]. In remote sensing, SSL-based tokenization frameworks have helped integrate satellite data from different sensors, improving land cover classification [105]. Together, the synergy between tokenization strategies, transformer models, and self-supervised objectives continues to redefine the landscape of multimodal learning [98,105]. These developments lay a strong foundation for universal frameworks like Harmonizer, capable of handling various input types efficiently and meaningfully [106,107].

This paper makes the following contributions:

- Universal Tokenization Framework: We introduce Harmonizer, the first data-driven vocabulary approach that seamlessly tokenizes text, audio, video, and sensor inputs for LLMs.

- FusionQuantizer and Streaming FluxFormer: We design a novel FusionQuantizer architecture atop a streaming FluxFormer backbone, combining STFT, Hilbert, and SCLAHE preprocessing with hybrid vector quantization for robust, low-latency inference.

- Multi-Objective Training: We formulate and balance adversarial, time-domain, spectral, and perceptual loss terms, ensuring high fidelity across modalities and dynamic ranges.

- Cross-Domain Validation: We demonstrate Harmonizer’s versatility through extensive music evaluations (E-GMD, Maestro, GTZAN) and preliminary text (ASCII encoding, one and two words) and video (UCF-101) experiments.

In summary, our proposed approach offers a groundbreaking solution for applying NLP-inspired tokenization techniques to signal processing [29,108,109]. By creating a representative vocabulary for signals and employing a robust quantization vector approach, it enables efficient signal representation and manipulation, paving the way for future advancements in both signal processing and machine learning.

2. Overview of Harmonizer

LLMs have successfully processed natural language [1,2,3,4], but integrating multimodal inputs requires robust tokenization strategies [5,6,7]. However, existing approaches struggle to align modalities such as text, audio, video, and sensor data into a coherent format that LLMs can effectively process [8,110]. As a remedy, this paper proposes Harmonizer, a universal signal tokenization framework that enables seamless tokenization of diverse input signals for multimodal large language models (MLLMs). Employing a unified tokenization strategy ensures consistent and efficient representation of multimodal data, thereby enhancing the adaptability and scalability of MLLMs across various domains and applications.

The deployment of Harmonizer encounters several significant technical obstacles. Primarily, it must manage the heterogeneity of input signals by accommodating varying representations (e.g., audio waveforms versus image pixels) [15,16]. Additionally, temporal and spatial resolution differences present challenges since sequences frequently differ in length and clarity [17]. Furthermore, scalability is critical to ensure efficiency as both the number of modalities and the complexity of the data expand [18,19]. Finally, it is essential to preserve contextual semantics, ensuring contextual information is accurately retained and integrated between diverse data types to facilitate seamless interaction [20].

- Tokenization via Quantization and Vocabulary Generation:

- Signal Quantization: Harmonizer introduces a unique approach to signal processing by creating a quantization vector that serves as the basis for developing a signal vocabulary. This dynamic quantization technique transforms the continuous signal space into a finite set of discrete levels, thereby reducing the signal data size while preserving its essential features [50,51,57,111,112].

- Signal Tokenization: Building upon the quantization process, the framework creates a vocabulary of tokens representing different signal segments. Each token corresponds to a specific quantized level, much like how words are tokenized in NLP [22,23,113,114]. This structured tokenization enables machine learning models to interpret signals effectively.

2.1. Creating a Vocabulary for Signals

One of the most challenging aspects of applying NLP techniques to signals is the absence of a predefined vocabulary. Harmonizer addresses this using a data-driven approach to generate a vocabulary that captures the unique characteristics of the signal. The process involves the following steps:

- Feature Extraction: Initially, relevant features are extracted from the signals (e.g., amplitude, frequency, and phase in the case of audio signals) [30,115,116].

- Clustering: Unsupervised learning techniques are then used to group similar features, identifying patterns and repetitive elements within the signal data [52,117,118,119].

- Token Generation: Each resulting cluster is assigned a unique token, forming the basis of the signal vocabulary. This process is iterative, refining the vocabulary over time to enhance accuracy and representation [120].
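The three steps above can be sketched in a few lines. This is a minimal illustration, assuming plain k-means as the unsupervised clustering method and small hand-made feature vectors; the function names `build_vocabulary`, `nearest`, and `tokenize` are hypothetical and are not taken from the Harmonizer implementation.

```python
def nearest(feature, centroids):
    """Index of the centroid closest to `feature` (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(feature, centroids[i])),
    )


def build_vocabulary(features, k, iters=20):
    """Cluster feature vectors with k-means; each centroid becomes one token."""
    # Deterministic initialisation (an assumption): evenly spaced samples.
    centroids = [list(features[i * len(features) // k]) for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for f in features:
            clusters[nearest(f, centroids)].append(f)
        for idx, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster is empty
                centroids[idx] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def tokenize(features, centroids):
    """Map each feature vector to the index (token id) of its nearest centroid."""
    return [nearest(f, centroids) for f in features]


# Toy (amplitude, frequency) features forming two well-separated groups.
features = [(0.0, 0.1), (0.1, 0.0), (0.05, 0.05), (5.0, 5.1), (5.1, 5.0), (4.9, 5.0)]
vocabulary = build_vocabulary(features, k=2)
tokens = tokenize(features, vocabulary)
```

Repeating `build_vocabulary` on new data with the previous centroids as a starting point would correspond to the iterative refinement mentioned in the last step.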

2.2. Signal Compression via Quantization Vector Techniques

Signal compression is critical for optimizing storage and transmission. Harmonizer leverages quantization vector techniques to encode high-dimensional signal data into a lower-dimensional form without significant loss of information [57,121,122]. By mapping complex signal data to a compact quantization vector, the framework achieves efficient compression while retaining the integrity and essential characteristics of the original signal. This compression methodology is particularly advantageous in scenarios where bandwidth is limited or storage capacity is constrained (e.g., in medical imaging applications, where high-resolution images need to be stored and transmitted without compromising diagnostic quality) [36].

3. Implementation Methodology of Harmonizer

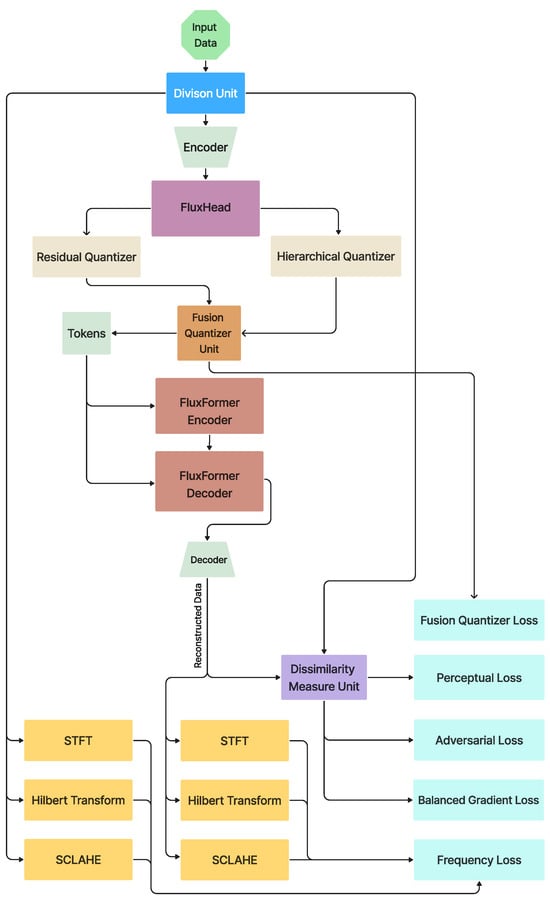

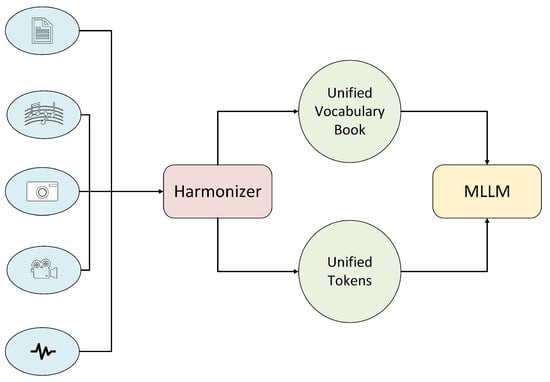

Harmonizer is designed as a universal tokenization framework that unifies the treatment of diverse input signals (e.g., audio, sensor data, text, video) for MLLMs. Figure 1 provides an overview of the Harmonizer architecture, illustrating its core components and the data flow from raw signal inputs to final tokenized outputs and reconstructed signal. The Division Unit in Figure 1 is in charge of data preparation. This unit can handle both continuous and discrete tokens; if the input is analog, the inputs are sampled into discrete-time signals before processing. The input is divided into chunks if it is a signal. Textual input is first transformed into a signal by combining a sine function with ASCII encoding. Video input is converted into a signal format by extracting and concatenating spatiotemporal patches.
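The text-to-signal step can be illustrated with a small sketch. The exact mapping used by the Division Unit is not specified here, so the code below makes an assumption: each ASCII character becomes one sine cycle whose amplitude is the character code divided by 127. The names `text_to_signal` and `signal_to_text` and the parameter `samples_per_char` are hypothetical.

```python
import math


def text_to_signal(text, samples_per_char=16):
    """Encode ASCII text as a sequence of amplitude-scaled sine cycles."""
    signal = []
    for ch in text:
        amplitude = ord(ch) / 127.0  # assumes 7-bit ASCII input
        for n in range(samples_per_char):
            signal.append(amplitude * math.sin(2 * math.pi * n / samples_per_char))
    return signal


def signal_to_text(signal, samples_per_char=16):
    """Invert text_to_signal by reading the peak amplitude of each cycle."""
    chars = []
    for start in range(0, len(signal), samples_per_char):
        segment = signal[start:start + samples_per_char]
        peak = max(abs(s) for s in segment)
        chars.append(chr(round(peak * 127)))
    return "".join(chars)


waveform = text_to_signal("Hi")
recovered = signal_to_text(waveform)
```

With `samples_per_char` divisible by four, each cycle contains a sample at the sine peak, which is what makes the amplitude readout exact in this toy setting.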

Figure 1.

High-Level Architecture of the Harmonizer Framework. This schematic shows the end-to-end pipeline: (1) Input Preprocessing: raw text, audio, and video are converted into STFT spectrograms, analytic signals via the Hilbert transform, and contrast-enhanced via SCLAHE; (2) Feature Encoding: FluxHead applies streaming multi-head attention to extract temporal and spectral cues; (3) Tokenization: FusionQuantizer vector-quantizes these features into discrete tokens, building a learned signal vocabulary; (4) Streaming Inference: FluxFormer uses sliding-window transformers to generate tokens on-the-fly with minimal latency; (5) Optimization: a multi-objective loss (adversarial, L1/L2 time-domain, STFT/Hilbert/SCLAHE spectral, and perceptual) refines reconstruction quality. The final output is a unified token sequence that any multimodal LLM can consume without additional modality-specific encoders.

3.1. Preprocessing and Feature Extraction

Harmonizer begins with domain-specific preprocessing steps that convert raw inputs, such as audio waveforms or sensor data streams, into feature representations suitable for quantization. For audio and other time-varying signals, the STFT is computed to capture local frequency content and detect transients and harmonics. The Hilbert Transform is applied to extract analytic signal properties, including instantaneous amplitude and phase, facilitating precise modeling of nonstationary data. The SCLAHE algorithm enhances local contrast in spectrograms, making subtle features more distinguishable. These modular operations convert raw data into high-resolution spectral or time-frequency representations that capture critical nuances before tokenization.
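The two transform-based steps can be sketched without external libraries. The code below is a simplified illustration, assuming a naive O(n²) DFT, a Hann window, and the standard frequency-domain construction of the analytic signal; the window and hop sizes are placeholders, and SCLAHE is omitted. In practice a library FFT would replace `dft` and `idft`.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (for illustration only)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def stft_magnitude(x, win=32, hop=16):
    """Magnitude spectrogram: one list of win//2 + 1 bins per Hann-windowed frame."""
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * i / win) for i in range(win)]
    frames = []
    for start in range(0, len(x) - win + 1, hop):
        segment = [x[start + i] * hann[i] for i in range(win)]
        frames.append([abs(c) for c in dft(segment)[: win // 2 + 1]])
    return frames


def analytic_signal(x):
    """Analytic signal via the Hilbert transform: zero the negative frequencies."""
    n = len(x)
    gain = [0.0] * n
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        positive = range(1, n // 2)
    else:
        positive = range(1, (n + 1) // 2)
    for k in positive:
        gain[k] = 2.0
    spectrum = dft(x)
    return [z for z in idft([spectrum[k] * gain[k] for k in range(n)])]


tone = [math.cos(2 * math.pi * 4 * t / 64) for t in range(64)]
envelope = [abs(z) for z in analytic_signal(tone)]       # instantaneous amplitude
phase = [cmath.phase(z) for z in analytic_signal(tone)]  # instantaneous phase
spectrogram = stft_magnitude(tone)
```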

3.2. FluxHead-Based FusionQuantizer Unit

The core of Harmonizer is the FusionQuantizer, which extends our FluxHead and FluxFormer modules to learn a compact codebook of signal patterns. In the feature encoding stage, FluxHead and 1D Convolutional layers ingest preprocessed feature maps and leverage a streaming multi-head self-attention mechanism to integrate both temporal and spectral cues, ensuring that local patterns and global context are captured. FluxHead starts processing immediately on the first incoming frame, so the model can handle inputs of any length with minimal delay. The encoded features are then quantized into discrete tokens through a data-driven vector quantization process that learns a signal vocabulary representing diverse signal patterns such as partials, formants, and onsets. For multimodal data (e.g., audio combined with text), a cross-attention fusion mechanism aligns and unifies tokens across modalities, enabling seamless integration with downstream large language models.

3.3. Streaming Inference and LLM Integration

Once trained, Harmonizer operates in a streaming fashion. Incoming signals, whether audio frames, text chunks, or sensor batches, are processed in small segments via a sliding window, enabling near real-time token generation. Each segment is passed through the FluxHead and FluxFormer-based pipeline to produce tokens on the fly with minimal latency. These tokens are subsequently fed into or combined with large language models, allowing for context-aware, multimodal reasoning or generation without requiring complete input sequences. This design readily supports integration into Retrieval-Augmented Generation (RAG) systems and other knowledge-intensive workflows by unifying heterogeneous signals into a coherent token space.
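The sliding-window behaviour described above can be sketched as a generator that emits overlapping segments as soon as enough samples have arrived. This is an illustrative sketch only: `stream_chunks`, `window`, and `hop` are hypothetical names, and the sizes are placeholders rather than the settings used in the experiments.

```python
def stream_chunks(samples, window=4, hop=2):
    """Yield overlapping windows from an incoming sample stream.

    A window is emitted as soon as `window` samples are buffered, then the
    oldest `hop` samples are dropped (requires 0 < hop <= window).
    """
    buffer = []
    for sample in samples:
        buffer.append(sample)
        if len(buffer) == window:
            yield list(buffer)
            del buffer[:hop]


# Each emitted chunk would be passed to the tokenizer without waiting
# for the rest of the stream.
for chunk in stream_chunks(range(8)):
    pass  # e.g. tokens = tokenizer(chunk), where `tokenizer` is a placeholder
```

Because the generator never looks ahead, latency is bounded by the time needed to fill one window.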

3.4. Mathematical Formulation of Harmonizer with Attention Mechanism

3.4.1. Input Audio Signal Representation

An audio signal is represented as a multi-dimensional tensor:

$$X \in \mathbb{R}^{B \times C \times T},$$

where $B$ is the batch size, $C$ is the number of channels, and $T$ is the number of time steps. This tensor serves as the input to the model.

3.4.2. Encoding via Convolutional Layers

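The encoder begins with convolutional layers for feature extraction. For each convolutional layer $i$, the output is computed as

$$H_i = \sigma\left(W_i * H_{i-1} + b_i\right),$$

where $W_i \in \mathbb{R}^{F_i \times F_{i-1} \times k_i}$ is the convolutional filter, $F_i$ is the number of feature maps, $k_i$ is the kernel size, $b_i$ is the bias term, $\sigma$ denotes the ReLU activation function, ∗ represents convolution, and $H_0$ is the input tensor of Section 3.4.1. Let

$$H_{\text{conv}} = H_L$$

denote the final output after the last convolutional layer $L$.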

3.4.3. FluxHead: Streaming Multihead Attention Encoder

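The convolutional features are then processed by the FluxHead module. First, a positional encoding is added to the final convolutional output $H_{\text{conv}}$ as follows:

$$Z_0 = H_{\text{conv}} + P,$$

where $P$ is a positional encoding that can be computed using sinusoidal functions as follows:

$$P_{(m,\,2n)} = \sin\left(\frac{m}{10000^{2n/d}}\right), \qquad P_{(m,\,2n+1)} = \cos\left(\frac{m}{10000^{2n/d}}\right), \tag{5}$$

with $m$ as the position index, $n$ as the dimension index, and $d$ as the feature dimension.

- For each attention head $j$ ($j = 1, \dots, h$), the projections are computed as

$$Q_j = Z_0 W_j^{Q}, \qquad K_j = Z_0 W_j^{K}, \qquad V_j = Z_0 W_j^{V}.$$

- All head outputs are concatenated and projected as follows:

$$Z = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}, \qquad \mathrm{head}_j = \mathrm{softmax}\left(\frac{Q_j K_j^{\top}}{\sqrt{d_k}}\right) V_j,$$

where $d_k$ is the per-head dimension.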
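The positional encoding and the per-head attention described in this subsection can be sketched as follows. This is a minimal single-head illustration in plain Python, assuming the standard sinusoidal encoding and scaled dot-product attention; the streaming behaviour of FluxHead (for example, any restriction of each frame to past context) is not modelled, and the function names are hypothetical.

```python
import math


def positional_encoding(length, dim):
    """Sinusoidal encoding: sine on even feature indices, cosine on odd ones."""
    pe = [[0.0] * dim for _ in range(length)]
    for m in range(length):              # m: position index
        for n in range(0, dim, 2):       # n: even feature index
            angle = m / (10000 ** (n / dim))
            pe[m][n] = math.sin(angle)
            if n + 1 < dim:
                pe[m][n + 1] = math.cos(angle)
    return pe


def attention(queries, keys, values):
    """Scaled dot-product attention for one head (lists of row vectors)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        top = max(scores)                       # subtract max for stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w / total * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs


features = [[1.0, 0.0], [0.0, 1.0]]
encoded = [[f + p for f, p in zip(row, pe_row)]
           for row, pe_row in zip(features, positional_encoding(2, 2))]
head_output = attention(encoded, encoded, encoded)
```

A multi-head version would apply separate learned projections before calling `attention` for each head, then concatenate and project the results.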

3.4.4. FusionQuantizer

Within the FusionQuantizer module, the attention-refined features are quantized using a hybrid approach that combines residual and hierarchical quantization techniques. This design choice is motivated by two main factors:

- Residual Quantization: This method iteratively minimizes the quantization error by first approximating the input with a coarsely quantized code and then quantizing the residual (i.e., the difference between the input and its approximation). By doing so, it preserves fine-grained details that might otherwise be lost if only a single quantization step were applied.

- Hierarchical Quantization: In parallel, hierarchical quantization captures the data’s multi-scale semantic structure. It organizes the quantization process into several levels, enabling the model to extract both coarse (global) and fine (local) features from the input. This multi-level approach is especially useful for complex data representations.

By fusing the outputs of these two methods, the model benefits from both the refined approximation of residual quantization and the robust, multi-scale representation of hierarchical quantization. This fusion results in a latent representation that effectively balances representational fidelity with compactness, ultimately enhancing the model’s performance on downstream tasks.

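In the first-level quantization, the vocabulary codebook $\mathcal{C}_1$ and the first-level code $q_1$ are defined as

$$\mathcal{C}_1 = \{c^{(1)}_k\}_{k=1}^{K_1}, \qquad q_1 = \arg\min_{c \in \mathcal{C}_1} \lVert Z - c \rVert_2^2,$$

where $Z$ denotes the attention-refined features. A residual is computed as

$$r = Z - q_1,$$

which is then quantized using a second-level codebook $\mathcal{C}_2$:

$$q_2 = \arg\min_{c \in \mathcal{C}_2} \lVert r - c \rVert_2^2.$$

Hierarchical quantization operates directly on the attention-refined features to capture multi-scale semantic information:

$$q_h = Q_{\text{hier}}(Z).$$

The final quantized latent representation is obtained by fusing the outputs from both quantization strategies:

$$Z_q = \tfrac{1}{2}\left[(q_1 + q_2) + q_h\right].$$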

While the mathematical form above fuses residual and hierarchical codebook outputs, the underlying idea is straightforward. Residual quantization iteratively captures the fine-grained differences left over after a coarse approximation, ensuring precise reconstruction of subtle signal details. Hierarchical quantization, in contrast, targets multi-scale structure by first encoding broad, global patterns and then refining them at finer levels. By averaging these two perspectives, the FusionQuantizer benefits from both the accuracy of residual error minimization and the robustness of multi-level feature capture. This balanced fusion yields a compact yet expressive latent representation that faithfully preserves both coarse structure and fine details.
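The fusion idea can be made concrete with a scalar toy example. The sketch below assumes two-stage residual quantization, a two-level parent/child codebook as a stand-in for hierarchical quantization, and fusion by simple averaging as described above. The codebook values and the names `quantize` and `fusion_quantize` are illustrative assumptions, not the learned codebooks of the model.

```python
def quantize(value, codebook):
    """Return the codebook entry closest to `value`."""
    return min(codebook, key=lambda c: abs(value - c))


def fusion_quantize(value, coarse, fine, hierarchy):
    """Average a residual-quantized and a hierarchically quantized estimate."""
    # Residual path: coarse code first, then quantize what is left over.
    q1 = quantize(value, coarse)
    q2 = quantize(value - q1, fine)
    residual_estimate = q1 + q2

    # Hierarchical path: choose a coarse parent, then its best child.
    parent = quantize(value, list(hierarchy))
    hierarchical_estimate = quantize(value, hierarchy[parent])

    return 0.5 * (residual_estimate + hierarchical_estimate)


coarse = [0.0, 1.0]
fine = [-0.25, 0.0, 0.25]
hierarchy = {0.0: [-0.2, 0.0, 0.2], 1.0: [0.8, 1.0, 1.2]}
fused = fusion_quantize(0.8, coarse, fine, hierarchy)
```

For the input 0.8, the coarse code alone is off by 0.2, while the fused estimate lies much closer to the input, which illustrates why the second stage and the fusion are worthwhile.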

3.4.5. FluxFormer: Streaming Encoder and Decoder

To reconstruct and regenerate the input signal, the quantized latent is processed by a two-stage streaming transformer-based module called FluxFormer.

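The FluxFormer Encoder first augments the quantized latent $Z_q$ with an encoder positional encoding:

$$E_0 = Z_q + P^{\text{enc}},$$

where $P^{\text{enc}}$ is computed similarly (using sinusoidal functions, as shown in Equation (5)). For each attention head $j$ ($j = 1, \dots, h$), the encoder computes the following:

$$Q^{\text{enc}}_j = E_0 W_j^{Q,\text{enc}}, \qquad K^{\text{enc}}_j = E_0 W_j^{K,\text{enc}}, \qquad V^{\text{enc}}_j = E_0 W_j^{V,\text{enc}},$$

and the attention weights are as follows:

$$A^{\text{enc}}_j = \mathrm{softmax}\left(\frac{Q^{\text{enc}}_j (K^{\text{enc}}_j)^{\top}}{\sqrt{d_k}}\right).$$

The head outputs are then

$$\mathrm{head}^{\text{enc}}_j = A^{\text{enc}}_j V^{\text{enc}}_j.$$

All head outputs are concatenated and projected as follows:

$$\tilde{E} = \mathrm{Concat}(\mathrm{head}^{\text{enc}}_1, \dots, \mathrm{head}^{\text{enc}}_h)\, W^{O,\text{enc}}.$$

Then, it is followed by a feed-forward network (FFN) with a residual connection and layer normalization:

$$E = \mathrm{LayerNorm}\left(\tilde{E} + \mathrm{FFN}(\tilde{E})\right).$$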

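The FluxFormer Decoder regenerates the streaming signal from the encoder output $E$. A decoder positional encoding is added:

$$D_0 = E + P^{\text{dec}},$$

with $P^{\text{dec}}$ computed similarly. For each attention head $j$ in the decoder, the projections are computed as

$$Q^{\text{dec}}_j = D_0 W_j^{Q,\text{dec}}, \qquad K^{\text{dec}}_j = D_0 W_j^{K,\text{dec}}, \qquad V^{\text{dec}}_j = D_0 W_j^{V,\text{dec}},$$

and the attention weights are

$$A^{\text{dec}}_j = \mathrm{softmax}\left(\frac{Q^{\text{dec}}_j (K^{\text{dec}}_j)^{\top}}{\sqrt{d_k}}\right).$$

The head outputs are

$$\mathrm{head}^{\text{dec}}_j = A^{\text{dec}}_j V^{\text{dec}}_j.$$

After concatenation and projection,

$$\tilde{D} = \mathrm{Concat}(\mathrm{head}^{\text{dec}}_1, \dots, \mathrm{head}^{\text{dec}}_h)\, W^{O,\text{dec}}.$$

After that, FFN with residual connection and layer normalization is applied as follows:

$$D = \mathrm{LayerNorm}\left(\tilde{D} + \mathrm{FFN}(\tilde{D})\right).$$

The regenerated streaming signal $D$ is then passed to the convolutional decoder.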

3.4.6. Convolutional Decoder

The regenerated signal is upsampled via a series of transposed convolutional layers to reconstruct the full-resolution audio. For each decoder layer $i$, the reconstruction is computed as

$$Y_i = \phi\left(W^{\text{dec}}_i \otimes Y_{i-1} + b^{\text{dec}}_i\right),$$

where $W^{\text{dec}}_i$ is the transposed convolution kernel, $b^{\text{dec}}_i$ is the bias, ⊗ denotes the transposed convolution operator, $\phi$ is an activation function (ReLU in this equation), and $Y_0$ is the regenerated signal from the FluxFormer decoder. The final reconstructed output is

$$\hat{X} = Y_L,$$

aiming to replicate the original waveform $X$.

3.5. Multi-Objective Loss Functions

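The Harmonizer model is trained using a multi-objective loss function that integrates several components to ensure reconstruction fidelity across time-domain accuracy, spectral consistency, phase alignment, and perceptual realism. The total loss is defined as

$$\mathcal{L}_{\text{total}} = \lambda_{\text{adv}}\mathcal{L}_{\text{adv}} + \lambda_{L1}\mathcal{L}_{L1} + \lambda_{L2}\mathcal{L}_{L2} + \lambda_{\text{perc}}\mathcal{L}_{\text{perc}} + \lambda_{\text{STFT}}\mathcal{L}_{\text{STFT}} + \lambda_{\text{Hilbert}}\mathcal{L}_{\text{Hilbert}} + \lambda_{\text{SCLAHE}}\mathcal{L}_{\text{SCLAHE}}. \tag{30}$$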

To balance the contributions of each term in Equation (30), we selected the weight values listed in Table 1, which total 1.0.

Table 1.

Normalized weights assigned to each component of the multi-objective loss function, ensuring their sum equals 1.0.

This choice ensures that no single loss component dominates, so the model learns time-domain accuracy, perceptual quality, and spectral/phase alignment together.

3.5.1. Adversarial Loss

The adversarial loss is formulated using a hinge-based objective:

where denotes the discriminator output.
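For reference, the commonly used hinge objectives can be sketched as below. This is the standard formulation for adversarial training and is given as an assumption; which of the two terms enters the total loss, and how discriminator scores are aggregated, is not specified in this section. The function names are hypothetical.

```python
def hinge_discriminator_loss(real_scores, fake_scores):
    """Hinge loss for the discriminator: push real scores above +1, fake below -1."""
    real_term = sum(max(0.0, 1.0 - s) for s in real_scores) / len(real_scores)
    fake_term = sum(max(0.0, 1.0 + s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def hinge_generator_loss(fake_scores):
    """Hinge loss for the generator: raise the discriminator score of generated signals."""
    return -sum(fake_scores) / len(fake_scores)


d_loss = hinge_discriminator_loss([2.0, 0.5], [-2.0, 0.0])
g_loss = hinge_generator_loss([-2.0, 0.0])
```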

3.5.2. Perceptual Loss

The perceptual loss measures differences between feature maps of the real and generated signals:

3.5.3. Balanced Gradient Loss

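To achieve a balanced gradient loss, we combined L1 and L2 losses in our total loss function, weighting them with coefficients $\lambda_{L1}$ and $\lambda_{L2}$, respectively. The L1 loss is defined as

$$\mathcal{L}_{L1} = \lVert X - \hat{X} \rVert_1,$$

while the L2 loss is expressed as

$$\mathcal{L}_{L2} = \lVert X - \hat{X} \rVert_2^2,$$

where $X$ is the original waveform and $\hat{X}$ is its reconstruction. This balanced approach allows the model to leverage the advantages of both L1 and L2 losses, enhancing the robustness and accuracy of the signal reconstruction process.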

3.5.4. STFT Loss

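To enforce frequency-domain consistency, the STFT loss over multiple window sizes ($w \in \mathcal{W}$) is computed as

$$\mathcal{L}_{\text{STFT}} = \sum_{w \in \mathcal{W}} \big\lVert\, \lvert \mathrm{STFT}_w(X) \rvert - \lvert \mathrm{STFT}_w(\hat{X}) \rvert \,\big\rVert_1.$$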

3.5.5. Hilbert Transformation Loss

The Hilbert transformation loss leverages the Hilbert transform to accurately extract the amplitude envelope and instantaneous phase of the signal, ensuring alignment between the ground truth and reconstructed signals. The loss function is defined as

with denoting the Hilbert transform.

3.5.6. SCLAHE Loss

The SCLAHE loss ensures similarity between the contrast-enhanced spectrograms of the real and generated signals:

3.5.7. Weighting Factors and Conclusion

The coefficients $\lambda_{\text{adv}}$, $\lambda_{L1}$, $\lambda_{L2}$, $\lambda_{\text{perc}}$, $\lambda_{\text{STFT}}$, $\lambda_{\text{Hilbert}}$, and $\lambda_{\text{SCLAHE}}$ in Equation (30) balance the contributions of adversarial realism, waveform fidelity, perceptual similarity, and spectral/phase alignment. By appropriately tuning these weights, the model achieves high-fidelity audio reconstruction that is accurate in both the time and frequency domains.
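The weighted combination can be sketched as a small helper that checks the weights sum to 1.0 before mixing the terms. The weights and loss values below are placeholders chosen for illustration, not the values from Table 1, and only the L1 and L2 terms are computed here; the remaining terms would be supplied by their own functions. The names `l1_loss`, `l2_loss`, and `total_loss` are hypothetical.

```python
def l1_loss(target, estimate):
    """Mean absolute error between two equally long sample sequences."""
    return sum(abs(a - b) for a, b in zip(target, estimate)) / len(target)


def l2_loss(target, estimate):
    """Mean squared error between two equally long sample sequences."""
    return sum((a - b) ** 2 for a, b in zip(target, estimate)) / len(target)


def total_loss(terms, weights):
    """Weighted sum of named loss terms; weights must be normalised to 1.0."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("loss weights must sum to 1.0")
    return sum(weights[name] * terms[name] for name in weights)


target = [0.0, 1.0, 2.0]
estimate = [0.0, 1.0, 4.0]
terms = {"l1": l1_loss(target, estimate), "l2": l2_loss(target, estimate)}
weights = {"l1": 0.5, "l2": 0.5}  # placeholder weights, not those of Table 1
loss = total_loss(terms, weights)
```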

3.6. Experimental Setup

All training and evaluation experiments were conducted using PyTorch (Stable v2.7.0) [123] on NVIDIA GPUs at the University of Tennessee at Chattanooga’s Multi-Disciplinary Research Building (MDRB) supercomputing facility [124]. We leveraged both P100 and A100 GPU nodes provided by the center to benchmark Harmonizer’s efficiency:

- Primary setup: Single NVIDIA Tesla P100 (16 GB RAM). This shows that Harmonizer can train with limited GPU memory.

- Accelerated setup: Dual NVIDIA A100 (80 GB RAM each) with third-gen Tensor Cores and mixed-precision (FP16/BF16/TF32). We leveraged Tensor Cores on the A100 to achieve up to 10–20× speedups on matrix multiplications compared to the P100.

Key hyperparameters and data settings are summarized in Table 2.

Table 2.

Detailed hyperparameter settings and hardware configurations used for training and evaluating the Harmonizer framework.

These settings are reported to support the reproducibility of our results. They also show that Harmonizer trains on modest GPU hardware and scales to high-performance clusters when available.

4. Results

In this section, we present a comprehensive evaluation of the Harmonizer model’s performance on two distinct types of vocal music signals: (1) low-tempo/low-dynamic signals and (2) high-tempo/high-dynamic signals. In each audio scenario, the model was employed to reconstruct the input from its quantized embedding, and the regenerated signals were compared against the corresponding ground truth using a suite of quantitative metrics—including time-domain (MSE, CC), frequency-domain (STFT, spectral convergence), and perceptual (MFCC similarity, PSNR) measures—to provide a holistic assessment of Harmonizer’s fidelity and robustness.

Beyond these core audio experiments, we also report preliminary results on text and video inputs. Early tests with our nascent Text-Harmonizer pipeline show accurate reconstruction of short strings encoded as ASCII-modulated sinusoids, while initial Video-Harmonizer trials on UCF-101 clips demonstrate promising geometric and color fidelity.

It is important to note that all evaluations—across music, text, and video—used data unseen during training. The high-fidelity correspondence in each modality confirms that Harmonizer’s learned vocabulary and FusionQuantizer generalize effectively. Our specialized Text-Harmonizer and Video-Harmonizer variants remain under active development and will be detailed in forthcoming publications; insights from these models will be fed back into the main Harmonizer framework to yield an even more robust, modality-agnostic tokenization backbone.

4.1. Overview of Evaluation Metrics

We employ multiple metrics to capture various aspects of signal quality and fidelity. Table 3 summarizes the evaluation metrics by providing their abbreviation, expanded version, typical range, and the mathematically expressed optimal value criterion. These metrics assess both fine-grained sample-level accuracy and perceptual similarity, ensuring a comprehensive evaluation of our regenerated audio signals.

Table 3.

Quantitative evaluation metrics for audio reconstruction, listing each abbreviation, its full name, the numerical range of values, and the criterion for optimal performance; the lower section presents pixel-wise image-based metrics computed on spectrograms.
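As a rough illustration of the sample-level and spectral metrics summarized in Table 3, the following sketch computes MSE, the correlation coefficient, cosine similarity, and spectral convergence with NumPy. The function names are illustrative, and the spectral-convergence form (a Frobenius-norm ratio of magnitude spectra) is a commonly used definition that we assume here; it may differ in detail from the exact formulation used in our pipeline.

```python
import numpy as np

def mse(gt, gen):
    """Mean squared error over all samples."""
    gt, gen = np.asarray(gt, float), np.asarray(gen, float)
    return float(np.mean((gt - gen) ** 2))

def correlation_coefficient(gt, gen):
    """Pearson correlation between the two waveforms."""
    return float(np.corrcoef(gt, gen)[0, 1])

def cosine_similarity(gt, gen):
    """Cosine of the angle between the two waveforms viewed as vectors."""
    gt, gen = np.asarray(gt, float), np.asarray(gen, float)
    return float(np.dot(gt, gen) / (np.linalg.norm(gt) * np.linalg.norm(gen)))

def spectral_convergence(mag_gt, mag_gen):
    """Assumed definition: ||S_gt - S_gen||_F / ||S_gt||_F on magnitude spectra."""
    mag_gt, mag_gen = np.asarray(mag_gt, float), np.asarray(mag_gen, float)
    return float(np.linalg.norm(mag_gt - mag_gen) / np.linalg.norm(mag_gt))

# Toy signals (not taken from the evaluation data): a 440 Hz tone and a
# slightly attenuated copy with a small added 880 Hz component.
t = np.linspace(0.0, 1.0, 8000, endpoint=False)
gt = np.sin(2 * np.pi * 440 * t)
gen = 0.98 * gt + 0.01 * np.cos(2 * np.pi * 880 * t)

print(mse(gt, gen), correlation_coefficient(gt, gen), cosine_similarity(gt, gen))
print(spectral_convergence(np.abs(np.fft.rfft(gt)), np.abs(np.fft.rfft(gen))))
```

MSE and spectral convergence are lower-is-better, whereas the correlation coefficient and cosine similarity approach 1 for a faithful reconstruction.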

Pixelwise Metrics on Spectrograms (MSE, SSIM, and PSNR)

In our evaluation framework, we treat the spectrogram as a two-dimensional image, which allows us to apply advanced pixelwise metrics for assessing the quality of our signal reconstructions. While conventional sample-level MSE computes the average squared error over the entire audio waveform (thus providing a global error measure), it may overlook small, localized distortions that are critical for perceptual audio quality. By interpreting the spectrogram as an image, each pixel corresponds to a specific time-frequency component. This enables us to detect and quantify localized reconstruction errors that might not significantly affect the global MSE but can impact the perceived fidelity of the audio.

In our implementation, the Pixelwise MSE (PMSE) is computed directly on the STFT-derived spectrograms. Specifically, for two spectrogram images $S_{\mathrm{GT}}$ (ground truth) and $S_{\mathrm{Gen}}$ (generated), PMSE is defined as:

$$\mathrm{PMSE} = \frac{1}{N} \sum_{i=1}^{N} \left( S_{\mathrm{GT}}(i) - S_{\mathrm{Gen}}(i) \right)^{2} \tag{38}$$

where N is the total number of pixels in the spectrogram image (see Equation (38)). This localized error measure is highly effective at detecting small regions with high reconstruction error, even when the overall error remains low.
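The pixelwise MSE described above can be sketched in a few lines of NumPy. The example below is a minimal illustration on a synthetic spectrogram (the array sizes and the injected defect are assumptions chosen for clarity, not evaluation data): the mean over all pixels stays small, while the per-pixel squared-error map exposes the localized defect.

```python
import numpy as np

def squared_error_map(spec_gt, spec_gen):
    """Per-pixel squared error between two equally shaped spectrograms."""
    spec_gt = np.asarray(spec_gt, dtype=float)
    spec_gen = np.asarray(spec_gen, dtype=float)
    if spec_gt.shape != spec_gen.shape:
        raise ValueError("spectrograms must have the same shape")
    return (spec_gt - spec_gen) ** 2

def pixelwise_mse(spec_gt, spec_gen):
    """PMSE: mean of the squared-error map over all N pixels."""
    return float(np.mean(squared_error_map(spec_gt, spec_gen)))

# Synthetic 64 x 64 spectrogram with a single 2 x 2 defect of magnitude 4.
spec_gt = np.zeros((64, 64))
spec_gen = spec_gt.copy()
spec_gen[10:12, 20:22] = 4.0

pmse = pixelwise_mse(spec_gt, spec_gen)              # 4 * 16 / 4096
peak = squared_error_map(spec_gt, spec_gen).max()    # largest single-pixel error
print(pmse, peak)
```

Inspecting the error map alongside the scalar PMSE is what allows small, high-error regions to be located even when the average remains low.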

Furthermore, we enhance this analysis by computing the following:

- SSIM (Structural Similarity Index Measure): Evaluates local changes in luminance, contrast, and structure, aligning closely with human visual perception.

- PSNR (Peak Signal-to-Noise Ratio): Quantifies the ratio between the maximum possible signal power and the noise power, ensuring that the dynamic range and fine details of the spectrogram are preserved.

To further improve the sensitivity of our evaluation, we apply a series of pre-processing steps on the error maps—including percentile-based clamping, median filtering, and Gaussian smoothing—to suppress global outliers and emphasize localized discrepancies. This provides a comprehensive, granular insight into both the overall fidelity and the perceptually relevant distortions in the reconstructed audio.
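The PSNR computation and the error-map pre-processing chain (percentile clamping, median filtering, Gaussian smoothing) can be sketched as follows. This is a NumPy-only illustration under stated assumptions: the 3 × 3 median window, the Gaussian width `sigma=1.0`, the edge padding, and the use of the ground-truth dynamic range as the PSNR peak are all illustrative choices rather than the settings of our implementation.

```python
import numpy as np

def psnr_db(spec_gt, spec_gen, data_range=None):
    """PSNR in dB; the peak defaults to the ground-truth dynamic range (assumption)."""
    spec_gt = np.asarray(spec_gt, float)
    spec_gen = np.asarray(spec_gen, float)
    if data_range is None:
        data_range = spec_gt.max() - spec_gt.min()
    err = np.mean((spec_gt - spec_gen) ** 2)
    return float("inf") if err == 0 else float(10.0 * np.log10(data_range ** 2 / err))

def clamp_percentile(err_map, pct=95.0):
    """Clip values above the given percentile to suppress global outliers."""
    return np.minimum(err_map, np.percentile(err_map, pct))

def median3x3(img):
    """3 x 3 median filter with edge padding."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    windows = [padded[r:r + h, c:c + w] for r in range(3) for c in range(3)]
    return np.median(np.stack(windows), axis=0)

def gaussian_smooth(img, sigma=1.0):
    """Separable Gaussian blur with edge padding."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    conv = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)   # smooth along the time axis
    return np.apply_along_axis(conv, 0, rows)     # then along the frequency axis

def preprocess_error_map(err_map, pct=95.0, sigma=1.0):
    """Clamp, median-filter, then smooth an error map."""
    return gaussian_smooth(median3x3(clamp_percentile(err_map, pct)), sigma)

# Synthetic error map with one extreme outlier.
rng = np.random.default_rng(0)
err = rng.random((32, 32))
err[5, 5] = 100.0
clean = preprocess_error_map(err)
print(clean.shape, clean.max())
```

Clamping first keeps a single extreme pixel from dominating the color scale, and the two filters then suppress isolated speckle so that spatially coherent error regions stand out.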

4.2. Performance on Low-Tempo/Low-Dynamic Signals

Table 4 summarizes the results obtained for low-tempo/low-dynamic vocal music signals (approximately 288 s long). The following observations can be made:

Table 4.

Quantitative performance metrics for low-tempo, low-dynamic vocal music signals (≈288 s), covering time-domain error (MSE, CC, CS), temporal alignment (DTW), spectral fidelity (SC, LSD, MFCC), signal-to-noise ratios (SNRs), and pixelwise spectrogram measures (PMSE, SSIM, PSNR) for both stereo channels.

The MSE is 0.0037, indicating minimal average squared error across samples, and the correlation coefficient of 0.9282 indicates a strong linear relationship between the ground truth and regenerated signals. The per-channel cosine similarities reported in Table 4 reflect close alignment in the feature space, the low DTW distances imply minimal temporal shifts, and the spectral convergence values indicate a high degree of similarity in the magnitude spectra. Table 4 also lists the reconstruction SNR and the per-channel LSD values. MFCC similarity is 0.9997, and the overall results confirm robust fidelity.

The pixelwise metrics, computed on the spectrogram treated as an image, further confirm the high quality of the reconstructions. The Left and Right Pixelwise MSE values of 1.4323 and 1.2548, respectively, indicate low average squared errors across the spectrogram pixels. Pixelwise SSIM values, which range from 0 to 1 (with values closer to 1 indicating better similarity), are high (0.9887 for the left and 0.9881 for the right channel). Additionally, the pixelwise PSNR values (measured in decibels, with higher values indicating less reconstruction noise relative to the dynamic range) are approximately 47.96 dB and 47.67 dB for the left and right channels, respectively. These metrics collectively confirm that the spectrogram images of the reconstructed signals closely match those of the ground truth, further emphasizing the overall high-fidelity performance of Harmonizer.

4.3. Performance on High-Tempo/High-Dynamic Signals

We further evaluated Harmonizer on higher-tempo, more dynamically complex vocal music signals (approximately 231 s long). Table 5 presents the results. The MSE and correlation coefficient reported there reflect a high degree of linear similarity between the ground truth and regenerated signals. Cosine similarity is high for both channels, while DTW distances remain comparatively low. The per-channel spectral convergence values remain within acceptable ranges despite the increased dynamic complexity. Table 5 also lists the reconstruction SNR, and MFCC similarity is 0.9928.

Table 5.

Quantitative performance metrics for high-tempo, high-dynamic vocal music signals (≈231 s), covering time-domain error (MSE, CC, CS), temporal alignment (DTW), spectral fidelity (SC, LSD, MFCC), signal-to-noise ratios (SNRs), and pixelwise spectrogram measures (PMSE, SSIM, PSNR) for both stereo channels.

Pixelwise Evaluation: In the high-dynamic scenario, the Left and Right Pixelwise MSE values are higher (5.2295 and 5.4055, respectively), reflecting the increased complexity and transient nature of the signals. Nevertheless, the pixelwise SSIM values remain very high (0.9889 for the left and 0.9892 for the right channel), indicating that the structural similarity of the spectrogram images is largely preserved. Additionally, the pixelwise PSNR values, although slightly reduced to approximately 45.98 dB (Left) and 46.09 dB (Right), continue to denote high reconstruction quality. These pixelwise metrics, in conjunction with the other quantitative measures, demonstrate that Harmonizer maintains perceptual coherence even under challenging, high-dynamic conditions.

4.4. Comparison with Existing Codecs and Tokenizers

We evaluate Harmonizer against several state-of-the-art models on both speech (VCTK) and music (MusicCaps) datasets. The baselines include Encodec, DAC, WavTokenizer, StableCodec (APCodec), ALMTokenizer, and SemantiCodec [125,126,127,128,129,130,131]. Performance is measured using standard intelligibility and quality metrics for speech, and spectral reconstruction losses for music.

Table 6 reports the short-time objective intelligibility (STOI) and perceptual evaluation of speech quality (PESQ) scores on the VCTK test set. Higher values indicate better performance. As shown in Table 6, Harmonizer achieves an STOI of 0.90 and a PESQ of 2.30, outperforming all baselines by a substantial margin (e.g., +0.09 in STOI over Encodec and +0.20 in PESQ over DAC).

Table 6.

Comparison of speech reconstruction quality on the VCTK test set, showing Short-Time Objective Intelligibility (STOI) and Perceptual Evaluation of Speech Quality (PESQ), where higher values indicate better performance.

For the MusicCaps dataset, we report Mel-spectrogram loss and STFT-based reconstruction error, where lower values are better. Table 7 shows that Harmonizer drastically reduces the Mel loss to 16.9 (almost half of Encodec’s 34.8) while maintaining competitive STFT loss (1.34). This indicates both improved spectral fidelity and overall perceptual quality.

Table 7.

Music reconstruction performance on the MusicCaps dataset, reporting mean Mel-spectrogram loss and STFT reconstruction loss (↓).

These results demonstrate that Harmonizer achieves higher intelligibility and perceptual quality on speech (Table 6), as well as a substantially lower Mel loss and a competitive STFT loss on music (Table 7), relative to the codecs and tokenizers compared. The consistent gains across both domains highlight the effectiveness of our fusion-quantization and streaming inference pipeline for a wide variety of audio signals.

4.5. Tempo Comparison

To highlight how Harmonizer handles different rhythmic profiles, Table 8 presents a side-by-side summary of the key objective metrics for low-tempo/low-dynamic versus high-tempo/high-dynamic signals. Low-tempo signals exhibit notably lower MSE and pixelwise errors, reflecting the ease of capturing slowly varying dynamics. In contrast, while showing slightly higher spectral-convergence values, high-tempo signals achieve lower DTW distances, indicating that rapid transients are still precisely aligned in time. The marginal drop in MFCC similarity (from 0.9997 to 0.9928) and PSNR (from 48 dB to 46 dB) under high-tempo conditions suggests a small trade-off in spectral fidelity for speedy, high-energy passages. Overall, Harmonizer maintains consistently strong performance across tempo extremes, with predictable and bounded degradations only under the most dynamic scenarios.

Table 8.

Comparison of key reconstruction metrics between low-tempo/low-dynamic and high-tempo/high-dynamic music signals.

4.6. Figures and Visual Comparisons

Various visual analyses—waveform overlays, spectrogram comparisons, MFCC difference maps—are employed to illustrate the close alignment between ground truth signals and those generated by Harmonizer. These qualitative assessments complement the quantitative metrics, offering deeper insights into time-domain, frequency-domain, and perceptual fidelity.

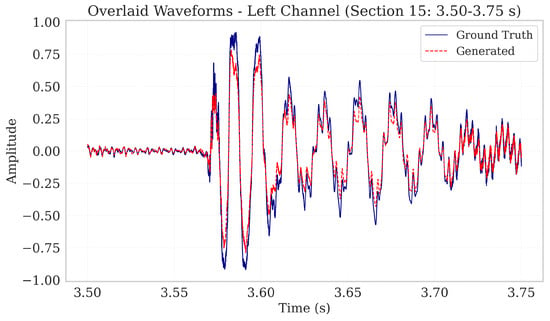

4.6.1. Waveform Overlays for Low-Dynamic Music

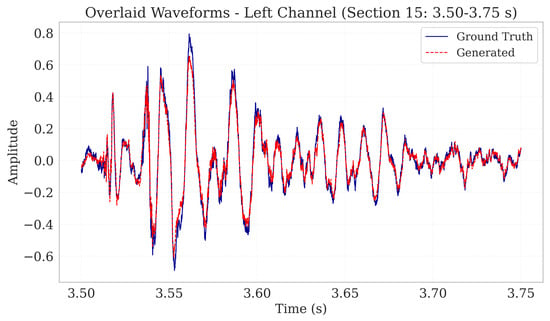

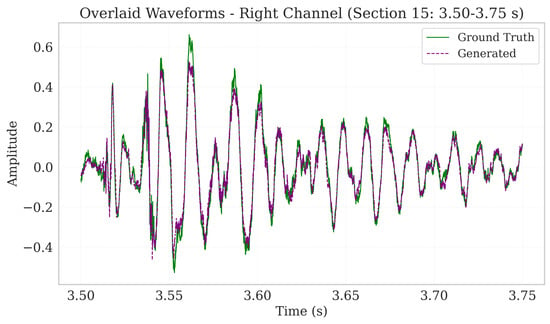

Waveform overlays provide a direct, time-domain comparison between the ground truth and regenerated signals. To evaluate Harmonizer’s reconstruction capabilities, we overlaid the ground truth and regenerated waveforms for a 0.25 s subsection of a low-dynamic vocal music signal. Specifically, the first four seconds (0–4 s) were segmented into sixteen equal sections of 0.25 s each, and Section 15 (3.50–3.75 s) was chosen for detailed inspection. Section 15 was selected at random from the 16 sections, and the same section was also used for the waveform overlays of the high-tempo, high-dynamic music signals.
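The segmentation arithmetic can be checked with a short helper. The sketch below assumes 1-based section numbering and the 96 kHz sampling rate quoted for the 288 s test signal; the function name is illustrative.

```python
def section_bounds(section, section_len_s=0.25, sample_rate_hz=96_000):
    """Return (start_s, end_s, start_sample, end_sample) for a 1-based section index."""
    if section < 1:
        raise ValueError("section index is 1-based")
    start_s = (section - 1) * section_len_s
    end_s = section * section_len_s
    # Convert the time bounds to sample indices at the assumed sampling rate.
    return start_s, end_s, round(start_s * sample_rate_hz), round(end_s * sample_rate_hz)

start_s, end_s, first_sample, last_sample = section_bounds(15)
print(start_s, end_s, first_sample, last_sample)
```

Under these assumptions, Section 15 spans 3.50–3.75 s, which corresponds to 24,000 samples per channel.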

Figure 2 shows the ground truth waveform (blue) overlaid with the regenerated waveform (red) for the left channel. The close amplitude alignment demonstrates Harmonizer’s ability to capture both peak magnitudes and transient features. Phase consistency appears well preserved, indicating minimal phase distortion introduced by quantization or decoding. Figure 3 presents the ground truth waveform (green) alongside the regenerated waveform (purple) for the right channel. Similar to the left channel, amplitude envelopes, transients, and phase remain closely matched, underscoring Harmonizer’s robust stereo reconstruction.

Figure 2.

Overlaid left-channel waveforms for a 0.25 s excerpt of low-tempo, low-dynamic vocal music (3.50–3.75 s). The solid blue trace shows the ground-truth signal, while the dashed red trace shows the Harmonizer reconstruction. The near-perfect alignment of peaks, troughs, and transient edges demonstrates high temporal fidelity and minimal phase distortion introduced by the quantization and decoding pipeline.

Figure 3.

Overlaid right-channel waveforms for a 0.25 s excerpt of low-tempo, low-dynamic vocal music (3.50–3.75 s). The solid green trace shows the ground-truth signal, while the dashed purple trace shows the Harmonizer reconstruction. The near-complete overlap of the two traces indicates excellent amplitude and phase fidelity across stereo channels.

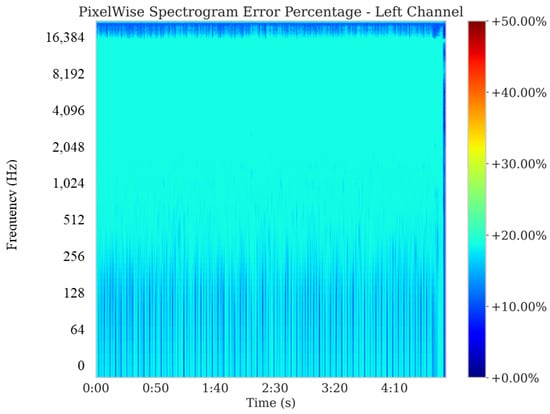

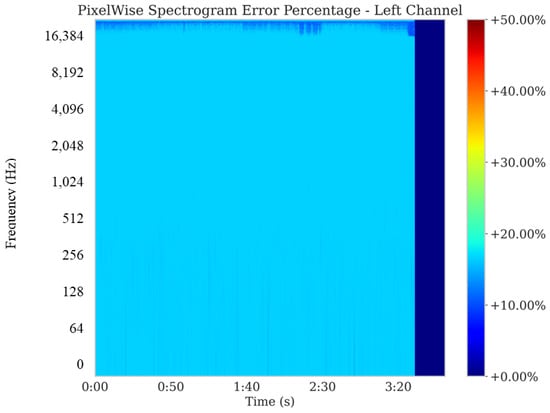

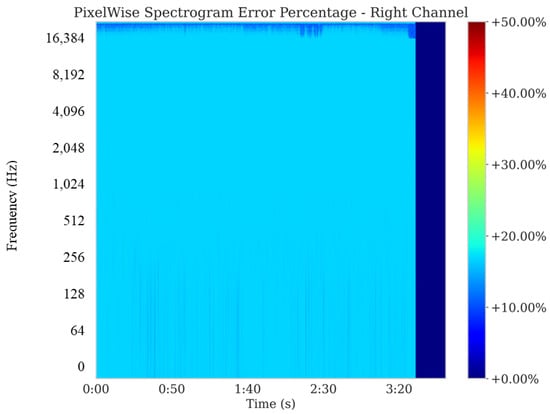

4.6.2. Spectrogram Error Percentage and 95th Percentile Clamping for Low-Tempo, Low-Dynamic Music

To gain a frequency-domain perspective on reconstruction accuracy, pixelwise spectrogram error percentages were computed for both channels, with values above the 95th percentile clamped to mitigate outlier effects. Figure 4 and Figure 5 illustrate that the average error hovers around 18%, a value deemed modest given the lower amplitude nature of low-dynamic music. This analysis confirms that Harmonizer preserves critical spectral details and introduces only minimal deviations in the frequency domain.

Figure 4.

Pixelwise spectrogram error percentage for the left channel of a low-tempo, low-dynamic music excerpt (clamped at the 95th percentile). The color scale indicates reconstruction error in each time-frequency bin, with most bins below 18%, demonstrating that Harmonizer maintains spectral integrity with only minor deviations.

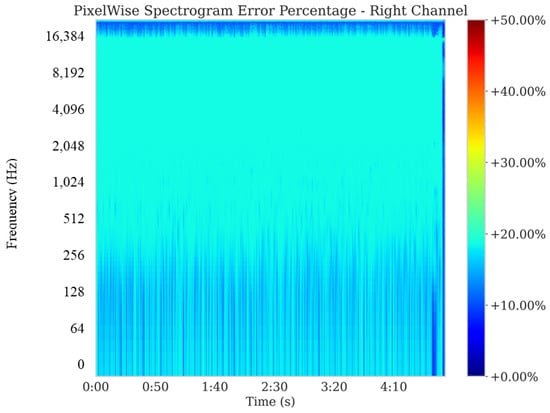

Figure 5.

Pixelwise spectrogram error percentage for the right channel of low-tempo, low-dynamic music (values clamped at the 95th percentile). The majority of time-frequency bins exhibit reconstruction errors below 18%, demonstrating consistent spectral fidelity across both stereo channels.

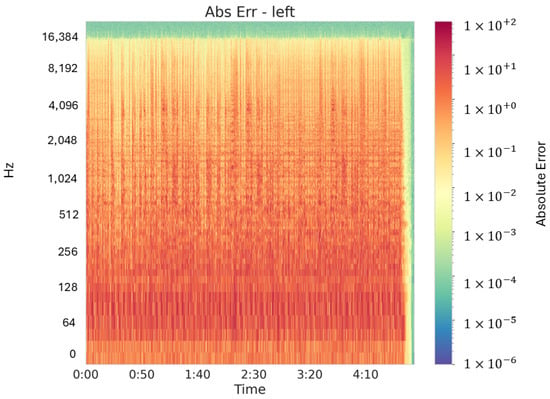

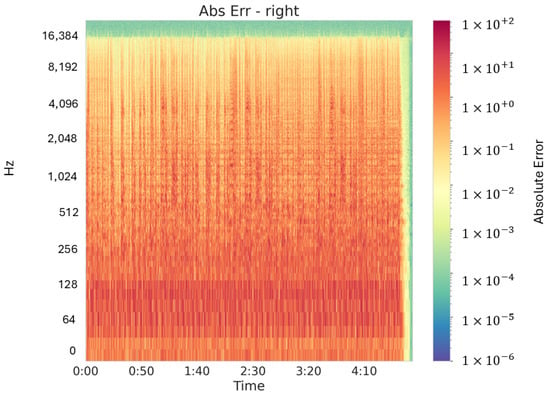

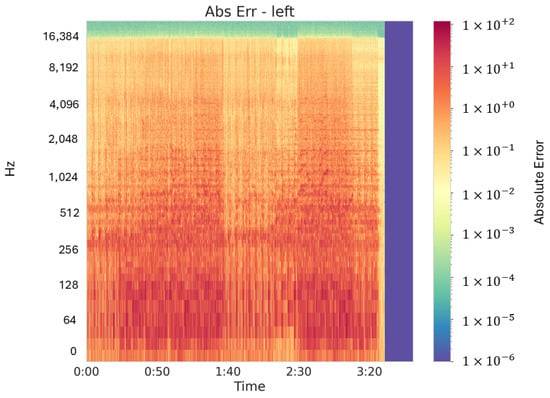

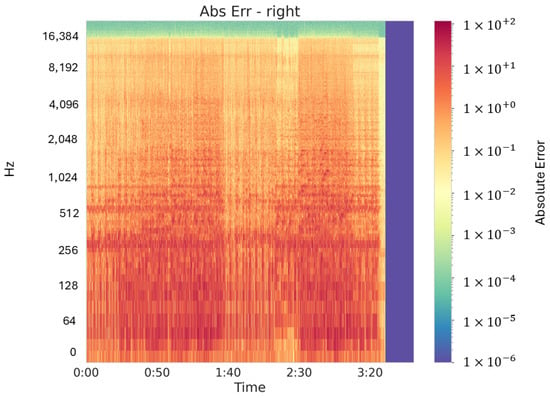

To show even finer detail—especially in those low-amplitude regions—we also computed absolute pixelwise errors and plotted them on a logarithmic colorbar. Figure 6 and Figure 7 display these absolute-error spectrograms for the left and right channels, respectively. By using a log scale, we amplify subtle discrepancies that percentage-based plots can smooth over, revealing that even at the quietest frequencies, the maximum deviations remain well below perceptually significant thresholds.

Figure 6.

Absolute pixelwise spectrogram error for low-dynamic music’s left channel, displayed on a logarithmic color scale to highlight subtle discrepancies across time and frequency.

Figure 7.

Absolute pixelwise spectrogram error for low-dynamic music (right channel), shown on a logarithmic color scale to amplify subtle discrepancies across time and frequency bins.

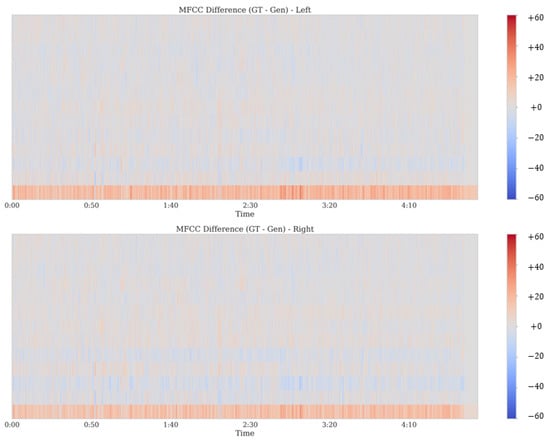

4.6.3. MFCC Analysis and Difference Contours for Low-Tempo, Low-Dynamic Music

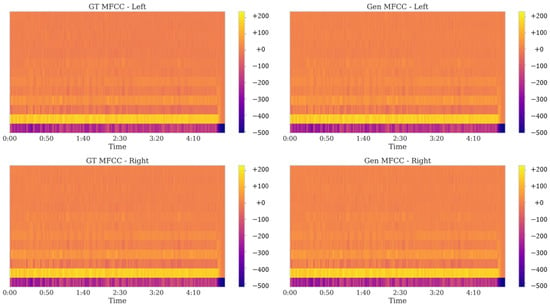

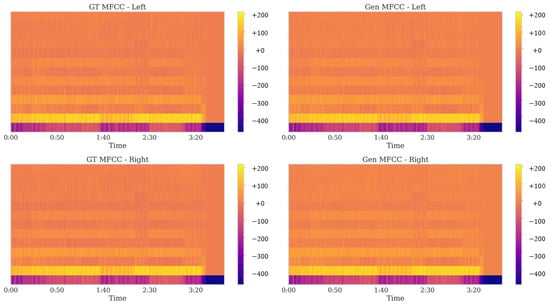

Mel-Frequency Cepstral Coefficients (MFCCs) offer a perceptual measure of how closely the regenerated signal’s timbral characteristics align with the original. Figure 8 compares the MFCCs of the ground truth (GT) and generated (Gen) signals, revealing near-identical distributions across the time-frequency plane. The overall alignment in both left and right channels is indicative of robust preservation of spectral nuances, especially in mid-to-high frequency regions.

Figure 8.

Comparison of Mel-Frequency Cepstral Coefficients for ground truth and regenerated low-tempo, low-dynamic music in left and right channels. Near-identical envelope shapes and temporal patterns indicate accurate preservation of timbral characteristics across both channels.

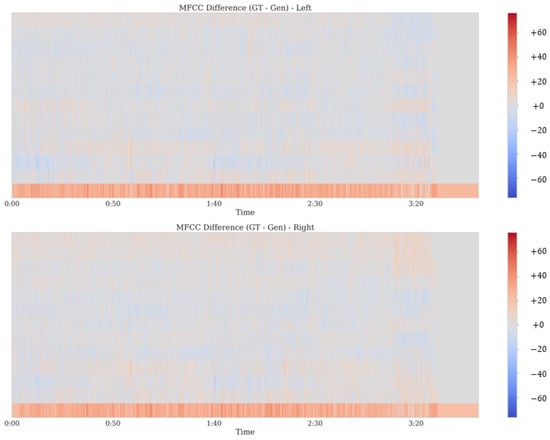

Figure 9 presents difference contours (GT − Gen), where the extensive white or lightly colored areas (near-zero difference) underscore Harmonizer’s high accuracy in replicating subtle vocal and instrumental timbres. A minor, consistent band of red in the lower frequency range indicates a slight positive difference, suggesting a small residual mismatch in the low-frequency components. Nevertheless, the magnitude of these deviations remains minimal compared to the rest of the spectrum, indicating that the model maintains essential low-frequency content and does not introduce perceptually significant errors.

Figure 9.

Difference between ground-truth and regenerated MFCCs for low-tempo, low-dynamic music. Light regions indicate near-zero deviation in cepstral coefficients across time and frequency, while the subtle band of warmer hues in lower frequencies highlights a minor residual mismatch that remains perceptually negligible.

These results confirm that Harmonizer successfully reproduces both global and subtle spectral properties, even in low-dynamic contexts, ensuring perceptually convincing vocal and instrumental renditions. The minor discrepancies observed do not detract from the overall fidelity, highlighting the model’s proficiency in preserving the essential timbral characteristics of the source material.

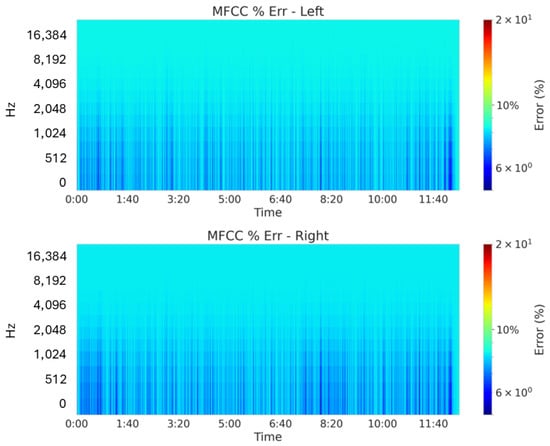

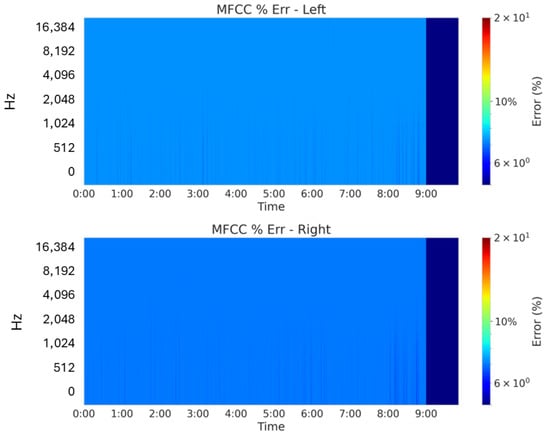

4.6.4. MFCC Error Percentage Analysis for Low-Tempo, Low-Dynamic Music

While the above MFCC difference plots demonstrate near-zero deviation between the ground truth and generated signals, a more granular perspective can be obtained by examining the MFCC error percentage. To mitigate the influence of outliers, values above the 95th percentile are clamped. As shown in Figure 10, the vast majority of the time-frequency plane is represented by blue regions, indicating that the error percentage remains near zero throughout. Even in the most extreme cases, the maximum error is approximately 5%.

Figure 10.

MFCC error percentage (GT vs. Gen) for low-tempo, low-dynamic music with logarithmic color-bar. The predominantly blue color indicates negligible errors after clamping the 95th percentile to avoid outliers, with the maximum error reaching only about 5%.

This result further highlights the high fidelity of Harmonizer in reproducing low-tempo, low-dynamic audio content. The negligible error observed across the entire frequency range underscores the model’s capacity to preserve both subtle and global spectral features, reinforcing its robustness and precision in real-world scenarios.

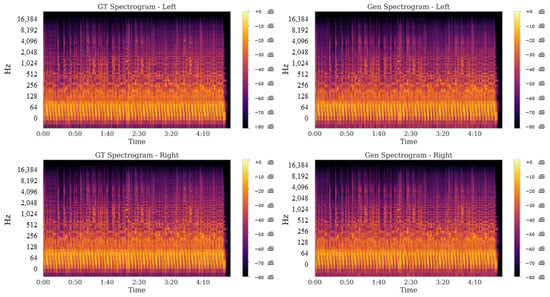

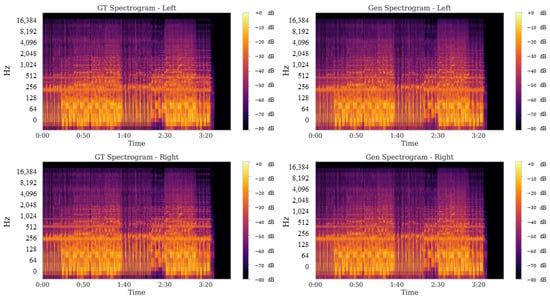

4.6.5. Spectrogram Analysis for Low-Tempo, Low-Dynamic Music

Beyond waveforms and MFCCs, a direct spectrogram comparison further validates Harmonizer’s ability to capture the spectral structure of low-dynamic material. Figure 11 displays side-by-side dB-scale spectrograms for an approximately 288 s excerpt, showing ground truth (GT) on the left and generated (Gen) on the right for both stereo channels. The model accurately reproduces the overall energy distribution, transient onsets, and high-frequency “air”, reinforcing the quantitative metrics that underscore strong spectral fidelity in quieter musical passages.

Figure 11.

Ground truth (GT) vs. generated (Gen) spectrograms for low-tempo, low-dynamic music. The top row represents left-channel spectrograms, while the bottom row represents right-channel spectrograms. Harmonizer preserves both broad spectral contours and finer harmonic details in low-amplitude passages. Quantitatively, over the full 288 s excerpt: left channel: PMSE = 1.4323, SSIM = 0.9887, PSNR = 47.96 dB; right channel: PMSE = 1.2548, SSIM = 0.9881, PSNR = 47.67 dB.

4.6.6. Waveform Overlays for High-Tempo, High-Dynamic Music

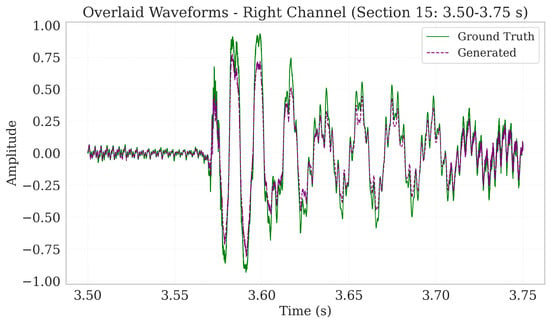

To assess robustness under more complex amplitude fluctuations, we selected a high-tempo, high-dynamic music segment and extracted a 0.25 s subsection (Section 15: 3.50–3.75 s). Figure 12 and Figure 13 compare ground truth waveforms (blue or green) with regenerated waveforms (red or purple) for the left and right channels, respectively. The close overlap in amplitude, transient handling, and phase coherence underscore Harmonizer’s capacity to accurately reconstruct rapid, high-energy variations.

Figure 12.

Overlaid waveforms—left channel (section 15: 3.50–3.75 s) for high-tempo, high-dynamic music. The red regenerated waveform tracks the blue ground truth waveform closely, indicating minimal temporal or amplitude distortion.

Figure 13.

Overlaid waveforms—right channel (section 15: 3.50–3.75 s) for high-tempo, high-dynamic music. The purple regenerated waveform remains nearly indistinguishable from the green ground truth, reflecting robust transient alignment.

4.6.7. Spectrogram Analysis for High-Tempo, High-Dynamic Music

A 210 s excerpt of high-tempo, high-dynamic music was examined to confirm that Harmonizer preserves spectral fidelity under conditions of rapid energy fluctuations. Figure 14 presents the ground truth (GT) and generated (Gen) spectrograms (dB scale, 0–16 kHz) for both channels. Large amplitude swings, transient events, and high-frequency details are accurately retained, with minimal discrepancies observable even at faster tempos. Post-music silence (beyond the 3:30 mark) is also handled consistently, indicating that no spurious artifacts are introduced at track completion.

Figure 14.

Ground truth (GT) vs. generated (Gen) spectrograms for high-tempo, high-dynamic music. Harmonizer captures the broad dynamic range and maintains transient accuracy, matching key spectral features from 0 Hz to 16 kHz. Quantitatively, over the full 231 s excerpt: left channel: PMSE = 5.2295, SSIM = 0.9889, PSNR = 45.98 dB; right channel: PMSE = 5.4055, SSIM = 0.9892, PSNR = 46.09 dB; mean clamped spectrogram error = 15.2% (≈3.9%).

Figure 15 and Figure 16 illustrate the pixelwise spectrogram error percentage for the left and right channels, respectively, with values above the 95th percentile clamped to suppress outliers. The majority of time-frequency bins exhibit approximately 15% error—slightly lower than the 18% seen for low-dynamic music—reflecting Harmonizer’s ability to cope with broad energy distributions and transient-heavy content.

Figure 15.

Pixelwise Spectrogram error percentage (clamped at 95th percentile)—left channel for high-tempo, high-dynamic music. The primary error range near 10–15% underscores Harmonizer’s capacity for accurate spectral reconstruction in rapidly changing signals. Mean clamped error = 15.2% ( ≈ 3.9%).

Figure 16.

Pixelwise spectrogram error percentage (clamped at 95th percentile)—right channel for high-tempo, high-dynamic music. Similar to the left channel, most bins remain in a modest error range, corroborating stereo consistency. Quantitatively, the mean clamped error is 15.2% ( ≈ 3.9%).

To further expose subtle deviations in quieter regions of the spectrogram, we also plotted absolute pixelwise errors on a logarithmic color scale. Figure 17 and Figure 18 show these log-scaled absolute-error spectrograms for each channel. Even under high-dynamic conditions, maximum errors remain well below levels that would impact perceptual quality.

Figure 17.

Absolute pixelwise spectrogram error for high-dynamic music’s left channel, shown on a logarithmic color scale to emphasize subtle reconstruction deviations across frequency and time.

Figure 18.

Absolute pixelwise spectrogram error for high-dynamic music’s right channel, depicted on a logarithmic color scale to highlight subtle reconstruction deviations across time and frequency.

DTW values must be judged relative to the signal length. For a 288 s test signal sampled at 96 kHz ($\approx 2.76 \times 10^{7}$ samples), our DTW of 12.12 corresponds to an average warp deviation of only about $4.4 \times 10^{-7}$ samples per time step (or about 0.042 warps per second). By comparison, a random baseline signal produces far larger DTW distances. Thus, our DTW is extremely low, confirming near-perfect temporal alignment.
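The length normalization can be reproduced with simple arithmetic. The sketch below assumes that the per-step and per-second figures are obtained by dividing the DTW distance by the number of samples and by the duration, respectively; the variable names are illustrative.

```python
duration_s = 288          # length of the test signal in seconds
sample_rate_hz = 96_000   # sampling rate
dtw_distance = 12.12      # reported DTW distance

num_samples = duration_s * sample_rate_hz       # total samples per channel
dtw_per_sample = dtw_distance / num_samples     # average deviation per time step
dtw_per_second = dtw_distance / duration_s      # average deviation per second

print(num_samples, dtw_per_sample, dtw_per_second)
```

The per-second value of roughly 0.042 agrees with the figure quoted above, which supports reading the DTW distance as an accumulated cost over the whole signal.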

4.6.8. MFCC Analysis for High-Tempo, High-Dynamic Music

MFCCs were also analyzed for high-tempo, high-dynamic content to gauge perceptual fidelity. Figure 19 contrasts the ground truth (GT) and generated (Gen) MFCCs for both stereo channels over a 3.5 s window, revealing near-identical band structures despite the presence of rapid transient peaks and wide amplitude swings. Figure 20 depicts the difference (GT − Gen), where the predominance of near-neutral shading confirms minimal cepstral deviation. Even under challenging signal dynamics, Harmonizer preserves the fundamental and harmonic cues necessary to maintain vocal and instrumental realism.

Figure 19.

Ground truth (GT) vs. generated (Gen) MFCCs for high-tempo, high-dynamic music. Harmonizer successfully captures complex spectral envelopes and abrupt changes, ensuring high perceptual fidelity. MFCC similarity = 0.9928.

Figure 20.

MFCC difference (GT − Gen) for high-tempo, high-dynamic music. The near-zero difference across most frames confirms robust cepstral alignment, even in demanding signal contexts.

4.6.9. MFCC Error Percentage Analysis for High-Tempo, High-Dynamic Music

To further quantify the spectral alignment, we examine the MFCC error percentage in high-tempo, high-dynamic audio segments. As in the low-tempo, low-dynamic analysis, values above the 95th percentile are clamped to mitigate the influence of outliers. Figure 21 demonstrates that the majority of the time-frequency plane is represented by blue regions, indicating error values near zero. Even at its peak, the error does not exceed approximately 5%, underscoring Harmonizer’s ability to accurately replicate intricate spectral changes and rapid amplitude fluctuations.

Figure 21.

MFCC error percentage (GT vs. Gen) for high-tempo, high-dynamic music with logarithmic color-bar. The predominantly blue regions reflect near-zero error, with a maximum deviation of about 5%.

Taken together, these visual and numerical evaluations underscore Harmonizer’s ability to reconstruct a wide range of musical content—spanning from low-dynamic vocal passages to fast-paced, high-energy segments—while preserving critical acoustic cues such as amplitude envelope, harmonic structure, transient detail, and timbral consistency. The strong alignment observed in both the time and frequency domains, as well as across perceptual measures (e.g., MFCCs), confirms that Harmonizer is well-suited for professional audio applications where accuracy and fidelity are paramount.

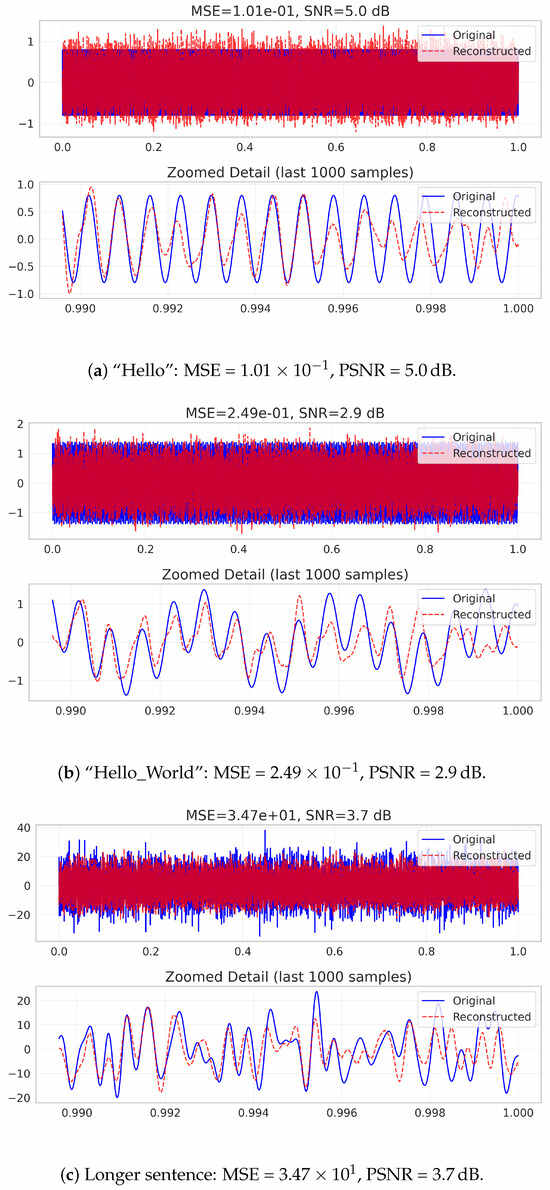

4.7. Evaluating the Harmonizer on Text Inputs

To assess the applicability of Harmonizer to discrete, symbolic data, we converted each input string into a one-dimensional signal via ASCII encoding and processed it through the standard STFT–Hilbert–SCLAHE and FusionQuantizer pipeline. The model was fine-tuned on a training set comprising 2000 unique English words, and a simple lookup table maps each reconstructed vector back to its nearest ASCII token. Figure 22 presents three representative cases:

Figure 22.

Comparison of original (blue) and reconstructed (red dashed) ASCII-encoded-Sinusoidal signals for text inputs of increasing length. Panels (a–c) correspond, respectively, to single-word, two-token, and longer-sentence reconstructions.

- “Hello” (single word): Achieves an MSE of and PSNR of 5.0 dB.

- “Hello_World” (two tokens): MSE increases to with PSNR of 2.9 dB.

- Longer sentence (multi-word sequence): Reconstruction error grows dramatically (MSE , PSNR 3.7 dB).

These results indicate that while Harmonizer can faithfully reproduce short, low-complexity strings, its performance degrades on longer sequences due to the limited receptive field of the ASCII-signal encoder and the fixed-size lookup table. To address these limitations, we are developing a specialized Text-Harmonizer model, which will incorporate the following:

- Extended receptive field: Integration of positional embeddings and dilated convolutions to capture longer-range dependencies.

- Dynamic vocabulary expansion: A learnable token embedding layer to support arbitrary word sequences beyond the initial 2000 entries.

- Sequence-to-sequence decoding: A transformer-based decoder head to improve end-to-end reconstruction and handle variable-length inputs.

These enhancements in the forthcoming Text-Harmonizer will resolve the observed degradation on extended text inputs, enabling robust, high-fidelity tokenization and reconstruction across the full spectrum of natural language.
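To make the ASCII-to-signal conversion and the nearest-token lookup concrete, the following is a minimal sketch under simplifying assumptions: each character is mapped to a single normalized amplitude (the sinusoidal modulation and the FusionQuantizer stages are omitted), and decoding uses a scalar nearest-neighbour search over the 128 ASCII codes instead of a vector lookup. The function names are hypothetical.

```python
import numpy as np

def text_to_signal(text):
    """Map each ASCII character to an amplitude in [0, 1]."""
    codes = np.frombuffer(text.encode("ascii"), dtype=np.uint8)
    return codes.astype(np.float64) / 127.0

def signal_to_text(signal):
    """Decode by snapping each sample to the nearest ASCII level."""
    table = np.arange(128) / 127.0                      # lookup table of valid levels
    dist = np.abs(np.asarray(signal, float)[:, None] - table[None, :])
    codes = dist.argmin(axis=1).astype(np.uint8)
    return bytes(codes.tolist()).decode("ascii")

signal = text_to_signal("Hello_World")
noisy = signal + 0.003        # perturbation below half the level spacing (1/254)
print(signal_to_text(signal), signal_to_text(noisy))
```

In this simplified setting, decoding is exact as long as the per-sample reconstruction error stays below half the spacing between adjacent ASCII levels, which illustrates why accumulated error on longer sequences translates directly into character errors.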

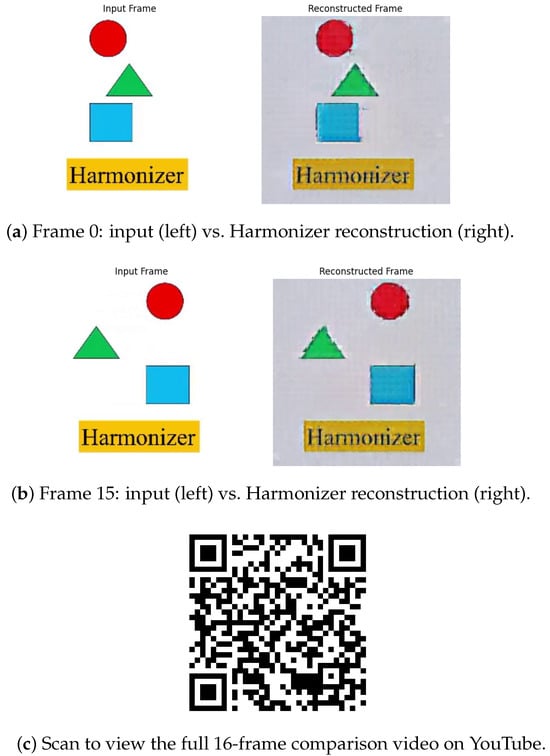

4.8. Preview of Harmonizer Video Input Handling

We have extended the Harmonizer pipeline from images to video by dividing each clip into non-overlapping spatiotemporal patches of size pixels over 16 consecutive frames (yielding tensors of shape ). Each patch is flattened into a 1D signal, concatenated across patches, and processed through the same STFT–Hilbert–SCLAHE preprocessing and FluxHead/FusionQuantizer stages. Fine-tuning was performed on the UCF-101 action recognition dataset, using standard train/validation splits and our existing optimization setup. Figure 23 presents the following:

Figure 23.

Select frame comparisons and video link. (a) First frame—note crisp shapes and clear text with only minor block artifacts. (b) Last frame—demonstrates consistent reconstruction quality across the clip. (c) QR code linking to the full demonstration of all 16 frames, highlighting temporal coherence and overall video performance. The link to this video can be found in [134]. These preliminary results confirm that Harmonizer can process video inputs end-to-end; future work will focus on artifact reduction and temporal smoothing.

- (a) Side-by-side comparison of the first frame (input vs. reconstruction).

- (b) Side-by-side comparison of the last (15th) frame.

- (c) A QR code linking to the full 16-frame reconstruction video.

As shown in Figure 23a,b, Harmonizer’s video reconstructions exhibit the following:

- Sharp geometric fidelity: Object contours (e.g., circles, triangles, squares) remain crisp.

- Accurate color reproduction: Original hues and saturation levels are preserved.

- Text legibility: Overlaid labels (e.g., “Harmonizer”) are rendered without significant distortion.

Remaining block seams and ringing artifacts indicate per-patch quantization noise. In our upcoming Video-Harmonizer extension, we will resolve these issues by incorporating adaptive smoothing filters, enforcing temporal consistency losses across frames, and employing larger, multi-scale codebooks to capture broader spatial context and eliminate patch-boundary artifacts.
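The spatiotemporal patching step described at the start of this subsection (non-overlapping patches over 16 frames, flattened and concatenated into a 1D signal) can be sketched with NumPy reshapes. The 16 × 16 pixel patch size, the clip dimensions, and the function names below are hypothetical placeholders chosen for illustration, not the configuration used in our experiments.

```python
import numpy as np

def clip_to_signal(clip, patch=16):
    """Split a (T, H, W, C) clip into non-overlapping spatial patches spanning
    all T frames, flatten each patch, and concatenate them into one 1-D signal."""
    t, h, w, c = clip.shape
    if h % patch or w % patch:
        raise ValueError("frame size must be divisible by the patch size")
    blocks = clip.reshape(t, h // patch, patch, w // patch, patch, c)
    # Reorder to (patch_row, patch_col, T, patch, patch, C) so that each
    # spatiotemporal patch is contiguous in the flattened signal.
    blocks = blocks.transpose(1, 3, 0, 2, 4, 5)
    return blocks.reshape(-1), blocks.shape

def signal_to_clip(signal, blocks_shape):
    """Inverse of clip_to_signal: reassemble the patches into a (T, H, W, C) clip."""
    rows, cols, t, p, _, c = blocks_shape
    blocks = signal.reshape(blocks_shape).transpose(2, 0, 3, 1, 4, 5)
    return blocks.reshape(t, rows * p, cols * p, c)

# Hypothetical 16-frame RGB clip of 32 x 48 pixels.
clip = np.arange(16 * 32 * 48 * 3).reshape(16, 32, 48, 3)
signal, shape = clip_to_signal(clip)
restored = signal_to_clip(signal, shape)
print(signal.shape, shape, np.array_equal(restored, clip))
```

Because the patches are non-overlapping and processed independently, any quantization error is confined to a patch, which is consistent with the block seams noted above.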

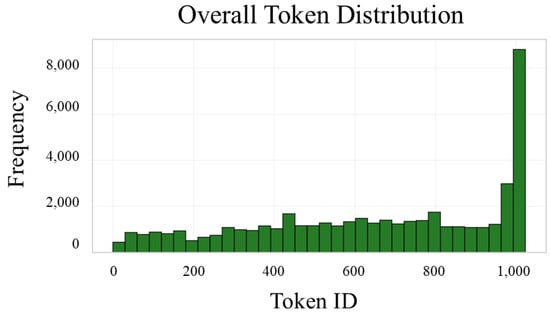

Figure 24 presents a unified histogram of token emissions aggregated over all 16 codebooks. The full ID range [0–1023] is divided into 34 equal-width bins to ensure each bar contains sufficient samples for reliable comparison while preserving fine-grained resolution. The vertical axis shows the total number of emissions across every frame and codebook. Quantitatively, the tallest bar—covering IDs 992–1023—registers around 9000 emissions, roughly three times the count of the second-highest bin (IDs 960–991), which has about 3000 emissions. In contrast, mid-range bins (e.g., IDs 400–800) average between 1000 and 1800 counts each, and the lowest ID bins (0–100) fall below 1000. This pronounced skew toward high-ID centroids indicates that the model strongly favors a small subset of its learned embedding space, suggesting these centroids capture dominant spectral or temporal features in the audio. Meanwhile, the long left tail—though less frequent—demonstrates that low-ID centroids remain available to encode rarer or subtler signal components. Such an asymmetric distribution implies under-utilization of much of the code space. Future work might explore entropy-based regularization or dynamic codebook re-allocation during training to encourage a more balanced usage of centroids, potentially improving the model’s capacity to represent diverse audio features.

Figure 24.

Overall token distribution. Token–ID bins (34 equal–width intervals spanning 0–1023) on the horizontal axis versus cumulative emission count across all 16 codebooks on the vertical axis. The pronounced peak at the highest bin (IDs 992–1023; 9000 counts) and the long tail toward low IDs are evident.

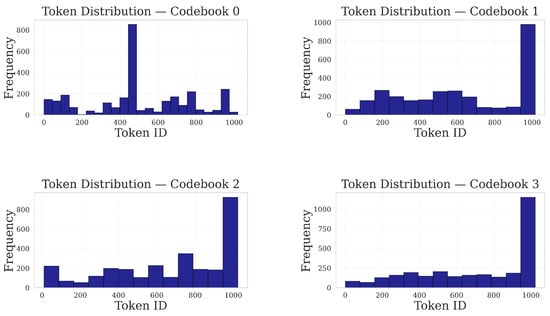

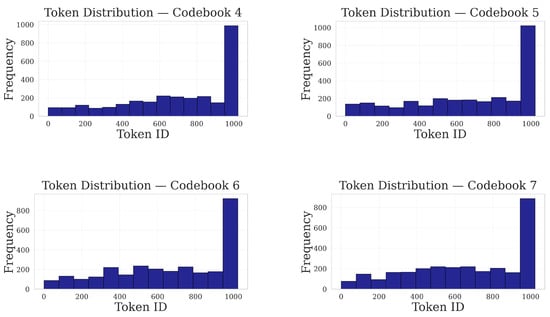

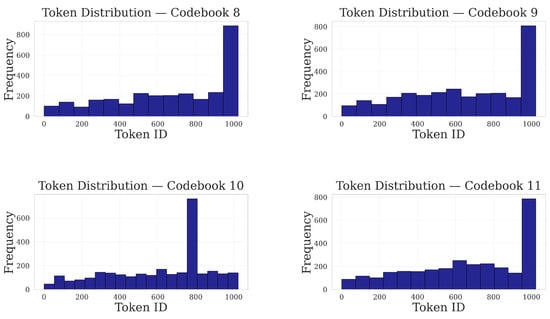

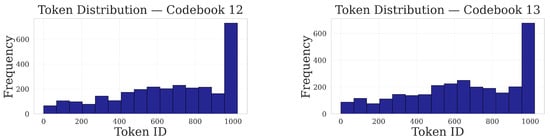

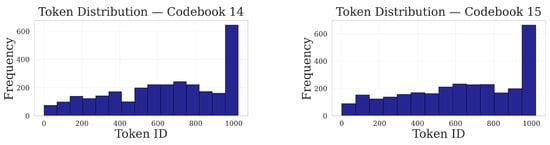

Figure 25, Figure 26, Figure 27 and Figure 28 show the individual token–ID histograms for each of the 16 codebooks, organized into four separate figures of two rows and two columns each. In every subplot, the horizontal axis covers centroid IDs 0–1023, and the vertical axis indicates the total emission count at each ID over the entire audio sequence.

Figure 25.

Codebooks 0–3. Codebook 0 exhibits a distinct peak around ID ≈ 500, deviating from the patterns observed in the others. Codebooks 1 through 3 all terminate with a pronounced spike at ID 1023. Notably, Codebook 1 displays two intermediate peaks prior to the terminal spike, Codebook 2 demonstrates a generally increasing trend with minor fluctuations, and Codebook 3 maintains a near-plateaued distribution before the final surge at ID 1023.

Figure 26.

Codebooks 4–7. Codebook 4 exhibits an almost linear rise across IDs; Codebook 5 shows a moderate ramp before the final spike; Codebooks 6 and 7 remain almost flat, with some fluctuations, until a steep jump near the end.

Figure 27.

Codebooks 8–11. Codebook 8 shows a smooth, gentle rise; Codebook 9 is flat until a sharp final ascent; Codebook 10 features a distinct peak at ID ≈ 800; Codebook 11 presents a gradual midrange build followed by a pronounced spike.

Figure 28.

Codebooks 12–15. Codebooks 12 and 15 feature gradual ramps of moderate slope before a large terminal bar; Codebooks 13 and 14 exhibit small secondary peaks before the dominant terminal bar.

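Two patterns emerge from these per-codebook histograms:

- Full support with specialization: Every codebook activates nearly all 1024 centroids at least once, confirming broad coverage of the embedding space even though emission frequencies are far from uniform.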

- Distinct modal biases: Within each 2 × 2 block, some histograms rise gradually from low to high IDs, others remain flat until a sharp spike at 1023, and a few exhibit secondary peaks, implying varied codebook specializations in capturing audio features.
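The two observations above (near-complete support but uneven usage) can be quantified per codebook. The sketch below is one possible way to do so, not the procedure used in this paper: it reports the fraction of centroids emitted at least once and the Shannon entropy of the emission distribution, normalized by its maximum of log2(1024) bits. The function name `codebook_usage` and the two toy codebooks are illustrative assumptions.

```python
import numpy as np

def codebook_usage(token_ids, vocab_size=1024):
    """Per-codebook coverage and normalized entropy.

    token_ids: integer array of shape (n_frames, n_codebooks).
    Returns one dict per codebook with
      coverage     - fraction of centroids emitted at least once
      norm_entropy - Shannon entropy divided by log2(vocab_size), in [0, 1]
    """
    stats = []
    for k in range(token_ids.shape[1]):
        counts = np.bincount(token_ids[:, k], minlength=vocab_size)
        p = counts / counts.sum()
        nz = p[p > 0]                      # skip unused centroids (0 * log 0 = 0)
        entropy = -(nz * np.log2(nz)).sum()
        stats.append({
            "coverage": float((counts > 0).mean()),
            "norm_entropy": float(entropy / np.log2(vocab_size)),
        })
    return stats

# Toy data (assumption): one perfectly balanced codebook, one fully collapsed.
balanced = np.tile(np.arange(1024), 2)
collapsed = np.full(2048, 1023)
demo = np.stack([balanced, collapsed], axis=1)
usage = codebook_usage(demo)
```

A codebook with coverage near 1 but normalized entropy well below 1 matches the pattern described above: nearly every centroid is used, yet a few dominate.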

5. Application of Harmonizer in Multimodal LLM

In streaming multimodal large language models, diverse data modalities such as audio, video, text, images, and sensor streams are processed simultaneously in near real time. Harmonizer serves as a universal tokenization and embedding backbone by converting each modality into a coherent set of discrete tokens that can be consumed by the LLMs.

5.1. Harmonizer as a Universal Multimodal Tokenizer

Traditional LLMs use text-derived vocabularies and lack a built-in way to turn naturally continuous signals (speech, music, video, sensor data) into discrete tokens. Harmonizer addresses this gap in three steps:

- Feature extraction: Incoming raw signals are first converted into intermediate feature maps using specialized preprocessing modules: STFT for time-frequency representations, Hilbert transforms for analytic signal information, and SCLAHE for adaptive contrast enhancement.

- Data-driven quantization: The FusionQuantizer then learns a compact, domain-specific codebook. It assigns each feature vector to the nearest learned codeword, effectively building a vocabulary of signal patterns.

- Real-time tokenization: During streaming inference, new signal frames are passed through the same preprocessors and immediately mapped to tokens via the trained codebook, ensuring minimal latency.

Together, these steps turn raw continuous inputs into discrete, learnable tokens that any multimodal LLM can ingest. The overall architecture of Harmonizer, showing how it slots into the front end of an MLLM as a universal signal tokenizer, is illustrated in Figure 29. This paper has demonstrated Harmonizer on vocal music, video, and text; extending the model to further modalities while maintaining reconstruction quality is left to future work.
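The feature-extraction and nearest-codeword steps can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not the FusionQuantizer itself: it uses a plain NumPy log-magnitude STFT as the only preprocessing stage (the Hilbert and SCLAHE stages are omitted), a single codebook rather than 16, and a random matrix standing in for a trained codebook. The names `stft_log_magnitude` and `nearest_codeword` are hypothetical.

```python
import numpy as np

def stft_log_magnitude(signal, frame_len=256, hop=128):
    """Log-magnitude STFT frames, shape (n_frames, frame_len // 2 + 1)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    return np.log1p(np.abs(np.fft.rfft(frames, axis=1)))

def nearest_codeword(features, codebook):
    """Index of the closest codeword (squared L2 distance) for each row."""
    d2 = ((features ** 2).sum(axis=1, keepdims=True)
          - 2.0 * features @ codebook.T
          + (codebook ** 2).sum(axis=1))
    return d2.argmin(axis=1)

# Stand-in codebook (assumption): 1024 random codewords of dimension 129.
rng = np.random.default_rng(0)
codebook = rng.normal(size=(1024, 129))

# One second of a 440 Hz tone at 16 kHz as a toy input signal.
sr = 16000
t = np.arange(sr) / sr
audio = np.sin(2 * np.pi * 440 * t)

features = stft_log_magnitude(audio)           # one feature vector per frame
tokens = nearest_codeword(features, codebook)  # one token ID per frame
```

In a streaming setting the same two functions would be applied to each newly arrived block of frames, so tokenization cost per frame is one FFT plus one distance computation against the codebook.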

Figure 29.

Application of Harmonizer as a Universal Multimodal Tokenizer in Large Language Models. The pipeline converts raw inputs—(a) text (ASCII → sine-encoded vectors), (b) audio (STFT → Hilbert → SCLAHE feature maps), and (c) video (spatiotemporal patches)—into a shared feature space. The FusionQuantizer then maps these continuous features to discrete token IDs via a learned codebook. During Streaming Inference, FluxFormer generates token sequences on the fly, which are interleaved or concatenated with standard text tokens and fed into cross-modal attention layers of the LLM. This unified token stream enables a single model to perform context-aware reasoning over text, audio, and video without separate modality-specific encoders.

5.2. Integration into Large Language Models

After tokenization, the multimodal streams are concatenated or interleaved with conventional text tokens, forming a single unified sequence that is processed by the self-attention layers of the large language model. Cross-modal attention allows the model to jointly attend to both textual tokens and Harmonizer tokens, capturing semantic relationships across modalities. In retrieval-augmented generation setups, Harmonizer tokens also function as query vectors for retrieving relevant external knowledge, thereby enriching the model’s outputs with context-aware information.
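To make the two merging strategies concrete, the sketch below shows one common convention for building a unified sequence: signal token IDs are shifted past the text vocabulary so the two ID ranges cannot collide, and the streams are then either concatenated or interleaved in fixed-size chunks. The vocabulary size, chunk length, and function names are illustrative assumptions; the source does not specify how IDs are allocated.

```python
TEXT_VOCAB_SIZE = 32000  # hypothetical size of the LLM's text vocabulary

def to_llm_ids(signal_tokens, text_vocab_size=TEXT_VOCAB_SIZE):
    """Shift signal token IDs into a range above the text vocabulary."""
    return [text_vocab_size + t for t in signal_tokens]

def concatenate(text_ids, signal_ids):
    """Text tokens first, then all signal tokens."""
    return list(text_ids) + list(signal_ids)

def interleave(text_ids, signal_ids, chunk=2):
    """Alternate chunks of text and signal tokens until both are exhausted."""
    merged, t, s = [], 0, 0
    while t < len(text_ids) or s < len(signal_ids):
        merged.extend(text_ids[t:t + chunk])
        t += chunk
        merged.extend(signal_ids[s:s + chunk])
        s += chunk
    return merged

text_ids = [11, 12, 13, 14]
signal_ids = to_llm_ids([1023, 500])
seq_concat = concatenate(text_ids, signal_ids)
seq_inter = interleave(text_ids, signal_ids, chunk=2)
```

Under this convention the LLM's embedding table would need `TEXT_VOCAB_SIZE` plus the codebook size rows, which is one design option among several.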

5.3. Implications for Future Multimodal LLMs

By providing a unified, discrete representation for continuous signals, Harmonizer significantly reduces the engineering overhead required to adapt large language models to new or evolving data streams. Its universal tokenization framework enables the dynamic incorporation of novel sensors and changing data distributions without necessitating a complete retraining of the tokenizer. Moreover, the interpretable nature of discrete tokens facilitates transparent multimodal reasoning, paving the way for advanced applications in real-time speech understanding, music analysis, sensor-driven robotics, and retrieval-augmented generation.

5.4. Limitations

While Harmonizer shows strong performance on music and speech signals, several limitations remain:

- Modality scope. Our quantitative evaluations have focused primarily on audio signals, including music, vocals, and speech, with only preliminary tests on text and video. The extension of our approach to other continuous modalities, such as LiDAR, EEG, or ECG, has yet to be explored.

- Hyperparameter sensitivity. The selection of hyperparameters, including the number and size of codebooks, Transformer depth, number of attention heads, and the weighting of the multi-objective loss terms, can have a substantial impact on performance. Accordingly, a comprehensive sensitivity analysis is essential.

- Resource requirements. Training multiple high-capacity codebooks and streaming Transformers demands substantial compute and memory, which may limit edge or mobile deployment.

- Robustness to noise and out-of-distribution data. Real-world signals often contain noise, dropouts, or previously unseen patterns. Ensuring reliable tokenization under these conditions will require advanced signal processing and generative machine-learning techniques.

- Latency and throughput. Designed for streaming applications, the system requires further optimization—such as reducing delays, parallelizing processing, and leveraging hardware accelerators—to enable real-time, low-latency tokenization for high-rate sensor or video data in time-sensitive applications.

6. Conclusions

6.1. Summary

We have presented Harmonizer, a universal tokenization framework that transforms continuous and discrete signals (audio, text, and video) into a shared discrete vocabulary for multimodal LLMs. By combining STFT, Hilbert-transform, and SCLAHE preprocessing with our FusionQuantizer on a FluxFormer backbone and a multi-objective loss, Harmonizer delivers the following:

- Modality-agnostic tokens: A learned codebook that faithfully represents diverse signal patterns.

- High-fidelity reconstruction: Accurate audio reconstruction on both low- and high-dynamics music (e.g., MSE = 0.0037 and CC = 0.9282 on 288 s of vocal signals), with strong time/frequency alignment and perceptual similarity.

- Streaming inference: Real-time token generation suitable for interactive and low-latency applications.

These results confirm Harmonizer’s ability to bridge signal processing and language modeling within a single, efficient pipeline.

6.2. Future Directions

Building on these findings, we are actively developing specialized variants:

- Text-Harmonizer: Extends the pipeline to robustly tokenize and reconstruct longer text sequences via learnable token embeddings and sequence-to-sequence decoding.

- Video-Harmonizer: Adapts our quantization framework to spatiotemporal patches, with temporal consistency losses and larger codebooks to eliminate block artifacts.

Insights from these ongoing efforts will be integrated into the core Harmonizer model, further improving its modality-agnostic robustness. Additional avenues include the following:

- New modalities: Incorporating sensor, medical, and LiDAR signals.

- Domain adaptation: Reducing retraining overhead when adding novel data types.

- Interactive fine-tuning: Prompt-based re-weighting of token streams within LLMs for task-specific optimization.

Together, these extensions aim to establish a truly universal tokenization backbone for next-generation multimodal intelligence.

Author Contributions

Conceptualization, A.A. and Y.L.; Methodology, A.A., D.W. and Y.L.; Software, A.A., A.G. and N.G.N.; Validation, A.A., D.W., N.G.N. and Y.L.; Formal analysis, A.A., A.G., N.G.N., D.W. and Y.L.; Investigation, A.A., N.G.N., D.W. and Y.L.; Resources, D.W. and Y.L.; Data curation, A.A. and Y.L.; Writing—original draft, A.A., A.G., N.G.N. and Y.L.; Writing—review & editing, A.A., A.G., N.G.N., D.W. and Y.L.; Visualization, A.A., N.G.N. and D.W.; Supervision, N.G.N., D.W. and Y.L.; Project administration, N.G.N., D.W. and Y.L.; Funding acquisition, D.W. and Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was jointly sponsored by the NIH AIM-AHEAD program under grant number OT2OD032581 and by the NSF under grant number 192847.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| MLLM | Multimodal Large Language Model |
| NLP | Natural Language Processing |
| STFT | Short-Time Fourier Transform |
| Hilbert | Hilbert Transform |
| SCLAHE | Spectrogram Contrast Limited Adaptive Histogram Equalization |
| VQ | Vector Quantization |
| FusionQuantizer | Fusion Vector Quantizer |
| FluxHead | A Streaming Attention-based Encoder |
| FluxFormer | A Streaming Transformer-based backbone |
| DTW | Dynamic Time Warping |
| MSE | Mean Squared Error |
| PMSE | PixelWise Mean Squared Error |
| SSIM | Structural Similarity Index Measure |
| CC | Correlation Coefficient |
| CS | Cosine Similarity |
| SC | Spectral Convergence |
| MFCC | Mel-Frequency Cepstral Coefficient |
| SNR | Signal-to-Noise Ratio |
| PSNR | Peak Signal-to-Noise Ratio |
| LSD | Log-Spectral Distance |
| ReLU | Rectified Linear Unit |
| MIMO | Multiple-Input, Multiple-Output |

References

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the ACL, Berlin, Germany, 7–12 August 2016; pp. 1715–1725.

- Xu, P.; Zhu, X.; Clifton, D.A. Multimodal learning with transformers: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12113–12132.

- Kudo, T. SentencePiece: A Simple and Language Independent Subword Tokenizer and Detokenizer for Neural Text Processing. arXiv 2018, arXiv:1808.06226.

- Tsai, Y.H.H.; Bai, S.; Liang, P.P.; Kolter, J.Z.; Morency, L.P.; Salakhutdinov, R. Multimodal Transformer for Unaligned Multimodal Language Sequences. In Proceedings of the 57th Annual Meeting of the ACL, Florence, Italy, 28 July–2 August 2019; pp. 6558–6570.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.-P. Multimodal Machine Learning: A Survey and Taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 423–443.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv 2021, arXiv:2103.00020.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Jin, Y.; Li, J.; Liu, Y.; Gu, T.; Wu, K.; Jiang, Z.; He, M.; Zhao, B.; Tan, X.; Gan, Z.; et al. Efficient multimodal large language models: A survey. arXiv 2024, arXiv:2405.10739.

- Jia, J.; Gao, J.; Xue, B.; Wang, J.; Cai, Q.; Chen, Q.; Zhao, X.; Jiang, P.; Gai, K. From Principles to Applications: A Comprehensive Survey of Discrete Tokenizers in Generation, Comprehension, Recommendation, and Information Retrieval. arXiv 2025, arXiv:2502.12448.

- Proakis, J.G.; Manolakis, D.G. Digital Signal Processing: Principles, Algorithms, and Applications, 4th ed.; Pearson: Upper Saddle River, NJ, USA, 2006.

- Zou, Y.; Li, P.; Li, Z.; Huang, H.; Cui, X.; Liu, X.; Zhang, C.; He, R. Survey on AI-Generated Media Detection: From Non-MLLM to MLLM. arXiv 2025, arXiv:2502.05240.

- Oppenheim, A.V.; Schafer, R.W. Discrete-Time Signal Processing, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2010.

- Gray, R.M.; Neuhoff, D.L. Quantization. IEEE Trans. Inf. Theory 1998, 44, 2325–2383.

- Wu, S.; Fei, H.; Qu, L.; Ji, W.; Chua, T.-S. Next-GPT: Any-to-Any Multimodal LLM. In Proceedings of the Forty-first International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.