Abstract

Structural health monitoring (SHM) plays a critical role in ensuring the safety and longevity of civil infrastructure by enabling the early detection of structural changes and supporting preventive maintenance strategies. In recent years, deep learning techniques have emerged as powerful tools for analyzing the complex data generated by SHM systems. This study investigates the use of deep belief networks (DBNs) for classifying structural conditions before and after retrofitting, using both ambient and train-induced acceleration data. Dimensionality reduction techniques such as principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE) enabled a clear separation between structural states, emphasizing the DBN’s ability to capture relevant classification features. The DBN architecture, based on stacked restricted Boltzmann machines (RBMs) and supervised fine-tuning, was optimized via grid search and cross-validation. Compared to traditional unsupervised methods like K-means and PCA, DBNs demonstrated a superior performance in feature representation and classification accuracy. Experimental results showed median cross-validation accuracies of for ambient data and for train-induced data, with low variability. Although random forests slightly outperformed DBNs in classifying ambient data (), DBNs achieved better results with more complex train-induced signals (). Robustness analysis under Gaussian noise further demonstrated the DBN’s resilience, maintaining over accuracy for ambient data at noise levels up to . These findings confirm that DBNs are a reliable and effective approach for data-driven structural condition assessment in SHM systems.

Keywords:

structural health monitoring; deep belief networks; machine learning; classification; noise analysis

MSC:

68T07

1. Introduction

Some globally recognized structural failures, such as the Tacoma Narrows Bridge collapse (1940), the Morandi Bridge in Genoa (2018), and the Florida International University pedestrian bridge failure (2018), have accelerated the integration of structural health monitoring (SHM) techniques in civil engineering. SHM enables the early detection of damage and supports timely interventions to prevent catastrophic failures [1].

Retrofitting, or structural reinforcement, plays a key role in SHM by improving the safety and durability of aging infrastructure [2]. By identifying vulnerable components, SHM helps prioritize interventions and optimize resource allocation. A notable example is the KW51 railway bridge, where the continuous monitoring during retrofitting allowed for the precise evaluation of reinforcement effects [3].

Recent advances in artificial intelligence (AI) and machine learning (ML) have significantly improved SHM capabilities, especially in processing large volumes of heterogeneous sensor data. Deep learning- and ensemble-based models have led to notable improvements in damage detection and predictive maintenance planning [4,5,6]. These models have proven effective in analyzing diverse inputs such as vibration signals, strain data, and visual inspections [7,8].

Moreover, deep learning methods have shown high accuracy in automating damage detection tasks [9,10]. AI-powered sensor networks are facilitating a shift from reactive maintenance to predictive strategies, enhancing infrastructure resilience [11]. For example, Mansouri et al. [12] developed a Bluetooth-enabled strain gauge network capable of real-time crack detection and localization. Tang et al. [13] proposed a deep learning approach to infer vehicle loads from bridge response data, further showcasing AI’s role in operational SHM.

Nevertheless, the effectiveness of ML models in SHM is highly dependent on the quality, quantity, and dimensionality of the available data. High-dimensional noisy signals can lead to increased uncertainty and reduced model reliability [14,15].

To address these challenges, Presno et al. [16] proposed a random forest (RF) classifier trained in the spectral domain to classify structural states of the KW51 bridge using both ambient and train-induced accelerometer signals. Although the RF model achieved accuracy with ambient data, its performance declined when applied to train-induced signals due to dataset imbalance, with ambient data being approximately 50 times more abundant. Furthermore, the discriminative information was concentrated at low frequencies for ambient data, whereas train-induced signals exhibited a frequency-dependent relevance based on Fisher ratio analysis.

To build on this prior work, we explore deep belief networks (DBNs) [17] as a deep learning alternative for SHM. DBNs provide several advantages, such as robust feature extraction, noise resilience, unsupervised pretraining, and effective initialization strategies. These attributes are particularly relevant in SHM applications involving limited labeled data and noisy sensor inputs. Kamada et al. [18] demonstrated the utility of DBNs in image-based crack detection under data scarcity, highlighting their ability to automate hierarchical feature learning.

Preliminary experiments using long short-term memory (LSTM) networks have also shown potential for modeling the temporal dynamics of structural responses during retrofitting. By casting transition detection as a sequence classification problem, LSTMs have demonstrated early success in capturing dynamic changes in real-world vibration data. Future work will include comparative evaluations with hybrid CNN-LSTM models for vision-based frequency tracking [19], LSTM-based signal reconstruction [20], and CNN-GRU models for denoising and data recovery in SHM systems [21], aiming to improve robustness across diverse operating conditions.

In this study, we compare the performance of DBNs with random forests and classical unsupervised methods, such as PCA and K-means, applied to both ambient and train-induced accelerometer data from the KW51 bridge. We further benchmark our results against recent CNN- and LSTM-based SHM approaches [22,23,24].

The remainder of this paper is organized as follows: Section 2 presents the dataset and methodology, including preprocessing, feature extraction, and the DBN architecture. Section 3 discusses experimental results and model comparisons. Section 4 interprets the findings, and Section 5 concludes with future research directions.

2. Materials and Methods

Data were collected on the KW51 railway bridge, located in Leuven, Belgium, which connects the towns of Herent (2.3 km) and Leuven (2.2 km). This steel bridge, operational since 2003, accommodates both railway and vehicular traffic on its lower level. It has a total length of 115 m and a width of 12.4 m.

The dataset used in this study was obtained using 12 uniaxial accelerometers—six positioned on the bridge deck and six on the arches—recording acceleration signals as trains passed in either direction. Monitoring began on 2 October 2018, and concluded on 15 January 2020, covering the entire retrofitting period. Acceleration measurements started 10 s before a train entered the bridge and continued for 30 s after it exited. The data collection was divided into three phases: (i) before the reinforcement (2 October 2018–15 May 2019); (ii) during the reinforcement (15 May 2019–27 September 2019); and (iii) after the reinforcement (27 September 2019–15 January 2020). If a sensor was inactive or malfunctioning, its data were replaced with “NaN” values. The retrofitting process focused on reinforcing the joints between the bridge deck and the arches to improve structural safety, addressing stability concerns that had been identified during construction [1].

In general, retrofitting refers to the process of strengthening or modifying a structure, or parts of it, to improve its durability. This technique is frequently used to increase resilience against environmental effects, aging, or deficiencies in design and construction. In the case of the KW51 bridge, it was assumed that structural deficiencies were present prior to retrofitting, which were addressed through reinforcement. Therefore, data recorded before the intervention reflect a structurally deficient state, whereas data collected afterward correspond to a structurally sound condition.

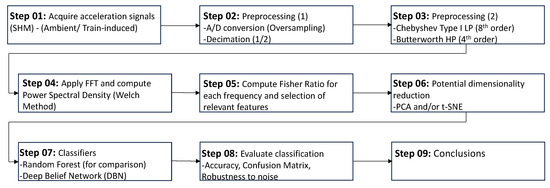

The main objective of this study is to compare the results obtained from ambient data with those derived from non-ambient (train-induced) data. This comparison aims to evaluate whether signals recorded under significant loading conditions, such as train passages, provide greater discriminatory power than signals captured in the absence of such loading. The methodology used follows the framework established in [16], as illustrated in Figure 1.

Figure 1.

Flow chart of the methodology.

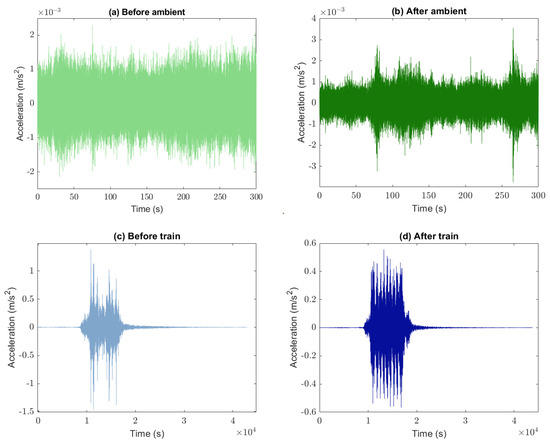

Figure 2 shows four types of acceleration signals collected by the sensors: before and after retrofitting, as well as ambient and non-ambient (train-induced) data. Notably, the maximum amplitude of the acceleration signals recorded prior to retrofitting (before-train) is more than twice that observed after retrofitting (after-train). However, the post-retrofitting data displays a more homogeneous amplitude profile, whereas the before-train signals exhibit a central region with markedly lower amplitude.

Figure 2.

Four types of signals collected by the accelerometers: before and after retrofitting, under ambient and train-induced conditions (the latter recorded while the train is crossing the bridge).

The number of train-induced samples recorded before retrofitting is 4232, while the corresponding post-retrofitting dataset contains 4968 samples—which are both approximately 50 times smaller than the ambient dataset. Furthermore, when compared to ambient data (recorded in the absence of passing trains), the amplitude of the before-train signals is approximately 750 times greater. After retrofitting, this excess amplitude is reduced to a factor of around 150.

Signals are transformed from the time domain to the frequency domain using power spectral density (PSD) analysis. The Welch PSD method offers insights into the contribution of each frequency to the total power of the signal [25]. The frequencies used in the PSD calculations correspond to the bridge’s modal frequencies, with the aim of identifying how each frequency contributes to the vibration energy and thereby revealing the structure’s natural oscillation patterns. Additionally, the PSD enables the comparison of different measurements, which helps detect behavioral changes in the bridge and assess the potential causes of failure by analyzing shifts in the contribution of these frequencies to the overall vibration energy.

As in the analysis of ambient data, once the accelerometer signals (before-train and after-train) are processed, any NaN values are removed, and the Welch PSD is computed for the 14 modal frequencies published in [3]. It is assumed that structural faults were present before the retrofitting and were effectively mitigated by the intervention.
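As a rough illustration of this processing step, the sketch below evaluates a Welch-style PSD estimate directly at a chosen list of frequencies, using only the Python standard library. The function name `welch_psd_at`, the Hann window, the 50% overlap, the two-sided density scaling, and the synthetic test signal are assumptions made for illustration; they are not taken from the original processing chain.

```python
import cmath
import math


def welch_psd_at(signal, fs, freqs, seg_len, overlap=0.5):
    """Average windowed periodograms over overlapping segments and
    return the PSD estimate at each requested frequency (in Hz)."""
    if seg_len < 2 or len(signal) < seg_len:
        raise ValueError("need seg_len >= 2 and at least one full segment")
    step = max(1, int(seg_len * (1.0 - overlap)))
    # Symmetric Hann window (an assumed, common choice).
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * n / (seg_len - 1))
              for n in range(seg_len)]
    scale = fs * sum(w * w for w in window)  # density normalization
    starts = list(range(0, len(signal) - seg_len + 1, step))
    psd = []
    for f in freqs:
        # Windowed complex exponential at the target frequency.
        kernel = [w * cmath.exp(-2j * math.pi * f * n / fs)
                  for n, w in enumerate(window)]
        total = 0.0
        for s in starts:
            seg = signal[s:s + seg_len]
            seg_mean = sum(seg) / seg_len  # remove the segment mean
            coeff = sum((x - seg_mean) * k for x, k in zip(seg, kernel))
            total += abs(coeff) ** 2 / scale
        psd.append(total / len(starts))
    return psd


# Synthetic check: a 5 Hz sine sampled at 100 Hz for 4 s.
fs = 100.0
x = [math.sin(2.0 * math.pi * 5.0 * n / fs) for n in range(400)]
p5, p12 = welch_psd_at(x, fs, [5.0, 12.0], seg_len=100)
```

In this toy case the estimate at 5 Hz dominates the estimate at 12 Hz, which is the behavior one would expect when the evaluation frequencies coincide with the dominant vibration modes.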

Deep Belief Networks (DBNs) and Restricted Boltzmann Machines (RBMs)

Deep belief networks (DBNs) are based on probabilistic graphical models, unsupervised learning, and neural networks. They consist of multiple layers of restricted Boltzmann machines (RBMs), which learn probabilistic representations of the input data.

DBNs are trained in two stages: first, the RBMs undergo unsupervised pretraining to capture hierarchical features, followed by supervised fine-tuning for classification or regression tasks [17,26]. Mathematically, each layer defines a conditional probability distribution over the preceding layer.

This hierarchical structure enables DBNs to learn increasingly abstract representations: lower layers capture basic features, while upper layers extract high-level patterns. The architecture forms a directed acyclic graph in which the upper layers serve as a generative model and the lower layers function as a discriminative network. This hybrid nature allows DBNs to effectively model complex data relationships, leveraging the combined strengths of RBMs and deep neural networks.

Restricted Boltzmann Machines (RBMs)

A restricted Boltzmann machine (RBM) consists of two layers of units:

- A set of m visible units ($\mathbf{v} \in \{0,1\}^m$), representing the input data. The notation $\mathbf{v} \in \{0,1\}^m$ means that $\mathbf{v}$ is a binary vector of dimension m, that is, a set of m elements where each element can be either 0 or 1.

- A set of n hidden units ($\mathbf{h} \in \{0,1\}^n$), representing the learned representations of the input data.

- $v_i$ and $h_j$ are the activations (0 or 1) of the visible and hidden units. In RBMs, there are no connections between the units within the same layer. The units in the visible and hidden layers are connected by weights.

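The energy of a particular state $(\mathbf{v}, \mathbf{h})$ in an RBM can be written as [27]:

$$E(\mathbf{v}, \mathbf{h}) = -\sum_{i=1}^{m} a_i v_i - \sum_{j=1}^{n} b_j h_j - \sum_{i=1}^{m} \sum_{j=1}^{n} v_i w_{ij} h_j$$

where $\mathbf{a}$ and $\mathbf{b}$ are the bias vectors for the visible and hidden layers and $w_{ij}$ represents the weight between visible unit $v_i$ and hidden unit $h_j$.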

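The joint probability of a configuration $(\mathbf{v}, \mathbf{h})$ can be written as [17]:

$$P(\mathbf{v}, \mathbf{h}) = \frac{1}{K} e^{-E(\mathbf{v}, \mathbf{h})}$$

where $K = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})}$ is the normalization factor that makes P a probability.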

The network optimization process aims to minimize the energy function in order to learn a plausible representation of the data. This is equivalent to maximizing the probability of a given configuration. Learning in a restricted Boltzmann machine (RBM) involves adjusting the weights W, the visible biases $\mathbf{a}$, and the hidden biases $\mathbf{b}$ to maximize the log-likelihood of the input data. This process is commonly referred to as RBM training.

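This is performed using the contrastive divergence (CD) method. In CD, the gradient of the log-probability with respect to the weights is given by:

$$\frac{\partial \log P(\mathbf{v})}{\partial w_{ij}} = \langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{model}}$$

where $\langle \cdot \rangle_{\text{data}}$ denotes the expectation over the empirical data distribution, and $\langle \cdot \rangle_{\text{model}}$ represents the expectation under the distribution defined by the RBM [17,28].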
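To make the update rule more concrete, the following is a minimal sketch of a single-sample CD-1 step for a binary RBM, written with plain Python lists. It assumes one Gibbs step for the negative phase, hidden probabilities (rather than sampled states) in the weight update, and hypothetical names (`cd1_update`, `W`, `a`, `b`) and sizes; it is not the implementation used in this study.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def hidden_probs(v, W, b):
    """P(h_j = 1 | v) = sigmoid(b_j + sum_i v_i * W[i][j])."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]


def visible_probs(h, W, a):
    """P(v_i = 1 | h) = sigmoid(a_i + sum_j W[i][j] * h_j)."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]


def sample_binary(probs, rng):
    """Draw independent Bernoulli samples from a list of probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(v0, W, a, b, lr, rng):
    """Apply one CD-1 parameter update in place for a single binary sample."""
    ph0 = hidden_probs(v0, W, b)       # positive phase (data statistics)
    h0 = sample_binary(ph0, rng)
    pv1 = visible_probs(h0, W, a)      # one Gibbs step back to the visible layer
    v1 = sample_binary(pv1, rng)
    ph1 = hidden_probs(v1, W, b)       # negative phase (reconstruction statistics)
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        a[i] += lr * (v0[i] - v1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])


# Hypothetical toy run: 4 visible units, 2 hidden units, one binary pattern.
rng = random.Random(0)
n_visible, n_hidden = 4, 2
W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
a = [0.0] * n_visible
b = [0.0] * n_hidden
pattern = [1, 1, 0, 0]
for _ in range(50):
    cd1_update(pattern, W, a, b, lr=0.1, rng=rng)
```

Because the visible bias update is proportional to the difference between the data and its reconstruction, the biases of units that are on in the pattern can only grow and those of units that are off can only shrink, which gives a simple sanity check for the sketch.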

A DBN is built by stacking multiple RBMs hierarchically [29]. After training an initial RBM to model the distribution of the input data, the activations of the hidden units are used as input to train the next RBM. This process is repeated for all layers, allowing each level to learn progressively more abstract representations. Mathematically, if $\mathbf{h}^{(k)}$ represents the hidden units of layer k (with $\mathbf{h}^{(0)}$ denoting the input), then [17]:

$$\mathbf{h}^{(k)} = \sigma\left( \left(W^{(k)}\right)^{\top} \mathbf{h}^{(k-1)} + \mathbf{b}^{(k)} \right)$$

where $W^{(k)}$ and $\mathbf{b}^{(k)}$ are the weights and biases of layer k, and

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

is the element-wise sigmoid function.
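The layer-wise propagation described above can be sketched in a few lines. The example assumes that each layer is stored as a pair `(W, b)` with `W[i][j]` connecting input unit i to hidden unit j; the function names and the zero-valued parameters are hypothetical and serve only to show the data flow.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def layer_forward(h_prev, W, b):
    """One layer: h[j] = sigmoid(b[j] + sum_i h_prev[i] * W[i][j])."""
    return [
        sigmoid(b[j] + sum(h_prev[i] * W[i][j] for i in range(len(h_prev))))
        for j in range(len(b))
    ]


def dbn_features(x, layers):
    """Propagate an input vector through a list of (W, b) layers."""
    h = x
    for W, b in layers:
        h = layer_forward(h, W, b)
    return h


# Hypothetical two-layer stack (3 inputs -> 2 hidden -> 1 hidden) with zero
# parameters, so every unit outputs sigmoid(0) = 0.5.
zero_layers = [
    ([[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]),
    ([[0.0], [0.0]], [0.0]),
]
features = dbn_features([1.0, 0.0, 1.0], zero_layers)
```

During greedy pretraining, the output of `layer_forward` for one trained RBM would serve as the training input of the next one.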

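After pretraining the layers, the RBMs are assembled into a DBN for supervised tasks. The supervised model adds a final output layer F that predicts the labels using the learned latent representations [17]:

$$\hat{\mathbf{y}} = F(\mathbf{x}; W^{*}, \mathbf{b}^{*})$$

where $F$ represents the DBN forward model, $\mathbf{x}$ are the inputs, and $W^{*}$ and $\mathbf{b}^{*}$ are the results of the binary cross-entropy loss function optimization:

$$(W^{*}, \mathbf{b}^{*}) = \arg\min_{W, \mathbf{b}} \; -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]$$

with $y_i \in \{0, 1\}$ the true label and $\hat{y}_i = F(\mathbf{x}_i; W, \mathbf{b})$ the predicted probability for sample i out of N.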

3. Results

This section aims to compare retrofitting predictions via DBNs using ambient and train data, highlighting their main similarities and differences. Specifically, the study assesses the discriminatory power of train data relative to ambient data. A key challenge associated with the train dataset is its imbalance: it not only contains an unequal number of measurements before and after retrofitting, but also includes 50 to 58 times fewer samples than the ambient dataset.

The signals are analyzed in the frequency domain by computing the power spectral density (PSD) at the bridge’s 14 modal frequencies. As shown in the study by Presno et al. [16], these modal frequencies are optimal for predicting the impact of retrofitting, as they reflect the bridge’s natural vibration modes and associated energy distributions.

To train and test the machine learning models, we used the values of , where represents the array of modal frequencies, categorized into two groups:

- Ambient data: Class 1, comprising 14,864 measurements recorded before retrofitting, and Class 2, consisting of 16,272 measurements taken afterward.

- Train data: Class 1, comprising 4232 measurements recorded before retrofitting, and Class 2, consisting of 4968 measurements taken afterward.

3.1. Discriminatory Frequencies for Ambient and Train Data

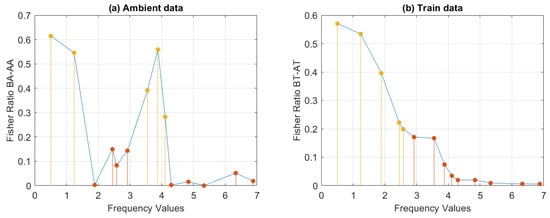

The Fisher ratio of the data is calculated to identify which features (modal frequencies) are most effective for distinguishing between the data before and after retrofitting, as explained in [16]. A high ratio value indicates that the difference between the means of the two classes is large relative to the variability within each class, suggesting that the corresponding feature is highly informative for class separation.
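As a small worked example of this criterion, the sketch below computes a two-class Fisher ratio for a single feature. It assumes the common form (difference of class means squared, divided by the sum of the class variances); the exact normalization used in [16] may differ, and the sample values are invented for illustration.

```python
from statistics import mean, pvariance


def fisher_ratio(class_a, class_b):
    """Two-class Fisher ratio for one feature:
    (mean_a - mean_b)^2 / (var_a + var_b)."""
    numerator = (mean(class_a) - mean(class_b)) ** 2
    denominator = pvariance(class_a) + pvariance(class_b)
    return numerator / denominator


# Hypothetical feature values for two classes (not measured data).
well_separated = fisher_ratio([0.0, 1.0, 2.0], [10.0, 11.0, 12.0])
overlapping = fisher_ratio([0.0, 1.0, 2.0], [1.0, 2.0, 3.0])
```

Applying `fisher_ratio` to each modal frequency in turn and ranking the results gives the kind of ordering discussed in this section.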

Figure 3 shows the values of the Fisher ratio of at the 14 modal frequencies for both ambient and train data. In the case of ambient data (Figure 3a), the Fisher ratio values do not exhibit a decreasing trend as the modal frequency increases. The maximum Fisher ratio is slightly above , and there are five modal frequencies for which the ratio exceeds the mean: , , , , and Hz.

Figure 3.

Fisher ratio of before and after retrofitting for: (a) ambient data; (b) train data. The points marked in red indicate the modal frequencies with a Fisher ratio value lower than .

A substantial difference in the case of train data is that the modal frequencies appear ordered according to the decreasing Fisher ratio, from lower to higher frequency values (see Figure 3b). Another notable difference is that no frequencies with Fisher ratios above the mean are found within intervals where surrounding frequencies fall below the mean. In other words, only the first five frequencies appear to be discriminatory with respect to the retrofitting effect.

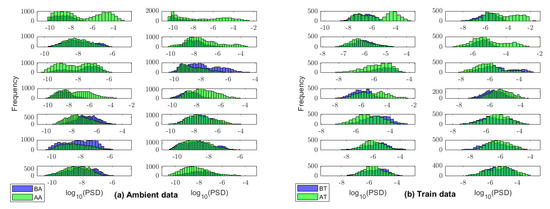

To provide additional insight into the discriminatory power of the modal frequencies, Figure 4 presents the combined histograms of before and after retrofitting for both ambient and train data. As expected, the less discriminative frequencies in the ambient data exhibit greater overlap and/or higher variability in their distributions. In contrast, the higher and less discriminative frequencies in the train data show a more Gaussian-like distribution with lower variability, while the more informative frequencies display broader distributions.

Figure 4.

Combined histogram of before and after retrofitting, considering modal frequencies arranged in ascending order from left to right and top to bottom. (a) Ambient data, (b) Train data.

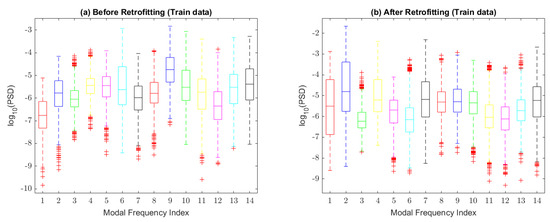

The boxplot provides a quick view of data dispersion and compares before and after retrofitting for both ambient and train data. For ambient data, [16] showed that the distribution range shifts from before retrofitting to a narrower interval post-retrofitting . This aligns with initial accelerometer signals, confirming that retrofitting effectively reduced structural vibrations.

Figure 5 shows the boxplots before and after retrofitting for train data. It can be observed that the median values of after retrofitting also fall within a narrower range than before retrofitting. Also, the distribution range was reduced from to post-retrofitting, which is significantly higher in magnitude compared to ambient data.

Figure 5.

Interquartile range of for train data at each modal frequency: (a) before retrofitting and (b) after retrofitting.

3.2. Unsupervised PCA Analysis and t-SNE

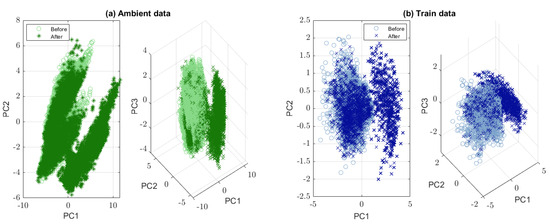

For both ambient and train data, principal component analysis (PCA) reveals the discriminatory power of the 14 modal frequencies, allowing dimensionality reduction without significant information loss, as shown in [16].

Figure 6 presents the projections of in two- and three-dimensional PCA subspaces for ambient and train data, based on the three most discriminative modal frequencies identified in each case. For ambient data, these frequencies are , , and . The first principal component explains of the variance, while the first two components account for . A total of five components are required to explain more than of the variance.

Figure 6.

Principal components 1 and 2, and 1, 2, and 3 of before (light color) and after (dark color) retrofitting, respectively: (a) ambient data, (b) train data.

For train data, the most discriminative frequencies are , , and . The first principal component explains only of the variance. Three components are needed to reach , five to exceed , and nine to surpass , indicating a higher intrinsic complexity in the train dataset.

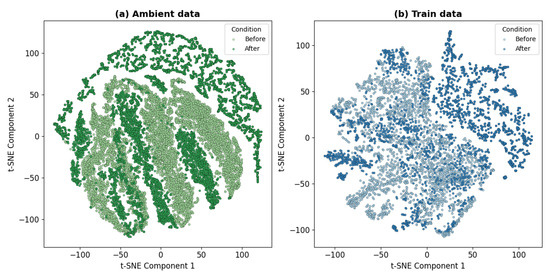

Figure 7 shows the t-SNE representation of the data, comparing the ambient (left) and train (right) datasets. t-SNE validates the effectiveness of the extracted features, assesses the discriminative power of each dataset, and complements the PCA dimensionality reduction, thereby enhancing the robustness of the classification approach. This technique reveals the structure of high-dimensional data in a two-dimensional space.

Figure 7.

t-SNE visualization of (a) ambient data and (b) train data. In both cases, the light color represents before and the dark color represents after retrofitting.

In the ambient dataset, the data points are more dispersed, suggesting less clear separability between groups. In contrast, the train dataset exhibits more compact and distinct clusters, indicating a stronger grouping of similar patterns. However, this two-dimensional projection does not fully align with the cross-validation results, implying that the DBN predictions are governed by higher-dimensional relationships that cannot be fully captured in a 2D space.

3.3. Unsupervised K-Means Classifier for Train Data

The K-means algorithm is used to divide the dataset into two clusters of similar characteristics by minimizing the sum of the squared distances from each data point to the centroid of its cluster, as shown in [16] for the case of ambient data.
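For readers unfamiliar with the procedure, the following is a minimal sketch of the two-cluster K-means iteration (Lloyd's algorithm) in plain Python. The random initialization from the data points, the iteration cap, and the toy two-dimensional points are assumptions for illustration only.

```python
import math
import random


def kmeans(points, k=2, n_iter=50, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and
    centroid recomputation until the centroids stop moving."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: each point goes to its closest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: each centroid becomes the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    [sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster fixed
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical toy data: two well-separated groups of four points each.
points = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0],
          [10.0, 10.0], [10.0, 11.0], [11.0, 10.0], [11.0, 11.0]]
labels, centroids = kmeans(points, k=2)
```

Since the cluster indices returned by K-means are arbitrary, they have to be matched to the "before" and "after" classes before a confusion matrix can be computed.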

The confusion matrix of this classifier for train data turned out to be

where represents the number of samples correctly classified as before retrofitting; represents the number of samples incorrectly classified as after retrofitting; represents the number of samples correctly classified as after retrofitting; and represents the number of samples incorrectly classified as before retrofitting. The accuracy achieved by this algorithm with the first five most discriminatory modal frequencies was %, slightly higher than % achieved with the ambient data. This suggests that train data have a greater discriminatory power in detecting the effect of retrofitting compared to ambient data, as it subjects the structure to a higher load.

The ratio represents the ability to correctly identify the samples before retrofitting. This ratio is % in the case of train data compared to for ambient data. However, the ability to correctly identify the samples after retrofitting drops to % for train data, which is still slightly higher than in the case of ambient data. It can be observed that there is a lack of ability to classify the data after retrofitting, due to the simplicity of this classifier.
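The quantities discussed in this subsection can be derived from the four entries of a binary confusion matrix. The sketch below treats "before retrofitting" as the positive class, which is an assumption about the convention used here, and the counts in the example are invented rather than taken from the experiments.

```python
def binary_metrics(tp, fn, tn, fp):
    """Accuracy and per-class recognition rates from confusion-matrix counts.

    tp: "before" samples classified as before
    fn: "before" samples classified as after
    tn: "after" samples classified as after
    fp: "after" samples classified as before
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "before_rate": tp / (tp + fn),  # ability to identify "before" samples
        "after_rate": tn / (tn + fp),   # ability to identify "after" samples
    }


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=90, fn=10, tn=60, fp=40)
```

A large gap between the two per-class rates, as in this invented example, is the pattern described above for the K-means classifier.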

3.4. Random Forest Classifier

Presno et al. [16] used a random forest classifier to predict the effect of the retrofitting by using ambient data () for both classes: before and after retrofitting. This algorithm achieved a median accuracy of with a nearly perfect receiver operating characteristic (ROC) curve. Also, the final model, tested on the entire dataset, achieved accuracy, demonstrating its effectiveness in predicting retrofitting effects for other civil structures. For the train data, the algorithm attains a median accuracy of , reaching a final model accuracy of , close to that obtained in the case of ambient data.

3.5. Deep Belief Network (DBN) Classifier

The implemented procedure provides a complete pipeline for training and evaluating a Deep Belief Network (DBN). This model combines unsupervised layer-wise learning using restricted Boltzmann machines (RBMs) with a supervised fine-tuning phase for classification tasks. The following section describes the process step by step, outlining its purpose and the mathematical foundations underlying each stage.

3.5.1. Data Loading and Preprocessing

The first stage consists of data loading and preprocessing. These data are divided into two sets: and , which are labeled as and , respectively. The two subsets are then merged into a single dataset X and normalized to ensure that each feature has a zero mean and unit standard deviation. Finally, the data are split into training and testing subsets.
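The preprocessing stage can be sketched as follows, using only the standard library. The function names, the 20% test fraction, the fixed seed, and the toy feature values are assumptions; the sketch follows the order described above (normalize, then split), although fitting the scaling on the training subset alone is a common alternative that avoids using test statistics.

```python
import random
from statistics import mean, pstdev


def standardize(X):
    """Scale every column of X to zero mean and unit standard deviation."""
    columns = list(zip(*X))
    means = [mean(col) for col in columns]
    # Fall back to 1.0 for constant columns to avoid division by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]


def train_test_split(X, y, test_fraction=0.2, seed=0):
    """Shuffle sample indices and hold out a fraction for testing."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = round(test_fraction * len(X))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


# Hypothetical toy data: two features, label 0 = before, 1 = after.
X_before = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0], [5.0, 50.0]]
X_after = [[6.0, 15.0], [7.0, 25.0], [8.0, 35.0], [9.0, 45.0], [10.0, 55.0]]
X = standardize(X_before + X_after)
y = [0] * len(X_before) + [1] * len(X_after)
X_train, X_test, y_train, y_test = train_test_split(X, y)
```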

In the next stage, unsupervised training is introduced using RBMs. A grid search for hyperparameters is also performed to optimize the model’s performance. The search evaluates different parameter configurations, such as the number of hidden units, the learning rate, and the number of epochs.

3.5.2. Hyperparameter Tuning via Grid Search

When tuning a restricted Boltzmann machine (RBM), several crucial parameters should be considered to optimize its performance:

- Number of hidden units: The number of hidden units (neurons) in the RBM determines the model’s capacity to learn complex features from the input data. Using too few hidden units may prevent the model from capturing the full complexity of the data. Conversely, an excessively high number of hidden units may lead to overfitting and increase the computational cost of training. Therefore, it is necessary to strike a balance between model complexity and data representation.

- Learning rate: This parameter controls the step size at each iteration when updating the weights. It determines how much the model adjusts its weights based on the gradient. If the learning rate is too high, training may become unstable or diverge. If too low, convergence may be slow, and the model may become trapped in local minima. Typically, values between and are used, although the optimal value may vary depending on the specific problem.

- Number of training epochs: This defines how many times the algorithm iterates over the entire dataset. Using too few epochs can lead to underfitting, where the model fails to learn sufficient patterns. In contrast, too many epochs can cause overfitting, where the model memorizes the training data rather than generalizing to new inputs.

- Batch size: This refers to the number of samples processed in each training step. Smaller batches allow more frequent weight updates but yield noisier gradient estimates. Larger batches produce smoother gradients but may slow convergence and require more memory. In this study, cross-validation is used to evaluate generalization performance and mitigate the risk of overfitting.

The hyperparameter analysis explored various configurations for the number of layers, units, and learning rates according to the following variables:

- Model complexity

  - Number of layers:

  - Number of units per layer:

- Learning rate

- Number of training epochs

  - Epochs per RBM layer:

  - Epochs per DBN layer:

- Batch size:

Cross-validation experiments were conducted with partitions. This analysis has shown that the optimal configurations were:

- Number of layers: 4

- Number of units per layer:

- Learning rate:

- Epochs per RBM layer: 10

- Epochs per DBN layer: 100

The performance of a deep belief network (DBN) strongly depends on the careful selection of hyperparameters.

For DBNs, the per-epoch time complexity is approximately per layer, where n is the number of samples and h the number of hidden units. The use of pretraining with RBMs mitigates training time and improves convergence. In this study, a grid search was performed to identify the optimal network configuration by evaluating key parameters, including the number of hidden units, learning rate, training epochs, and batch size [29]. The results suggest that moderately complex architectures outperform deeper networks, which are more susceptible to overfitting.

The best-performing configuration consisted of four layers with 128 to 1024 units per layer. In this case, a learning rate of provided the best balance between speed and stability. Optimal performance was achieved with 10 epochs per layer during the pretraining phase using Restricted Boltzmann Machines (RBMs), and 100 epochs in the supervised fine-tuning phase. Additionally, a 5-fold cross-validation was performed to evaluate the model’s generalization ability.
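The exhaustive search over configurations can be sketched generically as below. The grid values and the `toy_score` function are purely hypothetical stand-ins: in practice the score would be the mean cross-validation accuracy of a DBN trained with the given configuration, and the candidate values would be those explored in this study.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every combination in param_grid and keep the best one."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical candidate values, for illustration only.
param_grid = {
    "n_layers": [2, 3, 4],
    "n_units": [128, 256],
    "learning_rate": [0.001, 0.01, 0.1],
}


def toy_score(params):
    """Stand-in for the cross-validated accuracy of a trained model."""
    return -abs(params["n_layers"] - 4) - abs(params["learning_rate"] - 0.01)


best_params, best_score = grid_search(param_grid, toy_score)
```

The cost of such a search grows with the product of the number of candidate values per hyperparameter, which is one reason to keep each list short.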

3.5.3. Cross-Validation Results with Ambient and Train Data Using DBNs

After pretraining, the DBN is fine-tuned using a fully connected output layer with a sigmoid activation function to perform binary classification. The entire network is then trained end-to-end using binary cross-entropy loss and the Adam optimization algorithm, refining the RBM-learned features.

To ensure that this fine-tuning process results in a model capable of generalizing beyond the training data, cross-validation is employed as a key evaluation technique. This approach provides a robust assessment of generalization performance, and the results show low variability across the folds. Cross-validation is particularly valuable for detecting issues such as overfitting, where the model memorizes training examples rather than learning generalizable patterns.

The statistical descriptors used to report the cross-validation performance were:

The training and testing sizes are determined using 5-fold cross-validation, which splits the dataset into five equally sized subsets. In each fold, four subsets (80%) are used for training and one subset (20%) for testing. The process is repeated five times to ensure robustness and minimize bias.

- Ambient data:

  - Training size: samples (approx. of the total ambient dataset)

  - Testing size: 6727 samples (approx. )

- Train data:

  - Training size: 7608 samples (approx. of the total train dataset)

  - Testing size: 1892 samples (approx. )
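The way a k-fold scheme determines training and testing sizes can be illustrated with a short index generator. The contiguous, unshuffled folds and the sample count of 103 are assumptions chosen to keep the example small; a real pipeline would typically shuffle the samples first.

```python
def kfold_indices(n_samples, n_folds=5):
    """Yield (train_idx, test_idx) pairs for contiguous, near-equal folds."""
    base, extra = divmod(n_samples, n_folds)
    start = 0
    for fold in range(n_folds):
        # The first `extra` folds receive one additional sample.
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


# Hypothetical dataset of 103 samples split into five folds.
folds = list(kfold_indices(103, n_folds=5))
fold_sizes = [(len(train), len(test)) for train, test in folds]
```

Each sample appears in exactly one test fold, so every measurement is used for testing once and for training four times.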

Table 1 shows the cross-validation results using the train and ambient datasets with 14 modal frequencies. Ambient data achieve a higher mean accuracy () but with greater variability (), ranging from (Fold 1) to (Fold 3). In contrast, train data show a lower mean accuracy (), suggesting potential room for improvement, but with more consistent results (), with accuracy values between (Fold 3) and (Fold 5). These results suggest that, while the model benefits from a higher accuracy when trained on ambient data, its performance is more stable with train data, albeit at a slightly lower accuracy.

Table 1.

Cross-validation accuracy results for ambient and train data at 14 modal frequencies.

To address this, an expanded feature set using 100 evenly distributed frequencies within the modal frequency range was evaluated, leading to a significant accuracy improvement, as shown in Table 2. Although the preselection of 14 modal frequencies had previously shown no relevant information loss in other machine learning models [16], this assumption does not extend directly to DBN-based approaches involving unsupervised learning. In this case, incorporating a broader frequency range of 100 frequencies significantly enhanced the results.

Table 2.

Cross-validation accuracy results for ambient and train data with the information of the evenly distributed 100 frequencies. The training and testing sizes are the same as in the previous case.

To further investigate this trend, additional tests were performed using 500 and 1000 equidistant frequencies within the same range. The mean accuracy for the training data reached with 500 frequencies and with 1000, both considerably higher than the obtained with 100 frequencies. This behavior suggests that DBNs benefit from a dense parameterization of the feature space in order to fully exploit their learning capacity.

Table 2 presents the cross-validation accuracy results for ambient data and train data, both evaluated using five folds with the first 100 frequencies. Ambient data achieve a higher mean accuracy () but with greater variability (), with accuracy values ranging from (Fold 4) to (Fold 5). Train data, on the other hand, shows a slightly lower mean accuracy () but with more consistent results (), with accuracy values between (Fold 1) and (Fold 3). These results suggest that, while both datasets yield high accuracy, the model trained on ambient data achieves slightly better performance at the cost of increased variability, whereas the model trained on train data offers more stable results.

The confusion matrices obtained after model evaluation on unseen test data, for ambient data () and train data () are:

Both matrices present a good balance between true positives and negatives, revealing high sensitivity and specificity in classification.
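As a brief illustration of how sensitivity and specificity follow from a binary confusion matrix, consider the sketch below. Which structural state is treated as the positive class is an assumption here, the helper `binary_metrics` is hypothetical, and the counts are placeholders, not the entries of the matrices obtained in the study.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute sensitivity, specificity, and accuracy from confusion-matrix counts."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present")
    return {
        "sensitivity": tp / (tp + fn),   # true positive rate
        "specificity": tn / (tn + fp),   # true negative rate
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
    }


# Placeholder counts for illustration only.
metrics = binary_metrics(tp=45, fn=5, fp=3, tn=47)
```

A matrix is well balanced in the sense used above when both sensitivity and specificity are high, that is, when neither class dominates the misclassifications.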

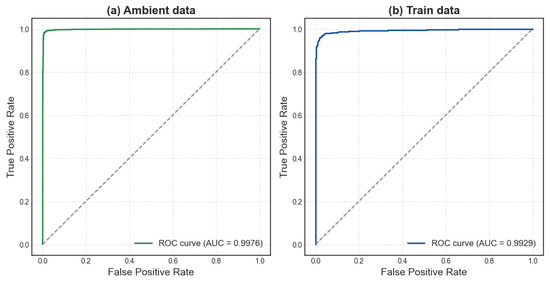

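The model is also evaluated using the receiver operating characteristic (ROC) curve, which describes the relationship between the true positive rate (TPR) and the false positive rate (FPR):

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}$$

where $TP$, $FN$, $FP$, and $TN$ denote the numbers of true positives, false negatives, false positives, and true negatives, respectively. The area under the curve (AUC) is calculated as:

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}(t)\, dt$$

where $t$ stands for the false positive rate.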

Figure 8 shows the ROC curves for the ambient and train data. The AUC is very high in both cases ( for ambient and for the train data).

Figure 8.

Receiver operating characteristic (ROC) curves.
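A minimal sketch of how an ROC curve and its AUC can be obtained from classifier scores is given below. It sweeps the decision threshold over the scores and integrates TPR over FPR with the trapezoidal rule; the function names are hypothetical, and the labels and scores are toy values rather than model outputs from the study.

```python
def roc_points(labels, scores):
    """Return (fpr, tpr) lists obtained by lowering the threshold over the scores.

    labels are 0/1; a higher score means stronger evidence for class 1.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for k, i in enumerate(order):
        if labels[i] == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample sharing this score (handles ties).
        if k + 1 == len(order) or scores[order[k + 1]] != scores[i]:
            fpr.append(fp / neg)
            tpr.append(tp / pos)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Integrate TPR over FPR with the trapezoidal rule."""
    return sum(
        (fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2.0
        for k in range(1, len(fpr))
    )


# Toy labels and scores for illustration only.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
fpr, tpr = roc_points(labels, scores)
auc = auc_trapezoid(fpr, tpr)
```

For this toy input, eight of the nine positive-negative pairs are ranked correctly, so the AUC equals 8/9.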

These results show no signs of overfitting, which would typically manifest as poor generalization: the model retains high classification performance on unseen test data for both datasets.

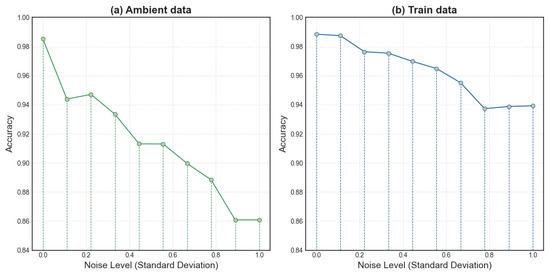

3.5.4. Robustness to Noise

The robustness of the model against noise is also evaluated by adding Gaussian noise to the test data:

where represents the noise level. This evaluation analyzes how the model’s accuracy changes as the noise in the input data increases. In our case, the noise is added directly to the attributes, which are .

A noise-robust DBN improves generalization, enhances reliability under real-world noisy conditions, reduces errors in inverse problems, and supports adaptable, reliable models for optimization and machine learning tasks.
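The noise evaluation can be sketched as follows, assuming additive zero-mean Gaussian noise whose standard deviation equals the noise level and which is applied only to the test features. The threshold classifier `toy_predict` is a hypothetical stand-in for the trained DBN, and the data and noise levels are placeholders; whether the study scales the noise relative to the feature magnitudes is not assumed here.

```python
import random


def add_gaussian_noise(X, sigma, rng):
    """Return a noisy copy of X; every feature receives independent N(0, sigma^2) noise."""
    return [[x + rng.gauss(0.0, sigma) for x in row] for row in X]


def accuracy(predict, X, y):
    """Fraction of samples for which predict(row) matches the label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def noise_sweep(predict, X_test, y_test, sigmas, seed=0):
    """Evaluate accuracy on noisy copies of the test set for each noise level."""
    rng = random.Random(seed)   # fixed seed so the sweep is reproducible
    return [
        (sigma, accuracy(predict, add_gaussian_noise(X_test, sigma, rng), y_test))
        for sigma in sigmas
    ]


def toy_predict(row):
    """Hypothetical stand-in classifier: threshold on the first feature."""
    return 1 if row[0] > 0.5 else 0


# Placeholder test set and noise levels for illustration only.
X_test = [[0.1, 0.3], [0.2, 0.1], [0.8, 0.9], [0.9, 0.7]]
y_test = [0, 0, 1, 1]
curve = noise_sweep(toy_predict, X_test, y_test, [0.0, 0.1, 0.5])
```

Plotting the accuracy values in `curve` against the noise levels gives a robustness curve of the kind discussed in this subsection.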

Figure 9 shows the impact of noise on the model’s accuracy for ambient data (left) and train data (right). It can be observed that:

Figure 9.

Robustness to noise on model accuracy.

1. Both graphs show a decreasing trend in accuracy as the noise level increases, indicating that the model’s performance deteriorates under noisier conditions. This trend is expected, as higher noise levels introduce uncertainty, making it more difficult for the model to preserve high accuracy.
2. For ambient data, accuracy fluctuates slightly, with a noticeable dip in the range , followed by a minor increase before continuing to decline.
3. In contrast, the train data results appear more robust to noise, with a smoother and more gradual decline in accuracy. Even at higher noise levels, the performance does not degrade as sharply as with ambient data.
4. The train data accuracy remains relatively stable at lower noise levels, with slight fluctuations in the range , including a brief increase before gradually declining. A more noticeable drop occurs around , after which accuracy stabilizes slightly.
5. The ambient data graph (left) exhibits a steeper decline in accuracy compared to the train data graph (right). At the highest noise level, the accuracy for ambient data drops to , whereas the train data maintains a slightly higher accuracy of approximately .

These findings indicate that the DBN is more robust to noise on the train data than on the ambient data. This behavior may be attributed to the higher signal-to-noise ratio and structural clarity of train-induced vibrations, which help the model retain predictive capacity under noise. In contrast, the ambient data appear more susceptible to distortion, possibly due to their lower amplitude and more complex background variability.

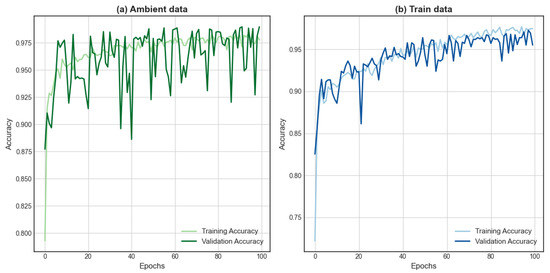

Figure 10 illustrates the accuracy progression during training and validation on both the ambient and train datasets. In both cases, the model shows proper convergence, indicating that the selected hyperparameters are suitable.

Figure 10.

Learning curves: training and validation accuracy.

The graph presents the learning curve, showing the evolution of training and validation accuracy over 100 epochs. Initially, both accuracies (training and validation) increase rapidly, stabilizing around . The validation accuracy exhibits greater fluctuations, especially up to 30 epochs for the train data, but follows a trend similar to the training accuracy, indicating that the model generalizes well. The presence of fluctuations in validation accuracy suggests some variability in model performance, though it remains close to the training accuracy, indicating an overall good fit without severe overfitting.

4. Discussion

The results obtained in this study confirm the effectiveness of deep belief networks (DBNs) for structural state classification within structural health monitoring (SHM) systems, validating the working hypothesis that DBNs can match or outperform traditional and state-of-the-art machine learning models, particularly in scenarios involving complex excitation signals. This finding aligns with previous works that emphasize the benefits of deep learning architectures for SHM tasks [22,23,24]. However, unlike most prior studies that focused on image-based or vibration data under a single excitation source, our work expands this scope by demonstrating the DBN performance across both ambient and train-induced vibration datasets, offering a more comprehensive evaluation of model robustness.

Compared to Presno et al. [16], who employed a Random Forest classifier on the same dataset, the DBN showed comparable or superior performance, especially when dealing with the more intricate dynamics of train-induced signals. While the Random Forest model achieved marginally better accuracy for ambient data, the DBN outperformed it in the train dataset. This result reinforces the advantage of deep hierarchical models in capturing nuanced temporal and spectral features that simpler ensemble methods may overlook.

Moreover, our implementation of dimensionality reduction techniques (PCA and t-SNE) not only improved computational efficiency but also facilitated clearer class separability in the input space. This outcome supports previous studies that recommend feature space transformations for enhancing classification tasks in high-dimensional SHM datasets [10]. The observed improvement in DBN classification performance after these transformations validates their role in identifying discriminative features relevant to retrofitting detection.

The noise robustness experiments further underscore the practical applicability of DBNs in real-world SHM settings, where sensor data are frequently contaminated by environmental or operational noise. The model’s ability to maintain over 90% classification accuracy for ambient data under moderate noise levels () confirms its resilience, although performance degradation under high-noise conditions suggests an avenue for future work. Strategies such as data augmentation, denoising autoencoders, or robust training methods may enhance noise tolerance in subsequent developments.

From a broader perspective, the findings have meaningful implications for infrastructure management. DBNs offer an automated, accurate, and scalable tool for continuous condition monitoring, enabling more informed decisions about retrofitting, maintenance, and resource allocation. The ability to reliably process data from different excitation scenarios expands the operational range of SHM systems and supports the deployment of intelligent sensor networks in diverse civil engineering contexts.

Despite the promising results, several limitations remain. The imbalance between ambient and train-induced datasets affected the learning dynamics, particularly under complex excitation. Future research should explore balancing strategies or transfer learning techniques to improve model generalization under asymmetric data distributions. Additionally, the exploration of hybrid deep architectures, such as DBN-CNN or DBN-LSTM models, may further improve performance by leveraging the strengths of different learning paradigms.

Preliminary experiments using long short-term memory (LSTM) networks have also demonstrated the potential of recurrent models to capture the temporal evolution of structural behavior during retrofitting. By framing transition detection as a sequence classification task, the model has shown early success in identifying dynamic shifts from real-world vibration data. These findings support the suitability of recurrent architectures for progressive monitoring in SHM. As part of future work, comparative studies will be conducted with other deep learning approaches, such as hybrid CNN-LSTM models for vision-based modal frequency tracking [19], the LSTM-based reconstruction of incomplete monitoring signals [20], and CNN-GRU frameworks for denoising and data recovery in SHM systems [21]. These directions aim to enhance robustness under varying operational conditions and expand the scope of structural health assessment.

In conclusion, this study provides empirical support for the integration of DBNs in SHM applications, laying the groundwork for more advanced machine learning frameworks tailored to infrastructure monitoring. Future research should not only refine model robustness and scalability but also investigate real-time deployment strategies in operational SHM systems.

5. Conclusions

This study has explored the application of deep belief networks (DBNs) for classifying structural states in the context of structural health monitoring (SHM), using real-world data collected under ambient and train-induced vibrations. The results demonstrate that DBNs are capable of extracting informative features from high-dimensional accelerometer data, achieving high classification accuracy with strong generalization across different data folds.

The implementation of dimensionality reduction techniques such as PCA and t-SNE enhanced class separability and provided insights into the discriminative power of extracted features. These tools confirmed the DBN’s ability to distinguish between pre- and post-retrofitting structural conditions in both excitation scenarios.

Comparative analysis against previously published results based on random forest models revealed that, while random forests slightly outperformed DBNs under ambient excitation, DBNs yielded better performance under more complex conditions, such as train-induced vibrations. This highlights their robustness and suitability for real-time monitoring in operational environments.

Furthermore, the study evaluated the model’s resilience to noise, revealing that DBNs can tolerate moderate levels of artificial perturbation, particularly in ambient data. However, a notable drop in accuracy under severe noise conditions indicates the need for enhanced robustness techniques.

In summary, DBNs represent a promising approach for data-driven structural assessment, offering scalability, high performance, and adaptability to various SHM settings. Their integration into SHM systems could significantly improve maintenance strategies and infrastructure reliability. Future work will focus on improving robustness to noise, handling imbalanced datasets, and exploring hybrid architectures to further boost predictive capabilities.

Author Contributions

Conceptualization, Á.P.V., Z.F.M. and J.L.F.M.; methodology, Á.P.V., Z.F.M. and J.L.F.M.; software, Á.P.V.; validation, Á.P.V., Z.F.M. and J.L.F.M.; formal analysis, Á.P.V., Z.F.M. and J.L.F.M.; investigation, Á.P.V., Z.F.M. and J.L.F.M.; resources, Á.P.V., Z.F.M. and J.L.F.M.; data curation, Á.P.V., Z.F.M. and J.L.F.M.; writing—original draft preparation, Á.P.V., Z.F.M. and J.L.F.M.; writing—review and editing, Á.P.V., Z.F.M. and J.L.F.M.; visualization, Z.F.M. and J.L.F.M.; supervision, Z.F.M. and J.L.F.M.; project administration, Z.F.M. and J.L.F.M.; funding acquisition, Z.F.M. and J.L.F.M. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by Mathematics MDPI.

Data Availability Statement

The raw data supporting the findings of this study are openly available in Zenodo at: Kristof Maes & Geert Lombaert. (2020). Monitoring data for railway bridge KW51 in Leuven, Belgium, before, during, and after retrofitting (1.0) [Data set]. Zenodo. https://doi.org/10.5281/zenodo.3745914. Derived datasets generated during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SHM | Structural Health Monitoring |
| DBN | Deep Belief Network |
| RBM | Restricted Boltzmann Machine |
| PCA | Principal Component Analysis |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |
| PSD | Power Spectral Density |
| ROC | Receiver Operating Characteristic |
| CV | Cross-Validation |
| RF | Random Forest |
| SNR | Signal-to-Noise Ratio |
| ML | Machine Learning |
| AI | Artificial Intelligence |
| DL | Deep Learning |

References

1. Maes, K.; Lombaert, G. Monitoring railway bridge KW51 before, during, and after retrofitting. J. Bridge Eng. 2021, 26, 04721001.
2. Omori Yano, M.; Figueiredo, E.; da Silva, S.; Cury, A.; Moldovan, I. Transfer Learning for Structural Health Monitoring in Bridges That Underwent Retrofitting. Buildings 2023, 13, 2323.
3. Maes, K.; Van Meerbeeck, L.; Reynders, E.P.B.; Lombaert, G. Validation of vibration-based structural health monitoring on retrofitted railway bridge KW51. Mech. Syst. Signal Process. 2022, 165, 108380.
4. Altabey, W.A.; Noori, M. Artificial-Intelligence-Based Methods for Structural Health Monitoring. Appl. Sci. 2022, 12, 12726.
5. Plevris, V.; Papazafeiropoulos, G. AI in Structural Health Monitoring for Infrastructure Maintenance and Safety. Infrastructures 2024, 9, 225.
6. Farrar, C.; Worden, K. Structural Health Monitoring: A Machine Learning Perspective; John Wiley & Sons: Hoboken, NJ, USA, 2012.
7. Ye, X.W.; Jin, T.; Yun, C.B. A Review on Deep Learning-Based Structural Health Monitoring of Civil Infrastructures. Smart Struct. Syst. 2019, 24, 567–585.
8. Jung, M.; Koo, J.; Choi, A.J. Advances in Structural Health Monitoring: Bio-Inspired Optimization Techniques and Vision-Based Monitoring System for Damage Detection Using Natural Frequency. Mathematics 2024, 12, 2633.
9. Cha, Y.; Ali, R.; Lewis, J.; Buyukozturk, O. Deep Learning-Based Structural Health Monitoring. Autom. Constr. 2024, 161, 105328.
10. Azimi, M.; Eslamlou, A.D.; Pekcan, G. Data-Driven Structural Health Monitoring and Damage Detection through Deep Learning: State-of-the-Art Review. Sensors 2020, 20, 2778.
11. Sargiotis, D. Transforming Civil Engineering with AI and Machine Learning: Innovations, Applications, and Future Directions. Int. J. Res. Publ. Rev. 2025, 6, 3780–3805.
12. Mansouri, T.S.; Lubarsky, G.; Finlay, D.; McLaughlin, J. Machine Learning-Based Structural Health Monitoring Technique for Crack Detection and Localisation Using Bluetooth Strain Gauge Sensor Network. J. Sens. Actuator Netw. 2024, 13, 79.
13. Tang, Y.; Chen, Z.; Wang, K.; Li, H. Vehicle Load Identification Based on Bridge Response Using Deep Learning. J. Civ. Struct. Health Monit. 2024, 14, 2328634.
14. Malekloo, A.; Ozer, E.; AlHamaydeh, M.; Girolami, M. Machine learning and structural health monitoring overview with emerging technology and high-dimensional data source highlights. Struct. Health Monit. 2021, 21, 147592172110368.
15. Lee, Y.; Kim, H.; Min, S.; Yoon, H. Structural damage detection using deep learning and FE model updating techniques. Sci. Rep. 2023, 13, 18694.
16. Presno Vélez, A.; Fernández Muñiz, M.Z.; Fernández Martínez, J.L. Enhancing Structural Health Monitoring with Machine Learning for Accurate Damage Prediction. AIMS Math. 2024, 9, 30493–30514.
17. Hinton, G.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.
18. Kamada, S.; Ichimura, T.; Iwasaki, T. An Adaptive Structural Learning of Deep Belief Network for Image-based Crack Detection in Concrete Structures Using SDNET2018. In Proceedings of the 2020 International Conference on Image Processing and Robotics (ICIP), Negombo, Sri Lanka, 6–8 March 2020.
19. Yang, R.; Singh, S.K.; Tavakkoli, M.; Amiri, N.; Yang, Y.; Karami, M.A.; Rai, R. CNN-LSTM Deep Learning Architecture for Computer Vision-Based Modal Frequency Detection. Mech. Syst. Signal Process. 2020, 144, 106885.
20. Ba, P.; Zhu, S.; Chai, H.; Liu, C.; Wu, P.; Qi, L. Structural Monitoring Data Repair Based on a Long Short-Term Memory Neural Network. Sci. Rep. 2024, 14, 9974.
21. Nhung, N.T.C.; Bui, H.N.; Minh, T.Q. Enhancing Recovery of Structural Health Monitoring Data Using CNN Combined with GRU. Infrastructures 2024, 9, 205.
22. Fu, L.; Tang, Q.; Gao, P.; Xin, J.; Zhou, J. Damage Identification of Long-Span Bridges Using the Hybrid of Convolutional Neural Network and Long Short-Term Memory Network. Algorithms 2021, 14, 180.
23. Zhang, G.Q.; Wang, B.; Li, J.; Xu, Y.-L. The Application of Deep Learning in Bridge Health Monitoring: A Literature Review. Adv. Bridge Eng. 2022, 3, 22.
24. Qin, Y.; Tang, Q.; Xin, J.; Yang, C.; Zhang, Z.; Yang, X. A Rapid Identification Technique of Moving Loads Based on MobileNetV2 and Transfer Learning. Buildings 2023, 13, 572.
25. Welch, P. The use of fast Fourier transform for the estimation of power spectra: A method based on time averaging over short, modified periodograms. IEEE Trans. Audio Electroacoust. 1967, 15, 70–73.
26. Fischer, A.; Igel, C. Training Restricted Boltzmann Machines: An Introduction. Pattern Recognit. 2014, 47, 25–39.
27. Smolensky, P. Information processing in dynamical systems: Foundations of harmony theory. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition; MIT Press: Cambridge, MA, USA, 1986; Volume 1, pp. 194–281.
28. Hinton, G. Training products of experts by minimizing contrastive divergence. Neural Comput. 2002, 14, 1771–1800.
29. Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy Layer-Wise Training of Deep Networks. Adv. Neural Inf. Process. Syst. 2007, 19, 153–160.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).