Abstract

Self-supervised depth estimation methods enable the recovery of scene depth information from monocular endoscopic images, thereby assisting endoscopic navigation. However, existing monocular endoscopic depth estimation methods generally fail to capture the inherent continuity of depth in intestinal structures. To address this limitation, this work presents the Mono-ViM framework, a CNN-Mamba hybrid architecture that enhances depth estimation accuracy through an innovative depth-first scanning mechanism. The proposed framework comprises a Depth Local Visual Mamba module employing depth-first scanning to extract rich structural features, and a cross-query layer, which reframes depth estimation as a soft classification problem to significantly enhance robustness and uncertainty handling in complex endoscopic environments. Experimental results on the SimCol Dataset and C3VD demonstrate that the proposed method achieves high depth estimation accuracy, with Abs Rel of 0.070 and 0.084, respectively. These results correspond to error reductions of 16.7% and 19.4% compared to existing methods, highlighting the efficacy of the proposed approach.

MSC:

68T07

1. Introduction

Endoscopic technology, as a pivotal diagnostic tool in modern minimally invasive medicine, plays a critical role in clinical practice due to its minimally invasive access and real-time imaging capabilities [1]. With the increasing prevalence of gastrointestinal cancers and related diseases, precise intraoperative navigation and spatial awareness are essential for enhancing early lesion detection and ensuring surgical safety. However, in complex anatomical regions, conventional endoscopic systems with a limited field of view often impede surgeons’ ability to precisely assess spatial distances between instrument tips and target tissues, posing operational risks from visual blind zones, such as tissue injury or perforation. Monocular depth estimation based on deep learning addresses this geometric perception deficiency by reconstructing 3D depth maps directly from single-frame endoscopic images. This approach compensates for the inherent lack of geometric awareness in endoscopic visualization systems [2]. It provides a fundamental geometric basis for safe automatic robotic intervention, accurate lesion measurement, and quantitative postoperative assessment. Supervised depth estimation methods rely on ground-truth depth for model training. However, acquiring high-precision depth labels is difficult in the confined operational environments of endoscopes [3]. In contrast, self-supervised approaches that use sequential images have become a research priority because they predict depth without labeled data through multi-view geometric consistency constraints and Structure-from-Motion (SfM) optimization. Self-supervised methods are better suited to real clinical data, because the natural geometric relationships between consecutive frames in endoscopic videos provide the supervisory signal. In addition, depth information learned without labels can generalize and adapt to different organs and lighting conditions.

Several self-supervised paradigms have been explored for monocular depth estimation. Geometry and temporal-consistency methods [4,5] leverage multi-view reconstruction and Structure-from-Motion (SfM) constraints to predict depth, yet they are sensitive to non-rigid tissue deformation. Optical-flow-based approaches [6] jointly estimate motion and depth to enhance robustness in dynamic scenes, but their performance deteriorates when large occlusions occur. Generative reconstruction models [7], which synthesize target views as implicit supervision, are efficient, but they struggle to preserve fine structural details in complex endoscopic scenes.

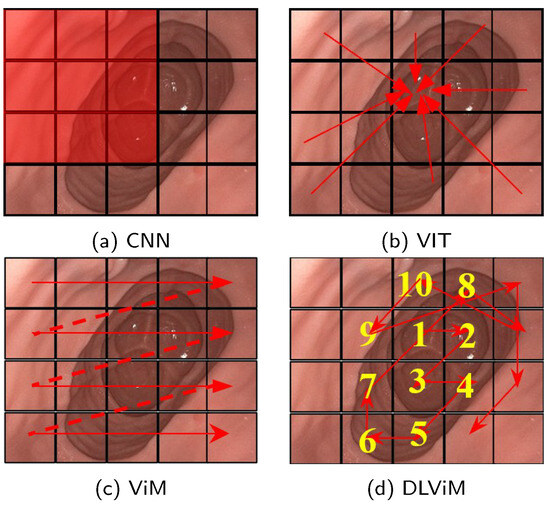

Recent model architectures [8] centered on convolutional neural networks (CNNs) and Vision Transformers (ViTs) [9] have demonstrated remarkable efficacy in monocular self-supervised depth estimation. However, both CNN-based and ViT-based models exhibit inherent limitations. CNNs, which use convolutional operations to extract local image features such as edges and textures, demonstrate strong local feature perception, as shown in Figure 1a. However, their restricted receptive fields (the region of the image a model can analyze in a single operation) limit their ability to model geometric correlations between spatially distant regions. ViTs divide the input image into non-overlapping patch sequences and establish global long-range dependencies via multi-head self-attention, as illustrated in Figure 1b. Self-attention allows ViTs to capture relationships across the entire image, but computing the attention weights incurs quadratic complexity in the number of image tokens, resulting in significant overhead when processing high-resolution endoscopic images. The recently proposed Vision Mamba (ViM) architecture [10], based on Structured State Space Models (SSMs), a framework for modeling dynamic systems that evolve over time, represents a breakthrough, as shown in Figure 1c. ViM captures positional information across all directions through a cross-scan strategy while maintaining linear computational complexity for long-range spatial relationship modeling. Nevertheless, its predefined fixed scanning paths may adapt poorly to anisotropic feature distributions, where features vary unevenly across directions, in complex anatomical structures. For example, the rigid, geometry-driven scanning order often disrupts the continuity of semantically cohesive regions, thereby hindering effective learning of the structural priors essential for depth estimation.

Figure 1.

Feature extraction methods in depth estimation. The red rectangle represents the convolution kernel, the red arrows, dotted lines and numbers indicate the processing order of the algorithm. (a) CNN extracts local features using sliding convolutional kernels. (b) ViT splits the image into patches and applies self-attention to model inter-patch relationships, enabling global feature extraction. (c) ViM employs a cross-scanning state space model to efficiently capture global receptive fields. (d) DLViM adopts a depth-first dynamic modeling path to extract global structural features.

To obtain omnidirectional position information and adapt to complex environments while reducing computation, this work proposes Mono-ViM, a lightweight and efficient self-supervised deep learning model based on the Mamba architecture. The proposed method uses the efficient sequence modeling of SSMs to achieve linear computational complexity. Compared with its base method, ViM, it adds a depth-first scanning mechanism coupled with a cross-query interaction module. The Depth Local Visual Mamba (DLViM) extends the ViM framework with adaptive depth-first scanning to model anatomical continuity, while the Cross-Query Layer (CQL), inspired by ViTs, employs query-based interaction to enhance global contextual understanding. DLViM is designed for endoscopic imaging, where depth continuity induces distinct feature representations across varying tissue layers. Its core is a depth-first scanning mechanism that prioritizes the serialized processing of image tokens originating from deeper anatomical structures before progressing to shallower ones. This scanning order is inherently aligned with the spatial configuration of endoscopic scenes, allowing the model to first establish a coherent representation of the underlying tissue geometry. As illustrated in Figure 1d, this sequential feature aggregation from deep to shallow enhances the correlation of features along the depth dimension, thereby preserving the global spatial coherence of the environment. Furthermore, the enhanced cross-query layer uses a ViT to turn encoded features into object queries, which interrogate the decoded depth map to derive depth bins and probability maps for high-precision depth prediction.

The main contributions of this work can be summarized as follows:

- Propose Mono-ViM, a lightweight Mamba-based model for monocular depth estimation in endoscopic images.

- Propose a novel depth-first scanning strategy to enhance local feature representation.

- Propose a Cross-Query Layer to boost fine-grained detail representation.

- Demonstrate that Mono-ViM is simple, effective, and more accurate than prior methods through comprehensive experiments on the SimCol dataset, C3VD, and KITTI.

2. Related Work

2.1. Monocular Depth Estimation

Estimating depth from a single image is an inherently ill-posed problem. Nevertheless, deep learning methods have enabled significant progress in the field.

Using ground-truth depth as supervision, the predictive model can exploit the relationship between color images and their corresponding depth values. Eigen et al. [11] first introduced a multi-scale convolutional framework combining global coarse prediction and local fine refinement, inaugurating the use of CNNs for depth regression. Subsequent studies enhanced accuracy through various architectural and loss-level innovations, such as Conditional Random Fields [12,13] for post-processing, and the reverse Huber loss with improved up-sampling modules [14]. These networks demonstrated that deeper and better-designed CNNs could significantly improve pixel-wise depth prediction quality. Moreover, Fu et al. [15] proposed a multi-scale ordinal regression strategy. However, ground-truth depth is challenging to acquire in varied real-world settings. Recent work has shown that conventional structure-from-motion (SfM) pipelines [16] can generate a sparse training signal for both camera pose and depth.

As fully supervised methods advanced rapidly, the limited availability of precise depth labels in the real world became a major issue, which motivated self-supervised alternatives. Network architectures also play an important role in achieving good results in self-supervised depth estimation. Numerous studies explored monocular depth estimation through architectural refinements such as attention mechanisms [17], multi-scale feature modulation [18], and lightweight backbone designs [19]. These methods can capture local and global cues, yet they are limited by either computational complexity or restricted receptive fields. With the evolution of deep vision models, self-supervised depth estimation based on CNNs and ViTs has achieved significant progress. Monodepth2 [20] substantially improves the accuracy and robustness of monocular video depth prediction through photometric consistency loss in multi-view synthesis and an automatic masking mechanism to handle dynamic objects and occlusions. SQLDepth [21] further captures fine-grained scene structures via self-query layers and self-cost convolution. SPIDepth [22] introduces a more robust pose network to enhance geometric understanding of scenes, thereby achieving more precise depth estimation. Lite-Mono [23] enables real-time and efficient inference on resource-constrained devices through depthwise separable convolutions and channel attention mechanisms. MonoViT [24] leverages vision transformers with multi-scale feature fusion and self-supervised learning to obtain high-precision depth predictions without paired annotations. However, CNNs are constrained by their limited local receptive fields, while ViTs suffer from quadratic computational complexity. To address these limitations, we propose a ViM-based depth estimation model that achieves a balanced integration of efficient computation and long-range dependency modeling.

2.2. State Space Models and Vision Mamba

Structured State Space Models (SSMs) have emerged as a powerful framework for long-range sequence modeling. S4 [25] leverages parameterized state transitions and convolutional kernels to achieve linear-complexity infinite-context modeling. However, its time-invariant design—with static system dynamics over time—limits adaptation to dynamic patterns. Mamba [26] overcomes this via selective SSMs (S6), where input-dependent gating dynamically adjusts state transitions, enabling feature-aware computation without sacrificing efficiency.

VMamba [27] adapts Mamba to vision via cross-scan patch partitioning, transforming 2D images into 1D sequences while preserving local context. LocalMamba [28] processes images through overlapping/non-overlapping windows with local selective scans and cross-window aggregation, balancing global-local modeling. However, medical images’ blurred boundaries challenge fixed scanning strategies. We propose a depth-first scanning approach to prioritize structurally similar regions, enhancing depth estimation accuracy in blurred medical images.

2.3. Architectural Comparison with Related Models

To clearly elucidate the architectural innovations of Mono-ViM, we first provide a systematic comparison with its most relevant counterparts, LocalMamba [28] and MonoViT [24], in Table 1. While all three frameworks target enhanced visual representation learning, they diverge fundamentally in core architectural paradigms.

Table 1.

Architectural comparison of Mono-ViM with related models.

LocalMamba [28] processes images through overlapping/non-overlapping windows with local selective scans, emphasizing local feature extraction while maintaining computational efficiency through linear complexity. However, its window-based mechanism inherently limits global receptive fields, which may compromise the modeling of long-range dependencies in endoscopic scenes with continuous anatomical structures. MonoViT [24] leverages vision transformers with multi-scale feature fusion, achieving global context modeling through self-attention mechanisms. Nevertheless, the quadratic computational complexity of self-attention poses significant challenges when processing high-resolution endoscopic images, limiting its practical deployment in real-time applications.

In contrast, Mono-ViM introduces a novel depth-first scanning strategy within the Vision Mamba (ViM) framework, enabling global receptive field coverage while maintaining linear computational complexity. The proposed Depth Local Visual Mamba (DLViM) module specifically addresses the challenges of endoscopic imaging by prioritizing structurally similar regions through adaptive scanning paths, capturing both the local details and the global contextual information essential for accurate depth estimation in complex anatomical environments.

Beyond direct architectural comparisons, Mono-ViM’s Mamba-based design offers distinct advantages over other popular depth estimation paradigms. Diffusion-based methods iteratively refine depth maps from noise through a multi-step denoising process, which incurs substantial computational overhead and hinders real-time application. Mamba, by contrast, offers a significant advantage in inference speed by producing depth estimates in a single forward pass. Recurrent frameworks (e.g., based on LSTMs or GRUs) share Mamba’s sequential nature and are designed for efficient step-by-step processing. However, traditional RNNs often struggle with long-range dependencies due to vanishing gradients. Mamba’s selective state space mechanism is designed to overcome this limitation, enabling effective modeling of long-range contextual information (critical for understanding endoscopic scenes) while retaining high computational efficiency.

Thus, as summarized in Table 1, Mono-ViM occupies a unique niche: it integrates the global receptive field of Transformers, overcomes the quadratic complexity bottleneck through selective state spaces, and introduces a depth-first scanning strategy specifically optimized for the structural continuities and blurred boundaries characteristic of endoscopic imagery. This positions it as a highly suitable paradigm for real-time medical imaging applications where accuracy, efficiency, and contextual awareness are paramount.

3. Our Method

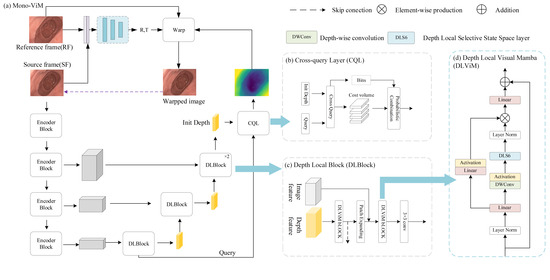

To overcome the incompatibility of fixed scanning paths with endoscopic anatomy, this work proposes Mono-ViM (see Figure 2). The proposed framework uses the Depth Local Visual Mamba (DLViM) module for structured feature modeling and the Cross-Query Layer (CQL) for high-precision depth decoding. This section introduces the basic principles of Mamba and the detailed architecture of the Mono-ViM framework.

Figure 2.

(a) Overall architecture of Mono-ViM with a U-shaped structure consisting of DepthNet and PoseNet. (b) CQL module, which uses encoded deep information as queries to optimize fine-grained results. (c) DLBlock, which combines the global linear modeling capability of ViM with the channel-aware capability of CNN. (d) DLViM, a key component of Mono-ViM.

3.1. Preliminaries

We first introduce the basic principles of Mamba. Mamba is a highly efficient sequence modeling architecture based on SSMs. Its core component, the S6 module, improves on traditional SSMs through a selective state space mechanism. The state equation is defined as:

$$h_t = \bar{A}\, h_{t-1} + \bar{B}\, x_t, \qquad y_t = C\, h_t \tag{1}$$

where the dynamic parameters $\bar{A}$ and $\bar{B}$ are obtained from the continuous-time dynamic equations by zero-order-hold discretization, $\bar{A} = \exp(\Delta A)$ and $\bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right)\Delta B$. The step size parameter $\Delta$ is generated in real time from the input $x_t$ through a projection function, which constitutes the core of the selective mechanism.

This architecture is the key to achieving linear computational complexity. Unlike the self-attention mechanism in Transformers, which requires comparing every token to every other token (resulting in $O(N^2)$ complexity for a sequence of length $N$), the SSM processes the sequence recursively. As shown in Equation (1), the computation at each time step $t$ depends only on the current input $x_t$ and the previous hidden state $h_{t-1}$. This recurrence means that processing an entire sequence of length $N$ requires $O(N)$ operations. Furthermore, the selective mechanism (where $\Delta$, $B$, and $C$ are input-dependent) does not alter this linear scaling. Instead, it allows the model to dynamically focus on or ignore inputs based on the current context, significantly enhancing representation power without sacrificing computational efficiency. During training, hardware-optimized parallel scans are employed to exploit modern accelerators, while the inherent recurrent nature dominates during inference, ensuring high efficiency throughout the model’s life cycle. This mechanism enables the S6 module to dynamically adjust the state transition process based on the input, thereby achieving linear complexity while maintaining strong performance.
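The recurrence described above can be illustrated with a minimal sketch. It assumes a scalar hidden state and three hypothetical scalar projection weights (`w_delta`, `w_b`, `w_c`) that make the step size, input matrix, and output matrix depend on the current input; real S6 layers use vector states, learned projections, and a parallel scan, none of which are shown here.

```python
import math

def selective_scan(xs, a, w_delta, w_b, w_c):
    """Run a scalar-state selective SSM over a 1D sequence in O(N) time.

    a                 : fixed continuous-time state coefficient (negative for stability)
    w_delta, w_b, w_c : hypothetical scalar weights (assumptions for illustration)
    """
    h = 0.0
    ys = []
    for x in xs:                                      # one constant-cost update per token
        delta = math.log1p(math.exp(w_delta * x))     # softplus keeps the step size positive
        b = w_b * x                                   # input-dependent B
        c = w_c * x                                   # input-dependent C
        a_bar = math.exp(delta * a)                   # zero-order hold: exp(delta * A)
        b_bar = (a_bar - 1.0) / a * b                 # zero-order hold: (dA)^-1 (exp(dA) - 1) dB
        h = a_bar * h + b_bar * x                     # state update from h_{t-1} and x_t only
        ys.append(c * h)                              # readout y_t = C h_t
    return ys
```

Each token triggers a fixed amount of work that depends only on the previous state and the current input, which is where the linear scaling in sequence length comes from.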

3.2. Model Overall Framework

The overall framework of Mono-ViM is shown in Figure 2. The decoder plays a critical role in reconstructing the high-level semantic features extracted by the encoder into the original spatial resolution of the input image, producing depth maps with precise structural details and well-defined boundaries. This work introduces a lightweight decoder based on the Mamba architecture, specifically designed to efficiently recover encoded information. The framework comprises a DepthNet and a PoseNet. Given an input image $I_t$ for depth prediction, the DepthNet first estimates its inverse depth map, which is converted to the depth map $D_t$. For the image $I_t$ and a reference image $I_{t'}$, the PoseNet computes the relative camera motion $T_{t \to t'}$ between the consecutive frames. The image $I_t$ can then be synthesized from the reference image using the camera motion $T_{t \to t'}$, the intrinsic parameters $K$, and the estimated depth $D_t$:

$$I_{t' \to t} = I_{t'} \big\langle \mathrm{proj}(D_t, T_{t \to t'}, K) \big\rangle$$

where $\mathrm{proj}(\cdot)$ denotes a projection function that projects the 3D points obtained from $D_t$ onto the image plane of $I_{t'}$, and $\langle \cdot \rangle$ represents the sampling operator. During training, DepthNet and PoseNet are jointly optimized through the image reconstruction error.
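To make the projection step concrete, the sketch below maps a single pixel with known depth into the reference view under a pinhole camera model. The function name, the `(fx, fy, cx, cy)` intrinsics tuple, and the rotation/translation inputs are illustrative assumptions; the sampling operator (typically bilinear interpolation at the returned coordinates) is not implemented.

```python
def reproject_pixel(u, v, depth, K, R, t):
    """Map pixel (u, v) of the target view into the reference view.

    K : (fx, fy, cx, cy) pinhole intrinsics (assumed layout)
    R : 3x3 rotation as nested lists, t : translation of length 3
    Returns (u', v') in the reference image, or None if the point is behind the camera.
    """
    fx, fy, cx, cy = K
    # Back-project the pixel to a 3D point in the target camera frame.
    point = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # Apply the relative camera motion.
    moved = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if moved[2] <= 0:
        return None
    # Project onto the reference image plane.
    return (fx * moved[0] / moved[2] + cx, fy * moved[1] / moved[2] + cy)
```

Applying this to every pixel yields a sampling grid; reading the reference image at those coordinates produces the synthesized view that enters the reconstruction loss.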

3.2.1. Depth Encoder

In this work, Lite-Mono is adopted as the encoder, featuring an efficient hybrid design of lightweight CNN and Transformer components. It employs a consecutive dilated convolution (CDC) module with multiple dilation rates to extract multi-scale local features, and a local–global feature interaction (LGFI) module to incorporate global context via lightweight self-attention. As shown in Figure 2, the three encoder stages output feature maps of size $\frac{H}{4} \times \frac{W}{4} \times C_1$, $\frac{H}{8} \times \frac{W}{8} \times C_2$, and $\frac{H}{16} \times \frac{W}{16} \times C_3$.

3.2.2. Depth Decoder

Mono-ViM employs four stages to aggregate the multi-scale features obtained from the encoder, and feeds the results into a Mamba-based decoder. In the decoder, global information is efficiently captured by DLViM and the feature maps are upsampled using Patch Expanding. The skip connection features are then concatenated, followed by further global integration using DLViM. Subsequently, convolutional blocks are applied to adjust channel dimensions and enhance inter-channel correlations. The input channels of the convolutional blocks are (144, 80, 24, 12), and the output channels are (6, 48, 24, 12). Finally, the CQL module refines the depth map by querying and integrating global contextual cues, enabling more accurate depth reconstruction.

3.2.3. PoseNet

We adopt the same PoseNet as Monodepth2 for pose estimation. Specifically, during the encoding stage, a pre-trained ResNet18 network extracts features from adjacent image pairs, while the decoding stage employs a 4-layer CNN to regress the 6-DoF relative pose parameters.

3.2.4. Depth Local Visual Mamba

The Depth Local Visual Mamba (DLViM), as depicted in Figure 2, serves as the central component of DLBlock, leveraging a dual-branch hierarchical architecture to enhance feature representation. Input features undergo layer normalization before parallel processing: The first branch employs linear projection to map features into the hidden state space of SSM, followed by non-linear activation to capture complex visual patterns such as edges, textures, and object shapes. The second branch sequentially undergoes linear transformation, depthwise separable convolution, and activation before being fed into the core module DLS6 for feature encoding. After layer normalization, the outputs of both branches are fused via element-wise multiplication. Finally, a linear layer projects the merged features back to the original input dimension, establishing a residual connection with the initial input to ensure stable gradient propagation.

The Depth Local Selective State Space Model (DLS6), the core innovation of the DLViM module, builds upon the S6 module. While the S6 module excels at processing 1D causal sequences by capturing sequential dependencies, images are 2D spatial data with non-causal characteristics. Directly flattening them into 1D sequences disrupts local spatial correlations, making it difficult for models to recognize 2D structural relationships. This dimensional discrepancy constitutes the fundamental challenge. Existing methods employ strategies like cross-scan to mitigate spatial information loss during serialization, yet their fixed scanning patterns remain inadequate for adapting to the complex anatomical features in endoscopic images. The proposed hierarchical DLS6 architecture establishes a three-level collaborative modeling paradigm. At the global level, a cross-scan strategy traverses the flattened image horizontally and vertically to capture macro spatial correlations. At the local level, a multi-scale window partitioning mechanism enhances neighborhood associations while preserving global awareness. Finally, a depth-first dynamic scanning algorithm adaptively adjusts scanning paths based on depth correlations of anatomical structures, achieving fine-grained tissue modeling.
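One way to picture the depth-first ordering is as a permutation of tokens from deepest to shallowest, followed by an inverse permutation that puts the processed tokens back in place. The sketch below is only an assumption about how such a path could be built: it presumes a coarse per-token depth score is available (the text does not specify its source), and `seq_fn` is a hypothetical stand-in for the sequence model.

```python
def depth_first_order(depth_scores):
    """Return flat token indices sorted from deepest to shallowest.

    depth_scores : 2D list of coarse per-token depth values (assumed input).
    Ties keep raster order because Python's sort is stable.
    """
    flat = [d for row in depth_scores for d in row]
    return sorted(range(len(flat)), key=lambda i: -flat[i])

def scan_and_restore(tokens, order, seq_fn):
    """Serialize tokens along `order`, apply `seq_fn`, and scatter results back."""
    seq = [tokens[i] for i in order]
    out = seq_fn(seq)
    restored = [None] * len(tokens)
    for pos, i in enumerate(order):
        restored[i] = out[pos]
    return restored
```

With this ordering, tokens from the distant lumen are visited before tokens from the near wall, so a causal sequence model accumulates context in the deep-to-shallow direction described above.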

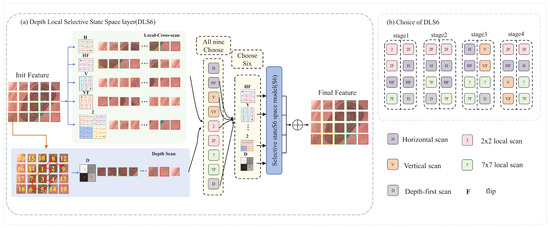

Following the approach introduced in [28], we employ a dynamic architecture search mechanism to determine the most suitable scanning directions for each layer. The method constructs the search space as an over-parameterized model, which enables simultaneous optimization of both the network parameters and architectural coefficients within a standard training paradigm. After training, we obtain the final directional configuration by selecting the four directions with the highest softmax confidence scores. A visualization of the selected directions is provided in Figure 3b.
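The final selection step can be sketched as a softmax over per-direction architectural coefficients followed by a top-four pick. The direction names and logit values a caller would pass in are hypothetical; how the coefficients are trained is outside this sketch.

```python
import math

def select_directions(logits, k=4):
    """Pick the k scan directions with the highest softmax confidence.

    logits : dict mapping a direction name to its learned coefficient
             (names and values are illustrative assumptions).
    Returns (chosen_names, probabilities).
    """
    m = max(logits.values())                              # subtract max for numerical stability
    exps = {name: math.exp(v - m) for name, v in logits.items()}
    total = sum(exps.values())
    probs = {name: e / total for name, e in exps.items()}
    chosen = sorted(probs, key=probs.get, reverse=True)[:k]
    return chosen, probs
```

Because softmax is monotonic, the ranking equals the ranking of the raw coefficients; the probabilities are mainly useful for inspecting how confident the search is about each direction.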

Figure 3.

(a) The DLS6 layer, a core module of DLViM, processes the input feature maps with nine scanning methods. Four of them use cross-scan modules to flatten the patched feature maps and scan the flattened features in four different directions. Four local-scan methods divide the feature maps into 2 × 2 and 7 × 7 patches and perform horizontal scanning to extract fine-grained features as well as broader local contextual information. The final method is the depth-first scan, which traverses the feature map in a depth-first manner to model hierarchical semantic paths and capture richer global contextual information. (b) Visualization of the search strategies across different stages.
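The fixed scan paths in the caption can be written down as index permutations over an `h × w` token grid. The sketch below assumes row-major token indexing and shows the four cross-scan directions plus a horizontal window-by-window local scan; the function names are illustrative and not taken from any released code.

```python
def cross_scan_orders(h, w):
    """Four global scan paths: row-major, column-major, and their reverses."""
    row_major = [r * w + c for r in range(h) for c in range(w)]
    col_major = [r * w + c for c in range(w) for r in range(h)]
    return [row_major, row_major[::-1], col_major, col_major[::-1]]

def local_scan_order(h, w, win):
    """Visit tokens window by window, scanning each win x win window horizontally."""
    order = []
    for r0 in range(0, h, win):
        for c0 in range(0, w, win):
            for r in range(r0, min(r0 + win, h)):
                for c in range(c0, min(c0 + win, w)):
                    order.append(r * w + c)
    return order
```

For example, `local_scan_order(4, 4, 2)` keeps the four tokens of each 2 × 2 window adjacent in the sequence, whereas a plain row-major scan separates vertically neighboring tokens by a full row.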

3.2.5. Cross-Query Layer

The Cross-Query Layer (CQL) leverages relative distance information as a geometric cue to enhance depth estimation. Inspired by [21], it computes the dot product between pixel embeddings from the same image to capture intrinsic geometric relationships within the image. This approach approximates relative distances as dot products between pixels and image patches, effectively preserving geometric information while significantly reducing computational overhead.

The cross-cost volume represents the relative distances between pixels and local patches, but such distances inherently lack explicit physical meaning. To capture more semantically meaningful geometric relationships between pixels and structural objects in the image, we introduce coarse-grained queries. First, positional encodings are added to the high-level semantic features extracted by the encoder, and the result is fed into a Transformer to generate a set of query vectors $Q$ representing the image’s structural patterns. These queries are then applied to the embedded feature maps $S$ from the decoder stage, and the final cost volume $V$ is obtained through dot-product computations:

$$V_{i,j,k} = Q_i^{\top} S_{j,k}$$

where $Q_i$ is the $i$-th query and $S_{j,k}$ is the decoder embedding at pixel $(j, k)$.

Then, the latent depth information in the cost volume is used to compute depth intervals and estimate the depth bins b:

Here, ⨁ denotes concatenation, MLP denotes multi-layer perceptron.

The cost volume is aligned with the depth bin dimensions using a convolution, followed by a plane-wise softmax operation to obtain probability distributions. Pixel-level depth is then predicted as a linear combination of the center values of all depth intervals, weighted by these probabilities, yielding a high-precision depth map.
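The soft-classification readout described in this subsection can be sketched end to end for a handful of pixels. The helper names, the normalized-width parameterization of the bins, and the depth range arguments are assumptions made for illustration; the MLP that predicts the bin widths and the convolution that aligns the cost volume with the bins are not shown.

```python
import math

def softmax(xs):
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]

def cost_volume(queries, pixel_embeddings):
    """V[i][p] = dot(Q_i, S_p) for every query i and pixel embedding p."""
    return [[sum(q * s for q, s in zip(qv, sv)) for sv in pixel_embeddings]
            for qv in queries]

def bin_centers(widths, d_min, d_max):
    """Convert positive bin widths into bin centers covering [d_min, d_max]."""
    total = sum(widths)
    centers, edge = [], d_min
    for w in widths:
        step = (d_max - d_min) * w / total
        centers.append(edge + step / 2)
        edge += step
    return centers

def expected_depth(bin_logits, centers):
    """Per-pixel depth as the probability-weighted sum of bin centers."""
    probs = softmax(bin_logits)
    return sum(p * c for p, c in zip(probs, centers))
```

Predicting a distribution over bins rather than a single value is what lets the output express uncertainty: a pixel on a blurred boundary can spread probability across neighboring bins instead of committing to one depth.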

To address the potential performance degradation of depth estimation in complex scenarios caused by the limited number of query heads in CQL architecture, we propose two model variants: the full-featured Mono-ViM retaining CQL structures, and its lightweight counterpart Mono-ViM-small with CQL modules removed. This dual-model design is driven by the following considerations: For scenarios demanding high-precision depth estimation, Mono-ViM preserves complete feature representation capability through intact CQL components; while in computation-sensitive scenarios requiring lightweight solutions, Mono-ViM-small achieves operational efficiency through CQL elimination.

3.3. Loss Function

The self-supervised depth estimation problem can be regarded as a multi-view integration task. Specifically, DepthNet and PoseNet are used to predict the appearance of the current frame from the reference frame. The network is trained to minimize both the image reconstruction loss between the target image and the reconstructed current frame, and the edge-aware smoothness loss on the predicted depth map.

3.3.1. Image Reconstruction Loss

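Following [23], the photometric error between two images $I_a$ and $I_b$ is constructed from L1 and SSIM terms:

$$pe(I_a, I_b) = \frac{\alpha}{2}\bigl(1 - \mathrm{SSIM}(I_a, I_b)\bigr) + (1 - \alpha)\,\lVert I_a - I_b \rVert_1$$

where $\alpha$ balances the two terms (set to 0.85 in [23]). To mitigate the effects of invisible pixels and occluded objects in the source images, the minimum photometric loss is computed across the sequence of frames:

$$L_p = \min_{t'} \, pe(I_t, I_{t' \to t})$$

where $I_{t' \to t}$ denotes the reference image $I_{t'}$ warped into the view of the target image $I_t$.

Additionally, a binary mask $\mu$ is applied to compute the training loss only on pixels that are reliably reconstructed after warping using depth and pose, that is, pixels for which the warped reference image yields a lower photometric error than the unwarped one. This excludes uncertain or misleading pixels caused by a static camera, objects moving in sync with the camera, or low-texture regions.

Therefore, the final image reconstruction loss is defined as:

$$L_r = \mu \cdot L_p$$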
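The interplay of the minimum over reference frames and the binary mask can be sketched on precomputed per-pixel photometric errors. The function name and input layout are assumptions; computing the errors themselves (L1 plus SSIM) is not shown, and averaging over all pixels with masked pixels contributing zero is one possible normalization choice.

```python
def reconstruction_loss(pe_warped, pe_identity):
    """Minimum reprojection loss with automatic masking.

    pe_warped[k][p]   : photometric error of reference k warped to the target, pixel p
    pe_identity[k][p] : photometric error of the unwarped reference k, pixel p
    Returns (mask, loss); the loss averages masked errors over all pixels.
    """
    n = len(pe_warped[0])
    mask, total = [], 0.0
    for p in range(n):
        best_warped = min(src[p] for src in pe_warped)       # min over reference frames
        best_identity = min(src[p] for src in pe_identity)
        keep = best_warped < best_identity                    # warping must actually help
        mask.append(1 if keep else 0)
        if keep:
            total += best_warped
    return mask, total / n
```

A pixel is discarded whenever simply copying a reference frame explains it at least as well as warping does, which is the typical signature of a static camera or an object moving with the camera.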

3.3.2. Edge-Aware Smoothness Loss

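To make the depth map smoother and more plausible in untextured regions, we use an edge-aware smoothness loss:

$$L_{smooth} = \lvert \partial_x d_t^{*} \rvert \, e^{-\lvert \partial_x I_t \rvert} + \lvert \partial_y d_t^{*} \rvert \, e^{-\lvert \partial_y I_t \rvert}$$

where $d_t^{*} = d_t / \bar{d}_t$ is the mean-normalized inverse depth of the target image $I_t$.

The total loss can be expressed as:

$$L = L_r + \lambda L_{smooth}$$

where $L_r$ is the image reconstruction loss and $\lambda$ weights the smoothness term.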
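A small sketch of an edge-aware smoothness term follows, assuming a single-channel image and a disparity (inverse depth) map given as nested lists with at least two rows and two columns. The mean normalization and the sum of the mean horizontal and vertical terms follow common practice in self-supervised depth estimation and are assumptions here, not a statement of this work's exact implementation.

```python
import math

def edge_aware_smoothness(disp, image):
    """Penalize disparity gradients, down-weighted where the image has edges."""
    h, w = len(disp), len(disp[0])
    mean = sum(sum(row) for row in disp) / (h * w)
    norm = [[d / (mean + 1e-7) for d in row] for row in disp]   # mean-normalized disparity
    gx = [abs(norm[r][c + 1] - norm[r][c]) * math.exp(-abs(image[r][c + 1] - image[r][c]))
          for r in range(h) for c in range(w - 1)]
    gy = [abs(norm[r + 1][c] - norm[r][c]) * math.exp(-abs(image[r + 1][c] - image[r][c]))
          for r in range(h - 1) for c in range(w)]
    return sum(gx) / len(gx) + sum(gy) / len(gy)
```

The exponential weight means a depth discontinuity that coincides with an image edge is penalized less than the same discontinuity in a textureless region.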

4. Experiments

In this section, we evaluate the effectiveness of the proposed framework on three public datasets.

4.1. Implementation Details

All models were implemented in PyTorch 1.13.0 with CUDA 11.7 and trained on an RTX 4090 GPU with a batch size of 12. We use AdamW as the optimizer with an initial learning rate of , which decays to following a cosine schedule, and training is conducted for 25 epochs. Following the settings in [23], we apply color and flip augmentations to the images during training. To ensure a fair comparison, each baseline model uses its own default learning rate settings.
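As a rough illustration of a cosine learning-rate schedule over 25 epochs, the sketch below interpolates between a start and an end rate. The function name and the rates used in any call (for example `1e-4` and `1e-5`) are placeholder assumptions, not the values used in this work.

```python
import math

def cosine_lr(epoch, total_epochs, lr_start, lr_end):
    """Cosine decay from lr_start (epoch 0) to lr_end (epoch == total_epochs)."""
    progress = epoch / total_epochs
    return lr_end + 0.5 * (lr_start - lr_end) * (1.0 + math.cos(math.pi * progress))
```

The rate falls slowly at first, fastest around the midpoint, and flattens again near the end, which avoids abrupt drops late in training.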

The accuracy is evaluated using the seven metrics used in [23]: absolute relative error (Abs Rel), squared relative error (Sq Rel), root mean squared error (RMSE), root mean squared log error (RMSE log), and accuracy under threshold values ($\delta < 1.25$, $\delta < 1.25^2$, and $\delta < 1.25^3$).
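For reference, the seven metrics can be computed as in the sketch below, which uses their standard definitions over flat lists of valid, strictly positive depths. The function name and the dictionary keys are illustrative; any scale alignment between prediction and ground truth (such as median scaling) is assumed to have been applied beforehand.

```python
import math

def depth_metrics(pred, gt):
    """Standard monocular depth metrics over paired positive depth values."""
    n = len(gt)
    abs_rel = sum(abs(p - g) / g for p, g in zip(pred, gt)) / n
    sq_rel = sum((p - g) ** 2 / g for p, g in zip(pred, gt)) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / n)
    rmse_log = math.sqrt(sum((math.log(p) - math.log(g)) ** 2
                             for p, g in zip(pred, gt)) / n)
    ratios = [max(p / g, g / p) for p, g in zip(pred, gt)]
    # Fraction of pixels whose ratio is below 1.25, 1.25^2, and 1.25^3.
    a1, a2, a3 = (sum(r < 1.25 ** k for r in ratios) / n for k in (1, 2, 3))
    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse,
            "rmse_log": rmse_log, "a1": a1, "a2": a2, "a3": a3}
```

The first four metrics are errors (lower is better), while the three threshold accuracies are fractions in [0, 1] (higher is better).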

4.2. Datasets

To support intestinal surgical navigation, the C3VD dataset provides real clinical colonoscopy data for realistic evaluation, while the SimCol dataset offers high-precision synthetic data for quantitative validation. In addition, the KITTI dataset is included to assess the method’s generalization beyond medical scenes. The combination of these datasets allows comprehensive evaluation across synthetic, clinical, and outdoor domains.

The SimCol dataset: The SimCol dataset [29] integrates three categories of anatomical structure data derived from real human CT scans, with each category containing multiple generated trajectories. Each trajectory contains synchronously captured RGB rendered images, the corresponding camera intrinsic parameter matrices, high-precision depth information, and camera extrinsic pose parameters. All available data maintains an original image resolution of pixels, which is downsampled to to accommodate model requirements. The data partitioning scheme of the SimCol dataset is illustrated in Table 2, with the triplet index set as $[-3, 0, 3]$. This triplet structure means that the current frame is the center, and the frames three positions before and after it are used as reference frames for training. This choice increases the parallax between the frames of a triplet while ensuring sufficient overlap between them.
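The frame-triplet construction can be sketched as follows, assuming the index set corresponds to offsets of -3, 0, and +3 around the current frame within one trajectory. The function name is illustrative, and frames too close to either end of the trajectory are simply skipped.

```python
def build_triplets(num_frames, offsets=(-3, 0, 3)):
    """List (previous, current, next) frame indices for every valid center frame."""
    first = -min(offsets)                    # earliest center with a valid previous frame
    last = num_frames - max(offsets)         # one past the latest valid center
    return [tuple(t + o for o in offsets) for t in range(first, last)]
```

For a trajectory of 8 frames, only frames 3 and 4 can serve as centers, giving the triplets `(0, 3, 6)` and `(1, 4, 7)`.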

Table 2.

Data splits used in the SimCol dataset.

C3VD: The Colonoscopy 3D Video Dataset (C3VD) [30] is an open-source dataset designed to promote colonoscopy computer vision research, containing 22 high-definition clinical colonoscopy videos and their accurate 3D ground-truth data. The dataset provides depth maps, surface normals, optical flow, occlusion annotations, and 6-degree-of-freedom poses, which addresses the lack of ground-truth data in colonoscopy vision tasks. All available data maintains an original image resolution of pixels, which is downsampled to to accommodate model requirements. The data partitioning scheme of C3VD is illustrated in Table 3, with the triplet index set as due to the slow movement of the endoscope.

Table 3.

Data splits used in C3VD.

KITTI: The KITTI dataset [31] is a widely adopted benchmark for stereo road scene understanding, encompassing multi-modal data from cameras, LiDAR, and an inertial measurement unit (IMU). Following the Eigen split [32], we partition the monocular sequences into 39,180 triplets for training, 4424 for validation, and 697 for testing. Notably, the test set uses the refined ground truth depth maps generated by the method described in [33], which improves measurement accuracy.

4.3. SimCol Dataset Results

We compare our model with previous representative methods; the results are presented in Table 4. Mono-ViM outperforms all compared approaches while being the second smallest model. It achieves an AbsRel of 0.070, SqRel of 0.061, RMSE of 0.356, and RMSElog of 0.099, demonstrating its accuracy in endoscopic image depth estimation.
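For readers unfamiliar with the four error metrics quoted above, the following is a minimal sketch that assumes the conventional definitions used in the monocular depth estimation literature. The function name `depth_errors` is hypothetical, and the paper's own evaluation code, valid-pixel masking, and any scale alignment between prediction and ground truth are not reproduced here.

```python
import math
from typing import Dict, Sequence


def depth_errors(pred: Sequence[float], gt: Sequence[float]) -> Dict[str, float]:
    """Conventional depth error metrics over paired, strictly positive depths."""
    if len(pred) != len(gt) or len(gt) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(gt)
    pairs = list(zip(pred, gt))
    # Mean absolute error relative to the ground-truth depth.
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    # Mean squared error relative to the ground-truth depth.
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n
    # Root mean squared error in depth units.
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Root mean squared error between log-depths.
    rmse_log = math.sqrt(sum((math.log(p) - math.log(g)) ** 2 for p, g in pairs) / n)
    return {"AbsRel": abs_rel, "SqRel": sq_rel, "RMSE": rmse, "RMSElog": rmse_log}


# Toy example: every prediction is 10% too deep.
print(depth_errors([1.1, 2.2], [1.0, 2.0]))
```

AbsRel and SqRel normalize by the true depth, so they weight errors on nearby surfaces more heavily than RMSE does; this is one reason the metrics can rank methods differently.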

Table 4.

Comparison of Mono-ViM with recent representative methods on the SimCol dataset.

Additionally, Mono-ViM exhibits significant performance improvements over Lite-mono, the baseline model on which it builds. In the self-supervised setting, Mono-ViM reduces AbsRel by 16.7%, SqRel by 14.8%, RMSE by 6.6%, and RMSElog by 12.4%. These improvements underscore the impact of strengthening the depth decoder network and its information flow in Mono-ViM.
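The percentage improvements are relative error reductions with respect to the baseline. The sketch below shows the arithmetic; both function names are hypothetical, and the back-computed baseline is only an arithmetic consequence of the two reported numbers (an AbsRel of 0.070 and a 16.7% reduction), not a value read from Table 4.

```python
def relative_reduction(baseline: float, ours: float) -> float:
    """Percentage by which `ours` lowers an error metric relative to `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline - ours) / baseline * 100.0


def implied_baseline(ours: float, reduction_pct: float) -> float:
    """Baseline error implied by our error and a reported percentage reduction."""
    if not 0.0 <= reduction_pct < 100.0:
        raise ValueError("reduction_pct must lie in [0, 100)")
    return ours / (1.0 - reduction_pct / 100.0)


# Baseline AbsRel implied by the reported 0.070 and 16.7% reduction.
print(round(implied_baseline(0.070, 16.7), 3))
```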

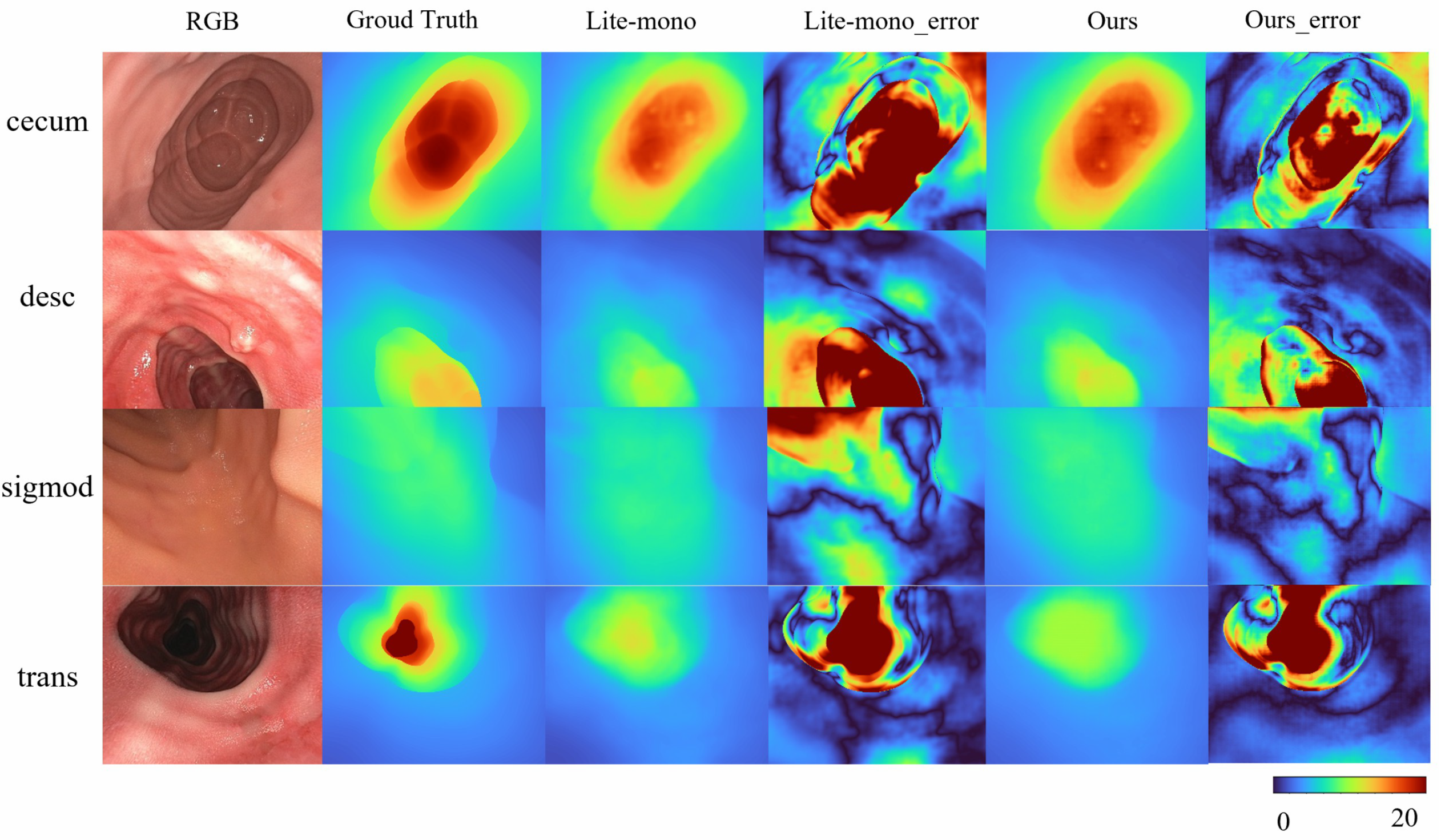

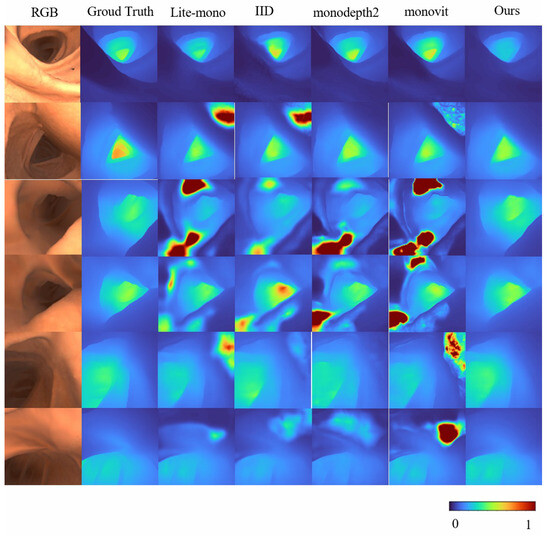

Overall, these results validate the effectiveness of Mono-ViM in self-supervised monocular depth estimation. The qualitative experiments in Figure 4 further demonstrate its superior performance by effectively reducing overestimated depth regions (e.g., diminished red high-depth areas) and generating depth maps that align closely with the ground truth, especially in challenging scenarios. Furthermore, compared to baseline models, our approach exhibits improved coherence characterized by smoother depth transitions and enhanced inter-region consistency. These improvements contribute to both higher reliability and superior visual quality of the depth estimation outputs.

Figure 4.

Qualitative results on the SimCol dataset: depth maps generated by Lite-mono and Mono-ViM (ours).

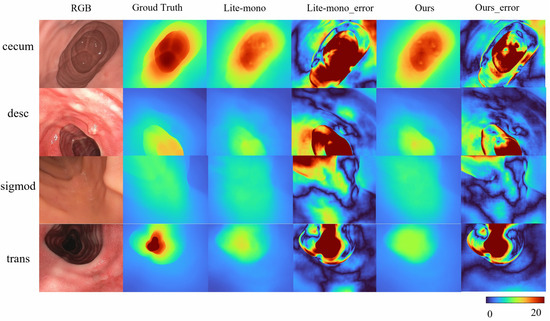

4.4. C3VD Results

To address the domain gap between the synthetic SimCol data and real scenes, we also train our model on the real-world endoscopic dataset C3VD. As shown in Table 5, Mono-ViM (small) achieves state-of-the-art performance on this benchmark, with an AbsRel of 0.081, SqRel of 0.005, RMSE of 0.048, and RMSElog of 0.109, demonstrating its robust adaptation to real endoscopic scenes. Qualitative comparisons in Figure 5 further highlight the framework's capability to reconstruct fine-grained depth details from real endoscopic images.

Table 5.

Comparison of Mono-ViM with recent representative methods on C3VD.

Figure 5.

Qualitative results on C3VD: depth maps generated by Lite-mono and Mono-ViM (ours), together with amplified error maps of the depth predictions.

4.5. KITTI Results

Furthermore, we evaluate our model on the outdoor KITTI dataset, conducting comparative experiments with lightweight baseline models (<10 M parameters). These baseline models are tested using their publicly available optimal configurations without additional fine-tuning, ensuring a fair comparison under identical training protocols.

As shown in Table 6, the proposed Mono-ViM-small achieves the lowest absolute relative error (0.081) and the highest accuracy under the threshold (0.929) among all compared methods, while maintaining a compact model size of only 3.3 M parameters. Compared to other lightweight models such as Lite-mono (3.1 M), our method exhibits superior accuracy with marginal parameter overhead. Moreover, it surpasses heavier counterparts like R-MSFMX3-GC (5.0 M) and R-MSFMX6-GC (5.3 M), indicating a more efficient utilization of model capacity. These results demonstrate that Mono-ViM-small offers a favorable trade-off between accuracy and computational cost, making it well-suited for real-world applications with limited hardware resources.
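The threshold accuracy mentioned above is conventionally the fraction of pixels whose predicted-to-true depth ratio, taken in whichever direction is larger, stays below a bound. The sketch below assumes that conventional definition; the function name `threshold_accuracy` and the default bound of 1.25 are assumptions, since the text does not state which threshold the reported 0.929 refers to.

```python
from typing import Sequence


def threshold_accuracy(
    pred: Sequence[float], gt: Sequence[float], threshold: float = 1.25
) -> float:
    """Fraction of pixels with max(pred/gt, gt/pred) below `threshold`.

    The default of 1.25 is the common convention and an assumption here.
    """
    if len(pred) != len(gt) or len(gt) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")
    hits = sum(1 for p, g in zip(pred, gt) if max(p / g, g / p) < threshold)
    return hits / len(gt)


# Two of four predictions fall within a factor of 1.25 of the true depth.
print(threshold_accuracy([1.0, 1.2, 2.0, 0.5], [1.0, 1.0, 1.0, 1.0]))
```

Unlike the error metrics, higher is better for this measure, and it is insensitive to how far outside the bound a wrong prediction lies.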

Table 6.

Comparison of Mono-ViM with recent lightweight methods on KITTI.

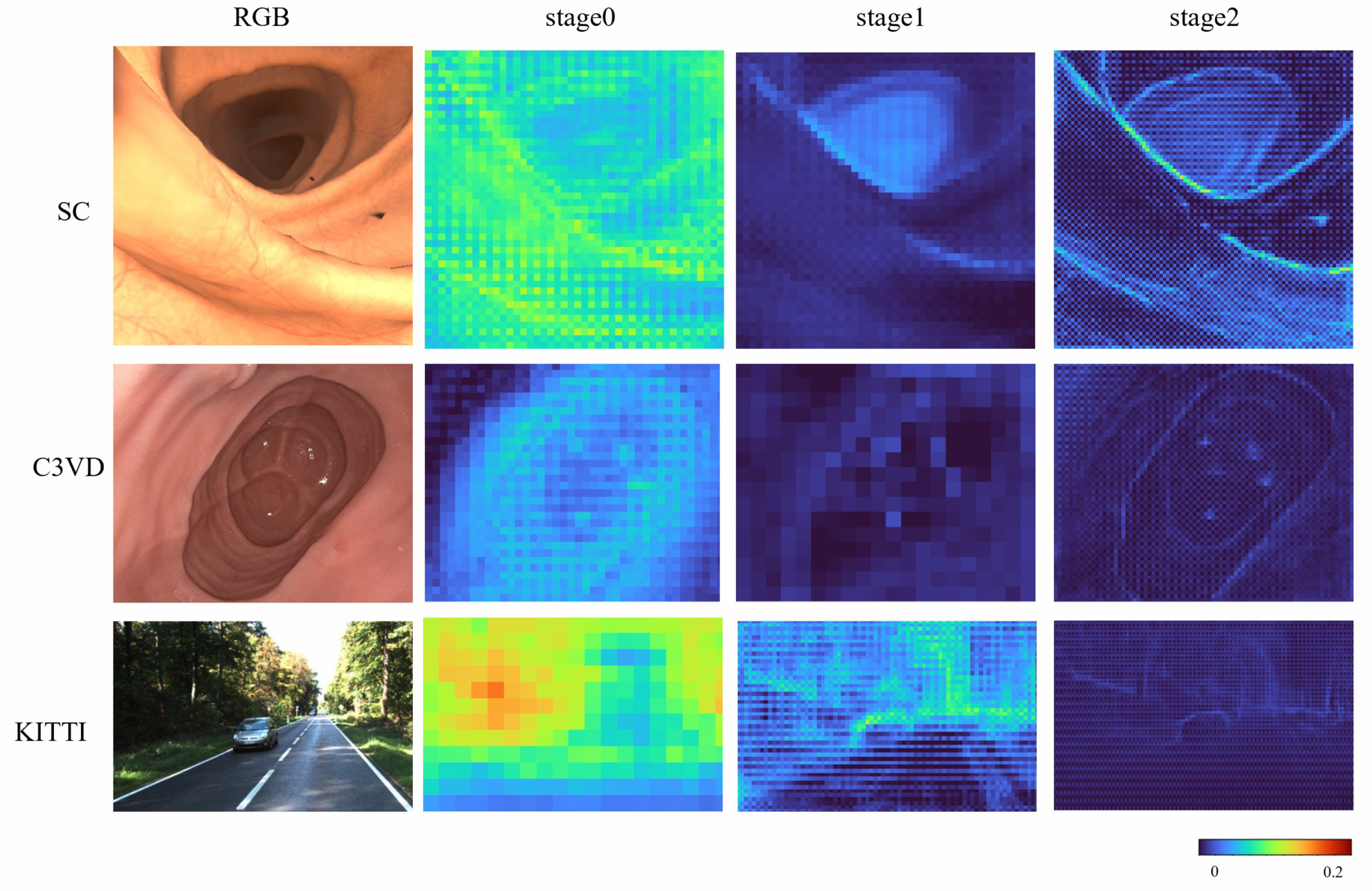

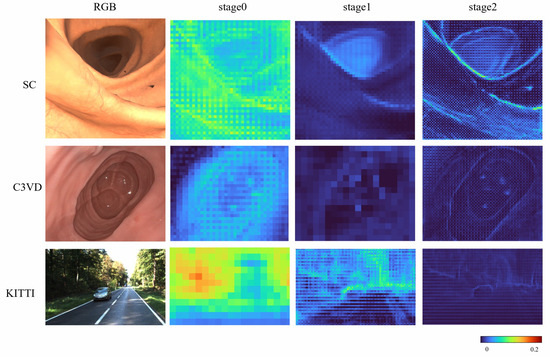

4.6. Ablation Study on Model Architectures

To more systematically evaluate the effectiveness of the proposed DLViM, we performed a qualitative analysis of the gating units across different stages. As illustrated in Figure 6, the activation weights are distributed relatively uniformly over the entire image at the early stage; however, as the network depth increases, these weights progressively concentrate on regions containing salient structural information, indicating that the model develops stronger spatial selectivity and structural awareness during the feature extraction process.

Figure 6.

Attention results on all datasets.

To further assess the effectiveness of the proposed model, we conducted a series of ablation studies to examine the contribution of individual components. All experiments were performed on the SimCol dataset, and the results are reported in Table 7.

Table 7.

Ablation on the SimCol dataset.

First, we evaluated the impact of removing the CQL module. This reduced the model size by 0.3 M parameters; however, the RMSE increased by 12.5%, indicating a notable decline in accuracy and highlighting the importance of CQL for the model's predictive performance. Next, we replaced the DLViM module with a standard CNN. While this replacement also reduces the model size by 0.3 M, it leads to a significant increase in prediction error. This suggests that DLViM is critical for capturing long-range global context, a capability lacking in traditional CNNs, which are limited to local feature extraction.

Furthermore, we examined the effect of substituting DLViM with the scanning strategy from LocalMamba. Although this modification did not significantly alter the number of model parameters, it led to a performance drop. This suggests that the proposed depth-first scan mechanism contributes to the model’s ability to understand structural information, reinforcing its role in maintaining global coherence in the learned representation.

5. Discussion and Conclusions

This work presents Mono-ViM, a novel self-supervised monocular depth estimation framework tailored for endoscopic imaging. The proposed architecture employs a hybrid design that combines CNNs with Mamba to enhance global contextual understanding and refine semantic detail perception. Experiments on the SimCol and C3VD datasets both show that Mono-ViM outperforms the compared state-of-the-art methods. In a self-supervised setting on the SimCol dataset, the proposed framework reduces errors by up to 16.7% in AbsRel, 14.8% in SqRel, 6.6% in RMSE, and 12.4% in RMSElog relative to the Lite-mono baseline. These results demonstrate the benefit of incorporating the DLViM module for capturing local–global feature dependencies and the CQL mechanism for improved fine-grained depth reconstruction. Furthermore, evaluations on the KITTI dataset indicate that the proposed framework maintains robust performance and generalizes well across varying environments.

The proposed Mono-ViM framework still has several limitations. First, in endoscopic scenes, digestive fluids can produce strong specular reflections that violate the photometric consistency assumed in self-supervised learning, leading to local depth discontinuities. To address this, future work will incorporate a reflection-aware photometric loss to enhance robustness under reflective conditions. Second, Mono-ViM assumes structural continuity between adjacent frames. However, periodic peristaltic motion introduces non-rigid tissue deformation, which violates this assumption and affects depth stability. To mitigate this issue, optical-flow consistency constraints or temporal feature aggregation mechanisms can be adopted to explicitly model periodic deformation and reduce transient estimation errors.

Author Contributions

Conceptualization, S.C., J.L. and T.C.; software, J.L.; validation, S.C. and J.L.; formal analysis, K.Y., Y.C. and X.X.; investigation, X.X. and K.Y.; resources, Y.C., X.X. and T.C.; data curation, J.L.; writing—original draft preparation, S.C. and J.L.; writing—review and editing, J.L. and T.C.; visualization, X.X.; supervision, Y.C. and T.C.; project administration, Y.C. and T.C.; funding acquisition, S.C., Y.C. and X.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Jiangsu Funding Program for Excellent Postdoctoral Talent; the Science and Technology Program of Jiangsu Administration for Market Regulation (No.KJ2025006); and the Open Foundation of the State Key Laboratory of Fluid Power and Mechatronic Systems.

Data Availability Statement

The original data presented in the study are openly available in the KITTI Vision Benchmark Suite at https://www.cvlibs.net/datasets/kitti/ (accessed on 12 August 2025), the Colonoscopy 3D Video Dataset at https://durrlab.github.io/C3VD/ (accessed on 15 August 2025), and the Simcol3D dataset at https://rdr.ucl.ac.uk/articles/dataset/Simcol3D-3DReconstructionduringColonoscopyChallengeDataset/24077763 (accessed on 10 September 2025).

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Yu, H.; Zhou, C.; Zhang, W.; Wang, L.; Yang, Q.; Yuan, B. A Three-Dimensional Measurement Method for Binocular Endoscopes Based on Deep Learning. Front. Inf. Technol. Electron. Eng. 2022, 23, 653–660.

- Yang, Y.; Shao, S.; Yang, T.; Wang, P.; Yang, Z.; Wu, C.; Liu, H. A Geometry-Aware Deep Network for Depth Estimation in Monocular Endoscopy. Eng. Appl. Artif. Intell. 2023, 122, 105989.

- Rau, A.; Bhattarai, B.; Agapito, L.; Stoyanov, D. Task-Guided Domain Gap Reduction for Monocular Depth Prediction in Endoscopy. In Proceedings of the MICCAI Workshop on Data Engineering in Medical Imaging, Vancouver, BC, Canada, 8 October 2023; pp. 111–122.

- Garg, R.; Bg, V.K.; Carneiro, G.; Reid, I. Unsupervised CNN for Single View Depth Estimation: Geometry to the Rescue. In Computer Vision–ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 740–756.

- Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion From Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Yin, Z.; Shi, J. GeoNet: Unsupervised Learning of Dense Depth, Optical Flow and Camera Pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Aleotti, F.; Tosi, F.; Poggi, M.; Mattoccia, S. Generative Adversarial Networks for unsupervised monocular depth prediction. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Xu, W.; Zou, L.; Wu, L.; Qi, Y.; Qian, Z. Depth Estimation Using an Improved Stereo Network. Front. Inf. Technol. Electron. Eng. 2022, 23, 777–789.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021; pp. 1–21.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. In Proceedings of the 41st International Conference on Machine Learning (ICML), Vienna, Austria, 21–27 July 2024; pp. 62429–62442.

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth Map Prediction from a Single Image using a Multi-Scale Deep Network. In Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; Volume 27.

- Li, B.; Shen, C.; Dai, Y.; van den Hengel, A.; He, M. Depth and surface normal estimation from monocular images using regression on deep features and hierarchical CRFs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1119–1127.

- Liu, F.; Shen, C.; Lin, G.; Reid, I. Learning depth from single monocular images using deep convolutional neural fields. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2016, 38, 2024–2039.

- Lyu, X.; Liu, L.; Wang, M.; Kong, X.; Liu, L.; Liu, Y.; Chen, X.; Yuan, Y. HR-Depth: High-resolution self-supervised monocular depth estimation. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Virtual, 2–9 February 2021; Volume 35, pp. 2294–2301.

- Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; Tao, D. Deep ordinal regression network for monocular depth estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 2002–2011.

- Klodt, M.; Vedaldi, A. Supervising the new with the old: Learning structure-from-motion from structure-from-motion. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 713–728.

- Zhou, H.; Greenwood, D.; Taylor, S. Self-supervised monocular depth estimation with internal feature fusion. arXiv 2021, arXiv:2110.09482.

- Zhou, Z.; Fan, X.; Shi, P.; Xin, Y. R-MSFM: Recurrent multi-scale feature modulation for monocular depth estimation for indoor environments. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 12783–12792.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Godard, C.; Aodha, O.M.; Firman, M.; Brostow, G.J. Digging into self-supervised monocular depth estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 3828–3838.

- Wang, Y.H.; Liang, Y.J.; Xu, H.; Jiao, S.H.; Yu, H.K. Sqldepth: Generalizable self-supervised fine-structured monocular depth estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 5713–5721.

- Lavreniuk, M. SPIdepth: Strengthened Pose Information for Self-supervised Monocular Depth Estimation. arXiv 2024, arXiv:2404.12501.

- Zhang, N.; Nex, F.; Vosselman, G.; Kerle, N. Lite-mono: A lightweight CNN and Transformer architecture for self-supervised monocular depth estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 18537–18546.

- Zhao, C.; Zhang, Y.; Poggi, M.; Tosi, F.; Guo, X.; Zhu, Z.; Huang, G.; Tang, Y.; Mattoccia, S. Monovit: Self-supervised monocular depth estimation with a Vision Transformer. In Proceedings of the 2022 International Conference on 3D Vision (3DV), Prague, Czechia, 12–15 September 2022; pp. 668–678.

- Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Liu, Y.; Tian, Y.J.; Zhao, Y.Z.; Yu, H.T.; Xie, L.X.; Wang, Y.W.; Ye, Q.X.; Jiao, J.B.; Liu, Y.F. Vmamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

- Huang, T.; Pei, X.H.; You, S.; Wang, F.; Qian, C.; Xu, C. LocalMamba: Visual state space model with windowed selective scan. arXiv 2024, arXiv:2403.09338.

- Rau, A.; Bhattarai, B.; Agapito, L.; Stoyanov, D. Bimodal Camera Pose Prediction for Endoscopy. IEEE Trans. Med. Robot. Bionics 2023, 5, 978–989.

- Bobrow, T.L.; Golhar, M.; Vijayan, R.; Akshintala, V.S.; Garcia, J.R.; Durr, N.J. Colonoscopy 3D Video Dataset with Paired Depth from 2D-3D Registration. Med. Image Anal. 2023, 90, 102956.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2650–2658.

- Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity invariant CNNs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.

- Li, B.; Liu, B.; Zhu, M.; Luo, X.; Zhou, F. Image Intrinsic-Based Unsupervised Monocular Depth Estimation in Endoscopy. IEEE J. Biomed. Health Inform. 2024, 1, 1–11.

- Zhou, Z.K.; Fan, X.N.; Shi, P.F.; Xin, Y.X. R-MSFM: Recurrent multi-scale feature modulation for monocular depth estimating. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 12777–12786.

- Zhou, Z.K.; Fan, X.N.; Shi, P.F.; Xin, Y.X.; Duan, D.L.; Yang, L.Q. Recurrent Multiscale Feature Modulation for Geometry Consistent Depth Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9551–9566.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).