1. Introduction

Initial-value problems governed by nonlinear ordinary differential equations arise in many fields in science and engineering, e.g., radiative heat transfer [1], electrical circuits [2], and thermal explosions [3]. These problems may arise from either lumped models of physical phenomena or the discretization of the spatial variables in evolution problems governed by partial differential equations of, say, the advection–reaction–diffusion type. The latter are usually characterized by many time scales, e.g., residence, diffusion, and chemical kinetics times, that may be quite different. As a consequence, the nonlinear ordinary differential equations resulting from the spatial variable discretization are stiff [4], and their solution is usually obtained by means of implicit methods, which are not subject to the time step limitations associated with the stability of explicit ones [4,5].
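The step-size limitation mentioned above can be illustrated with a minimal sketch on the linear test equation y' = -λy. The values of λ, the step size and the number of steps are illustrative assumptions, not taken from the paper, and the two functions are hypothetical helpers written only for this illustration.

```python
def explicit_euler(lam, y0, h, n_steps):
    """Forward (explicit) Euler for y' = -lam * y."""
    y = y0
    for _ in range(n_steps):
        y = y + h * (-lam * y)   # amplification factor per step: 1 - lam*h
    return y


def implicit_euler(lam, y0, h, n_steps):
    """Backward (implicit) Euler for y' = -lam * y; the update is solved in closed form."""
    y = y0
    for _ in range(n_steps):
        y = y / (1.0 + lam * h)  # amplification factor per step: 1 / (1 + lam*h)
    return y


# Illustrative (assumed) values: lam*h = 10 lies outside the explicit stability limit lam*h <= 2.
lam, h, n_steps = 1000.0, 0.01, 20
y_explicit = explicit_euler(lam, 1.0, h, n_steps)
y_implicit = implicit_euler(lam, 1.0, h, n_steps)
print("explicit Euler:", y_explicit)
print("implicit Euler:", y_implicit)
```

With these values the explicit iterate grows in magnitude at every step, whereas the implicit iterate decays, as the exact solution does.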

Although iterative methods may be used to solve the nonlinear algebraic equations that result from the implicit discretization of nonlinear ordinary differential equations, they may be computationally costly, especially for equations arising from the spatial discretization of two- or three-dimensional problems. However, if the nonlinear ordinary differential equation consists of the sum of linear and nonlinear terms, one may solve the linear part exactly and deal with the nonlinear contribution by means of a quadrature that may also result in a nonlinear equation for which iterative Krylov subspace techniques may be of great use [6]; these techniques are known as exponential integrators. Alternatively, one may employ linear implicit methods that combine the Runge–Kutta collocation formalism with collocation techniques, e.g., ref. [7], or combine rational implicit and explicit multistep methods, e.g., refs. [8,9], in such a way that the resulting finite differences are linearly implicit and have a high order, etc.

Another method to deal with nonlinear differential problems is to use splitting methods, whereby the nonlinear operator is decomposed into the sum of much simpler ones that may be solved more easily than the original problem, e.g., refs. [10,11], or compositional techniques that make use of lower-order techniques that, upon composition, result in higher accuracy than individual ones [10,11]. Such splitting methods were initially developed in the former Soviet Union and applied to solve, say, advection–reaction–diffusion equations by solving sequentially the advection, diffusion, and reaction terms/operators; this procedure uncouples the physical processes and results in splitting-operator errors.

Splitting methods may also be used to solve multidimensional problems by using a sequence of one-dimensional problems; in this case, the resulting technique is usually referred to as dimensional splitting and is affected by (dimensionality) decoupling errors. However, dimensional splitting has the great advantage that the solution of a multidimensional problem is reduced to the solution of much simpler one-dimensional ones. Dimensional splitting found many applications in the 1970s and 1980s in computational fluid dynamics, especially in aerodynamics. Note that exponential integrators and linear implicit techniques may also be used to solve the equations arising from either operator or dimensional splitting.

In the early 1960s, Pope [12] first and then Certaine [13] developed linearization methods for the solution of initial-value problems of nonlinear ordinary differential equations based on the linearization of those equations with respect to both the dependent and independent variables. These methods are exact for linear differential equations, which depend linearly on the independent variable and are characterized by analytical expressions that depend exponentially on time and the partial derivative of the right-hand side of the equation with respect to the dependent variable and are, therefore, exponential integrators [6]. However, there are several important differences between linearization and exponential integrator methods for initial-value problems of ordinary differential equations. First, exponential integrators require that the nonlinear operator contain a linear part; the linear part in linearization methods arises naturally because it corresponds to the linear term of the Taylor series expansion of the right-hand side of the differential equation. Second, exponential integrators require numerical quadrature if the equation is nonlinear; however, linearization methods provide exact solutions to the approximate linear equations that these methods deal with. For systems of nonlinear ordinary differential equations, both exponential integrators and linearization methods require the evaluation of matrix exponentials. Third, if the nonlinear ordinary differential equation does not contain a linear term on the dependent variable, exponential integrators may not be used unless one adds and subtracts a linear one. The choice of this linear term is not an easy one because it introduces a new time scale.

Linearization methods result in explicit finite difference expressions and are second-order accurate and A-stable [14,15]. As a consequence, they are very useful for dealing with stiff problems.

Since the 1960s, time linearization methods have been rediscovered several times, e.g., refs. [14,15,16], and higher-order linearization techniques based on adding a correction term to the linear approximation, which is computed by solving an auxiliary ODE by means of standard explicit integrators, have been developed [16]. Time linearization or simply linearization methods are also referred to as exponentially fitted methods, exponential Euler methods, piecewise-linearized methods and exponentially fitted Euler methods [16]. More recently, attempts have been made to develop A-stable, explicit finite difference methods for initial-value problems in ordinary differential equations based on the Taylor series expansion of the right-hand side of these equations to second order rather than the first order used in linearization methods [17]. These attempts were in part successful but resulted in explicit finite difference equations that contained the ratio of two series. As a consequence, their accuracy depends on both the number of terms retained in these series and the step size.

The main objective of this paper is to present piecewise analytical solutions to scalar, nonlinear, initial-value problems of ordinary differential equations that are continuous everywhere and provide A-stable, explicit finite difference methods based on a second-order Taylor series expansion of the right-hand side of the differential equation with respect to the dependent variable only, and to assess the accuracy of the corresponding finite difference equations in several examples. A second objective is to develop partial linearization methods that only account for the linearization of the right-hand side of the differential equation with respect to the dependent variable and to assess their accuracy. A third objective is to compare the accuracy of the piecewise-linear and quadratic approximation methods presented in the paper with those of the well-known second-order accurate trapezoidal rule and the fourth-order accurate Runge–Kutta procedure.

The paper has been arranged as follows. In Section 2, the quadratic approximation method based on the second-order Taylor series expansion with respect to both the dependent and independent variables [17] is first briefly reviewed, before introducing three new, approximate quadratic methods. A full-linearization and three new partial-linearization schemes are presented in Section 3. The jump in the first-order derivative at the grid points and the residual or defect errors of the linear and quadratic approximate methods presented here are determined in Section 4. The explicit finite difference methods resulting from the linear and quadratic approximations reported in the paper are summarized in Section 5, where the linear stability and local truncation errors of the linear and quadratic approximations are also reported. The accuracy of the methods presented in the paper is assessed and illustrated in Section 6 for equations with smooth growing or decaying solutions and an equation whose solution exhibits blowup in finite time. In Section 6, comparisons with the numerical results obtained with the trapezoidal and fourth-order Runge–Kutta methods are also reported. A Conclusions section summarizes the most important findings of the paper.

2. Piecewise-Continuous Methods Based on Quadratic Approximations

As stated in the Introduction, in this section, a summary of the full piecewise-continuous methods based on quadratic approximations for scalar, nonlinear, first-order, ordinary differential equations previously reported in [17] is first presented in order to emphasize that these methods provide analytical solutions, which are given by the ratio of two series. Then, in the second subsection, novel piecewise-continuous methods, also based on quadratic approximations that result in analytical solutions that do not involve series, are presented; these methods are one of the main subjects considered in this manuscript.

2.1. Full Piecewise-Continuous Methods Based on Quadratic Approximations

In a previous paper [

17], herein referred to as Part 1, approximate, piecewise analytical solutions to scalar, nonlinear, first-order, ordinary differential equations, i.e.,

where

x and

y denote the independent (time) and dependent variables,

is a nonlinear function of both

x and

y, and

denotes a constant (initial) value, were obtained by approximating

by the constant, linear and quadratic terms of its Taylor series expansion about

in the interval

; i.e., Equation (1) was approximated by

where

,

,

,

,

,

,

,

,

is the interval of integration, and

.

If

, Equation (2) is a Riccati equation whose coefficients depend on

X, and its analytical solution was found to be the ratio of two series (cf. Equation (32) of [

17]) unless

, i.e., unless

does not depend on

x. Although these series converge, their rate of convergence depends on

,

,

,

,

and

in each interval

and may be very small for large values of

X and/or large values of the step size, i.e.,

.

The next subsection presents piecewise-continuous methods that are also based on quadratic approximations but, unlike those summarized in this subsection, result in closed-form analytical solutions that are not given by the ratio of two series.

2.2. Piecewise-Continuous Solutions Based on Quadratic Approximations

If

in Equation (1) is approximated by

in

, where

, and

is then approximated by its second-order Taylor series expansion about

, one obtains

where

Note that setting the right-hand side of Equation (6) to zero results in a quadratic equation whose radicand is .

Equation (6) is a Riccati equation with constant coefficients if and a first-order, linear, constant-coefficient, ordinary differential equation otherwise.

For

, the solution to Equation (6) may be obtained by introducing

where the prime denotes differentiation with respect to

X and

.

Use of Equation (8) in Equation (6) yields the following linear, homogeneous, second-order, constant-coefficient, ordinary differential equation:

whose solution depends on the sign of the radicand

, as discussed next.

If the radicand is positive, the solution to Equations (9) and (8) subject to

is

where

, while if the radicand is nil, the solution to Equations (9) and (8) subject to

is

where

.

If the radicand is negative, then the solution to Equations (9) and (8) subject to

is

where

and

.

If

and

, the solution to Equation (6) subject to

is

which corresponds to an exponential integrator, e.g., [6,12,13,14,15,16]. On the other hand, for

and

, the solution to Equation (6) subject to

is

which yields Euler’s forward or explicit method for

and

, where

is the step size.

Herein, we shall refer to the solutions reported in Equations (10)–(12) for , and as methods or approximations Rn, Rnp1 and Rmp, respectively, where R stands for Riccati or Equation (6) with . These methods are exact for autonomous equations, i.e., , with , and a, b and c constant.
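The exactness property stated above can be checked with a minimal sketch for the autonomous Riccati equation y' = a + b y + c y². The closed form used below is the standard one for constant coefficients, written in terms of the shifted variable u = y − y0; it is not claimed to be the arrangement of Equations (10)–(12), whose displayed forms are not reproduced in this text. The function name `riccati_exact` and the quantities `f0`, `J` and `D` are assumptions introduced for illustration, with the discriminant `D` playing the role of the radicand.

```python
import math


def riccati_exact(a, b, c, y0, t):
    """Closed-form solution of y' = a + b*y + c*y**2 with y(0) = y0, evaluated at time t.

    With u = y - y0, the equation becomes u' = f0 + J*u + c*u**2, u(0) = 0, where
    f0 is the right-hand side at y0 and J its derivative with respect to y at y0.
    """
    f0 = a + b * y0 + c * y0 * y0
    J = b + 2.0 * c * y0
    D = b * b - 4.0 * a * c          # discriminant; equals J**2 - 4*f0*c
    if D > 0.0:                       # ratio of exponential (hyperbolic) functions
        s = math.sqrt(D)
        th = math.tanh(0.5 * s * t)
        return y0 + 2.0 * f0 * th / (s - J * th)
    if D < 0.0:                       # ratio of trigonometric functions
        s = math.sqrt(-D)
        tn = math.tan(0.5 * s * t)
        return y0 + 2.0 * f0 * tn / (s - J * tn)
    return y0 + 2.0 * f0 * t / (2.0 - J * t)   # D == 0: ratio of polynomials


# Three checks against known solutions (test problems chosen here for illustration):
y_tan = riccati_exact(1.0, 0.0, 1.0, 0.0, 0.7)        # y' = 1 + y^2, y(0) = 0   -> tan(t)
y_logistic = riccati_exact(0.0, 1.0, -1.0, 0.5, 1.3)  # y' = y - y^2, y(0) = 1/2 -> 1/(1 + exp(-t))
y_blowup = riccati_exact(0.0, 0.0, 1.0, 1.0, 0.5)     # y' = y^2,     y(0) = 1   -> 1/(1 - t)
print(y_tan, y_logistic, y_blowup)
```

A single step of any size reproduces the exact solution in each case, up to rounding, provided the step does not cross a singularity of the solution.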

Equations (10)–(12) provide analytical solutions that are the ratios of exponential, polynomial, and trigonometric functions, respectively, for

. By way of contrast, the solution to Equation (2) with

is given by the ratio of two series, as shown in Equation (32) of Part 1 [

17]. Although these two series converge, both their rate of convergence and the number of terms that must be retained in order to obtain accurate results depend on

. By way of contrast, convergence issues do not arise for Equations (10)–(12), but their accuracy decreases as

X is increased or as

is increased.

Remark 1. The solution to Equation (6) may also be obtained by determining the roots of the right-hand side of that equation and then applying the well-known method of partial fractions and integrating the resulting terms.

Remark 2. If the right-hand side of the differential equation is expanded in a Taylor series with respect to the dependent variable up to third or fourth order, then the right-hand side of Equation (6) will contain cubic or quartic polynomials, respectively, whose roots may be determined analytically, e.g., [18]. However, upon using the method of partial fractions, it is an easy exercise to show that the resulting analytical solutions provide the dependent variable in an implicit fashion and that their corresponding finite difference equations are implicit, in contrast to the explicit ones for the methods presented in Section 2 and Section 3, as shown below in Section 5. Furthermore, Taylor series expansions of order equal to or greater than five result in a right-hand side of Equation (6) that is a polynomial of degree equal to or higher than five, whose roots cannot, in general, be determined analytically and, therefore, have to be determined numerically.

3. Piecewise-Continuous Solutions Based on Linear Approximations

If

in Equation (1) is approximated in

by its first-order Taylor series approximation about

, one obtains a first-order, linear, ordinary differential equation that may be deduced from Equations (2)–(5) by setting

in those equations and whose solution may be written as

if

, where

.

If

, the solution to Equation (2) with

is

Herein, we shall refer to Equations (15) and (16) as an approximation or method L, where L stands for (time) linearization or the solution to Equation (2) with

in Equations (2)–(5). This method is exact for , and a, b and c constant.

Remark 3. It should be noted that Equation (15) is an exponential integrator, although this term is usually reserved for methods based on equations such as (cf. Equation (1)), where is a nonlinear function of y [6]. Upon integration of the above equation in , one obtains where , and which, for quadrature techniques that employ the upper end point in the evaluation of the integrand, is an implicit equation for , which requires the use of iterative methods to obtain its solution. For the case that with and is a square constant matrix, exponential integrators result in matrix exponentials. Matrix exponentials also appear in the linear approximation methods presented in this section if with .

If

in Equation (1) is first approximated in

by

, which is then approximated by its first-order Taylor series approximation about

, where

, one obtains a first-order, linear, ordinary differential equation that may be deduced by setting

in Equation (6); the solution to the resulting equation may be written as

if

, where

. If

, the solution is

Equations (17) and (18) are identical to Equations (13) and (14), respectively, if .

Herein, we shall refer to the solutions corresponding to Equations (17) and (18) as methods or approximations Ln, Lnp1 and Lmp for , and , respectively, where L stands for linearization or Equation (6) with . These methods are exact for with a and b constant.
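The partial-linearization step described above can be sketched as follows. The sketch assumes that the three variants differ only in where the independent variable is frozen within the step (left end, right end, or midpoint), as the suffixes n, np1 and mp suggest; the function names, the `where` argument and the test problem are assumptions introduced for illustration and are not the paper's notation.

```python
import math


def phi1(z):
    """(exp(z) - 1) / z, with the removable singularity at z = 0 filled in."""
    return 1.0 if z == 0.0 else math.expm1(z) / z


def linearized_step(f, dfdy, x_n, y_n, h, where="mp"):
    """One step of a partial linearization for y' = f(x, y).

    The independent variable is frozen at x_star inside the step and f is linearized
    in y about y_n, so that u = y - y_n satisfies u' = f0 + J*u with u(0) = 0.
    That linear equation is solved exactly: u(h) = h * f0 * phi1(J*h).
    """
    x_star = {"n": x_n, "np1": x_n + h, "mp": x_n + 0.5 * h}[where]
    f0 = f(x_star, y_n)
    J = dfdy(x_star, y_n)
    return y_n + h * f0 * phi1(J * h)


# Exactness check on y' = a + b*y with constant a and b (illustrative values):
a, b = 2.0, -3.0
h = 0.5
y_step = linearized_step(lambda x, y: a + b * y, lambda x, y: b, 0.0, 1.0, h)
y_true = -a / b + (1.0 + a / b) * math.exp(b * h)   # exact solution with y(0) = 1
print(y_step, y_true)
```

When J tends to zero, `phi1` tends to one and the step reduces to Euler's forward method, which is the limiting behavior noted in Section 2.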

4. Properties of the Piecewise-Continuous Solutions Based on Linear and Quadratic Approximations

The piecewise solutions based on the quadratic and linear approximations of

reported in

Section 2 and

Section 3, respectively, provide analytical solutions in

that are continuous everywhere and exhibit a jump in the first-order derivative at

for

. For example, for the

Rn,

Rnp1 and

Rmp methods or approximations reported in

Section 2, one may obtain from Equation (6) that the left-side derivative at

is

whereas the right-side derivative at that point is

where, for example,

,

and

, and the subscripts

L and

R and the superscripts − and + denote left- and right-side derivatives, respectively; these derivatives are in general different.

For the sake of convenience, herein we shall refer to the left- and right-side derivatives corresponding to Equations (19) and (20) as

and

, respectively; the absolute values of these slopes will be referred to as

and

, respectively. Either the difference between or the ratio of these two derivatives indicates the smoothness of the approximate solutions reported in

Section 2 and

Section 3 at the grid points

for

. Clearly, the decimal logarithm of this ratio, i.e.,

, is expected to tend to zero as

.
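The expected behavior of the slope ratio can be explored with a minimal sketch. It assumes a left-end partial linearization, takes the left-side slope at a grid point as the linearized right-hand side evaluated at the end of the preceding interval, and takes the right-side slope as the right-hand side of the next interval's model at its start. This is one reading of the definitions above, not a transcription of Equations (19) and (20); the test equation and all names are illustrative assumptions.

```python
import math


def phi1(z):
    """(exp(z) - 1) / z, with the removable singularity at z = 0 filled in."""
    return 1.0 if z == 0.0 else math.expm1(z) / z


def slope_ratio_logs(f, dfdy, x0, y0, h, n_steps):
    """Return log10(right slope / left slope) at the grid points x_1, ..., x_{n_steps}."""
    logs = []
    y = y0
    for i in range(n_steps):
        x = x0 + i * h
        f0 = f(x, y)
        J = dfdy(x, y)
        y_next = y + h * f0 * phi1(J * h)   # exact solution of the linearized equation
        left = f0 + J * (y_next - y)        # slope of this interval's model at its right end
        right = f(x + h, y_next)            # slope of the next interval's model at its left end
        logs.append(math.log10(right / left))
        y = y_next
    return logs


# Illustrative test equation with positive slopes: y' = 1 + x^2 + y^2, y(0) = 0, on [0, 0.5].
f = lambda x, y: 1.0 + x * x + y * y
dfdy = lambda x, y: 2.0 * y
coarse = max(abs(v) for v in slope_ratio_logs(f, dfdy, 0.0, 0.0, 0.1, 5))
fine = max(abs(v) for v in slope_ratio_logs(f, dfdy, 0.0, 0.0, 0.025, 20))
print(coarse, fine)
```

For this test equation the largest logarithm of the slope ratio shrinks when the step size is reduced, in line with the statement above.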

The piecewise analytical solutions reported in Equations (10)–(18) when substituted in Equation (1) result in the following residual or defect

for

, where

denotes the approximate analytical solutions corresponding to Equations (10)–(18), which, as stated above, depend not only on

but also on

and

; therefore, their residuals also depend on

x,

and

.

The residual or defect indicates by how much the approximate solution fails to satisfy the original differential Equation (1), whereas the difference between and for denotes the error or accuracy of the piecewise-analytical solution to Equation (1), where stands for the exact solution to that equation. Herein, we shall assess the error of the approximate solution as for .

As stated above, Equation (21) is not valid at the grid points

for

because the approximation methods presented in this paper are continuous and analytical in

, but the left- and right-side derivatives of the approximate solutions obtained here are not equal at

for

. However, since

in Equation (1) denotes the slope of

, and

appears in Equation (21), it proves to be convenient to introduce the following expression

as an estimate of the residual. Note that, if

and

, then

and

, which is

if

but may be very large if

.

6. Results

In this section, the finite difference methods reported in

Section 5 are applied to five variable-coefficient Riccati equations that have analytical solutions in order to assess the influence of

on the accuracy of their solutions as a function of the step size. These examples represent a sample of the numerical studies on twenty nonlinear, ordinary differential equations, which have been analyzed with the finite difference methods reported in

Section 5.

For nonlinear, ordinary differential equations that do not have exact solutions, numerical experiments were performed with step sizes equal to for , where denotes the initial step size, and the solutions thus obtained were denoted by , where the superscript refers to and the subscript m to the step size; a numerical solution was considered to be accurate whenever and if , where and are the user’s specified tolerances that depend on the (solution of the) nonlinear ordinary differential equation to be solved.

The examples considered here include Riccati equations with coefficients that are polynomials and/or exponential functions of the independent variable, smooth solutions, and solutions that blow up in finite time and have been selected to illustrate the effects of both the magnitude and the sign of

introduced in

Section 2 on the methods' accuracy. As shown in that section, the finite difference equations of the methods

Rn,

Rnp1 and

Rmp depend on the sign of

as illustrated in Equations (23)–(25).

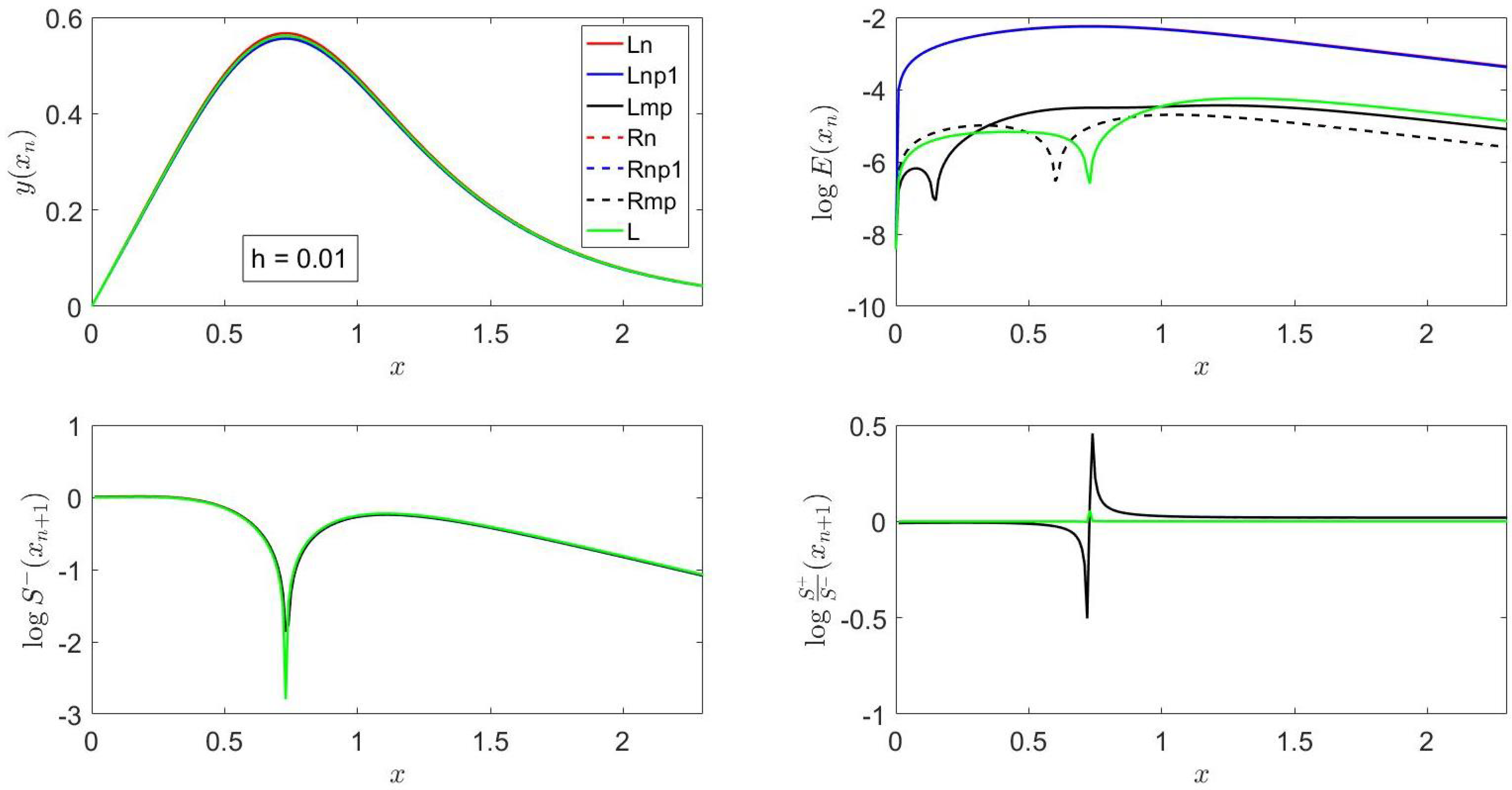

6.1. Example 1

This example corresponds to

whose solution is

where

. This solution tends to zero as

and exhibits a relative maximum at

, as shown in

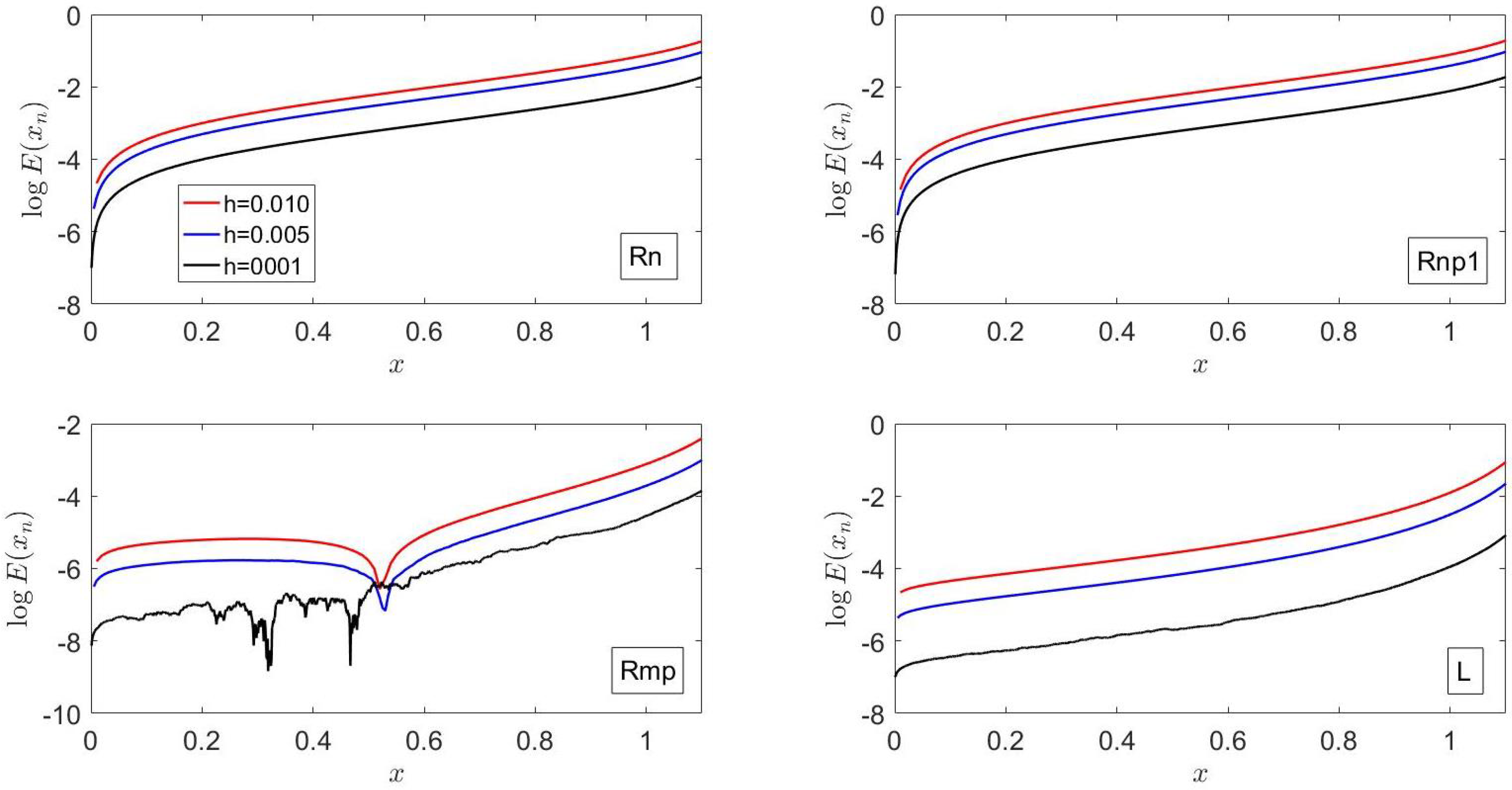

Figure 1.

For this example, the radicand defined in

Section 2 is

, and, therefore, the finite difference expression for the methods

Rn,

Rnp1 and

Rmp is that of Equation (23), i.e., the ratio of exponential functions.

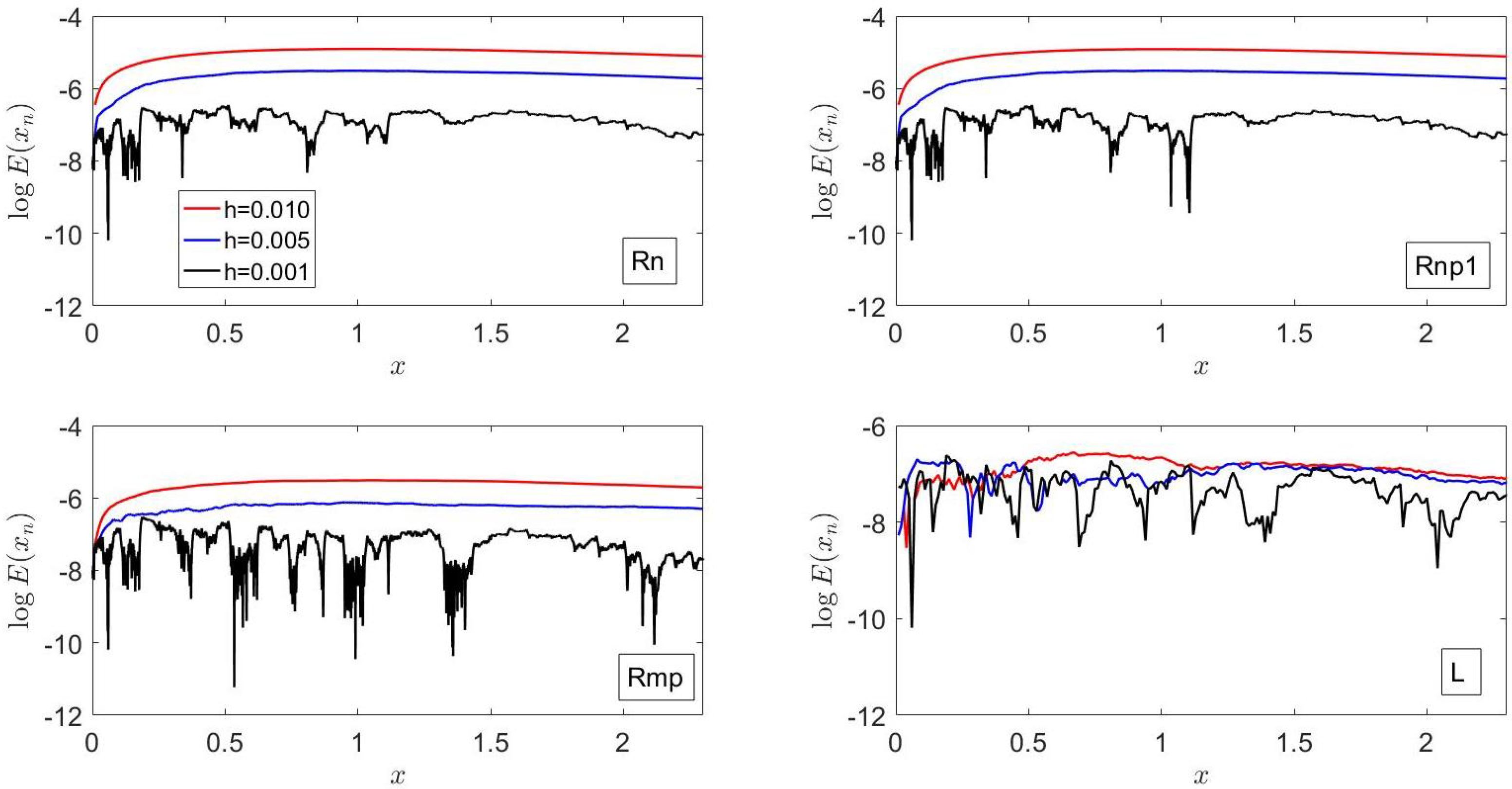

Figure 1 shows that the errors of

Ln,

Lnp1,

Rn and

Rnp1 exhibit the same trends and have similar values, which are larger than those of

Lmp and

Rmp, in accordance with the local truncation error analysis reported in the previous section. The errors of these six methods first increase as

x increases from its initial value until

reaches its relative maximum and then decrease at a smaller rate; i.e., they exhibit the same trends as the analytical solution of Equation (38).

For

x greater than unity,

Figure 1 indicates that the errors of

Lmp and

Rmp are smaller than those of

L; the error of the latter exhibits a relative minimum at the location of the maximum value of

, i.e., at the location where

.

As shown in

Section 2 and

Section 3, the piecewise-analytical methods

Lmp and

Rmp do not account for the dependence of

x in

since the independent variable is assumed to be equal to

in that interval; on the other hand,

L takes into account that dependence in a linear fashion, as described in

Section 3.

Note that, since for

, Equation (38) behaves as

, and, therefore,

, one would expect that

L is less accurate than

Lmp and

Rmp for

, as illustrated in and in in accordance with the errors shown in

Figure 1.

For this example, all the methods presented in this manuscript show that decreases from its initial value until reaches a relative maximum, then decreases and later increases sharply up to the inflection point of , exhibits a relative maximum, and then decreases at a much slower rate. The smallest value of the left-side derivative corresponds to L, which includes (cf. Equation (4)).

As illustrated in

Figure 1, the ratio of the right- to the left-side derivative is almost one for all

except in a neighborhood of the abscissa where the relative maximum of

occurs; on the left of this abscissa, the slope ratio is less than unity and greater (in absolute value) for

Ln,

Lnp1,

Lmp,

Rn,

Rnp1 and

Rmp than for

L, whereas, on the right of this abscissa, the slope ratio is greater than one and greater (in absolute value) for

Ln,

Lnp1,

Lmp,

Rn,

Rnp1 and

Rmp than for

L. The behavior of

Rn,

Rnp1 and

Rmp just described is a consequence of the fact that

,

, increases exponentially as

x increases and

for

, whereas those of

Ln,

Lnp1,

Lmp and

L are due to the behavior of

described above as well as to the fact that

for

.

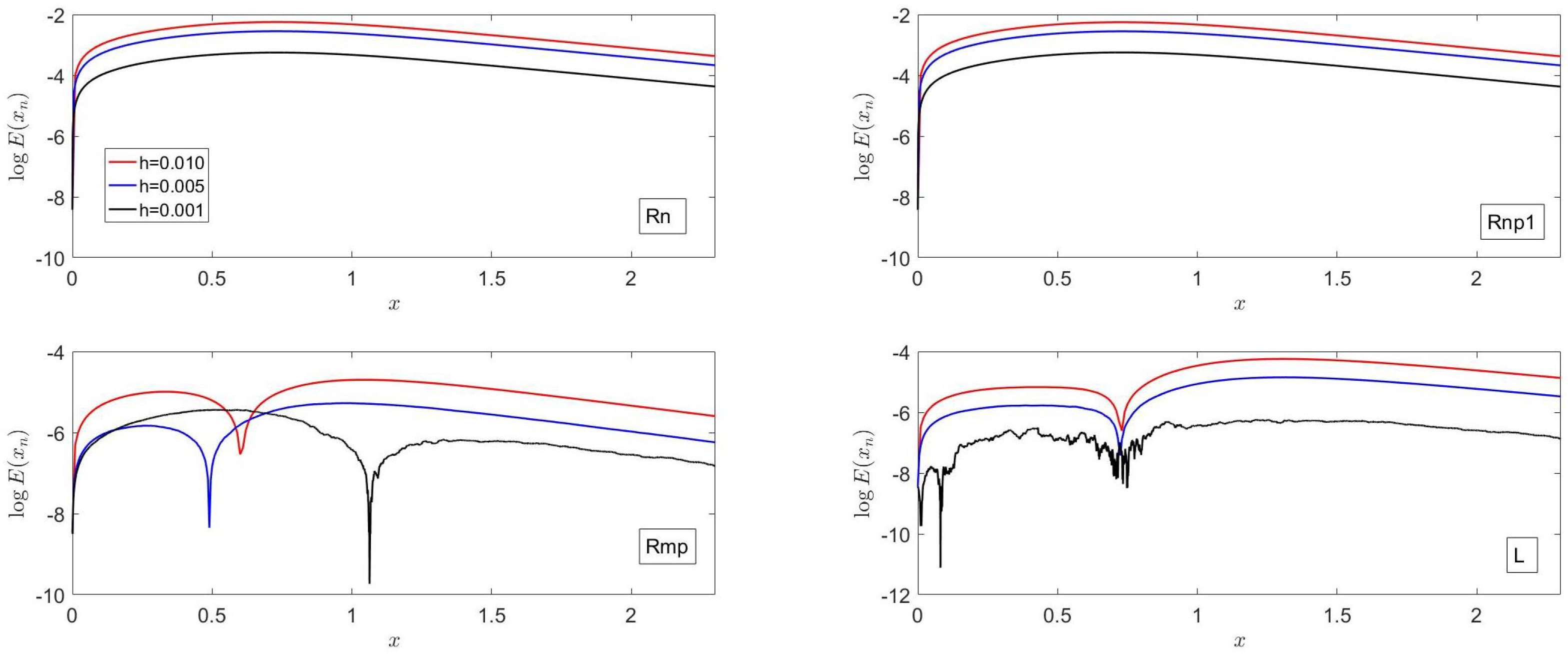

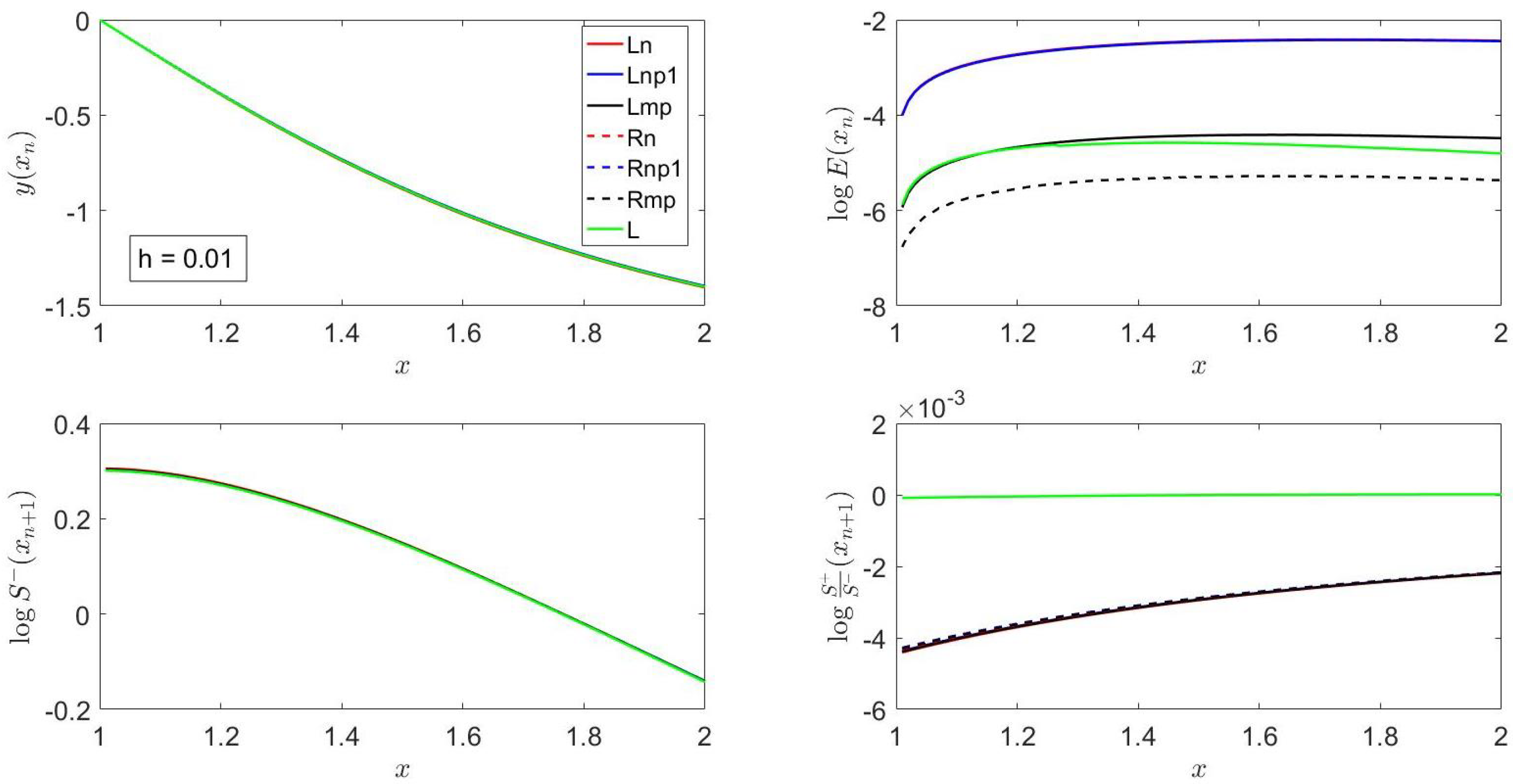

In

Figure 2, it is shown that, at

, the accuracy of

Rn is about the same as that for

Rnp1; the accuracy of these two methods first decreases sharply, reaches a relative minimum, and then increases slowly as

x increases; the order of accuracy of these methods is in accordance with what was stated previously in this subsection, as well as with what is stated in

Section 5.

For , Rmp is more accurate than L despite the fact that both are ; the opposite behavior is, however, observed for . The difference in accuracy between these two methods is mainly due to the coefficient that multiplies the term in Equation (32), as discussed at greater length in Example 5 below.

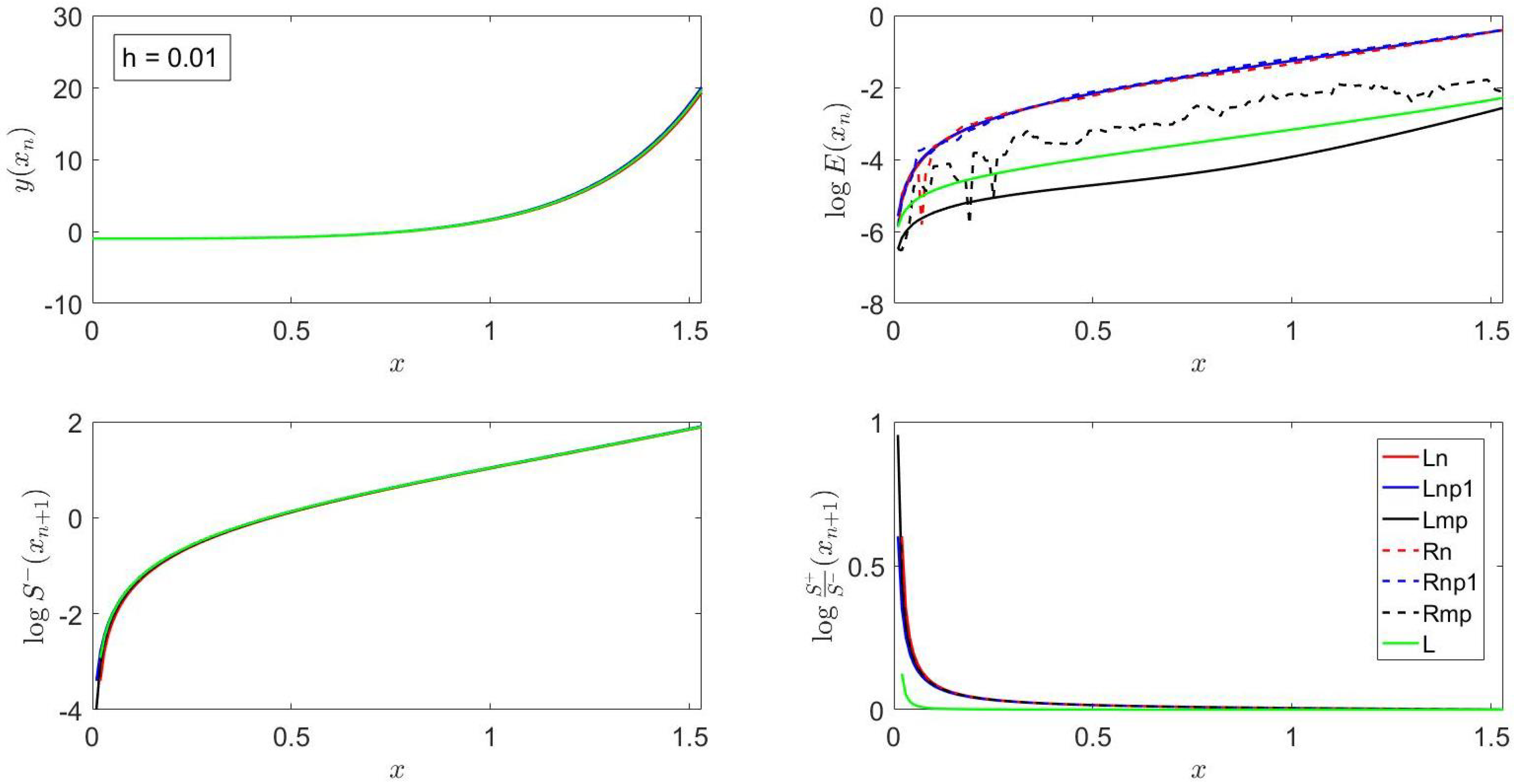

6.2. Example 2

This example corresponds to

whose solution is

and exhibits blowup in finite time at

, i.e.,

; however, since the principal branch of

is

, the results presented here correspond to this branch.

Figure 3 indicates that the exact solution increases as

x increases; in fact, for

,

. Moreover, for this example,

(cf.

Section 2), and, therefore, the finite difference equation for the methods

Rn,

Rnp1 and

Rmp is that of Equation (24).

Figure 3 also shows that

Lmp is the most accurate method;

L is more accurate than

Rn; the accuracy of

Rmp is lower than that of

L but higher than that of

Rnp1; and the accuracy of

Rnp1 is comparable to those of

Ln,

Lnp1 and

Rn. These results are in accordance with the local truncation error analysis reported in

Section 5 and the smooth behavior of Equation (40) in the principal branch of

.

The errors illustrated in

Figure 3 increase as

x is increased in accordance with the fact that the exact solution given by Equation (40) increases as

x increases.

Figure 3 also shows that all the methods presented here provide almost the same value of

except near

due to the exponential dependence of the coefficients that appear in Equation (40). On the other hand, the slope ratio is much larger for

Ln,

Lnp1,

Lmp,

Rn,

Rnp1 and

Rmp than for

L due to the fact that the latter makes use of

(cf. Equation (4)) and, therefore, accounts for the (explicit) dependence on

x in

(through

), as indicated in

Section 3.

Figure 3 also shows that

Ln,

Lnp1,

Rn, and

Rnp1 exhibit the same trends and have similar errors: these methods are less accurate than

Rmp, which, in turn, is less accurate than L, and the latter is, in turn, less accurate than

Lmp. The higher accuracy of

Lmp as compared with that of

L is due to the fact that, since

is an increasing function of

x for

,

; in addition, for

,

for

. On the other hand, the lower accuracy of

Rmp compared with that of

Lmp for this example is due to the fact that, since

,

(cf. first line below Equation (11)), and

,

and

(cf. Equations (40) and (24)).

Figure 4 shows that the errors of

Rn exhibit similar trends to those of

Rnp1, but the errors of these two methods do not exhibit a monotonic behavior with

h for all

. For example, the accuracy of

Rnp1 is lower for

than for

initially, whereas the opposite behavior is observed at later times. A similar behavior is observed for

. This is in marked contrast with the accuracy of

, which depends monotonically on

h, i.e., it increases as

h is decreased and is greater than those of

Rn,

Rnp1 and

Rmp; this is consistent with the fact that, as indicated previously, for this example,

, but

and

L depends on

,

and

h (cf. Equations (2)–(4)). In addition,

L depends exponentially on

J and

h, whereas

Rn,

Rnp1 and

Rmp depend algebraically on

J and

h as indicated in Equations (28) and (24), respectively.

On the other hand, the accuracy of

Rmp seems to decrease as

h is decreased but is higher than those of

Rn and

Rnp1 for

. The behavior of

Rmp on

h observed in

Figure 4 is due to the exponential coefficients that appear in Equation (40) and the fact that

,

and

, which imply that, for

,

,

and

. This, in turn, implies that Equation (24) may be approximated by

, which, for

, becomes

, which corresponds to Euler’s forward or explicit method.

6.3. Example 3

This example corresponds to

whose exact solution is

and exhibits blowup in finite time at

, i.e., at

. Note that

for

and that the last term in the right-hand side of Equation (42) is not differentiable at

; however,

and the total derivative of the third term in the right-hand side of Equation (42) with respect to

x at

is 1. In the neighborhood of the blowup time,

.

For this example,

(cf.

Section 2), where

, whose zeroes are

and

, but only the former is a valid one. Moreover, since

,

for

,

for

and

for

and, therefore, these three cases correspond to Equations (25), (24) and (23), respectively. This implies that, as

x is increased from zero, the finite difference equations of the

Rn,

Rnp1 and

Rmp methods evolve from the ratio of trigonometric functions to the ratio of exponential ones.

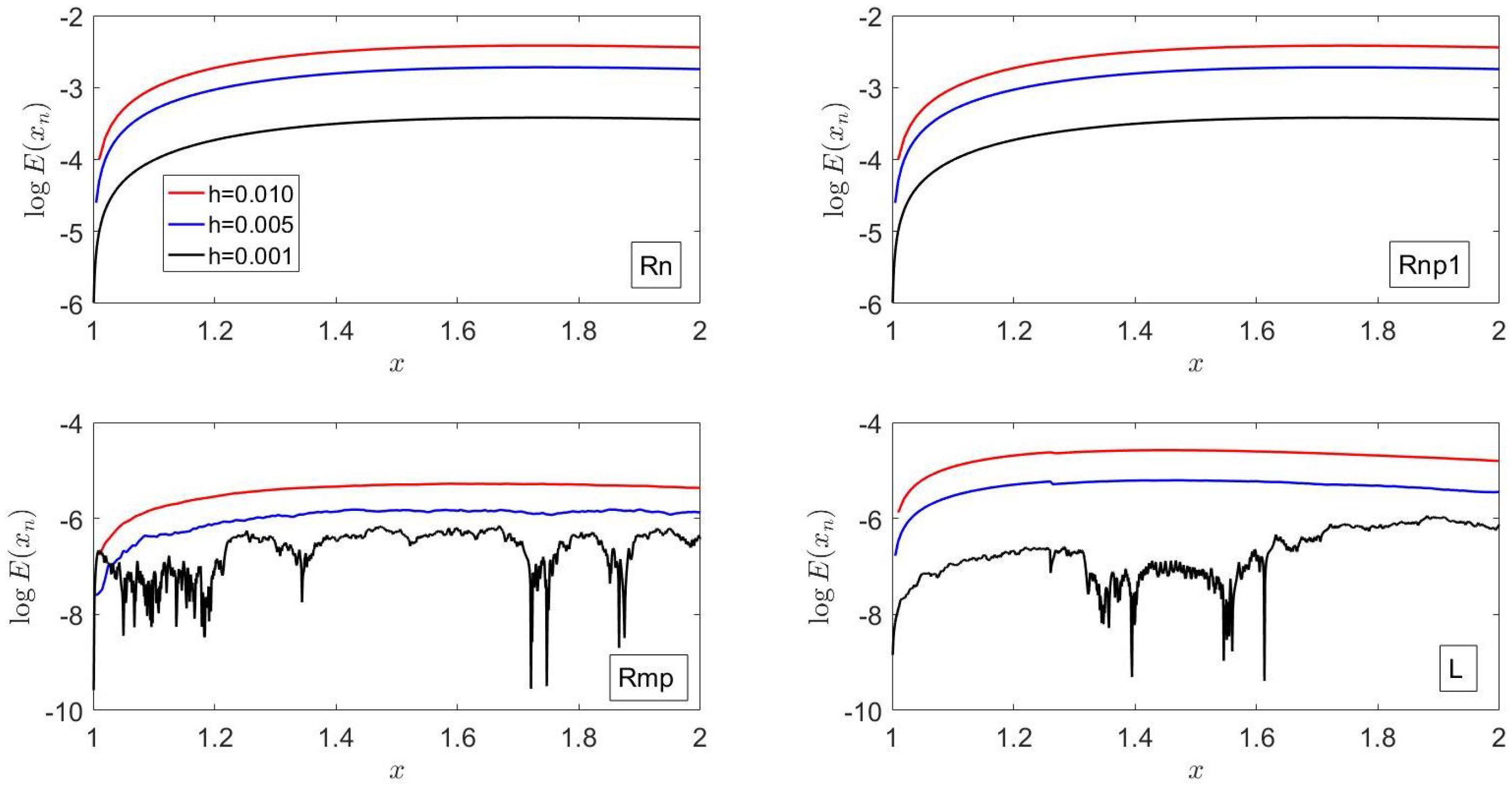

Figure 5 shows that

Lmp is more accurate than

L, which, in turn, is more accurate than

Lnp1; the latter is, in turn, more accurate than

Rnp1, whose accuracy is comparable to but higher than that of

Ln. These results are consistent with the fact that the coefficients that appear in Equation (42) depend on

x, and

Ln,

Lnp1 and

Lmp evaluate these coefficients at different

, whereas, although

L makes use of a Taylor series expansion with respect to both the independent and dependent variables, this expansion is a linear one, and its coefficients are evaluated at

, as shown in

Section 2.

Figure 5 also shows that the most accurate method is

Rmp and that the slope ratio at

is very close to one for all the methods considered in this manuscript.

L predicts the closest slope ratio to unity, followed by

Lmp, which exhibits similar trends to those exhibited by the other methods presented in this paper except near the blowup time. Note that

,

,

and

Rmp accounts for

,

and

, whereas

Lmp only accounts for

and

, and

L accounts for

,

and

(cf. Equations (23)–(31)).

It should be noted that the errors of the numerical methods presented here increase very steeply as the blowup time is approached; this is consistent with the fact that the singularity of the solution derivatives increases as their order is increased. It should also be noted that an accurate study of blowup in finite time requires the use of variable step sizes whose magnitude decreases as, for example, the slope of the solution increases. This was not pursued in this paper, which is concerned with piecewise-analytical solutions to initial-value problems of nonlinear, first-order, ordinary differential equations. Nevertheless, some comments on the numerical solution of problems that exhibit blowup in finite time seem to be appropriate here.

Blowup phenomena in finite time are currently being investigated by the author using variable step sizes based on the slope and/or curvature of the solution; the growth or decay of the solution, etc.; and modifications of the methods L, Lmp and Rmp reported in this manuscript.

Strictly speaking, blowup in either ordinary or evolution partial differential equations means that the solution becomes unbounded at a finite time. When this occurs, the solution loses regularity in a finite time. Since numerical methods cannot deal with infinite values, the issues of how to numerically determine solutions near the blowup time accurately and how to compute an approximate blowup time are of great current interest. Numerical experiments performed to date indicate that adaptive methods can reproduce finite-time blow-up reasonably well; however, they usually do not faithfully reproduce the solution and the blowup rate. In addition, it has been observed that different discretizations result in different growth rates and may introduce spurious behavior in the neighborhood of singularities.

The results presented in

Figure 6 indicate that

Rn,

Rnp1 and

L exhibit the same accuracy trends; the accuracy of

Rn is comparable to that of

Rnp1, which, in turn, is smaller than that of

L; and

Rmp is more accurate than

L. Moreover, close to the blowup time, the errors of L are smaller than those of

Rn and

Rnp1 but larger than those of

Rmp. The higher accuracy of

Rmp is due to the fact that this method accounts for

(cf. Equation (3)), which, when close to the blowup time, is more singular than

, while the lower accuracy of

Rn and

Rnp1 is due to the dependence of the coefficients of Equation (42) on

x and the fact that

,

and

are evaluated at

and

, respectively.

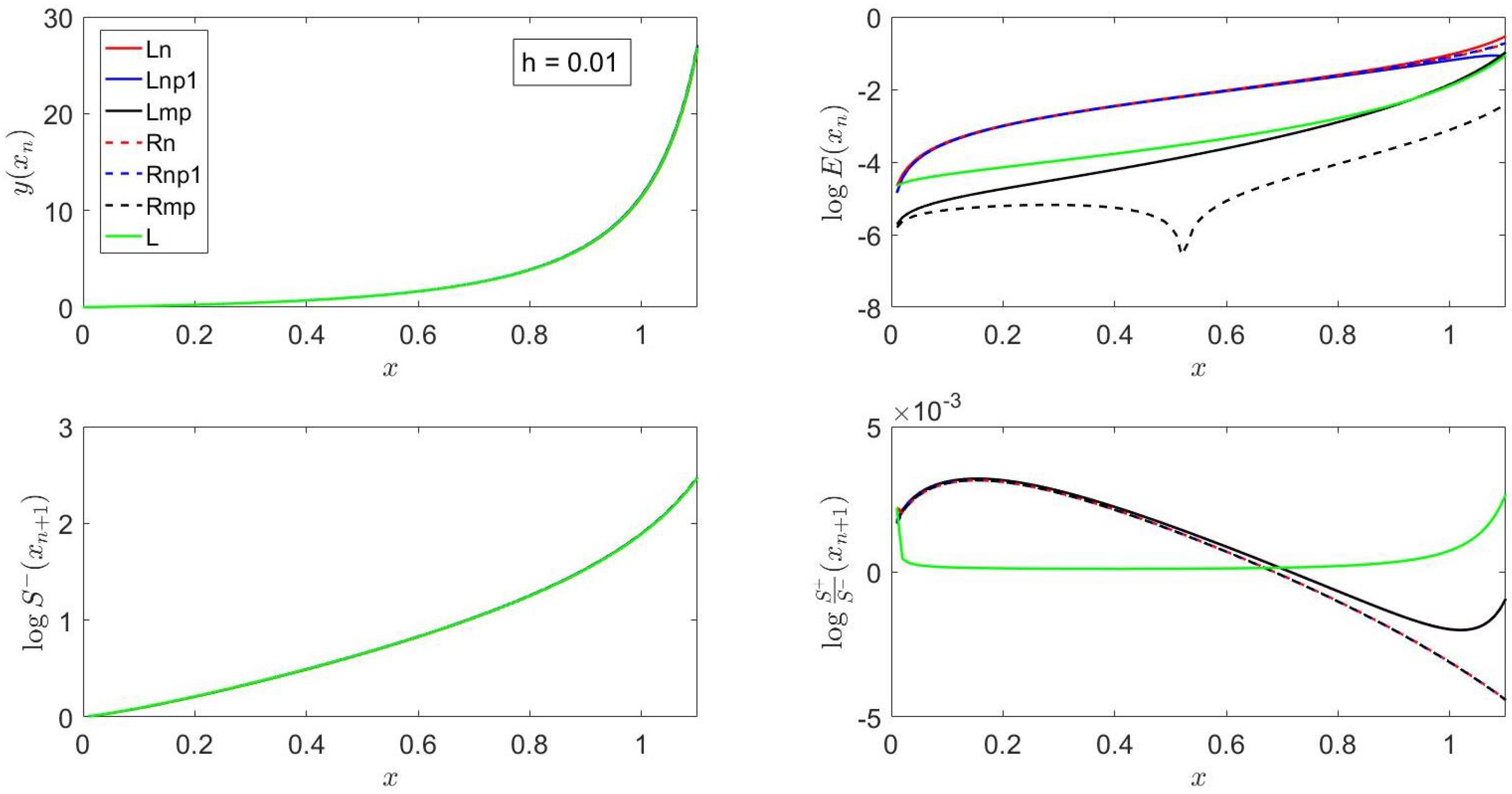

6.4. Example 4

This example corresponds to

whose solution is

and tends to

as

in a monotonic fashion.

For , the finite difference expressions for L, Ln, Rn exhibit a singularity at . For this reason, the results presented here correspond to the initial condition and .

For this example

(cf.

Section 2) and, therefore, the finite difference expressions for the methods

Rn,

Rnp1 and

Rmp are those corresponding to Equation (23). Moreover,

and

. This means that

f,

R,

J,

H tend to zero as

and, therefore, the finite difference Equation (23) tends to that of Equation (24) as

.

Figure 7 indicates that, for

,

L is more accurate than

Lmp, which, in turn, is more accurate than

Ln,

Lnp1,

Rn and

Rnp1; the latter four methods not only exhibit similar trends but also have similar accuracy. The results presented in

Figure 7 are in accordance with the local truncation error analysis reported in

Section 5. The differences between the errors of

L and

Lmp, which are both second-order accurate, are mainly due to the coefficient that multiplies the

in Equation (32) which includes second- and higher-order derivatives of

.

Figure 7 also indicates that the most accurate method is

Rmp for

;

L predicts the closest slope ratio to unity; and

Ln,

Lnp1,

Rn,

Rnp1 and

Rmp predict analogous slope ratios.

Figure 8 indicates that the accuracy of

Rn is comparable to that of

Rnp1 and both methods exhibit the same trends; the accuracy of

Rn is lower than that of

L, whose accuracy, in turn, is lower than that of

Rmp for

; and the accuracy of all the methods presented in this figure first decreases, then reaches a relative maximum and then increases at a slow pace except for

L and

Rmp for

.

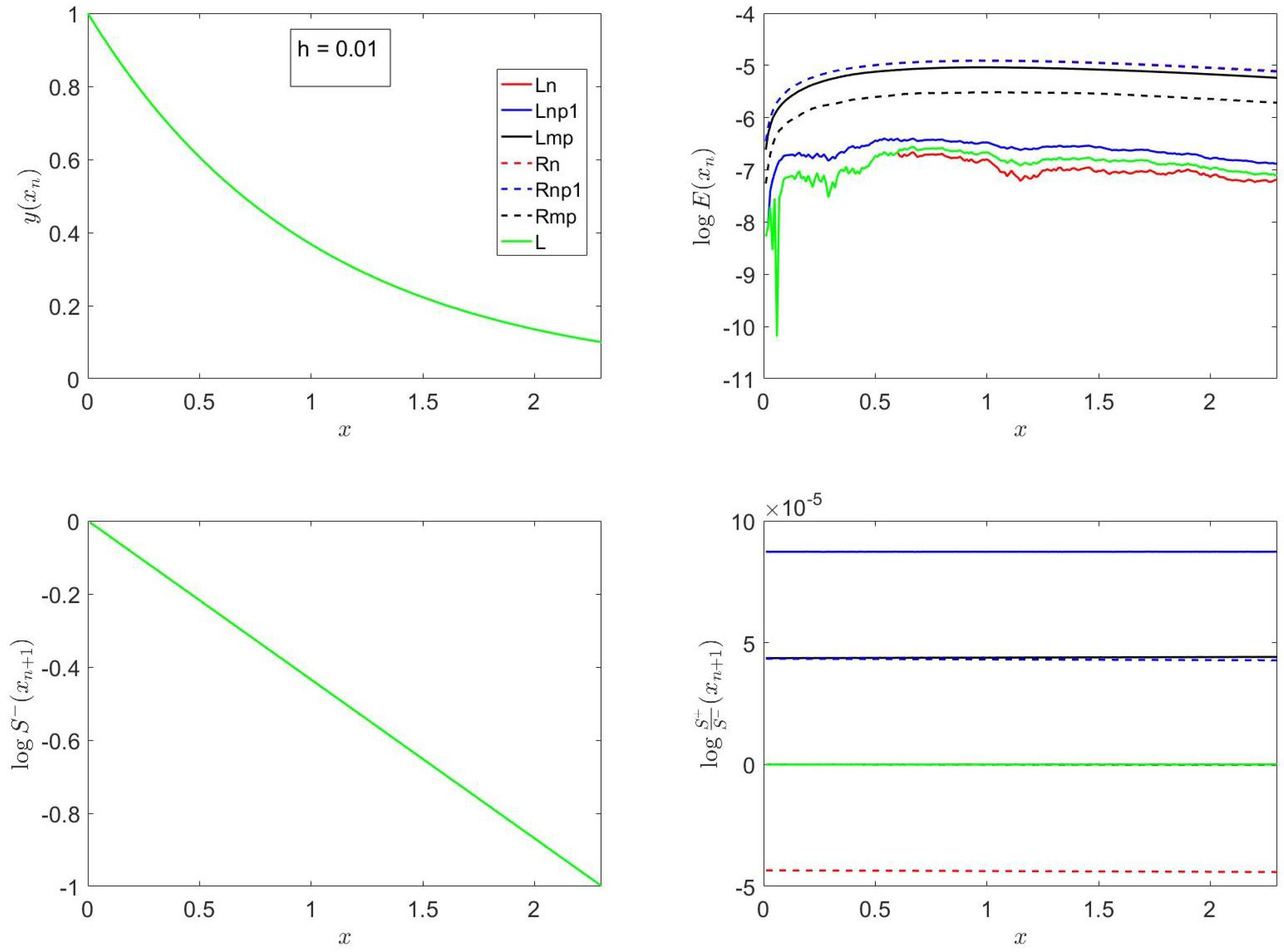

6.5. Example 5

This example corresponds to

whose solution is

and decreases exponentially as

x increases.

For this example, and, therefore, the finite difference equation for the methods Rn, Rnp1 and Rmp is Equation (25). This means that the explicit finite difference Equations (23)–(25) are the ratios of trigonometric functions, whereas those of L, Ln, Lnp1 and Lmp are of exponential type because they depend exponentially on (cf. Equations (28) and (30)). Moreover, , , and . Note also that as in an exponential fashion.

Figure 9 indicates that the most accurate method for this example is

Ln, followed by

L,

Lnp1,

Rmp,

Lmp,

Rn and

Rnp1. This result is due to the fact that the exact solution decreases exponentially with time, the time-dependent coefficients that appear in Equation (46) are exponential functions of time,

, and

increases exponentially with time, as stated above. In addition,

Ln and

Lnp1 evaluate their corresponding first-order Taylor series approximation at

and

, respectively, as indicated in

Section 3 and, therefore,

.

Figure 9 also shows that the differences between the errors of

Ln and

L are very small; in fact, the errors of these two methods are almost identical for

. In addition, the slope ratio predicted by all the methods presented in this paper is very close to unity, as illustrated in

Figure 9, although

Rn predicts a slope ratio slightly less than one, whereas

Ln,

Lnp1,

Lmp,

Rmp and

Rnp1 predict a slope ratio slightly greater than unity for

.

The results illustrated in

Figure 10 show that the errors of

Rn are comparable to those of

Rnp1 and decrease as

h is decreased; these errors are larger than those of

L, which, in turn, are smaller than those of

Rmp, except for

. The reason for this behavior has been explained above; i.e., for

, the approximate, piecewise-analytical methods

Rn,

Rnp1 and

Rmp involve the ratio of trigonometric functions, whereas the linear methods

Ln,

Lnp1,

Lmp and

L are of the exponential type. Note also that for non-autonomous, nonlinear, ordinary differential equations,

L is second-order accurate, as indicated in

Section 5.

The results presented in Figures 1–10 clearly indicate that the most accurate method for examples 1, 3 and 4 is

Rmp for

. These three examples are characterized by well-behaved solutions that first increase and then decrease with

x for example 1 and solutions that exponentially grow or decrease for examples 3 and 4, respectively. However, the accuracy of this method decreases substantially for equations with solutions that blow up in finite time, as in example 2, and equations with

(cf.

Section 2) with exponentially decaying solutions; the loss of accuracy in these cases is due to exponentially growing second-order derivative

and the ratio of trigonometric functions (cf. Equation (25)), respectively.

6.6. Accuracy of the Linear and Quadratic Approximations Methods at Selected Times

In this subsection, the accuracy of the linear approximation methods L, Ln, Lnp1 and Lmp, as well as that of the quadratic ones Rn, Rnp1 and Rmp, is presented for the five examples considered in previous subsections at selected times.

In

Table 1, the decimal logarithm of the error, i.e.,

, at selected times is presented for all the methods and the five examples considered in this paper. This table shows that the errors decrease as the step size is decreased except for

Rnp1 and

Rmp for example 2;

Lmp is more accurate than

Ln and

Lnp1 for all the examples, except for example 5 and

;

L is more accurate than

Lmp for example 2 and examples 4 and 5 for

; and

Rmp is more accurate than

Rn and

Rnp1 for examples 1, 3, 4 and 5 and example 2 for

.

Table 1 also indicates that, for

, the most accurate method is

Lmp for examples 1 and 2, and L,

Rmp and

Ln are the most accurate methods for examples 3, 4 and 5, respectively.

Table 1 also shows that L exhibits an order of convergence slightly above two for examples 1, 2, 3 and 5 and between 1 and 2 for example 4. On the other hand, the order of convergence of

Lmp is greater than two for examples 3 and 5, two for example 4, and slightly below two for examples 1 and 2. These orders of convergence are mainly in accordance with the local truncation error analysis reported in

Section 5, which is based on Taylor series expansions of the finite difference equations and requires that the step size be small. But even though, for example, L and

Rmp are second-order accurate methods (cf., e.g., Equation (32)), the coefficient of the

term in that equation, which is proportional to the second- and higher-order derivatives of

, may become so large that the neglected

term may become comparable to or even larger than the (lower-order) retained ones. This may be the case for problems that exhibit blowup in finite time, such as example 3, and problems with very fast-growing or decaying solutions, such as examples 1, 2 and 5, unless

h is sufficiently small. When such a problem is observed, the step size should be varied according to the solution behavior.
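An observed order of convergence can be estimated from the decimal logarithms of the errors obtained with two step sizes. The following is a minimal sketch of that calculation; the function name `observed_order` and the numerical values in the usage example are hypothetical and are not taken from Table 1.

```python
import math

def observed_order(log10_err_coarse, log10_err_fine, h_coarse, h_fine):
    """Estimate p in error ~ C * h**p from two (log10 error, step size) pairs."""
    return (log10_err_coarse - log10_err_fine) / math.log10(h_coarse / h_fine)

# Illustrative values only: halving h reduces the error by a factor of four,
# which corresponds to second-order convergence.
p = observed_order(-2.0, -2.0 - math.log10(4.0), 0.1, 0.05)
print(p)
```

Such an estimate is only meaningful when the step sizes are small enough for the leading term of the truncation error to dominate, which is the caveat discussed above for blowup and fast-growing or fast-decaying solutions.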

For the five examples considered in this section, except example 2, the accuracy of Rn is very close to that of Rnp1, and the accuracy of Ln is very close to that of Lnp1 for examples 1, 2 and 4 and example 5 with , but lower than that of Lnp1 for example 3.

Note that the comments made above on the results in Table 1 are only valid for the times specified in that table and, therefore, may not be applicable to the whole range of x considered in the examples illustrated in Figures 1–10 of this paper.

6.7. Comparisons with Other Numerical Procedures

In this subsection, the results of the second-order accurate L and Rmp methods presented in this paper are compared with those of the second-order trapezoidal scheme (T) and the fourth-order Runge–Kutta technique (RK4) for the five examples considered in the manuscript. The comparisons are made in terms of the error with respect to the exact solution for three different step sizes and are illustrated in Figures 11 and 12.

Before discussing these figures, it is convenient to emphasize several points. First,

L and

Rmp are implicit methods that provide explicit finite difference formulae, as indicated in

Section 5;

T is an implicit scheme that, for the nonlinear ordinary differential equations of examples 1–5, results in nonlinear algebraic equations that were solved iteratively by means of the Newton–Raphson technique with the convergence criteria:

and

provided that

, where the subscript

k denotes the

k-th iteration within each

, and

RK4 is a four-stage, explicit scheme. Second,

L and

Rmp are

L-stable;

T is

A- but not

L-stable; and

RK4 is conditionally stable. Third, although

L,

Rmp and

T are second-order accurate, the coefficient that multiplies the

term in Equation (32) is different for each of these methods; this should be kept in mind when comparing the errors of these methods, as indicated previously in the subsection on example 5. Fourth, for the examples whose solutions exhibit either blowup in finite time or grow exponentially, the use of a fixed step size in the trapezoidal rule usually results in an increase in the number of iterations required for convergence, i.e., an increase in the computational time, and not very accurate solutions if the step size is not sufficiently small; however, only the accuracy of

L and

Rmp is affected in such cases. Fifth, for the same step size,

RK4 requires more operations than

T, and, for the examples and time steps considered in this work,

T required more operations (due to its iterative character) than

Rmp, which, in turn, required a few more operations than

L. And, sixth,

Rmp makes use of

and its first- and second-order derivatives with respect to the dependent variable, and

L makes use of

and its first-order derivative with respect to the dependent variable, whereas

T and

RK4 only make use of

. This means that step adaptation comes in a natural way for both

L and

Rmp through the magnitude of

, whereas that of

T would require, for example, computations based on different step sizes as discussed at the beginning of this section.

RK4 could also be made adaptive by means of computations with different step sizes, as just described for

T, or by means of embedded formulations such as, for example, the Runge–Kutta–Fehlberg–45 procedure.
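The iterative character of the trapezoidal scheme mentioned above can be sketched as follows. This is a minimal illustration and not the code used to produce the results: the explicit Euler initial guess, the single relative convergence test, and the names `trapezoidal_step`, `tol` and `max_iter` are assumptions introduced here, and the tolerance is not the one used in the paper.

```python
def trapezoidal_step(f, fy, x, y, h, tol=1e-12, max_iter=50):
    """One trapezoidal step for y' = f(x, y), solved by Newton-Raphson.

    fy is the partial derivative of f with respect to y.
    """
    x1 = x + h
    fn = f(x, y)
    y1 = y + h * fn  # explicit Euler predictor used as the initial guess
    for _ in range(max_iter):
        residual = y1 - y - 0.5 * h * (fn + f(x1, y1))
        slope = 1.0 - 0.5 * h * fy(x1, y1)  # derivative of the residual
        dy = -residual / slope
        y1 += dy
        if abs(dy) <= tol * max(1.0, abs(y1)):  # assumed convergence test
            return y1
    raise RuntimeError("Newton-Raphson iteration did not converge")

# Linear test problem y' = -y, y(0) = 1, for which the trapezoidal rule
# gives y1 = (1 - h/2) / (1 + h/2).
y1 = trapezoidal_step(lambda x, y: -y, lambda x, y: -1.0, 0.0, 1.0, 0.1)
print(y1)
```

Each Newton–Raphson iteration requires one evaluation of the right-hand side and one of its derivative with respect to the dependent variable, which is the origin of the additional operations attributed to T for nonlinear problems.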

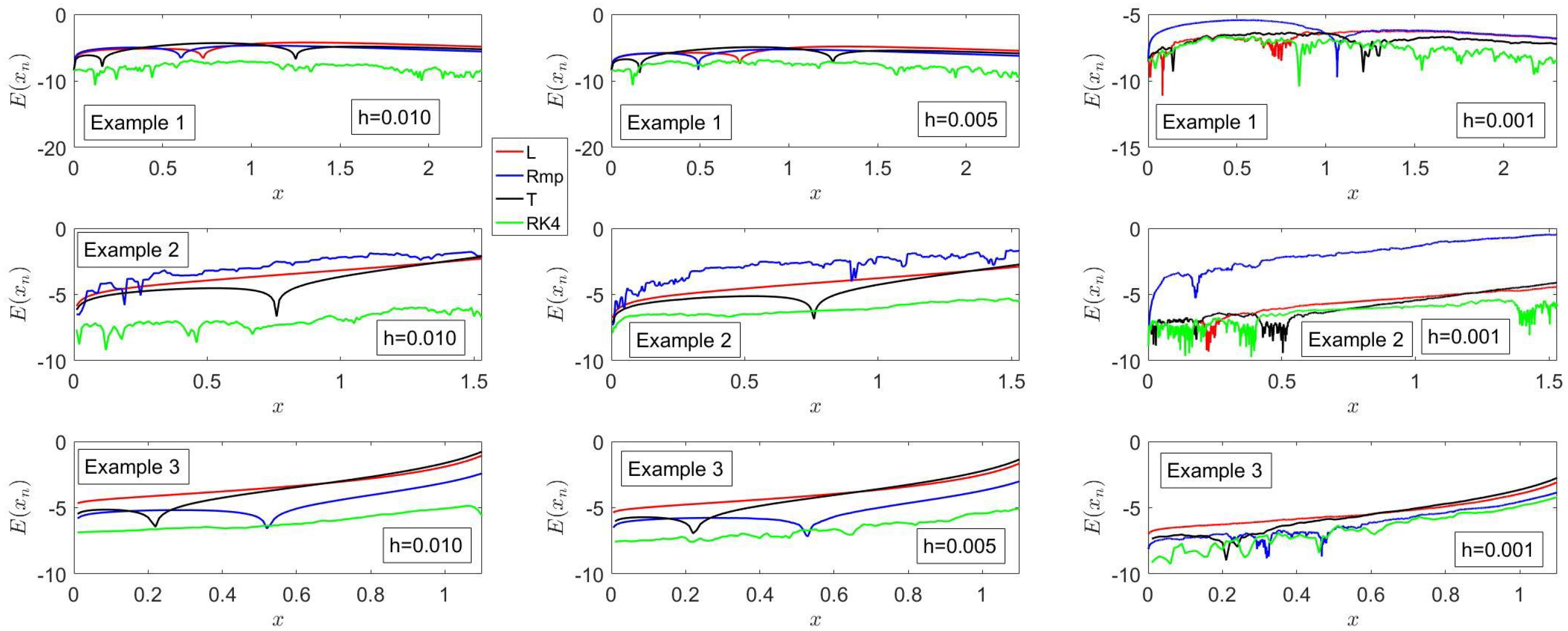

Figure 11 shows that for

and example 1, the methods considered in this section can be ordered in terms of accuracy (from higher to lower) as

RK4,

T,

Rmp and

L, but the errors of the latter three are similar. However, for

, the ordering is

RK4,

T,

L and

Rmp for

and

RK4,

T,

Rmp and

L for

.

For Examples 2 and 3, the ordering of accuracy is RK4, T, Rmp and L, and RK4, Rmp, T and L, respectively. As stated previously, the solution to example 2 is an exponentially growing one, whereas that of example 3 exhibits blowup in finite time.

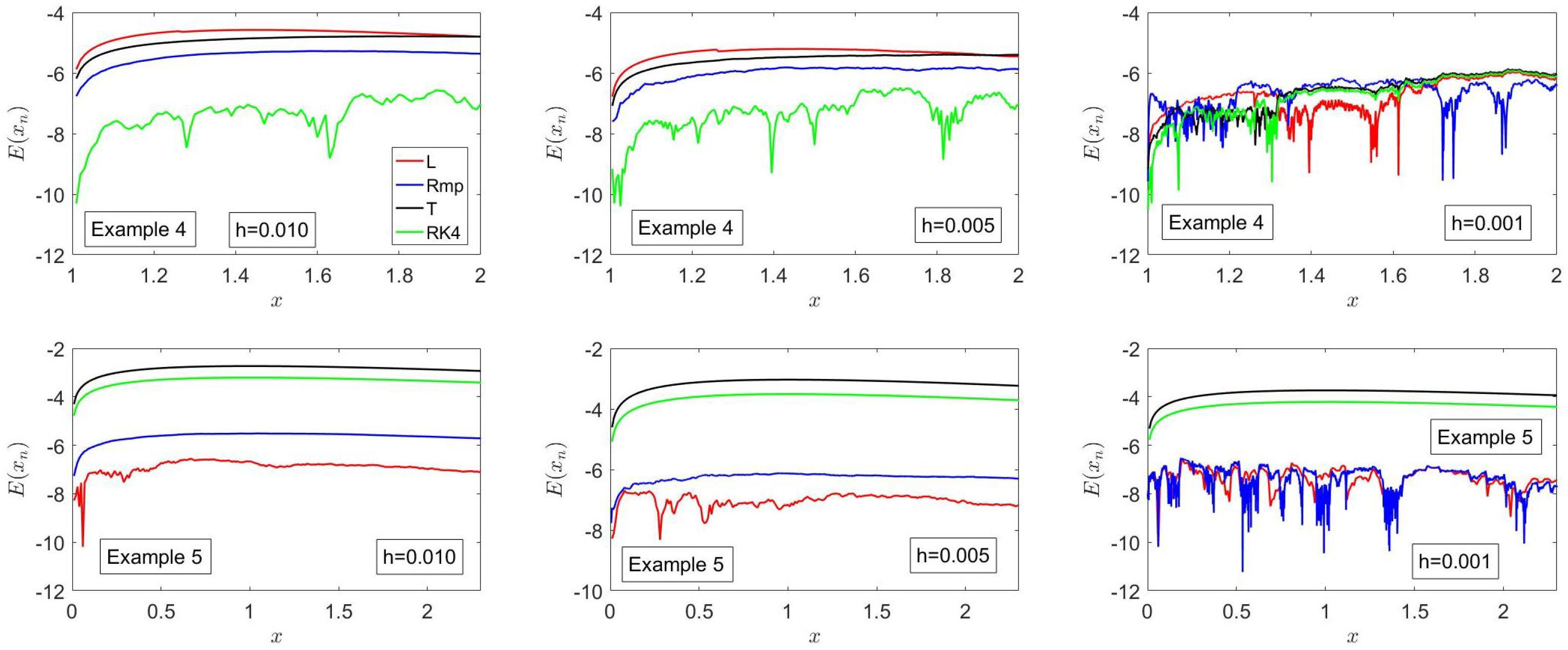

Figure 12 shows that the accuracy order for example 4 is

RK4,

Rmp,

T and

L, for

, and

Rmp,

L,

T and

RK4, for

. By way of contrast, the accuracy ordering for example 5 is

L,

Rmp,

RK4 and

T, for

, and

Rmp,

L,

RK4 and

T, for

. Note that, as stated previously, the solution to example 5 is an exponentially decreasing one and that

Rmp and

L make use of

and

, and

, respectively.

The results presented in

Figure 11 and

Figure 12 clearly illustrate that although

L,

Rmp and

T are second-order accurate and their errors are

, the magnitude of their errors may be different due to the difference in the coefficient that multiplies the

term in their series expansions (cf. Equation (32)).

For example 5, the results illustrated in

Figure 12 indicate that

L and

Rmp are more accurate than

T and

RK4 because

L and

Rmp account for

f and

;

Rmp also accounts for

, whose absolute value is an exponentially increasing function of time. On the other hand,

T and

RK4 only account for

f, as discussed previously.

7. Conclusions

Approximate piecewise-analytical solutions to scalar, nonlinear, first-order, ordinary differential equations have been reported based on fixing the independent variable on the right-hand side of these equations and approximating the resulting term by either its first- or second-order Taylor series expansion.

For second-order Taylor series truncations, the approximate methods presented here result in Riccati equations with constant coefficients whose analytical solutions are obtained as the ratio of polynomials, trigonometric or exponential functions instead of the ratio of the two series that result when the Taylor series expansions also include the dependence on the independent variable.
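The three solution types of the constant-coefficient Riccati equation can be illustrated with the following minimal sketch, which is written under stated assumptions and is not the paper's own finite difference formulae. It assumes that, with the independent variable frozen at a point `x_star`, the increment z = y − y_n satisfies z' = a + b z + c z² with z(0) = 0, where a, b and c denote the right-hand side, its first derivative and one half of its second derivative with respect to the dependent variable; all function and variable names are introduced here for illustration.

```python
import math

def riccati_increment(a, b, c, h):
    """Return z(h) for z' = a + b*z + c*z**2 with z(0) = 0."""
    disc = b * b - 4.0 * a * c  # radicand of the associated quadratic
    if disc > 0.0:
        s = math.sqrt(disc)
        t = math.tanh(0.5 * s * h)  # ratio of exponential functions
    elif disc < 0.0:
        s = math.sqrt(-disc)
        t = math.tan(0.5 * s * h)   # ratio of trigonometric functions
    else:
        return 2.0 * a * h / (2.0 - b * h)  # ratio of polynomials
    return 2.0 * a * t / (s - b * t)

def quadratic_step(f, fy, fyy, x_star, y, h):
    """Advance y by h using the frozen-coefficient quadratic approximation."""
    a = f(x_star, y)
    b = fy(x_star, y)
    c = 0.5 * fyy(x_star, y)
    return y + riccati_increment(a, b, c, h)

# y' = 1 + y**2, y(0) = 0 is itself a Riccati equation with a negative
# radicand, so a single step reproduces the exact solution tan(x).
y1 = quadratic_step(lambda x, y: 1.0 + y * y, lambda x, y: 2.0 * y,
                    lambda x, y: 2.0, 0.0, 0.0, 0.5)
print(y1, math.tan(0.5))
```

The sketch does not guard against a vanishing denominator, which corresponds to a pole of the Riccati solution inside the step, nor does it treat radicands that are nearly, but not exactly, zero in a special way.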

For first-order Taylor series approximations, the piecewise-analytical methods presented here result in first-order, linear, ordinary differential equations with constant coefficients whose solutions are exponential, whereas the solutions of linearized methods based on first-order Taylor series approximations that also account for the dependence on the independent variable include exponential functions and first-degree polynomials.
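A step of a linear approximation with the independent variable frozen can be sketched as follows. This is a minimal illustration under stated assumptions rather than the paper's formulae: the right-hand side and its derivative with respect to the dependent variable are assumed to be evaluated at (x_n + theta·h, y_n), with theta = 0, 1/2 and 1 intended to mimic left-point, midpoint and right-point evaluation; the name `frozen_linear_step` and the parameter `theta` are introduced here.

```python
import math

def frozen_linear_step(f, fy, x, y, h, theta=0.5):
    """Advance y' = f(x, y) by h using z' = a + b*z, z = y - y_n, z(0) = 0."""
    x_star = x + theta * h  # frozen value of the independent variable
    a = f(x_star, y)
    b = fy(x_star, y)
    z = b * h
    # phi1(z) = (exp(z) - 1) / z, replaced by its limit 1 as z -> 0
    phi1 = math.expm1(z) / z if abs(z) > 1e-12 else 1.0
    return y + h * a * phi1

# For the linear problem y' = -y the step is exact: y1 = exp(-h) * y0.
y1 = frozen_linear_step(lambda x, y: -y, lambda x, y: -1.0, 0.0, 1.0, 0.1)
print(y1, math.exp(-0.1))
```

The resulting formula is explicit even though it derives from a linearization of the right-hand side, and it depends exponentially on the product of the step size and the derivative with respect to the dependent variable.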

The approximate piecewise-analytical methods based on both the first- and the second-order Taylor series approximations of the right-hand side of the ordinary differential equations have been shown to result in explicit finite difference methods that are unconditionally stable.

Numerical experiments on five first-order, nonlinear, ordinary differential equations whose analytical solutions either grow or decrease monotonically, first increase and then decrease, or exhibit blowup in finite time have been performed in order to assess the accuracy of the methods presented in the paper. For three of these equations, which exhibit either monotonically decreasing or increasing solutions or solutions that first increase and then decrease, it has been found that a second-order Taylor series expansion of the right-hand side of the differential equation evaluated at each interval’s midpoint results in the most accurate method, whereas methods based on either first- or second-order Taylor series expansions of the right-hand side of the differential equation evaluated at the left and right points of each interval have similar accuracy, except for the second example considered in this study.

For one example that exhibits blowup in finite time, it has been found that methods based on a second-order Taylor series expansion may be much less accurate than those based on a first-order expansion as the blowup time is approached, owing to the increase in the singularity of the derivatives with respect to the dependent variable as their order is increased. However, the accuracy of first- and second-order Taylor series expansion methods for blowup problems may be substantially improved by employing variable step sizes that are changed according to, for example, the slope of the solution.

It has also been found that if the radicand of a quadratic equation that depends on the right-hand side of the nonlinear, ordinary differential equations and its first- and second-order derivatives is negative, the accuracy of the quadratic approximation methods presented in this manuscript is much lower than that of the methods based on linear approximations because the former are characterized by finite difference expressions that are the ratio of trigonometric functions, whereas the latter depend exponentially on the first-order partial derivative of the right-hand side with respect to the dependent variable.

It has also been shown that the accuracy of the methods Rmp and L presented in this paper is of the same order as that of a trapezoidal rule that requires an iterative procedure, whereas Rmp and L provide explicit finite difference formulae that are L-stable.