1. Introduction

The Proportional–Integral–Derivative (PID) controller is the most widely implemented control scheme in industrial systems, characterized by its simple structure, direct physical interpretation, and effectiveness in a wide variety of dynamic processes [1]. Its formulation, based on the combination of proportional, integral, and derivative actions, provides a balanced trade-off between response speed, steady-state error elimination, and oscillation damping. It also entails lower implementation costs than other robust control techniques, such as dead-time compensation (DTC) or model predictive control (MPC), which explains its widespread adoption in sectors such as manufacturing, the chemical industry, robotics, and energy [2,3]. However, the effectiveness of PID depends critically on the proper selection of its gains ($K_p$, $K_i$, $K_d$), which determine the stability and transient performance of the system [2,4]. Inappropriate configuration of these parameters leads to degraded behavior, such as excessive overshoot, persistent oscillations, or slow settling, which in turn result in energy inefficiencies, increased mechanical wear, and productivity losses [1,5]. Furthermore, the adjustment process remains highly dependent on operator experience and the operating environment, resulting in highly variable results and a lack of reproducibility across implementations [4]. This set of factors makes PID tuning one of the main bottlenecks in the performance of industrial control systems, particularly in contexts involving non-stationary or nonlinear behavior [5]. Consequently, understanding the structural advantages and limitations of PID has been essential for the development of adaptive approaches and more generalizable optimization strategies.

In response to the limitations of conventional adjustment methods, there has been sustained development of optimization strategies based on metaheuristic algorithms, designed to address the nonlinear, uncertain, and multivariable nature of industrial processes. These strategies, inspired by biological, physical, or social principles, employ global search mechanisms to estimate the optimal parameters of the PID controller in the absence of accurate models [6,7]. This category includes evolutionary algorithms, such as the Genetic Algorithm (GA) and Differential Evolution (DE), and swarm algorithms, such as Particle Swarm Optimization (PSO) [7,8].

The attractiveness of these methods lies in their ability to explore complex search spaces without requiring precise knowledge of the process model, offering robust solutions in the face of uncertainty, noise, or parametric variability. In addition, their stochastic nature helps them escape local minima, achieving superior results in systems with nonlinear dynamics or significant delays [6]. However, their performance depends heavily on the appropriate parameterization of the algorithm and the definition of the optimization criterion, factors that affect the stability and reproducibility of the results [7]. These challenges, together with their high computational cost, have driven the search for approaches with greater adaptive capacity and less dependence on external calibration, particularly those based on machine learning techniques, which have attracted growing attention across many fields, including studies that evaluate their integration into tuning strategies.

Advances in artificial intelligence algorithms have led to the incorporation of machine learning (ML) techniques in control problems, either directly or through controller tuning, the latter motivated by the need to overcome the limitations of traditional heuristic strategies. These approaches enable the direct extraction of knowledge from process data, modeling nonlinear behaviors and temporal dependencies without the need for a detailed physical model of the system [6,9]. Within the field of ML, three main paradigms are commonly distinguished: supervised learning, used in system identification and modeling tasks; unsupervised learning, aimed at discovering hidden patterns in the data; and reinforcement learning (RL), which has gained relevance due to its ability to autonomously adjust control policies through direct interaction with the environment [10,11,12].

Numerous algorithmic proposals have been developed, including the use of recurrent neural networks (RNNs) and their extensions, such as long short-term memory (LSTM) networks, which have proven highly effective in capturing the temporal dynamics of industrial processes, facilitating the development of predictive and adaptive models that generalize better under disturbances and nonlinearities [9]. In turn, deep reinforcement learning architectures, particularly those based on Actor-Critic (AC) structures, and policy-optimization approaches such as Deep Deterministic Policy Gradient (DDPG) and Proximal Policy Optimization (PPO), have enabled the implementation of self-tuning PID controllers capable of optimizing their gains online from reward signals without relying on exact process models [12,13,14,15]. However, the computational cost of training and the difficulties inherent in hyperparameter selection limit their large-scale adoption [16,17].

In this context, it is pertinent to investigate the design of hybrid algorithms that combine the robustness and simplicity of PID with the adaptive capacity of more advanced optimization methods. This challenge raises a logical question: Is it possible to design reinforcement learning architectures that maintain transparency and remain effective in continuous control tasks?

One promising approach is to restrict the complexity of the agent and shift the design toward schemes where adaptation mechanisms are observable and interpretable. This involves prioritizing direct interpretability over post-hoc explanations (external methods that seek to explain a black box), allowing for the inspection, verification, and traceability of decisions. In this sense, RL approaches with discretized states and actions, explicit update rules, and low-complexity value/policy functions, such as classic Q-learning or other algorithms that offer an adequate compromise between adaptability and readability of the decision mechanism, are relevant to validate and extend [18]. When these decisions are explicitly set, the perception-decision-action cycle becomes auditable, allowing informed human orchestration or intervention to enhance learning stability [18,19,20], a decisive step toward intelligent control that is verifiable and aligned with industrial requirements for robustness and explainability.

In turn, the evolution toward systems with multiple interconnected agents/controllers has given rise to a set of distributed strategies that combine local autonomy with global cooperation, highlighting key aspects and promising alternatives for their integration. Among these, studies have shown that exposing consensus relationships among control inputs facilitates the analysis of collective behavior [21]. Asynchronous distributed predictive approaches provide an effective solution in environments where agents operate with heterogeneous sampling times, ensuring stability and compliance with physical constraints even under external disturbances [22]. Meanwhile, studies in distributed differential games have demonstrated that formulating control as a strategic interaction between agents with individual objectives allows Nash equilibria to be achieved through linear feedback laws that consider the partial knowledge of each subsystem [23].

Taken together, these studies underscore key considerations for incorporating structural interpretability principles into RL architectures, where coordination and local decisions can be analyzed in terms of their physical impacts and contribution to the overall objective of the system. From this perspective, tabular Q-learning is revisited here as a fundamental strategy for online PID tuning. The central idea is to design an appropriate training framework to analyze how agents progressively configure tuning policies for each PID gain, so that the tuning of each gain can be understood as a sequential decision process evaluated across defined intervals. This approach shifts the focus from mere parameterization of the algorithm to observing how the adjustment itself unfolds over evaluation time windows, highlighting the interaction between reward design, convergence behavior, and the emergence of stabilizing strategies under conditions of reduced observability and without access to explicit system models.

To further expand this understanding and address the rapid growth of Q-learning tables, the study adopts a configuration of independent agents, each of which autonomously regulates one of the PID gains ($K_p$, $K_i$, $K_d$). Decoupling the search process reduces the complexity of local convergence, favoring scalability toward multi-agent architectures and the design of more robust and transparent strategies for application in industrial environments. However, it also introduces specific challenges, such as the need for implicit coordination, the non-stationarity of the learning environment caused by the agents' simultaneous interactions with it, and the inherent trade-off between exploration and stability [24]. Given these challenges, this study hypothesizes that a set of independent agents acting within a discrete and limited action space (decrease, maintain, or increase each gain), guided solely by an aggregate global reward function, can effectively explore the parameter space and converge toward stabilizing policies without resorting to complex RL structures. Therefore, the overall objective of this study is to evaluate the feasibility of this discrete multi-agent Q-learning architecture, and the mechanisms by which it operates, for online PID tuning of continuous dynamic systems.

This objective is detailed through three specific aims:

1. To determine whether agents with restricted observability in the state space, whose only interaction with the environment is the modification of their own gains, can achieve stabilizing policies from global reward signals.

2. To analyze the emerging exploration trajectories in the PID parameter space, identifying characteristic patterns of learning and adaptation.

3. To examine the convergence properties of the learning dynamics, ensuring that the adaptation of the gains results in stable and consistent control behavior.

Rather than competing with deep RL schemes or other tuning algorithms with respect to efficiency or accuracy, the study seeks to provide a deeper understanding of the principles that enable effective integration between two classical approaches: control theory and reinforcement learning. To this end, the paper integrates two consolidated theoretical frameworks: on the one hand, the principles of classical control embodied by PID, particularly those related to the stability and dynamic response of closed-loop systems; and on the other hand, the fundamentals of reinforcement learning, centered on the formalism of Markov decision processes and the convergence properties of the Q-learning algorithm. Both domains exhibit strong theoretical and operational compatibility: while PID control adjusts system actions in response to error dynamics, reinforcement learning provides a framework for optimizing adaptive decision sequences. This synergy enables the development of simple, interpretable, and scalable architectures, suitable for implementation in resource-constrained industrial environments.

2. Theoretical Frameworks

2.1. Proportional–Integral–Derivative Controller

The PID controller is a closed-loop feedback control mechanism that forms the basis of many industrial automation, robotics and embedded control systems, thanks to its simple formulation and proven effectiveness [2]. In this work, the PID controller is used in its parallel discrete form, expressed as Equation (1), where $u(t)$ represents the control signal applied to the system, $e(t)$ is the error at instant $t$ (defined as $e(t) = r(t) - y(t)$, where $r(t)$ is the reference signal and $y(t)$ is the system output), and $K_p$, $K_i$ and $K_d$ are the proportional, integral and derivative gains, respectively. Each term contributes distinctly to system dynamics: the proportional component introduces an immediate correction based on the current error, but if $K_p$ is excessive, it can induce oscillations or instability; the integral component accumulates the error history to eliminate steady-state errors, although it can generate overshoot and the integral wind-up phenomenon if not properly regulated; and the derivative term anticipates the error dynamics, generating a useful damping action to reduce overshoot, although it is sensitive to high-frequency noise in the measured signal. Overall, the PID controller seeks to modulate the system response as a function of the error over time, although the nonlinear interaction among the terms makes tuning a complex and delicate process.
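The three actions described above can be illustrated with a minimal sketch of a parallel-form discrete PID. It assumes a rectangular (backward Euler) approximation of the integral and a backward difference for the derivative, that is, $u_k = K_p e_k + K_i \sum_{j \le k} e_j \Delta t + K_d (e_k - e_{k-1})/\Delta t$. The class name `DiscretePID` and its fields are hypothetical, and the exact discretization used in Equation (1) may differ.

```python
from dataclasses import dataclass


@dataclass
class DiscretePID:
    """Parallel-form discrete PID (illustrative sketch, not the paper's code)."""
    kp: float                 # proportional gain K_p
    ki: float                 # integral gain K_i
    kd: float                 # derivative gain K_d
    dt: float                 # sampling period (assumed constant)
    integral: float = 0.0     # running sum of e * dt
    prev_error: float = 0.0   # error at the previous sample

    def step(self, reference: float, output: float) -> float:
        """Return the control signal u for the current sample."""
        error = reference - output                       # e = r - y
        self.integral += error * self.dt                 # rectangular integration
        derivative = (error - self.prev_error) / self.dt # backward difference
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

Because `prev_error` starts at zero, the first call produces a derivative kick when the initial error is nonzero; a practical implementation would typically initialize it with the first measured error and add anti-windup limits on `integral`.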

PID tuning involves identifying an appropriate combination of $K_p$, $K_i$ and $K_d$ values that optimizes system performance according to metrics such as settling time, overshoot, stability, and robustness to perturbations. Historically, empirical methods such as the Ziegler-Nichols [25] (first published in 1942) and Cohen-Coon [26] (first published in 1953) rules have been proposed, which are useful in first-order linear processes but have limitations in delayed or nonlinear systems. More advanced techniques, such as $\lambda$-tuning or IMC-based PID tuning [27], offer a systematic approach that relies on model-based information, with good results in stationary systems. In more complex contexts, methods such as relay feedback [28], adaptive self-tuning algorithms, and heuristic approaches based on optimization (genetic algorithms, Particle Swarm Optimization, Bayesian strategies) have been employed. In addition, approaches have emerged that integrate machine learning and neural networks to determine optimal configurations in complex search spaces. Although these methods have extended the capabilities of PID, in many cases they involve high computational costs or significant dependence on accurate models, which restricts their applicability in dynamic industrial environments. These limitations have motivated the search for alternative methods that allow the PID parameters to be adapted online without requiring an explicit model of the system, a context in which reinforcement learning is positioned as a particularly promising approach.

2.2. Reinforcement Learning

Reinforcement learning is grounded in sequential decision theory, originally formalized by Richard Bellman in the 1950s through dynamic programming. This theory gave rise to the general framework known as the Markov Decision Process (MDP), which forms the basis for a large family of RL algorithms [

29].

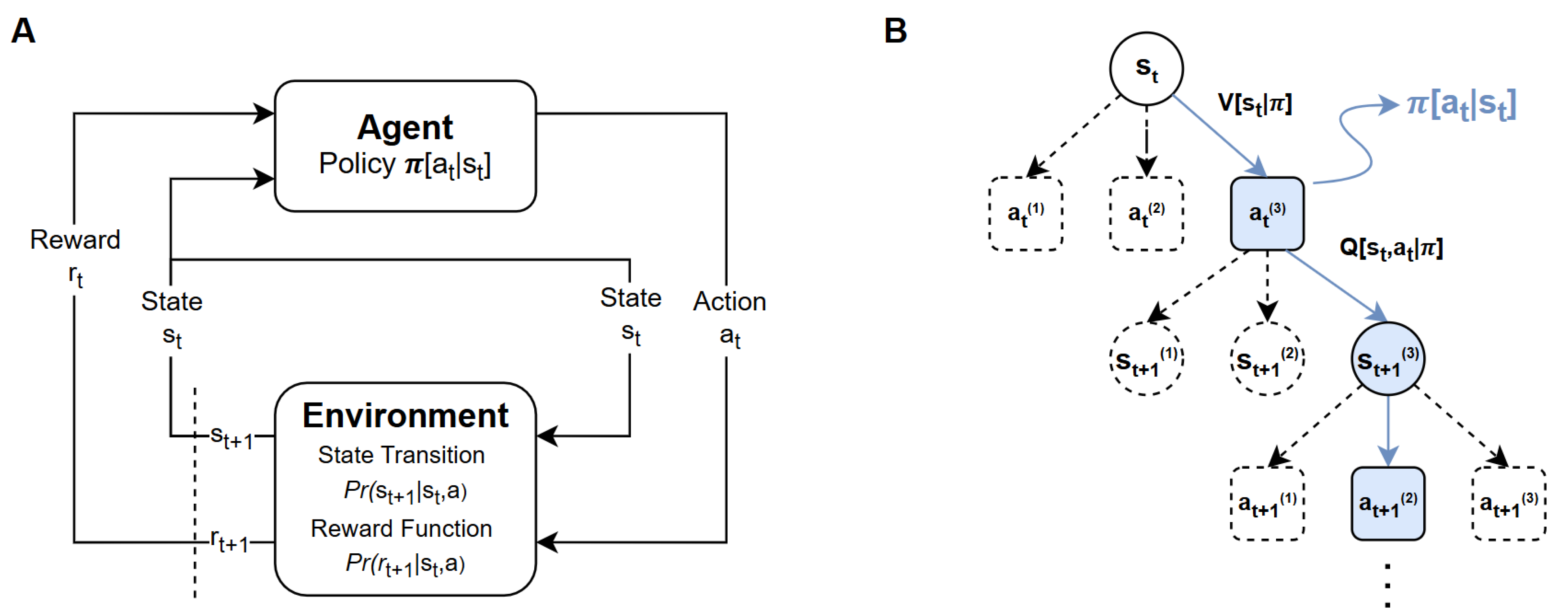

Figure 1A illustrates the fundamental interaction structure between an agent and its environment: at each time instant $t$, the agent observes a state $s_t$, selects an action $a_t$ according to its policy $\pi$, and receives a reward $r_{t+1}$ as the environment transitions to a new state $s_{t+1}$, according to a state transition probability function $P(s_{t+1} \mid s_t, a_t)$. Simultaneously, the reward function determines the distribution of $r_{t+1}$ governing the feedback from the environment and is therefore fundamental to the learning process.

The agent’s objective is to learn a policy $\pi$ that maximizes the expected return, $G_t$, generally defined as the discounted sum of future rewards (see Equation (2)), where $\gamma \in [0, 1]$ is the discount factor that weights the relative importance of future rewards. Values close to zero cause the agent to focus on immediate benefits, while values close to one promote decisions with a long-term perspective.
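The effect of the discount factor can be checked numerically with a short sketch. It assumes the standard finite-horizon form of the discounted return, $G_t = \sum_{k \ge 0} \gamma^k r_{t+k+1}$; the function name `discounted_return` is hypothetical.

```python
def discounted_return(rewards, gamma):
    """Discounted sum of a finite reward sequence, computed backwards in time."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g   # G_t = r_{t+1} + gamma * G_{t+1}
    return g
```

For the reward sequence `[1, 1, 1]`, a discount factor of 0 keeps only the first reward, whereas a discount factor of 1 weights all three equally.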

Figure 1B schematically depicts the expansion of the decision tree from a state $s_t$. Each possible action $a_t$ from that state can lead to different future states $s_{t+1}$, and so on, generating multiple trajectories for the system evolution. In this framework, the policy $\pi$ guides the selection of actions, while the quality of decisions can be evaluated using value functions. The state-value function $V^{\pi}(s)$ represents the expected cumulative reward when the agent starts from state $s$ and continues to act according to policy $\pi$. In contrast, the state-action value function $Q^{\pi}(s, a)$ represents the expected cumulative reward obtained when the agent first executes the action $a$ in state $s$ and then follows policy $\pi$. Intuitively, as shown in Figure 1B, $V^{\pi}$ measures how desirable it is to be in a given state under a policy, whereas $Q^{\pi}$ measures how good it is to take a specific action in that state before continuing with the policy.

These functions form the core of RL algorithms, both in their classical tabular variants, such as Q-learning (off-policy) and SARSA (on-policy), and in their modern versions based on function approximators and deep neural networks. However, as the number of possible states and actions increases, the complexity of the decision space grows exponentially, posing significant challenges to designing optimal policies and formulating effective reward functions in partially observable or model-free environments.

2.3. Q-Learning

The mathematical formulation of reinforcement learning is based on the Bellman equation, which defines the optimal value of a policy recursively, decomposing the expected return as a function of future decisions. However, its classical formulation assumes full knowledge of the environment model, i.e., all transition probabilities and the reward function [29]. This limitation was overcome through the development of Temporal Difference (TD) methods, which allow the agent to learn directly from its experience when interacting with the environment [30].

The key principle of TD learning is the iterative update of value estimates through the temporal difference error $\delta_t$, which measures the discrepancy between predicted and observed returns. This principle has given rise to a broad family of bootstrapping algorithms [31], ranging from tabular methods such as Q-learning and SARSA, to intermediate formulations like Dyna-Q and AC, and modern deep reinforcement learning variants including Deep Q-Networks (DQN), PPO, DDPG, and Asynchronous Advantage Actor-Critic (A3C). Within this spectrum, the Q-learning algorithm [32] remains particularly relevant for its conceptual simplicity and practical robustness. Its update rule (see Equation (3)) adjusts the value of a state-action pair using both the immediate reward and the best estimated value of the next state. Importantly, Q-learning is classified as off-policy because, even if the agent explores the environment following a certain policy, the update always assumes that the best possible action will be taken in the future. The learning rate $\alpha$ controls the extent to which new information influences the update, enabling the agent to progressively refine its estimates of the Q-function as it interacts with the environment.
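The update described above can be sketched in a few lines for a tabular Q-function stored as a NumPy array. The sketch assumes the standard Q-learning rule, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$; the function name `q_update` and the `terminal` flag are illustrative choices rather than the paper's notation.

```python
import numpy as np


def q_update(q, s, a, r, s_next, alpha, gamma, terminal=False):
    """Apply one tabular Q-learning update in place and return the TD error.

    q is a 2-D array indexed as q[state, action]. When `terminal` is True
    there is no successor state, so the bootstrap term is dropped.
    """
    target = r if terminal else r + gamma * np.max(q[s_next])  # greedy (off-policy) target
    td_error = target - q[s, a]
    q[s, a] += alpha * td_error
    return float(td_error)
```

The `max` over the next state's row is what makes the rule off-policy: the target assumes the greedy action regardless of which action the exploring agent actually takes next.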

Under conditions of sufficient exploration and a suitably decaying learning rate, Q-learning has been shown to converge to the optimal value function $Q^{*}$ [29]. However, this convergence guarantee only holds for discrete and finite state and action spaces. Applying the algorithm to continuous control problems, such as PID tuning, therefore requires an explicit discretization of the parameter space and of the possible observable values. This operation introduces a critical trade-off between accuracy and computational feasibility.

On the one hand, a coarse discretization reduces the size of the table, favoring the speed of learning and the use of lightweight architectures, but on the other hand, it limits the agent’s ability to approximate optimal solutions in continuous environments. Conversely, a fine discretization improves the resolution of the learned policy, but generates a combinatorial explosion of the state-action space, a phenomenon known as the “curse of dimensionality”, which can render learning impractical in terms of memory and convergence time.
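A short back-of-the-envelope sketch makes this growth concrete for the three PID gains. It compares a hypothetical single agent acting on all gain combinations with three independent agents, each holding its own table; the function names and the choice of 50 levels per gain are assumptions made only for illustration.

```python
def joint_table_size(levels, n_gains=3, n_moves=3):
    """Q-table entries for one agent whose state is the full gain combination
    and whose action is a joint move (decrease/maintain/increase per gain)."""
    return (levels ** n_gains) * (n_moves ** n_gains)


def independent_table_size(levels, n_gains=3, n_moves=3):
    """Total Q-table entries when each gain has its own agent and table."""
    return n_gains * levels * n_moves


if __name__ == "__main__":
    for levels in (10, 50, 100):
        print(levels, joint_table_size(levels), independent_table_size(levels))
```

With 50 levels per gain, the joint table has 3,375,000 entries, whereas the three independent tables together have 450, which illustrates why decoupling the agents keeps a tabular method tractable as the discretization is refined.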

In this context, Q-learning acquires strategic significance as a foundation for exploring the balance between simplicity and impact. Its explicit structure allows for a transparent analysis of how the agent’s decisions evolve based on the feedback obtained, which is especially valuable in tasks such as adaptive PID tuning, where understanding the interaction between local parameter settings and the global system response is essential.

2.4. RL Applied to PID Tuning

The integration of RL techniques with PID controllers has been widely studied, with deep reinforcement learning approaches being particularly prominent. Algorithms such as DDPG, PPO, and Soft Actor-Critic (SAC) have proven effective in continuous control tasks, where neural networks allow PID parameters to be adjusted based on experience, optimizing performance metrics such as cumulative error, overshoot, and robustness against disturbances [11,33,34,35]. In addition, architectures such as AC, A3C, and variants of PPO and DDPG have been applied in various scenarios, confirming the adaptability of deep RL to complex environments [17,36,37,38,39].

In parallel, hybrid schemes combining RL with heuristic techniques have been proposed. Among them, the use of fuzzy Q-learning in multi-agent systems has attracted particular attention, where each agent adjusts a PID parameter with rewards based on integral error indices (IAE, ITAE) [40]. Along the same lines, Li et al. [41] incorporated hybrid mechanisms capable of operating in continuous domains based on classical control performance metrics.

Conversely, discrete reinforcement learning algorithms have been effectively applied in robust predictive control models [42,43], integrated with other techniques for the adaptive management of actively perturbed environments, and for the tuning of PID controllers [44,45], although mainly under conditions of high observability and within constrained methodological frameworks. Specifically, works such as those by Elhakim et al. [46], Shi et al. [47], and Thanh et al. [48] defined states based on error variables and their temporal evolution, while actions were defined as discrete increases or decreases in the gains. In these cases, reward functions penalized significant deviations and encouraged convergence toward stable configurations. Other proposals have introduced variants including incremental and double Q-learning approaches, where the state space was progressively refined and the action space was adaptively expanded, using Gaussian rewards to improve reference tracking in mobile robotics [49,50]. In a similar framework, Fan et al. [51] applied Q-learning to trajectory tracking and lane guidance, and Yeh and Yang [52] compared Q-learning and SARSA with programmed actions in piezoelectric control scenarios, demonstrating the ability of these techniques to adapt the PID parameters in dynamic environments without requiring explicit system models.

Despite advances in the use of deep RL for PID controller tuning, there is still a lack of systematic studies evaluating the application of classical discrete algorithms, such as tabular Q-learning, in continuous systems, especially under limited observability conditions and without explicit models. This gap has been widened by the preference for high-resolution continuous approaches, which has limited the exploration of alternatives based on discretization. However, discrete methods offer advantages in terms of simplicity, interpretability, and consequently greater ease of implementation, which are relevant for industrial applications.

In this context, classical RL algorithms can be a viable alternative, provided critical aspects such as the reward function, exploration strategy, and action-space discretization are carefully defined. These considerations are fundamental to obtaining stable and reproducible policies. On this basis, this study revisits the role of discrete algorithms in the online tuning of PID controllers and proposes a multi-agent architecture based on Q-learning. The following section describes the methodology in detail and the proposed validation process, which combines episode training, specific reward functions, and a framework for evaluating training processes for transparent and interpretable algorithms.

3. Methodology

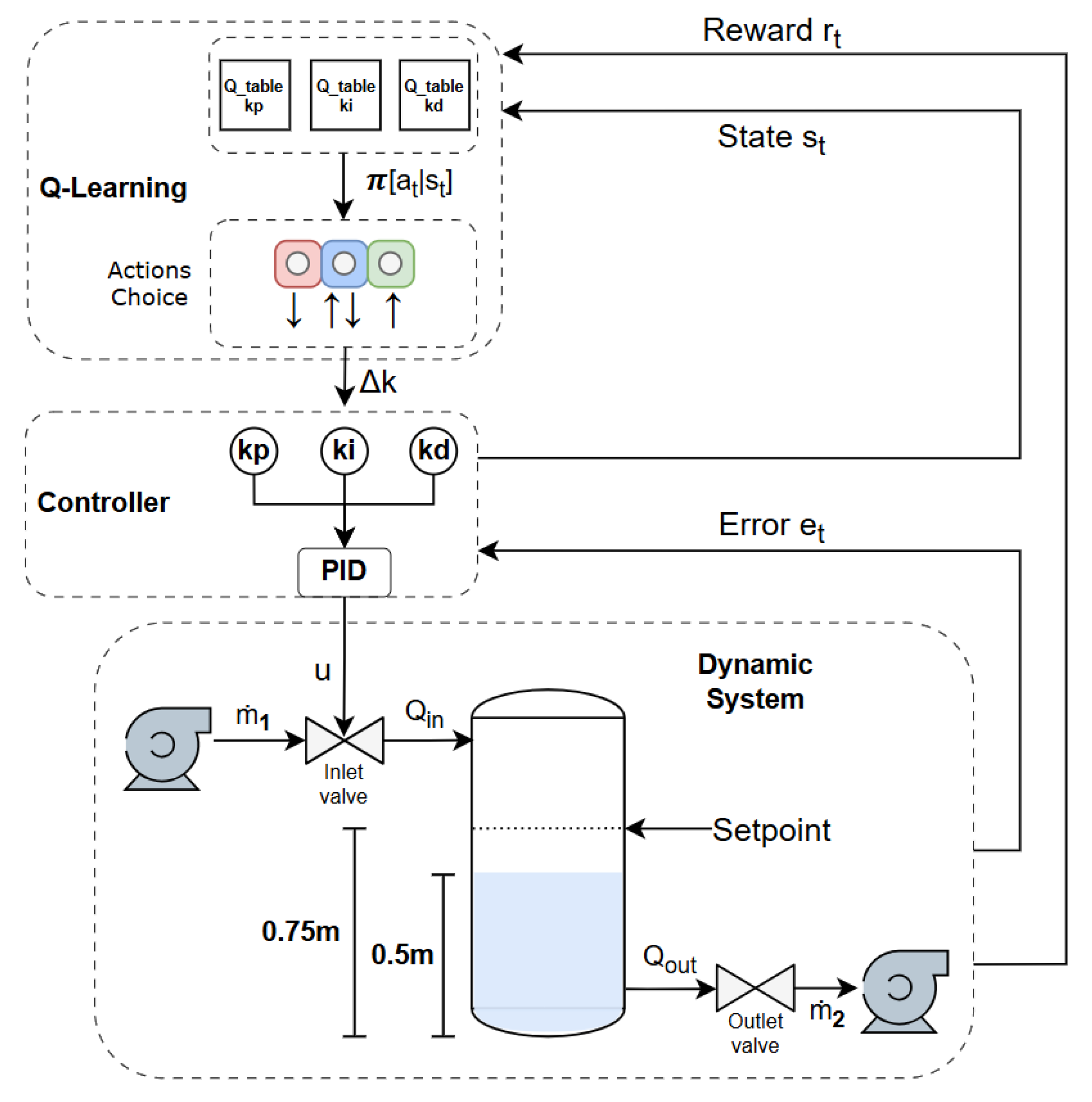

An experimental computational methodology based on episode-based simulation is adopted to enable the empirical evaluation of continuous dynamic control systems and to conceptually validate an integrated framework that combines a discrete RL scheme with classical PID control. The proposed approach aims to assess the feasibility of automatic gain tuning through decentralized multi-agent learning, applied to nonlinear systems of different orders.

The proposed control framework integrates a discrete multi-agent Q-learning scheme for the automatic tuning of PID controller gains in continuous dynamic systems. Each PID gain ($K_p$, $K_i$, $K_d$) is adjusted by an independent agent operating under low observability in a decentralized manner; that is, no agent directly observes the physical environment or exchanges information with other agents. The system is trained to achieve a single stabilization objective, relying solely on a global reward signal derived from the overall performance of the controlled system, without relying on explicit models or agent-specific reward functions.

3.1. State-Action Space

As described above, the state space of each agent is not defined by the physical variables of the dynamic system, but by the discrete set of possible values for its corresponding PID gain. The action space is the same for all agents and consists of three discrete options: decrease, maintain, or increase the current gain value. Actions that modify the gain produce a fixed change (the delta gain), which determines the granularity of the state-space discretization, and the resulting gain is limited to an appropriate range. This design directs each agent to explore its gain space in search of combinations that simultaneously maximize the overall reward, thus indirectly identifying an effective control policy.
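The state-action design described above can be sketched as follows, assuming each gain is restricted to a uniform grid between a lower and an upper bound. The helper names `make_gain_grid` and `apply_action`, and the example bounds, are hypothetical and not taken from the paper.

```python
import numpy as np

# Action indices 0, 1, 2 map to: decrease, maintain, increase.
MOVES = (-1, 0, +1)


def make_gain_grid(k_min, k_max, delta):
    """Discrete gain levels from k_min to k_max in steps of `delta`.
    Each level is one state of the agent that owns this gain."""
    n_levels = int(round((k_max - k_min) / delta)) + 1
    return k_min + delta * np.arange(n_levels)


def apply_action(state_idx, action_idx, n_states):
    """Move one grid step according to the action, saturating at the limits."""
    return int(np.clip(state_idx + MOVES[action_idx], 0, n_states - 1))


if __name__ == "__main__":
    grid = make_gain_grid(0.0, 10.0, 0.5)      # assumed range and delta gain
    idx = apply_action(5, 2, grid.size)        # "increase" from level 5
    print(grid[5], "->", grid[idx])
```

Under this layout the Q-table of one agent has shape `(grid.size, 3)`, so halving the delta gain roughly doubles the table size for that agent.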

3.2. Training Plan

Training is structured into independent episodes with a fixed maximum duration. Each episode begins with the dynamic system in a perturbed state; all system variables are reset to predefined initial conditions, whereas the agents' learned Q-values are retained and accumulate across episodes. Within each episode, time is discretized into fixed-duration decision intervals. At the beginning of each interval, every agent selects an action to update its respective gain. The selected gains remain fixed during that interval while the PID controller computes the control signal based on the current system error. The resulting control action is applied to the environment; the system dynamics are simulated by numerically integrating the corresponding differential equations, and the new environment state is obtained. The reward at each time step is accumulated and used to update the agents' Q-tables, allowing them to evaluate the cumulative impact of their actions before making subsequent decisions. Episode termination occurs when any of the following conditions is met:

1. The maximum episode duration is reached.

2. The system achieves stabilization according to predefined control thresholds.

3. The deviation limits of the environment are exceeded.

If the episode ends prematurely, the final Q-value update excludes a temporal difference term, since no successor state exists; only the reward obtained up to that point is considered.

The learning process follows an off-policy framework that employs an $\varepsilon$-greedy exploration strategy with adaptive decay, enabling a gradual shift from exploration to exploitation as training progresses. Initially, a high $\varepsilon$ promotes active exploration of the reward space, while its gradual reduction progressively favors the exploitation of learned policies. Similarly, the learning rate $\alpha$ is adaptively decayed to stabilize Q-table convergence and to reduce sensitivity to recent observations during later stages of training.
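A minimal sketch of this exploration scheme is given below. The text does not specify the decay law, so a multiplicative decay with a lower bound is assumed here purely for illustration; the function names `epsilon_greedy` and `decayed` and all numeric values are hypothetical.

```python
import numpy as np


def epsilon_greedy(q_row, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_row)))   # explore
    return int(np.argmax(q_row))               # exploit


def decayed(value, rate, floor):
    """Multiplicative decay with a lower bound (assumed schedule)."""
    return max(floor, value * rate)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    epsilon, alpha = 1.0, 0.5
    q_row = np.array([0.0, 1.0, 0.5])          # values for decrease/maintain/increase
    for episode in range(5):
        action = epsilon_greedy(q_row, epsilon, rng)
        epsilon = decayed(epsilon, rate=0.9, floor=0.05)   # less exploration over time
        alpha = decayed(alpha, rate=0.95, floor=0.05)      # smaller updates over time
```

The same `decayed` helper serves both the exploration rate and the learning rate, applied once per episode.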

3.3. Reward Function

The reward function, a key component of the proposed control framework, is computed at each decision interval as the sum of instantaneous rewards, which are in turn defined as an additive combination of multiple criteria reflecting both control performance and efficiency. The reward design guides the learning process toward PID gain configurations that maintain system stability while minimizing undesirable control actions.

The first component is the base reward (Equation (4)), which is modeled as a weighted Gaussian function centered at the desired operating point of $n$ selected system variables. Each variable has a setpoint value, a sensitivity parameter that controls the steepness of the curve, and a relative weight.

A time penalty (Equation (5)), scaled by a proportionality constant, is applied at each step to encourage rapid stabilization and discourage excessively long episodes.

An additional penalty is introduced to discourage abrupt changes in the control signal (Equation (6)). This term is modeled as a quadratic function of the control signal variation, that is, of the difference between the control action at the current step and the previous action, multiplied by a weighting factor.

To further encourage the agent to maintain the system within a desirable operating range, a per-step bandwidth bonus is introduced (Equation (7)). This component provides a small constant reward, the bonus magnitude, whenever the observed variables remain within predefined limits of the target region. These limits are given by the lower and upper bounds of the target range for each variable in the set for which the bandwidth condition is defined.

A goal-achievement bonus is granted when the system reaches the stabilization target (Equation (8)), that is, when the target variables are within the predefined stabilization thresholds; otherwise, this bonus is set to zero.

The total reward is accumulated over $m$ time steps within each decision interval, combining all active components of the instantaneous rewards as expressed in Equation (9).
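The additive structure of the reward can be sketched as follows. The functional forms are assumptions chosen to match the verbal description (a Gaussian of the form $w\,e^{-(x - x^{*})^2 / 2\sigma^2}$, a constant per-step time cost, and a quadratic smoothness penalty); the paper's Equations (4) to (9) may use different parameterizations, and all function and parameter names are hypothetical.

```python
import math


def base_reward(values, setpoints, sigmas, weights):
    """Weighted Gaussian centered at each setpoint (assumed form)."""
    return sum(w * math.exp(-((x - x0) ** 2) / (2.0 * s ** 2))
               for x, x0, s, w in zip(values, setpoints, sigmas, weights))


def smoothness_penalty(u, u_prev, lam):
    """Quadratic penalty on the change in control signal."""
    return -lam * (u - u_prev) ** 2


def band_bonus(values, lower, upper, bonus):
    """Constant bonus while every monitored variable stays inside its band."""
    inside = all(lo <= x <= hi for x, lo, hi in zip(values, lower, upper))
    return bonus if inside else 0.0


def instantaneous_reward(values, setpoints, sigmas, weights, u, u_prev, *,
                         time_cost, lam, lower, upper, bonus,
                         goal_bonus, stabilized):
    """Sum of all reward components for one simulation step."""
    return (base_reward(values, setpoints, sigmas, weights)
            - time_cost                                  # per-step time penalty (assumed constant)
            + smoothness_penalty(u, u_prev, lam)
            + band_bonus(values, lower, upper, bonus)
            + (goal_bonus if stabilized else 0.0))


def interval_reward(step_rewards):
    """Total reward of a decision interval: sum over its m time steps."""
    return sum(step_rewards)
```

Keeping each component in its own function makes it easy to inspect how much each term contributes to the interval reward when analyzing a training run.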

3.4. Pseudocode

The core logic of the proposed training framework is summarized in Algorithm 1. The pseudocode formalizes the sequential process, from environment and agent initialization, through the episode loop and decision-interval cycle, to the Q-learning update stage, detailing the interactions among the simulation model, the PID controller, and the independent learning agents.

| Algorithm 1 Adaptive PID control in line with independent multi-agent Q-learning. |

Nomenclature: Maximum number of episodes; Physical initial conditions; Controller gains; Delta gain; Current time; Integration time step; state_config←Discretize range of respective gains; Decision counter; Actions (, , );

Cumulative reward; -greedy policy; Interval decision time; Control variable error.

|

| I. Episode Initialization: | |

| The system is set to the initial state. | |

| 1: | ▷ Initialize learning matrix in zeros for each agent |

| 2: for episode to do | |

| 3: ; ; ; | ▷ Initialize system parameters |

| 4: | ▷ Create state space with function for |

| combinations | |

| 5: for do | |

| 6: ; | ▷ Initialize state-action space from initial conditions |

| 7: | ▷ Initialize decision counter |

| II. Decision interval cycle: | |

| Maintaining PID gains during the decision interval | |

| 8: while not and do | |

| 9: | ▷ Initialize cumulative reward |

| 10: for do | |

| 11: | ▷ Select actions by strategy |

| 12: | ▷ Calculate gains actions |

| 13: | ▷ Apply limits to gains |

| 14: | ▷ Apply controller gains for interval of each agent |

| 15: | ▷ Create state for each agent |

| 16: | ▷ Calculate steps for decision interval |

| 17: for to N do | |

| 18: | ▷ Calculate PID action control |

| 19: | ▷ Calculate new state of dynamical system |

| 20: | ▷ Calculate new error of the system |

| 21: | ▷ Update time for each step |

| 22: | ▷ Calculate instantaneous reward |

| 23: | ▷ Accumulate instantaneous reward |

| 24: | ▷ Update new physical state for next iteration |

| 25: | ▷ Evaluate end of episode due to restrictions |

| or stabilization | |

| 26: if then break | |

| III. Update Q-Learning: | |

| Update using the cumulative reward | |

| 27: for do | |

| 28: | ▷ Create new state for each agent |

| 29: | |

| 30: if then | |

| 31: | ▷ Bellman TD |

| update equation | |

| 32: if not then | |

| 33: | ▷ Update decision counter |

| 34: | ▷ Update error of the system |

| IV. End of episode: | |

| 35: | ▷ Update number episode |

| 36: ; | ▷ Update training parameters |
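The structure of Algorithm 1 can be approximated in a few dozen lines of Python. The sketch below is not the authors' implementation: the first-order plant, the gain bounds, the three-action set (decrease, hold, increase one discretization level), the absolute-error reward, and all numeric constants are assumptions introduced for illustration. The episode termination checks for constraint violation or stabilization are omitted for brevity.

```python
import random

N_LEVELS = 11                  # discretization levels per gain (assumed)
GAIN_MAX = [5.0, 2.0, 0.5]     # assumed upper bounds for the P, I, D gains
ACTIONS = (-1, 0, 1)           # decrease, hold, or increase the gain index
DT, INTERVAL, T_END = 0.05, 1.0, 10.0
SETPOINT, TAU = 1.0, 1.0       # reference and plant time constant (assumed)


def gain_value(agent, idx):
    """Map a discrete gain index to a gain value for the given agent."""
    return GAIN_MAX[agent] * idx / (N_LEVELS - 1)


def select_action(q_row, eps, rng):
    """Epsilon-greedy choice over the three gain actions."""
    if rng.random() < eps:
        return rng.randrange(len(ACTIONS))
    return max(range(len(ACTIONS)), key=q_row.__getitem__)


def run_interval(x, integ, prev_err, gains):
    """Hold the PID gains fixed for one decision interval.

    Returns the new plant state, integrator, last error, and cumulative reward.
    """
    kp, ki, kd = gains
    reward = 0.0
    for _ in range(round(INTERVAL / DT)):
        err = SETPOINT - x
        integ += err * DT
        u = kp * err + ki * integ + kd * (err - prev_err) / DT
        u = max(0.0, min(u, 10.0))        # actuator saturation (assumed)
        x += DT * (-x + u) / TAU          # forward-Euler step of the plant
        prev_err = err
        reward -= abs(SETPOINT - x) * DT  # negative absolute error
    return x, integ, prev_err, reward


def train(episodes=200, alpha=0.1, gamma=0.9, eps=1.0, eps_decay=0.98, seed=0):
    """Train three independent tabular agents, one per PID gain."""
    rng = random.Random(seed)
    q = [[[0.0] * len(ACTIONS) for _ in range(N_LEVELS)] for _ in range(3)]
    for _ in range(episodes):
        x, integ, prev_err = 0.0, 0.0, SETPOINT
        state = [N_LEVELS // 2] * 3       # each agent's state: its gain index
        t = 0.0
        while t < T_END:
            acts = [select_action(q[i][state[i]], eps, rng) for i in range(3)]
            nxt = [min(N_LEVELS - 1, max(0, state[i] + ACTIONS[acts[i]]))
                   for i in range(3)]
            gains = [gain_value(i, nxt[i]) for i in range(3)]
            x, integ, prev_err, r = run_interval(x, integ, prev_err, gains)
            for i in range(3):            # independent TD update per agent
                target = r + gamma * max(q[i][nxt[i]])
                q[i][state[i]][acts[i]] += alpha * (target - q[i][state[i]][acts[i]])
            state = nxt
            t += INTERVAL
        eps = max(0.05, eps * eps_decay)  # decay exploration after each episode
    return q
```

Calling `train()` returns one Q-table per gain, indexed as `q[agent][gain_index][action]`. All three agents receive the same cumulative interval reward, which mirrors the shared-reward, independent-learner structure described above.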

5. Discussion

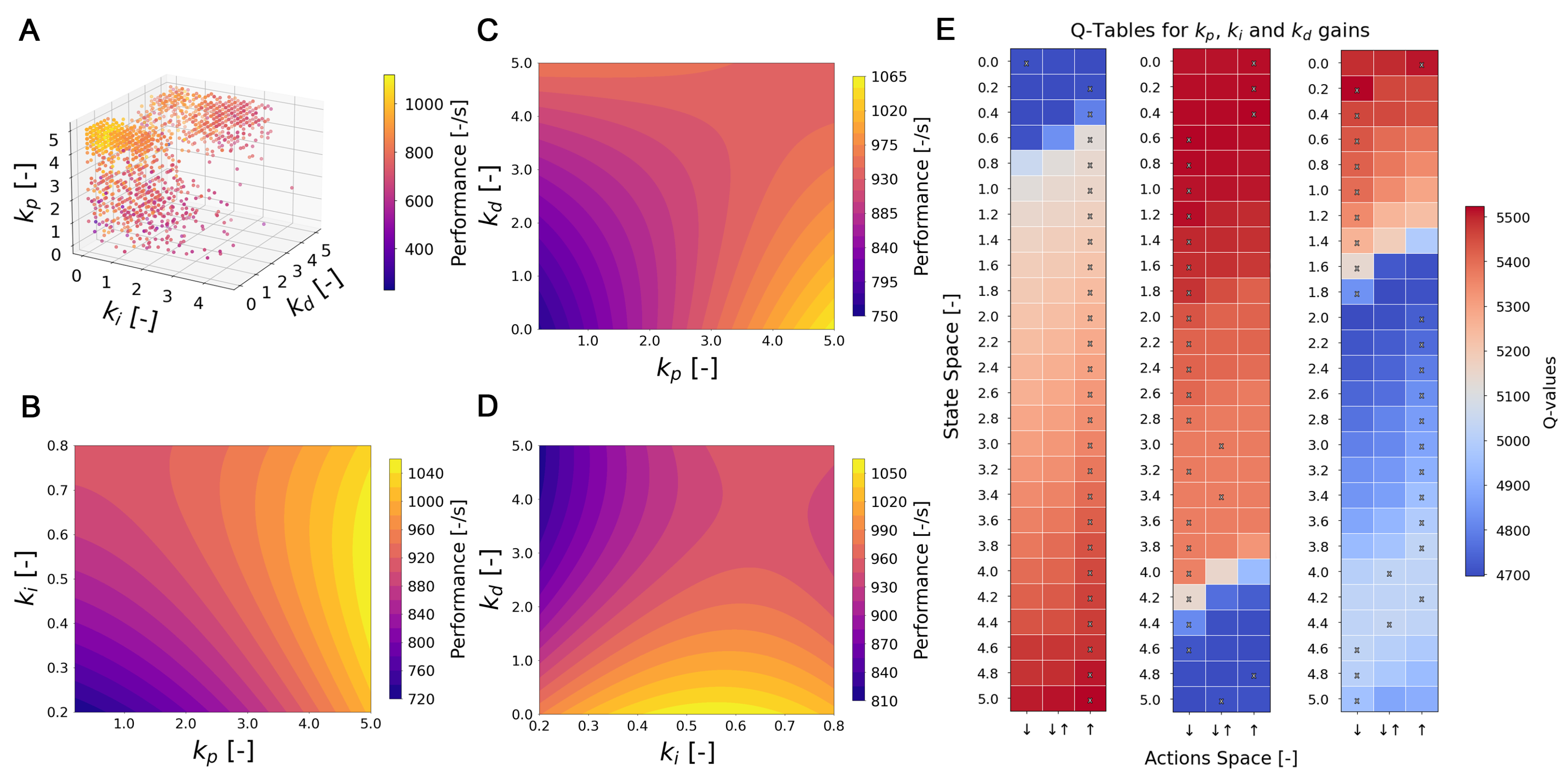

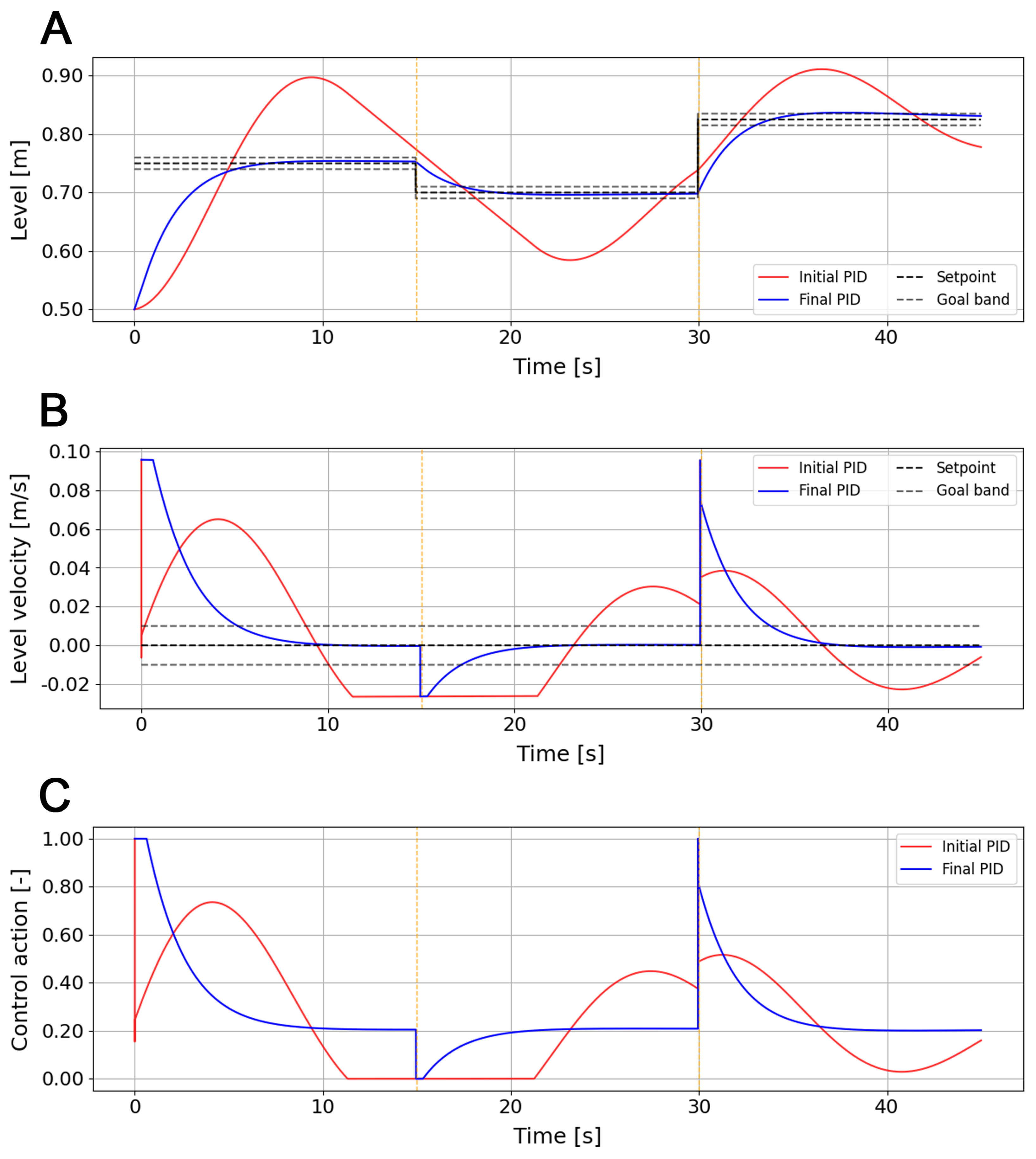

The results confirm the feasibility of a multi-agent tabular scheme for online PID tuning under limited observability conditions. The findings of the proposed framework provide insight into the mechanism through which agents explore the gain space, converge toward reproducible configurations, and respond to reward function design.

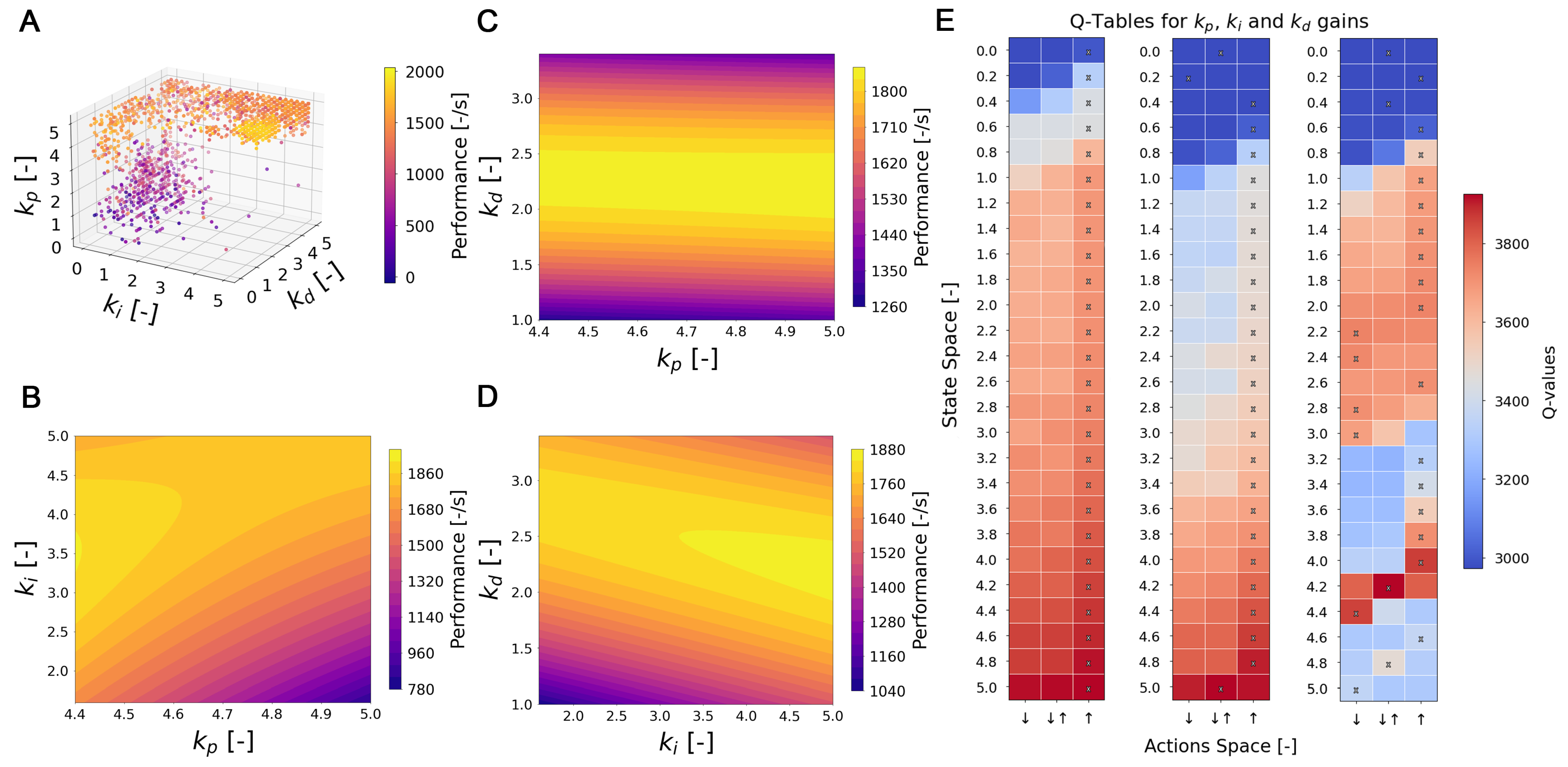

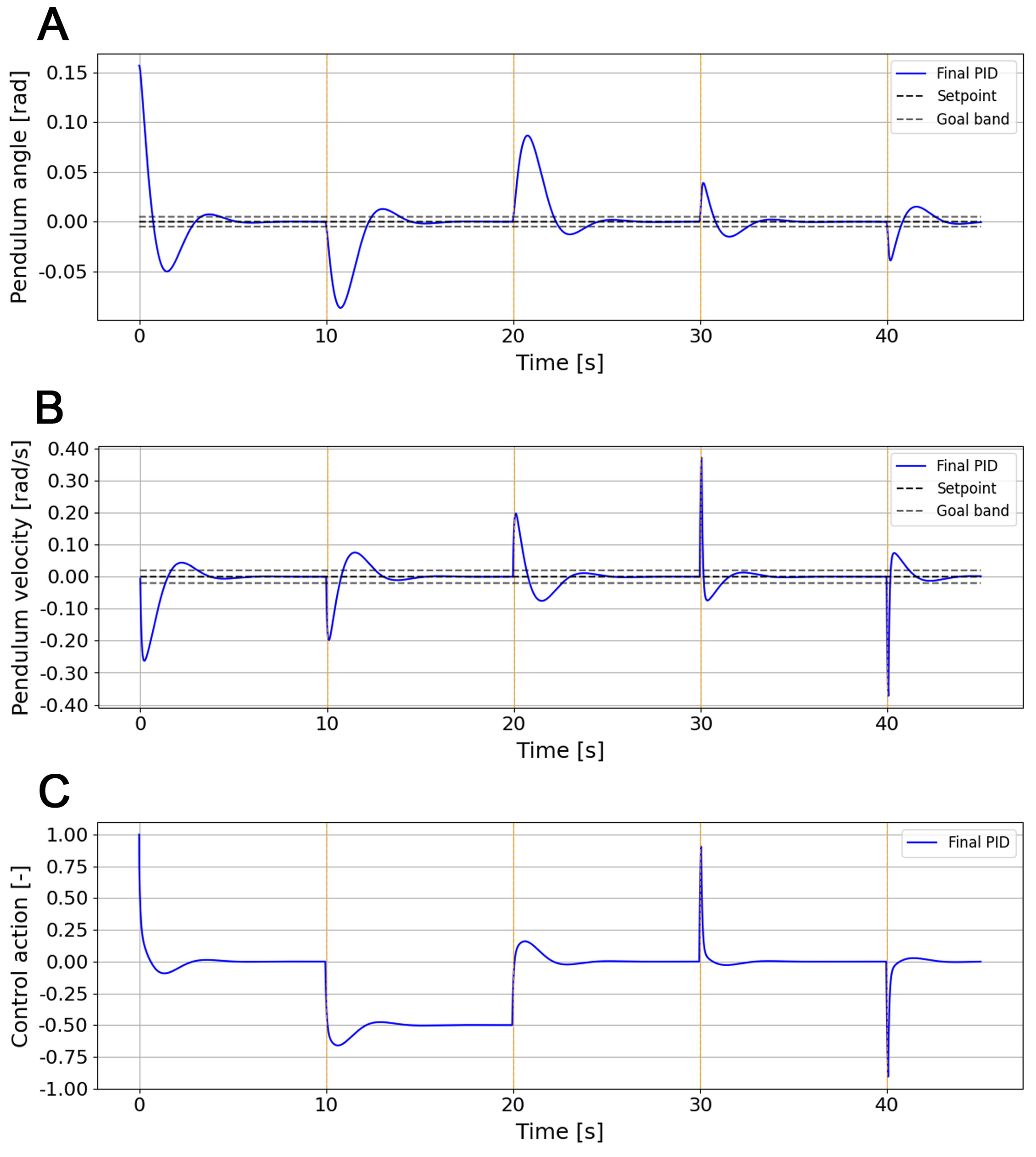

Regardless of whether aggregate performance could be improved through finer-grained gain adjustments, the learned gain patterns align with the dynamic structure of each process. In the case of water-tank leveling, the predominance of high proportional gain values accompanied by reduced integral and derivative gains reflects the nature of a first-order system, where proportional action is sufficient to achieve a stable and rapid response without the need for significant integral or derivative actions. This pattern contrasts with the cart-pole, an inherently unstable system that requires high and gains and an intermediate level of to effectively compensate for sustained error and moderate oscillations. These gains were obtained consistently (given the compact stability regions), demonstrating the agent’s ability to adapt to the specific dynamic requirements of each process.

As Jingliang Duan et al. [53] point out, the design of the reward function is a key factor in the stability and efficiency of learning. Poorly balanced rewards can induce undesirable behaviors, while carefully crafted functions facilitate more efficient learning trajectories and consistent results. Two operational design choices proved both decisive and scalable. The first is the penalty for abrupt control action: in the case of the tank, it regulated the effective influence of the derivative term, since its activation led to a brief initial saturation followed by stable valve behavior. Without the penalty, the agent tended to oversize the derivative gain, generating undesirable initial oscillations. The second is the composition of the reward in the cart-pole: even when receiving only the angular error as feedback, the learned controller stabilized the pendulum and stopped the cart because of the reward design (Gaussian component on cart speed, operating bands, and goal bonus), internalizing implicit objectives without additional variables.

Additionally, the feasibility of the approach is further supported by an analysis using fixed gains extracted from the converged Q-tables: with the same gains, the controller maintains its stabilizing capacity for references close to the training setpoint in both systems. Although extrapolation to targets or operating conditions far from the training range is not guaranteed, this suggests that the learned policies are not restricted to the training reference signal. Preserving this capability of the PID algorithm may be advantageous in more complex designs.
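The text does not specify how the fixed gains are read out of the converged Q-tables. One plausible procedure, sketched below under that caveat, is to follow each agent's greedy policy from a starting gain index until the index stops changing or repeats. The function name, the three-action layout, and the toy Q-table are hypothetical.

```python
def greedy_fixed_gain(q_table, gain_levels, start_idx, actions=(-1, 0, 1)):
    """Follow the greedy policy until the gain index settles or revisits a state.

    q_table[idx][a] holds the value of action a at gain index idx.
    Returns the gain value at the index where the walk stops.
    """
    idx, visited = start_idx, set()
    while idx not in visited:
        visited.add(idx)
        row = q_table[idx]
        best = max(range(len(actions)), key=row.__getitem__)
        nxt = min(len(gain_levels) - 1, max(0, idx + actions[best]))
        if nxt == idx:      # "hold" or a clipped move: the gain has settled
            break
        idx = nxt
    return gain_levels[idx]


# Toy table: indices 0 and 1 prefer "increase", 2 prefers "hold", 3 "decrease".
levels = [0.0, 1.0, 2.0, 3.0]
toy_q = [[0, 0, 1], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
fixed = greedy_fixed_gain(toy_q, levels, start_idx=0)
```

Applying the same walk to each agent's table yields one fixed gain per PID term, which can then be tested against references near the training setpoint.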

Finally, it should be noted that the test environments are idealized, with perfect actuators and no internal or external disturbances, so scalability to more complex scenarios requires additional validation. Likewise, scaling the proposed framework requires addressing several design challenges that could directly affect system stability and convergence. On the one hand, the design of the state-action space as a discretization of gains is a limitation that affects both the identification of the state of the dynamic system itself and the fine-tuning of the PID. A single-gain state representation was adopted to maximize interpretability and maintain the system’s modular applicability. Although closed-loop feedback carries environmental information, the environment remains only partially observable to the agent, limiting context-dependent decisions in the event of large setpoint changes or unseen disturbances. Within the considered operating range, the readjustment procedure remains effective; however, extending the generalization would benefit from a hybrid state that augments discretized gains with critical loop variables, the inclusion of other agents that handle the context for adaptive control objectives, or adaptive discretization of gains [49]. Care should also be taken when selecting the gain-change step size, since, together with the decision cadence, it imposes a resolution-efficiency trade-off that depends on both the sensitivity and the adjustment requirements of the system dynamics (longer windows for slow processes, immediate corrections for fast ones). On the other hand, hyperparameters such as the reward, exploration rate, learning rate, and their decay schedules must be carefully tuned according to the learning capacity and multi-agent configuration to avoid severe non-stationarity issues. These aspects represent key challenges that must be addressed before implementing the framework in more complex industrial applications or scaling it to higher-dimensional control problems.
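To make the notion of a decay schedule concrete, the sketch below shows a per-episode exponential decay with a lower floor, applied to both the exploration rate and the learning rate. The exponential form, the function name, and every numeric value are assumptions for illustration; the schedules actually used in the study may differ.

```python
def exponential_decay(initial: float, rate: float, episode: int, floor: float) -> float:
    """Return initial * rate**episode, never dropping below `floor`."""
    return max(floor, initial * rate ** episode)


# Assumed settings: exploration decays faster than the learning rate.
schedule = [
    (ep,
     exponential_decay(1.0, 0.5, ep, floor=0.05),   # exploration rate
     exponential_decay(0.5, 0.9, ep, floor=0.01))   # learning rate
    for ep in range(0, 11, 5)
]
```

A floor keeps some residual exploration and learning late in training, which matters in the multi-agent setting because each agent's environment keeps shifting while the other agents still adapt.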

6. Conclusions

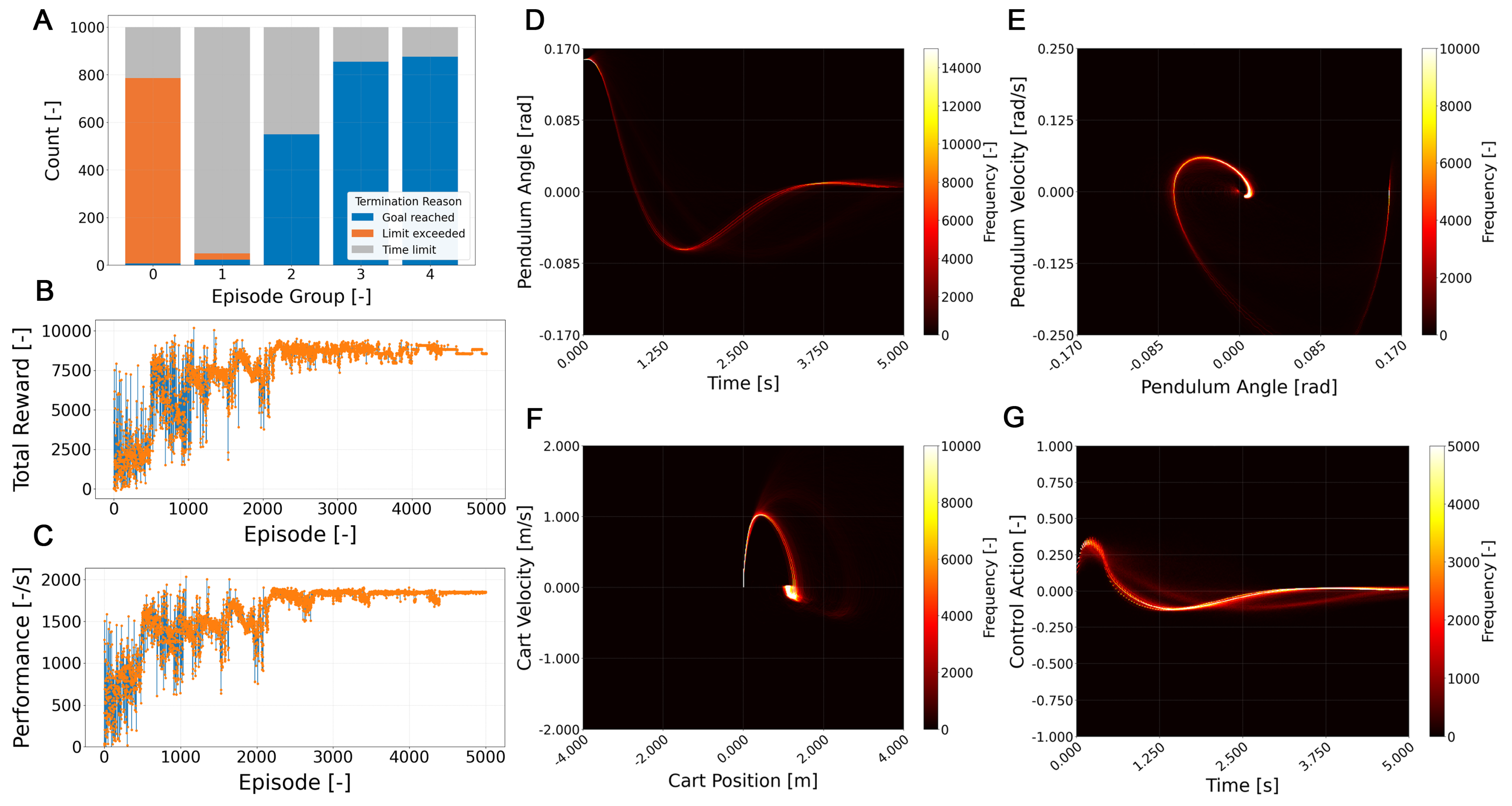

The results demonstrate that the proposed multi-agent tabular Q-learning framework effectively performs online PID tuning under limited observability. In the water-tank control problem, the first signs of convergence appeared around 300 episodes, reaching full stability after approximately 2500–3000 episodes, where each of the last two groups of 1000 episodes consistently achieved success rates above , maintaining a mean performance deviation below . Over the complete 5000 episode training, of the runs reached the goal condition, while 50.4% ended due to time limit. In the cart-pole system, initial convergence emerged near 500 episodes and was consolidated around 2000–2500 episodes, where each of the last two groups of 1000 episodes exceeded successful stabilizations, with performance fluctuations below . Across the full training horizon, of episodes were successful, failed due to exceeding physical limits, and terminated by time limit. These results confirm that independent agents can learn reproducible and stable gain configurations capable of sustaining continuous control without explicit process models.

The proposed framework provides a transparent and interpretable foundation for adaptive control, where the learning process can be examined directly in terms of PID gain evolution and the resulting control behavior. Its modular multi-agent design allows each component to operate independently, facilitating scalability toward more complex environments and enabling integration with analog PID architectures commonly used in industry. A key outcome of this study is the demonstrated influence of the reward function as a guiding mechanism for coordinated exploration, ensuring both stability and the emergence of desirable implicit behaviors such as minimizing control effort and maintaining safe operating ranges. This interpretability, together with the discrete and low-complexity nature of the scheme, positions the approach as a practical and scalable alternative for deployment in industrial environments requiring transparency, reliability, and explainable reinforcement learning control.

The evidence obtained not only confirms its feasibility but also highlights its potential as a research avenue for broadening the scope of RL applications in automatic control. Future work should focus on extending this framework toward more robust multi-agent systems capable of addressing intelligent adaptive control in complex industrial scenarios involving multiple controllers, strongly nonlinear dynamics, and diverse disturbance conditions. In addition, special attention should be paid to the design of advanced reward mechanisms, as the reward structure, together with fixed-gain decision intervals, proved essential in mitigating non-stationarity by allowing agents to perceive quasi-stationary environments. Therefore, in subsequent studies, it would be interesting to further investigate how these mechanisms contribute to stability, explore reward configurations and multi-objective formulations, and evaluate the impact of reducing observable parameters on convergence. Finally, assessing the feasibility and computational cost of the proposed approach on various integrated control platforms will be an essential step toward its practical implementation and validation under real operating conditions.