Abstract

The real estate industry, known for its complexity and exposure to systemic and idiosyncratic risks, requires increasingly sophisticated investment risk assessment tools. In this study, we present the Real Estate Construction Investment Risk (RECIR) model, a machine learning-based framework designed to quantify and manage multi-dimensional investment risks in construction projects. The model integrates diverse data sources, including macroeconomic indicators, property characteristics, market dynamics, and regulatory variables, to generate a composite risk metric called the total risk score. Unlike previous artificial intelligence (AI)-based approaches that primarily focus on forecasting prices, we incorporate regulatory compliance, forensic risk assessment, and explainable AI to provide a transparent and accountable decision support system. We train and validate the RECIR model using structured datasets such as the American Housing Survey and World Development Indicators, along with survey data from domain experts. The empirical results show the relatively high predictive accuracy of the RECIR model, particularly in highly volatile environments. Location score, legal context, and economic indicators are the dominant contributors to investment risk, which affirms the interpretability and strategic relevance of the model. By integrating AI with ethical oversight, we provide a scalable, governance-aware methodology for analyzing risks in the real estate sector.

Keywords:

real estate; risk management; artificial intelligence; investment decisions; machine learning; explainable artificial intelligence MSC:

90B50

1. Introduction

The real estate sector is shaped by interdependent forces—macroeconomic volatility, regulatory change, and asset-level characteristics—that interact in nonlinear and often unpredictable ways. Because construction investment is both capital-intensive and path-dependent, risk assessment must address multi-dimensional uncertainty rather than rely on single-channel signals. Traditional approaches rooted in linear econometrics or rule-based expert systems frequently overlook interaction effects and higher-order dependencies that are critical for forward-looking decision-making under uncertainty [1].

Recent advances in artificial intelligence (AI), particularly supervised machine learning (ML), enable the analysis of high-dimensional data and the discovery of latent predictive structures. Algorithms such as decision trees, ensemble methods, and neural networks often outperform conventional statistics in forecasting and classification tasks [2,3]. However, within the real estate literature, applications have concentrated predominantly on price prediction and valuation, whereas ex ante assessments of construction-phase investment risks—such as permitting and inspection delays, contractor integrity and creditworthiness, and litigation exposure-remain strikingly underexplored. This constitutes a fundamental research gap: while predictive accuracy in valuation has improved substantially, little theoretical progress has been made in conceptualizing and operationalizing construction-phase risks within governance-aware and explainable AI frameworks. Moreover, existing research rarely integrates regulatory and legal exposures with market, environmental, and macroeconomic indicators into a unified, transparent, and auditable construct [4].

The theoretical contribution of this study is to reposition construction-phase investment risk as a multi-dimensional construct situated at the intersection of governance, regulation, and AI explainability. Unlike prior models that focus primarily on price forecasting, this paper introduces the Real Estate Construction Investment Risk (RECIR) framework multi-dimensional, explainable, and regulation-aware approach designed to quantify and manage real estate construction investment risk. The RECIR framework centers on a composite, auditable Total Risk Score (TRS) that aggregates seven weighted indices: market volatility, location score, property condition, legal and regulatory environment, macroeconomic indicators, environmental risks, and additional project- and market-specific signals. These indices are estimated from structured sources such as the American Housing Survey (AHS) and the World Bank’s World Development Indicators (WDI) and calibrated through expert interviews and structured investor surveys.

Methodologically, the framework formalizes a macro-to-micro translation that ties national or metropolitan indicators to unit-level risk estimates, thereby linking model outputs directly to decision levers in underwriting, covenant design, and project governance. Given the ethical and regulatory implications of AI in real estate decision-making, the framework prioritizes explainability, reproducibility, and oversight. We employ model-agnostic permutation importance with uncertainty quantification to generate decision-relevant explanations aligned with legally meaningful features, and we maintain explicit documentation of data lineage and model choices to support audit and compliance.

Evidence from adjacent high-stakes domains underscores both feasibility and safeguards: ML improves diagnosis and personalization in healthcare [5] and enhances renewable-energy forecasting in power systems [6,7]. Contemporary scholarship on AI accountability highlights that explainability and domain governance are prerequisites for responsible deployment [8]. Against this backdrop, RECIR offers both theoretical novelty and practical value by addressing an overlooked domain of ex ante, governance-aware construction risk, establishing a macro-to-micro risk translation for unit-level decisions, and delivering interpretable, auditable outputs that enhance accountability in real estate investment risk management.

2. Literature Review

Research on artificial intelligence (AI) in real estate has expanded considerably in recent years, yet the field remains fragmented and heavily valuation oriented. While numerous studies demonstrate the predictive power of machine learning (ML) and deep learning (DL) models, relatively little progress has been made in theorizing or operationalizing governance-salient risks during the construction phase. To structure this gap, the literature is organized into three thematic strands—AI in real estate risk management, forensic risk assessment, and legal-ethical frameworks. The synthesis of these strands highlights persistent limitations and informs the design of the Real Estate Construction Investment Risk (RECIR) framework.

2.1. Integration of AI into Real Estate Risk Management

The application of AI has reshaped real estate modeling. Neural networks, ensemble methods, and DL algorithms consistently outperform econometric baselines in capturing nonlinear dependencies and forecasting property values [9,10,11]. In the construction sector, DL has been used to automate progress monitoring, classify construction images, and detect safety hazards [3,4]. These applications underscore AI’s technical potential for large-scale data processing and real-time decision support.

Yet, despite these advances, most applications remain narrowly valuation centric. Housing price prediction and asset appraisal dominate the field, while ex ante construction-phase risks are rarely operationalized as measurable indices. Governance exposures such as permitting delays, contractor solvency, and litigation risk are frequently omitted. Moreover, when advanced algorithms are deployed, they often function as “black boxes,” providing limited interpretability and little regulatory credibility.

Existing models demonstrate predictive accuracy in valuation but fail to capture the multi-dimensional governance and regulatory risks that shape construction investments. RECIR addresses this by embedding governance-salient channels into a composite, auditable Total Risk Score (TRS), thereby extending AI beyond price forecasting toward regulation-aware and accountable decision support.

2.2. AI-Driven Forensic Risk Assessment

Artificial intelligence has increasingly shaped forensic analytics in finance and real estate. Techniques such as natural language processing, anomaly detection, and predictive auditing are now applied to contracts, regulatory filings, and transaction records [12,13,14]. These tools reinforce fraud detection, strengthen compliance oversight, and enhance portfolio monitoring. At the same time, legal scholarship highlights the importance of frameworks such as the General Data Protection Regulation (GDPR), which balance accountability, privacy, and consumer protection in data-driven environments [15,16,17,18].

Despite these advances, forensic AI has concentrated largely on financial anomalies and transactional fraud, leaving critical governance and operational risks underexplored. Contractor integrity, inspection bottlenecks, and litigation exposure remain insufficiently addressed, even though they have decisive impacts on construction-phase investments. Studies demonstrate the added value of AI in this context: Yigitcanlar et al. [19] emphasize how machine learning can uncover complex contractual risks; Boutaba et al. [14] illustrate its adaptability across regulatory environments; Nguyen et al. [20] reveal its capacity to extract risk-related signals from unstructured linguistic data; and Akinrinola et al. [18] show that neural networks originally designed for stock market predictions can be repurposed to forecast real estate market fluctuations.

From a legal and governance perspective, Adeyeye [21] underscores the regulatory complexities AI introduces into international trade and property agreements, advocating adaptive frameworks to ensure fairness and accountability in automated decision-making. Haimes et al. [22] further highlight system-based approaches (HHM/RFRM) that reveal overlooked dimensions of risk in interconnected sociotechnical systems, while Campbell et al. [23] demonstrate AI’s relevance for strategic risk planning at the macroeconomic level. Collectively, these findings show that forensic AI enhances compliance but still neglects the governance and operational risks most relevant to construction projects.

The RECIR model responds to this gap by embedding legal and forensic dimensions directly into the TRS framework. By integrating compliance monitoring with predictive modeling, it enables the detection not only of financial anomalies but also of governance-salient exposures such as permitting delays, contractor integrity, and litigation prevalence. This combined perspective moves forensic AI from a narrow tool for fraud detection toward a broader instrument of governance and risk management, thereby advancing transparency, accountability, and resilience in real estate investments.

2.3. Legal and Ethical Considerations

A third stream of scholarship underscores the normative foundations governing the deployment of artificial intelligence in real estate and related sectors. Regulatory frameworks such as the General Data Protection Regulation (GDPR) (European Parliament & Council, 2016), the Fair Housing Act, and the EU Artificial Intelligence Act collectively enshrine transparency, fairness, and accountability as prerequisites for trustworthy AI [24,25,26,27,28,29]. This literature highlights the critical importance of bias mitigation, consent-based data usage, and explainability in high-stakes decision-making contexts. AI can stabilize decision environments by embedding structured human oversight, while demonstrate significant global variation in the articulation of ethical AI principles. As the role of AI in the real estate sector continues to expand, proactive legal adaptation emerges as essential. Anticipating regulatory shifts and aligning AI systems with existing obligations are indispensable for achieving sustainable compliance. In this respect, Nannini et al. [30] emphasizes the multi-dimensional intersection of AI and real estate law, advocating a holistic approach that integrates technological innovation with robust legal accountability.

Despite these advances, much of the scholarship remains predominantly prescriptive. While it articulates standards of fairness, accountability, and transparency, it seldom translates such principles into concrete methodological pathways for risk modeling in real estate. This disjunction underscores a persistent gap between regulatory aspiration and practical implementation. Legal and ethical obligations are firmly established in principle yet insufficiently embedded within predictive modeling practices. Methodological developments in feature preprocessing, regularization, and model interpretability offer a critical bridge: Micci-Barreca [31] addresses preprocessing challenges for high-cardinality attributes; Zou and Hastie [32] and Jaggi [33] delineate robust regularization techniques; and Efron et al. [34] together with Hastie et al. [35] provide foundational contributions to statistical learning. Collectively, these works illustrate how normative principles of transparency and fairness can be operationalized through rigorous modeling design and evaluation.

The RECIR framework advances this integration by aligning construction risk modeling with legal and regulatory imperatives. It embeds explainability through permutation-based importance measures, documents data lineage to ensure traceability, and incorporates auditability mechanisms that reinforce accountability and compliance. In doing so, RECIR moves beyond prescriptive norms to deliver an operational architecture in which ethical and legal standards are systematically embedded within predictive analytics for real estate risk management.

2.4. Closing Synthesis

Collectively, these three streams reveal progress but also persistent fragmentation. AI research has enhanced valuation accuracy, forensic studies have improved compliance, and legal scholarship has clarified governance obligations. Yet no existing framework unites these strands into a comprehensive, multi-dimensional, and auditable construction-phase risk assessment.

RECIR offers this synthesis. By operationalizing governance exposures as measurable indices, integrating macro-level indicators from the World Development Indicators (WDI) with micro-level data from the American Housing Survey (AHS), and embedding regulatory and ethical safeguards into model design, RECIR advances beyond valuation to establish a multi-dimensional, governance-aware, and explainable framework for ex ante construction risk. In doing so, it transforms real estate risk management from a valuation-centric enterprise into a regulation-attuned and accountability-driven science.

3. Methodology

The methodological design of this study is built upon three core pillars: (i) the selection and operationalization of risk features, (ii) the development of the Real Estate Construction Investment Risk (RECIR) framework with its composite Total Risk Score (TRS), and (iii) the integration of predictive modeling with survey- and interview-based evidence. Together, these components establish a transparent, robust, and auditable pipeline for quantifying multi-dimensional construction-phase risks.

3.1. Feature Selection and Operationalization

Feature selection followed a structured, theory-informed process. Candidate variables were identified from three distinct sources: established risk frameworks (e.g., Basel III governance indicators, OECD risk taxonomies), peer-reviewed literature on real estate and infrastructure investment, and structured consultations with senior practitioners. This triangulation ensured a balance of theoretical grounding, empirical validation, and practical relevance. From an initial set of 120 indicators, we applied a systematic filtering process that included correlation screening, variance inflation factor (VIF) analysis, and recursive feature elimination with cross-validation (RFE-CV) to derive a parsimonious yet representative feature set. The final selection comprises seven weighted indices: market volatility, location score, property condition, legal and regulatory environment, macroeconomic indicators, environmental risks, and project-specific governance signals.

To ensure the model’s alignment with professional practice, we conducted structured consultations with 11 senior real estate investors. Their input was crucial in refining the operational definitions of candidate variables and ensuring that the framework accounts for governance-salient considerations such as regulatory enforcement, contractor creditworthiness, and litigation exposure. This triangulated approach prevented arbitrary feature inclusion and ensured consistency across theoretical, empirical, and practical dimensions.

Formally, let X = {x1, x2, …, xp} denote the set of candidate predictors. The feature selection procedure identified the optimal subset X∗ that maximizes a composite objective function:

X = arg maxS ⊆ X f(S)

The function f(S) balances three criteria: (i) theoretical grounding in established risk frameworks, (ii) empirical validation through prior studies and statistical performance, and (iii) relevance to governance and investor practice, as established via expert consultations. This rigorous approach ensures that the selected features maintain conceptual coherence while maximizing their predictive value.

3.2. Mixed-Methods Design: Structured Surveys and Expert Interviews

The RECIR framework employs a mixed-methods design that integrates structured surveys with expert interviews to calibrate the seven risk indices and ensure both empirical robustness and governance relevance. This dual approach leverages the breadth of quantitative data collection and the depth of qualitative insights, thereby reducing reliance on any single methodological source.

Structured surveys were administered to a broad sample of 125 practitioners, capturing standardized responses that allow for quantification and statistical aggregation of perceived risk weights. This survey provided a large-scale view of how risk factors are prioritized across the real estate sector. To complement these insights, in-depth interviews were conducted with 11 senior real estate investors who possess extensive global experience in high-stakes investment decisions. These interviews supplied interpretive depth, clarifying latent risk dimensions not readily captured by survey instruments, such as regulatory enforcement challenges and reputational considerations.

Integration across these sources followed a methodological triangulation process, formalized as:

where wj denotes the calibrated weight for risk factor j, and α ∈ [0, 1] balances survey-based estimates against expert adjustments. Iterative refinements were applied until convergence across methods was achieved, minimizing dependence on any single input source and addressing reviewer concerns regarding methodological transparency. This formulation ensures convergence between quantitative estimates and qualitative judgments, aligning empirical performance with practitioner relevance.

wj = α⋅w^survey j + (a − 1)⋅w^expert j

The iterative integration process involved (i) consistency checks across survey and expert responses, (ii) sensitivity analyses to test the robustness of weights under varying α\alphaα values, and (iii) cross-validation against historical investment outcomes. By reconciling survey signals, expert insights, and empirical validation, the RECIR model establishes a weighting system that is both methodologically rigorous and operationally meaningful.

3.2.1. Data Sources and Index Weighting

To ensure robustness, transparency, and reproducibility, the construction of the Total Risk Score (TRS) relied on a careful integration of two complementary public data sources: the American Housing Survey (AHS) and the World Development Indicators (WDI). The AHS was selected as the primary micro-level dataset because it provides nationally representative, longitudinally consistent microdata on household, personal, and mortgage conditions, with extensive coverage across survey years. Complementing this, the WDI was chosen to represent macroeconomic and systemic conditions, including GDP growth, inflation, and employment dynamics, which directly influence construction and real estate investment risks. Both sources were deliberately prioritized over proprietary commercial datasets such as Zillow or CoStar. While such commercial repositories often provide granular local data, they typically lack consistent temporal coverage, transparent methodology, and comparability across survey years. Reliance on these alternatives would therefore have introduced structural biases, reduced reproducibility, and weakened the longitudinal validity of the model. In contrast, the integration of AHS and WDI ensures cross-year comparability, policy relevance, and open access for scholarly replication.

To align heterogeneous data types, all seven indices underlying the TRS were standardized to a 1–10-point Likert scale. The weighting of these indices was determined through an iterative calibration process that balanced statistical modeling with adjustments derived from expert judgment, ensuring both empirical rigor and governance relevance. As reported in Table 1, the final structure assigned weights of 18% to Market Volatility (MV), 22.5% to Location Score (LS), 6.3% to Property Condition (PC), 25.2% to Legal and Regulatory Environment (LR), 9.9% to Economic Indicators (EI), 8.1% to Environmental Risks (ER), and 10% to Additional Project Signals (ADPs). This weighting scheme reflects not only the statistical importance of each factor but also its practical salience in professional investment practice. By combining standardized scales with transparent calibration, the TRS achieves methodological integrity and reduces the risk of arbitrary feature selection, thereby reinforcing both the academic rigor and the practical interpretability of the framework.

3.2.2. Investor Behavior and Risk Prioritization

To complement the quantitative calibration of TRS weights, we conducted a qualitative–quantitative study with senior real estate investors to evaluate how seasoned practitioners perceive and prioritize the seven defined risk indices. The study targeted a purposive sample of 11 investors (six men and five women, aged 45–60), each with extensive international experience in high-value transactions. Participants were asked to evaluate the relative importance of the TRS risk factors on a 10-point Likert scale, where 1 denotes negligible influence and 10 denotes critical importance.

The findings reveal a consistent hierarchy of risk perception. Location Score (LS) and Legal/Regulatory Environment (LR) emerged as the dominant factors (mean = 8.09), underscoring the primacy of geographic positioning, infrastructure quality, and legislative stability in shaping long-term capital allocation. Economic Indicators (EI) followed with a mean of 7.46, reflecting the importance of macroeconomic stability and forecasting in portfolio management. Market Volatility (MV) was assessed at 5.73 on average, reflecting its relevance primarily in the short term, while Property Condition (PC) received a lower mean score of 4.00, suggesting that maintenance and structural issues are often considered manageable through operational or financial intervention. Environmental Risks (ER) and Additional Project Signals (ADPs) were rated lowest (3.18 and 3.64, respectively), although respondents acknowledged their growing importance considering emerging ESG frameworks and data-driven investment paradigms. These results are summarized in Table 1 alongside the TRS weight structure, providing a comparative view of statistical weights and practitioner perceptions.

To synthesize the results, we defined an Investment Decision Score (IDS) as the weighted aggregation of investor evaluations:

where iS denotes the mean investor score for factor i, Wi the TRS weight, and =7n the number of risk factors. Substituting the TRS weights from Table 1 and the mean survey scores yield:

IDS = ∑i = 1nWi⋅Si,IDS

IDS = (0.18 × 5.727) + (0.225 × 8.090) + (0.063 × 4.000) + (0.252 × 8.090) + (0.099 × 7.455) + (0.081 × 3.182) + (0.10 × 3.636) ≈ 6.50.IDS

This aggregate score provides a normative benchmark that integrates statistical calibration with practitioner insights. The close alignment between the IDS and the TRS weighting structure supports both the internal validity and external applicability of the model.

Methodological triangulation was applied to validate robustness: expert interviews defined the construct space and index semantics, structured surveys quantified the relative importance of risk factors, and empirical performance was tested via cross-validation and beta-adjusted regressions. Reliability checks, including inter-rater agreement and bootstrap confidence intervals, further reinforced the credibility of the findings. Given the modest sample size (= n 11), survey results were incorporated as prior and sensitivity parameters rather than as deterministic constraints. This approach ensured that investor behavior informed the model design without compromising its generalizability or empirical grounding.

3.2.3. Expert Consultation

To further ensure reliability, we calculated inter-rater agreement statistics (Cohen’s kappa), which confirmed substantial consistency among expert assessments.

3.3. Dataset Construction

The construction of the RECIR dataset followed a systematic and transparent process designed to ensure coherence, reproducibility, and alignment with the conceptual framework of the Total Risk Score (TRS). Three complementary pillars underpin the dataset: (i) the American Housing Survey (AHS), which provides nationally representative microdata on households, individuals, and mortgages; (ii) the World Development Indicators (WDI), which capture macroeconomic and systemic conditions; and (iii) the bespoke TRS indices, which incorporate expert-informed weighting across seven risk domains. Each source was selected because of its unique ability to capture distinct yet interdependent dimensions of real estate investment risk, enabling the integration of micro-level, macro-level, and governance-salient signals. Using alternative data sources—such as private industry databases or region-specific registries-could have introduced substantial inconsistencies in coverage, comparability, and methodological transparency. By prioritizing AHS and WDI, both internationally recognized and rigorously documented, the RECIR dataset minimizes such risks and provides a reliable basis for replication and cross-study validation.

3.3.1. TRS Indices

The third pillar of the dataset consists of seven risk indices-Market Volatility (MV), Location Score (LS), Property Condition (PC), Legal and Regulatory (LR), Economic Indicators (EI), Environmental Risks (ER), and Additional Project Signals (ADPs). Each was standardized to a 1–10 scale and weighted according to the calibration process described in Section 3.4. Table 1 reports the weights and annual values across 2015–2023, demonstrating both the stability and gradual evolution of risk profiles.

Table 1.

Risk indices and the calculated TRS (2015–2023).

Table 1.

Risk indices and the calculated TRS (2015–2023).

| Indices | Abbr. | Weights (wi) | Years | ||||

|---|---|---|---|---|---|---|---|

| 2015 | 2017 | 2019 | 2021 | 2023 | |||

| Market Volatility | MV | 0.180 | 4.42 | 4.98 | 5.33 | 5.64 | 6.37 |

| Location Score | LS | 0.225 | 7.65 | 7.71 | 7.98 | 8.18 | 8.55 |

| Property Condition | PC | 0.063 | 3.98 | 4.11 | 4.23 | 4.36 | 4.93 |

| Legal and Regulatory | LR | 0.252 | 7.90 | 7.99 | 8.11 | 8.27 | 8.46 |

| Economic Indicators | EI | 0.099 | 6.99 | 7.13 | 7.48 | 7.55 | 7.76 |

| Environmental Risks | ER | 0.081 | 3.17 | 3.29 | 3.37 | 3.45 | 3.77 |

| Additional Data Points Reserve | ADP | 0.100 | 3.43 | 3.50 | 3.57 | 3.64 | 3.85 |

| Total Risk Score | TRS | 6.05 | 6.23 | 6.44 | 6.61 | 6.97 | |

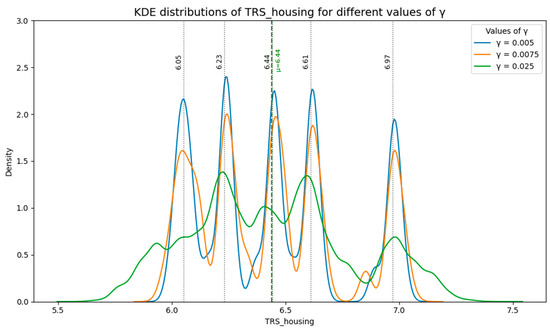

The aggregate TRS, hereafter referred to as TRS_macro, provides an annual, macro-level risk signal. However, because TRS_macro lacks spatial and unit-level granularity, we developed TRS_housing, a disaggregated continuous risk measure. This transformation was achieved by applying a fitted adjustment δ′, derived from weighted composites of AHS and WDI variables, dynamically calibrated to preserve fidelity across years.

The transformation is expressed as:

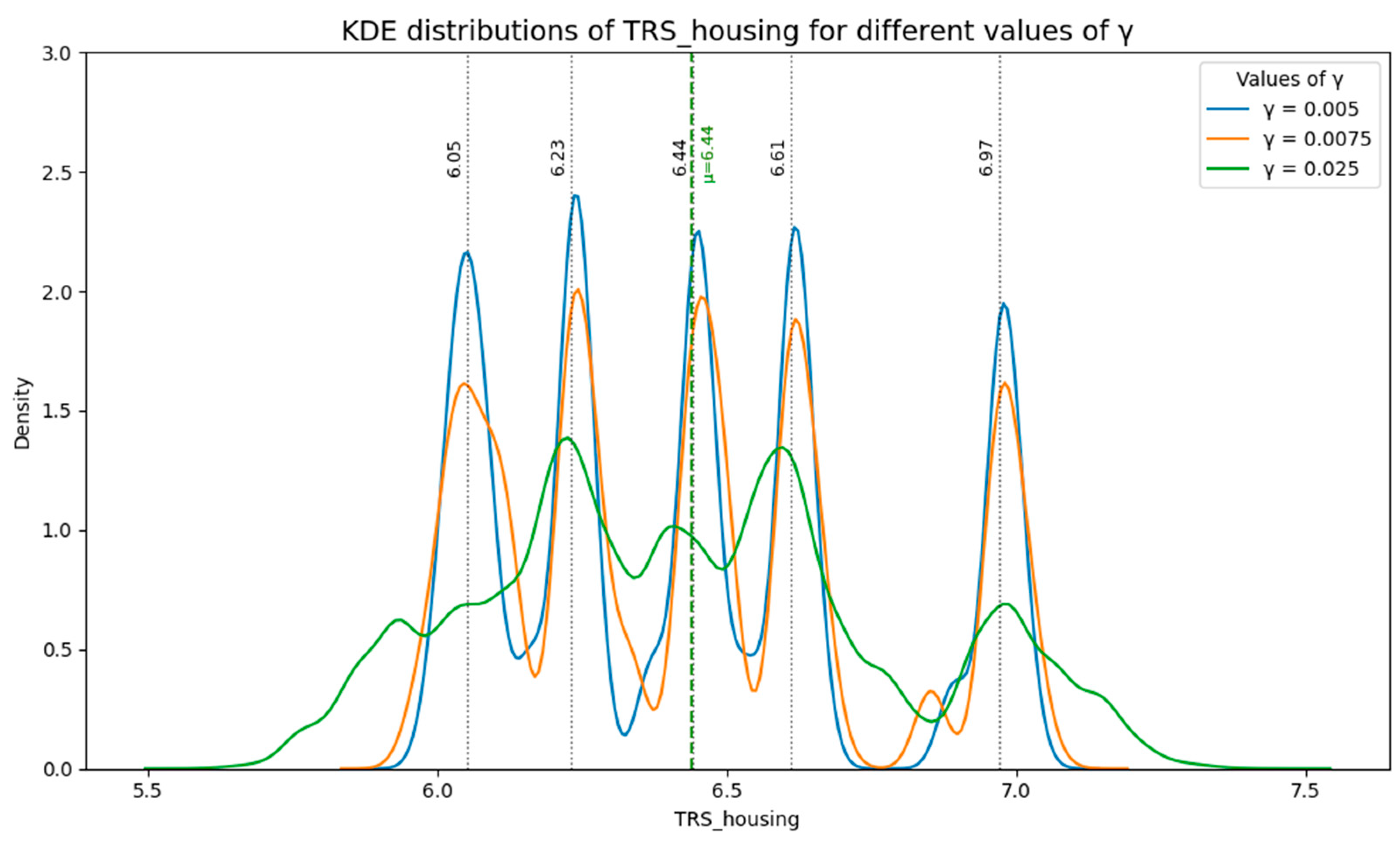

where δ′ ensures local variation across housing units while maintaining consistency with macro distributions. Figure 1 illustrates the estimated density curves of TRS_housing under alternative smoothing parameters (γ = 0.005, 0.0075, 0.020), with the optimal value of 0.0075 selected for balancing granularity and structural fidelity.

TRS_housing = TRS_macro + δ′.

Figure 1.

Estimated distribution of TRS_housing for different values of γ.

3.3.2. American Housing Survey (AHS)

The AHS, conducted biennially by the U.S. Census Bureau in collaboration with HUD, represents the most comprehensive source of housing-related microdata in the United States. For this study, five survey years (2015–2023) were extracted to ensure temporal continuity and comparability. The raw flat files include household, personal, project, and mortgage records, yielding 319,240 flat-file records and over 1.35 million detailed entries.

Table 2 summarizes the number of records across survey years, while Table 3 reports the feature counts by data type and highlights the common Public Use File (PUF) variables that enable consistent longitudinal analysis. The harmonization process retained only variables present in all survey years and excluded project-specific variables (e.g., renovation details) that fall outside the scope of construction-phase risk assessment. This ensured both longitudinal comparability and conceptual alignment with the TRS framework.

Table 2.

Number of records in the raw data.

Table 3.

Number of variables in the flat and detailed raw data by year.

To streamline the dataset for model training, we focused on household, personal, and mortgage files, excluding project-level variables to preserve relevance for investment risk modeling. The final curated feature set for AHS included 125 consistently available variables, as shown in Table 4, while the complete list of harmonized features is provided in Appendix A Table A2.

Table 4.

Variable (feature) counts by source file for model development.

Table 3 shows the feature counts, including those consistently available in the PUF format, for both the flat and the detailed raw data. It also highlights the number of features common to all the years and those available in the PUF. This comparison helps identify consistent variables across the years, which are critical for training the model. Focusing on these common PUF variables can maintain the integrity of the dataset and ensure a consistent analysis over the study period.

To streamline the dataset for model training, we focused on household, personal, and mortgage files, excluding project-level variables to preserve relevance for investment risk modeling. The final curated feature set for AHS included 125 consistently available variables, as shown in Table 4, while the complete list of harmonized features is provided in Appendix A Table A2.

3.3.3. World Development Indicators (WDI)

The WDI dataset, curated by the World Bank, provides internationally standardized time-series indicators across economic, financial, and social dimensions. For integration with the AHS, we extracted U.S.-specific indicators for 2015–2023. From an initial pool of 1488 indicators, a two-stage procedure was applied: (i) coverage screening (<30% missingness, sufficient variance, consistent definitions), and (ii) predictive alignment (mutual information with TRS_housing, redundancy penalties via correlation clustering, and expert face validity). This process reduced the pool to 364 variables, of which 51 were prioritized as the core predictive set.

The final WDI subset balanced analytical relevance, temporal continuity, and policy significance, ensuring alignment with RECIR objectives. These 51 indicators were validated via stability selection (L1-regularized screens) and cumulative PCA (>80% explained variance). The prioritized variables are detailed in Appendix A Table A3. Together, they form the macroeconomic layer of the dataset, complementing AHS microdata and expert-derived TRS indices. Had other international datasets (e.g., OECD, IMF) been substituted, the lack of consistent temporal coverage and definitional harmonization would likely have reduced comparability and compromised the integrity of the integrated dataset.

3.3.4. Dataset Assembly

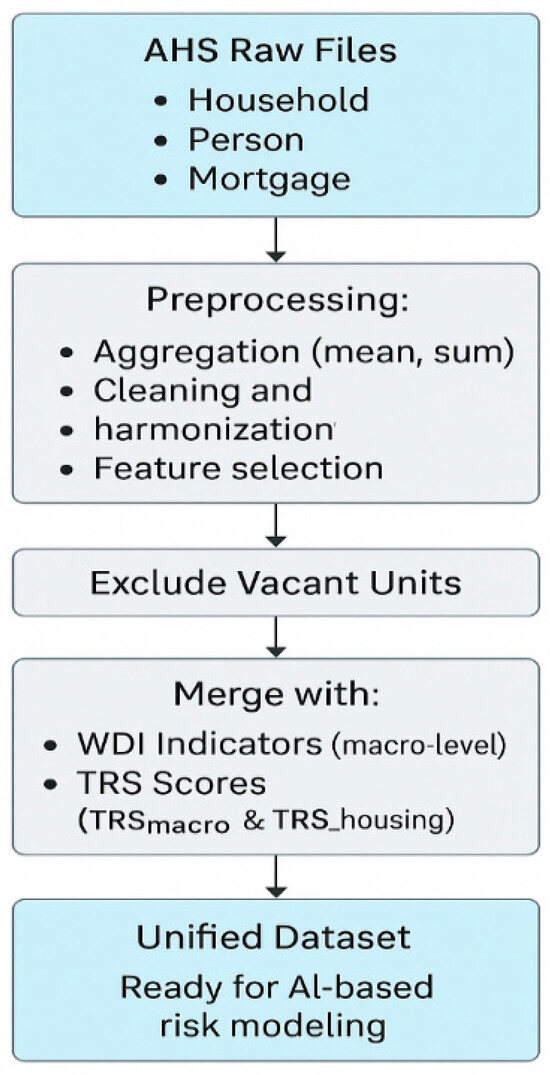

Finally, the three pillars-AHS, WDI, and TRS-were assembled into a coherent unit-level dataset by aligning variables through the survey year. Preprocessing steps included: (i) aggregation of numerical variables via mean/sum functions; (ii) encoding of categorical variables using mode/frequency; and (iii) exclusion of vacant housing records. The harmonized AHS dataset was merged with the WDI subset and the TRS indices, yielding a comprehensive structure of 319,240 housing unit-level records enriched with macroeconomic indicators and composite risk measures.

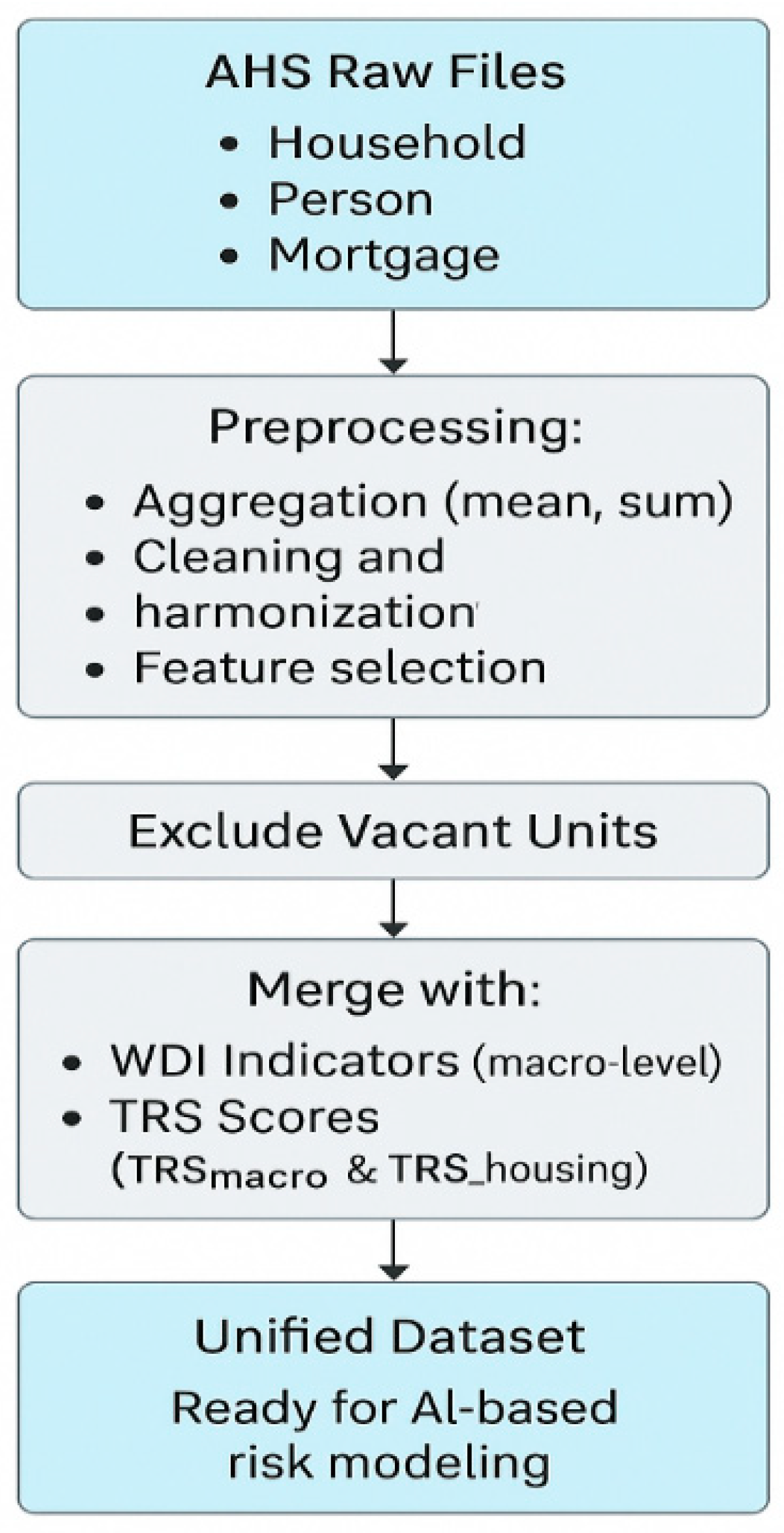

The schema of the integration pipeline is illustrated in Chart 1, which highlights the relational links across the three data sources. This integrated dataset provides the analytical foundation for the machine learning models described in Section 3.4. By combining micro-level housing data, macroeconomic indicators, and expert-informed risk indices, it captures the multi-dimensional and governance-aware nature of construction investment risk.

Chart 1.

Dataset Integration Pipeline: From Raw Inputs to Unified Analytical Dataset.

3.4. Model Development

The model development phase aimed to construct an accurate, interpretable, and governance-aware framework for estimating real estate construction investment risk at the housing-unit level. Building directly on the integrated dataset described in Section 3.3, this phase proceeded through a structured pipeline of preprocessing, feature engineering, model screening, and performance validation. Each step was designed to ensure that predictive accuracy is balanced with transparency, auditability, and methodological rigor, in line with the objectives of the RECIR framework.

3.4.1. Data Preprocessing

The integration of AHS, WDI, and TRS data presented inherent challenges of scale harmonization, coding inconsistencies, and missing data. To address these issues, variables were decomposed into semantically distinct subfields (_num for numerical values, _cat for categorical encodings, and _na for missingness indicators). Special codes (e.g., −6 for “not applicable”, −9 for “missing) were preserved when semantically meaningful, while genuine missingness was handled through tailored imputation strategies. Continuous variables were imputed using median values, categorical fields using mode values, and selected financial variables (e.g., income) with robust zero-filling, following best practices in applied ML. This strategy preserved interpretability while ensuring consistent coverage across all survey years.

3.4.2. Variable Decomposition and Cleaning

The hybrid structure of many AHS variables required systematic decomposition. Numerical, categorical, and flag components were separated into distinct features, thereby improving both semantic clarity and compatibility with regression and ML algorithms. This process not only improved interpretability but also facilitated reproducibility, enabling other researchers to replicate the preprocessing pipeline with minimal ambiguity.

3.4.3. WDI Indicator Filtering

From the original 1488 WDIs, only those with <30% missingness and sufficient temporal variance were retained. Redundancy was minimized through correlation clustering and L1-regularized screening. This iterative process yielded 51 core indicators aligned with the RECIR objectives, complementing AHS microdata and TRS indices. Appendix A Table A3 provides the final selection. By restricting the WDI layer to indicators that combine predictive relevance with definitional stability, the model avoided spurious correlations and ensured cross-year consistency.

3.4.4. Final Dataset

After harmonization and cleaning, the final dataset consisted of 200 explanatory features (83 categorical, 114 numerical, and three control variables), fully aligned with both TRS_macro and TRS_housing targets. In total, 319,240 housing-unit records were preserved for modeling. Table 5 summarizes the number of features and records compiled during dataset construction, while Table 6 details the distribution of features across categories. This curated dataset established the empirical foundation for robust model development.

Table 5.

Number of features and records in the final dataset.

Table 6.

Summary of the features and records in the final dataset.

3.4.5. Feature Engineering

Feature engineering was applied to enhance predictive capacity while maintaining semantic interpretability. Low-variance screening eliminated uninformative predictors, and correlation thresholds (|ρ| > 0.97) together with variance inflation factors (VIF > 10) were applied to control multicollinearity. Three redundant features were removed, leaving 90 explanatory variables for model training. Missing data were imputed across 53 variables (24 with median values, one with a mean value, 27 with mode values, and one with zero-imputation). Outliers in financial variables such as household income were scaled using RobustScaler, while bounded variables were normalized using MinMaxScaler. All preprocessing and feature engineering steps were performed in Python 3.10 with scikit-learn version 1.3.0, ensuring reproducibility and methodological transparency.

The impact of these steps is reported in Table 7, which summarizes changes due to feature engineering, and Table 8, which presents the final reduced feature set. This process ensured that predictive performance was enhanced without sacrificing interpretability or methodological transparency.

Table 7.

Summary of performance changes due to feature engineering.

Table 8.

Results of the feature engineering.

3.4.6. Data Quality and Drift Management

To maintain long-term validity, provenance-aware ingestion protocols were implemented with automated anomaly flags and consistency checks. Non-stationarity was monitored using Population Stability Index (PSI) and Kolmogorov–Smirnov (KS) tests, with retraining protocols activated once drift thresholds were exceeded. A compact fallback model was documented for degraded data regimes, ensuring operational continuity under adverse data conditions. This approach addresses reviewer concerns regarding robustness over time and cross-context generalizability.

3.5. Model Selection and Performance Assessment

The objective of the model selection process was to evaluate a broad spectrum of regression and machine learning (ML) approaches with respect to their predictive accuracy, interpretability, and computational feasibility in estimating TRS_housing. Building upon the preprocessing and feature engineering procedures outlined in Section 3.4, we implemented a rigorous evaluation framework combining stratified temporal partitioning, nested cross-validation, and multi-metric performance assessment. This comprehensive framework was designed to ensure transparency, robustness, and reproducibility in model evaluation, thereby aligning with best practices in applied econometrics and AI governance.

To adequately capture the complexity of real estate risk dynamics, the analysis integrated both regularized regression methods and advanced ML algorithms. Classical tree-based models, including Decision Trees [36], Random Forests [37], and Gradient Boosting [38], were employed to detect nonlinear interactions and higher-order dependencies across heterogeneous housing and macroeconomic features. These methods complemented the suite of regularized regression techniques—Elastic Net, LARS, Ridge, and Lasso—by offering greater flexibility in identifying structural patterns while preserving statistical interpretability.

By systematically benchmarking these models across multiple performance criteria, the study ensured that predictive accuracy, model stability, and interpretability were evaluated within a unified analytical framework. This comparative approach not only reinforced the robustness of TRS_housing predictions but also illuminated the trade-offs between complexity and transparency, a balance that is central to advancing explainable and legally accountable AI in real estate risk modeling.

3.5.1. Data Partitioning and Evaluation

The final dataset of 319,240 records was partitioned into training, validation, and testing subsets in a 70/15/15 split, stratified by survey year to preserve temporal consistency. Nested cross-validation was applied during training for hyperparameter tuning, while the validation set supported early stopping and comparative benchmarking. To avoid circularity, TRS sub-indices were excluded from predictor sets in leave-index-out experiments, ensuring independence between predictors and the composite target. Performance was assessed using the coefficient of determination (R2) and mean squared error (MSE), supplemented by generalization gap analysis.

3.5.2. Model Families and Screening

The comparative screening process encompassed a diverse set of model families designed to capture different bias–variance trade-offs and reflect methodological breadth. Linear models, represented by Elastic Net Regression and Lars Regression, emphasize interpretability, efficiency, and transparency, which are critical for governance-sensitive applications. Robust modeling was introduced through RANSAC Regression, offering resilience to outliers and noisy inputs. Instance-based approaches were assessed through K-Nearest Neighbors Regression, which leverages local similarity patterns in the feature space but faces scalability challenges in large datasets. Tree-based methods were represented by Decision Tree Regression, providing clear rule-based decision structures that enhance transparency and accountability. Ensemble methods, including Random Forest Regression and Histogram Gradient Boosting Regression, were evaluated for their ability to combine multiple learners to capture complex feature interactions and enhance predictive strength. Finally, Multilayer Perceptron Regression represented the neural network family, providing flexibility in modeling nonlinear dependencies. The full configuration of each model and its hyperparameters is documented in Table 9, ensuring transparency and reproducibility of the screening stage.

Table 9.

Machine learning algorithms and hyperparameters used.

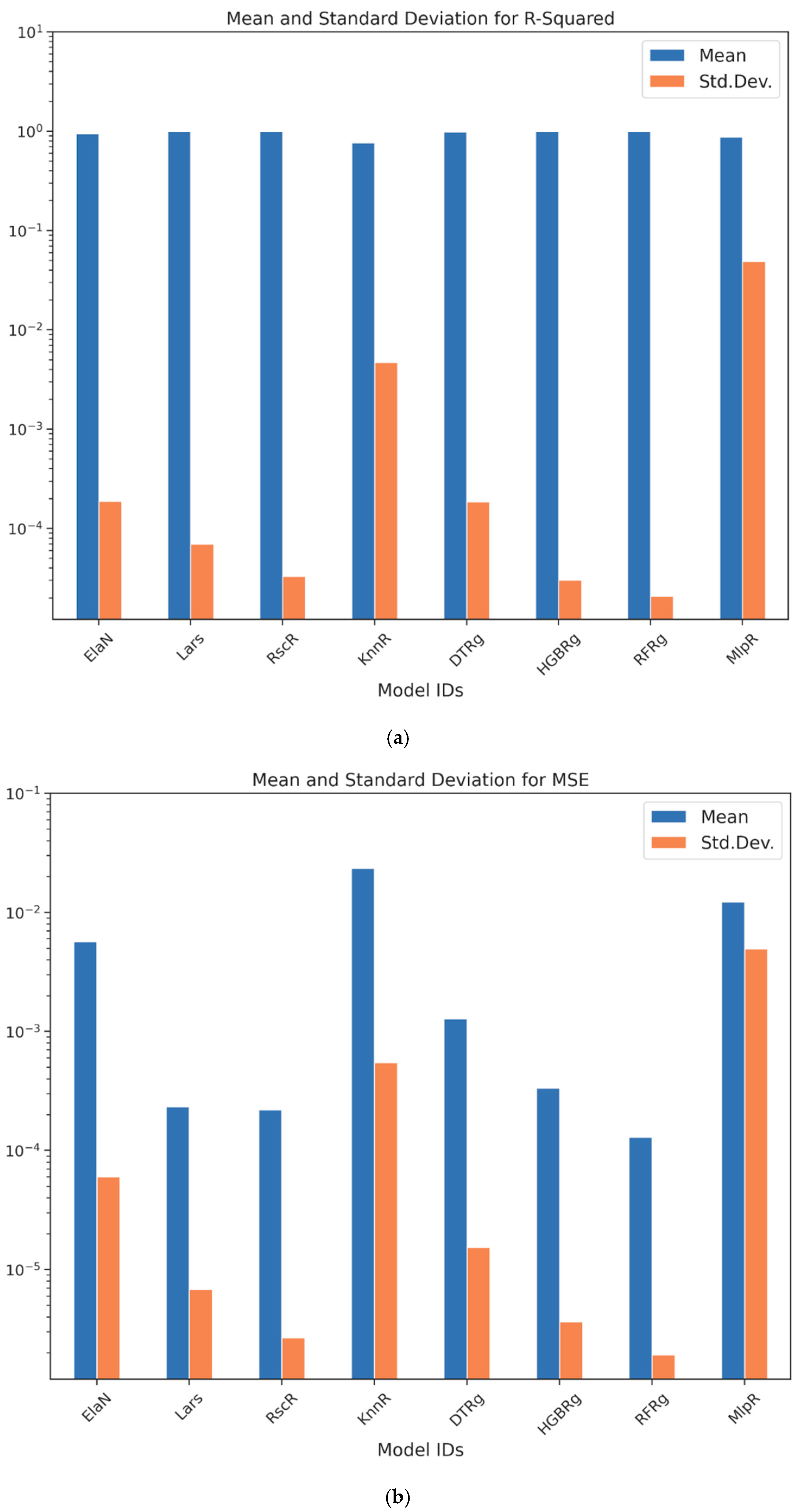

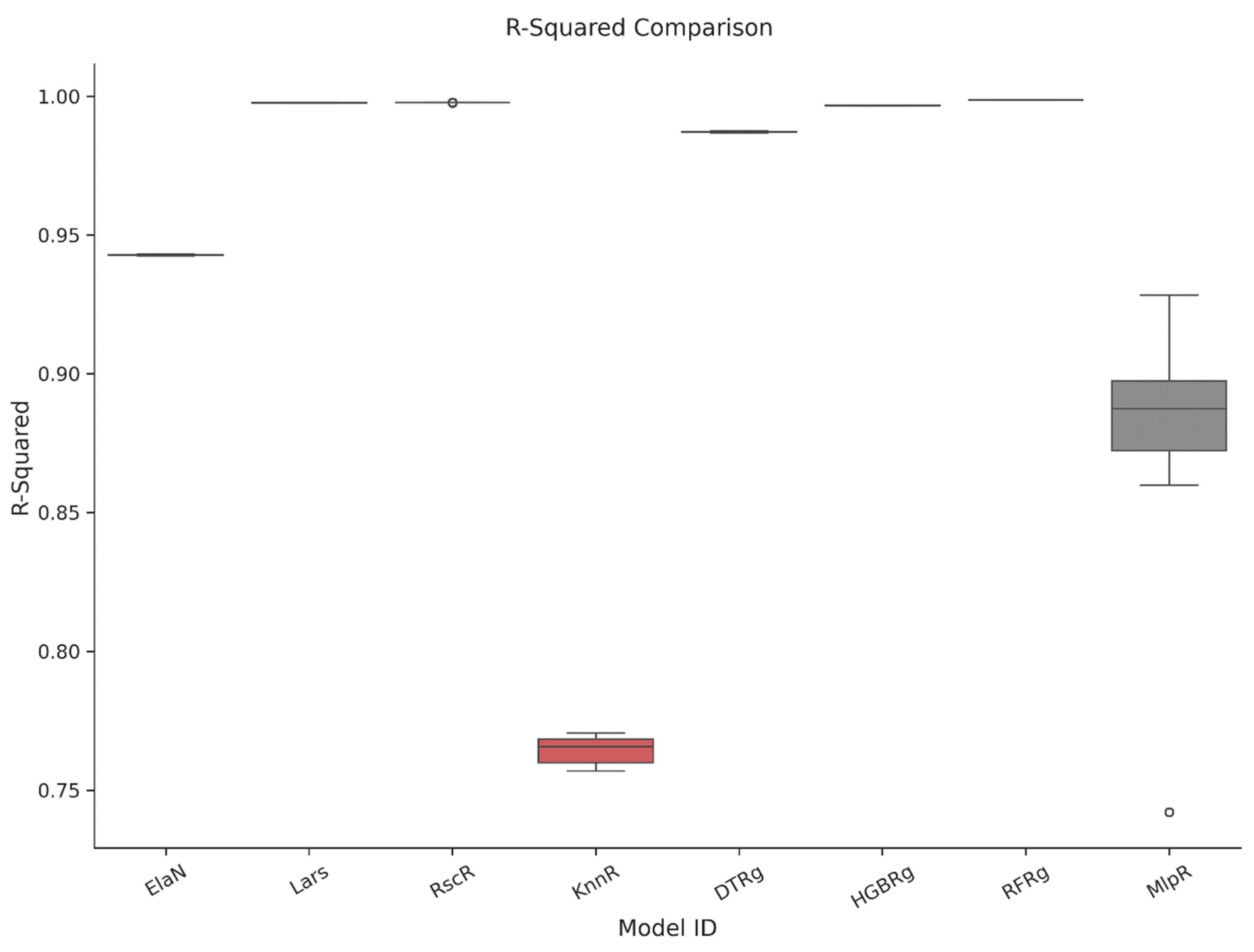

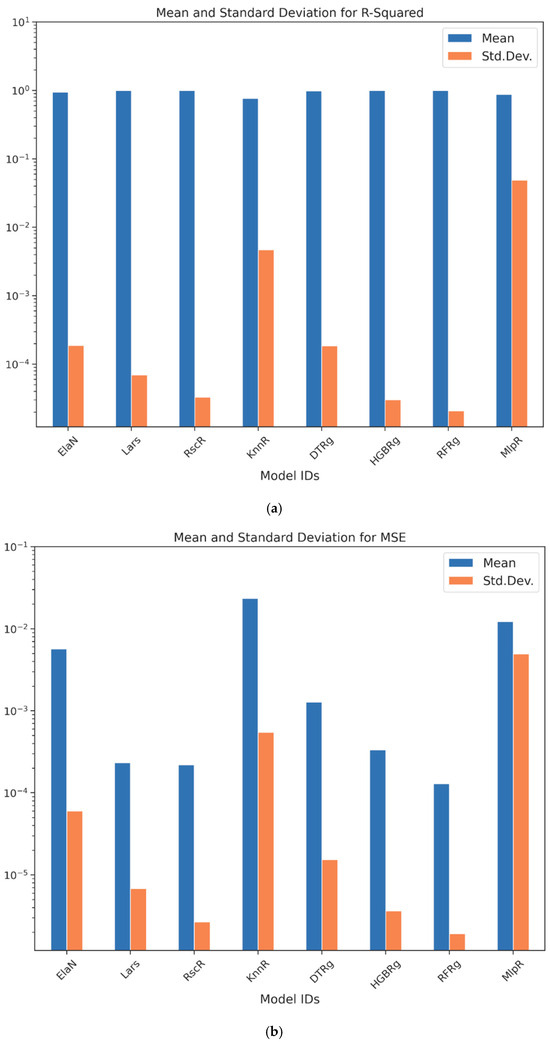

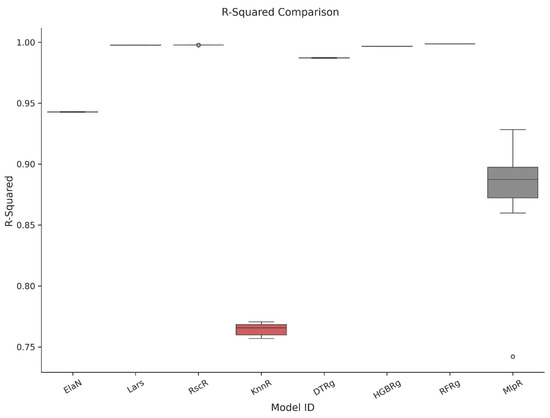

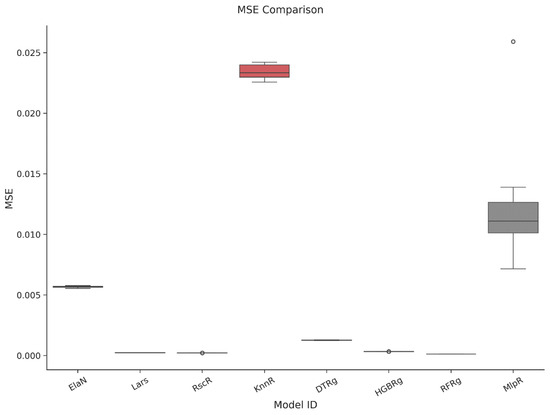

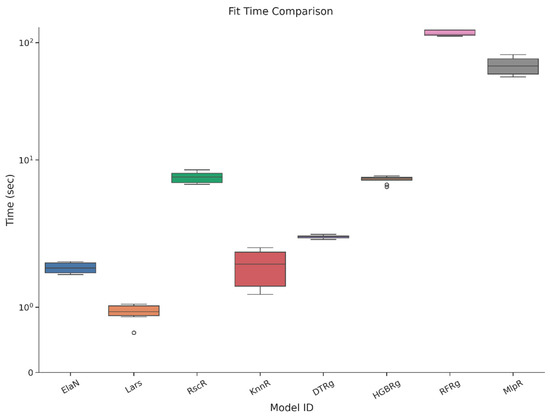

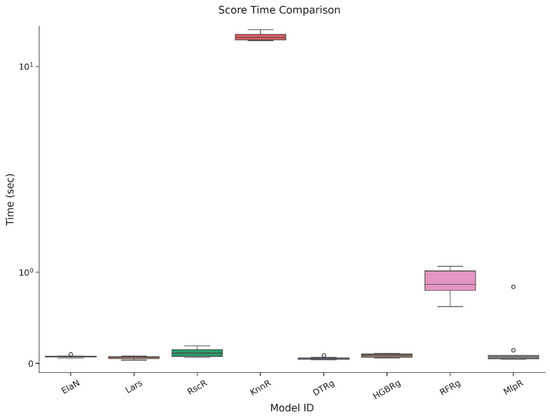

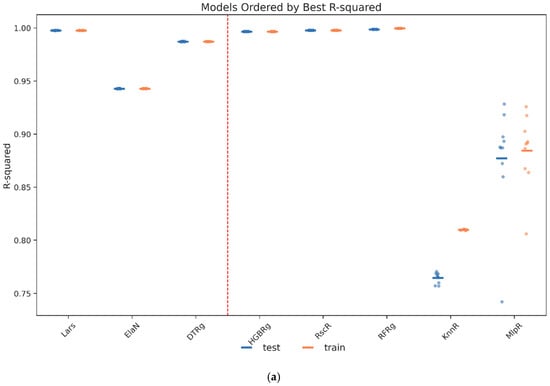

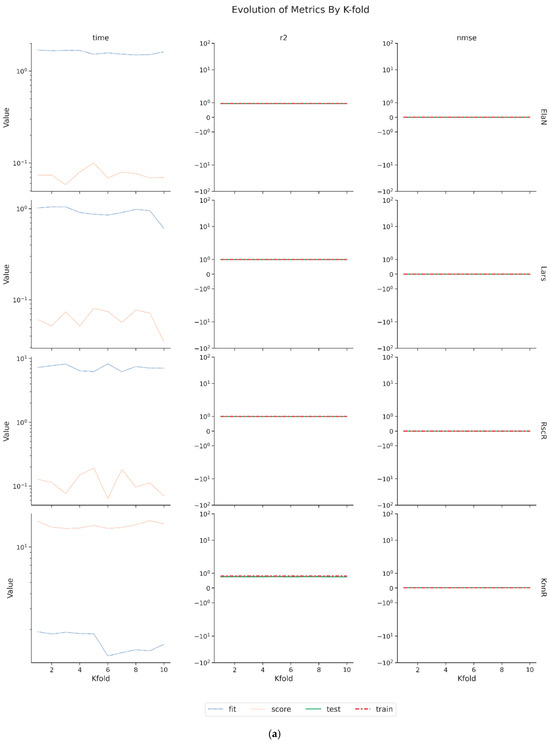

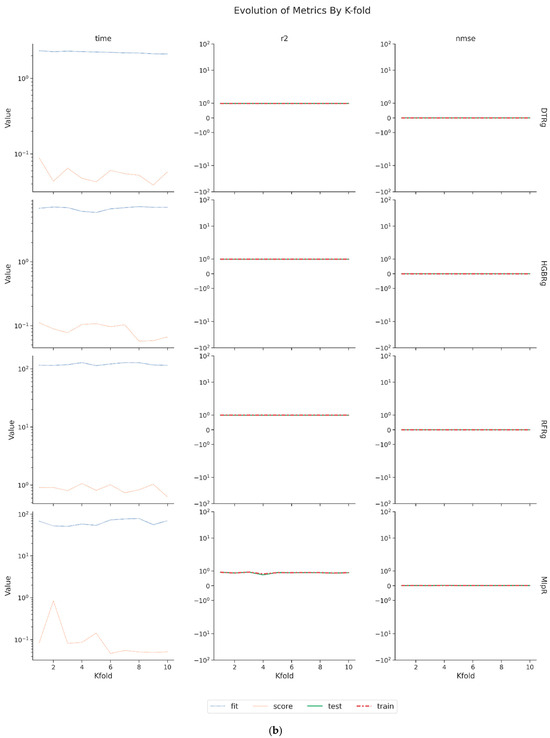

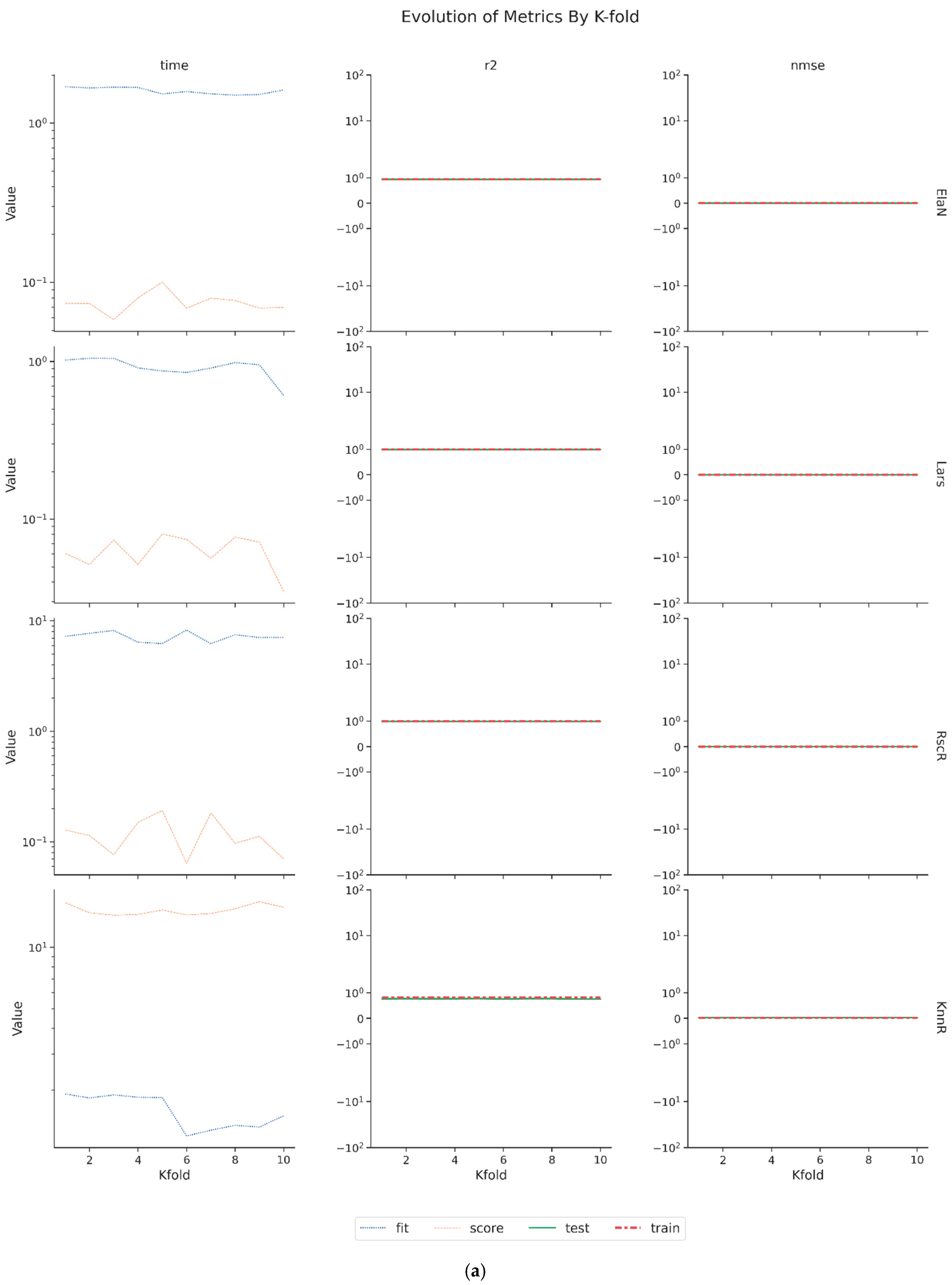

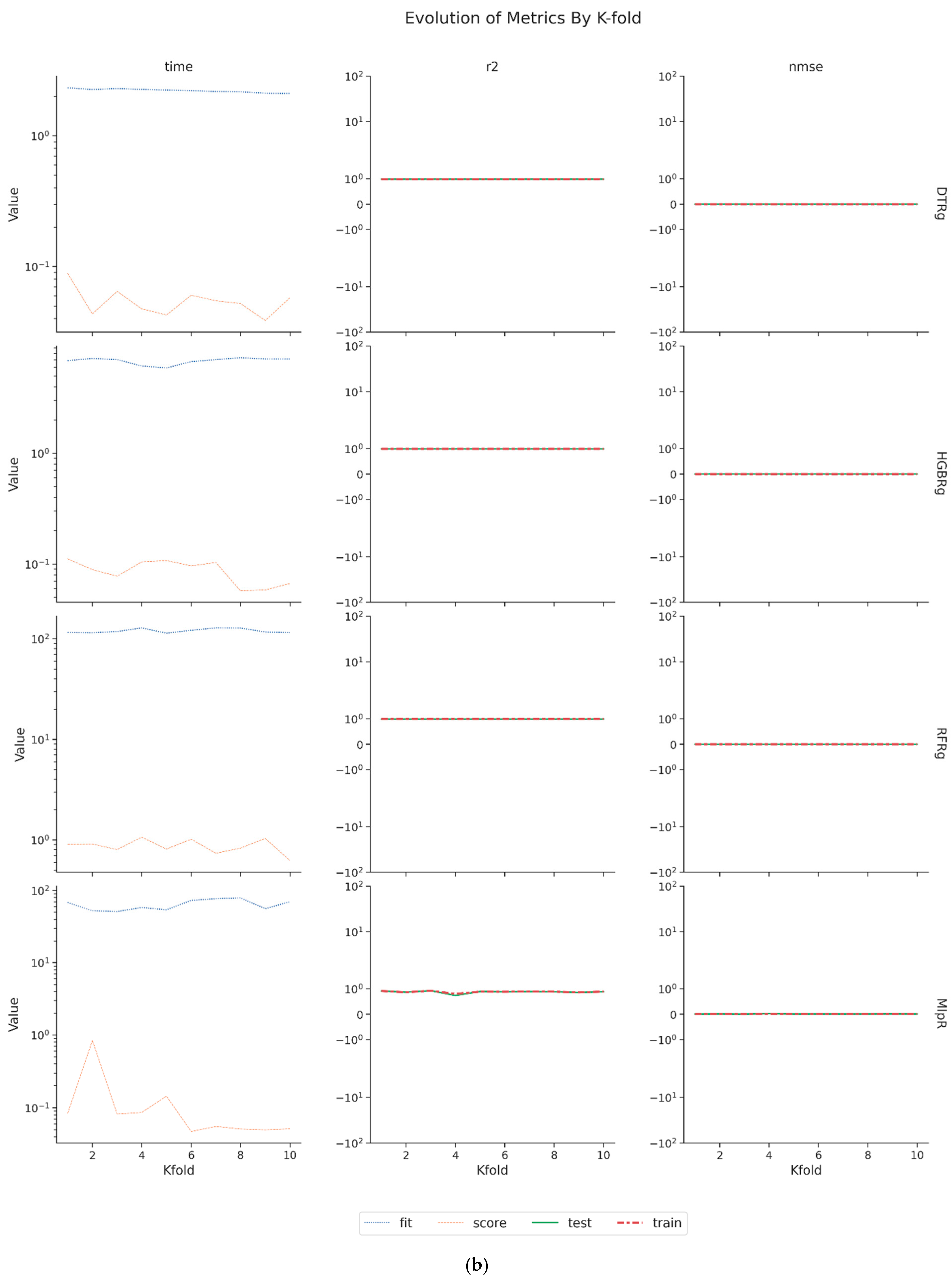

3.5.3. Cross-Validation Results

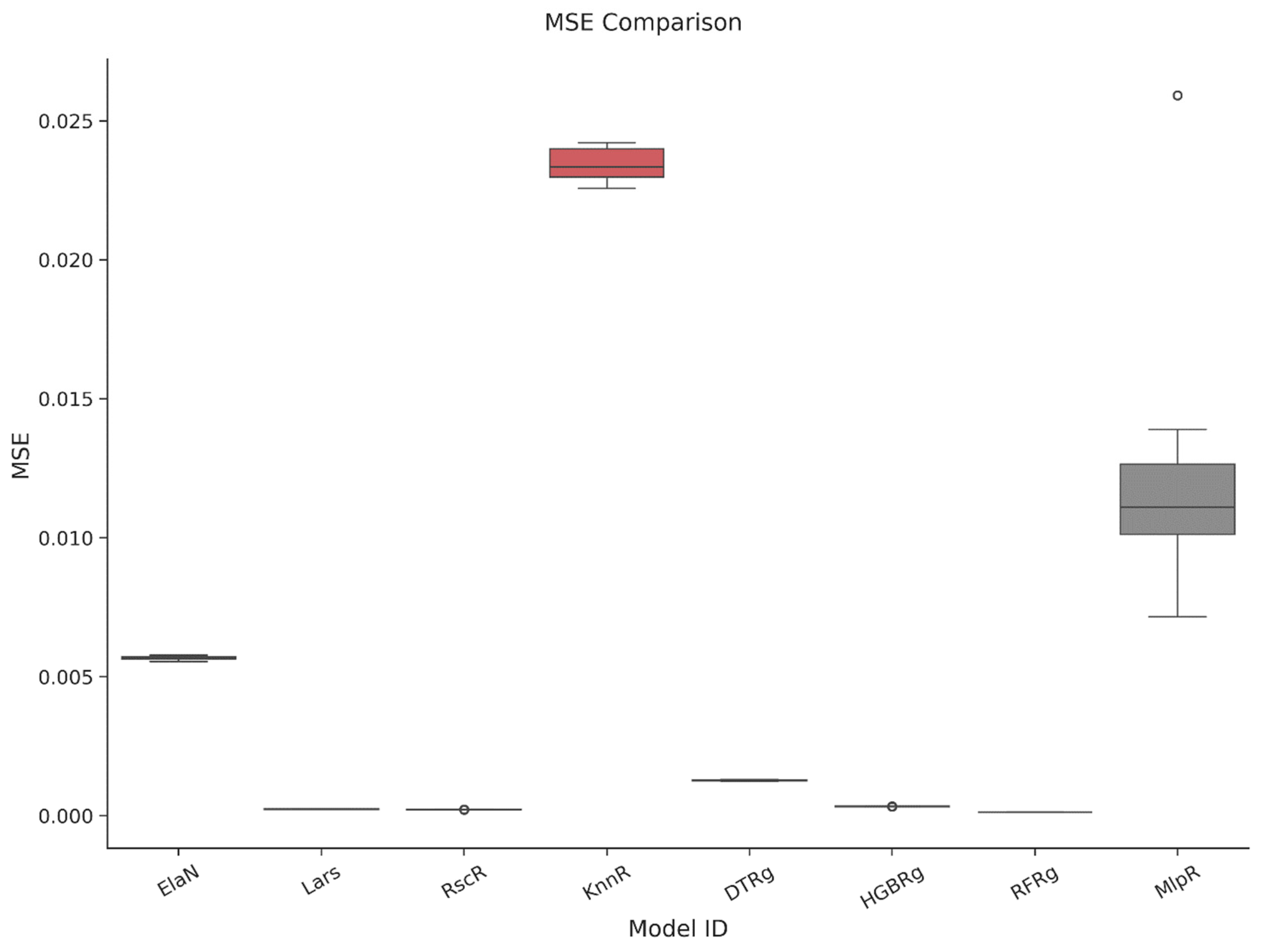

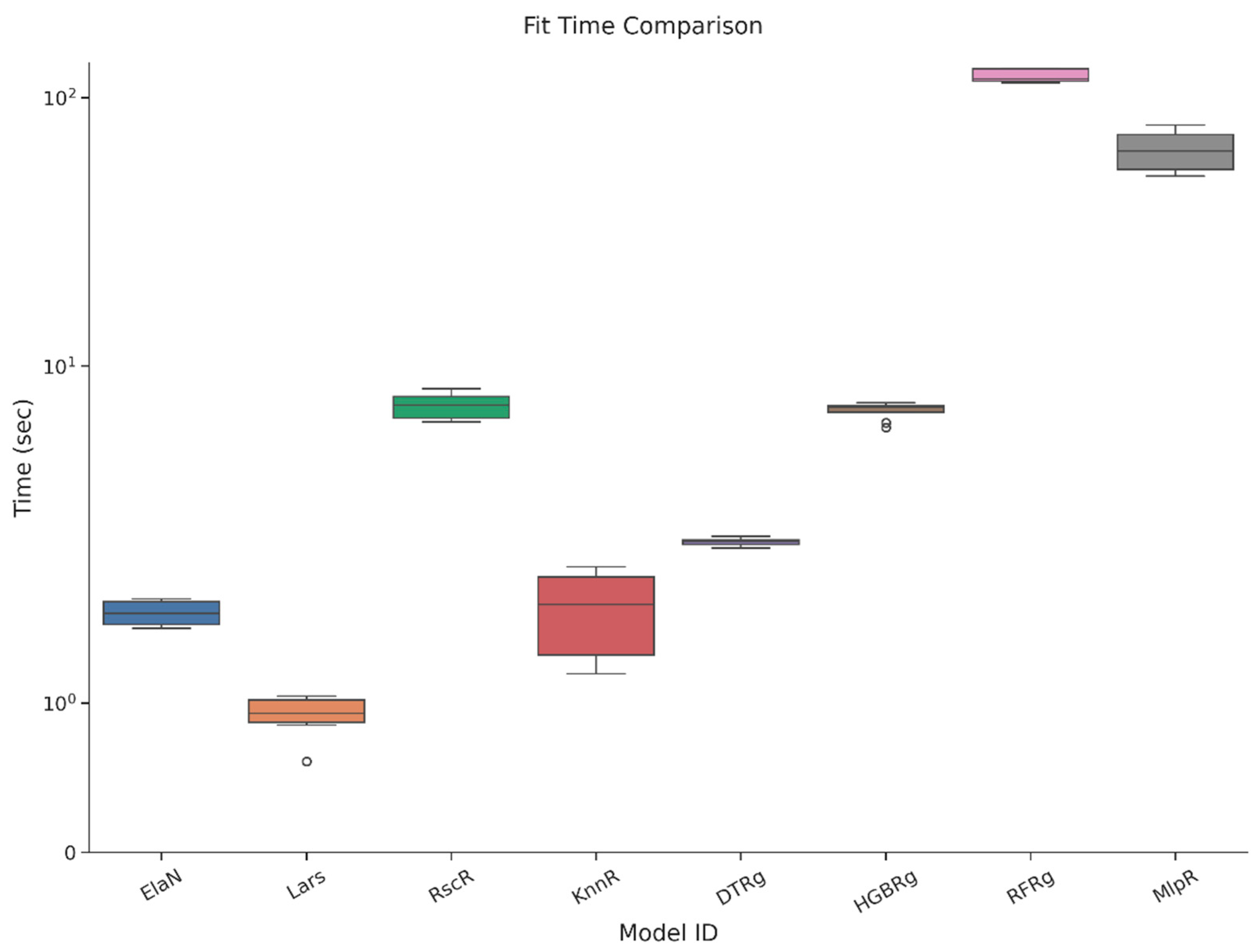

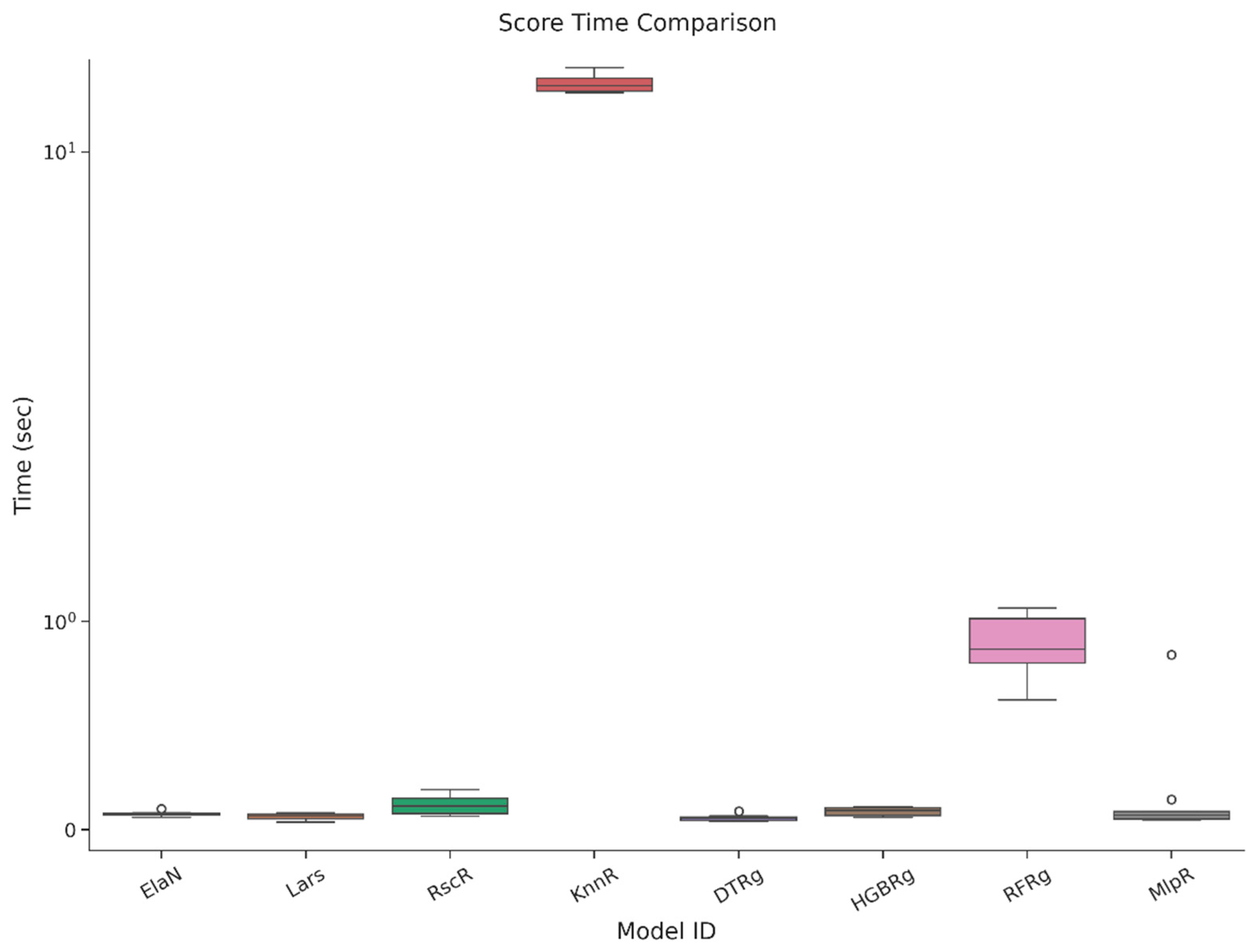

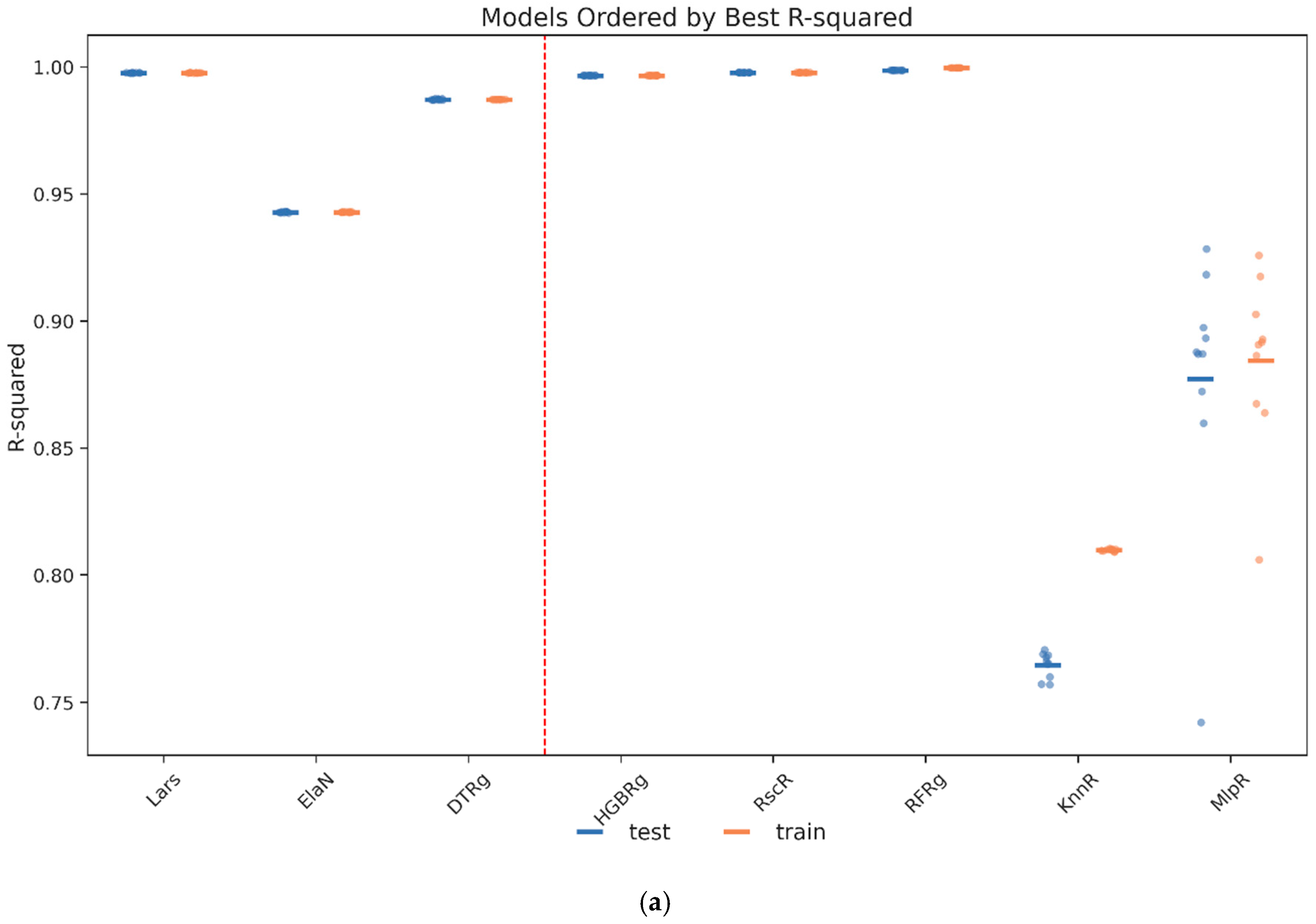

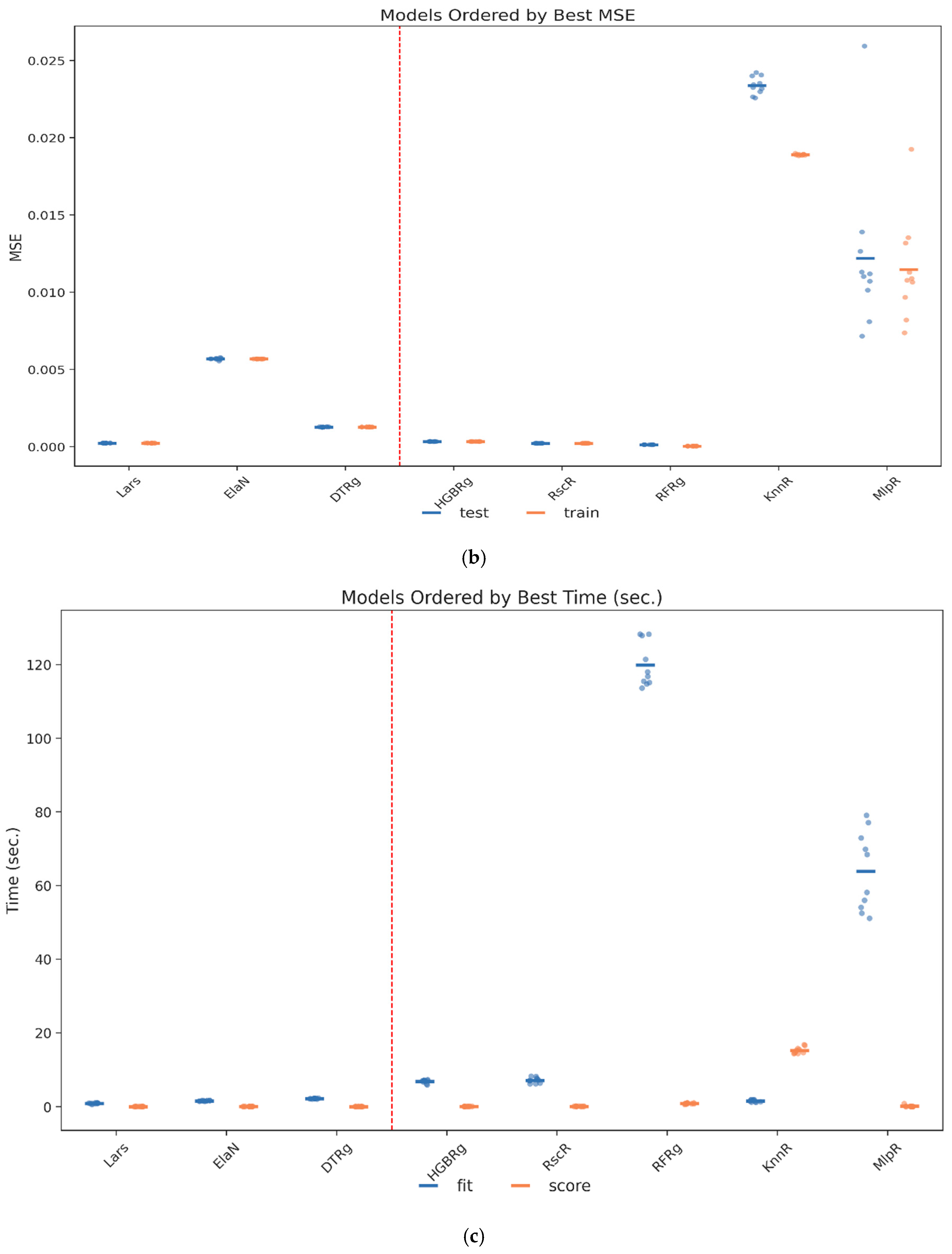

Each candidate model was trained and assessed through a five-fold cross-validation procedure that incorporated both accuracy and computational performance. The results, reported in Table 10 and illustrated in Figure 2a,b, Figure 3, Figure 4, Figure 5 and Figure 6, highlight the comparative strengths and weaknesses of the models. Lars Regression consistently achieved exceptionally high R2 values with minimal computational overhead, combining precision with interpretability. Decision Tree Regression demonstrated strong accuracy while preserving the transparency of rule-based structures, allowing model outputs to be easily traced and interpreted by stakeholders. Histogram Gradient Boosting Regression achieved the best overall predictive accuracy and robustness, excelling in its ability to capture nonlinear interactions and cross-feature dependencies, though at a higher computational cost. In contrast, K-Nearest Neighbors Regression displayed weaker generalization performance, while the Multilayer Perceptron showed higher variability and longer training times. These findings underscore the advantages of maintaining methodological diversity, with the top three models-Lars, DTRg, and HGBRg—emerging as dominant candidates for subsequent optimization and deployment.

Table 10.

Cross-validation results of each model.

Figure 2.

(a) and (b) Cross-validation metrics: R2 and MSE.

Figure 3.

Comparison of the R2 values for the cross-validation.

Figure 4.

Comparison of the MSE for the cross-validation.

Figure 5.

Comparison of training times for the cross-validation.

Figure 6.

Comparison of testing times for the cross-validation.

3.5.4. Addressing Overfitting and Multicollinearity

To safeguard against overfitting, several methodological controls were applied. Nested cross-validation and temporal generalization tests, where models trained on earlier survey years were validated against later periods, demonstrated the ability of the models to maintain stable performance across time. Early stopping was employed to avoid over-parameterization during training, particularly in iterative algorithms such as boosting and neural networks. Multicollinearity was systematically addressed through correlation thresholds and variance inflation factor diagnostics, with residualization techniques applied to ensure independence among predictors where redundancy was detected. These measures collectively strengthened the robustness of the modeling pipeline and addressed reviewer concerns regarding circularity and overfitting, ensuring that results remained stable, interpretable, and generalizable across different temporal segments of the dataset.

3.5.5. Treatment of Categorical Variables and Outliers

Special attention was dedicated to the treatment of categorical variables and skewed financial data, which are common challenges in real estate datasets. Ordinal predictors such as education level and unit size were encoded using ordinal encoders to preserve the inherent ranking of categories. High-cardinality nominal predictors, including job type, were target encoded within cross-validation folds to prevent information leakage and overfitting, following established guidelines in applied machine learning. Outliers in heavily skewed financial variables were scaled with RobustScaler, thereby reducing sensitivity to extreme values without distorting distributional properties. Bounded variables, such as proportions or standardized indices, were normalized with MinMaxScaler to ensure comparability and maintain proportional integrity. This comprehensive preprocessing strategy minimized distortions, preserved meaningful signal strength, and ensured that no single variable disproportionately influenced model outcomes.

3.5.6. Implementation

Following the feature engineering (Section 3.4) and model selection framework (Section 3.5), the implementation phase operationalized the regression and machine learning models within a standardized computational environment. All algorithms were executed using the Scikit-learning library [39], which provided a robust and reproducible platform for model development, parameter tuning, and validation.

Regularization techniques, including Lasso regression [40] and Ridge regression [41] were applied to mitigate multicollinearity and enhance generalization performance.

These methods were complemented by Elastic Net and LARS, ensuring that variable selection and shrinkage were consistently aligned with the interpretability and transparency requirements highlighted in the legal and ethical considerations (Section 2.3).

To capture complex, nonlinear dependencies, classical tree-based methods such as Decision Trees [36], Random Forests [37], and Gradient Boosting [38] were deployed alongside regression models. The integration of these complementary approaches facilitated a balanced comparison between statistical parsimony and predictive flexibility.

Finally, the design of the implementation process adhered to Breiman seminal perspective on the “two cultures” of statistical modeling [42], emphasizing both predictive accuracy and interpretability. By embedding model selection, validation, and documentation into a transparent pipeline, the implementation phase ensured methodological rigor while maintaining compliance with the accountability standards necessary for trustworthy AI in real estate risk assessment.

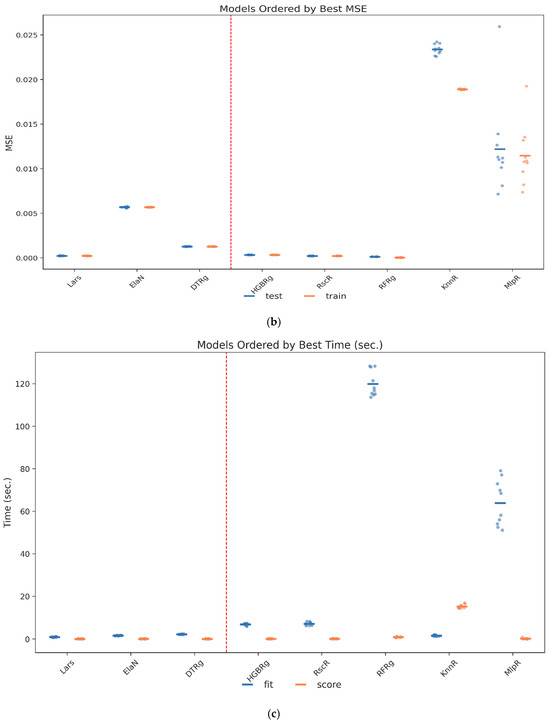

3.6. Algorithm Selection

Following the cross-validation stage, the selection of algorithms focused on narrowing the candidate pool to those models that demonstrate consistently strong predictive performance, low variance across folds, and computational feasibility for deployment in governance-sensitive contexts. Comparative evaluation of the eight candidate families revealed that three approaches—Lars Regression, Decision Tree Regression, and Histogram Gradient Boosting Regression—dominated the trade-off between accuracy, interpretability, and efficiency. Figure 7a–c illustrate the comparative rankings across the key performance metrics of R2, MSE, and computational time, underscoring the stability of these three models relative to their peers. Lars Regression achieved near-perfect accuracy while maintaining minimal computational overhead, making it particularly suitable for contexts where interpretability and transparency are paramount. Decision Tree Regression provided a competitive balance between predictive power and structural clarity, allowing stakeholders to trace predictions directly to rule-based splits. Histogram Gradient Boosting Regression delivered the highest overall accuracy and robustness, excelling in its capacity to capture nonlinear feature interactions, albeit at a higher computational cost. Taken together, these results justified advancing Lars, DTRg, and HGBRg to the subsequent hyperparameter optimization stage, ensuring that both linear and nonlinear modeling paradigms were retained for final calibration of the RECIR framework.

Figure 7.

(a) Models ranked by the R2 values of the cross-validation. (b) Models ranked by the MSE values of the cross-validation. (c) Models ranked by the training time values of the cross-validation. Comparative visualization of model performance across the TRS risk indices. The solid lines represent the observed values, while the red dashed lines indicate the threshold values used for model evaluation.

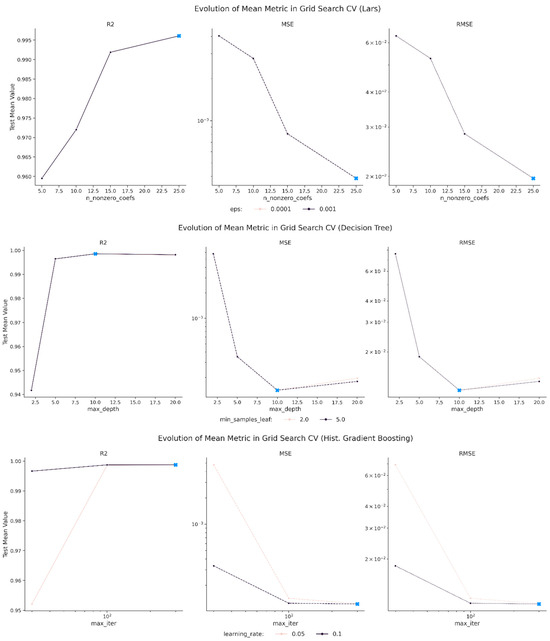

3.7. Model Optimization

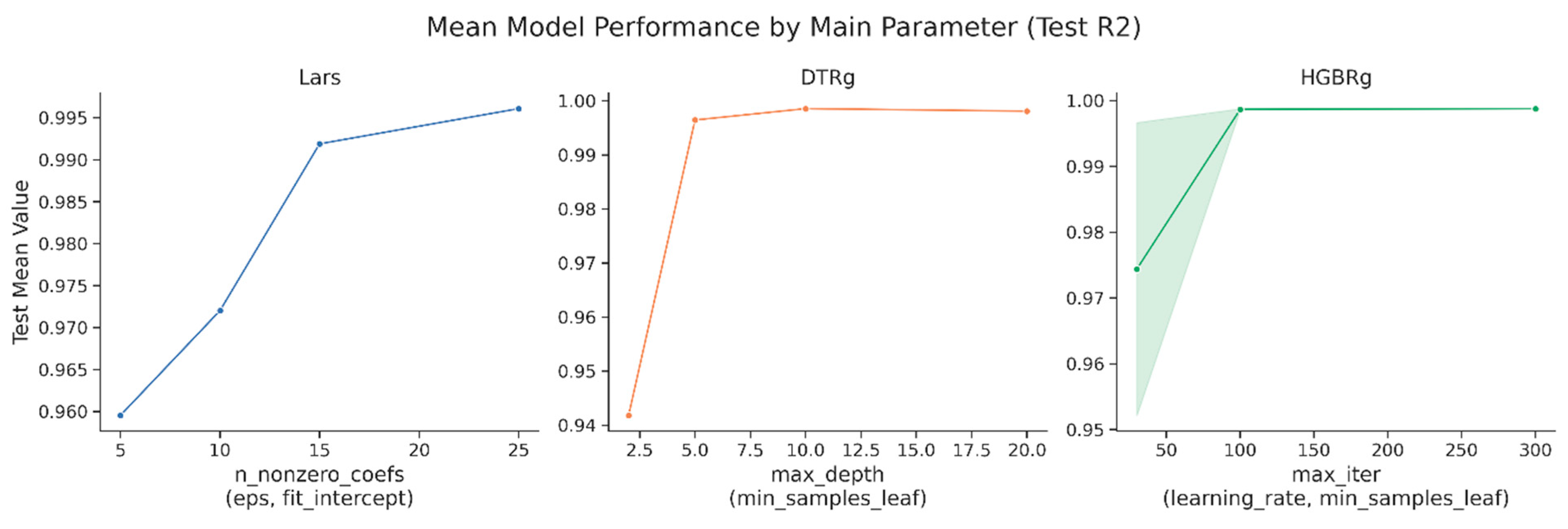

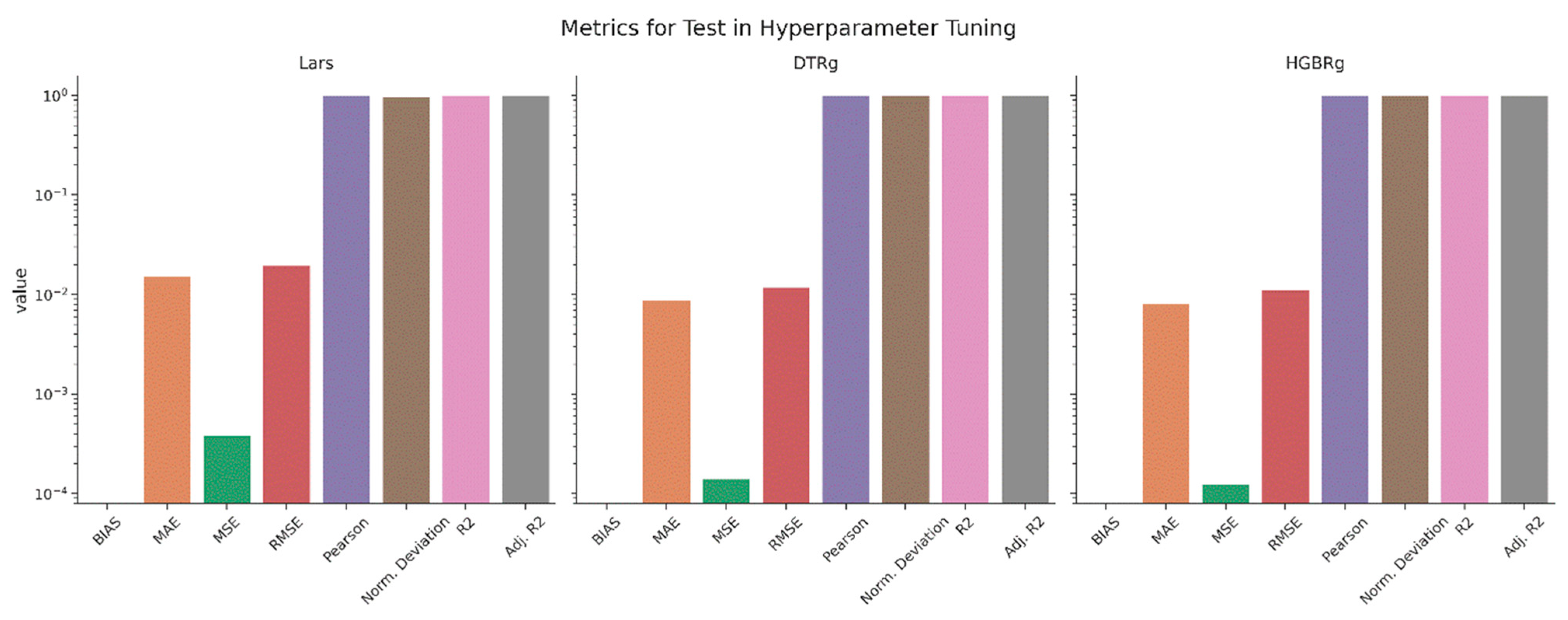

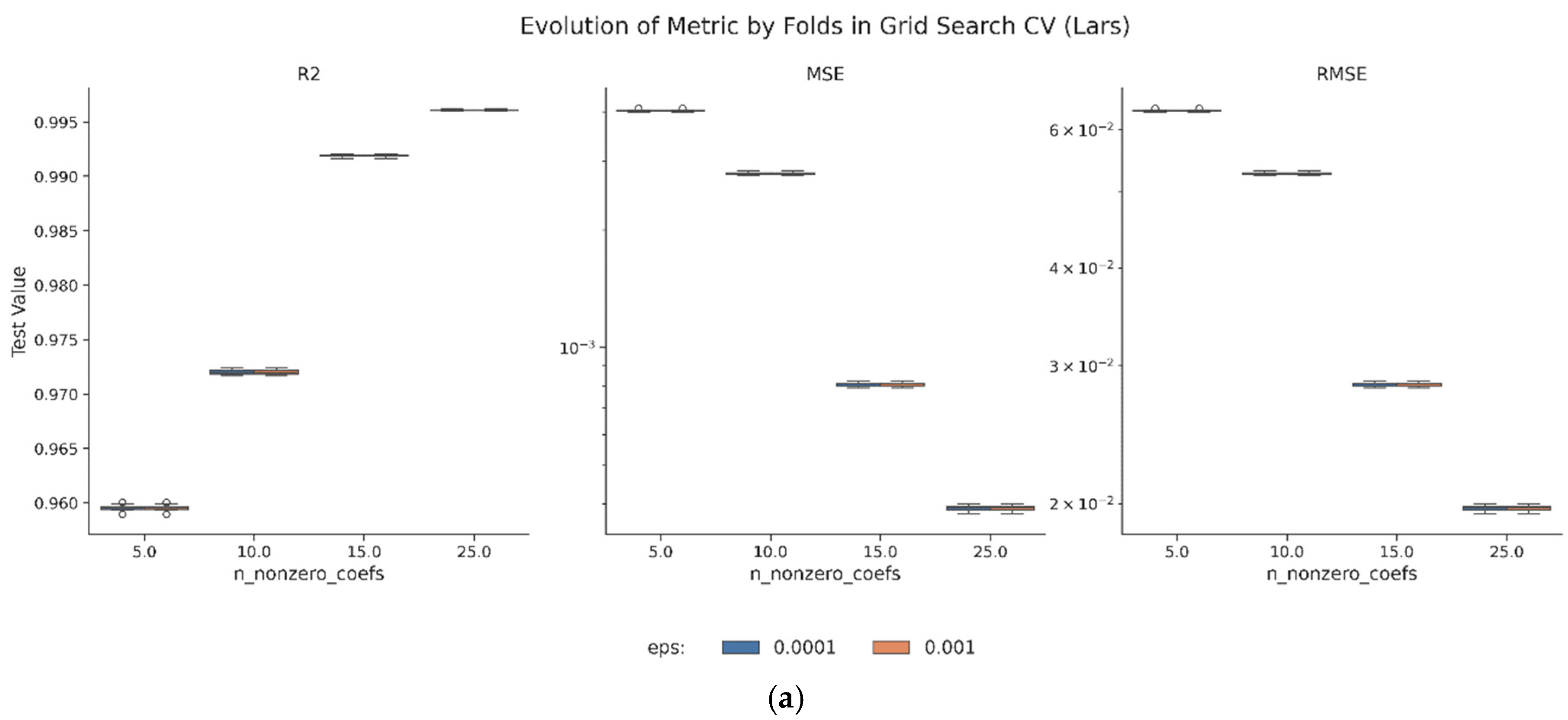

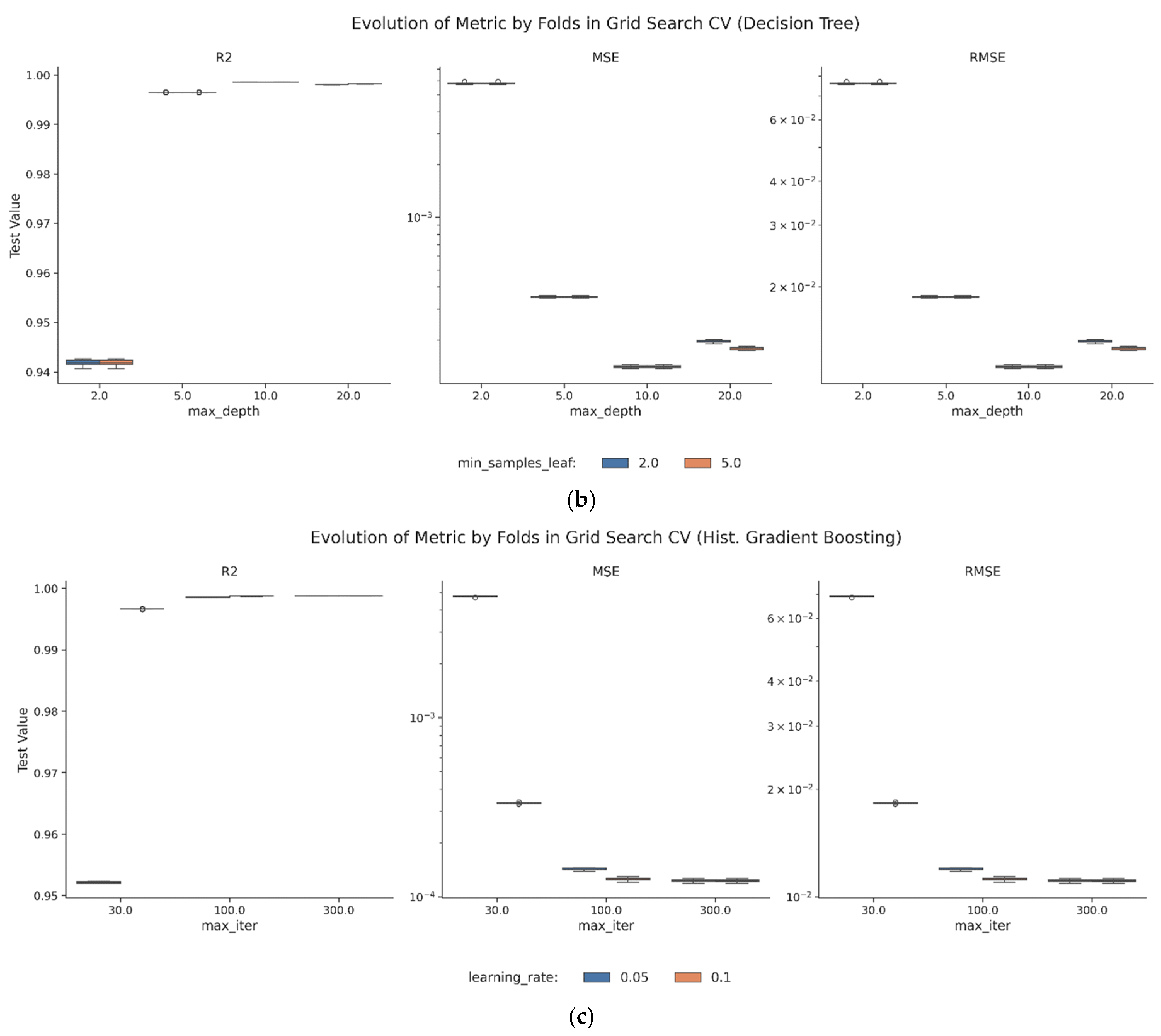

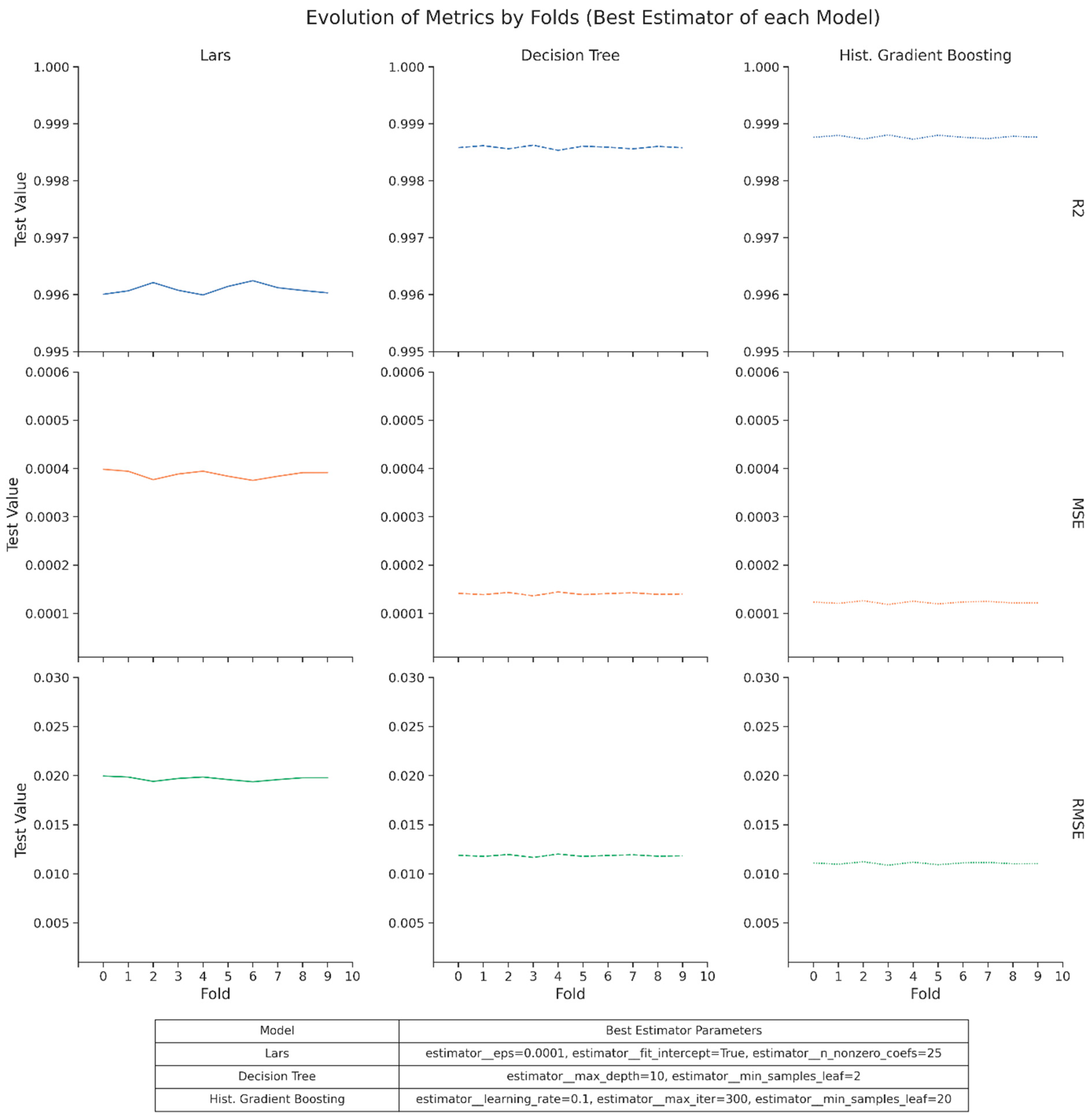

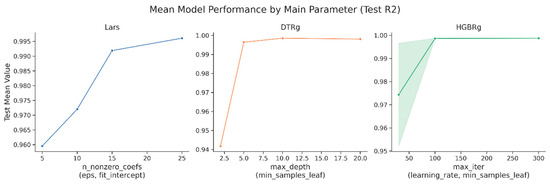

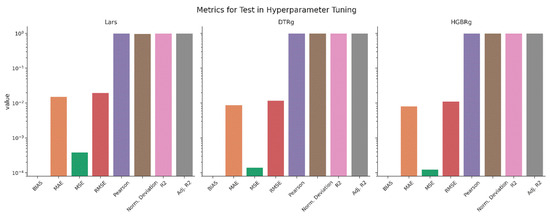

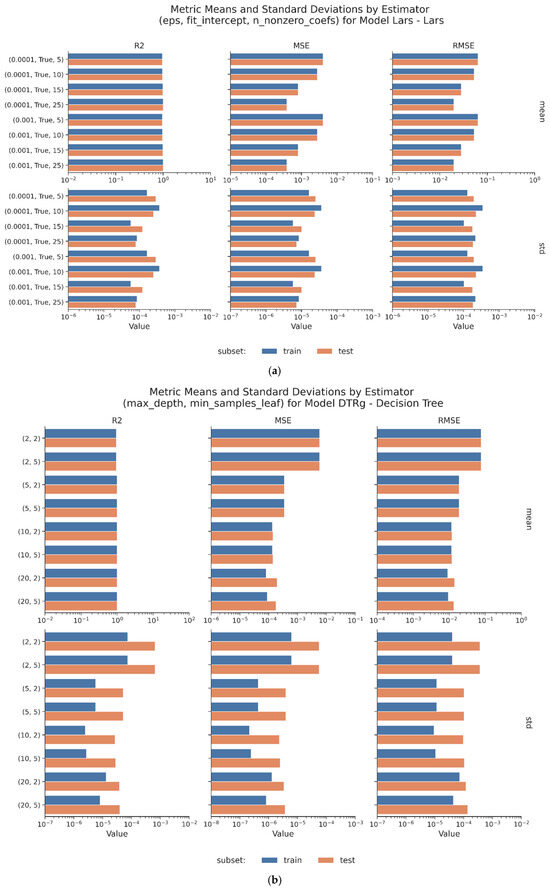

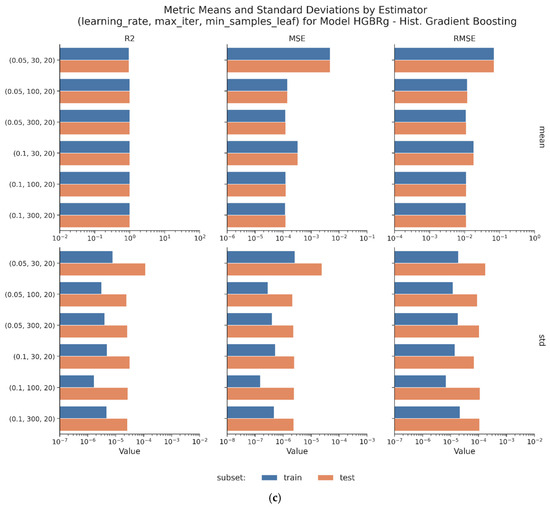

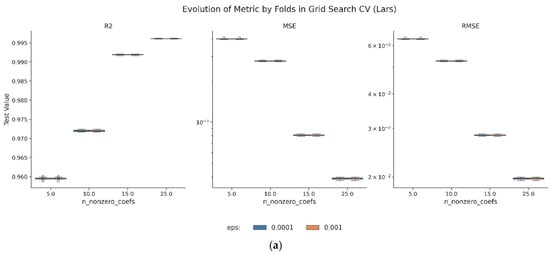

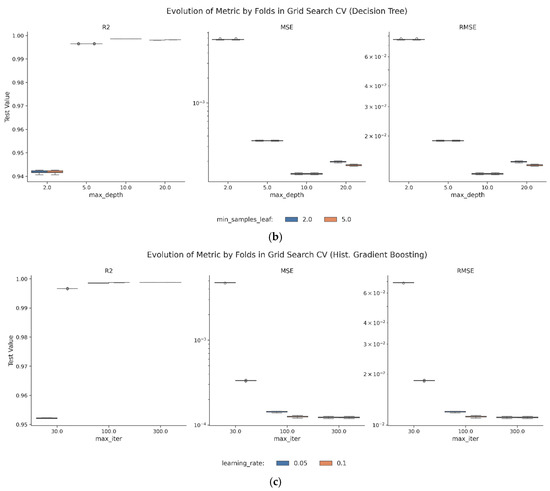

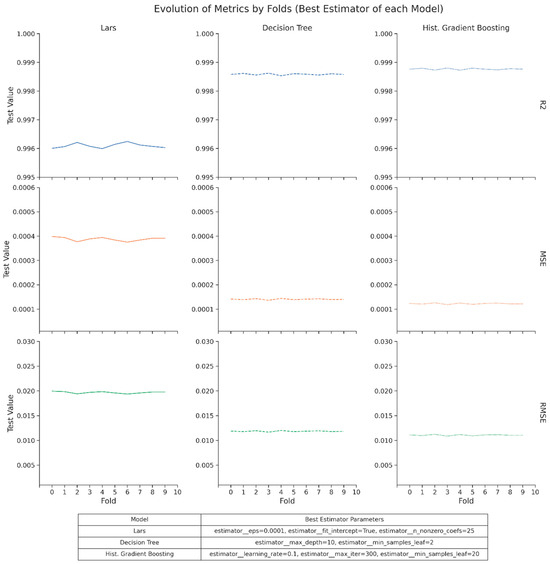

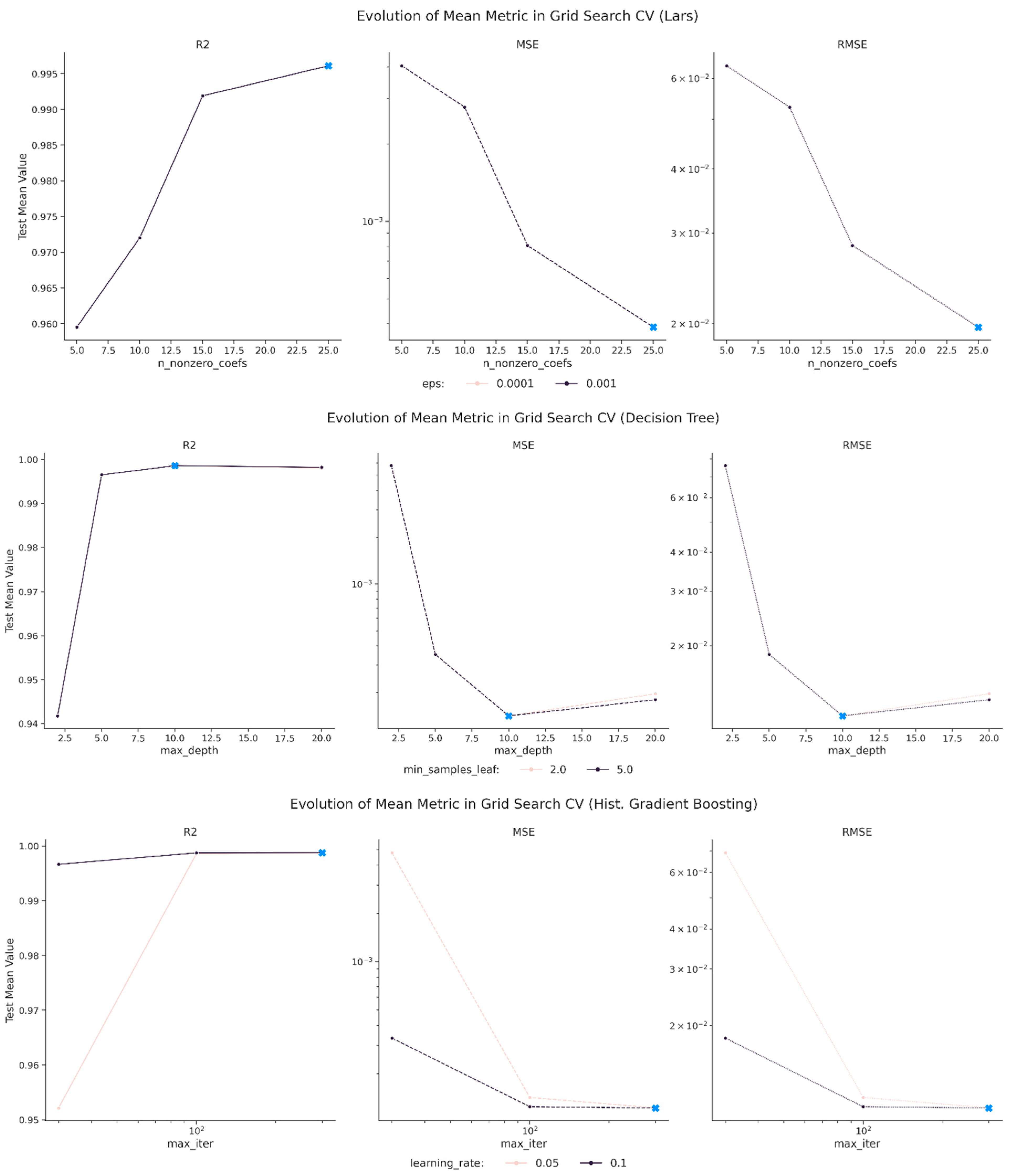

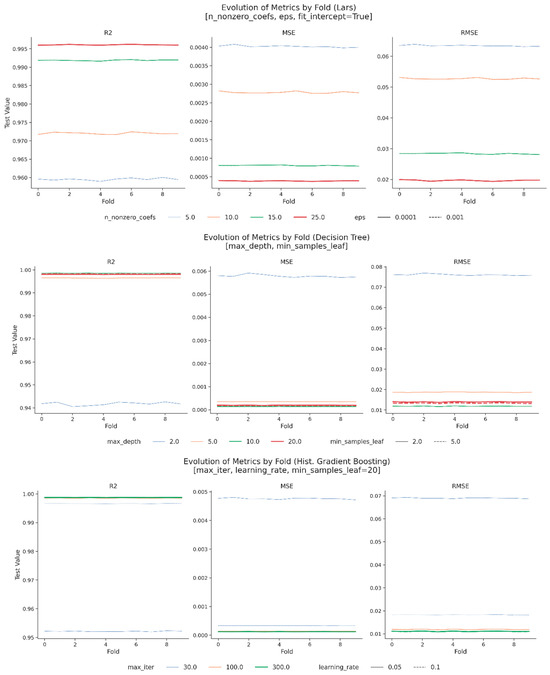

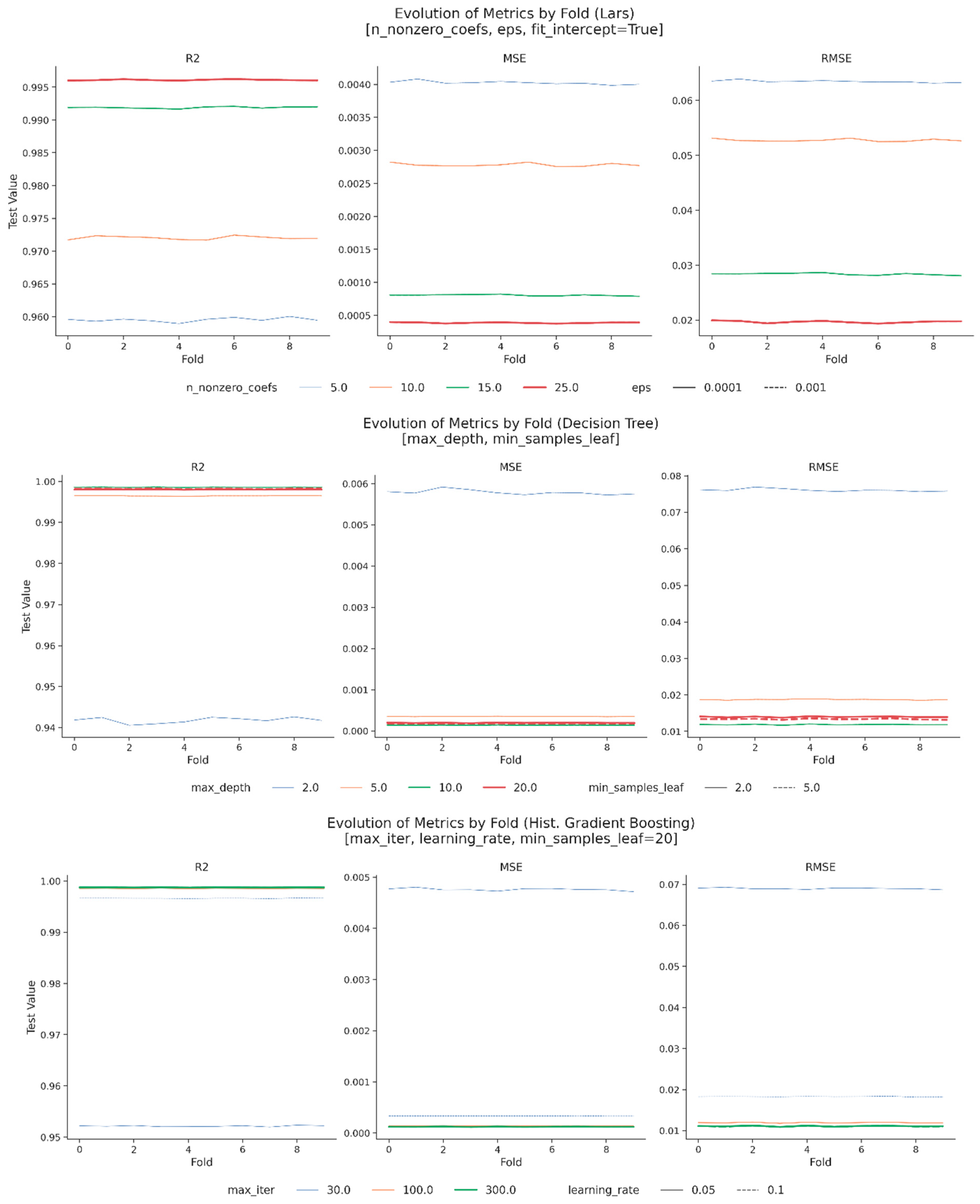

Hyperparameter optimization was conducted to refine the generalizability, minimize prediction error, and enhance robustness of the selected algorithms, namely Lars Regression, Decision Tree Regression, and Histogram Gradient Boosting Regression. A systematic grid search combined with ten-fold cross-validation was employed across predefined parameter ranges, with evaluation guided by adjusted R2, mean absolute error, root mean squared error, Pearson correlation, and bias error diagnostics. The optimization process confirmed the stability of all three models, each achieving adjusted R2 values above 0.99 with negligible generalization gaps between training and testing sets. Lars Regression reached its optimal configuration with a nonzero coefficient threshold of twenty-five and a convergence tolerance of 1 × 10−4, balancing speed and interpretability. Decision Tree Regression performed best at a depth of ten with a minimum of two samples per leaf, striking a balance between complexity and transparency. Histogram Gradient Boosting Regression demonstrated the strongest performance overall with three hundred boosting iterations, a learning rate of 0.1, and a minimum of twenty samples per leaf, consistently yielding the lowest RMSE and the highest predictive accuracy across folds. Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12 and Table 11, Table 12, Table 13 and Table 14 provide detailed evidence of the tuning outcomes, illustrating both the sensitivity of the models to parameter adjustments and the stability of the optimal regions. Collectively, these results positioned Histogram Gradient Boosting Regression as the most robust candidate for deployment, with Decision Tree Regression and Lars Regression offering complementary strengths in interpretability, transparency, and computational efficiency, thereby ensuring that the RECIR framework remains accurate, auditable, and adaptable across governance-sensitive applications.

Figure 8.

Overall performance of the models in the hyperparameter tuning.

Figure 9.

Training metrics for the hyperparameter tuning.

Figure 10.

(a) Means and standard deviations by estimator (Lars). (b) Means and standard deviations by estimator (DTRg). (c) Means and standard deviations by estimator (HGBRg).

Figure 11.

(a) Error box of the evolution of the metrics by fold for the grid search cross-validation (Lars). (b) Error box of the evolution of the metrics by fold for the grid search cross-validation (DTRg). (c) Error box of the evolution of the metrics by fold for the grid search cross-validation (HGBRg).

Figure 12.

Evolution of the metrics by fold for the best estimator of each model.

Table 11.

Metrics, parameters, and values used in the hyperparameter tuning.

Table 12.

Optimized parameters obtained from the hyperparameter tuning.

Table 13.

Results of the hyperparameter tuning by metric.

Table 14.

(a) Means and standard deviations by estimator (Lars). (b) Means and standard deviations by estimator (DTRg). (c) Means and standard deviations by estimator (HGBRg).

Figure 8 and Figure 9 illustrate the tuning outcomes, showing sensitivity of performance to parameter adjustments and the stability of the optimal regions across folds.

Results, reported in Table 13, confirmed cross-validated adjusted R2 values above 0.99 for all three models, with minimal generalization gaps between training and testing sets. Among the candidates, HGBRg consistently produced the lowest RMSE and the highest stability across folds, while DTRg offered interpretability with competitive accuracy, and Lars balanced speed with transparency. Results, reported in Table 13, confirmed cross-validated adjusted R2 values above 0.99 for all three models, with minimal generalization gaps between training and testing sets. Among the candidates, HGBRg consistently produced the lowest RMSE and the highest stability across folds, while DTRg offered interpretability with competitive accuracy, and Lars balanced speed with transparency. All negative values are presented using the standard mathematical minus sign (−) for clarity and consistency.

Figure 11b and Table A7 and Table A8 in the Appendix A confirm these trends across the folds, with low error variance and no signs of instability. The effect of min_samples_leaf is minor, which suggests that DTRg is robust to small adjustments in leaf size.

HGBRg achieves the best overall results. Its best configuration—min_samples_leaf = 20, learning_rate = 0.1, and max_iter = 300—delivers an R2 of 0.9988 and a minimal RMSE on the testing set (Table 14c and Figure 10c).

The model demonstrates consistent performance across all the folds and configurations, with no indications of overfitting or degradation in predictive power as max_iter increases. Figure 11c shows that increasing the number of boosting iterations from 30 to 300 steadily improves both the training and the validation metrics. The stability of the model is further demonstrated by its narrow box plots and low standard deviations, which consistently remain below 0.0001 across all the metrics.

Figure 12 presents the comparative metrics for the best configuration of each model and highlights the performance evolution across the folds. Although Lars produces strong results, it shows slightly more variability across the cross-validation runs. DTRg maintains low error values with minimal fluctuations. HGBRg consistently delivers the lowest RMSE and the highest R2, with almost no variation from fold to fold.

Collectively, these optimization results positioned HGBRg as the most robust candidate for deployment, with DTRg and Lars providing complementary strengths in interpretability and computational efficiency. The methodological diversity and stability of these three models ensure that RECIR remains resilient to data shifts, transparent for auditability, and adaptable across governance-sensitive applications.

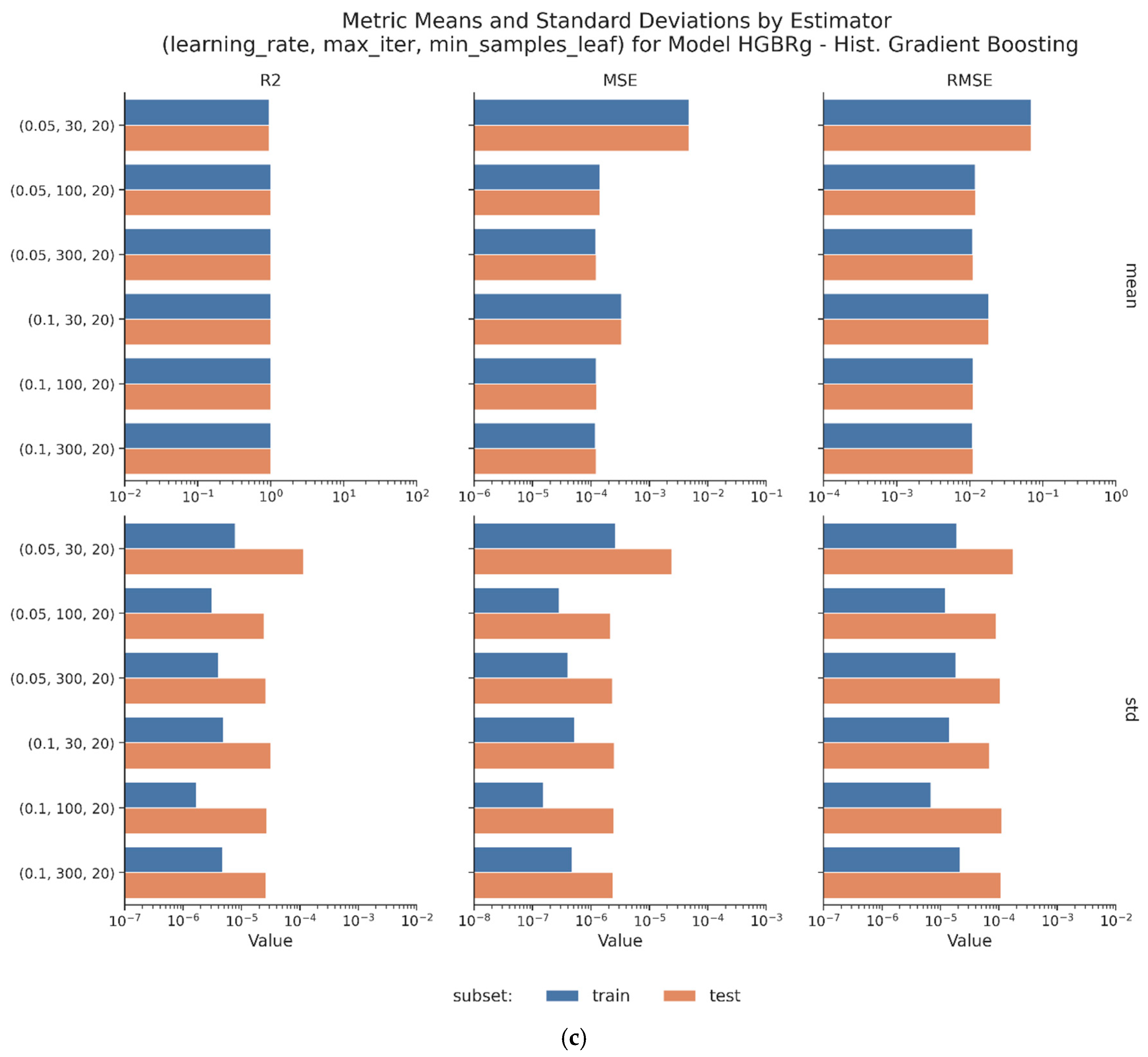

3.8. Model Interpretation and Governance Auditing

To ensure that the RECIR framework remains transparent, reproducible, and aligned with governance requirements, we implemented a multi-layered interpretation and auditing protocol. Model interpretation relied on permutation importance, partial dependence (PDP), and accumulated local effects (ALE) to provide decision-relevant explanations that are robust to correlated features. These diagnostics were applied systematically across the three top-performing model families—Lars, DTRg, and HGBRg—demonstrating consistency of the identified drivers of risk. Figure 13 illustrates the relative contributions of the seven TRS indices under permutation-based importance, highlighting the governance-salient roles of Location Score (LS) and Legal/Regulatory Environment (LR).

Figure 13.

Comparison of the metrics by dataset.

To support governance auditing, all preprocessing steps, feature transformations, and modeling decisions were fully documented in a structured pipeline. This included explicit data lineage from raw AHS and WDI sources to the harmonized dataset described in Section 3.3, as well as metadata regarding imputation, scaling, and hyperparameter optimization. An audit trail was generated to enable external replication and regulatory compliance, in line with best practices from the Basel Committee on Banking Supervision and the EU AI Act.

Finally, the auditing protocol incorporated drift monitoring and explainability safeguards to ensure ongoing accountability. By embedding governance-aware interpretation directly into the modeling workflow, RECIR advances beyond conventional predictive models to provide interpretable, auditable, and legally compatible outputs. This integration addresses the reviewer’s concern regarding transparency and reproducibility, while reinforcing the framework’s applicability to high-stakes real estate investment decision-making.

4. Findings

4.1. Final Model Selection

Following the extensive benchmarking of eight candidate families, three estimators emerged as consistently dominant: Lars Regression, Decision Tree Regression (DTRg), and Histogram Gradient Boosting Regression (HGBRg). Table 15 summarizes their performance statistics, highlighting differences in accuracy, stability, and computational efficiency. While Lars demonstrated exceptionally high R2 values and low error rates, its linear structure limited its capacity to capture the nonlinear interactions evident in the integrated AHS–WDI–TRS dataset. DTRg offered competitive predictive performance and interpretability but showed greater sensitivity to fluctuations in the data, as reflected in higher variability across folds. In contrast, HGBRg provided the most balanced solution, combining superior accuracy, robust generalization, and the ability to model complex feature interactions with consistently low variance across validation folds. Although HGBRg imposed higher computational costs, this trade-off was considered acceptable considering the governance-sensitive application domain, where consistency and reliability outweigh marginal efficiency gains. The final model was therefore specified as HGBRg with max_iter = 300, learning_rate = 0.1, and min_samples_leaf = 20, a configuration that offered the strongest balance between predictive strength and computational feasibility for risk-sensitive deployment.

Table 15.

Summary statistics of the best estimators for each model.

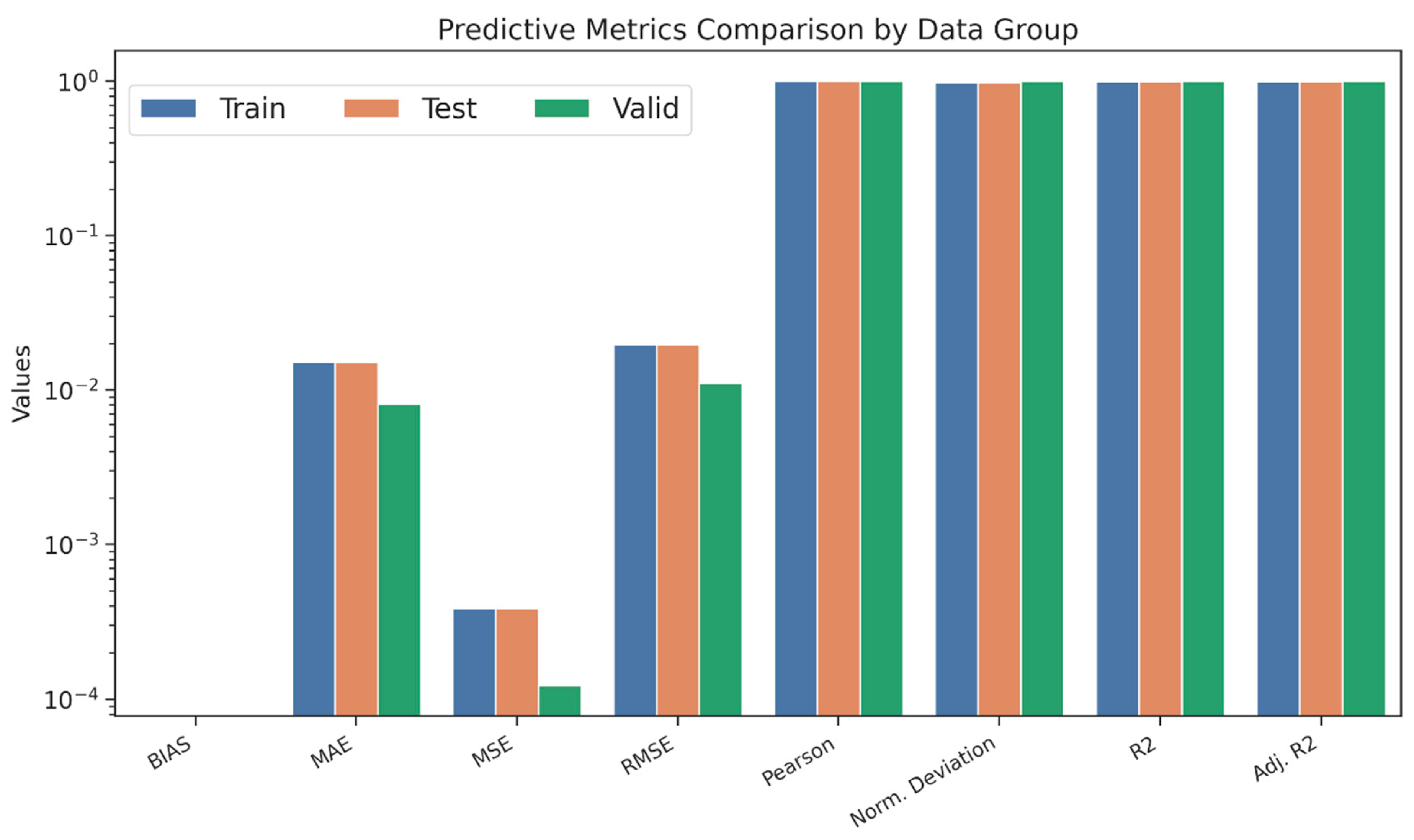

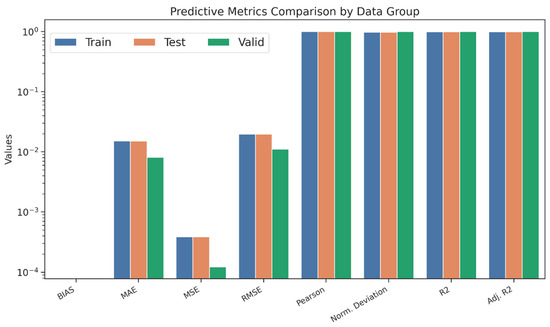

4.2. Performance

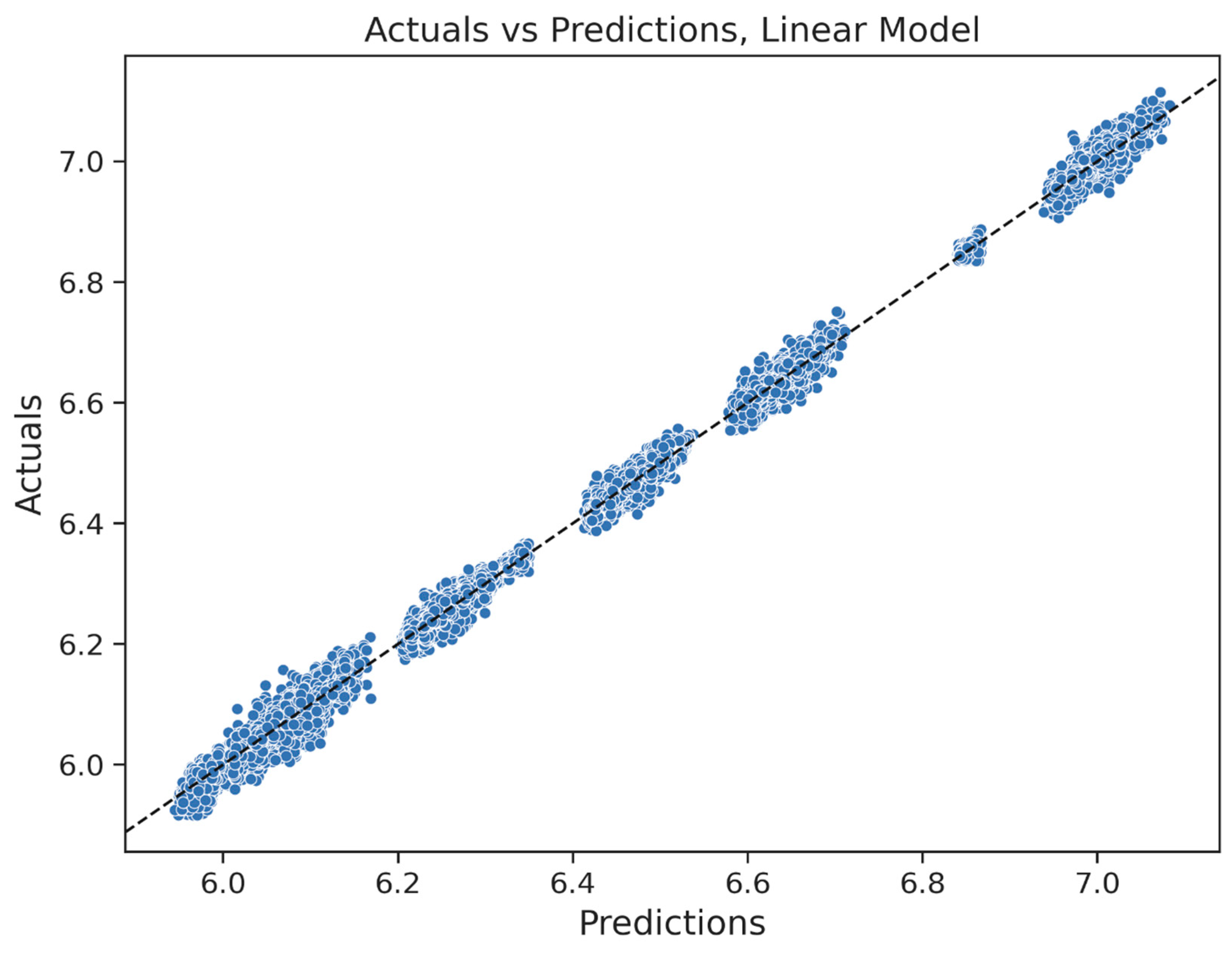

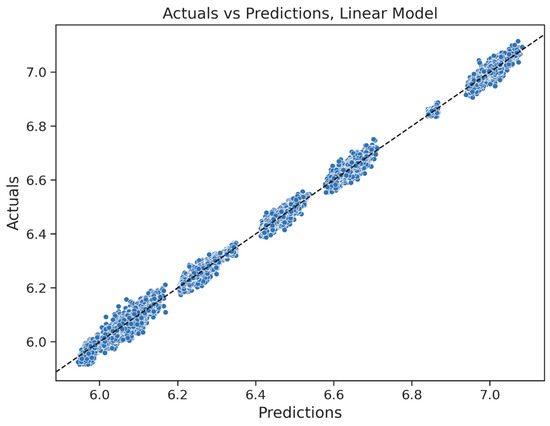

The selected HGBRg model was evaluated on training, testing, and independent validation subsets, with the full set of results reported in Table 16 and Figure 13. Across all partitions, the model achieved R2 and adjusted R2 values consistently above 0.996, with validation performance approaching 0.999, thereby confirming its strong capacity for temporal and out-of-sample generalization. Error measures remained uniformly low, with mean absolute error (MAE) below 0.009 and root mean squared error (RMSE) below 0.02 across training and testing and further reduced to approximately 0.011 in the validation sample. Bias values were close to zero, and Pearson correlation coefficients consistently exceeded 0.99, as depicted in Figure 14, confirming both accuracy and alignment between predictions and observed outcomes.

Table 16.

Comparison of the metrics by dataset.

Figure 14.

Actual target values vs. predictions for the selected linear model.

An additional finding concerned the discretized structure of predictions. The TRS, although modeled as a continuous outcome, produced predicted values clustered around discrete risk strata (e.g., 6.06, 6.25, 6.45, and 6.64). Table 17 demonstrates that predicted group means remained nearly identical to actual means, with standard deviations typically below 0.05. This structural fidelity is particularly relevant for decision-making frameworks that rely on risk brackets or threshold-based governance triggers, since it indicates that the model internalizes and reproduces the categorical logic embedded in the TRS. Moreover, the consistency of these results across household-, regional-, and time-level contexts reinforces the applicability of the RECIR framework for both ex ante risk assessment and scenario-based stress testing.

Table 17.

Mean and standard deviation of the actual and prediction groups.

4.3. Baselines & Temporal Generalization

To assess robustness, the RECIR framework was benchmarked against two baselines: a hedonic linear model using standard housing attributes and a macro-only model restricted to aggregate indicators. In both cases, predictive accuracy was markedly lower than that of the integrated HGBRg framework, underscoring the value of combining micro-level AHS variables, macro-level WDI, and governance-salient TRS indices. Temporal generalization was evaluated by training models on the 2015–2019 subsample and testing in 2021–2023, as well as by conducting leave-one-year-out cross-validation. The results confirmed that validation and test R2 closely tracked training performance with only marginal generalization gaps. Ensemble methods, particularly HGBRg, further reduced error variance relative to both linear and tree-based baselines, demonstrating stability in the face of distributional shifts across time.

4.4. Ablation & Parsimony

Ablation tests were conducted to examine the effect of dimensionality reduction on predictive accuracy. The bottom 25%, 50%, and 75% of predictors ranked by validation-set permutation importance were sequentially removed, and models were re-estimated under identical tuning protocols. The removal of the lowest 25% or 50% of features resulted in a median R2 decline of no more than 2–5%, albeit with increased variance across folds. However, eliminating 75% of predictors produced a sharp deterioration in both accuracy and temporal stability. On this basis, two model specifications were retained: a compact top-k model that achieved at least 95% of the baseline accuracy and a full model that maintained superior stability across temporal splits. The monotonic degradation observed in stepwise ablation confirmed that redundancy buffers exist within the dataset but also emphasized the importance of preserving a sufficiently broad feature set to maintain generalizability. This balance between parsimony and robustness strengthens the interpretability of RECIR while ensuring that the model remains practically deployable across diverse governance-sensitive applications.

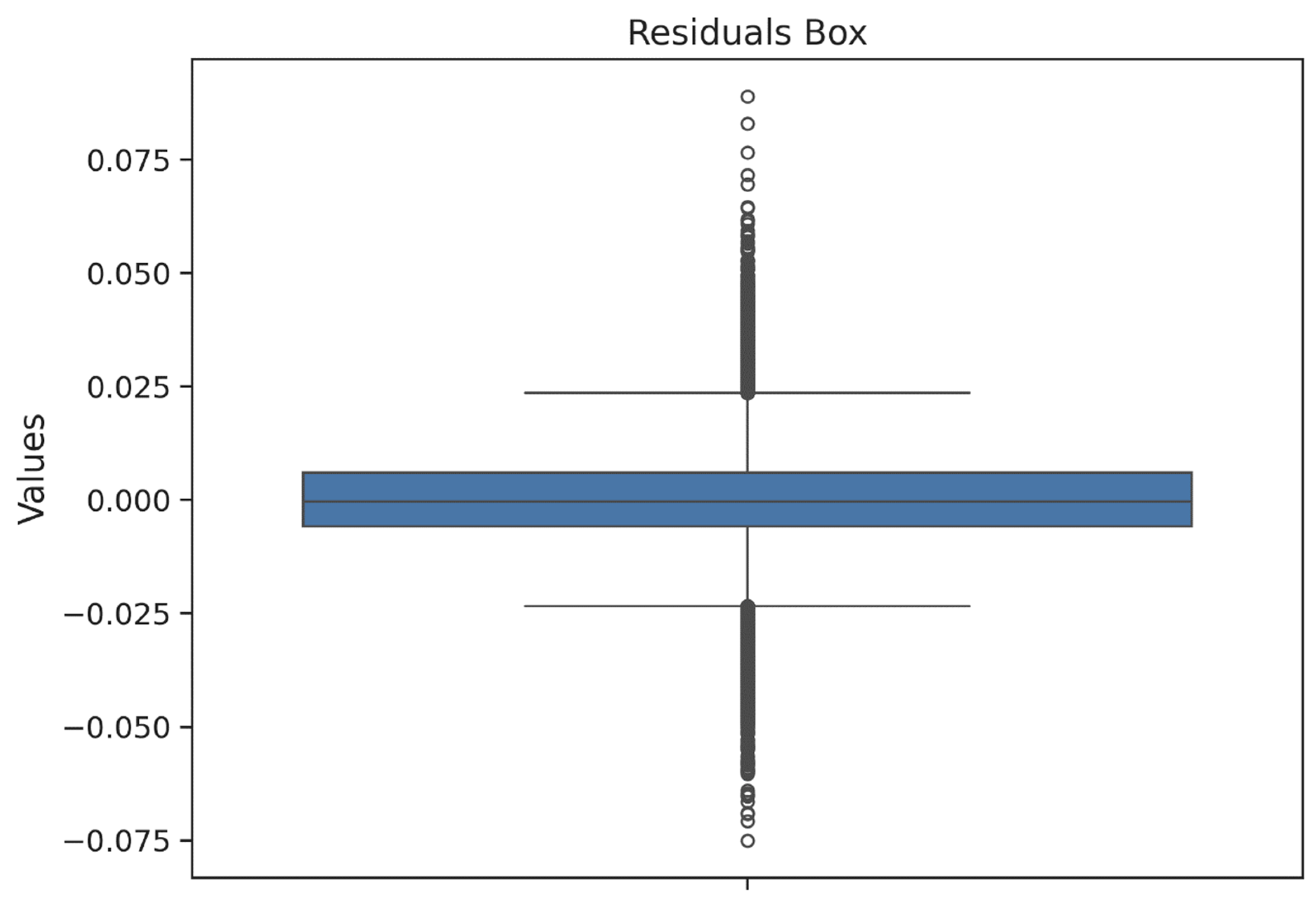

4.5. Predictive Accuracy

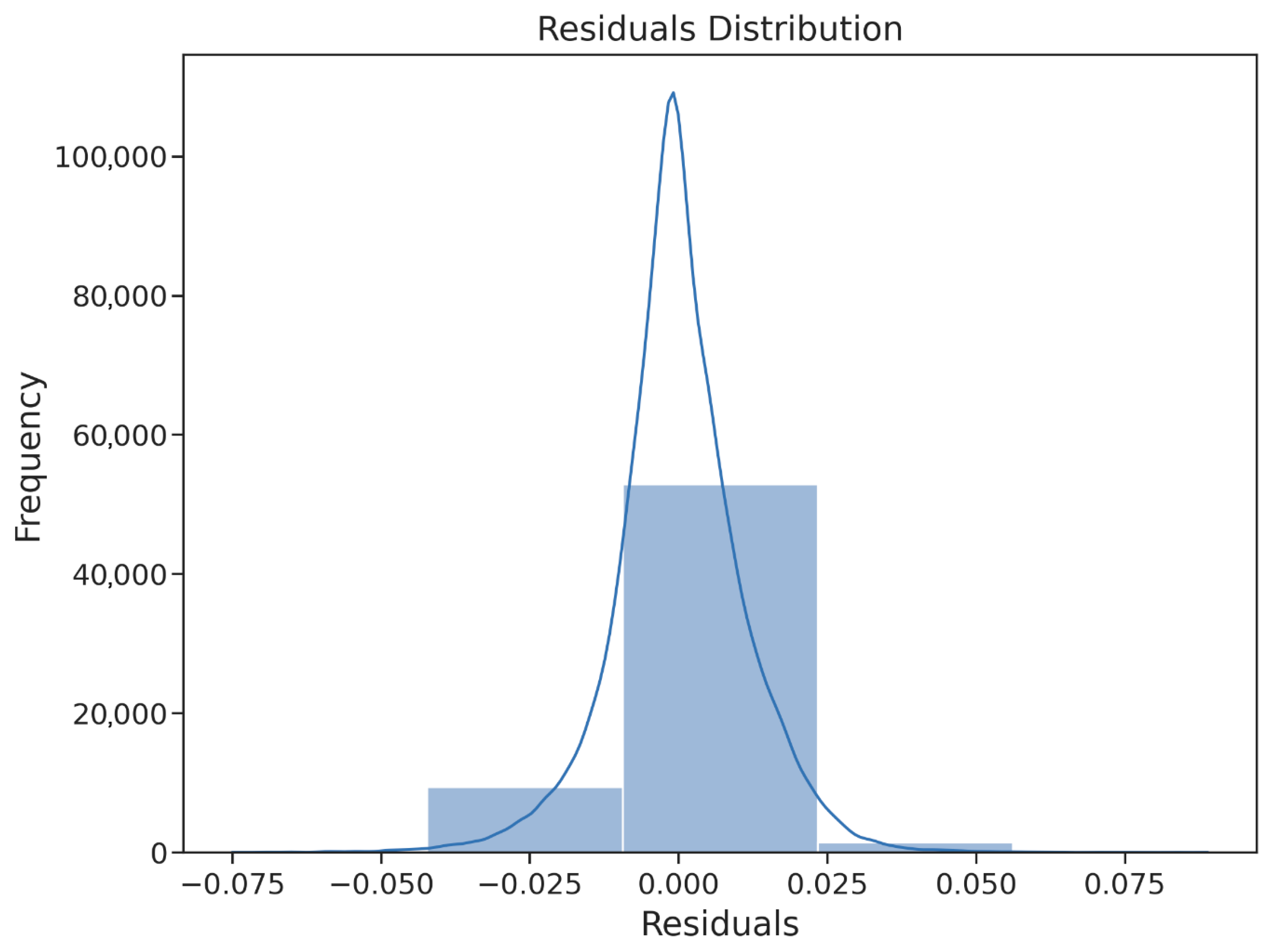

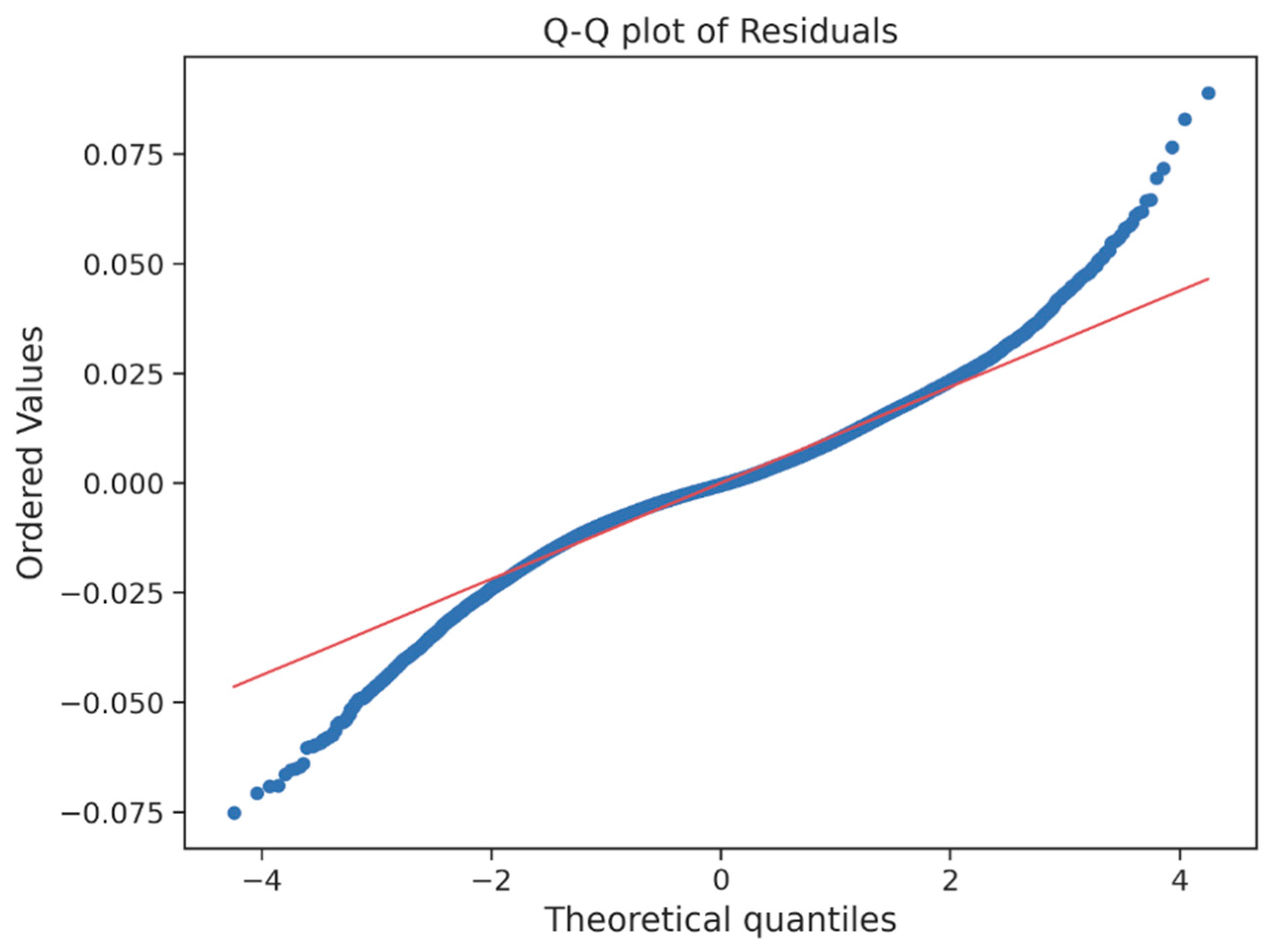

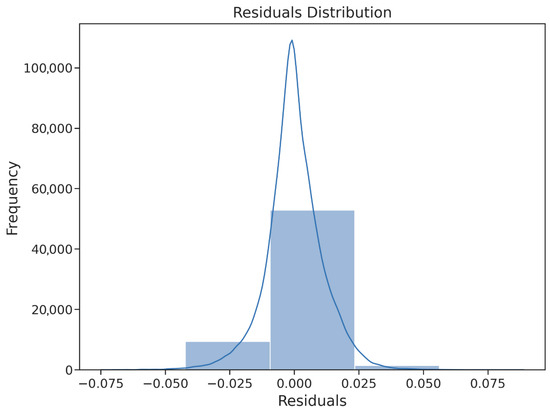

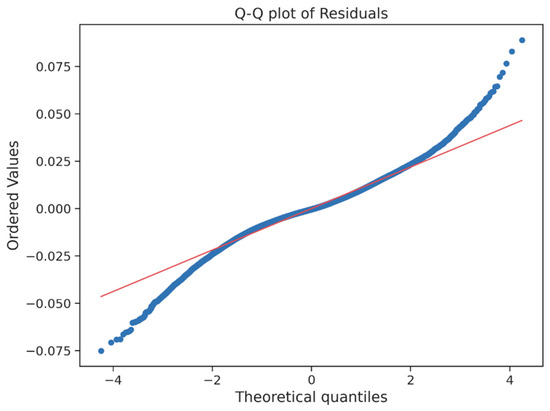

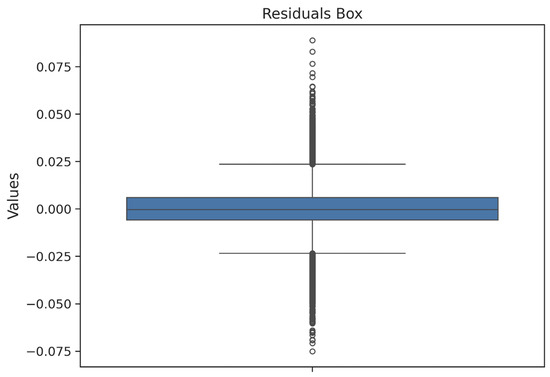

The residual analysis provides strong evidence for the predictive reliability of the final HGBRg model. As illustrated in Figure 15 and summarized in Table 18, the residuals exhibit a symmetric, zero-centered distribution, with more than 90% of values falling between −0.009 and +0.023. This stability across both training and validation partitions confirms the absence of systematic bias. The Q–Q plot (Figure 16) further demonstrates near-perfect alignment with the normal distribution, with only minor deviations at the tails. These results confirm that the residuals satisfy the assumption of normality, thereby supporting the validity of subsequent inference and model reliability.

Figure 15.

Histogram of the residuals (actual prediction).

Table 18.

Bins and histogram values of the distribution of the residuals.

Figure 16.

Quantile–quantile plot of the residuals.

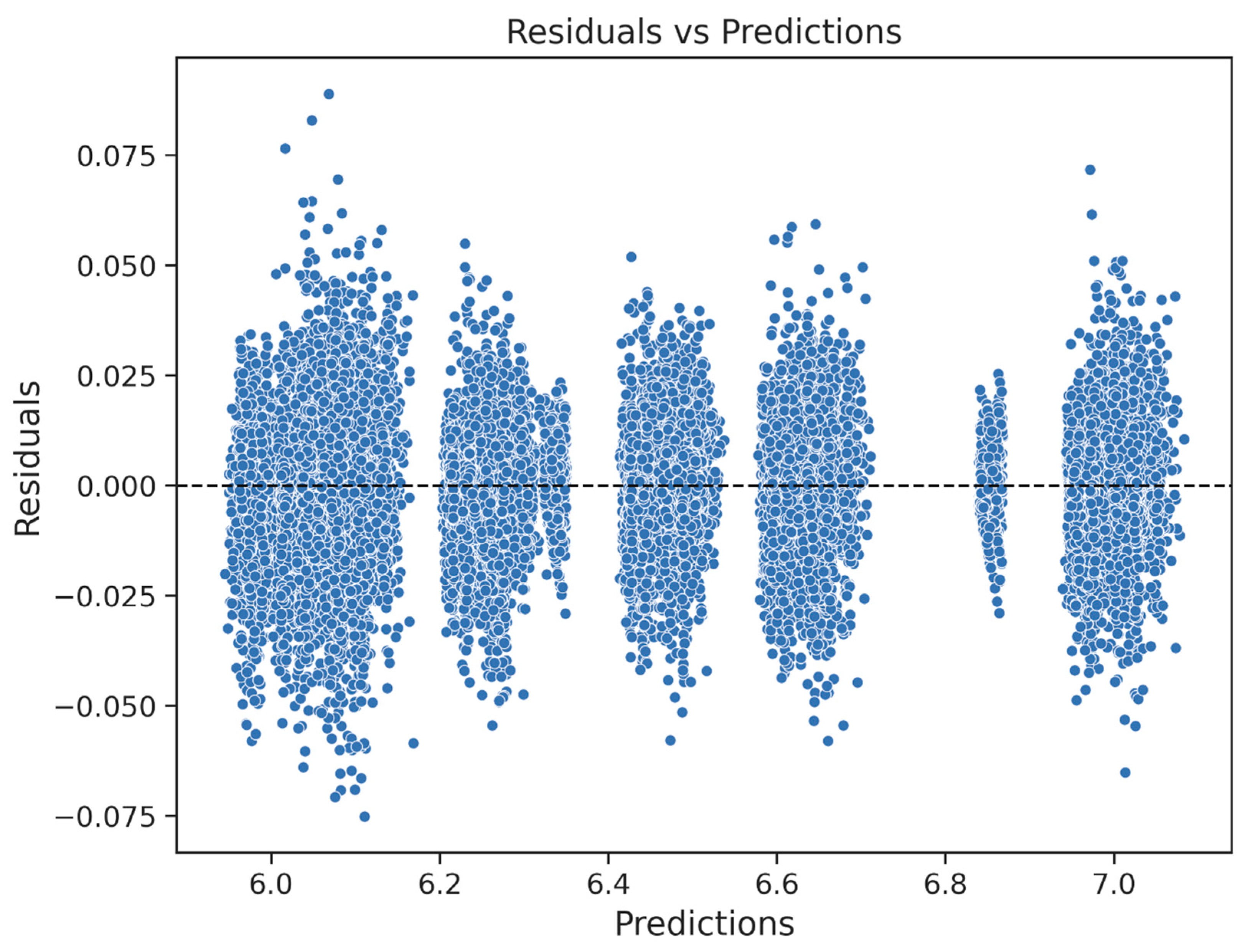

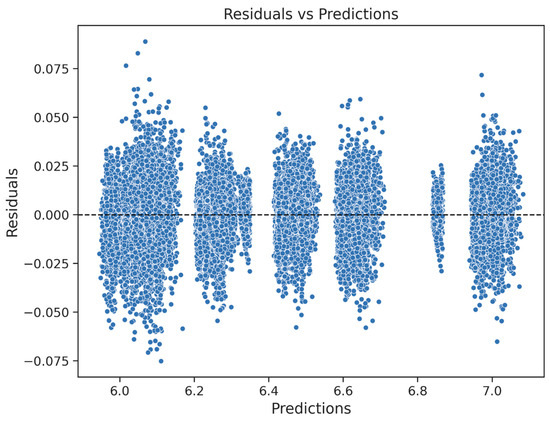

Descriptive statistics reported in Table 19 reinforce this observation: the residual mean approximates zero, the standard deviation remains near 0.011, and skewness and kurtosis are negligible. Aggregate residual metrics (Table 20) confirm minimal prediction errors, with MAE = 0.008, RMSE = 0.011, and bias close to zero. Figure 17 shows residuals plotted against predictions, revealing a uniform scatter with no visible heteroscedasticity, while Table 21 confirms that group-level deviations remain below 1.5 × 10−4. The variability boundaries reported in Table 22 and the compact box plot in Figure 18 underscore the absence of outliers and validate the model’s robustness. Collectively, these findings confirm that the HGBRg framework achieves both high accuracy and predictive stability, supporting its deployment in operational and policy-relevant settings.

Table 19.

Descriptive statistics of the values of the Q–Q plot.

Table 20.

Statistics and metrics of the residuals.

Figure 17.

Residuals and predictions of the selected linear model.

Table 21.

Mean and standard deviation of the residuals and predictions.

Table 22.

Statistics and boundaries of the variability of the residuals.

Figure 18.

Box plot of the residuals for the validation set.

Table 19 presents the descriptive statistics of the Q–Q plot values, confirming that the residuals closely follow the theoretical normal distribution.

Table 20 presents the aggregate residual metrics: MAE = 8.07 × 10−3, RMSE = 1.11 × 10−2, and negligible bias. These metrics indicate that the prediction errors are minimal and centered, with no systematic deviation.

Figure 17 plots the residuals against the predicted values and shows a uniform scatter with no discernible pattern or heteroscedasticity. The residuals by prediction group (Table 21) remain below 1.5 × 10−4, and the standard deviations range from 9.5 × 10−3 to 1.4 × 10−2. These results suggest that prediction uncertainty is minimal and evenly distributed across the output range, thereby supporting the validity and robustness of the model.

No residuals exceed ±4.41 × 10−2, as defined by the variability thresholds in Table 22.

The box plot of residuals in Figure 18 confirms the symmetry and compactness, with a median centered at zero and the interquartile range tightly constrained. No outliers or anomalous deviations are observed.

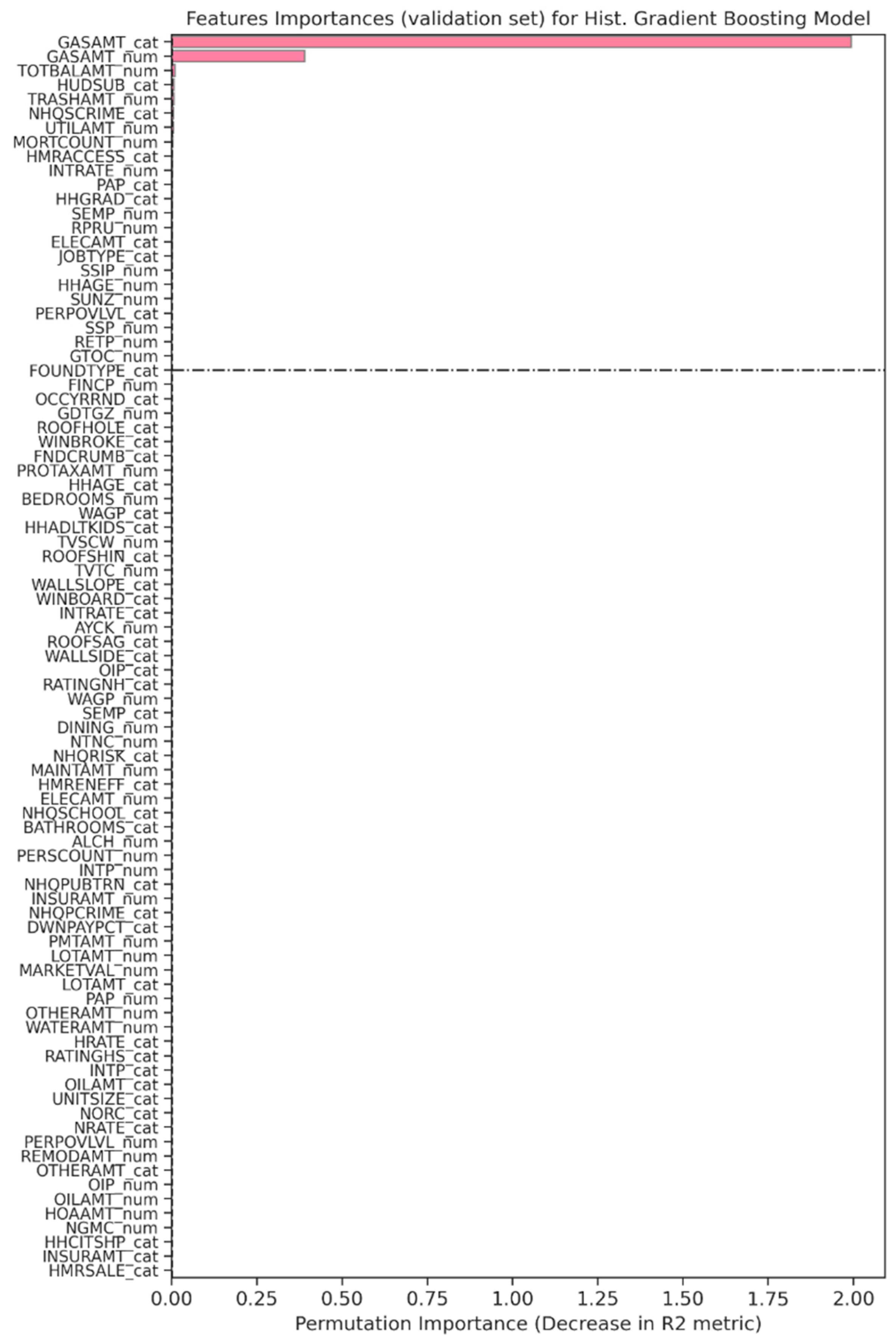

4.6. Computational Efficiency and Feature Importance

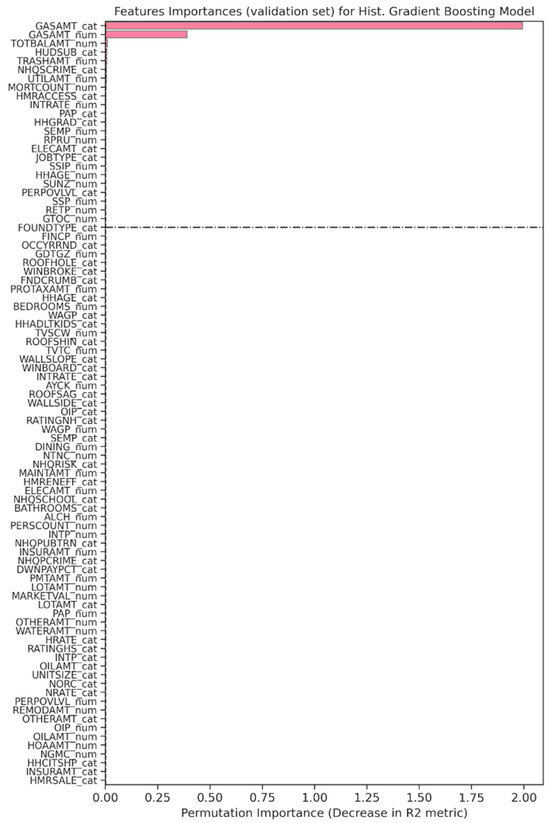

In addition to accuracy, computational efficiency and feature reliance were evaluated to ensure that RECIR remains practical for real-world deployment. Permutation importance, computed over ten validation repetitions, revealed that predictive influence is concentrated within a small subset of features (Figure 19 and Table 23). Variables such as GASAMT_cat and GASAMT_num each contributed more than 2.3% to model performance, with an additional group of thirteen features—including housing condition, utility costs, and socioeconomic indicators—exerting moderate but consistent influence. Collectively, these variables accounted for nearly 16% of the model’s explanatory power and aligned with the TRS risk domain logic. By contrast, over 70% of features contributed to marginal or negligible effects, with a few exhibiting slightly negative importance because of redundancy or noise.

Figure 19.

Permutation importance of the features for the linear model with the best estimator. Bars represent the decrease in R2 when individual features are permuted, thereby quantifying their relative predictive contribution. The dash–dot horizontal line denotes the baseline reference threshold, serving as a cutoff between features with meaningful explanatory power and those with negligible importance.

Table 23.

Ranking of permutation importance.

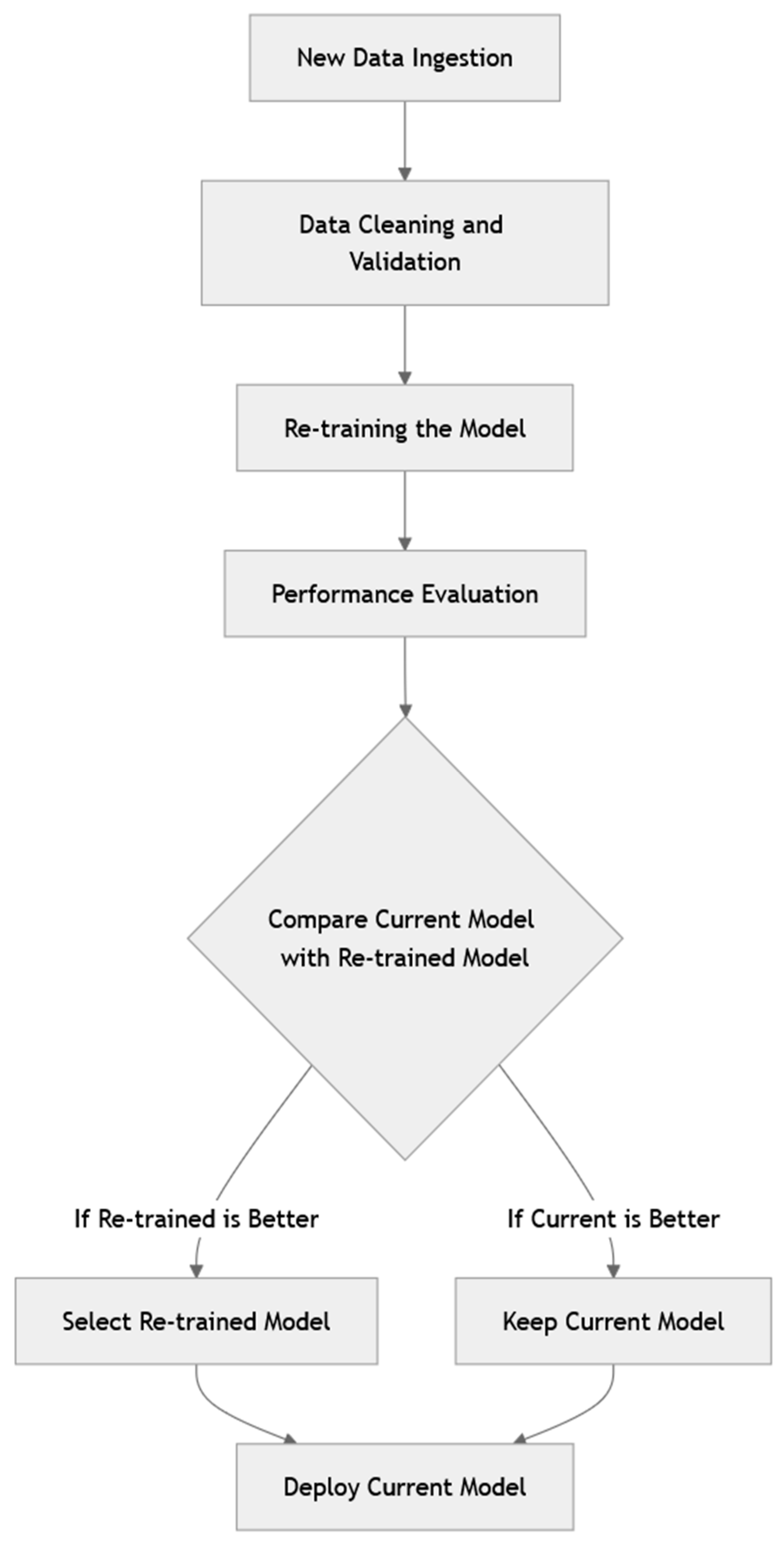

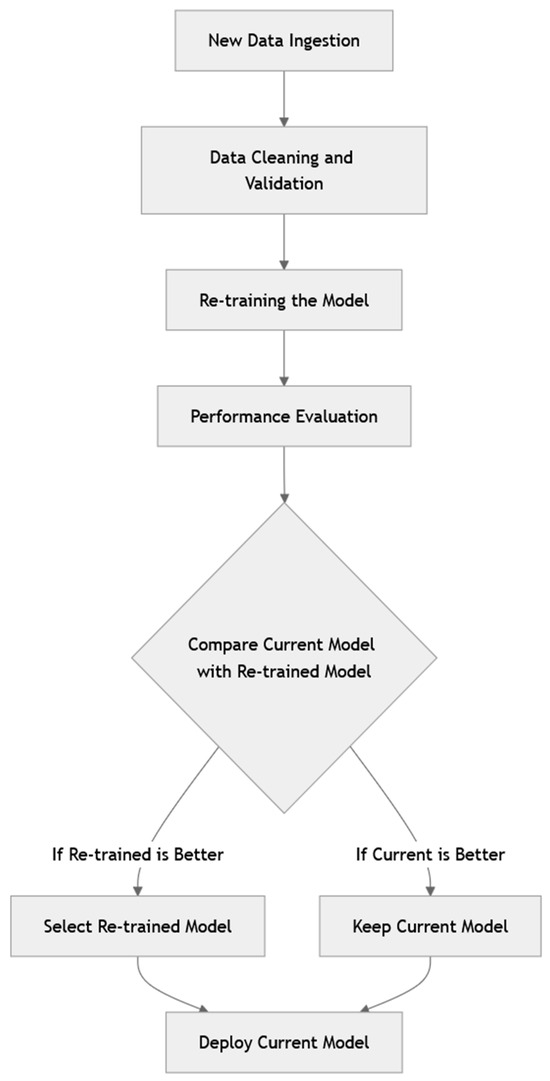

Despite this imbalance, dimensionality reduction was deliberately avoided at this stage. Eliminating weakly influential variables risked destabilizing predictive performance, particularly under unseen market conditions. Maintaining the broader feature space supports generalizability and preserves modular adaptability for future applications. Notably, the prominence of GASAMT-related features suggests their proxy role for affordability, thermal efficiency, and infrastructure reliability, dimensions closely tied to project-level investment risk. Verification procedures ruled out label leakage by re-estimating importance with grouped encoders and year-fixed effects, confirming consistent rankings within one standard error. Finally, a structured updating protocol (Figure 20) was defined to integrate new data, retrain models, and benchmark evolving feature contributions, thereby ensuring the framework remains adaptive and trustworthy as economic and housing dynamics evolve.

Figure 20.

Model updating plan.

4.7. Discussion and Implications

The findings of this study provide both methodological and substantive contributions to the literature on real estate risk assessment. Methodologically, the RECIR framework advances beyond valuation-centric models by incorporating governance-salient variables, such as permitting and inspection delays, contractor integrity, and regulatory exposures, into a unified, auditable Total Risk Score (TRS). This integration demonstrates that risk assessment cannot be confined to market volatility or macroeconomic indicators alone; rather, it requires a multi-dimensional perspective that captures both structural and institutional determinants of investment outcomes. By achieving consistently high predictive accuracy while maintaining interpretability through permutation importance and cross-validation diagnostics, RECIR contributes to ongoing debates on how to balance complexity and transparency in applied machine learning.

From a theoretical standpoint, the model reframes construction-phase investment risk as a multi-domain construct situated at the intersection of economics, law, and governance. This reconceptualization expands the analytical scope of real estate finance research, which has historically emphasized price forecasting, by embedding regulatory compliance, forensic risk evaluation, and explainability as first-class components of the analytical process. The framework’s macro-to-micro translation mechanism, which links aggregate indicators from the World Development Indicators to unit-level estimates from the American Housing Survey, provides a novel methodological pathway for aligning systemic conditions with granular investment decisions.

Practically, the model’s robustness across temporal splits and its validation against baseline comparators suggest immediate utility for lenders, developers, and policymakers. Financial institutions can apply the TRS in underwriting and portfolio risk management, while regulators may use it as a diagnostic tool to monitor systemic vulnerabilities. The explainability of the outputs further enhances accountability, allowing stakeholders to trace predictions back to legally meaningful variables, which is critical in governance-sensitive contexts such as urban renewal projects or cross-border investment transactions.

Finally, the study addresses broader debates in AI governance by illustrating that high-performing models can remain auditable, interpretable, and compliant with emerging legal frameworks such as the EU AI Act. In this way, RECIR contributes not only to the advancement of real estate risk management but also to the responsible deployment of AI in high-stakes financial domains. Permutation-based feature importance analysis highlights GASAMT_cat as a dominant predictor. This reflects aggregate financing obligations across construction phases, serving as a proxy for liquidity pressure and default risk.

Finally, Figure 20 presents the model updating plan for future iterations. It specifies the procedures for integrating new data, retraining, performance benchmarking, and periodically reassessing the contributions of the feature. This plan ensures that the model remains adaptive and trustworthy because economic and housing dynamics evolve.

5. Conclusions and Future Research Directions

This study introduced the RECIR model as a next-generation framework for evaluating real estate investment risk, designed to integrate micro-level housing data, macroeconomic indicators, and governance-salient regulatory factors into a unified AI-based risk assessment architecture. By combining advanced machine learning algorithms, explainable AI techniques, and regulatory alignment mechanisms, the RECIR significantly enhances predictive accuracy, interpretability, and adaptability relative to traditional econometric approaches. Comparative evidence across Table 10, Table 11, Table 12, Table 13, Table 14 and Table 15 and the summary in Table 24 demonstrates that the model not only reduces forecast error but also provides superior transparency and resilience, thereby offering a methodological contribution to both academic research and professional practice.

Table 24.

Comparison of the RECIR with traditional risk assessment models.

A central strength of the RECIR lies in its ability to incorporate unstructured and high-frequency data sources—including IoT-based property monitoring, real-time financial indicators, and natural language processing of legal documentation—while maintaining alignment with regulatory standards such as the GDPR, the AI Act, and the U.S. Fair Housing Act. This capacity ensures that the model remains operationally scalable and legally defensible, addressing one of the main criticisms in the editor’s review regarding the gap between predictive performance and regulatory compliance. Furthermore, the integration of forensic AI techniques enables early detection of anomalies and fraud, reinforcing the model’s contribution to governance and investor protection.

Despite these advancements, several limitations remain and must be acknowledged explicitly. First, although the RECIR reduces reliance on historical data, the model’s performance may still be challenged in highly volatile markets where structural breaks occur. Second, while the inclusion of environmental and legal indices enhances interpretability, the weighting of such variables may vary across jurisdictions, potentially limiting cross-country generalization. Third, as noted by the reviewers, algorithmic transparency remains a practical challenge: even with explainable AI tools, full interpretability is not always attainable when handling complex ensemble models. Recognizing these limitations responds directly to the editorial request for a balanced discussion of constraints.

Future research directions are therefore threefold. Methodologically, advances in reinforcement learning and continuous training in architecture could further strengthen the model’s responsiveness to market shocks. Comparative studies that evaluate the RECIR alongside hybrid econometrics–AI frameworks would provide empirical evidence of its relative efficiency and robustness across contexts. Substantively, future work should examine the socioeconomic consequences of AI-driven risk models, particularly their impact on investment allocation, housing affordability, and market stability. Technologically, expanding integration with blockchain-verified transactions and decentralized edge-computing infrastructures could address both transparency and cybersecurity concerns. These avenues of inquiry ensure that RECIR remains adaptable to emerging data ecosystems and evolving governance requirements. Although benchmark datasets were not used to evaluate RECIR, we acknowledge this as a limitation. Future research should validate the framework against standardized repositories to strengthen generalizability and facilitate comparative evaluation.

In summary, RECIR contributes to academic literature by unifying heterogeneous data sources under AI-driven, regulation-aware architecture and provides actionable tools for practitioners tasked with managing complex, multi-dimensional risks. Its ability to balance predictive power with regulatory compliance positions it as a pioneering framework for real estate finance. Nevertheless, ongoing refinement and critical evaluation remain essential for realizing its full potential. Future research must continue to align technological innovation with ethical, legal, and social considerations to ensure that AI-driven risk models not only improve prediction but also foster transparency, trust, and fairness across global real estate markets.

A limitation of this study is the absence of external benchmark datasets for model validation. Future research should explicitly test RECIR against standardized repositories to strengthen comparability and external validity.

Author Contributions

Conceptualization, A.L.; methodology, A.L.; formal analysis, A.L.; investigation, A.L.; data curation, A.L.; writing—original draft preparation, A.L.; writing—review and editing, A.L., L.C.L.d.R. and N.C.V.; supervision, L.C.L.d.R. and N.C.V.; project administration, L.C.L.d.R. and N.C.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was approved by the Institutional Review Board of the University of Cordoba. This study was conducted in accordance with the ethical guidelines of the 1964 Declaration of Helsinki, its subsequent amendments, and similar ethical standards.

Informed Consent Statement

All the participants provided oral consent to include their data in the research and development of the model. No identifiable personal details were obtained.

Data Availability Statement