Toward Intelligent AIoT: A Comprehensive Survey on Digital Twin and Multimodal Generative AI Integration

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Research Questions

- RQ1 (Conceptual Complementarity): How can the distinctive roles of DTs and GAI be conceptualized as complementary pillars of AIoT intelligence, and what theoretical framework can unify their contributions to virtualization and cognition?

- RQ2 (System-Level Challenges): What domain-independent advantages, limitations, and recurring system-level challenges (e.g., efficiency, reliability, privacy, standardization) can be synthesized from a cross-literature comparison of DT and GAI approaches?

- RQ3 (Formal Models and Evaluation): In what ways can DT–GAI interaction be formalized through functional or probabilistic models and optimization frameworks, and which mathematical indicators provide rigorous criteria for evaluating robustness, complexity, cross-modal accuracy, and twin–reality consistency?

1.3. Contributions

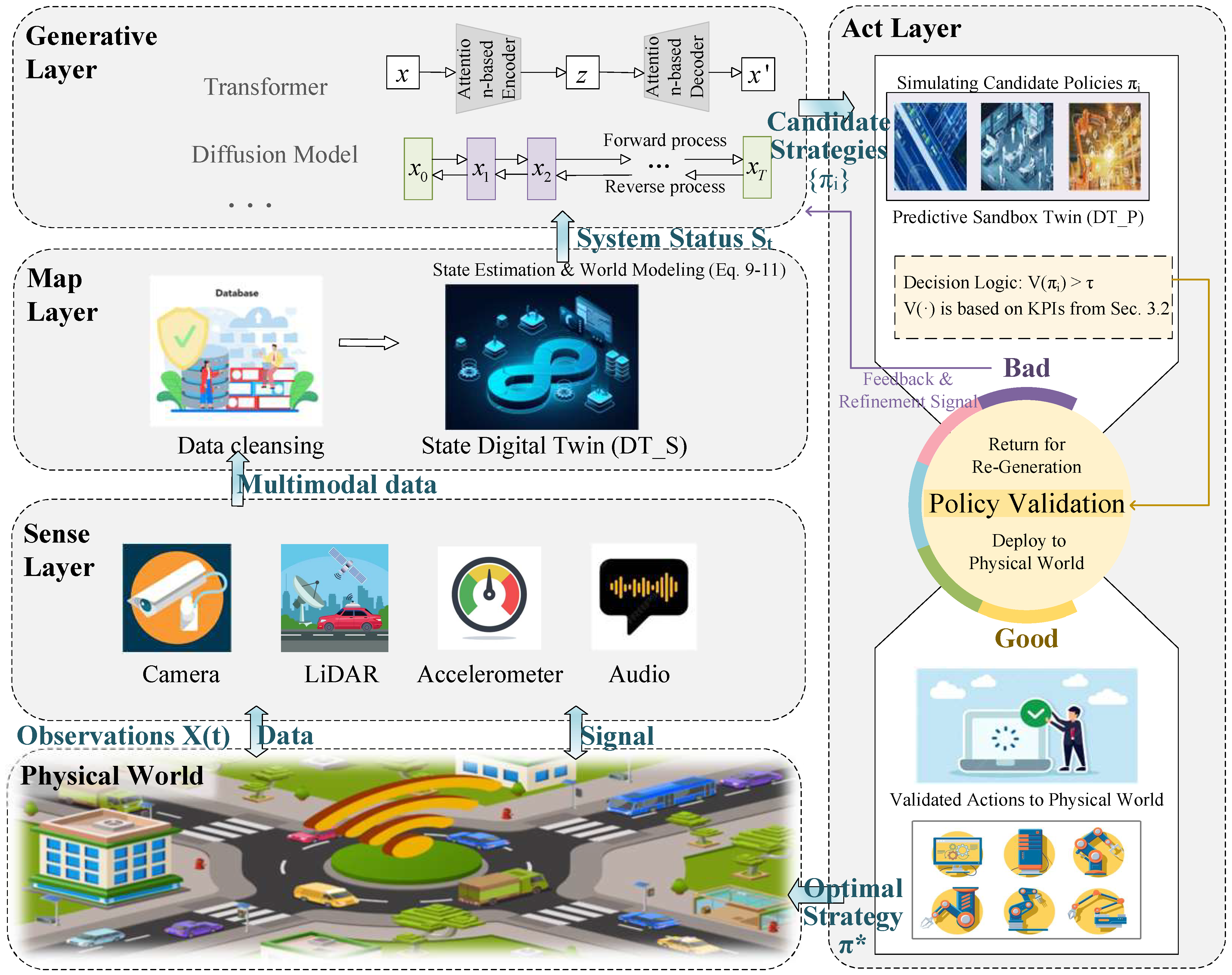

- Addressing RQ1 (Complementarity). We systematically review how DTs and GAI tackle different aspects of AIoT intelligence—DTs providing structured and temporally grounded digital replicas, and GAI contributing semantic reasoning, synthetic data generation, and cross-modal adaptation. We synthesize these insights into a unified framework, which is illustrated through mechanisms such as the Sense–Map–Generate–Act (SMGA) loop.

- Addressing RQ2 (Comparative Challenges). We conduct a critical review of representative approaches—GANs, diffusion models, and large language models (LLMs)—and highlight their distinct strengths and limitations across domains such as manufacturing, healthcare, and transportation. We identify common challenges including efficiency, reliability, privacy, and standardization.

- Addressing RQ3 (Mathematical Formalization and Evaluation). We move beyond descriptive accounts by proposing functional and probabilistic models of DT–GAI interaction, formulating optimization problems under edge resource constraints, and consolidating evaluation practices into a set of mathematical indicators for robustness, complexity, accuracy, and twin–reality consistency.

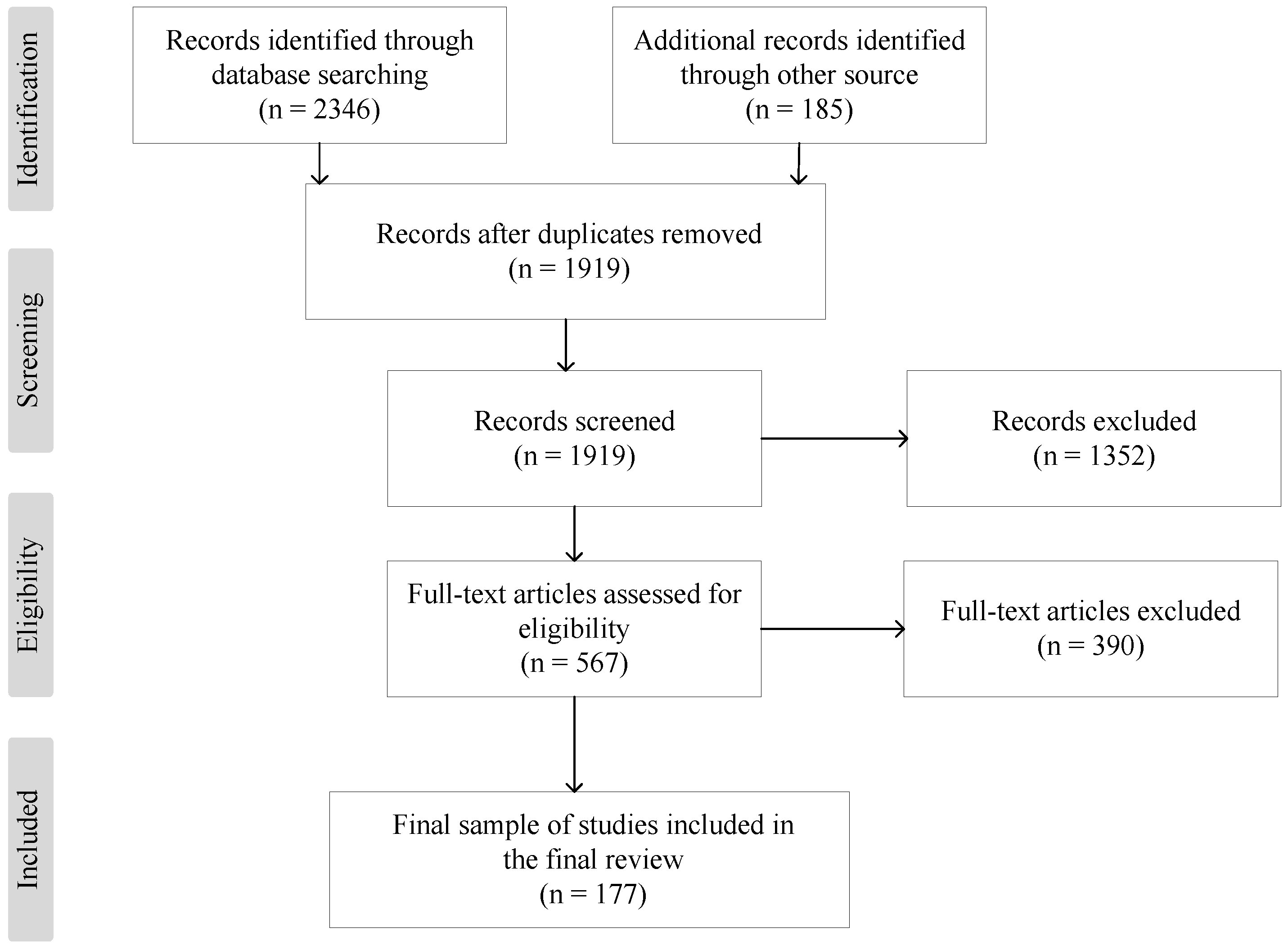

1.4. Literature Search and Screening Process

("digital twin" OR "DT") AND ("generative AI" OR "GAI" OR "multimodal")

AND ("AIoT" OR "IoT" OR "edge computing")

AND ("pruning" OR "quantization" OR "knowledge distillation")
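The search string uses OR inside each parenthesized group and AND between groups, so a record is retained only if it matches at least one term from every group. The minimal Python sketch below illustrates that logic on title or abstract text. The case-insensitive whole-word matching and the helper names (`TERM_GROUPS`, `term_found`, `matches_query`, `build_query_string`) are illustrative assumptions. They do not describe how any particular bibliographic database evaluates queries.

```python
import re

# Term groups copied from the search string: OR within a group, AND across groups.
TERM_GROUPS = [
    ["digital twin", "DT"],
    ["generative AI", "GAI", "multimodal"],
    ["AIoT", "IoT", "edge computing"],
    ["pruning", "quantization", "knowledge distillation"],
]


def term_found(term: str, text: str) -> bool:
    """Case-insensitive whole-word (or whole-phrase) match of one search term."""
    pattern = r"\b" + re.escape(term) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def matches_query(text: str, groups=TERM_GROUPS) -> bool:
    """True only if every group contributes at least one matching term."""
    return all(any(term_found(t, text) for t in group) for group in groups)


def build_query_string(groups=TERM_GROUPS) -> str:
    """Render the term groups back into the Boolean search string."""
    return " AND ".join(
        "(" + " OR ".join(f'"{t}"' for t in group) + ")" for group in groups
    )


if __name__ == "__main__":
    records = [
        "A digital twin with generative AI for IoT devices using quantization",
        "A digital twin for IoT monitoring",  # lacks a GAI term and a compression term
    ]
    for r in records:
        print(matches_query(r), "|", r)
    print(build_query_string())
```

The word-boundary pattern keeps "IoT" from matching inside "AIoT". Real databases apply their own tokenization and field rules, so this sketch conveys only the Boolean structure of the query.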

2. Foundations: Digital Twins and Multimodal Generative AI

2.1. DTs in AIoT

2.2. Multimodal Generative AI: The Rise of Contextual Understanding and Creation

2.3. GAI as Cognitive Augmentation Layer for ML-Based DT

2.4. The Symbiosis: Why DT and GAI Are Complementary Partners

3. A Framework for Integration

3.1. The Proposed SMGA Architecture for AIoT

3.1.1. Sense Layer

3.1.2. Map Layer

3.1.3. Generate Layer

3.1.4. Act Layer

- Simulation: It receives the set of candidate strategies from the Generate Layer. Within the risk-free DT_P environment, each strategy is simulated to predict its potential outcomes and consequences on the system.

- Validation: Following simulation, a Policy Validation Module scores each strategy $s$ with a composite validation function $V(s)$. This function aggregates the Key Performance Indicators (KPIs) defined in Section 3.2, such as accuracy (Equation (16)), robustness (Equation (24)), and computational efficiency (Equation (29)).

- Decision: A strategy is deemed "valid" if its score exceeds a predefined acceptance threshold $\tau$, that is, $V(s) > \tau$. The highest-scoring valid strategy, $s^{*} = \arg\max_{s:\,V(s) > \tau} V(s)$, is selected as the optimal strategy and translated into executable instructions for the actuators in the physical world.

- Feedback and Refinement: Conversely, strategies that fail validation ($V(s) \le \tau$) are rejected. Information about each failure, for instance which specific KPIs fell short, is compiled into a refinement signal and fed back to the Generate Layer. This feedback steers the model toward strategies that correct the shortcomings of previous attempts in subsequent iterations.
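The Simulation, Validation, Decision, and Feedback steps amount to a generate-and-test loop. The Python sketch below shows one minimal, hypothetical version of that loop and is not the survey's implementation. `simulate` is a toy stand-in for a rollout in the predictive twin, and `validate` is one possible validation function, a weighted sum of normalized KPIs. `generate` stands in for the Generate Layer. All function names, KPI weights, the toy KPI model, and the threshold values are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical weights for collapsing KPIs (each already normalized to [0, 1]) into one score.
KPI_WEIGHTS = {"accuracy": 0.5, "robustness": 0.3, "efficiency": 0.2}


@dataclass
class Strategy:
    name: str
    aggressiveness: float  # toy control knob proposed by the Generate Layer


def simulate(strategy: Strategy) -> dict:
    """Stand-in for a rollout in the predictive twin: returns predicted KPIs.
    Toy trade-off: aggressive strategies gain accuracy but lose robustness."""
    a = strategy.aggressiveness
    return {
        "accuracy": min(1.0, 0.6 + 0.4 * a),
        "robustness": max(0.0, 1.0 - 0.8 * a),
        "efficiency": 0.7,
    }


def validate(kpis: dict) -> float:
    """One possible validation function: a weighted sum of normalized KPIs."""
    return sum(w * kpis[name] for name, w in KPI_WEIGHTS.items())


def generate(round_idx: int, feedback: set) -> list:
    """Stand-in for the Generate Layer: halves aggressiveness once robustness has failed."""
    scale = 0.5 if "robustness" in feedback else 1.0
    return [Strategy(f"r{round_idx}-s{i}", scale * a) for i, a in enumerate((0.9, 1.0))]


def act_layer(threshold: float = 0.71, kpi_floor: float = 0.5, max_rounds: int = 3):
    """Simulate -> validate -> decide, refining until a strategy passes or rounds run out."""
    feedback: set = set()
    for r in range(max_rounds):
        scored = []
        for s in generate(r, feedback):
            kpis = simulate(s)
            scored.append((validate(kpis), s, kpis))
        valid = [item for item in scored if item[0] > threshold]
        if valid:
            best_score, best, _ = max(valid, key=lambda item: item[0])
            return best, best_score, r
        # Refinement signal: names of KPIs that fell below the floor in rejected strategies.
        feedback |= {k for _, _, kpis in scored for k, v in kpis.items() if v < kpi_floor}
    return None, None, max_rounds
```

With the defaults, `act_layer()` rejects both first-round proposals because their robustness falls below the floor. It then feeds `{"robustness"}` back and accepts the more conservative strategy `r1-s0` in the second round, with a score of about 0.722. The refinement signal names the failed KPIs instead of returning a bare pass/fail flag. That extra information is what lets the generator adjust its next proposals in a targeted way.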

3.2. Quantitative Evaluation Metrics for DT–GAI Integration

3.2.1. Accuracy and Performance Metrics

- Classification Metrics: For classification tasks, common metrics include accuracy, precision, recall, and F1-score. Accuracy measures the proportion of correctly predicted instances over the total number of samples:

  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

  where $TP$ denotes the number of true positives, $TN$ the number of true negatives, $FP$ the number of false positives, and $FN$ the number of false negatives. Precision quantifies the fraction of correctly predicted positive instances among all predicted positives:

  $$\mathrm{Precision} = \frac{TP}{TP + FP}.$$

  Recall evaluates the fraction of actual positive instances that are correctly identified:

  $$\mathrm{Recall} = \frac{TP}{TP + FN}.$$

  The F1-score, the harmonic mean of precision and recall, balances the trade-off between false positives and false negatives:

  $$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$

  Threshold-independent metrics such as the ROC-AUC (area under the Receiver Operating Characteristic curve) and the PR-AUC (area under the Precision–Recall curve) are also widely adopted to compare classifiers across varying decision thresholds.

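- Regression Metrics: For regression or continuous prediction tasks, commonly used metrics include the Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and the coefficient of determination ($R^2$). These metrics are defined as follows:

  $$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2, \qquad \mathrm{RMSE} = \sqrt{\mathrm{MSE}},$$

  $$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left\lvert y_i - \hat{y}_i\right\rvert, \qquad R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2},$$

  where $y_i$ and $\hat{y}_i$ denote the ground-truth and predicted values of the $i$-th sample, respectively, $\bar{y}$ is the mean of the true values, and $n$ is the total number of samples.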

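- Multi-Task and Composite Metrics: In scenarios where DT–GAI frameworks handle multiple tasks simultaneously, composite metrics that aggregate performance across tasks are often adopted. A typical choice is a weighted average of task-specific scores, $M_{\text{comp}} = \sum_{t=1}^{T} w_t M_t$ with $\sum_{t=1}^{T} w_t = 1$, where $M_t$ is the chosen metric (e.g., F1-score or accuracy) for task $t$. Such scores provide a holistic evaluation across heterogeneous datasets or modalities, with the weights $w_t$ determining how much each task contributes.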
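The accuracy and performance metrics listed above each reduce to a few lines of arithmetic. The plain-Python sketch below computes them for a binary classification task and a small regression task, assuming label `1` marks the positive class. Two choices are assumptions and not taken from the survey. Precision, recall, and F1 are set to 0 when their denominators are zero, and the composite score normalizes arbitrary positive weights so that they sum to 1. All example values are made up.

```python
import math


def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # zero-division convention: 0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def regression_metrics(y_true, y_pred):
    n = len(y_true)
    mean_y = sum(y_true) / n
    sse = sum((y - yh) ** 2 for y, yh in zip(y_true, y_pred))  # residual sum of squares
    sst = sum((y - mean_y) ** 2 for y in y_true)  # total sum of squares
    mse = sse / n
    return {
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(y - yh) for y, yh in zip(y_true, y_pred)) / n,
        "r2": 1.0 - sse / sst,
    }


def composite_score(task_scores, weights):
    """Weighted average of per-task scores; weights are normalized to sum to 1."""
    total = sum(weights.values())
    return sum(weights[t] * task_scores[t] for t in weights) / total


if __name__ == "__main__":
    print(classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
    print(regression_metrics([3.0, 5.0, 7.0], [2.5, 5.0, 8.0]))
    print(composite_score({"detection": 0.9, "forecast": 0.6}, {"detection": 2, "forecast": 1}))
```

For the toy classification labels there are two true positives, two true negatives, one false positive, and one false negative. Accuracy, precision, recall, and F1 therefore all equal 2/3. In the regression example, $R^2 = 1 - 1.25/8 = 0.84375$. A production pipeline would more likely call an established metrics library than hand-rolled functions like these.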

3.2.2. Robustness Metrics

- Sensitivity to Noise or Outliers: One common approach is to inject controlled noise or simulated outliers into the input data and observe the resulting performance degradation. Let $P_{\text{clean}}$ denote the model's performance on clean data and $P_{\text{noisy}}$ its performance on noisy data. The degradation rate can be calculated as

  $$D = \frac{P_{\text{clean}} - P_{\text{noisy}}}{P_{\text{clean}}} \times 100\%.$$

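- Adversarial Robustness: Adversarial robustness evaluates the model's resistance to adversarial examples crafted to induce incorrect predictions. Common metrics include the adversarial success rate and the accuracy on perturbed inputs. Formally, if $\hat{y}_i^{\text{adv}} = f(x_i^{\text{adv}})$ is the prediction of model $f$ on an adversarial input $x_i^{\text{adv}}$, the adversarial accuracy is

  $$\mathrm{Acc}_{\text{adv}} = \frac{1}{n}\sum_{i=1}^{n}\mathbb{1}\left[\hat{y}_i^{\text{adv}} = y_i\right],$$

  where $\mathbb{1}[\cdot]$ is the indicator function and $y_i$ is the true label.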

- Model Stability Metrics: Stability metrics evaluate how consistently a model performs across repeated experiments or cross-validation folds. One widely used measure is the variance of performance across $k$-fold cross-validation:

  $$\sigma^2 = \frac{1}{k}\sum_{j=1}^{k}\left(P_j - \bar{P}\right)^2,$$

  where $P_j$ is the performance metric (e.g., accuracy or F1-score) on the $j$-th fold and $\bar{P}$ is the mean performance across all folds. Another indicator is the gap between training and testing performance:

  $$\Delta_{\text{gen}} = P_{\text{train}} - P_{\text{test}}.$$

  A large positive gap signals overfitting, whereas uniformly low training and testing scores point to underfitting.
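The robustness indicators above can be exercised end to end on a toy model. The following sketch uses a hand-set linear classifier and crafts adversarial inputs with the fast gradient sign method (FGSM). For the logistic loss, the input gradient has the closed form $(\sigma(w^\top x + b) - y)\,w$. The survey does not prescribe a particular attack. FGSM, the weights, the data, and the perturbation budget `eps` are assumptions chosen only to make the metrics concrete. The relative form of the degradation rate and the divide-by-$k$ fold variance are likewise assumptions, and some works divide by $k-1$ instead.

```python
import math
import statistics


def predict_linear(x, w, b):
    """Binary prediction of a linear classifier: 1 if w.x + b > 0."""
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


def fgsm_linear(x, y, w, b, eps):
    """FGSM for logistic regression: x + eps * sign(dLoss/dx),
    where dLoss/dx = (sigmoid(w.x + b) - y) * w."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    prob = 1.0 / (1.0 + math.exp(-s))
    grad = [(prob - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def accuracy(y_true, y_pred):
    """Mean of the indicator 1[y_pred == y_true]; on adversarial inputs this is Acc_adv."""
    return sum(int(p == t) for t, p in zip(y_true, y_pred)) / len(y_true)


def degradation_rate(p_clean, p_noisy):
    """Relative performance drop under perturbation, in percent."""
    return 100.0 * (p_clean - p_noisy) / p_clean


def fold_variance(fold_scores):
    """Variance of per-fold scores, dividing by k (population variance)."""
    return statistics.pvariance(fold_scores)


def generalization_gap(p_train, p_test):
    """Train-minus-test performance; a large positive value suggests overfitting."""
    return p_train - p_test


if __name__ == "__main__":
    w, b = [1.0, -1.0], 0.0
    X = [[0.3, 0.1], [0.9, 0.1], [0.1, 0.6]]
    y = [1, 1, 0]
    clean = accuracy(y, [predict_linear(x, w, b) for x in X])
    X_adv = [fgsm_linear(x, t, w, b, eps=0.2) for x, t in zip(X, y)]
    adv = accuracy(y, [predict_linear(x, w, b) for x in X_adv])
    print(clean, adv, degradation_rate(clean, adv))
    print(fold_variance([0.80, 0.82, 0.78, 0.81, 0.79]), generalization_gap(0.95, 0.80))
```

With `eps=0.2`, only the first sample sits close enough to the decision boundary to be flipped. Clean accuracy is therefore 1.0 and adversarial accuracy drops to 2/3, a degradation of roughly 33%. Deep models would use automatic differentiation to obtain the input gradient instead of a closed form.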

3.2.3. Cross-Modal and Multi-Source Metrics

- Consistency Across Modalities: Consistency metrics measure agreement between predictions or representations derived from different modalities. For two modalities A and B, a simple consistency score can be calculated as

  $$C_{AB} = \frac{1}{n}\sum_{i=1}^{n}\mathbb{1}\left[\hat{y}_i^{A} = \hat{y}_i^{B}\right],$$

  where $\hat{y}_i^{A}$ and $\hat{y}_i^{B}$ are the predictions from modalities A and B for sample $i$.

- Domain Adaptation Accuracy: Cross-domain accuracy evaluates how well the model generalizes when applied to a target domain that differs from the training domain. Let $P_S$ and $P_T$ denote performance on the source and target domains, respectively; the adaptation accuracy can be expressed as

  $$A_{\text{adapt}} = \frac{P_T}{P_S}.$$

- Fusion Efficiency: Fusion efficiency assesses how well multi-source data are combined to improve model performance without excessive computational overhead. It can be quantified as the performance gain per unit of computational cost:

  $$E_{\text{fusion}} = \frac{P_{\text{fused}} - P_{\text{single}}^{\max}}{C_{\text{fused}}},$$

  where $P_{\text{fused}}$ is the performance of the fused model, $P_{\text{single}}^{\max}$ is the best single-modality performance, and $C_{\text{fused}}$ represents the computational cost of the fused model.
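The cross-modal indicators above can be computed as follows. This is a minimal sketch in which all numbers are hypothetical. Two choices in it are assumptions: the adaptation measure is the ratio of target-domain to source-domain performance, and the cost of the fused model is expressed in GFLOPs, though any consistent cost unit would work.

```python
def cross_modal_consistency(preds_a, preds_b):
    """Fraction of samples on which modalities A and B predict the same label."""
    return sum(int(a == b) for a, b in zip(preds_a, preds_b)) / len(preds_a)


def adaptation_ratio(p_source, p_target):
    """Target-domain performance relative to source-domain performance."""
    return p_target / p_source


def fusion_efficiency(p_fused, single_modality_scores, cost_fused):
    """Gain of the fused model over the best single modality, per unit of cost."""
    return (p_fused - max(single_modality_scores)) / cost_fused


if __name__ == "__main__":
    # Hypothetical predictions from, e.g., a vision branch (A) and a vibration branch (B).
    print(cross_modal_consistency([1, 0, 2, 2, 1], [1, 0, 2, 1, 1]))  # 4 of 5 agree
    print(adaptation_ratio(p_source=0.90, p_target=0.72))
    print(fusion_efficiency(0.91, [0.85, 0.88], cost_fused=1.5))  # cost in GFLOPs (assumed)
```

In this example the fused model gains three accuracy points over the best single branch at 1.5 GFLOPs, which gives an efficiency of 0.02 per GFLOP. A negative value would mean fusion costs compute without improving on the strongest single modality.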

3.2.4. Computational and Resource Metrics

- Inference Time: Inference time measures how long a model takes to process an input and generate an output. Formally, for a set of $n$ inputs, the average inference time is

  $$\bar{T} = \frac{1}{n}\sum_{i=1}^{n} t_i,$$

  where $t_i$ is the processing time for the $i$-th input.

- Model Parameter Count: The number of trainable parameters indicates model complexity and storage requirements. Let $L$ denote the total number of layers and $N_l$ the number of parameters in layer $l$; then

  $$N_{\text{params}} = \sum_{l=1}^{L} N_l.$$

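- FLOPs (Floating-Point Operations): FLOPs quantify the total number of arithmetic operations required for a single forward pass and are widely used to compare the computational cost of models. Summing over layers,

  $$\mathrm{FLOPs} = \sum_{l=1}^{L} \mathrm{FLOPs}_l,$$

  where $\mathrm{FLOPs}_l$ is the operation count of layer $l$.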

- Memory and Energy Consumption: Memory usage and energy consumption determine whether a model is suitable for deployment on edge devices. Let $M$ denote memory usage in bytes and $E$ the energy consumed per inference; both are typically measured empirically using profiling tools.
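The computational metrics above can be estimated analytically for simple architectures. The sketch below does this for a toy fully connected network, with all layer sizes made up for illustration. Three conventions in it are assumptions. Each multiply-add counts as 2 FLOPs (some works report MACs instead). Weight memory assumes 4-byte float32 parameters and ignores activations and runtime overhead. Timing uses `time.perf_counter` on a pure-Python forward pass. Energy per inference is not sketched, because it requires hardware-level profiling.

```python
import time


def dense_layer_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def dense_layer_flops(n_in, n_out):
    """Approximate forward-pass FLOPs: one multiply and one add per weight."""
    return 2 * n_in * n_out


def model_profile(layer_sizes, bytes_per_param=4):
    """Sum per-layer parameter counts and FLOPs; weight memory assumes float32."""
    pairs = list(zip(layer_sizes[:-1], layer_sizes[1:]))
    params = sum(dense_layer_params(i, o) for i, o in pairs)
    flops = sum(dense_layer_flops(i, o) for i, o in pairs)
    return {"params": params, "flops": flops, "weight_bytes": params * bytes_per_param}


def make_mlp(layer_sizes):
    """Toy MLP with constant weights and zero biases, used only for timing."""
    layers = [([[0.01] * i for _ in range(o)], [0.0] * o)
              for i, o in zip(layer_sizes[:-1], layer_sizes[1:])]

    def forward(x):
        for idx, (w, b) in enumerate(layers):
            x = [sum(wij * xj for wij, xj in zip(row, x)) + bj for row, bj in zip(w, b)]
            if idx < len(layers) - 1:
                x = [max(0.0, v) for v in x]  # ReLU on hidden layers only
        return x

    return forward


def mean_inference_time(fn, inputs):
    """Average wall-clock seconds per input."""
    total = 0.0
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        total += time.perf_counter() - start
    return total / len(inputs)


if __name__ == "__main__":
    sizes = [4, 8, 2]
    print(model_profile(sizes))  # 58 parameters, 96 FLOPs, 232 bytes of weights
    print(mean_inference_time(make_mlp(sizes), [[1.0] * 4] * 100))
```

For the 4-8-2 network, the parameter count is $(4 \cdot 8 + 8) + (8 \cdot 2 + 2) = 58$ and the FLOP estimate is $2(32 + 16) = 96$. Measured latency depends on hardware and runtime, so on-device benchmarks remain necessary for edge deployment decisions.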

4. Key Enabling Technologies

4.1. Multimodal Data Fusion and Representation Learning

4.1.1. Unimodal and Multimodal Data Processing Techniques

4.1.2. Multimodal Fusion Techniques Under Multimodal Conditions

4.2. GAI for Dynamic DT Evolution

4.2.1. Data Generation Techniques for DTs Enabled by GAI

4.2.2. Prediction Techniques for DTs Enabled by GAI

4.3. Cloud–Edge–End Collaborative Intelligence

4.3.1. Lightweight Model Deployment

4.3.2. Intelligent Resource Allocation

5. Application Scenarios

5.1. Smart Manufacturing: Generative Design and Autonomous Optimization

5.2. Smart Cities: City-Scale Simulation and Emergency Response

5.3. Autonomous Driving: Lifelong Learning and Simulation Testing

5.4. Healthcare: Personalized Medicine and Surgical Planning

5.5. Comparative Summary and Observations

6. Challenges and Future Research Directions

6.1. Technical Challenges

6.1.1. The Tension Between Computational Demand and Efficiency

6.1.2. Reliability and Hallucination Risks

6.1.3. Data Security and Privacy

6.1.4. Privacy-Preserving Mechanisms for DT–GAI

6.1.5. Lack of Standardization and Interoperability

6.2. Future Research Directions

6.2.1. Neuro-Symbolic Verified Generation

6.2.2. GAI for DT Compression

6.2.3. Toward Sustainable and Green AIoT

6.2.4. Lifelong and Continual Learning in Dynamic Environments

6.2.5. Ethical and Governance Frameworks for Intelligent AIoT

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xu, P.; Zhu, X.; Clifton, D.A. Multimodal Learning with Transformers: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12113–12132. [Google Scholar] [CrossRef]

- Zhu, Y.; Wu, Y.; Sebe, N.; Yan, Y. Vision + X: A Survey on Multimodal Learning in the Light of Data. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9102–9122. [Google Scholar] [CrossRef]

- Zhao, F.; Zhang, C.; Geng, B. Deep Multimodal Data Fusion. ACM Comput. Surv. 2024, 56, 216. [Google Scholar] [CrossRef]

- Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A survey on multimodal large language models. Natl. Sci. Rev. 2024, 11, nwae403. [Google Scholar] [CrossRef]

- Cao, Y.; Li, S.; Liu, Y.; Yan, Z.; Dai, Y.; Yu, P.; Sun, L. A Survey of AI-Generated Content (AIGC). ACM Comput. Surv. 2025, 57, 125. [Google Scholar] [CrossRef]

- Wu, Y.; Zhang, K.; Zhang, Y. Digital Twin Networks: A Survey. IEEE Internet Things J. 2021, 8, 13789–13804. [Google Scholar] [CrossRef]

- Liu, X.; Jiang, D.; Tao, B.; Xiang, F.; Jiang, G.; Sun, Y.; Kong, J.; Li, G. A Systematic Review of Digital Twin about Physical Entities, Virtual Models, Twin Data, and Applications. Adv. Eng. Inform. 2023, 55, 101876. [Google Scholar] [CrossRef]

- Bibri, S.E.; Huang, J.; Jagatheesaperumal, S.K.; Krogstie, J. The Synergistic Interplay of Artificial Intelligence and Digital Twin in Environmentally Planning Sustainable Smart Cities: A Comprehensive Systematic Review. Environ. Sci. Ecotechnol. 2024, 20, 100433. [Google Scholar] [CrossRef]

- Qin, B.; Pan, H.; Dai, Y.; Si, X.; Huang, X.; Yuen, C.; Zhang, Y. Machine and Deep Learning for Digital Twin Networks: A Survey. IEEE Internet Things J. 2024, 11, 34694–34716. [Google Scholar] [CrossRef]

- Pan, Y.; Lei, L.; Shen, G.; Zhang, X.; Cao, P. A Survey on Digital Twin Networks: Architecture, Technologies, Applications, and Open Issues. IEEE Internet Things J. 2025, 12, 19119–19143. [Google Scholar] [CrossRef]

- Glaessgen, E.; Stargel, D. The digital twin paradigm for future NASA and US Air Force vehicles. In Proceedings of the 53rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference, Honolulu, HI, USA, 23–26 April 2012; p. 1818. [Google Scholar] [CrossRef]

- Ariyachandra, M.R.M.F.; Wedawatta, G. Digital Twin Smart Cities for Disaster Risk Management: A Review of Evolving Concepts. Sustainability 2023, 15, 11910. [Google Scholar] [CrossRef]

- Adibfar, A.; Costin, A.M. Creation of a Mock-up Bridge Digital Twin by Fusing Intelligent Transportation Systems (ITS) Data into Bridge Information Model (BrIM). J. Constr. Eng. Manag. 2022, 148, 04022094. [Google Scholar] [CrossRef]

- Mulder, S.T.; Omidvari, A.H.; Rueten-Budde, A.J.; Huang, P.H.; Kim, K.H.; Bais, B.; Rousian, M.; Hai, R.; Akgun, C.; van Lennep, J.R. Dynamic Digital Twin: Diagnosis, Treatment, Prediction, and Prevention of Disease During the Life Course. J. Med. Internet Res. 2022, 24, e35675. [Google Scholar] [CrossRef] [PubMed]

- Li, L.; Qu, T.; Liu, Y.; Zhong, R.Y.; Xu, G.; Sun, H.; Gao, Y.; Lei, B.; Mao, C.; Pan, Y. Sustainability Assessment of Intelligent Manufacturing Supported by Digital Twin. IEEE Access 2020, 8, 174988–175008. [Google Scholar] [CrossRef]

- Tao, F.; Zhang, H.; Liu, A.; Nee, A.Y.C. Digital twin in industry: State-of-the-art. IEEE Trans. Ind. Inform. 2019, 15, 2405–2415. [Google Scholar] [CrossRef]

- Zhao, Y.; Liu, Y.; Mu, E. A review of intelligent subway tunnels based on digital twin technology. Buildings 2024, 14, 2452. [Google Scholar] [CrossRef]

- Guzina, L.; Ferko, E.; Bucaioni, A. Investigating digital twin: A systematic mapping study. In Proceedings of the 10th Swedish Production Symposium (SPS2022), Västerås, Sweden, 7–10 September 2022; pp. 449–460. [Google Scholar] [CrossRef]

- Ding, G.; Guo, S.; Wu, X. Dynamic scheduling optimization of production workshops based on digital twin. Appl. Sci. 2022, 12, 10451. [Google Scholar] [CrossRef]

- Nascimento, F.H.N.; Cardoso, S.A.; Lima, A.M.N.; Santos, D.F.S. Synchronizing a collaborative arm’s digital twin in real-time. In Proceedings of the 2023 Latin American Robotics Symposium (LARS), 2023 Brazilian Symposium on Robotics (SBR), and 2023 Workshop on Robotics in Education (WRE), Salvador, Brazil, 9–11 October 2023; pp. 230–235. [Google Scholar] [CrossRef]

- Kritzinger, W.; Karner, M.; Traar, G.; Henjes, J.; Sihn, W. Digital Twin in Manufacturing: A Categorical Literature Review and Classification. IFAC-PapersOnLine 2018, 51, 1016–1022. [Google Scholar] [CrossRef]

- Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital Twin: Enabling Technologies, Challenges and Open Research. IEEE Access 2020, 8, 108952–108971. [Google Scholar] [CrossRef]

- Qi, Q.; Tao, F. Digital Twin and Big Data Towards Smart Manufacturing and Industry 4.0: 360 Degree Comparison. IEEE Access 2018, 6, 3585–3593. [Google Scholar] [CrossRef]

- Okegbile, S.D.; Cai, J.; Niyato, D.; Yi, C. Human digital twin for personalized healthcare: Vision, architecture and future directions. IEEE Netw. 2022, 37, 262–269. [Google Scholar] [CrossRef]

- Uhlemann, T.H.-J.; Lehmann, C.; Steinhilper, R. The digital twin: Realizing the cyber-physical production system for industry 4.0. Procedia CIRP 2017, 61, 335–340. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30 (NIPS 2017); Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5998–6008. [Google Scholar]

- Huang, Y.; Xu, J.; Lai, J.; Jiang, Z.; Chen, T.; Li, Z.; Yao, Y.; Ma, X.; Yang, L.; Chen, H.; et al. Advancing transformer architecture in long-context large language models: A comprehensive survey. arXiv 2023, arXiv:2311.12351. [Google Scholar] [CrossRef]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems 27 (NIPS 2014); Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; pp. 2672–2680. [Google Scholar]

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784. [Google Scholar] [CrossRef]

- Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410. [Google Scholar] [CrossRef]

- Müller-Franzes, G.; Niehues, J.M.; Khader, F.; Arasteh, S.T.; Haarburger, C.; Kuhl, C.; Wang, T.; Han, T.; Nolte, T.; Nebelung, S.; et al. A multimodal comparison of latent denoising diffusion probabilistic models and generative adversarial networks for medical image synthesis. Sci. Rep. 2023, 13, 12098. [Google Scholar] [CrossRef] [PubMed]

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. arXiv 2020, arXiv:2006.11239. [Google Scholar] [CrossRef]

- Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. In Advances in Neural Information Processing Systems 34 (NeurIPS 2021); Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P.S., Vaughan, J.W., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; pp. 8780–8794. [Google Scholar]

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695. [Google Scholar] [CrossRef]

- Ramachandram, D.; Taylor, G.W. Deep multimodal learning: A survey on recent advances and trends. IEEE Signal Process. Mag. 2017, 34, 96–108. [Google Scholar] [CrossRef]

- Baltrušaitis, T.; Ahuja, C.; Morency, L.-P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 423–443. [Google Scholar] [CrossRef]

- Liang, P.P.; Zadeh, A.; Morency, L.-P. Foundations & trends in multimodal machine learning: Principles, challenges, and open questions. ACM Comput. Surv. 2024, 56, 1–42. [Google Scholar] [CrossRef]

- Wu, R.; Wang, H.; Chen, H.-T.; Carneiro, G. Deep multimodal learning with missing modality: A survey. arXiv 2024, arXiv:2409.07825. [Google Scholar] [CrossRef]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Brookline, MA, USA, 2021; Volume 139, pp. 8748–8763. [Google Scholar]

- Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Brookline, MA, USA, 2021; Volume 139, pp. 4904–4916. [Google Scholar]

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the 39th International Conference on Machine Learning (ICML 2022), Baltimore, MD, USA, 17–23 July 2022; Chaudhuri, K., Jegelka, S., Song, L., Szepesvari, C., Niu, G., Sabato, S., Eds.; PMLR: Brookline, MA, USA, 2022; Volume 162, pp. 12888–12900. [Google Scholar]

- Alayrac, J.-B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. Flamingo: A visual language model for few-shot learning. In Advances in Neural Information Processing Systems 35 (NeurIPS 2022); Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2022; pp. 23716–23736. [Google Scholar]

- Wang, P.; Yang, A.; Men, R.; Lin, J.; Bai, S.; Li, Z.; Ma, J.; Zhou, C.; Zhou, J.; Yang, H. OFA: Unifying architectures, tasks, and modalities through a simple sequence-to-sequence learning framework. In Proceedings of the 39th International Conference on Machine Learning (ICML 2022), Baltimore, MD, USA, 17–23 July 2022; Chaudhuri, K., Jegelka, S., Song, L., Szepesvari, C., Niu, G., Sabato, S., Eds.; PMLR: Brookline, MA, USA, 2022; Volume 162, pp. 23318–23340. [Google Scholar]

- Rubenstein, P.K.; Asawaroengchai, C.; Nguyen, D.D.; Bapna, A.; Borsos, Z.; de Chaumont Quitry, F.; Chen, P.; El Badawy, D.; Han, W.; Kharitonov, E.; et al. AudioPaLM: A large language model that can speak and listen. arXiv 2023, arXiv:2306.12925. [Google Scholar] [CrossRef]

- Tang, C.; Yu, W.; Sun, G.; Chen, X.; Tan, T.; Li, W.; Lu, L.; Ma, Z.; Zhang, C. SALMONN: Towards generic hearing abilities for large language models. arXiv 2023, arXiv:2310.13289. [Google Scholar] [CrossRef]

- Li, S.; Tang, H. Multimodal alignment and fusion: A survey. arXiv 2024, arXiv:2411.17040. [Google Scholar] [CrossRef]

- Li, Z.; Wu, X.; Du, H.; Liu, F.; Nghiem, H.; Shi, G. A survey of state of the art large vision language models: Alignment, benchmark, evaluations and challenges. arXiv 2025, arXiv:2501.02189. [Google Scholar] [CrossRef]

- Wang, J.; Jiang, H.; Liu, Y.; Ma, C.; Zhang, X.; Pan, Y.; Liu, M.; Gu, P.; Xia, S.; Li, W. A comprehensive review of multimodal large language models: Performance and challenges across different tasks. arXiv 2024, arXiv:2408.01319. [Google Scholar] [CrossRef]

- Sloman, A. Multimodal cognitive architecture: Making perception more central to intelligent behavior. In Proceedings of the 21st National Conference on Artificial Intelligence (AAAI-06), Boston, MA, USA, 16–20 July 2006; AAAI Press: Menlo Park, CA, USA, 2006; Volume 2, pp. 1488–1493. [Google Scholar]

- Zhang, Z.; Zhang, A.; Li, M.; Zhao, H.; Karypis, G.; Smola, A. Multimodal chain-of-thought reasoning in language models. arXiv 2023, arXiv:2302.00923. [Google Scholar] [CrossRef]

- Liu, Y.; Deng, Y.; Liu, A.; Liu, Y.; Li, S. Fine-grained multi-modal prompt learning for vision–language models. Neurocomputing 2025, 636, 130028. [Google Scholar] [CrossRef]

- Wu, J.; Zhang, Z.; Xia, Y.; Li, X.; Xia, Z.; Chang, A.; Yu, T.; Kim, S.; Rossi, R.A.; Zhang, R.; et al. Visual prompting in multimodal large language models: A survey. arXiv 2024, arXiv:2409.15310. [Google Scholar] [CrossRef]

- Gu, J.; Han, Z.; Chen, S.; Ma, Y.; Torr, P.; Tresp, V. A systematic survey of prompt engineering on vision-language foundation models. arXiv 2023, arXiv:2307.12980. [Google Scholar] [CrossRef]

- NVIDIA Corporation. Vision Language Model Prompt Engineering Guide for Image and Video Understanding; Technical Report; NVIDIA Developer Blog: Santa Clara, CA, USA, 2025; Available online: https://developer.nvidia.com/blog/vision-language-model-prompt-engineering-guide-for-image-and-video-understanding/ (accessed on 6 September 2025).

- Jiao, T.; Guo, C.; Feng, X.; Chen, Y.; Song, J. A comprehensive survey on deep learning multi-modal fusion: Methods, technologies and applications. Comput. Mater. Contin. 2024, 80, 1–35. [Google Scholar] [CrossRef]

- Kaur, M.J.; Mishra, V.P.; Maheshwari, P. The Convergence of Digital Twin, IoT, and Machine Learning: Transforming Data into Action. In Digital Twin Technologies and Smart Cities; Farsi, M., Daneshkhah, A., Hosseinian-Far, A., Jahankhani, H., Eds.; Springer: Cham, Switzerland, 2020. [Google Scholar] [CrossRef]

- Sahal, R.; Alsamhi, S.H.; Brown, K.N.; O’Shea, D.; McCarthy, C.; Guizani, M. Blockchain-Empowered Digital Twins Collaboration: Smart Transportation Use Case. Machines 2021, 9, 193. [Google Scholar] [CrossRef]

- Semeraro, C.; Lezoche, M.; Panetto, H.; Dassisti, M. Digital Twin Paradigm: A Systematic Literature Review. Comput. Ind. 2021, 130, 103469. [Google Scholar] [CrossRef]

- Minerva, R.; Lee, G.M.; Crespi, N. Digital Twin in the IoT Context: A Survey on Technical Features, Scenarios, and Architectural Models. Proc. IEEE 2020, 108, 1785–1824. [Google Scholar] [CrossRef]

- Liu, M.; Fang, S.; Dong, H.; Xu, C. Review of Digital Twin about Concepts, Technologies, and Industrial Applications. J. Manuf. Syst. 2021, 58, 346–361. [Google Scholar] [CrossRef]

- Barricelli, B.R.; Casiraghi, E.; Fogli, D. A Survey on Digital Twin: Definitions, Characteristics, Applications, and Design Implications. IEEE Access 2019, 7, 167653–167671. [Google Scholar] [CrossRef]

- Rasheed, A.; San, O.; Kvamsdal, T. Digital Twin: Values, Challenges and Enablers from a Modeling Perspective. IEEE Access 2020, 8, 21980–22012. [Google Scholar] [CrossRef]

- IBM Corporation. Will Generative AI Make the Digital Twin Promise Real in the Energy and Utilities Industry? Technical Report; IBM Blog: Armonk, NY, USA, 2023; Available online: https://www.ibm.com/blog/will-generative-ai-make-the-digital-twin-promise-real-in-the-energy-and-utilities-industry/ (accessed on 6 September 2025).

- Muhammad, K.; David, T.; Nassisid, G.; Kumar, A.; Singh, R. Integrating generative AI with network digital twins for enhanced network operations. arXiv 2024, arXiv:2406.17112. [Google Scholar] [CrossRef]

- Plain Concepts. Digital Twins and Generative AI: The Perfect Duo; Technical Insights Report; Plain Concepts: Madrid, Spain, 2025; Available online: https://www.plainconcepts.com/digital-twins-generative-ai/ (accessed on 6 September 2025).

- Ray, A. EdgeAgentX-DT: Integrating digital twins and generative AI for resilient edge intelligence in tactical networks. arXiv 2025, arXiv:2507.21196. [Google Scholar] [CrossRef]

- Nielsen Norman Group. Digital Twins: Simulating Humans with Generative AI; UX Research Report; Nielsen Norman Group: Fremont, CA, USA, 2025; Available online: https://www.nngroup.com/articles/digital-twins/ (accessed on 6 September 2025).

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014. [Google Scholar] [CrossRef]

- Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal deep learning. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; Getoor, L., Scheffer, T., Eds.; Omnipress: Madison, WI, USA, 2011; pp. 689–696. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25 (NIPS 2012); Pereira, F., Burges, C.J.C., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105. [Google Scholar]

- Graves, A.; Mohamed, A.-R.; Hinton, G.E. Speech recognition with deep recurrent neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 6645–6649. [Google Scholar] [CrossRef]

- Tan, H.; Bansal, M. LXMERT: Learning cross-modality encoder representations from transformers. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 5099–5109. [Google Scholar] [CrossRef]

- Cavalieri, S.; Gambadoro, S. Proposal of mapping digital twins definition language to open platform communications unified architecture. Sensors 2023, 23, 2349. [Google Scholar] [CrossRef]

- Laubenbacher, R.C.; Mehrad, B.; Shmulevich, I.; Trayanova, N.A. Digital twins in medicine. Nat. Comput. Sci. 2024, 4, 184–191. [Google Scholar] [CrossRef]

- Vallée, A. Envisioning the future of personalized medicine: Role and realities of digital twins. J. Med. Internet Res. 2024, 26, e50204. [Google Scholar] [CrossRef]

- Russwinkel, N. A cognitive digital twin for intention anticipation in human-aware AI. In Intelligent Autonomous Systems 18; Strand, M., Dillmann, R., Menegatti, E., Lorenzo, S., Eds.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2024; Volume 795, pp. 637–646. [Google Scholar] [CrossRef]

- Jaegle, A.; Borgeaud, S.; Alayrac, J.-B.; Doersch, C.; Ionescu, C.; Ding, D.; Koppula, S.; Zoran, D.; Brock, A.; Shelhamer, E.; et al. Perceiver IO: A general architecture for structured inputs & outputs. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Brookline, MA, USA, 2021; Volume 139, pp. 5339–5350. [Google Scholar]

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19. [Google Scholar] [CrossRef]

- Che, L.; Wang, J.; Zhou, Y.; Ma, F. Multimodal Federated Learning: A Survey. Sensors 2023, 23, 6986. [Google Scholar] [CrossRef]

- Chen, J.; Yi, J.; Chen, A.; Jin, Z. EFCOMFF-Net: A multiscale feature fusion architecture with enhanced feature correlation for remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5604917. [Google Scholar] [CrossRef]

- Zhou, H.; Wang, Y.; Zhan, H. MDE: Modality discrimination enhancement for multi-modal recommendation. arXiv 2025, arXiv:2502.18481.

- Poudel, P.; Chhetri, A.; Gyawali, P.; Leontidis, G.; Bhattarai, B. Multimodal Federated Learning with Missing Modalities through Feature Imputation Network. arXiv 2025, arXiv:2505.20232.

- Bao, G.; Zhang, Q.; Miao, D.; Gong, Z.; Hu, L.; Liu, Y.; Shi, C. Multimodal Federated Learning with Missing Modality via Prototype Mask and Contrast. arXiv 2023, arXiv:2312.13508.

- Huy, Q.L.; Nguyen, M.N.H.; Thwal, C.M.; Qiao, Y.; Zhang, C.; Hong, C.S. FedMEKT: Distillation-based Embedding Knowledge Transfer for Multimodal Federated Learning. Neural Netw. 2025, 183, 107017.

- Park, S.; Kim, C.; Youm, S. Establishment of an IoT-based smart factory and data analysis model for the quality management of SMEs die-casting companies in Korea. Int. J. Distrib. Sens. Netw. 2019, 15, 1550147719879378.

- Zhang, Y.; Du, Q.; Lv, J. FedEPA: Enhancing personalization and modality alignment in multimodal federated learning. In Intelligent Computing: Proceedings of the 2025 Computing Conference, London, UK, 19–20 June 2025; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer: Singapore, 2025; Volume 1017, pp. 115–124.

- Yu, Q.; Liu, Y.; Wang, Y.; Xu, K.; Liu, J. Multimodal Federated Learning via Contrastive Representation Ensemble (CreamFL). arXiv 2023, arXiv:2302.08888.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

- Wang, H.; Kaplan, Z.; Niu, D.; Li, B. Optimizing Federated Learning on Non-IID Data with Reinforcement Learning. In Proceedings of the IEEE INFOCOM 2020—IEEE Conference on Computer Communications, Toronto, ON, Canada, 6–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1698–1707.

- Zhang, L.; Wu, J.; Shen, J.; Chen, M.; Wang, R.; Zhou, X.; Wu, Q. SATP-GAN: Self-attention based generative adversarial network for traffic flow prediction. Transp. B Transp. Dyn. 2021, 9, 552–568.

- Li, P.; Zhang, H.; Wu, Y.; Qian, L.; Yu, R.; Niyato, D.; Shen, X. Filling the Missing: Exploring Generative AI for Enhanced Federated Learning Over Heterogeneous Mobile Edge Devices. IEEE Trans. Mob. Comput. 2024, 23, 10001–10015.

- Roberts, M.C.; Holt, K.E.; Del Fiol, G.; Kohlmann, W.; Shirts, B.H. Precision public health in the era of genomics and big data. Nat. Med. 2024, 30, 1865–1873.

- Yang, Y.; Wang, Z.-Y.; Liu, Q.; Sun, S.; Wang, K.; Chellappa, R.; Zhou, Z.; Yuille, A.; Zhu, L.; Zhang, Y.-D. Medical World Model: Generative Simulation of Tumor Evolution for Treatment Planning. arXiv 2025, arXiv:2506.02327.

- Swerdlow, A.; Prabhudesai, M.; Gandhi, S.; Pathak, D.; Fragkiadaki, K. Unified Multimodal Discrete Diffusion. arXiv 2025, arXiv:2503.20853.

- Shao, J.; Pan, Y.; Kou, W.B.; Feng, H.; Zhao, Y.; Zhou, K.; Zhong, S. Generalization of a deep learning model for continuous glucose monitoring-based hypoglycemia prediction: Algorithm development and validation study. JMIR Med. Inform. 2024, 12, e56909.

- Yang, Y.; Lan, T.; Wang, Y.; Li, F.; Liu, L.; Huang, X.; Gao, F.; Jiang, S.; Zhang, Z.; Chen, X. Data imbalance in cardiac health diagnostics using CECG-GAN. Sci. Rep. 2024, 14, 14767.

- He, Y.; Rojas, K.; Tao, M. Zeroth-order sampling methods for non-log-concave distributions: Alleviating metastability by denoising diffusion. arXiv 2024, arXiv:2402.17886.

- Stoian, M.C.; Dyrmishi, S.; Cordy, M.; Lukasiewicz, T.; Giunchiglia, E. How realistic is your synthetic data? Constraining deep generative models for tabular data. arXiv 2024, arXiv:2402.04823.

- Ren, F.; Aliper, A.; Chen, J.; Zhao, H.; Rao, S.; Kuppe, C.; Ozerov, I.V.; Peng, H.; Zhavoronkov, A. A small-molecule TNIK inhibitor targets fibrosis in preclinical and clinical models. Nat. Biotechnol. 2024, 42, 63–75.

- Zhang, K.; Zhou, F.; Wu, L.; Xie, N.; He, Z. Semantic Understanding and Prompt Engineering for Large-Scale Traffic Data Imputation. Inf. Fusion 2024, 102, 102038.

- Wang, J.; Ren, Y.; Song, Z.; Zhang, J.; Zheng, C.; Qiang, W. Hacking task confounder in meta-learning. In Proceedings of the 33rd International Joint Conference on Artificial Intelligence (IJCAI-24), Jeju, Republic of Korea, 3–9 August 2024; Bessiere, C., Ed.; IJCAI Organization: California, CA, USA, 2024; pp. 5064–5072.

- Wang, Y.; Yang, C.; Lan, S.; Zhu, L.; Zhang, Y. End-edge-cloud collaborative computing for deep learning: A comprehensive survey. IEEE Commun. Surv. Tutor. 2024, 26, 2647–2683.

- Fan, W.; Su, Y.; Liu, J.; Li, S.; Huang, W.; Wu, F.; Liu, Y. Joint Task Offloading and Resource Allocation for Vehicular Edge Computing Based on V2I and V2V Modes. IEEE Trans. Intell. Transp. Syst. 2023, 24, 4277–4292.

- Hu, H.; Jiang, C. Edge Intelligence: Challenges and Opportunities. In Proceedings of the 2020 International Conference on Computer, Information and Telecommunication Systems (CITS), Hangzhou, China, 5–7 October 2020; pp. 1–5.

- Navardi, M.; Aalishah, R.; Fu, Y.; Lin, Y.; Li, H.; Chen, Y.; Mohsenin, T. GenAI at the Edge: Comprehensive Survey on Empowering Edge Devices. Proc. AAAI Symp. Ser. 2025, 5, 180–187.

- Cheng, H.; Zhang, M.; Shi, J.Q. A Survey on Deep Neural Network Pruning: Taxonomy, Comparison, Analysis, and Recommendations. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10558–10578.

- He, Y.; Xiao, L. Structured Pruning for Deep Convolutional Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2900–2919.

- He, Y.; Zhang, X.; Sun, J. Channel Pruning for Accelerating Very Deep Neural Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1398–1406.

- Wang, H.; Zhang, W.-Q. Unstructured Pruning and Low Rank Factorisation of Self-Supervised Pre-Trained Speech Models. IEEE J. Sel. Top. Signal Process. 2024, 18, 1046–1058.

- Su, W.; Li, Z.; Xu, M.; Kang, J.; Niyato, D.; Xie, S. Compressing Deep Reinforcement Learning Networks with a Dynamic Structured Pruning Method for Autonomous Driving. IEEE Trans. Veh. Technol. 2024, 73, 18017–18030.

- Lu, Y.; Guan, Z.; Zhao, W.; Gong, M.; Wang, W.; Sheng, K. SNPF: Sensitiveness-Based Network Pruning Framework for Efficient Edge Computing. IEEE Internet Things J. 2024, 11, 6972–6991.

- Matos, J.B.P.; de Lima Filho, E.B.; Bessa, I.; Manino, E.; Song, X.; Cordeiro, L.C. Counterexample Guided Neural Network Quantization Refinement. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2024, 43, 1121–1134.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2704–2713.

- Tai, Y.-S.; Chang, C.-Y.; Teng, C.-F.; Chen, Y.-T.; Wu, A.-Y. Joint Optimization of Dimension Reduction and Mixed-Precision Quantization for Activation Compression of Neural Networks. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2023, 42, 4025–4037.

- Motetti, B.A.; Risso, M.; Burrello, A.; Macii, E.; Poncino, M.; Pagliari, D.J. Joint Pruning and Channel-Wise Mixed-Precision Quantization for Efficient Deep Neural Networks. IEEE Trans. Comput. 2024, 73, 2619–2633.

- Peng, J.; Liu, H.; Zhao, Z.; Li, Z.; Liu, S.; Li, Q. CMQ: Crossbar-Aware Neural Network Mixed-Precision Quantization via Differentiable Architecture Search. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2022, 41, 4124–4133.

- Kim, N.; Shin, D.; Choi, W.; Kim, G.; Park, J. Exploiting Retraining-Based Mixed-Precision Quantization for Low-Cost DNN Accelerator Design. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 2925–2938.

- Yang, S.; Xu, L.; Zhou, M.; Yang, X.; Yang, J.; Huang, Z. Skill-Transferring Knowledge Distillation Method. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6487–6502.

- Gou, J.; Sun, L.; Yu, B.; Du, L.; Ramamohanarao, K.; Tao, D. Collaborative Knowledge Distillation via Multiknowledge Transfer. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 6718–6730.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Tu, Z.; Liu, X.; Xiao, X. A General Dynamic Knowledge Distillation Method for Visual Analytics. IEEE Trans. Image Process. 2022, 31, 6517–6531.

- Gou, J.; Chen, Y.; Yu, B.; Liu, J.; Du, L.; Wan, S.; Yi, Z. Reciprocal Teacher-Student Learning via Forward and Feedback Knowledge Distillation. IEEE Trans. Multimed. 2024, 26, 7901–7916.

- Li, S.; Li, S.; Sun, Y.; Wang, B.; Wang, B.; Zhang, B. Digital Twin-Assisted Computation Offloading and Resource Allocation for Multi-Device Collaborative Tasks in Industrial Internet of Things. IEEE Trans. Netw. Sci. Eng. 2025, 1–16.

- Sun, W.; Wang, P.; Xu, N.; Wang, G.; Zhang, Y. Dynamic Digital Twin and Distributed Incentives for Resource Allocation in Aerial-Assisted Internet of Vehicles. IEEE Internet Things J. 2022, 9, 5839–5852.

- Mehdipourchari, K.; Askarizadeh, M.; Nguyen, K.K. Shared-Resource Generative Adversarial Network (GAN) Training for 5G URLLC Deep Reinforcement Learning Augmentation. In Proceedings of the ICC 2024—IEEE International Conference on Communications, Denver, CO, USA, 9–13 June 2024; pp. 2998–3003.

- Naeem, F.; Seifollahi, S.; Zhou, Z.; Tariq, M. A Generative Adversarial Network Enabled Deep Distributional Reinforcement Learning for Transmission Scheduling in Internet of Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4550–4559.

- Gu, R.; Zhang, J. GANSlicing: A GAN-Based Software Defined Mobile Network Slicing Scheme for IoT Applications. In Proceedings of the 2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Singh, P.; Hazarika, B.; Singh, K.; Huang, W.-J.; Duong, T.Q. Digital Twin-Assisted Adaptive Federated Multi-Agent DRL with GenAI for Optimized Resource Allocation in IoV Networks. In Proceedings of the 2025 IEEE Wireless Communications and Networking Conference (WCNC), Milan, Italy, 24–27 March 2025; pp. 1–6.

- Fang, J.; He, Y.; Yu, F.R.; Du, J. Resource Allocation for Video Diffusion Task Offloading in Cloud-Edge Networks: A Deep Active Inference Approach. In Proceedings of the GLOBECOM 2024—2024 IEEE Global Communications Conference, Cape Town, South Africa, 8–12 December 2024; pp. 2021–2026.

- Li, M.; Gao, J.; Zhou, C.; Zhao, L.; Shen, X. Digital-Twin-Empowered Resource Allocation for On-Demand Collaborative Sensing. IEEE Internet Things J. 2024, 11, 37942–37958.

- Liu, Z.; Du, H.; Lin, J.; Gao, Z.; Huang, L.; Hosseinalipour, S.; Niyato, D. DNN Partitioning, Task Offloading, and Resource Allocation in Dynamic Vehicular Networks: A Lyapunov-Guided Diffusion-Based Reinforcement Learning Approach. IEEE Trans. Mob. Comput. 2025, 24, 1945–1962.

- Zhang, Z.; Wang, J.; Chen, J.; Fu, H.; Tong, Z.; Jiang, C. Diffusion-Based Reinforcement Learning for Cooperative Offloading and Resource Allocation in Multi-UAV Assisted Edge-Enabled Metaverse. IEEE Trans. Veh. Technol. 2025, 74, 11281–11293.

- Wang, L.; Liang, H.; Mao, G.; Zhao, D.; Liu, Q.; Yao, Y.; Zhang, H. Resource Allocation for Dynamic Platoon Digital Twin Networks: A Multi-Agent Deep Reinforcement Learning Method. IEEE Trans. Veh. Technol. 2024, 73, 15609–15620.

- Tang, L.; Wang, A.; Xia, B.; Tang, Y.; Chen, Q. Research on Integrated Sensing, Communication Resource Allocation, and Digital Twin Placement Based on Digital Twin in IoV. IEEE Internet Things J. 2025, 12, 17300–17315.

- Ji, B.; Dong, B.; Li, D.; Wang, Y.; Yang, L.; Tsimenidis, C.; Menon, V.G. Optimization of Resource Allocation for V2X Security Communication Based on Multi-Agent Reinforcement Learning. IEEE Trans. Veh. Technol. 2025, 74, 1849–1861.

- Yin, J.; Zhang, Y.; Li, X.; Zhao, Y.; Liu, Z.; Tang, M. QoS-Aware Energy-Efficient Multi-UAV Offloading Ratio and Trajectory Control Algorithm in Mobile-Edge Computing. IEEE Internet Things J. 2024, 11, 40588–40602.

- Guo, Q.; Tang, F.; Kato, N. Federated Reinforcement Learning-Based Resource Allocation for D2D-Aided Digital Twin Edge Networks in 6G Industrial IoT. IEEE Trans. Ind. Inform. 2023, 19, 7228–7236.

- Peng, H.; Shen, X. Multi-Agent Reinforcement Learning Based Resource Management in MEC- and UAV-Assisted Vehicular Networks. IEEE J. Sel. Areas Commun. 2021, 39, 131–141.

- Kusiak, A. Generative artificial intelligence in smart manufacturing. J. Intell. Manuf. 2025, 36, 1–3.

- Wang, H.; Wang, C.; Liu, Q.; Zhang, X.; Liu, M.; Ma, Y.; Yan, F.; Shen, W. A data and knowledge driven autonomous intelligent manufacturing system for intelligent factories. J. Manuf. Syst. 2024, 74, 512–526.

- Alavian, P.; Eun, Y.; Meerkov, S.; Zhang, L. Smart production systems: Automating decision-making in manufacturing environment. Int. J. Prod. Res. 2019, 58, 828–845.

- Leng, J.; Sha, W.; Lin, Z.; Jing, J.; Liu, Q.; Chen, X. Blockchained smart contract pyramid-driven multi-agent autonomous process control for resilient individualised manufacturing towards Industry 5.0. Int. J. Prod. Res. 2022, 61, 4302–4321.

- Lin, H.; Guo, R.; Ma, D.; Kuai, X.; Yuan, Z.; Du, Z.; He, B. Digital-twin-based multi-scale simulation supports urban emergency management: A case study of urban epidemic transmission. Int. J. Digit. Earth 2024, 17, 2421950.

- Wang, H.; Chen, X.; Jia, F.; Cheng, X. Digital twin-supported smart city: Status, challenges and future research directions. Expert Syst. Appl. 2023, 217, 119531.

- Hu, X.; Li, S.; Huang, T.; Tang, B.; Huai, R.; Chen, L. How Simulation Helps Autonomous Driving: A Survey of Sim2real, Digital Twins, and Parallel Intelligence. IEEE Trans. Intell. Veh. 2024, 9, 593–612.

- Niaz, A.; Shoukat, M.U.; Jia, Y.; Khan, S.; Niaz, F.; Raza, M.U. Autonomous driving test method based on digital twin: A survey. In Proceedings of the 2021 International Conference on Computing, Electronic and Electrical Engineering (ICE Cube), Quetta, Pakistan, 26–27 October 2021; pp. 1–7.

- Björnsson, B.; Borrebaeck, C.; Elander, N.; Gasslander, T.; Gawel, D.; Gustafsson, M.; Jörnsten, R.; Lee, E.; Li, X.; Lilja, S.; et al. Digital twins to personalize medicine. Genome Med. 2020, 12, 4.

- Rajapakse, V.; Karunanayake, I.; Ahmed, N. Intelligence at the extreme edge: A survey on reformable TinyML. ACM Comput. Surv. 2023, 55, 1–30.

- Hannou, F.Z.; Lefrançois, M.; Jouvelot, P.; Charpenay, V.; Zimmermann, A. A Survey on IoT Programming Platforms: A Business-Domain Experts Perspective. ACM Comput. Surv. 2024, 57, 1–37.

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. 2025, 43, 1–55.

- Asgari, E.; Montaña-Brown, N.; Dubois, M.; Khalil, S.; Balloch, J.; Yeung, J.A.; Pimenta, D. A framework to assess clinical safety and hallucination rates of LLMs for medical text summarisation. npj Digit. Med. 2025, 8, 274.

- Farquhar, S.; Kossen, J.; Kuhn, L.; Gal, Y. Detecting hallucinations in large language models using semantic entropy. Nature 2024, 630, 625–630.

- Pati, S.; Kumar, S.; Varma, A.; Edwards, B.; Lu, C.; Qu, L.; Wang, J.J.; Lakshminarayanan, A.; Wang, S.H.; Sheller, M.J.; et al. Privacy preservation for federated learning in health care. Patterns 2024, 5, 100850.

- Wang, F.; Li, B. Data reconstruction and protection in federated learning for fine-tuning large language models. IEEE Trans. Big Data 2024, 1–12.

- Rehan, M.W.; Rehan, M.M. Survey, taxonomy, and emerging paradigms of societal digital twins for public health preparedness. npj Digit. Med. 2025, 8, 520.

- Kuruppu Appuhamilage, G.D.K.; Hussain, M.; Zaman, M.; Ali Khan, W. A health digital twin framework for discrete event simulation based optimised critical care workflows. npj Digit. Med. 2025, 8, 376.

- Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.S.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

- Andrew, G.; Thakkar, O.; McMahan, B.; Ramaswamy, S. Differentially private learning with adaptive clipping. Adv. Neural Inf. Process. Syst. 2021, 34, 17455–17466.

- Agarwal, N.; Kairouz, P.; Liu, Z. The Skellam mechanism for differentially private federated learning. Adv. Neural Inf. Process. Syst. 2021, 34, 5052–5064.

- Xin, B.; Geng, Y.; Hu, T.; Chen, S.; Yang, W.; Wang, S.; Huang, L. Federated synthetic data generation with differential privacy. Neurocomputing 2022, 468, 1–10.

- Qi, Y.; Hossain, M.S. Semi-supervised federated learning for digital twin 6G-enabled IIoT: A Bayesian estimated approach. J. Adv. Res. 2024, 66, 47–57.

- Hu, R.; Guo, Y.; Li, H.; Pei, Q.; Gong, Y. Personalized federated learning with differential privacy. IEEE Internet Things J. 2020, 7, 9530–9539.

- Shen, X.; Liu, Y.; Zhang, Z. Performance-enhanced federated learning with differential privacy for internet of things. IEEE Internet Things J. 2022, 9, 24079–24094.

- David, I.; Shao, G.; Gomes, C.; Tilbury, D.; Zarkout, B. Interoperability of Digital Twins: Challenges, Success Factors, and Future Research Directions; Springer: Berlin/Heidelberg, Germany, 2024; pp. 27–46.

- Xu, H.; Wu, J.; Pan, Q.; Guan, X.; Guizani, M. A survey on digital twin for industrial internet of things: Applications, technologies and tools. IEEE Commun. Surv. Tutor. 2023, 25, 2569–2598.

- Sharan, S.P.; Choi, M.; Shah, S.; Goel, H.; Omama, M.; Chinchali, S. Neuro-Symbolic Evaluation of Text-to-Video Models using Formal Verification. In Proceedings of the 2025 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 10–17 June 2025; pp. 8395–8405.

- Song, Y.; Dhariwal, P.; Chen, M.; Sutskever, I. Consistency Models. In Proceedings of the 40th International Conference on Machine Learning (ICML 2023), Honolulu, HI, USA, 23–29 July 2023.

- Geng, Z.; Pokle, A.; Kolter, J.Z. One-Step Diffusion Distillation via Deep Equilibrium Models. In Proceedings of the 37th Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023.

- Kim, H.; Yoo, J. Singular Value Scaling: Efficient Generative Model Compression via Pruned Weights Refinement. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–2 March 2025; Volume 39, pp. 17859–17867.

- Xiong, K.; Wang, Z.; Leng, S.; Yang, K.; Chen, Y. A digital-twin-empowered lightweight model-sharing scheme for multirobot systems. IEEE Internet Things J. 2023, 10, 17231–17242.

- Chiaro, D.; Qi, P.; Pescapè, A.; Piccialli, F. Generative AI-Empowered Digital Twin: A Comprehensive Survey with Taxonomy. IEEE Trans. Ind. Inform. 2025, 21, 4287–4295.

- Schwartz, R.; Dodge, J.; Smith, N.A.; Etzioni, O. Green AI. Commun. ACM 2020, 63, 54–63.

- Bolón-Canedo, V.; Morán-Fernández, L.; Cancela, B.; Alonso-Betanzos, A. A review of green artificial intelligence: Towards a more sustainable future. Neurocomputing 2024, 599, 128096.

- De Lange, M.; Aljundi, R.; Masana, M.; Parisot, S.; Jia, X.; Leonardis, A.; Slabaugh, G.; Tuytelaars, T. A Continual Learning Survey: Defying Forgetting in Classification Tasks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3366–3385.

- Lee, D.; Yoo, M.; Kim, W.K.; Choi, W.; Woo, H. Incremental Learning of Retrievable Skills For Efficient Continual Task Adaptation. In Advances in Neural Information Processing Systems 37 (NeurIPS 2024); Globerson, A., Mackey, L., Belgrave, D., Fan, A., Paquet, U., Tomczak, J., Zhang, C., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2024.

- Park, J.; Ji, A.; Park, M.; Rahman, M.S.; Oh, S.E. MalCL: Leveraging GAN-based generative replay to combat catastrophic forgetting in malware classification. In Proceedings of the Thirty-Ninth AAAI Conference on Artificial Intelligence and Thirty-Seventh Conference on Innovative Applications of Artificial Intelligence and Fifteenth Symposium on Educational Advances in Artificial Intelligence (AAAI’25/IAAI’25/EAAI’25), Philadelphia, PA, USA, 25 February–4 March 2025; pp. 658–666, Article 74.

- Meng, Y.; Bing, Z.; Yao, X.; Chen, K.; Huang, K.; Gao, Y.; Sun, F.; Knoll, A. Preserving and combining knowledge in robotic lifelong reinforcement learning. Nat. Mach. Intell. 2025, 7, 256–269.

- Papagiannidis, E.; Mikalef, P.; Conboy, K. Responsible artificial intelligence governance: A review and research framework. J. Strateg. Inf. Syst. 2025, 34, 101885.

| Ref | Year | Description | Focus |
|---|---|---|---|
| [1] | 2023 | A survey on multimodal learning with transformers, covering their architectures, pretraining strategies, applications across vision, language, and speech, and open research challenges. | Multimodal generative artificial intelligence |
| [2] | 2024 | A comprehensive survey on multimodal learning from a data-centric perspective, reviewing datasets, benchmarks, and challenges in aligning and integrating vision with other modalities. | Multimodal generative artificial intelligence |
| [3] | 2024 | A survey on deep multimodal data fusion, discussing methods, applications across diverse domains, and key challenges in effectively integrating heterogeneous data. | Multimodal generative artificial intelligence |
| [4] | 2024 | Reviews multimodal large language models (MLLMs), focusing on their architectures, training strategies, datasets, and evaluation methods, and highlighting emergent capabilities beyond traditional multimodal models. | Multimodal generative artificial intelligence |
| [5] | 2025 | A survey of AI-generated content (AIGC), outlining its evolution, core techniques, applications across text, vision, audio, and code, and key challenges in alignment and evaluation. | Multimodal generative artificial intelligence |
| [6] | 2021 | Introduces digital twin networks, outlining their architectures, enabling technologies, representative applications, and key open issues for future intelligent networked systems. | Digital twins |
| [7] | 2023 | Analyzes physical-based digital twins, emphasizing architectures, cross-domain applications, and the challenges of integrating physics-driven models with data-driven approaches. | Digital twins |
| [8] | 2024 | A systematic review exploring the interplay of AI, AIoT, and urban digital twins to enhance data-driven strategies for environmental sustainability in smart cities. | Digital twins |
| [9] | 2024 | A survey on applying machine and deep learning to digital twin networks for monitoring, optimization, and security. | Digital twins |
| [10] | 2025 | A survey on digital twin networks covering architecture, enabling technologies, applications, and research challenges. | Digital twins |

| Reference | Research Object | Mathematical/Modeling Focus | Strengths/Limitations | Application Domains |
|---|---|---|---|---|
| Wu et al. (2021) [6] | DT networks | Network modeling, graph structures, performance equations | Comprehensive survey of DT networks; lacks cross-modal AI integration | IoT, communication networks |
| Liu et al. (2023) [7] | DT foundations | Categorization: entity, virtual model, twin data, application | Clear taxonomy; limited mathematical formalization | Engineering, manufacturing |
| Simon et al. (2024) [8] | DTs + AI for smart cities | System-level conceptual modeling | Good integration vision; limited quantitative models | Smart cities, sustainability |
| Qin et al. (2024) [9] | DTs with ML/DL | ML/DL optimization functions for DT networks | Mathematical rigor; lacks GAI perspective | IoT networks |
| Yidan et al. (2025) [10] | DT networks survey | Architectural and protocol modeling | Comprehensive, but largely descriptive | IoT, edge networks |
| Zhu et al. (2024) [2] | Multimodal fusion | Cross-modal alignment functions, embedding models | Detailed taxonomy; lacks DT integration | Computer vision, multimedia |
| Xu et al. (2023) [1] | Multimodal transformers | Transformer architectures, attention functions | Mathematical clarity; limited DT linkage | Vision, speech, language |
| Fei et al. (2024) [3] | Deep multimodal data fusion | Fusion operators, joint embedding, objective functions | Strong mathematical synthesis; lacks application scenarios | Multimedia, healthcare |
| Yin et al. (2024) [4] | Multimodal LLMs | Pre-training loss, alignment objectives | Good coverage; little DT consideration | LLMs, cross-modal AI |
| Cao et al. (2025) [5] | AIGC overview | GAN/diffusion model equations, generative objectives | Captures model diversity; limited DT context | AIGC, creative industries |

| Sector | Typical Validation (Examples) | Representative Works (Ref. No.) | Maturity | Key Challenges |
|---|---|---|---|---|
| Smart Manufacturing | Case studies (throughput via PMA); DT + GAI predictive maintenance; simulation benchmarks for design optimization | [139,140,141,142] | High (pilots on selected production lines) | Standardization across lines; data integration; cross-plant generalization |
| Smart Cities | City-scale traffic twins (real-time control); DT-enhanced evacuation simulations (response time); what-if analysis | [143,144] | Medium (many pilots; limited citywide operation) | Real-time fusion; multi-agent coordination; unified simulation standards |
| Autonomous Driving | Twin-driven RL training; sim2real evaluation; vehicle-in-the-loop via digital siblings | [145,146] | Emerging to medium | Scenario realism; sim2real gap; safety certification |
| Healthcare | Patient-specific DTs; surgical rehearsal; virtual clinical trials (in silico cohorts) | [74,75,147] | High in research; medium in clinical translation | Privacy and ethics; interoperability; computational scalability |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Luo, X.; Wang, A.; Zhang, X.; Huang, K.; Wang, S.; Chen, L.; Cui, Y. Toward Intelligent AIoT: A Comprehensive Survey on Digital Twin and Multimodal Generative AI Integration. Mathematics 2025, 13, 3382. https://doi.org/10.3390/math13213382
