1. Introduction

The increasing global demand for reliable and sustainable energy solutions, particularly in remote and off-grid locations, has driven substantial progress in decentralized power systems. Among these, DCMGs have emerged as a highly promising technology due to their inherent capability to integrate various renewable energy sources, such as PV panels, alongside energy storage devices including batteries and supercapacitors [1,2,3,4]. The natural ability of DCMGs to maintain balanced power sharing among their components not only enhances overall energy efficiency but also significantly improves system reliability. This intrinsic balance simplifies control strategies and ensures steady operation, even when the microgrid operates autonomously from the main utility grid, which is crucial for isolated applications [1,2].

However, maintaining consistent power flow symmetry within DCMGs remains a complex challenge. The intermittent nature of renewable generation combined with fluctuating load demands introduces uncertainties that necessitate sophisticated control mechanisms. Conventional controllers, such as PI regulators, are widely used for their simplicity and ease of implementation. Yet, these controllers often require meticulous parameter tuning and may fall short in maintaining stable power distribution during sudden load changes or highly dynamic operating conditions [5].

To address these limitations, FLSs have been applied due to their ability to manage nonlinearities and uncertainties by employing heuristic rules that approximate human reasoning. Despite their adaptability, FLS-based controllers may struggle to sustain optimal energy balance when faced with rapid and complex variations in microgrid conditions [6]. SMC techniques offer robust performance and are effective in handling system uncertainties and disturbances; however, fully ensuring energy symmetry and stability in DC microgrids using SMC alone remains an active research area.

Previous research proposed a hierarchical energy management framework that synergistically combines nonlinear SMC with fuzzy logic control to stabilize DCMGs composed of PV arrays, batteries, and supercapacitors [7]. This hybrid approach demonstrated enhanced dynamic stability and improved energy sharing compared to traditional linear control methods. Nonetheless, the fuzzy logic component exhibited certain limitations in coping with highly variable operating scenarios.

In light of these challenges, AI techniques, particularly RL, have attracted considerable interest in the context of energy management. RL empowers agents to learn optimal control policies through continuous interaction with their environment, making it well-suited for systems characterized by uncertainty and nonlinearity [8,9,10]. Among these methods, DQL stands out by combining Q-learning with deep neural networks, enabling efficient handling of large state-action spaces and improving adaptability in complex microgrid operations [11]. Recent research further supports this trend: Wang et al. [12] explored advanced deep reinforcement learning methods for complex decision-making tasks; Liu et al. [13] investigated intelligent optimization algorithms that promote adaptive, data-driven control; Kim et al. [14] examined adaptive control methods in smart energy systems; and Tanaka et al. [15] analyzed hybrid control architectures designed for power electronics and distributed energy systems.

While the concept of multi-agent systems is discussed here to position this work within the broader research landscape and to highlight potential future extensions toward distributed and coordinated control [16], the framework implemented and validated in this study is strictly single-agent. All results and analyses correspond to a single-agent DQL controller working alongside nonlinear SMC to enhance decision-making performance and robustness; no multi-agent configuration was validated experimentally.

More recently, Zhang et al. (2025) presented an intelligent energy management framework for DC microgrids that combines sliding mode control with fuzzy logic, closely aligning with the approach we build upon and reinforcing the scientific foundation of integrating SMC and FLS techniques [17]. Furthermore, the comprehensive review by Chen et al. (2024) offers an insightful analysis of DC microgrid architectures and practical applications, enriching the context of recent advances in this rapidly evolving field [18].

Building upon these foundational studies [7,13], this paper introduces an enhanced energy management system that incorporates a DQL strategy to optimize real-time operations of energy storage units, including charging, discharging, and load shedding. The objective is to maintain DC bus voltage stability, extend the lifespan of storage components, and improve the overall efficiency of the microgrid under dynamically changing conditions.

The main contributions of this study can be summarized as follows:

- We propose a two-layer control framework in which an SMC layer ensures fast and robust DC bus voltage regulation under disturbances, while a DQL layer performs high-level decision making for energy storage coordination and load management. Unlike most state-of-the-art hybrid DRL-classical control strategies that rely on linear PI/PID controllers, our use of nonlinear SMC enhances robustness against modeling uncertainties and sudden operating condition changes in DC microgrids.

- The proposed DQL agent simultaneously optimizes the operation of both batteries and supercapacitors, balancing SOC levels to reduce asymmetric degradation. This unified approach contrasts with prior works that often manage storage devices independently or use static rule-based allocation.

- Simulation results under rapidly varying solar irradiance and load profiles show significant improvements over a fuzzy logic controller baseline: approximately 60% reduction in voltage fluctuations, 42% fewer deep battery discharge events, and 35% reduction in load shedding.

- The DQL architecture and training parameters were selected to achieve strong decision-making capabilities while maintaining computational lightness, making the proposed strategy suitable for real-time deployment in embedded microgrid controllers.

The remainder of this paper is organized as follows:

Section 2 describes the detailed model of the DC microgrid and its constituent components;

Section 3 discusses the design and stability analysis of nonlinear sliding mode controllers;

Section 4 highlights the limitations of the existing fuzzy-logic-based strategy and presents the proposed single-agent DQL method;

Section 5 details the simulation setup and training process, followed by a comparative discussion of results; and

Section 6 concludes the study and outlines directions for future research.

3. Nonlinear Sliding Mode Control

Using the models introduced previously, this section formulates nonlinear control laws based on sliding mode theory, aimed at ensuring voltage regulation and energy flow control at the converter level.

The SMC layer is selected for its robust and fast stabilization of the DC bus voltage. Each controller is presented in the context of the system’s dynamic challenges, showing how it contributes to maintaining power balance and voltage stability, and the section concludes by linking this control layer to the higher-level AI-based decision-making system. Each controller is developed from the dynamic models described previously and tracks predefined reference values that optimize the operation of the corresponding component.

To achieve stable and balanced power flow despite fluctuations in renewable generation and load demand, the system integrates a robust nonlinear SMC layer for real-time voltage regulation. Complementing this, an AI-based decision-making layer using DQL learns to optimize higher-level strategies such as charging, discharging, and load shedding. Together, these layers improve dynamic performance while aiming to maintain voltage stability, reduce stress on storage components, and enhance overall energy efficiency.

3.1. SMC Design for the PV Generator

The objective of the PV subsystem control is to ensure that the photovoltaic panel operates at its MPP. The desired reference current is obtained through an MPPT algorithm. The control task is to force the inductor current Ipv to track this reference.

The sliding surface for the PV subsystem is defined on the current tracking error, i.e., the difference between Ipv and its reference.

The control law is designed to satisfy the sliding condition:

where kpv is a positive gain selected to ensure rapid convergence.

Differentiating the sliding surface and substituting the PV dynamic model,

The equivalent control and switching terms are derived to compute the appropriate duty cycle:
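For orientation, a conventional first-order sliding-mode design for current tracking takes the generic form below. This is a sketch under stated assumptions rather than the paper's exact expressions: the reference symbol, the surface name, and the gain name are assumed notation, and the duty-cycle law is not reproduced because it depends on the converter model of Section 2.

```latex
\begin{aligned}
S_{pv} &= I_{pv} - I_{pv}^{ref}, \\
\dot{S}_{pv} &= -k_{pv}\,\operatorname{sign}(S_{pv}), \qquad k_{pv} > 0, \\
S_{pv}\,\dot{S}_{pv} &= -k_{pv}\,\lvert S_{pv}\rvert < 0 \quad \text{for } S_{pv} \neq 0, \\
t_{reach} &= \frac{\lvert S_{pv}(0)\rvert}{k_{pv}} .
\end{aligned}
```

Under this reaching law the surface is reached in finite time; a larger gain shortens the reaching phase at the cost of stronger chattering.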

3.2. SMC for the Battery Storage Unit

For the battery, the control objective is to regulate the battery current Ib according to a reference current determined by the energy management strategy.

The sliding surface for the battery is

The dynamics of the surface are

The SMC law enforces

where kb > 0 is the control gain ensuring finite-time convergence.

Solving for the duty cycle:

3.3. SMC for the Supercapacitor Module

The supercapacitor controller is responsible for absorbing or injecting power to counteract rapid load variations, thereby stabilizing the DC bus voltage.

The supercapacitor sliding surface is defined as

Similarly, the dynamic equation for Ssc becomes

The SMC condition to be satisfied is

with ksc as a strictly positive control gain.

Solving for the duty cycle:
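The behavior of such a current loop can be illustrated numerically. The sketch below is not the paper's converter model: it assumes a simple averaged boost-type relation `L * dI/dt = v_in - (1 - d) * v_dc`, a constant reference, and hypothetical values for `v_in`, `k`, and the time step. Only the 3 mH inductance and the 48 V bus level echo figures quoted elsewhere in the paper.

```python
def sign(x: float) -> float:
    """Return -1.0, 0.0 or 1.0 according to the sign of x."""
    return float((x > 0) - (x < 0))


def simulate_smc_current_loop(i_ref=5.0, v_in=24.0, v_dc=48.0, L=3e-3,
                              k=2000.0, dt=1e-6, t_end=5e-3):
    """Euler simulation of an assumed boost-type inductor current loop.

    Assumed averaged model (illustrative, not taken from the paper):
        L * dI/dt = v_in - (1 - d) * v_dc
    Sliding surface: S = I - i_ref; reaching law: dS/dt = -k * sign(S).
    Returns the list of current samples.
    """
    i = 0.0
    history = []
    for _ in range(int(round(t_end / dt))):
        s = i - i_ref
        # Duty cycle that imposes dI/dt = -k * sign(S) on the assumed model.
        d = 1.0 - (v_in + L * k * sign(s)) / v_dc
        d = min(max(d, 0.0), 1.0)  # physical saturation of the duty cycle
        di_dt = (v_in - (1.0 - d) * v_dc) / L
        i += di_dt * dt
        history.append(i)
    return history


if __name__ == "__main__":
    currents = simulate_smc_current_loop()
    print(f"final current: {currents[-1]:.4f} A")
```

With these assumed values the surface starts at 5 A of error and closes at 2000 A/s, so the reaching phase lasts about 2.5 ms, after which the current chatters in a narrow band around the reference.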

3.4. Interconnected System Stability Analysis

The sliding mode controllers presented in Section 3.1, Section 3.2 and Section 3.3 are designed to guarantee finite-time convergence of the respective controlled currents, namely, the PV generator current Ipv, the battery current Ib, and the supercapacitor current Isc, towards their reference values. While the stability of each individual subsystem has been analyzed independently, the physical interconnection through the common DC bus voltage VDC introduces strong coupling between them.

Therefore, an extended stability analysis is required, in which the DC bus voltage regulation objective is explicitly included in the Lyapunov stability framework.

According to the DC microgrid model presented in Equation (4), the evolution of the DC bus voltage is directly influenced by the interplay between the source currents, the duty cycles applied to the converters, and the load current.

The reference generation mechanism is designed such that the sum of the source currents satisfies the power balance required to regulate the DC bus voltage around its reference value, even in the presence of load variations and source intermittency.

To explicitly incorporate the voltage regulation objective, an additional sliding surface is introduced for the DC bus voltage:

The DC bus voltage error is intrinsically linked to the current tracking errors via the coupling relation (4). Any deviation in one of the current loops directly affects the DC bus voltage, which justifies its integration into the global stability analysis.

To assess the stability of the interconnected system, a composite Lyapunov candidate function is proposed:

A positive scalar acts as a weighting coefficient to balance the contribution of the bus voltage error relative to the current tracking errors. The function V(t) is positive definite and vanishes only when all tracking errors are zero, i.e., when every sliding surface is zero.

The time derivative of V(t) is obtained as

Using the sliding mode control laws applied to each subsystem,

and substituting the DC bus dynamics from (4) into the derivative of SDC, we obtain

Substituting (21) and (22) into (20) yields

This inequality ensures that V(t) is non-increasing and that all sliding surfaces converge to zero in finite time. Consequently,

By embedding the DC bus voltage error SDC into the composite Lyapunov function, the stability proof captures the coupling effects inherent to the DC microgrid. This approach not only confirms that each subsystem achieves its local tracking objective but also ensures that the overall system maintains global asymptotic stability. The interconnection analysis highlights the coordinated role of all converters in simultaneously regulating the bus voltage and tracking the current references, even under dynamic operating conditions such as load steps or source variations.

This unified analysis reinforces the effectiveness and robustness of the proposed control strategy, confirming that even in a multi-component architecture, individual convergence leads to stable global behavior.
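A composite Lyapunov argument of this kind is commonly written as below. This is a hedged sketch: the weighting symbol λ, the surface names for the PV and battery loops, the gain names, and the assumption that the DC bus surface also satisfies a reaching-type inequality are illustrative and are not taken from the paper's equations.

```latex
\begin{aligned}
V &= \tfrac{1}{2}\left(S_{pv}^{2} + S_{b}^{2} + S_{sc}^{2}\right) + \tfrac{\lambda}{2}\,S_{DC}^{2}, \qquad \lambda > 0, \\
\dot{V} &= S_{pv}\dot{S}_{pv} + S_{b}\dot{S}_{b} + S_{sc}\dot{S}_{sc} + \lambda\,S_{DC}\dot{S}_{DC}, \\
\dot{V} &\le -k_{pv}\lvert S_{pv}\rvert - k_{b}\lvert S_{b}\rvert - k_{sc}\lvert S_{sc}\rvert - \lambda k_{DC}\lvert S_{DC}\rvert \\
        &\le -\kappa\sqrt{2V}, \qquad \kappa = \min\!\left(k_{pv},\, k_{b},\, k_{sc},\, \sqrt{\lambda}\,k_{DC}\right).
\end{aligned}
```

The last bound follows because the sum of absolute values of the four terms is at least their Euclidean norm, which equals the square root of 2V. It implies V(t) = 0 for all t ≥ √(2V(0))/κ, which is the finite-time convergence the analysis refers to.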

5. Validation of the Approach on the DC Microgrid System

This section examines the behavior of the proposed hybrid control strategy under simulated operating scenarios, demonstrating its robustness and efficiency.

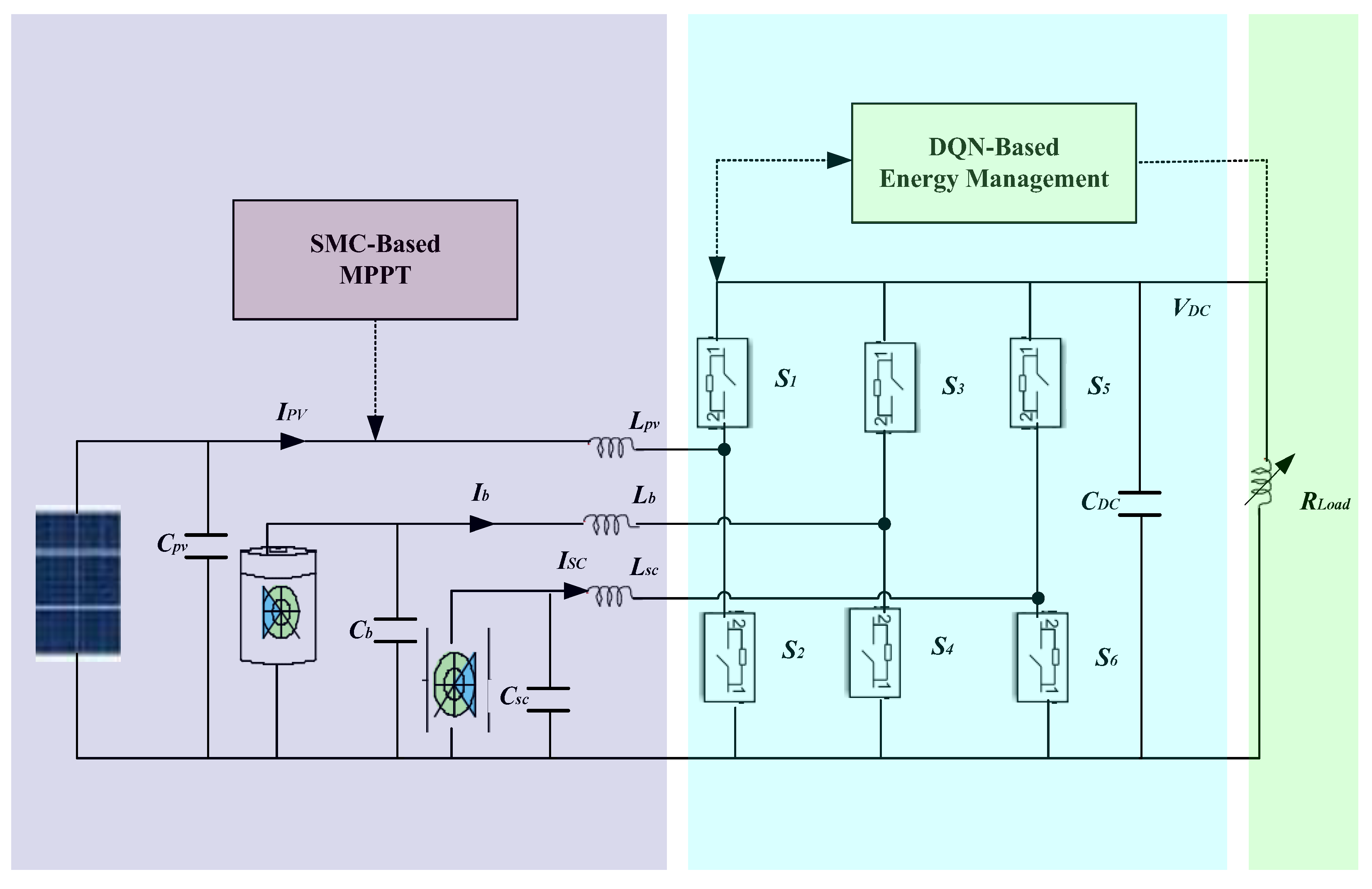

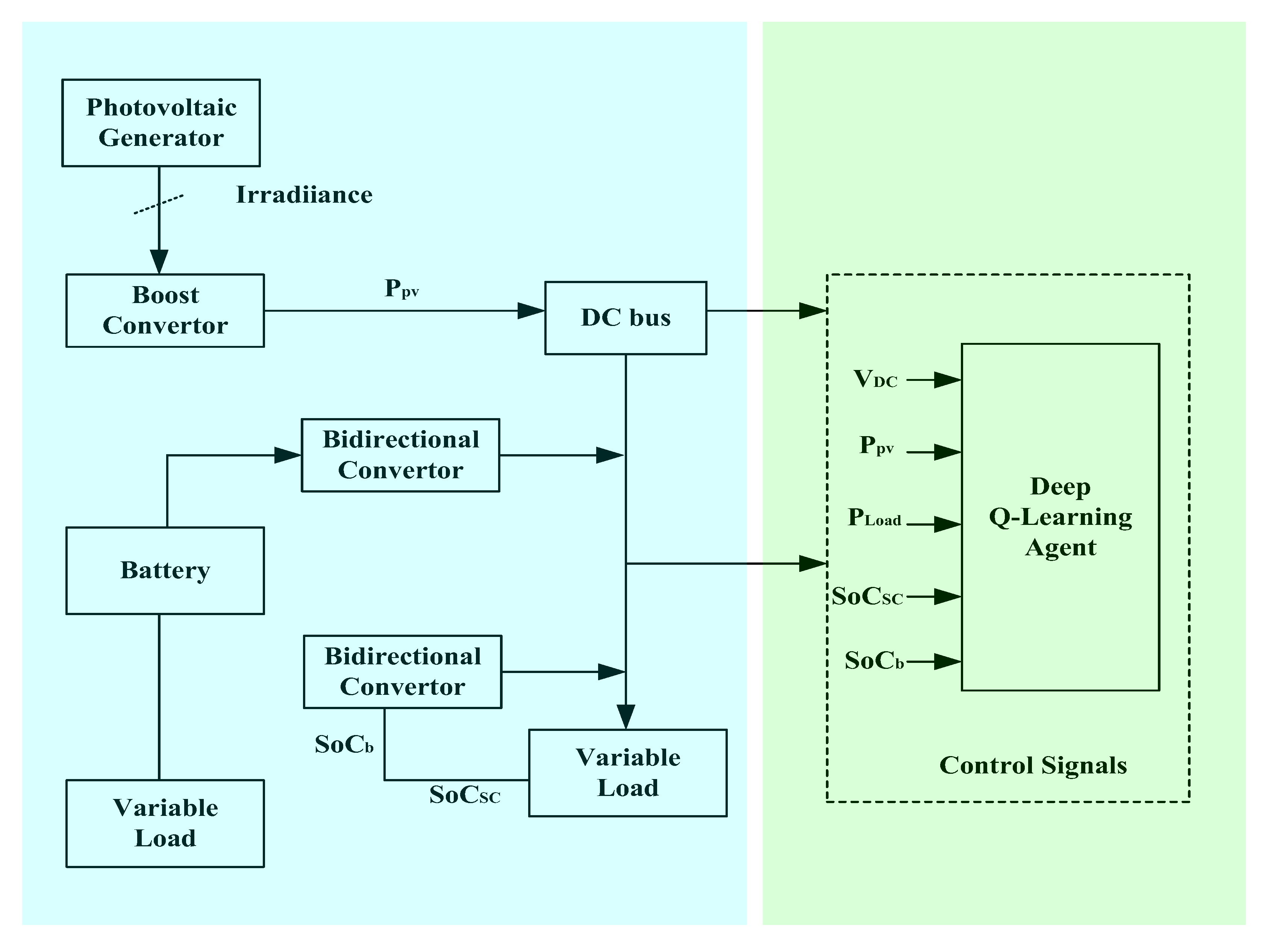

To validate the proposed hybrid energy management strategy, a detailed simulation model of the DCMG was developed and implemented in MATLAB R2023b/Simulink. The architecture of this model is illustrated in Figure 4.

Figure 4 presents a schematic overview of this simulation model. The simulation environment mirrors the system architecture described in Section 2 and implements the proposed hybrid control scheme. The system is managed by a DQL agent that receives real-time information, such as PV power, load demand, and SoC levels, and generates control actions for optimal coordination between storage units and load balancing.

5.1. Deep Q-Learning Implementation Details

The choice of network architecture and size was guided by iterative preliminary experiments aimed at balancing training stability, computational cost, and control performance under highly dynamic operating conditions.

The DQN agent used in this study was implemented as a feedforward neural network consisting of an input layer, two hidden layers, and an output layer. Each hidden layer contains 128 neurons and uses the ReLU activation function to introduce nonlinearity while maintaining computational efficiency.

The main hyperparameters selected for training are as follows:

Batch size: 64;

Learning rate: 0.001;

Discount factor (γ): 0.99;

Target network update frequency: every 200 training steps;

Replay buffer size: 10,000 experiences.

These hyperparameters were chosen to ensure stable learning while allowing the agent to respond effectively to rapid fluctuations in renewable generation and load demand. The relatively small number of hidden layers and moderate node count keep the model lightweight, which is suitable for real-time control applications on embedded systems.
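To make the "lightweight" claim concrete, the sketch below collects the quoted hyperparameters in one configuration object and counts the trainable parameters of the resulting fully connected network. The state dimension of 5 follows the five state variables named in this section, while the number of discrete actions (`n_actions = 4`) is a hypothetical value, since the size of the action set is not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DQNConfig:
    # Values quoted in the text above.
    hidden_sizes: tuple = (128, 128)
    batch_size: int = 64
    learning_rate: float = 1e-3
    gamma: float = 0.99
    target_update_steps: int = 200
    replay_capacity: int = 10_000
    # Assumptions for illustration only.
    state_dim: int = 5   # VDC, PPV, SoCbat, SoCsc, Pload
    n_actions: int = 4   # hypothetical size of the discrete action set


def count_parameters(cfg: DQNConfig) -> int:
    """Count weights and biases of the fully connected network."""
    sizes = (cfg.state_dim, *cfg.hidden_sizes, cfg.n_actions)
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    cfg = DQNConfig()
    n = count_parameters(cfg)
    print(f"{n} parameters, about {4 * n / 1000:.0f} kB in 32-bit floats")
```

Under these assumptions the network has 17,796 parameters, roughly 70 kB in 32-bit floating point, which is consistent with the embedded-deployment argument made above.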

For a complete description of the DQL agent’s structure and learning framework, please refer to Section 4.3.

To enhance the stability and reliability of the training process, all variables composing the state vector, including the DC bus voltage (VDC), photovoltaic power output (PPV), battery and supercapacitor state-of-charge levels (SoCbat, SoCsc), and load demand (Pload), were rescaled through min–max normalization.

It should be noted that, although the supercapacitor’s state-of-charge is part of the state vector, the present implementation did not include any mechanism to account for its degradation or lifetime effects. Consequently, the DQL agent’s policy optimization was based solely on instantaneous electrical performance, without reflecting potential long-term impacts on SC health.

The min–max transformation maps each input variable xi into the standard range [0,1] using the following relation:

This normalization process ensures balanced feature scaling, preventing any individual input from disproportionately influencing the network during the learning phase.
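A minimal sketch of this scaling step is given below, using the standard min–max form (x - x_min) / (x_max - x_min). The bounds in `BOUNDS` are hypothetical placeholders chosen only for illustration, and the clipping of out-of-range values is an added safeguard that the text does not mention.

```python
def min_max_normalize(x, x_min, x_max):
    """Map x from [x_min, x_max] to [0, 1]; values outside are clipped."""
    if x_max <= x_min:
        raise ValueError("x_max must be greater than x_min")
    scaled = (x - x_min) / (x_max - x_min)
    return min(max(scaled, 0.0), 1.0)


# Hypothetical bounds for each state variable (illustrative only).
BOUNDS = {
    "v_dc": (40.0, 56.0),     # V
    "p_pv": (0.0, 1000.0),    # W
    "soc_bat": (0.0, 1.0),
    "soc_sc": (0.0, 1.0),
    "p_load": (0.0, 1500.0),  # W
}


def normalize_state(raw):
    """Return the normalized state as a list ordered like BOUNDS."""
    return [min_max_normalize(raw[name], *BOUNDS[name]) for name in BOUNDS]


if __name__ == "__main__":
    sample = {"v_dc": 48.0, "p_pv": 500.0, "soc_bat": 0.6,
              "soc_sc": 0.5, "p_load": 750.0}
    print(normalize_state(sample))
```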

In the same spirit, the reward function introduced in Equation (25) is constructed as a weighted sum of normalized indicators that reflect key performance objectives.

Each component function fi(⋅) is designed to return values within bounded and comparable ranges, corresponding respectively to voltage stability, battery charge regulation, minimization of load shedding, and reduction of battery energy stress. The associated weights ωi ∈ [0,1] were selected to align with the control priorities of the energy management system. This formulation ensures that the total reward remains within a manageable scale and supports consistent and robust policy convergence during DQL.
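The structure of such a reward can be sketched as follows. The four component functions and the weights are hypothetical stand-ins: the paper's Equation (25) is not reproduced here, so the tolerance values, the SoC target, and the convention that each component returns 1 for the best case and 0 for the worst are all assumptions.

```python
def clamp01(x):
    """Clamp a value to the interval [0, 1]."""
    return min(max(x, 0.0), 1.0)


def reward(v_dc, soc_bat, shed_fraction, i_bat,
           v_ref=48.0, v_tol=5.0, soc_target=0.6, i_max=20.0,
           weights=(0.4, 0.2, 0.3, 0.1)):
    """Weighted sum of bounded indicators (all names are illustrative)."""
    f_voltage = 1.0 - clamp01(abs(v_dc - v_ref) / v_tol)      # voltage stability
    f_soc = 1.0 - clamp01(abs(soc_bat - soc_target) / 0.5)    # charge regulation
    f_shed = 1.0 - clamp01(shed_fraction)                     # load shedding
    f_stress = 1.0 - clamp01(abs(i_bat) / i_max)              # battery stress
    components = (f_voltage, f_soc, f_shed, f_stress)
    return sum(w * f for w, f in zip(weights, components))


if __name__ == "__main__":
    print(reward(v_dc=47.5, soc_bat=0.55, shed_fraction=0.0, i_bat=4.0))
```

Because every component lies in [0, 1] and the assumed weights sum to one, the total reward also stays in [0, 1], which is the bounded-scale property described above.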

This architecture and tuning approach contribute to the robust and adaptive behavior of the proposed hybrid control system, as demonstrated by the improved voltage regulation and balanced operation of storage units in the simulation results.

5.2. Training Environment and Simulation-Based Validation

To train the DQL agent for intelligent energy management, a realistic and high-fidelity simulation environment was developed using MATLAB/Simulink in conjunction with the SimPowerSystems toolbox. This environment emulates the dynamic behavior of the DC microgrid as described in Section 2, incorporating all key components: photovoltaic generator, battery, supercapacitor, variable loads, and the associated DC-DC converters. Nonlinear SMCs were integrated to regulate each converter in real time.

i. Scenario Generation for Learning

To ensure the agent learnt robust policies applicable under a variety of operating conditions, multiple training scenarios were generated, including

Variable solar irradiance profiles, simulating different weather conditions such as clear skies, cloud cover, and rapid fluctuations;

Dynamic load demand patterns, including slow ramping as well as abrupt load increases or decreases;

Extreme conditions, such as sudden generation losses, deep battery discharges, or transient overloading.

These scenarios aim to expose the agent to both typical and edge-case behaviors, ensuring a well-rounded learning process.

ii. Neural Network Architecture

The DQL agent relies on a DQN to approximate the action value function Q(s,a), which estimates the expected cumulative reward of taking action a in state s. The architecture of the network was structured as follows:

Input layer: receives the current state vector s;

Hidden layers: two or three fully connected layers using ReLU (Rectified Linear Unit) activation functions;

Output layer: provides a Q-value for each possible action, used to guide the decision-making process.

The network was trained by minimizing the temporal difference loss, based on the Bellman equation, using stochastic gradient descent to update the network weights.
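For a single transition, the temporal difference target and loss used in standard DQN training can be written as in the sketch below. The function names are illustrative, and the squared-error form of the loss is the common textbook choice rather than a detail confirmed by the paper.

```python
def td_target(reward, next_q_values, gamma=0.99, done=False):
    """Bellman target y = r + gamma * max_a' Q_target(s', a')."""
    if done:
        return reward  # no bootstrapping from a terminal state
    return reward + gamma * max(next_q_values)


def td_loss(q_sa, target):
    """Half squared temporal-difference error for one transition."""
    return 0.5 * (target - q_sa) ** 2


if __name__ == "__main__":
    y = td_target(reward=1.0, next_q_values=[0.5, 2.0, 1.0])
    print(y, td_loss(q_sa=2.0, target=y))
```

In practice the loss is averaged over a mini-batch sampled from the replay memory, and `next_q_values` comes from the target network rather than the online network.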

iii. Learning Parameters

The DQL agent was trained following established reinforcement learning principles, with the following hyperparameters:

Learning rate: 0.001, controlling the step size during weight updates;

Discount factor γ: typically set between 0.95 and 0.99 to balance immediate and future rewards;

Exploration–exploitation strategy: ε-greedy policy where ε decays gradually from 1.0 to 0.01 across training episodes;

Replay memory: a circular buffer that stores state-action-reward-next state transitions, from which batches are randomly sampled to break temporal correlations;

Target network update frequency: every fixed number of steps (e.g., every 500 iterations) to stabilize learning by decoupling the target Q-value estimation.
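The mechanisms listed above can be sketched with the standard library alone. The exponential shape of the ε schedule is an assumption (the text only gives the end points 1.0 and 0.01), and the 500-step synchronization period is the example value mentioned above; all class and function names are illustrative.

```python
import random
from collections import deque


class ReplayBuffer:
    """Circular buffer of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity=10_000):
        self.buffer = deque(maxlen=capacity)  # oldest entries drop out first

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size, rng=random):
        # Uniform random sampling breaks temporal correlations.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def epsilon_at(episode, n_episodes=3000, eps_start=1.0, eps_end=0.01):
    """Exponential decay from eps_start to eps_end (assumed schedule shape)."""
    frac = min(max(episode / (n_episodes - 1), 0.0), 1.0)
    return eps_start * (eps_end / eps_start) ** frac


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice over a list of Q-values."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=q_values.__getitem__)  # exploit


def should_sync_target(step, period=500):
    """True on the steps where the target network copies the online weights."""
    return step > 0 and step % period == 0
```

A training loop would call `select_action` with `epsilon_at(episode)`, push each transition into the buffer, sample a batch once enough transitions are stored, and copy the online weights to the target network whenever `should_sync_target` returns true.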

iv. Offline Training and Convergence

The entire training process is conducted offline, meaning that the agent interacts exclusively with the simulated microgrid model and does not control a physical system during training. Throughout successive training episodes, the agent explores the environment, accumulates experience, and updates its Q-network to improve its decision-making policy.

Training is considered complete once the agent demonstrates stable behavior according to the reward criteria defined in Section 4.2, including:

Maintaining DC bus voltage close to its desired reference;

Minimizing unnecessary load shedding;

Efficiently managing the state of charge of the battery and supercapacitor;

Enhancing overall energy efficiency under dynamic and uncertain operating conditions.

v. Details of the DQL Controller

The DQL agent was trained using 3000 simulation episodes, each one emulating a full operational cycle of the hybrid energy management system. These episodes were designed to reflect diverse operating conditions, incorporating dynamic variations in electrical loads, fluctuating PV generation profiles, and randomized initial values for the SoC of the battery and supercapacitor.

The training convergence was monitored through the progression of the accumulated reward, which showed a clear stabilization trend after around 2500 episodes.

In addition to this reward plateau, the agent’s decision-making behavior became consistent—demonstrating stable energy distribution strategies and reliable adherence to operational constraints such as voltage regulation and SoC limits.

To validate the generalization capability of the trained agent, the training and evaluation phases were conducted on separate datasets. While the agent was exposed to randomized training scenarios, the performance assessment was carried out using unseen test cases, including novel load change sequences and PV generation profiles not encountered during learning.

This methodology ensures that the final control policy remains robust and effective even under previously untested and varied operating conditions.
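One simple way to turn the "reward plateau" observation into a check is to compare the mean episode reward over two consecutive windows. The criterion below is an assumption made for illustration; the window length and tolerance are hypothetical, and the paper does not state how stabilization was quantified.

```python
def has_converged(rewards, window=200, rel_tol=0.02):
    """Return True when the last two reward windows have similar means."""
    if len(rewards) < 2 * window:
        return False  # not enough episodes to compare two windows
    recent = sum(rewards[-window:]) / window
    previous = sum(rewards[-2 * window:-window]) / window
    scale = max(abs(previous), 1e-9)  # avoid division by zero
    return abs(recent - previous) / scale <= rel_tol


if __name__ == "__main__":
    still_rising = list(range(400))
    flat = [10.0] * 400
    print(has_converged(still_rising), has_converged(flat))
```

A plateau in the training reward does not by itself demonstrate generalization, which is why the evaluation on unseen load and PV profiles described above remains the decisive test.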

vi. Hyperparameter Selection and Limitations

In this study, the configuration of the DQL agent was carried out empirically, relying on iterative testing and commonly adopted practices in the literature. The values selected for the main hyperparameters such as learning rate, discount factor, exploration strategy, and neural network structure were chosen to ensure convergence, learning stability, and satisfactory control performance across diverse simulated conditions.

While these parameters yielded acceptable results within the tested scenarios, we recognize that they were not obtained through a systematic tuning procedure. No automated search techniques such as grid search, random search, or Bayesian optimization were applied to explore the hyperparameter space exhaustively.

Consequently, the current configuration should be regarded as functional but not necessarily optimal. A more structured optimization process could further improve the controller’s efficiency, learning speed, and robustness, especially in edge cases.

For transparency, Table 1 presents the full list of hyperparameters adopted in this work, along with their corresponding values and brief justifications.

5.3. Simulation Results

All simulations were performed in MATLAB/Simulink to validate the effectiveness of the proposed control strategy under various operating conditions, using the following circuit parameters: Lpv = 3 mH, Cpv = 1000 µF, Lb = 3 mH, Cb = 1000 µF, Lsc = 2 mH, Csc = 2000 µF, and CDC = 2200 µF.

The evaluated metrics included the number of deep discharge cycles experienced by the battery and supercapacitor; the DoD, which quantifies how deeply the storage units are discharged during operation; and the average and peak currents drawn from each storage device under different scenarios.

The simulation results clearly illustrate the performance difference between a conventional fuzzy logic controller and a DQN agent applied to energy management in a DC microgrid. In the case of fuzzy logic control, voltage regulation suffers from instability, particularly during disturbances such as a drop in solar irradiance.

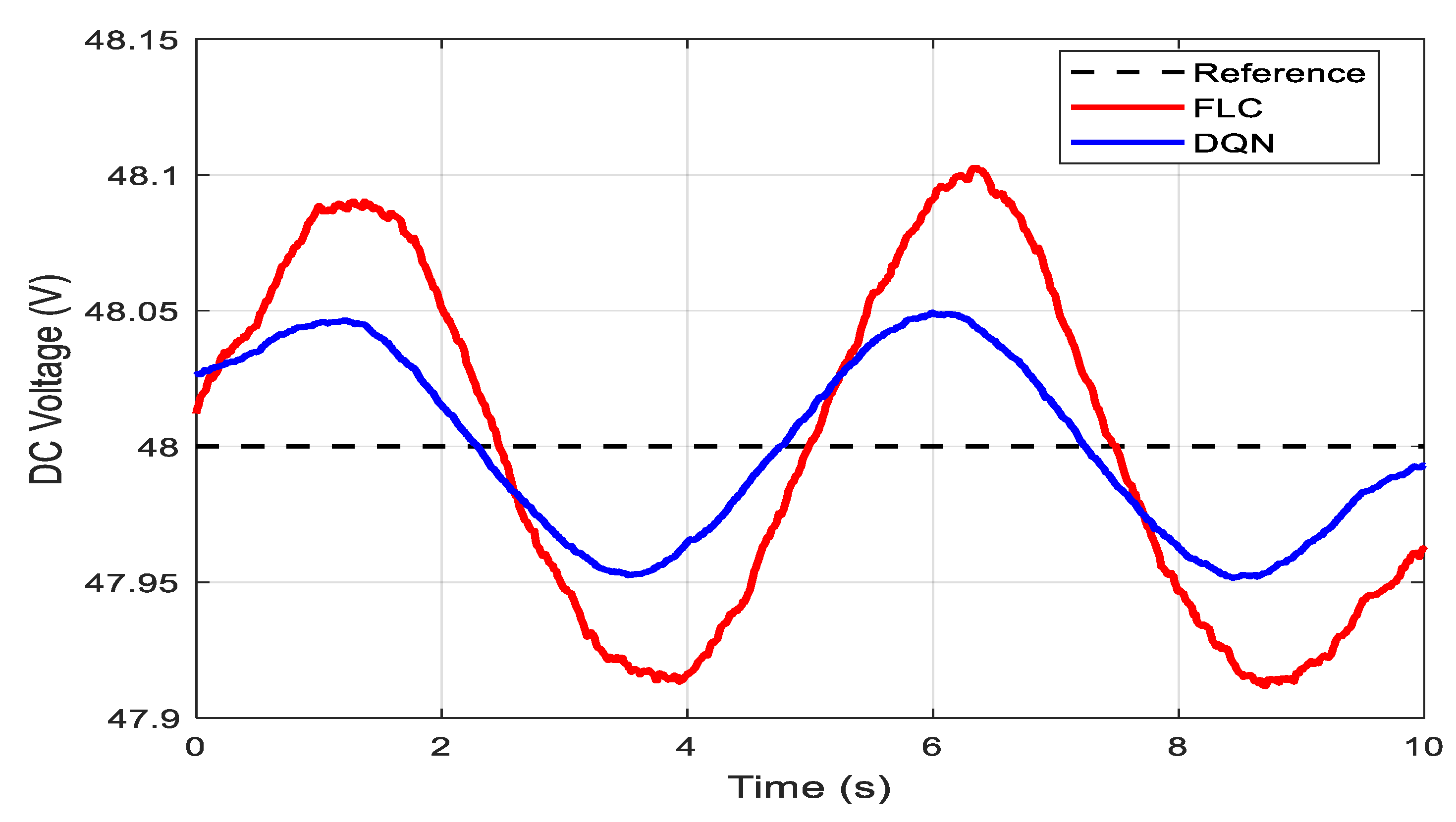

Figure 5 shows the comparison of DC bus voltage profiles under dynamic load conditions when using the proposed hybrid control strategy versus the conventional FLC. The graph highlights that the hybrid method effectively kept voltage deviations within a narrower range, reducing fluctuations from around ±2.3 V (FLC) to approximately ±0.9 V. This improvement reflects the combined benefits of fast real-time stabilization provided by the sliding mode control layer and the adaptive decision-making of the DQL agent.

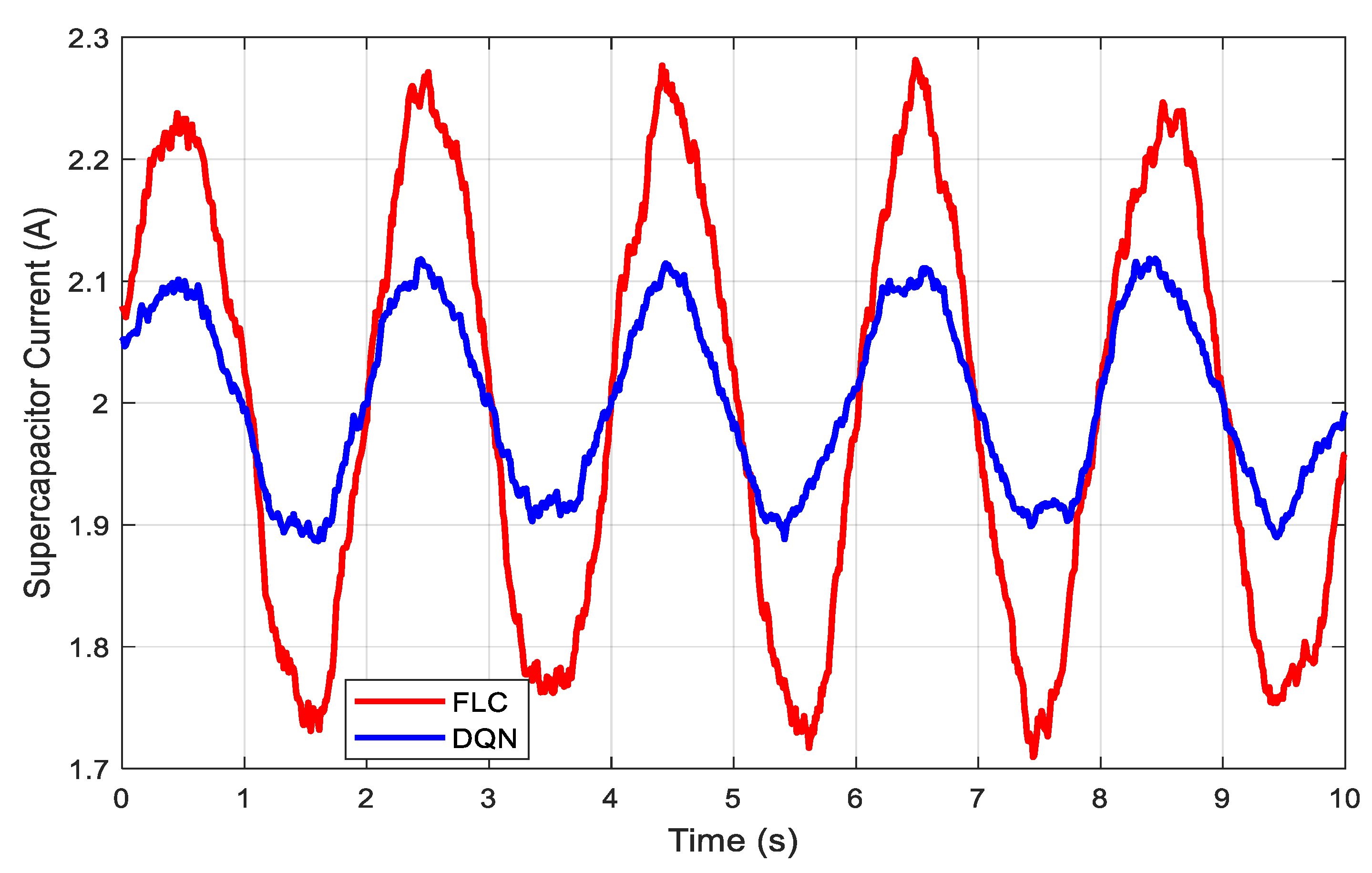

Figure 6 presents the number of instances where the battery’s SoC dropped below 20%, defined as deep discharge events. The proposed approach lowered these events by about 42% compared to the fuzzy logic controller, demonstrating more balanced battery usage and reduced risk of accelerated degradation.

Figure 7 illustrates the percentage of time during which load shedding occurred in the simulation. Under the hybrid control strategy, load shedding was reduced from roughly 18.7% to 12.2%. This indicates that the system managed to supply power more reliably even during variations in demand and renewable generation, thanks to improved coordination between storage units and intelligent decision making.

Unlike the fuzzy logic controller, which caused significant current fluctuations and overused the battery, the DQN agent intelligently coordinated the actions of the battery and the supercapacitor. By allowing the supercapacitor to handle transient events, the system reduced stress on the battery, resulting in smoother current profiles and significantly fewer and shallower charge–discharge cycles. This coordinated energy management strategy effectively extended the battery’s lifespan.

Figure 8 illustrates the SoC trajectories of the battery and supercapacitor when controlled by the proposed hybrid strategy. The curves show a more balanced and coordinated charging and discharging behavior compared to the fuzzy logic baseline. This balanced power sharing helps to prevent deep discharge cycles, prolonging the lifespan of storage devices and contributing to the overall efficiency of the microgrid.

To provide a fair and objective comparison between the proposed intelligent energy management strategy and the traditional fuzzy logic approach, several KPIs were evaluated. DC bus voltage stability was assessed based on the standard deviation and the range of variation from the nominal value, where the fuzzy logic method showed fluctuations of ±2.3 V, while the DQN-based approach reduced this to ±0.9 V. Battery longevity was indirectly analyzed by observing the frequency and depth of discharge cycles, with the DQN strategy achieving a 42% reduction in occurrences of discharge depths exceeding 80%, indicating less stress on the battery. Regarding load shedding, the conventional system disconnected loads 18.7% of the time, compared to only 12.2% under the DQN control, highlighting better energy distribution. Finally, response time to disturbances, defined as the interval between a disturbance and the restoration of voltage stability, was more than halved, improving from up to 0.6 s with fuzzy logic to under 0.25 s with the DQN controller.
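The relative improvements implied by these figures can be checked with a few lines of arithmetic. The helper name is illustrative; the input values are the ones reported in the paragraph above.

```python
def relative_reduction(baseline, proposed):
    """Percentage reduction of `proposed` with respect to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


voltage = relative_reduction(2.3, 0.9)     # voltage deviation band, V
shedding = relative_reduction(18.7, 12.2)  # share of time with load shedding, %
recovery = relative_reduction(0.6, 0.25)   # recovery time upper bounds, s

if __name__ == "__main__":
    print(f"voltage: {voltage:.1f} %, shedding: {shedding:.1f} %, "
          f"recovery: {recovery:.1f} %")
```

These evaluate to about 60.9%, 34.8%, and 58.3% respectively, in line with the approximately 60% and 35% reductions quoted elsewhere in the paper.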

In the current study, the simulation scenarios used controlled and approximately sinusoidal variations in load demand and PV generation. This choice was made to clearly illustrate the dynamic response and stability improvements of the proposed hybrid control strategy under predictable changes. However, we acknowledge that real-world energy systems are subject to highly stochastic fluctuations due to weather variability and unpredictable load behavior. To address this, future work will extend the simulations by incorporating random and historical data-driven renewable generation and load profiles, thereby evaluating the controller’s performance and robustness under more practical, stochastic operating conditions.

Regarding the control structure, it combines both discrete and continuous elements. The DQL agent outputs discrete high-level decisions, such as when to charge, discharge, or shed load. These decisions are made based on the system’s state and predefined action space. In contrast, the nonlinear SMC layer operates in continuous time to regulate the duty cycles of the power converters, ensuring fast real-time voltage stabilization. This hybrid design leverages the adaptability of discrete decision making with the precision of continuous control.
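The two time scales can be sketched as a fast inner loop with a slower supervisory decision. This is a schematic illustration only: the callbacks, the decision period, and the action labels are hypothetical, and the real inner loop would be the continuous-time SMC law rather than a Python function.

```python
def run_hybrid_loop(n_inner_steps, decision_period, choose_action, smc_step):
    """Run a fast inner loop with a slower supervisory decision layer.

    choose_action() -> discrete action label (supervisory layer)
    smc_step(action) -> None (one fast control update under that action)
    Returns the action that was active at each inner step.
    """
    log = []
    action = None
    for step in range(n_inner_steps):
        if step % decision_period == 0:
            action = choose_action()  # slow, discrete decision
        smc_step(action)              # fast, continuous regulation
        log.append(action)
    return log


if __name__ == "__main__":
    decisions = iter(["charge", "discharge", "shed"])
    applied = []
    print(run_hybrid_loop(6, 2, lambda: next(decisions), applied.append))
```

Holding the discrete action constant between decisions is what keeps the learning layer's computational load low while the converter-level regulation continues at full rate.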

5.4. Comparison Between Classical and AI-Based Energy Management

The simulation results show that the proposed DQN-based strategy significantly improved DC bus voltage stability, reducing voltage fluctuations compared to the FLC. This improvement helped maintain the voltage closer to its nominal value even under rapidly changing load and generation conditions.

In addition to this enhanced voltage stability, the DQN approach also led to better battery state-of-charge management and fewer load shedding events, which together contribute to more efficient and reliable microgrid operation.

i. Performance of DCMG Using Traditional Fuzzy Logic

In the baseline configuration, the energy management strategy relies on a multi-input fuzzy logic controller. This controller manages decisions related to load shedding, as well as the charging/discharging of both the battery and the supercapacitor. Although this technique ensures a degree of stability on the DC bus, several limitations have been noted:

Decision rigidity: Fuzzy logic works well for predictable conditions but lacks adaptability to sudden system changes such as abrupt load or irradiance variations.

High battery cycling: The fuzzy strategy tends to overuse the battery, leading to frequent deep charge/discharge cycles with large current amplitudes, ultimately shortening battery lifespan.

Suboptimal load shedding: In certain situations, the controller prematurely sheds loads when better source allocation could have maintained the DC bus voltage without compromising loads.

ii. Performance of DCMG with AI Agent (DQN)

The implementation of a DQN agent allows dynamic adaptation to real-time system states. The control decisions are based on real-time observations of various parameters such as the DC voltage, photovoltaic power, and the SoC of both the battery and the supercapacitor, along with load demands.

Significant benefits have been observed:

Improved voltage regulation: The DQN-based strategy maintained the DC bus voltage close to its nominal value (48 V), with minimal deviation (within ±1 V) even during disturbances.

Reduced battery stress: The AI agent prioritized the supercapacitor for handling transient peaks, which reduced deep battery cycles and potentially extended battery life.

Less load shedding: Load disconnection events were reduced by approximately 35% compared to the fuzzy logic approach.

Faster reaction: The AI agent better anticipated changes in load or generation and responded proactively.

5.5. Discussion

The simulation results demonstrate that the proposed hybrid energy management strategy, which combines nonlinear SMC with DQL, offers significant advantages over the FLC benchmark from [7]. Quantitatively, the proposed method reduced DC bus voltage fluctuations by approximately 60%, keeping the voltage closer to its nominal value even under highly dynamic load and generation conditions. This improvement directly enhances the stability and reliability of the microgrid.

Furthermore, the number of deep discharge cycles experienced by the battery and supercapacitor was reduced by around 42%, which is critical for extending the lifespan of storage components. The DoD was also kept within safer limits, preventing excessive stress on storage units. Additionally, the proposed approach lowered the share of time with load shedding from 18.7% to 12.2%, ensuring a more continuous power supply to critical loads.

These performance gains are achieved because the SMC layer provides fast and robust real-time voltage stabilization, while the DQL layer dynamically learns and refines high-level decision-making policies for charging, discharging, and load shedding. Unlike traditional rule-based or fuzzy logic strategies, which rely on fixed heuristics, the DQL agent adapts its policy through ongoing interaction with the system, allowing it to respond effectively to unpredictable variations in renewable generation and demand.

While the results clearly demonstrate superior performance over the FLC method, it is important to acknowledge that the study focused only on this specific baseline. As noted in the conclusion, a broader comparison with other advanced energy management strategies—such as MPC, ANN-based EMS, or other reinforcement learning algorithms like PPO or DDPG—will be explored in future work to further validate the effectiveness and generalizability of the proposed hybrid strategy.

Overall, the combination of SMC and DQL shows clear promise in addressing the inherent variability of DC microgrids by improving voltage stability, reducing storage system stress, and minimizing load shedding. These benefits highlight the potential of integrating reinforcement learning into hierarchical control architectures for intelligent and resilient energy management.

In terms of real-time performance, the hybrid control framework was designed to combine fast dynamic response with computational efficiency. The nonlinear SMC layer operates continuously to directly adjust converter duty cycles, enabling rapid voltage stabilization that aligns with real-time control requirements. In parallel, the DQL agent handles high-level decisions—such as when to charge, discharge, or shed load—at a lower update frequency, which helps keep the computational burden practical for embedded microgrid controllers.
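The two-rate structure described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB/Simulink implementation: the function names, the update ratio `SLOW_EVERY`, the gain, the state-of-charge thresholds, and the toy first-order bus response are all assumptions made for illustration, and a fixed rule stands in for the learned DQL policy. Only the 48 V nominal bus voltage comes from the paper.

```python
# Hypothetical two-rate loop: a fast SMC-like inner step runs every iteration,
# while a supervisory decision is refreshed only every SLOW_EVERY iterations.
# All names and numeric values except the 48 V nominal voltage are assumptions.

V_NOMINAL = 48.0    # nominal DC bus voltage reported in the paper (V)
SLOW_EVERY = 1000   # assumed ratio between fast and slow update rates


def fast_control(v_bus, v_ref, gain=0.05):
    """Stand-in for the SMC layer: a saturated, switching-style duty correction."""
    error = v_ref - v_bus
    s = max(-1.0, min(1.0, error))  # saturation acts as a smoothed sign() term
    return 0.5 + gain * s           # duty cycle around an assumed 0.5 operating point


def supervisory_decision(soc):
    """Stand-in for the DQL agent: a fixed rule replaces the learned policy."""
    if soc < 0.2:
        return "shed_load"
    if soc > 0.8:
        return "discharge"
    return "charge"


def run(n_steps, v_bus=47.0, soc=0.5):
    """Run the loop and return (slow-layer decisions, last duty cycle, final voltage)."""
    decisions = []
    duty = 0.5
    for k in range(n_steps):
        if k % SLOW_EVERY == 0:
            # Slow layer: evaluated once per SLOW_EVERY fast steps
            decisions.append(supervisory_decision(soc))
        # Fast layer: evaluated at every step
        duty = fast_control(v_bus, V_NOMINAL)
        # Toy first-order bus response toward nominal; not a converter model,
        # so the duty cycle is computed here but does not drive the plant.
        v_bus += 0.01 * (V_NOMINAL - v_bus)
    return decisions, duty, v_bus
```

In this sketch the supervisory function is evaluated three times over 3000 fast steps, which is the property that keeps the high-level layer's computational cost small relative to the inner loop.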

The simulation results demonstrate improved response speed: voltage recovery time fell from up to 0.6 s with the baseline to below 0.25 s with the proposed strategy. However, to comprehensively evaluate real-world feasibility and potential latency, future research will include hardware-in-the-loop testing and analyze the impact of communication delays and measurement noise on controller performance.
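The paper does not state how voltage recovery time was measured. One plausible definition, sketched below, is the time from the first excursion outside a tolerance band until the voltage re-enters the band and stays there. The function name `recovery_time` is hypothetical, and reusing the ±1 V deviation reported earlier as the settling band is an assumption.

```python
def recovery_time(times, voltages, v_nominal=48.0, band=1.0):
    """Time from the first band exit until the voltage settles back inside the band.

    Returns 0.0 if the voltage never leaves the band and None if it is still
    outside the band at the end of the trace. The band value is an assumption.
    """
    outside = [abs(v - v_nominal) > band for v in voltages]
    if not any(outside):
        return 0.0
    first_out = outside.index(True)
    # Index of the last sample that lies outside the band
    last_out = len(outside) - 1 - outside[::-1].index(True)
    if last_out == len(outside) - 1:
        return None  # never settles within the recorded trace
    return times[last_out + 1] - times[first_out]
```

Applied to a trace sampled at 0.1 s intervals that dips to 46 V and returns inside the band two samples later, this definition gives a recovery time of 0.2 s.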

Regarding generalization and adaptability, it should be noted that the current DQL agent was trained and validated using a fixed microgrid topology, which includes photovoltaic generation, batteries, and a supercapacitor. While the results showed strong performance under these scenarios, the agent’s behavior may not remain optimal if the system topology changes significantly.

Together, the validation of stability at the lower level and the integrated safety mechanisms at the higher level support reliable and stable operation of the hybrid SMC–DQN control architecture. The SMC layer provides fast and robust regulation of electrical variables, and the DQN layer adapts the energy management strategy within the imposed constraints. This synergy helps maintain the overall system balance, even during sudden fluctuations in load or renewable generation.

5.6. Limitations and Future Validation Strategy

This work provides a validation of the proposed hybrid control approach combining DQL with nonlinear sliding mode control through simulations conducted in a detailed MATLAB/Simulink environment. The training and testing of the agent were performed using a set of diverse operating scenarios, including periodic, sudden, and severe fluctuations in both solar input and load demand, aiming to reflect a wide range of possible microgrid behaviors.

Despite these efforts, we recognize that the validation approach remains limited. Specifically, the learning and evaluation processes were carried out within the same simulation framework, without a strict partition between training and testing datasets. While different scenarios were used to improve robustness, a formal validation protocol has not yet been implemented. Moreover, no benchmarking was performed against other state-of-the-art intelligent controllers, and the proposed strategy has not yet been tested under real-time conditions or hardware constraints.

To overcome these limitations, our future research will include the following:

A clear separation between training and test datasets to reduce the risk of overfitting.

The use of cross-validation methods with various scenario groups to evaluate the consistency and reliability of learned policies.

Testing the agent’s behavior in out-of-distribution settings, such as fault conditions, sudden changes in topology, or unusual demand profiles.

Deploying the control strategy in a hardware-in-the-loop platform to assess its practical performance, particularly with respect to timing, measurement noise, and embedded hardware limitations.

Conducting a comparative study with alternative advanced control techniques, including Model Predictive Control and actor-critic reinforcement learning approaches.
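The first two items above, a strict train/test separation and cross-validation over scenario groups, could be organized along the following lines. This is a minimal sketch using only the Python standard library. The scenario identifiers, function names, test fraction, and fold count are hypothetical, since the paper does not specify a validation protocol.

```python
import random


def split_scenarios(scenario_ids, test_fraction=0.25, seed=0):
    """Hold out whole scenarios so no test scenario is ever seen in training."""
    ids = sorted(scenario_ids)
    random.Random(seed).shuffle(ids)  # seeded shuffle for a reproducible split
    n_test = max(1, round(len(ids) * test_fraction))
    return ids[n_test:], ids[:n_test]  # (train, test)


def k_fold_groups(scenario_ids, k=4, seed=0):
    """Yield (train, held_out) scenario lists; each scenario is held out once."""
    ids = sorted(scenario_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]  # k roughly equal groups
    for i in range(k):
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        yield train, folds[i]
```

Splitting at the level of whole scenarios, rather than individual time steps, avoids leaking samples from one operating profile into both the training and the evaluation sets.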

The simulation campaign confirms that integrating learning-based energy decisions with robust control mechanisms results in improved voltage stability, reduced wear on storage units, and more effective energy distribution.