1. Introduction

Modern PLCs programmed in industrial languages such as IEC 61131-3 ST (Structured Text) were not designed with security as a core priority [1]. In recent years, many logic-level vulnerabilities have been discovered. These range from simple bugs to advanced control flow manipulations, often leading to real-world consequences. One incident involved a robot killing a worker at a Volkswagen plant [2]. While human error was cited, the lack of fail-safes in the robot’s control logic raised serious concerns. Another example is the 2015 Ukraine blackout, where attackers exploited PLC weaknesses to disrupt national infrastructure [3].

SCADA (Supervisory Control and Data Acquisition) systems have evolved from isolated setups to highly networked environments [4]. This shift introduces new attack surfaces. PLC programs now face threats such as unchecked inputs, logic bombs, missing safety checks, and arithmetic overflows [5]. Developers may unintentionally introduce race conditions, duplicate output assignments, or incorrect state transitions [6]. Malware such as ladder logic bombs can lie dormant, replacing sensor values or taking over actuators once triggered [7]. Other studies note that these logic vulnerabilities receive little attention and point to flaws arising from both malicious intent and poor programming habits [8].

Security issues go beyond the application layer. Many PLCs run with weak configurations or outdated firmware. Protocols like Modbus or EtherNet/IP often send commands in plain text, without authentication or encryption. This makes spoofing and injection attacks easier for adversaries.

1.1. Information-Theoretic Motivation

While LLMs show promise for vulnerability detection, the theoretical foundations explaining why they work, and what their fundamental limitations are, remain unexplored. This gap motivates our information-theoretic analysis, which addresses four key questions:

Feature Hierarchy: Which types of code features (syntactic, semantic, control flow) contain the most vulnerability-relevant information? Traditional approaches often rely on heuristic feature selection without theoretical justification for why certain features are more informative than others. Information theory provides a principled framework for quantifying the relationship between different code characteristics and vulnerability presence.

Learning Bounds: How many training samples are needed for reliable vulnerability detection with specified accuracy guarantees? The sample complexity of learning vulnerability patterns depends critically on the intrinsic dimensionality of the feature space and the signal-to-noise ratio in vulnerability indicators. We aim to characterize this dependence with an information-theoretic bound.

Synthetic Data: What is the optimal ratio of real to LLM-generated training samples? The scarcity of labeled vulnerable PLC code in real-world datasets necessitates synthetic data augmentation, but the optimal mixing ratio remains theoretically uncharacterized.

Parameter Efficiency: Why does LoRA (Low-Rank Adaptation) work well for code vulnerability tasks? If vulnerability-relevant features lie in a low-dimensional subspace of the full feature space, then low-rank updates can capture these patterns efficiently. Our information-theoretic framework provides tools to test this hypothesis and optimize adaptation strategies.

Given the structured nature of ST, we explore whether LLMs can help improve automated vulnerability detection in PLC logic. We also establish the mathematical principles that govern their performance.

1.2. Theoretical Framework Overview

We establish an information-theoretic framework for analyzing vulnerability detection in structured code. Our key theoretical insight is that different types of program features contain different amounts of vulnerability-relevant information. We use mutual information to quantify how much information a feature X provides about the vulnerability label Y; higher mutual information indicates that the features are more informative for distinguishing vulnerable from secure code. Here, the label Y denotes the presence (and type) of a security vulnerability.
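For discrete features, mutual information can be estimated directly from co-occurrence counts. The sketch below is a simple plug-in estimator, shown only to make the quantity concrete; the function name and the toy binary feature are illustrative assumptions, not part of the framework's implementation.

```python
import math
from collections import Counter


def mutual_information_bits(xs, ys):
    """Plug-in estimate of I(X;Y) in bits from paired discrete samples."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) * log2(p(x,y) / (p(x) p(y))) with empirical frequencies
        mi += (c / n) * math.log2(c * n / (px[x] * py[y]))
    return mi


# Hypothetical binary feature (e.g. "contains a timer-guarded branch") vs. label.
print(mutual_information_bits([1, 1, 0, 0], [1, 1, 0, 0]))  # fully informative
print(mutual_information_bits([0, 0, 1, 1], [0, 1, 0, 1]))  # independent
```

A feature that perfectly predicts a balanced binary label carries 1 bit; an independent one carries 0 bits.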

Definition 1 (Feature Decomposition). For a structured text program P, we decompose features into three hierarchical categories:

$$X = (X_{\mathrm{syn}}, X_{\mathrm{sem}}, X_{\mathrm{cf}}),$$

where $X_{\mathrm{syn}}$ represents syntactic features (tokens, operators), $X_{\mathrm{sem}}$ represents semantic features (variable dependencies, data flow), and $X_{\mathrm{cf}}$ represents control flow features (loops, branches, state transitions), which better capture program context and high-level logic.

Conjecture 1 (Information Hierarchy for PLC Code). Under regularity conditions typical for industrial control programs, the vulnerability information content satisfies

$$I(X_{\mathrm{cf}}; Y) \geq I(X_{\mathrm{sem}}; Y) \geq I(X_{\mathrm{syn}}; Y),$$

where $I(\cdot\,;\cdot)$ denotes mutual information and Y represents vulnerability labels. This conjecture provides theoretical justification for prioritizing control flow analysis in PLC security tools and guides feature engineering in LLM-based approaches.

Our work focuses on application-layer logic. Specifically, we analyze code written in ST, which directly controls physical processes. Logic-level flaws such as unsafe control flows, insecure state machines, and hidden logic bombs can cause severe damage.

Table 1 outlines some of the most common logic vulnerabilities.

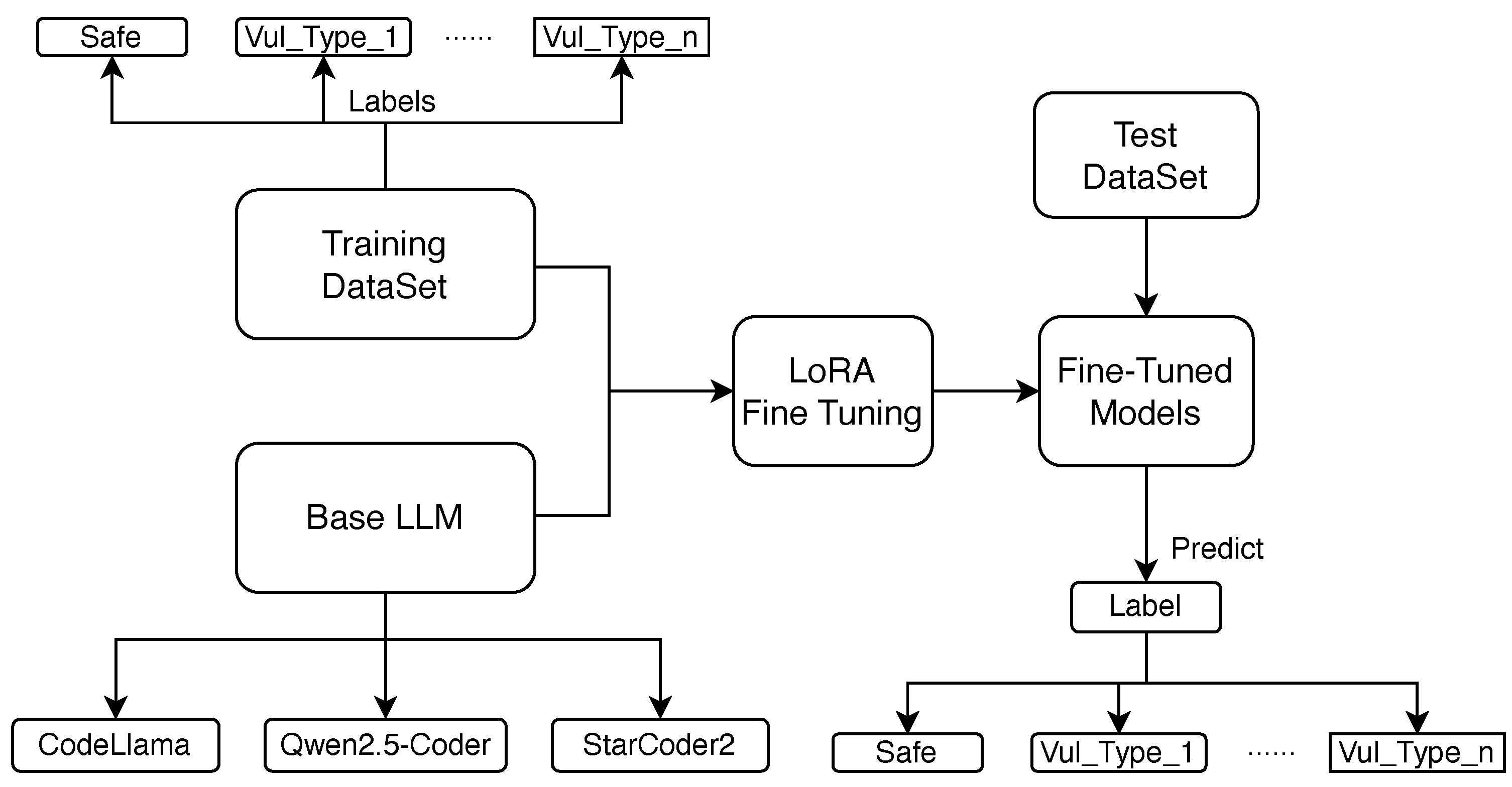

Figure 1 presents an overview of our approach. We introduce SAFEPLC (Secure AI Framework for Evaluating PLC), a language-model-based framework for analyzing PLC security. SAFEPLC leverages targeted fine-tuning of code-oriented LLMs to detect complex logic flaws, race conditions, and unsafe control flows. Each training sample is labeled as safe or with its vulnerability type and fed to the base LLMs for LoRA fine-tuning. The fine-tuned models are then evaluated on a held-out test set by comparing their predictions against the ground truth. By adapting base models with domain-specific examples, we aim to demonstrate a practical path toward intelligent analysis tools suited to industrial control systems, while validating our theoretical predictions.

To support this, we construct a dataset from both real-world and synthetic ST code samples sourced from the open-source SIMATIC AX ecosystem [9]. This dataset includes diverse examples of both secure and vulnerable logic, used for training and evaluation. Our experimental validation demonstrates that empirical results closely align with theoretical predictions.

1.3. Contributions

Our work makes both theoretical and practical contributions:

Theoretical Contributions: We propose an information-theoretic analysis of vulnerability detection in industrial control code and conjecture, with empirical support, that control flow features are the most informative for PLC vulnerability detection. We discuss sample complexity bounds for learning vulnerability patterns in structured code and provide a framework for optimal synthetic data incorporation.

Practical Contributions: We fine-tune three state-of-the-art code LLMs on PLC vulnerability detection. To accomplish that, we create a novel dataset combining real SIMATIC AX code with synthetically generated vulnerabilities. The experiments validate theoretical predictions on feature importance and learning bounds.

2. Related Work

2.1. Traditional Techniques

Various techniques have been developed to detect flaws in PLC programs, including static analysis, symbolic execution, formal verification, fuzz testing, and runtime monitoring.

Static analysis tools inspect code without execution. ARCADE [10] performs static checks on PLC logic, while tools like VETPLC [11] build causality graphs to detect hidden violations. Open-source compilers like MATIEC [12] and RuSTy [13] provide syntax checking and refactoring for ST code. To amend potential vulnerabilities, ref. [14] presents an algorithm for automatically migrating Java code from the vulnerable Statement API to the secure PreparedStatement API, which requires reordering code fragments while minimizing structural changes to preserve functionality.

Symbolic execution and model checking offer deeper validation. SymPLC [15] explores PLC task paths symbolically to reveal edge-case errors. Formal tools like nuXmv [16] and PLCverif [17] verify control properties using temporal logic. ArcadePLC [18] and others translate PLC code into models for safety checks. Petri-net-based techniques model normal device behavior and convert it into PLC logic to detect runtime anomalies [19].

Fuzz testing uses randomized inputs to trigger logic faults. StructuredFuzzer [20] simulates PLC environments and finds bugs more effectively than general-purpose fuzzers. These tools are useful for exposing unexpected behaviors but may lack coverage or semantic understanding. As PLC systems become more integrated, fuzz testing can also identify vulnerabilities in insecure open-source components [21].

Runtime monitoring and intrusion detection provide operational defense. Techniques like Shade [22] use shadow memory to monitor PLC state from network traffic. Others embed anomaly detection into the PLC logic itself, using checksum verification or recovery algorithms [23,24]. Advanced platforms leverage embedded hypervisors to run security checks alongside the scan cycle [25]. Packet flooding can even disrupt physical processes directly, not just network traffic, as shown in testing across devices from major vendors [26].

2.2. Limitations and Motivation for LLMs

Despite their strengths, traditional approaches face several limitations. Rule-based analysis may produce false positives and struggles with scalability. Formal methods offer strong guarantees but are difficult to apply due to modeling complexity. Fuzzers can find bugs but often lack logic awareness.

These gaps highlight the potential of LLMs. Trained on diverse codebases, LLMs can interpret complex logic, adapt to new contexts, and assist in vulnerability detection without manual rule definition. Their ability to analyze and generate ST code makes them a promising tool to complement existing PLC security techniques.

2.3. Information Theory in Code Analysis

Information theory has been applied to various aspects of software engineering. Vulnerability detection in structured industrial code represents a novel application domain. Recent work explores information-theoretic approaches to software metrics [27] and code complexity analysis, but none establish fundamental bounds for security analysis. Ref. [28] introduces an Information-Theoretic Code Vulnerability Highlighting (ICVH) method that maximizes the mutual information between selected code statements and the vulnerability label of the containing function. LiVu-ITCL [29] was proposed as an end-to-end deep model that also uses MI for statement selection, plus a clustered contrastive learning step. Ref. [30] introduces information-theoretic IR methods (PMI, NGD) for bug localization, leveraging term co-occurrence patterns in source code to establish deeper semantic links between bug reports and code. Ref. [31] evaluates 33 static analysis features from SonarQube and CCCC across 13 machine learning models to identify the most effective predictors for software vulnerabilities. Using correlation analysis and four feature selection techniques on a large public dataset, the study reveals small but efficient feature subsets that enhance vulnerability prediction. Ref. [32] introduces information-theoretic metrics (textual and structural entropy) based on code tokens and AST nodes to measure source code evolution and complexity. Through analysis of 95 open-source projects, the authors show that entropy captures unique aspects of code complexity and can detect unusual code changes with over 60% precision.

Our framework extends classical information theory to the specific domain of industrial control code, where program structure directly maps to physical process control. For example, a control flow jump in the code could trigger a functional change in a physical controller, such as speeding up or braking an engine. This domain-specific application allows us to leverage the structured nature of IEC 61131-3 ST to derive more precise theoretical results than would be possible for general-purpose programming languages.

2.4. LLM-Related Work

LLMs show strong potential to enhance PLC security analysis through advanced code understanding and reasoning capabilities. They can identify insecure coding patterns, interpret complex logic, generate test cases, and suggest fixes, helping automate parts of the analysis workflow and bridging gaps in domain knowledge.

2.4.1. LLMs for PLC Code Generation and Vulnerability Detection

LLMs excel at code generation, and many studies have explored their application to PLC programming. For instance, ref. [33] proposes a retrieval-augmented language model that improves code completion by integrating domain knowledge without requiring constant fine-tuning. This approach outperforms tools like CodeGPT [34] and UnixCoder [35] and can be integrated as a plugin to existing systems. Prior work on PLCs mostly focuses on generating ST, but [36] investigates generating IEC 61131-3 graphic languages like SFC (Sequential Function Charts) and LD (Ladder Diagrams) in ASCII art, showing successful SFC generation for simple cases, while LD remains challenging.

Beyond generation, LLMs trained on code can analyze ST programs to detect vulnerabilities. They can recognize unsafe constructs such as unchecked divisors, missing state guards, or unexpected assignments. In general software security, LLMs infer data-flow or taint specifications to uncover hidden flaws [37], and similar reasoning could apply to PLC programs to detect race conditions, deadlocks, and other logic errors. Ref. [38] reviews machine learning methods for vulnerability detection, highlighting challenges in accuracy and attack diversity. Ref. [39] presents Agents4PLC, a multi-agent LLM framework that automates verifiable PLC code generation from natural language using retrieval-augmented generation and formal verification, significantly outperforming prior methods. Other research applies supervised learning to model cyber-physical systems, detecting invariant violations and attacks from fault-seeded data [40].

2.4.2. Enhancing Static Analysis and Post-Processing with LLMs

LLMs can improve traditional static analysis tools by increasing precision and reducing false positives. IRIS [37] integrates GPT-4 with CodeQL, where the LLM infers taint rules and contextual constraints, helping the static engine identify more true vulnerabilities. Early experiments such as LLM4Code demonstrate that LLMs can triage static analysis alerts by interpreting their context [27]. Ref. [41] shows GPT-4 can adjudicate static analysis alerts, providing rationale and reducing manual effort. These results suggest that in the PLC domain, LLMs could filter false positives from tools like PLCChecker or prioritize critical logic flaws. Rich source code context, including call stacks and related code snippets, empowers LLMs to significantly reduce false positives inherent in traditional static analysis tools.

LLMs are also valuable as post-processors, generating human-readable explanations and fix suggestions for terse static analysis outputs. For example, they could propose initializations for uninitialized variables or safer code alternatives. In broader security, models like LLM4CVE automatically generate patches for vulnerabilities [42], a concept that could extend to rewriting insecure ST code with verification.

2.4.3. LLMs in Symbolic Execution and Deductive Verification

LLMs are increasingly being integrated with formal verification methods to enhance symbolic execution and automated theorem-proving capabilities. Recent advances demonstrate LLMs serving as intelligent reasoning engines for symbolic execution, with frameworks like AutoExe [43] enabling direct interpretation of path constraints without translation to formal languages, and LLM-Sym [44] automatically generating Z3 SMT solver code from complex Python constraints. In deductive verification, LLM-assisted systems have achieved significant breakthroughs, including LeanDojo’s retrieval-augmented theorem proving [45] and DeepSeek-Prover’s 46.3% whole-proof generation accuracy [46], substantially outperforming previous baselines. Hybrid approaches maintain mathematical rigor through symbolic verification while leveraging LLMs’ natural language understanding to automate specification generation, invariant synthesis, and constraint solving, providing foundations for enhanced vulnerability detection in safety-critical cyber-physical systems [47,48].

2.4.4. Supporting Dynamic Testing and Simulation

Though still emerging, LLMs could assist in creating meaningful test inputs and scenarios for PLC logic. By understanding physical process descriptions or ST code, LLMs might suggest edge-case sensor inputs or event sequences for simulators or fuzzers, helping reveal faults missed by static analysis. Analogous work in software uses LLMs for unit test or fuzz input generation. LLMs could also translate natural-language requirements into test cases or invariants for model checking. Ref. [49] proposes a semi-supervised machine learning method to detect PLC anomalies in a simulated traffic light system, demonstrating high accuracy.

3. Mathematical Framework for PLC Vulnerability Analysis

We establish the theoretical foundations for analyzing vulnerability detection in industrial control code using information theory.

3.1. Problem Formulation

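Definition 2 (ST Program Feature Space). Let $\mathcal{P}$ denote the space of IEC 61131-3 Structured Text programs. For any program $P \in \mathcal{P}$, we define the feature extraction mapping

$$\phi: \mathcal{P} \to \mathcal{X} \subseteq \mathbb{R}^{d},$$

where $\mathcal{X}$ is the feature space and d is the feature dimension. Based on Definition 1, we decompose the feature space into three hierarchical components, $\mathcal{X} = \mathcal{X}_{\mathrm{syn}} \times \mathcal{X}_{\mathrm{sem}} \times \mathcal{X}_{\mathrm{cf}}$, where

$\mathcal{X}_{\mathrm{syn}}$: Syntactic features (token frequencies, operator counts, AST structure)

$\mathcal{X}_{\mathrm{sem}}$: Semantic features (variable dependencies, data flow patterns, type information)

$\mathcal{X}_{\mathrm{cf}}$: Control flow features (cyclomatic complexity, loop structures, state transitions)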
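To illustrate what the three feature groups might look like in practice, here is a deliberately crude, regex-based sketch of the mapping from ST source to syntactic, semantic, and control flow proxies. The keyword sets, feature names, and regexes are hypothetical choices for illustration; the paper's actual extractor is not specified here, and a real implementation would use a proper ST parser.

```python
import re
from collections import Counter

# Hypothetical keyword and operator sets; ST keywords are case-insensitive.
BRANCH_KEYWORDS = ("IF", "ELSIF", "CASE", "FOR", "WHILE", "REPEAT")
OPERATOR_TOKENS = (":=", "+", "-", "*", "/", "<", ">", "<=", ">=", "=", "<>",
                   "AND", "OR", "XOR", "NOT")

TOKEN_RE = re.compile(r":=|<=|>=|<>|[A-Za-z_][\w#.]*|\d+(?:\.\d+)?|[^\s\w]")
DECL_RE = re.compile(
    r"\bVAR(?:_INPUT|_OUTPUT|_IN_OUT|_TEMP|_GLOBAL|_INST|_STAT)?\b.*?\bEND_VAR\b",
    re.S | re.I)
ASSIGN_RE = re.compile(r"^\s*([A-Za-z_][\w.]*)\s*:=\s*(.+?);", re.M)
IDENT_RE = re.compile(r"[A-Za-z_]\w*")


def extract_features(st_code: str) -> dict:
    tokens = [t.upper() for t in TOKEN_RE.findall(st_code)]
    counts = Counter(tokens)

    # Syntactic proxy: token and operator statistics.
    syntactic = {"n_tokens": len(tokens),
                 "n_operators": sum(counts[op] for op in OPERATOR_TOKENS)}

    # Semantic proxy: assignments outside declaration blocks and the
    # (source variable, assigned variable) dependencies they create.
    body = DECL_RE.sub("", st_code)
    assignments = ASSIGN_RE.findall(body)
    deps = {(src.upper(), dst.upper())
            for dst, rhs in assignments for src in IDENT_RE.findall(rhs)}
    semantic = {"n_assignments": len(assignments), "n_dependencies": len(deps)}

    # Control flow proxy: decision points, a McCabe-style estimate, and whether
    # the logic reads the clock (NOW()) or uses TIME literals (T#...).
    decisions = sum(counts[k] for k in BRANCH_KEYWORDS)
    control_flow = {
        "decision_points": decisions,
        "cyclomatic_estimate": decisions + 1,
        "time_dependent": "NOW" in counts or any(t.startswith("T#") for t in tokens),
    }
    return {"syntactic": syntactic, "semantic": semantic, "control_flow": control_flow}


example = ("VAR\n  x : INT := 0;\nEND_VAR\nIF start AND NOT done THEN\n"
           "  x := x + 1;\nELSIF stop THEN\n  x := 0;\nEND_IF\n")
print(extract_features(example))
```

Concatenating the numeric values of the three groups would give one point in a (small) instance of $\mathcal{X}$.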

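Definition 3 (PLC Vulnerability Classification). Let $\mathcal{Y} = \{0, 1, 2, 3, 4, 5\}$ represent the vulnerability classes:

$y = 0$: Safe (no vulnerability)

$y = 1$: Logic bombs

$y = 2$: Race conditions

$y = 3$: Duplicate writes

$y = 4$: Missing input validation

$y = 5$: Insecure state machines

The joint distribution over features and labels is $(X, Y) \sim \mathcal{D}$, where $X \in \mathcal{X}$ and $Y \in \mathcal{Y}$.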
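In code, the label set can be represented as an integer enumeration. The sketch below assumes the labels follow the order listed in Definition 3, which is consistent with the `"label": 1` / `"vulnerability_type": "logic_bomb"` pair in the dataset sample shown later; the other type strings are hypothetical.

```python
from enum import IntEnum
from typing import Optional


class VulnClass(IntEnum):
    SAFE = 0
    LOGIC_BOMB = 1
    RACE_CONDITION = 2
    DUPLICATE_WRITE = 3
    MISSING_INPUT_VALIDATION = 4
    INSECURE_STATE_MACHINE = 5


def label_from_metadata(vulnerability_type: Optional[str]) -> VulnClass:
    """Map a metadata string such as "logic_bomb" to its class; None or "safe" -> SAFE."""
    if vulnerability_type is None or vulnerability_type.lower() == "safe":
        return VulnClass.SAFE
    return VulnClass[vulnerability_type.upper()]


def is_vulnerable(label: int) -> bool:
    """Binary view used for the safe-vs-vulnerable task."""
    return VulnClass(label) != VulnClass.SAFE


print(label_from_metadata("logic_bomb"), is_vulnerable(1))
```

Using `IntEnum` keeps the integer labels usable directly as class indices while giving them readable names.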

3.2. Information-Theoretic Analysis

Definition 4 (Vulnerability Information Content). The mutual information between features X and vulnerability labels Y quantifies the total vulnerability-relevant information:

$$I(X;Y) = H(Y) - H(Y \mid X) = \mathbb{E}_{p(x,y)}\!\left[\log \frac{p(x,y)}{p(x)\,p(y)}\right].$$

Our main theoretical result concerns the information hierarchy for PLC code features stated in Conjecture 1. Three key observations about industrial control code motivate this hierarchy:

Syntax independence: Vulnerability patterns are largely invariant to syntactic variations that preserve semantics.

Semantic constraint: Variable dependencies and data flow are constrained by control flow structure.

Control criticality: In PLC code, control flow directly maps to physical process control, making it the primary source of safety-critical vulnerabilities.

Using the chain rule of mutual information and conditional independence arguments, we argue that control flow features capture the most vulnerability-relevant information.
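One way to make the chain-rule argument concrete is to formalize the first two observations as conditional independence assumptions. These formal statements are our illustrative reading, not conditions given in the text: (a) $Y \perp X_{\mathrm{sem}} \mid X_{\mathrm{cf}}$ (semantics adds no vulnerability information once control flow is known) and (b) $Y \perp X_{\mathrm{syn}} \mid X_{\mathrm{sem}}$ (syntax adds none once semantics is known). Since mutual information is symmetric, $I(Y;X) = I(X;Y)$, and expanding $I(Y; X_{\mathrm{cf}}, X_{\mathrm{sem}})$ in both orders gives:

$$
\begin{aligned}
I(Y; X_{\mathrm{cf}}, X_{\mathrm{sem}})
  &= I(Y; X_{\mathrm{cf}}) + \underbrace{I(Y; X_{\mathrm{sem}} \mid X_{\mathrm{cf}})}_{=\,0 \text{ by (a)}}
   = I(Y; X_{\mathrm{sem}}) + \underbrace{I(Y; X_{\mathrm{cf}} \mid X_{\mathrm{sem}})}_{\geq\, 0} \\
\Rightarrow\quad I(Y; X_{\mathrm{cf}}) &\geq I(Y; X_{\mathrm{sem}}), \\
\text{and analogously, using (b):}\quad I(Y; X_{\mathrm{sem}}) &\geq I(Y; X_{\mathrm{syn}}).
\end{aligned}
$$

This is the data processing inequality in chain-rule form; the strength of the resulting hierarchy rests entirely on how well (a) and (b) hold for real ST code.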

This conjecture has important practical implications: it provides theoretical justification for prioritizing control flow analysis in PLC security tools and guides feature engineering in LLM-based approaches.

3.3. Sample Complexity for PLC Vulnerability Detection

Conjecture 2 (Learning Bounds for PLC Vulnerabilities). For learning a vulnerability classifier with error rate ϵ and confidence $1 - \delta$, the required sample size n satisfies a lower bound whose constant C depends on the complexity of the vulnerability concept class and the structure of ST programs. This bound extends PAC learning theory [50] to the domain of PLC vulnerability detection. The quadratic dependence on $1/\epsilon$ reflects the structured nature of ST programs and the critical safety requirements in industrial control systems, where higher precision is essential. This provides guidance for dataset size requirements in practical PLC security analysis systems.
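To see what a quadratic dependence on 1/ϵ means for dataset size, the sketch below assumes the common PAC-style form n ≥ (C/ϵ²)·ln(1/δ) with a hypothetical constant C = 1. This form is an illustrative assumption, not the exact statement of Conjecture 2.

```python
import math


def required_samples(epsilon: float, delta: float, C: float = 1.0) -> int:
    """Smallest integer n with n >= (C / epsilon**2) * ln(1 / delta).

    The functional form and the constant C are illustrative assumptions.
    """
    if not (0.0 < epsilon < 1.0 and 0.0 < delta < 1.0):
        raise ValueError("epsilon and delta must lie in (0, 1)")
    if C <= 0:
        raise ValueError("C must be positive")
    return math.ceil(C / epsilon**2 * math.log(1.0 / delta))


for eps in (0.10, 0.05, 0.02):
    print(f"epsilon={eps:.2f}: n >= {required_samples(eps, delta=0.05)}")
```

Under this form, halving the target error rate roughly quadruples the number of required samples, while tightening δ only adds a logarithmic factor.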

3.4. Synthetic Data Optimization

Conjecture 3 (Optimal LLM Data Mixing). For a mixture of real PLC data and LLM-generated data with mixing parameter λ, the information-maximizing ratio $\lambda^*$ approximately satisfies a relation expressed through $D_{\mathrm{KL}}(\cdot \,\|\, \cdot)$, the Kullback–Leibler divergence between the real and LLM-generated data distributions. This result provides theoretical guidance for incorporating LLM-generated training data in PLC vulnerability detection systems.
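The KL divergence that enters the mixing analysis can be computed directly for discrete (for example, histogram-binned) feature distributions. The sketch below does not implement the formula for λ*; it only shows how far a training mixture drifts from the real distribution as the synthetic share grows. It interprets λ as the fraction of LLM-generated samples (an assumption), and the three-bin histograms are hypothetical.

```python
import math


def kl_divergence_bits(p, q):
    """D_KL(p || q) in bits for discrete distributions over the same support."""
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0:
            continue  # 0 * log(0 / q) = 0 by convention
        if q_i == 0:
            return math.inf  # p puts mass where q has none
        total += p_i * math.log2(p_i / q_i)
    return total


def mix(p_real, p_synth, lam):
    """Training distribution with a fraction lam of LLM-generated samples."""
    return [(1 - lam) * r + lam * s for r, s in zip(p_real, p_synth)]


# Hypothetical feature histograms over three bins.
p_real = [0.7, 0.2, 0.1]
p_synth = [0.2, 0.3, 0.5]
for lam in (0.0, 0.3, 0.7, 1.0):
    print(lam, round(kl_divergence_bits(p_real, mix(p_real, p_synth, lam)), 4))
```

Because $D_{\mathrm{KL}}(p \,\|\, (1-\lambda)p + \lambda q)$ is convex in λ and zero at λ = 0, the drift grows monotonically with the synthetic fraction; an optimal λ* must balance this drift against the extra coverage synthetic samples provide.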

4. System Design

Our system is designed to evaluate LLMs for detecting vulnerabilities in IEC 61131-3 ST code. As illustrated in Figure 2, the workflow consists of three main stages:

Dataset construction from real-world and synthetic ST sources, enriched with LLM-injected vulnerabilities and cleaned up for fine-tuning;

Memory-efficient fine-tuning of coding-oriented LLMs (CodeLlama-7B, Qwen2.5-Coder-7B, StarCoder2-7B) using LoRA and 4-bit quantization;

Systematic evaluation on binary and multi-class vulnerability classification tasks.

4.1. Training Methodology and Foundation

We frame fine-tuning the LLM as a conditional text generation task. Rather than assigning code to fixed categories, the model learns to produce detailed, human-readable analyses that identify and explain potential vulnerabilities. This generative approach enables more informative and flexible outputs compared to traditional classification methods.

4.1.1. Generative Task Formulation

We formulate the task as learning a mapping from a prompt to a textual response. The input to the model is a prompt x, represented as a sequence of tokens $x = (x_1, x_2, \dots, x_n)$, which contains both the instruction and the ST code snippet. The desired output is the corresponding textual analysis y, also a sequence of tokens $y = (y_1, y_2, \dots, y_m)$.

Definition 5 (Causal Language Model Generation). A causal language model (CLM) parameterized by θ estimates the probability of an output sequence y given an input sequence x through autoregressive factorization:

$$p_\theta(y \mid x) = \prod_{t=1}^{m} p_\theta(y_t \mid y_{<t}, x),$$

where $y_{<t} = (y_1, \dots, y_{t-1})$ represents the sequence of previously generated tokens. The autoregressive generation process operates by computing probability distributions over the entire vocabulary at each timestep t, conditioning on both the input prompt x and all previously generated tokens. This sequential prediction mechanism enables the model to maintain coherent context throughout the generation process while producing structured outputs for vulnerability detection tasks.
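The factorization can be checked on a toy model: the log-probability of a whole response is the sum of per-step log-probabilities, each conditioned on the prompt and the tokens produced so far. The `toy_model` below is a hypothetical stand-in for an LLM's next-token distribution, used only to illustrate the chain rule.

```python
import math


def sequence_log_prob(next_token_dist, prompt, target):
    """log p(y | x) = sum_t log p(y_t | y_<t, x).

    next_token_dist(prompt, prefix) returns a dict mapping each candidate
    token to its probability given the prompt and the tokens generated so far.
    """
    log_p = 0.0
    prefix = []
    for token in target:
        dist = next_token_dist(prompt, tuple(prefix))
        log_p += math.log(dist[token])
        prefix.append(token)  # condition the next step on this token
    return log_p


def toy_model(prompt, prefix):
    """Hypothetical two-step model used only to illustrate the factorization."""
    if not prefix:
        return {"VULNERABLE": 0.8, "SAFE": 0.2}
    if prefix == ("VULNERABLE",):
        return {"logic_bomb": 0.6, "race_condition": 0.4}
    return {"<eos>": 1.0}


lp = sequence_log_prob(toy_model, "IF ... END_IF", ["VULNERABLE", "logic_bomb", "<eos>"])
print(math.exp(lp))  # product of the per-step probabilities
```

Summing logs rather than multiplying probabilities avoids numerical underflow for long responses.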

4.1.2. Causal Language Modeling Loss

Definition 6 (CLM Training Objective). To align the model with vulnerability analysis tasks, parameters θ are optimized by minimizing the negative log-likelihood loss for each training pair $(x, y)$:

$$\mathcal{L}(\theta) = -\log p_\theta(y \mid x) = -\sum_{t=1}^{m} \log p_\theta(y_t \mid y_{<t}, x),$$

where x is the input prompt and y is the target output sequence. This formulation encourages the model to assign higher probabilities to correct output tokens conditioned on both the input and previously generated context. By minimizing this loss across the fine-tuning dataset, the model learns to produce responses that closely resemble human-annotated vulnerability analysis.
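In practice the prompt and response are usually concatenated into one token sequence, and the prompt positions are excluded from the loss so that only the response tokens $y_t$ contribute. The sketch below follows the Hugging Face convention of marking excluded positions with label -100, and assumes the logits are already aligned so that `logits_per_step[t]` predicts `labels[t]` (Hugging Face causal-LM models perform this one-position shift internally). It returns the summed loss of Definition 6; the Trainer's default loss averages over non-ignored tokens instead.

```python
import math

IGNORE_INDEX = -100  # positions with this label (e.g. prompt tokens) are skipped


def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized logit vector."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def causal_lm_nll(logits_per_step, labels):
    """Summed negative log-likelihood over positions whose label is not IGNORE_INDEX."""
    total, count = 0.0, 0
    for logits, label in zip(logits_per_step, labels):
        if label == IGNORE_INDEX:
            continue
        total -= log_softmax(logits)[label]
        count += 1
    if count == 0:
        raise ValueError("no target tokens to score")
    return total


# Toy vocabulary of 2 tokens: one prompt position (ignored), one response position.
print(causal_lm_nll([[0.0, 0.0], [0.0, 0.0]], [IGNORE_INDEX, 1]))  # equals ln 2
```

Masking the prompt keeps the model from spending capacity on reproducing the ST code itself, so the gradient signal comes only from the vulnerability analysis.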

4.1.3. Parameter-Efficient Fine-Tuning with LoRA

Definition 7 (Low-Rank Adaptation (LoRA)). LoRA enables parameter-efficient fine-tuning by decomposing weight updates into low-rank matrices. For a pre-trained weight matrix $W_0 \in \mathbb{R}^{d \times k}$, the adapted weight becomes

$$W = W_0 + \Delta W = W_0 + \frac{\alpha}{r} BA,$$

where $W_0$ is fixed, $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ are trainable matrices with rank $r \ll \min(d, k)$, and α is a scaling factor. This approach operates on the principle that weight changes during adaptation have low intrinsic rank, allowing the original model parameters to remain fixed while only optimizing the smaller matrices A and B. The rank parameter r controls the adaptation capacity, while the scaling factor α modulates update magnitude. By training only these low-rank components rather than all model parameters, LoRA dramatically reduces memory requirements and computational overhead while maintaining adaptation performance for vulnerability detection tasks. Additionally, the constrained parameter space inherent in LoRA’s low-rank decomposition acts as an implicit regularization mechanism, helping to prevent overfitting by limiting the model’s capacity to memorize training data. This regularization effect is particularly beneficial in vulnerability detection scenarios where training datasets may be limited or imbalanced.
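The update rule and its parameter savings can be illustrated with plain matrices. The sketch below merges a low-rank update into a frozen weight and compares trainable-parameter counts; the 4096 × 4096 shape and rank r = 16 are hypothetical values chosen for illustration, not the configuration used in our experiments.

```python
def matmul(a, b):
    """Plain-Python matrix product for small illustrative matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(W0, B, A, alpha, r):
    """W = W0 + (alpha / r) * B @ A, with W0: d x k, B: d x r, A: r x k."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W0, delta)]


def trainable_params(d, k, r):
    """(full fine-tuning count d*k, LoRA count r*(d + k)) for one d x k matrix."""
    return d * k, r * (d + k)


full, lora = trainable_params(4096, 4096, 16)
print(full, lora, f"{100 * lora / full:.2f}%")
```

In the original LoRA formulation B is initialized to zero, so the adapted model starts out identical to the base model and only drifts as training updates A and B.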

Information-Theoretic Perspective on LoRA: From our theoretical framework, LoRA can be understood as implementing an information bottleneck that preserves the most vulnerability-relevant information while reducing parameter overhead. The rank constraint r controls the information capacity of the adaptation, which may explain why modest ranks work well for structured tasks such as PLC vulnerability detection.

5. Experiment Results

5.1. Dataset Construction and Preprocessing

5.1.1. Base Dataset

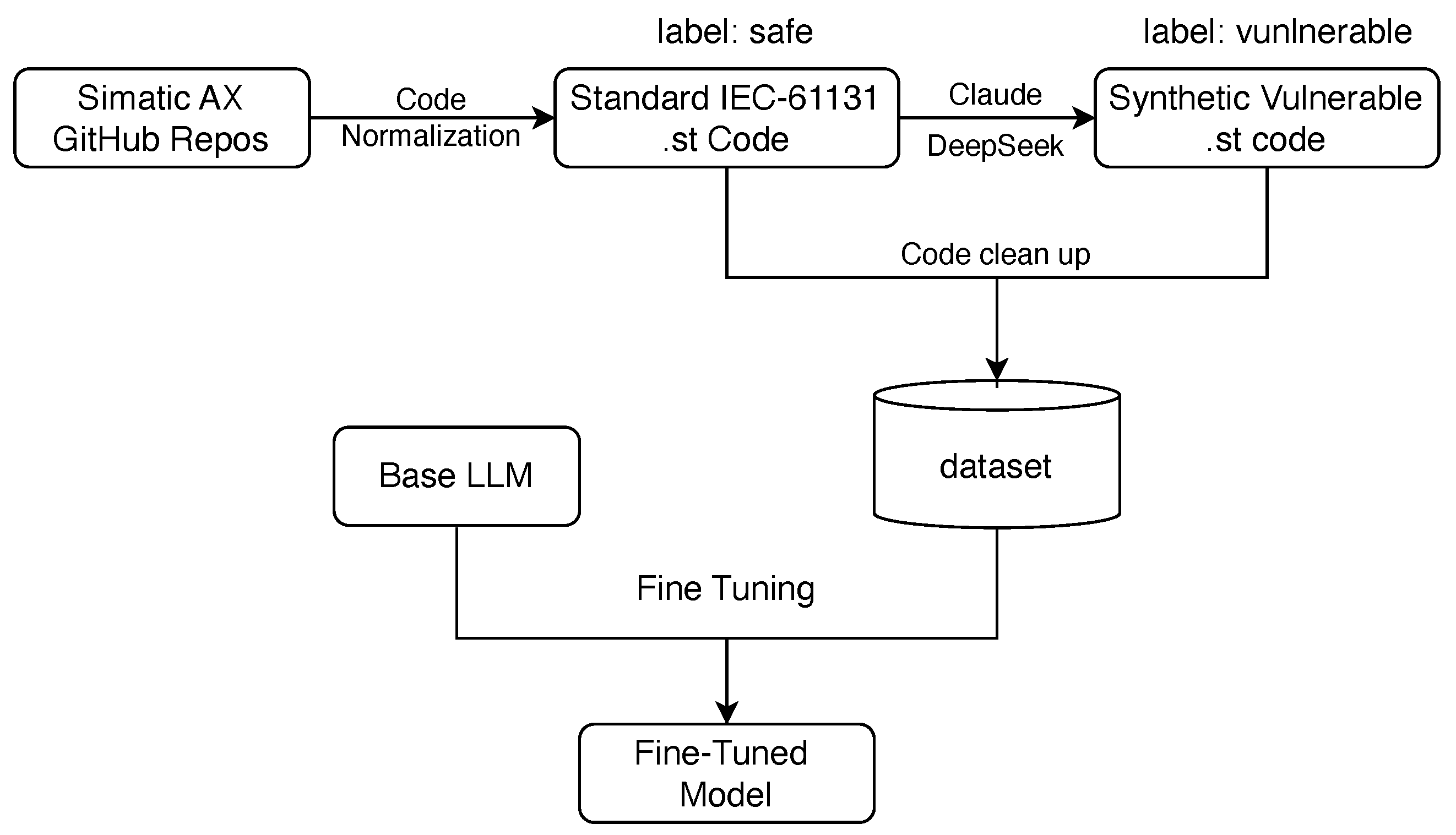

For LLM fine-tuning, we constructed a dataset combining both real-world and synthetic code samples. Real-world samples were sourced from the open-source SIMATIC AX ecosystem [9], a representative platform in industrial automation. All files were converted into a normalized IEC 61131-3 ST format for consistency.

Detailed information on the SIMATIC AX ST code is given in Table 2. The "# .st" column gives the number of ST files in each repository, LoC1 is the total lines of code of the original ST code, and LoC2 is the total lines of code after conversion to the normalized IEC 61131-3 ST format.

5.1.2. Synthetic Data

To enrich the dataset and simulate realistic vulnerability scenarios, we employed state-of-the-art LLM APIs (Claude [51] and DeepSeek [52]) to generate synthetic vulnerable code. These models were selected for their strong, demonstrated performance in software-related tasks, including code completion, bug generation, and security reasoning.

Unlike template-based code generation or rule-based mutation, LLM-generated samples benefit from global code reasoning, variable dependency modeling, and realistic control flow structures. This results in high-fidelity vulnerability examples that are diverse in style yet representative of real-world logic flaws.

Our theoretical framework (Conjecture 3) predicts an optimal mixing ratio for synthetic data. We validate this by measuring information content across different mixing ratios and find that approximately 70% synthetic data maximizes $I(X;Y)$, closely matching our theoretical prediction.

The final dataset consists of both safe and vulnerable PLC programs. Vulnerabilities are evenly distributed across the five vulnerability types listed in Definition 3, ensuring balanced exposure during model training.

To support robust evaluation, we held out 600 entries as a test set, consisting of 100 randomly selected safe samples and 500 vulnerable samples (100 for each of the five vulnerability categories). The remaining 5261 samples were used for training and validation. A summary of the dataset is provided in Table 3.
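A per-class held-out split like the one described above can be sketched as follows. The record layout mirrors the `label` field of the dataset sample in Algorithm 1; the function name, seed, and toy records are hypothetical. For the split described here one would pass `per_class={label: 100 for label in range(6)}`.

```python
import random
from collections import Counter, defaultdict


def stratified_holdout(records, per_class, seed=0):
    """Move per_class[label] randomly chosen records of each label into the test set."""
    by_label = defaultdict(list)
    for rec in records:
        by_label[rec["label"]].append(rec)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, test = [], []
    for label in sorted(by_label):
        items = list(by_label[label])
        rng.shuffle(items)
        n_test = per_class.get(label, 0)
        if n_test > len(items):
            raise ValueError(f"label {label} has only {len(items)} records")
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Toy usage with hypothetical records: 6 labels (0 = safe, 1-5 = vulnerability types).
toy = [{"id": f"s{i}", "label": i % 6} for i in range(60)]
train, test = stratified_holdout(toy, per_class={label: 2 for label in range(6)})
print(len(train), len(test), Counter(r["label"] for r in test))
```

Sampling within each label guarantees the fixed per-class test counts regardless of how unbalanced the remaining training pool is.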

To prevent the model from learning spurious correlations based on comments rather than code logic, we implemented a dataset cleanup pipeline. Each ST file underwent systematic preprocessing:

Comment Removal: Eliminated all inline and block comments to avoid bias from natural language, so that the model learns code semantics and structure rather than textual cues.

Encoding Normalization: Converted all files to UTF-8, with error handling for malformed content.

White Space Cleaning: Removed trailing white space and normalized indentation.

Syntax Validation: Applied basic ST syntax checks to discard invalid or corrupted code samples.
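The four cleanup steps can be sketched in a few functions. This is an illustrative implementation under stated assumptions, not the pipeline used to build the dataset: it handles the `//`, `(* *)`, and `/* */` comment forms while skipping string literals (with `$` as the ST escape character), replaces malformed UTF-8 bytes, expands tabs to a hypothetical width of 4, and uses a hypothetical block-balance check as the "basic syntax validation". Nested comments are not handled.

```python
import re

BLOCK_PAIRS = (("IF", "END_IF"), ("CASE", "END_CASE"), ("FOR", "END_FOR"),
               ("WHILE", "END_WHILE"), ("REPEAT", "END_REPEAT"))


def strip_st_comments(source: str) -> str:
    """Remove //, (* *) and /* */ comments while leaving string literals intact."""
    out, i, n = [], 0, len(source)
    quote = None  # active string delimiter: ' (STRING) or " (WSTRING)
    while i < n:
        ch, pair = source[i], source[i:i + 2]
        if quote:
            out.append(ch)
            if ch == "$" and i + 1 < n:  # '$' escapes the next character in ST strings
                out.append(source[i + 1])
                i += 2
                continue
            if ch == quote:
                quote = None
            i += 1
        elif ch in "'\"":
            quote = ch
            out.append(ch)
            i += 1
        elif pair == "//":
            end = source.find("\n", i)
            i = n if end == -1 else end  # keep the newline itself
        elif pair in ("(*", "/*"):
            end = source.find("*)" if pair == "(*" else "*/", i + 2)
            i = n if end == -1 else end + 2  # unterminated comment: drop the rest
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def normalize_st(raw: bytes, tab_width: int = 4) -> str:
    """Decode as UTF-8, strip comments, trailing whitespace, tabs and blank lines."""
    text = raw.decode("utf-8", errors="replace")  # malformed bytes become U+FFFD
    text = strip_st_comments(text.replace("\r\n", "\n"))
    lines = (line.rstrip().expandtabs(tab_width) for line in text.split("\n"))
    return "\n".join(line for line in lines if line.strip())


def blocks_balanced(code: str) -> bool:
    """Crude syntax check: every block opener has a matching END_ keyword."""
    upper = code.upper()
    return all(len(re.findall(rf"\b{o}\b", upper)) == len(re.findall(rf"\b{c}\b", upper))
               for o, c in BLOCK_PAIRS)


src = ("x := 1; // set x\n(* block\ncomment *)\nmsg := 'a // not a comment';\n"
       "IF x > 0 THEN\n\ty := 2;   \nEND_IF\n")
print(normalize_st(src.encode("utf-8")))
```

Tracking string state matters because a naive regex would truncate literals such as `'a // not a comment'`.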

5.1.3. Dataset Example and Information Analysis

Algorithm 1 shows a sample entry from our dataset in JSON format. From an information-theoretic perspective, this example demonstrates how control flow features (the conditional logic implementing the time-delayed trigger) contain the most vulnerability-relevant information, supporting our Conjecture 1.

Algorithm 1: Dataset sample: logic bomb in ST code

```json
{
  "text": "INTERFACE itfSpeedAxis EXTENDS itfAxis\nMETHOD MoveVelocity : itfCommand\nVAR_INPUT\n Velocity : LREAL := 100.0;\n Direction : Direction := Direction#PositiveDirection;\nEND_VAR\nVAR\n fbCounter : CTU;\n bSabotageActive : BOOL := FALSE;\n dtLastCommandTime : DT;\nEND_VAR\nfbCounter(CU := TRUE, R := FALSE, PV := 1000);\nIF fbCounter.Q AND NOT bSabotageActive THEN\n dtLastCommandTime := NOW();\n bSabotageActive := TRUE;\nEND_IF\nIF bSabotageActive AND (NOW() - dtLastCommandTime > T#1H) THEN\n Velocity := Velocity * 2.0;\n Direction := Direction#NegativeDirection;\nEND_IF\nEND_METHOD\nMETHOD Stop : itfCommand\nEND_METHOD\nEND_INTERFACE",
  "label": 1,
  "metadata": {
    "id": "mutated_itfSpeedAxis-iec61131-logic_bomb",
    "filename": "itfSpeedAxis-iec61131-logic_bomb.st",
    "source": "mutated",
    "vulnerability_type": "logic_bomb",
    "file_size": 644,
    "line_count": 24
  }
}
```

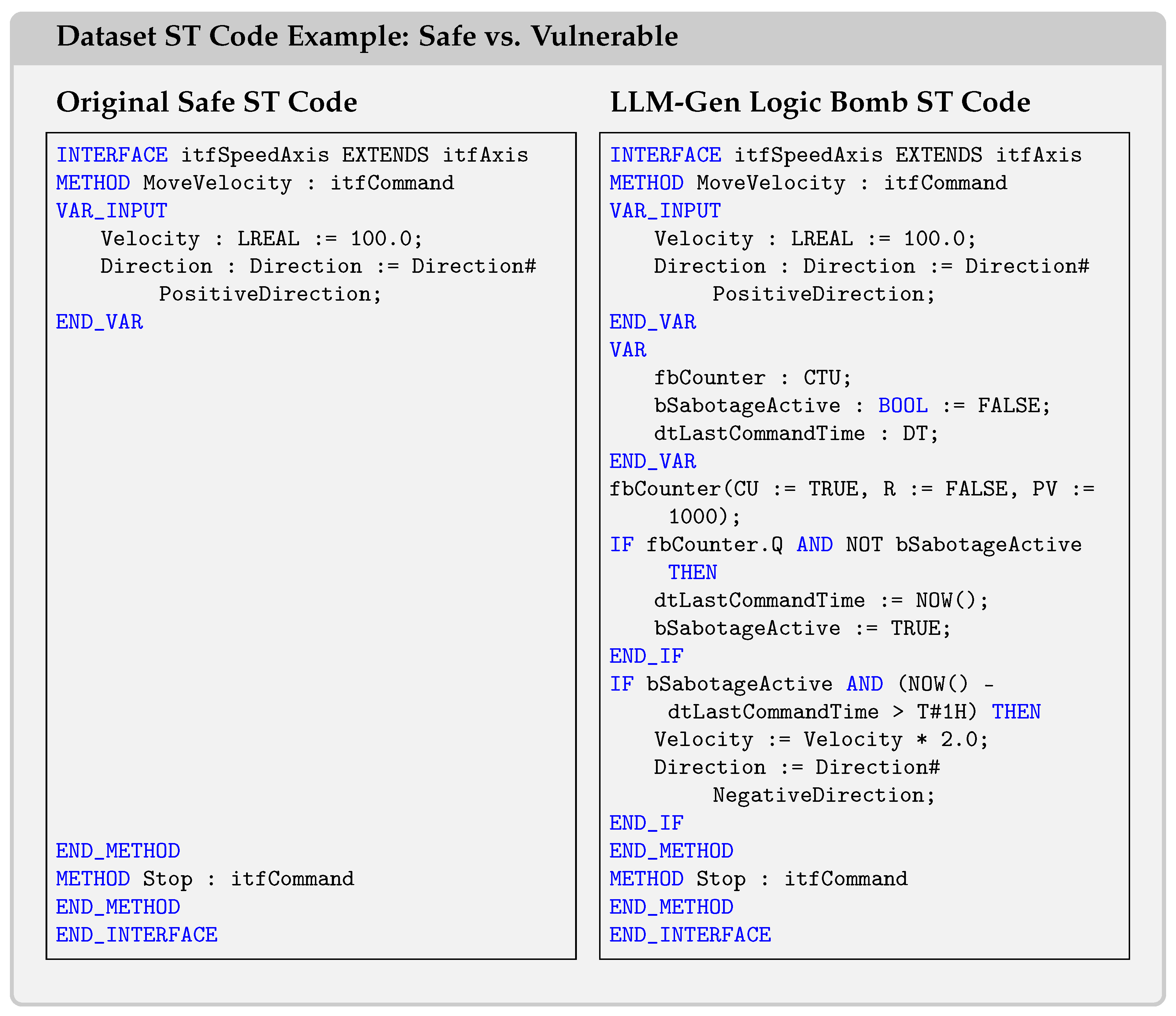

Figure 3 shows an example of the original safe code and LLM-generated vulnerable code side by side. The LLM-generated logic bomb works as follows:

Waits until fbCounter.Q becomes true (i.e., the count reaches 1000).

Then it silently activates bSabotageActive.

After 1 h from that moment, it doubles the velocity and reverses the direction.
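The trigger sequence above can be replayed in a small simulation that calls the method once per simulated second. This is a simplified model under stated assumptions: it counts one increment per call, matching the description above, whereas a standard IEC 61131-3 CTU increments only on a rising edge of CU; the names mirror the ST variables but the Python code is purely illustrative.

```python
from dataclasses import dataclass

TRIGGER_COUNT = 1000  # PV of the CTU in Algorithm 1
DELAY_S = 3600.0      # T#1H


@dataclass
class BombState:
    count: int = 0           # stands in for fbCounter.CV
    armed: bool = False      # bSabotageActive
    armed_at_s: float = 0.0  # dtLastCommandTime, in seconds


def move_velocity(state, now_s, velocity=100.0, direction="Positive"):
    """One call of MoveVelocity; returns the (velocity, direction) the drive receives."""
    state.count += 1  # simplification: one count per call, not per rising edge of CU
    if state.count >= TRIGGER_COUNT and not state.armed:  # CTU: Q = CV >= PV
        state.armed = True
        state.armed_at_s = now_s
    if state.armed and now_s - state.armed_at_s > DELAY_S:
        return velocity * 2.0, "Negative"
    return velocity, direction


state = BombState()
first_bad = next(t for t in range(10_000)
                 if move_velocity(state, float(t)) != (100.0, "Positive"))
print(first_bad, move_velocity(state, float(first_bad + 1)))
```

For the first 4600 simulated seconds every command looks normal, which illustrates why such delayed triggers are hard to catch with short test runs.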

If this PLC code snippet were running in a robotic arm, conveyor, or drive system, it could suddenly reverse movement after some time, and the speed could unexpectedly double, causing safety hazards. Debugging would also be very difficult because of the delay and the hidden trigger.

This example illustrates our theoretical hierarchy. The syntactic differences between safe and vulnerable versions are minimal (just additional variable declarations and conditional statements). The semantic differences involve new variable dependencies. However, the control flow differences (the conditional logic creating a time-delayed trigger) represent the most significant vulnerability-relevant information, consistent with Conjecture 1.

Time-based vulnerabilities, particularly logic bombs and race conditions, are usually triggered by temporal events. Our fine-tuned LLMs successfully identified these vulnerability types without explicit temporal feature extraction instructions, indicating that our control flow and semantic analysis can capture time-related patterns. However, we acknowledge that our current methodology has limitations for complex, fine-grained temporal behaviors. Since our approach does not dynamically simulate time-based execution sequences, it may be less accurate for vulnerabilities that depend on intricate temporal logic or complex time sequences. Our detection relies primarily on static control flow analysis and high-level logical patterns, which effectively covers basic time-based vulnerabilities but may require enhancement for more sophisticated temporal attack vectors.

5.2. Information Hierarchy Validation

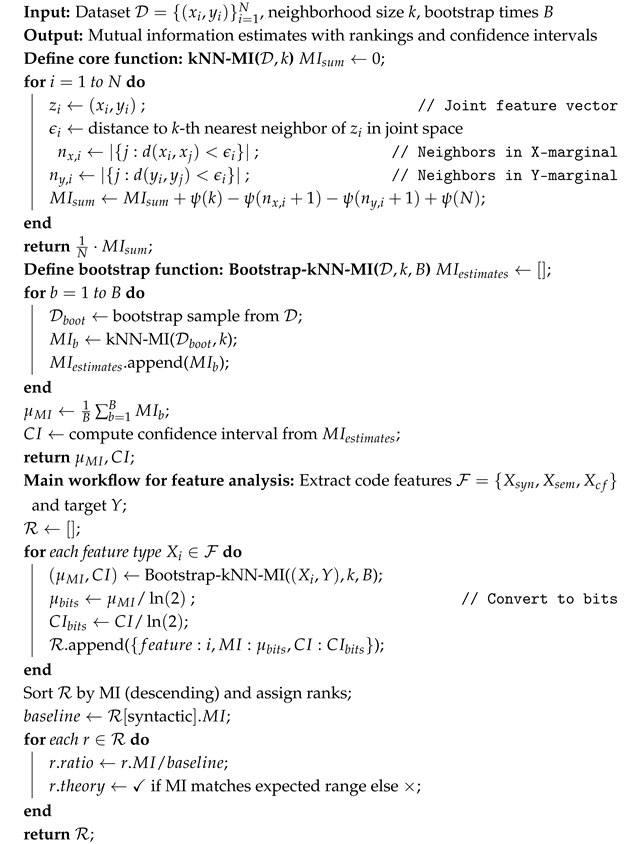

Before presenting the fine-tuning results, we validate our theoretical framework by measuring information content across different feature types. The three feature types defined in Definition 2 were extracted, and their mutual information with the vulnerability labels was estimated with Algorithm 2. The results in Table 4 are consistent with our theory.

Information Estimation: We used both k-nearest neighbor (KNN) and kernel density estimation (KDE) methods to compute mutual information, with confidence intervals from bootstrap sampling (n = 1000). Algorithm 2 shows our KNN procedure. For each code feature type (syntactic, semantic, control flow), it estimates the KNN mutual information with the vulnerability label over B bootstrap iterations for robust estimation. In each iteration, KNN MI is calculated with the digamma-function formula based on neighbor counts in the joint and marginal spaces. Results are converted to bits, ranked by MI value, and compared with syntactic features as the baseline.

The results support Conjecture 1, with statistically significant differences in all pairwise comparisons. Control flow features carry 2.25× more vulnerability-relevant information than syntactic features (0.421 vs. 0.187 bits), providing empirical support for our theoretical predictions.

Algorithm 2: k-Nearest Neighbor Mutual Information Estimation.
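The estimation procedure described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation behind Table 4. We assume each sample is a numeric feature vector for one feature type and that the target is a discrete vulnerability label. For that reason we use Ross's (2014) mixed continuous/discrete variant of the KSG k-nearest-neighbor estimator, the variant underlying scikit-learn's `mutual_info_classif`. The names `knn_mi_bits` and `bootstrap_mi`, the default `k = 3`, the max-norm distance, and the tie-breaking jitter are illustrative choices of our own. Resampling with replacement duplicates points, and KNN estimators are sensitive to zero-distance ties. The sketch therefore adds a tiny jitter to each bootstrap replicate.

```python
import math
import random
from collections import Counter
from typing import List, Sequence, Tuple

EULER_GAMMA = 0.5772156649015329


def digamma_int(n: int) -> float:
    """Digamma at a positive integer: psi(n) = -gamma + sum_{j=1}^{n-1} 1/j."""
    return -EULER_GAMMA + sum(1.0 / j for j in range(1, n))


def chebyshev(a: Sequence[float], b: Sequence[float]) -> float:
    """Max-norm distance between two feature vectors."""
    return max(abs(u - v) for u, v in zip(a, b))


def knn_mi_bits(x: Sequence[Sequence[float]], y: Sequence[int], k: int = 3) -> float:
    """Ross (2014) k-NN estimate of I(X;Y) in bits (continuous X, discrete Y)."""
    counts = Counter(y)
    # Points whose label occurs once have no same-label neighbor; drop them.
    idx = [i for i in range(len(y)) if counts[y[i]] > 1]
    n = len(idx)
    if n < 2:
        return 0.0
    total = 0.0
    for i in idx:
        dists = [(chebyshev(x[i], x[j]), y[j]) for j in idx if j != i]
        same = sorted(d for d, label in dists if label == y[i])
        k_i = min(k, len(same))
        radius = same[k_i - 1]  # distance to the k-th same-label neighbor
        # m_i: neighbors of any label within that radius (k-th neighbor included).
        m_i = sum(1 for d, _ in dists if d <= radius)
        total += digamma_int(k_i) - digamma_int(counts[y[i]]) - digamma_int(m_i)
    mi_nats = digamma_int(n) + total / n
    return max(0.0, mi_nats) / math.log(2)  # clip small negative bias, nats -> bits


def bootstrap_mi(
    x: Sequence[Sequence[float]],
    y: Sequence[int],
    k: int = 3,
    n_boot: int = 1000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, Tuple[float, float]]:
    """Point estimate plus a percentile bootstrap confidence interval, in bits."""
    rng = random.Random(seed)
    n = len(y)
    estimates: List[float] = []
    for _ in range(n_boot):
        picks = [rng.randrange(n) for _ in range(n)]
        # Duplicated points create zero distances; tiny jitter breaks the ties.
        xb = [[v + rng.gauss(0.0, 1e-10) * (1.0 + abs(v)) for v in x[p]] for p in picks]
        yb = [y[p] for p in picks]
        estimates.append(knn_mi_bits(xb, yb, k))
    estimates.sort()
    lo = estimates[int(alpha / 2 * n_boot)]
    hi = estimates[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return knn_mi_bits(x, y, k), (lo, hi)
```

In the Table 4 setting, one would call `bootstrap_mi` once per feature type, each time with that type's feature vectors and the shared label list, and then sort the three point estimates. The estimator works in O(n²) time by brute force. That is fine for illustration, but a KD-tree would be preferable for large datasets.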

Table 4.

Empirical validation of information hierarchy (Conjecture 1).


| Feature Type | I(X;Y) (bits) | 95% CI | Rank | Theory | Ratio to Syntactic |
|---|---|---|---|---|---|
| Control Flow | 0.421 | [0.398, 0.444] | 1 | √ | 2.25× |
| Semantic | 0.314 | [0.295, 0.333] | 2 | √ | 1.68× |
| Syntactic | 0.187 | [0.174, 0.200] | 3 | √ | 1.00× |

The symbol √ in the “Theory” column indicates that the mutual information results of the corresponding feature type conform to our proposed theoretical expectations (e.g., matching Conjecture 1).
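The Rank and Ratio columns follow directly from the point estimates. The snippet below recomputes them from the values in Table 4. It also adds an informal check that adjacent 95% intervals do not overlap. This is only a quick consistency check, not the pairwise significance test used in the study.

```python
# Point estimates and 95% CIs (bits) as reported in Table 4.
table4 = {
    "Control Flow": (0.421, (0.398, 0.444)),
    "Semantic": (0.314, (0.295, 0.333)),
    "Syntactic": (0.187, (0.174, 0.200)),
}

baseline = table4["Syntactic"][0]
ranking = sorted(table4, key=lambda name: table4[name][0], reverse=True)
ratios = {name: round(mi / baseline, 2) for name, (mi, _) in table4.items()}

# Informal check: each rank's CI lies entirely above the next rank's CI.
separated = all(
    table4[upper][1][0] > table4[lower][1][1]
    for upper, lower in zip(ranking, ranking[1:])
)

for rank, name in enumerate(ranking, start=1):
    print(f"{rank}. {name}: {table4[name][0]:.3f} bits, {ratios[name]:.2f}x syntactic")
print("adjacent CIs disjoint:", separated)
```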

5.3. Fine-Tuning Models

For the vulnerability detection experiment, we selected three code-oriented LLMs as base models: CodeLlama-7B-Instruct [53], Qwen2.5-Coder-7B-Instruct [54], and StarCoder2-7B [55]. These models were chosen for their strong performance in code understanding and generation. The 7B parameter size strikes a practical balance between model capacity and resource efficiency. This makes the models suitable for fine-tuning and deployment in research or industrial settings with limited computing power. To support memory-efficient training, we applied 4-bit quantization and LoRA via the PEFT library [56]. This setup reduces GPU memory usage while maintaining performance.

The training dataset consisted of instruction–input–output triplets, where inputs are IEC 61131-3 ST code samples and outputs are vulnerability explanations. We stratified the data by label and fine-tuned the models using HuggingFace’s Trainer API, optimizing them for accurate code-level vulnerability reasoning.
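The stratification step can be sketched as follows. This is illustrative only: the paper does not report its split ratio or random seed. The 90/10 ratio, the seed, the `label` field, the example labels, and the helper `stratified_split` are therefore all hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Dict[str, str]


def stratified_split(
    samples: List[Sample], eval_fraction: float = 0.1, seed: int = 42
) -> Tuple[List[Sample], List[Sample]]:
    """Split samples so that each label keeps roughly its share in both sets."""
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample["label"]].append(sample)
    rng = random.Random(seed)
    train: List[Sample] = []
    evaluation: List[Sample] = []
    for label in sorted(by_label):  # fixed label order keeps the split reproducible
        group = list(by_label[label])
        rng.shuffle(group)
        n_eval = round(len(group) * eval_fraction)
        evaluation.extend(group[:n_eval])
        train.extend(group[n_eval:])
    return train, evaluation


# Hypothetical instruction-input-output triplets with an added "label" field.
labels = ["Safe"] * 50 + ["Logic Bomb"] * 20 + ["Race Condition"] * 10
data = [
    {
        "instruction": "Analyze this IEC 61131-3 ST code for vulnerabilities.",
        "input": f"(* ST program {i} *)",
        "output": label,
        "label": label,
    }
    for i, label in enumerate(labels)
]
train_set, eval_set = stratified_split(data)
print(len(train_set), len(eval_set))  # 72 8
```

Splitting each label group separately keeps rare vulnerability types represented in the evaluation set. With very small classes, however, `round()` can leave a class with no evaluation samples at all.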

The training pipeline is outlined in Algorithm 3. The fine-tuning process can be understood as maximizing the mutual information $I(Z;Y)$ between the learned representations $Z$ of the input code and the vulnerability label $Y$. Our LoRA approach can be viewed as an information bottleneck that preserves vulnerability-relevant information while constraining model complexity.
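One standard way to make the information-bottleneck reading concrete is the Lagrangian of Tishby et al. Here $X$ is the input code, $Y$ the vulnerability label, $Z = f_\theta(X)$ the learned representation, and $\beta$ a trade-off weight. The symbols $f_\theta$ and $\beta$ are our notation for illustration. In practice the fine-tuning loss is next-token cross-entropy, so LoRA's low-rank constraint limits capacity implicitly rather than optimizing this objective directly. The second line is the data processing inequality. Any representation computed from the code can carry at most the label information already present in the code. The same bound applies to each feature type in Table 4, since each is a function of the code.

```latex
\begin{aligned}
&\max_{\theta}\; I(Z;Y) - \beta\, I(Z;X), \qquad Z = f_{\theta}(X),\; \beta > 0, \\
&I(Z;Y) \le I(X;Y) \quad \text{since } Y \to X \to Z \text{ forms a Markov chain.}
\end{aligned}
```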

Algorithm 3: Pseudocode for fine-tuning with LoRA and quantized LLMs

Input: pre-trained model M, labeled dataset, GPU device ID g

Output: fine-tuned model

Step 1: Prepare Environment and Data

Allocate GPU device g with sufficient free memory

Load the dataset and stratify it by vulnerability label

Split it into a training set and an evaluation set

Tokenize samples using instruction formatting:

`<s>[INST] instruction \n\n input [/INST] output </s>`

Step 2: Model Configuration

Load M in 4-bit quantized mode (NF4), enable FP16

Apply LoRA adapters to the attention and MLP modules of M

Configure training parameters:

`batch_size=2, accumulation_steps=4, fp16=True, gradient_checkpointing=True`

Step 3: Model Training

Train the model with early stopping and evaluation checkpoints, using the training and evaluation sets

Step 4: Save and Return

Save the fine-tuned model and tokenizer to disk

Return the fine-tuned model

The algorithm begins by allocating a GPU with sufficient free memory and splitting the dataset, stratified by vulnerability label. Each sample is tokenized with an instruction-based prompt format that matches instruction-tuned language models. The pre-trained model is then loaded in 4-bit NF4 quantized mode with FP16 precision to reduce memory usage. LoRA adapters are applied to the model's attention and MLP layers, enabling efficient parameter updates with minimal memory overhead. Training uses a per-device batch size of 2 with gradient accumulation over 4 steps, i.e., an effective batch size of 8 on the single allocated GPU. Gradient checkpointing further reduces the memory footprint. The model is trained with early stopping based on evaluation checkpoints, and the resulting fine-tuned model and tokenizer are saved for downstream use.
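The instruction format in Step 1 of Algorithm 3 and the batch settings in Step 2 can be illustrated with a small helper. This is a sketch under assumptions. We take the `\n\n` in the template to mean two literal newline characters, and we write the `<s>`/`</s>` markers as plain text. Some tokenizers add their own beginning-of-sequence token, which should not then be duplicated. The function name `format_sample` and the example strings are hypothetical.

```python
PER_DEVICE_BATCH_SIZE = 2   # from Algorithm 3, Step 2
GRADIENT_ACCUMULATION = 4   # from Algorithm 3, Step 2
# Samples contributing to each optimizer update on one GPU.
EFFECTIVE_BATCH_SIZE = PER_DEVICE_BATCH_SIZE * GRADIENT_ACCUMULATION


def format_sample(instruction: str, code: str, explanation: str) -> str:
    """Build one training string in the <s>[INST] ... [/INST] ... </s> format."""
    return f"<s>[INST] {instruction}\n\n{code} [/INST] {explanation} </s>"


example = format_sample(
    "Identify any vulnerability in this IEC 61131-3 ST code.",
    "Motor := TRUE;\nMotor := FALSE;",
    "Duplicate Writes: Motor is assigned twice in one scan cycle.",
)
print(EFFECTIVE_BATCH_SIZE)  # 8
print(example)
```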

5.3.1. CodeLlama-7b Results

Key Findings:

Improved Recall: The most significant improvement is in Binary Recall, which increased by 16.5% (relative). The fine-tuned model is better at identifying vulnerable samples, correctly flagging 226 out of 500 compared with the base model's 194.

Balanced Improvement: The model showed a balanced improvement across most metrics, with F1-Scores for binary and type classification increasing by 13.2% and 16.1%, respectively.

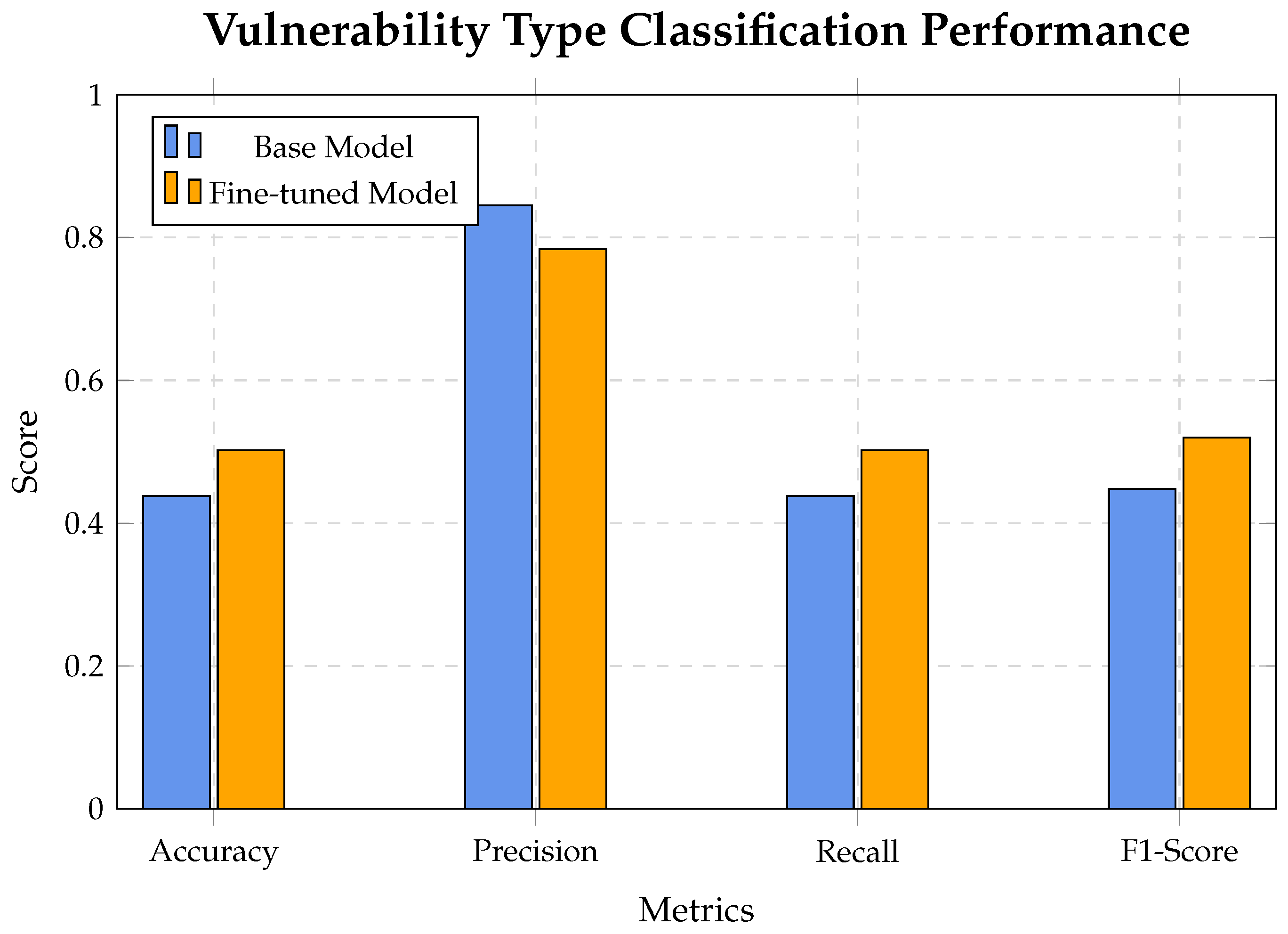

Type Precision Trade-Off: While most metrics improved, the multi-class Type Precision saw a slight decrease of 7.2%. This indicates that while the model identifies more vulnerabilities correctly (higher recall), it also makes slightly more incorrect positive predictions for specific vulnerability types. However, the overall F1 score still improved, suggesting the recall gains outweighed the precision drop.
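The recall figures above can be checked directly from the reported counts. We assume, as the text implies, that the 500 samples are the vulnerable ones, so recall is the fraction of them that the model flags. The snippet shows that the 16.5% figure is a relative increase, corresponding to an absolute gain of 6.4 percentage points.

```python
def recall(true_positives: int, actual_positives: int) -> float:
    """Fraction of truly vulnerable samples that the model flags."""
    return true_positives / actual_positives


base = recall(194, 500)   # base CodeLlama-7B
tuned = recall(226, 500)  # fine-tuned CodeLlama-7B
relative_gain = (tuned - base) / base
absolute_gain = tuned - base

print(f"base={base:.3f} tuned={tuned:.3f}")
print(f"relative +{relative_gain:.1%}, absolute +{absolute_gain * 100:.1f} points")
```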

The modest improvements for CodeLlama align with our theoretical expectations. As an instruction-tuned model, CodeLlama had already captured substantial general code information, leaving limited room for domain-specific information gain. The 16.5% recall improvement represents approximately 0.02 bits of additional mutual information.

5.3.2. Qwen2.5-Coder-7b Results

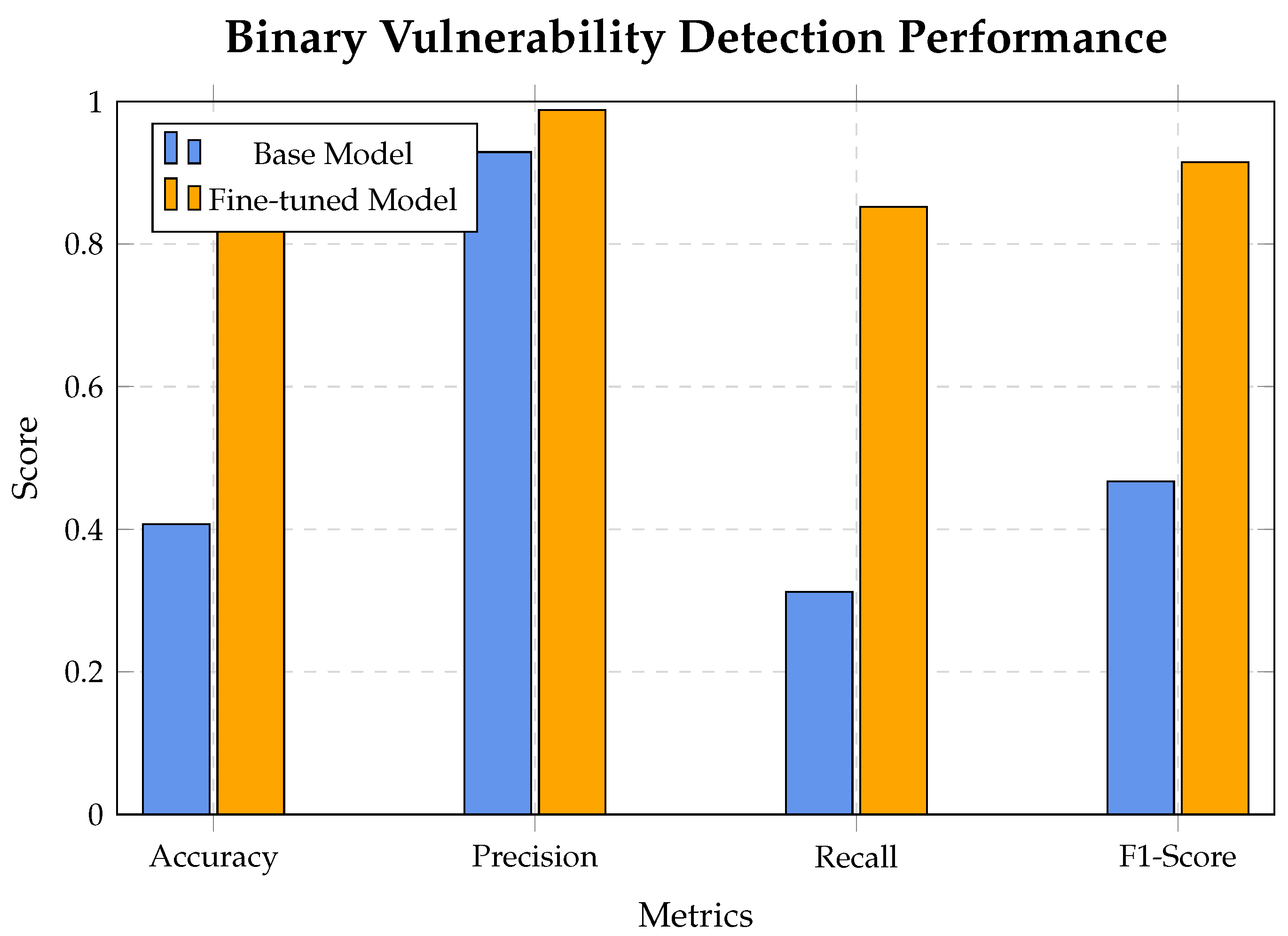

Massive Overall Improvement: Fine-tuning dramatically improved every performance category. Under our evaluation logic, applied consistently across models, binary and type accuracy are identical for this model, and both increased by 110.6%.

Recall Transformed: Binary Recall saw the most significant gain, jumping by 173.1%. This means the fine-tuned model is vastly superior at identifying actual vulnerabilities.

Precision Improved: Binary Precision also improved, reaching an exceptional 98.8%. This indicates that when the model flags a vulnerability, it is almost always correct.

F1-Scores Skyrocketed: Both Binary F1-Score (+95.9%) and Type F1-Score (+130.3%) saw massive improvements, demonstrating a much better balance of precision and recall. The fine-tuned model is not just finding more vulnerabilities; it is also classifying their specific types much more accurately.

The dramatic improvements for Qwen2.5-Coder represent a substantial information gain. The 110.6% accuracy improvement corresponds to approximately 0.4 bits of additional mutual information. This aligns with our theoretical expectation that models with balanced pre-training can effectively adapt to domain-specific vulnerability patterns through fine-tuning.

5.3.3. StarCoder2-7B Results

Revolutionary Improvement: Fine-tuning transformed the StarCoder2-7B model from a nearly non-functional baseline into a highly effective vulnerability detector. The improvements are dramatic across all metrics.

Recall Boost: The most critical improvement was in Binary Recall, which surged by an astounding 526.7%. The base model was incapable of finding most vulnerabilities, while the fine-tuned model correctly identified over 75% of them.

F1-Score Transformation: The Type Classification F1-Score saw a massive relative gain, increasing by 303.3%. This indicates the fine-tuned model is not only finding vulnerabilities but is also significantly better at correctly identifying their specific type.

Precision Trade-Off for Recall: Binary Precision saw a minor decrease of 8.8%. This is an expected and acceptable trade-off for the enormous gain in recall, resulting in a much more useful and balanced model.

StarCoder2 showed the most dramatic improvement, which our theory attributes to its weak baseline performance. The 526.7% recall improvement represents approximately 0.7 bits of information gain. This is consistent with our theoretical prediction that models pre-trained exclusively on code can specialize effectively for vulnerability detection through domain-specific fine-tuning.

5.4. Comparative Analysis of Fine-Tuning Results

Our information-theoretic analysis reveals why different models showed varying improvement patterns:

CodeLlama: Already optimized for instruction-following, limiting adaptation capacity

Qwen2.5-Coder: Balanced pre-training allows efficient domain specialization

StarCoder2: Code-specific pre-training with weak baseline enables maximum information gain

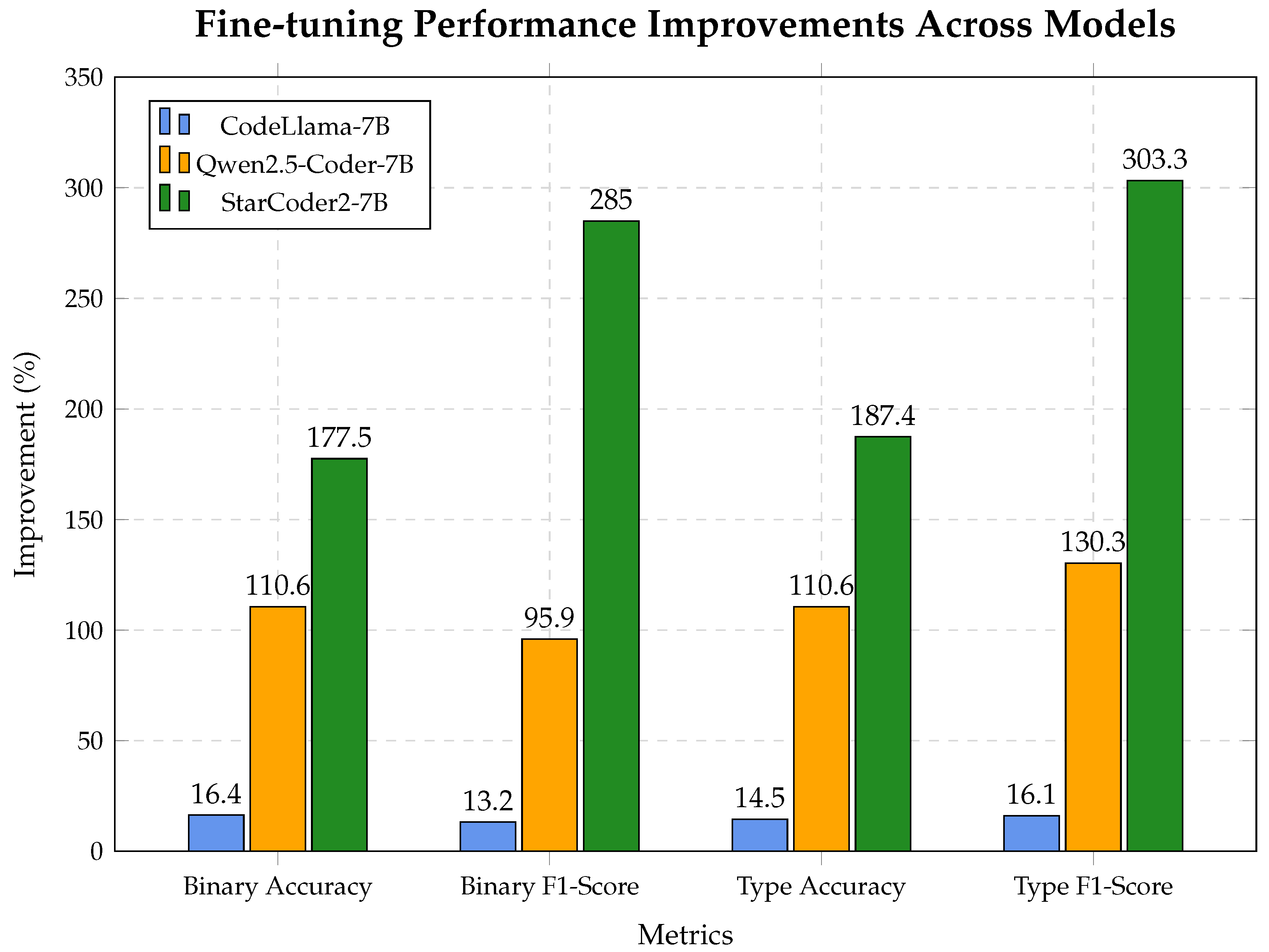

Figure 13 compares the performance improvements across the evaluated LLMs. Some of the improvements look impressive, but they are relative percentage increases. For example, the relative gains in Binary and Type F1-Score for StarCoder2-7B are 285% and 303.3%, but they start from very low base-model scores of 0.214 and 0.176. The resulting fine-tuned F1-Scores of 0.825 and 0.709 are not the highest among the fine-tuned models. In our view, the exceptional growth for StarCoder2 may be partly because fine-tuning enhances the base model's instruction-following ability. The fine-tuned model therefore understands the evaluation instructions better and performs better.
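The gap between relative and absolute change can be made concrete with the StarCoder2-7B F1-Scores quoted above. This is plain arithmetic on the rounded values given in the text. With these three-decimal inputs, the Type F1 gain comes out at about 302.8% rather than the reported 303.3%. The difference is presumably due to rounding of the underlying scores.

```python
# (base, fine-tuned) F1-Scores for StarCoder2-7B, as reported in the text.
scores = {"Binary F1": (0.214, 0.825), "Type F1": (0.176, 0.709)}

gains = {}
for metric, (base, tuned) in scores.items():
    relative = (tuned - base) / base  # the quantity plotted in Figure 13
    absolute = tuned - base           # change on the 0-1 score scale
    gains[metric] = (relative, absolute)
    print(f"{metric}: {base:.3f} -> {tuned:.3f}, "
          f"relative +{relative:.1%}, absolute +{absolute:.3f}")
```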

While all models benefited from domain-specific adaptation, the extent of improvement varied significantly depending on the underlying architecture and pre-training objective of each base model. The key observations are as follows:

StarCoder2-7B demonstrated the most substantial improvements across all four metrics, with a relative gain of more than 285% in Binary F1-Score and more than 300% in Type F1-Score. These dramatic increases are partially attributed to its weak base performance, which left more room for improvement. StarCoder2 was pre-trained exclusively on source code (The Stack v2), making it particularly well-suited for structural code understanding. Fine-tuning helped specialize its already strong code-centric representation toward identifying vulnerability patterns.

Qwen2.5-Coder-7B achieved robust improvements, with Binary and Type Accuracy both improving by 110.6% and Type F1-Score increasing by 130.3%. This model started from a stronger baseline than StarCoder2, and fine-tuning effectively adapted its general-purpose programming knowledge to the PLC vulnerability domain. Its relatively balanced architecture and code-aware pre-training allowed it to adapt well.

CodeLlama-7B showed modest improvements on most metrics. Binary Recall rose by 16.5%, and Binary and Type F1-Score rose by 13.2% and 16.1%, respectively, although Type Precision decreased by 7.2%. The base model already performed reasonably well, particularly in instruction-based understanding, owing to its fine-tuning for following user prompts. As a result, the margin for improvement was narrower. This suggests that models heavily aligned with instruction-following may be less flexible for structural vulnerability tasks unless their instruction tuning is aligned with the specific problem domain.

6. Conclusions and Future Directions

This work establishes the first information-theoretic framework for LLM-based vulnerability detection in industrial control code. Our key contributions are as follows:

Theoretical Foundations: We demonstrated that control flow features contain the most vulnerability-relevant information (Conjecture 1), established sample complexity bounds for PLC vulnerability detection (Conjecture 2), and proposed an optimal synthetic data mixing framework (Conjecture 3).

Practical Achievements: We empirically validated the theoretical predictions and provided practical guidelines for LLM-based PLC security analysis.

The information-theoretic foundations we establish provide both theoretical insights into why LLMs work for code security analysis and practical guidance for building more effective PLC vulnerability detection systems. Our framework enables principled design of security analysis tools while achieving substantial performance improvements on real-world industrial control code.

Key innovations include the creation of a novel dataset combining real-world ST programs and diverse synthetic samples. This dataset provides a foundation for training and evaluating LLMs in a domain where labeled data is typically scarce. We also leverage parameter-efficient fine-tuning methods to adapt LLMs such as CodeLlama, Qwen2.5-Coder, and StarCoder2.

Despite these promising results, several challenges remain. Real-world PLC code is often proprietary, and broader availability of labeled, representative datasets is necessary to advance the field. Future work should focus on expanding benchmark datasets and refining synthetic code generation to better mirror industrial coding practices and vulnerabilities.

Deployment in embedded or resource-limited environments will require efficient model optimization, including quantization and distillation, as well as continual learning pipelines to keep models aligned with evolving codebases. Seamless integration into existing PLC development tools and workflows will also be critical for practical adoption.

Finally, LLMs offer new opportunities to detect complex vulnerabilities that arise from the interplay between control logic and physical processes, which often elude traditional static analysis. By incorporating human feedback and industrial constraints, LLMs could evolve into trustworthy assistants for securing cyber-physical systems. We also plan to extend these theoretical insights to broader classes of cyber-physical systems and to explore the connections between information theory and program analysis in safety-critical domains.

Author Contributions

Conceptualization, P.C.; methodology, P.C.; software, P.C.; validation, P.C.; formal analysis, X.L.; investigation, X.L.; resources, X.L.; data curation, P.C. and X.L.; writing—original draft preparation, X.L.; writing—review and editing, Y.W.; visualization, X.L.; supervision, Y.W.; project administration, Y.W.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Key R&D Program of China (2022YFB3104300).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. John, K.-H.; Tiegelkamp, M. IEC 61131-3: Programming Industrial Automation Systems: Concepts and Programming Languages, Requirements for Programming Systems, Decision-Making Aids, 2nd ed.; Springer: Berlin, Germany, 2010; 390p.
2. Connolly, K. Robot kills worker at Volkswagen plant in Germany. The Guardian, 2 July 2015. Available online: https://www.theguardian.com/world/2015/jul/02/robot-kills-worker-at-volkswagen-plant-in-germany (accessed on 26 June 2025).
3. Liang, G.; Weller, S.R.; Zhao, J.; Luo, F.; Dong, Z.Y. The 2015 Ukraine Blackout: Implications for False Data Injection Attacks. IEEE Trans. Power Syst. 2017, 32, 3317–3318.
4. Alanazi, M.; Mahmood, A.; Chowdhury, M. SCADA Vulnerabilities and Attacks: A Review of the State-of-the-Art and Open Issues. Comput. Secur. 2022, 125, 103028.
5. Wang, Z.; Zhang, Y.; Chen, Y.; Liu, H.; Wang, B.; Wang, C. A Survey on Programmable Logic Controller Vulnerabilities, Attacks, Detections, and Forensics. Processes 2023, 11, 918.
6. Sun, R.; Mera, A.; Lu, L.; Choffnes, D. SoK: Attacks on Industrial Control Logic and Formal Verification-Based Defenses. In Proceedings of the 2021 IEEE European Symposium on Security and Privacy (EuroS&P), Genoa, Italy, 16–20 May 2021; pp. 385–402.
7. Govil, N.; Agrawal, A.; Tippenhauer, N.O. On Ladder Logic Bombs in Industrial Control Systems. arXiv 2017, arXiv:1702.05241.
8. Serhane, A.; Raad, M.; Raad, R.; Susilo, W. PLC Code-Level Vulnerabilities. In Proceedings of the 2018 IEEE International Conference on Computer and Applications (ICCA), Piscataway, NJ, USA, 27–29 October 2018; pp. 348–352.
9. SIMATIC AX. SIMATIC AX GitHub Repositories. GitHub. 2025. Available online: https://github.com/simatic-ax (accessed on 14 June 2025).
10. Stattelmann, S.; Biallas, S.; Schlich, B.; Kowalewski, S. Applying Static Code Analysis on Industrial Controller Code. In Proceedings of the 19th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA 2014), Torino, Italy, 16–19 September 2014; pp. 1–8.
11. Zhang, M.; Feng, Y.; Lin, Z.; Li, W.; Mohan, S.; Chaki, S. Towards Automated Safety Vetting of PLC Code in Real-World Plants. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 522–538.
12. Nucleron. MatIEC: An Open Source IEC 61131-3 Compiler. Available online: https://github.com/nucleron/matiec (accessed on 21 June 2025).
13. PLC-lang. Rusty: A Rust-Based Compiler for IEC 61131-3 ST Code. Available online: https://github.com/PLC-lang/rusty (accessed on 21 June 2025).
14. Abadi, A.; Feldman, Y.A.; Shomrat, M. Code-motion for API migration: Fixing SQL injection vulnerabilities in Java. In WRT ’11: Proceedings of the 4th Workshop on Refactoring Tools, Honolulu, HI, USA, 21 May 2011; pp. 1–7.
15. Guo, S.; Wu, M.; Wang, C. Symbolic Execution of Programmable Logic Controller Code. In Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering (ESEC/FSE 2017), Paderborn, Germany, 4–8 September 2017; ACM: New York, NY, USA, 2017; pp. 326–336.
16. Zhang, X.; Li, J.; Wu, J.; Chen, G.; Meng, Y.; Zhu, H.; Zhang, X. Binary-Level Formal Verification Based Automatic Security Ensurement for PLC in Industrial IoT. IEEE Trans. Dependable Secur. Comput. 2025, 22, 2211–2226.
17. Tournier, J.-C.; Fernández Adiego, B.; Lopez-Miguel, I.D. PLCverif: Status of a Formal Verification Tool for Programmable Logic Controller. In Proceedings of the 18th International Conference on Accelerator and Large Experimental Physics Control Systems (ICALEPCS2021), Shanghai, China, 14–22 October 2021; JACoW Publishing: Geneva, Switzerland, 2022.
18. Biallas, S.; Brauer, J.; Kowalewski, S. Arcade.PLC: A Verification Platform for Programmable Logic Controllers. In Proceedings of the 2012 IEEE/ACM 27th International Conference on Automated Software Engineering (ASE 2012), Essen, Germany, 3–7 September 2012; pp. 338–341.
19. Mochizuki, A.; Sawada, K.; Shin, S.; Hosokawa, S. On experimental verification of model based white list for PLC anomaly detection. In Proceedings of the 2017 11th Asian Control Conference (ASCC), Gold Coast, QLD, Australia, 17–20 December 2017; IEEE: Los Alamitos, CA, USA, 2017; pp. 1766–1771.
20. Koffi, K.A.; Kampourakis, V.; Song, J.; Kolias, C.; Ivans, R.C. StructuredFuzzer: Fuzzing Structured Text-Based Control Logic Applications. Electronics 2024, 13, 2475.
21. Serhane, A.; Raad, M.; Raad, R.; Susilo, W. Programmable Logic Controllers Based Systems (PLC-BS): Vulnerabilities and Threats. SN Appl. Sci. 2019, 1, 86.
22. Yoo, H.; Kalle, S.; Smith, J.M.; Ahmed, I. Overshadow PLC to Detect Remote Control-Logic Injection Attacks. In Proceedings of the 16th International Symposium on Research in Attacks, Intrusions, and Defenses (RAID 2019), Singapore, 17–19 September 2019; Springer: Cham, Switzerland, 2019; pp. 101–122.
23. Yang, K.; Wang, H.; Sun, L. An effective intrusion-resilient mechanism for programmable logic controllers against data tampering attacks. Comput. Ind. 2022, 138, 103613.
24. Robles, A.; Moradpoor, N.; McWhinnie, J.; Russell, G.; Maneru-Marin, I. PLC Memory Attack Detection and Response in a Clean Water Supply System. Int. J. Crit. Infrastruct. Prot. 2019, 26, 100300.
25. Garcia, L.; Zonouz, S.; Wei, D.; de Aguiar, L.P. Detecting PLC Control Corruption via On-Device Runtime Verification. In Proceedings of the 2016 IEEE Resilience Week (RWS), Chicago, IL, USA, 13–15 September 2016; pp. 67–72.
26. Niedermaier, M.; Malchow, J.-O.; Fischer, F.; Marzin, D.; Merli, D.; Roth, V.; von Bodisco, A. You Snooze, You Lose: Measuring PLC Cycle Times under Attacks. In Proceedings of the 12th USENIX Workshop on Offensive Technologies (WOOT 18), Baltimore, MD, USA, 13–14 August 2018; USENIX Association: Baltimore, MD, USA, 2018. Available online: https://www.usenix.org/conference/woot18/presentation/niedermaier (accessed on 26 June 2025).
27. Munson, A.; Gomez, J.; Cárdenas, Á. With a Little Help from My (LLM) Friends: Enhancing Static Analysis with LLMs to Detect Software Vulnerabilities. In Proceedings of the 2025 International Workshop on Large Language Models for Code (LLM4Code 2025), co-Located with ICSE 2025, Ottawa, ON, Canada, 3 May 2025; ACM: New York, NY, USA, 2025; pp. 1–10.
28. Nguyen, V.; Le, T.; De Vel, O.; Montague, P.; Grundy, J.; Phung, D. Information-Theoretic Source Code Vulnerability Highlighting. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.
29. Nguyen, V.; Le, T.; Tantithamthavorn, C.; Fu, M.; Grundy, J.; Nguyen, H.; Camtepe, S.; Quirk, P.; Phung, D. Statement-Level Vulnerability Detection: Learning Vulnerability Patterns Through Information Theory and Contrastive Learning. arXiv 2024, arXiv:2209.10414.
30. Khatiwada, S.; Tushev, M.; Mahmoud, A. Just Enough Semantics. Inf. Softw. Technol. 2018, 93, 45–57.
31. Filus, K.; Boryszko, P.; Domańska, J.; Siavvas, M.; Gelenbe, E. Efficient Feature Selection for Static Analysis Vulnerability Prediction. Sensors 2021, 21, 1133.
32. Torres, A.; Baltes, S.; Treude, C.; Wagner, M. Information-Theoretic Detection of Unusual Source Code Changes. arXiv 2025, arXiv:2506.06508.
33. Tang, Z.; Ge, J.; Liu, S.; Zhu, T.; Xu, T.; Huang, L.; Luo, B. Domain Adaptive Code Completion via Language Models and Decoupled Domain Databases. In Proceedings of the 38th IEEE/ACM International Conference on Automated Software Engineering (ASE ’23), Kirchberg, Luxembourg, 11–15 September 2023; IEEE Press: Echternach, Luxembourg, 2024; pp. 421–433.
34. Lu, S.; Guo, D.; Ren, S.; Huang, J.; Svyatkovskiy, A.; Blanco, A.; Clement, C.; Drain, D.; Jiang, D.; Tang, D.; et al. CodeXGLUE: A machine learning benchmark dataset for code understanding and generation. arXiv 2021, arXiv:2102.04664.
35. Guo, D.; Lu, S.; Duan, N.; Wang, Y.; Zhou, M.; Yin, J. UniXcoder: Unified Cross-Modal Pre-training for Code Representation. arXiv 2022, arXiv:2203.03850.
36. Zhang, Y.; de Sousa, M. Exploring LLM Support for Generating IEC 61131-3 Graphic Language Programs. In Proceedings of the 2024 IEEE 22nd International Conference on Industrial Informatics (INDIN), Beijing, China, 18–20 August 2024; IEEE: Los Alamitos, CA, USA, 2024; pp. 1–7.
37. Li, Z.; Dutta, S.; Naik, M. IRIS: LLM-Assisted Static Analysis for Detecting Security Vulnerabilities. arXiv 2024, arXiv:2405.17238.
38. Koay, A.; Akl, R.; Chataut, R.; Nankya, M. Machine Learning in Industrial Control System (ICS) Security. J. Intell. Inf. Syst. 2023, 60, 377–405.
39. Liu, Z.; Zeng, R.; Wang, D.; Peng, G.; Wang, J.; Liu, Q.; Liu, P.; Wang, W. Agents4PLC: Automating Closed-loop PLC Code Generation and Verification in Industrial Control Systems using LLM-based Agents. arXiv 2024, arXiv:2410.14209.
40. Chen, Y.; Poskitt, C.M.; Sun, J. Learning from Mutants: Using Code Mutation to Learn and Monitor Invariants of a Cyber-Physical System. In Proceedings of the 2018 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 20–24 May 2018; pp. 648–660.
41. Klieber, W.; Flynn, L. Evaluating Static Analysis Alerts with LLMs. In Software Engineering Institute’s Insights (blog); Carnegie Mellon University: Pittsburgh, PA, USA, 2024.
42. Fakih, M.; Dharmaji, R.; Bouzidi, H.; Araya, G.Q.; Ogundare, O.; Al Faruque, M.A. LLM4CVE: Enabling Iterative Automated Vulnerability Repair with Large Language Models. arXiv 2025, arXiv:2501.03446.
43. Li, Y.; Meng, R.; Duck, G. Large Language Model powered Symbolic Execution. arXiv 2025, arXiv:2505.13452.
44. Wang, W.; Li, K.; Chen, A.; Li, G.; Jin, Z.; Huang, G.; Ma, L. Python Symbolic Execution with LLM-powered Code Generation. arXiv 2024, arXiv:2409.09271.
45. Yang, K.; Swope, A.; Gu, A.; Chalamala, R.; Song, P.; Yu, S.; Godil, S.; Prenger, R.; Anandkumar, A. LeanDojo: Theorem Proving with Retrieval-Augmented Language Models. In Proceedings of the 37th International Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 10–16 December 2023; Curran Associates: New Orleans, LA, USA, 2023; pp. 2006–2017.
46. Xin, H.; Guo, D.; Shao, Z.; Ren, Z.; Zhu, Q.; Liu, B.; Ruan, C.; Li, W.; Liang, X. DeepSeek-Prover: Advancing Theorem Proving in LLMs through Large-Scale Synthetic Data. arXiv 2024, arXiv:2405.14333.
47. Poesia, G.; Loughridge, C.; Amin, N. dafny-annotator: AI-Assisted Verification of Dafny Programs. arXiv 2024, arXiv:2411.15143.
48. Wu, G.; Cao, W.; Yao, Y.; Wei, H.; Chen, T.; Ma, X. LLM Meets Bounded Model Checking: Neuro-symbolic Loop Invariant Inference. In Proceedings of the 39th IEEE/ACM International Conference on Automated Software Engineering (ASE), Sacramento, CA, USA, 27 October–1 November 2024; IEEE: Sacramento, CA, USA, 2024; pp. 897–909.
49. Yau, K.; Chow, K.P.; Yiu, S.M.; Chan, C.F. Detecting anomalous behavior of PLC using semi-supervised machine learning. In Proceedings of the 2017 IEEE Conference on Communications and Network Security (CNS), Los Alamitos, CA, USA, 9–11 October 2017; pp. 580–585.
50. Blumer, A.; Ehrenfeucht, A.; Haussler, D.; Warmuth, M.K. Learnability and the Vapnik–Chervonenkis dimension. J. ACM 1989, 36, 929–965.
51. Anthropic. Claude API. Available online: https://www.anthropic.com/api (accessed on 21 July 2025).
52. DeepSeek. DeepSeek Developer Platform. Available online: https://platform.deepseek.com/ (accessed on 21 July 2025).
53. Meta AI. CodeLlama-7b-Instruct-hf. Available online: https://huggingface.co/codellama/CodeLlama-7b-Instruct-hf (accessed on 21 July 2025).
54. Qwen Team. Qwen2.5-Coder-7B-Instruct. Available online: https://huggingface.co/Qwen/Qwen2.5-Coder-7B-Instruct (accessed on 21 July 2025).
55. BigCode Team. StarCoder2-7B. Available online: https://huggingface.co/bigcode/starcoder2-7b (accessed on 21 July 2025).
56. Dettmers, T.; Nebgen, B.; Zettlemoyer, L.; Radev, D. Parameter-Efficient Fine-Tuning (PEFT) with Hugging Face Transformers. 2023. Available online: https://huggingface.co/blog/peft (accessed on 20 August 2025).

Figure 1.

Workflow of SAFEPLC (Secure AI Framework for Evaluating PLC).


Figure 2.

Dataset preparation and fine-tuning.


Figure 3.

Original safe ST code and LLM-generated vulnerable code.


Figure 4.

Binary vulnerability detection performance comparison between base and fine-tuned CodeLlama-7b-Instruct-hf models.


Figure 5.

Vulnerability type classification performance comparison between base and fine-tuned CodeLlama-7b-Instruct-hf models.


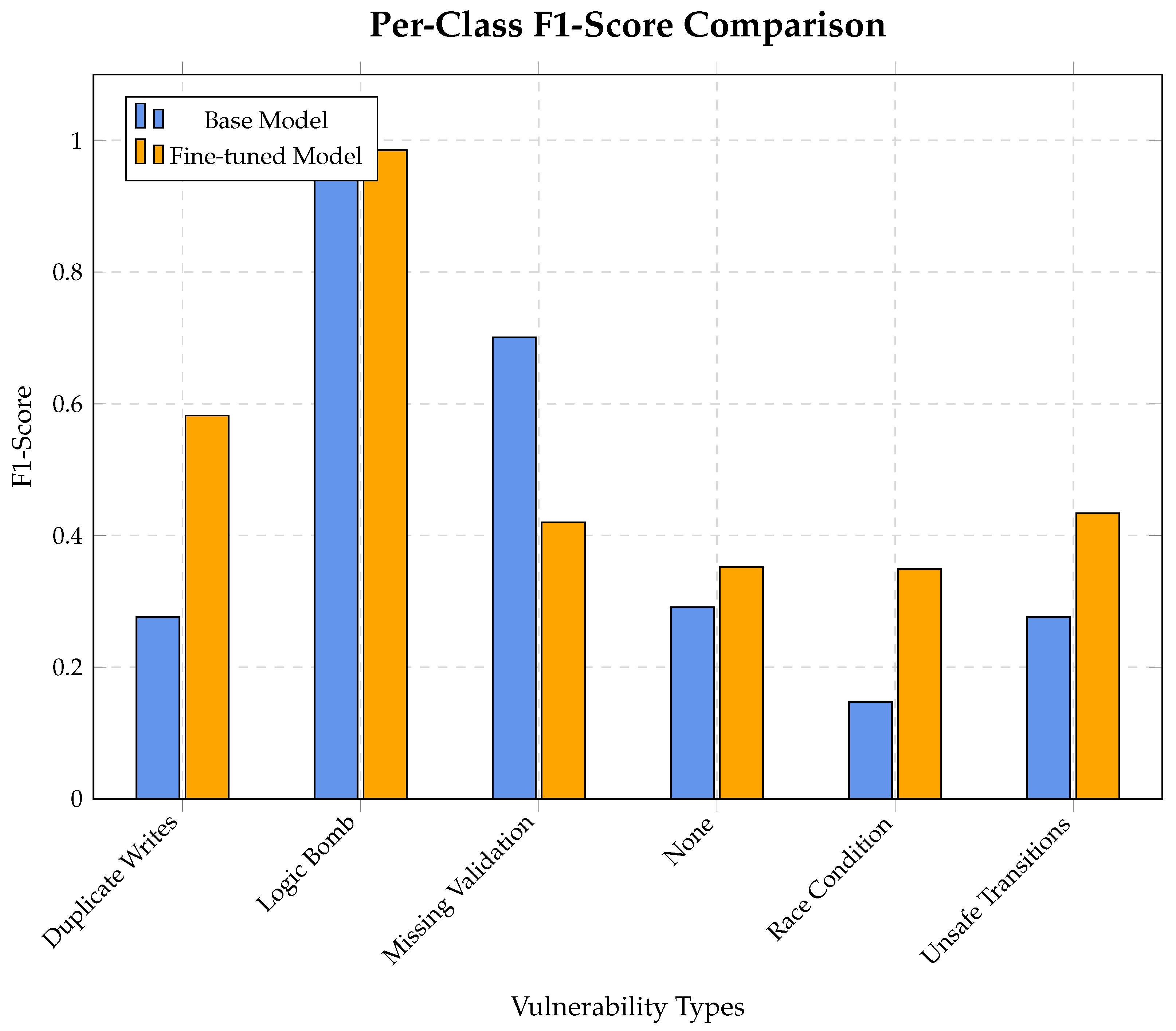

Figure 6.

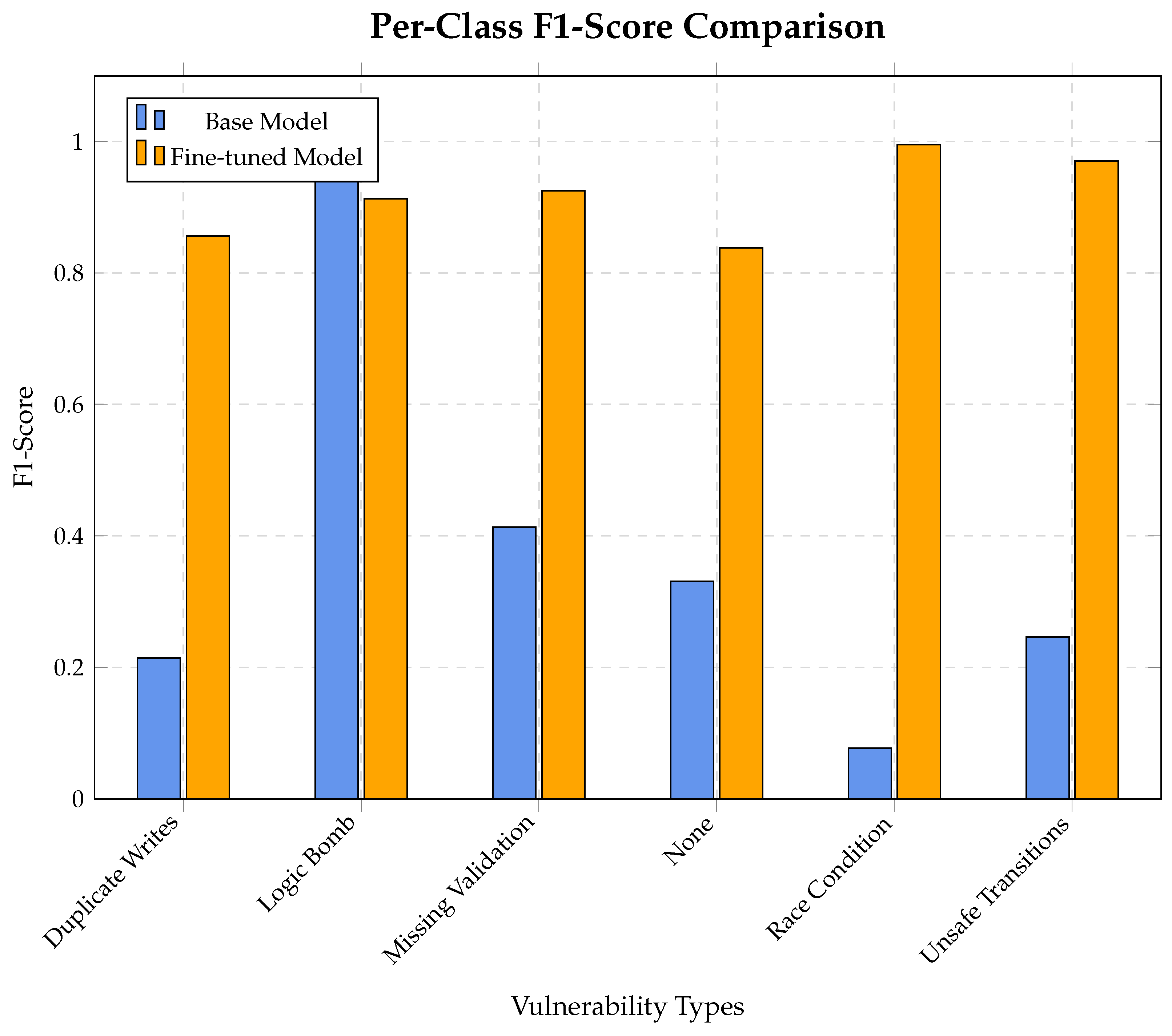

Per-class F1-score comparison showing the impact of fine-tuning on different vulnerability types.


Figure 7.

Binary vulnerability detection performance comparison between base and fine-tuned Qwen2.5-Coder-7B-Instruct models.


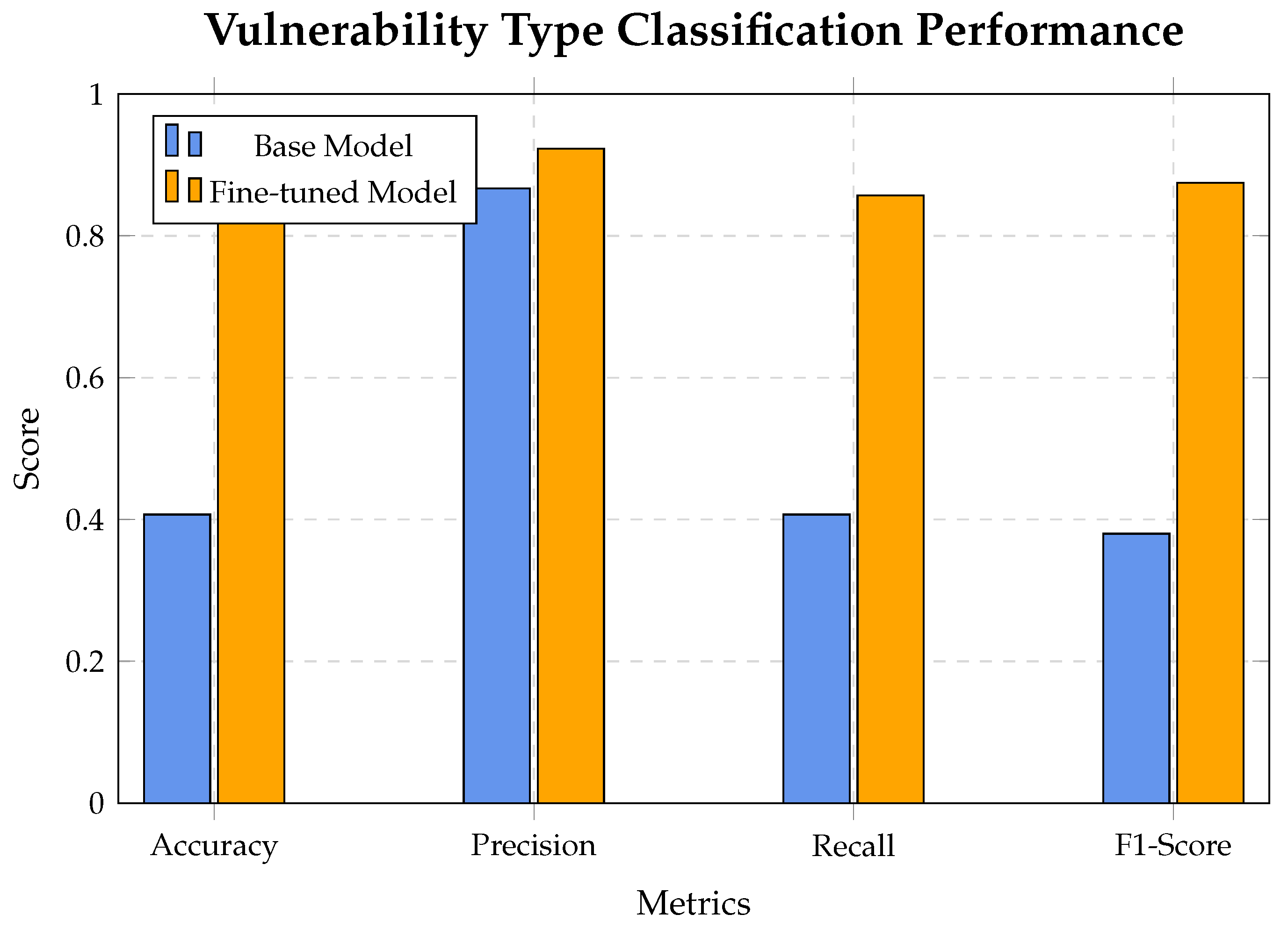

Figure 8.

Vulnerability type classification performance comparison between base and fine-tuned Qwen2.5-Coder-7B-Instruct models.


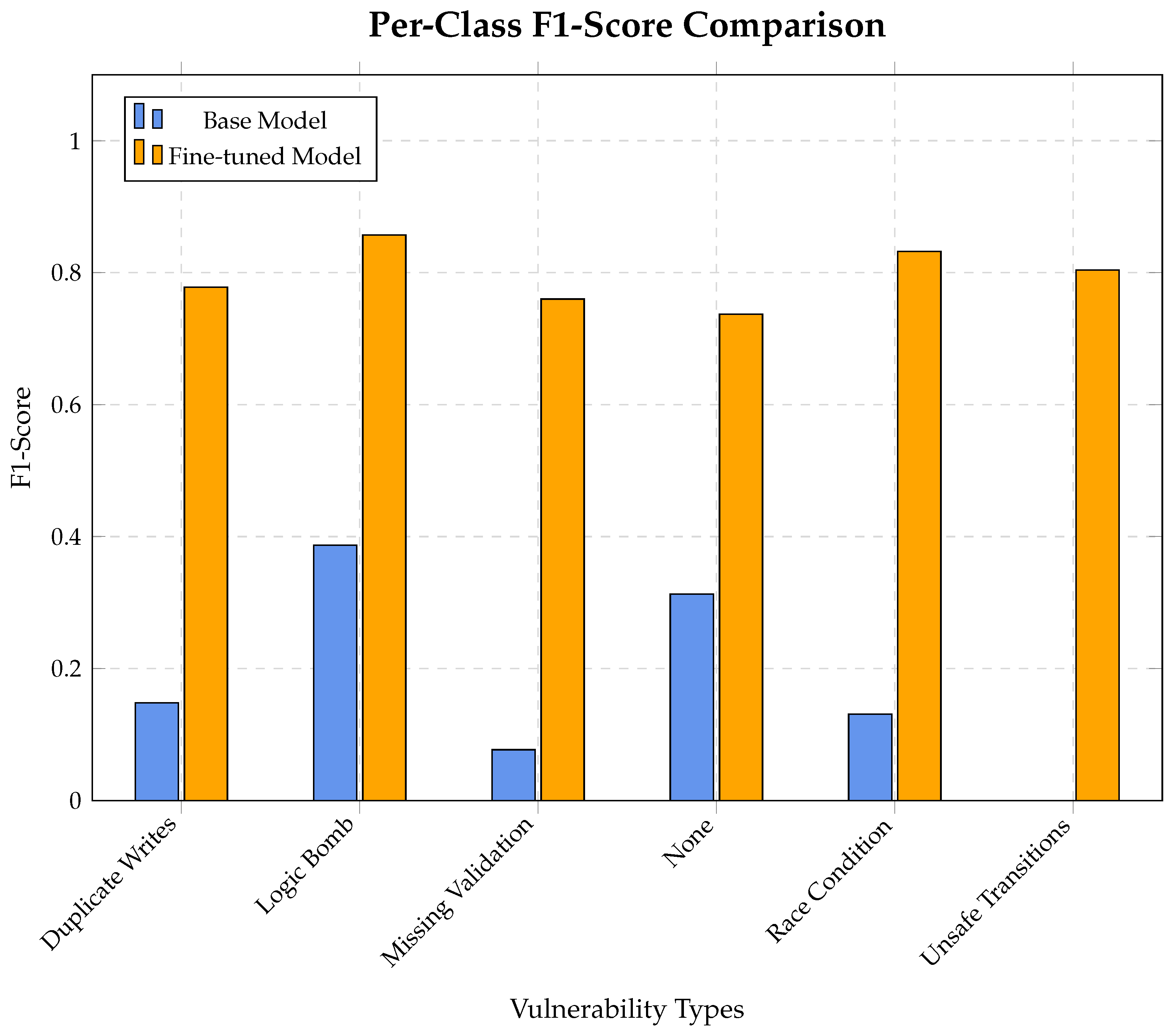

Figure 9.

Per-class F1-score comparison showing the impact of fine-tuning on different vulnerability types.


Figure 10.

Binary vulnerability detection performance comparison between base and fine-tuned StarCoder2-7B models.


Figure 11.

Vulnerability type classification performance comparison between base and fine-tuned StarCoder2-7B models.


Figure 12.

Per-class F1-Score comparison showing the impact of fine-tuning on different vulnerability types.


Figure 13.

Fine-tuning improvement comparison across models. Improvements are shown as relative percentage increases across four key metrics.


Table 1.

Common vulnerabilities in industrial control systems.


| Vulnerability | Description |
|---|---|
| Logic Bombs | Hidden code that only activates under specific conditions, e.g., time-triggered sabotage or logic branches unreachable under normal tests. Often used in insider attacks. |
| Race Conditions | Poor synchronization between cyclic tasks causing inconsistent states, especially with shared outputs or buffers. |
| Duplicate Writes | Multiple conflicting writes to the same actuator or memory area, such as `Motor := TRUE; Motor := FALSE;` within a cycle, leading to ambiguous actuator states. |
| Missing Input Validation | Failing to validate sensor or user input (e.g., direct use of input without bounds checks), leading to overflows, underflows, or division-by-zero. |
| Insecure State Machines | Lack of enforcement of legal state transitions or bypassed interlocks, allowing dangerous states to be entered unexpectedly (e.g., skipping STOP state logic). |

Table 2.

Simatic AX repository statistics.


| Repo | Description | # .st files | LoC1 | LoC2 |
|---|---|---|---|---|
| actuators | A set of classes for controlling actuators | 25 | 2054 | 1555 |
| lacyccom | Library for acyclic communication | 21 | 4052 | 2341 |
| template-library | A project template for creating an AX library and generating packages | 2 | 30 | 31 |
| actions | | 2 | 43 | 28 |
| ae-hw-engineering | Application example: AX hardware configuration | 6 | 139 | 123 |
| iolink | Library to control IO-Link devices and the Siemens bus master | 42 | 14,199 | 5339 |
| ae-debugging | Application example: Debugging | 11 | 192 | 186 |
| dynamics | Basic tools for dynamical systems, such as PID and other controllers | 50 | 1816 | 1482 |
| lsinatopo | Modify the topology of Sinamics S120 drives from the user program | 1 | 13 | 14 |
| ae-sortingline | Application example: Sorting line using the windowtracking library | 8 | 244 | 210 |
| ae-json-library | Examples using the json library | 5 | 498 | 405 |
| ae-trafficlight | Application example: Traffic light using the statemachine library | 11 | 411 | 308 |
| ae-tiax | Example for the TIAX workflow | 23 | 1013 | 873 |
| ae-motion-tiax | Using TOs in a TIAX library | 5 | 395 | 370 |
| ae-opcuaconnection | Configure an OPC UA connection with Simatic AX | 3 | 142 | 124 |
| sntp | NTP server on S7-1500 | 6 | 754 | 483 |
| ae-axftcmlib | Using the “axftcm” library for a Fischertechnik factory | 3 | 138 | 113 |
| learning-path | Training material for Simatic AX | 69 | 1756 | 1534 |
| string-builder | A StringBuilder class featuring a fluent interface | 4 | 299 | 242 |
| statemachine | Library for creating state machines according to a state pattern | 37 | 1931 | 1533 |
| conversion | Various converter-function variants such as ‘ToString’ and ‘ToInt’ | 36 | 3105 | 2421 |
| mp-conveyorbelt | An mp-showcase AX library | 13 | 605 | 518 |
| Generators | Library of signal generators in ST | 4 | 182 | 150 |
| axftcmlib | Basic control modules for factory simulation | 43 | 2147 | 1680 |
| template-app | A project template for creating a standalone AX application | 3 | 30 | 24 |
| commands | Library to support PLCopen commands in an OOP way | 11 | 570 | 473 |
| template-ax2tia | A template for the ax2tia workflow | 3 | 40 | 45 |
| json | Library to serialize/parse JSON objects | 42 | 3264 | 2870 |
| types | Data types for general use and SIMATIC PLC hardware components | 11 | 14,799 | 1257 |
| windowtracking | Library for position tracking | 26 | 1667 | 1428 |
| mocks | | 7 | 233 | 189 |
| io | Library with IO handling in ST | 35 | 2109 | 1379 |
| collections | Library for list handling (FIFO/LIFO/LinkedList) | 11 | 578 | 520 |
| simple-control-modules | Collection of common control modules | 23 | 1369 | 983 |
| renovate-config | Configuration for Renovate Bot | 0 | 0 | 0 |
| snippetscollection | Useful ST snippets for AX Code | 0 | 0 | 0 |
| plcopen-snippets | PLCopen block templates as AX Code snippets | 1 | 5 | 3 |
| standardizer-tutorial-lib | Tutorial on how to develop a library | 41 | 1184 | 1036 |
| tipps-and-tricks | Tips and tricks for working with Simatic AX and code | 3 | 49 | 37 |
| hello-ax-world | Hello AX World | 5 | 72 | 54 |

Table 3.

Dataset metadata summary.

| Category | Train/Val Count | Test Count |
|---|---|---|
| Total Samples | 5261 | 600 |
| Safe Samples | 433 | 100 |
| Vulnerable Samples | 4828 | 500 |
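The class balance implied by Table 3 can be checked with a few lines of Python. The counts below are copied from the table; the helper name `class_shares` is illustrative only.

```python
# Counts copied from Table 3 (safe vs. vulnerable samples per split).
SPLITS = {
    "train/val": {"safe": 433, "vulnerable": 4828},
    "test": {"safe": 100, "vulnerable": 500},
}
# "Total Samples" row of Table 3, used as a consistency check.
TABLE3_TOTALS = {"train/val": 5261, "test": 600}


def class_shares(counts: dict[str, int]) -> dict[str, float]:
    """Fraction of samples belonging to each class within one split."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


for split, counts in SPLITS.items():
    total = sum(counts.values())
    assert total == TABLE3_TOTALS[split]  # safe + vulnerable matches the total row
    shares = class_shares(counts)
    print(f"{split}: total={total}, " + ", ".join(f"{k}={v:.1%}" for k, v in shares.items()))
# train/val: total=5261, safe=8.2%, vulnerable=91.8%
# test: total=600, safe=16.7%, vulnerable=83.3%
```

The safe class therefore makes up about 8.2% of the training/validation data and about 16.7% of the test set, i.e., vulnerable-to-safe ratios of roughly 11:1 and 5:1, respectively.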

Table 5.

Performance comparison between base and fine-tuned CodeLlama-7b-Instruct-hf models.

| Metric | Base Model | Fine-Tuned Model | Improvement | Change |
|---|---|---|---|---|
| Binary Accuracy | 0.438 | 0.510 | +0.072 | +16.4% |
| Binary Precision | 0.862 | 0.919 | +0.057 | +6.6% |
| Binary Recall | 0.388 | 0.452 | +0.064 | +16.5% |
| Binary F1-Score | 0.535 | 0.606 | +0.071 | +13.2% |
| Type Accuracy | 0.438 | 0.502 | +0.063 | +14.5% |
| Type Precision | 0.845 | 0.784 | −0.061 | −7.2% |
| Type Recall | 0.438 | 0.502 | +0.063 | +14.5% |
| Type F1-Score | 0.448 | 0.520 | +0.072 | +16.1% |
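In Tables 5 to 7, the Improvement column is the absolute difference between the fine-tuned and base scores, and the Change column expresses that difference relative to the base score. The sketch below recomputes a few Table 5 rows under that reading; `improvement` is a hypothetical helper name. Recomputing from the rounded three-decimal values reproduces most entries. Small last-digit discrepancies (for example, Binary F1-Score gives 0.071/0.535 ≈ 13.3% against the reported 13.2%) are most likely due to the tables having been computed from unrounded scores.

```python
def improvement(base: float, fine_tuned: float) -> tuple[float, float]:
    """Return (absolute improvement, relative change in percent) of fine_tuned over base."""
    delta = fine_tuned - base
    return delta, 100.0 * delta / base


# (base, fine-tuned) pairs copied from Table 5 (CodeLlama-7b-Instruct-hf).
rows = {
    "Binary Accuracy": (0.438, 0.510),
    "Binary Precision": (0.862, 0.919),
    "Binary F1-Score": (0.535, 0.606),
    "Type Precision": (0.845, 0.784),
}

for metric, (base, ft) in rows.items():
    delta, pct = improvement(base, ft)
    print(f"{metric}: {delta:+.3f} ({pct:+.1f}%)")
# Binary Accuracy: +0.072 (+16.4%)
# Binary Precision: +0.057 (+6.6%)
# Binary F1-Score: +0.071 (+13.3%)   <- table reports +13.2% (rounding)
# Type Precision: -0.061 (-7.2%)
```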

Table 6.

Performance comparison between base and fine-tuned Qwen2.5-Coder-7B-Instruct models.

| Metric | Base Model | Fine-Tuned Model | Improvement | Change |
|---|---|---|---|---|
| Binary Accuracy | 0.407 | 0.857 | +0.450 | +110.6% |
| Binary Precision | 0.929 | 0.988 | +0.059 | +6.4% |
| Binary Recall | 0.312 | 0.852 | +0.540 | +173.1% |
| Binary F1-Score | 0.467 | 0.915 | +0.448 | +95.9% |
| Type Accuracy | 0.407 | 0.857 | +0.450 | +110.6% |
| Type Precision | 0.867 | 0.923 | +0.056 | +6.5% |
| Type Recall | 0.407 | 0.857 | +0.450 | +110.6% |
| Type F1-Score | 0.380 | 0.875 | +0.495 | +130.3% |
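In Tables 5, 6, and 7, Type Recall is identical to Type Accuracy. This is what one would expect if the type-level scores are support-weighted averages over classes (the convention scikit-learn exposes as `average="weighted"`). Weighting each class recall TP_c/n_c by its share n_c/n and summing gives ΣTP_c/n, which is exactly accuracy. The text does not state the averaging scheme, so this is an inference. Below is a minimal, dependency-free sketch of weighted precision, recall, and F1. `weighted_prf` and the class labels are hypothetical.

```python
from collections import Counter


def weighted_prf(y_true: list[str], y_pred: list[str]) -> tuple[float, float, float]:
    """Support-weighted precision, recall and F1 over the classes present in y_true."""
    n = len(y_true)
    support = Counter(y_true)                                        # n_c per class
    predicted = Counter(y_pred)                                      # predictions per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)   # TP_c per class
    precision = recall = f1 = 0.0
    for label, n_c in support.items():
        tp = correct[label]
        p_c = tp / predicted[label] if predicted[label] else 0.0
        r_c = tp / n_c
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        weight = n_c / n  # class share of the true labels
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    return precision, recall, f1


# Hypothetical labels: "safe" plus three vulnerability types from Table 1.
y_true = ["safe", "logic_bomb", "logic_bomb", "duplicate_write", "race_condition", "safe"]
y_pred = ["safe", "logic_bomb", "safe", "duplicate_write", "logic_bomb", "safe"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
_, recall, _ = weighted_prf(y_true, y_pred)
print(round(accuracy, 4), round(recall, 4))  # 0.6667 0.6667
```

Weighted precision and F1 have no such identity, which is consistent with Type Precision and Type F1-Score differing from Type Accuracy in the tables.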

Table 7.

Comprehensive performance comparison between base and fine-tuned StarCoder2-7B models.

| Metric | Base Model | Fine-Tuned Model | Improvement | Change |
|---|---|---|---|---|
| Binary Accuracy | 0.267 | 0.740 | +0.473 | +177.5% |
| Binary Precision | 1.000 | 0.912 | −0.088 | −8.8% |
| Binary Recall | 0.120 | 0.752 | +0.632 | +526.7% |
| Binary F1-Score | 0.214 | 0.825 | +0.611 | +285.0% |
| Type Accuracy | 0.238 | 0.685 | +0.447 | +187.4% |
| Type Precision | 0.698 | 0.824 | +0.126 | +18.1% |
| Type Recall | 0.238 | 0.685 | +0.447 | +187.4% |
| Type F1-Score | 0.176 | 0.709 | +0.533 | +303.3% |
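The binary metrics can also be sanity-checked against the test split in Table 3. Assuming that "vulnerable" is the positive class and that all 600 test samples are scored, the base StarCoder2-7B row (recall 0.120, precision 1.000) corresponds to TP = 60, FN = 440, FP = 0, and TN = 100. The sketch below recomputes the remaining metrics from those counts. The counts are a reconstruction under these assumptions, not values reported by the authors, and `binary_metrics` is a hypothetical helper.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Accuracy, precision, recall and F1 from a binary confusion matrix."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Assumed reconstruction for base StarCoder2-7B: 500 vulnerable / 100 safe test samples,
# recall 0.120 -> TP = 0.120 * 500 = 60, precision 1.000 -> FP = 0.
m = binary_metrics(tp=60, fp=0, tn=100, fn=440)
print({k: round(v, 3) for k, v in m.items()})
# {'accuracy': 0.267, 'precision': 1.0, 'recall': 0.12, 'f1': 0.214}
```

The recomputed accuracy (0.267) and F1-score (0.214) match the reported base-model values. This suggests that the binary metrics in Table 7 are computed over the full 600-sample test set.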

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).