1. Introduction

Vehicle Routing Problems (VRPs) are a class of combinatorial optimization formulations of significant importance due to their computational complexity [1] and broad practical applications [2]. Generally, VRPs are employed to model and optimize transportation and delivery systems, leading to an extensive taxonomy of problem variants. These include applications in goods distribution [3], service operations [4], navigation [5], passenger transportation [6], and food delivery [7]. It is crucial to note that these domains incorporate diverse constraints and operational conditions. The comprehensive review conducted by Rios et al. [8] provides an in-depth analysis of this diversification.

Many different computational methods have been proposed to tackle routing formulations, including exact algorithms [9], heuristics [10], and metaheuristics [11]. Exact algorithms guarantee optimality but are often computationally prohibitive for large-scale or online problems. Heuristics and metaheuristics, while significantly more efficient, rely on empirically designed rules and human-crafted logic, which may result in suboptimal solutions and limited adaptability to problem variants. Although many heuristic approaches are fast and scalable, their solution quality tends to degrade with increasing problem complexity, additional constraints, or changing instance distributions.

Alternatively, Deep Learning (DL) frameworks offer standardized structures capable of identifying patterns across different instances of a problem and their corresponding solutions [12]. This ability helps address the challenges posed by the lack of generalization and aims to automatically learn effective policies from data, reducing the reliance on handcrafted rules. Supervised Learning (SL) and Reinforcement Learning (RL) have been employed as training paradigms to ensure the effectiveness of these frameworks, as demonstrated by Vinyals et al. [13] and Bello et al. [14], respectively. SL relies on optimal labels, which are computationally expensive to obtain; however, the approach proposed by Luo et al. [15] leverages partial labels, highlighting the potential advantages of this paradigm in the VRP context. In contrast, RL has become the dominant paradigm in the research community, relying solely on reward signals to optimize model parameters. This trend has enabled the wide development of Deep Reinforcement Learning (DRL) frameworks for VRPs, including contributions for static and deterministic variants [14,15,16,17,18].

Significant extensions of DRL algorithms for stochastic and dynamic VRP variants, an active and growing area of research, have been proposed by Bono et al. [19] and Gama et al. [20]. However, these methods do not leverage the algorithmic innovations and structural insights achieved in state-of-the-art approaches for static VRP variants, most notably POMO (Policy Optimization with Multiple Optima) [16]. POMO is specifically tailored to exploit the combinatorial structure of routing problems through multi-trajectory generation and stands as the culmination of a series of advances in policy-based DRL for combinatorial optimization.

A more recent study by Pan et al. [21] proposes a DRL framework for dynamic routing under uncertain customer demands using a Partially Observable Markov Decision Process and a type of dynamic attention. However, it does not account for time-sensitive constraints, focusing primarily on adaptability and demand fulfillment.

For non-learning approaches, such as those based on stochastic programming [22] or hybrid metaheuristics [23], solutions to Stochastic VRPs (SVRPs) often depend on scenario sampling or rule-based evaluation. While these methods can produce high-quality routes in specific settings, they require full re-optimization whenever input conditions change, such as variations in customer demand or travel times, and cannot generalize across different problem instances. This limits their overall efficiency, scalability, and robustness.

This work addresses a challenging stochastic variant of the VRP, termed the Stochastic Capacitated Vehicle Routing Problem with Service Times and Deadlines (SCVRPSTD). The problem can be viewed as a simplified version of the SCVRP with Soft Time Windows (SCVRPSTW), in which travel times and/or service times are modeled as random variables. In the literature, some works have addressed this more general formulation, e.g., Li et al. [24] and Taş et al. [25]; however, their solution strategies rely on conventional methods (exact, heuristic, or metaheuristic), which suffer from the limitations discussed earlier.

The proposed formulation models a delivery system in which a single vehicle with a fixed capacity must execute a sequence of geographically distributed deliveries, returning to a central depot as necessary. Each customer is characterized not only by location and demand but also by temporal features, including service times and delivery deadlines (i.e., latest allowable service start times). Three constraints are addressed: (1) every route must start and end at the depot, (2) the total load per route must not exceed the vehicle’s capacity, and (3) all deliveries must be completed before their respective deadlines. The objective is to minimize the total travel time while also reducing cumulative delays caused by late deliveries.

The solution pipeline for this problem is based on the DRL approach proposed by Kwon et al. [16]. Hence, this study aims to:

- Solve the SCVRPSTD through POMO with Dynamic Context (POMO-DC), which is based on the POMO framework. The proposal incorporates a time-aware dynamic context, enriched state representations, and modified state-transition and reward functions that explicitly capture cumulative travel time and delivery delays.

- Design adaptive state-update mechanisms that ensure feasibility under stochastic travel and service times, enforcing time and operational constraints throughout the decision-making process.

- Evaluate the solution model against state-of-the-art metaheuristics implemented in Google OR-Tools, including Guided Local Search, Tabu Search, Simulated Annealing, and Greedy Tabu Search, enabling a robust cross-paradigm comparison.

The remainder of this paper is organized as follows. Section 2 reviews related work, with emphasis on DRL-based approaches to VRPs. Section 3 details the problem formulation, proposed model architecture, and training algorithm. Section 4 presents the experimental setup and discusses the results in comparison with the baselines. Finally, Section 5 and Section 6 discuss limitations, outline promising avenues for future research, and summarize the key findings.

3. Materials and Methods

This section presents the qualitative and formal modeling of the delivery system under study, referred to as the SCVRPSTD. To contextualize this variant, it is first necessary to revisit the foundational CVRP and its most closely related extension to the proposed formulation, the SCVRPSTW.

The CVRP extends the TSP by incorporating vehicle capacity constraints [2]. In this formulation, a fleet of vehicles with limited load capacity is tasked with serving a set of geographically distributed customers, each associated with a known demand. All vehicle routes begin and end at a central depot, and each route must respect the vehicle’s capacity limit, ensuring that the total demand served does not exceed its carrying capacity. The objective is to construct a set of feasible tours that collectively fulfill all customer demands while minimizing the total travel cost.

Similarly, the SCVRPSTW builds upon the CVRP, but integrates temporal features with uncertainty and relaxes strict temporal requirements. Hence, travel times and/or service times are modeled as random variables—typically with known probability distributions—to capture real-world variability arising from both predictable factors (e.g., traffic patterns) and unpredictable disruptions (e.g., accidents, adverse weather). Customers are assigned time windows representing preferred service intervals; however, these are treated as “soft” constraints, allowing early or late arrivals at the cost of penalties proportional to the deviation. The formulation thus aims to balance cost efficiency with service quality under stochastic operating conditions.

In the specific variant examined in this study—consistent with the single-vehicle framework employed by Kwon et al. [

16] and Kool et al. [

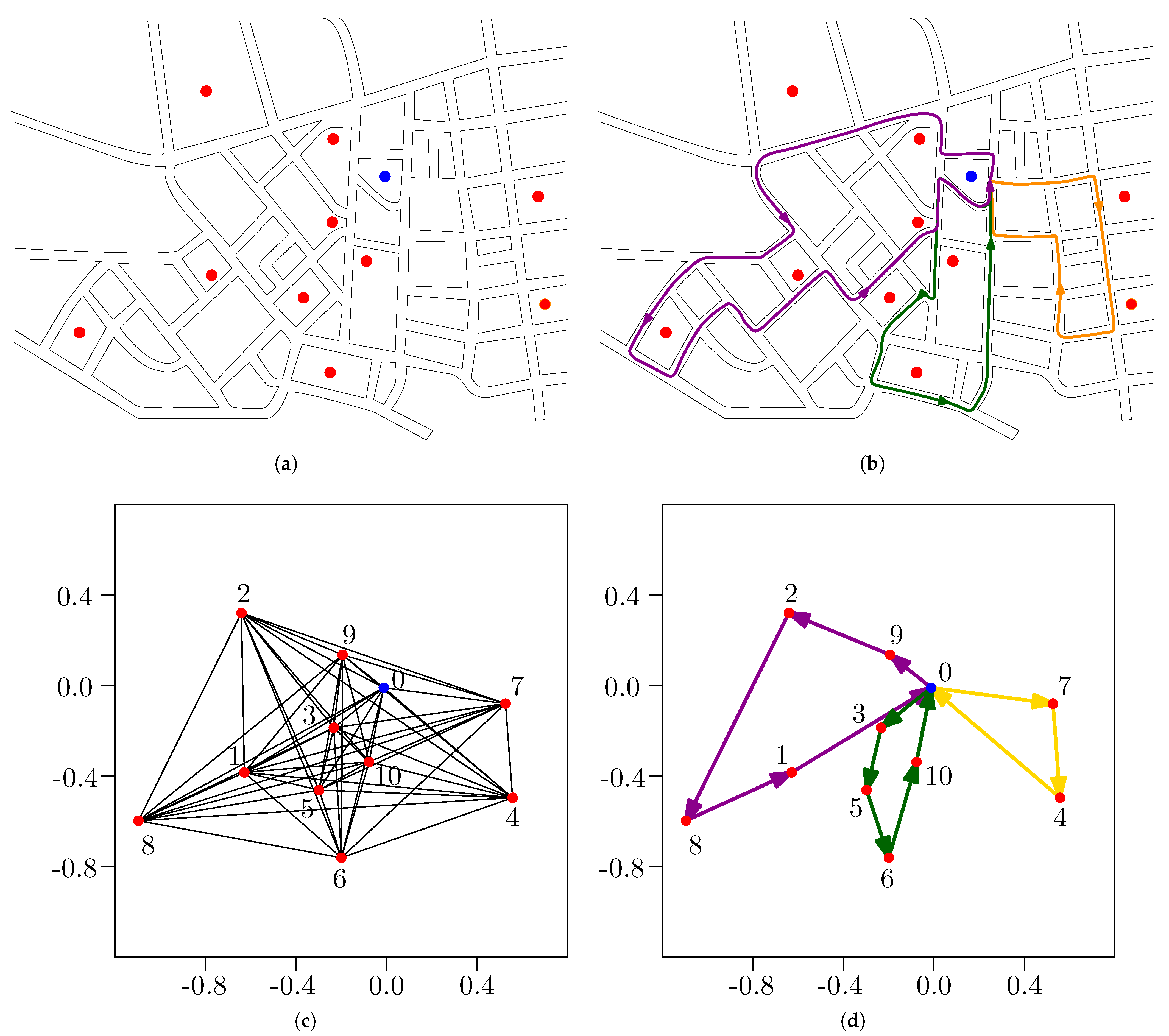

17]—a single vehicle is responsible for serving all customer requests. Given the vehicle’s limited capacity, multiple returns to the depot are permitted to reload, effectively decomposing the global tour into a sequence of sub-tours, as illustrated in

Figure 1. This structure guarantees satisfaction of customer demands even when the aggregate exceeds the vehicle’s carrying capacity in a single trip. The resulting solution can thus be interpreted as a single route that revisits the depot, forming a series of Hamiltonian circuits over disjoint subsets of customers. Nevertheless, in cases where the travel time of a single vehicle becomes infeasible (e.g., exceeding an 8-hour work shift), these Hamiltonian circuits or sub-routes may be reassigned across multiple vehicles, thereby maintaining operational feasibility.

Additionally, to more faithfully capture real-world operational complexities, the formulation preserves the classical CVRP setting—each customer specified by a location and demand—while incorporating three additional features:

- Stochastic travel times: Travel durations between locations are represented as random variables, accounting for variability induced by factors such as traffic congestion, weather, or road conditions. These realizations become known only upon route completion or during traversal.

- Uncertain service times: The service duration at each customer is drawn from a known distribution, reflecting variability in customer availability, unloading procedures, or accessibility constraints.

- Delivery deadlines: Each customer is associated with a latest admissible service start time; arrivals beyond this deadline incur penalties in the reward function, discouraging excessive delays and promoting service reliability.

The objective is to minimize the expected total travel time while simultaneously reducing deadline violations, all within the bounds of strict capacity constraints. By integrating these elements, the SCVRPSTD formulation explicitly addresses the dual challenges of uncertainty and time sensitivity that characterize urban delivery systems.

3.1. Markov Decision Process Formulation

The problem introduced above is formally represented as a Markov Decision Process (MDP), characterized by a four-element tuple comprising the state space, the action space, the transition function, and the reward function. The precise definition of each component is provided in the following subsections.

3.1.1. State Space

A state at decision epoch t contains all information required to make a decision [30]. In the proposed formulation, the state is composed of both static and dynamic elements:

Static elements

- Vehicle capacity. An integer specifying the maximum number of items the vehicle can carry. Its value depends on the instance size, defined by the number of delivery requests n.

- Depot features. A tensor with three attributes for the depot in each of the b problem instances (batch dimension): 2D coordinates and the service time for reloading.

- Delivery features. A tensor with five attributes for each of the n customer locations across the b instances: 2D coordinates, demand (number of items to deliver), service time (delivery duration), and deadline (latest possible time for fulfilling the request).

Dynamic elements

- Current actions. The set of locations selected at decision epoch t, representing feasible next locations or nodes that respect problem constraints. One action is selected for each of the parallel solutions or trajectories computed per instance [16].

- Current vehicle load. The load carried by the vehicle at epoch t, updated according to the cumulative demands of visited customers.

- Accumulated travel time. The elapsed travel time up to epoch t, computed from stochastic travel durations and service times. This feature informs both state transitions and the reward signal.

- Accumulated delay. The total penalty time incurred when customer deadlines are violated, dynamically updated throughout the route.

- Feasibility mask. A binary tensor that excludes invalid actions by masking locations already visited or infeasible due to load constraints at epoch t.

- Trajectory completion mask. A binary tensor indicating whether a trajectory has been fully constructed, thereby disabling further action selection for that trajectory.

It is important to note that most of the temporal features and tensors defined above were not part of the original CVRP formulation addressed in [16]. Consequently, the state transition and reward functions have been consistently adapted to the extended problem structure.

3.1.2. Action Space

The action space consists of all valid action tensors for the b instances in the batch and their parallel trajectories at the current decision epoch t. These tensors are generated sequentially by the NNM based on the current state.

After T decision epochs, the solution tensor is constructed by concatenating the sequence of actions along the last dimension, as described in Equation (1).

To ensure feasibility concerning problem constraints, such as vehicle capacity and unvisited nodes, action masking is applied during inference using the feasibility mask. A detailed description of the action generation mechanism and masking process is provided in Section 3.2.2, where the NNM’s decoding procedure is discussed.

3.1.3. Transitions

Transitions capture the effects of actions on the environment, manifesting as changes in the states.

Given the action tensor, the type of each selected location is evaluated. If the selected location corresponds to the depot, the vehicle’s available load is reset to its maximum capacity. Additionally, the corresponding travel time is updated to include the time required to reload the vehicle, as defined in Equation (2).

On the other hand, for entries in the action tensor corresponding to delivery requests, the delivery delays, available supplies, and travel times are updated according to Equations (3), (4) and (5), respectively. These updates use the demand tensor and the tensor of service times, both of which are features included in the delivery features.

Following these updates, the next set of requests to be delivered is determined and gathered into the action tensor. The edge travel time between consecutive locations is computed based on Euclidean distance, scaled by a stochastic multiplier. Specifically, the distance between locations $j$ and $k$, with coordinates $(x_j, y_j)$ and $(x_k, y_k)$, respectively, is given by $d_{jk} = \sqrt{(x_j - x_k)^2 + (y_j - y_k)^2}$. The corresponding travel time is proportional to this distance, with the stochastic multiplier acting as the proportionality factor. The multiplier is sampled independently for each edge, introducing the main stochastic component of the formulation. These values are accumulated into the global travel time tensor, in a similar way as expressed by Equation (5).

This transition mechanism ensures that the state evolves dynamically in response to agent actions, incorporating both the constraints and stochastic factors of the formulation.
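The transition logic described in this subsection can be illustrated for a single trajectory with the minimal sketch below. It is not the authors' implementation: the names `VehicleState`, `edge_travel_time`, and `step`, the dictionary layout of a node, and the uniform range of the multiplier are all assumptions made for illustration. Lateness is measured at arrival, on the assumption that a deadline is the latest allowable service start time.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class VehicleState:
    """Dynamic quantities tracked for one trajectory (illustrative names)."""
    position: tuple
    load: float          # items still on board
    travel_time: float   # accumulated travel plus service time
    delay: float         # accumulated lateness past deadlines


def edge_travel_time(a, b, rng, low=0.8, high=1.2):
    """Euclidean distance scaled by a multiplier sampled once per edge.

    The range [low, high] is a placeholder, not the paper's calibration.
    """
    return math.dist(a, b) * rng.uniform(low, high)


def step(state, node, capacity, rng):
    """Move to `node` and return the updated load, time, and delay."""
    arrival = state.travel_time + edge_travel_time(state.position, node["xy"], rng)
    if node["is_depot"]:
        # Depot visit: refill to full capacity and pay the reload time.
        return VehicleState(node["xy"], capacity,
                            arrival + node["service_time"], state.delay)
    # Customer visit: assumed lateness rule, arrival time minus deadline.
    lateness = max(0.0, arrival - node["deadline"])
    return VehicleState(node["xy"],
                        state.load - node["demand"],
                        arrival + node["service_time"],
                        state.delay + lateness)
```

In a batched implementation the same updates would be applied element-wise to tensors indexed by instance and trajectory; the scalar form is used here only to keep the logic readable.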

3.1.4. Reward Function

The objective of this formulation is to jointly minimize the total travel time and the accumulated delivery delays, given the solution tensor. The reward is computed by simply summing the expected values of these two terms, as shown in Equation (6).

In addition to this direct formulation, a normalized weighted sum was also explored for computing the reward. This is a common approach used in multiobjective formulations; refer to Lin et al. [43] for a detailed explanation of this and other schemes. While this approach allows for explicit control over the trade-off between objectives, the empirical results indicate that the simpler additive form in Equation (6) consistently leads to better performance. Possible reasons for this behavior are discussed in Section 5.
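The two reward schemes can be written compactly as follows. The notation is assumed here and is not taken from the original equations: $T(\pi)$ and $D(\pi)$ denote the accumulated travel time and accumulated delay of a solution $\pi$, $w$ is a trade-off weight, and $T_{\max}$ and $D_{\max}$ are hypothetical normalization constants. The leading minus sign reflects the assumption that the reward is maximized while both quantities are minimized.

```latex
\begin{aligned}
R_{\text{add}}(\pi) &= -\bigl(\mathbb{E}[T(\pi)] + \mathbb{E}[D(\pi)]\bigr), \\
R_{w}(\pi) &= -\Bigl(w\,\frac{\mathbb{E}[T(\pi)]}{T_{\max}} + (1 - w)\,\frac{\mathbb{E}[D(\pi)]}{D_{\max}}\Bigr), \qquad w \in [0, 1].
\end{aligned}
```

Because both terms of the additive form are measured in the same unit (time), it needs no normalization constants or weight.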

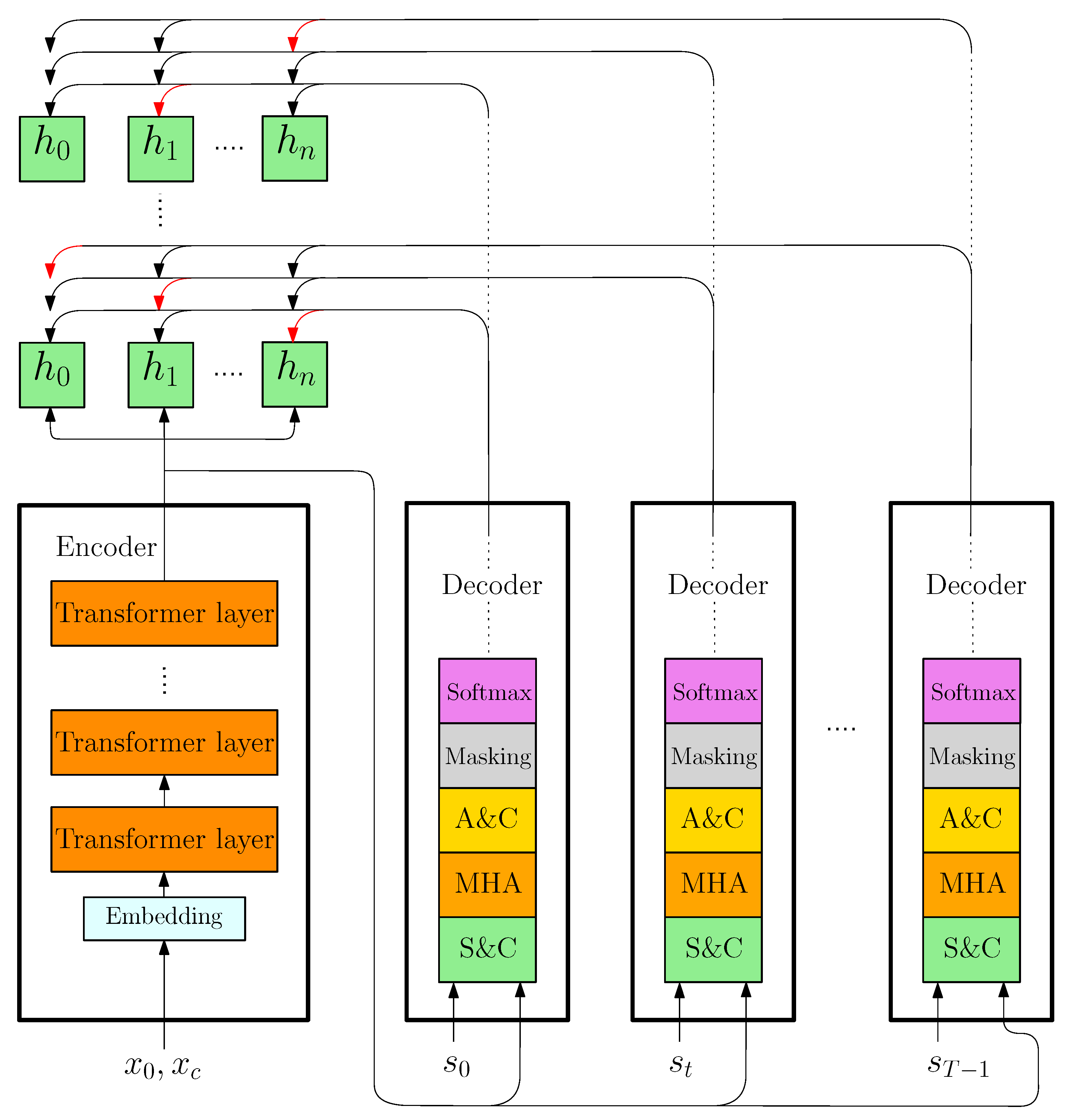

3.2. Solution Model

Figure 2 presents a schematic representation of the computational pipeline executed by the proposed NNM. The architecture builds upon the AM framework introduced by Kool et al. [17] and follows the implementation principles of POMO [16], while incorporating key adaptations and contributions developed in this work. As discussed in Section 2, Transformer-based architectures offer superior modeling capabilities compared to RNNs, particularly through their ability to process input sequences in parallel and capture long-range dependencies. These attributes, combined with their capacity to exploit structural symmetries in graph-structured problems, make them especially well-suited for combinatorial optimization tasks, specifically VRPs. These features provide a strong rationale for adopting this type of architecture in the context addressed and motivate the design of the proposed model.

The architecture follows an encoder–decoder structure. The encoder maps the input problem instance into a set of vector representations using the standard Transformer architecture [37]. The decoder, on the other hand, generates multiple solution trajectories in parallel for each instance by leveraging both the encoder outputs and a dynamic context vector that evolves over decision epochs. This process is carried out in an autoregressive manner, enabling sequential decision-making conditioned on the current state. The following sections formally describe the design and functionality of each component in the proposed architecture.

3.2.1. Encoder

The encoder transforms raw input features into high-dimensional representations through a series of learned embeddings and Transformer layers [37]. This process enables the model to capture both local node characteristics and global structural dependencies within the routing instance.

To facilitate unified processing of heterogeneous node types, the depot and customer features are projected into a shared latent space of fixed dimension. This ensures that all nodes, despite differing input dimensions, are represented in a common “semantic” or “functional” space, enabling the attention mechanism to reason over them coherently. In Figure 2, such a process is depicted within the encoder under the “Embedding” block.

The transformation of depot features into this latent space is defined in Equation (7). Specifically, the normalized depot tensor is linearly mapped using a learnable weight matrix and bias vector, resulting in the embedded depot representation.

Customer features, which include coordinates, demand, service time, and deadline, are processed through a separate but analogous transformation, as given in Equation (8). The normalized customer tensor is projected using its own learnable weight matrix and bias vector.

This separation preserves the distinct semantics of depot and customer nodes while mapping them into the same latent space.

Once computed, the individual embeddings are combined to form a global node representation. As expressed in Equation (9), the depot and customer embeddings are concatenated along the second dimension to yield a unified feature tensor.

This tensor serves as the input to a stack of L Transformer layers, where relational reasoning among all nodes is performed.

Each Transformer layer applies Multi-Head Attention (MHA) to refine the node representations. For the m-th attention head in layer l, the key, query, and value projections are computed according to Equations (10), (11) and (12), respectively, where the projection matrices are learned to extract relevant features for attention computation.

The attention scores are derived from the dot products of queries and keys, divided by the square root of the key dimension to prevent vanishing gradients and stabilize training. This operation is formalized in Equation (13).

The resulting scores are normalized via softmax and used to weight the value vectors, producing the output of the attention head. Such a transformation is specified in Equation (14).

Each head output thus represents an instance-aware summary, where attention is focused on the most relevant locations.
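The computation performed by one attention head can be sketched in plain Python as follows. This is the standard scaled dot-product attention, written with nested lists instead of tensors for readability; the function names are illustrative, and the learned projections that produce the queries, keys, and values are assumed to have been applied already.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(queries, keys, values):
    """Scaled dot-product attention for a single head.

    queries, keys: lists of vectors of length d_k.
    values: list of vectors of length d_v (one per key).
    Returns one output vector of length d_v per query.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs = []
    for q in queries:
        # Compatibility of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(d_v)])
    return outputs
```

In the encoder, every node acts as a query over all nodes of the same instance, so each output row mixes information from the whole instance.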

The outputs from all M heads are concatenated and linearly transformed to restore the original dimensionality. This step, shown in Equation (15), integrates information from multiple attention subspaces.

A learned output matrix projects the concatenated output back into the embedding space, ensuring compatibility with the residual connection [44].

A residual connection is then applied, followed by instance normalization, as defined in Equation (16). This promotes stable gradient propagation and accelerates convergence [44].

Subsequently, a Feed-Forward Network (FFN) further processes the normalized output. This transformation, detailed in Equation (17), consists of a linear projection, ReLU activation, and a second linear layer.

Finally, a second residual connection and normalization yield the output of the l-th layer, as given in Equation (18).

This two-sublayer structure (attention followed by FFN) constitutes one Transformer block, as depicted in Figure 2 by the orange boxes, and is repeated L times to progressively refine the node embeddings. After L layers, the final output contains enriched, instance-sensitive representations for all nodes. Specifically, it consists of one feature vector per node (the depot and each customer) for each of the b instances in the batch. Such embeddings are used by the decoder to guide the sequential construction of vehicle routes.
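The two-sublayer structure of one encoder block can be summarized as below. The symbols are assumptions introduced for this summary rather than the paper's own notation: $h^{(l)}$ is the node representation after layer $l$, $\mathrm{IN}$ is instance normalization, and $W_1, b_1, W_2, b_2$ are the FFN parameters.

```latex
\begin{aligned}
\hat{h}^{(l)} &= \mathrm{IN}\bigl(h^{(l-1)} + \mathrm{MHA}^{(l)}(h^{(l-1)})\bigr), \\
\mathrm{FFN}(x) &= W_2\,\mathrm{ReLU}(W_1 x + b_1) + b_2, \\
h^{(l)} &= \mathrm{IN}\bigl(\hat{h}^{(l)} + \mathrm{FFN}(\hat{h}^{(l)})\bigr), \qquad l = 1, \dots, L.
\end{aligned}
```

Here $h^{(0)}$ is the concatenated depot and customer embedding, and $h^{(L)}$ is the encoder output passed to the decoder.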

3.2.2. Decoder

While Kool et al. [17] introduced dynamic context in attention-based models by incorporating the vehicle’s remaining load and the representation of the last visited node during decoding, the proposed decoder extends this concept by explicitly integrating a broader set of dynamic, time-varying features. In addition to these, it accounts for variables such as elapsed travel time and cumulative delay (or time-outs), which evolve as the route progresses. This enriched dynamic context enables more accurate modeling of realistic routing scenarios, where decisions must adapt continuously to changing environmental conditions.

At every decision point t, the decoder processes the current dynamic state and uses it to construct a context-aware query that guides attention toward the most promising next node (as depicted in Figure 2 by the arrows). Specifically, the first step is to “Scale and Concatenate (S&C)” the relevant dynamic quantities, forming the dynamic context vector. These quantities include the embedding of the last visited location, the current vehicle load, the cumulative travel time, and the accumulated delay, each normalized by its respective maximum possible value (the result of this operation is denoted by the hat symbol). The resulting context vector is defined in Equation (19), where the concatenation is applied along the second dimension to form the dynamic context tensor.
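A minimal sketch of the "Scale and Concatenate" step for one trajectory is given below. The function name and the three normalization constants (`capacity`, `max_time`, `max_delay`) are assumptions for illustration; the source states only that each scalar is divided by its maximum possible value. For simplicity, the embedding of the last visited node is passed through unchanged.

```python
def dynamic_context(last_node_embedding, load, travel_time, delay,
                    capacity, max_time, max_delay):
    """Build the dynamic context for one trajectory.

    The last-node embedding is followed by the three scaled scalars:
    remaining load, cumulative travel time, and accumulated delay.
    """
    scaled = [load / capacity, travel_time / max_time, delay / max_delay]
    return list(last_node_embedding) + scaled
```

With an embedding of length d, the resulting context has length d + 3, which is the input size the query projection would need under this sketch.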

This context vector serves as the query input to the MHA mechanism in the decoder. The key and value projections are derived from the encoder’s final output using linear transformations, as specified in Equations (20) and (21), respectively.

The query is obtained by projecting the dynamic context, as defined in Equation (22).

Attention scores are computed using scaled dot products between the query and key matrices. To ensure feasibility, inadmissible actions are masked out during this computation. The masked attention scores are given in Equation (23).

In Equation (23), the dynamic feasibility mask disables invalid actions at the current decision or time step. For the depot, the mask is activated if it has already been visited in a previous iteration. For customer locations, a mask is applied if the location has either already been visited (i.e., its demand is zero) or if its current demand exceeds the available load in the vehicle. These constraints are mathematically defined in Equations (24)–(27). In this context, the demand term represents an expanded tensor that indicates the demands for all locations (with the depot having zero demand) across all trajectories and b samples in the current batch.
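The masking rules above can be sketched for a single trajectory as follows. The function name and argument layout are hypothetical, and the depot rule is interpreted here as "the depot cannot be chosen twice in a row", which is an assumption about what "visited in a previous iteration" means.

```python
def feasibility_mask(demands, load, at_depot):
    """Return a 0/1 mask over locations, where 1 marks an infeasible action.

    demands[0] is the depot (zero demand); demands[i] is the remaining
    demand of customer i and is set to zero once the customer is served.
    """
    # Depot rule (assumed): forbid selecting the depot again right after it.
    mask = [1 if at_depot else 0]
    for d in demands[1:]:
        visited = d == 0        # already served
        too_large = d > load    # does not fit in the remaining load
        mask.append(1 if (visited or too_large) else 0)
    return mask
```

For example, with demands `[0, 3, 0, 8]`, a load of 5, and the vehicle away from the depot, only the depot and the first customer remain selectable. When every customer has been served, all customer entries are masked, and the trajectory completion mask is expected to stop further decoding.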

The masked scores are passed through a softmax function and used to compute a weighted sum of the value vectors, as shown in Equation (28).

The outputs from all M attention heads are concatenated and linearly transformed to restore the original embedding dimension. This operation, defined in Equation (29), produces the refined context vector.

Such an output integrates information from multiple attention subspaces and serves as input to the final attention layer. In this layer, compatibility scores between the refined context vector and the encoded node features are computed via scaled dot-product attention. The resulting score tensor is defined in Equation (30).

A second masking operation (the first is performed within the MHA block) is applied to enforce feasibility constraints based on the dynamic mask. As described in Equation (31), if a location is deemed infeasible, its corresponding score is set to $-\infty$, which effectively eliminates it from further consideration during the softmax operation, while valid scores are clipped using a hyperbolic tangent function scaled by a constant, ensuring that extreme values are smoothly controlled.

Finally, the policy’s output distribution over feasible actions is obtained by applying a softmax function to the masked and clipped scores, as given in Equation (32).

The resulting tensor represents the probability of selecting each node at step t; it can be understood as a realization of the policy (i.e., the NNM). During training, actions are sampled from this distribution to enable exploration; during inference, greedy selection is typically used. Additionally, the probabilities of the selected actions are appended to a global tensor, which is used in the policy gradient updates.

The decoding process continues until all trajectories are complete. The trajectory completion mask is updated at each step based on whether all customer demands have been satisfied. This condition is evaluated using the visit mask (if all its elements in the last dimension are set to 1, then the computation is finished).
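The final scoring and selection step can be sketched as follows for one trajectory. The function names are illustrative, and the clipping constant of 10.0 is only a placeholder (it is the value used by Kool et al. [17]; the value used in this work is not stated in the text above). The sketch assumes at least one location is feasible.

```python
import math
import random


def action_probabilities(scores, mask, clip=10.0):
    """Masked softmax over tanh-clipped compatibility scores.

    mask[i] == 1 marks an infeasible location; its probability becomes 0.
    At least one entry of mask must be 0.
    """
    logits = [-math.inf if m else clip * math.tanh(s)
              for s, m in zip(scores, mask)]
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


def select_action(probs, rng=None):
    """Sample when an RNG is given (training); otherwise pick greedily."""
    if rng is None:
        return max(range(len(probs)), key=probs.__getitem__)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

Calling `select_action(probs, random.Random(seed))` mimics the sampling used for exploration during training, while `select_action(probs)` corresponds to greedy decoding at inference time.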

3.3. Training Scheme

The NNM introduced in the preceding section is trained using a variant of the REINFORCE algorithm [34] that uses a shared baseline [16] to reduce the variance of the gradient estimates. For a given sample i in a batch of size b, the shared baseline is computed as the average reward across all trajectories generated for that sample. This tensor is then expanded to match the shape of the full reward tensor.

The complete training procedure, detailing the computational steps performed at each epoch, is outlined in Algorithm 1. POMO-DC refers to the proposed DRL model trained using a POMO-based pipeline and enhanced with the dynamic context-aware decoder. The whole process involves encoding the batch of problem instances, executing rollouts to collect rewards and log-probabilities of actions, and updating the model parameters using policy gradient updates. The learning rate is dynamically adjusted according to a predefined schedule, and model checkpoints are periodically saved to facilitate evaluation and ensure fault-tolerant training.

In this form, the objective function to be minimized is approximated by:

This loss encourages the model to assign a higher probability to trajectories that outperform the average solution for the same instance.
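The shared-baseline loss can be illustrated numerically with the sketch below. It uses plain floats, so it shows only how the loss value is formed; in an actual training loop the log-probabilities would carry gradients through an automatic differentiation framework. The function name and the nested-list layout are assumptions.

```python
def pomo_loss(rewards, log_probs):
    """Shared-baseline REINFORCE loss averaged over a batch.

    rewards[i][j]: reward of trajectory j on instance i (higher is better).
    log_probs[i][j]: summed log-probability of the actions of that trajectory.
    """
    total, count = 0.0, 0
    for r_row, lp_row in zip(rewards, log_probs):
        # Shared baseline: mean reward over the trajectories of one instance.
        baseline = sum(r_row) / len(r_row)
        for r, lp in zip(r_row, lp_row):
            advantage = r - baseline
            # Minimizing this term raises the log-probability of
            # trajectories with above-average reward.
            total += -advantage * lp
            count += 1
    return total / count
```

Because the baseline is the mean over trajectories of the same instance, the advantages of each instance sum to zero, so a trajectory is reinforced only relative to its siblings.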

Algorithm 1. Overview of the POMO-DC training procedure for the SCVRPSTD

- 1: Input: environment hyperparameters, model hyperparameters, training hyperparameters, and optimizer hyperparameters
- 2: Output: trained model parameters
- 3: Initialize environment
- 4: Initialize model
- 5: Initialize optimizer
- 6: Initialize learning rate scheduler
- 7: for each epoch do
  - 8: Reset loss
  - 9: for each episode, advancing by the given step size, do
    - 10: Sample batch instances
    - 11: Encode instances
    - 12: Initialize rollout
    - 13: repeat
      - 14: Select actions and probabilities
      - 15: Apply actions
      - 16: Accumulate log-probabilities
    - 17: until termination
    - 18: Compute reward
    - 19: Compute advantage
    - 20: Compute loss
    - 21: Update model parameters
  - 22: end for
  - 23: Step learning rate scheduler
- 24: end for

4. Experiments and Results

4.1. Experimental Setup

Table 2 summarizes the parameters used for the environment setup, model architecture, and training configuration. In that table, uniform distributions are specified with braces indicating discrete sets and parentheses representing continuous intervals. These settings are based on prior work in the literature (such as [17,19]) as well as operational conditions observed in local logistics companies. In particular, the stochastic multiplier is calibrated to produce a maximum velocity of 61 km/h when traversing an edge. The environment setup (first part of Table 2) can be adapted to specific real-world scenarios or delivery system requirements using historical data. Three instance sizes were considered, corresponding to 20, 30, and 50 customers. Vehicle capacity was scaled accordingly, with maximum loads of 30, 35, and 40 units, respectively. The NNM was trained for 50 epochs using over 1.28 million synthetic samples, in batches of 64. During evaluation, a separate set of 100 independent test instances was considered.

Concerning hardware, all experiments were conducted on a workstation equipped with an Intel(R) Core(TM) i7-4790 CPU (3.60 GHz) and an NVIDIA GeForce RTX 3080 Ti GPU.

The remainder of this section presents a detailed analysis of the results obtained by the proposed method, referred to as POMO-DC, and its performance in comparison with state-of-the-art metaheuristic approaches. Direct comparisons with other learning-based methods are challenging to establish, as existing architectures typically require significant adaptations to align with the specific formulation addressed. Such modifications often result in models that differ substantially from the original and may be considered entirely new proposals.

4.2. General Results

To evaluate the performance of the proposed approach against the considered classical metaheuristic methods, a benchmarking framework is implemented using the Google OR-Tools library [42], an open-source toolkit designed for solving a wide range of combinatorial optimization problems. OR-Tools has been widely adopted in both academic and industrial research, e.g., Zhang et al. [41] and Bono et al. [19], serving as a standard baseline for VRPs and scheduling applications. This software enables the use of different algorithms to obtain solutions for a particular problem once the features of the formulation have been established.

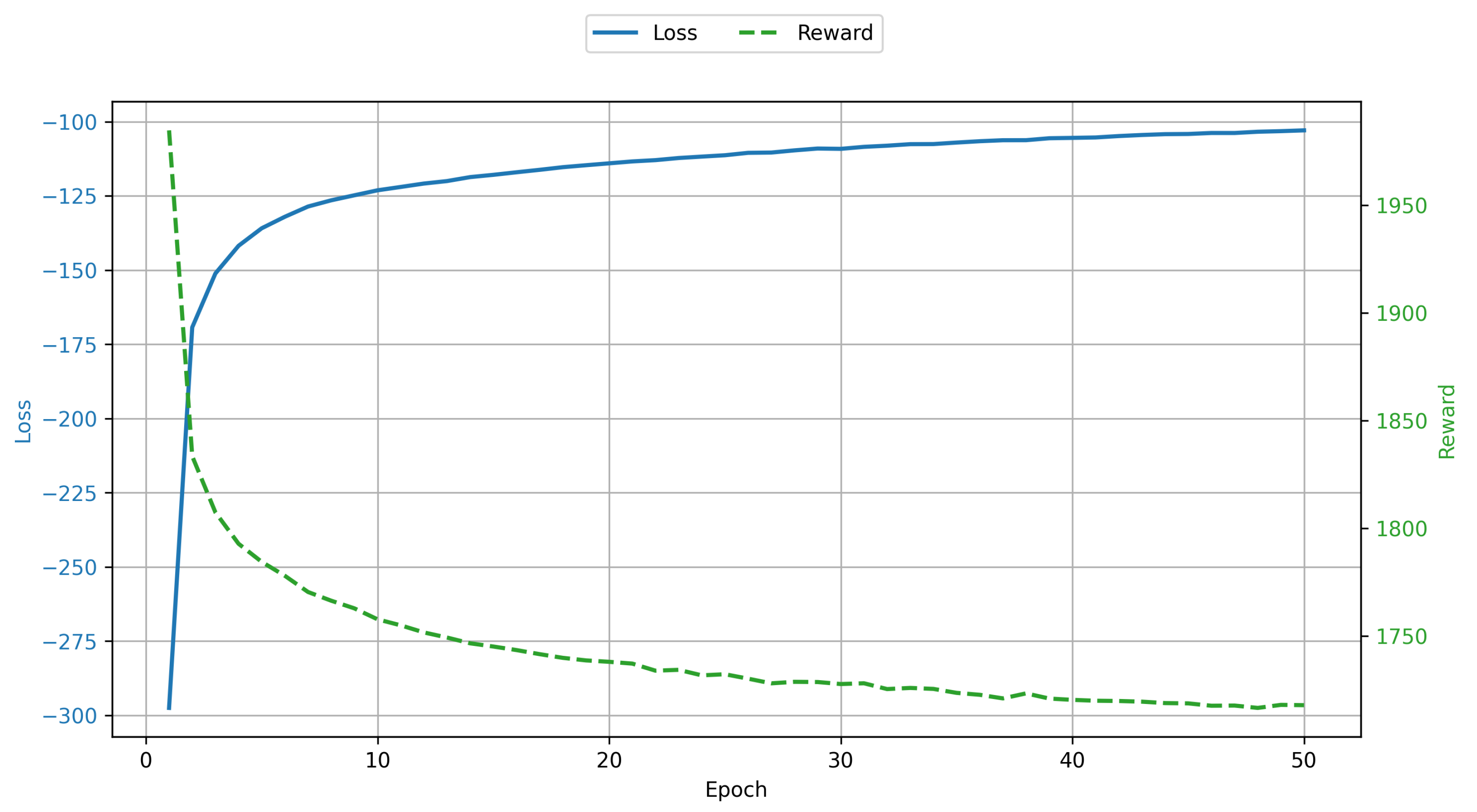

Three NNMs using the POMO-DC approach (see Algorithm 1) were trained, one for each instance size (20, 30, and 50 customers). Figure 3 shows the evolution of the training loss and reward across epochs for the proposed POMO-DC model on one representative instance size; for the other instance sizes, the behavior is similar. As expected, the loss decreases steadily, indicating that the policy network is effectively learning the direction in which its parameters should be changed to maximize expected return, in line with the principles of policy-based RL. Concurrently, the reward curve exhibits a consistent upward trend (to simplify training visualization, a minus sign is applied to this value, making the reward positive), demonstrating that the model progressively improves its routing performance as training proceeds.

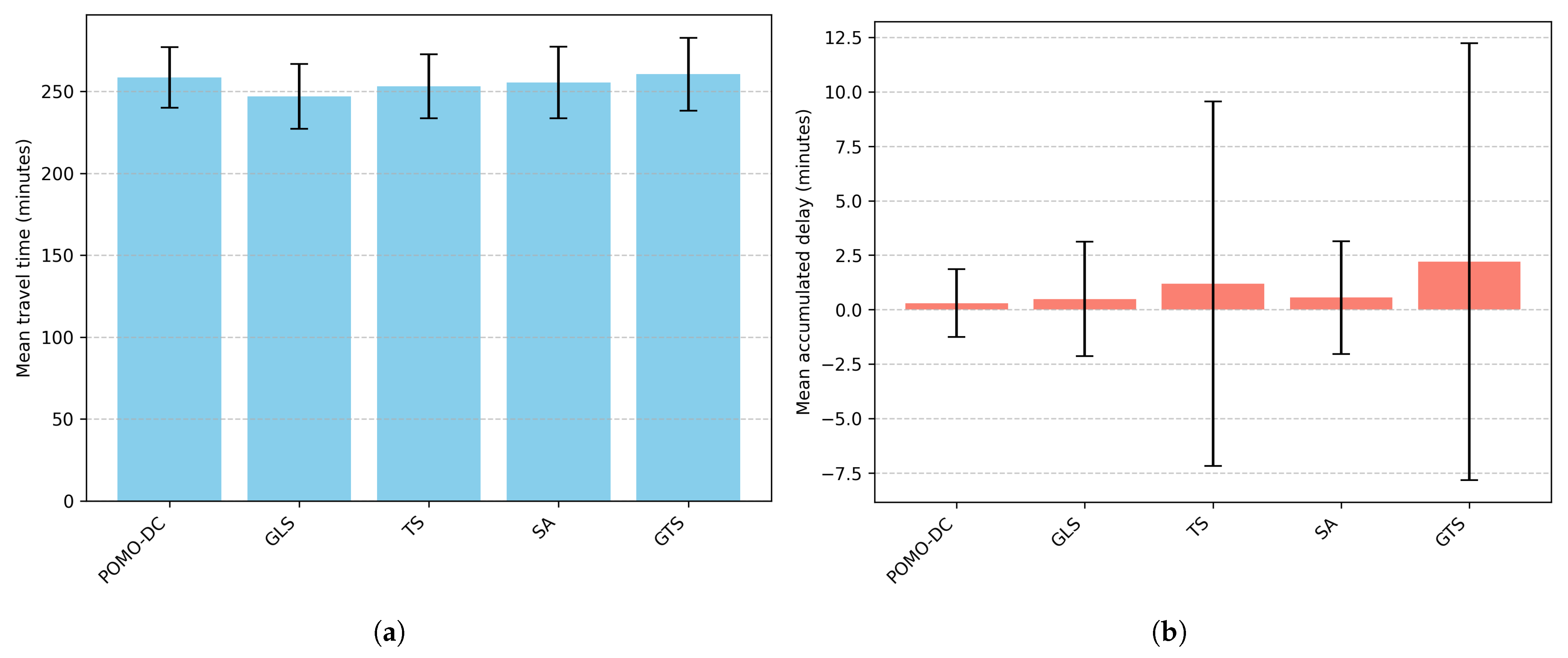

After training, the models are evaluated on 100 previously unseen instances and benchmarked against OR-Tools configurations employing Guided Local Search (GLS), Tabu Search (TS), Simulated Annealing (SA), and Greedy Tabu Search (GTS).

Table 3 and Figure 4 present the numerical and graphical results for instances with 20 customers. In terms of travel time, GLS obtains the best average performance (246.46 min), followed closely by TS (252.22 min) and SA (255.34 min). The proposed method, POMO-DC, achieves a mean travel time of 258.44 min, slightly above these metaheuristics but with the lowest variability (±18.47 min). These outcomes highlight the model’s ability to generate efficient and reliable solutions for small-scale instances, despite relying on a learning-based approach rather than a deterministic optimization procedure.

When considering delivery delays, POMO-DC outperforms all baselines in instances with 20 customers. It reports the lowest mean delay accumulation (0.30 min) and the lowest standard deviation (±1.55 min), indicating consistent schedule adherence across problem instances. Although GLS achieves a low mean delay as well (0.49 min), its variability is nearly double that of POMO-DC. The other metaheuristics, TS, SA, and GTS, incur larger and more variable delays, with GTS reaching a mean delay accumulation of 2.20 min and a relatively high standard deviation of 10.03 min. This points to unstable performance under scheduling constraints.

For the intermediate instance size, Table 4 and Figure 5 report the performance of POMO-DC and the four benchmarks (GLS, TS, SA, and GTS). The reported mean travel time for our proposal is not only the lowest among all methods but also comes with a relatively tight spread (±26.74 min), reflecting consistent performance across instances. The metaheuristics, while still within a comparable range of travel times (approximately 391–394 min), incur substantially higher penalties from out-of-schedule deliveries. As the problem size increases, the advantage of POMO-DC in terms of delay control becomes more pronounced. It achieves a mean delay of only 20.35 min, compared to well over 170 min for all metaheuristic methods. Furthermore, the standard deviation of delays for POMO-DC (65.27 min) is nearly four times lower than that of its closest metaheuristic counterpart, underscoring the model’s robustness and stability in maintaining schedule feasibility.

Finally, Table 5 and Figure 6 summarize the performance of the proposal and the aforementioned metaheuristic approaches for the largest instance size. POMO-DC continues to demonstrate strong generalization capabilities. It achieves the lowest travel time (537.62 min) among all methods, outperforming the metaheuristic baselines by more than 60 min on average. Additionally, its travel time variation (±27.75 min) remains tightly controlled, reinforcing the model’s consistency across complex instances.

The most significant advantage of POMO-DC for this setting, as in the preceding case, lies in the accumulation of delays. While the NNM records an average delay of 1098.97 min, the metaheuristic methods exhibit massive delay values exceeding 4300 min. This indicates that, although the benchmarks can find reasonably short routes, they struggle to maintain feasibility concerning delivery deadlines. Moreover, the variability in delays for the metaheuristics remains high (the standard deviations are above 900 min), revealing unstable performance in time-sensitive scenarios.

5. Discussion

Overall, POMO-DC demonstrates competitive performance within the proposed comparative framework. As shown in Table 3, the model achieves travel time values higher than the best metaheuristic but remains competitive across instances with 20 customers. It is important to note that the proposed method relies on learned policies, which approximate optimal behavior through pattern recognition rather than exhaustive combinatorial search. Therefore, these models can produce a high-quality but slightly suboptimal route simply because they learn statistical patterns using average performance as a reference.

In contrast, for the second optimization objective, delay accumulation, the mean values achieved by POMO-DC are comparable for instances with 20 customers and significantly superior for the two larger instance sizes. These outcomes confirm that DRL-based models are capable of effectively managing soft time constraints, mitigating infeasibility through the incorporation of penalty terms in the reward function. The proposed framework is also generalizable to other problem formulations, objectives, and constraints. For instance, in a customer inconvenience minimization scheme, such as that of Taş et al. [25], service quality ratings could be accumulated over a route and incorporated into the reward function and the dynamic context to promote higher service levels. Future work could also extend the approach to additional operational constraints, including, but not limited to, energy consumption, route duration limits, and pickup-and-delivery requirements.

The values reported in Table 5 for delay accumulation are impractical in real-world operations. This limitation stems from the computational setup, which assumes that a single vehicle is responsible for serving all requests, a scenario that diverges from typical delivery systems, where a fleet of vehicles is usually deployed. An alternative interpretation is to consider that multiple vehicles are available but constrained to begin their routes sequentially, with each departing only after the previous one has completed its journey. A short-term mitigation strategy would be to assign a vehicle to each route identified in the solution and dispatch them simultaneously; however, this may still yield suboptimal outcomes due to the time constraints embedded in the training process. A more effective and sustainable approach is to integrate multi-vehicle scheduling directly into the model’s training pipeline, ensuring that routing decisions are optimized jointly with vehicle assignment. The inductive biases proposed by Zhang et al. [41] offer valuable insights for pursuing this research direction.
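The difference between the sequential interpretation and the simultaneous-dispatch mitigation can be illustrated with a small sketch. It assumes deterministic leg times and a hypothetical route encoding (a list of `(leg_time, deadline)` stops plus a return leg to the depot), whereas the formulation addressed in this work is stochastic; the function name `accumulated_delay` is likewise an assumption made for illustration only.

```python
def accumulated_delay(routes, simultaneous):
    """Total lateness (in minutes) over all customers of all routes.

    routes       : list of (stops, return_leg) pairs, where stops is a list of
                   (leg_time, deadline) tuples and return_leg is the time
                   needed to drive back to the depot
    simultaneous : if True, every route starts at time zero (one vehicle per
                   route); if False, each route starts when the previous one
                   has returned to the depot (single vehicle)
    """
    total_delay = 0.0
    clock = 0.0
    for stops, return_leg in routes:
        if simultaneous:
            clock = 0.0  # each vehicle leaves the depot at time zero
        for leg_time, deadline in stops:
            clock += leg_time
            total_delay += max(0.0, clock - deadline)
        clock += return_leg  # back at the depot before the next route starts
    return total_delay


# Two identical hypothetical routes with deadlines measured from time zero.
routes = [
    ([(10.0, 15.0), (10.0, 25.0)], 10.0),
    ([(10.0, 15.0), (10.0, 25.0)], 10.0),
]
sequential_delay = accumulated_delay(routes, simultaneous=False)
parallel_delay = accumulated_delay(routes, simultaneous=True)
```

In this toy case, the second route is late only because it inherits the clock of the first one, which is the mechanism that inflates the delay values for large single-vehicle instances.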

Some conjectures may explain why the simplest additive form of the reward computation, Equation (6), outperforms a normalized weighted sum. In RL, particularly in policy gradient methods such as REINFORCE and its variants, reward design plays a crucial role in training stability. The weighted sum introduces additional hyperparameters (weights, normalization constants) that must be tuned carefully; suboptimal settings could amplify variance or bias in the policy gradient estimates, leading to slower convergence or unstable learning. Moreover, simpler reward structures could produce more stable gradients, especially in stochastic environments such as the proposed formulation. Nevertheless, these statements must be supported by quantitative results based on experimentation. To the best of our knowledge, no research has developed a comprehensive survey on reward functions for VRPs, particularly addressing their design choices, stability implications, and impact on policy generalization. Such a study could provide valuable guidelines for selecting or tuning reward structures in DRL-based VRP solvers.
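The contrast between the two reward designs can be sketched as follows. Since Equation (6) is not restated in this section, the additive form below (negative sum of travel time and accumulated delay) is an assumption about its general shape, and the weighted variant, including its parameter names `w_travel`, `w_delay`, `travel_scale`, and `delay_scale`, is a hypothetical example of a normalized weighted sum rather than the exact expression that was tested.

```python
def additive_reward(travel_time, delay):
    """Assumed additive form: both terms in minutes, no tunable parameters."""
    return -(travel_time + delay)


def weighted_reward(travel_time, delay, w_travel, w_delay,
                    travel_scale, delay_scale):
    """Hypothetical normalized weighted sum with four extra hyperparameters:
    two weights and two normalization constants."""
    return -(w_travel * travel_time / travel_scale
             + w_delay * delay / delay_scale)


# Hypothetical episode outcome: 250 min of travel and 10 min of delay.
r_additive = additive_reward(250.0, 10.0)
r_weighted = weighted_reward(250.0, 10.0, w_travel=0.5, w_delay=0.5,
                             travel_scale=500.0, delay_scale=100.0)
```

The additive form keeps both objectives in the same physical unit, whereas the weighted form changes the relative importance of delays whenever any of its four constants is modified, which is one way the tuning burden mentioned above arises.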

It is also important to acknowledge the limitations of this work regarding comparability. In particular, comparisons with other DRL approaches are inherently constrained, as existing methods often address different VRP variants with distinct constraints and operational conditions, making direct benchmarking non-trivial. A systematic head-to-head evaluation across DRL models under standardized conditions remains an open and valuable direction for future research, though it would require substantial effort to adapt and train different NNMs on a common test formulation. In the case of the CVRP, for example, such cross-model evaluations have been pursued by Nazari et al. [18], Kool et al. [17], and Kwon et al. [16]. Bono et al. [19] also claim to perform this type of comparison between their proposal and the AM; however, details regarding the adaptation process are not provided in the manuscript, which limits reproducibility and interpretability of their results.

Other immediate extensions of this proposal include evaluating the model on real-world instances. In this context, publicly available benchmarks derived from historical data, such as TSPLib [46] or CVRPLib [47], provide valuable resources for standardized testing. Alternatively, constructing a custom dataset tailored to a specific operational context would allow direct assessment of the model’s impact on real transportation system performance. Furthermore, the stochastic components used in instance generation can be refined by aligning their distributions with empirical studies focused on dataset design. Fachini et al. [22] offer a dataset based on several previous contributions, combining them effectively.

Summarizing, the results demonstrate that the POMO-DC algorithm can effectively address stochastic variants of the VRP—a significant advancement given the strong inductive biases embedded in its design. In particular, the integration of a richer context within the decoding process provides a simple and adaptable mechanism for incorporating dynamic aspects of the environment. This design enables the model to track cumulative metrics such as travel time and delays, which can be directly leveraged in the reward function.
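As a rough illustration of how a dynamic context can track such cumulative metrics during decoding, the sketch below updates the elapsed travel time and the accumulated delay after each visited customer. The class `DynamicContext`, its fields, and the `as_features` method are hypothetical names chosen for this example; the actual context used by POMO-DC may contain other quantities and is combined with the attention-based decoder in ways not shown here.

```python
from dataclasses import dataclass


@dataclass
class DynamicContext:
    """Cumulative quantities carried along one partial route (hypothetical)."""
    elapsed_time: float = 0.0
    accumulated_delay: float = 0.0

    def step(self, realized_travel_time, deadline):
        """Update the context after travelling to the next customer.

        realized_travel_time : travel time observed for this leg (in a
                               stochastic setting, a sampled value)
        deadline             : latest on-time arrival for that customer
        """
        self.elapsed_time += realized_travel_time
        self.accumulated_delay += max(0.0, self.elapsed_time - deadline)
        return self

    def as_features(self):
        """Values that could be appended to the decoder input at each step."""
        return (self.elapsed_time, self.accumulated_delay)


# Two hypothetical legs of 10 min each, both customers due by minute 15.
context = DynamicContext()
context.step(10.0, 15.0)
context.step(10.0, 15.0)
features = context.as_features()
```

Because the same two quantities appear in the reward, keeping them in the context gives the policy direct access to the information on which it is ultimately evaluated.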

6. Conclusions

This work addresses the SCVRPSTD. The formulation is solved using an attention-based DL model trained through RL. The proposed model, POMO-DC, is a novel extension of the POMO approach, which integrates a dynamic context mechanism to capture travel times and delivery delays. By enriching the state representation and modifying the transition and reward dynamics, the model effectively handles uncertainty and enforces time constraints in a stochastic environment.

Empirical results show that POMO-DC achieves competitive travel times while significantly outperforming classical metaheuristics in delay management. This demonstrates the potential of DRL models to balance efficiency and schedule adherence in complex routing scenarios. Nevertheless, the high delay values observed in large single-vehicle instances highlight the need for multi-vehicle coordination. The study also suggests that simpler reward formulations can yield more stable learning in stochastic VRP settings, though systematic experimentation is required to validate this hypothesis.

Several directions emerge for future research. First, incorporating multi-vehicle coordination within the training framework would address the limitations of single-vehicle assumptions and improve real-world applicability. Second, exploring additional operational constraints—such as energy use, duration limits, or pickup-and-delivery—could broaden the scope of the proposed model. Third, systematic studies on reward design are needed to assess its role in stability and generalization across VRP variants. Finally, standardized cross-model evaluations and testing on real-world or empirically grounded datasets would enhance comparability, reproducibility, and practical impact.