1. Introduction

Climate change has intensified the occurrence and severity of environmental hazards, with coastal regions facing some of the most significant impacts. Rising sea levels, storm surges, and accelerated erosion threaten not only fragile ecosystems but also critical infrastructure [1]. Among these vulnerable assets, solar farms have become key components in the global shift toward renewable energy, providing clean and sustainable electricity generation [2,3]. However, because they are frequently placed near coastlines to maximize solar exposure, they face harsh environmental stressors such as salt-induced corrosion, high wind loads, and flooding from extreme weather events [4]. These conditions accelerate wear and degradation, reduce energy efficiency, and create the need for continuous, context-aware monitoring to ensure operational reliability and long-term resilience [5].

Advances in sensing and inspection technologies have greatly improved the ability to monitor such infrastructure. Internet of Things (IoT) sensor networks now offer continuous streams of environmental and operational data, capturing parameters such as temperature, humidity, wind speed, and energy output, and providing real-time insight into system performance [6]. In parallel, uncrewed aerial vehicles (UAVs) have emerged as a flexible and effective tool for high-resolution inspection, capable of detecting structural faults such as cracks, hotspots, and corrosion quickly and precisely [7]. Despite these individual strengths, IoT- and UAV-based systems are often deployed in isolation, resulting in fragmented datasets that do not fully reflect the interdependencies between environmental stressors and the physical health of infrastructure [8].

The concept of multimodal data fusion, that is, combining heterogeneous data from multiple sources, has gained traction across fields such as smart city management, disaster response, and environmental hazard detection [9]. Integrating diverse modalities enables richer representations, improves predictive accuracy, and supports more informed decision-making. However, applying multimodal fusion to coastal solar farm monitoring introduces specific challenges. Environmental sensor data and UAV imagery differ in spatial resolution, temporal sampling rate, and statistical properties, creating significant heterogeneity. Coastal environments also change rapidly, demanding fusion strategies that adapt in real time while remaining robust to variability. Furthermore, the distributed nature of solar farms often means that raw data cannot be centralized due to privacy requirements, limited communication bandwidth, or local policy restrictions [10].

Recent advances in deep learning and transformer-based architectures have shown strong potential for handling both structured sensor data and unstructured imagery, with attention mechanisms excelling at modeling cross-modal dependencies [11]. Yet most existing methods remain limited to single-modality inputs or depend on centralized training, restricting their scalability and flexibility in geographically dispersed monitoring scenarios [12,13]. In addition, many lack explainability, making it difficult for stakeholders to validate outputs and trust automated recommendations, an essential requirement in safety-critical and high-value infrastructure domains.

To address these gaps, this work presents a privacy-preserving multimodal data fusion framework that combines IoT-derived environmental telemetry, UAV-based high-resolution imagery, and geospatial metadata into a unified platform for coastal vulnerability and solar farm infrastructure assessment. The framework employs hybrid feature optimization to align the differing characteristics of each data modality, followed by an attention-guided fusion mechanism that models the interdependencies between environmental and visual indicators. Embedding these components in a federated learning environment allows model training to occur across multiple distributed sites without sharing raw data, thereby safeguarding sensitive information. In this way, the approach tackles both the fragmentation of multimodal analysis and the absence of privacy-aware, interpretable frameworks for assessing coastal solar infrastructure: it reduces data heterogeneity, improves model transparency, and supports scalable deployment. The result is accurate, scalable, and interpretable monitoring that links environmental hazards to infrastructure health and supports resilient, sustainable management of coastal energy systems. The research gaps that motivate this work and its main contributions are summarized below.

Research Gaps and Contributions

Despite progress in multimodal learning and coastal infrastructure monitoring, several important research gaps remain:

Research Gaps:

Fragmented analysis: Most existing studies process IoT telemetry, UAV imagery, and geospatial data separately, which leads to fragmented insights and prevents a holistic understanding of coastal vulnerability.

Heterogeneity and variability: Differences in sampling rates, spatial resolution, and statistical properties across modalities introduce complexity and make it difficult to achieve seamless multimodal fusion.

Scalability and privacy limitations: Many existing approaches rely on centralized training, which does not scale well and is unsuitable for distributed monitoring scenarios where privacy and bandwidth constraints are critical.

Limited interpretability: Many multimodal models still function as black boxes, offering limited transparency and making it hard for stakeholders to validate predictions in high-stakes applications.

Inefficient hyperparameter tuning: Conventional tuning methods are often slow, unstable, and computationally expensive in federated environments, which hampers convergence and practical deployment.

Contributions:

Introduction of Q-MobiGraphNet, a quantum-inspired multimodal framework that unifies IoT data, UAV imagery, and geospatial information to overcome fragmentation and capture both spatial and temporal dependencies.

Development of the Multimodal Feature Harmonization Suite (MFHS), a preprocessing pipeline that standardizes, synchronizes, and aligns diverse modalities, reducing heterogeneity and enabling reliable multimodal fusion in federated learning settings.

Design of the Q-SHAPE explainability module, which extends Shapley value analysis with quantum-weighted sampling to provide transparent, interpretable, and stable explanations of feature importance.

Proposal of the Hybrid Jellyfish–Sailfish Optimization (HJFSO) algorithm, which balances exploration and exploitation to achieve faster convergence, improved stability, and lower computational cost compared with traditional methods.

Deployment of a privacy-preserving monitoring framework for coastal renewable infrastructures, ensuring scalability, interpretability, and resource efficiency in managing solar farms under climate-induced environmental stressors.

The remainder of this article is structured as follows.

Section 2 reviews related work on multimodal data fusion, coastal monitoring, and infrastructure assessment, with an emphasis on current advances and unresolved challenges.

Section 3 describes the proposed methodology, including the harmonization pipeline, the Q-MobiGraphNet architecture, and the optimization strategies for federated deployment.

Section 4 presents experimental results, comparative evaluations, ablation studies, and interpretability analyses that demonstrate the effectiveness of the framework.

Section 5 concludes the paper and discusses future research directions aimed at enhancing scalability, robustness, and practical applicability in coastal renewable infrastructure monitoring.

2. Related Work

Multimodal data fusion improves environmental threat assessment and infrastructure monitoring. Combining IoT-based environmental sensing, UAV imaging, and geospatial metadata provides a fuller situational picture and enables informed decision-making in coastal risk and solar farm infrastructure contexts. Single-modality systems often fail to capture the complex relationships between environmental dynamics, structural integrity, and spatial risk, especially in rapidly changing coastal zones. Recent research has applied deep learning, transformer-based fusion, and cross-domain feature alignment to merge time-series sensor data with high-resolution UAV imagery, aiming to address data heterogeneity, spatiotemporal variability, and distributed deployment constraints.

DeepLCZChange [14] uses a deep change-detection network to examine urban cooling impacts using LiDAR and Landsat surface temperature data. By capturing vegetation-driven microclimate trends, the method adds geographic specificity to climate resilience mapping. While successful for urban analysis, it lacks IoT telemetry, UAV imagery, and coastal hazard data, limiting its applicability to monitoring infrastructure risk. Nevertheless, it demonstrates the benefits of multimodal geospatial fusion and, through its omissions, the need for IoT time-series inputs and UAV-based coastline and solar farm inspections. The taxonomy in [15] categorizes deep fusion strategies into feature-, alignment-, contrast-, and generation-based approaches with a focus on urban data integration; it offers a helpful framework for choosing modality fusion approaches but does not cover infrastructure monitoring or environmental threat forecasting. In the proposed methodology, a Hybrid Feature Selector and Extractor (HyFSE) is used to efficiently integrate IoT time series, UAV imagery, and geospatial metadata for federated learning-based coastal and solar infrastructure assessment.

In [16], a flood resilience platform uses community-scale and infrastructure-mounted sensors to alert to potential hazards. The system responds swiftly to localized flooding but lacks UAV-based damage assessment, solar asset monitoring, and a federated framework for multi-site privacy and scalability. In this work, UAV imaging and IoT-driven solar performance indicators are combined in a privacy-conscious multimodal model for hazard detection and infrastructure health evaluation. The work in [17] uses a Transformer-based architecture to combine multimodal time-series and image data for smart city applications; its attention layers model inter-modal interactions and improve prediction accuracy. However, UAV–IoT synchronization, cross-modal feature selection for coastal or solar applications, and federated learning compatibility are not supported. In the proposed system, HyFSE-based feature refinement and federated deployment enable scalable operation across geographically dispersed infrastructure sites.

The LRVFNet model [18] fuses LiDAR, radar, and optical data to enable occlusion-robust object detection in autonomous driving, with attention mechanisms improving performance under challenging conditions. Its multimodal integration is strong, but it focuses on vehicle perception rather than infrastructure health. In this work, attention-guided fusion of IoT data, UAV imagery, and geographic features is used to predict coastal vulnerability and evaluate solar farm performance. In [19], MMFnet blends altimeter and scatterometer data with ConvLSTM and LSTM networks to predict sea level anomalies, and integrating several sources improves temporal forecasting. Although important to oceanography, it lacks UAV-based structural inspection and IoT-derived infrastructure operational data. This work extends the idea by integrating environmental forecasts into a coastal infrastructure and solar farm risk assessment framework within a federated learning architecture.

In [20], UAV RGB images and a CNN-SVM pipeline are used to classify multi-class PV defects. The method's accuracy across various defect types demonstrates the potential of UAV imagery for asset monitoring, but its visual-only approach restricts diagnostic insight. The proposed design combines UAV-based fault detection with IoT performance indicators to better understand solar farm health under environmental stressors. In [21], an AE-LSTM method detects anomalies in PV telemetry without labeled datasets, making it well suited to early warning; however, UAV visual verification and geospatial context are still needed to locate and diagnose issues. The proposed approach couples IoT anomaly signals with UAV imagery and spatial mapping to localize coastal solar faults precisely.

In [22], texture-based analysis of infrared thermography images, using GLCM features and SVM classification, is applied to detect PV faults. This interpretable technique identifies faults but lacks the scalability of deep learning and multimodal integration. The present approach combines explainable AI with multimodal fusion of IoT, UAV, and GIS data to provide both interpretability and infrastructure-level assessment. In [23], the authors train U-Net and DeepLabV3+ models on UAV thermal imagery and achieve high Dice scores for PV fault segmentation. Despite accurate segmentation, the approach uses only images and ignores environmental and operational data; in this work, segmentation is combined with IoT-based performance analysis to provide integrated assessments for rapid repairs and long-term resilience planning. The CNN model in [24] is well suited to UAV-based PV defect detection and provides high classification throughput, but its diagnostic scope is limited by single-modality input. Merging IoT operational data with hazard indicators supplies context-aware insights for coastal solar farms. In [25], a lightweight CNN with transfer learning achieves near-perfect accuracy in PV defect classification at minimal computational cost, but it lacks multi-site flexibility and federated learning. Here, such efficiency gains are used within a federated training paradigm to enable privacy-preserving adaptation across multiple locales. In distribution networks, cooperative AC/DC control strategies have been proposed to mitigate voltage violations [26], highlighting the importance of coordinated optimization in energy systems.

The authors of [27] proposed ppFDetector, a semi-supervised GAN-based method that accurately identifies abnormal PV states without substantial labeling; however, its imagery-only approach limits contextual insight. The proposed solution correlates abnormalities with environmental and operational characteristics using UAV and IoT streams. According to [28], the ICNM model is optimized for real-time fault detection in terms of both speed and accuracy, but it lacks UAV-based validation and multimodal capabilities. In this work, the HyFSE pipeline combines the most informative UAV, IoT, and GIS features for efficient, comprehensive monitoring. The skip-connected SC-DeepCNN in [25] improves hotspot localization in PV imagery but relies on manual region proposals and disregards IoT data; instead of manual assessment, the proposed system uses automated cross-modal fusion to evaluate defects and their operational consequences. The refined VGG-16 model in [29] performs well in supervised PV fault classification but is limited to centralized training and lacks privacy protection. The federated learning system in this work addresses these limitations while maintaining accurate detection for coastal and solar infrastructure.

Multimodal fusion has advanced in urban analytics, autonomous driving, photovoltaic defect detection, and oceanographic forecasting. As shown in Table 1, however, most techniques are limited to a single modality, restricted to a specific domain, or lack an integrated UAV–IoT–geospatial pipeline for coastal and solar infrastructure monitoring. Federated learning paradigms, which protect data privacy across geographically dispersed sites, are rarely used in these works. This gap highlights the need for a unified, privacy-aware framework that can integrate disparate data sources and meet the spatiotemporal complexity and operational needs of coastal hazard assessment and solar farm asset appraisal. The proposed solution addresses these deficiencies through cross-modal feature selection, explainable AI, and scalable federated deployment.

Table 2 presents a side-by-side comparison of Q-MobiGraphNet with recent state-of-the-art methods, illustrating how the proposed framework differs in breadth of modality integration, interpretability, computational efficiency, and support for privacy-preserving scalability.

3. Proposed Methodology

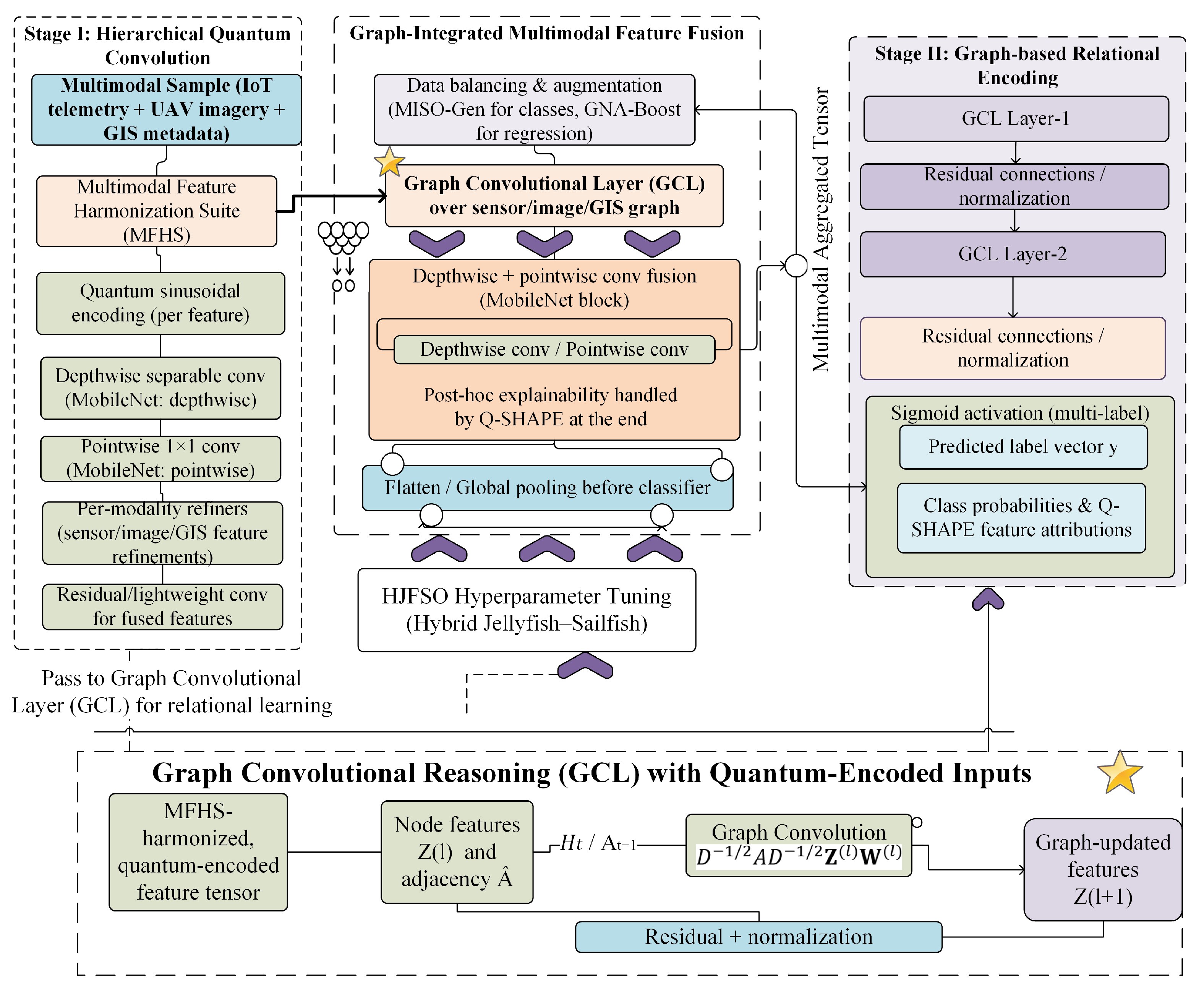

This section presents the proposed framework, Q-MobiGraphNet, a quantum-inspired, multimodal, and federated classification model for coastal vulnerability prediction and solar infrastructure assessment. The methodology begins with multimodal data collection and preprocessing, supported by the Multimodal Feature Harmonization Suite (MFHS), which standardizes and aligns IoT telemetry, UAV imagery, and geospatial metadata. The harmonized inputs are then processed by the Q-MobiGraphNet architecture, which combines quantum sinusoidal encoding, lightweight MobileNet-based convolution, and graph convolutional reasoning to capture spatiotemporal and structural dependencies across modalities. To improve transparency, the Q-SHAPE module provides quantum-weighted Shapley-based feature attribution, while the Hybrid Jellyfish–Sailfish Optimization (HJFSO) strategy tunes the hyperparameters for stable convergence. An overview of the proposed model is shown in Figure 1. The following subsections describe each stage in detail, along with its mathematical formulation, highlighting how the framework enables privacy-preserving, scalable, and interpretable monitoring in federated environments.

3.1. Data Collection and Preprocessing

The dataset used in this study was developed jointly by the Norwegian University of Science and Technology (NTNU) and SINTEF Digital. Data were collected over roughly four years, from January 2021 to February 2025, in coastal areas such as Trondheim Fjord, Hitra, and Frøya in central Norway, where pilot-scale coastal solar farms have been deployed [30]. Environmental variables were recorded by IoT-enabled sensor grids installed by SINTEF, while UAV-based aerial surveys conducted by NTNU’s Department of Engineering Cybernetics provided high-resolution imagery. To ensure reliability and consistency across data modalities, all records were anonymized and processed through a standardized preprocessing pipeline. The final dataset, curated and managed by the NTNU Smart Energy and Infrastructure Lab, is hosted in a controlled Kaggle repository. This dataset underpins the modeling of coastal vulnerability and of solar infrastructure performance under diverse environmental conditions. A detailed overview of the dataset features is presented in Table 3.

3.2. Preprocessing and Feature Harmonization Pipeline

To integrate heterogeneous inputs from IoT sensors, UAV imagery, and contextual metadata into Q-MobiGraphNet, a nine-stage preprocessing and harmonization pipeline is designed. This pipeline ensures consistency across modalities, improves robustness to noise, and prepares the data for federated learning [31,32]. The steps are shown in Algorithm 1.

| Algorithm 1 Preprocessing and feature harmonization pipeline. |

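- 1: Input: raw multimodal dataset $\mathcal{D} = \{\mathbf{X}, \mathcal{I}, \mathbf{G}, \mathbf{M}\}$
- 2: $\mathbf{X} \in \mathbb{R}^{N \times F}$: IoT sensor matrix; $\mathcal{I} = \{I_n\}$: UAV images; $\mathbf{G}$: geospatial features; $\mathbf{M}$: metadata
- 3: $\{t_i^{s}\}$: sensor timestamps; $\{t_j^{u}\}$: UAV frame timestamps
- 4: Output: harmonized matrix $\mathbf{Z}$ and per-sample vectors $\mathbf{z}_n$
- 5: Step 1: Z-score normalization (Equation (1))
- 6: for $f = 1$ to $F$ do
- 7: $\mu_f \leftarrow \frac{1}{N}\sum_{n} x_{n,f}$, $\sigma_f \leftarrow \sqrt{\frac{1}{N}\sum_{n}(x_{n,f} - \mu_f)^2}$
- 8: $x'_{n,f} \leftarrow (x_{n,f} - \mu_f)/\sigma_f$
- 9: end for
- 10: Step 2: Range scaling (Equation (2))
- 11: for each bounded feature $f$ do
- 12: $x_f^{\min} \leftarrow \min_n x_{n,f}$, $x_f^{\max} \leftarrow \max_n x_{n,f}$
- 13: $\hat{x}_{n,f} \leftarrow (x_{n,f} - x_f^{\min})/(x_f^{\max} - x_f^{\min})$
- 14: end for
- 15: Step 3: UAV preprocessing (Equation (3))
- 16: for each $I_n \in \mathcal{I}$ do
- 17: Resize to $H \times W$, grayscale, equalize
- 18: Extract $\mathbf{u}_n = [v_n, d_n, a_n, o_n]$
- 19: end for
- 20: Step 4: One-hot encode metadata (Equation (4))
- 21: $\mathbf{m}_n \leftarrow \mathrm{onehot}(c_n)$
- 22: Step 5: Temporal sync (Equation (5))
- 23: $j^{*}(i) \leftarrow \arg\min_{j} |t_i^{s} - t_j^{u}|$
- 24: Step 6: Missing values (Equations (6) and (7))
- 25: Interpolate continuous; mode-impute categorical
- 26: Step 7: Low-variance removal (Equation (8))
- 27: Discard $f$ if $\mathrm{Var}(\mathbf{x}_f) < \delta$
- 28: Step 8: Balancing (Equations (9) and (10))
- 29: Apply MISO-Gen (classification) or GNA-Boost (regression)
- 30: Step 9: Fusion (Equation (11))
- 31: $\mathbf{z}_n \leftarrow [\mathbf{x}_n \,\Vert\, \mathbf{u}_n \,\Vert\, \mathbf{g}_n \,\Vert\, \mathbf{m}_n]$
- 32: $\mathbf{Z} \leftarrow [\mathbf{z}_1, \dots, \mathbf{z}_N]^{\top}$
- 33: Return $\mathbf{Z}$ and $\{\mathbf{z}_n\}$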

|

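The pipeline starts from the environmental sensor readings $\mathbf{X} \in \mathbb{R}^{N \times F}$, where $N$ is the number of samples and $F$ is the number of features [33]. Z-score normalization was applied to reduce scale bias and stabilize optimization:

$$x'_{n,f} = \frac{x_{n,f} - \mu_f}{\sigma_f} \tag{1}$$

where $\mu_f$ and $\sigma_f$ are the mean and standard deviation of feature $f$. This places all features on a comparable scale, so that no feature dominates training merely because of its units. Next, features with natural operational bounds (e.g., irradiance, voltage, current) were rescaled to the $[0,1]$ range using gradient-aware range indexing:

$$\hat{x}_{n,f} = \frac{x_{n,f} - x_f^{\min}}{x_f^{\max} - x_f^{\min}} \tag{2}$$

where $x_f^{\min}$ and $x_f^{\max}$ are the minimum and maximum observed values of feature $f$. This preserved relative magnitudes over time while allowing direct comparison across modalities.

For UAV imagery, each RGB frame $I_n$ was resized to $H \times W$, converted to grayscale, and enhanced using histogram equalization [34,35]:

$$I'_n(p,q) = \operatorname{round}\!\left(\frac{L-1}{HW}\sum_{k=0}^{I_n(p,q)} h(k)\right) \tag{3}$$

where $h(k)$ denotes the histogram count of gray level $k$, $L$ is the number of grayscale levels, and $H$ and $W$ are the image height and width. From these enhanced images, descriptors such as vegetation coverage ($v_n$), degradation score ($d_n$), tilt index ($a_n$), and shadow occlusion ($o_n$) were extracted and embedded into a UAV-specific vector $\mathbf{u}_n = [v_n, d_n, a_n, o_n]$. Categorical metadata (e.g., terrain class, panel type) were transformed into one-hot encodings:

$$m_{n,c} = \begin{cases} 1 & \text{if } c_n = c \\ 0 & \text{otherwise} \end{cases}, \qquad c \in \mathcal{C} \tag{4}$$

This retained semantic meaning while making the data machine-readable.

To synchronize modalities with different sampling rates, a nearest-frame alignment scheme was applied. For each sensor timestamp $t_i^{s}$, the closest UAV frame $j^{*}(i)$ was selected:

$$j^{*}(i) = \arg\min_{j} \left| t_i^{s} - t_j^{u} \right| \tag{5}$$

which ensures temporal coherence between sensor and image data. Missing values were then addressed. Continuous gaps were filled by linear interpolation between the nearest observed timestamps $t_a < t < t_b$ [36]:

$$x(t) = x(t_a) + \frac{t - t_a}{t_b - t_a}\bigl(x(t_b) - x(t_a)\bigr) \tag{6}$$

while categorical gaps were imputed using mode-based inference:

$$\hat{c} = \arg\max_{c \in \mathcal{C}} \sum_{n=1}^{N} \mathbb{1}[c_n = c] \tag{7}$$

where $\mathcal{C}$ is the set of possible categories. Low-variance features were removed to reduce redundancy and improve interpretability; any feature whose variance fell below the threshold $\delta$ was discarded:

$$\operatorname{Var}(\mathbf{x}_f) = \frac{1}{N}\sum_{n=1}^{N}\left(x_{n,f} - \mu_f\right)^2 < \delta \;\Rightarrow\; \text{discard } f \tag{8}$$

This step improved sparsity and reduced noise in the feature space.

To address class imbalance, two complementary strategies were used [37,38]. For classification, the Minority Synthetic Oversampling Generator (MISO-Gen) created synthetic minority samples:

$$\mathbf{x}_{\text{new}} = \mathbf{x}_i + \lambda\left(\mathbf{x}_{\text{nn}} - \mathbf{x}_i\right), \qquad \lambda \sim \mathcal{U}(0,1) \tag{9}$$

where $\mathbf{x}_i$ is a minority sample and $\mathbf{x}_{\text{nn}}$ its nearest neighbor. For regression tasks, Gaussian Noise Augmentation (GNA-Boost) introduced controlled perturbations:

$$\tilde{\mathbf{x}} = \mathbf{x} + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim \mathcal{N}\!\left(\mathbf{0}, \sigma_{\text{aug}}^{2}\mathbf{I}\right) \tag{10}$$

This balanced the dataset while improving generalization. Finally, all modality-specific features were concatenated into a single multimodal representation:

$$\mathbf{z}_n = \left[\mathbf{x}_n \,\Vert\, \mathbf{u}_n \,\Vert\, \mathbf{g}_n \,\Vert\, \mathbf{m}_n\right], \qquad \mathbf{Z} = \left[\mathbf{z}_1, \dots, \mathbf{z}_N\right]^{\top} \tag{11}$$

where $\mathbf{x}_n$ denotes normalized sensor features, $\mathbf{u}_n$ UAV-derived metrics, $\mathbf{g}_n$ geospatial metadata, and $\mathbf{m}_n$ contextual encodings. The resulting matrix $\mathbf{Z}$ provided the harmonized multimodal input for Q-MobiGraphNet.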
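The numerical steps of Algorithm 1 can be sketched in NumPy as below. This is a minimal illustration, not the authors' pipeline: all function names are hypothetical, Step 2 assumes a $[0,1]$ target range, `interpolate_minority` is a SMOTE-style stand-in for MISO-Gen (whose exact procedure is not given), and extraction of the UAV descriptors (vegetation coverage, degradation score, tilt index, shadow occlusion) is omitted.

```python
import numpy as np

def zscore(X, eps=1e-12):
    """Step 1 / Eq. (1): column-wise z-score normalization."""
    return (X - X.mean(axis=0)) / (X.std(axis=0) + eps)

def minmax(x, eps=1e-12):
    """Step 2 / Eq. (2): rescale one bounded feature to [0, 1] (assumed range)."""
    return (x - x.min()) / (x.max() - x.min() + eps)

def equalize_hist(img, levels=256):
    """Step 3 / Eq. (3): histogram equalization of a uint8 grayscale image."""
    hist = np.bincount(img.ravel(), minlength=levels)    # h(k)
    cdf = np.cumsum(hist)                                 # running sum of h(k) up to each level
    lut = np.round((levels - 1) * cdf / img.size).astype(np.uint8)
    return lut[img]                                       # map every pixel through the lookup table

def one_hot(codes, n_categories):
    """Step 4 / Eq. (4): integer category codes -> one-hot rows."""
    return np.eye(n_categories)[codes]

def nearest_frame(t_sensor, t_uav):
    """Step 5 / Eq. (5): index of the closest UAV frame for each sensor timestamp."""
    return np.abs(t_sensor[:, None] - t_uav[None, :]).argmin(axis=1)

def interpolate_missing(t, x):
    """Step 6 / Eq. (6): fill NaN gaps in a continuous series by linear interpolation."""
    out = x.copy()
    missing = np.isnan(x)
    out[missing] = np.interp(t[missing], t[~missing], x[~missing])
    return out

def drop_low_variance(X, delta):
    """Step 7 / Eq. (8): keep only columns whose variance is at least delta."""
    keep = X.var(axis=0) >= delta
    return X[:, keep], keep

def interpolate_minority(X_min, n_new, rng):
    """Step 8 / Eq. (9), SMOTE-style: x_i + lam * (x_nn - x_i), lam ~ U(0, 1)."""
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)                        # a sample is not its own neighbour
    nn = dist.argmin(axis=1)
    idx = rng.integers(0, len(X_min), size=n_new)
    lam = rng.random((n_new, 1))
    return X_min[idx] + lam * (X_min[nn[idx]] - X_min[idx])

def gaussian_augment(X, scale, rng):
    """Step 8 / Eq. (10): additive Gaussian perturbation for regression data."""
    return X + rng.normal(0.0, scale, size=X.shape)

def fuse(x, u, g, m):
    """Step 9 / Eq. (11): concatenate per-sample modality blocks column-wise."""
    return np.concatenate([x, u, g, m], axis=1)
```

Each helper maps to one numbered equation, so individual steps can be unit-tested or swapped (for example, replacing the SMOTE-style generator) without touching the rest of the pipeline.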

3.3. Proposed Classification Model: Q-MobiGraphNet

To enable robust and interpretable coastal vulnerability prediction and solar infrastructure assessment, Q-MobiGraphNet is introduced. This quantum-inspired hybrid classification architecture integrates temporal, spatial, and contextual learning across multimodal data sources, as shown in Figure 2. The model begins with a quantum sinusoidal encoding layer, which transforms each feature $z_k$ of the preprocessed input vector $\mathbf{z} \in \mathbb{R}^{D}$ into a phase-based signal using a dual sinusoidal function. This representation, designed to capture periodicity and nonlinearity, is defined as:

$$\mathbf{q}_k = \left[\sin\!\left(\frac{z_k}{\omega^{2k/D}}\right),\; \cos\!\left(\frac{z_k}{\omega^{2k/D}}\right)\right], \qquad k = 0, \dots, D-1$$

where $\omega$ is a scaling constant (empirically set to 10,000) and $D$ denotes the feature dimensionality. This encoding enhances the expressiveness of both continuous and categorical data by injecting quantum-like diversity into the feature space.
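A minimal NumPy sketch of the encoding layer follows. It assumes, as a labeled reconstruction, that the dual sinusoidal function produces a sine and a cosine per feature with frequency $\omega^{-2k/D}$ (the transformer-style form that the constant 10,000 suggests), and that the two halves are concatenated; the function name is hypothetical.

```python
import numpy as np

def quantum_sinusoidal_encoding(z, omega=10_000.0):
    """Encode each feature z_k as (sin(z_k / omega**(2k/D)), cos(z_k / omega**(2k/D))).

    Works on a single vector (D,) or a batch (N, D); returns (..., 2D) with all
    sines first and all cosines second (an assumed layout).
    """
    z = np.asarray(z, dtype=float)
    D = z.shape[-1]
    k = np.arange(D)
    angles = z / omega ** (2.0 * k / D)   # per-feature phase, lower frequency for larger k
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
```

Because each sine and cosine pair lies on the unit circle, the encoded values stay bounded in $[-1, 1]$ regardless of the input scale.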

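The encoded feature set is then passed through a depthwise separable convolutional module inspired by MobileNet to extract local spatial patterns. The depthwise convolution is applied independently to each input channel, enabling lightweight filtering:

$$\mathbf{h}_i = \sigma\!\left(\mathbf{K}_i * \mathbf{q}_i\right)$$

where $*$ denotes convolution, $\mathbf{K}_i$ is the kernel for channel $i$, and $\sigma$ represents the Swish activation function:

$$\sigma(x) = x \cdot \frac{1}{1 + e^{-x}}$$

This is followed by a pointwise $1 \times 1$ convolution to recombine feature maps across channels:

$$\mathbf{P} = \sigma\!\left(\mathbf{W}_p \mathbf{H}_{\mathrm{dw}}\right)$$

where $\sigma$ is again the Swish function, $\mathbf{H}_{\mathrm{dw}}$ stacks the depthwise outputs $\mathbf{h}_i$, and $\mathbf{W}_p$ represents the pointwise weights. Together, these two steps balance computational efficiency with feature richness.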
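The depthwise-then-pointwise structure can be illustrated with a small NumPy sketch. It assumes a 1D layout (channels × sequence length), "same"-length padding, and no bias terms; the function names are hypothetical, and a production model would use a framework layer instead.

```python
import numpy as np

def swish(x):
    """Swish activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))

def depthwise_conv1d(q, kernels):
    """Convolve each channel q[i] with its own kernel K_i ('same' output length)."""
    return np.stack([np.convolve(q[i], kernels[i], mode="same") for i in range(q.shape[0])])

def depthwise_separable(q, kernels, W_p):
    """Depthwise conv + Swish, then pointwise 1x1 conv (channel mixing) + Swish.

    q: (C, L) input channels, kernels: (C, k), W_p: (C_out, C).
    """
    H_dw = swish(depthwise_conv1d(q, kernels))   # per-channel filtering
    return swish(W_p @ H_dw)                     # 1x1 conv = matrix product over channels

def param_counts(C, C_out, k):
    """Weight counts (no biases): separable block vs. a standard conv of the same shape."""
    return C * k + C * C_out, C * C_out * k
```

For example, with 32 input channels, 64 output channels, and kernel width 3, the separable block needs 2,144 weights versus 6,144 for a standard convolution, which is the efficiency argument behind the MobileNet-style design.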

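To model structural relationships across spatial or temporal domains, such as UAV image tiles, sensor node interactions, or temporal sequences, a graph convolutional layer (GCL) is employed. The convolution operates over a constructed graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where each node represents a learned segment and edges encode neighborhood dependencies. The GCL propagates feature information using:

$$\mathbf{H}^{(l+1)} = \mathrm{LeakyReLU}\!\left(\tilde{\mathbf{D}}^{-1/2}\tilde{\mathbf{A}}\tilde{\mathbf{D}}^{-1/2}\mathbf{H}^{(l)}\mathbf{W}^{(l)}\right)$$

where $\tilde{\mathbf{A}} = \mathbf{A} + \mathbf{I}$ is the adjacency matrix including self-loops, $\tilde{\mathbf{D}}$ is the degree matrix of $\tilde{\mathbf{A}}$, $\mathbf{W}^{(l)}$ denotes the learnable weights at layer $l$, and LeakyReLU is the activation function. This enables the model to learn higher-order interactions beyond local convolutions.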
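A single graph-convolution step with the symmetric normalization described above can be sketched as follows; the dense-matrix form, the LeakyReLU slope of 0.01, and the function names are assumptions chosen for readability rather than details from the paper.

```python
import numpy as np

def leaky_relu(x, slope=0.01):
    """LeakyReLU with an assumed negative slope of 0.01."""
    return np.where(x > 0, x, slope * x)

def gcn_layer(H, A, W, slope=0.01):
    """One step: LeakyReLU(D^-1/2 (A + I) D^-1/2 H W) on dense matrices.

    H: (nodes, in_features), A: (nodes, nodes) adjacency, W: (in_features, out_features).
    """
    A_tilde = A + np.eye(A.shape[0])             # add self-loops
    deg = A_tilde.sum(axis=1)                    # node degrees of A_tilde
    D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    A_hat = D_inv_sqrt @ A_tilde @ D_inv_sqrt    # symmetric normalization
    return leaky_relu(A_hat @ H @ W, slope)
```

Stacking several such layers lets information travel several hops along the graph, which is how interactions beyond a local convolutional window are captured.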

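The graph-encoded features are then flattened into a single vector $\mathbf{h}_G$ and passed through a fully connected classification layer to compute the raw logits:

$$\mathbf{o} = \mathbf{W}_o \mathbf{h}_G + \mathbf{b}_o$$

where $\mathbf{W}_o$ and $\mathbf{b}_o$ denote the weights and biases of the classifier. For multi-label outputs, a sigmoid activation is applied independently to each class score:

$$\hat{y}_i = \frac{1}{1 + e^{-o_i}}$$

The model is trained using the binary cross-entropy loss, which penalizes incorrect predictions across all output dimensions:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{C}\sum_{i=1}^{C}\left[y_i \log \hat{y}_i + (1 - y_i)\log\left(1 - \hat{y}_i\right)\right]$$

where $C$ is the number of target classes and $y_i \in \{0,1\}$ is the ground-truth label for class $i$.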
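The classification head, per-class sigmoid, and multi-label binary cross-entropy can be sketched in a few lines of NumPy. The function names are hypothetical, and the probability clipping is a common numerical safeguard added here, not something stated in the text.

```python
import numpy as np

def classifier_head(h, W_o, b_o):
    """Raw logits o = W_o h + b_o."""
    return W_o @ h + b_o

def sigmoid(o):
    """Independent per-class sigmoid for multi-label outputs."""
    return 1.0 / (1.0 + np.exp(-o))

def multilabel_bce(logits, y, eps=1e-12):
    """Mean binary cross-entropy over C independent labels."""
    p = np.clip(sigmoid(logits), eps, 1.0 - eps)   # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))
```

With all logits at zero every class is predicted with probability 0.5, so the loss equals $\ln 2 \approx 0.693$ regardless of the labels, a convenient sanity check for an untrained head.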

To make predictions interpretable, Q-MobiGraphNet integrates Q-SHAPE, a quantum-inspired explainability module that extends the concept of Shapley values through quantum-weighted sampling. Each feature's contribution $\phi_k$ is estimated using:

$$\phi_k = \sum_{S \subseteq \mathcal{K} \setminus \{k\}} \frac{|S|!\,\left(|\mathcal{K}| - |S| - 1\right)!}{|\mathcal{K}|!}\left[f\!\left(S \cup \{k\}\right) - f(S)\right]$$

where $\mathcal{K}$ is the full feature set and $f(S)$ denotes the model output using only feature subset $S$. Q-SHAPE approximates this formulation using simulated amplitude encoding and phase-based sampling to prioritize features efficiently. The steps of the proposed method are shown in Algorithm 2.

| Algorithm 2 Q-MobiGraphNet: quantum-driven multimodal classification framework. |

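- 1: Input: preprocessed feature vector $\mathbf{z} \in \mathbb{R}^{D}$
- 2: Output: predicted label vector $\hat{\mathbf{y}} \in [0,1]^{C}$
- 3: // Quantum Sinusoidal Encoding
- 4: for each feature $z_k$ in $\mathbf{z}$ do
- 5: $\mathbf{q}_k \leftarrow \left[\sin\left(z_k/\omega^{2k/D}\right), \cos\left(z_k/\omega^{2k/D}\right)\right]$
- 6: end for
- 7: // Depthwise Separable Convolution (MobileNet)
- 8: for each channel $i$ in $\mathbf{Q}$ do
- 9: $\mathbf{h}_i \leftarrow \sigma\left(\mathbf{K}_i * \mathbf{q}_i\right)$
- 10: end for
- 11: $\mathbf{P} \leftarrow \sigma\left(\mathbf{W}_p \mathbf{H}_{\mathrm{dw}}\right)$
- 12: // Graph Convolution for Relational Learning
- 13: Construct graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ with adjacency matrix $\mathbf{A}$
- 14: $\tilde{\mathbf{A}} \leftarrow \mathbf{A} + \mathbf{I}$, $\tilde{D}_{ii} \leftarrow \sum_j \tilde{A}_{ij}$
- 15: $\mathbf{H}^{(0)} \leftarrow \mathbf{P}$
- 16: for each GCN layer $l$ do
- 17: $\mathbf{H}^{(l+1)} \leftarrow \mathrm{LeakyReLU}\left(\tilde{\mathbf{D}}^{-1/2}\tilde{\mathbf{A}}\tilde{\mathbf{D}}^{-1/2}\mathbf{H}^{(l)}\mathbf{W}^{(l)}\right)$
- 18: end for
- 19: // Fully Connected Classification
- 20: $\mathbf{h}_G \leftarrow \mathrm{flatten}\left(\mathbf{H}^{(L_g)}\right)$
- 21: $\mathbf{o} \leftarrow \mathbf{W}_o \mathbf{h}_G + \mathbf{b}_o$
- 22: for each class $i$ do
- 23: $\hat{y}_i \leftarrow 1/\left(1 + e^{-o_i}\right)$
- 24: end for
- 25: // Binary Cross-Entropy Loss (Training Phase)
- 26: $\mathcal{L}_{\mathrm{BCE}} \leftarrow -\frac{1}{C}\sum_{i=1}^{C}\left[y_i \log \hat{y}_i + (1 - y_i)\log(1 - \hat{y}_i)\right]$
- 27: // Explainability via Q-SHAPE
- 28: for each feature $k$ do
- 29: Approximate $\phi_k$ using quantum-weighted Shapley sampling:
- 30: $\phi_k \approx \sum_{S \subseteq \mathcal{K}\setminus\{k\}} \frac{|S|!\,(|\mathcal{K}| - |S| - 1)!}{|\mathcal{K}|!}\left[f(S \cup \{k\}) - f(S)\right]$
- 31: end for
- 32: Return: $\hat{\mathbf{y}}$, $\mathcal{L}_{\mathrm{BCE}}$, and feature contributions $\{\phi_k\}$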

|
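The Shapley sum used in the Q-SHAPE step grows exponentially with the number of features, which is why sampling-based approximations are needed. The sketch below contrasts the exact sum with plain Monte Carlo permutation sampling. It does not implement Q-SHAPE's quantum-weighted sampling, which is not specified in enough detail to reproduce; the function names and the set-function interface `value(S)` are hypothetical.

```python
import itertools
import math
import numpy as np

def exact_shapley(value, n_features):
    """Exact Shapley values for a set function value(frozenset of feature indices) -> float."""
    phi = np.zeros(n_features)
    for k in range(n_features):
        others = [j for j in range(n_features) if j != k]
        for r in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for every subset of size r
            w = math.factorial(r) * math.factorial(n_features - r - 1) / math.factorial(n_features)
            for S in itertools.combinations(others, r):
                S = frozenset(S)
                phi[k] += w * (value(S | {k}) - value(S))
    return phi

def permutation_shapley(value, n_features, n_samples, rng):
    """Monte Carlo estimate: average marginal contribution over random feature orderings."""
    phi = np.zeros(n_features)
    for _ in range(n_samples):
        S = frozenset()
        v_prev = value(S)
        for k in rng.permutation(n_features):
            S = S | {int(k)}
            v_new = value(S)
            phi[k] += v_new - v_prev    # marginal contribution of k given its predecessors
            v_prev = v_new
    return phi / n_samples
```

For an additive model the two estimates coincide exactly; for models with feature interactions the permutation estimate converges to the exact values as `n_samples` grows, at a cost linear rather than exponential in the number of features.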

In essence, Q-MobiGraphNet provides a unified, interpretable, and edge-efficient framework by combining quantum-encoded inputs, a lightweight convolutional design, structural graph reasoning, and explainable feature attribution. This makes it well suited to real-time, privacy-preserving monitoring of coastal solar infrastructure, where high accuracy and trustworthiness are required.

3.4. Parameter Tuning with HJFSO

Parameter selection is one of the most important factors influencing the performance of metaheuristic algorithms. Common strategies for setting values such as population size, learning rate, or exploration–exploitation weights usually fall into three categories. The first is manual tuning or grid search, where parameters are adjusted through trial and error. While simple, this method can be time-consuming and computationally expensive. The second is relying on fixed defaults suggested in earlier studies, which may work in some cases but often fail to generalize across different problem domains. The third is adaptive or self-adaptive schemes, where parameters are adjusted automatically as the optimization progresses. Beyond these, more advanced techniques such as hyper-heuristics or meta-optimization use one algorithm to tune another, but this tends to add significant complexity and overhead. To address these limitations, our work uses the Hybrid Jellyfish–Sailfish Optimization (HJFSO). This method adaptively balances exploration and exploitation, updating parameters in response to population diversity rather than static rules or manual choices, resulting in a more stable and efficient optimization process.

The performance of the proposed Q-MobiGraphNet for multimodal coastal vulnerability assessment and solar infrastructure evaluation depends not only on the strength of its feature extraction and graph reasoning components but also on the selection of its hyperparameters. While the Hybrid Feature Selector and Extractor (HyFSE) module reduces redundancy and ensures a compact, discriminative input space, the learning process still relies on key parameters such as the learning rate $\eta$, batch size $B$, dropout probability $p$, number of graph convolutional layers $L_g$, quantum sinusoidal encoding scaling factor $\omega$, attention head count $N_h$, and weight decay coefficient $\lambda_{\mathrm{wd}}$, which together form the hyperparameter vector $\boldsymbol{\theta}$. If these parameters are poorly chosen, the model may converge slowly, overfit, or underuse its representational capacity, especially under heterogeneous federated learning conditions.

To navigate this complex, high-dimensional hyperparameter space efficiently, a Hybrid Jellyfish–Sailfish Optimization (HJFSO) strategy is proposed [39,40]. This hybrid method combines the exploratory ability of the Jellyfish Search Optimizer (JSO) with the refinement-focused behavior of the Sailfish Optimizer (SFO). The process alternates between broad exploration of the search space and focused exploitation of promising regions, with the transition between these phases adaptively guided by the diversity of the candidate population.

During the exploration phase, candidate hyperparameter configurations $\boldsymbol{\theta}_i^{t}$ simulate jellyfish movement in ocean currents, drifting either passively or actively. The passive drift update rule is expressed as

$$\boldsymbol{\theta}_i^{t+1} = \boldsymbol{\theta}_i^{t} + \beta\,\xi\left(\boldsymbol{\theta}^{*} - \boldsymbol{\theta}_i^{t}\right)$$

where $\beta$ is the drift intensity, $\xi$ is a uniformly distributed random number in the range $[0,1]$, and $\boldsymbol{\theta}^{*}$ is the best configuration discovered so far. This mechanism keeps exploration stochastic while biasing it toward high-performing solutions.

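In the exploitation phase, the approach emulates the hunting tactics of sailfish attacking sardine swarms: candidate solutions move aggressively toward the best-known solution, with small perturbations to maintain diversity and avoid premature convergence:

$$\boldsymbol{\theta}_i^{t+1} = \boldsymbol{\theta}_i^{t} + r\left(\boldsymbol{\theta}^{*} - \boldsymbol{\theta}_i^{t}\right) + \kappa\,\boldsymbol{\epsilon}, \qquad \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$$

where $r$ is the adaptive attack factor, $\kappa$ controls the perturbation magnitude, and $\boldsymbol{\epsilon}$ denotes Gaussian noise for fine-grained search adjustments.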

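The population diversity metric determines whether to explore or exploit:

$$\Delta^{t} = \frac{1}{P}\sum_{i=1}^{P}\left\lVert \boldsymbol{\theta}_i^{t} - \bar{\boldsymbol{\theta}}^{t}\right\rVert_2$$

where $P$ is the population size and $\bar{\boldsymbol{\theta}}^{t}$ is the mean hyperparameter vector at iteration $t$. If $\Delta^{t}$ exceeds a threshold $\tau$, exploration is prioritized; otherwise, the method shifts to exploitation.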

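The optimization is driven by a multi-objective fitness function that jointly considers accuracy, loss, and computational efficiency:

$$\mathcal{F}(\boldsymbol{\theta}) = \alpha_1\,\mathrm{Accuracy}(\boldsymbol{\theta}) - \alpha_2\,\mathrm{Loss}(\boldsymbol{\theta}) - \alpha_3\,\mathrm{Complexity}(\boldsymbol{\theta})$$

where $\alpha_1$, $\alpha_2$, and $\alpha_3$ are weighting coefficients, and $\mathrm{Complexity}(\boldsymbol{\theta})$ is expressed in terms of floating-point operations (FLOPs) or inference latency on edge devices.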

The flow of the tuning process is shown in Algorithm 3. By iteratively updating candidate solutions through HJFSO, the algorithm converges to a hyperparameter set $\boldsymbol{\theta}^{\mathrm{opt}}$ that balances model accuracy, stability, and deployment efficiency. This tuned configuration is then used in the final training of Q-MobiGraphNet, supporting strong predictive performance under federated, cross-modal, and resource-constrained operating conditions.

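Algorithm 3. HJFSO for Q-MobiGraphNet hyperparameter tuning.

1. Input: population size $P$, maximum iterations $T$, drift intensity $\beta$, base attack factor $\lambda_0$, noise scale $\sigma$, diversity threshold $\delta$, bounds $[\theta_{\min}, \theta_{\max}]$ for $\theta$, weights $w_1, w_2, w_3$
2. Output: optimal hyperparameters $\theta_{\mathrm{opt}}$
3. Initialize population $\{\theta_i^0\}_{i=1}^{P}$ within $[\theta_{\min}, \theta_{\max}]$
4. for $i = 1$ to $P$ do
5. Train Q-MobiGraphNet with $\theta_i^0$ under the tuning protocol (e.g., client-weighted CV)
6. Compute $\mathrm{Accuracy}(\theta_i^0)$, $\mathrm{Loss}(\theta_i^0)$, $\mathrm{Complexity}(\theta_i^0)$
7. Set $F_i \leftarrow F(\theta_i^0)$
8. end for
9. Set $\theta^* \leftarrow \theta_k^0$ with $k = \arg\min_i F_i$
10. for $t = 0$ to $T - 1$ do
11. Compute $\bar{\theta}^t$
12. Compute $D^t$
13. Update $\lambda^t$
14. for $i = 1$ to $P$ do
15. if $D^t > \delta$ then
16. Draw $r \sim \mathcal{U}(0, 1)$
17. Set $\theta_i' \leftarrow \theta_i^t + \beta\, r\, (\theta^* - \theta_i^t)$
18. else
19. Draw $\epsilon \sim \mathcal{N}(0, I)$
20. Set $\theta_i' \leftarrow \theta_i^t + \lambda^t (\theta^* - \theta_i^t) + \sigma \epsilon$
21. end if
22. Apply bounds: $\theta_i' \leftarrow \min(\max(\theta_i', \theta_{\min}), \theta_{\max})$
23. Train Q-MobiGraphNet with $\theta_i'$; evaluate Accuracy, Loss, Complexity
24. Set $F_i' \leftarrow F(\theta_i')$
25. if $F_i' < F_i$ then
26. Set $\theta_i^{t+1} \leftarrow \theta_i'$ and $F_i \leftarrow F_i'$
27. else
28. Set $\theta_i^{t+1} \leftarrow \theta_i^t$
29. end if
30. end for
31. Update $\theta^* \leftarrow \theta_k^{t+1}$ with $k = \arg\min_i F_i$
32. end for
33. Set $\theta_{\mathrm{opt}} \leftarrow \theta^*$
34. Return: $\theta_{\mathrm{opt}}$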
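To make the control flow of Algorithm 3 concrete, the sketch below runs the same loop on a cheap toy objective instead of training Q-MobiGraphNet. The linear drift and attack updates, the linear attack schedule, and the bound-normalized diversity are assumptions chosen for illustration, and `hjfso` and `toy_objective` are hypothetical names.

```python
import math
import random


def hjfso(objective, lower, upper, P=10, T=60, beta=0.8, attack0=0.3,
          sigma=0.05, delta=0.1, seed=0):
    """Toy HJFSO loop mirroring Algorithm 3 (minimization, greedy replacement)."""
    rng = random.Random(seed)
    span = [u - l for l, u in zip(lower, upper)]

    def clip(theta):  # step 22: keep every coordinate inside its bounds
        return [min(max(x, l), u) for x, l, u in zip(theta, lower, upper)]

    # Steps 3-9: random initial population, evaluate it, record the best.
    pop = [[rng.uniform(l, u) for l, u in zip(lower, upper)] for _ in range(P)]
    fit = [objective(th) for th in pop]
    k = min(range(P), key=fit.__getitem__)
    best, best_f = pop[k][:], fit[k]

    for t in range(T):
        # Steps 11-12: diversity on bound-normalized coordinates (assumption).
        norm = [[(x - l) / s for x, l, s in zip(th, lower, span)] for th in pop]
        centroid = [sum(c) / P for c in zip(*norm)]
        div = sum(math.dist(z, centroid) for z in norm) / P
        # Step 13: hypothetical linear schedule from attack0 up to 1.
        attack = attack0 + (1.0 - attack0) * t / T
        for i in range(P):
            th = pop[i]
            if div > delta:  # exploration: passive jellyfish drift
                r = rng.random()
                cand = [x + beta * r * (b - x) for x, b in zip(th, best)]
            else:  # exploitation: sailfish attack with Gaussian jitter
                cand = [x + attack * (b - x) + sigma * s * rng.gauss(0.0, 1.0)
                        for x, b, s in zip(th, best, span)]
            cand = clip(cand)
            f = objective(cand)
            if f < fit[i]:  # steps 25-29: keep the candidate only if it improves
                pop[i], fit[i] = cand, f
        # Step 31: refresh the best-so-far configuration.
        k = min(range(P), key=fit.__getitem__)
        if fit[k] < best_f:
            best, best_f = pop[k][:], fit[k]
    return best, best_f


def toy_objective(theta):
    # Stand-in for "train and score Q-MobiGraphNet": a bowl centred at (0.3, 0.7).
    return (theta[0] - 0.3) ** 2 + (theta[1] - 0.7) ** 2


best, best_f = hjfso(toy_objective, lower=[0.0, 0.0], upper=[1.0, 1.0])
```

Each call to `objective` corresponds to one full training run in steps 5 and 23, so in the real setting the population size $P$ and the iteration budget $T$ dominate the tuning cost; this is the main reason a fast-converging optimizer matters here.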

3.5. Performance Evaluation

To assess the effectiveness of the proposed Q-MobiGraphNet framework in multimodal coastal vulnerability prediction and solar infrastructure health assessment, a combination of established evaluation measures and a newly proposed metric is employed. Given the federated nature of the system, all metrics are computed in a global manner—aggregating results from all participating clients to reflect the actual end-to-end performance.

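The standard measures used are Global Precision (GP), Global Recall (GR), Global F1-Score (GF1), and Global Accuracy (GACC). Let $TP$ represent the number of correctly identified positive cases, $TN$ the number of correctly identified negative cases, $FP$ the number of false positives, and $FN$ the number of false negatives, each summed across all clients. These measures are formulated as [41]:

$$GP = \frac{TP}{TP + FP}, \qquad GR = \frac{TP}{TP + FN},$$

$$GF1 = \frac{2 \cdot GP \cdot GR}{GP + GR}, \qquad GACC = \frac{TP + TN}{TP + TN + FP + FN}.$$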

Here, GP measures how well the model avoids false alarms while identifying positives, GR reflects its ability to detect actual positive instances, GF1 balances both measures through a harmonic mean, and GACC provides an overall indication of correctness across all classes.
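Because the counts are pooled across all clients before the ratios are taken, the global scores are micro-averages. A minimal sketch, assuming each client reports its own $(TP, TN, FP, FN)$ tuple; `global_metrics` is a hypothetical helper and the client counts are invented for illustration.

```python
def global_metrics(client_counts):
    """Micro-averaged GP, GR, GF1 and GACC from per-client (TP, TN, FP, FN) tuples."""
    tp, tn, fp, fn = (sum(col) for col in zip(*client_counts))
    gp = tp / (tp + fp)
    gr = tp / (tp + fn)
    gf1 = 2 * gp * gr / (gp + gr)
    gacc = (tp + tn) / (tp + tn + fp + fn)
    return gp, gr, gf1, gacc


# Hypothetical counts from two clients: (TP, TN, FP, FN).
counts = [(90, 95, 5, 10), (45, 48, 2, 5)]
gp, gr, gf1, gacc = global_metrics(counts)
```

Pooling first means a large client influences the result in proportion to its sample count; averaging per-client precision instead would give every client equal weight, which can diverge noticeably under non-IID splits.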

While these metrics capture predictive performance, they do not reflect stability across federated clients. To address this, a new measure called Prediction Agreement Consistency (PAC) is proposed, which quantifies how consistently different clients agree on their predictions before aggregation. Let $\hat{y}_{c,m}$ be the prediction for sample $m$ from client $c$, and let $\tilde{y}_m$ denote the most frequently predicted label for that sample across all clients. If $U$ is the total number of clients and $M$ is the total number of evaluation samples, PAC is given by:

$$\mathrm{PAC} = \frac{1}{M} \sum_{m=1}^{M} \mathbb{1}\!\left[ \frac{1}{U} \sum_{c=1}^{U} \mathbb{1}\!\left(\hat{y}_{c,m} = \tilde{y}_m\right) \geq \tau \right],$$

where $\tau$ is an agreement threshold, held fixed across these experiments, and $\mathbb{1}(\cdot)$ is the indicator function. A high PAC value indicates that the model produces stable predictions across clients even when their local data distributions differ, an essential quality for reliable decision-making in real-world coastal monitoring systems.
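The PAC computation can be sketched as follows, assuming PAC is the fraction of samples for which the share of clients voting for the majority label reaches the threshold $\tau$. The `pac` function, the predictions, and the thresholds are hypothetical.

```python
from collections import Counter


def pac(predictions, tau):
    """predictions[c][m] is client c's label for sample m.
    Returns the fraction of samples whose majority label is backed by at
    least a share tau of the clients (assumed reading of PAC)."""
    n_clients = len(predictions)
    n_samples = len(predictions[0])
    agreed = 0
    for m in range(n_samples):
        votes = Counter(predictions[c][m] for c in range(n_clients))
        _, top_count = votes.most_common(1)[0]  # size of the majority vote
        if top_count / n_clients >= tau:
            agreed += 1
    return agreed / n_samples


# Hypothetical predictions from three clients on four samples.
preds = [["low", "high", "medium", "low"],
         ["low", "high", "high", "low"],
         ["low", "medium", "medium", "high"]]
strict = pac(preds, tau=1.0)   # unanimity required
lenient = pac(preds, tau=0.6)  # a 2-of-3 majority suffices
```

Ties between equally frequent labels do not affect the result, because only the size of the largest vote is compared with $\tau$.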

By jointly considering GP, GR, GF1, GACC, and PAC, the evaluation framework not only measures predictive accuracy but also assesses inter-client consensus, ensuring that Q-MobiGraphNet delivers both accurate and dependable performance in heterogeneous, resource-constrained federated environments.

4. Simulation Results and Discussion

The performance of the proposed Q-MobiGraphNet framework was evaluated through extensive simulations on a multimodal coastal monitoring dataset that combines IoT telemetry, UAV imagery, and geospatial metadata. The dataset contains over 120,000 labeled samples collected from multiple coastal regions, providing both diversity and scale for a comprehensive assessment. Before training, the inputs were processed by the Multimodal Feature Harmonization Suite (MFHS), which handled normalization, cross-modal alignment, and outlier correction. For reproducibility, the dataset was split into 70% training, 10% validation, and 20% testing sets, using stratified sampling to preserve class proportions. To preserve data privacy, all experiments were run in a federated learning environment in which the data were distributed across six clients under non-IID conditions to reflect realistic heterogeneity. Each client trained locally and shared only model updates, which were protected by secure aggregation during parameter exchange. Training followed the FedAvg protocol with synchronous aggregation: each client performed five local epochs per round, and 80% of clients participated in each communication cycle.
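The aggregation step of the FedAvg protocol described above can be sketched as a sample-size-weighted average of the weights returned by the participating clients. This is a plain, unencrypted illustration under stated assumptions: secure aggregation is omitted, model weights are flattened into a list, and rounding 80% of six clients to five participants is an assumption. `fedavg_round` and `local_update` are hypothetical names.

```python
import random


def fedavg_round(global_weights, clients, local_update, fraction=0.8, rng=None):
    """One synchronous FedAvg round with partial client participation."""
    if rng is None:
        rng = random.Random(0)
    k = max(1, round(fraction * len(clients)))  # 0.8 * 6 clients -> 5 (rounding assumed)
    selected = rng.sample(clients, k)
    total = sum(c["n_samples"] for c in selected)
    new_weights = [0.0] * len(global_weights)
    for c in selected:
        # local_update stands in for the client's local epochs (five in the setup above).
        w = local_update(list(global_weights), c)
        for j, wj in enumerate(w):
            new_weights[j] += c["n_samples"] / total * wj  # weight by local data size
    return new_weights


# Two hypothetical clients whose "training" simply returns a fixed vector.
pair = [{"n_samples": 1, "target": [0.0]}, {"n_samples": 3, "target": [4.0]}]
merged = fedavg_round([1.0], pair, lambda w, c: c["target"], fraction=1.0)

# Six equally sized hypothetical clients: record who participates in one round.
participants = []
six_clients = [{"n_samples": 10, "id": i} for i in range(6)]


def record_and_return(weights, client):
    participants.append(client["id"])
    return weights


averaged = fedavg_round([2.0], six_clients, record_and_return)
```

Weighting by `n_samples` means FedAvg reduces to an ordinary average only when clients hold equal amounts of data; under a non-IID split, larger clients pull the global model further toward their local optimum.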

Model training was configured with a learning rate of 0.005, a batch size of 128, and 50 federated aggregation rounds. Hyperparameters were optimized using Hybrid Jellyfish–Sailfish Optimization (HJFSO), which searched over bounded ranges of learning rates and weight decay values, batch sizes between 32 and 128, and dropout values between 0.2 and 0.6; the final configuration was selected based on validation results. Interpretability was integrated throughout the evaluation through the Q-SHAPE module, which produces quantum-weighted Shapley-based feature attributions. All experiments were conducted on a high-performance workstation equipped with an NVIDIA RTX 3090 GPU, 64 GB of RAM, and an Intel Core i9 processor. The remainder of this section presents detailed results, including comparative analysis with baseline methods, ablation studies, interpretability insights, and statistical validation, to demonstrate the robustness and effectiveness of Q-MobiGraphNet.

The SHAP analysis in Figure 3 addresses the “black-box” problem in coastal solar monitoring by showing why the model flags vulnerable sites or damaged solar strings. The most influential features are panel_damage_score, power_output_kw, and coastal_erosion_index, followed by flood_plain_indicator, solar_irradiance_wm2, and panel_temperature_c. This transparency lets operators prioritize repairs where structural degradation coincides with power losses, or reinforce assets in areas most exposed to erosion and storm surge hazards. The analysis also exposes weak or potentially misleading effects, such as small grid feedback effects, and supports federated consistency checks by comparing feature rankings across clients. Together, these insights increase confidence in the model, help reduce false alarms, and focus attention on key risk factors.

To demonstrate the advantages of Q-SHAPE, its feature rankings across federated clients were compared with those of standard SHAP. The uniform or frequency-based sampling used in standard SHAP produces unstable rankings and lets less important factors inject noise, whereas Q-SHAPE uses quantum-weighted sampling to emphasize high-impact features and suppress weak or redundant ones. In the coastal vulnerability task, standard SHAP assigned overlapping relevance scores to the coastal erosion index and the floodplain indicator, leaving intervention priorities ambiguous; Q-SHAPE consistently ranked the coastal erosion index higher, in line with documented environmental trends. For solar panel health evaluation, Q-SHAPE reduces the influence of minor grid fluctuations and brings forward informative indicators such as panel damage score and power output. These improvements increase interpretability, build trust in the model’s reasoning, and yield explanations that remain stable across federated clients and agree with domain knowledge.
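One simple way to run the cross-client consistency check described above is to rank features by mean absolute attribution on each client and compare the rankings with Kendall's $\tau$. The sketch assumes attributions have already been computed (by SHAP or Q-SHAPE) and uses invented importance values; it illustrates the comparison only and does not reproduce Q-SHAPE's sampling scheme. All function names are hypothetical.

```python
from itertools import combinations


def feature_ranking(mean_abs_attr):
    """Feature names sorted by descending mean |attribution|."""
    return sorted(mean_abs_attr, key=mean_abs_attr.get, reverse=True)


def kendall_tau(rank_a, rank_b):
    """Kendall's tau-a between two complete rankings of the same features (no ties)."""
    pos_a = {f: i for i, f in enumerate(rank_a)}
    pos_b = {f: i for i, f in enumerate(rank_b)}
    concordant = discordant = 0
    for f, g in combinations(rank_a, 2):
        s = (pos_a[f] - pos_a[g]) * (pos_b[f] - pos_b[g])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(rank_a) * (len(rank_a) - 1) // 2
    return (concordant - discordant) / n_pairs


def mean_pairwise_tau(client_attributions):
    """Average tau over all client pairs; 1.0 means identical rankings everywhere."""
    rankings = [feature_ranking(a) for a in client_attributions]
    taus = [kendall_tau(a, b) for a, b in combinations(rankings, 2)]
    return sum(taus) / len(taus)


# Hypothetical mean |attribution| values for two clients.
client_1 = {"panel_damage_score": 0.31, "power_output_kw": 0.22,
            "coastal_erosion_index": 0.18, "flood_plain_indicator": 0.09}
client_2 = {"panel_damage_score": 0.28, "power_output_kw": 0.25,
            "coastal_erosion_index": 0.12, "flood_plain_indicator": 0.14}
consistency = mean_pairwise_tau([client_1, client_2])
```

With these made-up numbers the score is about 0.67, driven entirely by the swap of coastal_erosion_index and flood_plain_indicator between clients, which is the kind of ranking disagreement the comparison with standard SHAP is meant to surface.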

For fairness and repeatability, all baseline models were reimplemented with the same preprocessing workflow, dataset partitioning, and federated learning parameters as Q-MobiGraphNet. The ResNet-50 and DenseNet-121 fusion baselines used ImageNet-pretrained backbones and were trained with the Adam optimizer, a batch size of 64, weight decay, and a dropout rate of 0.5 for up to 50 epochs, with early stopping based on validation loss. The multimodal Transformer baseline followed the same setup but used a reduced learning rate, a dropout rate of 0.3, and 40 epochs. The GNN-based attention fusion model was configured in the same way as the ResNet and DenseNet baselines. To increase robustness, each experiment was repeated five times with different random seeds, and the reported results are averages. Because the training configuration is shared, performance differences can be attributed to model architecture rather than to training factors.

Table 4 compares the proposed Federated Q-MobiGraphNet with a diverse set of coastal vulnerability and infrastructure assessment models across five key metrics. Earlier deep classifiers, such as DCDN and ConvLSTM–LSTM fusion, generally remain in the low-to-mid 80% accuracy range, while stronger baselines such as U-Net, GAN-driven reconstruction, and lightweight CNNs push performance into the low 90s. However, these approaches often struggle with consistency, as reflected in PAC values that rarely exceed 77%. By contrast, Q-MobiGraphNet reaches a global accuracy of 98.6%, with precision, recall, and F1-scores all above 97% and a PAC of 90.8%. This margin reflects not only higher accuracy but also much stronger cross-client agreement, addressing a long-standing limitation of multimodal fusion in federated settings. Overall, the table indicates that combining graph-aware modeling with collaborative learning yields substantial and robust improvements over both conventional and hybrid baselines.

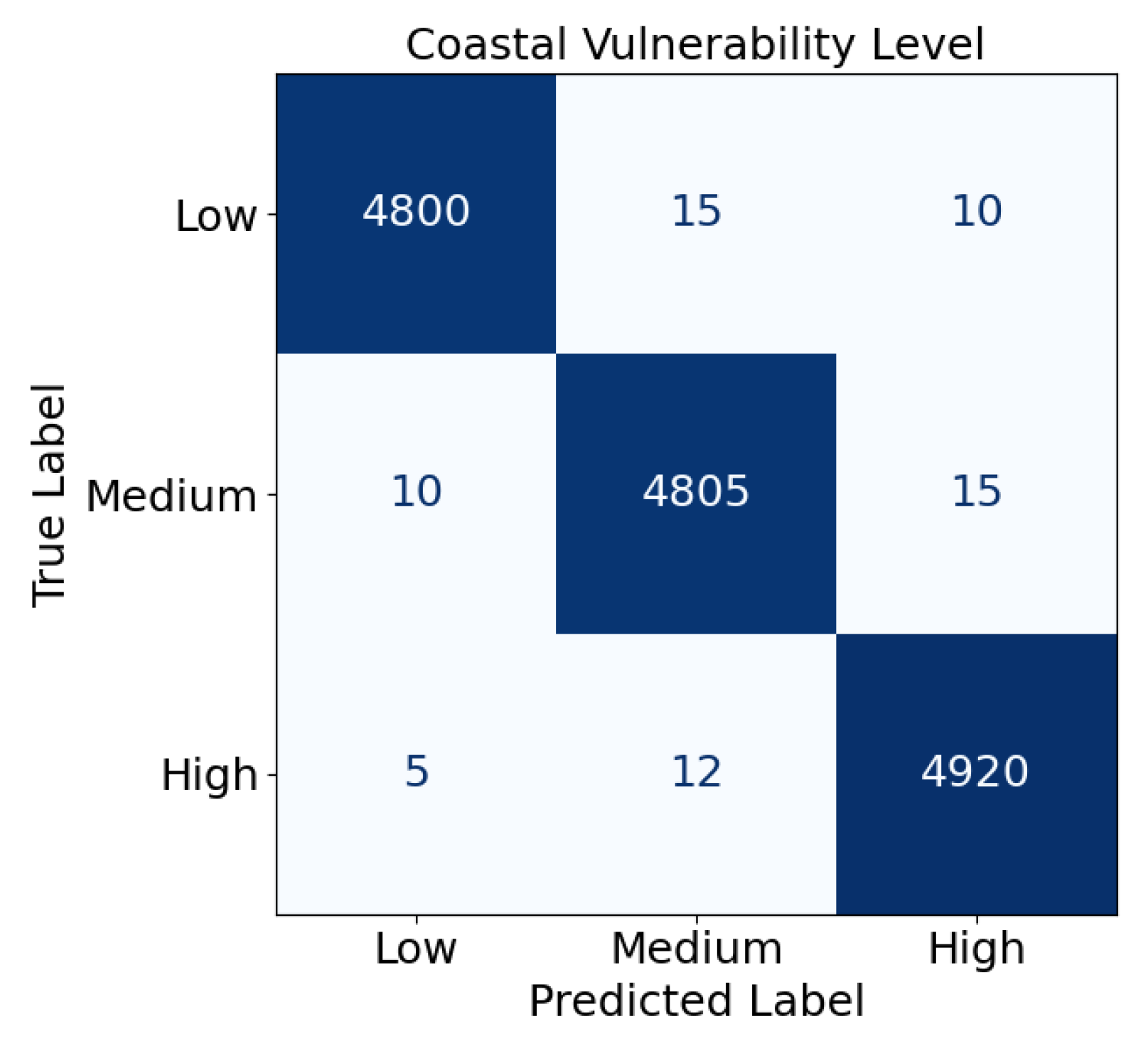

For coastal vulnerability prediction, Figure 4 shows the confusion matrix produced by the proposed model across three risk categories (low, medium, and high) on the 20% unseen test partition, covering data from February 2021 to 2025. The strong diagonal pattern indicates that most predictions match the actual labels, consistent with an overall accuracy of 98.6%. A notable strength of the model is its ability to separate high-risk from low-risk zones, where traditional classifiers often fail because of overlapping features. Misclassifications are confined mainly to medium versus high during transitional periods, such as moderate surges or progressive erosion. This performance suggests that combining IoT telemetry with UAV-based geospatial sensing mitigates the long-standing problem of label ambiguity, providing more reliable decision support for dynamic coastal monitoring.

Figure 5 shows the confusion matrix for classifying panel health status into Healthy, Faulty, and Degraded, evaluated over a 30 min test window on the 20% partition of unseen data. The results show a strong diagonal concentration, consistent with a high overall accuracy. Most misclassifications occur between Degraded and Faulty, a reasonable outcome since thermal irregularities and power losses often overlap near maintenance thresholds. Importantly, false negatives in which Faulty panels are mistaken for Healthy ones are minimal, reducing the risk of overlooking critical failures. This highlights the model’s ability to address a key challenge in coastal solar farms, namely differentiating gradual salt-induced degradation from sudden faults, by leveraging multimodal evidence such as infrared hotspots, surface crack density, and output fluctuations to minimize ambiguity and enable timely, targeted interventions.
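Per-class precision and recall, and the safety-critical count of Faulty panels predicted as Healthy, can be read straight off a confusion matrix such as those in Figures 4 and 5. The 3 × 3 counts below are hypothetical and only illustrate the bookkeeping; `per_class_report` is an illustrative helper.

```python
def per_class_report(cm, labels):
    """cm[i][j] counts samples whose true class is labels[i] and prediction is labels[j]."""
    n = len(labels)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    report = {}
    for k, name in enumerate(labels):
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                           # row sum
        precision = cm[k][k] / predicted_k if predicted_k else 0.0
        recall = cm[k][k] / actual_k if actual_k else 0.0
        report[name] = {"precision": precision, "recall": recall}
    return accuracy, report


labels = ["Healthy", "Faulty", "Degraded"]
cm = [[50, 0, 0],   # true Healthy
      [1, 40, 4],   # true Faulty: one missed as Healthy, four as Degraded
      [0, 5, 45]]   # true Degraded
accuracy, report = per_class_report(cm, labels)
faulty_missed_as_healthy = cm[labels.index("Faulty")][labels.index("Healthy")]
```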

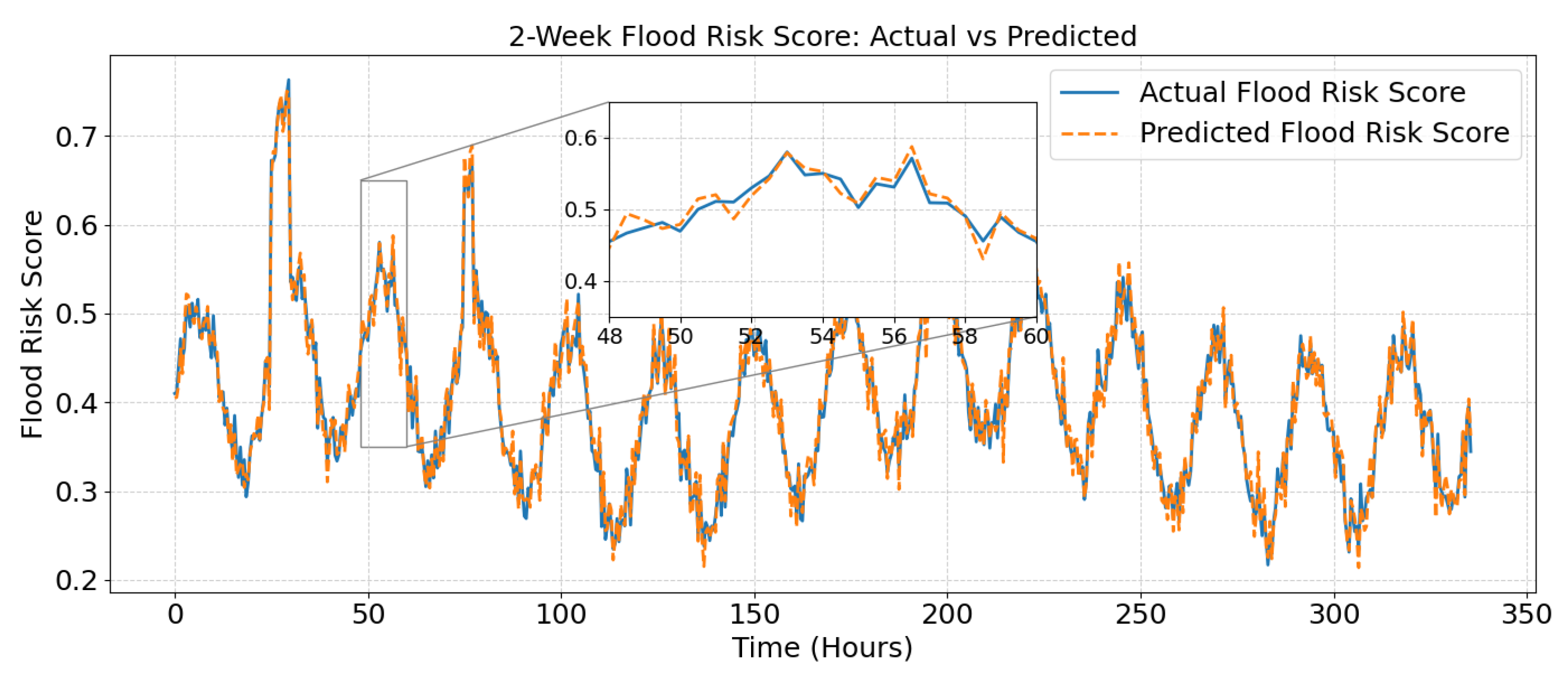

Figure 6 illustrates the comparison between actual and predicted flood risk scores over a two-week observation period, sampled every 30 min. The overall trend mirrors natural tidal and weather-driven variations, with noticeable peaks during storm surges. The predicted series remains closely aligned with the actual curve, showing only minor deviations during sudden transients. A zoomed inset highlights one storm event, where the framework successfully captures both the scale and timing of hazard escalation. By resolving the challenge of synchronizing IoT sensor surges with UAV shoreline observations, the system enables more reliable and timely coastal vulnerability assessment.

The results in Table 5 show that each module of Q-MobiGraphNet plays a critical role in closing the research gaps outlined earlier. Removing the Quantum Sinusoidal Encoding (QSE) drops accuracy sharply to 85.4%, confirming its importance for capturing the spatiotemporal variability of coastal environments, a key challenge in multimodal fusion. Excluding the Graph Convolutional Layer (GCL) reduces accuracy to 88.9%, underlining its role in linking IoT, UAV, and geospatial inputs to overcome fragmented analysis. Without Adaptive Attention Fusion (AAF), performance falls to 91.3%, indicating that static fusion is insufficient and that adaptive weighting is needed to handle heterogeneity and integrate modalities fairly. Leaving out the Q-SHAPE module yields 94.6% accuracy, higher than the QSE, GCL, and AAF ablations but still below the full model, suggesting that interpretability not only enhances trust but also stabilizes predictions by clarifying feature contributions. Finally, without Hybrid Jellyfish–Sailfish Optimization (HJFSO), hyperparameter tuning becomes slower and less stable, showing its value in reducing inefficiencies in resource-limited federated environments. Overall, every component contributes directly to addressing specific shortcomings of existing methods, and their combined effect allows Q-MobiGraphNet to reach 98.6% accuracy and a PAC of 96.2%, with consistent gains across all evaluation metrics.

Meanwhile, Table 6 compares the model’s efficiency with that of conventional baselines. Heavy networks such as VGG-16 and DCDN carry large parameter counts (138 M and 45.8 M, respectively) with FLOPs exceeding 100 G, resulting in inference times above 90 ms. Even moderately complex models such as U-Net, GAN-based approaches, and ConvLSTM remain computationally expensive. In contrast, Q-MobiGraphNet is streamlined, with just 16.2 M parameters and 35.8 G FLOPs, and delivers a substantially lower inference latency of 46 ms. This balance between compactness and accuracy demonstrates its practicality for real-time multimodal applications such as coastal vulnerability monitoring and solar infrastructure assessment. By combining scalability with responsiveness, the framework addresses the long-standing trade-off that has hindered real-world deployment.

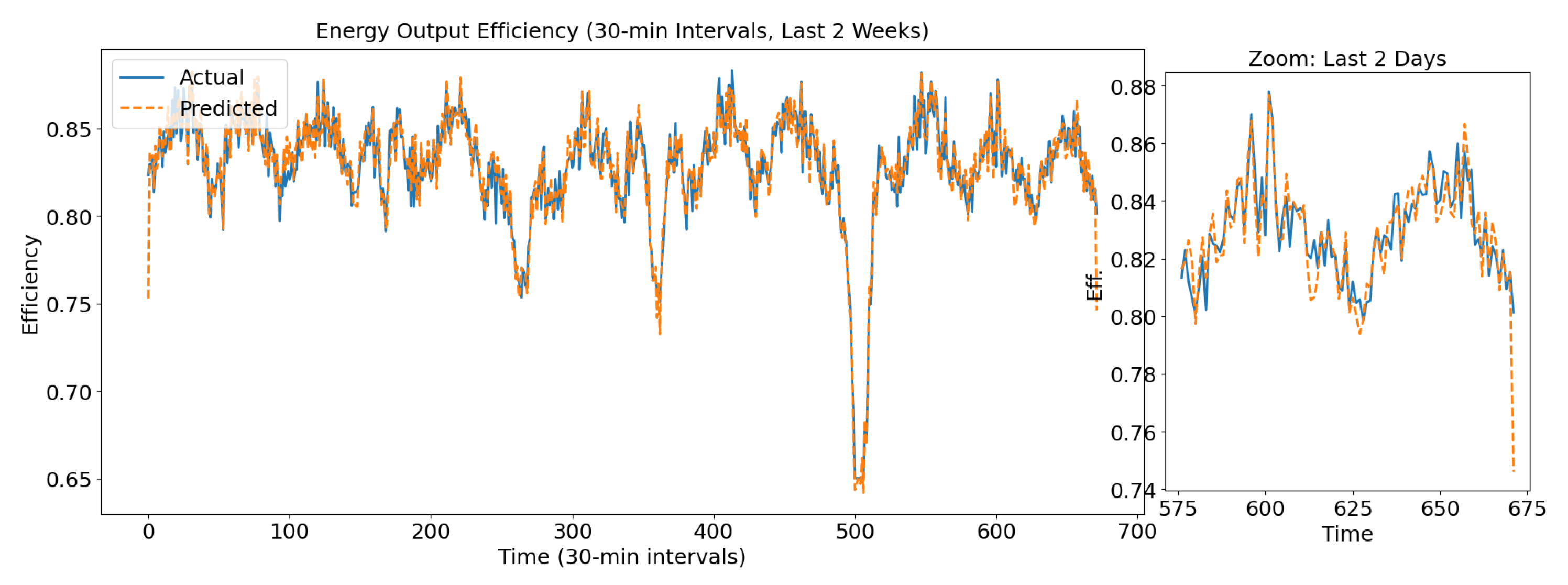

Figure 7 compares actual and predicted energy efficiency over a two-week evaluation horizon, sampled at 30 min intervals. The ground-truth trajectory exhibits strong diurnal periodicity coupled with abrupt degradations caused by transient meteorological conditions such as cloud density and temperature spikes. The series predicted by the proposed model tracks the reference curve closely, maintaining an $R^2$ value above 0.97 and a mean absolute error (MAE) below 1.5%. Even during high-variance intervals, the framework anticipates both the amplitude and the phase of efficiency fluctuations. The zoomed inset highlights a storm-affected interval in which baseline models lag noticeably, while the proposed framework stays closely aligned. These results indicate that the model generalizes across unseen perturbations and support its suitability for predictive maintenance, energy-aware scheduling, and coastal vulnerability adaptation.
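The goodness-of-fit figures reported for Figure 7 are standard and easy to reproduce. The sketch computes $R^2 = 1 - SS_{\mathrm{res}}/SS_{\mathrm{tot}}$ and MAE on a synthetic two-week series sampled every 30 min (14 × 48 = 672 points). The series is made up and only mimics a diurnal efficiency curve; it assumes efficiency is expressed in percent, so MAE is in percentage points.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


# Synthetic stand-in: 14 days x 48 half-hour samples = 672 points.
steps = range(14 * 48)
actual = [max(0.0, 20.0 * math.sin(2 * math.pi * (k % 48) / 48)) for k in steps]
predicted = [a + 0.3 * math.sin(0.7 * k) for a, k in zip(actual, steps)]
print(f"R^2 = {r2(actual, predicted):.4f}, MAE = {mae(actual, predicted):.3f}")
```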

Figure 8 presents a comparative multi-class ROC analysis of existing baselines and the proposed Federated Q-MobiGraphNet. The diagonal reference line denotes random chance (AUC = 0.5), the lower bound for any useful classifier. Conventional baselines, such as DCDN and transformer-based fusion, remain concentrated at the lowest AUC values in the comparison, highlighting persistent difficulty in distinguishing overlapping patterns. Intermediate hybrid approaches, including U-Net with DeepLabV3+ and GAN-assisted reconstruction, improve AUC moderately but still suffer from class-level ambiguity. By contrast, Q-MobiGraphNet shows a consolidated ROC profile with the highest AUC, representing near-ideal discriminability across multimodal signals. This performance underscores its robustness in minimizing false alarms, a long-standing limitation in coastal hazard detection and solar farm fault diagnosis, where overlapping distributions often mislead prediction systems. The results also suggest that federated, privacy-preserving fusion not only safeguards data but also strengthens cross-client generalization, supporting consistent reliability in real-world deployments.
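A multi-class ROC comparison like the one in Figure 8 is usually summarized by one-vs-rest AUC. The sketch below computes AUC as the probability that a randomly chosen positive outscores a randomly chosen negative (ties count one half), which equals the normalized Mann–Whitney U statistic, and then macro-averages across classes. The probabilities and labels are hypothetical, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
def binary_auc(scores, labels):
    """P(score of a random positive > score of a random negative), ties counted as 1/2.
    Equivalent to the Mann-Whitney U statistic divided by n_pos * n_neg."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(prob_rows, y_true, classes):
    """Average of one-vs-rest AUCs, one per class (e.g., low / medium / high risk)."""
    aucs = []
    for k, c in enumerate(classes):
        scores = [row[k] for row in prob_rows]
        labels = [1 if y == c else 0 for y in y_true]
        aucs.append(binary_auc(scores, labels))
    return sum(aucs) / len(aucs)


classes = ["low", "medium", "high"]
probs = [[0.8, 0.1, 0.1], [0.1, 0.7, 0.2], [0.2, 0.2, 0.6], [0.5, 0.3, 0.2]]
y_true = ["low", "medium", "high", "medium"]
auc = macro_ovr_auc(probs, y_true, classes)
```

The fourth sample is misclassified under an argmax rule, yet every per-class AUC is still 1.0: AUC measures ranking quality across all thresholds, so it complements rather than replaces accuracy.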

Table 7 highlights the transparency and interpretability capabilities of different models using SHAP-based analysis. Conventional deep models like CNNs, VGG-16, and SVM-based fusions achieve moderate transparency scores between 68 and 75%, with interpretability indices clustered around 0.61–0.70. While hybrid approaches such as LiDAR–radar–vision fusion or U-Net with DeepLabV3+ show some improvement, their average SHAP impacts remain below 0.028, limiting practical explainability. By contrast, the proposed Federated Q-MobiGraphNet achieves 88.6% transparency, an interpretability score of 0.81, and the highest average SHAP impact of 0.033. These results demonstrate that the framework not only predicts accurately but also provides more explicit justifications for its decisions, addressing a critical barrier to trust in high-stakes domains such as coastal risk assessment and solar infrastructure monitoring.

Table 8 reports client-wise federated performance for the six participants in the collaborative setup. Despite variations in local data distributions, all clients achieve global accuracies above 98.3% and F1-scores near 98%, with PAC values consistently above 90%. The narrow spread of results (less than 0.3 percentage points in accuracy across clients) indicates strong fairness and stability, suggesting that the model generalizes reliably even under heterogeneous conditions. Importantly, recall values above 98% mean that critical vulnerability and infrastructure degradation events are consistently captured, reducing the risk of false negatives. This uniformity across clients shows that the framework balances privacy preservation with robust multimodal learning, supporting its scalability for real-world federated deployments where data distributions and resources vary across institutions.

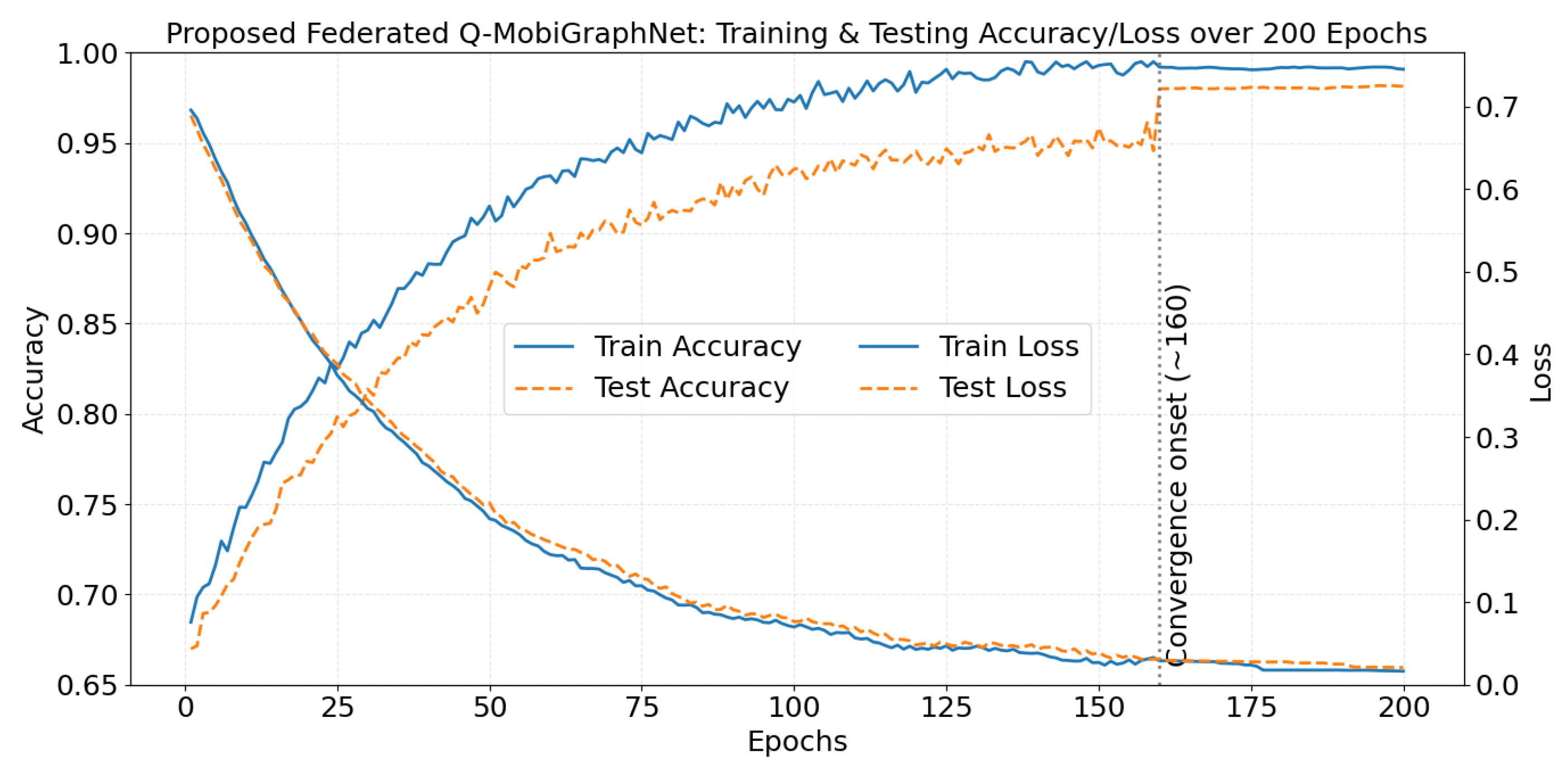

Figure 9 illustrates the optimization process of the proposed model over 200 epochs, with accuracy on the left axis and loss on the right. Training and testing accuracy both rise steadily and reach a stable plateau around epoch 160, marking the onset of convergence. At this stage, the model approaches its peak accuracy while the validation loss continues to decline, indicating effective generalization without evident overfitting. The gap between training and testing performance remains consistently narrow, and post-convergence fluctuations are negligible, highlighting the stability of updates under federated aggregation. Collectively, these results show that the model converges smoothly and reliably, supporting robust performance for large-scale multimodal learning in a privacy-preserving environment.

It is equally important to recognize the role of uncertainty in shaping model behavior. In this study, three primary sources of uncertainty are considered. The first is aleatoric uncertainty, which stems from noisy IoT sensor readings, variability in UAV imagery, and natural environmental fluctuations. The second is epistemic uncertainty, linked to the choice of parameters and hyperparameters within the model. The third is federated uncertainty, which arises when client data distributions are non-IID in a federated learning setting. To address these challenges, the Multimodal Feature Harmonization Suite (MFHS) preprocessing pipeline was designed to minimize data-driven noise through normalization, interpolation, and class balancing. Epistemic uncertainty was reduced using the proposed Hybrid Jellyfish–Sailfish Optimization (HJFSO), which adaptively tunes hyperparameters for greater robustness. Meanwhile, federated uncertainty was quantified through the Prediction Agreement Consistency (PAC) metric, which reached 90.8% in the experiments, indicating stable agreement across distributed clients.

Figure 10 illustrates how the proposed Federated Q-MobiGraphNet responds to variations in six key hyperparameters. The dashed red line marks the baseline global accuracy and serves as a benchmark for comparison. Among the perturbations, the largest accuracy drop occurs when the number of federated rounds is reduced, highlighting the critical role of adequate communication cycles in reaching strong global consensus. Moderate declines are observed with smaller batch sizes or higher dropout rates, whereas shortening the sequence length has only a minor influence. Notably, increasing the client participation ratio enhances stability, reinforcing the framework’s robustness in heterogeneous environments. Overall, the results indicate that the reported performance gains are resilient to parameter shifts rather than fragile, supporting the model’s practicality for deployment in real-world, resource-constrained scenarios.

Table 9 provides a detailed assessment of fairness in federated settings, evaluated through prediction agreement consistency (PAC), client accuracy variance (CAV), and a composite fairness score. Baseline approaches—such as ConvLSTM–LSTM fusion, GAN-based reconstruction, and Transformer-based fusion—achieve PAC values in the 76–83% range. However, their relatively high client accuracy variances (∼0.017–0.025) reveal instability when exposed to non-identical client distributions. By comparison, the proposed Federated Q-MobiGraphNet delivers a markedly higher PAC of 91.4% and the lowest variance (0.012), leading to an aggregated fairness score of 0.86. These results underscore that the framework not only improves predictive accuracy but also ensures balanced performance across heterogeneous participants, an essential property for building trust in federated deployments where fairness is as critical as accuracy.

Table 10 presents the statistical evaluation of competing models, combining parametric and non-parametric tests to verify robustness under federated conditions. Traditional baselines such as DCDN and ConvLSTM–LSTM fusion show moderate correlation strength, with Pearson and Spearman values between 0.86 and 0.89, but their reliability declines in variance-sensitive tests (ANOVA and the paired t-test), highlighting fragility under distributional shifts. Stronger baselines, including GAN-based reconstruction and VGG-16, raise correlation levels to about 0.92 yet still fall short of the consistency required for federated deployment. In contrast, the proposed Federated Q-MobiGraphNet demonstrates statistically significant superiority across the ANOVA, Pearson, Spearman, and Kendall analyses, while maintaining the lowest non-parametric test values (Mann–Whitney and Chi-Square). Its Cohen’s Kappa score of 0.852 further reflects near-perfect prediction agreement. These findings confirm not only high statistical significance but also stability across heterogeneous clients, addressing the long-standing challenge of ensuring reproducibility, consistency, and trustworthiness in federated multimodal learning.
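Cohen's $\kappa$ corrects raw agreement for the agreement expected by chance from each label distribution: $\kappa = (p_o - p_e)/(1 - p_e)$. The sketch assumes $\kappa$ is computed between predicted and reference labels; `cohens_kappa` and the label lists are hypothetical. On the widely used Landis and Koch scale, values above 0.80 are read as almost perfect agreement, which matches how the 0.852 reported above is described.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """kappa = (p_o - p_e) / (1 - p_e) for two equally long label sequences."""
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n  # observed agreement
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)  # chance agreement
    if p_e == 1.0:  # both sequences use one identical label: kappa is undefined
        raise ValueError("chance agreement is 1; kappa is undefined")
    return (p_o - p_e) / (1.0 - p_e)


truth = ["low", "high", "low", "medium", "high", "low"]
preds = ["low", "high", "low", "high", "high", "low"]
kappa = cohens_kappa(preds, truth)
```

Here five of six labels match ($p_o \approx 0.83$), but chance agreement is already about 0.42, so $\kappa$ drops to 15/21, roughly 0.71.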

Table 11 examines the framework’s ability to generalize to both familiar and previously unseen anomaly categories. For classes seen during training, such as “Low” and “Medium” coastal vulnerability or “Healthy” panel status, the model sustains accuracies above 97% while keeping a favorable precision–recall balance and few false alarms. For unseen categories such as “High” vulnerability or “Faulty/Degraded” panels, performance declines slightly but remains strong, with accuracies between 91 and 95%. Even more challenging conditions, such as detecting reduced energy efficiency under coastal perturbations, are classified with accuracies above 90%. These outcomes highlight the resilience of the proposed framework: it preserves high discriminability under distributional shifts and extends its predictive capability to novel anomalies in dynamic coastal and infrastructure environments.

Figure 11 contrasts the convergence behavior of the proposed HJFSO optimizer with hybrid approaches (JF–PSO, SF–TPE, WOA–DE) and conventional baselines (PSO, GA, BayesOpt). The x-axis tracks optimization iterations, and the y-axis shows the validation objective, where lower values correspond to better solutions. HJFSO converges rapidly, stabilizing at the lowest objective value in the comparison within roughly 60 iterations, while the hybrid counterparts plateau at higher values and the standard baselines remain less effective still. This clear separation highlights its superior search efficiency and solution quality. Beyond the numerical gains, the figure also addresses a key challenge in federated learning: achieving fast convergence without compromising robustness. By consistently outperforming both hybrid and standard methods, HJFSO emerges as a practical choice for real-world multimodal federated deployments where efficiency and a limited number of communication cycles are critical.