Abstract

This paper studies the indefinite linear quadratic optimal control (LQOC) problem for discrete-time stochastic singular systems driven by discrete-time Markov chains. First, a sequence of transformations converts the indefinite LQOC problem for stochastic singular systems into an equivalent problem for normal stochastic systems. Necessary and sufficient conditions are then given for the solvability of the transformed LQOC problem with indefinite matrix parameters, together with optimal control strategies that ensure the regularity and causality of the system. In addition, conditions are derived to verify the definiteness of the transformed LQOC problem and the uniqueness of the solution of the generalized Markov jumping algebraic Riccati equation (GMJARE). Optimal controls and nonnegative cost values are obtained, and the admissibility of the system is guaranteed. The finite-horizon results are extended to the infinite horizon. Furthermore, an output feedback controller is designed using the linear matrix inequality (LMI) method. Finally, a numerical example illustrates the validity of the main findings.

Keywords:

stochastic singular system; discrete-time Markovian chain; LQ optimal control problem; output feedback control

MSC:

93E20

1. Introduction

Singular systems (also referred to as implicit systems, descriptor systems, or combined differential and algebraic systems) are often more appropriate for modeling real-world systems such as those found in mechanics, biology, economics, and chemistry [1,2,3,4]. Recent studies on singular systems have emphasized stability analysis, fuzzy control, quantized regulation, adaptive control, and related topics [5,6,7]. Stochastic singular systems are widely used in power systems, financial markets, biomedicine, and robot control. For example, in power systems, they can model the dynamic behavior of the grid while accounting for random fluctuations such as load variations and renewable energy intermittency, which helps to improve the stability and reliability of the grid. In financial markets, they can model asset price fluctuations and support risk management. In biomedicine, they can model the dynamic behavior of biological systems and assist in disease diagnosis and treatment. In robot control, they can model the dynamic behavior of a robot and improve its adaptability in complex environments. These applications show the relevance of stochastic singular systems and offer broad prospects for subsequent research. Furthermore, researchers have integrated Markov chains into discrete-time singular systems, which adds complexity to the systems while yielding significant findings [8,9,10]. Stochastic theory has also made considerable advances in the modeling of mechanical engineering, circuit dynamics, and economic systems, and it has been extended to singular systems. Notably, stochastic singular systems describe the structural features of dynamic systems more expressively than conventional singular systems [11,12,13].
Incorporating stochastic disturbances within a system provides a means to emulate the uncertainties and fluctuations characteristic of real-world contexts. These uncertainties may emanate from a myriad of factors, including environmental volatility, external interferences, or inherent stochasticity within the system dynamics. By integrating stochastic disturbances, a more accurate evaluation of the system’s resilience, equilibrium, and capacity to accommodate diverse environmental stimuli can be achieved. Hence, the examination of stochastic singular systems driven by discrete-time Markov chains bears substantial pragmatic implications.

Reference [14] pioneered the LQOC problem, significantly influencing modern control theory. On the one hand, the LQOC problem provides a framework for optimizing controller design by minimizing a quadratic cost function, thereby achieving a balance between system stability, performance, and robustness. On the other hand, the weights of the system states and inputs in the cost function can be adjusted according to practical needs, allowing for flexible optimization of system performance metrics such as response speed, stability, and energy consumption. Hence, the LQOC problem is applied in various dynamic systems, including mechanical, electrical, and control systems, with notable applications in engineering, robotics, aerospace, and other fields [15,16]. The Riccati equation is a pivotal tool for addressing the LQOC problem in the discrete-time case: by solving the Riccati equation, the linear feedback optimal controller is obtained, and the optimal control of the studied system is realized [17,18]. Recently, the LQOC problem has attracted the attention of many scholars. Reference [19] studied the LQOC problem for discrete-time Markovian jumping systems (DTMJSs), with a particular emphasis on constraining control actions within a suitable subspace. Reference [20] addressed the LQOC problem with indefinite parameters for singular DTMJSs over infinite and finite horizons. References [21,22] analyzed the LQOC problem in fractional-order discrete systems. Recent works in this area have shown that even when the weight matrices Q and R are subject to certain uncertainties in the stochastic system, the associated stochastic LQOC problem may still be well-posed.
In discrete-time LQOC problems, positive definiteness of the control weighting matrix, once considered necessary, is no longer a mandatory condition, and the matrix may even be negative in the presence of uncertainty factors within the system [23,24]. The above works do not take into account the influence of stochastic disturbances on the system in practical applications. Thus, this paper investigates the LQOC problem of discrete-time stochastic singular systems affected by stochastic disturbances and discrete-time Markov chains.

Based on the above discussion, we investigate discrete-time stochastic singular systems with stochastic disturbances and discrete-time Markov chains. Compared with existing studies in this field, this work has the following motivations and advantages.

- In this paper, both the state and control weighting matrices in the quadratic cost function are allowed to be indefinite; that is, the weighting matrices may have negative eigenvalues. In addition, an equivalent transformation from the indefinite LQOC problem of stochastic singular systems to that of normal stochastic systems is obtained, with the condition for the equivalent transformation expressed in terms of the original coefficient matrices of the singular stochastic system, which is straightforward to verify and imposes relatively few constraints.

- This paper introduces stochastic disturbances to simulate the actual impact of a stochastic environment on the operation of real systems, while also considering output feedback control to enhance the robustness of the closed-loop system. Additionally, to ensure the stochastic admissibility of the singular stochastic system under study, this paper constructs a Lyapunov function with relatively low conservatism and uses MATLAB’s LMI toolbox to design the controller gains.

The structure of the paper is as follows. In Section 2, the indefinite LQOC problem of stochastic singular systems driven by discrete-time Markov chains is converted into an indefinite LQOC problem for normal systems through a sequence of equivalent transformations. Section 3 establishes necessary and sufficient conditions for the solvability of the indefinite LQOC problem. Specifically, it proves that the corresponding optimal control strategy and optimal cost can be obtained, ensuring the regularity and causality of the resulting closed-loop system. Additionally, criteria are provided to guarantee the definiteness of the transformed LQOC problem for stochastic singular systems, and it is shown that the positive semidefinite solution to the GMJARE is unique. Furthermore, the optimal control gain matrix and optimal cost values are obtained, and the resulting closed-loop system is shown to be stochastically admissible under output feedback control. At the end of Section 3, the specific form of the optimal control gain matrix is derived using the LMI method. Finally, in Section 4, a numerical example with four Markovian switching modes is presented to validate the main results.

2. Foundation and Problem Formulation

Consider a class of discrete-time singular systems subjected to stochastic disturbances and driven by discrete-time Markov chains:

where , and . The matrix is singular and . represents a discrete-time Markov chain taking values in the positive-integer set , and its transition probabilities are , which denotes the probability of being j under , with and (). All the system parameter matrices can be abbreviated as , , , , , for . Assume that is unrelated to the initial state . is a one-dimensional white noise in a given complete probability space , where and are independent of each other.
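The mode process described above can be illustrated with a minimal sketch that samples a path of a finite-state discrete-time Markov chain from a row-stochastic transition matrix. The function name `simulate_modes`, the two-mode matrix `PI`, and the 0-based mode labels are assumptions made for illustration; they are not the transition probabilities used later in the simulation example.

```python
import random

def simulate_modes(transition, initial_mode, steps, seed=0):
    """Sample a mode path theta(0), ..., theta(steps) of a Markov chain.

    transition[i][j] is the probability of moving from mode i to mode j.
    Modes are 0-based here (the paper numbers them from 1).
    """
    for row in transition:
        if any(p < 0 for p in row) or abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("each row must be a probability distribution")
    rng = random.Random(seed)  # fixed seed so the path is reproducible
    modes = [initial_mode]
    for _ in range(steps):
        row = transition[modes[-1]]
        next_mode = rng.choices(range(len(row)), weights=row)[0]
        modes.append(next_mode)
    return modes

# Hypothetical two-mode chain, chosen only for illustration.
PI = [[0.7, 0.3],
      [0.4, 0.6]]
path = simulate_modes(PI, initial_mode=0, steps=20)
```

Each row of `PI` sums to one, which mirrors the normalization condition imposed on the transition probabilities.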

Problem 1.

For the LQOC problem of system (1), the quadratic cost function is defined as

where stands for the mathematical expectation. It is worth mentioning that the weight matrix for the system states and control inputs is only symmetric for , , with , while is symmetric.

Note that the weight matrix in LQOC Problem 1 is merely symmetric and thus may appear inappropriate at this stage. To solve LQOC Problem 1, it is necessary to obtain the controller in a -measurable space that minimizes (2) over the set of admissible controls of (1). The optimal value function is defined as

Now, we will introduce the following definitions and lemma needed for our analysis in this paper.

Definition 1

([2,25]). Consider a class of systems of the form .

- (i) If for any , the system is said to be regular.

- (ii) If the system is regular and for any , it is said to be causal.

- (iii) If the considered system is regular, causal, and stochastically stable, it is said to be stochastically admissible.
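Since the formulas of Definition 1 are not reproduced in this text, the following sketch assumes the textbook characterization for a single mode of a discrete-time singular system (see, e.g., [2]): the pair (E, A) is regular if det(zE − A) is not identically zero, and causal if, in addition, the degree of det(zE − A) equals rank(E). The helper names are hypothetical, and the Leibniz expansion is only practical for small matrices.

```python
from fractions import Fraction
from itertools import permutations

def pencil_det(E, A):
    """Coefficients of det(zE - A), lowest degree first (Leibniz expansion)."""
    n = len(E)
    coeffs = [Fraction(0)] * (n + 1)
    for perm in permutations(range(n)):
        inversions = sum(perm[i] > perm[j]
                         for i in range(n) for j in range(i + 1, n))
        term = [Fraction(-1 if inversions % 2 else 1)]
        for row, col in enumerate(perm):
            # Multiply the running polynomial by (z*E[row][col] - A[row][col]).
            const, lead = Fraction(-A[row][col]), Fraction(E[row][col])
            new = [Fraction(0)] * (len(term) + 1)
            for d, c in enumerate(term):
                new[d] += c * const
                new[d + 1] += c * lead
            term = new
        for d, c in enumerate(term):
            coeffs[d] += c
    return coeffs

def matrix_rank(M):
    """Rank by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in r] for r in M]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def is_regular_and_causal(E, A):
    """Return (regular, causal) for the pair (E, A) of one mode."""
    coeffs = pencil_det(E, A)
    degree = max((d for d, c in enumerate(coeffs) if c != 0), default=-1)
    regular = degree >= 0
    causal = regular and degree == matrix_rank(E)
    return regular, causal
```

For a Markov jump system, the check would be repeated for every mode, since both properties are required for any mode.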

Definition 2

([26]). For the compatible initial , if

then LQOC Problem 1 is said to be well-posed.

Definition 3

([26]). LQOC Problem 1 is called attainable if there exists an admissible control that minimizes (2) for any initial state .

Assumption 1.

Remark 1.

The rank condition in Assumption 1 ensures that the singular system preserves regularity and causality in each mode, which is a fundamental requirement in the design of control systems. This rank condition also implies the controllability and observability of the system in each mode; for singular systems, these properties are characterized through matrix rank conditions. Assumption 1 thus ensures that the system has enough degrees of freedom in each mode to achieve the control objective.

In the following, the form of LQOC Problem 1 after a series of transformations will be given. First, assume that the singular matrix E satisfies the following singular value decomposition (SVD):

where and . and are the corresponding orthogonal matrices. Accordingly, denote

where , , , , , , , , , and . Thus, system (1) is restricted system equivalent to the system

Meanwhile, the corresponding equivalent transformation of (2) can also be given as

where

and , , , . According to [20], Assumption 1 is equivalent to the full row rank of the matrix . Suppose that there is a nonsingular matrix satisfying

with appropriate dimensions for any . Note that and depend on the Markovian switching modes i and j; thus, we introduce a nonsingular matrix M that is independent of the Markovian switching mode to replace in what follows.

Remark 2.

The purpose of Equation (10) is to transform the singular system into a normal system by a matrix transformation. This transformation simplifies the solution of the problem while preserving the dynamics of the system, which is crucial for designing effective controllers. By choosing an appropriate matrix , a complex singular system can be transformed into a more tractable normal system. Moreover, the matrix in (10) is nonsingular, so the transformation is invertible. This ensures that the transformed system is mathematically equivalent to the original system and that no information is lost. The transformation also improves computational efficiency and reduces the difficulty of implementing complex control algorithms.

Proposition 1.

Under Assumption 1, there exists a nonsingular matrix M such that

if and only if

for , where is nonsingular.

Proof.

To proceed, denote

with

where , , , , , , , . Then using the following nonsingular linear transformation

system (7) can be transformed into

Let , , and , then (15) can be converted to

From the second formula of (16) and Proposition 1, we can obtain . By substituting this formula into (14), one has

Problem 2.

According to the above analysis and transformation, LQOC Problem 1 and LQOC Problem 2 are equivalent, as shown in (17). Therefore, in Section 3, LQOC Problem 1 will be solved using LQOC Problem 2. Subsequently, some lemmas will be presented to prove the main results of Section 3.

Lemma 1

([27]). Let , and be matrices with suitable dimensions, and consider the quadratic form , where x and u are random variables with appropriate dimensions defined on a probability space . The following conditions are equivalent.

- (i) There is for any random variable x.

- (ii) There is for any x and matrix , where matrix is symmetric.

- (iii) and .

- (iv) and .

- (v) There is for the existing symmetric matrix T.

In addition, there exists such that (ii) is satisfied if any of the above conditions holds. Furthermore, it holds for any T in (v). Finally, the optimal control and the optimal value can be obtained for any random variable x.
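To make the role of the pseudoinverse in this type of lemma concrete, the following sketch works out the scalar, deterministic case. It assumes a quadratic form q(u) = m·x² + 2·l·x·u + r·u², where the names `m`, `l`, `r` are hypothetical stand-ins for the weight matrices of the lemma. The infimum over u is finite exactly when r ≥ 0 and l vanishes whenever r = 0, and the minimizer uses the Moore–Penrose inverse of r.

```python
def minimize_quadratic(m, l, r, x):
    """Minimize q(u) = m*x**2 + 2*l*x*u + r*u**2 over real u (scalar case).

    Returns (u_star, q_star), or None when the infimum is -infinity.
    """
    if r < 0 or (r == 0 and l != 0):
        return None                         # cost is unbounded below in u
    r_pinv = 1.0 / r if r > 0 else 0.0      # Moore-Penrose inverse of a scalar
    u_star = -r_pinv * l * x                # minimizing control
    q_star = (m - l * r_pinv * l) * x ** 2  # optimal value
    return u_star, q_star

# The state weight m may be negative while the problem remains well-posed.
u_opt, q_opt = minimize_quadratic(m=-1.0, l=1.0, r=2.0, x=3.0)
```

With m = −1, l = 1, r = 2 and x = 3, direct substitution of u = −1.5 into q(u) gives −9 − 9 + 4.5 = −13.5, which agrees with the closed-form value (m − l²/r)·x².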

3. Main Result

From the above, LQOC Problem 1 and LQOC Problem 2 are equivalent. Therefore, in this section, we directly investigate the solvability of LQOC Problem 2, which is the indefinite LQOC problem of the transformed system. By Lemma 1, LQOC Problem 2 is well-posed when all the matrices are symmetric and satisfy the following LMI condition

where , , and .

Next, we derive the optimal control and the optimal cost value for LQOC Problem 2.

Theorem 1.

When there exists for , LQOC Problem 2 is solvable if and only if the following GMJARE can be solved,

Furthermore, the optimal control is defined by

Finally, the corresponding optimal cost value is

Proof.

First, we prove necessity. Assuming that LQOC Problem 2 can be solved, we will demonstrate that when (21) and (22) hold, there exists a symmetric matrix satisfying (20). We proceed by induction and first show that (20)–(22) hold when . To this end, the following intermediate problem is introduced:

and

Notice that the discrete-time Markov chain and the disturbance signal are independent of each other. Combining with system (16) for , (24) can be rewritten as

where

Then, it follows from Lemma 1 that there exists a suitable such that

where

Furthermore, the corresponding optimal control expression can be obtained by

In the case of , when (26)–(28) hold, it is straightforward to see that (20)–(22) hold. Next, suppose that (20) has a solution with such that (21) and (22) also hold for . Then, from system (16) and the Bellman equation, we obtain

Similarly, there exists satisfying

such that

and its optimal control is

From (29), (31) and (32), we conclude that

with

Then, (20)–(22) hold when . In summary, (20)–(22) are satisfied when .

Second, we prove sufficiency; that is, we need to show that when GMJARE (20) can be solved, (21) and (22) are obtained. We get

It is evident that from (19) and the Schur Complement lemma. Then applying (16), GMJARE (20) and the Moore–Penrose pseudoinverse definition with , we can obtain that

Summing both sides of the above formula from to N yields

and this indicates that

Since , it holds

with . Subsequently, the value of optimal cost can be obtained

Therefore, is always less than 0. That concludes the proof of Theorem 1. □
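The backward induction used in the proof can be illustrated on a much simpler model. The sketch below is not GMJARE (20); it assumes a scalar normal system x(k+1) = a_i x(k) + b_i u(k) + (c_i x(k) + d_i u(k)) w(k) in mode i, with zero-mean, unit-variance white noise w(k) independent of the Markov chain, stage cost q_i x² + r_i u² without cross-weighting, and terminal weights. All names (`riccati_backward`, the dictionary keys, `PI`) are hypothetical. Each step minimizes a scalar quadratic form in u, which is where the sign condition on the effective control weight and the pseudoinverse enter.

```python
def riccati_backward(modes, PI, terminal, horizon):
    """Backward recursion for a scalar Markov jump system (illustrative).

    modes: one dict per mode with keys a, b, c, d, q, r.
    PI[i][j]: transition probability from mode i to mode j.
    terminal: terminal weight per mode.
    Returns (P at time 0, gains), where u(k) = gains[k][i] * x(k) in mode i.
    """
    n = len(modes)
    P = list(terminal)
    gains = []
    for _ in range(horizon):
        P_new, K = [], []
        for i, m in enumerate(modes):
            # Expected next-step weight given the current mode i.
            p_bar = sum(PI[i][j] * P[j] for j in range(n))
            omega = m["r"] + (m["b"] ** 2 + m["d"] ** 2) * p_bar
            cross = (m["a"] * m["b"] + m["c"] * m["d"]) * p_bar
            if omega < 0 or (omega == 0 and cross != 0):
                raise ValueError("stage problem is not well-posed in mode %d" % i)
            omega_pinv = 1.0 / omega if omega > 0 else 0.0
            K.append(-omega_pinv * cross)
            P_new.append(m["q"] + (m["a"] ** 2 + m["c"] ** 2) * p_bar
                         - omega_pinv * cross ** 2)
        P = P_new
        gains.insert(0, K)  # earlier time steps go to the front
    return P, gains

# Single-mode example without noise: a = b = q = r = 1, zero terminal weight.
one_mode = [dict(a=1.0, b=1.0, c=0.0, d=0.0, q=1.0, r=1.0)]
P0, K_seq = riccati_backward(one_mode, [[1.0]], [0.0], horizon=3)
```

In this sketch a negative control weight r_i is admissible as long as r_i + (b_i² + d_i²) times the expected next-step weight stays nonnegative, which is the scalar counterpart of allowing indefinite weights.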

Theorem 1 shows that the optimal control problem is solvable and that the cost function attains its optimal value under the resulting optimal controller. Theorem 2 below further derives the specific form of the optimal controller via the LMI method.

With Assumption 1 and (16), the LQOC Problem 2 is solvable if there is a matrix satisfying GMJARE (20), while the unique solution of the optimal control expression is described by

Secondly, the corresponding cost value of LQOC Problem 2 is given by

Finally, the closed-loop system is given by

Building on the previous results, we now translate the indefinite LQOC Problem 1 into an infinite-horizon definite LQOC Problem 3.

Problem 3.

Under system (16), the equivalent transformation is considered by

where , , , and the quadratic cost function associated is given by

LQOC Problem 3 is to find a -measurable controller , which minimizes (44) subject to (43) and guarantees that . LQOC Problem 1 is equivalent to LQOC Problem 3; hence, if LQOC Problem 3 has a solution, then LQOC Problem 1 is solvable as well.

Notice that and are easily derived by the original coefficient matrices of system (1) and weight matrices of cost function (2), while , then , which shows the success of the definite LQOC Problem 3 in infinite horizon based on [28].

Next, we consider the LQOC Problem 3, then define the following GMJARE

where and .

On the basis of and , we find that the existence of a solution to GMJARE (45) directly determines the solvability of LQOC Problem 3. This leads to the following conclusion.

Proposition 2.

With Assumption 1, (12), and , if GMJARE (45) has a solution , then the LQOC Problem 3 is solvable. Moreover, the unique optimal control is

with its optimal cost value function as

Hence, stochastic stability is achieved for the closed-loop system
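As a rough numerical counterpart to the stability claim, the sketch below tests mean-square stability of a scalar closed-loop Markov jump model, taken here as a stand-in for stochastic stability. It assumes closed-loop dynamics x(k+1) = (f_i + g_i w(k)) x(k) in mode i with zero-mean, unit-variance white noise; the names `f`, `g`, and `mean_square_radius` are hypothetical. The second moments m_i(k) = E[x(k)² 1{θ(k) = i}] evolve linearly, so stability is decided by the spectral radius of that linear map, estimated here from the normalized growth rate of a positive vector.

```python
import math

def mean_square_radius(PI, f, g, iterations=2000):
    """Estimate the spectral radius of the second-moment recursion
    m_j(k+1) = sum_i PI[i][j] * (f[i]**2 + g[i]**2) * m_i(k)."""
    n = len(f)
    s = [f[i] ** 2 + g[i] ** 2 for i in range(n)]
    m = [1.0] * n                 # strictly positive start vector
    log_growth = 0.0
    for _ in range(iterations):
        m = [sum(PI[i][j] * s[i] * m[i] for i in range(n)) for j in range(n)]
        norm = max(m)
        if norm == 0.0:
            return 0.0            # second moments vanish in finitely many steps
        log_growth += math.log(norm)
        m = [v / norm for v in m]  # renormalize to avoid under/overflow
    return math.exp(log_growth / iterations)

def is_mean_square_stable(PI, f, g):
    """Mean-square stable when the estimated radius is below one."""
    return mean_square_radius(PI, f, g) < 1.0

# Hypothetical two-mode loop: mode 0 is unstable alone, but the chain always
# switches to the strongly contracting mode 1 at the next step.
PI_switch = [[0.0, 1.0], [1.0, 0.0]]
rho = mean_square_radius(PI_switch, f=[2.0, 0.1], g=[0.0, 0.0])
```

The estimate converges slowly near the stability boundary, so values close to one should be treated with caution.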

In system (1), it is assumed that the state information is unmeasurable, while the input and output data remain measurable. The output feedback control law is given by

Just like in (46), the objective is to attain a numerical approximate solution with . Interestingly, a relationship can be observed between the optimal state feedback control (46) and the static output feedback control (49). Thus, to achieve the minimum value of the cost function (44), the minimal cost (47) can be attained via the following optimal output-feedback control law.

where the matrix is a suitable optimal control gain for the system (1).

Theorem 2.

Under Theorem 1, the optimal closed-loop system (43) is stochastically admissible with the control law (49) if there exist matrices , and , symmetric matrices , and , and positive-definite symmetric matrices , and such that

where , , , and

Proof.

Choose a stochastic Lyapunov function

with . Then, the difference of is obtained as follows:

Let . Introduce a symmetric matrix and , then we can get

By substituting (43) and (49) into (55), we can utilize the matrix inequality method and obtain that

where

Since , it can be deduced that . By the Schur complement lemma, is equivalent to (51) in Theorem 2, which completes the proof. □
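The Schur complement step can be checked on the smallest possible case. The following sketch, with hypothetical function names, compares two tests for positive definiteness of a symmetric 2 × 2 matrix [[a, b], [b, c]]: leading principal minors, and the Schur complement of the (2,2) entry. A negative-definite condition, as typically appears in LMI form, can be tested by applying either function to the negated entries.

```python
def is_pd_minors(a, b, c):
    """Positive definiteness of [[a, b], [b, c]] via leading principal minors."""
    return a > 0 and a * c - b * b > 0

def is_pd_schur(a, b, c):
    """Same property via the Schur complement: c > 0 and a - b*c^{-1}*b > 0."""
    return c > 0 and a - b * b / c > 0

# The two tests agree, e.g., on a small integer grid.
agree = all(is_pd_minors(a, b, c) == is_pd_schur(a, b, c)
            for a in range(-3, 4) for b in range(-3, 4) for c in range(-3, 4))
```

In the matrix case, a, b and c become blocks and the same equivalence turns a nonlinear matrix inequality into a linear one in the unknown blocks.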

4. Simulation Example

A three-dimensional discrete-time stochastic singular system (16) driven by a one-dimensional stochastic disturbance and a discrete-time Markov chain with four switching modes is considered, with parameters

with the three-dimensional state and . The transition probability matrix is given by

First, we compute the SVD of the corresponding system parameters, transform the system matrices using the SVD results, and construct the LMI conditions based on the transformed system matrices. Subsequently, the solution of the GMJARE is obtained by solving the LMIs using MATLAB. The optimal control gain matrix is then calculated from the solution of the GMJARE, and the optimal control law is formed. Using the MATLAB LMI toolbox, a feasible solution of the LMI in Theorem 2 is obtained, where the weighting matrices in Problem 3 are given by

and the obtained parameters are as follows

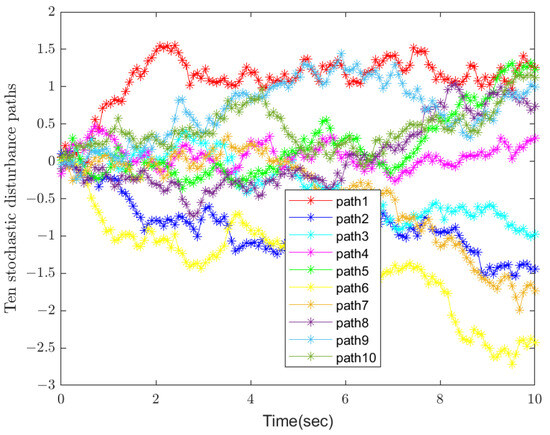

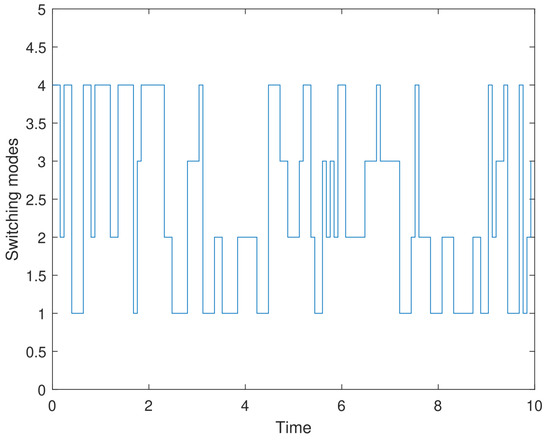

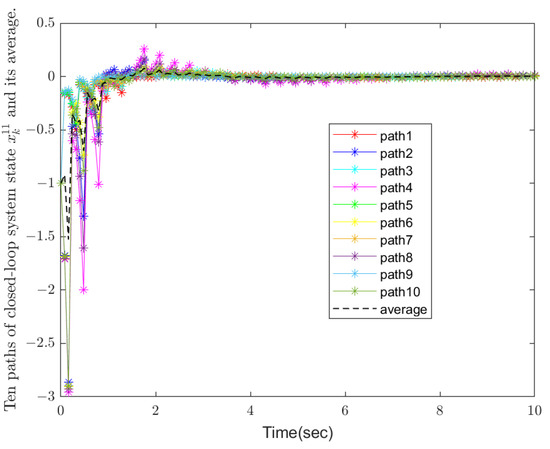

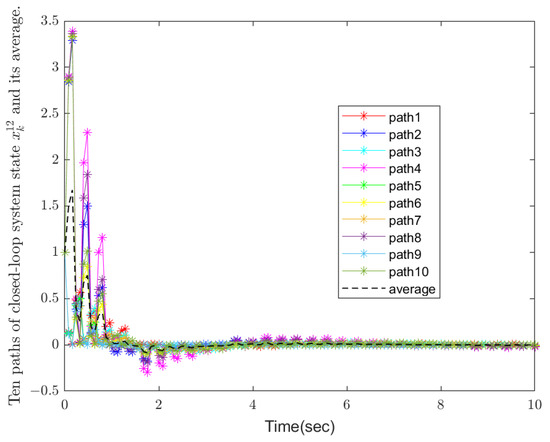

In this simulation example, indefinite weight matrices are allowed in the quadratic cost function; that is, the weight matrices can have negative eigenvalues. This differs from the traditional linear quadratic optimal control problem, which usually requires the weight matrices to be positive definite or positive semidefinite. Through a series of equivalent transformations and theoretical derivations, the difficulty caused by the indefinite weight matrices is resolved, which provides more room for flexible optimization of system performance. Hence, the LQOC problem as a whole may be indefinite, and the conditions of Theorem 2 are satisfied. Both the random disturbance and the discrete-time Markov chain are considered in the modeling, and the simulation results under 10 independent disturbance paths, together with their average values, are presented. This allows the model to better reflect the uncertainty and dynamic changes of the real world. Few existing works deal with these two factors at the same time. The initial condition is given by . The simulation results are given in Figures 1–6.

Figure 1.

Ten stochastic disturbance paths.

Figure 2.

Markovian switching modes.

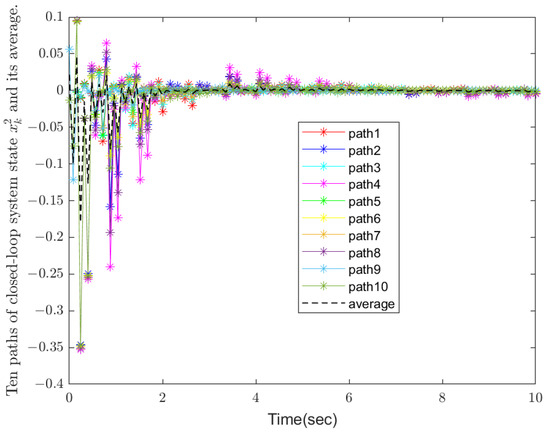

Figure 3.

State trajectories of the closed-loop model.

Figure 4.

State trajectories of the closed-loop model.

Figure 5.

State trajectories of the closed-loop model.

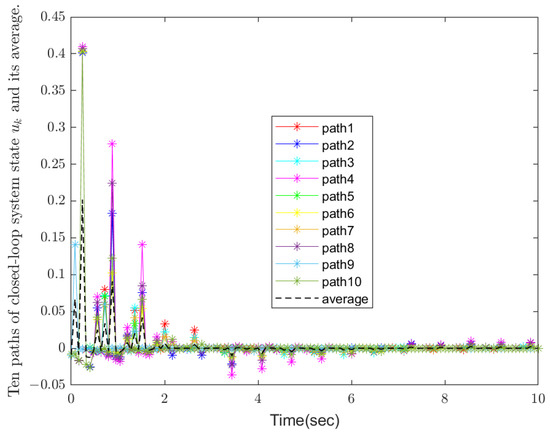

Figure 6.

System control signal .

Figures 1 and 2 show ten paths of the stochastic disturbance and the Markovian switching modes, respectively. The ten disturbance paths are generated independently, which makes the results more representative of real-world scenarios. Figures 3–5 show the state trajectories , , and of the closed-loop system along the ten disturbance paths, together with their averages over the ten samples. Each system state gradually settles and tends to stabilize within a finite time. Figure 6 shows the control signals , which indicates that the designed controller is effective for the system. The optimal output feedback controller is calculated by Theorem 2. The figures show that the closed-loop system reaches and remains stable within under each of the 10 independently generated stochastic disturbances, which supports the effectiveness of the proposed method.
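The averaging procedure over ten disturbance paths can be sketched as follows. This is not the simulation of the paper: it assumes a scalar closed-loop model x(k+1) = (f + g·w(k))·x(k) with standard Gaussian white noise w(k), and the names `simulate_paths`, `f`, `g` and the numerical values are chosen only for illustration.

```python
import random

def simulate_paths(f, g, x0, steps, n_paths=10, seed=0):
    """Simulate n_paths independent trajectories and their sample average."""
    rng = random.Random(seed)          # fixed seed for reproducibility
    paths = []
    for _ in range(n_paths):
        x, path = x0, [x0]
        for _ in range(steps):
            w = rng.gauss(0.0, 1.0)    # one-dimensional white noise sample
            x = (f + g * w) * x
            path.append(x)
        paths.append(path)
    # Pointwise average over the sample paths at each time step.
    average = [sum(p[k] for p in paths) / n_paths for k in range(steps + 1)]
    return paths, average

# Hypothetical closed-loop parameters with f**2 + g**2 < 1.
paths, average = simulate_paths(f=0.6, g=0.3, x0=1.0, steps=30)
```

With only ten samples the average is itself noisy, so it indicates the trend of the trajectories rather than an accurate estimate of the expected state.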

5. Conclusions

In conclusion, this study addressed the indefinite LQOC problem for discrete-time stochastic singular systems driven by stochastic disturbances and discrete-time Markov chains. The problem was transformed equivalently, and conditions for solvability were proposed, yielding optimal control strategies that ensure system regularity and causality. Definiteness conditions were established, under which the GMJARE has a unique solution. Optimal controls and nonnegative cost values were obtained, and system admissibility was ensured. The results were extended from the finite horizon to the infinite horizon. An output feedback controller was designed using the LMI method and validated through a numerical example. Future research could explore extensions to more complex system dynamics, investigate robustness to uncertainties, and consider practical implementation aspects for real-world applications. Additionally, incorporating machine learning techniques for adaptive control in stochastic environments could further enhance system performance and robustness.

Author Contributions

Conceptualization, J.X. and B.Z.; methodology, J.X., B.Z. and T.Z.; software, J.X., B.Z. and X.K.; validation, J.X., B.Z. and T.Z.; formal analysis, J.X., B.Z. and T.Z.; investigation, J.X., B.Z. and T.Z.; resources, J.X., B.Z. and X.K.; writing—original draft preparation, J.X. and B.Z.; writing—review and editing, J.X., B.Z., T.Z. and X.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Qingdao Municipality under Grant 23-2-1-156-zyyd-jch.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Dai, L.Y. Singular Control Systems, Lecture Notes in Control and Information Sciences; Springer: Berlin, Germany, 1989.

- Lam, J.; Xu, S.Y. Robust Control and Filtering of Singular Systems; Springer: Berlin, Germany, 2006.

- Xia, Y.Q.; Zhang, J.H.; Boukas, E.K. Control for discrete singular hybrid systems. Automatica 2008, 44, 2635–2641.

- Yang, H.L.; Yang, L.J.; Ivanov, I.G. Reachable set estimation of discrete singular systems with time-varying delays and bounded peak inputs. Mathematics 2025, 13, 79.

- Chang, X.H.; Wang, X.Y.; Hou, L.W. New LMI approach to H∞ control of discrete-time singular systems. Appl. Math. Comput. 2024, 474, 128703.

- Xu, Y.C.; Zhang, Y.J.; Zhang, J.F. Singularity-free adaptive control of discrete-time linear systems without prior knowledge of the high-frequency gain. Automatica 2024, 165, 111657.

- Wang, X.Y.; Zhang, Y.Q.; Yan, J.J.; Shi, Y. Event-triggered finite-time state estimation for discrete singular Markovian systems with quantization. J. Frankl. Inst. 2023, 360, 14430–14442.

- Han, Y.Y.; Zhou, S.S. Novel criteria of stochastic stability for discrete-time Markovian jump singular systems via supermartingale approach. IEEE Trans. Autom. Control 2022, 67, 6940–6947.

- Zhang, L.; Zong, G.D.; Zhao, X.D.; Zhao, N.; Sharaf, S. Reachable Set Control for Discrete-Time Takagi-Sugeno Fuzzy Singular Markov Jump System. IEEE Trans. Fuzzy Syst. 2023, 31, 3173–3184.

- Kao, Y.G.; Han, Y.Q.; Zhu, Y.Z.; Shu, Z. Stability analysis of delayed discrete singular piecewise homogeneous Markovian jump systems with unknown transition probabilities via sliding-mode approach. IEEE Trans. Autom. Control 2024, 69, 315–322.

- Zhang, Q.L.; Zhang, J.Y.; Wang, Y.Y. Sliding-mode control for singular Markovian jump systems with Brownian motion based on stochastic sliding mode surface. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 494–505.

- Zhou, H.; Hu, Y.Z.; Zhao, J.J. Numerical method for singular drift stochastic differential equation driven by fractional Brownian motion. J. Comput. Appl. Math. 2024, 447, 115902.

- Wang, S.Q.; Lian, G.G.; Cheng, C.; Chen, H.T. A novel method of rolling bearings fault diagnosis based on singular spectrum decomposition and optimized stochastic configuration network. Neurocomputing 2024, 574, 127278.

- Kalman, R.E. Contributions to the theory of optimal control. Bol. Soc. Mat. Mexicana 1960, 5, 102–119.

- Elsisi, M.; Zaini, H.G.; Mahmoud, K.; Bergies, S.; Ghoneim, S.S.M. Improvement of trajectory tracking by robot manipulator based on a new co-operative optimization algorithm. Mathematics 2021, 9, 3231.

- Messerer, F.; Baumgartner, K.; Nurkanovic, A.; Diehl, M. Approximate propagation of normal distributions for stochastic optimal control of nonsmooth systems. Nonlinear Anal. Hybrid Syst. 2024, 53, 101499.

- Ferrante, A.; Ntogramatzidis, L. The generalized continuous algebraic Riccati equation and impulse-free continuous-time LQ optimal control. Automatica 2014, 50, 1176–1180.

- Ntogramatzidis, L.; Ferrante, A. On the solution of the Riccati differential equation arising from the LQ optimal control problem. Syst. Control Lett. 2010, 59, 114–121.

- Chávez-Fuentes, J.R.; Costa, E.F.; Terra, M.H.; Rocha, K.D.T. The linear quadratic optimal control problem for discrete-time Markov jump linear singular systems. Automatica 2021, 127, 109506.

- Li, Y.C.; Ma, S.P. Finite and infinite horizon indefinite linear quadratic optimal control for discrete-time singular Markov jump systems. J. Frankl. Inst. 2021, 358, 8993–9022.

- Lu, Q.Y.; Zhu, Y.G. LQ optimal control of fractional-order discrete-time uncertain systems. Chaos Solitons Fractals 2021, 147, 110984.

- Lu, Q.Y.; Zhu, Y.G.; Li, B. Necessary optimality conditions of fractional-order discrete uncertain optimal control problems. Eur. J. Control 2023, 69, 100723.

- Xu, M.Z.; Tang, M.N.; Meng, Q.X. Forward-backward stochastic evolution equations in infinite dimensions and application to LQ optimal control problems. Syst. Control Lett. 2024, 185, 105748.

- Ye, L.W.; Zhao, Z.G.; Liu, F. Stochastic LQ optimal control for Markov jumping systems with multiplicative noise using reinforcement learning. Syst. Control Lett. 2024, 186, 105765.

- Wang, G.L.; Zhang, Q.L.; Yan, X.G. Analysis and Design of Singular Markovian Jump Systems; Springer: Cham, Switzerland, 2015.

- Liu, X.K.; Li, Y.; Zhang, W.H. Stochastic linear quadratic optimal control with constraint for discrete-time systems. Appl. Math. Comput. 2014, 228, 264–270.

- Rami, M.A.; Chen, X.; Zhou, X.Y. Discrete-time indefinite LQ control with state and control dependent noises. J. Glob. Optim. 2002, 23, 245–265.

- Zhu, L.M.; Modares, H.; Peen, G.O.; Lewis, F.L.; Yue, B.Z. Adaptive suboptimal output-feedback control for linear systems using integral reinforcement learning. IEEE Trans. Control Syst. Technol. 2015, 23, 264–273.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).