Abstract

In this article, we propose a variant Poisson item count technique (VPICT) that explicitly accounts for respondent non-compliance in surveys involving sensitive questions. Unlike the existing Poisson item count technique (PICT), the proposed VPICT (i) replaces the sensitive item with a triangular model that combines the sensitive and an additional non-sensitive item; (ii) utilizes data from both control and treatment groups to estimate the prevalence of the sensitive characteristic, thereby improving the accuracy and efficiency of parameter estimation; and (iii) limits the occurrence of the floor effect to cases where the respondent neither possesses the sensitive characteristic nor meets the non-sensitive condition, thus protecting a subset of respondents from privacy breaches. The method introduces a mechanism to estimate the rate of non-compliance alongside the sensitive trait, enhancing overall estimation reliability. We present the complete methodological framework, including survey design, parameter estimation via the EM algorithm, and hypothesis testing procedures. Extensive simulation studies are conducted to evaluate performance under various settings. The practical utility of the proposed approach is demonstrated through an application to real-world survey data on illegal drug use among high school students.

Keywords:

Poisson item count technique; non-compliance; EM algorithm; hypothesis test; stochastic representation

MSC:

62K99

1. Introduction

Accurate data collection and analysis in surveys involving sensitive or stigmatizing behaviors remain a persistent challenge in social science, public health, and behavioral research. When participants are asked directly about private or potentially incriminating information—such as illicit behavior, socially undesirable actions, or taboo personal experiences—they often respond in ways that align with perceived social norms rather than disclosing the truth. This response bias seriously compromises the validity of prevalence estimates and can distort the results of any subsequent analysis. For instance, van der Heijden et al. [1] reported that only 19% of respondents who had committed welfare or unemployment benefit fraud admitted doing so when asked directly, despite confidentiality assurances. Such reluctance to reveal sensitive truths reflects the deeply rooted social pressures and privacy concerns that respondents face, leading to substantial underreporting and biased findings.

To mitigate this issue and enhance the reliability of survey responses, a number of indirect questioning techniques have been developed. Among the most established is the randomized response (RR) technique, introduced by Warner [2]. This method involves using a randomizing device, such as a coin flip or a random number generator, to determine how a respondent should answer a question—either truthfully or following a pre-specified rule—thereby introducing a controlled level of uncertainty that protects individual privacy while still allowing for unbiased population-level estimates. Extensions and refinements of the RR method have been widely explored in the literature, offering sophisticated mechanisms for maintaining anonymity while improving response accuracy.

In more recent years, non-randomized response (NRR) techniques have gained attention as viable alternatives that avoid the use of randomizing devices altogether. Instead, these designs incorporate a non-sensitive question alongside a sensitive one in a strategic manner, such that the response to the composite query does not reveal which component triggered the answer. This innovation allows for the preservation of respondent confidentiality while reducing administrative complexity. Yu et al. [3] provide an excellent overview of NRR techniques, highlighting their advantages and limitations in practical settings.

Another influential method in this domain is the item count technique (ICT) [4], also known as the unmatched count technique or block total response method [5]. Under ICT, participants are randomly assigned to either a control group or a treatment group. Both groups receive a list of innocuous yes/no questions, but the treatment group’s list includes one additional sensitive question. Rather than answering each question individually, respondents report only the total number of “yes” answers. The difference in mean totals between the two groups is then used to estimate the prevalence of the sensitive attribute. ICT offers several advantages: it is simple to administer, easy to explain to respondents, and effectively conceals individual responses to sensitive items.

Despite its intuitive appeal and growing popularity, ICT has several important drawbacks [6]. One of the most significant is the presence of ceiling and floor effects. If a respondent in the treatment group answers “yes” to all questions, they can be identified as possessing the sensitive attribute, compromising anonymity. Similarly, if a respondent in the treatment group answers “no” to all questions, it can be inferred that they do not possess the sensitive attribute. These vulnerabilities can discourage respondents from answering truthfully and may even lead to non-compliance, particularly among those with the sensitive trait who fear disclosure. Additionally, ICT lacks standard guidelines for determining the number and composition of non-sensitive items, and it often assumes independence and known prevalence of these items, assumptions that may not hold in real-world applications.

Moreover, like many other indirect questioning techniques, ICT and its variants generally assume that respondents comply fully with the survey design and answer honestly. This so-called “no-liar” assumption is often unrealistic. Even under RR conditions, it was reported that fewer than 52% of those who admitted to fraud when protected by randomization were truthful, indicating a significant level of residual dishonesty [1]. Wu and Tang [7] showed that failure to model non-compliance in NRR contexts could lead to substantial underestimation of the true prevalence of sensitive behaviors, such as premarital sex. Recognizing the seriousness of this issue, a growing body of research has attempted to model deliberate underreporting as a form of self-protective behavior. For example, Böckenholt and van der Heijden [8] proposed a mixture model that accounts for non-cooperative respondents in RR surveys. Similarly, Cruyff et al. [9] introduced a log-linear randomized response model capable of measuring self-protective tendencies. In the NRR setting, Wu and Tang [7] incorporated an explicit non-compliance parameter to improve estimation accuracy.

The Poisson item count technique (denoted as PICT) was recently introduced as a variant of ICT to address the ceiling effect by assuming that the total number of “yes” answers follows a Poisson distribution [10]. This refinement enhances privacy protection, particularly for respondents with the sensitive attribute. However, the PICT still does not account for the floor effect and continues to rely on the assumption that all respondents comply with the survey instructions. In this setting, a response of zero can unambiguously identify a participant as not having the sensitive trait, which may prompt those who do possess the attribute to falsely report a zero in order to avoid exposure. Consequently, deliberate underreporting persists, and the resulting parameter estimates are biased and unreliable.

To address these challenges, we propose a novel method called the variant Poisson item count technique (denoted as VPICT). Our method extends the existing PICT by explicitly modeling respondent non-compliance. Specifically, we assume that individuals with the sensitive characteristic may choose to falsely answer 0 to the question involving the sensitive item, thereby denying their attribute, due to feelings of guilt, fear, or a desire to conform to social expectations. We introduce a non-compliance parameter, denoted by $\omega$, which represents the probability that a respondent with the sensitive trait will misreport. Conversely, respondents who do not possess the sensitive attribute are assumed to comply with the survey protocol and respond truthfully. This modeling framework allows for more accurate estimation of the true prevalence of sensitive attributes and provides insight into the extent of self-protective behavior among respondents.

The structure of this paper is as follows. In Section 2, we present the survey design and derive the maximum likelihood estimators for the model parameters. Section 3 develops hypothesis tests for both the target prevalence and the non-compliance rate. Section 4 reports the results of simulation studies designed to evaluate the statistical performance of our proposed method under various scenarios. In Section 5, we apply the VPICT to real-world survey data concerning illegal drug use among high school students to illustrate its practical utility. Finally, Section 6 offers concluding remarks and discusses potential avenues for further research.

2. Variant Poisson Item Count Techniques with Non-Compliance

In this section, we propose a variant of the existing Poisson ICT (i.e., VPICT) that allows the target proportion to be estimated in the presence of non-compliance. Both point and confidence interval estimates are developed.

2.1. Survey Design

Assume that the sensitive question of interest is binary (e.g., whether the respondent has used illegal drugs in the past 30 days), and our objective is to estimate the prevalence of this sensitive characteristic in the presence of non-compliance. To this end, let $n_1$ and $n_2$ respondents be randomly assigned to the first and second groups, respectively, where $n = n_1 + n_2$. All $n$ respondents are instructed to answer the following non-sensitive question:

(1) How many times did you travel abroad last year?

In addition, respondents in the first group ($n_1$ individuals) are required to answer:

(2) If you were born between January and March and you have never used illegal drugs in the past 30 days (i.e., you do not possess the sensitive characteristic), answer 0; otherwise, answer 1.

Similarly, respondents in the second group ($n_2$ individuals) are instructed:

(2) If you were born between April and December and you have never used illegal drugs in the past 30 days, answer 0; otherwise, answer 1.

Finally, all respondents are asked to report only the sum of their answers to questions (1) and (2). For example, a respondent born in January who has never used illegal drugs in the past 30 days and traveled abroad four times last year would report a total of 4 in the first group and 5 in the second group.

In this design, the response to the first question (i.e., the number of trips abroad) is modeled as a count variable $X$, assumed to follow a Poisson distribution with parameter $\lambda$. In the second question, the non-sensitive binary variable (birth month), denoted by $W$, is assumed to be independent of the sensitive binary variable $Z$ (whether the respondent has used illegal drugs in the past 30 days). Specifically, $W = 1$ indicates birth between April and December, and $W = 0$ otherwise. The probability $p = \Pr(W = 1)$ is assumed known.

Let $Z$ denote the sensitive characteristic, where $Z = 1$ if the respondent has used illegal drugs in the past 30 days and $Z = 0$ otherwise. We assume $Z \sim \text{Bernoulli}(\pi)$, where $\pi$ is the unknown parameter of interest. However, respondents with the sensitive characteristic may, with probability $\omega$, dishonestly answer 0 to the second question in order to conceal the sensitive behavior and project a socially desirable image.

To account for this non-compliance, we introduce a binary variable $U$, where $U = 1$ indicates non-compliance and $U = 0$ otherwise. Let $V_i$ denote the response to the second question in group $i$, where $i = 1$ corresponds to the first group and $i = 2$ to the second group. Note that $U$ and $Z$ are not independent. Specifically, $\Pr(U = 1 \mid Z = 1) = \omega$ and $\Pr(U = 1 \mid Z = 0) = 0$. Here, we assume that a respondent will always (i) comply with the design if (s)he does not possess the sensitive characteristic (i.e., $\Pr(U = 0 \mid Z = 0) = 1$), and (ii) choose the safe answer if (s)he does not comply with the design (i.e., $V_i = 0$ whenever $U = 1$, $i = 1, 2$). Therefore, a respondent in the first group who was born in January, has used illegal drugs in the past 30 days, and traveled abroad four times last year would report a total of 5 if (s)he complied with the instructions, or 4 if (s)he refused to comply.
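To make the response mechanism concrete, the following Python sketch simulates VPICT answers under the assumptions stated above: $X \sim \text{Poisson}(\lambda)$, $W \sim \text{Bernoulli}(p)$, $Z \sim \text{Bernoulli}(\pi)$, and a respondent with $Z = 1$ refuses to comply with probability $\omega$. The function names `second_answer`, `poisson_draw`, and `simulate_vpict` are hypothetical and only illustrate the design; this is not the authors' code (the simulations in Section 4 were run in R).

```python
import math
import random

# Hypothetical helpers illustrating the VPICT design; not the authors' implementation.


def second_answer(group, w, z, u):
    """Answer to question (2).

    Group 1 answers 0 iff born January-March (w == 0) and no drug use (z == 0);
    group 2 answers 0 iff born April-December (w == 1) and z == 0.
    A non-complier (u == 1) always gives the safe answer 0.
    """
    if u == 1:
        return 0
    if group == 1:
        return 0 if (w == 0 and z == 0) else 1
    return 0 if (w == 1 and z == 0) else 1


def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for small means such as lam = 2."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate_vpict(n1, n2, lam, pi, omega, p, seed=0):
    """Return the reported totals X + V_i for groups 1 and 2."""
    rng = random.Random(seed)
    samples = []
    for group, size in ((1, n1), (2, n2)):
        totals = []
        for _ in range(size):
            x = poisson_draw(lam, rng)                 # trips abroad
            w = 1 if rng.random() < p else 0           # W = 1: born April-December
            z = 1 if rng.random() < pi else 0          # sensitive characteristic
            u = 1 if (z == 1 and rng.random() < omega) else 0  # only Z = 1 may refuse
            totals.append(x + second_answer(group, w, z, u))
        samples.append(totals)
    return samples[0], samples[1]


# Worked examples from the text: a January-born respondent with four trips abroad.
print(4 + second_answer(1, w=0, z=0, u=0))  # group 1, no drug use -> 4
print(4 + second_answer(2, w=0, z=0, u=0))  # group 2, no drug use -> 5
print(4 + second_answer(1, w=0, z=1, u=0))  # group 1, drug use, complies -> 5
print(4 + second_answer(1, w=0, z=1, u=1))  # group 1, drug use, refuses -> 4
```

The four printed totals reproduce the worked examples given for the two groups, which is a quick check that the answer rule matches the wording of question (2).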

It is noteworthy that Wu et al. [11] proposed another PICT that accounts for non-compliance (denoted as PICTNC). However, our proposed VPICT differs from PICTNC (and also from PICT) in three key aspects. First, while PICTNC incorporates only the Poisson count question (i.e., $X$) and the sensitive question (i.e., $Z$), the VPICT additionally introduces a non-sensitive question (i.e., $W$ or its complement), which is combined with the sensitive question. This modification is in effect a combination of the PICT and the triangular model in [3]: the proposed method retains the PICT's way of asking respondents about the non-sensitive Poisson item and adopts the triangular model's idea of combining sensitive and non-sensitive items. Second, in PICTNC, the control group is asked to respond only to the Poisson count question ($X$), whereas the treatment group is required to report the sum of the Poisson count and the sensitive question ($X + Z$). Consequently, the control group contributes only to the estimation of the Poisson parameter ($\lambda$), resulting in a less efficient estimate of the prevalence of the sensitive characteristic ($\pi$). In contrast, VPICT uses the sensitive question in both the first and second groups, allowing all respondents to contribute information to the estimation of $\pi$. This integrated design improves the efficiency of the estimator of $\pi$. Third, under PICT and PICTNC, if all of a treatment-group respondent's truthful answers are negative, the final answer is zero, which reveals that the respondent does not possess the sensitive characteristic (i.e., the floor effect). Under VPICT, the floor effect occurs only when the respondent does not have the sensitive characteristic AND fulfils the non-sensitive condition. As a result, a portion of the respondents who do not possess the sensitive characteristic are protected from the floor effect.

2.2. Point Estimate

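Let the observed data in the first and second groups be $\{y_{1j}\}_{j=1}^{n_1}$ and $\{y_{2j}\}_{j=1}^{n_2}$, respectively. It is easy to show that (see Appendix A)

$$Y^{(1)} = X + V_1, \quad V_1 = (1-U)\{1-(1-W)(1-Z)\}; \qquad Y^{(2)} = X + V_2, \quad V_2 = (1-U)\{1-W(1-Z)\}, \tag{1}$$

where $X$ is independent of $V_i \sim \text{Bernoulli}(\tau_i)$, with $\tau_1 = 1 - \pi\omega - (1-\pi)(1-p)$ and $\tau_2 = 1 - \pi\omega - (1-\pi)p$. Hence, $E[Y^{(1)}] = \lambda + \tau_1$ and $E[Y^{(2)}] = \lambda + \tau_2$.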

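We first derive the method of moments estimate (MOME), denoted by $\hat\pi_{\rm MM}$, for $\pi$. For this purpose, we note that (see Appendix A)

$$E[Y^{(1)}] - E[Y^{(2)}] = (2p-1)(1-\pi).$$

Replacing the expectations by the sample means $\bar y_1$ and $\bar y_2$, it is easy to see that, for $p \neq 1/2$,

$$\hat\pi_{\rm MM} = 1 - \frac{\bar y_1 - \bar y_2}{2p-1}.$$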

However, the MOME may occasionally fall outside the admissible interval $[0, 1]$: for $p > 1/2$, this happens if $\bar y_1 - \bar y_2 < 0$ or $\bar y_1 - \bar y_2 > 2p - 1$. It occurs especially often when the true parameter $\pi$ is close to its boundaries (i.e., 0 or 1).
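A minimal Python sketch of the MOME, assuming the observed totals of each group are given as lists; the function name `mome_pi` is hypothetical. Besides the estimate, it reports whether the value lies in $[0, 1]$, which is exactly the failure mode described above. The example value $p = 0.75$ is an assumption (nine of twelve birth months, treating months as equally likely).

```python
def mome_pi(y1, y2, p):
    """Method-of-moments estimate pi_MM = 1 - (ybar1 - ybar2) / (2p - 1).

    Hypothetical helper. Returns the estimate and a flag saying whether it lies in [0, 1].
    """
    if abs(2 * p - 1) < 1e-12:
        raise ValueError("p = 1/2 makes the two groups indistinguishable")
    ybar1 = sum(y1) / len(y1)
    ybar2 = sum(y2) / len(y2)
    estimate = 1 - (ybar1 - ybar2) / (2 * p - 1)
    return estimate, 0.0 <= estimate <= 1.0


# Assumed p = 0.75, so 2p - 1 = 0.5.
print(mome_pi([2, 2, 3, 2, 3], [2, 2, 2, 3, 2], 0.75))  # estimate close to 0.6, admissible
print(mome_pi([1, 1], [2, 2], 0.75))                     # (3.0, False): outside [0, 1]
```

The second call shows how a negative difference of group means pushes the estimate above 1, which motivates the likelihood-based approach that follows.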

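To overcome this issue, we consider the maximum likelihood estimate (MLE). For this purpose, we assume without loss of generality that the first $m_1$ and $m_2$ observations in the first and second groups are 0, respectively, and note that the observed-data likelihood function is

$$L(\lambda,\pi,\omega) = \prod_{i=1}^{2}\left\{\left[(1-\tau_i)e^{-\lambda}\right]^{m_i}\prod_{j=m_i+1}^{n_i}\left[(1-\tau_i)\frac{\lambda^{y_{ij}}e^{-\lambda}}{y_{ij}!} + \tau_i\frac{\lambda^{y_{ij}-1}e^{-\lambda}}{(y_{ij}-1)!}\right]\right\},$$

where $\tau_1 = 1 - \pi\omega - (1-\pi)(1-p)$ and $\tau_2 = 1 - \pi\omega - (1-\pi)p$ are the probabilities that the answer to the second question equals 1 in the first and second groups, respectively.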
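The observed-data log-likelihood can be sketched in Python as follows, assuming $X$ is independent of $V_i$ so that each reported total is a Poisson–Bernoulli convolution. The function names are hypothetical; working on the log scale with `math.lgamma` avoids overflow of factorials for large counts.

```python
import math

# Hypothetical helpers; an illustrative sketch of log L(lambda, pi, omega).


def second_question_probs(pi, omega, p):
    """tau_i = Pr(V_i = 1) in groups 1 and 2."""
    tau1 = 1 - pi * omega - (1 - pi) * (1 - p)
    tau2 = 1 - pi * omega - (1 - pi) * p
    return tau1, tau2


def log_pmf(y, lam, tau):
    """log Pr(X + V = y) with X ~ Poisson(lam) independent of V ~ Bernoulli(tau)."""
    def log_pois(k):
        return k * math.log(lam) - lam - math.lgamma(k + 1)
    if y == 0:
        return math.log(1 - tau) + log_pois(0)  # only X = 0, V = 0 gives y = 0
    return math.log((1 - tau) * math.exp(log_pois(y)) + tau * math.exp(log_pois(y - 1)))


def observed_loglik(lam, pi, omega, y1, y2, p):
    """Observed-data log-likelihood log L(lam, pi, omega)."""
    tau1, tau2 = second_question_probs(pi, omega, p)
    return (sum(log_pmf(y, lam, tau1) for y in y1)
            + sum(log_pmf(y, lam, tau2) for y in y2))
```

Summing `math.exp(log_pmf(y, lam, tau))` over a long enough range of `y` should give 1, a simple check that the mixture weights are correct.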

It is easy to see that there is no closed-form solution for the target parameter $\pi$. Here, we develop an EM algorithm to obtain the MLEs. First, we introduce the missing data $\{x_{1j}\}$ and $\{x_{2j}\}$, the answers to the counting question in the first and second groups, respectively; $\{z_{1j}\}$ and $\{z_{2j}\}$, the answers to the sensitive question in the first and second groups, respectively; and $\{u_{1j}\}$ and $\{u_{2j}\}$, the corresponding non-compliance variables.

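The likelihood function based on the complete data is

$$L_c(\lambda,\pi,\omega) \propto \lambda^{\sum_{i,j}x_{ij}}e^{-n\lambda}\,\pi^{\sum_{i,j}z_{ij}}(1-\pi)^{n-\sum_{i,j}z_{ij}}\,\omega^{\sum_{i,j}u_{ij}}(1-\omega)^{\sum_{i,j}(z_{ij}-u_{ij})},$$

and the log-likelihood is then

$$\ell(\lambda,\pi,\omega) = \Big(\sum_{i,j}x_{ij}\Big)\log\lambda - n\lambda + \Big(\sum_{i,j}z_{ij}\Big)\log\pi + \Big(n-\sum_{i,j}z_{ij}\Big)\log(1-\pi) + \Big(\sum_{i,j}u_{ij}\Big)\log\omega + \sum_{i,j}(z_{ij}-u_{ij})\log(1-\omega) + c,$$

where $c$ is a constant that does not depend on $(\lambda, \pi, \omega)$ and $\sum_{i,j}$ denotes $\sum_{i=1}^{2}\sum_{j=1}^{n_i}$.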

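The M step calculates the MLEs based on the complete-data likelihood and yields (with $\sum_{i,j} = \sum_{i=1}^{2}\sum_{j=1}^{n_i}$)

$$\hat\lambda = \frac{1}{n}\sum_{i,j}x_{ij}, \qquad \hat\pi = \frac{1}{n}\sum_{i,j}z_{ij}, \qquad \hat\omega = \frac{\sum_{i,j}u_{ij}}{\sum_{i,j}z_{ij}}. \tag{3}$$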

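The E step finds the conditional expectations of the missing data given the observed data and gives, for $i = 1, 2$ and $j = 1, \ldots, n_i$,

$$E(X_{ij}\mid y_{ij}) = y_{ij} - \rho_{ij}, \quad E(Z_{ij}\mid y_{ij}) = \rho_{ij}\frac{\pi(1-\omega)}{\tau_i} + (1-\rho_{ij})\frac{\pi\omega}{1-\tau_i}, \quad E(U_{ij}\mid y_{ij}) = (1-\rho_{ij})\frac{\pi\omega}{1-\tau_i}, \tag{4}$$

where $\rho_{ij} = \Pr(V_i = 1 \mid y_{ij}) = y_{ij}\tau_i/[y_{ij}\tau_i + (1-\tau_i)\lambda]$ (so that $\rho_{ij} = 0$ when $y_{ij} = 0$), $\tau_1 = 1 - \pi\omega - (1-\pi)(1-p)$, and $\tau_2 = 1 - \pi\omega - (1-\pi)p$.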

The derivations of the E and M steps are presented in Appendix B. Here, we use the MOME as the initial value and repeat the E and M steps until the estimates converge.
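The E and M steps can be sketched in Python as follows. Assumptions: the data are summarised as frequency tables `{y: count}` (as in Table 3), $p$ is known, the starting values are supplied by the caller (for instance, the MOME for $\pi$), and the loop stops when the largest parameter change falls below `tol`. The function `em_vpict` is a hypothetical illustration of (3) and (4), not the authors' implementation.

```python
def em_vpict(freq1, freq2, p, init, tol=1e-10, max_iter=200_000):
    """Hypothetical EM sketch for (lam, pi, omega).

    freq_i maps an observed total y to its (possibly non-integer) count in group i.
    """
    lam, pi, omega = init
    n = sum(freq1.values()) + sum(freq2.values())
    for _ in range(max_iter):
        tau1 = 1 - pi * omega - (1 - pi) * (1 - p)
        tau2 = 1 - pi * omega - (1 - pi) * p
        sum_x = sum_z = sum_u = 0.0
        for freq, tau in ((freq1, tau1), (freq2, tau2)):
            p_z_given_v1 = pi * (1 - omega) / tau      # Pr(Z = 1 | V = 1)
            p_u_given_v0 = pi * omega / (1 - tau)      # Pr(Z = 1 | V = 0) = Pr(U = 1 | V = 0)
            for y, count in freq.items():
                rho = y * tau / (y * tau + (1 - tau) * lam)  # Pr(V = 1 | Y = y); 0 if y = 0
                sum_x += count * (y - rho)                    # E step for X
                sum_z += count * (rho * p_z_given_v1 + (1 - rho) * p_u_given_v0)
                sum_u += count * (1 - rho) * p_u_given_v0
        # M step: complete-data MLEs evaluated at the expected sufficient statistics.
        new = (sum_x / n, sum_z / n, sum_u / sum_z if sum_z > 0 else 0.0)
        change = max(abs(a - b) for a, b in zip(new, (lam, pi, omega)))
        lam, pi, omega = new
        if change < tol:
            break
    return lam, pi, omega
```

Working with frequencies rather than individual records keeps each iteration proportional to the number of distinct totals. A convenient sanity check is to pass tables proportional to the exact model probabilities for chosen values such as $\lambda = 2$, $\pi = 0.3$, $\omega = 0.3$, $p = 0.75$; the estimates should then return close to those values.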

2.3. Confidence Interval Estimate

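Let $\hat{\boldsymbol\theta} = (\hat\lambda, \hat\pi, \hat\omega)^\top$ be the MLEs of $\boldsymbol\theta = (\lambda, \pi, \omega)^\top$ obtained from the EM algorithm. Conventionally, Wald-type confidence intervals (CIs) of the parameters are constructed using the square roots of the diagonal elements of the inverse Fisher information matrix, evaluated at $\boldsymbol\theta = \hat{\boldsymbol\theta}$. However, both Bernoulli parameters (i.e., $\pi$ and $\omega$) must lie between 0 and 1, and in practice the upper (or lower) bounds of these Wald CIs may exceed 1 (or fall below 0), resulting in invalid CIs. Instead, we employ the bootstrap method to construct a CI for an arbitrary function of $\boldsymbol\theta$, denoted by $\vartheta = h(\boldsymbol\theta)$. Briefly, based on the MLEs $\hat{\boldsymbol\theta} = (\hat\lambda, \hat\pi, \hat\omega)^\top$, we independently generate bootstrap samples $\{y_{1j}^*\}_{j=1}^{n_1}$ and $\{y_{2j}^*\}_{j=1}^{n_2}$ according to the stochastic representation in (1). From these bootstrap samples, we calculate the parameter estimates $\hat{\boldsymbol\theta}^*$ and obtain the bootstrap replication $\hat\vartheta^* = h(\hat{\boldsymbol\theta}^*)$. Independently repeating this process $G$ times, we obtain $G$ replications $\{\hat\vartheta_g^*\}_{g=1}^{G}$. The bootstrap CI for $\vartheta$ can be constructed as

$$[\hat\vartheta_L, \hat\vartheta_U],$$

where $\hat\vartheta_L$ and $\hat\vartheta_U$ are the $100(\alpha/2)$ and $100(1-\alpha/2)$ percentiles of $\{\hat\vartheta_g^*\}_{g=1}^{G}$, respectively.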
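The percentile interval can be sketched generically in Python. Assumptions: `simulate(theta_hat, rng)` regenerates both groups from the stochastic representation and `fit(data)` re-estimates $\boldsymbol\theta$ (for example, by the EM algorithm); both are hypothetical placeholders supplied by the caller. Percentiles are computed by linear interpolation between order statistics, the default rule (type 7) of R's `quantile`, which is one of several reasonable conventions.

```python
import math
import random


def percentile(sorted_vals, q):
    """Linear interpolation between order statistics (R's default, type 7)."""
    h = (len(sorted_vals) - 1) * q
    lo = math.floor(h)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (h - lo) * (sorted_vals[hi] - sorted_vals[lo])


def parametric_bootstrap_ci(theta_hat, simulate, fit, h, G=1000, alpha=0.05, seed=0):
    """Percentile CI [L, U] for vartheta = h(theta) from G parametric bootstrap replications.

    simulate and fit are hypothetical caller-supplied callables.
    """
    rng = random.Random(seed)
    replicates = sorted(h(fit(simulate(theta_hat, rng))) for _ in range(G))
    return percentile(replicates, alpha / 2), percentile(replicates, 1 - alpha / 2)


# Toy usage: a single Bernoulli proportion stands in for the VPICT model.
toy_sim = lambda th, rng: [1 if rng.random() < th else 0 for _ in range(500)]
toy_fit = lambda data: sum(data) / len(data)
print(parametric_bootstrap_ci(0.3, toy_sim, toy_fit, h=lambda t: t, G=200))
```

Because the interval is read off the empirical distribution of $h(\hat{\boldsymbol\theta}^*)$, and every replicate of $\pi$ or $\omega$ produced by the EM algorithm lies in $[0, 1]$, the resulting bounds cannot leave that range, unlike the Wald interval.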

3. Hypothesis Testing

3.1. Hypothesis Testing of Sensitive Proportion

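Suppose that we are interested in testing the hypotheses

$$H_0: \pi = \pi_0 \quad \text{versus} \quad H_1: \pi \neq \pi_0,$$

where $\pi_0$ is a pre-specified number. For this null hypothesis, the likelihood ratio test (LRT) statistic is given by

$$T_1 = -2\left\{\log L(\tilde\lambda, \pi_0, \tilde\omega) - \log L(\hat\lambda, \hat\pi, \hat\omega)\right\},$$

where $(\hat\lambda, \hat\pi, \hat\omega)$ are the unconstrained MLEs calculated by (3) and (4), and $\tilde\lambda$ and $\tilde\omega$ are the constrained MLEs under $H_0$. The following EM algorithm can be employed to find the constrained MLEs $\tilde\lambda$ and $\tilde\omega$ under $H_0$.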

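The M step is to calculate the constrained MLEs:

$$\tilde\lambda = \frac{1}{n}\sum_{i=1}^{2}\sum_{j=1}^{n_i}x_{ij}, \qquad \tilde\omega = \frac{\sum_{i=1}^{2}\sum_{j=1}^{n_i}u_{ij}}{\sum_{i=1}^{2}\sum_{j=1}^{n_i}z_{ij}}.$$

The E step is to find the conditional expectations $E(X_{ij}\mid y_{ij})$, $E(Z_{ij}\mid y_{ij})$, and $E(U_{ij}\mid y_{ij})$, which take the same form as in (4) with $\pi$ fixed at $\pi_0$.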

Here, the moment estimate is used as the initial value, and we repeat the E and M steps until the estimates converge. Let $\chi^2_1$ denote a chi-square random variable with one degree of freedom and $t_1$ the observed value of $T_1$. Under the null hypothesis, $T_1$ is asymptotically chi-squared distributed with one degree of freedom. Hence, $H_0$ is rejected if the p-value $p_1 = \Pr(\chi^2_1 \geq t_1)$ is less than the prespecified significance level $\alpha$.
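Given the two maximised log-likelihoods, the statistic $T_1$ and its p-value take only a few lines of Python. The sketch below uses the identity $\Pr(\chi^2_1 \geq t) = \operatorname{erfc}(\sqrt{t/2})$, so no statistics library is needed; the function names are hypothetical.

```python
import math


def chi2_1_sf(t):
    """Pr(chi2_1 >= t) via the identity Pr(chi2_1 >= t) = erfc(sqrt(t / 2))."""
    return math.erfc(math.sqrt(t / 2.0)) if t > 0 else 1.0


def lrt_pi(loglik_null, loglik_full):
    """Hypothetical helper: T_1 = -2 {log L(constrained) - log L(unconstrained)} and its p-value."""
    t1 = max(0.0, -2.0 * (loglik_null - loglik_full))  # guard tiny negative values from EM
    return t1, chi2_1_sf(t1)


print(lrt_pi(-1002.1, -1000.0))  # T_1 is about 4.2, p-value a little above 0.04
```

The `max(0.0, ...)` guard is a design choice: when two EM runs stop at slightly different tolerances, the constrained log-likelihood can numerically exceed the unconstrained one by a tiny amount.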

3.2. Hypothesis Testing of Non-Compliance Parameter

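To check whether the respondents comply with the design, we test whether the non-compliance parameter $\omega$ is 0. That is, we consider the hypotheses

$$H_0: \omega = 0 \quad \text{versus} \quad H_1: \omega > 0.$$

Again, we consider the likelihood ratio test statistic

$$T_2 = -2\left\{\log L(\tilde\lambda, \tilde\pi, 0) - \log L(\hat\lambda, \hat\pi, \hat\omega)\right\},$$

where $\tilde\lambda$ and $\tilde\pi$ are the constrained MLEs under $H_0$, and $\hat\lambda$, $\hat\pi$, and $\hat\omega$ are the unconstrained MLEs.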

It is noteworthy that this null hypothesis places the parameter of interest (i.e., $\omega$) on the boundary of the parameter space (i.e., 0). As pointed out by Self and Liang [12] and Feng and McCulloch [13], the standard asymptotic result that $T_2$ is chi-squared distributed under $H_0$ may not be appropriate. Instead, it has been suggested that the appropriate reference null distribution for $T_2$ is a mixture of chi-square distributions. Specifically, the appropriate reference distribution under $H_0$ is an equal mixture of $\chi^2_0$ (i.e., a point mass at zero) and $\chi^2_1$, with the corresponding p-value given by [14,15]

$$p_2 = 0.5\Pr(\chi^2_1 \geq t_2), \quad t_2 > 0,$$

where $t_2$ is the observed value of $T_2$. Again, $H_0$ is rejected if $p_2$ is less than the prespecified significance level $\alpha$.
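The boundary-corrected p-value is a one-line change from the usual $\chi^2_1$ p-value. A minimal Python sketch (hypothetical function name), again using $\Pr(\chi^2_1 \geq t) = \operatorname{erfc}(\sqrt{t/2})$:

```python
import math


def boundary_lrt_pvalue(t2):
    """Hypothetical helper: p-value of T_2 under the 50:50 mixture of chi2_0 and chi2_1."""
    if t2 <= 0:
        return 1.0                                  # Pr(T_2 >= 0) = 1 under the mixture
    return 0.5 * math.erfc(math.sqrt(t2 / 2.0))     # 0.5 * Pr(chi2_1 >= t2)


print(boundary_lrt_pvalue(2.705543454095404))  # about 0.05 (the naive chi2_1 p-value is about 0.10)
```

For any $t_2 > 0$ the mixture halves the naive $\chi^2_1$ p-value, so ignoring the boundary makes the test conservative.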

4. Simulation Studies

In this section, all the simulations are performed using R Version 4.4.2.

4.1. Parameter and Confidence Interval Estimates

To evaluate the performance of the proposed point and confidence interval estimates, we consider two non-compliance cases: $\omega = 0.3$ and $\omega = 0.4$. In surveys with sensitive questions, the proportion of such individuals is generally small. For both cases, we therefore consider = , , and = 2. For each configuration, we generate the observed data according to (1) with , and calculate the MLEs via the EM algorithm (3) and (4) and the 95% bootstrap CIs with $G = 1000$ replications. This process is independently repeated 1000 times. The average MLEs, together with the average widths and coverage probabilities of the bootstrap CIs, are reported in Table 1 for $\omega = 0.3$ and Table 2 for $\omega = 0.4$.

Table 1.

Point and confidence interval estimates with non-compliance parameter 0.3 and .

Table 2.

Point and confidence interval estimates with non-compliance parameter 0.4 and .

It is interesting to note that our proposed VPICT (with non-compliance) produces satisfactory point and confidence interval estimates when the sensitive proportion $\pi$ lies away from the boundary values (i.e., 0 and 1). When $\pi$ = , for example, larger biases, wider confidence intervals, and lower coverage probabilities are observed. For $\pi$ = , the biases, confidence interval widths, and coverage probabilities all improve substantially. By contrast, estimation of is robust for different values of and .

4.2. Hypothesis Testing

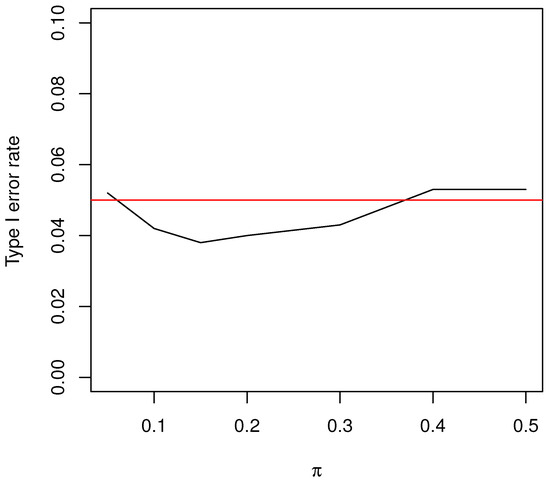

In this section, we conduct simulation studies to assess the performance of the likelihood ratio test for the non-compliance parameter (i.e., $H_0: \omega = 0$ versus $H_1: \omega > 0$). First, we investigate its type I error rate. For this purpose, we set , and . To examine the effect of the value of $\pi$, we set and . For each parameter configuration, we generate the observed data according to Equation (1). The test statistic $T_2$ is then calculated, and the null hypothesis is rejected if $p_2$ is less than the pre-specified significance level $\alpha$. This process is independently repeated 1000 times, and the proportion of rejections is plotted as the simulated type I error rate in Figure 1. The empirical type I error rates fluctuate around the pre-specified nominal level $\alpha$.

Figure 1.

The type I error rates of the LRT for testing $H_0: \omega = 0$ against $H_1: \omega > 0$.

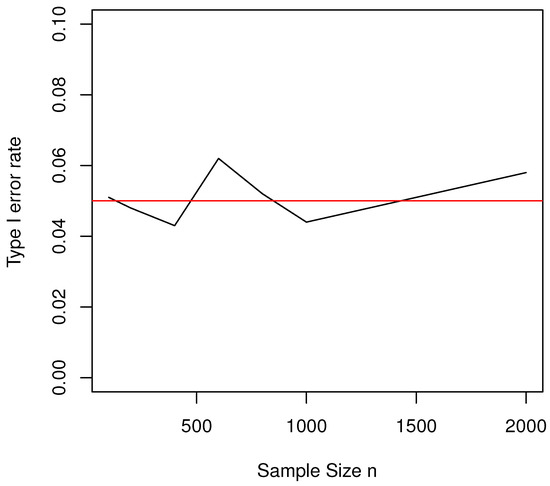

To investigate the influence of sample size on the type I error rate, we set , and . As in the previous simulation study, the empirical type I error rates are computed from 1000 repetitions for each configuration. The results are plotted in Figure 2. Our proposed test appears robust across sample sizes $n$, even for small sample sizes (e.g., ). The simulation results in Figures 1 and 2 show that our proposed likelihood ratio test is valid and behaves satisfactorily, in the sense that its empirical type I error rate is close to the nominal level (i.e., $\alpha$ = ) for different sensitive proportions (i.e., $\pi$) and sample sizes (i.e., $n$).

Figure 2.

The type I error rates of the LRT for testing $H_0: \omega = 0$ against $H_1: \omega > 0$.

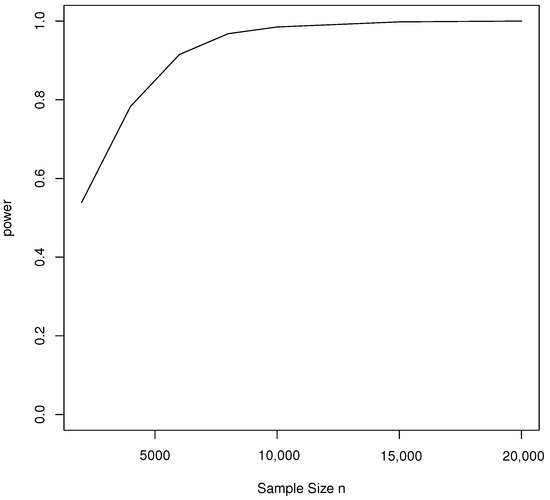

Finally, we investigate the power of the proposed test for the non-compliance parameter when , and . Here, we set the true non-compliance parameter at and present the simulated power based on 1000 repetitions in Figure 3. Figure 3 shows that our proposed VPICT requires substantially larger sample sizes to detect the non-compliance effect with acceptable statistical power (e.g., ). This is expected: randomized and non-randomized response techniques are designed to improve the validity of survey responses by reducing social desirability bias, but this often comes at the cost of reduced statistical power compared with direct questioning. Taken together, Figures 1–3 show that moderate sample sizes (e.g., ) are sufficient to control the type I error rate at the pre-specified nominal level (e.g., 0.05), but substantially larger sample sizes (e.g., 5000) are required to achieve desirable statistical power (e.g., 0.8).

Figure 3.

The power of the LRT for testing $H_0: \omega = 0$ against $H_1: \omega > 0$.

5. Real Data Analysis

Substance use—including tobacco, alcohol, and illicit drugs—has long been linked to a range of health and social problems worldwide. Adolescents are particularly vulnerable, often engaging in risky behaviors such as smoking, drinking, and drug use. Research indicates that many begin experimenting with these substances at an early age. In the U.S., adolescent lifetime use of illicit drugs declined steadily between 2000 and 2010, then plateaued from 2019 onward. Marijuana remains the most commonly used illicit substance among youth due to its accessibility and increasing social acceptance. Between 2008 and 2013, past 30-day marijuana use rose across all grade levels: from 5.8% to 7% in 8th graders, 13.8% to 18% in 10th, and 19.4% to 22.7% in 12th. In Europe, lifetime use of drugs such as cocaine and ecstasy rose from 12% in 1995 to 20% in 2011, then gradually declined. Marijuana use peaked in 2011 (7.6%) and has since stabilized. Similar downward trends are observed in Asia: in Hong Kong, under-21 drug use dropped from 17% in 2011 to 9% in 2020, while Macau reported a 1.9% past-month use among students (2014–2018). In mainland China, 8% of registered drug users in 2016 were under 25, though adolescent-specific data were lacking [16].

To illustrate our proposed methods, we conducted two small-scale surveys on drug use among senior high school students aged 16–18 in an urban city in Guangdong Province, Mainland China. We applied both direct questioning and our proposed variant Poisson item count technique with non-compliance. In the first survey, 200 interviewees were directly asked whether they had used illegal drugs in the past 30 days. A total of 175 of them responded, and only one indicated illegal drug use. Thus, the estimate of the sensitive proportion of illegal drug use is $1/175 \approx 0.57\%$. Although all respondents were instructed to complete an anonymous questionnaire, a non-response rate of $25/200 = 12.5\%$ was observed. This result is not surprising, as illegal drug use is a sensitive topic among Asian families. Compared with the aforementioned prevalences of illegal drug use among youth in Europe, Macau, and Hong Kong, the prevalence of 0.57% obtained from this direct question survey appears to be an underestimate. It is reasonable to believe that social desirability effects (e.g., the norm that high school students should not smoke or take illegal drugs) may bias prevalence estimates of sensitive behaviors (i.e., illegal drug use) and opinions obtained by direct questioning. This motivated us to conduct another survey about illegal drug use among high school students using our proposed variant Poisson item count technique (VPICT).

In the second survey, 400 students participated, with 200 in each of the first and second groups of our proposed VPICT. Briefly, the sensitive variable (i.e., $Z$) represents whether an interviewee had used illegal drugs in the past 30 days ($Z = 1$ if so and $Z = 0$ otherwise), and the non-sensitive variable (i.e., $W$) represents whether the respondent was born between April and December ($W = 1$ if so and $W = 0$ otherwise). The common non-sensitive question for all participants is the number of times they travelled outside their home cities last year. We received 195 and 187 valid questionnaires from the first and second groups, respectively. That is, the non-response rates were only $5/200 = 2.5\%$ and $13/200 = 6.5\%$ in the first and second groups, respectively. These results suggest that VPICT, as an indirect questioning method, substantially reduces the non-response rate. The observed answers and frequencies from Groups 1 and 2 are reported in Table 3. The parameter estimates and bootstrap confidence intervals are shown in Table 4. Compared with the direct questioning survey, the estimated prevalence of illegal drug use among high school students is significantly greater and close to that reported in the aforementioned European studies. This also supports the view that the prevalence estimate from the direct questioning survey is an underestimate. It is interesting to notice that an estimate of of the respondents who took illegal drugs in the past 30 days did not comply with the design and intentionally chose the safe answer. However, these results should be interpreted with caution, since the sample sizes (i.e., 195 and 187) may not be large enough to attain reasonable statistical power according to our power simulation study in Figure 3.

Table 3.

Observed answers and frequencies for Groups 1 and 2.

Table 4.

Parameter estimates and confidence interval.

6. Discussion

In this manuscript, we introduce a novel survey methodology grounded in the Poisson item count technique (PICT) that incorporates respondent non-compliance into the modeling framework. This enhancement aims to improve the credibility and validity of self-reported data on sensitive topics. The proposed model mitigates the floor effect, a common limitation of ICT, by combining the sensitive question with a non-sensitive question, thereby increasing the variability of responses and improving parameter estimation.

To estimate the model parameters, we develop an Expectation-Maximization (EM) algorithm that efficiently computes the maximum likelihood estimates (MLEs). Furthermore, we propose hypothesis testing procedures for the key model parameters, including the sensitive population proportion ($\pi$) and the non-compliance parameter ($\omega$). These tests provide a rigorous statistical framework for making inferences about sensitive behaviors or attributes, which are often underreported in traditional survey methods.

Despite the methodological advances presented, this study does not yet explore the relationship between the sensitive attribute and other relevant covariates. Prior literature has shown that factors such as individual characteristics (e.g., rebelliousness and low religiosity), family background (e.g., low parental education and neglect), and community influences (e.g., association with peers who engage in drug abuse) play significant roles in predicting adolescent drug use globally [17]. However, modeling such associations within the context of indirect questioning methods like ICT remains relatively underexplored. Future research could extend the current framework by integrating covariate information through generalized linear models or other regression-based approaches, thereby enabling more comprehensive analyses of sensitive behaviors.

Another critical consideration in survey design is the determination of an appropriate sample size. Broadly speaking, sample size calculations fall into two categories: hypothesis testing-based approaches and confidence interval-based approaches. In the former, sample size is determined to ensure that a test of hypothesis achieves a pre-specified statistical power at a given significance level. In the latter, the goal is to control the width of the confidence interval for a parameter estimate at a desired confidence level [18]. Notably, sample size formulas derived from hypothesis testing account for both Type I error (significance level) and power (the probability of correctly rejecting a false null hypothesis). In contrast, confidence interval-based methods typically focus on precision without explicit reference to statistical power. In the context of surveys involving sensitive questions, especially those using complex response mechanisms like the Poisson ICT, determining optimal sample sizes becomes particularly challenging due to the additional model complexity and potential for measurement error. As such, the development of robust sample size formulas tailored to both hypothesis testing and confidence interval criteria remains a promising direction for future methodological research. Such advancements would support more efficient survey designs while maintaining the integrity and interpretability of statistical inferences in sensitive data collection contexts.

Author Contributions

Conceptualization, M.-L.T., Q.W., D.H.-S.C. and G.-L.T.; methodology, M.-L.T., Q.W. and G.-L.T.; software, Q.W.; validation, M.-L.T. and Q.W.; formal analysis, Q.W.; investigation, Q.W. and D.H.-S.C.; resources, Q.W. and D.H.-S.C.; data curation, Q.W.; writing—original draft preparation, Q.W. and M.-L.T.; writing—review and editing, M.-L.T. and D.H.-S.C.; visualization, Q.W.; supervision, M.-L.T.; project administration, D.H.-S.C.; funding acquisition, M.-L.T., Q.W. and G.-L.T. All authors have read and agreed to the published version of the manuscript.

Funding

The research of Qin WU was supported by a grant (12171167) from the National Natural Science Foundation of China (NSFC). Man-Lai TANG’s research was supported by a Research Matching Grant (project: 700006 Applications of SAS Viya in Big Data Analytics) from the Research Grants Council of the Hong Kong Special Administrative Region, and the Big Data Intelligence Centre in The Hang Seng University of Hong Kong. The research of Guo-Liang TIAN was fully supported by a grant (12171225) from the National Natural Science Foundation of China (NSFC).

Data Availability Statement

The data used in this manuscript are available in Table 3.

Acknowledgments

The authors thank the associate editor and anonymous referees for their insightful comments that improved the final version of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Derivations of Formulas for E[Y^(i)] (i = 1, 2) and MOME (i.e., )

We recall that (i) a respondent will always comply with the design if (s)he does not possess the sensitive characteristic (i.e., ), (ii) a respondent will always choose the safe answer if (s)he does not comply with the design (i.e., , ), and (iii) represents the response to the second question of a subject in group i, where . Let us first consider .

- (a) When a respondent does not comply with the design (i.e., ), the corresponding value for must be 0 (i.e., ).

- (b) When a respondent complies with the design (i.e., ), = 0 if the respondent satisfies neither the sensitive (i.e., ) nor the non-sensitive () item, and = 1 otherwise. That is, = .

Combining (a) and (b), we have = . Similarly, we have = . Hence, = X + ().

To derive the formulas for E[], we observe that

Therefore, = = . Similarly, = = . Since = X + ( ), we have = + = + . Therefore,

In other words, = 1 − []/(2p − 1). Hence, we have = .
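The moment identity can be checked numerically. The sketch below is a minimal simulation under an assumed version of the design, chosen to be consistent with the (2p − 1) denominator: the Poisson count X has a common mean λ in both groups; a respondent with the sensitive trait (prevalence π) complies with probability 1 − ω and otherwise gives the safe answer 0; a respondent without the trait always complies and answers 1 exactly when a non-sensitive condition W holds, with P(W = 1) = p in Group 1 and 1 − p in Group 2. Under these assumptions E[Y^(i)] = λ + π(1 − ω) + (1 − π)q_i with q_1 = p and q_2 = 1 − p, so E[Y^(1)] − E[Y^(2)] = (1 − π)(2p − 1), and the bracket is read here as that difference of group means. The symbols ω and q_i and all function names are hypothetical and may differ from the paper's notation.

```python
import math
import random


def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def simulate_group(n, pi, omega, lam, q, rng):
    """Draw n responses Y = X + Z under the ASSUMED design (hypothetical notation).

    pi: prevalence of the trait; omega: P(non-compliance | trait);
    lam: Poisson mean of X; q: P(W = 1) for this group's non-sensitive condition.
    """
    ys = []
    for _ in range(n):
        if rng.random() < pi:
            # Trait holders comply with probability 1 - omega; non-compliers answer 0.
            z = 1 if rng.random() >= omega else 0
        else:
            # Non-holders always comply, so Z = 0 only when W does not hold.
            z = 1 if rng.random() < q else 0
        ys.append(poisson_draw(lam, rng) + z)
    return ys


def mome_pi(y1, y2, p):
    """Moment estimate 1 - (mean(Y1) - mean(Y2)) / (2p - 1); requires p != 1/2."""
    if p == 0.5:
        raise ValueError("p must differ from 1/2")
    diff = sum(y1) / len(y1) - sum(y2) / len(y2)
    return 1 - diff / (2 * p - 1)


rng = random.Random(2025)
p = 0.8
y1 = simulate_group(100_000, 0.4, 0.3, 1.0, p, rng)      # Group 1: P(W = 1) = p
y2 = simulate_group(100_000, 0.4, 0.3, 1.0, 1 - p, rng)  # Group 2: P(W = 1) = 1 - p
print(mome_pi(y1, y2, p))  # expected to be near the generating value 0.4
```

Both λ and ω cancel in the difference of means, which is why the moment estimate of π needs neither; in small samples the raw value can fall outside [0, 1] and would then have to be truncated.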

Appendix B. Derivations of E and M Steps for the EM Algorithm

In this appendix, we present the details of the EM algorithm. First, we derive closed-form expressions for the M steps. To this end, we differentiate the log-likelihood function ℓ with respect to each of the three parameters (). Then, we have

Setting these equations equal to zero yields the M steps in (3).

Second, we derive the E steps in (4). To this end, we note that the conditional distribution of is given by:

The conditional expectations of the missing data given the observations in Group 1 are as follows:

Similarly, we can obtain the results for the second group.
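For illustration, the sketch below implements an EM algorithm for a simplified model, taking the three parameters to be (π, ω, λ). It assumes that the Poisson count X has a common mean λ in both groups; that a respondent with the sensitive trait (prevalence π) complies with probability 1 − ω and otherwise gives the safe answer 0; and that a respondent without the trait always complies and answers 1 exactly when a non-sensitive condition W with known probability q_i holds (q_1 = p, q_2 = 1 − p). This parameterization and all names are assumptions and need not match the paper's equations (3) and (4). The latent classes are (trait, complied), (trait, safe answer), (no trait, W = 1) and (no trait, W = 0); the E step uses the Poisson ratio f(y − 1; λ)/f(y; λ) = y/λ, and the M step updates π, ω and λ in closed form.

```python
import math


def poisson_pmf(k, lam):
    """Poisson probability f(k; lam), taken as 0 for k < 0."""
    if k < 0:
        return 0.0
    return math.exp(-lam) * lam ** k / math.factorial(k)


def expected_tally(n, pi, omega, lam, q, y_max=25):
    """Expected frequencies {y: n * P(Y = y)} for one group under the ASSUMED model."""
    theta = pi * (1 - omega) + (1 - pi) * q  # P(Z = 1) in this group
    return {y: n * (theta * poisson_pmf(y - 1, lam) + (1 - theta) * poisson_pmf(y, lam))
            for y in range(y_max + 1)}


def em_fit(tallies, pi=0.5, omega=0.5, lam=1.0, tol=1e-12, max_iter=100_000):
    """EM for (pi, omega, lam); tallies holds one ({y: count}, q) pair per group."""
    n_total = sum(sum(t.values()) for t, _ in tallies)
    for _ in range(max_iter):
        n_a = n_b = sum_x = 0.0
        for tally, q in tallies:
            theta = pi * (1 - omega) + (1 - pi) * q
            for y, count in tally.items():
                # E step: P(Z = 1 | Y = y), using f(y - 1; lam) / f(y; lam) = y / lam.
                r = theta * y / (theta * y + (1 - theta) * lam)
                n_a += count * r * pi * (1 - omega) / theta        # trait, complied
                n_b += count * (1 - r) * pi * omega / (1 - theta)  # trait, safe answer
                sum_x += count * (y - r)                           # E[X | Y = y]
        # M step: closed-form maximizers of the complete-data log-likelihood.
        new = ((n_a + n_b) / n_total, n_b / (n_a + n_b), sum_x / n_total)
        step = max(abs(a - b) for a, b in zip(new, (pi, omega, lam)))
        pi, omega, lam = new
        if step < tol:
            break
    return pi, omega, lam


p = 0.8  # assumed design: P(W = 1) is p in Group 1 and 1 - p in Group 2
data = [(expected_tally(1000, 0.4, 0.3, 1.0, p), p),
        (expected_tally(1000, 0.4, 0.3, 1.0, 1 - p), 1 - p)]
pi_hat, omega_hat, lam_hat = em_fit(data)
print(pi_hat, omega_hat, lam_hat)  # should reproduce (0.4, 0.3, 1.0) up to tolerance
```

Feeding exact expected frequencies is a quick correctness check, since the true parameters are then a fixed point of the iteration; with real data, each group's tally would be collections.Counter(responses). EM can converge slowly when much of the information is missing, hence the generous iteration cap.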

References

- van der Heijden, P.G.M.; van Gils, G.; Bouts, J.; Hox, J.J. A Comparison of Randomized Response, Computer-Assisted Self-Interview, and Face-to-Face Direct Questioning: Eliciting Sensitive Information in the Context of Welfare and Unemployment Benefit. Sociol. Methods Res. 2000, 28, 505–537.

- Warner, S.L. Randomized response: A survey technique for eliminating evasive answer bias. J. Am. Stat. Assoc. 1965, 60, 63–69.

- Yu, J.W.; Tian, G.L.; Tang, M.L. Two new models for survey sampling with sensitive characteristic: Design and analysis. Metrika 2008, 67, 251–263.

- Miller, J.D. A New Survey Technique for Studying Deviant Behavior. Ph.D. Thesis, The George Washington University, Washington, DC, USA, 1984.

- Raghavarao, D.; Federer, W.T. Block total response as an alternative to the randomized response method in surveys. J. R. Stat. Soc. B 1979, 41, 40–45.

- Imširević, E. Sensitive Questions in Surveys: The Ceiling and Floor Effects of the Item Count Technique. Master’s Thesis, Johannes Kepler University Linz, Linz, Austria, 2024.

- Wu, Q.; Tang, M.L. Non-randomized response model for sensitive survey with noncompliance. Stat. Methods Med. Res. 2016, 25, 2827–2839.

- Böckenholt, U.; Van der Heijden, P.G.M. Item randomized-response models for measuring noncompliance: Risk-return perceptions, social influences, and self-protective responses. Psychometrika 2007, 72, 245–262.

- Cruyff, M.J.L.F.; van den Hout, A.; van der Heijden, P.G.M.; Böckenholt, U. Log-Linear Randomized-Response Models Taking Self-Protective Response Behavior Into Account. Sociol. Methods Res. 2007, 36, 266–282.

- Tian, G.L.; Tang, M.L.; Wu, Q.; Liu, Y. Poisson and negative binomial item count techniques for surveys with sensitive question. Stat. Methods Med. Res. 2017, 26, 931–947.

- Wu, Q.; Tang, M.L.; Fung, W.H.; Tian, G.L. Poisson item count techniques with noncompliance. Stat. Med. 2020, 39, 4480–4498.

- Self, S.G.; Liang, K.Y. Asymptotic properties of the maximum likelihood estimators and likelihood ratio tests under nonstandard conditions. J. Am. Stat. Assoc. 1987, 82, 605–610.

- Feng, Z.D.; McCulloch, C.E. Statistical inference using maximum likelihood estimation and the generalized likelihood ratio when the true parameter is on the boundary of the parameter space. Stat. Probab. Lett. 1992, 13, 325–332.

- Jansakul, N.; Hinde, J.P. Score tests for zero-inflated Poisson models. Comput. Stat. Data Anal. 2002, 40, 75–96.

- Joe, H.; Zhu, R. Generalized Poisson distribution: The property of mixture of Poisson and comparison with negative binomial distribution. Biom. J. 2005, 47, 219–229.

- Li, S.D.; Xie, L.; Wu, K.; Lu, J.; Kang, M.; Shen, H. The Changing Patterns and Correlates of Adolescent Substance Use in China’s Special Administrative Region of Macau. Int. J. Environ. Res. Public Health 2022, 19, 7988.

- Nawi, A.M.; Ismail, R.; Ibrahim, F.; Hassan, M.R.A.; Amit, N.; Ibrahim, N.; Shafurdin, N.S.; Manaf, M.R. Risk and protective factors of drug abuse among adolescents: A systematic review. BMC Public Health 2021, 21, 2088.

- Qiu, S.F.; Lei, J.; Poon, W.Y.; Tang, M.L.; Wong, R.S.F.; Tao, J.R. Sample size determination for interval estimation of the prevalence of a sensitive attribute under non-randomized response models. Br. J. Math. Stat. Psychol. 2024, 77, 508–531.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).