1. Introduction

The rapid advancement of information technology has enabled large-scale data collection across sectors such as healthcare, finance, and public administration. While the rise of deep learning has significantly improved data-driven decision-making, most applications remain confined within organizational boundaries. Expanding data sharing with external parties holds great promise for value creation but introduces serious privacy risks.

Many real-world datasets contain Personally Identifiable Information (PII) such as names, genders, ages, and locations [1]. Even after direct identifiers are removed, quasi-identifiers (e.g., age, gender, zip code) can still enable re-identification. For instance, Sweeney demonstrated that anonymized medical records could be cross-linked with voter registrations to re-identify individuals [2], while Narayanan and Shmatikov showed that movie ratings can be deanonymized via statistical matching [3]. These privacy risks have prompted strict legal regulations such as the EU’s General Data Protection Regulation (GDPR) (https://gdpr-info.eu/, accessed on 20 July 2025) and the California Consumer Privacy Act (CCPA) (https://oag.ca.gov/privacy/ccpa, accessed on 20 July 2025).
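To make the linkage risk concrete, the following minimal Python sketch joins a hypothetical de-identified table with a hypothetical public register on shared quasi-identifiers. A record counts as re-identified when its quasi-identifier combination matches exactly one public entry. The function name `link_records`, the field names, and the toy rows are illustrative assumptions, not data from the cited studies.

```python
from collections import defaultdict


def link_records(deidentified, public, quasi_identifiers):
    """Return (name, record) pairs for de-identified records whose
    quasi-identifier values match exactly one entry in the public table."""
    # Index the public table (e.g., a voter list) by its quasi-identifier tuple.
    index = defaultdict(list)
    for person in public:
        index[tuple(person[q] for q in quasi_identifiers)].append(person)

    matches = []
    for record in deidentified:
        candidates = index.get(tuple(record[q] for q in quasi_identifiers), [])
        if len(candidates) == 1:  # a unique match re-identifies the record
            matches.append((candidates[0]["name"], record))
    return matches


# Hypothetical toy data: the medical table has no names, only quasi-identifiers.
medical = [
    {"zip": "02138", "birth": "1950-03-14", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth": "1980-01-01", "sex": "M", "diagnosis": "asthma"},
]
voters = [
    {"name": "Alice", "zip": "02138", "birth": "1950-03-14", "sex": "F"},
    {"name": "Bob", "zip": "02139", "birth": "1980-01-01", "sex": "M"},
    {"name": "Carl", "zip": "02139", "birth": "1980-01-01", "sex": "M"},
]
print(link_records(medical, voters, ("zip", "birth", "sex")))
```

Only the first record is linked. The second survives because its quasi-identifier combination is shared by two voters, which is the intuition that k-anonymity formalizes.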

Traditional anonymization techniques like k-anonymity [2], l-diversity [4], and t-closeness [5] offer some protection but often sacrifice data utility [6]. Synthetic data generation has emerged as an alternative: it produces artificial datasets that retain statistical fidelity without being linked to real individuals. Recent research [7] extended and evaluated synthetic data generation for longitudinal cohorts using statistical methods grounded in k-anonymity and l-diversity, ensuring both privacy protection and analytical reproducibility. Generative Adversarial Networks (GANs) [8] have shown promise for synthetic data generation by training a generator–discriminator pair adversarially [9,10]. However, applying GANs to tabular data introduces several challenges, such as mixed data types, imbalanced variables, and non-Gaussian distributions.
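The three anonymity criteria above can be checked directly on a generalized table. The Python sketch below groups records into equivalence classes by their quasi-identifiers and reports three values: the smallest class size (k); the smallest number of distinct sensitive values per class (distinct l-diversity, the simplest variant); and the largest distance between any class's sensitive-value distribution and the overall distribution (t). For t it assumes a categorical sensitive attribute with equal ground distance. Under that assumption, the Earth Mover's Distance used by t-closeness reduces to half the L1 (total variation) distance. The function names and the toy table are illustrative.

```python
from collections import Counter, defaultdict


def equivalence_classes(rows, quasi_identifiers):
    """Group records that share identical quasi-identifier values."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[q] for q in quasi_identifiers)].append(row)
    return list(groups.values())


def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest equivalence class."""
    return min(len(g) for g in equivalence_classes(rows, quasi_identifiers))


def distinct_l_diversity(rows, quasi_identifiers, sensitive):
    """Fewest distinct sensitive values found in any equivalence class."""
    return min(len({r[sensitive] for r in g})
               for g in equivalence_classes(rows, quasi_identifiers))


def t_closeness(rows, quasi_identifiers, sensitive):
    """Largest total variation distance between a class's sensitive-value
    distribution and the whole table's (EMD under equal ground distance)."""
    overall = Counter(r[sensitive] for r in rows)
    n = len(rows)
    worst = 0.0
    for g in equivalence_classes(rows, quasi_identifiers):
        local = Counter(r[sensitive] for r in g)
        distance = 0.5 * sum(abs(local[v] / len(g) - overall[v] / n) for v in overall)
        worst = max(worst, distance)
    return worst


# Hypothetical generalized table: age band and zip prefix are quasi-identifiers.
table = [
    {"age": "20-29", "zip": "130**", "disease": "flu"},
    {"age": "20-29", "zip": "130**", "disease": "cancer"},
    {"age": "30-39", "zip": "148**", "disease": "flu"},
    {"age": "30-39", "zip": "148**", "disease": "flu"},
]
qi = ("age", "zip")
print(k_anonymity(table, qi), distinct_l_diversity(table, qi, "disease"),
      t_closeness(table, qi, "disease"))  # 2 1 0.25
```

The second class is 2-anonymous but contains only one disease value. Anyone who knows that a person falls into it therefore learns the diagnosis. This homogeneity gap is what l-diversity and t-closeness are designed to close.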

To address these challenges, deep learning-based methods such as Table-GAN [11], CTGAN [12], and TVAE [12] have been proposed. These models incorporate innovations like mode-specific normalization and stratified sampling to better capture tabular structure. CTAB-GAN+ [13] further introduces downstream task alignment and differential privacy, while federated methods like FedEqGAN [14] integrate encryption to address cross-source heterogeneity. For privacy-aware synthesis, DP-CTGAN [15] embeds rigorous (ε, δ)-differential privacy into Conditional Tabular GANs through gradient clipping and Gaussian noise injection, and it preserves higher statistical fidelity and downstream predictive accuracy than prior private baselines.
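The clipping-and-noise mechanism attributed to DP-CTGAN follows the general DP-SGD recipe. Each example's influence is bounded by clipping its gradient to an L2 norm of at most C, and Gaussian noise with standard deviation σC is then added to the summed gradient. The NumPy sketch below shows one such aggregation step in isolation. The parameter names `clip_norm` and `noise_multiplier` are our own, and the privacy accounting that converts σ, the sampling rate, and the number of steps into an (ε, δ) budget is deliberately omitted. It is a generic illustration, not DP-CTGAN's code.

```python
import numpy as np


def dp_aggregate_gradients(per_example_grads, clip_norm, noise_multiplier, rng):
    """One DP-SGD-style aggregation: per-example L2 clipping, summing,
    Gaussian noise with std = noise_multiplier * clip_norm, then averaging."""
    grads = np.asarray(per_example_grads, dtype=float)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    # Scale down only the gradients whose norm exceeds clip_norm.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=grads.shape[1])
    return (clipped.sum(axis=0) + noise) / grads.shape[0]


rng = np.random.default_rng(0)
grads = np.array([[3.0, 4.0], [0.3, 0.4]])  # per-example norms 5.0 and 0.5
# Noise-free: mean of clipped gradients [0.6, 0.8] and [0.3, 0.4], i.e. [0.45, 0.6].
print(dp_aggregate_gradients(grads, clip_norm=1.0, noise_multiplier=0.0, rng=rng))
# Noisy version, as it would be used when training a discriminator privately.
print(dp_aggregate_gradients(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping before adding noise is what makes the noise scale meaningful. Because no single example can move the summed gradient by more than C, noise proportional to C masks any one record's contribution.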

In parallel, tree-based machine learning approaches have also gained traction for tabular data synthesis. ARF [16] uses ensembles of random forests to model local feature distributions, while Generative Trees (GTs) [17] mimic the partition logic of decision trees to synthesize data along learned splits. A recent study by Emam et al. [18] used a sequential synthesis framework based on gradient-boosted decision trees (GBDT) to assess the replicability of health data analyses, showing high consistency between synthetic and real datasets. Although efficient, these models struggle to capture global feature dependencies or to provide substantial privacy protection.

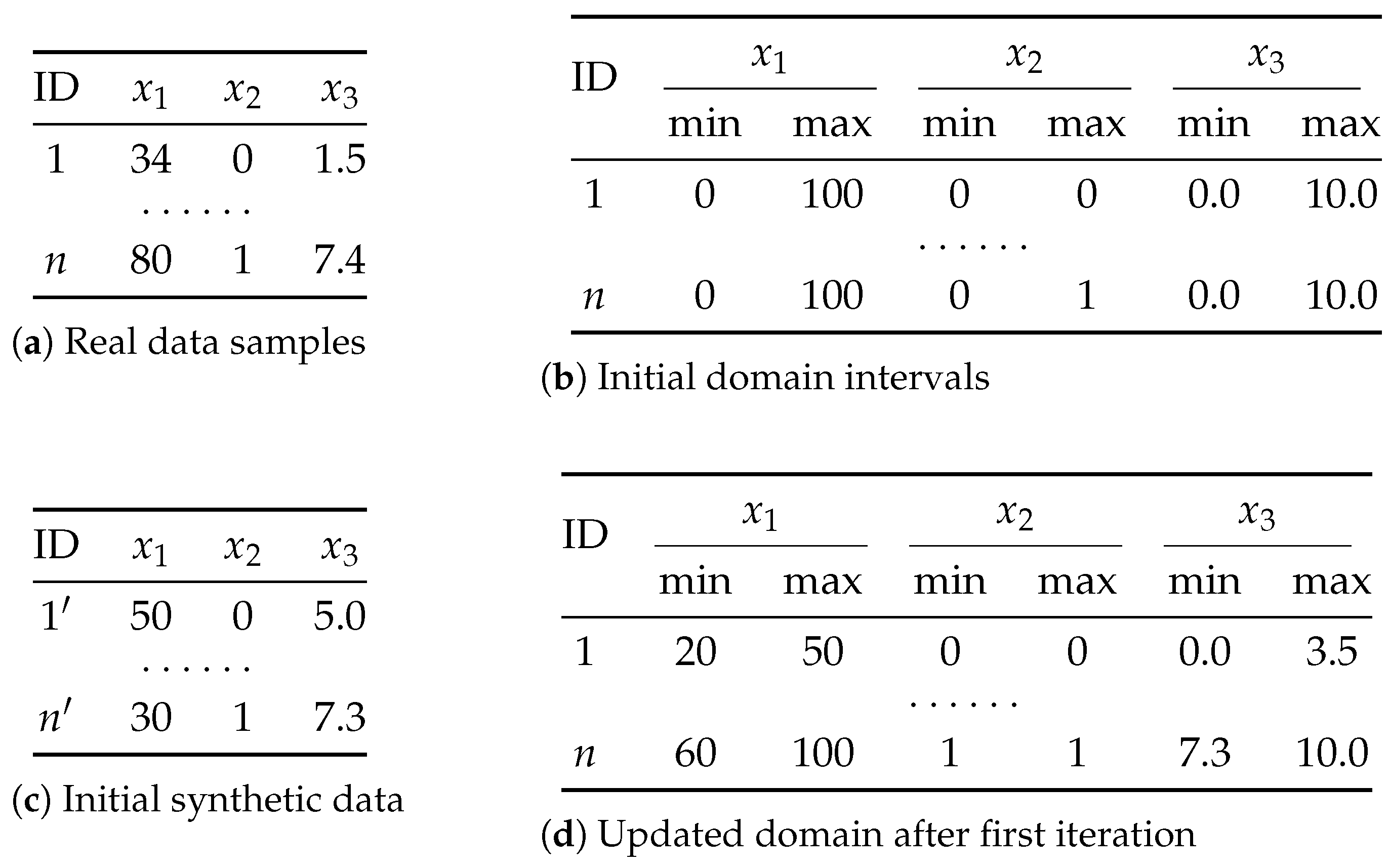

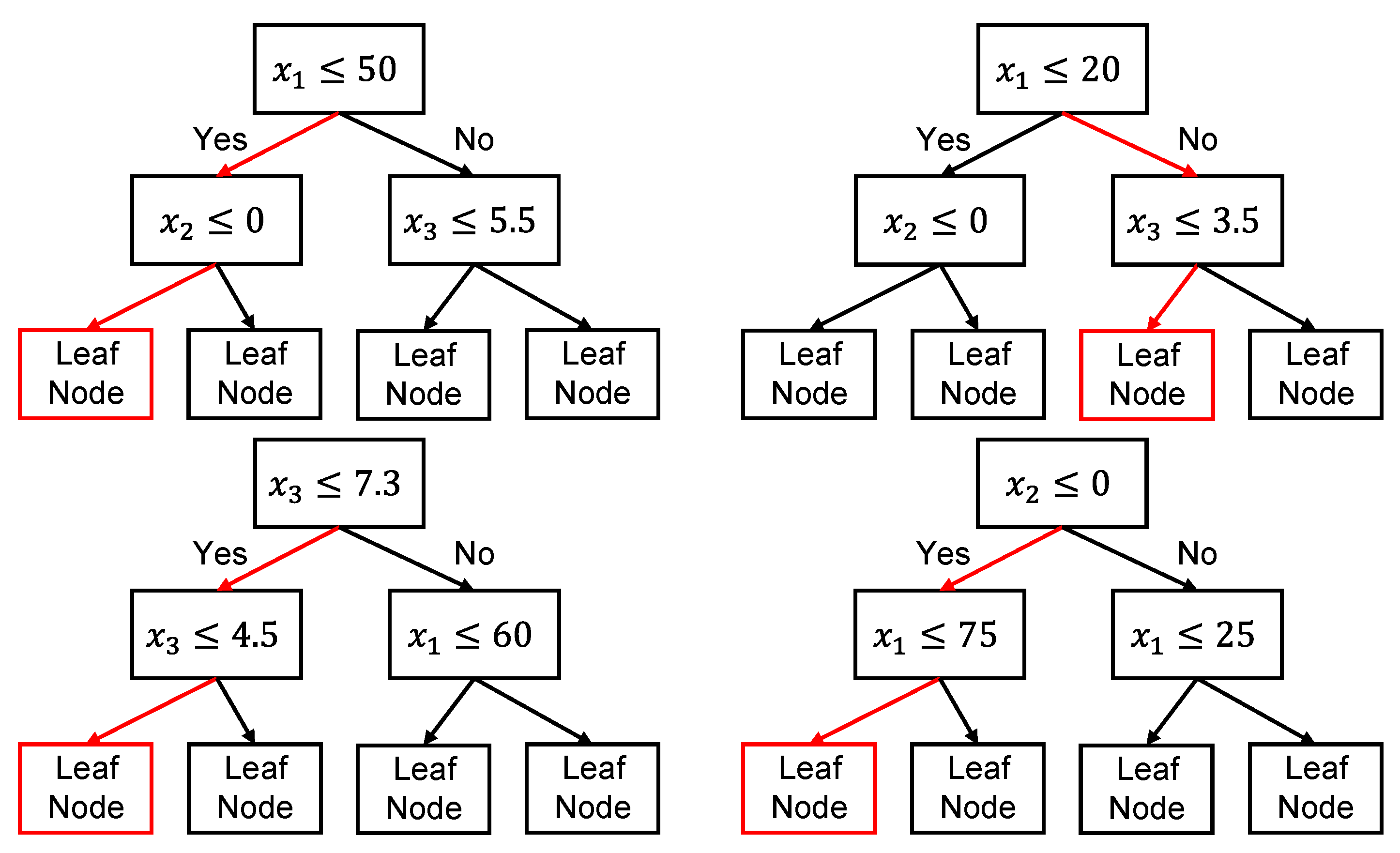

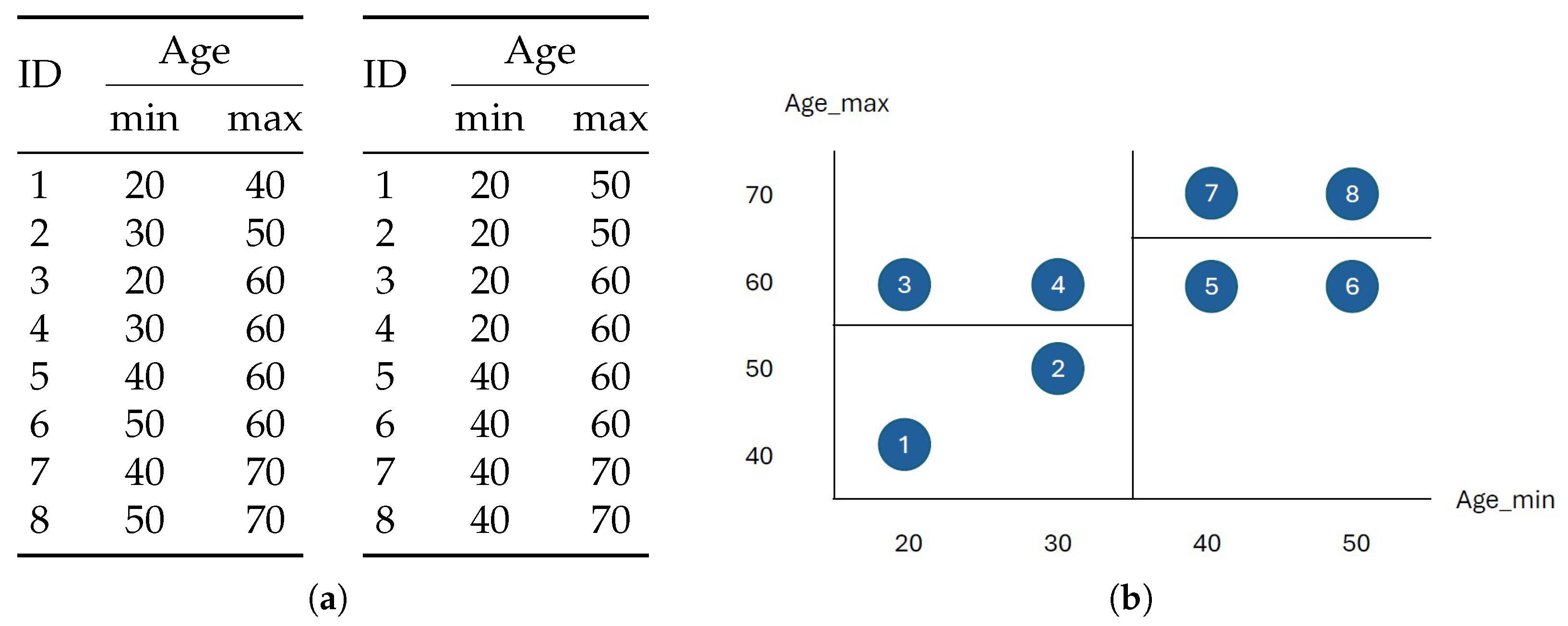

Building on this line of work, we propose a novel method called Adversarial Gradient Boosting Decision Tree (AGBDT). It integrates GBDT ensembles with adversarial training to generate high-quality tabular data while preserving privacy. Our method iteratively updates the data generation domains using discriminator feedback and then applies a k-anonymity Mondrian sampling strategy to generate privacy-aware synthetic records. To further enhance protection, we extend the framework to support l-diversity and t-closeness constraints.
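Mondrian k-anonymity partitions records by repeatedly cutting the quasi-identifier space at the median of one attribute, accepting a cut only if both halves keep at least k records. The NumPy sketch below assumes purely numeric quasi-identifiers and tries the attribute with the widest normalized range first. It then draws synthetic values uniformly inside each final partition's bounding box. That uniform-sampling step is our own simplification for illustration, not AGBDT's procedure. The text does not specify how AGBDT samples within partitions, and `mondrian_partition` and `sample_within_partitions` are hypothetical names.

```python
import numpy as np


def mondrian_partition(X, k):
    """Greedy Mondrian partitioning of numeric quasi-identifiers X (n x d).
    Returns a list of row-index arrays, each holding at least k rows (if n >= k)."""
    X = np.asarray(X, dtype=float)
    spans = X.max(axis=0) - X.min(axis=0)
    spans[spans == 0] = 1.0  # avoid division by zero for constant columns

    def split(idx):
        part = X[idx]
        widths = (part.max(axis=0) - part.min(axis=0)) / spans
        for dim in np.argsort(-widths):  # try the widest normalized attribute first
            median = np.median(part[:, dim])
            left = idx[part[:, dim] <= median]
            right = idx[part[:, dim] > median]
            if len(left) >= k and len(right) >= k:  # allowable cut
                return split(left) + split(right)
        return [idx]  # no allowable cut on any attribute: keep as one group

    return split(np.arange(len(X)))


def sample_within_partitions(X, partitions, rng):
    """Hypothetical sampling step: replace each record by a point drawn
    uniformly from the bounding box of its partition."""
    X = np.asarray(X, dtype=float)
    synthetic = np.empty_like(X)
    for idx in partitions:
        low, high = X[idx].min(axis=0), X[idx].max(axis=0)
        synthetic[idx] = rng.uniform(low, high, size=(len(idx), X.shape[1]))
    return synthetic


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
parts = mondrian_partition(X, k=10)
print(len(parts), min(len(p) for p in parts))  # number of groups, smallest group size
synthetic = sample_within_partitions(X, parts, rng)
```

Median cuts keep partitions balanced, so the recursion depth grows roughly logarithmically with n/k. The axis-aligned splits also resemble the split logic of decision trees, which is one reason the two combine naturally.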

Our main contributions include the following:

A novel hybrid framework combining gradient boosting and adversarial learning for tabular data synthesis.

A domain update mechanism guided by decision path logic, enabling privacy-preserving generation with controlled fidelity.

Empirical evaluation on five datasets against several baselines, with ablation studies on k-anonymity constraints.

4. Discussion

Our experimental results and observations raise several points that merit discussion to guide future research and practical applications:

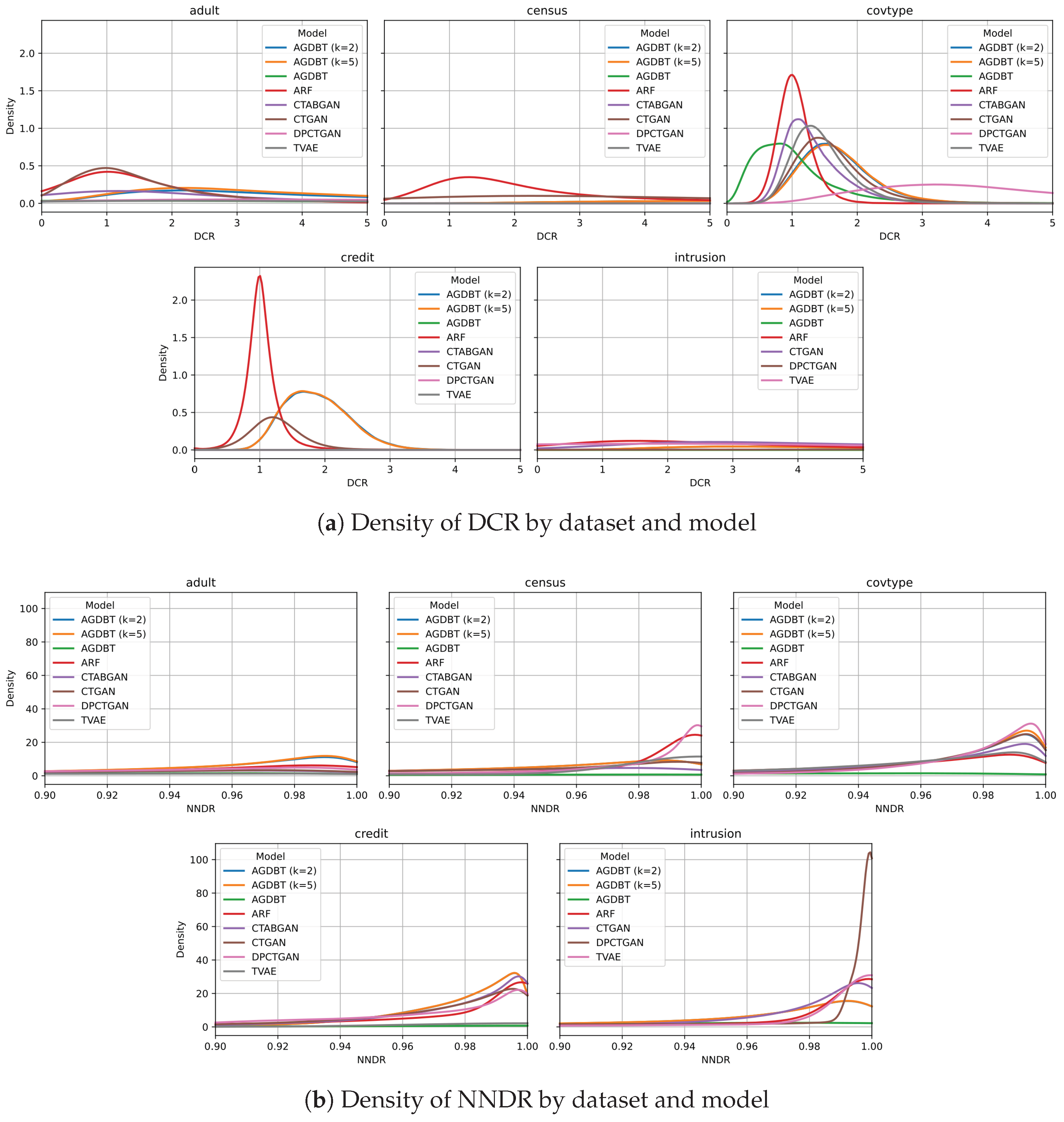

Utility vs. Privacy Tradeoff: The proposed AGBDT model demonstrated superior or competitive performance across multiple datasets in statistical similarity and machine learning utility, confirming its ability to generate analytically valuable synthetic data. However, without explicit privacy constraints, the method showed relatively low novelty and hence potential privacy risks. Introducing k-anonymity effectively mitigated these risks at the cost of an acceptable loss of utility.

Handling Severe Class Imbalance: Our method showed limitations in replicating severely imbalanced datasets, notably the Credit dataset. In such cases, alternative generative models (e.g., CTAB-GAN+ or ARF), which better manage minority class distributions, may offer improved synthetic data quality. Enhancing our framework to better capture imbalanced class structures will be a priority in future work.

Alternative Privacy Protection Approaches: Although Mondrian k-anonymity aligns naturally with the partition logic of gradient boosting trees, it can cause information loss and reduced data diversity. Exploring alternatives such as k-concealment or differential privacy, possibly within hybrid methods, could provide more rigorous privacy guarantees and is a valuable direction for future development.

Missing Data Handling: All datasets in this study were complete, but real-world data frequently contain missing values. Integrating advanced imputation techniques (e.g., median/mode imputation, predictive models, or surrogate splits in tree structures) into the AGBDT framework would significantly enhance its applicability and robustness to common practical scenarios.

Sensitivity to Hyperparameters: The parameter analysis (see Table A4) showed that moderate complexity (tree depth = 3, estimators = 50) provided the best balance between data quality and computational efficiency for most datasets. With greater depth and more estimators, data quality deteriorated significantly, indicating a higher risk of overfitting and reduced generalizability. Future research should investigate automated hyperparameter tuning strategies, such as Bayesian optimization or validation-driven dynamic adjustment, to improve model adaptability across diverse datasets.
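The privacy risk raised in the utility vs. privacy point above is often quantified by how close synthetic records lie to real ones. The NumPy sketch below uses one common proxy: it computes each synthetic record's distance to its closest real record (DCR) and the share of synthetic records that are near-exact copies. This proxy is our assumption; the novelty measure used in the experiments is not reproduced here and may be defined differently. The sketch assumes numeric, already-scaled features, and the function names and tolerance are hypothetical.

```python
import numpy as np


def distance_to_closest_record(synthetic, real):
    """Euclidean distance from each synthetic row to its nearest real row."""
    synthetic = np.asarray(synthetic, dtype=float)
    real = np.asarray(real, dtype=float)
    # Pairwise differences via broadcasting: shape (n_synthetic, n_real, n_features).
    diffs = synthetic[:, None, :] - real[None, :, :]
    return np.sqrt((diffs ** 2).sum(axis=2)).min(axis=1)


def copy_rate(synthetic, real, tol=1e-9):
    """Fraction of synthetic rows lying within tol of some real row."""
    return float(np.mean(distance_to_closest_record(synthetic, real) <= tol))


real = np.array([[0.0, 0.0], [1.0, 1.0]])
synthetic = np.array([[0.0, 0.0], [3.0, 4.0]])
print(distance_to_closest_record(synthetic, real))  # [0.0, sqrt(13)]
print(copy_rate(synthetic, real))                   # 0.5: one row is a verbatim copy
```

A low DCR or a high copy rate signals memorization, which is the risk that the k-anonymity constraint reduces. The dense broadcast uses memory proportional to n_synthetic × n_real × n_features, so large tables would need batching or a nearest-neighbour index instead.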

In summary, AGBDT effectively balances data utility and privacy in general scenarios. However, addressing its limitations (handling severe class imbalance, integrating formal privacy guarantees, dealing with missing data, and reducing sensitivity to hyperparameters) will significantly enhance its generalizability and practical applicability.

5. Conclusions

In this study, we proposed an Adversarial Gradient Boosting Decision Tree (AGBDT) framework for synthesizing privacy-aware tabular data by integrating adversarial training with gradient boosting trees. Empirical evaluations on five diverse datasets demonstrated that AGBDT achieved superior or highly competitive results in statistical similarity and machine learning utility compared to state-of-the-art baseline models.

Key findings indicate that our approach effectively balances data fidelity and privacy risk, particularly on datasets with moderate diversity and class balance. Nevertheless, we observed performance degradation on datasets with severe class imbalance, revealing the current model’s sensitivity to minority class distributions. Additionally, our use of k-anonymity provides intuitive privacy protection but lacks the formal probabilistic guarantees offered by differential privacy.

In practice, AGBDT synthesizes highly usable tabular data suitable for typical analytical tasks, but care is needed when dealing with severely imbalanced datasets or stringent formal privacy requirements. Future research directions include integrating differential privacy for formal guarantees, improving the handling of missing values common in real-world data, and conducting comprehensive sensitivity analyses to enhance the robustness and generalizability of the proposed framework.