1. Introduction

Pollution control in large cities is a problem of great interest worldwide, which is why various organizations continually seek strategies to mitigate it. Scientists, for their part, have begun to analyze models that describe the stock of pollution through ordinary and stochastic differential equations. In particular, optimal control theory has been applied in economics to the optimal management of pollution. This theory considers an economy that consumes some good and, as a by-product of that consumption, generates pollution. The hypotheses in our model are as follows:

Social welfare is defined by the net utility from the consumption of some good vis à vis the disutility caused by pollution. Our objective is to find an optimal consumption policy for society; that is, we seek to maximize the difference between the utility of consumption and the disutility caused by the pollution stock (see [2,3]).

This paper is the second part of a project on the constrained optimal control problem of pollution accumulation with an unknown parameter. To provide context, we begin by briefly summarizing the results obtained in "Robust statistic estimation in constrained optimal control problems of pollution accumulation (Part I)" (see [4]).

The first part considers the setting where the dynamic system is given by a diffusion process that depends on an unknown parameter, say θ. First, assuming that θ is known, two approaches are proposed, one with constraints and one without them, and their respective optimal value functions are studied. Then, estimation techniques for θ are applied and combined with the characterizations and results previously obtained. Roughly speaking, one of the results considers a sequence of estimated parameters $(\theta_m : m = 1, \dots)$ such that $\theta_m \to \theta$, and shows that the corresponding value functions converge (in some sense) to those associated with θ. Another result states the existence of optimal policies satisfying a related convergence property. Furthermore, the relationship between the constrained and unconstrained value functions is shown.

In this work, we obtain similar results for the case where the stock of pollution evolves as a diffusion process with Markovian switching whose drift function, as well as the reward function, depends on the unknown parameter θ. In addition, we impose some natural constraints on the performance index.

To avoid confusion, we try to preserve the notation of Part I. First, a constrained control problem is proposed. Subsequently, assuming that θ is the true parameter, we study a standard control problem under the discounted criterion, to which standard techniques and dynamic programming tools can be applied to determine optimal policies. Then a (discrete) procedure to estimate the unknown parameter is combined with the standard results mentioned above to obtain the so-called adaptive policies, which maximize a discounted reward criterion with constraints.

The idea is to estimate the parameter θ and then solve the optimal control problem in which the estimated value replaces the true one. In the literature, this approach is known as the Principle of Estimation and Control (PEC). This problem has been studied in several contexts. For instance, refs. [5,6,7,8] and the references therein deal with stochastic control systems evolving in discrete time. On the other hand, adaptive optimal control in continuous time is studied in [9,10,11]. Estimation for diffusion processes from discrete observations has been studied in [12,13,14,15,16].

Dynamic optimization has been used in the past to study the problem of pollution accumulation. For example, the papers [17,18] use a linear quadratic model to explain this phenomenon, and the article [2] deals with the average payoff in a deterministic framework. The works [3,19] extend the approach of [2] to a stochastic context, and [20] uses a robust stochastic differential game to model the situation. The study [21] is a statistical analysis of the impact of air pollution on public health. To develop adaptive policies that are almost surely optimal for the constrained optimization problem under the discounted reward on an infinite horizon with Markovian switching, we use a statistical estimation approach to determine the unknown parameter θ. These adaptive policies are obtained by substituting the estimates into optimal stationary controls (that is, by using the PEC); for more information, see the works of Kurano and Mandl (cf. [7,8]). The statistical estimation method we use for the unknown parameter θ is the so-called least square estimator for stochastic differential equations based on many discrete observations. This resembles existing robust estimation techniques, such as the

method, in that the dynamic systems in the applications are linear. However, the computational complexity of those techniques is greater. With our least square estimator, only the inverse of a matrix must be computed to obtain the estimator, whereas the other algorithms require many more computations (see [22,23]). Most risk analysts will be less familiar with our methods than with, for example, model predictive control, MATLAB’s Robust Control Toolbox, or the polynomial chaos expansion method, which have been used in the literature to address similar issues. Since we review a constructive method for robust and adaptive control under deep uncertainty, our findings are similar to those reported in [24]. Moreover, our methods also resemble the adaptive moving mesh method for optimal control problems in viscous incompressible fluids used in [25].

This work can also be considered an extension of [26,27,28,29], which study related constrained or adaptive optimal control problems. In fact, ref. [28] studies a constrained optimal control problem, but, unlike our case, all the parameters there are known. Ref. [26] does the same in the context of pollution accumulation. The references [27,29] study an unconstrained adaptive optimal control problem. Finally, we highlight the numerical estimation technique that illustrates the results of this article.

The rest of the paper is organized as follows. We present the elements of our model and assumptions in Section 2. Next, Section 3 introduces our optimality criterion and the main results. A numerical example illustrating our results is given in Section 4. We give our conclusions in Section 5. Finally, Appendix A contains the proof of the important (but rather distracting) Theorem A1 on the convergence of the HJB equation under the topology of relaxed controls.

Notation and Terminology

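For vectors $x$ and matrices $A$, we denote by $|\cdot|$ the Euclidean norm, that is, $|x|^2 := \sum_k x_k^2$ and $|A|^2 := \mathrm{Tr}(AA')$, where $A'$ and $\mathrm{Tr}(\cdot)$ denote the transpose and the trace of a matrix, respectively. As an abbreviation, we write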

and

to refer to

, and

, respectively.
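The following Python sketch gives a quick numerical check of the two norms. It assumes the usual definitions $|x|^2 = \sum_k x_k^2$ and $|A|^2 = \mathrm{Tr}(AA')$ and works with plain lists. The function names are ours and are only illustrative.

```python
import math

def vector_norm(x):
    """Euclidean norm |x| = sqrt(sum_k x_k^2) of a vector given as a list."""
    return math.sqrt(sum(xk * xk for xk in x))

def matrix_norm(a):
    """|A| = sqrt(Tr(A A')) for a matrix given as a list of rows.

    The k-th diagonal entry of A A' is sum_l a_kl^2, so Tr(A A') is the sum of
    all squared entries (the Frobenius norm); A A' is never formed explicitly."""
    trace = 0.0
    for k in range(len(a)):
        # (A A')_{kk} = sum_l a[k][l] * a[k][l]
        trace += sum(a[k][l] * a[k][l] for l in range(len(a[k])))
    return math.sqrt(trace)

print(vector_norm([3.0, 4.0]))                # 5.0
print(matrix_norm([[1.0, 2.0], [2.0, 4.0]]))  # 5.0
```

Because $(AA')_{kk} = \sum_l a_{kl}^2$, only the squared entries are needed. This is why the matrix norm coincides with the Frobenius norm.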

Given a Borel set B, we denote by its natural σ-algebra. As usual,

, stands for the space of continuous functions whose domain is

and

Consequently we denote as the subspace of composed by bounded functions. The set , where is the space of all real-valued continuous functions f on the bounded, open and connected subset with continuous derivatives up to order .

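Fix $p \ge 1$ and a measure space $(\mathcal{X}, \mathcal{F}, \mu)$; we denote by $L^p(\mathcal{X}, \mathcal{F}, \mu)$ the Lebesgue space of functions g on $\mathcal{X}$ such that $\int_{\mathcal{X}} |g|^p \, d\mu < \infty$.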

Let X and Y be Borel spaces. A stochastic kernel on X given Y is a function $P(\cdot \mid \cdot)$ such that $P(\cdot \mid y)$ is a probability measure on X for each $y \in Y$, and $P(D \mid \cdot)$ is a measurable function on Y for each Borel set $D \subseteq X$.

Finally, the set denotes the family of probability measures on B endowed with the topology of weak convergence.

2. Model Formulation and Assumptions

Taking as reference the problem analyzed in Part I, we consider the setting where the dynamics of the pollution stock are modeled as an n-dimensional controlled stochastic differential equation (SDE) with Markovian switching. Specifically, these dynamics take the form

where , and are given functions, is an -adapted d-dimensional Wiener process such that and are pairwise independent, is independent of , and the evolution of the Markov chain has intensity and transition rule given by

for and . The compact set is called the control set. In the context of our problem, is a stochastic process on U such that, at time t, it represents the flow of consumption, which in turn is assumed to be bounded to reflect the policies and rules imposed by governments or social entities.

Throughout this work, we assume that θ is an unknown parameter taking values in a compact set Θ, called the parameter set. In the context of pollution problems, θ can be interpreted as the pollution decay rate.

Now we define the so-called randomized policies, also known as relaxed controls, or just policies.

Definition 1. A policy π is a family of stochastic kernels on (see Section 1). We denote by Π the set of stationary policies. In particular, a randomized policy is said to be stationary if there is a probability measure such that for all and . Let be the set of measurable functions f from to U. We denote the set of stationary Markov policies as . For each randomized policy π and each function whose domain includes the control set U, say , we use the abbreviated notation

A suitable adjustment should be made for functions with a different domain.
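The sketch below illustrates the abbreviated notation for randomized policies. It assumes a hypothetical stationary policy whose probability measure at each state (x, i) is supported on two consumption levels. Under that assumption, integrating a function against π(du | x, i) reduces to a weighted sum. The policy, the control grid and the reward rate are invented for illustration and are not part of the model.

```python
def stationary_policy(x, i):
    """Hypothetical stationary randomized policy on a two-point control grid.

    Returns the grid of controls and the probabilities pi(u | x, i)."""
    controls = [0.5, 1.5]            # hypothetical consumption levels inside U
    p_high = 1.0 / (1.0 + x * x)     # consume more when the pollution stock is low
    if i == 2:
        p_high *= 0.5                # be more cautious in regime 2
    return controls, [1.0 - p_high, p_high]

def average_under_policy(v, x, i, policy):
    """Discrete analogue of v(x, i, pi) = integral of v(x, i, u) pi(du | x, i)."""
    controls, weights = policy(x, i)
    if abs(sum(weights) - 1.0) > 1e-12:
        raise ValueError("pi(. | x, i) must be a probability measure")
    return sum(w * v(x, i, u) for u, w in zip(controls, weights))

def reward(x, i, u):
    """Hypothetical reward rate: benefit of consumption minus a pollution penalty."""
    return u - x * x

print(average_under_policy(reward, 0.0, 1, stationary_policy))  # 1.5
print(average_under_policy(reward, 1.0, 2, stationary_policy))  # -0.25
```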

We endow Π with a topology (see [30]) determined by the convergence criterion defined below (see [31,32] and Lemma 3.2 in [30,33]).

Definition 2. A sequence in Π converges to if

for all , and (see (3)). Since this mode of convergence was introduced by Warga (cf. [30]), we denote it as .

For , and , the infinitesimal generator associated with the process is

where $b_k$ is the k-th component of the drift function b, and $a_{kl}$ is the $(k,l)$ component of the matrix $a := \sigma\sigma'$. As in (3), for each policy π, we write
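The following sketch evaluates the generator numerically. It assumes the standard one-dimensional form for diffusions with Markovian switching, $\mathcal{L}^u v(x,i) = b(x,u,\theta,i)\,v'(x,i) + \tfrac12 \sigma^2(x,i)\,v''(x,i) + \sum_{j} q_{ij} v(x,j)$. The model is hypothetical: drift $b = u - \theta x$, a constant diffusion level in each regime, and a two-state generator $(q_{ij})$. The x-derivatives are replaced by central differences, the same kind of approximation mentioned in Remark 7.

```python
# Hypothetical one-dimensional, two-regime model (an assumption for illustration):
# drift b(x, u, theta, i) = u - theta * x, diffusion sigma(x, i) = SIGMA[i],
# and a generator Q = (q_ij) for the Markov chain on E = {1, 2}.
SIGMA = {1: 0.2, 2: 0.4}
Q = {1: {1: -0.5, 2: 0.5}, 2: {1: 1.0, 2: -1.0}}

def drift(x, u, theta, i):
    return u - theta * x

def generator(v, x, i, u, theta, h=1e-3):
    """Approximate L^u v(x, i) = b v'(x, i) + 0.5 sigma^2 v''(x, i) + sum_j q_ij v(x, j),
    with the x-derivatives replaced by central differences of step h."""
    dv = (v(x + h, i) - v(x - h, i)) / (2.0 * h)
    d2v = (v(x + h, i) - 2.0 * v(x, i) + v(x - h, i)) / (h * h)
    switching = sum(q_ij * v(x, j) for j, q_ij in Q[i].items())
    return drift(x, u, theta, i) * dv + 0.5 * SIGMA[i] ** 2 * d2v + switching

# v(x, i) = x^2: the exact value is (u - theta x) 2x + sigma_i^2, i.e. 1.04 here.
print(generator(lambda x, i: x * x, x=1.0, i=1, u=1.0, theta=0.5))
# v(x, i) = i: only the switching term survives, q_11 * 1 + q_12 * 2 = 0.5.
print(generator(lambda x, i: float(i), x=1.0, i=1, u=1.0, theta=0.5))
```

Because each row of the generator sums to zero, the switching term vanishes for functions that do not depend on the regime. It therefore only matters for functions such as value functions, which do.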

The following set of assumptions and conditions ensures the existence and uniqueness of a strong solution, as well as the stability of the dynamic system (1) and (2) (see [31,33,34,35]).

Assumption 1.

- (a)

The random process (1) belongs to a complete probability space . Here, is a filtration on such that each is complete relative to , and is the law of the state process given the parameter and the control .

- (b)

The drift function in (1) is continuous and, for each , there exist non-negative constants and such that, for all , all and ,

Moreover, the function is continuous on U.

- (c)

The diffusion coefficient σ satisfies a local Lipschitz condition; that is, for each , there exists a constant such that, for all less than R,

- (d)

A global linear growth condition is satisfied:

where > 0 is a constant.

- (e)

The matrix satisfies, for some constant ,

Remark 1. - (i)

Properties such as continuity or Lipschitz continuity given in Assumption 1 are inherited by the drift function .

- (ii)

Under Assumption 1, once a policy and a parameter are fixed, the references [31] and [33] guarantee the existence of a probability space in which there exists a unique process with the Markov–Feller property which, in turn, is an almost surely strong solution.

The next hypothesis is known as the Lyapunov stability condition.

Assumption 2. There exists a function , and constants such that

- (a)

uniformly in .

- (b)

for all , and .

Assumption 2 essentially asks for a twice continuously differentiable Lyapunov function for the dynamics at hand. In the context of linear matrix inequalities, this hypothesis corresponds to requiring positive-definite matrices (see [36] and pages 113–135 in [37]). The existence of a function w satisfying the conditions in Assumption 2 allows the rate functions involved in our model to be unbounded (see Assumption 3). As in Part I, we next define a suitable space for these functions.

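Definition 3. Let v be a function from $\mathbb{R}^n \times E$ to $\mathbb{R}$; we define its w-norm as $\|v\|_w := \sup_{(x,i) \in \mathbb{R}^n \times E} |v(x,i)|/w(x,i)$. Moreover, we consider the Banach space of real-valued measurable functions with finite w-norm.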

Let r and c be measurable functions, identified as the reward (social welfare) rate and the cost rate, respectively, and let a third measurable function model the constraint rate. In the context of pollution accumulation, such a restriction is sometimes due to each country’s legal framework, under which the cost of cleaning the environment must be bounded by some given quantity.

Assumption 3. For each fixed, the payoff rate , the cost rate and the constraint rate are continuous on . Moreover, they are locally Lipschitz on , uniformly on E, U and Θ. That is, for each , there are positive constants and such that for all ,

In addition, the rate functions belong to and there exists such that for all ,

3. Discounted Optimality Problems and Main Results

Throughout this section, we formulate the pollution problem of interest in the language of optimal control. To this end, we introduce the functions that evaluate the performance of the system in terms of rewards, costs, and constraints.

In order to avoid confusion, we will preserve the notation and the ordering in the presentation of the results from the first part of the project.

3.1. Discounted Optimality Criterion

Definition 4. Given the initial state , a parameter value and a discount rate , we define the total expected α-discounted reward, cost and constraint when the controller uses a policy π in Π as

respectively, where is the expectation of · taken with respect to the probability measure when starts at .

Proposition 1. If Assumptions 1–3 hold, the functions and belong to for each π in Π; in fact, for each and , we have

where , the constants c and d are as in Assumption 2, and M is as in Assumption 3 (b).

Proposition 1 follows directly from the following inequality, which is obtained by applying Dynkin’s formula to the function w and using Assumption 2 (b): for all , , and ,

Remark 2. The function is in for each . Moreover, for each , we have

Let be fixed, and apply Dynkin’s formula again to the function V (see Theorem 1.45 on p. 48 of [34] or Theorem 1 (iii) in [38]) to obtain the following result.

Proposition 2. Let Assumptions 1 and 2 hold, and let v be a measurable function on satisfying Assumption 3. Then, for , the associated expected α-discounted reward belongs to , and satisfies

Conversely, if some function in satisfies Equation (5), then

Moreover, if relation (5) holds as an inequality, then (6) holds with the corresponding inequality. Here, is the Sobolev space of real-valued measurable functions on whose derivatives up to order are in for .
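The bound in Proposition 1 can be made explicit as follows. This derivation is a sketch that assumes Assumption 2 (b) has the usual form $\mathcal{L}^{\pi} w \le -c\,w + d$ and that Assumption 3 (b) gives $|r| \le M w$. The notation is ours: $\psi(t)$ is the Markov chain, $\mathbb{E}^{\pi,\theta}_{x,i}$ is the expectation when the process starts at $(x,i)$, and J is the α-discounted reward. The first line applies Dynkin’s formula to $e^{ct}w$, modulo the usual localization argument. The last line integrates the resulting estimate against $e^{-\alpha t}$. The constant obtained this way need not coincide with the one stated in Proposition 1.

```latex
\begin{aligned}
\mathbb{E}^{\pi,\theta}_{x,i}\!\left[e^{ct}\,w(x(t),\psi(t))\right]
 &= w(x,i) + \mathbb{E}^{\pi,\theta}_{x,i}\!\int_0^t e^{cs}\big(c\,w + \mathcal{L}^{\pi}w\big)(x(s),\psi(s))\,ds
  \le w(x,i) + \frac{d}{c}\big(e^{ct}-1\big),\\
\Longrightarrow\quad
\mathbb{E}^{\pi,\theta}_{x,i}\!\left[w(x(t),\psi(t))\right]
 &\le e^{-ct}\,w(x,i) + \frac{d}{c}\big(1-e^{-ct}\big),\\
\big|J(x,i,\pi,\theta)\big|
 &\le M\int_0^\infty e^{-\alpha t}\Big[e^{-ct}\,w(x,i) + \frac{d}{c}\big(1-e^{-ct}\big)\Big]dt
  = M\left[\frac{w(x,i)}{\alpha+c} + \frac{d}{\alpha(\alpha+c)}\right].
\end{aligned}
```

If, in addition, $w \ge 1$, the right-hand side is at most $M\,\frac{\alpha + d}{\alpha(\alpha + c)}\,w(x,i)$. This is a w-norm bound of the kind stated in Proposition 1.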

Given the initial condition, a parameter θ ∈ Θ, and a constraint function satisfying Assumption 3, we define the set of policies

We assume, for the moment, that the set defined in (7) is nonempty. We are now in a position to formulate the discounted problem with constraints (DPC), which is defined below.

Definition 5. Given the initial condition and the parameter θ, we say that a policy is optimal for the DPC if

and

Furthermore, the function is called the discount optimal reward for the DPC.
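The functionals in Definition 4 can be approximated by simulation when no closed form is available. The sketch below is a plain Monte Carlo estimator built on several assumptions:

- a one-dimensional state;
- a deterministic stationary policy;
- Euler–Maruyama time steps;
- a first-order approximation of the regime switches;
- truncation of the infinite horizon at the time where $e^{-\alpha t} = e^{-20}$.

The model functions in the usage lines are hypothetical. For a candidate policy, estimates of this kind are what one would compare when checking the constraint that defines the set (7).

```python
import math
import random

def switch_regime(i, Q, dt, rng):
    """First-order step of the Markov chain: leave regime i with probability
    -q_ii * dt, then move to j != i with probability q_ij / (-q_ii)."""
    leave_rate = -Q[i][i]
    if leave_rate <= 0.0 or rng.random() >= leave_rate * dt:
        return i
    targets = [j for j in Q[i] if j != i]
    return rng.choices(targets, weights=[Q[i][j] for j in targets])[0]

def discounted_functionals(x0, i0, policy, theta, alpha, drift, sigma, Q, rates,
                           n_paths=100, dt=0.01, seed=0):
    """Monte Carlo estimates of E[ int_0^inf e^{-alpha t} f(x(t), u(t), i(t)) dt ]
    for each rate f in `rates` (e.g. reward, cost, constraint), using Euler-Maruyama
    paths truncated at the time where e^{-alpha t} = e^{-20}."""
    rng = random.Random(seed)
    n_steps = int(20.0 / (alpha * dt))
    totals = [0.0] * len(rates)
    for _ in range(n_paths):
        x, i = x0, i0
        for k in range(n_steps):
            u = policy(x, i)                       # deterministic stationary policy
            weight = math.exp(-alpha * k * dt) * dt
            for n, f in enumerate(rates):
                totals[n] += weight * f(x, u, i)   # left Riemann sum of the integral
            noise = rng.gauss(0.0, 1.0) * math.sqrt(dt)
            x += drift(x, u, theta, i) * dt + sigma(x, i) * noise
            i = switch_regime(i, Q, dt, rng)
    return [total / n_paths for total in totals]

# Usage with hypothetical model functions (not the paper's example):
Q2 = {1: {1: -0.5, 2: 0.5}, 2: {1: 1.0, 2: -1.0}}
j_r, j_c = discounted_functionals(
    x0=1.0, i0=1, policy=lambda x, i: 1.0 if i == 1 else 0.5, theta=0.5, alpha=0.5,
    drift=lambda x, u, th, i: u - th * x, sigma=lambda x, i: 0.1 * i, Q=Q2,
    rates=[lambda x, u, i: math.sqrt(u) - 0.5 * x * x,   # hypothetical reward rate
           lambda x, u, i: 0.2 * x],                     # hypothetical cost rate
    n_paths=50)
print(f"J_r ~ {j_r:.3f}, J_c ~ {j_c:.3f}")
```

The Monte Carlo error decreases like $1/\sqrt{n}$ in the number of paths, while the time step adds a bias of order dt. Both should be checked before such estimates are used to decide feasibility.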

3.2. Unconstrained Discounted Optimality

The objective of this part is to transform the original DPC (presented above) into an unconstrained problem, so that results and techniques known in the literature can be applied. To this end, we use the Lagrange multiplier technique of [26]. Take a Lagrange multiplier λ and consider the function

For our purpose, this function represents the new reward rate. Now, recalling (3), we write (8) as

Remark 3. For each and , by direct calculations, it is possible to show that uniformly in and . Moreover, by Assumption 3, this new reward rate is locally Lipschitz.

As in Definition 4, for all and , we define the function

The discounted unconstrained problem (DUP) is then defined as follows.

Definition 6 (The adaptive θ-control problem with Markovian switching). A policy is said to be α-discount optimal for the λ-DUP, given that θ is the true parameter value, if

for all . The function

will be called the value function of the adaptive θ-control problem with Markovian switching.

Let be a measurable function satisfying the conditions given in Assumption 3. The following result (obtained from [33]) shows that the value function is the unique solution of (10), and also proves the existence of stationary optimal policies.

Proposition 3. Suppose that Assumptions 1–3 hold. Then we have the following:

- (i)

The α-optimal discount reward belongs to and satisfies the discounted reward HJB equation; that is, for all and ,

Conversely, if a function satisfies (10), then for all .

- (ii)

There exists a stationary policy that maximizes the right-hand side of (10); that is,

and is α-discount optimal given that θ is the true parameter value.

Remark 4. - (a)

Notice that and by Definition 4, ,

- (b)

Remark 3 and Proposition 1 yield that with and is a bound of , implying in turn that .

- (c)

If Assumptions 1, 2 and 3 hold, then by Proposition 3.4 in [28], the mappings , and are continuous on Π for each and

3.3. Convergence of Value Functions and Estimation Methods

In this part, we present one of the main results of this work, which combines optimality with a (discrete) statistical approximation scheme for the unknown parameter. To this end, we define the concept of a consistent estimator and the approximation technique used for it.

Definition 7. A sequence of measurable functions is said to be a sequence of uniformly strongly consistent (USC) estimators of θ if, as m → ∞,

For ease of notation, we write .

Let be a measurable function satisfying conditions similar to those given in Assumption 3. The following observations and estimation procedure adapt what was done in Part I and show that our hypotheses and procedures are consistent.

Remark 5.

- (a)

Let be a sequence of USC estimators of θ, and let be a function that satisfies Assumptions 1–3. Theorem 4.5 in [29] guarantees that every sequence converges to , almost surely.

- (b)

Let be a sequence in Π. Since Π is a compact set, there exists a subsequence such that , and thus, combining Remark 4 (a) and Remark 4 (c) and applying a suitable triangle inequality, it is possible to deduce that, for every measurable function v satisfying Assumption 3,

- (c)

By Proposition 3, and taking into account that in (8), the function verifies (10). In addition, the second part of Proposition 3 ensures the existence of a stationary policy .

- (d)

For each , and , we define the set

Since can be seen as an embedding of Π, Proposition 3 (ii) guarantees that is a nonempty set.

- (e)

As in [4], the set of hypotheses considered in this paper and Lemma 3.15 in [28] ensure that, for each fixed and any sequence converging to λ (with ), if there exists a sequence of policies such that , then .

- (f)

Lemma 3.16 in [28] ensures that the mapping is differentiable on . In fact, for each and ,

The unknown parameter θ will be estimated as described by Pedersen [39]. That is, a family of functions measures how likely the different values of θ are. If, for each fixed , the function has a unique maximum point , then θ is estimated by this maximum point.

Under the assumption that, for and , is a measurable function of and that it is also twice continuously differentiable in for almost all , it is proven that the function is continuous and has a unique maximum point for each fixed. The number is the index of a sequence of random experiments on the measurable space . This method is known as the approximate maximum likelihood estimator.

In our setting, given a partition of times from , the outcomes of the random experiments are represented by a sequence of observations of a trajectory up to time T on , and the function is called the least square function (LSE), i.e.,

In practice, the process in (1) is observed only up to a finite time, say T, for which we define

with the drift function b as in (1). The above function generates the least square estimator up to time T with m observations:
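For a drift that is linear in the parameter, the least square function has a closed-form minimizer, so only a (here scalar) inverse is needed. The sketch below rests on three assumptions: the hypothetical drift $b(x,u,\theta) = u - \theta x$ (the stock grows with the consumption flow and decays at rate θ), an Euler-type least square function $\sum_k \big(x_{t_{k+1}} - x_{t_k} - b(x_{t_k},u_{t_k},\theta)\,\Delta\big)^2$, and equally spaced observations. The exact form of (13) may differ.

```python
import math
import random

def lse_decay_rate(xs, us, dt):
    """Least-squares estimate of theta for the hypothetical drift b(x, u, theta) = u - theta x.

    Minimizes sum_k (x_{k+1} - x_k - (u_k - theta x_k) dt)^2; setting the derivative in
    theta to zero gives theta_hat = -sum_k x_k e_k / (dt sum_k x_k^2), where
    e_k = x_{k+1} - x_k - u_k dt."""
    num, den = 0.0, 0.0
    for k in range(len(xs) - 1):
        e_k = xs[k + 1] - xs[k] - us[k] * dt   # increment not explained by consumption
        num += xs[k] * e_k
        den += xs[k] * xs[k]
    if den == 0.0:
        raise ValueError("the observations carry no information about theta")
    return -num / (dt * den)

# Usage: discrete observations of a simulated path with true theta = 0.7 (hypothetical values).
rng = random.Random(1)
dt, theta_true, sigma = 0.01, 0.7, 0.1
us = [1.0] * 5000
xs = [2.0]
for u in us:
    xs.append(xs[-1] + (u - theta_true * xs[-1]) * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0))
print(lse_decay_rate(xs, us, dt))
```

For a drift that is linear in a vector parameter, the same first-order condition becomes a system of normal equations. Solving it requires inverting a single matrix.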

Remark 6. The fact that the process in (1) can only be observed over a finite horizon is one of the hypotheses of the so-called model predictive control. However, at least from a theoretical point of view, our version of the PEC makes no such assumption. It still chooses T as large as practically possible and thus defines (13) and (14). In this sense, there is a connection between these two perspectives.

In [12,16,39], the consistency and asymptotic normality of the estimator are studied. In particular, Shoji (see [16]) shows that, for a one-dimensional stochastic differential equation with a constant diffusion coefficient, the optimization based on the LSE function is equivalent to the optimization based on the discrete approximate likelihood ratio function

with b and σ as in (1). The MLR function generates the discrete approximate likelihood ratio estimator:

Now, we will establish our main result.

Theorem 1. Let be a sequence of USC estimators of θ. For each m, let be an α-discount optimal policy. Then there exists a subsequence of and a policy such that . Moreover, if Assumptions 1–3 hold, as m → ∞,

and is α-discount optimal for the θ-control problem almost surely.

Proof. Consider a sequence of USC estimators such that as m → ∞. Let , and take the open ball . For , let be a sequence of α-discount optimal policies. Since Π is a compact set, there exists a subsequence such that converges to in the topology of relaxed controls given in Definition 2.

Let us first fix an arbitrary . Then, Theorem 6.1 in [33] ensures that the value function in (9) is the unique solution of the HJB Equation (10), i.e., it satisfies

and, by Theorem 9.11 in [40], there exists a constant (depending on R) such that, for fixed and , we have

where represents the volume of the closed ball with radius , and M and are the constants in Assumption 3 (b) and Proposition 1, respectively.

Now, observe that conditions (a)–(e) of Theorem A1 hold. In fact, for each , (15) can be written in terms of the operator (A2) as

with the functions , , equal to zero, and . So, taking , , conditions (a), (c) and (d) hold. In addition, by (16), condition (b) is verified as well.

Then, by Theorem A1, there exists a function , together with a subsequence , such that uniformly in , and pointwise on as and . Furthermore, satisfies

with . Since the radius is arbitrary, we can extend our analysis to the whole state space.

Thus, since is the unique solution of the HJB Equation (17), we deduce that coincides with . So, by (15) and (17), as m → ∞,

On the other hand, by Proposition 3, for each and fixed, we have

Hence, letting m → ∞ and using Theorem A1 from Appendix A again, we obtain that (18) converges to

Thus, by (17) and (19), we obtain

implying that is α-discount optimal for the θ-control problem with Markovian switching. □

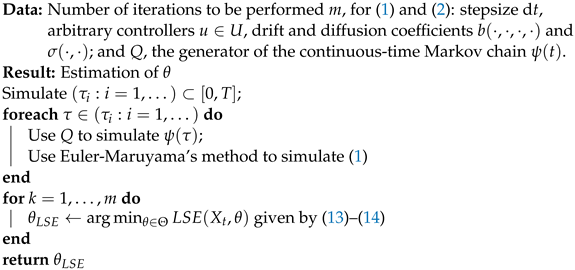

In the following section, we present a numerical example to illustrate our results. To this end, we implement Algorithm 1. In it, we first set the number of iterations of the process and define the variables needed to simulate the dynamic system and the Markov chain. These simulations are inspired by the algorithm proposed in [41]. They allow us to obtain the discrete observations needed to feed (13) and (14) and thus approximate the true value of θ.

Algorithm 1: Method of LSE to find θ.
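The following sketch is our reading of the steps described above for Algorithm 1, not a transcription of it. It simulates the Markov chain, simulates the pollution stock by Euler–Maruyama, and computes a least-squares estimate of θ on each interval between consecutive jump times. The model is hypothetical: a drift $u - \theta x$, a constant noise level in each regime, and a two-state generator. The chain is simulated exactly through exponential holding times, which is not necessarily the scheme of [41].

```python
import math
import random

def simulate_chain(i0, Q, T, rng):
    """Exact simulation of a continuous-time Markov chain on [0, T]: returns the
    jump times [0, tau_1, tau_2, ...] and the regime entered at each of them."""
    times, states = [0.0], [i0]
    t, i = 0.0, i0
    while -Q[i][i] > 0.0:
        t += rng.expovariate(-Q[i][i])        # exponential holding time in regime i
        if t >= T:
            break
        targets = [j for j in Q[i] if j != i]
        i = rng.choices(targets, weights=[Q[i][j] for j in targets])[0]
        times.append(t)
        states.append(i)
    return times, states

def simulate_and_estimate(x0, i0, Q, theta, sigmas, control, T, m, seed=0):
    """Hypothetical pipeline: simulate dx = (u - theta x) dt + sigma_i dW with Markovian
    switching by Euler-Maruyama on m steps of [0, T], then return one least-squares
    estimate of theta per inter-jump interval."""
    rng = random.Random(seed)
    times, states = simulate_chain(i0, Q, T, rng)
    dt = T / m
    xs, us, seg_of_step = [x0], [], []
    seg = 0
    for k in range(m):
        while seg + 1 < len(times) and times[seg + 1] <= k * dt:
            seg += 1                          # move to the interval containing t_k
        i = states[seg]
        u = control(xs[-1], i)
        noise = rng.gauss(0.0, 1.0) * math.sqrt(dt)
        xs.append(xs[-1] + (u - theta * xs[-1]) * dt + sigmas[i] * noise)
        us.append(u)
        seg_of_step.append(seg)
    # Accumulate the least-squares normal equation separately on each interval.
    num = [0.0] * len(times)
    den = [0.0] * len(times)
    for k in range(m):
        e_k = xs[k + 1] - xs[k] - us[k] * dt
        num[seg_of_step[k]] += xs[k] * e_k
        den[seg_of_step[k]] += xs[k] * xs[k]
    estimates = [-n / (dt * d) for n, d in zip(num, den) if d > 0.0]
    return times, xs, estimates

# Usage with hypothetical values: two regimes with different consumption and noise levels.
Q = {1: {1: -0.2, 2: 0.2}, 2: {1: 0.3, 2: -0.3}}
times, xs, estimates = simulate_and_estimate(
    x0=1.0, i0=1, Q=Q, theta=0.5, sigmas={1: 0.05, 2: 0.1},
    control=lambda x, i: 1.0 if i == 1 else 0.6, T=50.0, m=5000)
print(len(times) - 1, "jumps; per-interval estimates:", [round(e, 3) for e in estimates])
```

As in the text, the number of estimates returned equals the number of inter-jump intervals. Intervals that happen to contain no observation time are skipped.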

Remark 7. Now we list some limitations of our approach.

- 1.

Approximation of the derivative. In our case, we use central differences, but in each application, the approximation type to be used must be analyzed.

- 2.

Least squares approximation. The most common restrictions are the amount of data, the regularity of the samples, and the size of the subintervals.

- 3.

Euler–Maruyama method. The most common restrictions in this method occur if the differential equation presents stiffness, inappropriate step size, or sudden growth. In our application, the Euler–Maruyama method converges with strong order 1/2 to the true solution. See Theorem 10.2.2 in [42].
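Item 1 can be illustrated with a small experiment. The sketch below compares central and forward differences for the derivative of sin at x = 1. This test function is chosen only because its derivative is known exactly.

```python
import math

def central_difference(f, x, h):
    """Second-order approximation f'(x) ~ (f(x + h) - f(x - h)) / (2h); error O(h^2)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def forward_difference(f, x, h):
    """First-order approximation f'(x) ~ (f(x + h) - f(x)) / h; error O(h)."""
    return (f(x + h) - f(x)) / h

exact = math.cos(1.0)   # derivative of sin at x = 1
for h in (1e-1, 1e-2, 1e-3, 1e-6, 1e-9):
    err_c = abs(central_difference(math.sin, 1.0, h) - exact)
    err_f = abs(forward_difference(math.sin, 1.0, h) - exact)
    print(f"h = {h:.0e}: central error {err_c:.1e}, forward error {err_f:.1e}")
```

The central error decreases like $h^2$ and the forward error like $h$, until round-off takes over for very small h. Round-off error is of order $\epsilon/h$, with $\epsilon$ the machine precision. This trade-off is why the approximation and its step size have to be analyzed in each application.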

4. Numerical Example

This application complements the one we used in [38]. We represent the stock of pollution as the controlled diffusion process with Markovian switching of the form

where the Markov chain has generator

and stands for the perception of society toward the current level of pollution at each time. It takes values in the set {1, 2}. So, if the Markov chain is initially in state 1, then, before its first jump from state 1 to state 2 at its first random jump time, the stock of pollution obeys the SDE

with the given initial state. At the first jump time, the Markov chain jumps to 2, where it stays until the next jump. During the period between these two jumps, the stock of pollution is driven by the SDE

with initial value equal to the stock of pollution at the first jump time; that is, the stock of pollution switches from (21) to (22). The stock of pollution continues to alternate between these two regimes indefinitely.

We also consider the pollution flow to be constrained. This means that the control variable takes values in a compact set U determined by a given constant. We introduce the reward rate function, which represents social welfare, defined by

whereas the cost and constraint rates are

where q is a positive constant. Clearly, (20) satisfies Assumption 1. The corresponding infinitesimal generator is

We use . It is easy to verify that , with , where .

Take such that , and note that for every , is continuous on the compact sets U and ; therefore, there exists a constant such that for all , and . So, Assumption 2 is satisfied.

In this problem, the payoff rate is

where λ is the Lagrange multiplier, the α-discounted expected payoff is

and the value function is

To find the optimal control and the value function given in (23), we need to solve (10) for each regime. The HJB equations associated with this example are

Assuming that a solution to (24) and (25) has the form

with suitable measurable functions, we get

and

Substituting these derivatives into (24) and (25), we obtain

with

Notice that the suprema in (26) and (27) are attained at

Thus, the solution can be written as

By Proposition 3, the optimal control is given by (28) and (29), and the value function is given by

For the numerical experiment, we test Algorithm 1 on the particular form of (1) given by (20), with a fixed generator for the continuous-time Markov chain embedded in (20). We also fix the remaining model parameters and take a given value as the true pollution decay rate θ. These data allow us to simulate (20) on a finite time interval, and, for the sake of comparison, the resulting trajectory is considered the real model (see Figure 1). Based on this information, discrete observations were obtained. Now, we suppose that θ is unknown and estimate it by means of the least square function LSE in (13) and (14). Substituting the drift of (20) into (13), we obtain the following estimator for each state of the Markov chain:

where

The dynamic system is governed by a stochastic differential equation with Markovian switching. It is therefore not possible to obtain a single estimate of θ, but rather a set of values, denoted as in (31). The number of these values depends on the number of jumps that occur in the observation interval. These approximations allow us to simulate the stochastic differential equation with Markovian switching again with the same jumps. The outputs of the approximate stochastic differential equation with Markovian switching and of the one with the true value of θ are displayed in Table 1.

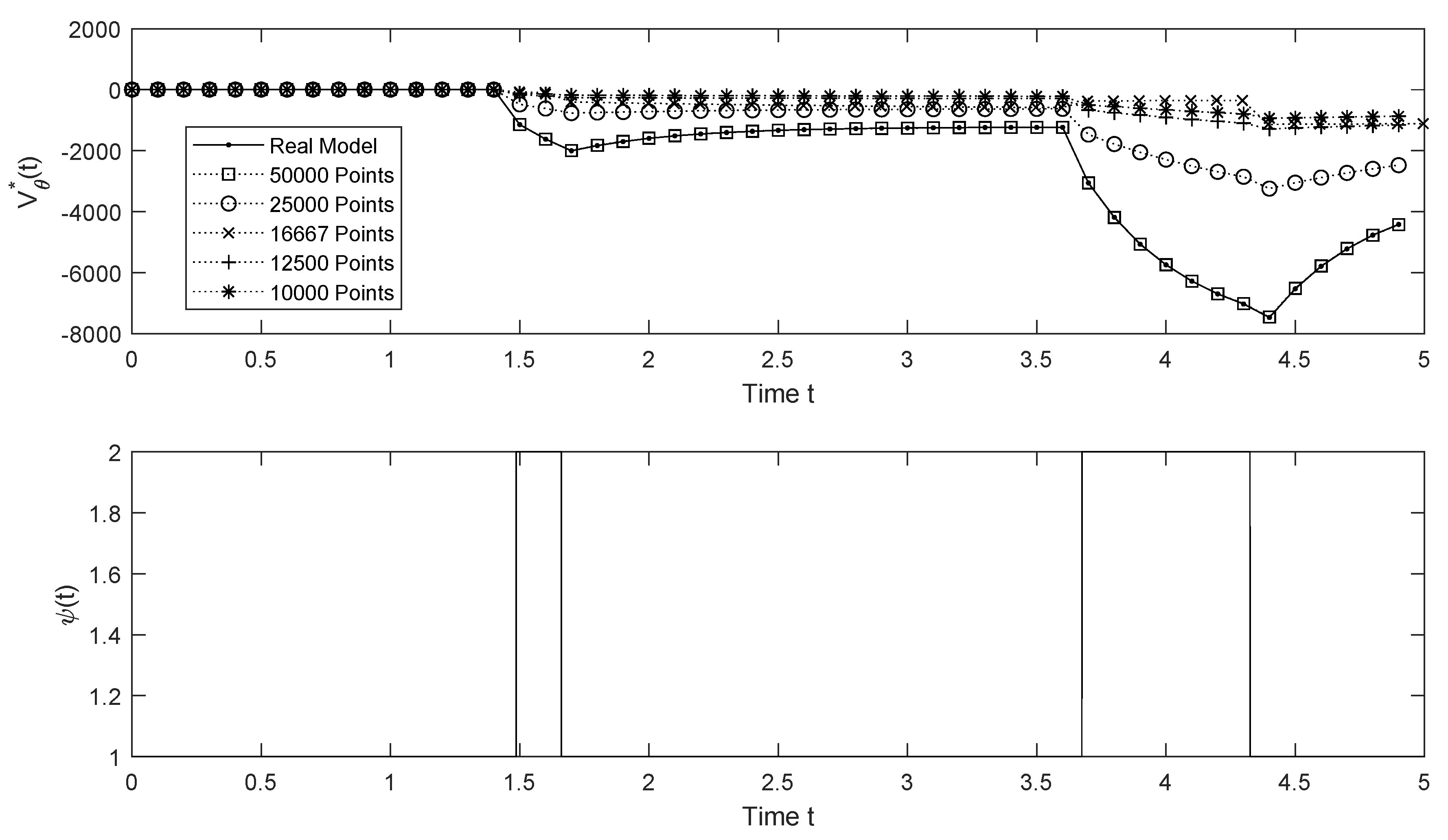

To graph the value function given in (30), we fix the model parameters and use the estimates of θ obtained with different numbers of observations, including 5000; see Figure 2.

The symbol denotes the estimates of the dynamic system, of the optimal control and of the value function obtained when the estimate of θ is used instead of θ.

We obtained discrete observations of (20) on the observation interval. Since the Markov chain is known, the vector of its jump times is known as well. The estimator used in each interval between consecutive jump times is the one given in (31).

Figures 1 and 2, together with Table 1, show that, as m increases, the estimator approaches the true parameter value θ, and the RMSE between the estimated and the actual trajectories of the pollution stock decreases, indicating a good fit.
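The RMSE comparison can be sketched as follows. To isolate the effect of the estimated parameter, both trajectories are driven by the same normal draws (common random numbers), in the spirit of re-simulating with the same jumps. For brevity, the sketch uses a single regime and the hypothetical model $dx = (u - \theta x)\,dt + \sigma\,dW$. The estimates 0.6, 0.55 and 0.51 are invented values approaching a true θ = 0.5.

```python
import math
import random

def rmse(estimates, actual):
    """Root-mean-square error between two sequences of equal, nonzero length."""
    if len(estimates) != len(actual) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((e - a) ** 2 for e, a in zip(estimates, actual)) / len(actual))

def euler_path(theta, noise, x0=1.0, u=1.0, sigma=0.1, dt=0.01):
    """Euler-Maruyama path of the hypothetical model dx = (u - theta x) dt + sigma dW,
    driven by a fixed list of standard normal draws (common random numbers)."""
    xs = [x0]
    for z in noise:
        xs.append(xs[-1] + (u - theta * xs[-1]) * dt + sigma * math.sqrt(dt) * z)
    return xs

rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(2000)]
reference = euler_path(0.5, noise)            # path with the "true" theta
for theta_hat in (0.6, 0.55, 0.51):          # hypothetical estimates approaching 0.5
    print(theta_hat, rmse(euler_path(theta_hat, noise), reference))
```

With common noise, the gap between the two paths is driven only by $\hat\theta - \theta$. The RMSE therefore shrinks roughly in proportion to the estimation error.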

Theorem 5.5 in [28] ensures that for a fixed point

such that

if the inequality

holds, then the mapping

admits a critical point

satisfying

Therefore, every

is

-optimal for the DPC and

; in particular, the

-optimal policy for the DPC is

of the form

and the

-optimal value for the DPC is given by
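The role of the critical point can be seen in a toy finite setting. The sketch makes three assumptions:

- three hypothetical stationary policies, each with a known discounted reward $J_r$ and cost $J_c$;
- a constraint of the form $J_c \le q$;
- the Lagrangian value $V(\lambda) = \max_\pi \{J_r - \lambda (J_c - q)\}$, which is convex in λ as a maximum of affine functions.

Golden-section search then locates the minimizer λ*. Unlike the differentiable mapping in Remark 5 (f), this toy V has a kink at λ*, so here the minimizer plays the role of the critical point.

```python
import math

def golden_section_min(f, lo, hi, tol=1e-9):
    """Minimize a unimodal (e.g. convex) function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0      # 1 / golden ratio, about 0.618
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) <= f(d):                        # minimizer lies in [a, d]
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:                                   # minimizer lies in [c, b]
            a, c = c, d
            d = a + inv_phi * (b - a)
    return 0.5 * (a + b)

# Hypothetical discounted (reward, cost) pairs of three candidate stationary policies,
# with the constraint taken as J_c <= q.
candidates = {"A": (10.0, 8.0), "B": (7.0, 4.0), "C": (3.0, 1.0)}
q = 5.0

def lagrangian_value(lam):
    """V(lam) = max over candidates of J_r - lam * (J_c - q): a maximum of affine
    functions of lam, hence convex."""
    return max(jr - lam * (jc - q) for jr, jc in candidates.values())

lam_star = golden_section_min(lagrangian_value, 0.0, 10.0)
print(round(lam_star, 6), round(lagrangian_value(lam_star), 6))  # 0.75 7.75

# At lam_star the maximizers A and B tie; randomizing with weight p on A so that the
# constraint is active (8p + 4(1 - p) = q) attains the value V(lam_star).
p = (q - candidates["B"][1]) / (candidates["A"][1] - candidates["B"][1])
mixed_reward = p * candidates["A"][0] + (1 - p) * candidates["B"][0]
print(p, mixed_reward)  # 0.25 7.75
```

At λ* two policies tie. A randomization between them that makes the constraint active attains $V(\lambda^*)$, while no feasible deterministic candidate does. The same mechanism makes randomized (relaxed) policies natural for constrained problems.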

5. Conclusions

We studied controlled stochastic differential equations with Markovian switching of the form (1), where the drift coefficient depends on an unknown parameter θ.

Two problems were analyzed, each under a corresponding reward criterion: the discounted unconstrained problem (DUP) and the discounted problem with constraints (DPC), each with its own optimal value function. Once a suitable procedure to estimate θ is available, it generates a sequence of estimators $\theta_m$ such that $\theta_m \to \theta$ as $m \to \infty$, and the results obtained guarantee the following:

For each initial state and parameter , almost surely for both problems.

For each estimate and problem (DUP or DPC), there are optimal policies .

There is a subsequence of policies and a policy such that , and, moreover, is optimal for the -OCP.

Similarly, for the DUP, there is a subsequence of policies and a policy such that , and is optimal for the -DUP. Moreover, if is a critical point of , then is optimal for the -DPC.

The numerical part is one of the strengths of this work. Indeed, it addresses both an estimation problem and a control problem. This task requires knowledge and storage of the optimal policies for all values of θ, which may take considerable offline execution time. In addition, we propose and implement an algorithm to approximate θ.

Finally, modeling the dynamics of the pollution stock as a controlled diffusion process with Markovian switching allows us to consider extra factors or elements that affect it. Such factors could be seen, in particular, as multiple pollution sources. An interesting challenge would be to pose this scenario as a multi-objective problem, where both the sources of contamination and the stock need to be minimized under certain restrictions. This could be done by adapting and defining a suitable multi-objective linear program (convex program) and guaranteeing the existence of a saddle point (or Pareto optimal policy), as studied in [43,44]. Another technique, the multi-objective evolutionary algorithm, combines multi-objective problems with statistical techniques to approximate Pareto optimal solutions, as in [45]. In both cases, extra techniques would still be needed because of the unknown parameter θ.