1. Introduction

With the vigorous growth of big data and large-scale data processing, traditional computing models can no longer meet daily computing needs [1]. Cloud computing and its associated virtualization have become the standard infrastructure for supporting Internet services and are the most vital architectures for providing cloud services to users [2]. In general, cloud computing has a service-oriented architecture, in which services are categorized by the layer provided to clients into IaaS (Infrastructure-as-a-Service), PaaS (Platform-as-a-Service), and SaaS (Software-as-a-Service) [3]. Specifically, IaaS provides essential computing, storage, and networking resources on demand. PaaS allows users to access hardware and software computing platforms, such as virtualized servers and operating systems, over the Internet. SaaS provides cloud users access to hosted applications and other hosted services over the Internet. In recent decades, many companies have attempted to consolidate their servers into virtual servers using a PaaS architecture to reduce server management and maintenance costs. For example, Bitbrains is a service provider specializing in hosted services and business computing for enterprises [4]. Its clients include many banks (ING), credit card operators (ICS), insurers (Aegon), etc. Bitbrains hosts applications in the solvency domain; examples of its application vendors are Towers Watson and Algorithmics.

PaaS cloud service providers need to gain insight into the relationship between the performance of the cloud service platform and the available resources, not only to meet users’ performance needs but also to fully utilize the infrastructure and resources of the cloud service platform. For cloud service users, performance evaluation quantitatively assesses the various cloud services to ensure that their needs are met. For example, users can quantitatively compare the same service offered by different cloud service providers through performance evaluation and select the best one. Generally, a PaaS cloud computing platform has a vast number of collaborating physical machines, each of which hosts multiple virtual machines (VMs). Additionally, performance evaluation of the integrated virtual platform during the design phase of a computer system can help the designer plan the system’s capacity [5]. However, with the rapid increase in data, every upgrade to software or hardware (e.g., CPU, memory) comes with high risk and expense [6]. Performance evaluation that assesses the utility of given upgrades facilitates cost reduction and construction optimization at a datacenter, while an erroneous analysis can ultimately lead to huge losses. Therefore, it is essential to estimate the system performance from the statistics of existing servers.

Cloud services differ from traditional hosting in three main aspects. First, the cloud provides service on demand; second, cloud services are elastic because users can use the services they want at any given time; third, the cloud provider fully manages the cloud services [7]. Queueing models provide an efficient way to simulate the behavior and evaluate the performance of a cloud datacenter. Queueing theory often models web applications as queues and VMs as facility (service) nodes [8]. The parameters of queueing models (e.g., arrival and service rates) can then be estimated under the assumption that tasks are served in a first-come-first-served (FCFS) manner [9]. Usually, a queueing model is written in Kendall's notation as $A/B/S/K$, where $A$ and $B$ represent the arrival and service distributions, respectively, and $S$ and $K$ are the number of service nodes and the queue capacity, respectively. For example, $M/M/1/K$ represents that the arrival and service times follow Poisson and exponential distributions, respectively, and that the number of servers and the queue length are one and $K$, respectively. Tasks sent to the cloud datacenter are usually served within a suitable waiting time and leave the queue when the service is over. However, despite their ability to represent the behavior of cloud datacenters, queueing models still face many challenging issues [10]. A cloud center usually has a large number of service nodes, whereas traditional queueing models rarely consider the system size. Besides, approximation methods are sensitive to the probability distributions of the arrival and service times and can be inaccurate. Furthermore, traditional queueing systems usually rely on observed inter-arrival times or waiting times to estimate the arrival rates for VMs. In a cloud datacenter, task arrivals and waiting times are difficult to monitor and collect.
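To make the $M/M/1/K$ example concrete, the following is a minimal sketch of its closed-form steady-state analysis for a constant arrival rate. The function names are hypothetical, and the sketch assumes that `capacity` counts every task in the system (the one in service plus those waiting), which may differ from the buffer convention used later in the paper.

```python
def mm1k_stationary(lam, mu, capacity):
    """Stationary distribution of the number of tasks in an M/M/1/K queue.

    Assumption: `capacity` is the maximum number of tasks in the system.
    """
    rho = lam / mu
    # Unnormalized stationary weights are rho**n for n = 0..capacity.
    weights = [rho ** n for n in range(capacity + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def mm1k_metrics(lam, mu, capacity):
    """Blocking probability, utilization, and mean response time."""
    probs = mm1k_stationary(lam, mu, capacity)
    blocking = probs[-1]                      # arriving task finds the system full
    mean_tasks = sum(n * p for n, p in enumerate(probs))
    effective_rate = lam * (1.0 - blocking)   # rate of accepted tasks
    return {
        "blocking": blocking,
        "utilization": 1.0 - probs[0],        # probability that the server is busy
        "mean_response": mean_tasks / effective_rate,  # Little's law
    }
```

With `capacity=1` there is no waiting room, so the mean response time reduces to the mean service time `1 / mu`, which is a quick sanity check on the formulas.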

As a solution, our previous work [11] proposed an approach to estimate the arrival intensity of computer systems from CPU utilization data alone. CPU utilization is one of the most commonly used statistics for monitoring CPU behavior during task execution, and most operating systems calculate it by default. In that work, the CPU behavior of an existing server was modeled by an $M_t/M/1/K$ queueing system, where the arrival stream follows a non-homogeneous Poisson process (NHPP) and the service time obeys an exponential distribution. The NHPP is the best-known generalization of the Poisson process, in which the arrival intensity is given as a function of time $t$ [12]. Therefore, an NHPP can approximate the arrival process of the tasks more accurately than a homogeneous Poisson process (HPP) [13]. A Poisson process is also a renewal process: its arrival epochs are the partial sums of independent, exponentially distributed inter-arrival times [14]. An alternative way to avoid the independent and identically distributed requirement is the Markovian arrival process (MAP) [15].

To reasonably plan the cloud computing platform and improve its performance, we aim to evaluate the performance of Bitbrains cloud servers in a PaaS architecture in this paper. More precisely, we use an $M_t/M/1/K$ queueing model to represent the CPU behavior of each VM, which can dynamically calculate the task arrival rate at different times. In addition, because the arrivals and waiting times of tasks in computer systems are difficult to monitor and collect, we estimate the arrival intensity of each VM in the cloud datacenter from the CPU utilization data. Finally, the performance of the integrated virtual platform is evaluated by applying the superposition technique of NHPPs. The main contributions are summarized as follows:

Performance evaluation of a cloud server: It is non-trivial and significant to evaluate the performance of a cloud datacenter (i.e., Bitbrains) to meet user performance needs and make full use of the resources of the PaaS cloud service platform.

Parameter estimation for a queueing model subject to NHPP arrival using CPU utilization data: An NHPP is used as the arrival process of the queueing model, which can dynamically calculate the task arrival rate at different times. Additionally, we use CPU utilization to estimate the parameters because of the unobservability of the task arrival process and waiting times of the computer system. CPU utilization is the most commonly used statistic, but task arrival and service processes are not visible.

Flexibility and scalability for performance evaluation: Any queueing model based on utilization data can be solved using our approach. In addition, our model can be combined with distributed computing to further improve the capability of performance evaluation.

The remainder of the paper is organized as follows. Related work is reviewed in Section 2. In Section 3, the cloud datacenter system (i.e., Bitbrains) is first introduced in detail, and then the EM algorithm-based parameter estimation method is proposed. In Section 4, we first estimate the parameters (i.e., the arrival intensity function) of the $M_t/M/1/K$ queueing model and then evaluate the performance of Bitbrains using only CPU utilization data. Finally, the paper is concluded in Section 5.

2. Literature Review

In recent years, data has exploded with the rapid growth of computers and the Internet. As one of the solutions to cope with the era of big data, cloud computing has gained wide attention and application. It is an extremely worthwhile task to evaluate the performance of cloud computing platforms. For cloud computing platform designers, performance evaluation can help them decide the size of system memory, the number of CPUs, etc. For cloud computing providers, performance evaluation can help them allocate facilities and resources appropriately. For users, it can help them choose the right provider.

2.1. Queueing Models as Solutions

Only a small part of the literature addresses the performance evaluation of cloud computing datacenters, and many researchers prefer to carry out such evaluations using queueing models [16,17,18,19,20]. Moreover, most of the literature estimates the parameters of the queueing model by collecting data such as queue lengths or waiting times. For example, Thiruvaiyaru et al. [16] collected queue length data and estimated the parameters of a queueing model. Ross et al. [19] collected queue length data at successive time points and estimated the parameters of a queueing system. Then, they generated density-dependent transition rates for Markov processes by placing the arrival rates in the same order as the number of servers. Liu et al. [20] calculated the queue lengths using the number of vehicles in the queueing system and proposed a real-time queue length estimation method based on probe vehicles. Besides queue lengths, waiting times are another commonly used type of data for estimating the parameters of queueing models, since they contain partial information about inter-arrival and service times. Basawa et al. [17] collected the waiting times of $n$ successive customers from two different queueing systems. Fischer et al. [18] used the Laplace transform method to approximate the distribution of waiting times and then estimated the parameters of a queueing model. For the performance evaluation of cloud computing systems, Khazaei et al. [10] modeled a cloud center as an $M/G/m/m+r$ queueing system. They used a combination of a transformation-based analytical model and an embedded Markov chain model to obtain a complete probability distribution of the response times and the number of tasks. Finally, they evaluated the performance of the system.

However, for observable queueing systems, the collection of queue lengths and waiting times consumes much time. For non-observable queueing systems, data such as queue lengths and waiting times, which directly reflect the queue information, are difficult to collect. For example, the arrival times, inter-arrival times, and waiting times of successive tasks are not observable in a cloud computing system.

CPU utilization is one of the most common statistics used to monitor CPU behavior during task execution, and most operating systems calculate it by default. However, unlike queue lengths and waiting times, CPU utilization is not observed continuously; the CPU is monitored only at certain time intervals. Therefore, CPU utilization data is incomplete data, which increases the difficulty of estimating the parameters of a queueing model. Moreover, utilization data does not reflect the queue information as directly as queue lengths do, but only implicitly. For example, a high CPU utilization indicates that many tasks are waiting to be processed in a queue or that the current task is taking a long time to process. Therefore, it is more challenging to estimate parameters and evaluate performance from CPU utilization data. To the best of our knowledge, there are no papers on queueing parameter estimation based on utilization data other than our previous work [11,15].

2.2. Non-Homogeneous Poisson Process

Most works assume that the arrival process of tasks is a homogeneous Poisson process (HPP) with a constant arrival rate. However, a homogeneous Poisson arrival process is not a good choice in practice. For example, traffic to a cloud computing datacenter is generally higher during working hours than in the evening, and higher on weekdays than on weekends. The arrival process of tasks usually varies dynamically with time, i.e., it is a non-homogeneous Poisson process (NHPP) [12]. In general, the parameters of queueing systems with NHPP arrivals are more difficult to estimate than those of HPP-based systems. Rothkopf and Oren [21] proposed an approximate method to estimate a dynamically varying arrival process of a queueing system. Heyman and Whitt [22] modeled a queueing system to study the asymptotic behavior under a time-varying arrival process. They defined an intensity function $\lambda(t)$ to represent the time-varying Poisson arrival rate. In addition, Green et al. [23] evaluated the performance of a queueing system with an NHPP arrival process and exponential service times. Pant et al. [24] assumed a queueing system in which customers arrive with a sinusoidal arrival intensity function.

2.3. Parameter Estimation of Queueing Models

Maximum likelihood estimation (MLE) is the most commonly used estimation method for queueing models [25,26,27]. Wang et al. [26] proposed a queueing model with $R$ servers and used MLE to estimate the HPP arrival rate and the rate of the exponentially distributed service time. Amit et al. [27] collected the number of customers in a queueing system and then estimated the traffic intensity by MLE. As stated above, CPU utilization data differs from directly observable data and is incomplete. Therefore, a statistical inference technique for incomplete data is required. The expectation-maximization (EM) algorithm [28] iteratively computes maximum likelihood estimates from incomplete data and is powerful for stochastic models with multiple parameters. An EM algorithm generally has two steps: the expectation step and the maximization step. The algorithm alternates between the two steps and stops when the log-likelihood converges. Wu [29] analyzed the convergence of the EM algorithm theoretically and showed that the EM sequence converges to the unique MLE when the likelihood function is unimodal and satisfies a differentiability condition. Rydén [30] estimated the parameters of a queueing model with a Markov-modulated Poisson process (MMPP) using MLE via an EM algorithm. Similarly, Basawa et al. [31] estimated the parameters of a queueing system from waiting times using an EM algorithm. Okamura et al. [32] defined group data and estimated the arrival and processing rates of a queueing system with a Markovian arrival process (MAP) using an EM algorithm-based approach.

In this paper, we propose a novel statistical inference technique for incomplete data to evaluate the performance of cloud datacenters. Empirically, the task arrival process in a datacenter often exhibits a cyclical and recurring nature. The cycle of this process can be a day, a week, a year, or another unit. For example, datacenter access is greater during business hours than in the early mornings and evenings, and weekday access is greater than weekend access. An NHPP can represent this cyclic characteristic better than an HPP. Therefore, we model each virtual machine (VM) of a cloud datacenter as an $M_t/M/1/K$ queueing system. Then, we estimate the parameters from CPU utilization data alone using the EM algorithm. In a cloud datacenter, CPU utilization is the most commonly used statistic to monitor the behavior of each VM. Because any computer operating system can calculate CPU utilization by default, we do not need to spend time collecting information such as queue lengths and waiting times in the queueing system.

3. Methodology

In this section, we first briefly introduce the Bitbrains cloud datacenter, whose system behavior can be modeled by an $M_t/M/1/K$ queueing model. Then, we define utilization data formally and approximate the NHPP by a series of HPPs. Finally, the details of the MLE optimization method based on an EM algorithm are described.

3.1. Bitbrains Cloud Datacenter

Bitbrains is a service provider that specializes in managed hosting and business computation for enterprises [33]. One of the typical applications of Bitbrains is financial reporting, which is used predominantly at the end of financial quarters. The workloads of Bitbrains follow a master-worker model, where the workers run Monte Carlo simulations [34]. For example, a customer would request a cluster of computing nodes to run such simulations. The request is accompanied by the following requirements: data transmission between the customer and the datacenter through a secure channel, computing nodes rented as VMs in the datacenter to provide predictable performance, and high availability for running critical business simulations.

Bitbrains uses the standard VMware provisioning mechanisms to manage computing resources, such as dynamic resource scheduling and storage dynamic resource scheduling. In general, Bitbrains consists of three types of VMs: management servers, application servers, and computing nodes. The management servers are used for the daily operation of customer environments. Application servers are used as database servers, web servers, and head nodes. Computing nodes are mainly used to compute and simulate financial risk assessments. The CPU utilization data of Bitbrains used in this work were collected between August and September 2013 in two traces, which are described in Table 1.

3.2. Collection of CPU Utilization Data

CPU utilization is the most commonly used statistic to monitor the behavior of each VM in the cloud datacenter Bitbrains. We denote the CPU utilization in each time interval of each VM in the cloud datacenter Bitbrains by $u$. CPU utilization can be considered as the ratio of the busy time to the total time of one monitoring period. The busy time is given by the cumulative time in which the server is processing a task. For each fixed time interval, a computer system or VM calculates this time fraction as the utilization. Parameter estimation from utilization data is more challenging than estimation from the data used in related work. Based on the behavior of the CPU utilization data, we assume that each time interval consists of an unobserved period followed by an observed period. Since the CPU cannot be monitored during an unobserved period, we can only collect CPU utilization data during an observed period. For the CPU, each observation period is short (only a few milliseconds), so there is at most one change from busy to idle or from idle to busy during each observation period. Formally, let $t_u$ and $t_o$ be the lengths of the unobserved and observed periods of each time interval, respectively, and let $B_t$ and $I_t$ be the lengths of the busy and idle times in time slot $t$. According to the above assumptions, the CPU utilization for one time interval can be defined as follows:

$$u = \frac{B_t}{B_t + I_t}, \qquad (1)$$

where $u$ indicates the CPU utilization for one time interval, $B_t$ and $I_t$ represent the lengths of the busy and idle times in $t$, respectively, and $0 \le u \le 1$.
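As an illustration of utilization as a busy-time fraction, the sketch below computes it from a list of busy intervals within one monitoring window. The function name and the interval representation are assumptions made for this example, and the busy intervals are assumed not to overlap each other.

```python
def cpu_utilization(busy_intervals, window_start, window_end):
    """Fraction of [window_start, window_end) during which the CPU is busy.

    `busy_intervals` is a list of (start, end) pairs, assumed non-overlapping.
    """
    if window_end <= window_start:
        raise ValueError("window must have positive length")
    busy = 0.0
    for start, end in busy_intervals:
        # Only the part of the busy interval inside the window counts.
        overlap = min(end, window_end) - max(start, window_start)
        if overlap > 0:
            busy += overlap
    return busy / (window_end - window_start)
```

For example, busy intervals `(0, 2)` and `(5, 6)` inside a window of length 10 give a busy time of 3 and therefore a utilization of 0.3.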

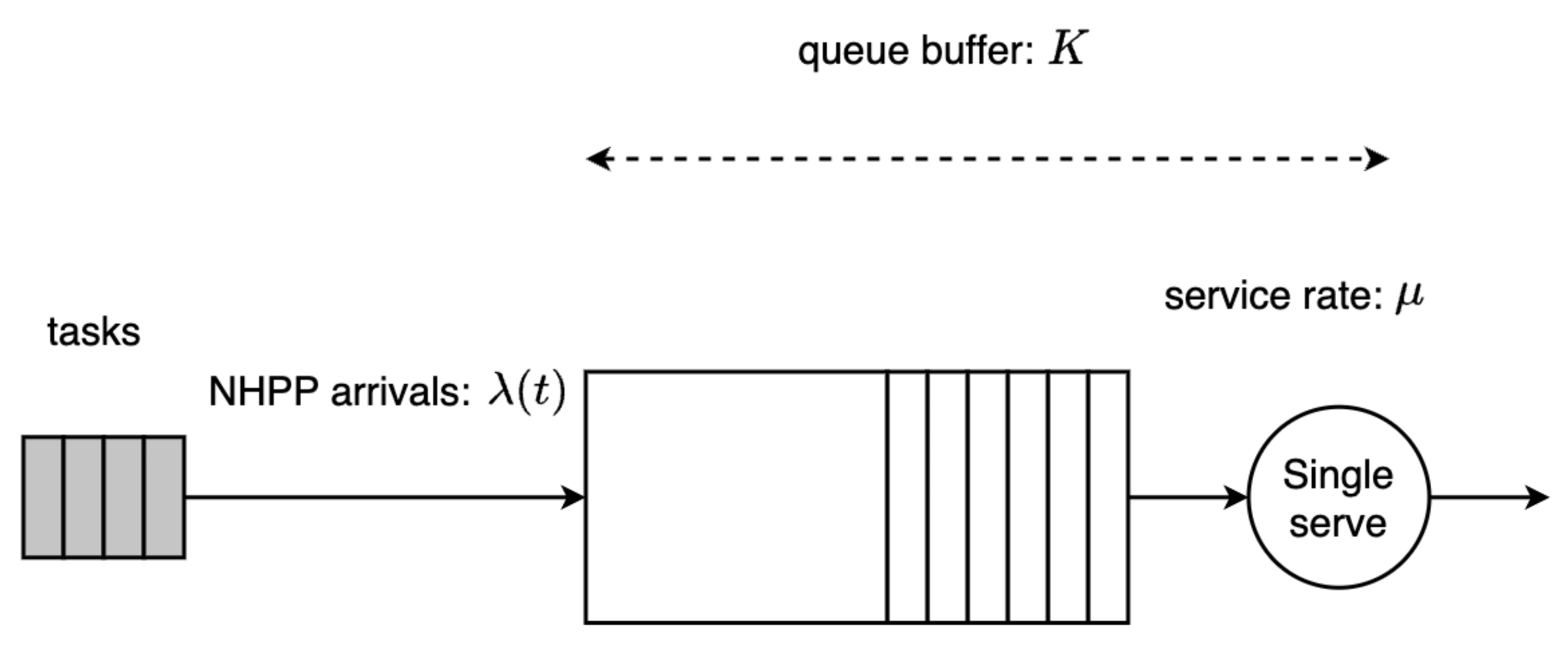

3.3. System Behavior as the Queueing Model

First, users send task requests to be processed by Bitbrains. Then, Bitbrains allocates computing resources for each task. Note that user tasks are independent of each other. When the CPU of the VM is idle, an arriving task is sent directly to the CPU for processing; otherwise, it waits in a buffer of size $K$. When the buffer is full, further arriving tasks cannot enter the system. Tasks waiting in the buffer are processed by the CPU in turn, and a served task leaves the VM once finished.

Therefore, the system behavior of Bitbrains can be represented by a queueing model: web applications are modeled as queues, and VMs are modeled as service nodes. We assume that the arrivals obey an NHPP with intensity function $\lambda(t)$ and that the service time follows an exponential distribution with rate $\mu$. As shown in Figure 1, the system can be modeled by an $M_t/M/1/K$ queueing model.

In queueing theory, a continuous-time Markov chain (CTMC) is usually used to formalize the behavior of a queueing model. For the $M_t/M/1/K$ queueing model, the infinitesimal generator matrix of the CTMC can be expressed as follows:
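For a fixed arrival rate, the generator of a single-server queue with finite capacity is tridiagonal: arrivals move the chain up one state and service completions move it down one state. The sketch below builds such a matrix under the assumption that the states are the number of tasks in the system, from 0 to `capacity`; the function name is hypothetical, and the time-varying case would use one such matrix per constant-rate period.

```python
def mm1k_generator(lam, mu, capacity):
    """Infinitesimal generator of a single-server queue with states 0..capacity.

    Assumption: constant arrival rate `lam` and service rate `mu`.
    """
    size = capacity + 1
    q = [[0.0] * size for _ in range(size)]
    for n in range(size):
        if n < capacity:
            q[n][n + 1] = lam      # arrival accepted: n -> n + 1
        if n > 0:
            q[n][n - 1] = mu       # service completion: n -> n - 1
        # The diagonal is still zero here, so this makes the row sum to zero.
        q[n][n] = -sum(q[n])
    return q
```

Each row sums to zero, as required of a CTMC generator, and in the full state no arrival transition exists, which models the rejection of tasks that find the system full.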

3.4. Approximate NHPP as a Series of HPPs

The time dynamics $\lambda(t)$ of an NHPP, often called the intensity function, is a function of time $t$ and is hard to estimate directly. To simplify the model and the estimation, the intensity function $\lambda(t)$ of an NHPP can be approximated by a series of independent HPPs. Specifically, assume that the total time for utilization data collection is $T$. $T$ can be divided into $n$ ($n \ge 1$) equal periods, and each divided time interval has length $\Delta t = T/n$. In practice, a large value of $n$ is chosen. The $n$ HPPs then approximate an NHPP with intensity $\lambda(t)$. In the $i$th ($i = 1, \ldots, n$) time interval, tasks arrive according to an HPP with a constant arrival rate $\lambda_i$. The approximated piecewise constant intensity function of the NHPP is

$$\lambda(t) = \lambda_i, \qquad (i-1)\,\Delta t \le t < i\,\Delta t, \quad i = 1, \ldots, n. \qquad (3)$$
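The piecewise constant approximation can be sketched as a small helper that maps a time point to the rate of the period containing it. The function name and the list-based representation of the rates are assumptions made for illustration.

```python
def piecewise_intensity(rates, total_time):
    """Return lambda(t) that is constant on each of len(rates) equal periods.

    `rates[i]` is the constant arrival rate of period i (0-based) on [0, total_time].
    """
    n = len(rates)
    if n == 0 or total_time <= 0:
        raise ValueError("need at least one rate and a positive total time")
    width = total_time / n

    def intensity(t):
        if not 0 <= t <= total_time:
            raise ValueError("t lies outside the data collection period")
        # The right end point t == total_time is assigned to the last period.
        index = min(int(t // width), n - 1)
        return rates[index]

    return intensity
```

With a single rate, the returned function is constant, which corresponds to the HPP special case.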

3.5. Parameter Estimation of the Queueing Model

According to Equation (3), the infinitesimal generator matrix of Equation (2) can be modified to $n$ independent infinitesimal generator matrices, and the

ith matrix can be denoted by

(

).

is defined as the

ith infinitesimal generator matrix with CPU states that transfer from idle to idle or from busy to busy.

is denoted by

Similarly,

is defined as the

ith infinitesimal generator matrix with CPU states that transfer from idle to busy or from busy to idle.

is represented as follows.

where

is zero matrix and,

Define utilization as

. The utilization data in

i-th period

is defined as

,

. Then the likelihood function can be formulated from utilization data by using MLE, as shown below:

With Equations (4)–(6), the items of Equation (8) can be expressed as

where

is the initial probability vector. Also

and

are

-by-

block matrices;

3.6. EM Algorithm for CPU Utilization Data

An EM algorithm is an effective machine learning algorithm that can be used for the parameter estimation of queueing models with incomplete data. As stated above, CPU utilization data is incomplete data, and each time interval is assumed to consist of an unobserved period followed by an observed period. Since data can be collected only during the observed period, we use the EM algorithm to estimate the parameters. An EM algorithm aims to find the MLE for the queueing model from incomplete observations. The two steps of an EM algorithm are described as follows:

Expectation step: The expected log-likelihood function is calculated using the posterior probabilities of the hidden variables. The equation is calculated as follows:

where

and

are the observed and missing data in an unobserved time interval, respectively. The

is a vector of parameters to be estimated.

Maximization step: The parameter

is updated by maximizing the expected log-likelihood function found in the expectation step. The equation is shown below:

Then, the parameter estimates from the maximization step are used as the initial parameters in the next expectation step to determine the distribution of the latent variables. Finally, the optimal parameters are obtained by iterating these two steps until convergence. The EM algorithm can represent the arrival rate $\lambda_{ij}$ by

$$\lambda_{ij} = \frac{\mathrm{E}[N_{ij}]}{\mathrm{E}[T_i]} = \frac{\mathrm{E}[N_{ij}^{u}] + \mathrm{E}[N_{ij}^{o}]}{\mathrm{E}[T_i^{u}] + \mathrm{E}[T_i^{o}]},$$

where $\lambda_{ij}$ is the arrival rate from state $i$ to state $j$, $N_{ij}$ is the number of transitions from state $i$ to state $j$, $T_i$ is the sojourn time in state $i$, $N_{ij}^{u}$ and $N_{ij}^{o}$ are the numbers of transitions from state $i$ to state $j$ in the unobserved and observed periods, respectively, and $T_i^{u}$ and $T_i^{o}$ are the sojourn times in state $i$ in the unobserved and observed periods, respectively.
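The update described above has the usual form for CTMC rates: expected number of transitions divided by expected sojourn time. The following sketch shows only this final ratio, assuming the expectation step has already produced the expected statistics for each monitoring interval; the function name and argument layout are hypothetical, and the computation of the expectations themselves is not shown.

```python
def m_step_rate(exp_trans_unobs, exp_trans_obs, exp_sojourn_unobs, exp_sojourn_obs):
    """Rate update from expected sufficient statistics.

    Each argument is a list with one entry per monitoring interval, holding
    the expected transition counts (i -> j) or expected sojourn times in
    state i for the unobserved and observed parts of that interval.
    """
    total_transitions = sum(exp_trans_unobs) + sum(exp_trans_obs)
    total_sojourn = sum(exp_sojourn_unobs) + sum(exp_sojourn_obs)
    if total_sojourn <= 0:
        raise ValueError("expected sojourn time must be positive")
    return total_transitions / total_sojourn
```

In an actual EM loop, this function would be called once per parameter in every iteration, with the expectations recomputed from the current estimates.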

3.7. The Number of Time Intervals

$n$ is the total number of divided time intervals in the data collection time $T$ and is a hyperparameter of the proposed approach. Different values of $n$ yield different models. If $n$ is too small, the estimated intensity function does not reflect the non-homogeneity property; for example, $n = 1$ means that the NHPP is simplified to an HPP. If $n$ is too large, the estimated intensity function overreacts to small fluctuations, resulting in overfitting. To choose an appropriate $n$, we use the Akaike Information Criterion (AIC) [35] to quantify the goodness of fit of the models. Note that a smaller AIC indicates a better model. Therefore, we choose the $n$ for which the AIC takes its minimum value. The formula is shown as follows:

$$\mathrm{AIC} = -2\,\mathrm{LLF} + 2\phi,$$

where LLF denotes the maximum value of the log-likelihood function and $\phi$ is the number of free parameters of the model.
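The selection of $n$ by AIC can be sketched as follows. The sketch assumes that the model with $n$ periods has $n$ free parameters (one arrival rate per period, with the service rate fixed); this parameter count and the function names are assumptions for illustration, and the log-likelihood values in the usage note are made up.

```python
def aic(max_log_likelihood, num_params):
    """Akaike Information Criterion: -2 * LLF + 2 * (number of free parameters)."""
    return -2.0 * max_log_likelihood + 2.0 * num_params


def select_n(log_likelihoods):
    """Pick the number of periods n with the smallest AIC.

    `log_likelihoods` maps n to the maximized log-likelihood of the model
    with n periods. Assumption: that model has n free parameters.
    """
    scores = {n: aic(llf, n) for n, llf in log_likelihoods.items()}
    best_n = min(scores, key=scores.get)
    return best_n, scores
```

For instance, `select_n({1: -100.0, 2: -98.0, 3: -97.9})` prefers `n = 2`: the small gain in likelihood from a third period does not offset the penalty for the extra parameter.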

3.8. Superposition of Arrival Intensities

In the PaaS environment, the cloud server of Bitbrains provides computer platforms (e.g., CPUs) as a service by using a hardware virtualization technique. Therefore, the arrival process for the non-virtual CPU can be estimated by a superposition of the arrival processes of the virtual servers. Formally, define $\lambda_i(t)$ as the arrival intensity of the NHPP for the $i$-th virtualized platform, and let $m$ be the number of virtualized platforms. Since the CPU task arrival processes for the virtualized platforms can be regarded as independent stochastic processes, the CPU task arrival process in the PaaS is an NHPP with intensity

$$\lambda_{\mathrm{sup}}(t) = \sum_{i=1}^{m} \lambda_i(t). \qquad (17)$$

The procedure for the parameter estimation of the $M_t/M/1/K$ model is summarized in Algorithm 1.

Algorithm 1. Parameter estimation procedure for the $M_t/M/1/K$ model

- Step 1: Divide the total time interval $[0, T]$ into $n$ fixed periods.
- Step 2: Approximate $\lambda(t)$ as a piecewise constant intensity function.
- Step 3: Determine the parameters by maximizing the LLF.
- Step 4: Select the optimal model by minimizing the AIC.
- Step 5: Evaluate the performance of the cloud datacenter (e.g., average response time).

Finally, we evaluate the performance of the integrated platform by using the estimated parameters, such as the arrival intensity and service rate.
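Because the superposition of independent Poisson processes is again a Poisson process whose intensity is the sum of the individual intensities, combining the per-VM estimates amounts to adding functions. A minimal sketch, with a hypothetical function name and made-up example intensities:

```python
def superpose(intensities):
    """Intensity of the superposition of independent NHPPs.

    `intensities` is a list of functions t -> arrival rate, one per VM.
    """
    def total_intensity(t):
        return sum(intensity(t) for intensity in intensities)

    return total_intensity


# Usage with two illustrative VMs: one constant-rate, one that slows down at t = 5.
vm_a = lambda t: 1.0
vm_b = lambda t: 2.0 if t < 5 else 0.5
combined = superpose([vm_a, vm_b])
```

Here `combined(1)` evaluates to 3.0 and `combined(6)` to 1.5, so the combined intensity is time-varying even though one of the components is homogeneous.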

4. Results

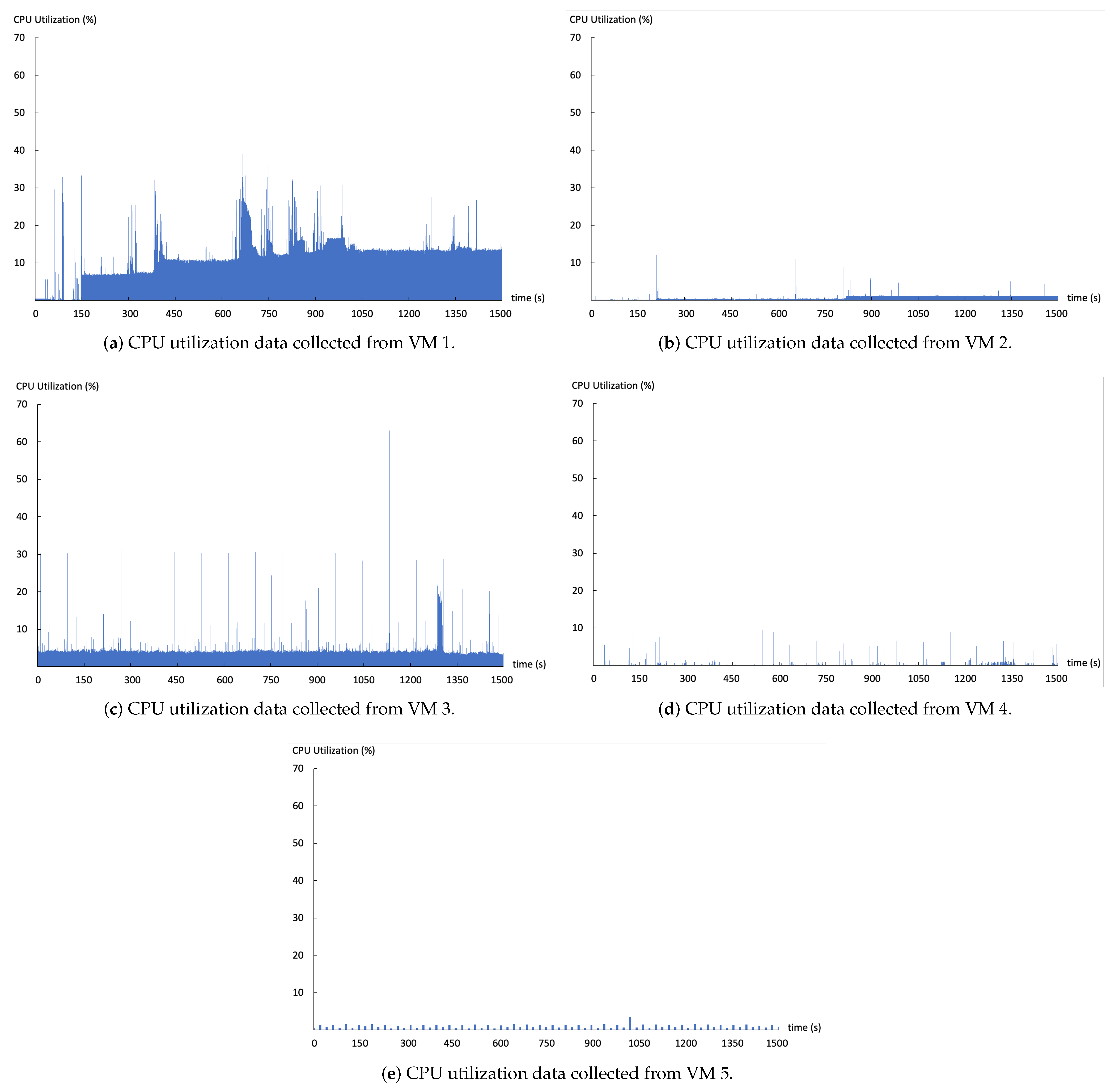

We randomly select five VMs from the trace of Bitbrains and estimate their arrival intensities of queueing model from CPU utilization data. Then, we evaluate the average response time of the superposed platform. The service rate of the exponential distribution is set as . Buffer size of the queueing model is set as . Because Bitbrains monitors CPU utilization every s, we fix the unobserved and observed time length to s and s, respectively.

Figure 2 demonstrates CPU utilization data collected from five VMs. The CPU utilization collected from VM 1, VM 2, and VM 3 is dense. Their CPU utilization values vary drastically in the interval [0, 0.2], [0, 0.02], and [0, 0.1]. Compared with VM 1, VM 2, and VM 3, the CPU utilization data collected from VM 4 and VM 5 are more sparse, with their utilization data varying in the interval [0, 0.1]. Moreover, the five sets of data seem to have a certain periodicity, which is one of the reasons we choose the NHPP as the arrival stream of Bitbrains.

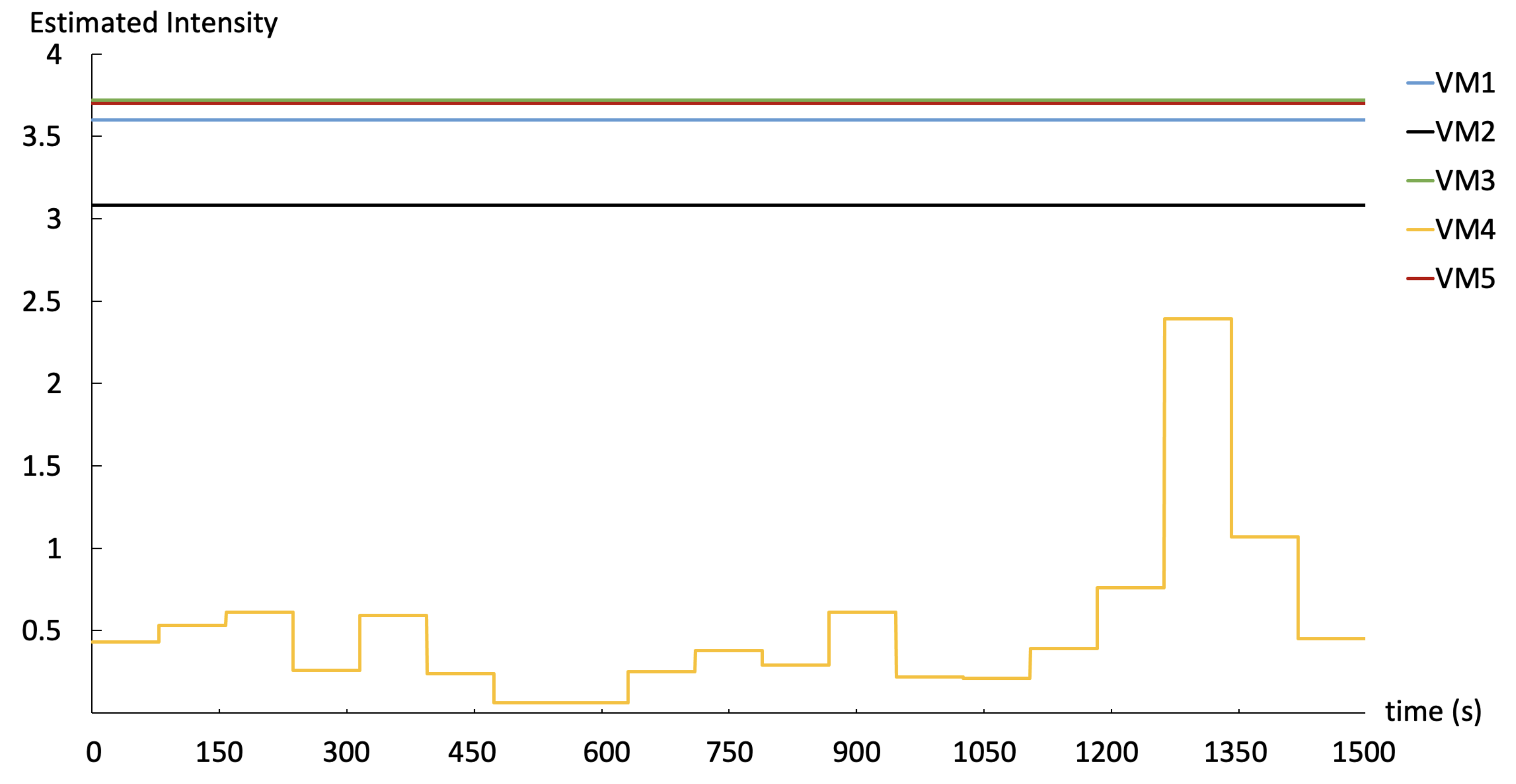

4.1. Results of Parameter Estimation

To obtain the optimal value of $n$, the AIC values are calculated. Table 2 lists the AIC values for different values of $n$ for the five VMs. From the table, the optimal number of time intervals for each of the five VMs is the one with the smallest AIC (i.e., 7448.08 for VM 1, 4428.04 for VM 2, 7953.98 for VM 3, 886.04 for VM 4, and 7957.62 for VM 5). The optimal values of $n$ are 1, 1, 1, 19, and 1 for the five VMs, respectively. The five estimated intensity functions are shown in Figure 3.

From Figure 3, we can see that the estimated task arrival rates for VM 1, VM 2, VM 3, and VM 5 are constant. In other words, these four VMs obey HPPs rather than NHPPs. Furthermore, since the AIC of VM 4 is smallest when $n = 19$, the NHPP arrivals of VM 4 can be approximated by 19 HPPs with different arrival rates, and the arrival rate reaches its maximum in the 17th time interval. In summary, the arrival rate estimated from the CPU utilization of VM 3 is the largest and the arrival process obeys an HPP, while the arrival rate estimated for VM 4 is the smallest and the arrival process obeys an NHPP.

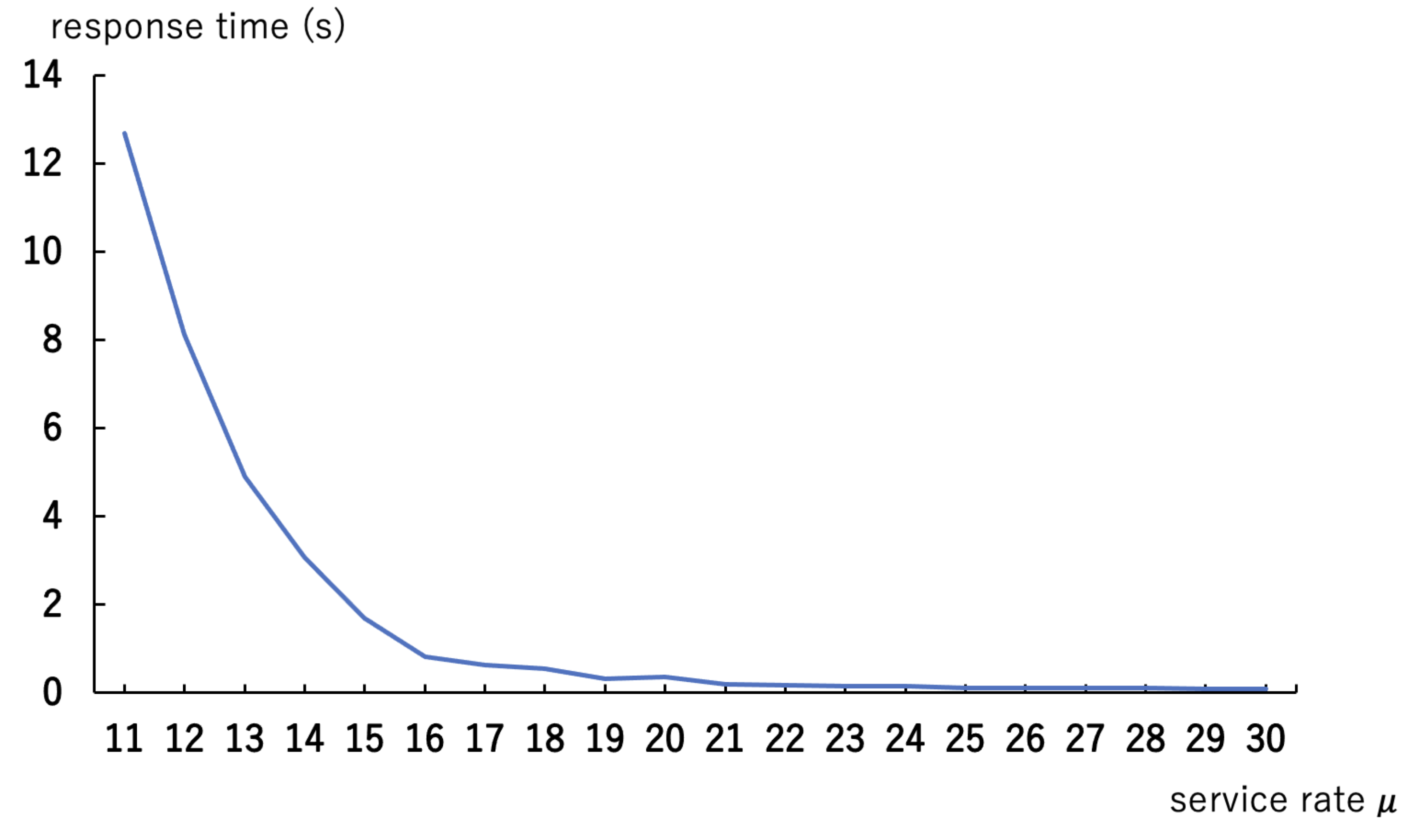

4.2. Results of Performance Evaluation

Finally, we evaluate the performance of the integrated system using the estimated arrival rates and intensity functions. According to Equation (17), we can calculate the integrated arrival intensity function. Using this function, we can simulate arrival streams whose arrival intensity obeys it and thus obtain the arrival time of each task in the arrival stream. Then, by simulating processing times that obey an exponential distribution, we can similarly obtain the CPU processing time for each task. Finally, using the arrival times and processing times, we can calculate the average response time of the arrival stream. The procedure is shown in Algorithm 2.

Algorithm 2. Performance evaluation for the $M_t/M/1/K$ model

- Step 1: Calculate the intensity function of the integrated system, which obeys an NHPP, according to Equation (17).
- Step 2: Simulate the arrival times according to this intensity function.
- Step 3: Simulate the service times from an exponential distribution with service rate $\mu$.
- Step 4: Calculate the average response time.
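The steps of Algorithm 2 can be sketched with the standard thinning method for simulating an NHPP, followed by a first-come-first-served recursion for the response times. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, `max_rate` is assumed to be an upper bound on the intensity over the whole horizon, and the finite buffer is ignored, so no task is ever rejected.

```python
import random


def simulate_nhpp(intensity, horizon, max_rate, rng):
    """Arrival times on (0, horizon] by thinning.

    Assumption: intensity(t) <= max_rate for all t in the horizon.
    """
    arrivals = []
    t = 0.0
    while True:
        t += rng.expovariate(max_rate)          # candidate from an HPP with rate max_rate
        if t > horizon:
            return arrivals
        if rng.random() * max_rate <= intensity(t):
            arrivals.append(t)                  # keep with probability intensity(t) / max_rate


def average_response_time(arrivals, service_times):
    """Mean response time of a single FCFS server with unlimited waiting room."""
    if not arrivals:
        raise ValueError("no arrivals to evaluate")
    server_free_at = 0.0
    total = 0.0
    for arrival, service in zip(arrivals, service_times):
        start = max(arrival, server_free_at)    # wait if the server is still busy
        server_free_at = start + service
        total += server_free_at - arrival       # waiting time plus service time
    return total / len(arrivals)


def evaluate(intensity, horizon, max_rate, mu, seed=0):
    """One simulation run: arrivals, exponential services, mean response time."""
    rng = random.Random(seed)
    arrivals = simulate_nhpp(intensity, horizon, max_rate, rng)
    services = [rng.expovariate(mu) for _ in arrivals]
    return average_response_time(arrivals, services)
```

Averaging `evaluate` over several seeds gives a smoother estimate, and repeating this for different values of `mu` reproduces the kind of sweep over service rates described in the results.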

Table 3 shows an example of the calculation of response times. During the processing of the first arrival (P1), P2 has to wait for 8 ms before it can be processed. In addition, the arrival time of P2 is 1 ms. Therefore, the response time of P2 is

ms. Similarly, the arrival of P3 has to keep waiting until P1 and P2 are served (i.e., after

ms). Since the arrival time of P3 is 2 ms, the response time of P3 is

ms.

We repeat the simulation ten times and calculate the average response times. The average response times of the superposition of the five VMs are evaluated for a range of service rates $\mu$. The results are given in Table 4 and, to make them more intuitive, plotted in Figure 4.

When the service rate is small, the average response times of the integrated system tend to be very large. As the service rate increases, its effect on the average response times becomes smaller. In other words, the designer of a cloud computing platform needs to provide at least 16 CPUs to meet user requirements. For a cloud computing provider, 16 CPUs are sufficient to provide cloud computing services. Therefore, 16 CPUs can be allocated to all users in the interval $[0, T]$. Users of cloud computing can choose an appropriate number of CPUs to balance performance and cost.

5. Conclusions

In this paper, we have modeled the behavior of five VMs of Bitbrains by using an $M_t/M/1/K$ queueing model. In particular, the model parameters were estimated by approximating an NHPP by a series of discrete HPPs and applying MLE with an EM algorithm. The performance of the integrated virtual platform was evaluated based on the superposition of the estimates for the five VMs.

However, our proposed approach has one main limitation. In general, a cloud datacenter contains a large number of physical machines and virtual machines. For each virtual machine, the arrival and service rates of the tasks can be calculated independently. Therefore, the parameters of each virtual machine can be estimated in a distributed manner. However, due to hardware limitations, we could not compute the parameters of every virtual machine. In this paper, we estimated the parameters for five VMs.

In the future, we would like to address the above limitation first. We would like to evaluate the arrival processes of all the VMs in Bitbrains in a distributed manner offline. Due to the fundamental weaknesses of the EM algorithm, the iterative convergence process is time-consuming. Therefore, we would choose a faster optimizer, such as Adam, for the parameter estimation. Finally, the performance of the whole cloud computing platform can be evaluated, which is meaningful for cloud service providers and users.

In addition, a MAP assumption will be considered to estimate the arrival rates and evaluate the system performance. As a generalization of an NHPP, a MAP takes account of the dependency between consecutive arrivals and is often used to model complex, bursty, and correlated traffic streams. Therefore, we would like to concentrate on the MAP parameter estimation of quasi-birth-death queueing systems using utilization data.