Abstract

This paper presents a method for unsupervised classification of entities by a group of agents with unknown domains and levels of expertise. In contrast to existing methods based on majority voting (“wisdom of the crowd”) and their extensions by expectation-maximization procedures, the suggested method first determines the levels of the agents’ expertise and then weights their opinions by these levels. In particular, we assume that agents produce relatively close classifications in their fields of expertise. Therefore, the expert agents are recognized by using a weighted Hamming distance between their classifications, and then the final classification of the group is determined from the agents’ classifications by expectation-maximization techniques, with preference given to the recognized experts. The algorithm was verified and tested on simulated and real-world datasets and benchmarked against known existing algorithms. We show that the method reduces incorrect classifications and effectively solves the problem of unsupervised collaborative classification under uncertainty, while outperforming other known methods.

MSC: 68T37

1. Introduction

Classification under uncertainty by a group of agents is a common task that appears in different fields. In some applications it is formulated as a labeling process of similar entities (also called “instances”), while in others it is formulated as a clustering procedure. For example, consider a group of physicians analyzing the medical records of a patient. Each physician analyzes the symptoms of the patient and diagnoses possible diseases, thus classifying or tagging the case with the disease name. The final diagnosis of the group is made based on the collective classifications provided by the group members. Naturally, with prior knowledge of the expertise of each physician, a larger weight can be given to those physicians who are experts in the specific disease. Note, however, that reaching a collective decision becomes more challenging when there is no prior knowledge of the agents’ expertise. This can be the case when ad hoc classifications are obtained from online surveys and questionnaires answered by anonymous users with different, unknown expertise levels.

One of the most popular methods for reaching a collective classification from a group of agents’ answers is known as the “wisdom of the crowd” (WOC). According to this approach, a decision can be reached based on the aggregated opinion of the agents, including both experts and non-experts [1]. WOC is usually based on a majority (or plurality) vote, meaning that the opinion preferred by most of the agents is considered to be the correct answer. The main assumption of WOC is that the agents’ answers are distributed somewhat symmetrically around the unknown true answer. Therefore, it makes sense to apply a majority vote procedure to obtain better accuracy (i.e., relying on the law of large numbers). Numerically, the plurality vote corresponds to the mode of the agents’ labels (for two classes, this coincides with the median), and for a relatively large number of non-skewed agents, it effectively solves the group classification problem. Another setting where a majority vote is effective is when the agents who make the classifications have high and homogeneous levels of expertise in the considered field.

Nonetheless, in various settings, the WOC assumption does not hold. For example, in online questionnaires on specialized topics, only a few of the users are real experts in the field, while most are non-experts, and considering their opinions can seriously reduce the accuracy of the collective classification.

In this paper, we focus on ad hoc classification by a group of agents with different and unknown levels of expertise. The suggested algorithm includes two stages:

- Classification of the agents according to the levels of their expertise;

- Classification of the entities with respect to the agents’ levels of expertise.

In other words, in the first stage, the algorithm recognizes the experts in the fields of the presented entities, and in the second stage it classifies the entities, preferring the opinions of these experts (for example, using some weighting scheme or expectation-maximization scheme).

In the classification of the agents, we assume that agents with the same fields of expertise have relatively close or even identical opinions in their field of expertise, while the non-experts’ opinions (if they are not biased) are more scattered over other possible classifications. Accordingly, if agents propose similar classes for the same entities, then these agents are considered to be experts in these classes. Consequently, a lower level of expertise is associated with agents who are inconsistent in their opinions and create classes that differ from the classes proposed by the other agents. Certainly, if the levels of the agents’ expertise are known, then this stage can be omitted, and the problem reduces to weighted majority or plurality voting and further optimization procedures.

In the classification of the entities, one can utilize conventional methods, such as a combination of the weighted agents’ classifications. We follow the expectation-maximization (EM) approach suggested by Dawid and Skene [2]. In the expectation (E) step, the algorithm estimates the correct choices according to the agents’ expertise, and in the maximization (M) step, it maximizes the likelihood of the agents’ expertise with respect to the distances from the estimated correct choices. To measure the distances between the agents’ classifications, we use the weighted Hamming distance, which is a normalized distance over the set of partitions that represent the agents’ classifications.

The suggested algorithm was validated and tested using simulated and real-world datasets [3,4]. The obtained classifications were compared against several approaches: (i) classifications obtained by a brute-force likelihood-maximization (LM) algorithm (see Section 4), (ii) majority vote (see Section 6.2.1), (iii) the recently developed fast Dawid–Skene (FDS) algorithm [5], and (iv) the widely known GLAD classification algorithm [6]. It was found that the proposed algorithm considerably outperforms these popular methods, achieving higher accuracy with lower computation time.

The rest of this paper is organized as follows: In Section 2, we briefly overview the related methods that form a basis for the suggested techniques. Section 3 includes a formal description of the considered problem. In Section 4, we outline the brute-force likelihood-maximization algorithm, which is used as a reference for classifying small datasets. Section 5 presents the suggested distance-based collaborative classification (DBCC) algorithm. Section 6 includes the results of the numerical simulations and the comparisons of the proposed DBCC algorithm with other classification techniques. Section 7 concludes the paper.

2. Related Work

In terms of classification problems, aggregation of the agents’ opinions is often treated as an application of crowdsourcing techniques. Chiu et al. [7] considered decision-making processes with crowdsourcing and outlined three potential roles of the crowd: intelligence (problem identification), design (alternative solutions), and choice (evaluation of alternatives). Each of these problems can be addressed by different methods: in particular, by recognizing the crowd’s preferences and choosing among the alternatives based on these preferences or, in contrast, by collecting as many alternative opinions as possible and then aggregating them into a unified one.

Crowd opinion aggregation is conducted by several methods, depending on the problem setting. For example, Ma et al. [8] (2014) developed an algorithm for gradual aggregation based on measuring the distances between opinions at three similarity levels. In these problems, following the “wisdom of the crowd” (WOC) approach, the most commonly used aggregation technique is expectation maximization (EM) [9], which was also implemented in the well-known Dawid–Skene (DS) algorithm [2]. This algorithm was first applied to analyze error rates and was then extended to different problems that required an aggregation of opinions. In particular, Zhang et al. [10] proposed a two-stage version of the algorithm and justified its performance using spectral methods. Shah et al. [11] considered a permutation-based model and introduced a new error metric that compares different estimators in the DS algorithm. Finally, Sinha et al. [5] suggested a fast version of the DS algorithm (termed the FDS algorithm). At its expectation step (E-step), the true labels of the dataset are estimated based on the current values of the parameters, while at its maximization step (M-step), the values of the parameters are chosen such that the likelihood of the dataset is maximized. Starting from the initial estimates, the algorithm alternates between the M-step and the E-step until the estimates converge to a unified decision.
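To make this alternation concrete, the following is a minimal sketch of a hard-assignment (classification) EM in the spirit of FDS. It is not the reference implementation of Sinha et al.: the function name `hard_em_dawid_skene`, the integer encoding of labels, the plurality-vote initialization, and the add-one smoothing are assumptions made for illustration.

```python
import math

def hard_em_dawid_skene(labels, m, max_iter=50):
    """Hard-assignment EM sketch in the spirit of FDS (hypothetical helper).

    labels[k][i] is the class index (0..m-1) that agent k assigns to entity i.
    Returns one estimated class index per entity.
    """
    K, n = len(labels), len(labels[0])
    # Initial estimate: plurality vote, ties broken toward the smaller index.
    est = []
    for i in range(n):
        votes = [sum(1 for k in range(K) if labels[k][i] == j) for j in range(m)]
        est.append(max(range(m), key=lambda j: votes[j]))
    for _ in range(max_iter):
        # M-step: class priors and per-agent confusion matrices from the current
        # hard labels, with add-one smoothing so no probability is exactly zero.
        prior = [(est.count(j) + 1) / (n + m) for j in range(m)]
        conf = []
        for k in range(K):
            counts = [[1.0] * m for _ in range(m)]
            for i in range(n):
                counts[est[i]][labels[k][i]] += 1
            conf.append([[c / sum(row) for c in row] for row in counts])
        # Hard E-step: move every entity to its most probable true class.
        new = []
        for i in range(n):
            score = [math.log(prior[j])
                     + sum(math.log(conf[k][j][labels[k][i]]) for k in range(K))
                     for j in range(m)]
            new.append(max(range(m), key=lambda j: score[j]))
        if new == est:  # converged: the labeling no longer changes
            break
        est = new
    return est
```

For example, with three agents that agree and one that labels every entity differently, the sketch keeps the three agents' labeling.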

In parallel to the development of the DS method, other studies have focused on the data analysis phase of the problem, as well as on possible extensions of the method to multilabel classification. Following this direction, Duan et al. [12] proposed three statistical quality control models based on the DS algorithm. The authors incorporated label dependency to estimate the multiple true labels of each instance (entity) from crowdsourced multilabel annotations. Another approach, based on Bayesian models, was suggested by Wei et al. [13], who considered the agents’ reliability and the dependency between classes.

The EM-based methods were also enriched by learning methods to obtain better classifications. In particular, techniques based on multiple Gaussian processes make it possible to learn from the agents and to estimate the reliability of the individual agents from the data without any prior knowledge. Groot et al. [14] and Rodrigues and Pereira [15] introduced different models based on standard Gaussian process classifiers that explicitly handle multiple agents with different levels of expertise.

Other methods for estimating the agents’ expertise levels are based on probabilistic models. Using such an approach, Whitehill et al. [6] proposed a procedure for determining the agents’ expertise (called the GLAD algorithm), while Raykar et al. [16] suggested a method for estimating the true class labels. Bachrach et al. [17] proposed a probabilistic graphical model that considers the entities, the agents’ expertise, and the true labels of the entities. Finally, since the considered problem can be viewed as unsupervised learning, Rodrigues and Pereira [15] addressed it as a problem of deep learning from crowd opinions using neural networks. Moayedikia et al. [18] proposed an unsupervised approach based on optimization methods using the “harmony search” over different agent combinations.

Following the work of Chiu et al. [7], the present paper focuses on the evaluation of classification alternatives, where the crowd preferences are identified and analyzed ad hoc to support the decision-making process. This study presents a novel heuristic that follows the direction outlined in the DS algorithm and its faster version, FDS. It addresses the problem of unsupervised classification for a relatively small number of entities and varying levels of the agents’ expertise. The performance of the suggested heuristic is compared with several known approaches, especially the popular majority voting method and the FDS algorithm.

3. Problem Setup

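Let $A = \{a_1, \ldots, a_n\}$ be a set of $n$ entities that represent certain characteristics of some phenomenon, and let $l_1, \ldots, l_m$ be the labels by which the set of entities can be divided into $m$ classes $C_1, \ldots, C_m$, such that $C_1 \cup \cdots \cup C_m = A$ and $C_j \cap C_{j'} = \emptyset$ while $j \neq j'$. The set of the correct classes forms an ordered partition $\mathcal{C} = \{C_1, \ldots, C_m\}$, where the order of the classes is defined by the order of the labels, in the sense that if the labels $l_j$ and $l_{j'}$ satisfy $l_j < l_{j'}$, then class $C_j$ precedes class $C_{j'}$ in $\mathcal{C}$.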

We assume that the classification of the entities is conducted by $K$ agents. Each $k$th agent, $k = 1, \ldots, K$, generates a partition $\mathcal{C}^k = \{C^k_1, \ldots, C^k_m\}$ of the set $A$ by labeling the entities, and this partition represents the agent’s opinion on the considered phenomenon. Similar to the partition $\mathcal{C}$, the order of the classes in the agents’ partitions $\mathcal{C}^k$ is defined by the order of the labels $l_1, \ldots, l_m$. It is assumed that the agents are independent in their opinions. However, different agents $k$ and $r$, $k \neq r$, can generate equivalent classifications $\mathcal{C}^k = \mathcal{C}^r$, where $C^k_j = C^r_j$, $j = 1, \ldots, m$. In addition, it is assumed that for each class $C_j$, $j = 1, \ldots, m$, there exists at least one agent who is an expert in this class. This assumption implies that, if the correct classification were available, class $C^k_j$ in the classification $\mathcal{C}^k$ of an expert agent $k$ would be equivalent to class $C_j$ in the correct classification $\mathcal{C}$.

The considered problem is formulated as follows: given the set $A$ of entities and the set of classifications $\mathcal{C}^1, \ldots, \mathcal{C}^K$ created by the agents using $m$ labels, find a classification $\hat{\mathcal{C}} = \{\hat{C}_1, \ldots, \hat{C}_m\}$ that is as close as possible to the unknown correct classification $\mathcal{C}$.

To clarify the problem, let us consider a toy example of the dataset presented in Table 1. The dataset consists of a set of entities classified by six agents into four classes. The table also shows the unknown correct classification $\mathcal{C}$ and the classification obtained by the majority vote.

Table 1.

Example of simulated data with the correct classification and the majority vote for six agents classifying the entities into four classes.

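The agents’ columns in the table correspond to the classifications $\mathcal{C}^1, \ldots, \mathcal{C}^6$, where each table entry represents the classification of an entity by an agent to one of the classes $C_1$, $C_2$, $C_3$, and $C_4$. The actual table entries are the tags of the corresponding classes, namely, the labels $l_1$, $l_2$, $l_3$, and $l_4$.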

In this example, we assume that the first agent is an expert in class $C_1$, the second agent is an expert in class $C_2$, the third agent is an expert in classes $C_1$ and $C_2$, the fourth agent is an expert in class $C_3$, the fifth agent is an expert in class $C_4$, and, finally, the sixth agent is an expert in the last two classes, $C_3$ and $C_4$. The data are summarized in Table 1.

The results of the comparison of the agents’ classifications with the correct classification appear in the eighth column of Table 1. It can be seen that each agent provides a classification that is rather far from the correct classification $\mathcal{C}$. Similarly, the classification in the last column of Table 1, generated by the majority vote, is also far from the correct classification (with an accuracy level of ). Thus, majority voting does not work well in this case, since the agents’ classifications are not symmetrically distributed around the correct classes. Note, however, that the classification produced by the proposed algorithm presented in Section 5, which classifies the entities according to the agents’ expertise (unknown a priori), is equivalent to the correct classification $\mathcal{C}$, i.e., it results in a 100% accurate classification, with the following experts:

- First agent: class $C_1$;

- Second agent: class $C_2$;

- Third agent: classes $C_1$ and $C_2$;

- Fourth agent: class $C_3$;

- Fifth agent: class $C_4$;

- Sixth agent: classes $C_3$ and $C_4$.

Thus, by identifying the expert agents, a correct classification can be achieved (see the application of the proposed algorithm to Table 1 at the end of Section 5).

Note, again, that in the considered setup both the correct classification and the agents’ levels and fields of expertise are unknown, and this information should be estimated only from the agents’ classifications. As seen later, the recognition of the expert agents is based on the assumption that experts in the same field of expertise provide closer answers than the answers of the non-expert agents.

4. Local Search by Likelihood Maximization

Inspired by the considered example, where the best classification is provided by considering the opinions of the experts, we start with an algorithm that provides an exact solution by maximization of the expected likelihood between the agents’ classifications. This algorithm follows the brute force approach and, because of its high computational complexity, it can be applied only to small datasets.

Let $A = \{a_1, \ldots, a_n\}$ be a set of entities and $\mathcal{C}^k$, $k = 1, \ldots, K$, be the agents’ classifications, while the correct classification $\mathcal{C}$ is unknown to the agents.

Let $c^k_i$ be the tag with which the $k$th agent labeled entity $a_i$ (see the columns in Table 1); in other words, the values $c^1_i, \ldots, c^K_i$ are the opinions of the agents about that entity, and $c^k_i = l_j$ denotes that, in the classification $\mathcal{C}^k$ of agent $k$, entity $a_i$ is in class $C^k_j$.

Assume that, in the correct classification $\mathcal{C}$, an entity $a_i$ belongs to class $C_j$. Since $\mathcal{C}$ is unknown, we consider the probability $\pi^k_{jl}$ that the $k$th agent classifies an entity as a member of class $C_l$ while the correct class is $C_j$, and $\Pi^k = (\pi^k_{jl})_{m \times m}$ denotes the probability matrix that includes the opinions of the $k$th agent on the membership of the entities $a_i$, $i = 1, \ldots, n$, in the classes $C_j$, $j = 1, \ldots, m$. If agent $k$ is completely reliable, then $\Pi^k$ is a unit matrix. In general, the agent is considered to be an expert in class $C_j$ if $\pi^k_{jj}$ is close to one, while $\pi^k_{jl}$ and $\pi^k_{lj}$ are close to zero for all $l \neq j$.

Finally, we denote by the probability that class includes at least one entity. Then, if is an estimated class for the entity , then is the probability that entity will be classified to class . Additionally, we denote by the label associated with class . Similarly, denotes the correct class; for the th entity , the value is the probability that the entity will be correctly included in the class .

Using these terms, the classification problem can be formulated as a problem of finding the classes $\hat{C}_j$, $j = 1, \ldots, m$, the matrices $\Pi^k$, $k = 1, \ldots, K$, and the probabilities $p_j$ that maximize the likelihood function

In other words, it is required to maximize the value of the likelihood function

with respect to its arguments and subject to the relevant conditions:

An approximate solution of this problem can be defined as follows:

where $\mathbb{I}(\cdot)$ is an indicator function that equals $1$ if its condition holds and $0$ otherwise. The approximate solution can be obtained, for example, by the majority vote (see Section 6.2.1), which can also be used as an initial solution in the considered optimization algorithm.
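The indicator-based counting can be sketched as follows, assuming class labels are encoded as integers $0, \ldots, m-1$ and that the provisional labeling comes from a majority vote. The names `estimate_parameters` and `expert_classes` and the threshold 0.9 are hypothetical; the threshold only illustrates the criterion that $\pi^k_{jj}$ should be close to one.

```python
def estimate_parameters(labels, est, m):
    """Indicator-count estimates of the class probabilities p_j and the
    confusion matrices pi^k_{jl} from a provisional labeling `est`.

    labels[k][i]: class index that agent k assigns to entity i.
    est[i]:       provisional true class of entity i (e.g. a majority vote).
    Rows of classes that receive no entity in `est` are left uniform.
    """
    n = len(est)
    prior = [sum(1 for i in range(n) if est[i] == j) / n for j in range(m)]
    conf = []
    for agent in labels:
        matrix = []
        for j in range(m):
            members = [i for i in range(n) if est[i] == j]
            if not members:
                matrix.append([1.0 / m] * m)
            else:
                # Fraction of class-j entities that this agent labeled l.
                matrix.append([sum(1 for i in members if agent[i] == l) / len(members)
                               for l in range(m)])
        conf.append(matrix)
    return prior, conf

def expert_classes(matrix, threshold=0.9):
    """Hypothetical rule: the agent counts as an expert in class j when pi_jj >= threshold."""
    return [j for j, row in enumerate(matrix) if row[j] >= threshold]
```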

The proposed algorithm, which aims to solve optimization problem (1) by local search, is outlined as follows (Algorithm 1).

Algorithm 1: Likelihood Maximization

Given the set of items $a_i$, $i = 1, \ldots, n$, and the set of the agents’ classifications $\mathcal{C}^k$, $k = 1, \ldots, K$, do:

Following the outlined algorithm, an initial solution is refined iteratively until reaching the maximal expected likelihood. Such a method can provide an optimal solution to the problem; however, it requires high computation power and can be implemented only for relatively small problems. The time complexity of the Algorithm 1 is , where is the number of entities, is the number of agents, is the number of classes, and is the number of iterations until algorithm convergence. Here, is the number of repetitions of lines 5–8 in the while loop, where a maximum of classes are defined for each entity of a maximum of entities, and the optimization problem is solved by steps. Since the number of classes is at most equal to the number of items , the complexity of the Algorithm 1 in the worst case is .
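The steps of Algorithm 1 are not reproduced in this text, so the following is only a sketch of the local-search idea under stated assumptions: the objective is a Dawid–Skene-type complete-data log-likelihood with add-one smoothing, the parameters are re-estimated from each candidate labeling, and one entity is relabeled at a time while the objective strictly increases. The function names are hypothetical.

```python
import math

def complete_log_likelihood(labels, est, m, alpha=1.0):
    """Complete-data log-likelihood of a hard labeling `est` under a
    Dawid-Skene-type model whose priors and confusion matrices are
    re-estimated from `est` with add-alpha smoothing (an assumption)."""
    n = len(est)
    sizes = [est.count(j) for j in range(m)]
    # Class-probability term.
    ll = sum(math.log((sizes[j] + alpha) / (n + alpha * m)) for j in est)
    # Confusion-matrix term for every agent.
    for agent in labels:
        table = [[alpha] * m for _ in range(m)]
        for i in range(n):
            table[est[i]][agent[i]] += 1
        for i in range(n):
            row = table[est[i]]
            ll += math.log(row[agent[i]] / sum(row))
    return ll

def local_search(labels, m):
    """Relabel one entity at a time while the log-likelihood increases."""
    n = len(labels[0])
    # Initial solution: plurality vote, ties broken toward the smaller index.
    est = []
    for i in range(n):
        votes = [sum(1 for agent in labels if agent[i] == j) for j in range(m)]
        est.append(max(range(m), key=lambda j: votes[j]))
    best = complete_log_likelihood(labels, est, m)
    improved = True
    while improved:
        improved = False
        for i in range(n):
            for j in range(m):
                if j == est[i]:
                    continue
                candidate = est[:i] + [j] + est[i + 1:]
                value = complete_log_likelihood(labels, candidate, m)
                if value > best + 1e-12:  # accept strict improvements only
                    est, best, improved = candidate, value, True
    return est, best
```

Every sweep evaluates $n(m-1)$ candidate labelings, and each evaluation re-estimates all parameters, which illustrates why this kind of search is practical only for small problems.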

Having said that, the above Algorithm 1 can be used to prove the existence of a solution to the problem under the indicated assumption. Moreover, in the simulations shown below, we use this algorithm for analysis and comparison of the optimal classifications against the classifications generated by the heuristic method that is suggested next.

5. Suggested Algorithm: Distance-Based Collaborative Classification

The suggested algorithm, called the distance-based collaborative classification (DBCC) algorithm, consists of two stages: in the first stage, based on the presented opinions, the agents are tagged as experts and non-experts for each of the different classes, and in the second stage, the classification of the entities is conducted with respect to the agents’ levels of expertise.

Classification of the agents according to their expertise levels is based on the assumption that agents with similar fields of expertise produce similar classifications of the related entities. In contrast, the classifications of non-expert agents are distributed over a relatively larger range of classes. Consequently, the agents are tagged as experts and non-experts by clustering the agents’ classifications $\mathcal{C}^k$, $k = 1, \ldots, K$, with respect to the different classes.

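Let $d_j(\mathcal{C}^k, \mathcal{C}^r)$ be a certain measure of similarity between two classifications $\mathcal{C}^k$ and $\mathcal{C}^r$ with respect to the class $C_j$, $j = 1, \ldots, m$. Then, over all of the agents’ classifications $\mathcal{C}^k$, $k = 1, \ldots, K$, a central classification $\mathcal{C}^{*}_j$ with respect to class $C_j$ can be defined as follows: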

The assumption about the closeness of the classifications produced by experts in a certain class implies that the values of the similarity measure between the agents’ classifications $\mathcal{C}^k$, $k = 1, \ldots, K$, and the central classification $\mathcal{C}^{*}_j$ are distributed according to a mixture of two distributions: the first represents the distribution of the experts in class $C_j$, and the second represents the distribution of the non-experts in this class.

The similarity between the classifications can be measured by several methods, for example, by the Rokhlin or Ornstein distances, or by the symmetric version of the Kullback–Leibler divergence (for the use of such metrics, refer, e.g., to [19]). However, to avoid the additional specification of probability measures over the entities, in the suggested algorithm we use a normalized version of the well-known Hamming distance. This distance is defined as follows:

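Let $\mathcal{C}^k$ and $\mathcal{C}^r$ be two classifications of the set $A$ of entities. Consider the classes $C^k_j \in \mathcal{C}^k$ and $C^r_j \in \mathcal{C}^r$, $j = 1, \ldots, m$, and let $|C^k_j|$ denote the cardinality of class $C^k_j$, while $|C^r_j|$ denotes the cardinality of class $C^r_j$. The values $|C^k_j|$ and $|C^r_j|$ are the numbers of entities that are included in the $j$th class or, equivalently, are tagged with the label $l_j$ by agents $k$ and $r$, respectively. In other words, $|C^k_j|$ and $|C^r_j|$ represent the independent opinions of agents $k$ and $r$ about the $j$th class.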

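In addition, let $|C^k_j \,\triangle\, C^r_j|$ denote the cardinality of the symmetric difference between the classes $C^k_j$ and $C^r_j$. This number represents the disagreement of the agents about the $j$th class. The normalized Hamming distance between the classifications $\mathcal{C}^k$ and $\mathcal{C}^r$ with respect to class $C_j$ is defined as the following ratio:

$$d_j(\mathcal{C}^k, \mathcal{C}^r) = \frac{|C^k_j \,\triangle\, C^r_j|}{|C^k_j| + |C^r_j|}. \tag{3}$$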

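For each $j = 1, \ldots, m$, the defined distance is symmetric and satisfies $0 \le d_j(\mathcal{C}^k, \mathcal{C}^r) \le 1$, where $d_j = 0$ if the non-empty classes $C^k_j$ and $C^r_j$ coincide and $d_j = 1$ if they are disjoint. It represents the disagreement between the agents with respect to each class and, consequently, enables experts and non-experts to be identified per class.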
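The normalized Hamming distance defined above and a central classification can be sketched as follows, assuming each classification is stored as a list of integer labels, one per entity. The equation that defines the central classification is not reproduced in this text, so `central_classification` uses a medoid-style reading (the classification with the smallest total distance $d_j$ to all others); this reading, the function names, and the convention for two empty classes are assumptions.

```python
def class_distance(c1, c2, j):
    """Normalized Hamming distance d_j between two classifications w.r.t. class j.

    c1[i] and c2[i] are the labels that two agents give to entity i.
    Returns |C1_j sym-diff C2_j| / (|C1_j| + |C2_j|), a value in [0, 1].
    """
    a = {i for i, label in enumerate(c1) if label == j}
    b = {i for i, label in enumerate(c2) if label == j}
    if not a and not b:
        return 0.0  # convention (assumption): two empty classes agree
    return len(a ^ b) / (len(a) + len(b))

def central_classification(classifications, j):
    """Index of the classification with the smallest total d_j to all others
    (a medoid-style reading of the central classification; an assumption)."""
    return min(range(len(classifications)),
               key=lambda k: sum(class_distance(classifications[k], c, j)
                                 for c in classifications))
```

For example, two class-$j$ sets of sizes 3 and 4 that share one entity have a symmetric difference of 5 entities, so their distance is $5/7$.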

Additionally, using this distance, the set of classifications and, consequently, the set of agents can be considered as a metric space that allows for the application of conventional clustering algorithms. In the suggested DBCC algorithm, we apply Gaussian mixture clustering and the expectation-maximization algorithm [20].

As a result of the clustering, the agents are tagged according to their level of expertise with respect to each class $C_j$. These levels are represented by the weights $w^k_j$ associated with the agents and are used at the stage of classifying the entities.
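The clustering step can be illustrated with a two-component one-dimensional Gaussian mixture fitted by EM to the distances between each agent's classification and a central classification for one class. The exact clustering setup is not given in this text, so the initialization at the extreme distances, the variance floor `eps`, and the rule that the lower-mean component represents the experts are assumptions; `expert_weights` is a hypothetical name. The posterior probabilities it returns could serve as weights $w^k_j$.

```python
import math

def expert_weights(distances, iters=100, eps=1e-6):
    """Two-component 1-D Gaussian mixture fitted by EM to the agents' distances
    from a central classification. Returns each agent's posterior probability of
    belonging to the component with the smaller mean (read here as 'experts')."""
    n = len(distances)
    mu = [min(distances), max(distances)]  # start at the two extremes
    var = [max(eps, ((mu[1] - mu[0]) / 2) ** 2)] * 2
    pi = [0.5, 0.5]
    resp = []
    for _ in range(iters):
        # E-step (in log space to avoid underflow): component responsibilities.
        resp = []
        for x in distances:
            logs = [math.log(pi[c]) - 0.5 * math.log(2 * math.pi * var[c])
                    - (x - mu[c]) ** 2 / (2 * var[c]) for c in range(2)]
            top = max(logs)
            w = [math.exp(v - top) for v in logs]
            resp.append([v / sum(w) for v in w])
        # M-step: mixing weights, means, and variances (floored at eps).
        for c in range(2):
            nc = sum(r[c] for r in resp) + eps
            pi[c] = nc / (n + 2 * eps)
            mu[c] = sum(r[c] * x for r, x in zip(resp, distances)) / nc
            var[c] = max(eps, sum(r[c] * (x - mu[c]) ** 2
                                  for r, x in zip(resp, distances)) / nc)
    expert = 0 if mu[0] <= mu[1] else 1
    return [r[expert] for r in resp]
```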

Classification of the entities $a_i$, $i = 1, \ldots, n$, based on the agents’ opinions $c^k_i$, $k = 1, \ldots, K$, with respect to their expertise levels $w^k_j$, $j = 1, \ldots, m$, is conducted using conventional voting techniques; in the suggested DBCC algorithm, we use the relative majority vote.
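A minimal sketch of the weighted relative-majority (plurality) vote, assuming integer labels and a weight table `weights[k][j]` that gives agent $k$'s expertise weight in class $j$. The function name and the tie-breaking toward the smaller class index (chosen for determinism) are assumptions.

```python
def weighted_plurality(labels, weights, m):
    """Relative-majority vote with class-specific agent weights.

    labels[k][i]:  class index that agent k assigns to entity i.
    weights[k][j]: expertise weight of agent k in class j (e.g. in [0, 1]).
    Each entity goes to the class with the largest total weight of the agents
    voting for it; ties go to the smaller class index.
    """
    result = []
    for i in range(len(labels[0])):
        score = [0.0] * m
        for agent, w in zip(labels, weights):
            # An agent's vote counts with its weight for the class it votes for.
            score[agent[i]] += w[agent[i]]
        result.append(max(range(m), key=lambda j: score[j]))
    return result
```

With all weights equal to one, the function reduces to the plain plurality vote; a single high-weight expert vote can outweigh several low-weight votes.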

In general, the suggested algorithm acts as follows: In the first stage, for each class, the differences (in terms of the normalized Hamming distance) between the agents’ classifications are defined. Using these distances, the agents are divided into two groups: experts and non-experts. At this stage, an assumption is made that the experts in their area of expertise provide similar classifications of the related instances, unlike the non-experts, whose classifications are more diverse. Accordingly, the opinions of the experts gain higher weights with respect to the non-experts when all of the opinions are aggregated.

In the second stage, the entities are classified by the majority vote with respect to the weighted opinions of the agents. Then, the obtained solution is corrected following the steps of the EM algorithm: the resulting classification of the entities is considered as the estimated classification obtained at the E-step and is used at the M-step to define more precise levels of the agents’ expertise.

The DBCC algorithm is outlined as follows (Algorithm 2):

Algorithm 2: Distance-Based Collaborative Classification (DBCC) Algorithm

Given the set of items $a_i$, $i = 1, \ldots, n$, the enumeration of possible classes $C_j$, $j = 1, \ldots, m$, and the set of the agents’ classifications $\mathcal{C}^k$, $k = 1, \ldots, K$, do: Initialization

The suggested DBCC algorithm is a heuristic procedure that utilizes EM techniques. At the M-step, it maximizes the likelihood of the agents’ expertise by using the distances from the estimated correct classification. The latter is obtained at the E-step with respect to the agents’ expertise at the previous iteration. The process converges in the sense that the difference between the classifications obtained in two sequential steps tends to zero. In practice, the process can be terminated when the difference between two sequential classifications falls below a certain predefined small value.
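The loop below is a simplified, hypothetical illustration of this alternation and stopping rule, not a reproduction of Algorithm 2. It replaces the Gaussian-mixture clustering with a direct weighting rule, $w^k_j = 1 - d_j$ between agent $k$'s classification and the current estimate, and it stops when the fraction of relabeled entities falls below `tol`. All names, the weighting rule, and the tolerance are assumptions.

```python
def class_distance(c1, c2, j):
    """Normalized Hamming distance d_j (two empty classes count as identical)."""
    a = {i for i, x in enumerate(c1) if x == j}
    b = {i for i, x in enumerate(c2) if x == j}
    return len(a ^ b) / (len(a) + len(b)) if a or b else 0.0

def weighted_vote(labels, weights, m):
    """Plurality vote in which agent k's vote for class j counts weights[k][j]."""
    out = []
    for i in range(len(labels[0])):
        score = [0.0] * m
        for agent, w in zip(labels, weights):
            score[agent[i]] += w[agent[i]]
        out.append(max(range(m), key=lambda j: score[j]))
    return out

def refine(labels, m, tol=1e-3, max_iter=100):
    """Alternate (i) per-class expertise weights from the distance to the
    current estimate and (ii) a weighted vote, until the fraction of entities
    whose label changes drops below `tol`."""
    n = len(labels[0])
    est = weighted_vote(labels, [[1.0] * m for _ in labels], m)  # plain vote
    for _ in range(max_iter):
        # Hypothetical weighting rule: agreement with the current estimate.
        weights = [[1.0 - class_distance(agent, est, j) for j in range(m)]
                   for agent in labels]
        new = weighted_vote(labels, weights, m)
        changed = sum(a != b for a, b in zip(est, new)) / n
        est = new
        if changed < tol:
            break
    return est
```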

The time complexity of the suggested Algorithm 2 is , where is the number of entities, is the number of agents, is the number of classes, and is the number of iterations up to the convergence of the EM part of the algorithm. Here, defines the number of iterations of the algorithm (see Line 32); in the term , is the number of iterations the for loop (Lines 3–20), and is the number of iterations for the loops (lines 4–8) and the number of steps in the operation in Line 9 (the other loops require steps), and the term represents the number of iterations for the loop in Lines 21–29 and two internal for loops (Lines 22–24 and 25–27). Since the number of classes is at most equal to the number of items , the complexity of the algorithm in the worst case is .

To clarify the main advantage of the algorithm that aims to find experts and non-experts for further classification, let us refer back to the dataset presented in Table 1.

Consider the classifications $\mathcal{C}^1$ and $\mathcal{C}^2$ provided by the first and the second agents with respect to a given class. Following Equation (3), the distance between these classifications is the ratio between the number of entities whose membership in the class the agents disagree on and the total size of the agents’ classes. For the first and the second agents, one obtains $d = 1$, which reflects the disagreement regarding three entities that were classified by the first agent to this class but were classified to other classes by the second agent. Thus, this is the maximal possible distance between these classifications.

Similarly, the distance between the classifications $\mathcal{C}^3$ and $\mathcal{C}^4$ of the third and fourth agents with respect to a given class is computed as follows. The agents disagree on five entities, while they agree on one entity. The numbers of entities that the third and fourth agents independently assign to this class are three and four, respectively. Thus, $d = 5/(3 + 4) = 5/7$.

Calculation of the distances among the agents with respect to all four classes $C_j$, $j = 1, \ldots, 4$, results in the following tables (zero distances are shown in bold font):

[Tables of pairwise distances $d_j(\mathcal{C}^k, \mathcal{C}^r)$, $k, r = 1, \ldots, 6$, for classes $C_1$ to $C_4$.]

Note that, for each class, the minimal distance between the classifications is zero, and the experts can be defined with respect to this distance. For class $C_1$, a zero distance is obtained between classifications $\mathcal{C}^1$ and $\mathcal{C}^3$, i.e., the first and third agents are considered to be experts with respect to class $C_1$. Similarly, for class $C_2$, a zero distance is obtained for $d_2(\mathcal{C}^2, \mathcal{C}^3) = 0$ and, thus, the second and third agents are considered to be experts with respect to class $C_2$; $d_3(\mathcal{C}^4, \mathcal{C}^6) = 0$, so the fourth and sixth agents are experts in class $C_3$; and $d_4(\mathcal{C}^5, \mathcal{C}^6) = 0$, so the fifth and sixth agents are considered to be experts with respect to class $C_4$.

Following these distance calculations, the “experts” in each class obtain a weight of $1$, while the other, non-expert agents obtain zero weights. Thus, in this weighting scheme, only the experts’ classifications are considered. Finally, in the considered dataset (see Table 1), according to the opinions of the experts (the first and third agents), the first class is $\hat{C}_1 = C^1_1 = C^3_1$; according to the opinions of the experts (the second and third agents), the second class is $\hat{C}_2 = C^2_2 = C^3_2$; according to the opinions of the experts (the fourth and sixth agents), the third class is $\hat{C}_3 = C^4_3 = C^6_3$; and according to the opinions of the experts (the fifth and sixth agents), the fourth class is $\hat{C}_4 = C^5_4 = C^6_4$.

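Then, the resulting partition of the dataset is $\hat{\mathcal{C}} = \{\hat{C}_1, \hat{C}_2, \hat{C}_3, \hat{C}_4\}$, which coincides with the correct classification $\mathcal{C}$.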

Note that this straightforward, illustrative example does not require (and does not demonstrate) the more complicated clustering and the correction steps by the EM algorithm, which play an important role for real-world datasets, where the division of the agents into experts and non-experts is not binary.
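The zero-distance weighting of this example can be reproduced in a few lines. Since the entries of Table 1 are not reproduced in this text, the sketch uses a hypothetical toy dataset with five agents, four classes (labels 0 to 3), and eight entities, in which every class has a pair of agents with identical class sets. The function names are also hypothetical.

```python
from itertools import combinations

def zero_distance_experts(labels, m):
    """For each class j, the agents that share an identical, non-empty class-j
    set with at least one other agent (normalized Hamming distance zero)."""
    experts = {}
    for j in range(m):
        sets = [frozenset(i for i, x in enumerate(agent) if x == j) for agent in labels]
        experts[j] = sorted({k for a, b in combinations(range(len(labels)), 2)
                             if sets[a] and sets[a] == sets[b] for k in (a, b)})
    return experts

def classify_by_experts(labels, m):
    """Binary weights: class j is copied from its first expert; entities that
    no expert group covers stay None."""
    result = [None] * len(labels[0])
    for j, group in zero_distance_experts(labels, m).items():
        if group:
            for i, x in enumerate(labels[group[0]]):
                if x == j:
                    result[i] = j
    return result

# Hypothetical toy data: rows are agents, columns are entities.
toy = [
    [0, 0, 2, 3, 1, 3, 1, 2],  # expert in class 0
    [0, 0, 1, 1, 3, 2, 3, 2],  # expert in classes 0 and 1
    [3, 0, 1, 1, 2, 2, 0, 3],  # expert in classes 1 and 2
    [1, 2, 0, 0, 1, 2, 3, 3],  # expert in class 3
    [1, 0, 0, 1, 2, 2, 3, 3],  # expert in classes 2 and 3
]
print(classify_by_experts(toy, 4))
```

On this toy dataset, the expert groups are agents {0, 1}, {1, 2}, {2, 4}, and {3, 4} for classes 0 to 3, and the copied classes form the partition `[0, 0, 1, 1, 2, 2, 3, 3]`. In real data, entities left uncovered (or claimed by conflicting groups) are where the clustering and EM correction steps take over.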

6. Numerical Simulations and Comparisons

The suggested algorithm was studied using two data settings: simulated data with known characteristics, which enabled the analysis of the effectiveness and robustness of the DBCC algorithm, and real-world data obtained from a dedicated questionnaire.

Classifications obtained by the suggested Algorithm 2 were compared with the results provided by the optimal likelihood-maximization brute-force algorithm, the majority vote, the most accurate heuristic FDS algorithm, and the fastest GLAD algorithm.

The algorithms were implemented in the Python programming language and run on a standard Lenovo ThinkPad T480 PC with an Intel® Core™ i7-8550U Processor (8M Cache, 4.00 GHz) and 32 GB memory (DDR4 4267 MHz).

6.1. Data

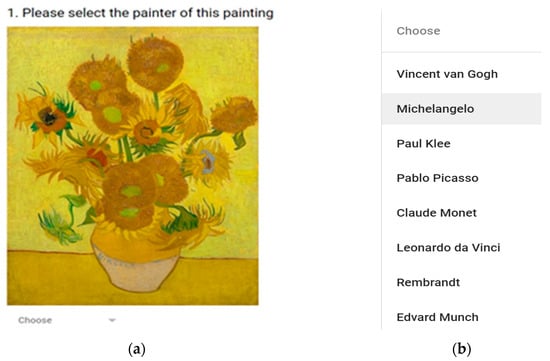

To analyze the proposed method, we applied it to different datasets: (i) simulated data, (ii) real-world data with simulated classes, and, finally, (iii) an entirely real-world questionnaire dataset. In the first case, for a given set of entities $a_i$, $i = 1, \ldots, n$, we simulated both the classes $C_j$, $j = 1, \ldots, m$, and the agents’ classifications $\mathcal{C}^k$, $k = 1, \ldots, K$; in the second case, we used real-world data with simulated labels; and in the third case, we created and analyzed an online questionnaire that measures the levels of expertise of users regarding famous paintings and painters (the questionnaire is available via the link Famous painters (google.com); see Appendix A).

6.1.1. Simulated Data

In the simulated data, we used agents in the trials, while their classifications , , were randomly generated. The probability of obtaining correct classifications for expert agents was specified as , and for non-expert agents as . The number of entities in the trials was , and the number of classes was .
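The values of the simulation parameters are not reproduced in this text, so the generator below is a hypothetical sketch of the described setting: each agent labels an entity correctly with probability `p` inside its fields of expertise and with probability `q` outside them. Drawing wrong labels uniformly from the remaining classes, the function names, and the seeding are assumptions. An `accuracy` helper is included for comparing an estimated classification with the simulated truth.

```python
import random

def simulate(n, m, expertise, p, q, seed=0):
    """Hypothetical generator of simulated agents' classifications.

    expertise[k] is the set of classes in which agent k is an expert.
    An agent labels an entity of true class j correctly with probability p
    if j is in its expertise and q otherwise; a wrong label is drawn
    uniformly from the other m - 1 classes (requires m >= 2).
    """
    rng = random.Random(seed)
    truth = [rng.randrange(m) for _ in range(n)]
    labels = []
    for fields in expertise:
        agent = []
        for j in truth:
            correct = rng.random() < (p if j in fields else q)
            agent.append(j if correct else rng.choice([l for l in range(m) if l != j]))
        labels.append(agent)
    return truth, labels

def accuracy(estimate, truth):
    """Fraction of entities whose estimated class equals the true class."""
    return sum(e == t for e, t in zip(estimate, truth)) / len(truth)
```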

6.1.2. Real-World Data with Simulated Classes

In the case of real-world data with simulated classes, we considered real-world data from different databases and, to obtain multiple agents with different expertise, simulated the labeling of the data. The agents were simulated by using different classifiers (e.g., random forests), and their expertise over different classes was simulated by scrambling the features in the dataset. In the comparative analysis, we used seven known datasets from Kaggle [3]: Iris, Abalone Age, Glass Type, Students’ Results, User Activity, Robots Conversation, and Wine Quality.

For example, in the Iris dataset, the agents’ expertise was defined as follows: Agent 1 and Agent 2 are experts in the class “Iris-setosa”, Agent 2 is an expert in the class “Iris-versicolor”, and Agent 4 is an expert in the class “Iris-virginica”. Recall that, according to this definition, these agents classify the entities of these classes correctly with a higher probability.

In addition, we used the Wi-Fi localization database from the Machine Learning Repository [21]. The datasets have different numbers of entities $n$ and different numbers of classes $m$; depending on the number of classes, different numbers of agents with various levels of expertise were simulated.

6.1.3. Real-World Data

To obtain real-world data, we designed and distributed an online questionnaire containing questions on painters and paintings based on common knowledge. The questionnaire contains 40 paintings created by eight famous painters, and the agents were asked to identify the painter of each painting. Thus, in classification terms, the agents were required to classify n = 40 entities into eight classes. The questionnaire was offered to volunteers at the university, including both students and professors, without any specific educational background in the arts. Examples of the paintings and of the questionnaire are presented in Appendix A.

6.2. Algorithms for Comparisons

The results obtained by the suggested algorithm were compared with the results obtained by four baseline methods: (i) the widely used majority voting algorithm; (ii) the brute-force maximum-likelihood optimization; (iii) the FDS algorithm, which was recently proposed as an effective heuristic to establish an expert-based classification; and (iv) the GLAD algorithm.

6.2.1. Majority Vote

A majority vote is a simple and popular rule that is often used in various social choice tasks. An algorithm based on this rule acts as follows:

Let a set of n entities be classified by m agents into a given number of possible classes. Each entity is then assigned to the class to which the largest number of agents assigned it, that is, it receives the label of that class. Ties are broken randomly.

As indicated above, despite its simplicity, the majority vote rule provides good results in crowdsourcing tasks when the number of agents is relatively large and the agents have similar levels and fields of expertise.
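A minimal Python sketch of this rule is given below. It implements a plurality vote with random tie-breaking and assumes a hypothetical layout in which `labels[i][j]` is the class agent i assigns to entity j.

```python
import random
from collections import Counter


def majority_vote(labels, rng):
    """Plurality vote per entity; labels[i][j] is the class agent i gives entity j.

    Ties between the most frequent classes are broken uniformly at random.
    """
    result = []
    for j in range(len(labels[0])):
        votes = Counter(row[j] for row in labels)
        top = max(votes.values())
        tied = sorted(c for c, v in votes.items() if v == top)
        result.append(rng.choice(tied))
    return result


labels = [[0, 1, 2, 1],    # agent 1
          [0, 1, 1, 2],    # agent 2
          [1, 1, 2, 0]]    # agent 3
result = majority_vote(labels, random.Random(0))
print(result)  # first three entries are [0, 1, 2]; the fourth is a three-way tie
```

Every agent's vote counts equally here. This is exactly why the rule works best when agents have similar levels and fields of expertise.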

6.2.2. Likelihood Maximization

The likelihood-maximization procedure, Algorithm 1 presented in Section 4, is a brute-force algorithm that yields the optimal solution. It is therefore used only for relatively small problems.

In the numerical simulations, optimization problem (1) was solved using a local search heuristic. This is feasible for the considered cases with a small number of agents.
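Problem (1) and Algorithm 1 are not reproduced in this section. The sketch below therefore assumes the standard Dawid–Skene likelihood [2] with known per-agent confusion matrices `confusion[i][true][given]` and known class priors. It enumerates every joint assignment of classes to entities, which is only practical for a handful of entities. All names and numbers are illustrative.

```python
import itertools
import math


def log_likelihood(assignment, labels, confusion, prior):
    """Log-probability of all observed labels if entity j truly has class assignment[j]."""
    total = 0.0
    for j, z in enumerate(assignment):
        total += math.log(prior[z])
        for i, row in enumerate(labels):
            total += math.log(confusion[i][z][row[j]])  # entries assumed > 0
    return total


def brute_force_ml(labels, confusion, prior):
    """Return the joint class assignment with the highest likelihood (K**n candidates)."""
    num_classes, num_entities = len(prior), len(labels[0])
    best, best_ll = None, -math.inf
    for assignment in itertools.product(range(num_classes), repeat=num_entities):
        ll = log_likelihood(assignment, labels, confusion, prior)
        if ll > best_ll:
            best, best_ll = assignment, ll
    return list(best)


reliable = [[0.95, 0.05], [0.05, 0.95]]
weak = [[0.6, 0.4], [0.4, 0.6]]
confusion = [reliable, weak, weak]          # agent 0 is reliable, agents 1 and 2 are weak
labels = [[0, 1, 1],                        # agent 0
          [1, 1, 0],                        # agent 1
          [1, 0, 0]]                        # agent 2
best = brute_force_ml(labels, confusion, prior=[0.5, 0.5])
```

In this toy example, the reliable agent 0 overrules the two weaker agents on the first and third entities, giving `[0, 1, 1]`. Majority voting would follow the weaker pair and give `[1, 1, 0]`.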

6.2.3. Fast Dawid–Skene Algorithm

As indicated above, the fast Dawid–Skene (FDS) algorithm [5] is a modification of the original DS aggregation algorithm proposed by Dawid and Skene [2].

The FDS algorithm follows the EM approach. At the E-step, the data are classified using the current parameter values, and at the M-step, these values are updated to maximize the likelihood of the data. The algorithm starts with an initial classification and alternates between the E-step and the M-step until convergence, that is, until the difference between the current and the previous classifications is less than a predefined small value.

The suggested unsupervised classification algorithm follows the same approach. It differs, as indicated above, in the classification performed at the E-step and in the parameters used.
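The FDS loop described above can be sketched in Python as a hard-assignment variant of Dawid–Skene, in the spirit of FDS [5]. This is our illustration, not the reference implementation. The plurality-vote initialization, the additive smoothing constant, the deterministic tie-breaking, and the convergence test (stop when no entity changes class, i.e., a threshold of zero) are assumptions.

```python
import math
from collections import Counter


def fast_dawid_skene(labels, num_classes, max_iter=100, smoothing=0.01):
    """Hard-EM aggregation; labels[i][j] is the class agent i gives entity j."""
    m, n = len(labels), len(labels[0])
    # Initialization: plurality vote, ties resolved toward the smaller class index.
    z = []
    for j in range(n):
        votes = Counter(row[j] for row in labels)
        z.append(max(range(num_classes), key=lambda c: (votes[c], -c)))
    for _ in range(max_iter):
        # M-step: class priors and per-agent confusion matrices from current labels.
        prior = [(z.count(c) + smoothing) / (n + smoothing * num_classes)
                 for c in range(num_classes)]
        confusion = []
        for i in range(m):
            counts = [[smoothing] * num_classes for _ in range(num_classes)]
            for j in range(n):
                counts[z[j]][labels[i][j]] += 1
            confusion.append([[v / sum(r) for v in r] for r in counts])
        # E-step (hard): move each entity to its most likely class.
        new_z = []
        for j in range(n):
            scores = [math.log(prior[c]) +
                      sum(math.log(confusion[i][c][labels[i][j]]) for i in range(m))
                      for c in range(num_classes)]
            new_z.append(max(range(num_classes), key=lambda c: scores[c]))
        if new_z == z:  # converged: no entity changed its class
            break
        z = new_z
    return z


labels = [[0, 1, 1, 0, 1, 0],
          [0, 1, 1, 0, 1, 0],
          [0, 1, 1, 0, 0, 0]]
result = fast_dawid_skene(labels, num_classes=2)
```

The hard E-step scores every class for every entity, which matches the per-iteration cost discussed in Section 6.3.4.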

6.2.4. GLAD Algorithm

The generative model for labels, abilities, and difficulties (GLAD) [6] is a probabilistic algorithm that simultaneously infers the expertise of each agent, the difficulty of each entity, and the most likely class of each entity.

Like the other methods considered here, this algorithm follows the EM approach. It starts from the agents’ classifications and initial estimates of their expertise. At the E-step, it computes the posterior probability of each class for every entity. At the M-step, it maximizes the expectation of the log-likelihood of the observed and hidden variables using gradient ascent.
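The sketch below makes the roles of agent ability and entity difficulty concrete. It computes the E-step posterior for a single entity under the GLAD model [6], where agent i labels entity j correctly with probability σ(α_i β_j). Here α_i is the agent's ability and β_j > 0 is the inverse difficulty of the entity. Spreading the remaining probability uniformly over the wrong classes is our assumption for the multiclass case, and the M-step (gradient ascent on α and β) is omitted.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def glad_posterior(labels_j, alpha, beta_j, num_classes, prior=None):
    """Posterior over the true class of one entity under the GLAD model.

    labels_j[i] is the class agent i assigned to the entity, alpha[i] the
    agent's ability, and beta_j > 0 the entity's inverse difficulty.
    """
    if prior is None:
        prior = [1.0 / num_classes] * num_classes
    log_post = []
    for c in range(num_classes):
        lp = math.log(prior[c])
        for a, given in zip(alpha, labels_j):
            p_correct = sigmoid(a * beta_j)
            if given == c:
                lp += math.log(p_correct)
            else:
                # Assumption: errors are spread uniformly over the other classes.
                lp += math.log((1.0 - p_correct) / (num_classes - 1))
        log_post.append(lp)
    top = max(log_post)                     # normalize in log space for stability
    weights = [math.exp(v - top) for v in log_post]
    total = sum(weights)
    return [w / total for w in weights]


alpha = [3.0, 0.5, 0.5]                     # one able agent, two weak ones (illustrative)
easy = glad_posterior([0, 1, 1], alpha, beta_j=1.0, num_classes=2)
hard = glad_posterior([0, 1, 1], alpha, beta_j=0.01, num_classes=2)
```

On the easy entity, the able agent dominates and class 0 receives most of the probability mass (about 0.88). On the very hard entity, every agent is close to guessing, so the posterior stays near uniform.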

6.3. Simulation Results

The suggested algorithm was applied to the datasets described above with different groups of agents. It was compared with the four baseline algorithms outlined in Section 6.2.

6.3.1. Likelihood Maximization vs. Majority Voting

Majority voting (Section 6.2.1) was compared with the likelihood-maximization Algorithm 1 (Section 6.2.2) in the simulated setting for two different numbers of agents m. In both cases, the number of entities n and the number of classes were the same. This relatively small dataset makes it possible to apply the optimal likelihood-maximization Algorithm 1 and execute it in reasonable time. The results of the simulations are summarized in Table 2.

Table 2.

Simulation results of majority voting and likelihood maximization for the two considered numbers of agents classifying the same set of entities into the same classes.

In the considered settings, the likelihood-maximization Algorithm 1 outperformed majority voting for both numbers of agents. It provided a higher accuracy (hit rate) with similar computation times.

6.3.2. Suggested Algorithm vs. Majority Voting

In the next simulations, the proposed DBCC algorithm was compared with the majority voting rule. Agents of different levels of expertise were selected such that their classifications agreed with the correct classification with a lower probability for non-expert agents and a higher probability for expert agents. Since these probabilities are, in essence, measures of the agents’ levels of expertise in certain fields, we refer to them as the reliabilities of the agents.

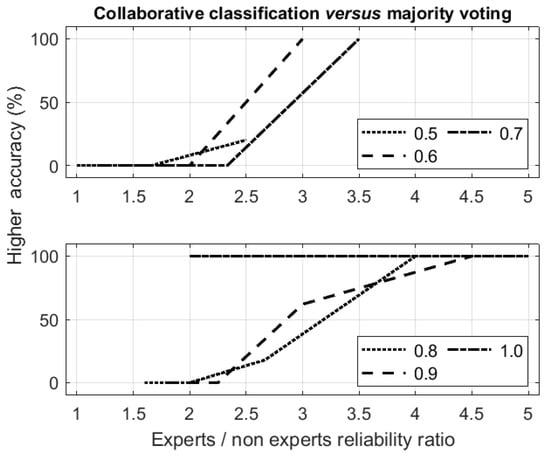

The trials were executed for groups of agents classifying entities into classes, with several levels of the agents’ reliabilities (probabilities of correct classification). Figure 1 shows the percentage of trials in which the proposed DBCC algorithm outperformed majority voting, as a function of the ratio of expert to non-expert reliability.

Figure 1.

Percentage of cases in which the suggested algorithm outperformed the majority voting method in terms of accuracy (y-axis) with respect to the ratio of expert reliability to non-expert reliability (x-axis). Different dashed lines correspond to different levels of the agents’ reliability, which represent the levels of the agents’ expertise.

As expected, for homogeneous groups that included agents with close levels of expertise, majority voting outperformed the suggested algorithm. However, for heterogeneous groups of agents with different levels of expertise, the suggested DBCC algorithm outperformed the majority voting method.

These results indicate that the suggested algorithm is preferable to majority voting for practical tasks in which the group of agents includes both experts and non-experts in different fields.

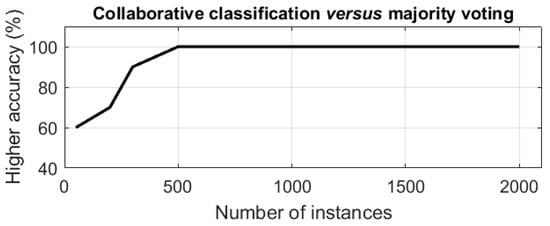

Figure 2 shows the percentage of trials in which the proposed DBCC algorithm outperformed majority voting, as a function of the number of entities. In these simulations, each expert provided correct classifications with a fixed probability, and each non-expert with a fixed lower probability.

Figure 2.

Percentage of cases where the suggested algorithm obtained more accurate classifications than the majority voting method (y-axis) with respect to the number of entities (x-axis).

It can be seen that for a heterogeneous group of agents that includes both experts and non-experts, the suggested algorithm substantially outperforms majority voting. Its advantage increases with the number of entities.

In both settings, the group of agents included experts and non-experts with different levels of expertise, and for such groups the suggested DBCC algorithm outperformed majority voting. At the same time, the effectiveness of the suggested algorithm decreased as the expertise levels decreased. When the group included only non-experts, the algorithm became less effective than majority voting.

The obtained results indicate that the suggested algorithm is preferable in tasks where a small number m of agents classifies a large number n of entities. In contrast, if the number of entities is small and the number of agents is large, majority voting is preferable.

6.3.3. Accuracy Analysis

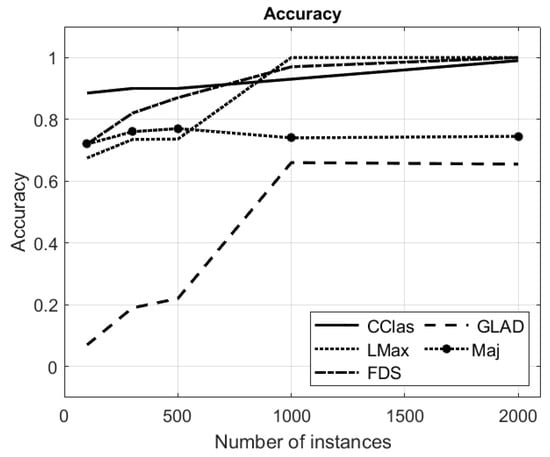

The accuracy of the suggested algorithm was compared with that of majority voting (see Section 6.2.1), the likelihood-maximization Algorithm 1 (see Section 6.2.2), and the FDS algorithm (see Section 6.2.3). We also present the results of the GLAD algorithm [6] (see Section 6.2.4). The results of the simulations are shown in Figure 3.

Figure 3.

Accuracy of the suggested DBCC algorithm and the benchmark algorithms (y-axis) with respect to the number of entities (x-axis). In the figure, the algorithms are denoted as follows: CClas—the suggested DBCC algorithm, LMax—the likelihood-maximization algorithm, FDS—fast Dawid–Skene algorithm, GLAD—GLAD algorithm, Maj—majority voting method.

It can be seen that for a relatively small number of entities, the suggested DBCC algorithm outperforms the benchmark algorithms. For larger numbers of entities, the accuracy of DBCC is close to that of the FDS and likelihood-maximization algorithms. The other two methods, majority voting and GLAD, yield lower accuracy.

For a large number of entities (n > 1000), the confusion matrices used in likelihood maximization are estimated more accurately and approach the true ones. As a result, the accuracy of the algorithm approaches that of the optimal solution, reaching 100%.

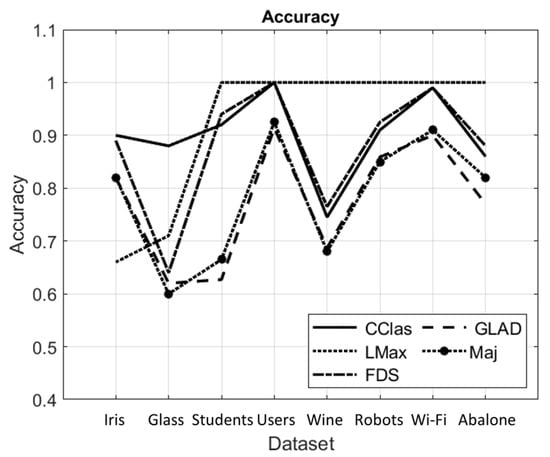

In the next simulations, the algorithms were applied to the real-world data [3,21] with simulated labeling, as indicated in Section 6.1.2. The results of the simulations are shown in Figure 4.

Figure 4.

Accuracy of the suggested and benchmark algorithms applied to real-world data with simulated labeling. In the figure, the algorithms are denoted as follows: CClas—suggested DBCC algorithm, LMax—the likelihood-maximization algorithm, FDS—fast Dawid–Skene algorithm, GLAD—GLAD algorithm, Maj—majority voting method. The datasets (Iris, Glass, Students, User, Wine, Robots, Wi-Fi, and Abalone) differ in their numbers of entities.

The DBCC and FDS algorithms outperformed majority voting on all datasets. Note also that the likelihood-maximization Algorithm 1 relies on the probabilities that the agents provide correct classifications, so its accuracy depends on how well these probabilities are estimated. Consequently, on datasets with relatively few entities, it yields lower accuracy than the other algorithms. On datasets with many entities, in contrast, it attains optimal accuracy. This observation illustrates the well-known difference between statistical probabilities estimated from relatively small samples and theoretical probabilities defined over infinite populations.
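The small-sample effect can be illustrated with a short Python experiment. It estimates one agent's confusion matrix from n labeled entities and reports the largest deviation from the matrix that actually generated the labels. The generating matrix and the sample sizes are hypothetical. The point is only that the estimation error shrinks as n grows, roughly like 1/√n.

```python
import random


def estimate_confusion(true_classes, given, num_classes):
    """Row-normalized counts: entry [t][g] estimates P(agent says g | true class t)."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for t, g in zip(true_classes, given):
        counts[t][g] += 1
    matrix = []
    for row in counts:
        s = sum(row)
        # A class absent from the sample gets a uniform row (assumption).
        matrix.append([v / s for v in row] if s else [1.0 / num_classes] * num_classes)
    return matrix


def max_abs_error(a, b):
    """Largest absolute entrywise difference between two matrices."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


rng = random.Random(42)
K = 3
true_matrix = [[0.8, 0.1, 0.1],             # hypothetical agent
               [0.2, 0.7, 0.1],
               [0.1, 0.2, 0.7]]
errors = {}
for n in (30, 300, 30000):
    truth = [rng.randrange(K) for _ in range(n)]
    given = [rng.choices(range(K), weights=true_matrix[t])[0] for t in truth]
    errors[n] = max_abs_error(estimate_confusion(truth, given, K), true_matrix)
    print(n, round(errors[n], 3))
```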

6.3.4. Run Time until Convergence

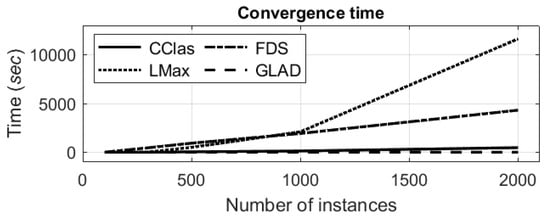

In the final set of simulations, we studied the run time of the suggested algorithm until convergence. We compared it with the benchmark methods: the likelihood-maximization Algorithm 1 (see Section 6.2.2), the FDS algorithm (see Section 6.2.3), and the GLAD algorithm (see Section 6.2.4). Since majority voting is not an iterative process, it was not included in these simulations. The run times as a function of the number of entities are shown in Figure 5.

Figure 5.

Run time of the suggested and known algorithms with respect to the number of entities. In the figure, the algorithms are denoted as follows: CClas—the proposed DBCC algorithm, LMax—the likelihood-maximization algorithm, FDS—fast Dawid–Skene algorithm, GLAD—GLAD algorithm.

It can be seen that the run time of the suggested algorithm is very close to that of GLAD, the fastest algorithm. As with GLAD, it grows linearly with the number of entities.

The likelihood-maximization Algorithm 1 is the slowest algorithm, since it checks all of the possibilities to find the maximum likelihood according to the given confusion matrices. The number of such possibilities increases exponentially with the number of entities.
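To give a sense of scale, write K for the number of classes (our notation here, not the paper's). An exhaustive search over joint class assignments must score every element of the set below. The two values on the right are plain arithmetic for illustrative sizes. The last one corresponds to the questionnaire's 40 paintings and eight painters.

$$
\left|\{1,\dots,K\}^{n}\right| = K^{n}, \qquad 3^{20} = 3\,486\,784\,401, \qquad 8^{40} = 2^{120} \approx 1.33 \times 10^{36}.
$$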

The FDS algorithm is faster than the likelihood-maximization Algorithm 1 but still slower than the suggested algorithm. Unlike the suggested algorithm, at the E-step it computes the likelihood of every class for every entity.

Thus, in terms of run time, the suggested algorithm performs similarly to the fastest algorithm, while its classifications are close in accuracy to those of the most accurate algorithms.

7. Conclusions

In this paper, we present a novel algorithm for unsupervised collaborative classification of a set of arbitrary entities. In contrast to existing methods, the suggested algorithm first classifies the agents into experts and non-experts in each domain. It then classifies the entities, giving preference to the opinions of the expert agents.

Classification of the agents is based on the assumption that the experts have similar opinions in their field of expertise, while the non-experts often tend to disagree and adopt different opinions in fields in which they are not experts.
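The assumption can be illustrated with a simplified Python sketch. It restricts attention to the entities provisionally assigned to one class and computes the fraction of those entities on which two agents disagree (an unweighted Hamming distance). Agents that have a close partner are then flagged. This is not the paper's procedure, which uses a weighted Hamming distance. The nearest-partner rule, the threshold, and the toy data are hypothetical.

```python
def hamming(a, b, idx):
    """Fraction of the entities in idx on which label vectors a and b disagree."""
    return sum(a[j] != b[j] for j in idx) / len(idx)


def likely_experts(labels, provisional, c, threshold):
    """Agents whose labels on class-c entities are within threshold of some other agent."""
    idx = [j for j, z in enumerate(provisional) if z == c]
    m = len(labels)
    if not idx or m < 2:
        return []
    flagged = []
    for i in range(m):
        nearest = min(hamming(labels[i], labels[k], idx) for k in range(m) if k != i)
        if nearest <= threshold:
            flagged.append(i)
    return flagged


labels = [[0, 0, 0, 2, 1, 0],   # agent 0
          [0, 0, 0, 1, 2, 2],   # agent 1
          [1, 2, 0, 1, 1, 1],   # agent 2
          [2, 1, 0, 1, 1, 1]]   # agent 3
provisional = [0, 0, 0, 1, 1, 1]   # here it coincides with the plurality vote
for c in range(3):
    print(c, likely_experts(labels, provisional, c, threshold=0.34))
```

Agents 0 and 1 agree on the entities of class 0 but scatter on class 1, and agents 2 and 3 show the opposite pattern. The sketch therefore flags agents 0 and 1 for class 0 and agents 2 and 3 for class 1.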

Classification of the entities is based on the conventional expectation-maximization method initialized by majority vote and using the agents’ levels of expertise, as defined at the stage of the agents’ classification.

To verify the performance of the algorithm, we also formalized the considered task as an optimization problem. We suggested the likelihood-maximization algorithm (LMax), which uses brute force and provides the exact solution.

Numerical simulations of the suggested DBCC algorithm and its comparison with known methods, such as majority vote, the FDS algorithm, and the GLAD algorithm, demonstrated two things. First, the run time of the suggested Algorithm 2 depends linearly on the number of entities. Second, it is close to that of the GLAD algorithm, the fastest of the compared methods.

The accuracy of the suggested Algorithm 2 depends on the expertise levels of the agents. For the heterogeneous group that includes both experts and non-experts, the suggested Algorithm 2 resulted in a higher accuracy than the known heuristic algorithms and, especially, outperformed them in the scenarios where a small group of the agents considered a dataset with many entities.

Author Contributions

Conceptualization, I.B.-G. and P.G.; methodology, I.B.-G., E.K. and T.R.; software, A.G.; validation, P.G., A.G. and P.K.; formal analysis, A.G. and E.K.; investigation, T.R.; resources, I.B.-G.; data curation, P.K.; writing—original draft preparation, E.K.; writing—review and editing, P.K.; visualization, A.G.; supervision, I.B.-G.; project administration, I.B.-G. and P.G.; funding acquisition, I.B.-G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Koret Foundation (Digital Living 2030 grant).

Data Availability Statement

Data are available via the links provided in the references.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The data were collected using Google Forms [22]. The questionnaire included the images of the paintings and the list of options regarding the author of the painting. The resulting dataset can be downloaded via the link at [4].

An example of the question is shown in Figure A1.

Figure A1.

Example of the question: (a) the painting and (b) the list of possible alternatives to be selected by the user.

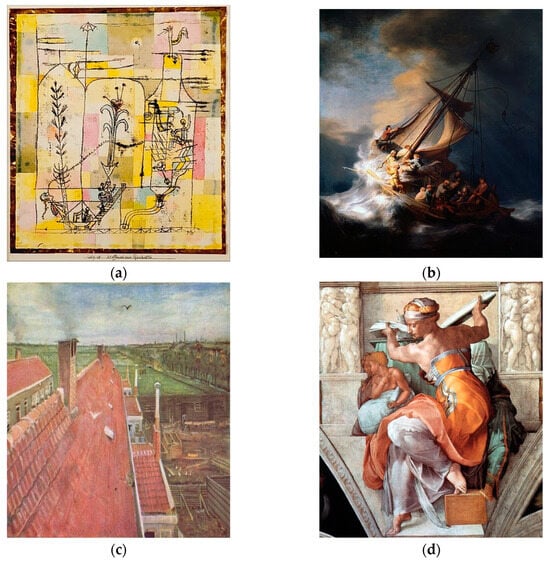

The image is accompanied by a list of painters, and the respondent is required to choose the painter who authored the presented painting. The other examples of the paintings are shown in Figure A2.

Figure A2.

Other examples of the paintings appearing in the questionnaire: (a) Paul Klee, Tale à la Hoffmann; (b) Rembrandt Harmenszoon van Rijn, Storm on the Sea of Galilee; (c) Vincent van Gogh, Rooftops; (d) Michelangelo di Lodovico Buonarroti Simoni, Libyan Sibyl.

References

1. Hamada, D.; Nakayama, M.; Saiki, J. Wisdom of crowds and collective decision making in a survival situation with complex information integration. Cogn. Res. 2020, 5, 48.

2. Dawid, A.P.; Skene, A.M. Maximum likelihood estimation of observer error-rates using the EM algorithm. J. Roy. Stat. Soc. Ser. C 1979, 28, 20–28.

3. Kaggle Inc. Iris Flower Dataset/Abalone Age Prediction/Glass Classification/Students Test Data/User Activity/Classification of Robots from Their Conversation/Wine Quality Dataset. Available online: https://www.kaggle.com/datasets/ (accessed on 23 November 2023).

4. The Paintings Authorship. Dataset. Available online: https://www.iradbengal.sites.tau.ac.il/_files/ugd/901879_2cafbbe73b0248828ed5dece50c6c3f0.csv?dn=Painters_dataset.csv (accessed on 23 November 2023).

5. Sinha, V.B.; Rao, S.; Balasubramanian, V.N. Fast Dawid-Skene: A fast vote aggregation scheme for sentiment classification. In Proceedings of the 7th KDD Workshop on Issues of Sentiment Discovery and Opinion Mining, London, UK, 20 August 2018.

6. Whitehill, J.; Ruvolo, P.; Wu, T.; Bergsma, J.; Movellan, J. Whose vote should count more: Optimal integration of labels from labelers of unknown expertise. Adv. Neural Inf. Process. Syst. 2009, 22, 2035–2043.

7. Chiu, C.; Liang, T.; Turban, E. What can crowdsourcing do for decision support? Decis. Support Syst. 2014, 65, 40–49.

8. Ma, J.; Lu, J.; Zhang, G. A three-level-similarity measuring method of participant opinions in multiple-criteria group decision supports. Decis. Support Syst. 2014, 59, 74–83.

9. Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–38.

10. Zhang, Y.; Chen, X.; Zhou, D.; Jordan, M.I. Spectral methods meet EM: A provably optimal algorithm for crowdsourcing. J. Mach. Learn. Res. 2016, 17, 1–44.

11. Shah, N.B.; Balakrishnan, S.; Wainwright, M.J. A Permutation-based model for crowd labeling: Optimal estimation and robustness. arXiv 2016, arXiv:1606.09632.

12. Duan, L.; Oyama, S.; Sato, H.; Kurihara, M. Separate or joint? Estimation of multiple labels from crowdsourced annotations. Expert Syst. Appl. 2014, 41, 5723–5732.

13. Wei, X.; Zeng, D.D.; Yin, J. Multi-Label Annotation Aggregation in Crowdsourcing. In Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM), Singapore, 17–20 November 2018.

14. Groot, P.; Birlutiu, A.; Heskes, T. Learning from multiple annotators with Gaussian processes. In Proceedings of the 21st Int Conf Artificial Neural Networks and Machine Learning, Espoo, Finland, 14–17 June 2011; pp. 159–164.

15. Rodrigues, F.; Pereira, F.C. Deep learning from crowds. In Proceedings of the 32nd Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 1611–1618.

16. Raykar, V.C.; Yu, S.; Zhao, L.H.; Valadez, G.H.; Florin, C.; Moy, L. Learning from crowds. J. Mach. Learn. Res. 2010, 11, 1297–1322.

17. Bachrach, Y.; Minka, T.; Guiver, J.; Graepel, T. How to Grade a Test Without Knowing the Answers, A Bayesian Graphical Model for Adaptive Crowdsourcing and Aptitude Testing. In Proceedings of the 29th International Conference on Machine Learning, Edinburgh, UK, 26 June–1 July 2012; pp. 819–826.

18. Moayedikia, A.; Yeoh, W.; Ong, K.; Ling, Y. Improving accuracy and lowering cost in crowdsourcing through an unsupervised expertise estimation approach. Decis. Support Syst. 2019, 122.

19. Kagan, E.; Ben-Gal, I. Probabilistic Search for Tracking Targets; Wiley & Sons: Chichester, UK, 2013.

20. van Dyk, D.A. Fitting Mixed-effects models using efficient EM-type algorithms. J. Comput. Graph. Stat. 2000, 9, 78–98.

21. UCI. 2007. Available online: https://archive.ics.uci.edu/dataset/196/localization+data+for+person+activity (accessed on 23 November 2023).

22. The Paintings Authorship. Questionnaire (Google Form). Available online: https://docs.google.com/forms/d/e/1FAIpQLSf_iUo1T7gMIPJyEoG0Cz3xoetfv6LNZvHjcmUyRL_Z4i3Kqw/viewform (accessed on 23 November 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).