Lightweight and Elegant Data Reduction Strategies for Training Acceleration of Convolutional Neural Networks

Abstract

1. Introduction

2. Related Works

2.1. Problem Definition

2.2. Key Research Directions in the Field

2.2.1. Quantization and Pruning Methods

2.2.2. Distributed Training

2.2.3. Dataset Reduction

2.2.4. New Training Strategies

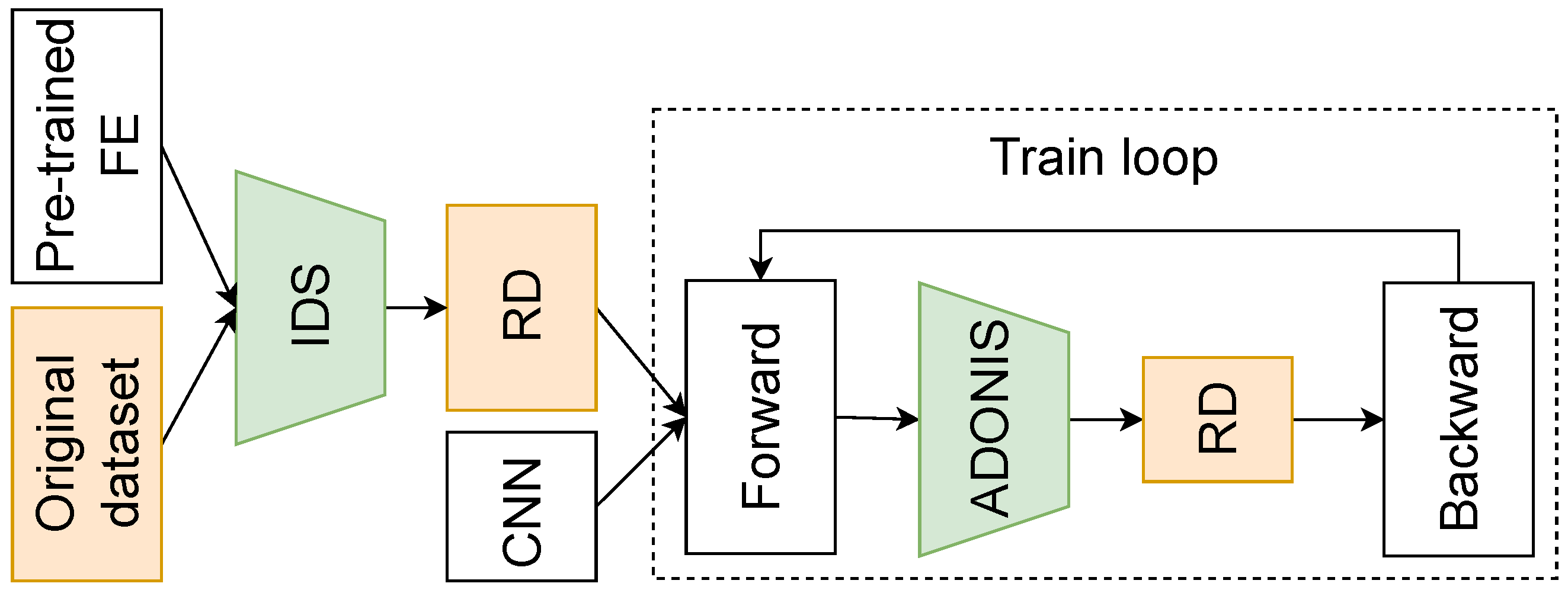

3. Proposed Methods for Training Acceleration

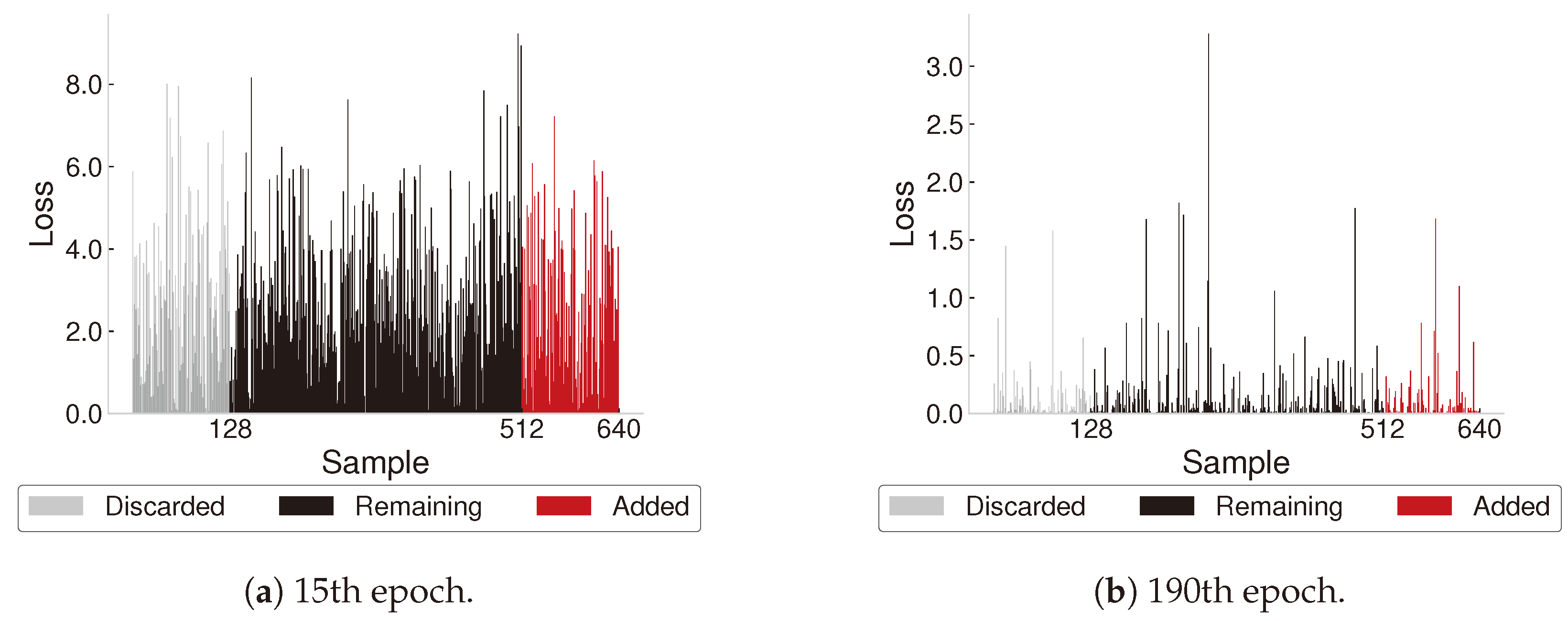

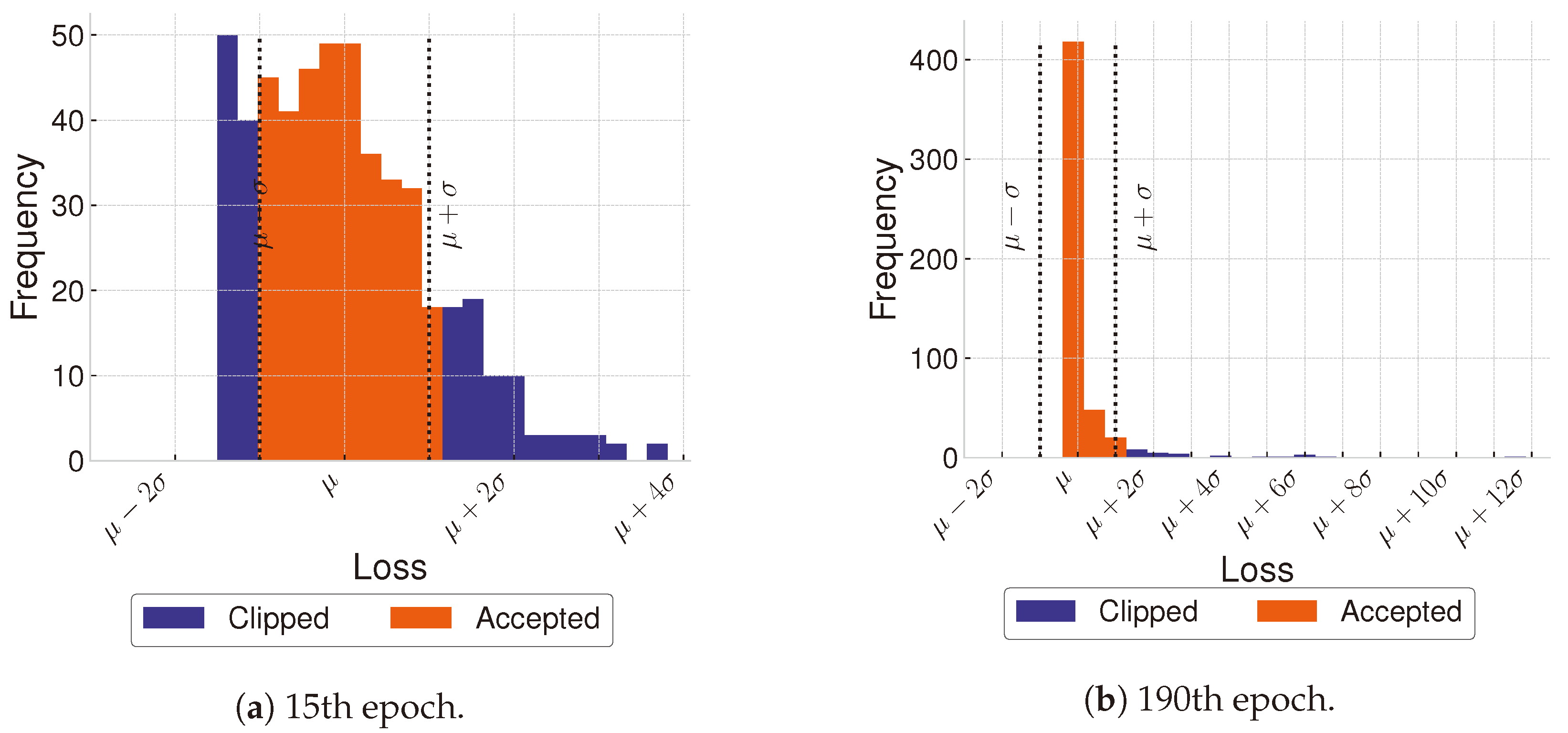

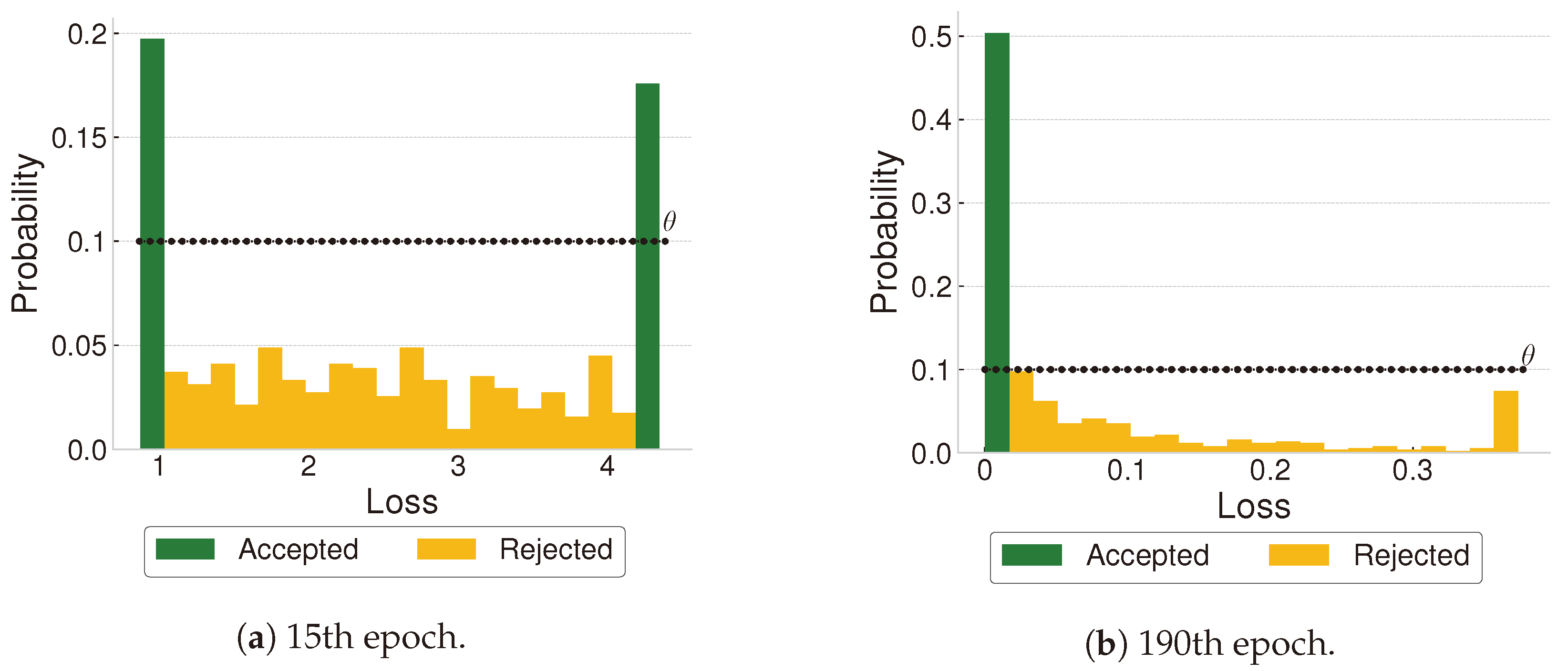

3.1. Adaptive Online Importance Sampling

Algorithm 1. Adaptive Online Importance Sampling (ADONIS) training algorithm.

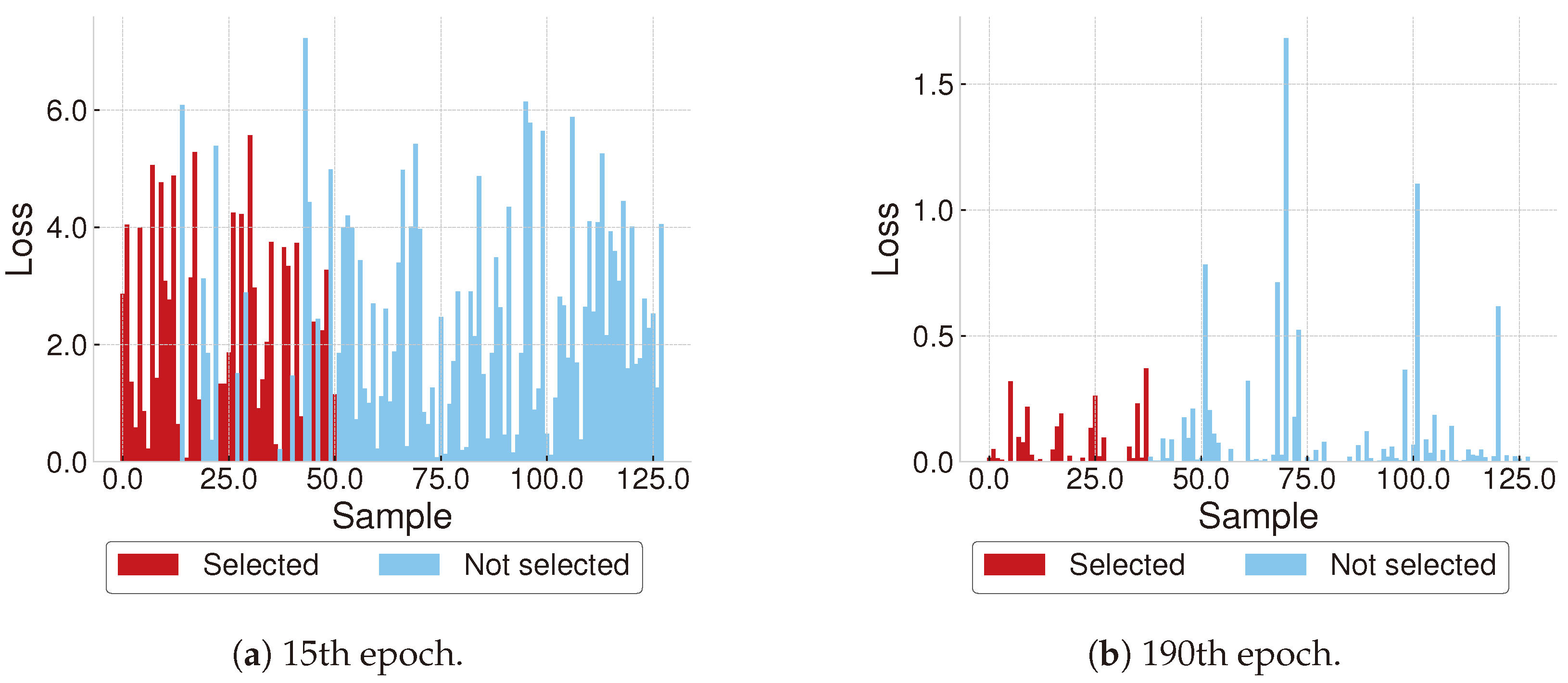

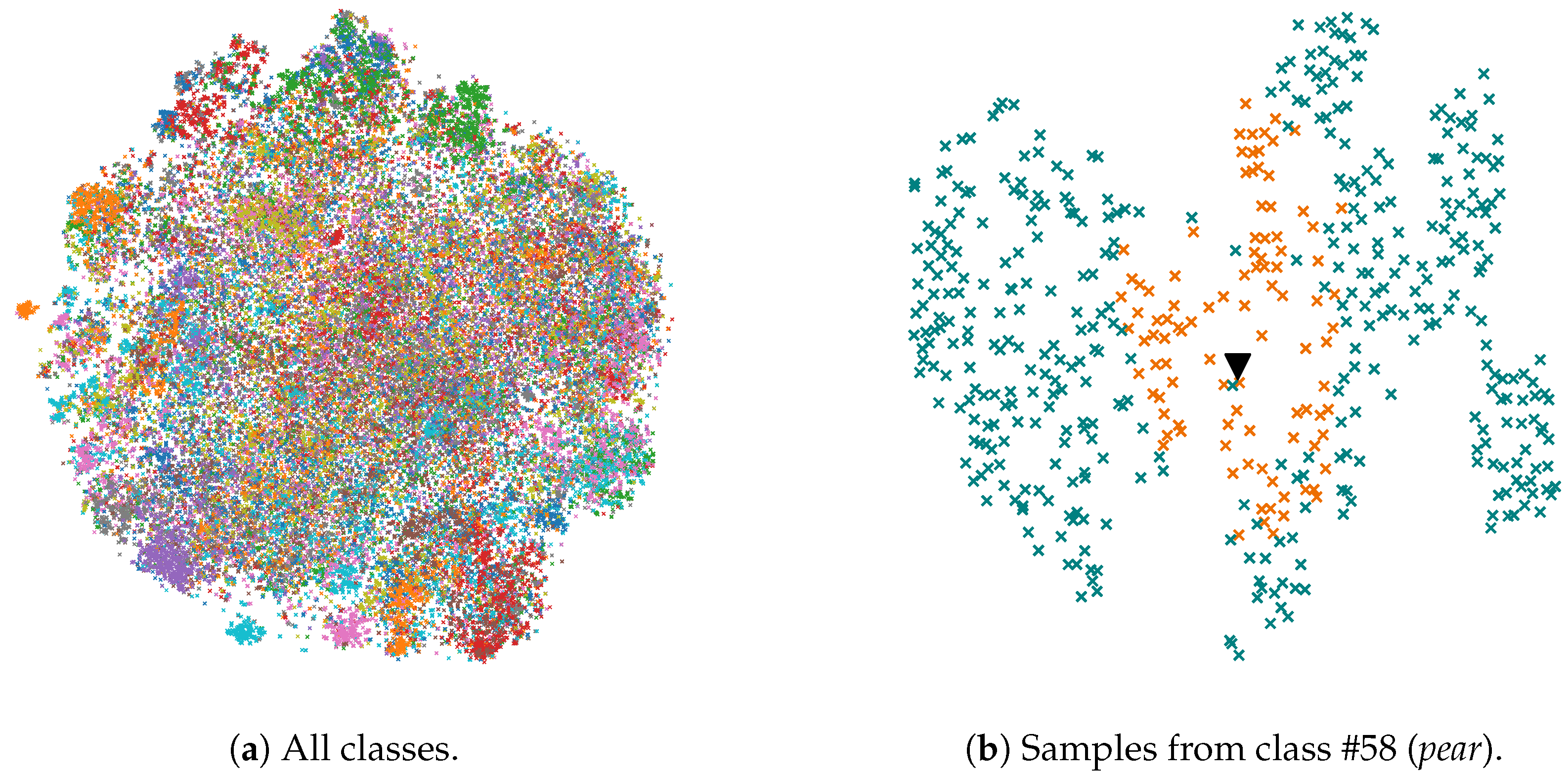

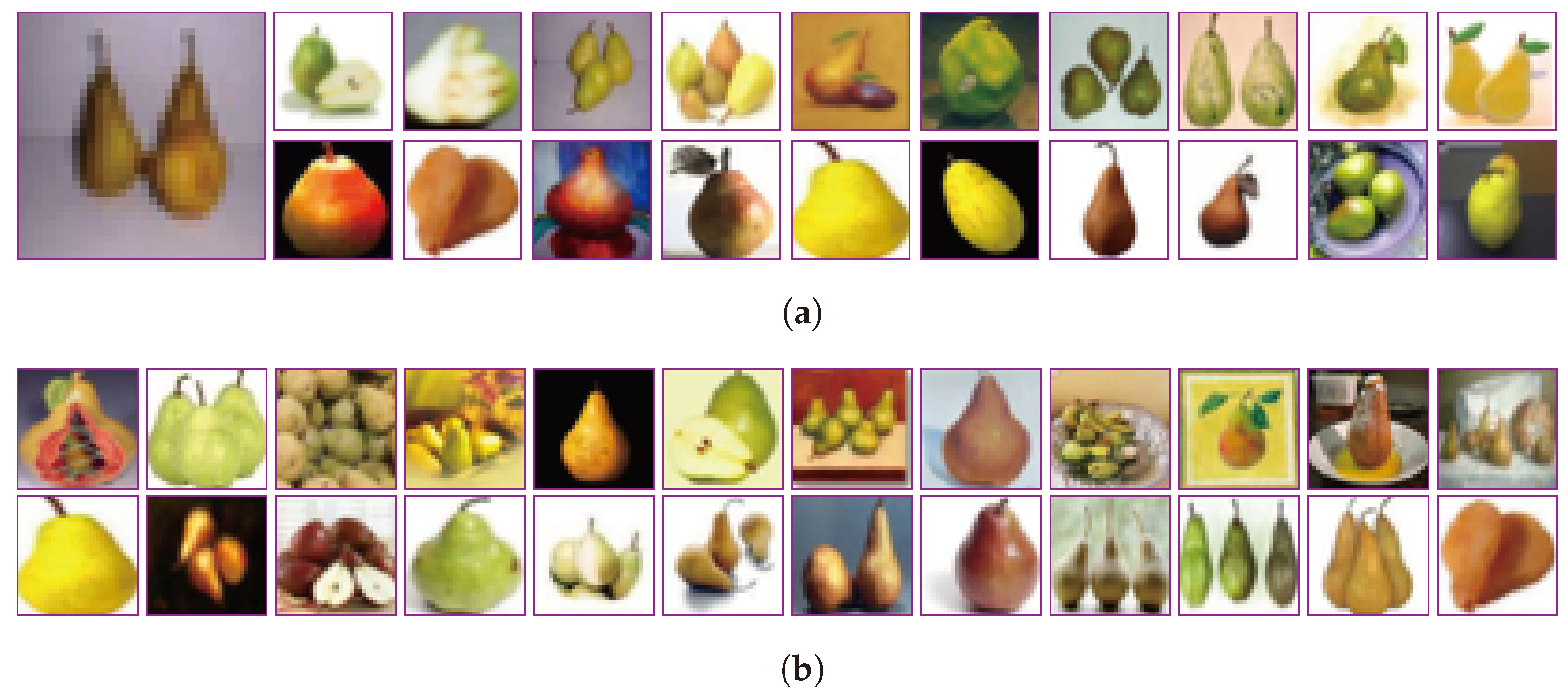
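
The steps of Algorithm 1 are not reproduced in text form in this version of the article. For orientation only, the sketch below shows the loss-based selection rule of Selective Backprop (Jiang et al., in the reference list), which the "Vanilla SB" rows in Appendix A appear to refer to. Each example is kept for the backward pass with probability equal to the empirical CDF of its loss over a window of recent losses, raised to a power β. This is a swapped-in baseline technique, not the authors' ADONIS method. The function names, the window size of 1024, β = 2, and the random losses standing in for real per-example losses are all illustrative assumptions.

```python
import numpy as np
from collections import deque


def selection_probabilities(batch_losses, loss_history, beta=2.0):
    """Probability of keeping each example for the backward pass.

    Selective-Backprop-style rule: P(keep) = CDF(loss) ** beta, where CDF is
    the empirical distribution of recently observed per-example losses.
    """
    history = np.sort(np.asarray(loss_history, dtype=float))
    losses = np.asarray(batch_losses, dtype=float)
    # Fraction of historical losses that are <= each current loss.
    cdf = np.searchsorted(history, losses, side="right") / history.size
    return cdf ** beta


def select_for_backward(batch_losses, loss_history, beta=2.0, rng=None):
    """Boolean mask of the examples whose gradients should be computed."""
    rng = np.random.default_rng() if rng is None else rng
    probs = selection_probabilities(batch_losses, loss_history, beta)
    # One Bernoulli draw per example with the probabilities above.
    return rng.random(probs.shape) < probs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    history = deque(maxlen=1024)  # hypothetical sliding window of recent losses
    for step in range(3):
        # Hypothetical stand-in for per-example losses from a forward pass.
        losses = rng.exponential(scale=1.0, size=8)
        history.extend(losses)  # add current losses before scoring them
        mask = select_for_backward(losses, history, beta=2.0, rng=rng)
        print(f"step {step}: backprop {int(mask.sum())} of {mask.size} examples")
```

Raising β concentrates backward passes on the highest-loss examples, while β = 0 makes every probability 1 and recovers ordinary training.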

3.2. Intellectual Data Selection

Algorithm 2. Intellectual Data Selection (IDS) algorithm.

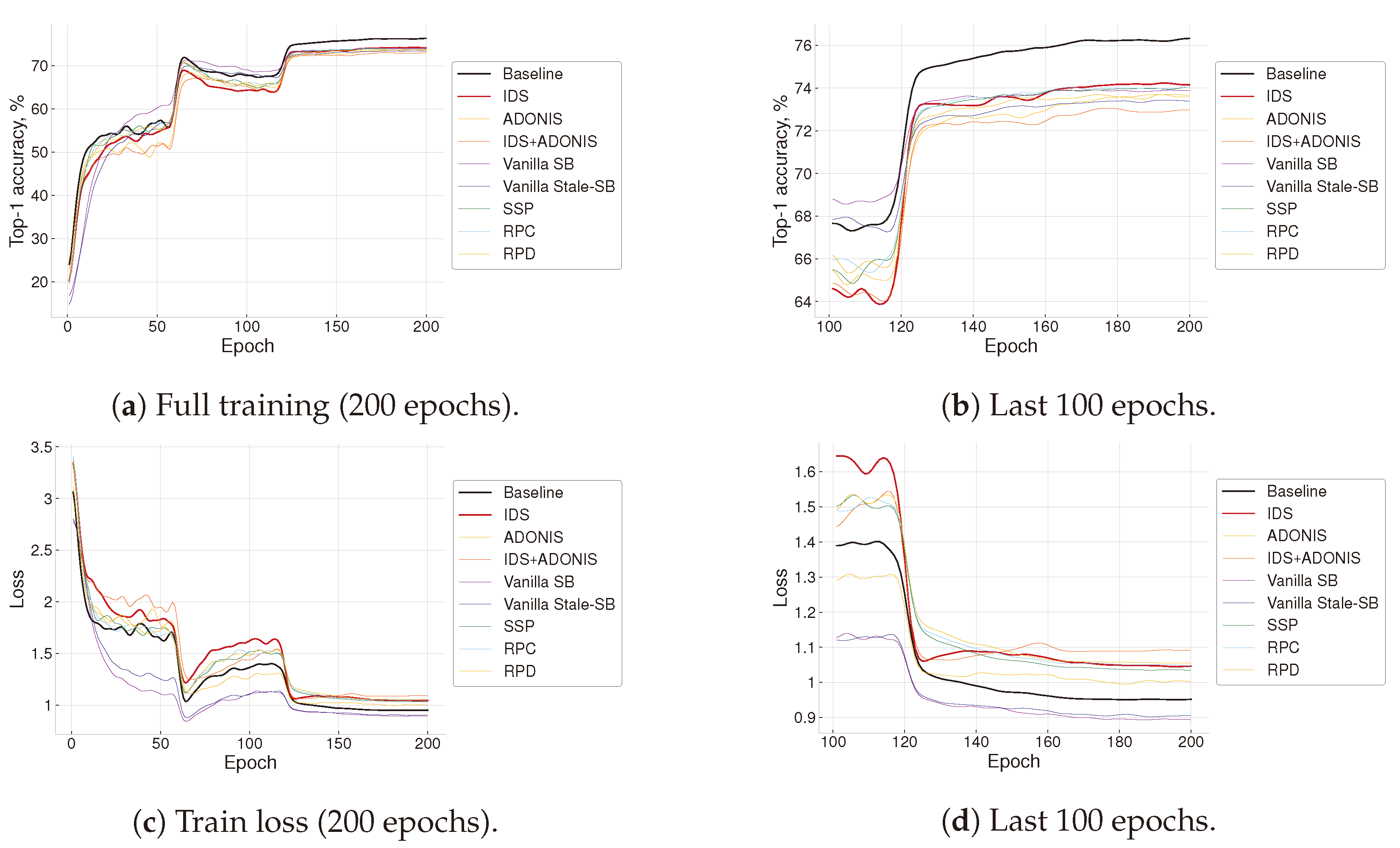

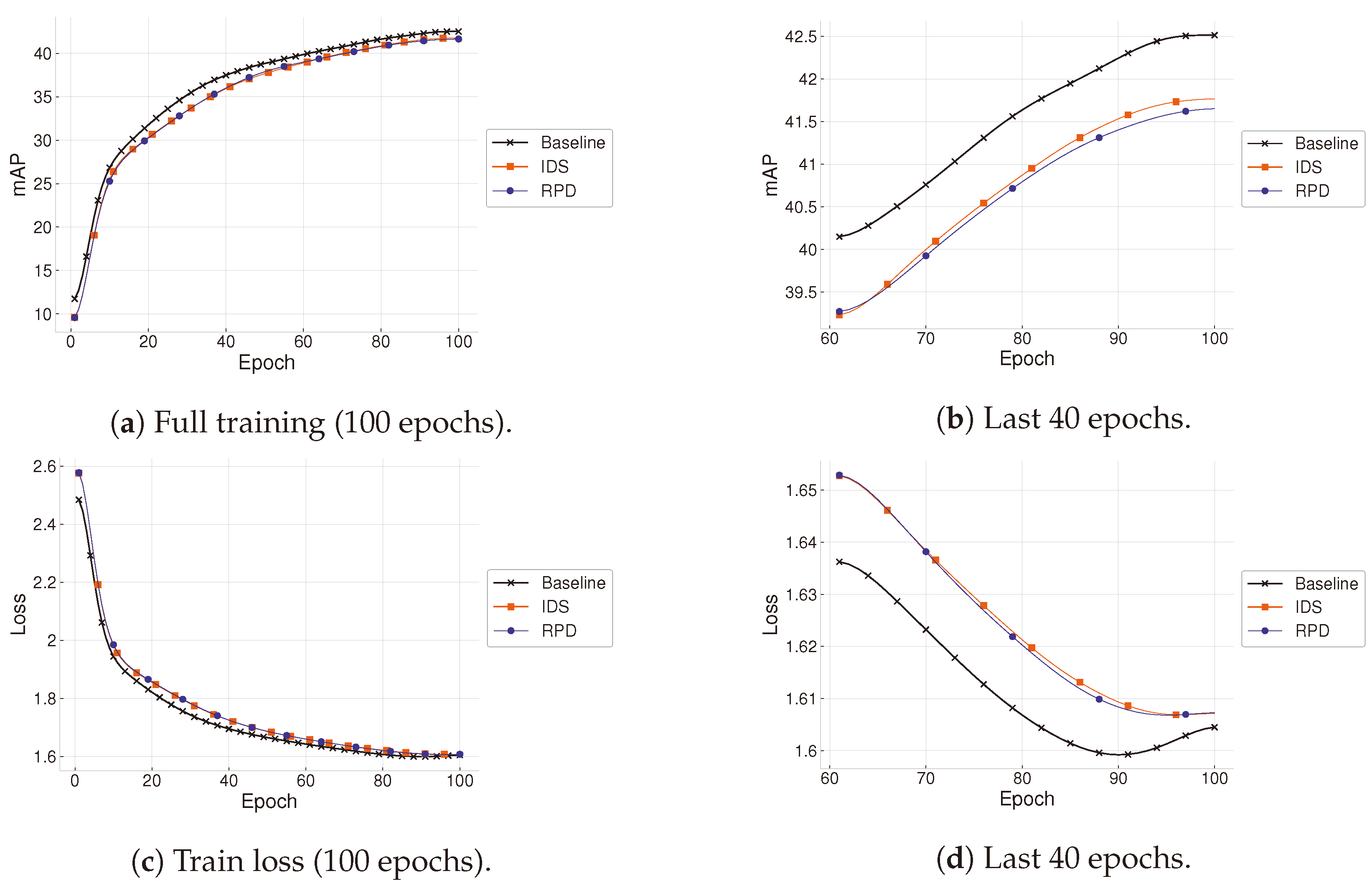
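
Algorithm 2 is likewise shown only by its caption here. Appendix C suggests that IDS operates in the latent space of a feature extractor (512-dimensional for ResNet18, 2048 for SwAV, 384 for DINOv2). As a generic illustration of choosing a training subset in such a space, the sketch below implements greedy k-center selection, the core-set heuristic of Sener and Savarese (in the reference list). It is a swapped-in technique, not the authors' IDS selection criterion; `k_center_greedy`, the budget, and the random 512-dimensional features are hypothetical.

```python
import numpy as np


def k_center_greedy(embeddings, budget, seed=0):
    """Greedy k-center selection (core-set heuristic of Sener and Savarese).

    Repeatedly picks the point farthest from the current selection so that the
    chosen subset covers the embedding space. Returns indices of the subset.
    """
    x = np.asarray(embeddings, dtype=float)
    n = x.shape[0]
    if not 0 < budget <= n:
        raise ValueError("budget must be in [1, n]")
    rng = np.random.default_rng(seed)
    selected = [int(rng.integers(n))]  # random first center
    # Distance from every point to its nearest selected center.
    min_dist = np.linalg.norm(x - x[selected[0]], axis=1)
    for _ in range(budget - 1):
        nxt = int(np.argmax(min_dist))  # farthest point from the current set
        selected.append(nxt)
        min_dist = np.minimum(min_dist, np.linalg.norm(x - x[nxt], axis=1))
    return np.array(selected)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-in for 512-d ResNet18 features of 1000 images.
    feats = rng.normal(size=(1000, 512))
    subset = k_center_greedy(feats, budget=300)
    print(subset.shape, len(set(subset.tolist())))
```

Each greedy step costs O(n·d) distance computations, so selecting k points costs O(n·k·d); this is why the dimensionality of the latent space matters for the cost of any embedding-based selection.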

4. Results

5. Discussion

5.1. Summary

5.2. Limitations and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed Results on CV Datasets

| Dataset | Model | Method | Acc. Drop (Full Train), p.p. | Train Boost (Full Train), × | Boost to 5 p.p. Drop, × |
|---|---|---|---|---|---|
| CIFAR-10 | ResNet18 | IDS () | 0.72 | 1.21 | 3.91 |
| | | ADONIS () | 0.76 | 1.28 | 4.0 |
| | | ADONIS () | 0.77 | 1.41 | 4.05 |
| | | ADONIS () | 0.55 | 1.46 | 4.35 |
| | | IDS () + ADONIS () | 1.27 | 1.58 | 4.08 |
| | | IDS () + ADONIS () | 1.05 | 1.75 | 4.87 |
| | | IDS () + ADONIS () | 1.29 | 1.81 | 4.99 |
| | | SSP | 0.65 | 1.24 | 3.97 |
| | | RPC | 0.74 | 1.23 | 3.95 |
| | | RPD | 0.53 | 1.23 | 3.93 |
| CIFAR-10 | ResNet50 | IDS () | 0.45 | 1.22 | 4.01 |
| | | ADONIS () | 0.97 | 1.35 | 3.77 |
| | | ADONIS () | 0.99 | 1.52 | 4.07 |
| | | ADONIS () | 0.98 | 1.69 | 4.49 |
| | | IDS () + ADONIS () | 1.62 | 1.59 | 2.62 |
| | | IDS () + ADONIS () | 1.69 | 1.76 | 2.79 |
| | | IDS () + ADONIS () | 1.65 | 1.93 | 3.05 |
| | | SSP | 0.78 | 1.18 | 3.79 |
| | | RPC | 0.38 | 1.18 | 3.87 |
| | | RPD | 0.58 | 1.19 | 3.95 |
| CIFAR-10 | MobileNet v2 | IDS () | −0.24 | 1.27 | 1.72 |
| | | ADONIS () | 0.44 | 1.39 | 1.86 |
| | | ADONIS () | 0.19 | 1.59 | 2.08 |
| | | ADONIS () | 0.4 | 1.68 | 2.18 |
| | | IDS () + ADONIS () | 1.04 | 1.75 | 2.31 |
| | | IDS () + ADONIS () | 1.17 | 1.96 | 2.56 |
| | | IDS () + ADONIS () | 0.96 | 2.04 | 2.66 |
| | | SSP | 0.55 | 1.28 | 1.73 |
| | | RPC | 0.23 | 1.19 | 1.62 |
| | | RPD | 0.29 | 1.13 | 1.53 |
| CIFAR-100 | ResNet18 | IDS () | 2.12 | 1.15 | 1.9 |
| | | ADONIS () | 2.76 | 1.16 | 1.83 |
| | | ADONIS () | 2.5 | 1.23 | 1.97 |
| | | ADONIS () | 2.66 | 1.27 | 2.03 |
| | | IDS () + ADONIS () | 3.55 | 1.58 | 2.5 |
| | | IDS () + ADONIS () | 3.26 | 1.71 | 2.72 |
| | | IDS () + ADONIS () | 3.26 | 1.75 | 2.76 |
| | | Vanilla SB | 2.43 | 0.92 | 2.84 |
| | | Vanilla Stale-SB | 2.81 | 1.1 | 1.8 |
| | | SSP | 2.32 | 1.14 | 3.63 |
| | | RPC | 2.49 | 1.29 | 2.12 |
| | | RPD | 2.43 | 1.16 | 1.91 |
| CIFAR-100 | ResNet50 | IDS () | 1.88 | 1.22 | 1.85 |
| | | ADONIS () | 2.2 | 1.32 | 1.96 |
| | | ADONIS () | 2.36 | 1.56 | 2.28 |
| | | ADONIS () | 2.19 | 1.63 | 2.37 |
| | | IDS () + ADONIS () | 4.24 | 1.61 | 2.21 |
| | | IDS () + ADONIS () | 4.65 | 1.89 | 2.1 |
| | | IDS () + ADONIS () | 4.92 | 1.9 | 2.08 |
| | | SSP | 2.74 | 1.17 | 1.76 |
| | | RPC | 2.77 | 1.18 | 1.79 |
| | | RPD | 2.13 | 1.18 | 1.78 |
| CIFAR-100 | MobileNet v2 | IDS () | 1.82 | 1.3 | 1.73 |
| | | ADONIS () | 1.9 | 1.54 | 1.94 |
| | | ADONIS () | 1.87 | 1.7 | 2.14 |
| | | ADONIS () | 1.37 | 1.77 | 2.23 |
| | | IDS () + ADONIS () | 2.77 | 1.78 | 1.96 |
| | | IDS () + ADONIS () | 3.41 | 1.99 | 2.05 |
| | | IDS () + ADONIS () | 3.7 | 2.08 | 2.06 |
| | | SSP | 0.96 | 1.27 | 1.69 |
| | | RPC | 1.59 | 1.21 | 1.6 |
| | | RPD | 1.63 | 1.21 | 1.61 |
| ImageNet 2012 | ResNet18 | IDS () | 1.2 | 1.22 | 1.72 |
| | | ADONIS () | 2.55 | 1.1 | 1.53 |
| | | ADONIS () | 2.39 | 1.11 | 1.54 |
| | | ADONIS () | 2.51 | 1.11 | 1.54 |
| | | IDS () + ADONIS () | 3.64 | 1.63 | 2.23 |
| | | IDS () + ADONIS () | 3.84 | 1.66 | 2.25 |
| | | IDS () + ADONIS () | 3.74 | 1.74 | 2.35 |
| | | SSP | 4.41 | 1.22 | 1.52 |
| | | RPC | 4.64 | 1.22 | 1.51 |
| | | RPD | 4.52 | 1.22 | 1.52 |
| ImageNet 2012 | ResNet50 | IDS () | 1.12 | 1.27 | 1.81 |
| | | ADONIS () | 2.18 | 1.48 | 2.02 |
| | | ADONIS () | 2.4 | 1.71 | 2.27 |
| | | ADONIS () | 2.45 | 1.82 | 2.37 |
| | | IDS () + ADONIS () | 3.48 | 1.77 | 2.37 |
| | | IDS () + ADONIS () | 3.43 | 2.17 | 2.88 |
| | | IDS () + ADONIS () | 3.5 | 2.31 | 3.05 |
| | | SSP | 4.03 | 1.23 | 1.67 |
| | | RPC | 4.22 | 1.25 | 1.62 |
| | | RPD | 4.17 | 1.28 | 1.66 |
| ImageNet 2012 | MobileNet v2 | IDS () | 0.61 | 1.25 | 2.61 |
| | | ADONIS () | 2.25 | 1.6 | 2.46 |
| | | ADONIS () | 1.74 | 1.72 | 2.66 |
| | | ADONIS () | 1.91 | 1.87 | 2.99 |
| | | IDS () + ADONIS () | 3.36 | 1.95 | 2.62 |
| | | IDS () + ADONIS () | 3.36 | 2.19 | 2.82 |
| | | IDS () + ADONIS () | 3.38 | 2.3 | 2.95 |
| | | SSP | 0.33 | 1.24 | 2.85 |
| | | RPC | 0.73 | 1.25 | 2.45 |
| | | RPD | 0.83 | 1.25 | 2.69 |
| MS COCO 2017 | YOLO v5 | IDS () | 0.76 | 1.19 | 2.41 |
| | | RPD | 0.86 | 1.23 | 2.46 |

Appendix B. Sensitivity Analysis of Hyperparameter Selection in ADONIS

| Method | Settings |
|---|---|
| SSP | |
| RPC | |
| RPD | |
| IDS | |
| ADONIS | |

Appendix C. Sensitivity Analysis of Hyperparameter Selection in IDS

| Model | Latent Space Dimension |
|---|---|
| ResNet18 | 512 |
| ResNet18 (5) | 512 |
| ResNet18 (10) | 512 |
| ResNet18 (15) | 512 |
| SwAV | 2048 |
| DINOv2 | 384 |

References

- Shen, D.; Wu, G.; Suk, H.I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221.

- Tuncer, T.; Ertam, F.; Dogan, S.; Aydemir, E.; Pławiak, P. Ensemble residual network-based gender and activity recognition method with signals. J. Supercomput. 2020, 76, 2119–2138.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Andrychowicz, O.M.; Baker, B.; Chociej, M.; Jozefowicz, R.; McGrew, B.; Pachocki, J.; Petron, A.; Plappert, M.; Powell, G.; Ray, A.; et al. Learning dexterous in-hand manipulation. Int. J. Robot. Res. 2020, 39, 3–20.

- Zhu, H.; Yuen, K.V.; Mihaylova, L.; Leung, H. Overview of environment perception for intelligent vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2584–2601.

- Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer vision for autonomous vehicles: Problems, datasets and state of the art. Found. Trends® Comput. Graph. Vis. 2020, 12, 1–308.

- Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 2018, 362, 1140–1144.

- Reinsel, D.; Gantz, J.; Rydning, J. The digitization of the world from edge to core. Framingham: Int. Data Corp. 2018, 16, 1–28.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 843–852.

- Zhai, X.; Kolesnikov, A.; Houlsby, N.; Beyer, L. Scaling vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12104–12113.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Knight, W. A New Chip Cluster Will Make Massive AI Models Possible. 2023. Available online: https://www.wired.com/story/cerebras-chip-cluster-neural-networks-ai/ (accessed on 15 June 2023).

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and policy considerations for deep learning in NLP. arXiv 2019, arXiv:1906.02243.

- Mirzasoleiman, B.; Bilmes, J.; Leskovec, J. Coresets for data-efficient training of machine learning models. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 13–18 July 2020; pp. 6950–6960.

- Sener, O.; Savarese, S. Active learning for convolutional neural networks: A core-set approach. arXiv 2017, arXiv:1708.00489.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

- Jiang, A.H.; Wong, D.L.K.; Zhou, G.; Andersen, D.G.; Dean, J.; Ganger, G.R.; Joshi, G.; Kaminsky, M.; Kozuch, M.; Lipton, Z.C.; et al. Accelerating deep learning by focusing on the biggest losers. arXiv 2019, arXiv:1910.00762.

- Sorscher, B.; Geirhos, R.; Shekhar, S.; Ganguli, S.; Morcos, A.S. Beyond neural scaling laws: Beating power law scaling via data pruning. arXiv 2022, arXiv:2206.14486.

- Cui, J.; Wang, R.; Si, S.; Hsieh, C.J. DC-BENCH: Dataset Condensation Benchmark. arXiv 2022, arXiv:2207.09639.

- Lei, S.; Tao, D. A Comprehensive Survey to Dataset Distillation. arXiv 2023, arXiv:2301.05603.

- Mayer, R.; Jacobsen, H.A. Scalable deep learning on distributed infrastructures: Challenges, techniques, and tools. ACM Comput. Surv. (CSUR) 2020, 53, 1–37.

- Verbraeken, J.; Wolting, M.; Katzy, J.; Kloppenburg, J.; Verbelen, T.; Rellermeyer, J.S. A survey on distributed machine learning. ACM Comput. Surv. (CSUR) 2020, 53, 1–33.

- Wang, H.; Qu, Z.; Zhou, Q.; Zhang, H.; Luo, B.; Xu, W.; Guo, S.; Li, R. A comprehensive survey on training acceleration for large machine learning models in IoT. IEEE Internet Things J. 2021, 9, 939–963.

- Gupta, S.; Agrawal, A.; Gopalakrishnan, K.; Narayanan, P. Deep learning with limited numerical precision. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 1737–1746.

- Zhou, S.; Wu, Y.; Ni, Z.; Zhou, X.; Wen, H.; Zou, Y. DoReFa-Net: Training low bitwidth convolutional neural networks with low bitwidth gradients. arXiv 2016, arXiv:1606.06160.

- Seide, F.; Fu, H.; Droppo, J.; Li, G.; Yu, D. 1-bit stochastic gradient descent and its application to data-parallel distributed training of speech DNNs. In Proceedings of the Fifteenth Annual Conference of the International Speech Communication Association, Singapore, 14–18 September 2014.

- Wen, W.; Xu, C.; Yan, F.; Wu, C.; Wang, Y.; Chen, Y.; Li, H. TernGrad: Ternary gradients to reduce communication in distributed deep learning. Adv. Neural Inf. Process. Syst. 2017, 30, 1509–1519.

- Liang, T.; Glossner, J.; Wang, L.; Shi, S.; Zhang, X. Pruning and quantization for deep neural network acceleration: A survey. Neurocomputing 2021, 461, 370–403.

- Lym, S.; Choukse, E.; Zangeneh, S.; Wen, W.; Sanghavi, S.; Erez, M. PruneTrain: Fast neural network training by dynamic sparse model reconfiguration. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, Denver, CO, USA, 17–22 November 2019; pp. 1–13.

- Google. Edge TPU. 2023. Available online: https://cloud.google.com/edge-tpu/ (accessed on 26 January 2023).

- NVIDIA Corporation. GeForce RTX 30 Series. 2023. Available online: https://www.nvidia.com/en-gb/geforce/graphics-cards/30-series/ (accessed on 15 June 2023).

- Huawei Technologies Co., Ltd. Ascend 910 Series. 2023. Available online: https://e.huawei.com/ae/products/cloud-computing-dc/atlas/ascend-910 (accessed on 15 June 2023).

- Vepakomma, P.; Gupta, O.; Swedish, T.; Raskar, R. Split learning for health: Distributed deep learning without sharing raw patient data. arXiv 2018, arXiv:1812.00564.

- Kang, Y.; Hauswald, J.; Gao, C.; Rovinski, A.; Mudge, T.; Mars, J.; Tang, L. Neurosurgeon: Collaborative intelligence between the cloud and mobile edge. ACM SIGARCH Comput. Archit. News 2017, 45, 615–629.

- Birodkar, V.; Mobahi, H.; Bengio, S. Semantic Redundancies in Image-Classification Datasets: The 10% You Do not Need. arXiv 2019, arXiv:1901.11409.

- Toneva, M.; Sordoni, A.; Combes, R.T.d.; Trischler, A.; Bengio, Y.; Gordon, G.J. An empirical study of example forgetting during deep neural network learning. arXiv 2018, arXiv:1812.05159.

- Cazenavette, G.; Wang, T.; Torralba, A.; Efros, A.A.; Zhu, J.Y. Dataset distillation by matching training trajectories. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4750–4759.

- Wang, T.; Zhu, J.Y.; Torralba, A.; Efros, A.A. Dataset distillation. arXiv 2018, arXiv:1811.10959.

- Coleman, C.; Yeh, C.; Mussmann, S.; Mirzasoleiman, B.; Bailis, P.; Liang, P.; Leskovec, J.; Zaharia, M. Selection via proxy: Efficient data selection for deep learning. arXiv 2019, arXiv:1906.11829.

- Shim, J.h.; Kong, K.; Kang, S.J. Core-set sampling for efficient neural architecture search. arXiv 2021, arXiv:2107.06869.

- Johnson, T.B.; Guestrin, C. Training deep models faster with robust, approximate importance sampling. Adv. Neural Inf. Process. Syst. 2018, 31, 7265–7275.

- Katharopoulos, A.; Fleuret, F. Biased importance sampling for deep neural network training. arXiv 2017, arXiv:1706.00043.

- Katharopoulos, A.; Fleuret, F. Not all samples are created equal: Deep learning with importance sampling. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 2525–2534.

- Zhang, Z.; Chen, Y.; Saligrama, V. Efficient training of very deep neural networks for supervised hashing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1487–1495.

- Dogra, A.S.; Redman, W. Optimizing neural networks via Koopman operator theory. Adv. Neural Inf. Process. Syst. 2020, 33, 2087–2097.

- Lagani, G.; Falchi, F.; Gennaro, C.; Amato, G. Hebbian semi-supervised learning in a sample efficiency setting. Neural Netw. 2021, 143, 719–731.

- Amato, G.; Carrara, F.; Falchi, F.; Gennaro, C.; Lagani, G. Hebbian learning meets deep convolutional neural networks. In Proceedings of the Image Analysis and Processing–ICIAP 2019: 20th International Conference, Trento, Italy, 9–13 September 2019; Proceedings, Part I 20. Springer: Berlin/Heidelberg, Germany, 2019; pp. 324–334.

- Krithivasan, S.; Sen, S.; Venkataramani, S.; Raghunathan, A. Accelerating DNN Training Through Selective Localized Learning. Front. Neurosci. 2022, 15, 759807.

- Talloen, J.; Dambre, J.; Vandesompele, A. PyTorch-Hebbian: Facilitating local learning in a deep learning framework. arXiv 2021, arXiv:2102.00428.

- Miconi, T. Hebbian learning with gradients: Hebbian convolutional neural networks with modern deep learning frameworks. arXiv 2021, arXiv:2107.01729.

- Cekic, M.; Bakiskan, C.; Madhow, U. Towards robust, interpretable neural networks via Hebbian/anti-Hebbian learning: A software framework for training with feature-based costs. Softw. Impacts 2022, 13, 100347.

- Moraitis, T.; Toichkin, D.; Journé, A.; Chua, Y.; Guo, Q. SoftHebb: Bayesian inference in unsupervised Hebbian soft winner-take-all networks. Neuromorphic Comput. Eng. 2022, 2, 044017.

- Tang, J.; Yuan, F.; Shen, X.; Wang, Z.; Rao, M.; He, Y.; Sun, Y.; Li, X.; Zhang, W.; Li, Y.; et al. Bridging biological and artificial neural networks with emerging neuromorphic devices: Fundamentals, progress, and challenges. Adv. Mater. 2019, 31, 1902761.

- Lagani, G.; Gennaro, C.; Fassold, H.; Amato, G. FastHebb: Scaling Hebbian Training of Deep Neural Networks to ImageNet Level. In Proceedings of the Similarity Search and Applications: 15th International Conference, SISAP 2022, Bologna, Italy, 5–7 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 251–264.

- Journé, A.; Rodriguez, H.G.; Guo, Q.; Moraitis, T. Hebbian deep learning without feedback. arXiv 2022, arXiv:2209.11883.

- Lagani, G.; Falchi, F.; Gennaro, C.; Amato, G. Comparing the performance of Hebbian against backpropagation learning using convolutional neural networks. Neural Comput. Appl. 2022, 34, 6503–6519.

- Sturges, H.A. The choice of a class interval. J. Am. Stat. Assoc. 1926, 21, 65–66.

- Jiang, A.H. Official Repository with Source Code for Selective Backpropagation Algorithm. 2023. Available online: https://github.com/angelajiang/SelectiveBackprop (accessed on 15 June 2023).

- The MosaicML Team. Composer. 2021. Available online: https://github.com/mosaicml/composer/ (accessed on 15 June 2023).

- MosaicML. Selective Backpropagation Implementation within Composer Library. 2023. Available online: https://github.com/mosaicml/composer/tree/dev/composer/algorithms/selective_backprop (accessed on 15 June 2023).

- Zhang, J.; Yu, H.F.; Dhillon, I.S. AutoAssist: A framework to accelerate training of deep neural networks. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://proceedings.neurips.cc/paper_files/paper/2019/file/9bd5ee6fe55aaeb673025dbcb8f939c1-Paper.pdf (accessed on 15 June 2023).

- Wang, L.; Zhang, Y.; Feng, J. On the Euclidean distance of images. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1334–1339.

- Eskicioglu, A.M.; Fisher, P.S. Image quality measures and their performance. IEEE Trans. Commun. 1995, 43, 2959–2965.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (accessed on 15 June 2023).

- weiaicunzai. Pytorch-CIFAR100. 2022. Available online: https://github.com/weiaicunzai/pytorch-cifar100 (accessed on 15 June 2023).

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- PyTorch. Torchvision Library. 2023. Available online: https://pytorch.org/vision/stable/index.html (accessed on 15 June 2023).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Elsen, E.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed precision training. arXiv 2017, arXiv:1710.03740.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Caron, M.; Misra, I.; Mairal, J.; Goyal, P.; Bojanowski, P.; Joulin, A. Unsupervised learning of visual features by contrasting cluster assignments. Adv. Neural Inf. Process. Syst. 2020, 33, 9912–9924.

- Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning Robust Visual Features without Supervision. arXiv 2023, arXiv:2304.07193.

| Model | Dataset | Acc. | Train Time, s |
|---|---|---|---|
| ResNet18 | CIFAR-10 | 95.09 | 2518 |
| | CIFAR-100 | 76.43 | 2540 |
| | ImageNet 2012 | 70.02 | 55,943 |
| ResNet50 | CIFAR-10 | 95.1 | 5913 |
| | CIFAR-100 | 79.06 | 5910 |
| | ImageNet 2012 | 75.6 | 153,409 |
| MobileNet v2 | CIFAR-10 | 90.89 | 4074 |
| | CIFAR-100 | 68.26 | 4173 |
| | ImageNet 2012 | 68.04 | 94,470 |
| YOLO v5 | MS COCO 2017 | 42.55 | 110,581 |
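
Appendix A reports speedups as factors rather than times. Assuming that "Train Boost" is the ratio of the baseline wall-clock training time in the table above to the training time with the acceleration method (this section does not restate the definition), a speedup converts to absolute time as sketched below. The helper names are hypothetical; the baseline times are copied from the table above and the boost values from Appendix A, whose per-row settings in parentheses are not given in this version, so the method labels omit them.

```python
# Baseline full-dataset training times in seconds, copied from the table above.
BASELINE_TIME_S = {
    ("ResNet18", "CIFAR-10"): 2518,
    ("ResNet50", "ImageNet 2012"): 153_409,
}


def implied_time_s(baseline_s: float, boost: float) -> float:
    """Training time implied by a speedup, ASSUMING boost = T_baseline / T_method."""
    if boost <= 0:
        raise ValueError("boost must be positive")
    return baseline_s / boost


def hours_saved(baseline_s: float, boost: float) -> float:
    """Wall-clock hours saved relative to the baseline run (same assumption)."""
    return (baseline_s - implied_time_s(baseline_s, boost)) / 3600


if __name__ == "__main__":
    # "Train Boost (Full Train)" values taken from Appendix A.
    cases = [
        ("ResNet18", "CIFAR-10", "IDS", 1.21),
        ("ResNet50", "ImageNet 2012", "IDS + ADONIS", 2.31),
    ]
    for model, dataset, method, boost in cases:
        base = BASELINE_TIME_S[(model, dataset)]
        t = implied_time_s(base, boost)
        print(f"{model}/{dataset} {method}: ~{t:,.0f} s "
              f"({hours_saved(base, boost):.1f} h saved)")
```

Under this assumption, the 2.31× boost for ResNet50 on ImageNet 2012 corresponds to roughly 66,400 s of training, about 24 hours less than the 153,409 s baseline.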

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Demidovskij, A.; Tugaryov, A.; Trutnev, A.; Kazyulina, M.; Salnikov, I.; Pavlov, S. Lightweight and Elegant Data Reduction Strategies for Training Acceleration of Convolutional Neural Networks. Mathematics 2023, 11, 3120. https://doi.org/10.3390/math11143120


Chicago/Turabian Style: Demidovskij, Alexander, Artyom Tugaryov, Aleksei Trutnev, Marina Kazyulina, Igor Salnikov, and Stanislav Pavlov. 2023. "Lightweight and Elegant Data Reduction Strategies for Training Acceleration of Convolutional Neural Networks" Mathematics 11, no. 14: 3120. https://doi.org/10.3390/math11143120
