Stability Analysis of Recurrent-Neural-Based Controllers Using Dissipativity Domain

Abstract

1. Introduction

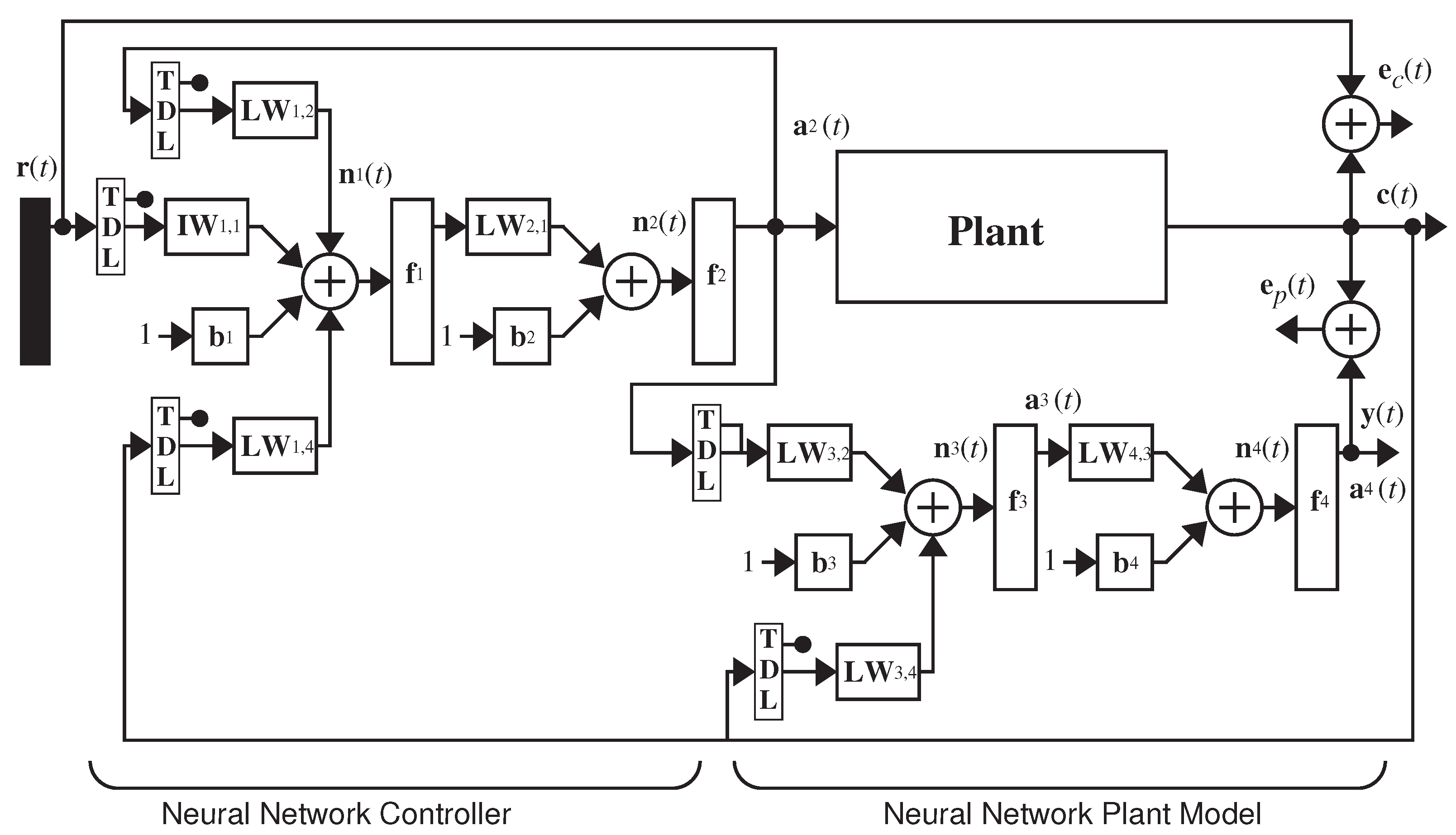

2. Layered Digital Dynamic Network

3. Reachable Set

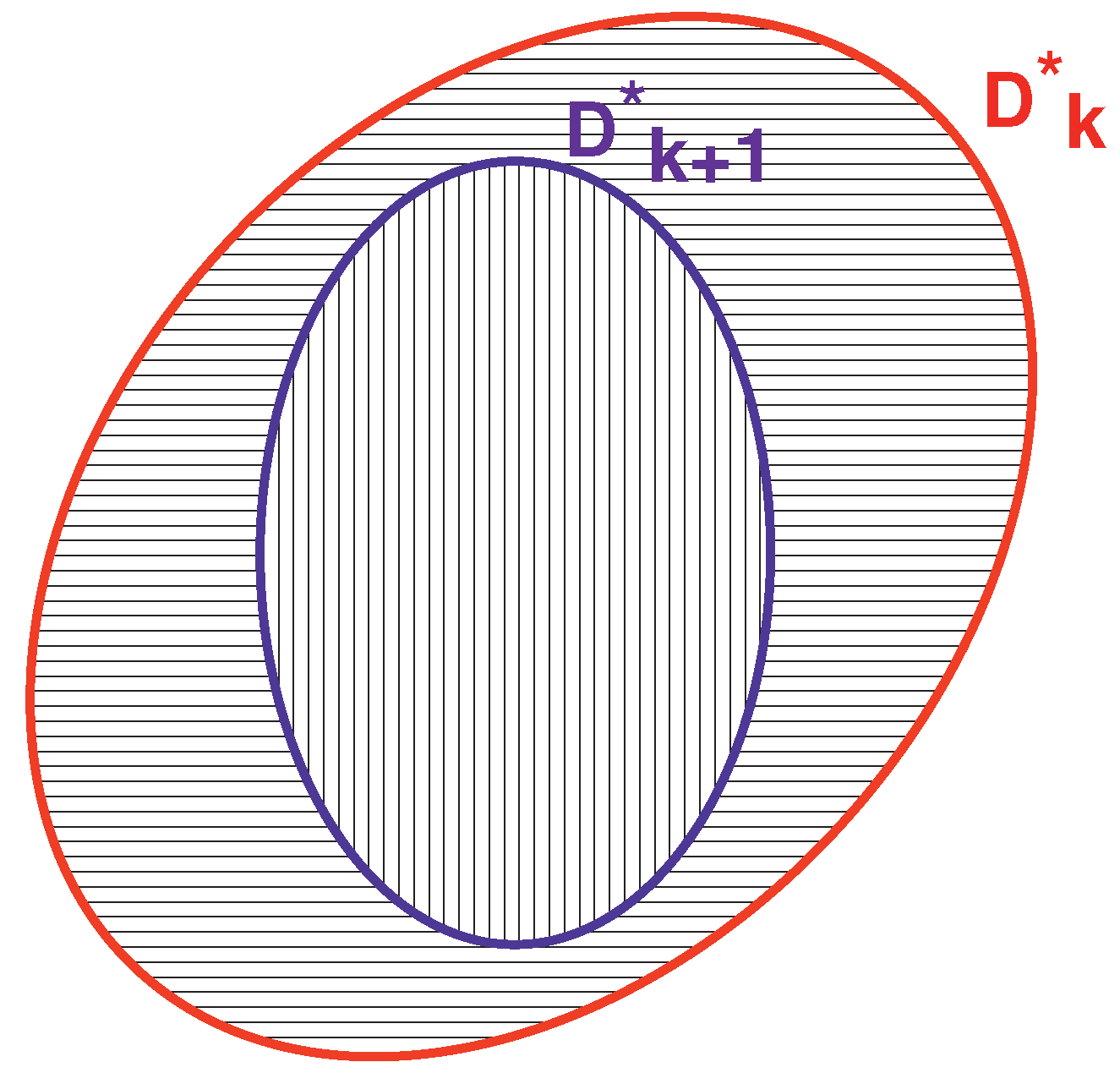

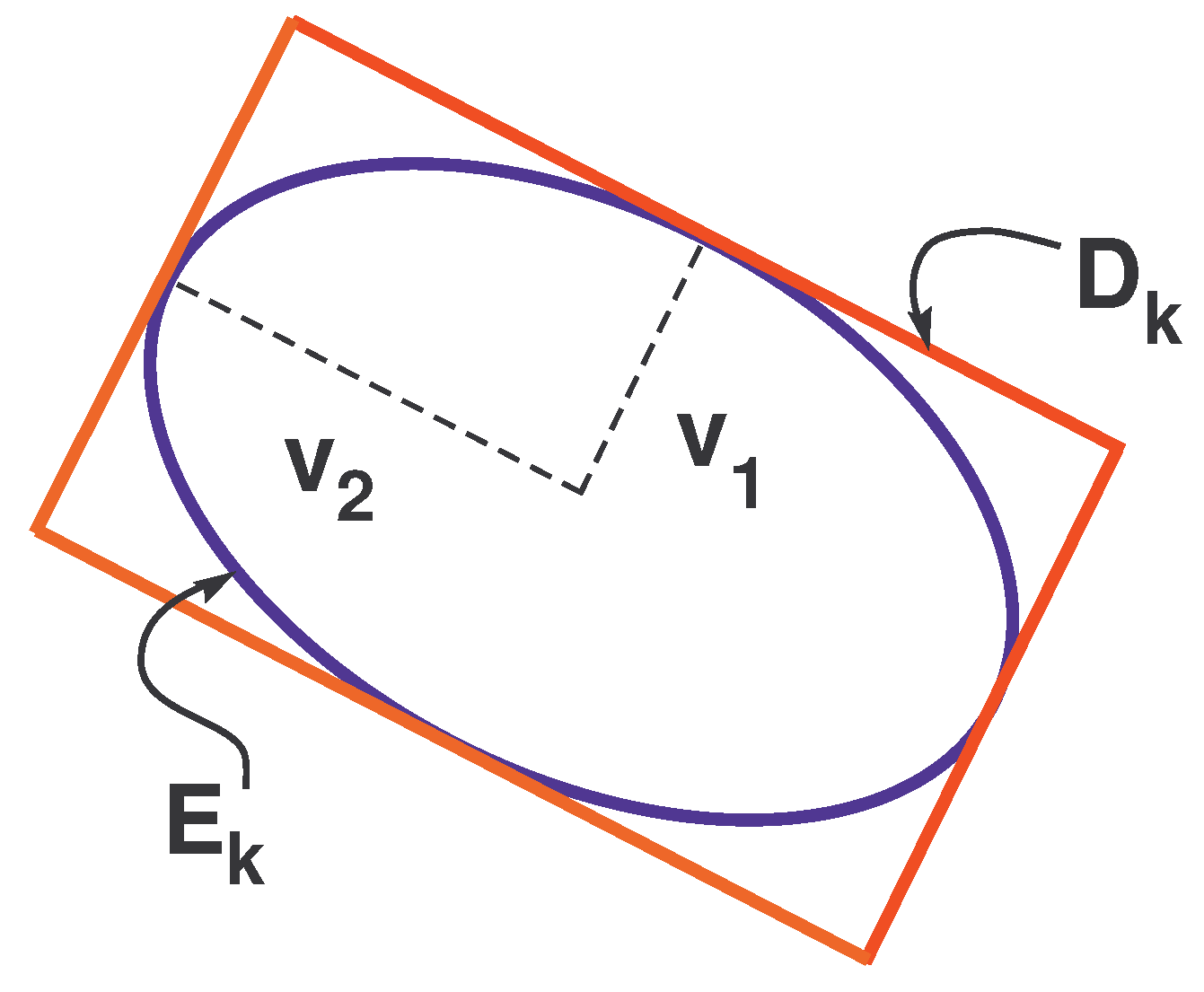

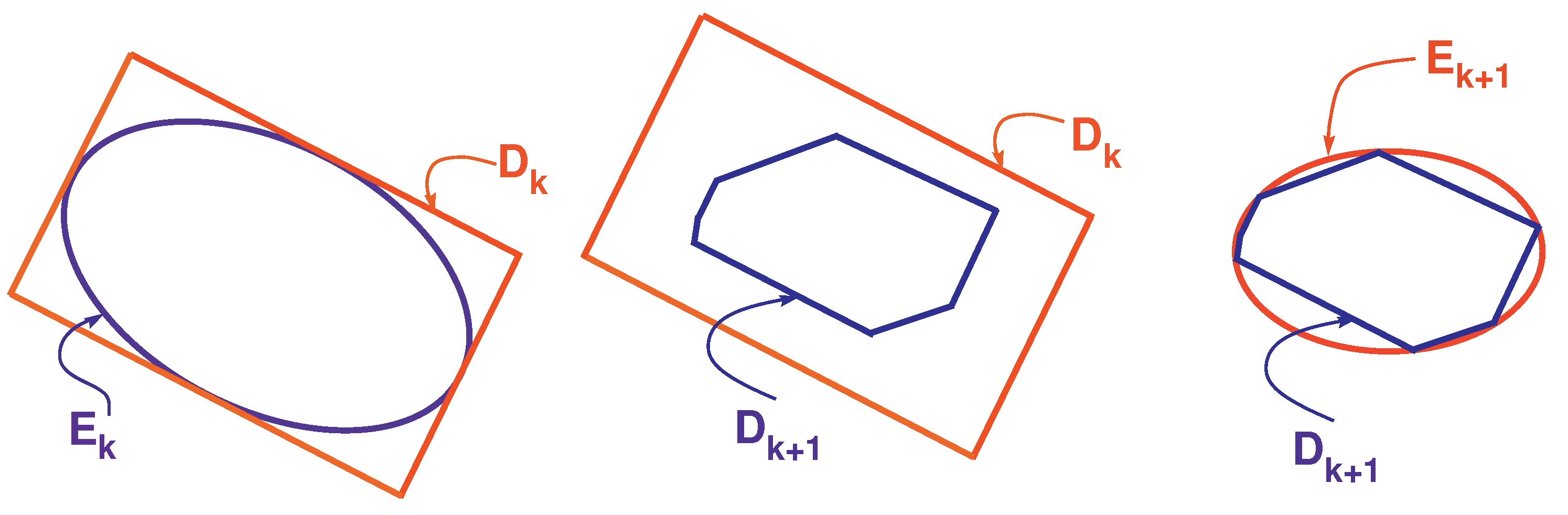

4. Estimation of Reachable Set

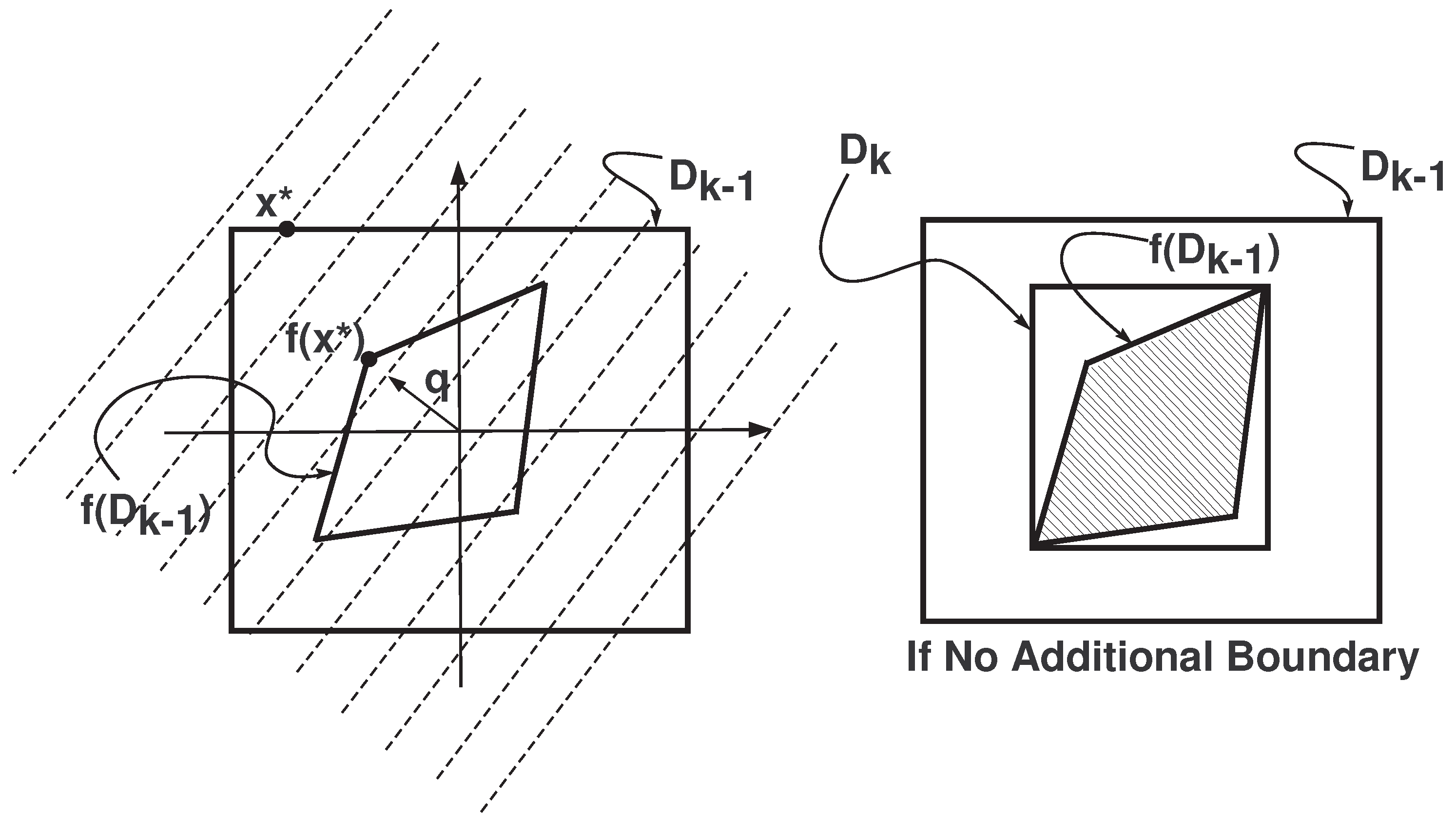

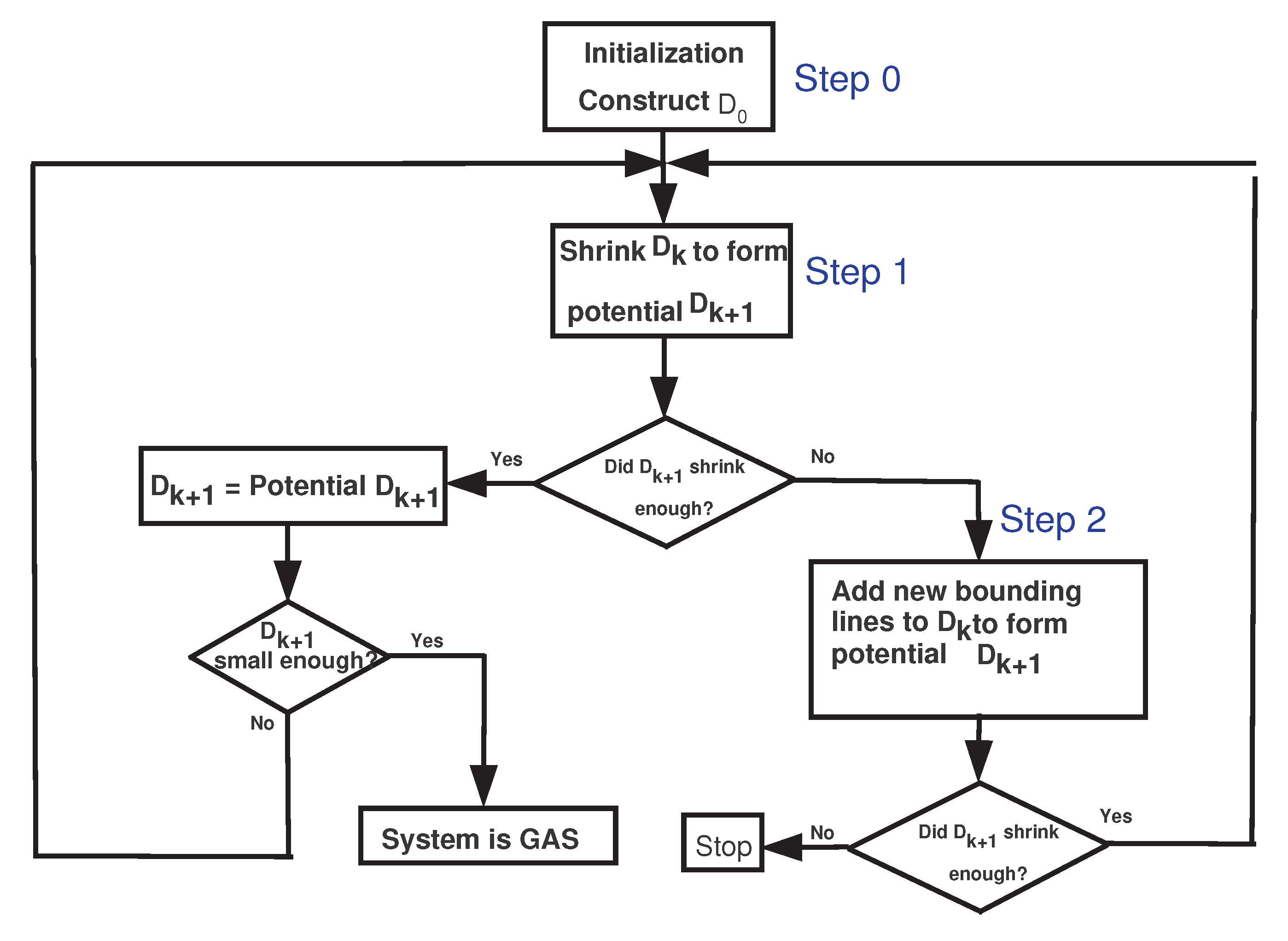

5. RODD-LB1 [9]

5.1. Step 0

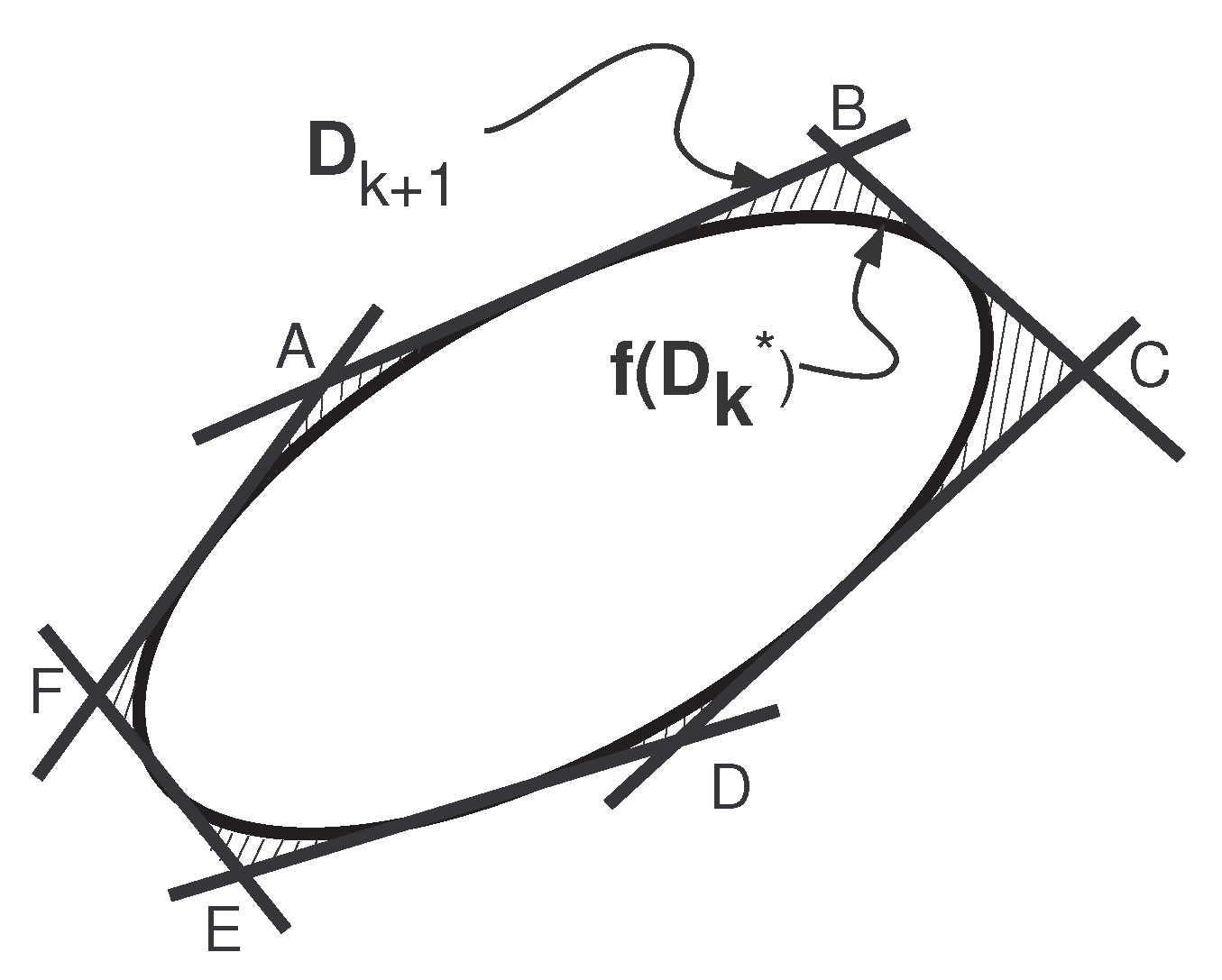

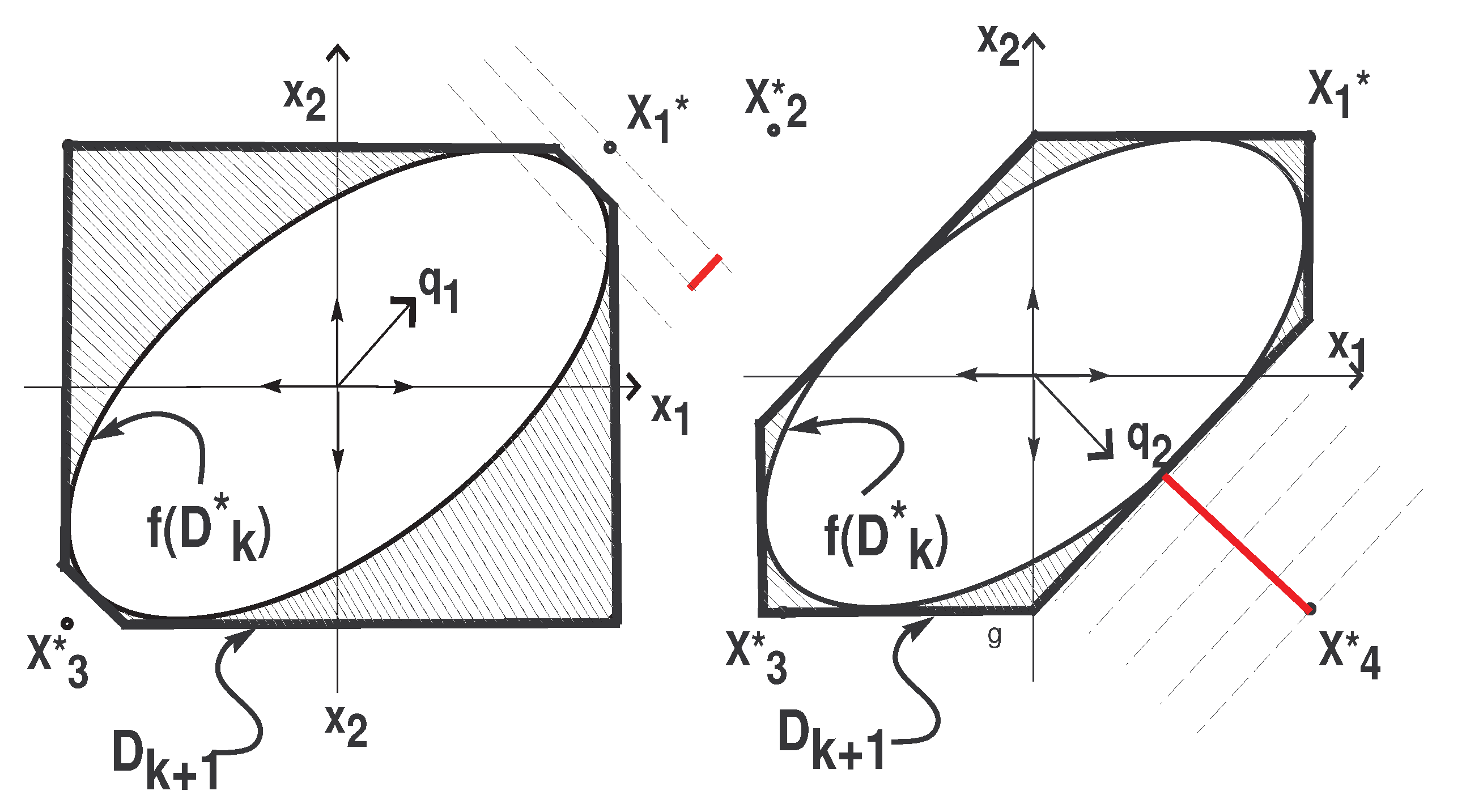

5.2. Step 1

5.3. Step 2

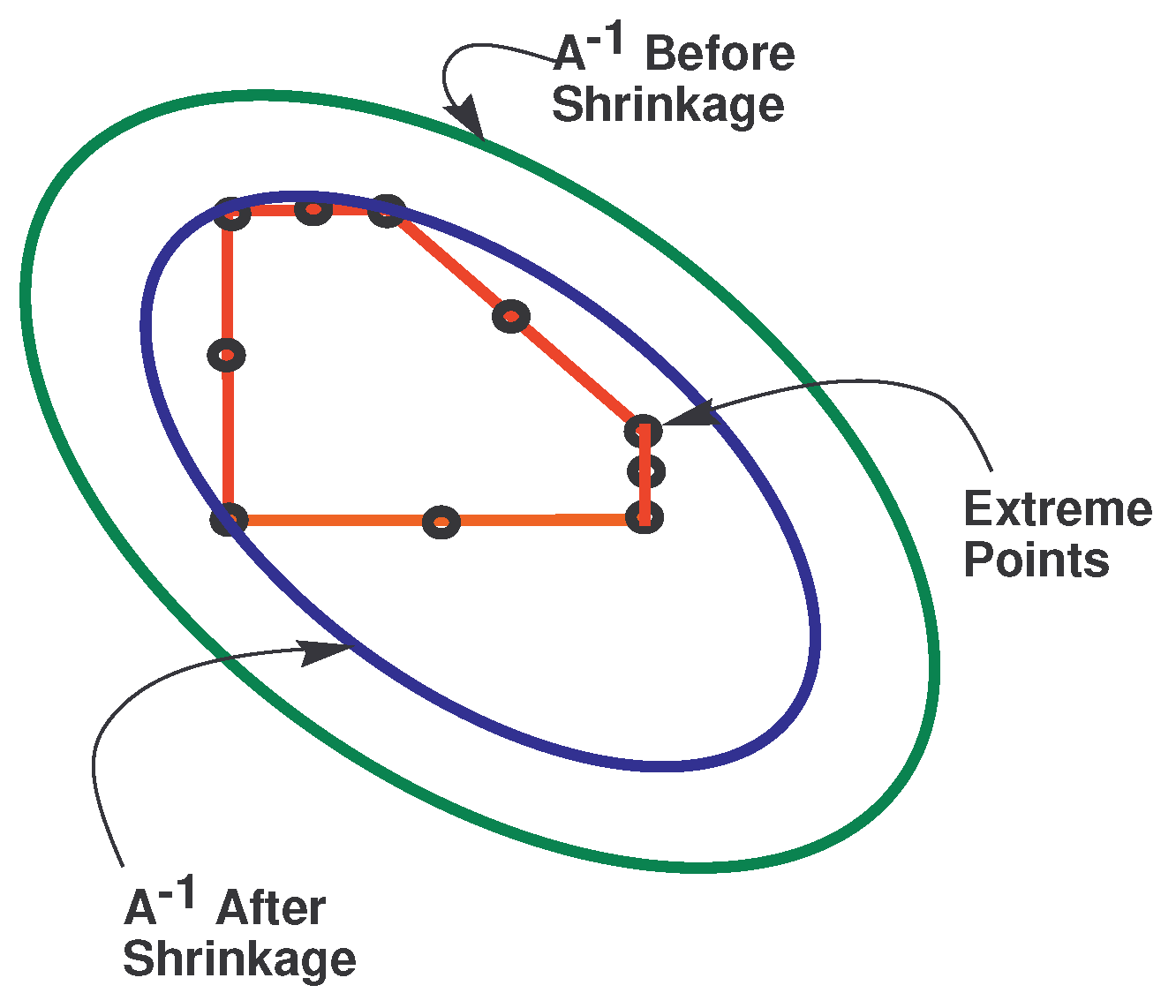

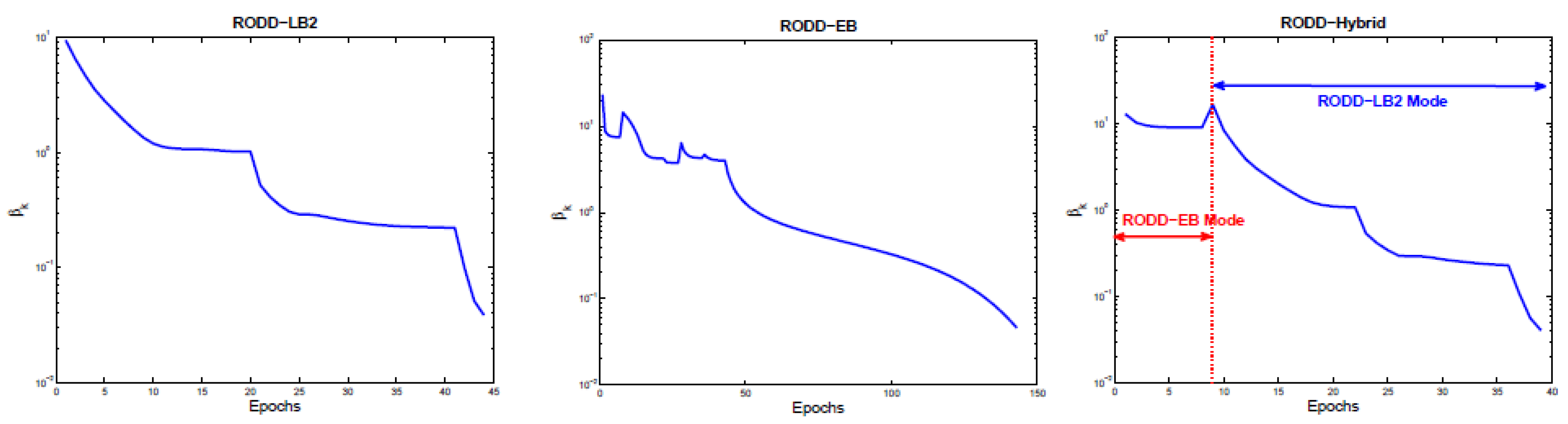

6. RODD-LB2

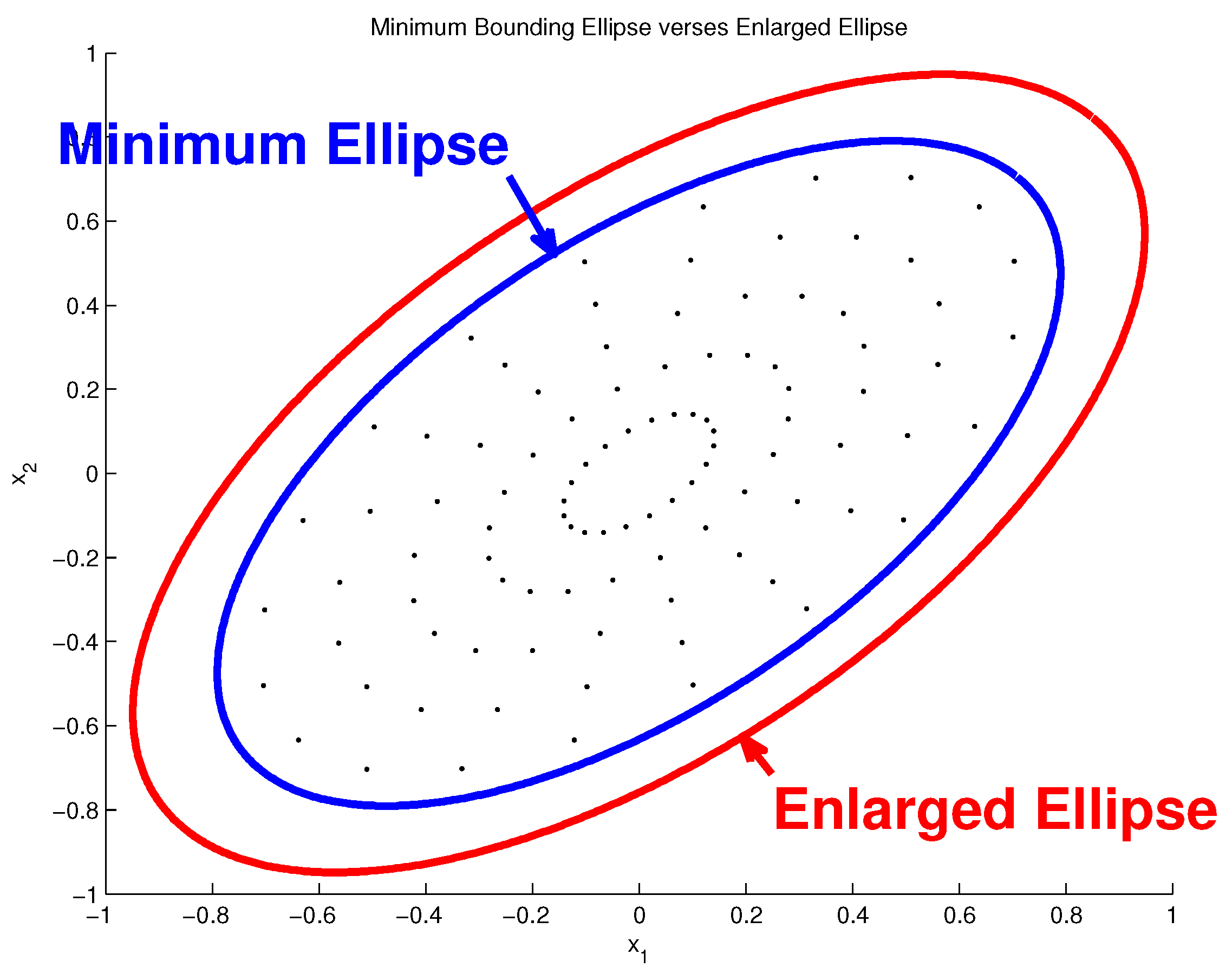

7. RODD-EB

7.1. RODD-EB Algorithm

7.1.1. Step 0

7.1.2. Step 1

7.1.3. Step 2

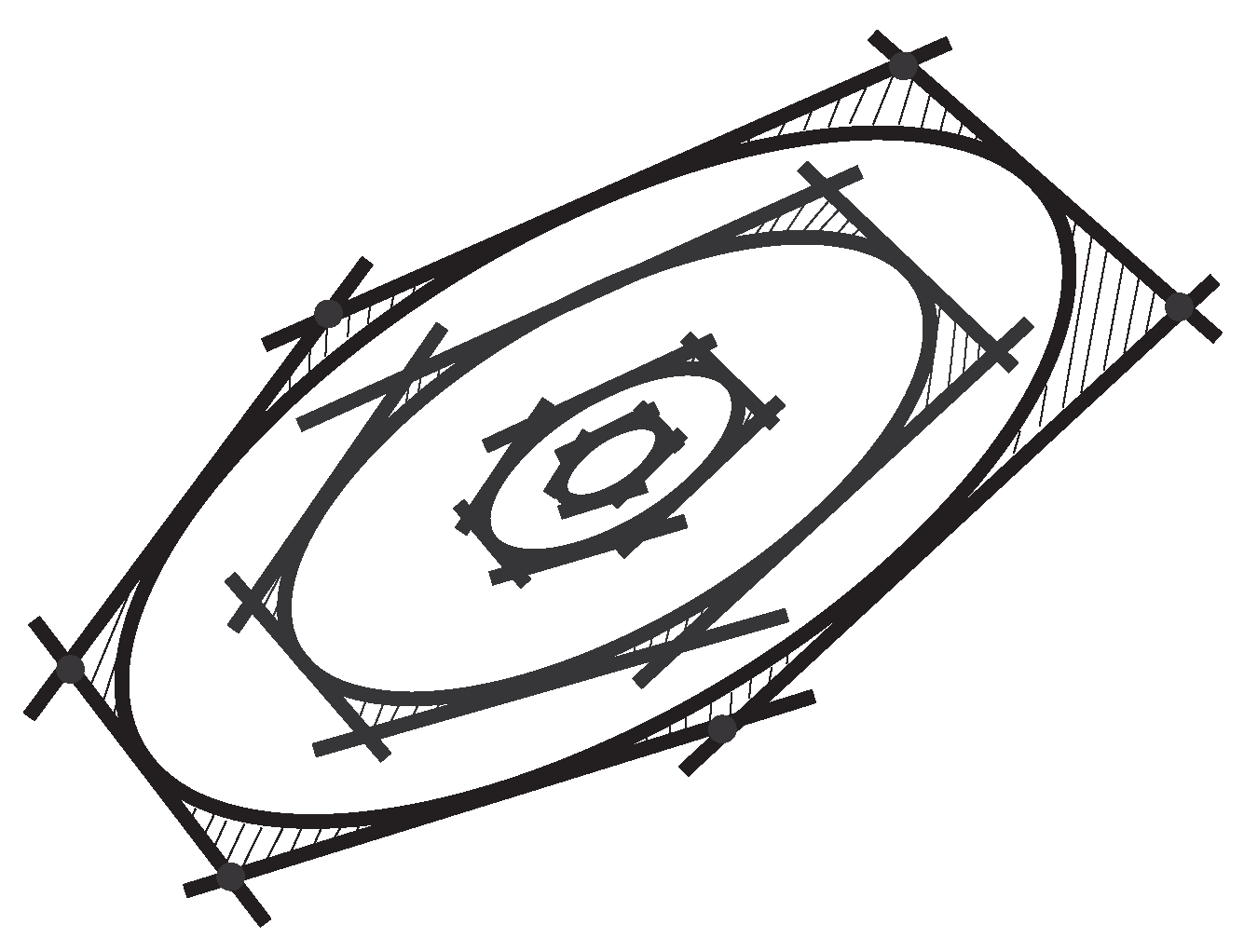

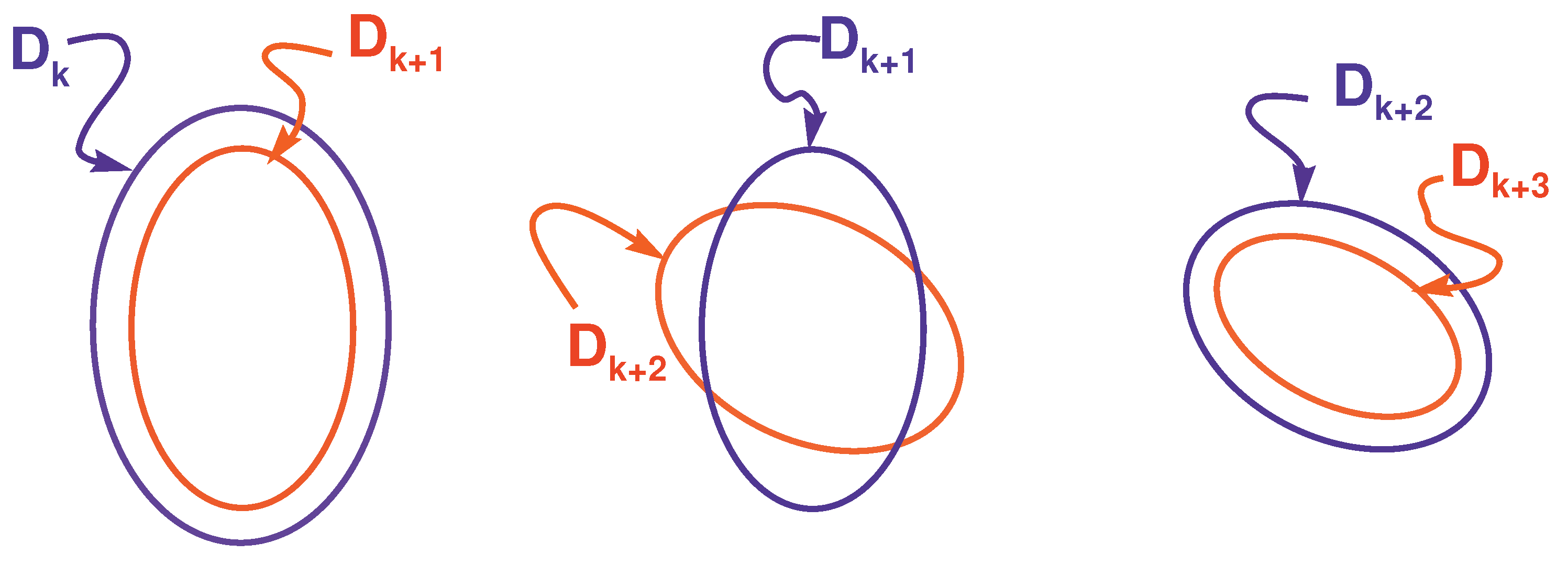

8. RODD-Hybrid

8.1. RODD-EB Mode

8.2. Transition from RODD-EB to RODD-LB Mode

8.3. RODD-LB2 Mode

8.4. Transition from RODD-LB to RODD-EB Mode

9. Discussion

9.1. Examples

9.2. Comparing Performance

10. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

- Hu, S.; Liu, Y.; Liu, Z.; Chen, T.; Wang, J.; Yu, Q.; Deng, L.; Yin, Y.; Hosaka, S. Associative memory realized by a reconfigurable memristive Hopfield neural network. Nat. Commun. 2015, 6, 7522.

- Lin, H.; Wang, C.; Cui, L.; Sun, Y.; Zhang, X.; Yao, W. Hyperchaotic memristive ring neural network and application in medical image encryption. Nonlinear Dyn. 2022, 110, 841–855.

- Horn, J.; De Jesus, O.; Hagan, M.T. Spurious Valleys in the Error Surface of Recurrent Networks—Analysis and Avoidance. IEEE Trans. Neural Netw. 2009, 20, 686–700.

- Suykens, J.A.K.; De Moor, B.L.; Vandewalle, J. NLq Theory: A Neural Control Framework with Global Asymptotic Stability Criteria. Neural Netw. 1997, 10, 615–637.

- Phan, M.; Hagan, M. Error surface of recurrent neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 1709–1721.

- Suykens, J.A.K.; De Moor, B.L.; Vandewalle, J. Artificial Neural Networks for the Modeling and Control of Nonlinear Systems; Kluwer: Boston, MA, USA, 1996.

- Tanaka, K. An Approach to Stability Criteria of Neural-Network Control Systems. IEEE Trans. Neural Netw. 1996, 7, 629–642.

- Barabanov, N.E.; Prokhorov, D.V. Stability Analysis of Discrete-Time Recurrent Neural Networks. IEEE Trans. Neural Netw. 2002, 13, 292–303.

- Barabanov, N.E.; Prokhorov, D.V. A New Method for Stability of Nonlinear Discrete-Time Systems. IEEE Trans. Autom. Control 2003, 48, 2250–2255.

- De Jesus, O.; Hagan, M. Backpropagation algorithms for a broad class of dynamic networks. IEEE Trans. Neural Netw. 2007, 18, 14–27.

- Jafari, R. Stability Analysis of Recurrent Neural-Based Controllers. Ph.D. Dissertation, Oklahoma State University, Stillwater, OK, USA, 2012.

- Khalil, H.K. Nonlinear Systems; Prentice Hall: Hoboken, NJ, USA, 2001.

- Jafari, R.; Hagan, M. Global stability analysis using the method of reduction of dissipativity domain. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 2550–2556.

- Buck, R.; Buck, E. Advanced Calculus, 3rd ed.; McGraw-Hill: New York, NY, USA, 1978.

- Kleder, M. Constraints to Vertices; Delta Epsilon Technologies, LLC: McLean, VA, USA, 2003.

- Jolliffe, I.T. Principal Component Analysis, 2nd ed.; Springer: New York, NY, USA, 2002.

- The MathWorks Inc. MATLAB, Version 7.10.0 (R2010a); The MathWorks Inc.: Natick, MA, USA, 2010.

| | RODD-LB2 | RODD-EB | RODD-Hybrid |
|---|---|---|---|
| Result | Stability detected | Stability detected | Stability detected |
| Runtime (s) | 302 | 282 | 282 |
| Improvement | 1.07x | Base | Base |

| | RODD-LB1 | RODD-LB2 | RODD-EB | RODD-Hybrid |
|---|---|---|---|---|
| Stable | Base | Improves by 16x | Improves by 75x | Improves by 95x |
| Unstable | Base | Improves by 16x | Improves by 190x | Improves by 112x |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jafari, R. Stability Analysis of Recurrent-Neural-Based Controllers Using Dissipativity Domain. Mathematics 2023, 11, 3050. https://doi.org/10.3390/math11143050
