1. Introduction

Rapid urbanization and population growth pose significant challenges to urban transportation systems. Traffic flow prediction, an essential component of intelligent transportation systems (ITS) [1], aims to predict the future state of urban transportation systems (e.g., traffic flow, speed, and passenger demand). As a foundation of ITS, traffic flow prediction has attracted significant attention in deep learning research [2]. Accurate traffic flow prediction is valuable for optimizing traffic management [3], reducing congestion and vehicle emissions, improving traffic safety and the urban environment, and promoting economic development [4].

Traffic flows on interconnected roads are closely related because of the complex, dynamic connectivity of the road network in the spatial dimension [5]. For example, traffic congestion usually propagates over time from one road node to its neighboring streets and even to more distant connected road segments [6]. Consequently, the observations at a roadway observation point depend not only on that point's own historical data but also on the historical traffic data of its neighboring observation points [7,8]. However, most current spatial modeling approaches assume that spatial correlations are constant, an assumption that often does not hold in practice.

Traffic flows at the observed nodes in each road network show highly dynamic and complex patterns of spatial–temporal correlation [

9]. In addition, the degree of relationship between the observation nodes changes dynamically throughout the day as time changes [

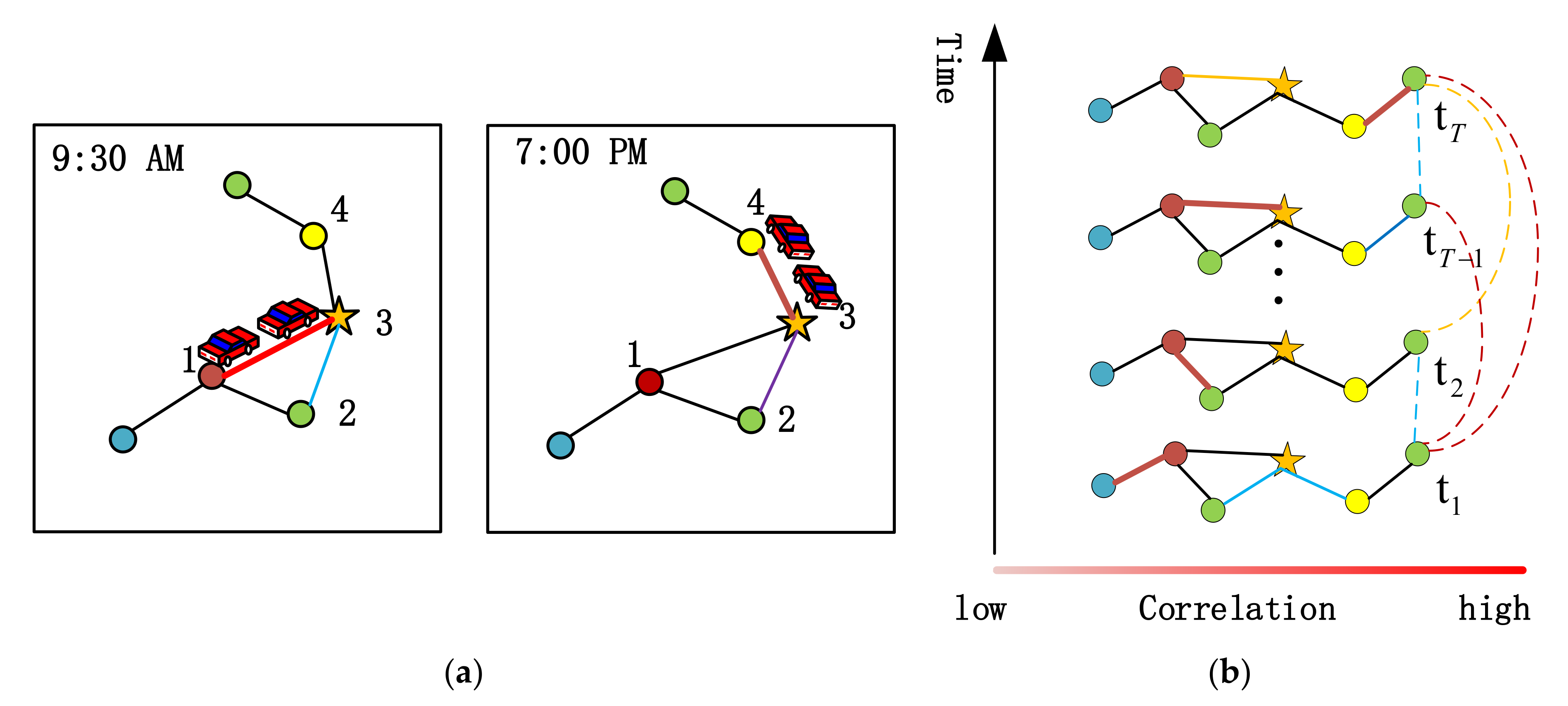

6]. As shown in

Figure 1a, at 9:30 AM, more traffic is observed between node 1 and node 3, indicating a strong correlation between the two points. However, as time changes, there is a substantial correlation between observation node 3 and node 4 at 7:00 PM, and the correlation between nodes 1 and 3 decreases. In addition, the observed values at the exact location at different moments show nonlinear variations, and the traffic state at far time steps sometimes significantly impacts the observed time points more than at similar time steps. As shown in

Figure 1b, the correlation degree between the observed values at moment

and moment

is more significant than between moment

and moment

. Therefore, it shows that the traffic data has spatial–temporal dynamics and keeps changing with time and space. In addition, the degree of influence of different locations on the predicted position changes dynamically with time [

10].

A static adjacency matrix cannot reflect complex and hierarchical urban traffic flow characteristics [

11]. For example, in graph convolutional neural network (GCN)-based prediction models [

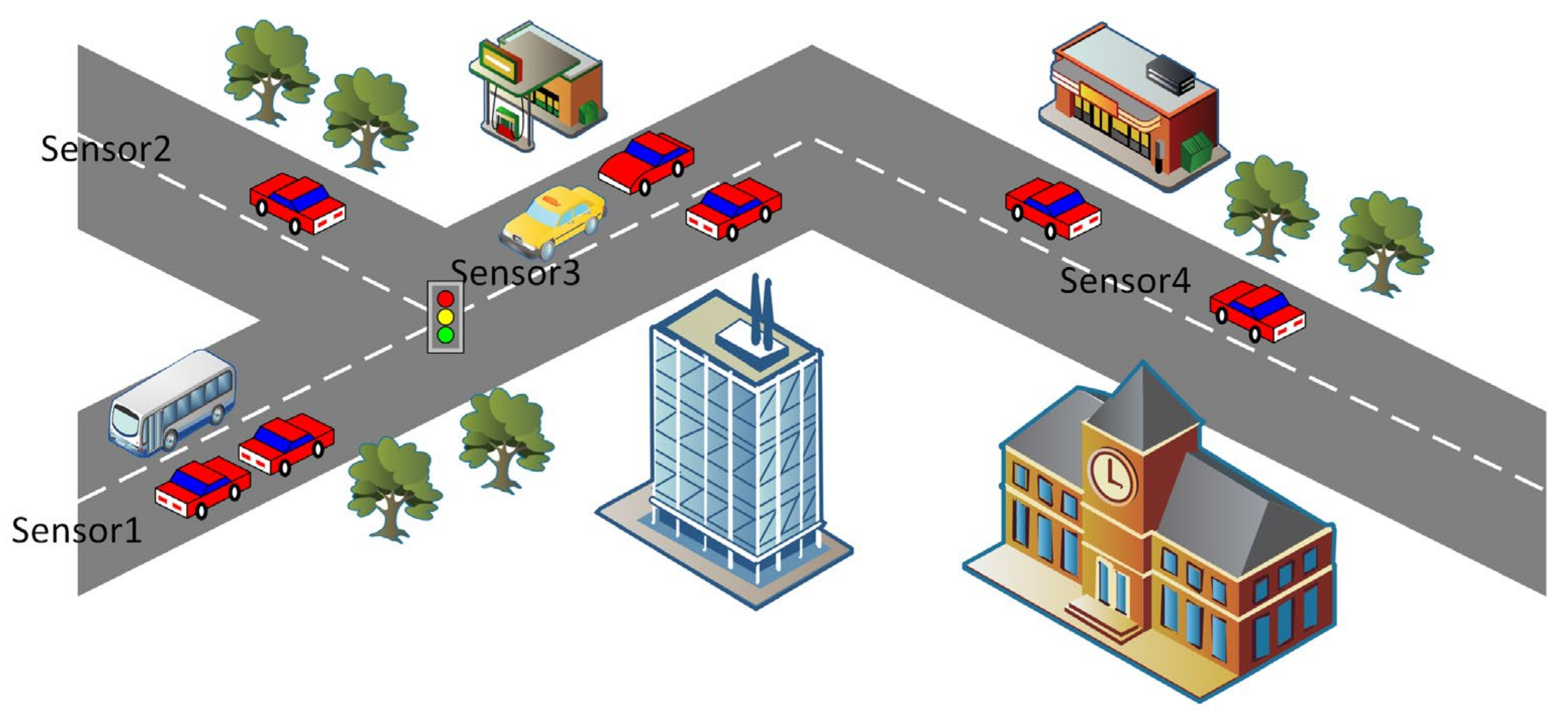

12], the spatial architecture of the road network is represented as a predefined adjacency matrix. However, in real applications, the traffic signal over a while can be decomposed into a smooth component determined by the road topology (connectivity and distance of sensors at observation points) and a dynamic component determined by the real-time traffic state and unexpected events. As shown in

Figure 2 the traffic flow is cross-regional, and a change in traffic flow in one region inevitably leads to a change in another region. Traffic flow in the Sensor 1 region changes over time, gradually flowing to the Sensor 3 region over a more extended period, possibly to the Sensor 2 region. Therefore, the hidden dynamic spatial–temporal relationship between regions must be considered simultaneously when spatial–temporal modeling. Considering the complex dynamical characteristics of urban traffic flow, existing GNN methods use predefined adjacency matrices to learn the spatial correlations between different observed nodes, which inevitably limits the model to learning the spatial–temporal characteristics of urban traffic dynamics. However, most current works must integrate them while maintaining efficiency and avoiding over-smoothing.

Because graph neural networks (GNNs) can capture spatial correlations in non-Euclidean traffic flow data [13], they can effectively characterize geospatial correlation features. With the rapid development of deep learning, GNNs have become a frontier of deep learning research [4] and a popular method for traffic flow prediction, e.g., STGCN [14], STSGCN [15], and LSGCN [16]. However, these methods cannot effectively capture the dynamic spatial correlations in the traffic system. Existing GNN-based methods [17,18,19] are usually built on static adjacency matrices (either predefined or self-learned) to learn the spatial correlations between sensors, even though the influence between two sensors can change dynamically. Although Guo [20] uses self-attention to dynamically compute the correlations of all sensor pairs, the quadratic computational complexity of this approach limits its application to large-scale graphs.
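The quadratic cost mentioned above comes from scoring every pair of sensors. The minimal Python sketch below illustrates generic scaled dot-product attention over hypothetical per-sensor embedding vectors (`attention_weights` and the toy embeddings are illustrative names, not part of Guo's [20] model); it is meant only to show where the N × N cost arises.

```python
import math


def attention_weights(embeddings):
    """Softmax-normalized scaled dot-product scores for every ordered sensor pair.

    embeddings: list of N vectors (one per sensor), each of length d.
    Returns an N x N matrix whose rows sum to 1; building it costs O(N^2 * d).
    """
    n = len(embeddings)
    scale = 1.0 / math.sqrt(len(embeddings[0]))
    weights = []
    for i in range(n):
        # Score sensor i against all N sensors (including itself).
        logits = [scale * sum(a * b for a, b in zip(embeddings[i], embeddings[j]))
                  for j in range(n)]
        peak = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(x - peak) for x in logits]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings for 3 sensors
w = attention_weights(emb)
print(sum(len(row) for row in w))  # 9 pairwise weights for 3 sensors
```

With N sensors the weight matrix has N² entries, so doubling the number of sensors roughly quadruples both memory and computation.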

Most current research builds models on predefined static graphs and therefore fails to consider the dynamic characteristics of traffic flow data. Since traffic data exhibit strong dynamic correlations in the spatio-temporal dimension, mining nonlinear and complex spatio-temporal relationships is an important research topic. On the one hand, methods that use static adjacency matrices in graph convolutional networks need to be improved to reflect the dynamic spatial correlations in traffic systems. On the other hand, most current methods ignore the hidden, evolving dynamic correlations between road network nodes.

To solve the above problems, we propose a new traffic prediction framework, the spatio-temporal dynamic graph differential equation network (ST-DGDE). Static distance-based graphs and dynamic attribute-based graphs describe the topology of traffic networks from different perspectives, and integrating them can give models a broader view for capturing spatial dependencies [9]. The contributions of this paper are as follows:

We extract dynamic features in node attributes using dynamic filters generated by the dynamic graph learning layer at each time step and fuse the dynamic graph generated by node embedding filtering with the predefined static graph. The dynamic graph learning layer is then employed to capture the dynamic graph topology of spatial nodes as they change over time.

To effectively capture the dynamic spatio-temporal correlations in traffic road networks, this paper uses neural graph differential equations (NGDEs) to learn the hidden dynamic spatio-temporal correlations in both the spatial and temporal dimensions. A temporal causal convolutional network and the NGDEs are interleaved to model the multivariate dynamic time series in the latent space. Extensive experiments on real road traffic datasets show that our proposed algorithm outperforms several baselines, including state-of-the-art algorithms.

The rest of the paper is organized as follows. Section 2 provides a comprehensive overview of related work on traffic forecasting. Section 3 elaborates on the problem definition of traffic forecasting and states the study's objectives. Section 4 details the general framework of ST-DGDE and its specific solutions. In Section 5, we design several experiments to evaluate our model. Section 6 summarizes our work and directions for future research.

3. Preliminaries

In this section, we describe some of the essential elements of urban flow and define the problem of urban flow prediction.

Definition 1 (Static adjacency graph). A graph $G = (V, E)$ is defined by its set of vertices $V$, with $|V| = N$, and the set of edges $E$ between them. In traffic flow prediction problems, the relationship between nodes is usually characterized by an adjacency matrix $A \in \mathbb{R}^{N \times N}$, whose elements are defined by the edge set: $A_{ij} = 1$ if $(v_i, v_j) \in E$, and $A_{ij} = 0$ otherwise.
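Definition 1 can be made concrete with a short sketch. Because the static graph in ST-DGDE is built from geographic distances but the weighting formula is not given here, the second function assumes a thresholded Gaussian kernel, a common choice in the traffic forecasting literature; `sigma`, `threshold`, and the toy distances are hypothetical.

```python
import math


def adjacency_from_edges(num_nodes, edges):
    """Binary adjacency: A[i][j] = 1 if (v_i, v_j) is in the edge set E, else 0."""
    A = [[0.0] * num_nodes for _ in range(num_nodes)]
    for i, j in edges:
        A[i][j] = 1.0
        A[j][i] = 1.0  # assumption: road links are treated as undirected
    return A


def gaussian_kernel_adjacency(dist, sigma, threshold=0.1):
    """Distance-weighted adjacency exp(-d_ij^2 / sigma^2), sparsified below `threshold`.

    dist[i][j] is the road distance between sensors i and j (math.inf if not connected).
    """
    n = len(dist)
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if math.isinf(dist[i][j]):
                continue  # unconnected sensors keep weight 0
            w = math.exp(-(dist[i][j] ** 2) / sigma ** 2)
            A[i][j] = w if w >= threshold else 0.0
    return A


A_bin = adjacency_from_edges(3, [(0, 1), (1, 2)])
dist = [[0.0, 1.0, math.inf], [1.0, 0.0, 2.0], [math.inf, 2.0, 0.0]]
A_w = gaussian_kernel_adjacency(dist, sigma=2.0)
print(A_bin[0])  # [0.0, 1.0, 0.0]
```

Closer sensors receive weights near 1, and the threshold keeps the matrix sparse so that graph operations stay cheap.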

Definition 2 (Spatio-temporal dynamic graph). The correlation between road observation points is dynamic and varies from morning to evening. This dynamic nature of traffic flow data motivates us to construct a dynamic graph whose nodes are road segments but whose edge properties differ between time points. For time slot $t$, the traffic graph is denoted as $G_t = (V, E_t)$, where $V$ is the set of nodes and $E_t$ is the set of edges; in $E_t$, $w_{ij}^{t}$ denotes the weight between node $i$ and node $j$ in time slot $t$. Accordingly, $G_t$ can be represented as a matrix $A_t \in \mathbb{R}^{N \times N}$ with $(A_t)_{ij} = w_{ij}^{t}$.

Definition 3 (Traffic flow prediction problem). The spatio-temporal traffic flow prediction target can be described as follows: given the observations of all $N$ vertices of the traffic network over the past $P$ time steps, $\mathcal{X} = (X_{t-P+1}, \dots, X_{t})$, a function $f$ is learned from these observations to predict the traffic flow conditions of all vertices of the road network for the next $Q$ time steps, denoted as $\hat{\mathcal{Y}} = (\hat{X}_{t+1}, \dots, \hat{X}_{t+Q}) = f(\mathcal{X})$.
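The prediction setting above is usually turned into training samples with a sliding window. The sketch below assumes a history length `p` and a horizon `q` (hypothetical names); for example, `p = q = 12` would cover one hour of 5-minute steps.

```python
def make_windows(series, p, q):
    """Split a time-ordered list of snapshots into (history, target) pairs.

    series: list of T snapshots (e.g., one list of N sensor readings per 5-min step).
    p: number of historical steps fed to the model.
    q: number of future steps to predict.
    """
    samples = []
    for start in range(len(series) - p - q + 1):
        history = series[start:start + p]
        target = series[start + p:start + p + q]
        samples.append((history, target))
    return samples


# Toy series of 6 steps for 2 sensors; use 3 steps to predict the next 2.
series = [[t, 10 * t] for t in range(6)]
pairs = make_windows(series, p=3, q=2)
print(len(pairs))   # 2
print(pairs[0][1])  # [[3, 30], [4, 40]]
```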

4. Methodology

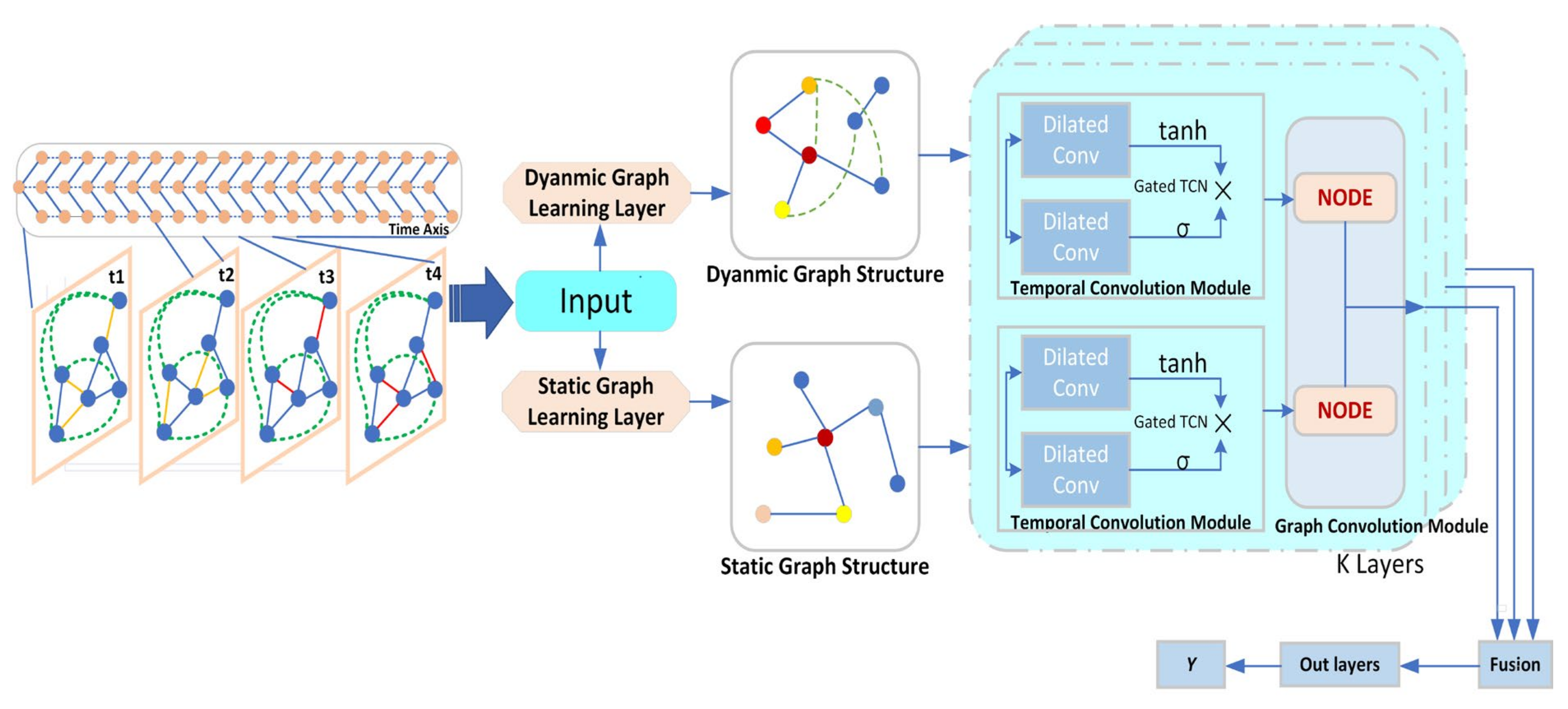

To address the spatio-temporal dynamics of traffic flow, we propose the spatio-temporal dynamic graph ordinary differential equation network (ST-DGDE) framework, as shown in Figure 3. The framework consists of four main modules: a dynamic graph learning layer (DGL), a static graph learning layer (SGL), a temporal convolution module (gated TCN), and a dynamic neural graph differential equation (DNGDE) module. The dynamic graph learning layer extracts the dynamic spatial graph structure hidden in the temporal traffic data and constructs the corresponding dynamic graph. A static adjacency matrix is also constructed from the geographic distances between spatial nodes to provide auxiliary information. The dynamic and static graphs are then fed into the dynamic neural graph ordinary differential equation module in parallel. Because the distance-based static graph and the node attribute-based dynamic graph reflect the correlations between nodes from different perspectives, combining the dynamic graph with the predefined graph gives the model a broader view of the traffic network and thus improves traffic prediction performance.

In this paper, we propose a spatial dynamic neural graph differential equation network (GDE) combined with a graph learning model to learn spatial–temporal dynamic correlations across long continuous time series. This design alleviates the reliance on prior knowledge of a static graph and mitigates the over-smoothing problem that commonly arises when GNNs are stacked into deep networks. First, the model learns fine-grained continuous temporal dynamics between time series through the temporal causal convolution (TCN) and the dynamic graph learning layer (DGL) in the middle part of the framework; then, the long-range temporal information extracted by the temporal convolution module is fed into the differential equation module to learn the hidden dynamic temporal dependencies in the continuous time series. Finally, the output of each layer's dynamic graph differential equation module is used as the input of the next layer's module, and the iteratively fused results are used as the output.

4.1. Dynamic Graph Learning Layer

Because of the dynamic nature of traffic road networks, the spatial dependencies between road segments change over time. Therefore, in this section, dynamic graph constructors are used to capture the changing relationships between nodes, including unstructured patterns hidden in the graph. Since the correlations between nodes in a traffic network change over time, simply applying a GCN to the network cannot capture these dynamics. For this reason, this section employs a dynamic graph learning layer (DGL) that adaptively adjusts the correlation strength between nodes. The output vectors of the graph learning layer lie in a continuous space, and their goal is to extract the desired features of the graph.

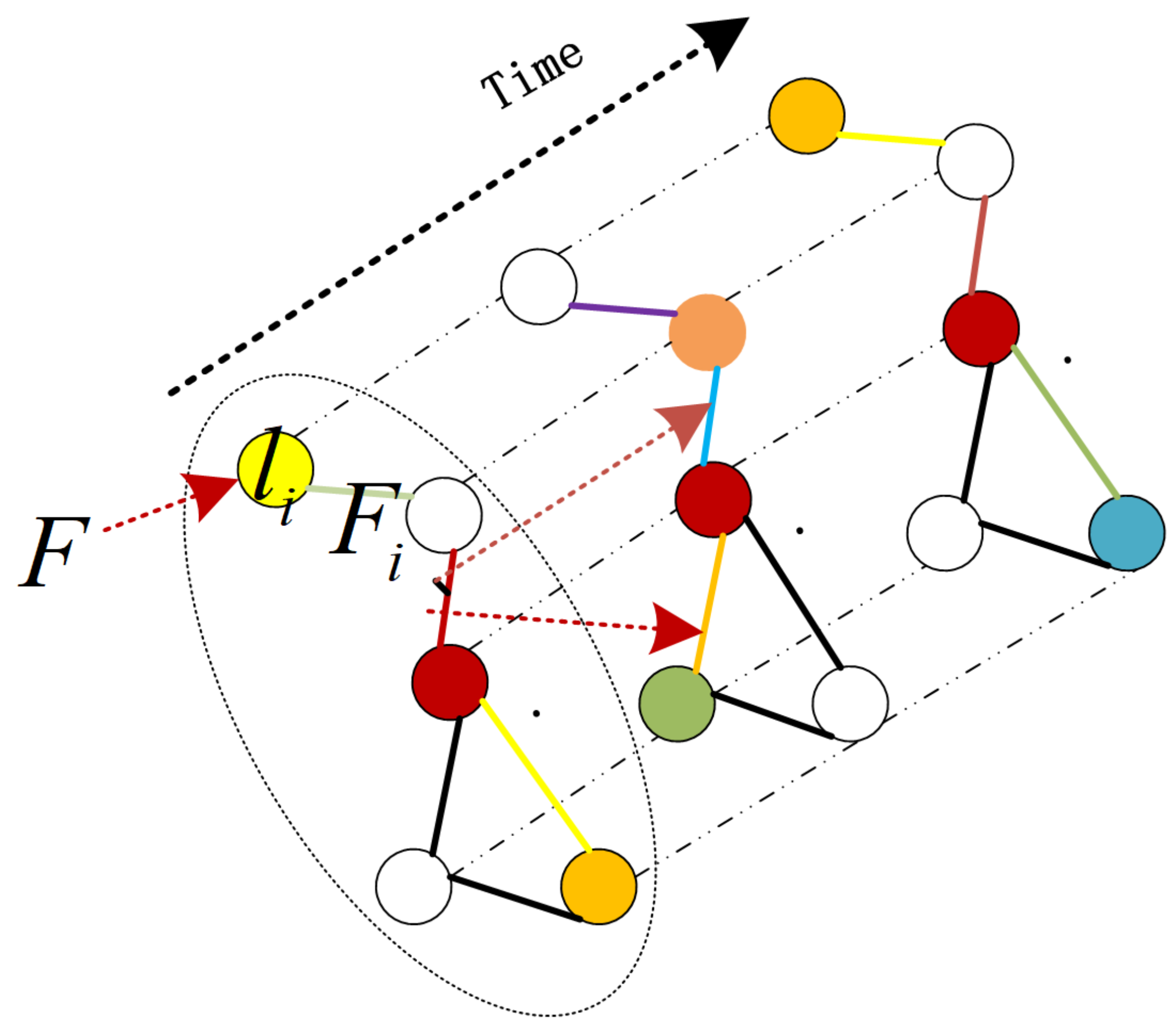

Figure 4 shows that each node is associated with an input label. As time evolves, the correlations between nodes in the spatial graph generated at each time point change, and the feature representations of the same observed node at different time points also differ. Therefore, the label corresponding to node $v$ can be denoted as $l_v$. For the dynamic spatial information propagation process, the input graph features are denoted as $X = \{x_v\}$, where $x_v$ is the feature associated with node $v$, and the output feature of the spatial information graph learner at the next moment is denoted as $h_v^{t+1}$. Equation (3) describes the information propagation process of node $v$.

In Equation (3), $f_w$ represents a function with parameters $w$, called the local transformation function, which is spatially localized; that is, the information transfer process of node $v$ involves only its 1-hop neighbors. When performing the filtering operation, all nodes in the graph share the function $f_w$. Note that the node label information can be considered fixed initial input information for the filtering process.
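The following sketch illustrates the kind of propagation step described for Equation (3): one local function, shared by all nodes, that combines each node's fixed label with information from its 1-hop neighbors. Since Equation (3) itself is not reproduced here, the scalar states, mean aggregation, and tanh of a weighted sum are all hypothetical choices.

```python
import math


def propagate(labels, states, neighbors, w_self, w_nbr):
    """One synchronous step h_v^{t+1} = f(l_v, mean of h_u^t over the 1-hop neighbors u of v).

    The same f (here tanh of a weighted sum, a hypothetical choice) is shared by all
    nodes, and the labels l_v act as fixed inputs that never change between steps.
    """
    new_states = []
    for v in range(len(states)):
        nbrs = neighbors[v]
        agg = sum(states[u] for u in nbrs) / len(nbrs) if nbrs else 0.0
        new_states.append(math.tanh(w_self * labels[v] + w_nbr * agg))
    return new_states


labels = [1.0, 0.0, -1.0]                  # fixed node labels l_v
neighbors = {0: [1], 1: [0, 2], 2: [1]}    # 1-hop neighborhoods of a 3-node path
h = [0.0, 0.0, 0.0]                        # initial states
for _ in range(3):
    h = propagate(labels, h, neighbors, w_self=0.5, w_nbr=0.8)
print([round(x, 3) for x in h])
```

Because `propagate` only reads `neighbors[v]`, each update is spatially localized, and stacking steps lets information travel one hop further per step.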

Static distance-based graphs and dynamic node attribute-based graphs reflect the correlations between nodes from different perspectives. To broaden the applicability of the traffic network model, this section deploys the graph learning module by combining dynamic graphs with predefined graphs, and the underlying topology is obtained dynamically by the graph constructor. The result is then fed into a gated temporal convolutional network, which learns long-range spatial dynamic features by dynamically extracting continuous and more distant message-passing graph structures, thus improving traffic prediction performance. In addition, the convolutional structure of the TCN combines historical information with the current moment through a flexible information aggregation approach to extract the temporal and structural information in the dynamic graph, which also unifies temporal and spatial convolution from another perspective.
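The text does not give a formula for fusing the node-embedding-based dynamic graph with the predefined static graph. One plausible reading, sketched below under stated assumptions, builds a dynamic adjacency as softmax(ReLU(E Eᵀ)) from time-step-specific node embeddings E (similar in spirit to Graph WaveNet's self-adaptive adjacency, which uses two embedding matrices) and mixes it with a row-normalized static adjacency through a scalar weight `alpha`, which a trained model would learn. All names and values are hypothetical.

```python
import math


def dynamic_adjacency(node_emb):
    """Row-wise softmax(ReLU(E E^T)) built from node embeddings E of one time step."""
    n = len(node_emb)
    A = []
    for i in range(n):
        # ReLU keeps only positive affinities before normalization.
        logits = [max(0.0, sum(a * b for a, b in zip(node_emb[i], node_emb[j])))
                  for j in range(n)]
        peak = max(logits)
        exps = [math.exp(x - peak) for x in logits]
        total = sum(exps)
        A.append([e / total for e in exps])
    return A


def fuse_graphs(static_adj, dyn_adj, alpha):
    """Convex combination alpha * A_static + (1 - alpha) * A_dynamic."""
    return [[alpha * s + (1.0 - alpha) * d for s, d in zip(row_s, row_d)]
            for row_s, row_d in zip(static_adj, dyn_adj)]


static = [[0.5, 0.5, 0.0], [1 / 3, 1 / 3, 1 / 3], [0.0, 0.5, 0.5]]  # row-normalized
emb_morning = [[1.0, 0.2], [0.9, 0.1], [-0.5, 1.0]]  # hypothetical embeddings at t1
emb_evening = [[-0.3, 1.0], [0.8, 0.8], [0.7, 0.9]]  # hypothetical embeddings at t2
A_t1 = fuse_graphs(static, dynamic_adjacency(emb_morning), alpha=0.5)
A_t2 = fuse_graphs(static, dynamic_adjacency(emb_evening), alpha=0.5)
print(A_t1 != A_t2)  # True: the fused graph changes with the time step
```

Keeping both inputs row-normalized means the fused graph is also row-normalized, so it can be used directly for neighbor averaging.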

4.2. Neural Graph Differential Equations (Neural GDEs)

In conventional neural networks such as residual networks, the hidden state is updated by a series of discrete transformations:

$$h_{t+1} = h_t + f(h_t, \theta_t),$$

where $f$ is the layer-$t$ neural network with parameters $\theta_t$ and $t \in \{0, \dots, T\}$, so that $t$ can also be interpreted as a time coordinate: the data input $x = h_0$ at moment $0$ is transformed into the output $h_T$ at moment $T$.
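A residual update of this form is an explicit Euler step with step size 1. The sketch below, with a hypothetical linear layer `f`, shows that shrinking the step and stacking more residual blocks approaches the continuous-time solution, which is the intuition behind neural ODEs.

```python
def residual_block(h, f, step=1.0):
    """Discrete update h_{t+1} = h_t + step * f(h_t); step=1 gives a plain ResNet block."""
    return [hi + step * fi for hi, fi in zip(h, f(h))]


def f(h):
    # Hypothetical "layer": the linear decay dh/dt = -0.5 h.
    return [-0.5 * x for x in h]


# 10 residual blocks with step 0.1 approximate the ODE from t = 0 to t = 1.
h = [2.0]
for _ in range(10):
    h = residual_block(h, f, step=0.1)
print(round(h[0], 3))  # 1.197 (exact ODE solution: 2 * exp(-0.5) ≈ 1.213)
```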

Traffic flow time series consist of a sparse set of measurements (the dataset aggregates data into five-minute periods), so these measurements can be interpreted in terms of many kinds of latent dynamic processes. Although neural process (NP) [35] models provide uncertainty estimates and can adapt to data quickly, they cannot capture dynamic time series features. Therefore, to obtain a dynamic representation of continuous time series, we adopt neural ODE processes (NDPs) [35], a class of stochastic processes determined by a distribution over neural ODEs. By maintaining an adaptive, data-dependent distribution over the underlying ODEs, NDPs can capture the dynamics of low-dimensional systems from spatiotemporal data. Meanwhile, ordinary differential equations (ODEs) can effectively capture the underlying spatial–temporal dynamics and overcome the over-smoothing and over-squashing problems of graph neural networks, which makes it possible to build deep neural network models [36] in which the traffic flow time series is represented as an ordinary differential equation (ODE).

Given the initial state $h(t_0)$ of the traffic flow, the input data are modeled through the rate of change

$$\frac{dh(t)}{dt} = f(h(t), t, \theta), \tag{6}$$

so that the ODE describes the evolution of the state $h(t)$ of a given dynamic system over time $t$. As shown in Equation (6), when $f$ is a neural network, the equation is called a neural ordinary differential equation (neural ODE). Transforming the neural ODE into integral form gives

$$h(t_1) = h(t_0) + \int_{t_0}^{t_1} f(h(t), t, \theta)\, dt.$$
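In practice, the integral form is evaluated by a numerical ODE solver. As a minimal illustration (not necessarily the solver used by ST-DGDE, which is not specified here), the fixed-step classical Runge–Kutta method below approximates h(t₁) from h(t₀); note that the hypothetical `f` takes `(t, h)` in that order. Libraries such as torchdiffeq provide adaptive solvers for the same task.

```python
import math


def rk4(f, h0, t0, t1, steps):
    """Approximate h(t1) = h(t0) + integral of f(t, h(t)) dt with fixed-step classic RK4."""
    dt = (t1 - t0) / steps
    t, h = t0, list(h0)
    for _ in range(steps):
        k1 = f(t, h)
        k2 = f(t + dt / 2, [x + dt / 2 * k for x, k in zip(h, k1)])
        k3 = f(t + dt / 2, [x + dt / 2 * k for x, k in zip(h, k2)])
        k4 = f(t + dt, [x + dt * k for x, k in zip(h, k3)])
        h = [x + dt / 6 * (a + 2 * b + 2 * c + d)
             for x, a, b, c, d in zip(h, k1, k2, k3, k4)]
        t += dt
    return h


# Sanity check on dh/dt = -h, whose exact solution is h(1) = h(0) * e^{-1}.
approx = rk4(lambda t, h: [-x for x in h], [1.0], 0.0, 1.0, steps=20)
print(abs(approx[0] - math.exp(-1)) < 1e-6)  # True
```

In a neural ODE, `f` would be a neural network, and the solver output at `t1` plays the role of the network's final hidden state.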

CGNN [37] first extended ordinary differential equations to graph-structured data by employing a continuous message-passing layer that characterizes the dynamic representations of nodes, thereby enabling the construction of deep networks. Inspired by this work, we construct the neural graph differential equation network module shown in Figure 5 by fusing graph neural networks with ordinary differential equations based on the dynamic network properties of traffic data.

Figure 5 shows an example of an ODE solver connecting the observed location graphs to the prediction graphs. The information on the dynamic changes in the road network over the observed time span is first learned by the observation function, and the feature maps sampled at each time point are mapped into the latent space by a neural encoder and aggregated into a single representation. This representation is then decomposed into the uncertainty of the dynamic graph changes and the uncertainty of the ODE derivatives. The ODE solver defines the continuous dynamics of the node representations, so that the dynamic long-term dependencies between nodes can be modeled efficiently. Since the nodes in the graph are interconnected, the ODE solver considers the structural information of the graph and allows information to propagate between nodes. The propagation is given by Equation (7).

In Equation (7), $H_n$ is the spatial embedding matrix of all nodes at time step $n$. Intuitively, at stage $n$ each node learns information from its neighbors through $\hat{A} H_n$ and remembers its original node features through $H_0$. This allows the graph structure to be learned dynamically without forgetting the original node features.
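The sketch below assumes the CGNN-style form [37] that matches this description: a discrete update H_{n+1} = Â H_n + H_0, where Â is a normalized adjacency matrix, and its simplified continuous analogue dH/dt = (Â − I) H + H_0, integrated here with explicit Euler steps. It is an illustration in plain Python lists, not the exact form of Equation (7).

```python
def matmul(A, B):
    """Plain-Python matrix product of an (n x m) and an (m x p) list-of-lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def discrete_step(A_hat, H, H0):
    """H_{n+1} = A_hat H_n + H_0: aggregate from neighbors, then re-inject original features."""
    AH = matmul(A_hat, H)
    return [[a + b for a, b in zip(row_a, row_0)] for row_a, row_0 in zip(AH, H0)]


def euler_graph_ode(A_hat, H0, t_end, steps):
    """Explicit-Euler solution of dH/dt = (A_hat - I) H + H_0 from t = 0 to t_end."""
    dt = t_end / steps
    H = [row[:] for row in H0]
    for _ in range(steps):
        AH = matmul(A_hat, H)
        H = [[h + dt * (ah - h + h0) for h, ah, h0 in zip(row_h, row_ah, row_h0)]
             for row_h, row_ah, row_h0 in zip(H, AH, H0)]
    return H


A_hat = [[0.5, 0.5], [0.5, 0.5]]     # toy normalized adjacency for 2 nodes
H0 = [[1.0], [3.0]]                  # one feature per node
print(discrete_step(A_hat, H0, H0))  # [[3.0], [5.0]]
H_T = euler_graph_ode(A_hat, H0, t_end=1.0, steps=50)
```

The `+ h0` term is what keeps the original features in the dynamics; without it, repeated neighbor averaging would drive all nodes toward the same value (over-smoothing).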

Given the initial time $t_0$ and the target time $T$, the neural graph differential equation network (neural GDE) predicts the corresponding state $\hat{Y}$ by performing the following encoding, integration, and decoding operations:

$$H(t_0) = h_x(X), \qquad H(T) = H(t_0) + \int_{t_0}^{T} F_{G}(t, H(t), \Theta)\, dt, \qquad \hat{Y} = h_y\big(H(T)\big),$$

where $F_G$ is a depth-varying vector field defined on graph $G$, i.e., the parameterized velocity of the state $H$ at time $t$, and $h_x$ and $h_y$ are two affine linear mapping functions for the encoder and decoder, respectively. The mapping function $h_x$ feeds the historical time series observations into the ODE solver to learn continuous dynamic temporal features, and the output of the ODE solver is then transformed into the predicted future traffic conditions by the mapping function $h_y$.
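The encode, integrate, and decode pipeline can be sketched as follows. Everything here is an assumption for illustration: the encoder and decoder are plain affine maps (`affine`), the graph vector field is a hypothetical `tanh(Â H W) − H` with a fixed weight matrix `W_field`, and the integral is approximated with explicit Euler steps.

```python
import math


def affine(X, W, b):
    """Row-wise affine map X W + b (plays the role of the encoder or the decoder)."""
    return [[sum(x[k] * W[k][j] for k in range(len(W))) + b[j] for j in range(len(b))]
            for x in X]


def graph_field(A_hat, H, W):
    """Hypothetical vector field F_G(H) = tanh(A_hat H W) - H defined on graph G."""
    AH = [[sum(A_hat[i][k] * H[k][j] for k in range(len(H))) for j in range(len(H[0]))]
          for i in range(len(H))]
    return [[math.tanh(sum(AH[i][k] * W[k][j] for k in range(len(W)))) - H[i][j]
             for j in range(len(W[0]))] for i in range(len(H))]


def neural_gde(X, A_hat, enc, W_field, dec, t0=0.0, T=1.0, steps=20):
    """Encode, integrate the graph ODE with explicit Euler, then decode."""
    H = affine(X, *enc)                          # H(t0) = encoder(X)
    dt = (T - t0) / steps
    for _ in range(steps):                       # H(T) = H(t0) + integral of F_G
        F = graph_field(A_hat, H, W_field)
        H = [[h + dt * f for h, f in zip(row_h, row_f)] for row_h, row_f in zip(H, F)]
    return affine(H, *dec)                       # Y_hat = decoder(H(T))


X = [[1.0, 0.5], [0.2, 0.3], [0.0, 1.0]]         # 3 nodes, 2 historical readings each
A_hat = [[0.5, 0.5, 0.0], [1 / 3, 1 / 3, 1 / 3], [0.0, 0.5, 0.5]]
enc = ([[0.1, 0.2], [0.3, -0.1]], [0.0, 0.0])    # 2 inputs -> 2 hidden units
W_field = [[0.5, -0.2], [0.1, 0.4]]
dec = ([[1.0], [0.5]], [0.1])                    # 2 hidden units -> 1 prediction
Y_hat = neural_gde(X, A_hat, enc, W_field, dec)
print(len(Y_hat), len(Y_hat[0]))  # 3 1
```

Setting `T` equal to `t0` skips the integration entirely, which is a quick way to check that the encoder and decoder are wired correctly.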

4.3. Gated Temporal Causal Convolutional Networks

Bai et al. [38] proposed the temporal convolutional network (TCN). They experimentally demonstrated that TCNs are effective in modeling long time series data and can capture long-range dependencies [39]. The TCN architecture is more generic, simpler, and clearer than LSTM and GRU architectures, so it may be a suitable starting point for applying deep networks to time series data.

The TCN has two distinctive features [40]. First, the convolutions in the architecture are causal, which prevents “leakage” of information from the future; moreover, the convolutions are computed layer by layer, with all time steps updated simultaneously, which improves the computational efficiency of the model. Second, like an RNN, the architecture can map an input sequence of arbitrary length to an output sequence of the same length. In addition, dilated convolutions can be used to construct very long effective histories, enlarging the model's receptive field.
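To see why dilation enlarges the receptive field, the sketch below computes how many past steps one output can see when each layer applies a single dilated causal convolution with kernel size k (an assumption; the residual blocks of Bai et al. contain two convolutions each): 1 + (k − 1) · Σ dᵢ over the layers' dilations dᵢ.

```python
def receptive_field(kernel_size, dilations):
    """Number of past input steps visible to one output of stacked dilated causal convs."""
    return 1 + (kernel_size - 1) * sum(dilations)


def dilated_causal_conv(x, weights, dilation):
    """y[t] = sum_i weights[i] * x[t - i*dilation]; indices before 0 act as zero padding."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            idx = t - i * dilation
            if idx >= 0:
                acc += w * x[idx]
        y.append(acc)
    return y


# Doubling dilations (1, 2, 4, 8) with kernel size 2 see 16 steps,
# i.e., 80 minutes of 5-minute traffic data, with only four layers.
print(receptive_field(2, [1, 2, 4, 8]))  # 16
```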

In sequence modeling, an input sequence $x_0, \dots, x_T$ is given, and the goal of the model is to predict the corresponding output $y_0, \dots, y_T$ at each time step:

$$\hat{y}_0, \dots, \hat{y}_T = f(x_0, \dots, x_T). \tag{11}$$

The model in Equation (11) satisfies the causal constraint if each output $\hat{y}_t$ depends only on the inputs $x_0, \dots, x_t$ and not on the future inputs $x_{t+1}, \dots, x_T$.

To keep the network's input and output lengths consistent, the TCN uses a 1D fully convolutional network (FCN) architecture in which each hidden layer has the same length as the input layer, and zero padding of length (kernel size − 1) is added to keep subsequent layers the same length as previous ones. To avoid future information “leakage,” the TCN uses causal convolutions, where the output at time $t$ is convolved only with elements from time $t$ and earlier in the previous layer. In short, the basic TCN architecture consists of a 1D FCN with causal convolutions. A significant drawback of this basic design is that a deep network is required to capture long time series information [41]. Therefore, to build deeper networks and capture long time series information, modern convolutional network architectures need to be integrated into the TCN.
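The zero padding and causality described above can be checked directly. The sketch below left-pads each causal convolution with (k − 1) · dilation zeros so that the output keeps the input length, and wraps two such convolutions in a gated activation tanh(filter) ⊙ sigmoid(gate). The gate form is an assumption borrowed from WaveNet-style gated TCNs, since the text does not spell out the gating equation; the weights are hypothetical.

```python
import math


def causal_conv(x, weights, dilation=1):
    """Left-pad with (k - 1) * dilation zeros so that len(output) == len(input)."""
    k = len(weights)
    padded = [0.0] * ((k - 1) * dilation) + list(x)
    # padded[t + (k - 1 - i) * dilation] == x[t - i * dilation]: only x[t] and earlier.
    return [sum(weights[i] * padded[t + (k - 1 - i) * dilation] for i in range(k))
            for t in range(len(x))]


def gated_tcn(x, w_filter, w_gate, dilation=1):
    """Gated activation tanh(filter conv) * sigmoid(gate conv), applied element-wise."""
    f = causal_conv(x, w_filter, dilation)
    g = causal_conv(x, w_gate, dilation)
    return [math.tanh(a) * (1.0 / (1.0 + math.exp(-b))) for a, b in zip(f, g)]


x = [0.1, 0.4, 0.35, 0.8, 0.6]
y = gated_tcn(x, [0.5, -0.2, 0.1], [0.3, 0.3, 0.3], dilation=2)
# Changing the last input must not change any earlier output (no leakage).
x_future = x[:-1] + [99.0]
y_future = gated_tcn(x_future, [0.5, -0.2, 0.1], [0.3, 0.3, 0.3], dilation=2)
print(len(y) == len(x), y[:-1] == y_future[:-1])  # True True
```

The sigmoid branch acts as a learned valve on the tanh branch, letting the network decide how much temporal information to pass to the next layer.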

4.4. Module Fusion Output

The ST-DGDE model framework contains one or more blocks, as shown in Figure 3. The computation of a network framework containing $L$ ST-DGDE blocks can be expressed as Equation (12), where $A$ and $X^{(0)}$ denote the adjacency matrix of the static graph and the initial node features of the dynamic graph, respectively, and $f^{(l)}$ denotes the learnable mapping function of the $l$-th layer of the network.

The output of each neural graph differential equation module is the input of the next consecutive block, as shown in Figure 3. When there is only one block, i.e., when $L = 1$, the ST-DGDE framework is flat and generates graph-level features directly from the original graph; when $L$ is greater than 1, the ST-DGDE blocks can be viewed as a hierarchical process that gradually summarizes node features into predictive graph features by generating smaller, coarsened graphs.

where $\odot$ is the Hadamard product, and the two learnable weight parameters reflect the degree of influence of the static and dynamic graphs on the prediction target. Finally, a two-layer MLP is designed as the output layer to convert the output of the max pooling layer into the final prediction.
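The fusion and output stages can be sketched as below, assuming element-wise learnable weights for the static and dynamic branches (`w_static` and `w_dynamic`, hypothetical names) and a ReLU hidden layer in the two-layer MLP. The max pooling step is omitted because its pooling axis is not specified.

```python
def hadamard_fuse(h_static, h_dynamic, w_static, w_dynamic):
    """Element-wise fusion w_static ⊙ H_static + w_dynamic ⊙ H_dynamic."""
    return [[ws * hs + wd * hd for hs, hd, ws, wd in zip(r_s, r_d, r_ws, r_wd)]
            for r_s, r_d, r_ws, r_wd in zip(h_static, h_dynamic, w_static, w_dynamic)]


def two_layer_mlp(x, W1, b1, W2, b2):
    """ReLU hidden layer followed by a linear output layer for one feature vector."""
    hidden = [max(0.0, sum(xi * W1[i][j] for i, xi in enumerate(x)) + b1[j])
              for j in range(len(b1))]
    return [sum(hj * W2[j][k] for j, hj in enumerate(hidden)) + b2[k]
            for k in range(len(b2))]


h_static = [[0.2, 0.4], [0.6, 0.1]]    # per-node features from the static-graph branch
h_dynamic = [[0.3, 0.1], [0.5, 0.9]]   # per-node features from the dynamic-graph branch
w_static = [[0.7, 0.7], [0.7, 0.7]]    # hypothetical learned weights
w_dynamic = [[0.3, 0.3], [0.3, 0.3]]
fused = hadamard_fuse(h_static, h_dynamic, w_static, w_dynamic)
W1, b1 = [[1.0, -0.5], [0.5, 1.0]], [0.0, 0.1]
W2, b2 = [[0.8], [0.4]], [0.05]
predictions = [two_layer_mlp(node, W1, b1, W2, b2) for node in fused]
```

Because the weights are element-wise rather than scalar, each node and feature can lean more on the static or the dynamic branch independently.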

5. Experiment


5.1. Datasets

As shown in Table 1, the PEMS04 and PEMS08 datasets used in the experiments were collected in real time every 30 s by the Caltrans Performance Measurement System (PeMS), which has over 39,000 detectors deployed on freeways in major California metropolitan areas. The collected traffic data are aggregated into 5-min time steps, giving 288 time steps per day. The PEMS04 dataset covers 59 days with 16,992 time steps in total, and the PEMS08 dataset covers 62 days with 17,856 time steps.

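We evaluate all methods with two metrics widely used to assess the accuracy of regression problems: the mean absolute error (MAE) and the root mean square error (RMSE). For both metrics, lower values are better. They are defined as

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2},$$

where $n$ is the number of predicted values, $y_i$ is the ground truth, and $\hat{y}_i$ is the prediction.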
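A direct Python translation of the two metrics is shown below as a sanity check; the traffic values are toy numbers, not results from the experiments.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error over paired ground-truth and predicted values."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error; squaring weights large errors more heavily than MAE."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


y_true = [100.0, 120.0, 90.0, 110.0]   # toy traffic flow values
y_pred = [98.0, 125.0, 90.0, 104.0]
print(mae(y_true, y_pred))             # 3.25
print(round(rmse(y_true, y_pred), 3))  # 4.031
```

RMSE is never smaller than MAE, and a large gap between the two indicates a few large errors rather than many small ones.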

5.2. Baseline Methods

To evaluate the performance of our model, we compare it with eight baselines.

VAR [8]: The vector autoregressive model is an unstructured system-of-equations model that captures the pairwise relationships between traffic flow time series.

SVR [10]: Support vector regression uses linear support vector machines to perform the regression task.

DCRNN [30] (Li et al. 2017): Uses bidirectional random walks on the graph to model spatial dependencies and gated recurrent units with integrated graph convolution to capture temporal dynamics.

STGCN [19]: Spatial–temporal graph convolutional network, which integrates graph convolution and gated temporal convolution into spatial–temporal convolutional blocks to capture spatial and temporal dependencies.

ASTGCN [38]: Attention-based spatial–temporal graph convolutional network, which uses spatial attention and temporal attention to model spatial and temporal dynamic information, respectively.

STSGCN [21]: Spatial–temporal synchronous graph convolutional network, which not only captures localized spatial–temporal correlations efficiently but also takes into account the heterogeneity of spatial–temporal data.

LSGCN [34]: Integrates graph attention networks and graph convolutional networks (GCNs) into a spatial gated block, which can effectively capture complex spatial–temporal features and obtain stable prediction results.

5.3. Experimental Parameter Settings

Following ASTGCN and STSGCN, we split the PEMS04 and PEMS08 datasets into training, validation, and test sets in a ratio of 6:2:2. Specifically, the PEMS04 dataset covers 59 days with 16,992 time steps; the first 10,195 time steps are used as the training set, 3398 time steps as the validation set, and 3398 time steps as the test set.

The PEMS08 dataset covers 62 days with 17,856 time steps; the first 10,714 time steps are used as the training set, 3572 time steps as the validation set, and 3572 time steps as the test set. In addition, we normalized the data of each road section before inputting them into the model. The model was optimized with Adam using reverse-mode automatic differentiation.
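The split and normalization can be sketched as follows. The text does not reproduce its normalization equation, so z-score normalization with training-set statistics is an assumption here, as is floor rounding for the split; depending on rounding and boundary handling, counts from this sketch can differ by a step or two from those reported above. The flow readings are toy values.

```python
import statistics


def chronological_split(num_steps, ratios=(0.6, 0.2)):
    """Return (train, val, test) step counts for a time-ordered split.

    Assumption: floor rounding for train/val, with the remainder assigned to test.
    """
    train = int(num_steps * ratios[0])
    val = int(num_steps * ratios[1])
    return train, val, num_steps - train - val


def zscore(values, mean, std):
    """Standardize values with statistics taken from the training portion only."""
    return [(v - mean) / std for v in values]


steps_per_day = 24 * 60 // 5                   # 288 five-minute steps per day
print(59 * steps_per_day, 62 * steps_per_day)  # 16992 17856
print(chronological_split(16992))              # (10195, 3398, 3399)

flow = [120.0, 135.0, 150.0, 160.0, 155.0, 140.0, 130.0, 125.0, 118.0, 122.0]
train, val, test = chronological_split(len(flow))
mu = statistics.mean(flow[:train])
sigma = statistics.pstdev(flow[:train])
normalized = zscore(flow, mu, sigma)
```

Computing the statistics on the training portion only avoids leaking information from the validation and test periods into training.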

We implement ST-DGDE in the PyTorch framework, and all experiments were run on a Linux server with an Intel(R) Core(TM) i7-9900k CPU @ 3.60 GHz and a Tesla T4 GPU with 16 GB of memory. We use the Adam optimizer with 200 training iterations, a batch size of 16, and a learning rate of 0.001.

5.4. Experimental Results and Comparative Analysis

Table 2 compares our ST-DGDE model with the eight baseline methods on two real-world datasets and reports the average traffic prediction performance for the next hour.

We organize the experiments around the following research questions.

RQ1: What is the overall traffic prediction performance of ST-DGDE compared with the various baselines?

RQ2: How do the designed submodules improve model performance?

RQ3: How does the model perform on long-term prediction problems?

RQ4: How do the model's hyperparameters affect the experimental results?

5.5. Ablation Experiments

To evaluate the effect of the different modules of ST-DGDE through ablation analysis, we divide the model into three primary modules and test the effect of each on prediction performance separately. For simplicity, we use abbreviations for the module names: gated temporal convolution (T), the dynamic graph learning module (DGL), and neural graph differential equations (NGDEs). In this section, we use the PEMS04 dataset for the ablation experiments and then analyze the results.

We describe the ablation variants as follows:

T: Gated temporal causal convolution module (Gated TCN).

T + DGL: Uses T as the base module and adds the dynamic graph learning module (DGL) to capture dynamic changes in spatial–temporal data.

T + NGDE: Adds the neural graph differential equation module to the T module to verify the ability of neural graph ordinary differential equations to capture hidden dynamic spatial–temporal correlations.

T + DGL + NGDE: Adds the neural graph differential equation module to the T + DGL module; this is the full spatial–temporal dynamic graph differential equation model (ST-DGDE) proposed in this paper.

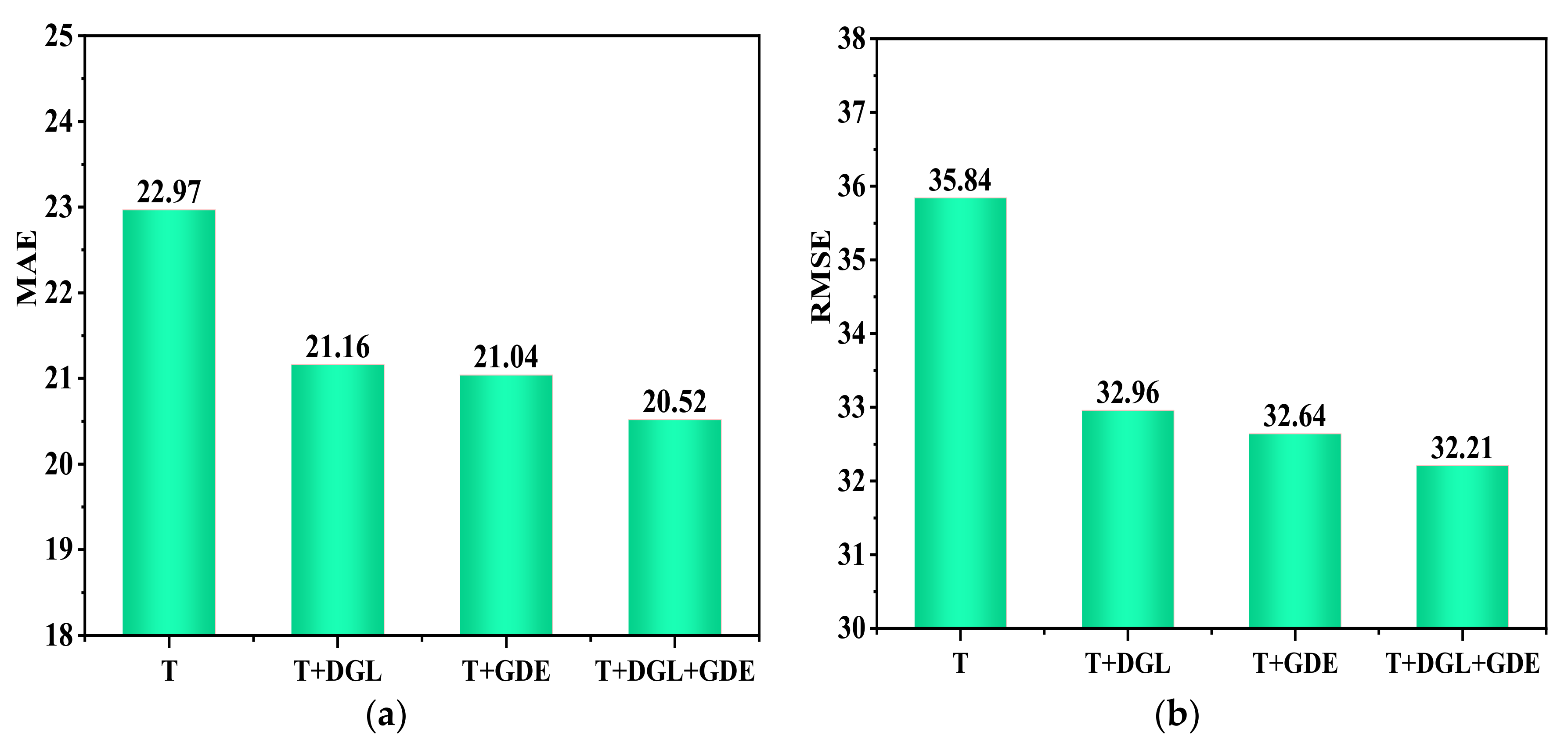

As Figure 6 shows, the full ST-DGDE (T + DGL + NGDE) model outperforms the other variants in both MAE and RMSE. The T + DGL results in Figure 6 show that adding the dynamic graph learning module (DGL) improves prediction performance in both MAE and RMSE, indicating that the dynamic graph learning module can effectively construct dynamic spatial graphs from the temporal data and thereby improve model performance. The neural graph differential equations are also added separately to the gated temporal causal convolution module (T + NGDE), and combining static and dynamic graph modeling in ST-DGDE (T + DGL + NGDE) expands the spatial perception capability of the model, allowing it to effectively capture the hidden dynamic spatial–temporal correlations in the data.

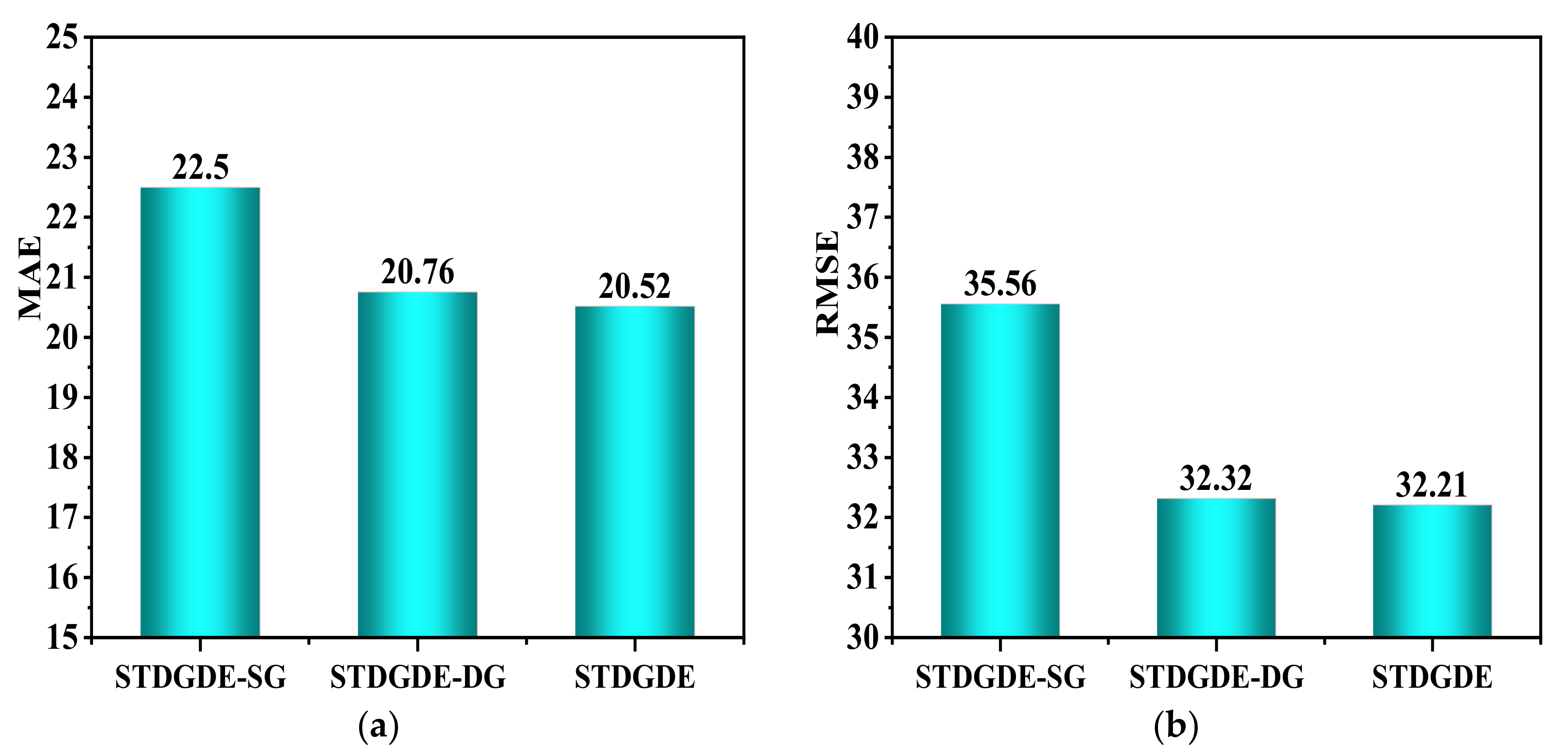

To further analyze the effects of static and dynamic graphs on prediction performance, we conducted ablation experiments on the static and dynamic graph modules using the PEMS04 dataset. In Figure 7, STDGDE-SG denotes the variant without the static graph (i.e., using only the dynamic graph), and STDGDE-DG denotes the variant without the dynamic graph (i.e., using only the static graph).

Figure 7 shows that prediction performance improves greatly when the model uses dynamic graphs, indicating that the model can better capture the latent dynamic information in the data and better accomplish the dynamic prediction task. The MAE and RMSE comparison between ST-DGDE and STDGDE-SG shows that static graphs can assist dynamic graph prediction and improve prediction performance.

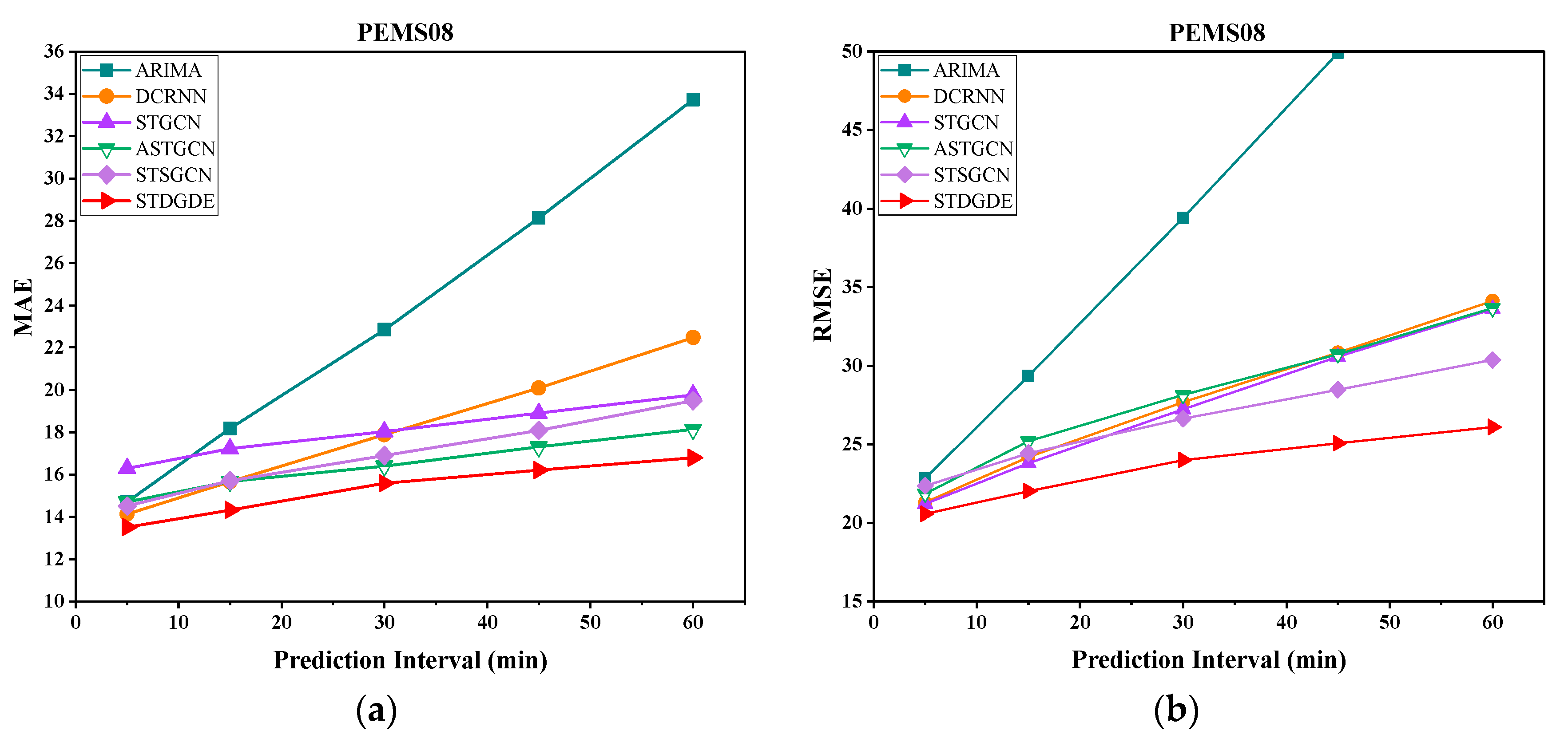

As the prediction horizon increases, prediction becomes more difficult and model performance decreases. To investigate the model's predictions at different time steps, Figure 8 and Figure 9 show the effect of different prediction horizons on the performance of the different methods. The prediction error of the models increases with the prediction horizon, and our ST-DGDE model achieves the best performance at each time step. On the PEMS04 dataset, its prediction error grows relatively slowly as the horizon increases. Missing data in the PEMS08 dataset lead to poorer results for short-term predictions of 5 min and 15 min; however, ST-DGDE effectively alleviates this data loss problem in short-term prediction because its static and dynamic graphs jointly capture spatial–temporal changes in the data, and the overall stability of the model is better.