Abstract

The quantile regression model is widely used to study relationships among variables in moderately sized data, owing to its strong robustness and its more comprehensive description of the characteristics of the response variable. With increasing data size and dimensionality, high-dimensional quantile regression has been studied under the classical statistical framework; however, existing approaches either take an efficient frequentist perspective at the cost of uncertainty quantification or use less efficient Bayesian methods based on MCMC sampling. To overcome these problems, we propose high-dimensional quantile regression with a spike-and-slab lasso penalty based on variational Bayes (VBSSLQR), which not only improves computational efficiency but also quantifies uncertainty through the variational distributions. Simulation studies and real data analysis showed that the proposed VBSSLQR method performed as well as or better than other quantile and nonquantile regression methods (including Bayesian and non-Bayesian methods) and was more computationally efficient than all of them.

MSC:

62-08

1. Introduction

Quantile regression, introduced by Koenker and Bassett (1978) [1], is an important statistical tool for inference about the relationship between quantiles of the response distribution and available covariates. It offers a practically significant alternative to traditional mean regression because it describes the response distribution more comprehensively than the mean does. Moreover, quantile regression can capture the heterogeneous impact of regressors on different parts of the distribution [2], has excellent computational properties [3], is robust to outliers, and has wide applicability [4]. For these reasons, quantile regression has attracted extensive attention in the literature; see, for example, [5] for Bayesian quantile regression with an asymmetric Laplace distribution used to specify the likelihood, [6] for a Bayesian nonparametric approach to inference for quantile regression, ref. [7] for a mechanism of Bayesian inference for quantile regression models, and [8] for model selection in quantile regression.

Although the literature on quantile regression is growing, to the best of our knowledge, few existing quantile regression models have focused on high-dimensional data with a large number of variables and large sample sizes. In practice, many variables may be collected, some of which are insignificant and should be excluded from the final model. In the past two decades, there has been active methodological research on penalized methods for selecting significant variables in linear parametric models; see, for example, [9] for ridge regression, ref. [10] for the least absolute shrinkage and selection operator (Lasso), ref. [11] for the smoothly clipped absolute deviation (SCAD) penalty, ref. [12] for the elastic net penalty, and ref. [13] for the adaptive lasso. These methods have been extended to quantile regression; see, for example, [14] for an $\ell_1$-regularization method for quantile regression, and ref. [15] for variable selection in quantile regression using SCAD and adaptive lasso penalties. Nevertheless, these regularization methods can be computationally complex and unstable. Additionally, they do not incorporate prior information about the parameters, which can lead to unsatisfactory estimation accuracy. In recent decades, Bayesian approaches to variable selection and parameter estimation have garnered significant attention, because by imposing various priors on the model parameters they can substantially enhance the accuracy and efficiency of estimation, consistently select important variables, and provide more information for variable selection than penalization methods, particularly when the optimization problem is highly nonconvex. See, for example, [16] for a Bayesian Lasso in which the penalty corresponds to a Laplace prior, [17] for a Bayesian form of the adaptive Lasso, ref. [18] for Bayesian Lasso quantile regression (BLQR), and [19] for Bayesian adaptive Lasso quantile regression (BALQR).
The methods mentioned above use the standard Gibbs sampler for posterior computation, which does not scale easily to high-dimensional data in which the number of variables is large compared with the sample size [20].

To address this issue, researchers have favored Bayesian variable selection with a spike-and-slab prior [20], which can be applied to high-dimensional data but at the cost of a heavy computational burden. As a computationally efficient alternative to Markov chain Monte Carlo (MCMC) simulation, variational Bayes (VB) methods are gaining traction in machine learning and statistics for approximating posterior distributions in Bayesian inference. The efficient variational Bayesian spike-and-slab lasso (VBSSL) has been explored for certain high-dimensional models. Ray and Szabó (2022) [21] used a VBSSL method in a high-dimensional linear model, with the prior of the regression coefficients specified as a mixture of a Laplace distribution and a Dirac mass. Yi and Tang (2022) [22] applied VBSSL to high-dimensional linear mixed models, with the prior of the parameters of interest specified as a mixture of two Laplace distributions. However, to the best of our knowledge, little work has been done on VBSSL methods for quantile regression. Xi et al. (2016) [23] considered Bayesian variable selection for nonparametric quantile regression with a small number of variables, using a spike-and-slab prior chosen as a mixture of a point mass at zero and a normal distribution. In this paper, to reduce the computational burden and quantify parameter uncertainty, we propose quantile regression with a spike-and-slab lasso penalty based on variational Bayes (VBSSLQR), in which the prior is a mixture of two Laplace distributions with small and large variances, respectively.

The main contributions of this paper are as follows. First, the proposed VBSSLQR method performs variable selection for high-dimensional quantile regression at a relatively low computational cost, without the need for nonconvex optimization, while also avoiding the curse of dimensionality. Second, in contrast to mean regression, our quantile approach offers a more systematic strategy for analyzing how covariates affect different quantiles of the response distribution. Third, in ultra-high-dimensional regression, mean regression errors are frequently assumed to be sub-Gaussian, which our setting does not require.

The rest of the paper is organized as follows. In Section 2, we propose an efficient quantile regression method with a spike-and-slab lasso penalty based on variational Bayes (VBSSLQR) for high-dimensional data. In Section 3, we randomly generate high-dimensional data with n = 200 and p = 500 (excluding the intercept) and perform 500 simulation replications to explore the performance of our algorithm and compare it with other quantile regression methods (Bayesian and non-Bayesian) and nonquantile regression methods; the results show that our method outperforms the other approaches for high-dimensional data. In Section 4, we apply VBSSLQR to a real dataset containing information about crime in various cities in the United States and compare it with other quantile regression methods; our method also performs well and is highly efficient on real data. Some concluding remarks are given in Section 5. Technical details are presented in Appendices A, B, C, and D.

2. Models and Methods

2.1. Quantile Regression

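Consider a dataset of $n$ independent subjects. For the $i$th subject, let $y_i$ be the response and $x_i = (1, x_{i1}, \ldots, x_{ir})^\top$ be an $(r + 1) \times 1$ predictor vector. A linear regression model is defined as follows:

$$y_i = x_i^\top\beta + \varepsilon_i, \qquad i = 1, \ldots, n, \tag{1}$$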

where $\beta = (\beta_0, \beta_1, \ldots, \beta_r)^\top$ is the regression coefficient vector, with $\beta_0$ corresponding to the intercept term, and $\varepsilon_i$ is the error term with an unknown distribution. It is usual to assume that the $\tau$th quantile of the random error term is 0; that is, $\Pr(\varepsilon_i \le 0) = \tau$ for $0 < \tau < 1$. Under this assumption, the $\tau$th quantile regression form of model (1) is

$$Q_{y_i}(\tau \mid x_i) = x_i^\top\beta, \tag{2}$$

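where $Q_{y_i}(\tau \mid x_i) = F_{y_i}^{-1}(\tau \mid x_i)$ is the inverse cumulative distribution function of $y_i$ given $x_i$, evaluated at $\tau$. The estimate of the regression coefficient vector $\beta$ in Equation (2) is

$$\hat{\beta} = \arg\min_{\beta} \sum_{i=1}^{n} \rho_\tau\left(y_i - x_i^\top\beta\right), \tag{3}$$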

where the loss function is $\rho_\tau(u) = u\{\tau - I(u < 0)\}$, with $I(\cdot)$ the indicator function.
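To make Equation (3) concrete, the following minimal Python sketch (our own illustration, not the authors' code) evaluates the check loss $\rho_\tau(u) = u\{\tau - I(u < 0)\}$ and, for the special case of an intercept-only model, minimizes the summed check loss by searching over the observed responses. In that special case the minimizer is a $\tau$th sample quantile, which is the sense in which (3) extends quantiles to regression. The function names and data are hypothetical.

```python
def check_loss(u: float, tau: float) -> float:
    """Check loss rho_tau(u) = u * (tau - I(u < 0))."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def intercept_only_qr(y: list[float], tau: float) -> float:
    """Minimize sum_i rho_tau(y_i - b) over a scalar intercept b.

    The objective is convex and piecewise linear with kinks at the data points,
    so a minimizer can be found by evaluating it at each observed value.
    """
    def objective(b: float) -> float:
        return sum(check_loss(yi - b, tau) for yi in y)

    return min(sorted(y), key=objective)


y = [3.1, -0.4, 2.2, 7.5, 1.0, 0.3, 4.8, -1.9, 2.9]
for tau in (0.25, 0.5, 0.75):
    # with n = 9 and n * tau not an integer, the minimizer is the ceil(n * tau)-th order statistic
    print(tau, intercept_only_qr(y, tau))  # 0.3, 2.2 and 3.1 respectively
```

With covariates, the same objective is minimized over the full vector $\beta$, usually by linear programming; the exhaustive search above only works for the intercept-only case.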

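Following [5,24], minimizing Equation (3) is equivalent to maximizing the likelihood of $n$ independent observations, the $i$th of which follows an asymmetric Laplace distribution (ALD) with density

$$f(y_i \mid \mu_i, \sigma, \tau) = \frac{\tau(1 - \tau)}{\sigma}\exp\left\{-\rho_\tau\left(\frac{y_i - \mu_i}{\sigma}\right)\right\}, \tag{4}$$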

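where the location parameter is $\mu_i = x_i^\top\beta$, the scale parameter is $\sigma > 0$, and the skewness parameter $\tau$ lies between 0 and 1; the ALD reduces to a symmetric Laplace distribution when $\tau = 1/2$. However, inference based directly on Equation (4) is computationally difficult because the check loss is nondifferentiable at $y_i = x_i^\top\beta$. Following [25], Equation (4) can be rewritten in the following hierarchical form:

$$y_i = x_i^\top\beta + k_1 e_i + k_2\sqrt{\sigma e_i}\, z_i, \qquad e_i \sim \mathrm{Exp}(\sigma), \qquad z_i \sim N(0, 1), \tag{5}$$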

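where $k_1 = (1 - 2\tau)/\{\tau(1 - \tau)\}$, $k_2 = \sqrt{2/\{\tau(1 - \tau)\}}$, $e_i$ and $z_i$ are independent, and $\mathrm{Exp}(\sigma)$ denotes the exponential distribution with mean $\sigma$, whose density function is $f(e_i \mid \sigma) = \sigma^{-1}\exp(-e_i/\sigma)$. Equation (5) shows that an asymmetric Laplace distribution can be represented as a mixture of exponential and standard normal distributions, which allows us to express the quantile regression model as a normal regression model in which the response has the conditional distribution

$$y_i \mid e_i, \beta, \sigma \sim N\left(x_i^\top\beta + k_1 e_i,\; k_2^2\,\sigma e_i\right).$$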

For the above quantile regression model with a high-dimensional covariate vector $x_i$ (with $r$ large), it is of interest to estimate the parameter vector $\beta$ and to identify the important covariates. To this end, we consider Bayesian quantile regression based on the spike-and-slab lasso, described in the next subsection.
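The mixture representation attributed to [25] can be checked numerically. The sketch below assumes the standard constants $k_1 = (1 - 2\tau)/\{\tau(1 - \tau)\}$ and $k_2 = \sqrt{2/\{\tau(1 - \tau)\}}$ and an exponential latent variable with mean $\sigma$; it draws $\varepsilon = k_1 e + k_2\sqrt{\sigma e}\,z$ and estimates $\Pr(\varepsilon \le 0)$, which should be close to $\tau$ if $\varepsilon$ has a zero $\tau$th quantile. It is illustrative only; the sample size, seed, and $\sigma = 1.5$ are arbitrary choices.

```python
import math
import random


def sample_ald_error(tau: float, sigma: float, rng: random.Random) -> float:
    """Draw eps = k1*e + k2*sqrt(sigma*e)*z with e ~ Exp(mean sigma) and z ~ N(0, 1)."""
    k1 = (1.0 - 2.0 * tau) / (tau * (1.0 - tau))
    k2 = math.sqrt(2.0 / (tau * (1.0 - tau)))
    e = rng.expovariate(1.0 / sigma)  # expovariate takes the rate, so the mean is sigma
    z = rng.gauss(0.0, 1.0)
    return k1 * e + k2 * math.sqrt(sigma * e) * z


def frac_nonpositive(tau: float, sigma: float = 1.5, n: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of Pr(eps <= 0), which should be close to tau."""
    rng = random.Random(seed)
    hits = sum(sample_ald_error(tau, sigma, rng) <= 0.0 for _ in range(n))
    return hits / n


for tau in (0.1, 0.5, 0.9):
    print(tau, round(frac_nonpositive(tau), 3))
```

Conditioning on $e$ turns the error into a normal variable with mean $k_1 e$ and variance $k_2^2 \sigma e$, which is exactly what makes the conditional updates for $\beta$ Gaussian.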

2.2. Bayesian Quantile Regression Based on a Spike-and-Slab Lasso

As early as 2016, Xi et al. [23] applied a spike-and-slab prior to Bayesian quantile regression, but their prior was a mixture of a point mass at zero and a normal distribution with a large variance, and the posterior density was estimated using a Gibbs sampler. To offer new theoretical insights into a class of continuous spike-and-slab priors, Ročková (2018) [26] introduced a family of spike-and-slab priors that are mixtures of two density functions weighted by the spike and slab probabilities. In this paper, we adopt the spike-and-slab lasso prior of [26], a mixture of two Laplace distributions with small and large variances, respectively, which makes it convenient to approximate the posterior density of the parameters by variational Bayes and improves the efficiency of the algorithm. Following [26], given the indicator $\gamma_j = 0$ or 1, the prior of $\beta_j$ in the Bayesian quantile regression model (5) can be written as

$$p(\beta_j \mid \gamma_j) = (1 - \gamma_j)\,\psi(\beta_j \mid \lambda_0) + \gamma_j\,\psi(\beta_j \mid \lambda_1), \qquad j = 1, \ldots, r, \tag{6}$$

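where $\psi(\beta \mid \lambda) = (\lambda/2)\exp(-\lambda|\beta|)$ is the Laplace density with precision parameter $\lambda$, and the precision parameters satisfy $\lambda_0 \gg \lambda_1$, so that $\psi(\cdot \mid \lambda_0)$ is the spike and $\psi(\cdot \mid \lambda_1)$ is the slab; $\gamma = \{\gamma_1, \ldots, \gamma_r\}$ is the indicator variable set, and the $j$th variable is active when $\gamma_j = 1$ and inactive otherwise. Similarly to [27], the Laplace distribution of the regression coefficient $\beta_j$ can be represented as a mixture of a normal distribution and an exponential distribution; specifically, the distribution of $\beta_j$ can be expressed hierarchically as follows: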

where each indicator $\gamma_j$ ($j = 1, \ldots, r$) follows a Bernoulli distribution whose parameter is the probability that $\gamma_j$ equals one, and this probability is assigned a Beta prior; $\lambda_0$ and $\lambda_1$ are regularization parameters used to identify important variables, for which we consider the following conjugate priors:

where the gamma distribution has shape parameter $a$ and scale parameter $b$. As mentioned above, $\lambda_0$ and $\lambda_1$ should satisfy $\lambda_0 \gg \lambda_1$; to this end, we choose the hyperparameters of their gamma priors accordingly. The prior of the scale parameter $\sigma$ in (5) is an inverse gamma distribution whose hyperparameters are chosen to give an almost noninformative prior.
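The following sketch illustrates the two ingredients of the spike-and-slab lasso prior described above. It assumes, for illustration only, that the Laplace density is $\psi(\beta \mid \lambda) = (\lambda/2)\exp(-\lambda|\beta|)$, that $\lambda_0$ is the spike precision and $\lambda_1$ the slab precision, and that the prior inclusion probability is called `w` (a hypothetical name). It computes the conditional probability that $\gamma_j = 1$ given $\beta_j$, which shows how small coefficients are attributed to the spike, and it checks by simulation that a normal variable whose variance is exponential with rate $\lambda^2/2$ has a Laplace($\lambda$) marginal, so that $E|\beta| = 1/\lambda$. This is not the authors' implementation.

```python
import math
import random


def laplace_pdf(beta: float, lam: float) -> float:
    """Laplace density psi(beta | lam) = (lam / 2) * exp(-lam * |beta|)."""
    return 0.5 * lam * math.exp(-lam * abs(beta))


def ssl_prior_pdf(beta: float, lam0: float, lam1: float, w: float) -> float:
    """Spike-and-slab lasso density with gamma_j integrated out; w = Pr(gamma_j = 1)."""
    return (1.0 - w) * laplace_pdf(beta, lam0) + w * laplace_pdf(beta, lam1)


def inclusion_prob(beta: float, lam0: float, lam1: float, w: float) -> float:
    """Conditional Pr(gamma_j = 1 | beta_j, w): the slab's share of the mixture density."""
    slab = w * laplace_pdf(beta, lam1)
    return slab / ssl_prior_pdf(beta, lam0, lam1, w)


def draw_laplace_as_scale_mixture(lam: float, rng: random.Random) -> float:
    """beta | h ~ N(0, h) with h ~ Exp(rate lam^2 / 2) gives a Laplace(lam) marginal."""
    h = rng.expovariate(lam * lam / 2.0)  # expovariate takes the rate
    return rng.gauss(0.0, math.sqrt(h))


# Hypothetical values: strong spike (lam0 = 20), diffuse slab (lam1 = 1), w = 0.1.
for b in (0.01, 0.2, 1.0):
    print(b, round(inclusion_prob(b, lam0=20.0, lam1=1.0, w=0.1), 4))

rng = random.Random(7)
draws = [draw_laplace_as_scale_mixture(2.0, rng) for _ in range(100_000)]
print(sum(abs(d) for d in draws) / len(draws))  # close to 1 / lam = 0.5
```

The scale-mixture form is what keeps every conditional update of $\beta$ Gaussian, at the price of one extra latent variance per coefficient.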

Under the Bayesian paradigm, based on the above priors and the quantile regression likelihood, we need the posterior distribution $p(\theta \mid D)$, where $\theta$ collects all unknown parameters (including $\beta$, $\sigma$, and the indicator set $\gamma$) and latent variables (including $e = \{e_1, \ldots, e_n\}$), and $D = \{Y, X\}$ is the observed data set with response set $Y = \{y_1, \ldots, y_n\}$ and covariate set $X = \{x_1, \ldots, x_n\}$. Based on the hierarchical structure (5) of the quantile regression likelihood and the hierarchical structure (7) of the spike-and-slab prior on the regression coefficient vector $\beta$, we derive the joint density

Although sampling from the aforementioned posterior is simple, it becomes increasingly time-consuming for higher-dimensional quantile models. To tackle this issue, we develop a faster and more efficient alternative based on variational Bayes.

2.3. Quantile Regression with a Spike-and-Slab Lasso Penalty Based on Variational Bayes

At present, the most commonly used variational Bayesian methods for approximating posterior distributions are based on mean-field approximation theory [28], which is the most efficient among variational methods, especially for parameters or parameter blocks with conjugate priors. Bayesian quantile regression must account for the fact that each observation has its own variance, governed by its own latent variable $e_i$, which makes quantile regression algorithms less efficient than those for general mean regression. Therefore, in this paper, we use the mean-field variational Bayesian algorithm, the most efficient choice, to fit the quantile regression model with the spike-and-slab lasso penalty.

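Based on variational theory, we choose a density $q(\theta)$ for the random variables $\theta$ from a variational family $\mathcal{Q}$ whose members have the same support as the posterior density $p(\theta \mid D)$ given the observed data $D$, and we approximate the posterior density by $q(\theta) \in \mathcal{Q}$. The variational Bayesian method seeks the optimal approximation to $p(\theta \mid D)$ by minimizing the Kullback-Leibler (KL) divergence between $q(\theta)$ and $p(\theta \mid D)$, which can be expressed as the optimization problem

$$q^*(\theta) = \arg\min_{q \in \mathcal{Q}} \mathrm{KL}\{q(\theta) \,\|\, p(\theta \mid D)\},$$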

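where $\mathrm{KL}\{q(\theta) \,\|\, p(\theta \mid D)\} = \int q(\theta)\log\{q(\theta)/p(\theta \mid D)\}\,d\theta$, which is nonnegative and equals zero if and only if $q(\theta) = p(\theta \mid D)$. The posterior density is $p(\theta \mid D) = p(\theta, D)/p(D)$, with $p(\theta, D)$ the joint distribution of the parameters and data and $p(D)$ the marginal distribution of the data. Since $p(D) = \int p(\theta, D)\,d\theta$ has no analytic expression for our model, the above optimization problem is difficult to solve directly. However, it is easy to show that

$$\log p(D) = \mathrm{ELBO}(q) + \mathrm{KL}\{q(\theta) \,\|\, p(\theta \mid D)\},$$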

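in which the evidence lower bound (ELBO) is $\mathrm{ELBO}(q) = E_q\{\log p(\theta, D)\} - E_q\{\log q(\theta)\}$, with $E_q$ denoting the expectation taken with respect to the variational density $q(\theta)$. Thus, minimizing the KL divergence is equivalent to maximizing $\mathrm{ELBO}(q)$, because $\log p(D)$ does not depend on $q(\theta)$. That is,

$$q^*(\theta) = \arg\max_{q \in \mathcal{Q}} \mathrm{ELBO}(q),$$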

which shows that finding the optimal approximation to $p(\theta \mid D)$ reduces to maximizing $\mathrm{ELBO}(q)$ over the variational family $\mathcal{Q}$. Because the complexity of this problem depends heavily on $\mathcal{Q}$, it is desirable to choose a comparatively simple variational family over which to optimize the objective.
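The identity behind this argument, $\log p(D) = \mathrm{ELBO}(q) + \mathrm{KL}\{q \,\|\, p(\theta \mid D)\}$, can be verified on a toy model with a discrete latent $\theta$ taking three values. The prior, likelihood values, and $q$ below are made up for illustration; the point is that the identity holds for any $q$, so maximizing the ELBO is the same as minimizing the KL divergence, and the bound is tight when $q$ equals the posterior.

```python
import math


def log_evidence(joint: list[float]) -> float:
    """log p(D) = log sum_k p(theta = k, D)."""
    return math.log(sum(joint))


def elbo(q: list[float], joint: list[float]) -> float:
    """E_q[log p(theta, D)] - E_q[log q(theta)] for a discrete latent theta."""
    return sum(qk * (math.log(pk) - math.log(qk)) for qk, pk in zip(q, joint) if qk > 0)


def kl_to_posterior(q: list[float], joint: list[float]) -> float:
    """KL{q || p(theta | D)} with p(theta | D) = p(theta, D) / p(D)."""
    evidence = sum(joint)
    return sum(qk * (math.log(qk) - math.log(pk / evidence)) for qk, pk in zip(q, joint) if qk > 0)


prior = [0.5, 0.3, 0.2]          # hypothetical p(theta = k)
likelihood = [0.02, 0.10, 0.40]  # hypothetical p(D | theta = k)
joint = [a * b for a, b in zip(prior, likelihood)]
q = [0.2, 0.3, 0.5]              # an arbitrary variational distribution

print(log_evidence(joint), elbo(q, joint) + kl_to_posterior(q, joint))  # equal up to rounding
posterior = [pk / sum(joint) for pk in joint]
print(kl_to_posterior(posterior, joint))  # zero up to rounding: the bound is tight at the posterior
```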

Following the common approach of choosing a tractable variational family, we adopt mean-field theory, which assumes that the blocks $\theta_1, \ldots, \theta_S$ of $\theta$ are mutually independent and that each block is governed by the parameters of its own variational density. That is, the variational density factorizes across the blocks of $\theta$:

$$q(\theta) = \prod_{s=1}^{S} q_s(\theta_s),$$

in which the form of each variational density $q_s(\theta_s)$ is unknown, but the factorization across blocks is specified in advance. The optimal $q_s$ are obtained by maximizing $\mathrm{ELBO}(q)$ with respect to each variational density in turn by coordinate ascent, where each $\theta_s$ can be either a scalar or a vector. Consequently, when the correlation between several unknown parameters or latent variables cannot be ignored, they should be placed in the same block $\theta_s$. Following the coordinate ascent approach of ref. [29], when the other variational factors $q_k(\theta_k)$, $k \neq s$, are fixed, the optimal density $q_s^*(\theta_s)$, which maximizes $\mathrm{ELBO}(q)$ with respect to $q_s$, takes the form

$$q_s^*(\theta_s) \propto \exp\left[E_{-s}\{\log p(\theta, D)\}\right], \tag{9}$$

where $\log p(\theta, D)$ is the logarithm of the joint density function and $E_{-s}$ denotes the expectation taken with respect to $\prod_{k \neq s} q_k(\theta_k)$.
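As an illustration of the coordinate ascent update $q_s^*(\theta_s) \propto \exp[E_{-s}\{\log p(\theta, D)\}]$, the sketch below applies mean-field coordinate ascent to a textbook conjugate model (a normal mean and precision with a normal-gamma prior), not to the VBSSLQR model itself. Each factor has a closed-form update because the priors are conjugate, which mirrors why conjugate parameter blocks make the mean-field scheme efficient. The hyperparameter defaults, tolerance, and simulated data are arbitrary assumptions.

```python
import random


def cavi_normal_gamma(x, mu0=0.0, lam0=1.0, a0=1.0, b0=1.0, tol=1e-10, max_iter=500):
    """Mean-field CAVI for x_i ~ N(mu, 1/t), mu | t ~ N(mu0, 1/(lam0 * t)), t ~ Gamma(a0, rate b0).

    The factorization q(mu, t) = q(mu) q(t) gives q(mu) = N(mu_n, 1/lam_n) and
    q(t) = Gamma(a_n, rate b_n); each factor is updated with the other held fixed.
    """
    n = len(x)
    xbar = sum(x) / n
    mu_n = (lam0 * mu0 + n * xbar) / (lam0 + n)   # does not depend on q(t)
    a_n = a0 + (n + 1) / 2.0                      # the shape is fixed as well
    e_t = a0 / b0                                 # initial value of E_q[t]
    b_n = b0
    for _ in range(max_iter):
        var_mu = 1.0 / ((lam0 + n) * e_t)         # update q(mu) given E_q[t]
        # E_q(mu)[ sum_i (x_i - mu)^2 + lam0 * (mu - mu0)^2 ]
        expected_ss = sum((xi - mu_n) ** 2 for xi in x) + n * var_mu
        expected_ss += lam0 * ((mu_n - mu0) ** 2 + var_mu)
        b_n = b0 + 0.5 * expected_ss              # update q(t) given q(mu)
        new_e_t = a_n / b_n
        converged = abs(new_e_t - e_t) < tol
        e_t = new_e_t
        if converged:
            break
    return mu_n, (lam0 + n) * e_t, a_n, b_n


rng = random.Random(11)
data = [rng.gauss(2.0, 0.5) for _ in range(2000)]   # true mean 2, true precision 4
mu_n, lam_n, a_n, b_n = cavi_normal_gamma(data)
print(mu_n, a_n / b_n)  # variational means of mu and t, close to 2 and 4
```

In VBSSLQR the same loop structure applies, but with more blocks (coefficients, indicators, latent variances, $e_i$, $\sigma$, and hyperparameters), which is what Algorithm 1 iterates over.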

According to Equations (8) and (9), we can derive the variational posterior for each parameter as follows (see Appendix A for the details):

where $\mathrm{GIG}(\cdot)$ denotes the generalized inverse Gaussian distribution,

and

In the above equation, , and , , , are similar to , with , and is a vector with the jth component of vector deleted, with .

Having derived the variational posterior of each parameter, we update each variational distribution iteratively by coordinate ascent until convergence. The resulting variational Bayesian spike-and-slab lasso quantile regression (VBSSLQR) algorithm is given in Algorithm 1:

| Algorithm 1 Variational Bayesian spike-and-slab lasso quantile regression (VBSSLQR). |
|---|
| Input: |
| Data , predictors , prior parameters ,, , , , precision and quantile ; |
| Output: |
| Optimized variational parameters , for and the corresponding Bayesian credible intervals. |
| Initialize: ; ; ;; |
| ; ; ;; |
| ;; ; |
| while not converged do |
| for j = 1 to r do |
| Update , , and according to Equation (10). |
| Update , , , according to , |
| Update , , , according to , |
| Update according to the variational posterior , |
| Update and , |
| end for |
| Update according to the variational posterior , |
| Update according to the variational posterior , |
| Update , and according to , |
| for i = 1 to n do |
| Update and according to the variational posterior , |
| end for |
| Update and according to the variational posterior , |
| , |
| end while |

In Algorithm 1 above, the digamma function appears in some updates, and some expectations are taken with respect to generalized inverse Gaussian distributions. Suppose that $X \sim \mathrm{GIG}(p, a, b)$, with density proportional to $x^{p-1}\exp\{-(ax + b/x)/2\}$; then

$$E(X^k) = \left(\frac{b}{a}\right)^{k/2}\frac{K_{p+k}(\sqrt{ab})}{K_p(\sqrt{ab})},$$

where $K_\nu(\cdot)$ denotes the modified Bessel function of the second kind with order $\nu$. Note that the derivative of the modified Bessel function with respect to its order has no closed form; therefore, we approximate the required expectation using a second-order Taylor expansion. The expectations of the parameter functions under the variational posteriors involved in Algorithm 1 are listed in Appendix B.

Based on the proposed VBSSLQR algorithm, in the next section we randomly generate high-dimensional data, conduct simulation studies, and compare its performance with that of other methods. Notably, the asymptotic variance of a quantile regression estimator is inversely proportional to the square of the error density at the quantile of interest. When n is small and extreme quantiles are estimated, this asymptotic variance is large, resulting in less precise estimates [23]. Therefore, regression coefficients are difficult to estimate at extreme quantiles, and the sample size should be increased accordingly.

3. Simulation Studies

In this section, we used simulated high-dimensional data with sample size $n = 200$ and number of variables $p = 500$ to study the performance of VBSSLQR and compare it with existing methods, including linear regression with a lasso penalty (Lasso), linear regression with an adaptive lasso penalty (ALasso), quantile regression with a lasso penalty (QRL), quantile regression with an adaptive lasso penalty (QRAL), Bayesian regularized quantile regression with a lasso penalty (BLQR), and Bayesian regularized quantile regression with an adaptive lasso penalty (BALQR). The data were generated from Equation (1), in which the covariate vector was randomly generated from a multivariate normal distribution with the $(j, k)$th element of its covariance matrix being . Only ten explanatory variables have a nonzero effect on the response: the 1st, 51st, 101st, 151st, 201st, 251st, 301st, 351st, 401st, and 451st predictors are active, with regression coefficients −3, −2.5, −2, −1.5, −1, 1, 1.5, 2, 2.5, and 3, respectively, and all other coefficients are zero. In addition, we examine the performance of the various approaches under two types of random errors: independent and identically distributed (i.i.d.) random errors and heterogeneous random errors.
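The simulation design above can be sketched as follows. Because the covariance entries of the covariate distribution are not reproduced in this text, the code assumes, purely for illustration, an AR(1)-type structure with $(j, k)$th element $\rho^{|j-k|}$ and $\rho = 0.5$, generated by a simple recursion; the intercept is omitted and the seed is arbitrary. The active predictors and their coefficients follow the text (1-based indices 1, 51, …, 451 correspond to 0-based indices 0, 50, …, 450).

```python
import math
import random


def ar1_design(n: int, p: int, rho: float, rng: random.Random) -> list[list[float]]:
    """Rows x_i ~ N(0, Sigma) with Sigma_jk = rho^|j-k| (an assumed covariance structure)."""
    s = math.sqrt(1.0 - rho * rho)
    rows = []
    for _ in range(n):
        row = [rng.gauss(0.0, 1.0)]
        for _ in range(1, p):
            # the AR(1) recursion keeps unit variance and gives lag-k correlation rho^k
            row.append(rho * row[-1] + s * rng.gauss(0.0, 1.0))
        rows.append(row)
    return rows


def true_coefficients(p: int = 500) -> list[float]:
    """Predictors 1, 51, ..., 451 (1-based) are active with the effects listed in the text."""
    beta = [0.0] * p
    effects = [-3, -2.5, -2, -1.5, -1, 1, 1.5, 2, 2.5, 3]
    for k, b in enumerate(effects):
        beta[50 * k] = float(b)  # 0-based index of predictor 1 + 50k
    return beta


rng = random.Random(2023)
X = ar1_design(n=200, p=500, rho=0.5, rng=rng)  # rho = 0.5 is hypothetical
beta = true_coefficients()
linear_predictor = [sum(xj * bj for xj, bj in zip(row, beta)) for row in X]
print(len(X), len(X[0]), sum(1 for b in beta if b != 0))  # 200 500 10
```

Adding an error vector centered at its $\tau$th quantile to `linear_predictor` then yields responses whose $\tau$th conditional quantile is $x_i^\top\beta$.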

3.1. Independent and Identically Distributed Random Errors

In this subsection, following [19,23], we set the random errors $\varepsilon_i$ in Equation (1) to be independent and identically distributed and consider the following five distributions, each with its $\tau$th quantile equal to zero:

- The error with being the quantile of , for ;

- The error with being the quantile of , where denotes the Laplace distribution with location parameter a and scale parameter b;

- The error with and respectively being the quantile of and ;

- The error with and respectively being the quantile of and ;

- The error with being the quantile of , where denotes the Cauchy distribution with location parameter a and scale parameter b;
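Centering each error at its $\tau$th quantile is what makes the $\tau$th conditional quantile of $y_i$ equal to $x_i^\top\beta$. The sketch below does this for three of the listed families using their closed-form quantile functions. The location and scale values used in the paper are not reproduced here, so standard versions (N(0, 1), Laplace(0, 1), Cauchy(0, 1)) are assumed; the two mixtures would require numerical root-finding for their quantiles and are omitted.

```python
import math
import random
from statistics import NormalDist


def laplace_quantile(tau: float, a: float = 0.0, b: float = 1.0) -> float:
    """tau-quantile of a Laplace distribution with location a and scale b."""
    if tau <= 0.5:
        return a + b * math.log(2.0 * tau)
    return a - b * math.log(2.0 * (1.0 - tau))


def cauchy_quantile(tau: float, a: float = 0.0, b: float = 1.0) -> float:
    """tau-quantile of a Cauchy distribution with location a and scale b."""
    return a + b * math.tan(math.pi * (tau - 0.5))


def centered_errors(kind: str, tau: float, n: int, rng: random.Random) -> list[float]:
    """Draw n errors and subtract their tau-quantile so that Pr(eps <= 0) = tau."""
    if kind == "normal":
        shift, draw = NormalDist().inv_cdf(tau), lambda: rng.gauss(0.0, 1.0)
    elif kind == "laplace":
        # the difference of two independent Exp(1) draws is Laplace(0, 1)
        shift, draw = laplace_quantile(tau), lambda: rng.expovariate(1.0) - rng.expovariate(1.0)
    elif kind == "cauchy":
        # inverse-CDF sampling for the standard Cauchy distribution
        shift, draw = cauchy_quantile(tau), lambda: math.tan(math.pi * (rng.random() - 0.5))
    else:
        raise ValueError(f"unknown error distribution: {kind}")
    return [draw() - shift for _ in range(n)]


rng = random.Random(3)
for kind in ("normal", "laplace", "cauchy"):
    eps = centered_errors(kind, tau=0.25, n=50_000, rng=rng)
    print(kind, sum(e <= 0 for e in eps) / len(eps))  # each close to 0.25
```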

For each of the above error distributions and each $\tau$, we ran 500 replications for each method and evaluated performance using two criteria. The first criterion was the median of mean absolute deviations (MMAD), which quantifies the overall distance between the estimated and true conditional quantiles. Specifically, the mean absolute deviation (MAD) in a replication is defined as $\mathrm{MAD} = n^{-1}\sum_{i=1}^{n} |x_i^\top\hat{\beta}(\tau) - x_i^\top\beta(\tau)|$, where $\hat{\beta}(\tau)$ is the estimate of the regression coefficient vector given $\tau$. The second criterion was the mean numbers of true positives (TP) and false positives (FP) selected by each method.
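The two evaluation criteria can be computed as in the following sketch, which assumes the usual definition $\mathrm{MAD} = n^{-1}\sum_i |x_i^\top\hat{\beta}(\tau) - x_i^\top\beta(\tau)|$ and takes the MMAD as the median of the per-replication MADs. How each method decides which variables are selected is not specified in this passage, so the selection indicators are passed in as input. All names are hypothetical.

```python
from statistics import median


def mad(X: list[list[float]], beta_hat: list[float], beta_true: list[float]) -> float:
    """(1/n) * sum_i |x_i' beta_hat - x_i' beta_true| for one replication."""
    diff = [bh - bt for bh, bt in zip(beta_hat, beta_true)]
    return sum(abs(sum(xj * dj for xj, dj in zip(row, diff))) for row in X) / len(X)


def tp_fp(selected: list[bool], beta_true: list[float]) -> tuple[int, int]:
    """Count true positives and false positives among the selected variables."""
    tp = sum(1 for s, b in zip(selected, beta_true) if s and b != 0)
    fp = sum(1 for s, b in zip(selected, beta_true) if s and b == 0)
    return tp, fp


# The MMAD over replications is the median of the per-replication MADs.
mads = [0.31, 0.12, 0.25]  # hypothetical MADs from three replications
print(median(mads))  # 0.25
```

Averaging the `tp_fp` counts over the 500 replications gives the mean TP/FP reported in the tables.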

Table 1 shows the median and standard deviation (SD) of the MADs obtained by each method in simulations with homogeneous errors. Our method was optimal (bold) in all cases, especially at quantile , where it was significantly superior to the other six methods. When the error distribution was normal or a mixture of Laplace and normal distributions, BALQR had the second-best MMAD. When the error distribution was Cauchy, QRL had the second-best MMAD, but Table 2 shows that this came at the cost of model complexity. When the error distribution was a Laplace mixture, the Lasso approach had the second-best MMAD; however, as Table 2 shows, it selected an overfitted model with about 30 FP variables. It is particularly important to note that, for high-dimensional data, the MMAD of quantile regression with a lasso or adaptive lasso penalty was not less than that of the linear model with a lasso penalty. Therefore, it is inappropriate to use a lasso or adaptive lasso penalty in this case.

Table 1.

The median and standard deviation of 500 MADs estimated using various methods in simulations with i.i.d. errors.

Table 2.

Mean TP/FP of various methods for simulation with i.i.d. errors.

To present the variable selection results more intuitively, we computed the means of TP (true positives) and FP (false positives) over the 500 replications; the detailed results are shown in Table 2.

The results in Table 2 lead to the following conclusions. The Lasso method generally selected all truly active variables but also many falsely active ones, and it failed to select all truly active variables when the random error followed a Cauchy distribution. The ALasso approach identified the truly active variables but also made some false selections, especially under Cauchy errors. Although QRL identified the truly active variables, it also selected many falsely active ones, so the models it identified were highly complex. QRAL could not identify all truly active variables and incorrectly selected some inactive ones. For high-dimensional data, BLQR could not select all truly active variables, but it did not incorrectly select inactive ones. BALQR selected truly active variables better than BLQR but incorrectly selected some inactive variables, especially under Cauchy errors. Our VBSSLQR method not only had the smallest MMAD but also selected the truly active variables while excluding most falsely active ones. Overall, our method outperformed the other six methods in variable selection; especially at quantile , it performed significantly better than BLQR, BALQR, and QRL.

3.2. Heterogeneous Random Errors

Now we consider the case of heterogeneous random errors, to demonstrate the performance of our method. In this subsection, the data were generated from the following model:

where , in which represents the uniform distribution with support set . The design matrix was generated in the same way as above, and the regression coefficient was set as before. Furthermore, in the simulation study, we combined and ; that is, was also a covariate. Finally, random error was also generated from the five different distributions defined in Section 3.1.

We again ran 500 replications of each method at each quantile level $\tau$ and calculated the MMAD and the mean TP/FP. The results are listed in Tables 3 and 4.

Table 3.

The median and standard deviation of 500 MADs estimated using various methods in simulations with heterogeneous random errors.

Table 4.

Mean TP/FP of the various methods for simulations with heterogeneous random errors.

For heterogeneous random errors, as for i.i.d. random errors, our approach was still the best. Notably, VBSSLQR was more robust than the other methods: its MMAD changed the least relative to the i.i.d. case and remained essentially unchanged, whereas the MMADs of the other six methods differed from their i.i.d. values by more than 0.5 in some settings.

We also computed the means of TP and FP for heterogeneous random errors at different quantiles $\tau$. The results, listed in Table 4, show that variable selection was slightly worse under heterogeneous random errors than under i.i.d. random errors with the same sample size, but our method still provided the best selection results.

We also calculated the mean execution times of the Bayesian quantile regression methods at different quantiles $\tau$ for the different random error distributions; the results, listed in Table A1 of Appendix C, show that the proposed VBSSLQR approach was far more efficient than BLQR and BALQR. For BLQR and BALQR, we ran 1000 MCMC iterations, discarded the first 500 as burn-in, and based inference on the remaining 500 draws (our experiments showed that the samplers converged within 500 iterations). To illustrate the feasibility of applying VBSSLQR to cases with smaller effect sizes, we changed the regression coefficients of the active predictors above to 1, keeping all other settings unchanged. The performance of the proposed method in this setting is shown in Table A2: the results were not significantly different from those in Tables 1 and 2 when the random errors were i.i.d., and slightly worse than those in Tables 3 and 4 when the random errors were heterogeneous.

4. Examples

In this section, we analyze a real dataset containing information about crime in various cities across the United States. This dataset is available from the University of California, Irvine (UCI) Machine Learning Repository (http://archive.ics.uci.edu/ml/datasets/communities+and+crime, accessed on 1 May 2023). We calculated the per capita rate of violent crimes by dividing the total number of violent crimes by the population of each city. The violent crimes considered in our analysis were those classified as murder, rape, robbery, and assault under United States law. The observed units were communities. The dataset has 116 variables: the first four columns are the response, the community name, the county code, and the community code; the middle columns contain demographic information about each community, such as population, age, race, and income; and the final columns describe regions. According to the source, “the data combines socio-economic data from the 1990 US Census, law enforcement data from the 1990 US LEMAS survey, and crime data from the 1995 FBI UCR”. This dataset has previously been used for quantile regression [30]. The dataset is available at:

- train set: https://academics.hamilton.edu/mathematics/ckuruwit/Data/Crime/train.csv (accessed on 1 May 2023).

- test set: https://academics.hamilton.edu/mathematics/ckuruwit/Data/Crime/test.csv (accessed on 1 May 2023).

Our dependent variable of interest was the murder rate of each city, denoted $y_i$ for the $i$th city. We chose this variable because murder is the most dangerous violent crime, and studying the factors correlated with it is of significant importance for the public and for law enforcement agencies.

To adapt the data to our model, we preprocessed the data as follows:

- Delete columns from the data set that contain missing data.

- Delete observations whose response equals 0, because they are not of interest to us.

- Transform : and let be the new response variable.

- Convert some qualitative variables into quantitative variables.

- Standardize the covariates.

After this preprocessing, the training set contained 1060 observations and 95 covariates, and the test set contained 122 observations and 95 covariates. We fitted quantile regression models relating the response to the 96 predictors (including the intercept) at different quantiles.
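The preprocessing steps listed above can be sketched in plain Python on rows read from CSV as dictionaries. The response column name, the missing-value marker, and the transform are placeholders: the transformation applied to the response is not reproduced in this text, so `math.log` in the example is only a stand-in. Step 4 (converting qualitative variables) is dataset specific and is omitted.

```python
import math
from statistics import mean, pstdev


def preprocess(rows, response, transform, missing="?"):
    """Sketch of the listed preprocessing steps on a list of dict rows (values as read from CSV).

    Assumes every retained covariate is numeric; the qualitative-to-quantitative
    conversion (step 4) is omitted because it depends on the dataset.
    """
    covariates = [c for c in rows[0] if c != response]
    # Step 1: drop any covariate column that contains a missing value.
    covariates = [c for c in covariates if all(r[c] not in (missing, "", None) for r in rows)]
    # Step 2: drop observations whose response equals 0.
    rows = [r for r in rows if float(r[response]) != 0.0]
    # Step 3: transform the response with the caller-supplied function.
    y = [transform(float(r[response])) for r in rows]
    # Step 5: standardize each covariate (constant columns are dropped to avoid dividing by 0).
    columns, names = [], []
    for c in covariates:
        values = [float(r[c]) for r in rows]
        sd = pstdev(values)
        if sd > 0:
            m = mean(values)
            columns.append([(v - m) / sd for v in values])
            names.append(c)
    X = [list(row) for row in zip(*columns)]
    return y, X, names


# Toy rows with hypothetical column names; "b" has a missing value and is dropped.
rows = [{"y": "2", "a": "1", "b": "?"}, {"y": "0", "a": "3", "b": "4"}, {"y": "4", "a": "5", "b": "6"}]
y, X, names = preprocess(rows, "y", transform=math.log)  # math.log is a placeholder transform
print(names, X)  # ['a'] [[-1.0], [1.0]]
```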

In this section, we compare QRL, QRAL, BLQR, and BALQR with our VBSSLQR method on the real dataset; all are penalized quantile regression methods. At each quantile $\tau$, we evaluated each approach on the test set by its root mean squared error (RMSE) and the number of selected active variables, where $\mathrm{RMSE} = \{m^{-1}\sum_{i=1}^{m}(y_i - \hat{y}_i(\tau))^2\}^{1/2}$, with $m$ the number of test observations and $\hat{y}_i(\tau)$ the fitted value of the response at quantile $\tau$. The results are listed in Table 5.

Table 5.

RMSE of fitting test dataset and the number of active variables for the various methods for the real dataset.

In Table 5, the best results at each quantile $\tau$ are shown in bold. Our method achieved the best (or tied-best) RMSE at all quantiles while selecting a suitable number of active variables, which makes it very competitive with the other methods. The BLQR and BALQR approaches identified only the intercept and could not identify the variables that actually affect the response quantiles. The QRL and QRAL methods were prone to overfitting because they selected too many active variables. In addition, our method was significantly more computationally efficient than the other approaches on the real data. Although BLQR, QRL, and our proposed method had the same RMSE at one quantile level, BLQR underfitted and QRL overfitted there. Thus, we believe there is sufficient evidence that our method is very competitive with the other approaches.

Similarly to [30], we list the active variables selected by our method at each quantile in Table 6.

Table 6.

Crime data analysis: variable selection.

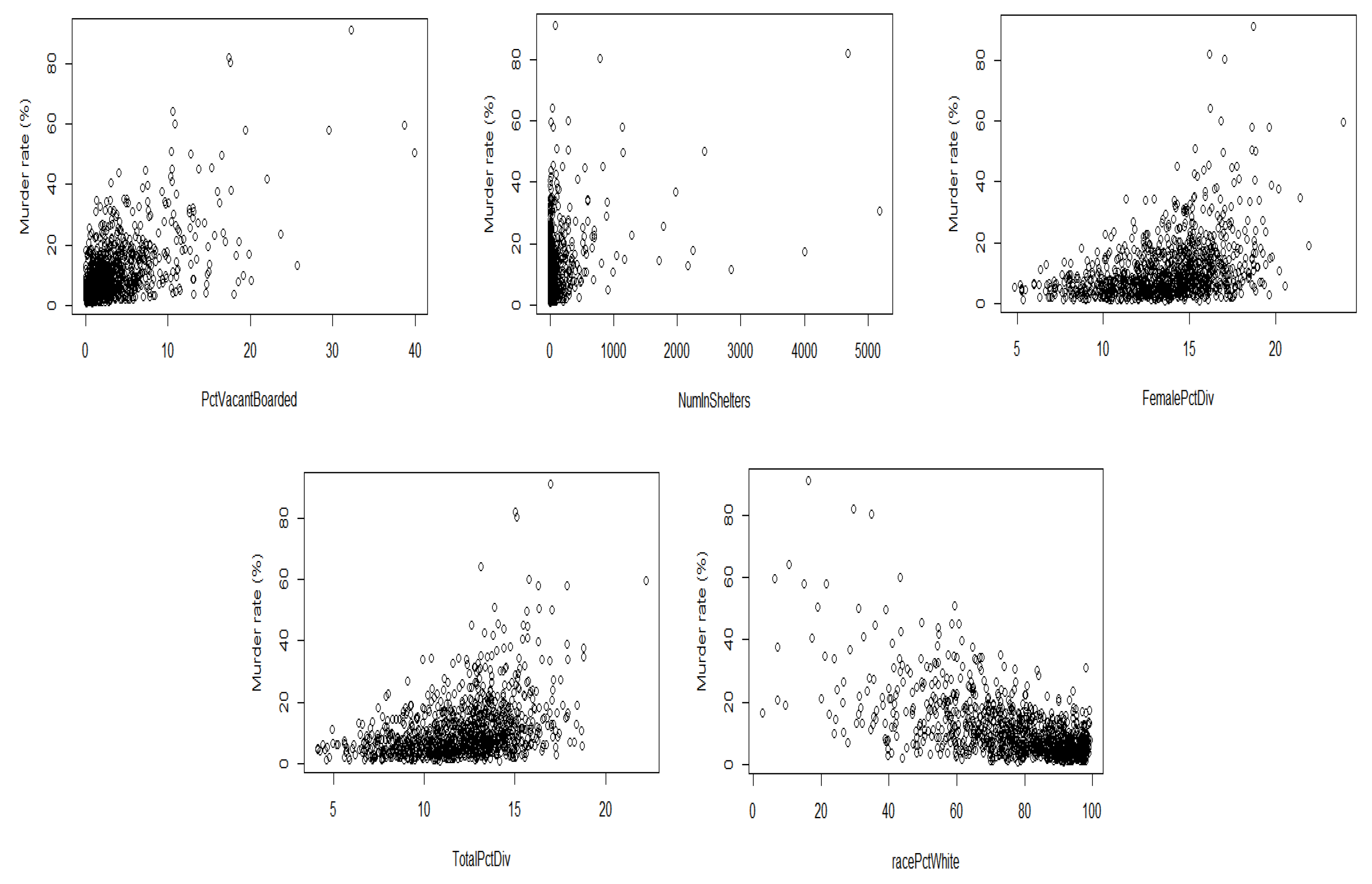

As Table 6 shows, our method selected only a small number of predictors at each quantile level. Notably, only the variable “NumInShelters” affected the response at all quantiles; the variables “FemalePctDiv” and “TotalPctDiv” affected the response at ; the variable “PctVacantBoarded” affected the response at ; the variable “racePctWhite” affected the response at ; and the other variables affected only a few quantiles of the response. The five most commonly selected variables were therefore:

- PctVacantBoarded: percentage of households that are vacant and boarded up to prevent vandalism.

- NumInShelters: number of people in homeless shelters.

- FemalePctDiv: percentage of females who are divorced.

- TotalPctDiv: percentage of people who are divorced.

- racePctWhite: percentage of people of white race.
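Identifying variables that are selected at every quantile level, as was done for “NumInShelters”, amounts to counting selections across the per-quantile results. The sketch below assumes the selections are available as a mapping from each quantile level to the set of selected variable names; the toy input uses hypothetical names (x1, x2, x3) and quantile levels, not the results in Table 6.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def selection_summary(selected_by_tau: Dict[float, Iterable[str]]) -> Tuple[Counter, List[str]]:
    """Count how often each variable is selected across quantile levels and
    return those selected at every level (sorted by name)."""
    counts: Counter = Counter()
    for names in selected_by_tau.values():
        counts.update(set(names))  # count each variable at most once per quantile level
    n_levels = len(selected_by_tau)
    at_all_levels = sorted(v for v, c in counts.items() if c == n_levels)
    return counts, at_all_levels


# Toy input (hypothetical names and quantile levels, for illustration only).
counts, common = selection_summary({0.25: {"x1", "x2"}, 0.5: {"x1"}, 0.75: {"x1", "x3"}})
```

Here `common` is `["x1"]`, the analogue of a variable that affects the response at all quantile levels, while `counts` records how many levels each of the other variables was selected at.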

To better understand these five common variables, we plot their relationships with the response in Figure 1.

Figure 1 depicts the relationship between the five common variables and the murder rate. Except for NumInShelters, whose association with the murder rate is not obvious, the variables FemalePctDiv, TotalPctDiv, racePctWhite, and PctVacantBoarded are clearly associated with the murder rate: racePctWhite is negatively correlated with the murder rate, while FemalePctDiv, TotalPctDiv, and PctVacantBoarded are positively correlated with it. These results agree with practical intuition and are broadly consistent with those of [30]; moreover, our method selected the important variables more comprehensively at the same quantile level. Therefore, the percentage of females who are divorced, the percentage of people who are divorced, the percentage of households that are vacant and boarded up to prevent vandalism, and the percentage of the population that is white all affect the murder rate.

Figure 1.

Correlation between murder rate and common variables.

5. Conclusions

In this paper, we proposed a variational Bayesian spike-and-slab lasso quantile regression (VBSSLQR) method for variable selection and estimation. The method places a spike-and-slab lasso prior on each regression coefficient $\beta_j$, thereby penalizing each coefficient individually. The spike component is a Laplace distribution with small variance, while the slab component is a Laplace distribution with large variance [26]. Penalizing each coefficient individually gives the method strong variable selection ability, but it also makes posterior computation slow, especially when a spike-and-slab prior is combined with quantile regression (whose algorithms are inherently less efficient than those for ordinary mean regression). To overcome this inefficiency, which stems from both the quantile regression model and the spike-and-slab lasso prior, and to make the algorithm practical, we introduced a variational Bayesian method to approximate the posterior distribution of each parameter. The simulation studies and real data analysis illustrated that VBSSLQR performed effectively and was robustly competitive with other approaches (Bayesian or non-Bayesian, quantile or nonquantile regression), especially for high-dimensional data. In future work, it would be worthwhile to improve the interpretability and computational efficiency of ultra-high-dimensional quantile regression by combining VB with dimension reduction techniques.
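The spike-and-slab lasso prior summarized above can be illustrated with a short sketch following the continuous formulation of [26]: $\pi(\beta\mid\theta)=(1-\theta)\,\psi(\beta\mid\lambda_0)+\theta\,\psi(\beta\mid\lambda_1)$ with Laplace density $\psi(\beta\mid\lambda)=\tfrac{\lambda}{2}e^{-\lambda|\beta|}$ (variance $2/\lambda^2$), so the spike uses a large rate $\lambda_0$ and the slab a small rate $\lambda_1$. The parameter names `theta`, `lam0`, `lam1` and the functions are our own illustration; this is not the VBSSLQR update, which works with the variational distributions of Appendix A.

```python
import math


def laplace_pdf(beta: float, lam: float) -> float:
    """Laplace density with rate lam (variance 2 / lam**2), centred at zero."""
    return 0.5 * lam * math.exp(-lam * abs(beta))


def ssl_prior_pdf(beta: float, theta: float, lam0: float, lam1: float) -> float:
    """Spike-and-slab lasso prior: (1 - theta) * spike(lam0) + theta * slab(lam1), lam0 > lam1."""
    return (1.0 - theta) * laplace_pdf(beta, lam0) + theta * laplace_pdf(beta, lam1)


def inclusion_prob(beta: float, theta: float, lam0: float, lam1: float) -> float:
    """Conditional probability that beta comes from the slab, P(gamma = 1 | beta, theta).

    Computed from the slab-versus-spike log-odds so that it stays finite for large |beta|.
    """
    log_slab = math.log(theta * lam1) - lam1 * abs(beta)
    log_spike = math.log((1.0 - theta) * lam0) - lam0 * abs(beta)
    z = log_slab - log_spike
    if z >= 0:  # numerically stable logistic function
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)
```

With `theta=0.5`, `lam0=20`, `lam1=1`, a coefficient at zero is assigned to the slab with probability 1/21, whereas a coefficient at 1.0 is almost surely attributed to the slab; this adaptive, coefficient-specific shrinkage is what gives the prior its selection ability.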

Author Contributions

Conceptualization, D.D. and A.T.; methodology, A.T. and D.D.; software, D.D. and J.Y.; validation, A.T., D.D. and J.Y.; formal analysis, D.D. and A.T.; investigation, D.D., J.Y. and A.T.; original draft preparation, A.T. and D.D.; visualization, D.D. and J.Y.; supervision and funding acquisition, D.D., A.T. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 11961079), Yunnan University Multidisciplinary Team Construction Projects in 2021, and Yunnan University Quality Improvement Plan for Graduate Course Textbook Construction (CZ22622202).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The research data are available on the website http://archive.ics.uci.edu/ml/datasets/communities+and+crime, accessed on 1 May 2023.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Deduction
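For orientation, the generic coordinate-ascent form of a mean-field variational update is recalled below. We assume, without being able to confirm it from the text here, that Formula (9) takes this form; the symbols $\vartheta_k$, $q_k$, and $K$ are our notation. Each factor is updated in turn with the others held fixed, and no such update decreases the evidence lower bound (ELBO).

```latex
q(\boldsymbol{\vartheta}) = \prod_{k=1}^{K} q_k(\vartheta_k), \qquad
\log q_k^{*}(\vartheta_k) = \mathbb{E}_{q_{-k}}\!\left[\log p(\mathbf{y}, \boldsymbol{\vartheta})\right] + \mathrm{const},
\qquad
\mathrm{ELBO}(q) = \mathbb{E}_{q}\!\left[\log p(\mathbf{y}, \boldsymbol{\vartheta})\right]
 - \mathbb{E}_{q}\!\left[\log q(\boldsymbol{\vartheta})\right] \le \log p(\mathbf{y}).
```

Here $\mathbb{E}_{q_{-k}}$ denotes the expectation over all factors except $q_k$; recognizing the right-hand side as the log-kernel of a known family (normal, gamma, Bernoulli, generalized inverse Gaussian, and so on) yields the closed-form variational distributions.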

Based on the mean-field variational Formula (9) and the joint density (8), we derive the following variational distributions.

where . Therefore, given , for and 1, then

If then

If then

Therefore , where

for and 2. Similarly, if then

If then

Therefore

Similarly,

where and are the same as above, for and 0, from then

Integrating out , we obtain

Similarly,

Letting

then

Therefore , for , where and are the same as above, for and 2.

Similarly,

where and are hyperparameters.

Therefore where a and b are hyperparameters.

Therefore , for , where

with and being constants, and is a diagonal matrix whose jth diagonal entry is .

Therefore , where , are hyperparameters and

Appendix B. Expectation

The expectations of several functions of the parameters with respect to the variational posteriors are as follows:

Since , therefore

Since , therefore

where and ,

Since , therefore

where and ,

Since , therefore

Since , therefore

Since , for and 1, therefore

Since , therefore

Since , therefore

Appendix C. Efficiency Comparison between Bayesian Quantile Regression Methods

Table A1.

Mean execution times of the various Bayesian quantile regression methods in the simulation.


| Quantile | Error distribution | BLQR, i.i.d. errors (s) | BALQR, i.i.d. errors (s) | VBSSLQR, i.i.d. errors (s) | BLQR, heterogeneous errors (s) | BALQR, heterogeneous errors (s) | VBSSLQR, heterogeneous errors (s) |
|---|---|---|---|---|---|---|---|
| | normal | 146.45 | 144.56 | | 146.48 | 174.88 | |
| | Laplace | 145.26 | 144.62 | | 145.86 | 173.44 | |
| | normal mixture | 144.52 | 145.27 | | 143.48 | 147.63 | |
| | Laplace mixture | 145.86 | 146.28 | | 145.82 | 171.69 | |
| | Cauchy | 145.24 | 144.63 | | 145.89 | 174.68 | |
| | normal | 144.62 | 144.52 | | 144.67 | 174.39 | |
| | Laplace | 144.54 | 145.26 | | 144.57 | 172.84 | |
| | normal mixture | 144.62 | 145.26 | | 144.63 | 173.62 | |
| | Laplace mixture | 147.68 | 145.83 | | 147.62 | 172.25 | |
| | Cauchy | 145.21 | 144.53 | | 145.86 | 173.42 | |
| | normal | 147.29 | 144.37 | | 146.48 | 173.46 | |
| | Laplace | 144.62 | 145.27 | | 144.64 | 174.61 | |
| | normal mixture | 145.84 | 145.24 | | 145.27 | 172.84 | |
| | Laplace mixture | 147.57 | 147.98 | | 147.68 | 173.42 | |
| | Cauchy | 145.87 | 142.86 | 16.87 | 146.28 | 173.45 | |

Bold indicates the best result in each scenario; “s” denotes seconds.

Appendix D. Simulation Studies for Cases with Smaller Effect Sizes

Table A2.

The performance of our proposed method for cases with smaller effect sizes.


| Quantile | Error distribution | MMAD (sd), i.i.d. errors | TP, i.i.d. errors | FP, i.i.d. errors | MMAD (sd), heterogeneous errors | TP, heterogeneous errors | FP, heterogeneous errors |
|---|---|---|---|---|---|---|---|
| | normal | 0.21 (0.05) | 10.00 | 0.13 | 0.33 (0.10) | 10.00 | 0.25 |
| | Laplace | 0.25 (0.08) | 10.00 | 0.15 | 0.42 (0.34) | 9.56 | 0.37 |
| | normal mixture | 0.23 (0.06) | 10.00 | 0.07 | 0.36 (0.16) | 9.93 | 0.23 |
| | Laplace mixture | 0.29 (0.10) | 9.99 | 0.16 | 0.98 (0.50) | 8.32 | 0.38 |
| | Cauchy | 0.12 (0.55) | 9.43 | 0.00 | 0.16 (0.76) | 8.73 | 0.01 |
| | normal | 0.20 (0.06) | 10.00 | 0.16 | 0.32 (0.09) | 10.00 | 0.29 |
| | Laplace | 0.20 (0.06) | 10.00 | 0.06 | 0.32 (0.11) | 9.99 | 0.20 |
| | normal mixture | 0.22 (0.06) | 10.00 | 0.10 | 0.34 (0.10) | 10.00 | 0.21 |
| | Laplace mixture | 0.22 (0.07) | 10.00 | 0.06 | 0.33 (0.26) | 9.76 | 0.17 |
| | Cauchy | 0.07 (0.50) | 9.55 | 0.00 | 0.10 (0.69) | 9.00 | 0.00 |
| | normal | 0.21 (0.06) | 10.00 | 0.13 | 0.34 (0.10) | 10.00 | 0.37 |
| | Laplace | 0.25 (0.08) | 10.00 | 0.15 | 0.40 (0.18) | 9.91 | 0.38 |
| | normal mixture | 0.23 (0.06) | 10.00 | 0.16 | 0.36 (0.12) | 9.99 | 0.31 |
| | Laplace mixture | 0.27 (0.09) | 10.00 | 0.17 | 0.43 (0.30) | 9.66 | 0.33 |
| | Cauchy | 0.11 (0.61) | 9.38 | 0.00 | 0.15 (0.67) | 9.13 | 0.00 |
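The metrics reported in Table A2 are defined earlier in the paper; the sketch below assumes the definitions common in this literature: $\mathrm{MAD}=n^{-1}\sum_i|x_i^\top\hat\beta-x_i^\top\beta|$ for one simulated dataset, MMAD is the median MAD over replications (with the standard deviation in parentheses), TP is the number of truly nonzero coefficients that are selected, and FP is the number of truly zero coefficients that are selected, both averaged over replications. The function names are hypothetical.

```python
import statistics
from typing import Dict, List, Sequence, Tuple


def mad(X: Sequence[Sequence[float]], beta_hat: Sequence[float], beta_true: Sequence[float]) -> float:
    """Mean absolute deviation between estimated and true linear predictors over the rows of X."""
    diffs = [abs(sum(x * (bh - bt) for x, bh, bt in zip(row, beta_hat, beta_true))) for row in X]
    return sum(diffs) / len(diffs)


def tp_fp(selected: Sequence[bool], beta_true: Sequence[float]) -> Tuple[int, int]:
    """True positives: selected among truly nonzero; false positives: selected among truly zero."""
    tp = sum(1 for s, b in zip(selected, beta_true) if s and b != 0)
    fp = sum(1 for s, b in zip(selected, beta_true) if s and b == 0)
    return tp, fp


def summarize(replications: List[Tuple[float, int, int]]) -> Dict[str, float]:
    """replications: one (MAD, TP, FP) tuple per simulated dataset."""
    mads = [r[0] for r in replications]
    return {
        "MMAD": statistics.median(mads),
        "sd": statistics.stdev(mads) if len(mads) > 1 else 0.0,
        "TP": statistics.mean(r[1] for r in replications),
        "FP": statistics.mean(r[2] for r in replications),
    }
```

Under these definitions, a TP of 10.00 means that every truly active coefficient was selected in every replication, and an FP close to zero means that inactive coefficients were rarely selected.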

References

- Koenker, R.; Bassett, G. Regression quantiles. Econometrica 1978, 46, 33–50.

- Buchinsky, M. Changes in the United States wage structure 1963–1987: Application of quantile regression. Econometrica 1994, 62, 405–458.

- Thisted, R.; Osborne, M.; Portnoy, S.; Koenker, R. The Gaussian hare and the Laplacian tortoise: Computability of squared-error versus absolute-error estimators (comments and rejoinders). Stat. Sci. 1997, 12, 296–300.

- Koenker, R.; Hallock, K. Quantile regression. J. Econ. Perspect. 2001, 15, 143–156.

- Yu, K.; Moyeed, R. Bayesian quantile regression. Stat. Probab. Lett. 2001, 54, 437–447.

- Taddy, M.A.; Kottas, A. A Bayesian nonparametric approach to inference for quantile regression. J. Bus. Econ. Stat. 2010, 28, 357–369.

- Hu, Y.; Gramacy, R.B.; Lian, H. Bayesian quantile regression for single-index models. Stat. Comput. 2013, 23, 437–454.

- Lee, E.R.; Noh, H.; Park, B.U. Model selection via Bayesian information criterion for quantile regression models. J. Am. Stat. Assoc. 2014, 109, 216–229.

- Frank, I.; Friedman, J. A statistical view of some chemometrics regression tools. Technometrics 1993, 35, 109–135.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B Stat. Methodol. 2005, 67, 301–320.

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

- Koenker, R. Quantile regression for longitudinal data. J. Multivar. Anal. 2004, 91, 74–89.

- Wu, Y.; Liu, Y. Variable selection in quantile regression. Stat. Sin. 2009, 19, 801–817.

- Park, T.; Casella, G. The Bayesian lasso. J. Am. Stat. Assoc. 2008, 103, 681–686.

- Leng, C.; Tran, M.N.; Nott, D. Bayesian adaptive lasso. Ann. Inst. Stat. Math. 2014, 66, 221–244.

- Li, Q.; Xi, R.; Lin, N. Bayesian regularized quantile regression. Bayesian Anal. 2010, 5, 533–556.

- Alhamzawi, R.; Yu, K.; Benoit, D.F. Bayesian adaptive lasso quantile regression. Stat. Model. 2012, 12, 279–297.

- Ishwaran, H.; Rao, J. Spike and slab variable selection: Frequentist and Bayesian strategies. Ann. Stat. 2005, 33, 730–773.

- Ray, K.; Szabo, B. Variational Bayes for high-dimensional linear regression with sparse priors. J. Am. Stat. Assoc. 2022, 117, 1270–1281.

- Yi, J.; Tang, N. Variational Bayesian inference in high-dimensional linear mixed models. Mathematics 2022, 10, 463.

- Xi, R.; Li, Y.; Hu, Y. Bayesian quantile regression based on the empirical likelihood with spike and slab priors. Bayesian Anal. 2016, 11, 821–855.

- Koenker, R.; Machado, J. Goodness of fit and related inference processes for quantile regression. J. Am. Stat. Assoc. 1999, 94, 1296–1310.

- Tsionas, E. Bayesian quantile inference. J. Stat. Comput. Simul. 2003, 73, 659–674.

- Rockova, V. Bayesian estimation of sparse signals with a continuous spike-and-slab prior. Ann. Stat. 2018, 46, 401–437.

- Alhamzawi, R.; Ali, H.T.M. The Bayesian adaptive lasso regression. Math. Biosci. 2018, 303, 75–82.

- Parisi, G.; Shankar, R. Statistical field theory. Phys. Today 1988, 41, 110.

- Beal, M.J. Variational Algorithms for Approximate Bayesian Inference. Ph.D. Thesis, University of London, London, UK, 2003.

- Kuruwita, C.N. Variable selection in the single-index quantile regression model with high-dimensional covariates. Commun. Stat. Simul. Comput. 2021, 52, 1120–1132.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).