Abstract

The quality assessment of training courses is of utmost importance in the medical education field to improve the quality of the training. This work proposes a hybrid multicriteria decision-making approach based on two methodologies, a Likert scale (LS) and the analytic hierarchy process (AHP), for the quality assessment of medical education programs. On one hand, the qualitative LS method was adopted to estimate the degree of consensus on specific topics; on the other hand, the quantitative AHP technique was employed to prioritize parameters involved in complex decision-making problems. The approach was validated in a real scenario for evaluating healthcare training activities carried out at the Centre of Biotechnology of the National Hospital A.O.R.N. “A. Cardarelli” of Naples (Italy). The rational combination of the two methodologies proved to be a promising decision-making tool for decision makers to identify those aspects of a medical education program characterized by a lower user satisfaction degree (revealed by the LS) and a higher priority degree (revealed by the AHP), potentially suggesting strategies to increase the quality of the service provided and to reduce the waste of resources. The results show how this hybrid approach can provide decision makers with helpful information to select the most important characteristics of the delivered education program and to possibly improve the weakest ones, thus enhancing the whole quality of the training courses.

Keywords:

medical education; analytic hierarchy process; Likert scale; multicriteria decision making

MSC:

90B50; 92C50; 97M60

1. Introduction

In recent decades, a high level of theoretical, practical and communication knowledge has come to be expected in the healthcare field in all the major countries of the world. Accordingly, training courses are provided for each specialist medical area and for all healthcare professions. Moreover, service quality and cost saving are becoming increasingly important in health environments as well. The concept of quality is difficult to define precisely, and the difficulty increases for health services. The literature addresses several features of quality, which are intercorrelated and can be treated as converging to the definition of a quality triangle (shown in Figure 1).

Figure 1.

Triangle of quality (the dimension concept will be defined in the following).

In essence, “quality” indicates the degree of correspondence between the customer’s wishes and their fulfillment by the product or service offered. To assess this correspondence, an evaluation tool is necessary.

The aim of educational evaluation concerns the analysis, the interpretation and the judgment of all the main characteristics of a training course for both direct recipients, i.e., the learners who attend the courses, and the educators, lecturers, teachers and organizers. Indeed, the course assessment aims to measure and monitor those key variables representing the efficiency, the effectiveness and the overall quality of education programs.

The literature [1,2] distinguishes the target areas of training evaluation in terms of the design and content of the training as well as its effects on, and results for, the improvement of the organization and of the learner. A cause–effect relationship can be identified among the target areas, in which the learner’s acceptance could affect the organizational work.

The popularity rating can be considered a particular method of a user satisfaction survey to assess the internal efficiency of the intervention, the quality of training and the effectiveness of the teaching/learning path. The approval correlates with the individual perception of the experience (perceived quality) and, therefore, relies on qualitative methods and tools. Popularity can be monitored during the evaluation process or after the conclusion of the course. It should be noted that the above-mentioned assessment provides information at a low cost, and it is a useful key for more complex assessments. It is the “oldest” evaluation type, as it coincides with the birth of the education and training systems. Indeed, a very important variable in the evaluation of training effectiveness is the goals–results match of the entire training course or parts of it (modules, units, etc.) [3]. This is closely linked to the type of skills learned: basic, cognitive, professional, technical, instrumental, etc. Learning objects and training course appending affect the choice of methods and tools to use, mainly concerning the actual working modalities, i.e., checking whether the theoretical issues learned during the training courses are transferred to work situations—in other words, how far the skills, abilities and knowledge are useful to improve job performance—and the assessment of the organizational changes caused by the training process.

As mentioned, researchers are focused on the assessment and analysis of service quality for properly managing and organizing health resources due to the continuous increase in service demand in the medical education and clinical contexts, leading to a continuous update of physicians’ knowledge (also called continuing medical education (CME) [1]).

In this context, this paper, which is the extension of a previous study presented as a conference paper [4], aimed to describe a novel methodology to assess and monitor the quality of the medical training program offered by the Centre of Biotechnology of the National Hospital A.O.R.N. “A. Cardarelli” of Naples. The goal of this study was to propose a hybrid approach to verify whether the needs expressed by the learners correspond to the actual service priorities, i.e., those services needing improvements. In particular, the services’ quality was evaluated through the measurement of customer satisfaction and the prioritization of the interventions which, in turn, were evaluated combining two approaches, namely, a Likert scale (LS) and the analytic hierarchy process (AHP). Both methods have been widely used in the literature to develop and maintain a relevant plan for ongoing improvements in service quality. We adopted and combined these two techniques and show that, despite the fact both the AHP and LSs are widely used and recognized methodologies in several fields, if they are used separately, they can provide misleading conclusions, possibly compromising the decision-making process. Instead, if we combine them, we can provide decision makers with a wise trade-off between the voice of the customer (i.e., the needs expressed by the users, learners) and the voice of the process (i.e., those services that need to be improved). This could suggest better decisions regarding the prioritization of the improvement actions and a better organization of resources.

As far as the AHP is concerned, it is used across various fields for planning, selecting the best alternatives, allocating resources, resolving conflicts and optimization [5], and it offers an efficient evaluation of training programs [6]. The AHP was developed by Saaty [7] as a multicriteria decision-making method to deal with complex problems with multiple subjective criteria.

The AHP performs pairwise comparisons to derive the relative importance of some predetermined items and assess alternatives to make the best decision. The prioritization mechanism is accomplished by assigning a number from a comparison scale developed by Saaty (Saaty’s scale) [8].

The AHP, as well as other tools based on multi-attribute utility functions such as the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS), the analytic network process (ANP) and the Simple Multi-Attribute Rating Technique (SMART), has been applied in several contexts [9,10,11,12] including the fields of energy renewal [13,14,15], education [9,16,17], urban areas and mobility [18,19,20] and, more recently, health technology assessment [21,22,23,24,25,26,27] and quality improvement in the healthcare sector [11,28,29,30,31]. From the basic AHP principles, many other hybrid approaches for multicriteria decision making have been developed such as the fuzzy AHP [32,33,34,35], aimed at handling imprecise criteria with the use of fuzzy logic [34,36], the Interval Rough AHP–Multi-Attributive Border Approximation Area Comparison (IR-AHP-MABAC) [37], aimed at treating uncertainties in group multicriteria decision-making problems, and other hybrid approaches combining the AHP with other methodologies such as AHP-fuzzy TOPSIS, fuzzy AHP-PROMETHEE or AHP-Simple Additive Weighting [38,39,40]. In particular, the traditional AHP is among the most widely used approaches, and it has proved to be particularly suitable for cases with a limited number of criteria and in healthcare applications [41].

On the other hand, an LS is a psychometric scale widely used in questionnaires, e.g., to evaluate the satisfaction degree of users through the measurement of their opinions [29,42,43]. Questionnaires based on an LS permit a choice on an n-point scale: an even-numbered scale forces participants to express a judgment, while an odd-numbered scale includes a score corresponding to indecision or neutrality. This method is reliable for obtaining measurements of training effectiveness and overall training impact at work [44]. However, evaluations carried out using an LS also have some disadvantages, such as bias in the respondents’ answers and limited reproducibility and validity of the results. Compared to other scales, such as the Saaty scale usually employed in the AHP method, an LS collects the absolute level of agreement/disagreement (generally on a five-point or seven-point scale) with respect to a finite number of items related to a specific object under examination [45]. Although it is a numeric scale, it is highly subjective, and it is used to represent the attitude of a person with respect to an object. Saaty’s scale, in contrast, introduces some objective elements in the evaluation through pairwise comparison, which collects relative measurements of the agreement/disagreement level with respect to an object by comparing it with another object. In this way, the Saaty scale aims to overcome the limitation in the use of absolute scales for psychometric assessment by replacing them with relative measurements by pairwise comparisons [46]. Nevertheless, an LS is helpful to highlight the positive/negative attitude of the user with respect to an item, and it is particularly suited to assessing the absolute satisfaction degree towards some aspects, in our case concerning the training courses.

In order to better analyze a generic training service, Grönroos (1988) [47] proposed the following specific criteria: professionalism and competence, attitude and behavior, accessibility and flexibility, ability to repair an error, reputation and credibility of the operator, reliability and trust. Parasuraman et al. (1985) [48] classified measurable variables in order to standardize the dimensions of perceived quality: tangible aspects, reliability, responsiveness, competence, courtesy, credibility, safety, accessibility, communication, understanding. In this work, dimensions defined based on those proposed by Grönroos (1988) and Parasuraman et al. (1985) were used together with numerical scales, in accordance with Saaty’s works [3,49,50], to quantify and compare the obtained results. This study extends our previous conference contribution [4] by widening the methodological aspects of the work and clarifying how the two proposed techniques (AHP and LS) are combined together. More specifically, an LS was used since it provides a well-known and versatile tool to assess user satisfaction, and, in our study, it was used to represent the degree of satisfaction of the learners with respect to the training courses; meanwhile, the AHP, among other multicriteria decision-making methods, appears to be more often applied to healthcare-related problems, also with some applications to medical education, and, in our study, we used it in combination with the LS to prioritize the improvement actions to enhance the quality of the training courses provided. Thus, in this manuscript, the hybrid and generalizable approach is described in depth from both a theoretical and practical perspective, the proposed novel workflow is presented and each step of the procedure is detailed. 
In addition, further results obtained by applying this hybrid approach to the real scenario are presented and widely discussed, and an extensive interpretation of the achievements is provided and discussed in view of the impact that the generalization of this approach could have on the medical training field and, more generally, in the quality assessment of education programs.

2. Materials and Methods

2.1. Proposed Methodology

For carrying out a customer satisfaction survey, the main steps to be followed are:

- Selection of the analysis dimensions;

- Sample choice;

- Questionnaire development;

- Questionnaire submission;

- Data processing.

The first step was introduced in the previous section. The sample was chosen among people attending the courses; in particular, the questionnaires were administered to a group of 110 students who attended training courses at the Centre of Biotechnology of the National Hospital A.O.R.N. “A. Cardarelli” in the period from September 2013 to February 2014. The other steps are described in the following.

However, the novel contribution of the proposed methodology is that the data processing is based on the combination of the AHP and LS tools and comparison of the obtained results to plan, if necessary, corrective actions.

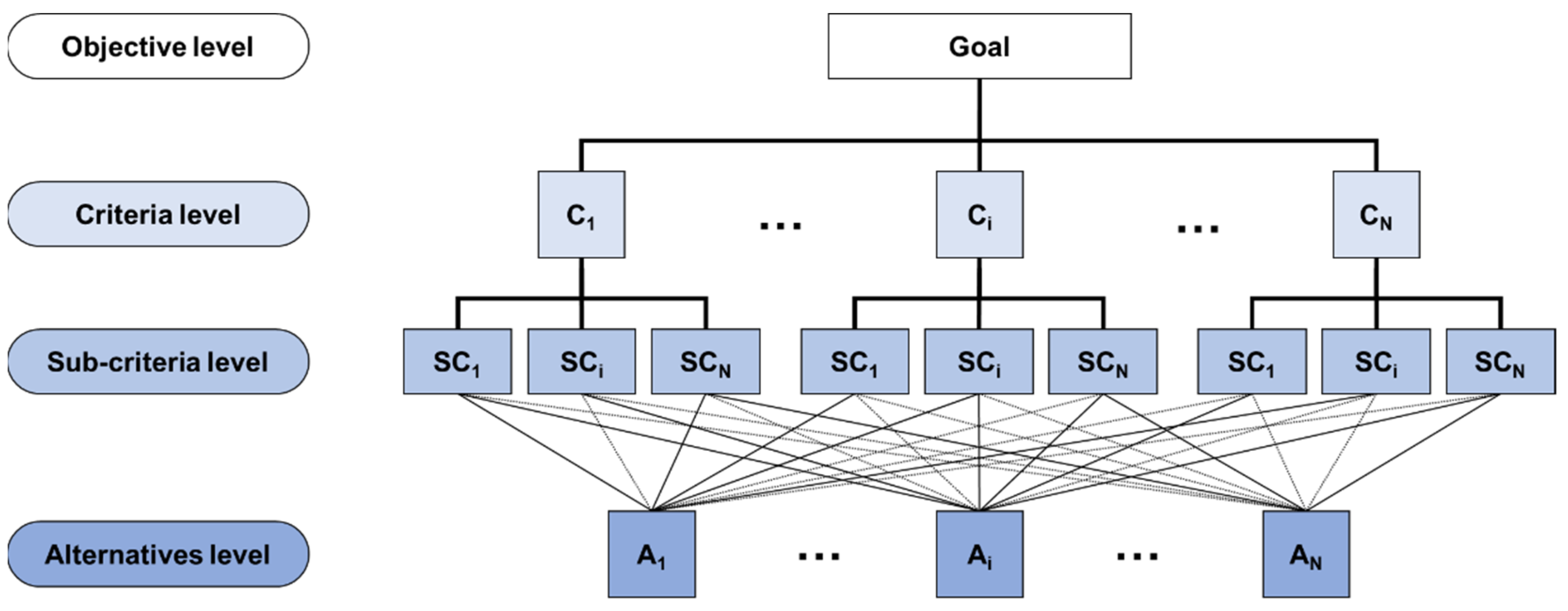

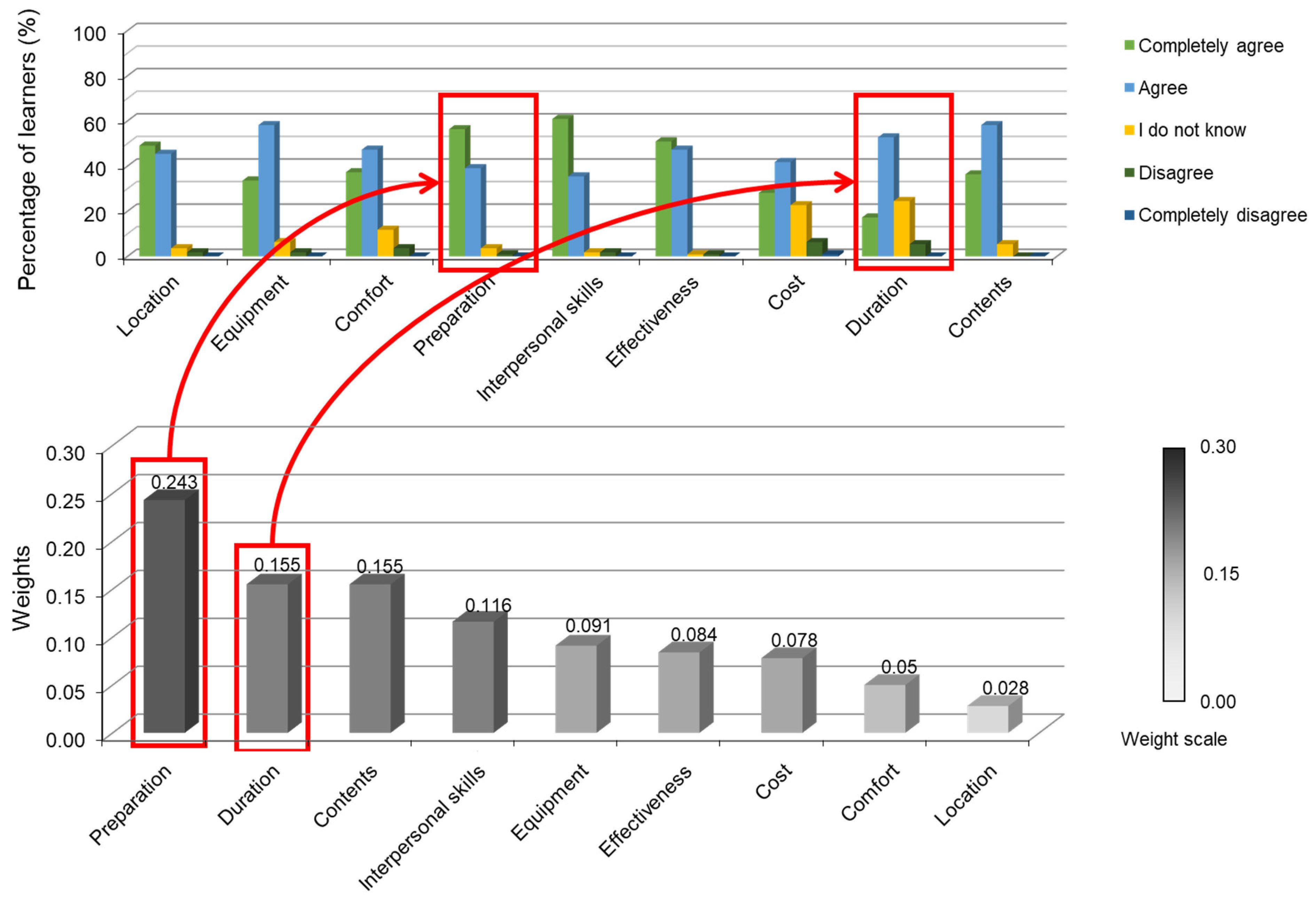

Let us briefly recall that the AHP is a multicriteria decision-making tool. In the AHP, the general objective to be met is defined first, followed by the criteria to achieve this objective, the sub-criteria into which the criteria can be broken down, and so on, down to the lowest level of the hierarchy, i.e., the alternatives that must be prioritized (see Figure 2).

Figure 2.

Multicriteria decision making by means of the analytic hierarchy process (from the top: Goal = objective of the analysis; Ci = i-th criterion; SCi = i-th sub-criterion; Ai = i-th alternative).

In particular, it comprises questionnaires for the comparison of each element with the others and employs the geometric mean to arrive at the final solution, i.e., a priority list. The geometric AHP method has been widely used since it has proved to be more efficient than the arithmetic method. Indeed, from a mathematical point of view, the geometric mean is the only one that maintains the reciprocity of the matrix, i.e., preserves the matrix consistency [51,52,53,54].
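The reciprocity property mentioned above can be checked numerically. The following is a minimal sketch, assuming three hypothetical respondents who compare an item A against an item B; the judgments and all function names are illustrative and are not taken from the study.

```python
import math

def geometric_mean(values):
    """Geometric mean, computed through logarithms."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

def arithmetic_mean(values):
    """Plain arithmetic mean, used here only for comparison."""
    return sum(values) / len(values)

# Hypothetical judgments (Saaty-like values) of A over B from three respondents
a_over_b = [3.0, 5.0, 1.0 / 3.0]
# The same respondents implicitly judge B over A with the reciprocal values
b_over_a = [1.0 / v for v in a_over_b]

# Reciprocity of the aggregated matrix requires entry(A,B) * entry(B,A) == 1
geo_product = geometric_mean(a_over_b) * geometric_mean(b_over_a)
ari_product = arithmetic_mean(a_over_b) * arithmetic_mean(b_over_a)

print("geometric mean product: ", geo_product)
print("arithmetic mean product:", ari_product)
```

With the geometric mean, the product of the two aggregated entries equals 1 up to floating-point error, whereas the arithmetic mean generally breaks this relation.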

On the other hand, the questions were designed using the scores provided for by the LS, which represent the degree of agreement or disagreement (see, for example, Table 1).

Table 1.

Scores and meaning assigned to the items according to a 5-point Likert scale.

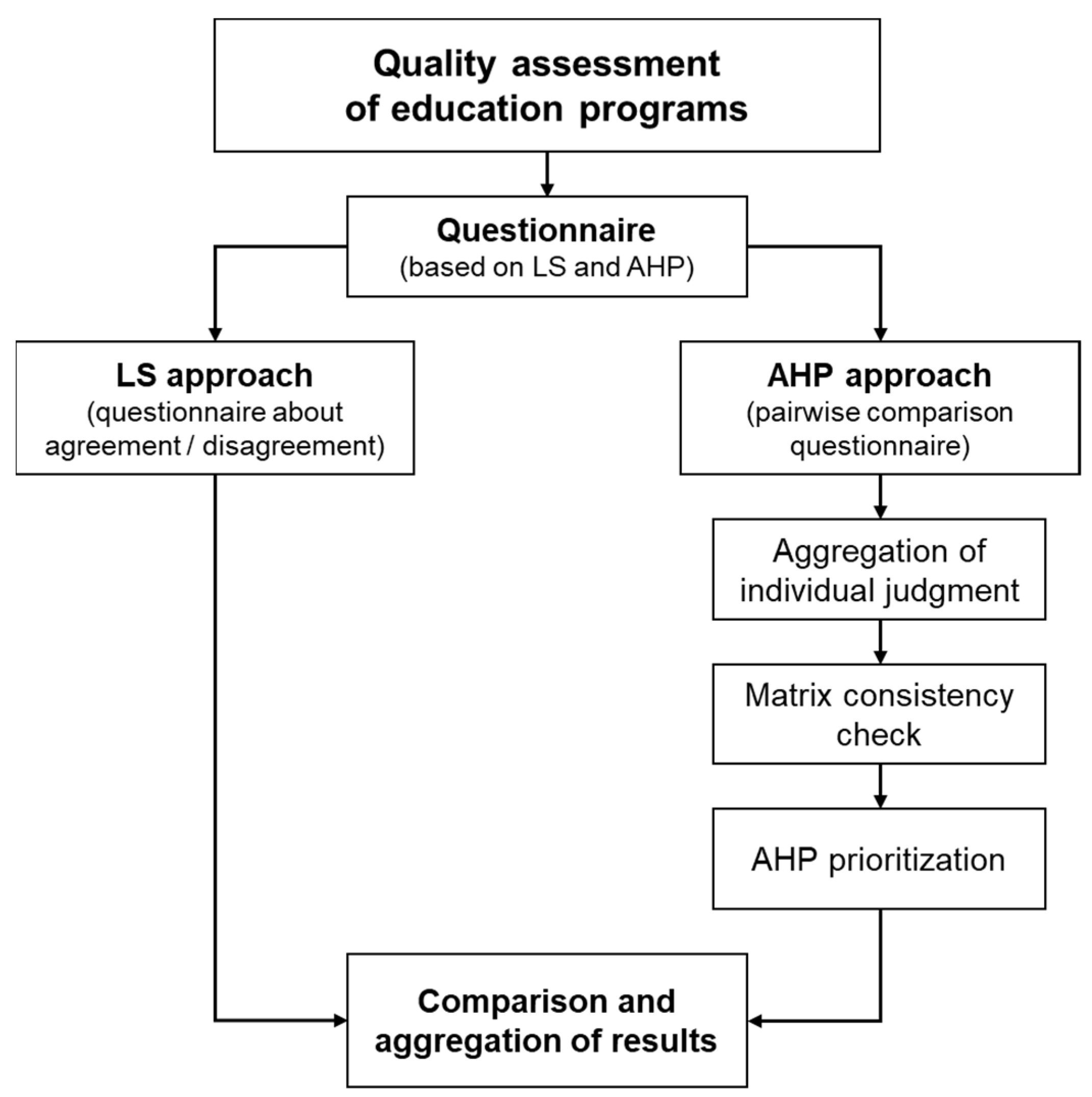

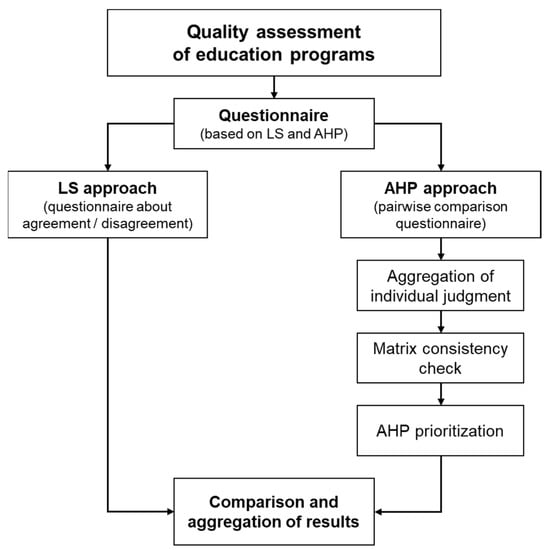

This allowed us to numerically quantify, for each main need, the related satisfaction levels of the learners. The logical flow chart of the proposed methodological approach is reported in Figure 3.

Figure 3.

Proposed methodological approach for the quality assessment of education programs.

As shown in Figure 3, the perceived quality was analyzed by means of questionnaires; the items defined in the questionnaires are related to each other.

2.2. Application of the Approach to a Case Study: AHP Approach

In accordance with the AHP model, firstly, the survey target was defined; the goal was to assess and analyze the service quality of the training courses provided by the Centre of Biotechnology of the National Hospital A.O.R.N. “A. Cardarelli” of Naples. Then, according to the dimensions identified by Grönroos (1988) [47] and Parasuraman et al. (1985) [48], three main dimensions of the service, representative of the training, and three corresponding sub-dimensions, which together make up the so-called dominance hierarchy, were identified, as reported in Table 2.

Table 2.

Definition of the hierarchy of dominance according to the AHP.

In particular, structure, teacher and organization were chosen as the main dimensions (criteria). The following sub-dimensions (sub-criteria) are associated with the “structure” dimension:

- Location: the strategic location of the building with respect to the hospital structure and to the transport facilities nearby;

- Equipment: presence and suitability of the equipment provided with the training courses;

- Comfort: characteristics making the environment pleasant and comfortable.

The following sub-dimensions (sub-criteria) are associated with the “teacher” dimension:

- Preparation: adequacy of the level of training and preparation of the teacher;

- Interpersonal skills: ability to relate to learners by interpreting their requests and providing clear and comprehensive answers;

- Effectiveness: ability to deal with topics aimed at achieving the objectives of the course.

Finally, “organization” is the criterion that includes the general aspects that characterize the entire training process, which can be better defined through the following sub-criteria:

- Cost: costs sustained for the course;

- Duration: number of hours of the course;

- Content: topics and subjects of the course.

2.3. Planning of the LS Methodology

Before developing LS questionnaires, some criteria have to be followed for correctly establishing the items, as shown in the following list:

- Each item must be formulated in such a way that people with opposite attitudes give different responses;

- It is often helpful to present statements in an impersonal form;

- Statements must be concise and formulated with a simple language;

- Double negation sentences must be avoided;

- The items must be formulated half with a favorable attitude to the object and half with an unfavorable attitude.

Based on the above-listed criteria, a questionnaire composed of two parts was realized [4].
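When half of the items are worded unfavorably, their scores are commonly reversed before aggregation so that a higher value always indicates a more favorable attitude. The sketch below illustrates this common practice on a 5-point scale; it is an assumption added for illustration (the text does not state how unfavorable items were scored), and the item names are hypothetical.

```python
def reverse_score(score, points=5):
    """Mirror a Likert score on a scale from 1 to `points` (e.g. 1 <-> 5, 2 <-> 4)."""
    if not 1 <= score <= points:
        raise ValueError(f"score must be between 1 and {points}")
    return points + 1 - score

def align_scores(responses, unfavorable_items, points=5):
    """Return responses in which unfavorably worded items are reverse-scored."""
    return {
        item: reverse_score(score, points) if item in unfavorable_items else score
        for item, score in responses.items()
    }

# Hypothetical answers of one learner; "q2" is assumed to be worded unfavorably
raw = {"q1": 4, "q2": 2, "q3": 5}
aligned = align_scores(raw, unfavorable_items={"q2"})
print(aligned)
```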

2.4. Data Processing Using AHP

As mentioned, the AHP method involves the calculation of matrices of pairwise comparisons. In order to determine the matrices’ coefficients, a 9-point semantic Saaty scale [3] was employed to quantify qualitative judgments.

Based on the 9-point LS questionnaire, a comparison table was obtained for each pair of parent criteria (teacher–organization; teacher–structure; organization–structure).

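A weighted geometric mean method (WGMM) was adopted to aggregate the judgments as in the following equation, where $x_k$ denotes the judgment of the $k$-th of $N$ respondents and $w_k$ its weight:

$$\bar{x} = \prod_{k=1}^{N} x_k^{w_k}, \qquad \sum_{k=1}^{N} w_k = 1 \tag{1}$$

which can be re-formulated by applying a constant weight equal to 1/N as

$$\bar{x} = \left( \prod_{k=1}^{N} x_k \right)^{1/N} \tag{2}$$

then, applying the logarithm, the computation is simplified in a sum:

$$\ln \bar{x} = \frac{1}{N} \sum_{k=1}^{N} \ln x_k \tag{3}$$

Since non-integer values are obtained by applying the geometric mean method, we rounded them to the nearest integer, each corresponding to a specific degree of relative importance on the adopted 9-point semantic Saaty scale. The pairwise comparisons between the parent criteria are shown in Table 3.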

Table 3.

Pairwise comparisons between parent criteria.
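The aggregation and rounding steps described above can be sketched as follows. The learners' answers are hypothetical, and the treatment of aggregated values below 1 (rounding the reciprocal and inverting it again) is an assumption of this sketch, since the text only mentions rounding to the nearest integer.

```python
import math

def aggregate_judgments(judgments):
    """Equal-weight geometric mean, computed as the exponential of the mean logarithm."""
    return math.exp(sum(math.log(j) for j in judgments) / len(judgments))

def to_saaty_scale(value):
    """Snap an aggregated value to 1..9 or to a reciprocal 1/2..1/9.

    Assumption: values below 1 are handled by rounding their reciprocal.
    """
    if value >= 1.0:
        return float(min(9, max(1, round(value))))
    return 1.0 / min(9, max(1, round(1.0 / value)))

# Hypothetical answers of five learners to a single pairwise comparison
answers = [3, 5, 3, 1, 7]
aggregated = aggregate_judgments(answers)
print("aggregated value:", aggregated)
print("Saaty degree:    ", to_saaty_scale(aggregated))
```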

Starting from Table 3 and from the weighted geometric mean values, it was possible to build the pairwise comparison matrix inherent to the three parent criteria, i.e., teacher, organization and structure (see Table 4).

Table 4.

Pairwise comparison matrices for parent criteria.

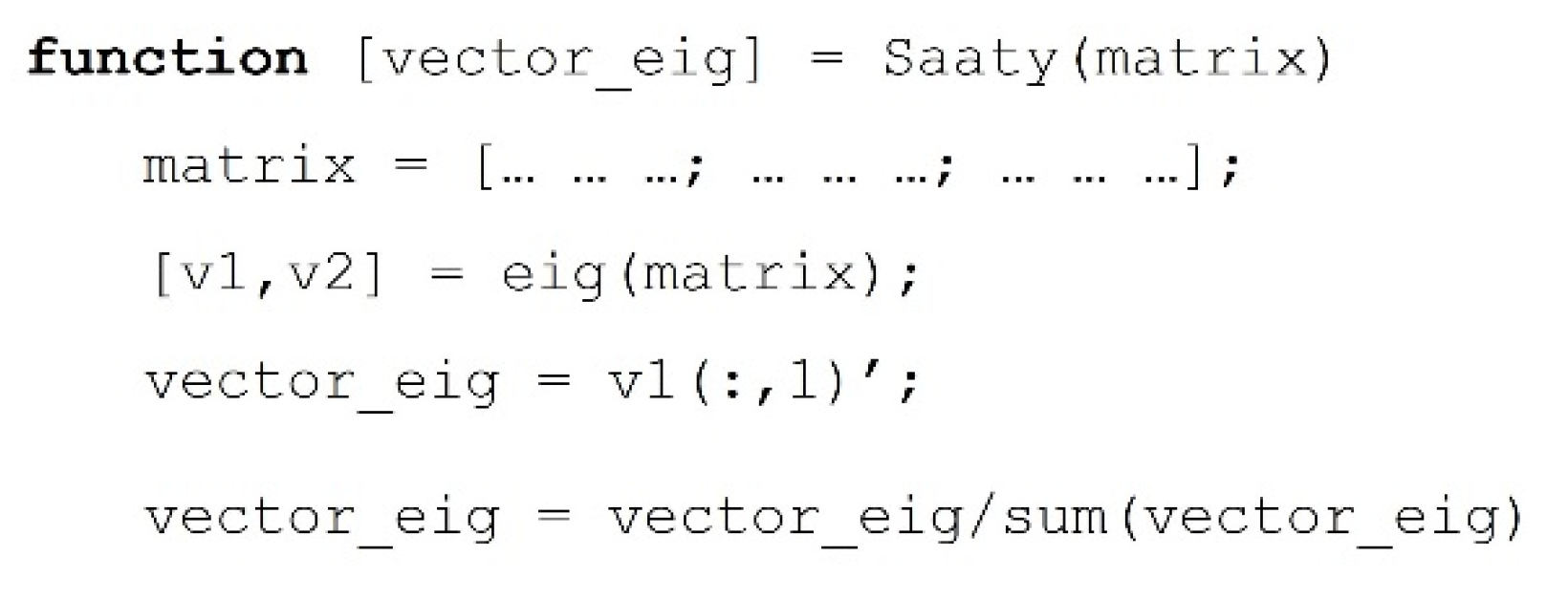

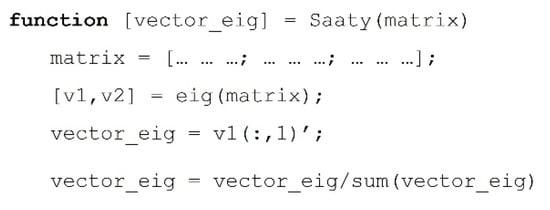

The data contained in the pairwise comparison matrix (Table 4) were used to derive the ranking of the elements of each matrix, i.e., a scale of values that expresses the final choice of priorities. This priority scale was obtained using the eigenvalue method proposed by Saaty [3], which was implemented here through a straightforward algorithm developed in the MatLab environment (Figure 4).

Figure 4.

Proposed MatLab algorithm to obtain the priority vector.
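For readers without access to the MatLab code, the eigenvalue method can be approximated with a few lines of Python. The sketch below is not the authors' algorithm: it uses power iteration as a stand-in for a full eigen-decomposition, and the 3×3 matrix is a hypothetical, perfectly consistent example rather than the matrix of Table 4.

```python
def priority_vector(matrix, iterations=100):
    """Approximate the principal eigenvector (normalized to sum 1) and lambda_max."""
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        # Multiply the matrix by the current weights and renormalize
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [p / total for p in product]
    # Estimate the largest eigenvalue as the mean ratio (A w)_i / w_i
    product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n
    return weights, lambda_max

# Hypothetical reciprocal pairwise comparison matrix (illustrative values only)
example = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
weights, lambda_max = priority_vector(example)
print("priority vector:", [round(w, 3) for w in weights])
print("lambda_max:     ", round(lambda_max, 3))
```

For a perfectly consistent matrix such as this one, the estimated largest eigenvalue coincides with the matrix dimension.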

The same process was then iterated for each sub-criterion to build the corresponding pairwise comparison matrices (the pairwise comparison matrices for the sub-criteria are reported in the Results section together with the priority values). The next step was to calculate each sub-criterion’s global (or overall) weight, by multiplying the sub-criterion priorities by the corresponding parent criterion’s priority.
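The computation of the global weights can be sketched as follows. The parent weights are those reported in the Results section; the local priorities of the sub-criteria are hypothetical placeholders, since the values of Table 7 are not reproduced here.

```python
# Parent criteria weights as reported in the Results section
parent_weights = {"teacher": 0.443, "organization": 0.387, "structure": 0.169}

# Hypothetical local priorities of the sub-criteria (placeholders, each group sums to 1)
local_priorities = {
    "teacher": {"preparation": 0.5, "interpersonal skills": 0.3, "effectiveness": 0.2},
    "organization": {"cost": 0.2, "duration": 0.4, "content": 0.4},
    "structure": {"location": 0.2, "equipment": 0.5, "comfort": 0.3},
}

def global_weights(parents, local):
    """Multiply each sub-criterion's local priority by its parent criterion's weight."""
    return {
        sub: parents[criterion] * priority
        for criterion, subs in local.items()
        for sub, priority in subs.items()
    }

weights = global_weights(parent_weights, local_priorities)
ranking = sorted(weights.items(), key=lambda pair: pair[1], reverse=True)
for name, weight in ranking:
    print(f"{name}: {weight:.3f}")
```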

2.5. Matrix Consistency Check

The next step was to calculate the consistency index (CI) and consistency ratio (CR) for all matrices according to the following Equations (4) and (5):

$$CI = \frac{\lambda_{max} - n}{n - 1} \tag{4}$$

$$CR = \frac{CI}{RI} \tag{5}$$

where $\lambda_{max}$ is the largest eigenvalue, $n$ is the matrix dimension and RI is the Saaty random consistency index, which depends on the matrix dimension, as reported by Saaty [50,55]; in our case, for a matrix size of 3, the RI is equal to 0.52.
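As a worked illustration of the consistency check, the sketch below computes the CI and CR for a 3×3 matrix using the RI value of 0.52 quoted in the text. The value of the largest eigenvalue is hypothetical and does not correspond to any matrix of the study.

```python
RANDOM_INDEX_N3 = 0.52  # Saaty random consistency index for n = 3, as quoted in the text

def consistency_index(lambda_max, n):
    """CI = (lambda_max - n) / (n - 1)."""
    return (lambda_max - n) / (n - 1)

def consistency_ratio(lambda_max, n, random_index):
    """CR = CI / RI."""
    return consistency_index(lambda_max, n) / random_index

# Hypothetical largest eigenvalue of a 3x3 pairwise comparison matrix
lambda_max = 3.05
ci = consistency_index(lambda_max, 3)
cr = consistency_ratio(lambda_max, 3, RANDOM_INDEX_N3)
print(f"CI = {ci:.4f}, CR = {cr:.4f}, acceptable (CR < 0.1): {cr < 0.1}")
```

A perfectly consistent matrix has a largest eigenvalue equal to its dimension, which gives CI = CR = 0.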

2.6. Application of the Approach to a Case Study: Processing LS-Based Questionnaires

Concerning the Likert scales, the first step was to evaluate the percentage of learners who provided a specific response based on their satisfaction with the service quality provided, as shown in Table 5.

Table 5.

Percentages of response for each sub-criterion.

From the obtained data, it was observed that high satisfaction rates were expressed for each item, particularly for the preparation of the teacher and his/her availability. Starting from these percentage values, the percentages of response for each parent criterion were calculated by averaging the values of the three respective sub-criteria, as shown in Table 6.

Table 6.

Percentages of response for each parent criterion.
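The two processing steps (response percentages per sub-criterion, then averaging over the three sub-criteria of a parent criterion) can be sketched as follows. The answers are hypothetical 5-point scores from ten learners and do not reproduce the data of Tables 5 and 6.

```python
from collections import Counter

def response_percentages(scores, scale=(1, 2, 3, 4, 5)):
    """Percentage of respondents who selected each level of the scale."""
    counts = Counter(scores)
    return {level: 100.0 * counts.get(level, 0) / len(scores) for level in scale}

def average_percentages(distributions):
    """Average, level by level, the percentage distributions of several sub-criteria."""
    levels = distributions[0].keys()
    return {
        level: sum(d[level] for d in distributions) / len(distributions)
        for level in levels
    }

# Hypothetical answers for the three sub-criteria of the "teacher" criterion
answers = {
    "preparation": [5, 5, 4, 4, 5, 3, 4, 5, 5, 4],
    "interpersonal skills": [4, 4, 5, 3, 4, 4, 5, 5, 3, 4],
    "effectiveness": [4, 3, 4, 4, 5, 2, 4, 3, 4, 5],
}
per_sub_criterion = {name: response_percentages(s) for name, s in answers.items()}
teacher = average_percentages(list(per_sub_criterion.values()))
print(per_sub_criterion["preparation"])
print(teacher)
```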

3. Results

3.1. Data Processing

The procedure described in the Methods section was applied for all the pairwise comparison matrices.

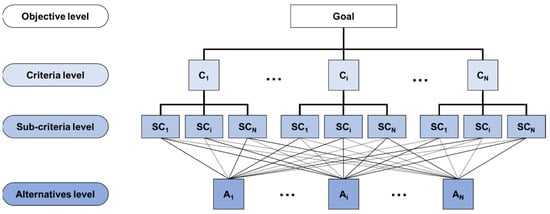

3.2. Calculation of Local and Global Priorities (AHP Method)

Regarding the local priorities, the eigenvalue method provided the following ranking concerning the three parent criteria:

- TEACHER weight: 0.443;

- ORGANIZATION weight: 0.387;

- STRUCTURE weight: 0.169.

Both the matrix and the corresponding weights for all parent criteria (teacher, organization and structure) and sub-criteria are shown in Table 7.

Table 7.

Pairwise comparison matrix and AHP priority weights of both parent criteria and sub-criteria.

After calculating these values, which represent, as mentioned, the global (absolute) weights of the sub-criteria, it was possible to define the “hierarchy of needs” of the users (Table 8).

Table 8.

Learners’ needs in decreasing priority order.

Table 8 shows that the top priority is to have very competent teachers (preparation). High importance is assigned to all three teacher characteristics (preparation, interpersonal skills and effectiveness) as well as to the duration and contents of the courses. Lower importance is instead assigned to the cost of the courses, to the comfort of the rooms and to the location.

3.3. Matrix Consistency

By applying Equations (4) and (5), the following CR values were obtained for each category:

A CR equal to 0 indicates that the judgments are perfectly consistent [56,57,58]. Moreover, according to Saaty (2008) [3], when the CR exceeds 0.1, the set of judgments may be inconsistent and unreliable. Nevertheless, in practical applications and in quality assessment studies involving a large number of participants, CR values slightly higher than 0.1 can be considered acceptable. In our case, except for the matrix related to the parent criterion “teacher”, which shows a very slight degree of inconsistency, all the other matrices of the pairwise comparisons are strongly consistent.

3.4. Sensitivity Analysis

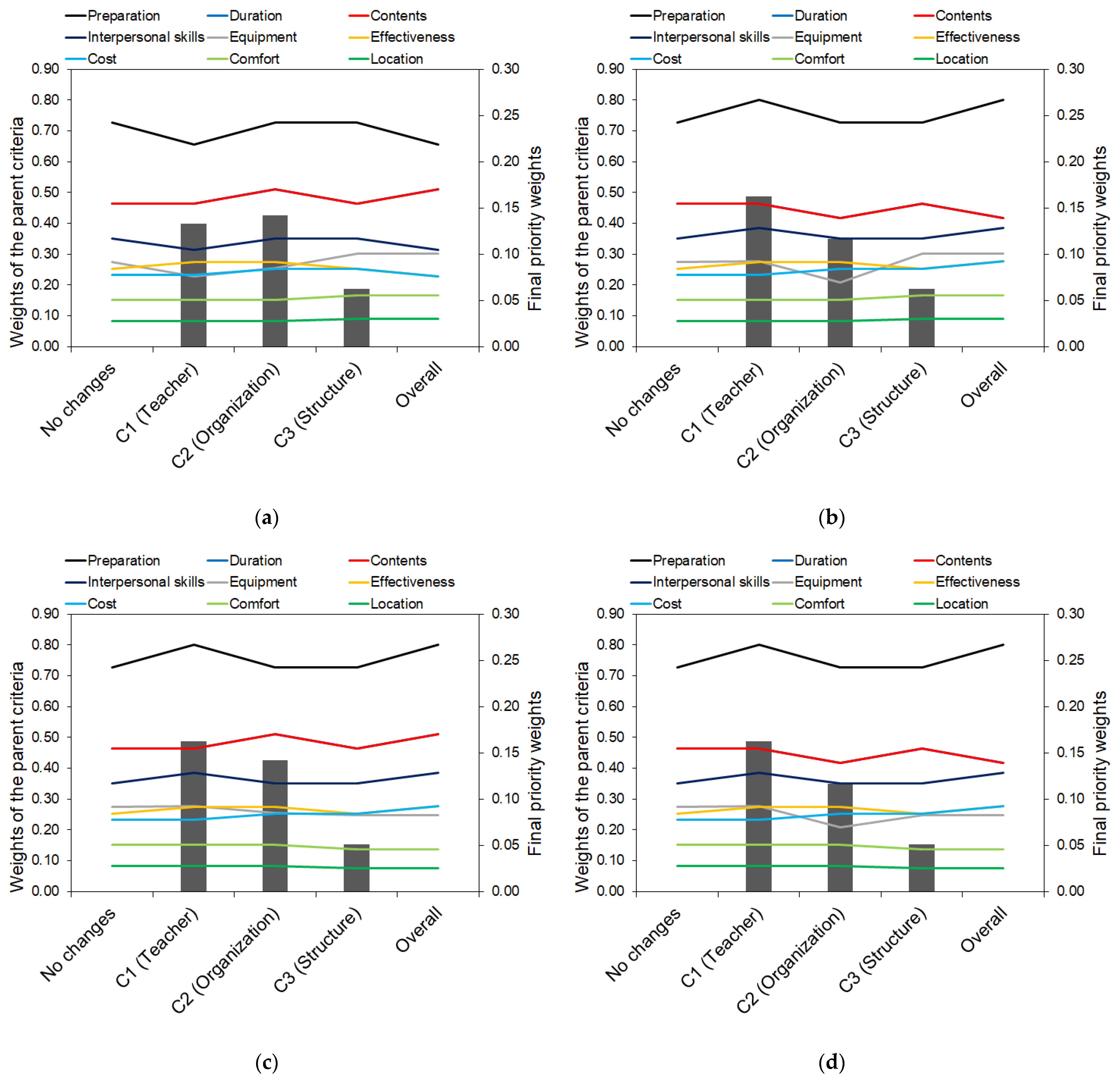

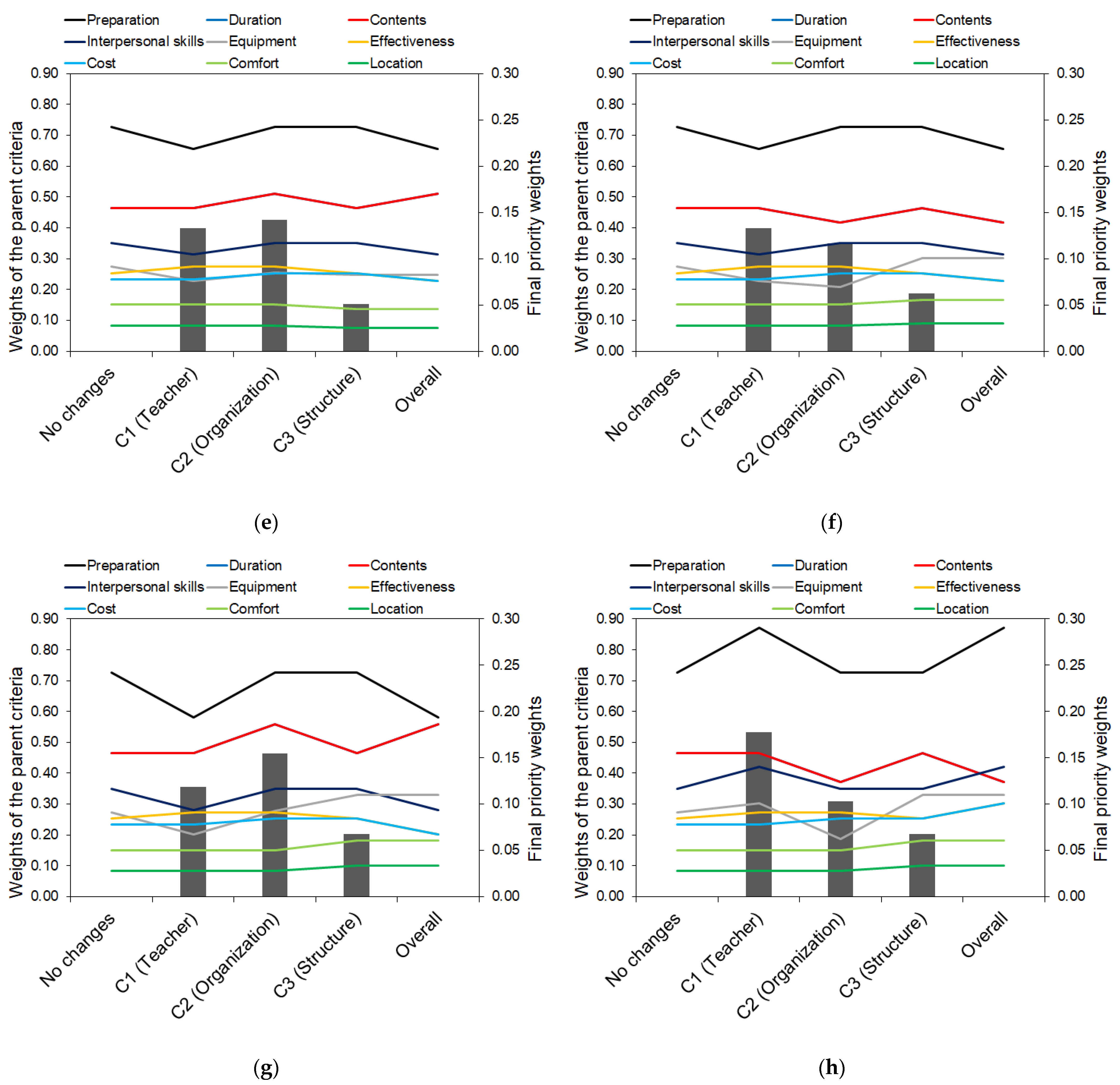

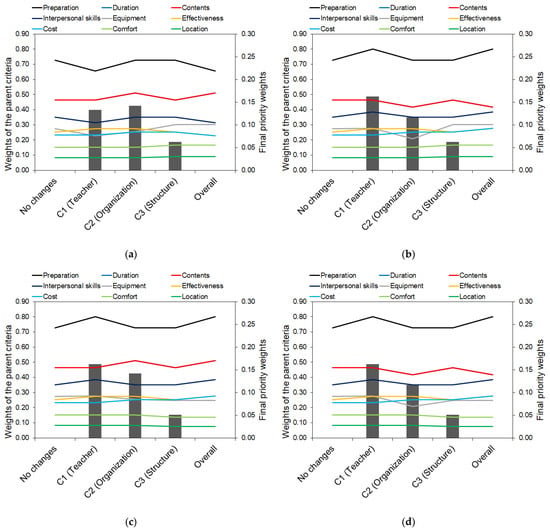

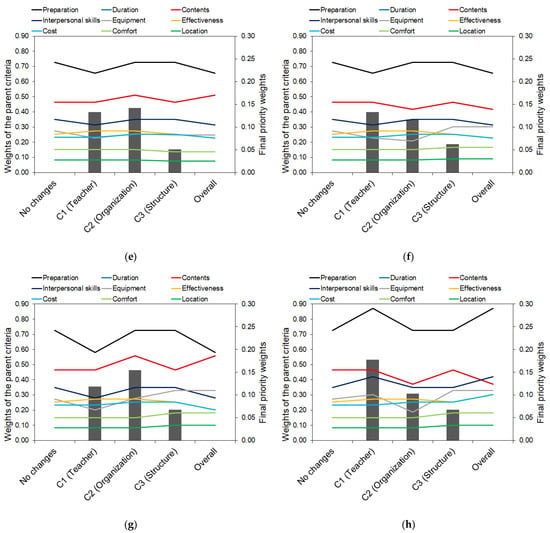

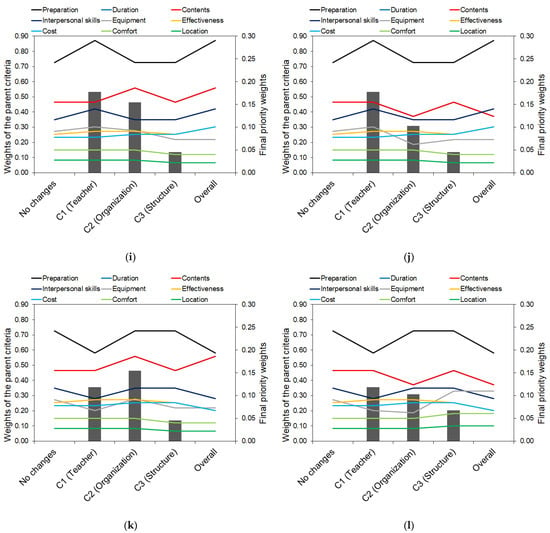

In order to widen and improve the AHP process, we also carried out a sensitivity analysis to assess the impact that variations in the parent criteria’s weights, e.g., changes due to a higher or lower importance assigned to a specific criterion, can have on the obtained hierarchy of needs (final priority weights). Twelve dynamic scenarios were simulated by considering a ±10% and ±20% increase/decrease in all the parent criteria’s weights, as briefly summarized in Table 9.

Table 9.

Increase in the percentage of each parent criterion in all the simulated scenarios for the sensitivity analysis.

The graphs shown in the following Figure 5 report the dynamical changes in the hierarchy of needs (final priority weights) as a function of the changes in the parent criteria’s weights. Each graph represents a specific scenario (as reported in Table 9) where the weights of the parent criteria are represented as gray histograms and refer to the primary y-axis, while dynamical changes in the final priority weights are represented as colored straight lines and refer to the secondary y-axis. For each scenario, five cases are represented on the x-axis: (i) original case (no changes in the parent criteria’s weights); (ii) change in the weight of only the first parent criterion (C1—teacher) without altering the other two parent criteria; (iii) change in the weight of only the second parent criterion (C2—organization) without altering the other two parent criteria; (iv) change in the weight of only the third parent criterion (C3—structure) without altering the other two parent criteria; (v) simultaneous changes in the weights of all the parent criteria (overall).

Figure 5.

Simulated scenarios (according to Table 9) in the AHP sensitivity analysis: (a) scenario 1; (b) scenario 2; (c) scenario 3; (d) scenario 4; (e) scenario 5; (f) scenario 6; (g) scenario 7; (h) scenario 8; (i) scenario 9; (j) scenario 10; (k) scenario 11; (l) scenario 12. Weights of the parent criteria are represented as gray histograms and refer to the primary y-axis, while dynamical changes in the final priority weights are represented as colored straight lines and refer to the secondary y-axis. The x-axis represents five cases: original case (no changes in the parent criteria’s weights); change in the weight of only the first parent criterion (C1—teacher); change in the weight of only the second parent criterion (C2—organization); change in the weight of only the third parent criterion (C3—structure); simultaneous changes in the weights of all the parent criteria (overall). Straight lines for the “Duration” and “Contents” items appear as overlapped in all the graphs.
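The five cases represented on the x-axis of each graph can be reproduced with a short script. The sketch below uses the parent weights reported in Section 3.2, hypothetical local priorities and a hypothetical scenario (the actual scenarios are those of Table 9); as an assumption, perturbed weights are not renormalized, in line with changing one criterion "without altering the other two".

```python
BASE_PARENTS = {"teacher": 0.443, "organization": 0.387, "structure": 0.169}

# Hypothetical local priorities of the sub-criteria (placeholders)
LOCAL = {
    "teacher": {"preparation": 0.5, "interpersonal skills": 0.3, "effectiveness": 0.2},
    "organization": {"cost": 0.2, "duration": 0.4, "content": 0.4},
    "structure": {"location": 0.2, "equipment": 0.5, "comfort": 0.3},
}

def global_weights(parents, local):
    """Global weight = parent criterion weight x local sub-criterion priority."""
    return {s: parents[c] * p for c, subs in local.items() for s, p in subs.items()}

def ranking(weights):
    """Sub-criteria sorted by decreasing global weight."""
    return sorted(weights, key=weights.get, reverse=True)

def five_cases(parents, factors):
    """Build the five cases: original, one criterion changed at a time, all changed.

    `factors` maps each criterion to a multiplicative factor (0.8 means -20%).
    """
    cases = {"original": dict(parents)}
    for criterion, factor in factors.items():
        changed = dict(parents)
        changed[criterion] = parents[criterion] * factor
        cases[f"{criterion} only"] = changed
    cases["overall"] = {c: parents[c] * factors[c] for c in parents}
    return cases

# Hypothetical scenario: +10% teacher, -20% organization, +10% structure
scenario = {"teacher": 1.1, "organization": 0.8, "structure": 1.1}
cases = five_cases(BASE_PARENTS, scenario)
for label, parents in cases.items():
    print(label, ranking(global_weights(parents, LOCAL))[:4])
```

Note that a 20% decrease of the organization weight (0.387 × 0.8 ≈ 0.31) matches the example value discussed in the text.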

The analysis of the simulated scenarios showed that the AHP is only slightly sensitive to changes in the importance of the parent criteria. Indeed, the ranking of the four main priorities of the original hierarchy of needs (Preparation, Duration, Contents and Interpersonal skills) does not exhibit significant changes, and their order is preserved in most of the dynamic scenarios presented. For example, the Preparation item preserves its position in the ranking in all the presented scenarios (Figure 5a–l); a small shift from the original ranking occurs in Figure 5h,j, where the overall priority weight associated with the Interpersonal skills item slightly exceeds the overall weight of the Duration and Contents items. In contrast, low or moderate sensitivity is detectable in the variations in the final priority weight of the Equipment item, which was shown to be particularly sensitive to changes in the importance of the organization criterion. For example, when the importance of organization is decreased from 0.39 to 0.31 (e.g., in Figure 5h,l), the overall weight of Equipment changes from 0.09 to 0.06, producing slight deviations from the original ranking. Overall, we can state that the hierarchy of needs is preserved, with only small variations in some specific scenarios simulated in the sensitivity analysis.

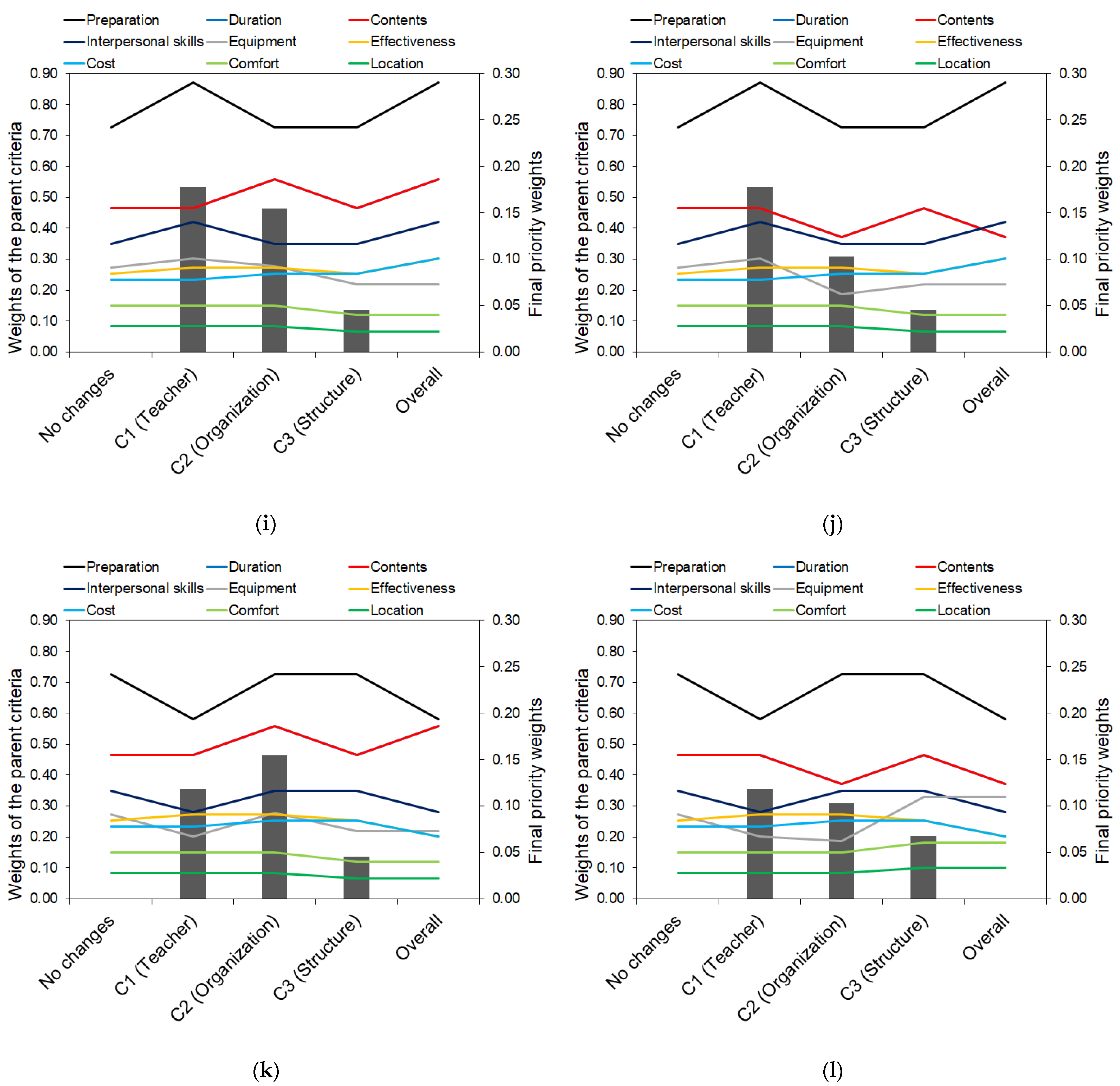

3.5. Data Aggregation and Comparison

As a final step, the information gathered by means of the employed techniques (AHP and LS) was combined in order to propose a practical tool that can facilitate the process of decision making and then improve the users’ perceived quality.

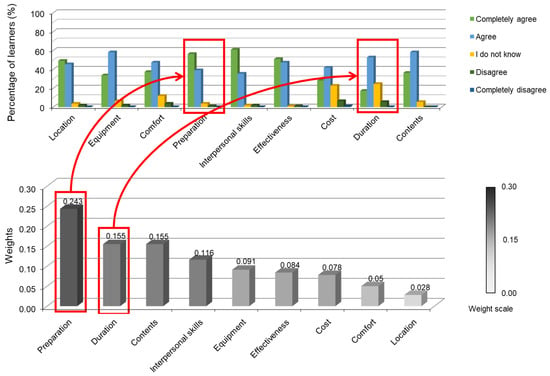

In Figure 6, a direct, visual (therefore simple to interpret and quick) comparison of the results obtained with the two methods is presented.

Figure 6.

Comparison of the LS and AHP results.

Figure 6 summarizes users’ satisfaction as measured through the LS (top of Figure 6) and the priorities obtained through the AHP (bottom of Figure 6). One of the main features that, according to the AHP, could affect the quality of training courses is the teacher’s preparation (bottom of Figure 6), which is first in the AHP ranking. However, according to the LS, the same feature is associated with a high value of learners’ satisfaction (top of Figure 6), thus suggesting that, regarding the teacher’s preparation, the Centre of Biotechnology has already reached its target. Furthermore, the duration of the courses is the second most important feature according to the AHP hierarchy (bottom of Figure 6), whereas the LS results (top of Figure 6) show a low satisfaction value associated with it. This indicates that the course duration, while representing an element of great importance, generates a relatively low degree of satisfaction; thus, a higher priority should be given to this aspect in order to achieve a greater improvement in the course quality.

Analogous considerations can be extended to all the items, so that users’ needs can be identified through the application of the AHP and users’ satisfaction through the application of the LS. Furthermore, the sensitivity analysis showed that the hierarchy of needs from the AHP does not exhibit significant changes when the parent criteria weights are varied, especially for the items with the highest priority. The comparison with the LS results is therefore all the more necessary, since it can suggest treating as priorities those items that, despite having a lower AHP ranking, show a lower user satisfaction degree and thereby require primary improvements even with respect to the top-ranked AHP items.
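The comparison logic can also be expressed programmatically by flagging the items that combine a high AHP priority with a low LS satisfaction. The following is only a sketch: the weights, the satisfaction values (share of positive answers, in percent) and both thresholds are hypothetical, and the study itself relies on the visual comparison of Figure 6 rather than on fixed cut-offs.

```python
def improvement_candidates(ahp_weights, satisfaction, min_weight, max_satisfaction):
    """Items with AHP weight >= min_weight and satisfaction < max_satisfaction,
    sorted by decreasing AHP weight."""
    flagged = [
        item
        for item in ahp_weights
        if ahp_weights[item] >= min_weight and satisfaction[item] < max_satisfaction
    ]
    return sorted(flagged, key=lambda item: ahp_weights[item], reverse=True)

# Hypothetical global AHP weights and LS satisfaction percentages
ahp_weights = {"preparation": 0.22, "duration": 0.15, "cost": 0.08, "comfort": 0.05}
satisfaction = {"preparation": 90.0, "duration": 55.0, "cost": 50.0, "comfort": 80.0}

candidates = improvement_candidates(
    ahp_weights, satisfaction, min_weight=0.10, max_satisfaction=70.0
)
print(candidates)
```

In this hypothetical example, the highly ranked but well-rated preparation item is not flagged, while a highly ranked item with low satisfaction is.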

4. Discussion

The concept of quality has become increasingly popular in business, a very competitive environment that is nowadays substantially without borders. National and international competition requires the products offered to be adapted to customers’ expectations. In particular, it is very important to continuously improve the quality of goods and services in order to reduce costs and eliminate waste. Quality evaluation involves some difficulties related to the definition of quality, which often depends on the context, and to the different methods that can be employed.

This issue is strongly felt in the healthcare field, in which the quality assessment is particularly complicated due to the characteristics of the structure, which does not produce a good but rather a special service, with large intangible components and enhanced customization. Costs and quality are two key points concerning the healthcare industry worldwide [59,60,61]. Thus, methods developed and used in the industrial and manufacturing sectors have also been extended to the transactional and service fields [62], including methods aiming at assisting and supporting decision makers and health personnel with healthcare issues [63,64,65,66]. The introduction of the continuing medical education (CME) concept in health organizations allowed health personnel to maintain their own competences and to update themselves about new and developing areas and new care modalities [67], ultimately leading to better patient health [1,68,69,70]. However, studies have shown a substantial difference between the real and ideal performance of CME, suggesting a lack of effect of formal CME [67,71,72,73].

In this study, we extended the methodology, results and discussion briefly presented in our previous conference paper [4]. In particular, we proposed the use of a hybrid approach by combining two different methodologies, namely, an LS and the AHP, for quality assessment and multicriteria decision making in the field of medical education.

We are aware that many methodologies are available in the field of multicriteria decision making, as detailed in the Introduction section. The IR-AHP-MABAC model [37] is mainly used to determine the weight coefficients of the criteria. Level Based Weight Assessment (LBWA) enables the involvement of experts from different fields; for this reason, we considered it not pertinent to our study, which involves a number of learners attending medical training courses. The Best Worst Method (BWM) is considered the most data- and time-efficient method when there are a large number of criteria, which is not in line with the case study proposed here because it considers a limited number of criteria. Multi-Attributive Ideal-Real Comparative Analysis (MAIRCA) and TOPSIS [74,75] are both based on the comparison of theoretical and empirical alternative ratings and fall outside of our methodological framework. Finally, the Characteristic Objects Method (COMET), Stable Preference Ordering Towards Ideal Solution (SPOTIS) and the SIMUS method were developed to avoid the rank reversal phenomenon [76,77,78], which does not occur in the present study. Therefore, we decided to focus on the combination of more generalizable, versatile and traditional approaches and to tailor them to our specific case. Of course, other methods and their variations could also be considered; however, we chose an LS and the AHP for two substantial reasons. First, the objective of this paper was not to investigate the mathematical details and the performance of the different possible multicriteria decision-making methods, but rather to propose a proof of concept. Second, the chosen approaches appear to be widely used in the healthcare field.

After presenting the theoretical aspects that guided us to the design of such a hybrid approach, we applied it to the problem of evaluating the level of quality achieved in the training course provision at the Centre of Biotechnology of the National Hospital A.O.R.N. “A. Cardarelli”, in the service of high medical education. The proposed analysis was performed by taking into account the users’ perspectives (i.e., medical specialists attending medical training courses). The quality in the delivery of medical training courses perceived by the learners, which is presented in Figure 5, represents a driver for continuously improving and re-designing the training process in order to effectively respond to the dynamic user needs.

In addition to the information presented in [4], further methodological details, procedural steps, results and discussion were included and thoroughly described, with a view to generalizing the proposed approach to more complex scenarios and different decision-making problems in the quality assessment of education programs.

In the presented case study, the combination of the two methodologies allowed us to highlight the most important expectations of the learners and then to verify whether the quality of the service offered fulfils the users’ needs. Three parent criteria, with three sub-criteria associated with each parent criterion, were defined. Then, a questionnaire to evaluate the user satisfaction degree was designed based on the LS technique, which has already been successfully adopted to assess user satisfaction in the health sector [4,23,28]. The obtained outcomes underline that an analytical and qualitative evaluation of the service quality of the training process can be accomplished by combining the two methods, which provides a more complete perspective on what learners perceive. In particular, the hierarchy obtained using the AHP alone does not provide sufficient information to reveal which interventions are necessary to improve the service, and could even be misleading, because this technique does not provide information about user satisfaction. In turn, combining the two methods improves the understanding of service quality, as the results obtained in this study show.
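For readers less familiar with the AHP side of the approach, the following minimal sketch shows how priorities for three parent criteria could be derived from a pairwise comparison matrix and how a global priority of a sub-criterion is obtained. It is an illustration under stated assumptions, not the computation performed in this study: the judgments and the local weights are hypothetical, the row geometric mean is used as an approximation of the principal eigenvector, and 0.58 is the random index commonly attributed to Saaty for matrices of order three.

```python
import math


def ahp_weights(matrix):
    """Approximate AHP priorities with the row geometric mean method."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency_ratio(matrix, weights, random_index=0.58):
    """Return CR = CI / RI, with CI = (lambda_max - n) / (n - 1)."""
    n = len(matrix)
    # Estimate lambda_max as the mean of (A w)_i / w_i over all rows.
    aw = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / random_index


# Hypothetical reciprocal judgments for three parent criteria.
parent = [
    [1.0, 2.0, 6.0],
    [1 / 2, 1.0, 3.0],
    [1 / 6, 1 / 3, 1.0],
]
parent_w = ahp_weights(parent)
cr = consistency_ratio(parent, parent_w)

# Global priority of a sub-criterion = parent weight x local weight.
local_w = [0.5, 0.3, 0.2]  # hypothetical local weights under the first parent
global_w = [parent_w[0] * w for w in local_w]

print("parent weights:", parent_w)
print("consistency ratio:", cr)
print("global priorities under the first parent:", global_w)
```

The example matrix is perfectly consistent by construction, so the consistency ratio is zero up to rounding; with real judgments, a ratio below 0.10 is the threshold usually considered acceptable. The resulting ranking says which sub-criteria matter most to the respondents, but nothing about how satisfied they are, which is why the LS scores are needed alongside it.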

In summary, the goal of this study was to propose a methodology to verify whether the needs expressed by the stakeholders (i.e., the learners in our case) correspond to the services that actually need improvement. In this sense, our contribution lies in the impact of this approach. Indeed, we propose a novel combination of two traditional methods, i.e., the AHP and an LS, to show that, although both are widely used and recognized methodologies in several fields, they can lead to misleading conclusions if they are used separately. Instead, if they are combined, they can provide decision makers with a wise trade-off between the voice of the customer (i.e., the needs expressed by the users, learners) and the voice of the process (i.e., those services that need to be improved more than others). This could suggest better decisions regarding the prioritization of the improvement actions and a better organization of resources.

5. Conclusions

This study aimed to provide a proof of concept about the combined use of the AHP and an LS in the quality assessment of medical training courses. Through the synergistic application of both methods, a decision maker can improve those service features that are very important but do not meet the users’ satisfaction. Assessing and analyzing users’ satisfaction through a continuous collection of learners’ opinions is an important driver to improve the quality of the training process, as is designing a monitoring system whose aim is to reveal deficiencies and the related corrective actions. Furthermore, by using this approach, a decision maker can avoid intervening on less relevant aspects, thus saving money and time. The aim of this analysis was to reveal the main areas in which decision makers can invest money and time for continuously improving the training process according to the user satisfaction degree.

Future studies will aim to start from the proposed proof of concept in order to optimize it and compare it with other methodologies and to generalize the approach to multiple applications. Alternative and revised AHP approaches will be evaluated alone or in combination with the LS to address the same or analogous issues in the medical education field. In addition, future research directions will aim at overcoming the major limitations of this study. Indeed, the very specific case study proposed in this work could be generalized in a multi-center study involving multiple courses and education programs. Moreover, a sensitivity analysis will be carried out to assess the performance of the method and identify possible strengths and weaknesses in order to optimize the approach and also test it in different scenarios.

Author Contributions

Conceptualization, F.A., S.C., G.R. and G.I.; methodology, A.M.P., M.R. and G.I.; software, A.M.P., M.R. and G.I.; validation, A.M.P., F.A., S.C. and G.R.; formal analysis, A.M.P., M.R. and G.I.; investigation, F.A., M.R. and G.I.; resources, S.C., G.R. and F.A.; data curation, S.C., G.R. and G.I.; writing—original draft preparation, A.M.P., M.R. and G.I.; writing—review and editing, A.M.P., F.A. and M.R.; visualization, A.M.P. and M.R.; supervision, F.A., M.R. and G.I.; project administration, S.C. and G.R. All authors have read and agreed to the published version of the manuscript. M.R. and G.I. contributed equally to the work.

Funding

The authors confirm that they have not received any funding for this research.

Institutional Review Board Statement

The authors confirm that all the procedures were carried out in compliance with the Declaration of Helsinki. Given the nature of the collected and analyzed data, no ethical authorizations and no consent declarations were required. National regulations require preliminary ethical approval of research in the case of clinical trials of investigational medicinal products, medical devices, drug/device combinations and clinical investigations. Our study is solely a service/satisfaction evaluation and does not involve patients. Furthermore, national regulations on privacy and data protection require formal actions in the case of personal and/or sensitive data. Our questionnaires are completely anonymous, and no personal information is linked or linkable to a specific respondent. Moreover, given the nature of the study, no sensitive data were collected or analyzed.

Data Availability Statement

The data presented in this study cannot be made publicly available.

Acknowledgments

The authors warmly thank Dr. Gianluca Parente for the support provided in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Davis, D.; Galbraith, R. American College of Chest Physicians Health and Science Policy Committee Continuing Medical Education Effect on Practice Performance: Effectiveness of Continuing Medical Education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest 2009, 135, 42S–48S.

2. Udo, G.G. Using Analytic Hierarchy Process to Analyze the Information Technology Outsourcing Decision. Ind. Manag. Data Syst. 2000, 100, 421–429.

3. Saaty, T.L. Decision Making with the Analytic Hierarchy Process. Int. J. Serv. Sci. 2008, 1, 83–98.

4. Improta, G.; Ponsiglione, A.M.; Parente, G.; Romano, M.; Cesarelli, G.; Rea, T.; Russo, M.; Triassi, M. Evaluation of Medical Training Courses Satisfaction: Qualitative Analysis and Analytic Hierarchy Process. In Proceedings of the 8th European Medical and Biological Engineering Conference, Portorož, Slovenia, 29 November–3 December 2020; Jarm, T., Cvetkoska, A., Mahnič-Kalamiza, S., Miklavcic, D., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 518–526.

5. Vaidya, O.S.; Kumar, S. Analytic Hierarchy Process: An Overview of Applications. Eur. J. Oper. Res. 2006, 169, 1–29.

6. Agha, S.R. Evaluating and Benchmarking Non-Governmental Training Programs: An Analytic Hierarchy Approach. JJMIE 2008, 2, 2.

7. Saaty, T.L. The Analytic Hierarchy Process: Planning, Priority Setting, Resource Allocation; RWS: Buckinghamshire, UK, 1990; ISBN 978-0-9620317-2-4.

8. Franek, J.; Kresta, A. Judgment Scales and Consistency Measure in AHP. Procedia Econ. Financ. 2014, 12, 164–173.

9. Badri, M.A.; Abdulla, M.H. Awards of Excellence in Institutions of Higher Education: An AHP Approach. Int. J. Educ. Manag. 2004, 18, 224–242.

10. Benítez, J.; Delgado-Galván, X.; Izquierdo, J.; Pérez-García, R. An Approach to AHP Decision in a Dynamic Context. Decis. Support Syst. 2012, 53, 499–506.

11. Canco, I.; Kruja, D.; Iancu, T. AHP, a Reliable Method for Quality Decision Making: A Case Study in Business. Sustainability 2021, 13, 13932.

12. González-Prida, V.; Barberá, L.; Viveros, P.; Crespo, A. Dynamic Analytic Hierarchy Process: AHP Method Adapted to a Changing Environment. IFAC Proc. Vol. 2012, 45, 25–29.

13. Wang, C.-N.; Kao, J.-C.; Wang, Y.-H.; Nguyen, V.T.; Nguyen, V.T.; Husain, S.T. A Multicriteria Decision-Making Model for the Selection of Suitable Renewable Energy Sources. Mathematics 2021, 9, 1318.

14. Wang, Y.; Xu, L.; Solangi, Y.A. Strategic Renewable Energy Resources Selection for Pakistan: Based on SWOT-Fuzzy AHP Approach. Sustain. Cities Soc. 2020, 52, 101861.

15. Heo, E.; Kim, J.; Boo, K.-J. Analysis of the Assessment Factors for Renewable Energy Dissemination Program Evaluation Using Fuzzy AHP. Renew. Sustain. Energy Rev. 2010, 14, 2214–2220.

16. Dorado, R.; Gómez-Moreno, A.; Torres-Jiménez, E.; López-Alba, E. An AHP Application to Select Software for Engineering Education. Comput. Appl. Eng. Educ. 2014, 22, 200–208.

17. Laguna-Sánchez, P.; Palomo, J.; de la Fuente-Cabrero, C.; de Castro-Pardo, M. A Multiple Criteria Decision Making Approach to Designing Teaching Plans in Higher Education Institutions. Mathematics 2021, 9, 9.

18. Nosal, K.; Solecka, K. Application of AHP Method for Multi-Criteria Evaluation of Variants of the Integration of Urban Public Transport. Transp. Res. Procedia 2014, 3, 269–278.

19. Almeida, F.; Silva, P.; Leite, J. Proposal of A Carsharing System to Improve Urban Mobility. Theor. Empir. Res. Urban Manag. 2017, 12, 32–44.

20. Lu, X.; Lu, J.; Yang, X.; Chen, X. Assessment of Urban Mobility via a Pressure-State-Response (PSR) Model with the IVIF-AHP and FCE Methods: A Case Study of Beijing, China. Sustainability 2022, 14, 3112.

21. Ricciardi, C.; Ponsiglione, A.M.; Converso, G.; Santalucia, I.; Triassi, M.; Improta, G. Implementation and Validation of a New Method to Model Voluntary Departures from Emergency Departments. Math. Biosci. Eng. 2021, 18, 253–273.

22. Ponsiglione, A.M.; Ricciardi, C.; Improta, G.; Orabona, G.D.; Sorrentino, A.; Amato, F.; Romano, M. A Six Sigma DMAIC Methodology as a Support Tool for Health Technology Assessment of Two Antibiotics. Math. Biosci. Eng. 2021, 18, 3469–3490.

23. Improta, G.; Perrone, A.; Russo, M.A.; Triassi, M. Health Technology Assessment (HTA) of Optoelectronic Biosensors for Oncology by Analytic Hierarchy Process (AHP) and Likert Scale. BMC Med. Res. Methodol. 2019, 19, 140.

24. Improta, G.; Converso, G.; Murino, T.; Gallo, M.; Perrone, A.; Romano, M. Analytic Hierarchy Process (AHP) in Dynamic Configuration as a Tool for Health Technology Assessment (HTA): The Case of Biosensing Optoelectronics in Oncology. Int. J. Inf. Technol. Decis. Mak. 2019, 18, 1533–1550.

25. Improta, G.; Russo, M.A.; Triassi, M.; Converso, G.; Murino, T.; Santillo, L.C. Use of the AHP Methodology in System Dynamics: Modelling and Simulation for Health Technology Assessments to Determine the Correct Prosthesis Choice for Hernia Diseases. Math. Biosci. 2018, 299, 19–27.

26. Danner, M.; Hummel, J.M.; Volz, F.; van Manen, J.G.; Wiegard, B.; Dintsios, C.-M.; Bastian, H.; Gerber, A.; IJzerman, M.J. Integrating Patients’ Views into Health Technology Assessment: Analytic Hierarchy Process (AHP) as a Method to Elicit Patient Preferences. Int. J. Technol. Assess. Health Care 2011, 27, 369–375.

27. De Santo, A.; Galli, A.; Gravina, M.; Moscato, V.; Sperlì, G. Deep Learning for HDD Health Assessment: An Application Based on LSTM. IEEE Trans. Comput. 2022, 71, 69–80.

28. Dell-Kuster, S.; Sanjuan, E.; Todorov, A.; Weber, H.; Heberer, M.; Rosenthal, R. Designing Questionnaires: Healthcare Survey to Compare Two Different Response Scales. BMC Med. Res. Methodol. 2014, 14, 96.

29. Krzych, Ł.J.; Lach, M.; Joniec, M.; Cisowski, M.; Bochenek, A. The Likert Scale Is a Powerful Tool for Quality of Life Assessment among Patients after Minimally Invasive Coronary Surgery. Pol. Pol. J. Cardio-Thorac. Surg. 2018, 15, 130–134.

30. Kalaja, R.; Myshketa, R.; Scalera, F. Service Quality Assessment in Health Care Sector: The Case of Durres Public Hospital. Procedia Soc. Behav. Sci. 2016, 235, 557–565.

31. Melillo, P.; Delle Donne, A.; Improta, G.; Cozzolino, S.; Bracale, M. Assessment of Patient Satisfaction Using an AHP Model: An Application to a Service of Pharmaceutical Distribution. In Proceedings of the International Symposium on the Analytic Hierarchy Process, Sorrento, Italy, 15–18 June 2011.

32. Wang, Y.-M.; Luo, Y.; Hua, Z. On the Extent Analysis Method for Fuzzy AHP and Its Applications. Eur. J. Oper. Res. 2008, 186, 735–747.

33. Chang, D.-Y. Applications of the Extent Analysis Method on Fuzzy AHP. Eur. J. Oper. Res. 1996, 95, 649–655.

34. Liu, Y.; Eckert, C.M.; Earl, C. A Review of Fuzzy AHP Methods for Decision-Making with Subjective Judgements. Expert Syst. Appl. 2020, 161, 113738.

35. Radovanovic, M.; Ranđelović, A.; Jokić, Ž. Application of Hybrid Model Fuzzy AHP—VIKOR in Selection of the Most Efficient Procedure for Rectification of the Optical Sight of the Long-Range Rifle. Decis. Mak. Appl. Manag. Eng. 2020, 3, 131–148.

36. Lima Junior, F.R.; Osiro, L.; Carpinetti, L.C.R. A Comparison between Fuzzy AHP and Fuzzy TOPSIS Methods to Supplier Selection. Appl. Soft Comput. 2014, 21, 194–209.

37. Pamučar, D.; Stević, Ž.; Zavadskas, E.K. Integration of Interval Rough AHP and Interval Rough MABAC Methods for Evaluating University Web Pages. Appl. Soft Comput. 2018, 67, 141–163.

38. Taha, Z.; Rostam, S. A Hybrid Fuzzy AHP-PROMETHEE Decision Support System for Machine Tool Selection in Flexible Manufacturing Cell. J. Intell. Manuf. 2012, 23, 2137–2149.

39. Sindhu, S.; Nehra, V.; Luthra, S. Investigation of Feasibility Study of Solar Farms Deployment Using Hybrid AHP-TOPSIS Analysis: Case Study of India. Renew. Sustain. Energy Rev. 2017, 73, 496–511.

40. Kusumawardani, R.P.; Agintiara, M. Application of fuzzy AHP-TOPSIS method for decision making in human resource manager selection process. Procedia Comput. Sci. 2015, 72, 638–646.

41. Widianta, M.M.D.; Rizaldi, T.; Setyohadi, D.P.S.; Riskiawan, H.Y. Comparison of Multi-Criteria Decision Support Methods (AHP, TOPSIS, SAW & PROMENTHEE) for Employee Placement. J. Phys. Conf. Ser. 2018, 953, 012116.

42. Joshi, A.; Kale, S.; Chandel, S.K.; Pal, D.K. Likert Scale: Explored and Explained. Br. J. Appl. Sci. Technol. 2015, 7, 396.

43. Sullivan, G.M.; Artino, A.R. Analyzing and Interpreting Data From Likert-Type Scales. J. Grad. Med. Educ. 2013, 5, 541–542.

44. Kersnik, J. An Evaluation of Patient Satisfaction with Family Practice Care in Slovenia. Int. J. Qual. Health Care. 2000, 12, 143–147.

45. Mcleod, S. Likert Scale Definition, Examples and Analysis. Simply Psychology. 2008. Available online: www.simplypsychology.org/likert-scale.html (accessed on 31 January 2022).

46. Saaty, T.L. Basic Theory of the Analytic Hierarchy Process: How to Make a Decision. Rev. Real Acad. Cienc. Exactas Fis. Nat. 1999, 93, 395–423.

47. Gronroos, C. Service Quality: The Six Criteria of Good Perceived Service. Rev. Bus. 1988, 9, 10.

48. Parasuraman, A.; Zeithaml, V.A.; Berry, L.L. A Conceptual Model of Service Quality and Its Implications for Future Research. J. Mark. 2018, 49, 41–50.

49. Saaty, T.L. Decision Making—The Analytic Hierarchy and Network Processes (AHP/ANP). J. Syst. Sci. Syst. Eng. 2004, 13, 1–35.

50. Saaty, T.L. Decision-Making with the AHP: Why Is the Principal Eigenvector Necessary. Eur. J. Oper. Res. 2003, 145, 85–91.

51. Aguarón, J.; Escobar, M.T.; Moreno-Jiménez, J.M.; Turón, A. AHP-Group Decision Making Based on Consistency. Mathematics 2019, 7, 242.

52. Yadav, A.; Jayswal, S.C. Using Geometric Mean Method of Analytical Hierarchy Process for Decision Making in Functional Layout. Int. J. Eng. Res. Technol. 2013, 2, 775–779.

53. Krejčí, J.; Stoklasa, J. Aggregation in the Analytic Hierarchy Process: Why Weighted Geometric Mean Should Be Used Instead of Weighted Arithmetic Mean. Expert Syst. Appl. 2018, 114, 97–106.

54. Dijkstra, T.K. On the Extraction of Weights from Pairwise Comparison Matrices. Cent. Eur. J. Oper. Res. 2013, 21, 103–123.

55. Saaty, T.L.; Tran, L.T. On the Invalidity of Fuzzifying Numerical Judgments in the Analytic Hierarchy Process. Math. Comput. Model. 2007, 46, 962–975.

56. Chang, C.-W.; Wu, C.-R.; Lin, C.-T.; Chen, H.-C. An Application of AHP and Sensitivity Analysis for Selecting the Best Slicing Machine. Comput. Ind. Eng. 2007, 52, 296–307.

57. Aller, M.-B.; Vargas, I.; Waibel, S.; Coderch, J.; Sánchez-Pérez, I.; Colomés, L.; Llopart, J.R.; Ferran, M.; Vázquez, M.L. A Comprehensive Analysis of Patients’ Perceptions of Continuity of Care and Their Associated Factors. Int. J. Qual. Health Care 2013, 25, 291–299.

58. Abbad, G. da S.; Borges-Andrade, J.E.; Sallorenzo, L.H. Self-Assessment of Training Impact at Work: Validation of a Measurement Scale. Rev. Interam. De Psicol./Interam. J. Psychol. 2004, 38, 277–284.

59. Clark, N.M.; Gong, M.; Schork, M.A.; Kaciroti, N.; Evans, D.; Roloff, D.; Hurwitz, M.; Maiman, L.A.; Mellins, R.B. Long-Term Effects of Asthma Education for Physicians on Patient Satisfaction and Use of Health Services. Eur. Respir. J. 2000, 16, 15–21.

60. Awasthi, S.; Agnihotri, K.; Thakur, S.; Singh, U.; Chandra, H. Quality of Care as a Determinant of Health-Related Quality of Life in Ill-Hospitalized Adolescents at a Tertiary Care Hospital in North India. Int. J. Qual. Health Care 2012, 24, 587–594.

61. El-Jardali, F.; Jamal, D.; Dimassi, H.; Ammar, W.; Tchaghchaghian, V. The Impact of Hospital Accreditation on Quality of Care: Perception of Lebanese Nurses. Int. J. Qual. Health Care 2008, 20, 363–371.

62. Nicolay, C.R.; Purkayastha, S.; Greenhalgh, A.; Benn, J.; Chaturvedi, S.; Phillips, N.; Darzi, A. Systematic Review of the Application of Quality Improvement Methodologies from the Manufacturing Industry to Surgical Healthcare. Br. J. Surg. 2012, 99, 324–335.

63. Arafeh, M.; Barghash, M.A.; Haddad, N.; Musharbash, N.; Nashawati, D.; Al-Bashir, A.; Assaf, F. Using Six Sigma DMAIC Methodology and Discrete Event Simulation to Reduce Patient Discharge Time in King Hussein Cancer Center. J. Healthc. Eng. 2018, 2018, 3832151.

64. Ponsiglione, A.M.; Romano, M.; Amato, F. A Finite-State Machine Approach to Study Patients Dropout From Medical Examinations. In Proceedings of the 2021 IEEE 6th International Forum on Research and Technology for Society and Industry (RTSI), Naples, Italy, 6–9 September 2021; pp. 289–294.

65. Black, J.R.; Miller, D.; Sensel, J. The Toyota Way to Healthcare Excellence: Increase Efficiency and Improve Quality with Lean; HAP/Health Administration Press: Chicago, IL, USA, 2016; ISBN 978-1-56793-782-4.

66. Mazzocato, P.; Savage, C.; Brommels, M.; Aronsson, H.; Thor, J. Lean Thinking in Healthcare: A Realist Review of the Literature. Qual. Saf. Health Care 2010, 19, 376–382.

67. Haynes, R.B.; Davis, D.A.; McKibbon, A.; Tugwell, P. A Critical Appraisal of the Efficacy of Continuing Medical Education. JAMA 1984, 251, 61–64.

68. Drescher, U.; Warren, F.; Norton, K. Towards Evidence-Based Practice in Medical Training: Making Evaluations More Meaningful. Med. Educ. 2004, 38, 1288–1294.

69. Zeiger, R.F. Toward Continuous Medical Education. J. Gen. Intern. Med. 2005, 20, 91–94.

70. Ataei, M.; Saffarian-Hamedani, S.; Zameni, F. Effective Teaching Model in Continuing Medical Education Programs. J. Mazandaran Univ. Med. Sci. 2019, 29, 202–207.

71. Bloom, B.S. Effects of Continuing Medical Education on Improving Physician Clinical Care and Patient Health: A Review of Systematic Reviews. Int. J. Technol. Assess. Health Care 2005, 21, 380–385.

72. Khoshnoodi Far, M.; Mohajerpour, R.; Rahimi, E.; Roshani, D.; Zarezadeh, Y. Comparison between the Effects of Flipped Class and Traditional Methods of Instruction on Satisfaction, Active Participation, and Learning Level in a Continuous Medical Education Course for General Practitioners. Sci. J. Kurd. Univ. Med. Sci. 2019, 24, 56–65.

73. Davis, D.; O’Brien, M.A.; Freemantle, N.; Wolf, F.M.; Mazmanian, P.; Taylor-Vaisey, A. Impact of Formal Continuing Medical Education: Do Conferences, Workshops, Rounds, and Other Traditional Continuing Education Activities Change Physician Behavior or Health Care Outcomes? JAMA 1999, 282, 867–874.

74. Çelikbilek, Y.; Tüysüz, F. An In-Depth Review of Theory of the TOPSIS Method: An Experimental Analysis. J. Manag. Anal. 2020, 7, 281–300.

75. Pamucar, D.; Deveci, M.; Schitea, D.; Erişkin, L.; Iordache, M.; Iordache, I. Developing a Novel Fuzzy Neutrosophic Numbers Based Decision Making Analysis for Prioritizing the Energy Storage Technologies. Int. J. Hydrogen Energy 2020, 45, 23027–23047.

76. Munier, N. A New Approach to the Rank Reversal Phenomenon in MCDM with the SIMUS Method. Mult. Criteria Decis. Mak. 2016, 11, 137–152.

77. Dezert, J.; Tchamova, A.; Han, D.; Tacnet, J.-M. The SPOTIS Rank Reversal Free Method for Multi-Criteria Decision-Making Support. In Proceedings of the 2020 IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020; pp. 1–8.

78. Kizielewicz, B.; Kołodziejczyk, J. Effects of the Selection of Characteristic Values on the Accuracy of Results in the COMET Method. Procedia Comput. Sci. 2020, 176, 3581–3590.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).