Abstract

In this paper, the mean-square strong stability and stabilization of discrete-time Markov jump systems are studied. Firstly, the definition of mean-square strong stability is given, and the necessary and sufficient conditions for mean-square strong stability are derived. Secondly, several necessary and sufficient conditions for mean-square strong stabilization via a state feedback controller and an output feedback controller are obtained. Furthermore, explicit expressions for the state feedback controller and static output feedback controller are obtained. Finally, two examples are given to illustrate the validity of the above results.

Keywords:

mean-square strong stability; Markov jump; discrete-time stochastic system; overshoot; output feedback

MSC:

93E03

1. Introduction

As one of the most basic dynamic models, the Markov jump system has been studied extensively. This is mainly because these system models have many applications in engineering and finance, such as nuclear fission and population models, immunology, and portfolio optimization [1,2,3]. Therefore, research on the stability and control of these systems has gradually become a hot topic in the field of control theory and application. For example, the authors in [4,5] discussed the continuous-time Markov jump system and studied its stability and stabilization. In [6], the adaptive tracking problem of stochastic nonlinear systems with Markov switching was studied. Robust control for uncertain discrete stochastic bilinear systems was studied in [7]. Output tracking control problems for a class of switched linear systems were studied in [8]. In addition, the authors in [9,10] discussed the stability analysis of different Markovian jump systems, as well as some applications.

In practical engineering applications, a system is often required to have good transient performance as well as stability, which to a large extent limits the usefulness of traditional mean-square stability. For example, excessive equipment voltage in a power system may cause irreversible damage to the power equipment [11]. A large overshoot indicates that the state of the system fluctuates greatly within a short period of time, so the transient energy of the system may be too large and may cause serious consequences. Therefore, it is of great significance to study how to restrain the overshoot response. Many scholars have made considerable achievements in this field. For instance, the authors in [12] presented the specification of optimal overshoot controllers when the controller order is fixed and the closed-loop system poles are at a fixed location. In [13,14,15], the notion of strong stability was introduced to restrain the overshoot response in different systems. The authors in [16] studied the problem of overcoming overshoot performance limitations for a class of minimum-phase linear time-invariant systems with relative degree one. The authors in [17] proposed a secondary frequency control approach to solve the problem of overshoot in the power-imbalance allocation control of power systems. Moreover, in recent years, the strong stability of systems with double and multiple delays has been studied [18,19]. Other relevant studies can be found in [20,21]. However, few studies address restricting the overshoot in discrete-time Markov jump systems.

Research on the sufficient and necessary conditions for the mean-square stability of discrete-time Markov jump systems can be traced back to 1993. Such conditions were given in [22], where they were reduced to the existence of a unique solution of a set of Lyapunov equations. In [23], the stability of discrete-time Markov jump systems was studied, and sufficient and necessary conditions for stability were given, which are essentially equivalent to mean-square stability. In [24], the classical theories of Markov jump systems were systematically introduced and studied. However, in the classical study of mean-square stability, the stability and stabilization problems are usually transformed into a set of linear matrix inequalities (LMIs), which can be solved using the LMI toolbox. Although this method is effective, it cannot give an explicit solution for the controller.

The key difficulty in addressing these open problems is twofold. On the one hand, the controller should be designed so that the system produces as little overshoot as possible while remaining stable. On the other hand, an analytical solution for the controller must be found without using the LMI toolbox. To address both issues, we introduce the definition of mean-square strong stability for the discrete-time Markov jump system for the first time. As a stronger notion than mean-square stability, mean-square strong stability can suppress overshoot in the stochastic system and can improve its transient performance. The main contributions of this paper are as follows. (i) The concept of mean-square strong stability of the discrete-time Markov jump system with multiplicative noise is proposed, and a necessary and sufficient condition for mean-square strong stability of the system is obtained. (ii) The design of a state feedback controller and a static output feedback controller for the discrete-time Markov jump system is studied. Furthermore, two necessary and sufficient conditions for the existence of these controllers are obtained. Finally, the analytical expressions of the state feedback controllers and static output feedback controllers are given, using Finsler’s theorem.

The specific notations used in this paper are as follows. : the set of all real n-dimensional vectors. : the set of all complex numbers. : . : the set of all matrices. : the transpose of A. : the eigenvalue of A. : the Euclidean norm of x, where x is a vector. : the Euclidean norm of A, where A is a matrix. : the operation of taking the mathematical expectation. A > 0 (A ≥ 0, A < 0, A ≤ 0): A is a real symmetric positive definite (positive semi-definite, negative definite, negative semi-definite, respectively) matrix. : the left annihilator of B is a matrix of maximal rank such that .

2. Mean-Square Strong Stability Analysis

As important random switching systems, Markov jump systems are widely used in system control, including in motor systems, image enhancement, medical study, etc. [25,26]. In this paper, we consider the following discrete-time Markov jump systems:

where is a n-dimensional state vector, is the control input, and is the output. The definition of multiplicative noises is that , N are sequences of real random variables defined on a complete probability space , , and are independent wide-sense, stationary, second-order processes with , , where is a Kronecker function defined by if or if . Furthermore, for , , , and , , N are constant matrices of appropriate dimension, denoted by , , and , respectively, for simplicity. represents a discrete-time, discrete-state ergodic Markov chain taking values in with the transition probability , and , , , . The initial distribution of the Markov chain is with , for the case where . and are mutually independent.

Next, we introduce the concept of the mean-square strong stability of the discrete-time Markov jump system.

Definition 1.

The discrete-time Markov jump system (1) is said to be mean-square strongly stable, if it satisfies the following condition:

for all when [14].

Remark 1.

Remark 2.

According to the definition in [22,24], mean-square stability cannot guarantee that systems have good performance in a short time. This is because mean-square stability only limits the changing trend of the state when time tends to infinity, but not the state during the change process, which may cause the system to have large overshoots in a short time. Compared with the mean-square stable system, the mean-square strongly stable system has smaller overshoots.
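The gap between stability and strong stability described in Remark 2 can be seen even in a deterministic, single-mode special case. The following is a minimal sketch under that simplifying assumption (no jumps, no noise); the matrix `A`, the initial state `x0`, and the helper `norm_trajectory` are hypothetical and are not taken from the paper. The matrix has spectral radius 0.5, so the state converges to zero, yet its norm roughly doubles in the first step.

```python
import numpy as np

# Hypothetical Schur-stable but non-normal matrix (illustration only).
A = np.array([[0.5, 2.0],
              [0.0, 0.5]])
x0 = np.array([0.0, 1.0])


def norm_trajectory(A, x0, steps):
    """Return [||x(0)||, ..., ||x(steps)||] for x(k+1) = A x(k)."""
    norms = [np.linalg.norm(x0)]
    x = x0
    for _ in range(steps):
        x = A @ x
        norms.append(np.linalg.norm(x))
    return np.array(norms)


spectral_radius = max(abs(np.linalg.eigvals(A)))  # 0.5: asymptotically stable
norms = norm_trajectory(A, x0, 30)

print("spectral radius:", spectral_radius)
print("peak norm:", norms.max(), "initial norm:", norms[0])
print("final norm:", norms[-1])
```

The peak norm exceeds the initial norm, which is exactly the overshoot that a strong-stability requirement is meant to exclude.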

First, we introduce the following useful lemma. It is a corollary of Finsler’s theorem and is quoted from [27].

Lemma 1.

Suppose that matrices B and symmetric matrices Q are given. Then the following two descriptions are equivalent:

- There is a symmetric matrix X such that

- One of the following two conditions is true
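As background for Lemma 1, one commonly quoted scalar form of Finsler’s theorem states that, for a symmetric Q and a matrix B with left annihilator B_perp (so that B_perp B = 0), there is a real number mu with mu B B^T − Q > 0 if and only if B_perp Q B_perp^T < 0. The sketch below checks this numerically on one small instance. The matrices `B`, `Q`, `B_perp` and the helper `is_pos_def` are hypothetical choices for illustration, and the exact form of Lemma 1 should be taken from [27].

```python
import numpy as np


def is_pos_def(M):
    """True if the symmetric matrix M is positive definite."""
    return bool(np.linalg.eigvalsh(M).min() > 0)


# Hypothetical data: B has a single column, Q is symmetric and indefinite.
B = np.array([[1.0], [0.0]])
Q = np.array([[5.0, 1.0],
              [1.0, -2.0]])
B_perp = np.array([[0.0, 1.0]])  # left annihilator: B_perp @ B = 0

# Annihilator-side test: B_perp Q B_perp^T < 0.
annihilator_test = is_pos_def(-(B_perp @ Q @ B_perp.T))

# Multiplier-side test: mu * B B^T - Q > 0 for a sufficiently large mu.
mu_ok = is_pos_def(10.0 * B @ B.T - Q)
mu_too_small = is_pos_def(5.0 * B @ B.T - Q)

print(annihilator_test, mu_ok, mu_too_small)
```

For this instance the multiplier-side inequality holds exactly when mu > 5.5, which is why mu = 10 succeeds and mu = 5 fails.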

In the following theorem, we aim to give a sufficient and necessary condition for the mean-square strongly stable open-loop system (1) with .

Theorem 1.

The discrete-time Markov jump system (1) is mean-square strongly stable if and only if

where

for each .

Proof.

Necessity: From Definition 1, we can obtain the result that the mean-square strong stability of a discrete-time Markov jump system (1) is equivalent to

Furthermore, it is also equivalent to the following sequences of inequalities:

for each , where the indicator function is defined in the usual way, that is

for . Then, based on the assumptions , (7) is equivalent to

According to the previous assumption, , (8) is equivalent to

Due to the arbitrariness of , (11) can be written as:

which is equivalent to the condition in (5), assuming the initial distribution , .
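A per-mode test of this flavor can be sketched numerically. The sketch assumes an open-loop model of the form x(k+1) = A_i x(k) + Σ_l A_li x(k) w_l(k) in mode i, with zero-mean, unit-variance, mutually uncorrelated noises that are independent of the current state; under that assumption the conditional second moment satisfies E[‖x(k+1)‖² | x(k), r_k = i] = x(k)ᵀ (A_iᵀ A_i + Σ_l A_liᵀ A_li) x(k), so a one-step decrease in every mode amounts to that matrix being smaller than the identity. The function names and example matrices are hypothetical, and the exact statement of condition (5) should be taken from the paper.

```python
import numpy as np


def second_moment_matrix(A_i, noise_mats):
    """Return A_i^T A_i + sum_l A_li^T A_li for one mode (assumed model)."""
    M = A_i.T @ A_i
    for A_li in noise_mats:
        M = M + A_li.T @ A_li
    return M


def is_one_step_contractive(modes, tol=1e-12):
    """modes: list of (A_i, [A_1i, ..., A_Ni]); test M_i < I for every mode."""
    return all(
        np.linalg.eigvalsh(second_moment_matrix(A_i, noise)).max() < 1.0 - tol
        for A_i, noise in modes
    )


# Hypothetical two-mode examples (not the data of Examples 1 and 2).
I2 = np.eye(2)
modes_ok = [(0.5 * I2, [0.1 * I2]),
            (np.array([[0.3, 0.1], [0.0, 0.4]]), [0.1 * I2])]
modes_bad = [(0.5 * I2, [0.1 * I2]),
             (np.array([[0.5, 2.0], [0.0, 0.5]]), [0.1 * I2])]

print(is_one_step_contractive(modes_ok))   # every mode contracts
print(is_one_step_contractive(modes_bad))  # second mode can expand the norm
```

Note that the second mode of `modes_bad` has all eigenvalues inside the unit circle, so the test is strictly more demanding than an eigenvalue check.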

3. Mean-Square Strong Stabilization via State Feedback

In this section, we aim to design a state feedback controller such that the resulting closed-loop system is mean-square strongly stable.

Definition 2.

The system in (1) is said to show mean-square strong stabilization if there are state feedback controllers which are denoted by , while for simplicity, such that

satisfies Definition 1 [14].

Next, a necessary and sufficient condition for the mean-square strong stabilization of the system (15) is given.

Theorem 2.

System (15) shows mean-square strong stabilization if and only if there are state feedback matrices such that

where

for each .

Proof.

The proof of this theorem can be obtained directly from the proof of Theorem 1. □
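To make the closed-loop test concrete, the following sketch assumes that the control enters only through the drift term, that is, x(k+1) = (A_i + B_i K_i) x(k) + Σ_l A_li x(k) w_l(k) in mode i, which is an assumption about the model rather than a restatement of (15). It uses the least-squares gain K = −B⁺A (with B⁺ the pseudoinverse) as a hypothetical candidate; this is not the explicit formula of Theorem 3, but for full-column-rank B it minimizes (A + BK)ᵀ(A + BK) in the positive semi-definite ordering, so it is a natural first gain to try. All names and numbers are illustrative.

```python
import numpy as np


def least_squares_gain(A, B):
    """Hypothetical candidate gain K = -pinv(B) @ A (not the paper's formula)."""
    return -np.linalg.pinv(B) @ A


def closed_loop_moment(A, B, K, noise_mats):
    """(A + B K)^T (A + B K) + sum_l A_l^T A_l for one mode (assumed model)."""
    A_cl = A + B @ K
    return A_cl.T @ A_cl + sum(A_l.T @ A_l for A_l in noise_mats)


# Hypothetical single-mode data.
A = np.array([[1.2, 0.3],
              [0.4, 0.5]])
B = np.array([[1.0],
              [0.0]])
noise = [0.2 * np.eye(2)]

K = least_squares_gain(A, B)
open_loop_max = np.linalg.eigvalsh(
    closed_loop_moment(A, B, np.zeros((1, 2)), noise)).max()
closed_loop_max = np.linalg.eigvalsh(closed_loop_moment(A, B, K, noise)).max()

print("K =", K)
print("open-loop max eigenvalue:", open_loop_max)      # above 1
print("closed-loop max eigenvalue:", closed_loop_max)  # below 1
```

In a multi-mode setting the same check would be repeated for every mode with its own gain.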

Next, the following theorem gives the necessary and sufficient condition for the system in (15) to show mean-square strong stabilization, and the design of its state feedback controller can also be obtained.

Theorem 3.

Suppose that, for each , has full column rank. Then, system (15) shows mean-square strong stabilization via a state feedback controller if and only if the following equations are true:

The explicit form of the state feedback matrix when can be obtained as follows:

where

and is an arbitrary matrix where for each .

Proof.

The proof is divided into two steps.

Step 1: In the first step, let us prove that condition (17) is equivalent to . First, is equivalent to there being a such that the following inequality sequence holds:

Using the matrix inversion lemma, we find that there is a such that

Then, using the Schur complement lemma, we find that it is also equivalent to there being a such that

Finally, according to Lemma 1, this is equivalent to

Step 2: In the second step of the proof, we only need to prove that is equivalent to the result that the system (15) can be strongly stabilized by Theorem 2.

Necessity: First, to prove the necessity, we only need to prove that the condition in (16) implies . Hence, we consider the following sequences of inequalities:

Then, since has full column rank, we have and . Furthermore, since the left side of the above inequality is non-negative, can be obtained.

Sufficiency: Since , one obtains that there are values of and such that

Hence, the solution can be obtained that:

which proves the theorem. □
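Step 1 of the proof relies on the Schur complement lemma. As a reminder, for symmetric Q and R, the block matrix [[Q, S], [Sᵀ, R]] is positive definite if and only if R > 0 and Q − S R⁻¹ Sᵀ > 0. The sketch below checks that the two tests agree on two small instances; the helper names and the matrices are hypothetical and serve only as an illustration.

```python
import numpy as np


def is_pos_def(M):
    """True if the symmetric matrix M is positive definite."""
    return bool(np.linalg.eigvalsh(M).min() > 0)


def schur_test(Q, S, R):
    """Test R > 0 and Q - S R^{-1} S^T > 0."""
    return is_pos_def(R) and is_pos_def(Q - S @ np.linalg.solve(R, S.T))


def block_test(Q, S, R):
    """Test positive definiteness of the full block matrix."""
    return is_pos_def(np.block([[Q, S], [S.T, R]]))


Q = 2.0 * np.eye(2)
R = np.array([[1.0]])
S_small = np.array([[1.0], [0.0]])  # Schur complement diag(1, 2): positive definite
S_large = np.array([[2.0], [0.0]])  # Schur complement diag(-2, 2): indefinite

for S in (S_small, S_large):
    print(schur_test(Q, S, R), block_test(Q, S, R))
```

Both tests return the same answer in each case, as the lemma predicts.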

Remark 3.

According to the assumption of Theorem 3, matrix has full column rank and is non-singular. Therefore, the inverse and square root of matrix in (16) and (17) both exist and are unique.

Remark 4.

The matrix can be obtained by singular value decomposition. Let have a singular value decomposition as follows:

where is a diagonal matrix composed of the singular values and , , and are unitary matrices. Since all left annihilators of can be written as , with X representing arbitrary nonsingular matrices, can be taken as .
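The construction in Remark 4 can be sketched with NumPy. In a full singular value decomposition B = U Σ Vᵀ, the columns of U beyond the rank of B span the orthogonal complement of the range of B, so their transpose is a left annihilator of maximal rank. The function name `left_annihilator`, the tolerance, and the example matrix are hypothetical.

```python
import numpy as np


def left_annihilator(B, tol=1e-10):
    """Return a maximal-rank matrix B_perp with B_perp @ B = 0, via the SVD."""
    U, s, _ = np.linalg.svd(B, full_matrices=True)
    rank = int(np.sum(s > tol))
    # Columns of U beyond the rank are orthogonal to the range of B.
    return U[:, rank:].T


# Hypothetical 3-by-2 matrix with full column rank.
B = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
B_perp = left_annihilator(B)

print(B_perp.shape)                   # one row, three columns
print(np.allclose(B_perp @ B, 0.0))   # annihilation property
```

Multiplying `B_perp` on the left by any nonsingular matrix gives another valid left annihilator, in line with the remark.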

Remark 5.

Compared with the traditional controller for mean-square stabilization, which requires a set of LMIs to be solved [22,24], Theorem 3 gives the explicit expression of the controller that makes the system mean-square strongly stabilized.

4. Mean-Square Strong Stabilization via Output Feedback

Although state feedback can improve the system performance more effectively than output feedback, the state variables often cannot be measured directly from outside the system, which makes the technical implementation of state feedback more complicated than that of output feedback. In contrast, output feedback uses only the measured output and is therefore easier to implement.

In this section, we consider the output feedback problem for the discrete-time Markov jump system (1). The system (1) is said to be mean-square strongly stabilized via an output feedback controller if there are output feedback matrices

denoted by , while for simplicity, such that

satisfies Definition 1.

The following theorem gives a necessary and sufficient condition for the system to show mean-square strong stabilization.

Theorem 4.

System (28) is mean-square strongly stabilized if and only if there are output feedback matrices such that

where

for each .

Proof.

The proof of this theorem can be obtained directly from the proof of Theorem 1. □

The following theorem gives a necessary and sufficient condition for mean-square strong stabilization via an output feedback controller and the explicit expressions of the output feedback matrix .

Theorem 5.

Suppose that for each , has full column rank and that has full row rank. Then, the system in (28) shows mean-square strong stabilization via an output feedback controller if and only if the following two equations are true:

and

The explicit expression for the output feedback matrix , when , can be obtained as follows:

where

and is an arbitrary matrix with .

Proof.

The proof is divided into two steps.

Step 1: In the first step, let us prove that conditions (30) and (31) are equivalent to and , respectively. First, is equivalent to there being a such that

Next, according to the matrix inversion lemma, this is equivalent to there being a such that

Using the Schur complement lemma, it is also equivalent to there being a such that

Finally, according to Lemma 1, this is equivalent to (30). The proof of (31) is similar to the above procedure.

Step 2: In the second step, we only need to prove that and are equivalent to (29).

Necessity: To prove the necessity, we only need to prove that the following inequality

implies and for by Theorem 4 and the properties of singular values. Hence, let us consider the following sequences of inequalities:

Next, since has full row rank, we have and . Then, according to the Schur complement lemma, (39) and are equivalent to

and . Once again, we can compute this formula in a similar way to the previous procedure in (38), and we obtain that (40) is equivalent to

Thus, and are true for the existing satisfying (38).

Then, one obtains

which proves the sufficiency. □

Remark 6.

The study of mean-square strong stability in Markov jump systems is still ongoing. For example, the conclusion of this paper can be extended to a broader context such as semi-Markov jump systems [28], monotone evolutions for the state vectors [29], and so on. In addition, the conditions of Theorems 3 and 5 can also be optimized for different aspects, in order to obtain a broader conclusion. These directions will be studied in the future.

5. Simulation

First, a mean-square strongly stable system is given, which can be verified by Theorem 1.

Example 1.

Consider a discrete-time Markov jump system (1) with two modes. The system parameters are given by

with initial distribution , , and the transition probability matrix

According to Theorem 1, the following can be obtained:

and

Therefore, the system is mean-square strongly stable.

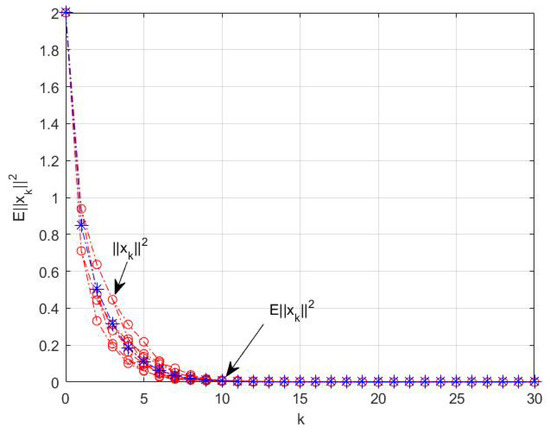

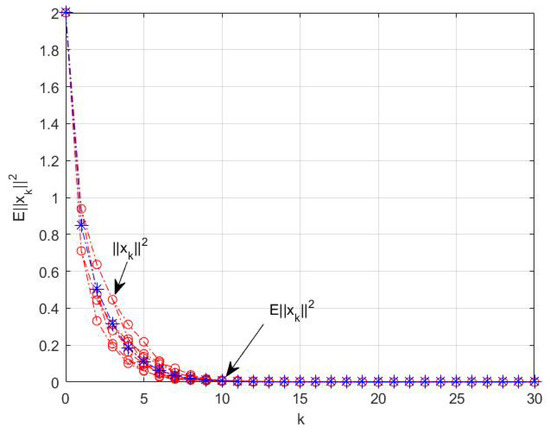

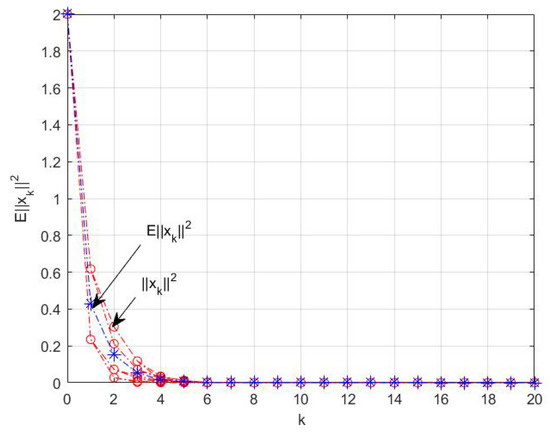

Taking , the system state curves are shown in Figure 1. It can be seen that the norm of the system state is convergent.

Figure 1. The norm curve of the system state with respect to the iteration step k in Example 1.
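A norm curve of this kind can be approximated by Monte Carlo simulation. The sketch below assumes an open-loop model x(k+1) = (A_i + w(k) A_1i) x(k) with a single scalar standard normal noise channel, where i is the current mode of the Markov chain. The function name `simulate_mean_square` and every matrix, probability, and initial state are hypothetical; they are not the parameters of Example 1.

```python
import numpy as np


def simulate_mean_square(A, A_noise, P, pi0, x0, steps, n_paths, seed=0):
    """Estimate E||x(k)||^2 for k = 0..steps by averaging over sample paths."""
    rng = np.random.default_rng(seed)
    n_modes = len(A)
    ms = np.zeros(steps + 1)
    for _ in range(n_paths):
        x = np.array(x0, dtype=float)
        mode = rng.choice(n_modes, p=pi0)        # draw r_0 from the initial law
        ms[0] += x @ x
        for k in range(steps):
            w = rng.standard_normal()            # zero mean, unit variance
            x = (A[mode] + w * A_noise[mode]) @ x
            ms[k + 1] += x @ x
            mode = rng.choice(n_modes, p=P[mode])  # Markov transition
    return ms / n_paths


# Hypothetical two-mode data.
A = [0.5 * np.eye(2), np.array([[0.3, 0.1], [0.0, 0.4]])]
A_noise = [0.1 * np.eye(2), 0.1 * np.eye(2)]
P = np.array([[0.7, 0.3],
              [0.4, 0.6]])
pi0 = np.array([0.5, 0.5])

ms = simulate_mean_square(A, A_noise, P, pi0, x0=[1.0, 1.0],
                          steps=20, n_paths=500)
print(np.sqrt(ms))  # estimated root-mean-square norm at each step
```

Plotting `np.sqrt(ms)` against the step index gives a curve comparable in spirit to the figure, although the numbers depend entirely on the assumed data.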

Next, a system that is not mean-square strongly stable is given, and the design problems of the state feedback controller and output feedback controller are discussed.

Example 2.

Consider a discrete-time Markov jump system (1) with two modes, where the parameters of the system are given by

with initial distribution , , and the transition probability matrix

According to Theorem 1, the following can be obtained:

and

Therefore, the system is not mean-square strongly stable.

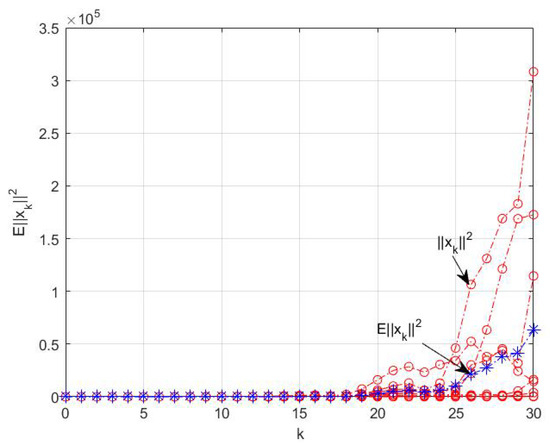

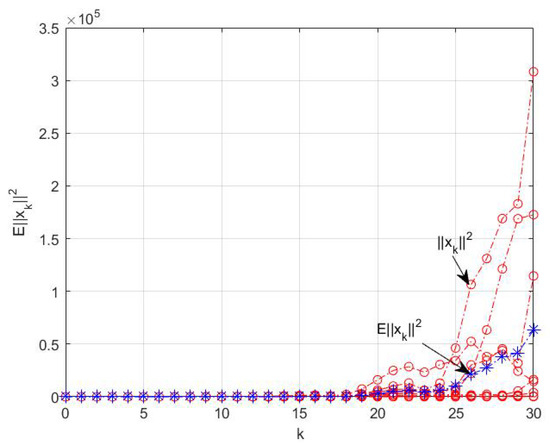

Taking , the system state curves are shown in Figure 2. It can be seen that the norm of the system state is divergent.

Figure 2. The norm curve of the system state with respect to the iteration step k in Example 2.

Next, we consider the problems of mean-square strong stabilization by state feedback and output feedback with respect to Example 2.

First, for the state feedback controller design, condition (17) of Theorem 3 must be satisfied; the verification proceeds as follows.

According to Remark 4, the singular value decomposition of is as follows.

In mode 1:

Then, we take

and obtain

which is a positive definite matrix because

Hence, by testing the conditions that has full column rank and

in Theorem 3, mode 1 satisfies the conditions of mean-square strong stabilization. Then, we can take

without loss of generality, and hence

from (18) and (19) in Theorem 3. According to Theorem 2, one obtains

In mode 2:

Then, we take

and obtain

which is a positive definite matrix because

Hence, by testing the conditions that has full column rank and

in Theorem 3, mode 2 satisfies the conditions for mean-square strong stabilization. Then, we can take

without loss of generality, and hence

from (18) and (19) in Theorem 3. According to Theorem 2, one obtains

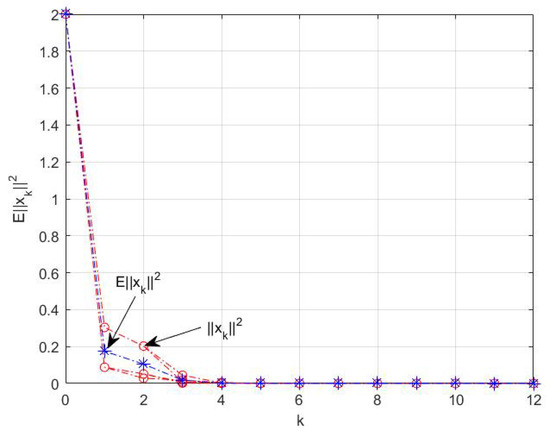

Taking , the closed-loop system state curves are shown in Figure 3. It can be seen that the system is mean-square strongly stabilized via state feedback control.

Figure 3. The norm curve of the closed-loop system state under state feedback with respect to the iteration step k in Example 2.

Next, we consider the problem of designing the static output feedback controller in the above example. First, we consider Example 2 with the output matrices as follows:

According to the above analysis, the system in Example 2 also satisfies the condition of (30) in Theorem 5. Hence, we only need to verify condition (31) in Theorem 5. As C and are square matrices, we consider the following procedure:

The eigenvalues of the above matrices are , respectively, and , . Hence we can design the output feedback controller for this system using Theorem 5, according to (32)–(34), and taking matrices as

without loss of generality. The explicit expressions for output feedback controllers can be obtained as follows:

Taking , the closed-loop system curves are shown in Figure 4. It can be seen that the system is mean-square strongly stabilized via output feedback control.

Figure 4. The norm curve of the closed-loop system state under output feedback with respect to the iteration step k in Example 2.

6. Conclusions

In this paper, the definition of the mean-square strong stability of discrete-time Markov jump systems was proposed, and the necessary and sufficient conditions for mean-square strong stability were obtained. Furthermore, several necessary and sufficient conditions guaranteeing mean-square strong stabilization via a state feedback controller and a static output feedback controller were derived. Moreover, the explicit expressions for the state feedback controller and static output feedback controller were provided. In future work, the conclusions of this paper could be extended to a broader context such as semi-Markov jump systems, monotone evolutions for the state vectors, and so on. In addition, Theorems 3 and 5 can also be optimized for some aspects, in order to obtain a broader conclusion.

Author Contributions

Formal analysis, Z.Y. and F.S.; methodology and funding acquisition, Z.Y.; investigation, software, and writing—original draft preparation and editing, F.S.; review and editing, F.S. and Z.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (61877062, 61977043, 62003181), and China Postdoctoral Science Foundation (2017M610425).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sazonov, I.; Grebennikov, D.; Meyerhans, A.; Bocharov, G. Markov Chain-Based Stochastic Modelling of HIV-1 Life Cycle in a CD4 T Cell. Mathematics 2021, 9, 2025.

- Zhong, S.; Zhang, W.; Yan, Z. Finite-time annular domain stability and stabilization for stochastic Markovian switching systems driven by Wiener and Poisson noises. Int. J. Robust Nonlinear Control 2020, 31, 2290–2304.

- Yan, Z.; Zhang, W.; Park, J.; Liu, X. Quantitative exponential stability and stabilization of discrete-time Markov jump systems with Multiplicative noises. IET Control Theory Appl. 2017, 11, 2886–2892.

- Wang, Z.; Liu, Y.; Liu, X. Exponential Stabilization of a Class of Stochastic System With Markovian Jump Parameters and Mode-Dependent Mixed Time-Delays. IEEE Trans. Autom. Control 2010, 55, 1656–1662.

- Deng, F.; Luo, Q.; Mao, X. Stochastic stabilization of hybrid differential equations. Automatica 2012, 48, 2321–2328.

- Zhao, J.; Yang, J.; Peng, S. Adaptive tracking for stochastic nonlinear systems with Markovian switching. IEEE Trans. Autom. Control 2010, 55, 2135–2141.

- Xu, S.; Chen, T. Robust control for uncertain discrete-time stochastic bilinear systems with Markovian switching. Int. J. Robust Nonlinear Control 2005, 15, 201–217.

- Yang, D.; Li, X.; Qiu, J. Output tracking control of delayed switched systems via state-dependent switching and dynamic output feedback. Nonlinear Anal. Hybrid Syst. 2019, 32, 294–305.

- Kang, Y.; Zhao, Y.B.; Zhao, P. Stability Analysis of Markovian Jump Systems; Springer: Berlin/Heidelberg, Germany, 2018.

- Zhao, P.; Kang, Y.; Zhao, Y.B. A Brief Tutorial and Survey on Markovian Jump Systems: Stability and Control. IEEE Syst. Man Cybern. Mag. 2019, 5, 37-C3.

- Wang, Z.; Wu, Y.; Mahmud, M.; Yuan, Z.; Zhao, Y.; Mantooth, H.A. Busbar design and optimization for voltage overshoot mitigation of a silicon carbide high-power three-phase t-type inverter. IEEE Trans. Power Electron. 2020, 36, 204–214.

- Moore, K.L.; Bhattacharyya, S.P. A technique for choosing zero locations for minimal overshoot. IEEE Trans. Autom. Control 1990, 35, 577–580.

- Halikias, G.D.; Papageorgiou, A.; Karcanias, N. Non-overshooting stabilisation via state and output feedback. Int. J. Control 2012, 83, 1232–1247.

- Halikias, G.D.; Dritsas, L.; Pantelous, A.; Tsoulkas, V. Strong stability of discrete-time systems. Linear Algebra Its Appl. 2012, 436, 1890–1908.

- Karcanias, N.; Halikias, G.D.; Papageorgiou, A. Strong stability of internal system descriptions. Int. J. Control 2010, 83, 182–205.

- Zhao, G.; Nesic, D.; Tan, Y.; Hua, C. Overcoming overshoot performance limitations of linear systems with reset control. Automatica 2019, 54, 289–294.

- Xi, K.; Dubbeldam, J.; Lin, H.X.; Schuppen, J.H. Power-Imbalance Allocation Control of Power Systems-Secondary Frequency Control. Automatica 2018, 92, 72–85.

- Zhou, B. On strong stability and robust strong stability of linear difference equations with two delays. Automatica 2019, 110, 0005–1098.

- Song, Y.; Michiels, W.; Zhou, B.; Duan, G.R. Strong stability analysis of linear delay difference equations with multiple time delays. IEEE Trans. Autom. Control 2021, 66, 3741–3748.

- Peng, D.; Li, X.; Rakkiyappan, R.; Ding, Y. Stabilization of stochastic delayed systems: Event-triggered impulsive control. Appl. Math. Comput. 2021, 401, 126054.

- Zhao, Y.; Li, X.; Cao, J. Global exponential stability for impulsive systems with infinite distributed delay based on flexible impulse frequency. Appl. Math. Comput. 2020, 386, 125467.

- Costa, O.L.V.; Fragoso, M.D. Stability results for discrete-time linear systems with Markovian jumping parameters. J. Math. Anal. Appl. 1993, 179, 154–178.

- Bouhtouri, A.E.; Hinrichsen, D.; Pritchard, A.J. Stability radii of discrete-time stochastic systems with respect to blockdiagonal perturbations. Automatica 2000, 36, 1033–1040.

- Costa, O.L.V.; Fragoso, M.D.; Marques, R.P. Discrete-Time Markov Jump Linear Systems; Springer: London, UK, 2005.

- Nielsen, R.; Wakeley, J. Distinguishing migration from isolation: A Markov chain Monte Carlo approach. Genetics 2001, 158, 885–896.

- Catak, M. Application of Markov chains on image enhancement. Neural Comput. Appl. 2014, 25, 1119–1123.

- Skelton, R.E.; Iwasaki, T.; Grigoriadis, K. A Unified Approach to Linear Control Design; Taylor and Francis Series in Systems and Control; Taylor & Francis: Bristol, VA, USA, 2017.

- Verbeken, B.; Guerry, M.A. Discrete time hybrid Semi-Markov models in manpower planning. Mathematics 2021, 9, 1681.

- Guerry, M.A.; Carette, P. On monotonically proceeding structures and stepwise increasing transition matrices of Markov chains. Commun. Stat.-Theory Methods 2020, 51, 51–67.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).