Abstract

This paper investigates the problem of computing the time-varying {2,3}- and {2,4}-inverses through the zeroing neural network (ZNN) method, which is presently regarded as a state-of-the-art method for computing the time-varying matrix Moore–Penrose inverse. As a result, two new ZNN models, dubbed ZNN23I and ZNN24I, for the computation of the time-varying {2,3}- and {2,4}-inverses, respectively, are introduced, and the effectiveness of these models is evaluated. Numerical experiments investigate and confirm the efficiency of the proposed ZNN models for computing the time-varying {2,3}- and {2,4}-inverses.

MSC:

15A24; 65F20; 68T05

1. Introduction and Preliminaries

Generalized inverses are fundamental tools in mathematics, particularly in linear algebra, and are widely used in engineering. Applications include monitoring humanoid robot movements [1], regulating orbit and attitude control systems through force and torque actuators [2], fault-tolerant control of the short-period mode of an aircraft [3], and tracking angle-of-arrival in localization systems [4], wireless communications [5] and navigation [6]. The following four Penrose equations are intimately related to the representation and calculation of many generalized inverses:

$$\text{(1)}\ AXA = A, \qquad \text{(2)}\ XAX = X, \qquad \text{(3)}\ (AX)^{*} = AX, \qquad \text{(4)}\ (XA)^{*} = XA,$$

where $(\cdot)^{*}$ denotes the conjugate transpose.

The set of all matrices satisfying the conditions contained in a subset $\mathcal{S} \subseteq \{1,2,3,4\}$ is denoted by $A\{\mathcal{S}\}$. The set of all $\mathcal{S}$-inverses of $A$ with rank $s$ is denoted by $A\{\mathcal{S}\}_s$. Those inverses that satisfy Penrose equations (2) and (3) are known as {2,3}-inverses, while those that satisfy Penrose equations (2) and (4) are known as {2,4}-inverses. For additional significant properties of generalized inverses, see [7,8]. The best known of all generalized inverses is the Moore–Penrose inverse (MPI), or pseudoinverse, of a matrix A, which generalizes the ordinary inverse $A^{-1}$ of a nonsingular matrix. The Moore–Penrose inverse satisfies all four Penrose equations and has been studied thoroughly over the years. Usually, the MPI is computed using the singular value decomposition method [7,8]. However, over the last decades, a variety of other methods for computing the MPI have been developed, such as those in [9,10]. Fast algorithms for the MPI of singular square matrices and rectangular matrices are proposed in [9], whereas [10] presents an error-bounds method for the MPI of an arbitrary rectangular or singular complex matrix that places no restrictions on the rank of the matrix.
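To make the classification concrete, the following minimal sketch checks which Penrose equations a candidate matrix X satisfies for a given real matrix A, so the conjugate transpose reduces to the ordinary transpose. The helper names (`matmul`, `transpose`, `close`, `penrose_set`) and the 2×2 example are illustrative assumptions, not part of the paper. In the example, X satisfies XAX = X and (AX)^T = AX only, so it is a {2,3}-inverse of A that is neither a {1}- nor a {4}-inverse.

```python
def matmul(P, Q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]


def transpose(P):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*P)]


def close(P, Q, tol=1e-12):
    """Entry-wise comparison of two equally sized matrices."""
    return all(abs(p - q) <= tol
               for rp, rq in zip(P, Q) for p, q in zip(rp, rq))


def penrose_set(A, X, tol=1e-12):
    """Return the labels of the Penrose equations that X satisfies for real A."""
    AX, XA = matmul(A, X), matmul(X, A)
    checks = {
        1: close(matmul(AX, A), A, tol),   # AXA = A
        2: close(matmul(XA, X), X, tol),   # XAX = X
        3: close(transpose(AX), AX, tol),  # (AX)^T = AX
        4: close(transpose(XA), XA, tol),  # (XA)^T = XA
    }
    return {label for label, ok in checks.items() if ok}


# Hypothetical example: X is a {2,3}-inverse of A, but not a {1}- or {4}-inverse.
A = [[1.0, 1.0], [0.0, 1.0]]
X = [[1.0, 0.0], [0.0, 0.0]]
print(penrose_set(A, X))  # {2, 3}
```

For a nonsingular scalar such as A = [[2.0]] with X = [[0.5]], the same function returns all four labels, which matches the fact that the ordinary inverse is also the MPI.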

The zeroing neural network (ZNN) method is presently regarded as a state-of-the-art method for computing the time-varying MPI. ZNN dynamical systems were initially developed for tracking the time-varying matrix inverse [11], and subsequent versions were dynamic models for determining the time-varying MPI of full-column or full-row rank matrices [12,13,14,15]. Further development has produced models for computing various generalized inverses [16,17], including the ML-weighted MPI [18], the Drazin inverse [19], and outer inverses [20]. Additionally, the ZNN method has been extensively studied and applied to a wide range of time-varying problems; its main applications are systems of linear matrix equations [21,22,23,24], quadratic optimization problems [25,26], and the approximation of various matrix functions [27,28,29].

This paper proposes, for the first time, two ZNN models, dubbed ZNN23I and ZNN24I, for the computation of two special classes of generalized inverses, the time-varying {2,3}- and {2,4}-inverses, respectively.

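A few of the paper’s basic symbols are worth noting: $\mathbb{R}^{m\times n}$ and $\mathbb{C}^{m\times n}$, respectively, signify the sets of all $m\times n$ real and complex matrices; $\mathbb{R}^{m\times n}_r$ and $\mathbb{C}^{m\times n}_r$, respectively, signify the sets of all $m\times n$ real and complex matrices of rank r; $0_{m\times n}$ and $1_{m\times n}$, respectively, denote the $m\times n$ zero and all-ones matrices; $I_n$ signifies the $n\times n$ identity matrix; ⊗ signifies the Kronecker product; $\mathrm{vec}(\cdot)$ signifies the vectorization procedure; $\|\cdot\|_F$ signifies the matrix Frobenius norm; $(\cdot)^{\mathrm{T}}$ denotes transposition; r denotes the rank of matrix A; $t$ denotes the time; and $\dot{(\ )}$ denotes the time derivative.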

The general representations of {2,3}- and {2,4}-inverses are restated from [30,31,32] in the following Proposition 1.

Proposition 1

([30]). Let be an arbitrary matrix and , where , and assume the two arbitrary matrices and with the properties and . The next declarations for {2,3}- and {2,4}-inverses are then true:

- ,

- .

Based on Proposition 1 and without loss of generality, we consider a time-varying matrix and two constant arbitrary real matrices and with the properties r r and r r. It is important to keep in mind that although Proposition 1 applies for complex matrices, it also holds for real matrices. As a result, our research is focused on the following Proposition 2.

Proposition 2.

Let be a time-varying arbitrary matrix and , where , and assume the two arbitrary matrices and with the properties and . The next declarations for time-varying {2,3}- and {2,4}-inverses are then true:

- ,

- .

Proof.

The proof follows the same arguments as in the proof of Lemma 2.1 from [30]. □

The subject of this paper is the computation of the time-varying {2,3}- and {2,4}-inverses based on Proposition 2 using the ZNN method [11]. ZNN is inspired by the Hopfield neural network, which falls within the class of recurrent neural networks and is utilized to generate online solutions to time-varying problems by zeroing matrix equations. The first step in producing ZNN dynamics is to define an error matrix equation, $E(t)$, for the underlying problem. The next step employs the dynamical evolution:

$$\dot{E}(t) = -\lambda \mathcal{F}\big(E(t)\big), \qquad (1)$$

where $\lambda > 0$ is a design parameter for scaling the convergence, whereas $\mathcal{F}(\cdot)$ denotes element-wise use of an odd and increasing activation function on $E(t)$. The ZNN evolution (1) is explored under the linear activation function in our research, which yields the next formula:

$$\dot{E}(t) = -\lambda E(t). \qquad (2)$$
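As a small illustration of the linear ZNN design, consider a hypothetical scalar problem that is not taken from the paper: tracking x(t) = 1/a(t) for a known, positive, time-varying coefficient a(t). Writing `lam` for the design parameter, the error is defined as E(t) = a(t)x(t) − 1, and imposing E'(t) = −lam·E(t) gives an explicit expression for x'(t). The sketch integrates that expression with a fixed-step fourth-order Runge–Kutta scheme; the coefficient `a`, the step count, and the value of `lam` are all assumptions chosen for illustration.

```python
import math


def a(t):
    """Hypothetical time-varying coefficient (an assumption, always positive)."""
    return 2.0 + math.sin(t)


def a_dot(t):
    """Time derivative of a(t)."""
    return math.cos(t)


def x_dot(t, x, lam):
    """ZNN dynamics for E(t) = a(t) x(t) - 1 under the linear design E' = -lam E.

    Differentiating E gives a'(t) x + a(t) x' = -lam (a(t) x - 1),
    which is solved here for x'.
    """
    return (-lam * (a(t) * x - 1.0) - a_dot(t) * x) / a(t)


def simulate(lam=10.0, x0=0.0, t_end=1.0, steps=1000):
    """Integrate the model with the classical fourth-order Runge-Kutta method."""
    h = t_end / steps
    t, x = 0.0, x0
    for _ in range(steps):
        k1 = x_dot(t, x, lam)
        k2 = x_dot(t + h / 2, x + h * k1 / 2, lam)
        k3 = x_dot(t + h / 2, x + h * k2 / 2, lam)
        k4 = x_dot(t + h, x + h * k3, lam)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return t, x


t_end, x_end = simulate()
error = a(t_end) * x_end - 1.0
# With x0 = 0 the initial error is -1, so |E(1)| should be close to exp(-10).
print(abs(error), math.exp(-10.0))
```

Starting from x(0) = 0, the initial error is −1, and the error magnitude at t = 1 is close to exp(−lam), which is the decay that the linear design prescribes.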

Below are the key points of this research:

- Two new ZNN models, called ZNN23I and ZNN24I, respectively, for the computation of the time-varying {2,3}- and {2,4}-inverses are introduced and investigated.

- The ZNN23I and ZNN24I models are effective for the computation of the time-varying {2,3}- and {2,4}-inverses, respectively, according to four representative numerical experiments.

The rest of the paper is organized as follows. The ZNN24I model for the computation of the time-varying {2,4}-inverse is defined and analysed in Section 2, while the ZNN23I model for the computation of the time-varying {2,3}-inverse is described and analysed in Section 3. The computational complexity of the ZNN23I and ZNN24I models is presented in Section 4. The results of four experiments for the computation of the time-varying {2,3}- and {2,4}-inverses are shown and analysed in Section 5. Finally, Section 6 presents concluding remarks.

2. Design of a ZNN Model for Computing Time-Varying {2,4}-Inverses

This section introduces and analyses the ZNN model, called ZNN24I, that computes the time-varying {2,4}-inverse. Consider that is a differentiable matrix, and with is a matrix of normally distributed random numbers. Then, replacing and in Proposition 2, the following group of equations holds in the case of the {2,4}-inverse:

In the ZNN24I model, we consider the two unknowns, and , of (3) to be found. However, since (3) contains several unknowns, the ZNN method is more effective when the complex matrices are decomposed into real and imaginary parts [28].

By decomposing the matrices , and into real and imaginary parts:

where , (3) can be rewritten in the following form:

For zeroing the real and imaginary parts of (5), we set the following group of error matrix equations (GEME):

where and . Furthermore, considering and , the first derivative of GEME (6) is:

Substituting defined in (7) in place of into (2) and solving in terms of , one obtains:

To simplify the dynamic model of (8), the Kronecker product and vectorization techniques are applied as below:

Additionally, by setting

the next ZNN model, called ZNN24I, for computing the time-varying {2,4}-inverse of an input matrix is obtained:

which may effectively be addressed by utilizing an ode MATLAB solver. It is worth noting that , while is a nonsingular mass matrix. According to Theorem 1, the ZNN24I model (11) converges to the theoretical solution (TS) of the time-varying {2,4}-inverse.
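The Kronecker product and vectorization step used in this derivation rests on the standard identity vec(AXB) = (Bᵀ ⊗ A) vec(X), where vec stacks the columns of a matrix. The following sketch checks the identity on small integer matrices; the helper names and the matrices are hypothetical and only illustrate the technique, not the specific ZNN24I model.

```python
def matmul(P, Q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]


def transpose(P):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*P)]


def kron(P, Q):
    """Kronecker product of P and Q as a list of rows."""
    return [[p * q for p in prow for q in qrow]
            for prow in P for qrow in Q]


def vec(P):
    """Stack the columns of P into a single list (column-major order)."""
    return [P[i][j] for j in range(len(P[0])) for i in range(len(P))]


def matvec(P, v):
    """Multiply a matrix by a vector."""
    return [sum(p * x for p, x in zip(row, v)) for row in P]


# Hypothetical integer matrices, so the comparison below is exact.
A = [[1, 2], [3, 4]]            # 2 x 2
X = [[1, 0, 2], [0, 1, -1]]     # 2 x 3
B = [[1, 0], [2, 1], [0, 3]]    # 3 x 2

lhs = vec(matmul(matmul(A, X), B))
rhs = matvec(kron(transpose(B), A), vec(X))
print(lhs == rhs)  # True
```

The identity turns a matrix equation in an unknown X into an ordinary linear system in vec(X), which is the form that standard ODE solvers expect.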

Theorem 1.

Assume that is a differentiable matrix and with is a matrix of normally distributed random numbers. At each time , the ZNN24I model (11) converges to the TS exponentially, beginning from any starting point . Additionally, the TS of the time-varying {2,4}-inverse is the last components of .

Proof.

To obtain the TS of the time-varying {2,4}-inverse, the GEME is defined as in (6). Using the linear ZNN design (2) for zeroing (6), the model (8) is acquired. From ([11], Theorem 1), each equation in the GEME (8) converges to the TS when $t \to \infty$. Therefore, the solution of (8) converges to the TS when $t \to \infty$. Since (11) is actually another form of (8), the ZNN24I model (11) also converges to the TS when $t \to \infty$. According to (10), the TS of the time-varying {2,4}-inverse is the last components of . The proof is thus completed. □
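The exponential rate in Theorem 1 can be made explicit for the linear design. Writing $e_{ij}(t)$ for an arbitrary entry of an error matrix $E(t)$ and $\lambda > 0$ for the design parameter (notation assumed here for illustration), each entry obeys a decoupled scalar equation, so that:

```latex
\dot{e}_{ij}(t) = -\lambda\, e_{ij}(t)
\;\Longrightarrow\;
e_{ij}(t) = e_{ij}(0)\, e^{-\lambda t},
\qquad\text{hence}\qquad
\|E(t)\|_F = \|E(0)\|_F\, e^{-\lambda t} \;\longrightarrow\; 0
\quad (t \to \infty).
```

Under this reading, every error entry decays at the same rate $\lambda$ regardless of the starting point, which is why a larger design parameter shortens the transient.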

3. Design of a ZNN Model for Computing Time-Varying {2,3}-Inverses

This section introduces and analyses the ZNN model, called ZNN23I, that computes the time-varying {2,3}-inverse. Consider that is a differentiable matrix and with is a matrix of normally distributed random numbers. Then, replacing and in Proposition 2, the following group of equations holds in the case of the {2,3}-inverse:

Similar to the ZNN24I model, we consider the two unknowns, and , of (12) to be found in the ZNN23I model, which, as previously stated, performs better when the complex matrices are divided into real and imaginary parts.

By decomposing the matrices , and into real and imaginary parts, as in (4), (12) can be rewritten in the following form:

For zeroing the real and imaginary parts of (13), we set the following GEME:

where and . Moreover, considering and , the first derivative of GEME (14) is:

Substituting defined in (15) in place of into (2) and solving in terms of , one obtains:

To simplify the dynamic model of (16), the Kronecker product and vectorization techniques are applied as below:

Additionally, by setting

the next ZNN model, called ZNN23I, for computing the time-varying {2,3}-inverse of an input matrix is obtained:

which may effectively be addressed by utilizing an ode MATLAB solver. It is worth noting that , while is a nonsingular mass matrix. According to Theorem 2, the ZNN23I model (19) converges to the TS of the time-varying {2,3}-inverse.
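One way to picture how a model in mass-matrix form is advanced in time is sketched below for a generic system M(t)x'(t) = f(t, x(t)). The 2×2 mass matrix `mass`, the right-hand side `rhs`, and the fixed-step Runge–Kutta integrator are hypothetical stand-ins: they are not the ZNN23I model, and ode15s itself is an implicit variable-order solver rather than the explicit scheme used here. The point is only that each slope evaluation requires one linear solve with the nonsingular mass matrix.

```python
import math


def mass(t):
    """Hypothetical nonsingular 2x2 mass matrix M(t); its determinant is 1."""
    return [[1.0, t], [0.0, 1.0]]


def rhs(t, x):
    """Hypothetical right-hand side f(t, x), chosen so that x(t) = (cos t, sin t)."""
    return [-x[1] + t * x[0], x[0]]


def solve2(M, b):
    """Solve the 2x2 linear system M k = b by Cramer's rule."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [(b[0] * M[1][1] - M[0][1] * b[1]) / det,
            (M[0][0] * b[1] - b[0] * M[1][0]) / det]


def slope(t, x):
    """Recover x'(t) from M(t) x' = f(t, x) with one linear solve."""
    return solve2(mass(t), rhs(t, x))


def rk4(x, t_end, steps):
    """Classical fixed-step fourth-order Runge-Kutta integration from t = 0."""
    h = t_end / steps
    t = 0.0
    for _ in range(steps):
        k1 = slope(t, x)
        k2 = slope(t + h / 2, [xi + h * ki / 2 for xi, ki in zip(x, k1)])
        k3 = slope(t + h / 2, [xi + h * ki / 2 for xi, ki in zip(x, k2)])
        k4 = slope(t + h, [xi + h * ki for xi, ki in zip(x, k3)])
        x = [xi + h * (s1 + 2 * s2 + 2 * s3 + s4) / 6
             for xi, s1, s2, s3, s4 in zip(x, k1, k2, k3, k4)]
        t += h
    return x


x_end = rk4([1.0, 0.0], 1.0, 200)
# The computed state should be close to (cos 1, sin 1).
print(x_end, [math.cos(1.0), math.sin(1.0)])
```

In MATLAB, the same structure is usually expressed by passing the mass matrix to the solver through its options rather than inverting it by hand.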

Theorem 2.

Assume that is a differentiable matrix and with is a matrix of normally distributed random numbers. At each time , the ZNN23I model (19) converges to the TS exponentially, beginning from any initial point . Additionally, the TS of the time-varying {2,3}-inverse is the last components of .

Proof.

The proof follows the same arguments as the proof of Theorem 1 and is thus omitted. □

4. Computational Complexity of the ZNN23I and ZNN24I Models

Overall computational complexity of the ZNN24I and ZNN23I models involves the complexity of generating and solving (11) and (19), respectively. Since, per iteration, (11) and (19) contain multiplication operations and addition operations, the computational complexity of generating (11) and (19) is operations. It is worth mentioning that an implicit solver such as ode15s solves a linear system of equations at each step. Since (11) and (19) involve a matrix, the complexity of solving them is . Therefore, each of the proposed explicit designs, (11) and (19), has an overall complexity of .

5. Computational Evaluation of the ZNN23I and ZNN24I Models

This section investigates the performance of the ZNN24I (11) and ZNN23I (19) models in four numerical experiments (NEs) that involve computing time-varying {2,3}- and {2,4}-inverses. The initial conditions in all NEs have been set to

for the ZNN24I model and

for the ZNN23I model, whereas the matrices F and G are generated through MATLAB function randn with . We also used the functions and in the declaration of the input matrix . During the computation of all NEs, the MATLAB solver ode15s is used under the time interval . Additionally, for the NEs in Section 5.1 and Section 5.2, ode15s was utilized with the default relative error tolerance of , whereas for the NEs in Section 5.3 and Section 5.4, the relative error tolerance was set to . Finally, the ZNN design parameter was set to for the NEs in Section 5.1 and Section 5.2, in Section 5.3, and in Section 5.4.

5.1. Numerical Experiment 1

Consider a input matrix of rank 3 with the following real and imaginary parts:

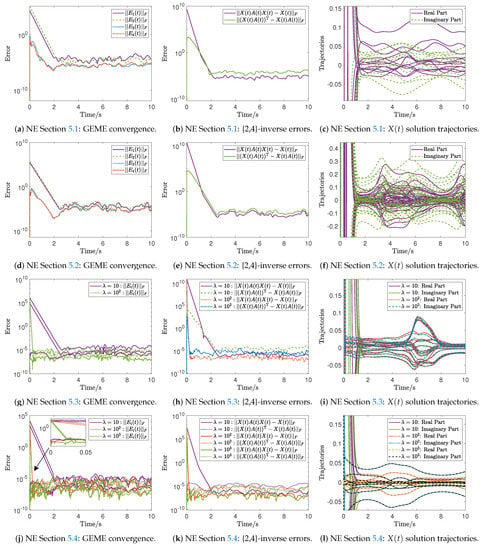

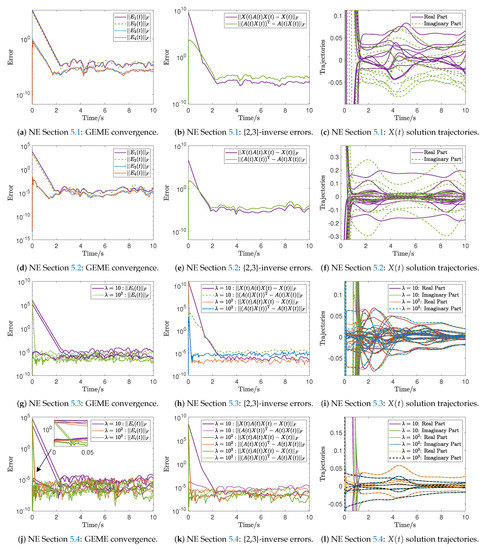

The findings of the ZNN24I and ZNN23I models are depicted in Figure 1a–c and Figure 2a–c, respectively.

Figure 1.

GEME convergence, {2,4}-inverse errors, and trajectories of the ZNN24I model’s solutions for the NEs in Section 5.1, Section 5.2, Section 5.3 and Section 5.4.

Figure 2.

GEME convergence, {2,3}-inverse errors, and trajectories of the ZNN23I model’s solutions for the NEs in Section 5.1, Section 5.2, Section 5.3 and Section 5.4.

5.2. Numerical Experiment 2

5.3. Numerical Experiment 3

5.4. Numerical Experiment 4

5.5. Discussion and Analysis of Numerical Experiments

The performance of the ZNN23I and ZNN24I models for computing time-varying {2,3}- and {2,4}-inverses, respectively, is investigated through the NEs in Section 5.1, Section 5.2, Section 5.3 and Section 5.4. In the case of the ZNN24I model, the findings for computing a time-varying {2,4}-inverse are presented in Figure 1, while in the case of the ZNN23I model, the findings for computing a time-varying {2,3}-inverse are presented in Figure 2. Particularly, the GEME convergence of the ZNN24I model is depicted in Figure 1a,d,g,j for the NEs in Section 5.1, Section 5.2, Section 5.3 and Section 5.4, respectively, and the GEME convergence of the ZNN23I model is depicted in Figure 2a,d,g,j for the NEs in Section 5.1, Section 5.2, Section 5.3 and Section 5.4, respectively. In all these figures, we observe that the GEME convergence begins at in the range , but it ends in different periods t depending on the value of . Particularly, in Figure 1a,d,g,j, we observe that the GEME convergence ends before , with the lowest values in the range . In Figure 1d,j and Figure 2d,j, we observe that the GEME convergence ends before for and before for , with the lowest values in the range . In Figure 1j and Figure 2j, we observe that the GEME convergence ends before for , before for , and before for , with the lowest values in the range . That is, the GEME of the ZNN23I and ZNN24I models begins from a non-optimal initial condition and converges to the zero matrix. It is important to note that the relative error tolerance value defined in ode15s determines the GEME’s lowest values.

Furthermore, the {2,4}-inverse errors of the ZNN24I model are depicted in Figure 1b,e,h,k for the NEs in Section 5.1, Section 5.2, Section 5.3, Section 5.4, respectively, and the {2,3}-inverse errors of the ZNN23I model are depicted in Figure 2b,e,h,k for the NEs in Section 5.1, Section 5.2, Section 5.3, Section 5.4, respectively. In all these figures, we observe that the error begins at in the range , but it ends in different periods t depending on the value of , while its lowest values depend on the relative error tolerance value defined in ode15s. In other words, the {2,3}- and {2,4}-inverse errors of the ZNN23I and ZNN24I models exhibit the same convergence tendency as their associated GEMEs. Finally, the solution trajectories of the ZNN24I model are depicted in Figure 1c,f,i,l for the NEs in Section 5.1, Section 5.2, Section 5.3, Section 5.4, respectively, and the solution trajectories of the ZNN23I model are depicted in Figure 2c,f,i,l for the NEs in Section 5.1, Section 5.2, Section 5.3, Section 5.4, respectively. In all these figures, we observe that a particular pattern emerges in the {2,3}- and {2,4}-inverse trajectories before for , before for , and before for .

The following is deduced from the NEs of this section. The GEMEs of the ZNN23I and ZNN24I models start from a non-optimal initial condition and converge to the zero matrix in a short amount of time. The relative error tolerance value defined in ode15s determines the GEME’s lowest values, while the time t at which the GEME convergence ends depends on the value of the design parameter. The {2,3}- and {2,4}-inverse errors and the solution trajectories follow the same convergence tendency as the GEMEs. It is also worth noting that when the value of the design parameter is higher, the models converge faster and the overall error is lowered even further. In summary, the ZNN23I and ZNN24I models compute the time-varying {2,3}- and {2,4}-inverses, respectively, effectively in all NEs considered.
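The dependence of the convergence time on the design parameter can be quantified for the linear design, under which an error norm decays as ‖E(0)‖·exp(−lam·t). The sketch below computes the time at which that ideal decay reaches a given tolerance; the function name `settling_time`, the tolerance, and the three values of `lam` are hypothetical and are not the settings used in the NEs.

```python
import math


def settling_time(e0_norm, tol, lam):
    """Time at which e0_norm * exp(-lam * t) first drops to tol under E' = -lam E."""
    if e0_norm <= tol:
        return 0.0
    return math.log(e0_norm / tol) / lam


# Hypothetical design-parameter values: a tenfold increase in lam
# shortens the time needed to reach the tolerance by a factor of ten.
for lam in (10.0, 100.0, 1000.0):
    print(lam, settling_time(1.0, 1e-6, lam))
```

In practice the error floor is set by the solver tolerance rather than by the ideal decay, so increasing the design parameter shortens the transient but does not by itself lower that floor.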

6. Conclusions

In this paper, the problem of computing the time-varying {2,3}- and {2,4}-inverses through the ZNN method was investigated. To this end, two new ZNN models, called ZNN23I and ZNN24I, were proposed and examined for the computation of the time-varying {2,3}- and {2,4}-inverses, respectively. Theoretical results as well as four NEs show that the ZNN23I and ZNN24I models are effective for the computation of the time-varying {2,3}- and {2,4}-inverses, respectively.

Some potential study topics are listed below.

1. Future studies might examine the use of nonlinear activation functions [33,34] to accelerate the convergence speed of the ZNN dynamics.

2. ZNN designs with terminal convergence [35,36] could be employed to enhance the ZNN23I and ZNN24I models.

3. Since generalized inverses are crucial to mathematics, and specifically linear algebra, special types of generalized inverses, such as the time-varying {1,3}- and {1,4}-inverses, can be investigated in future works.

Author Contributions

All authors (X.L., C.-L.L., T.E.S., S.D.M. and V.N.K.) contributed equally. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is supported by Zhejiang Provincial Philosophy and Social Sciences Planning Project (20NDJC28Z) and is funded by the Humanities and Social Sciences Research Project of the Ministry of Education (21YJAZH044).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Nenchev, D.N.; Konno, A.; Tsujita, T. Chapter 2—Kinematics. In Humanoid Robots; Nenchev, D.N., Konno, A., Tsujita, T., Eds.; Butterworth-Heinemann: Oxford, UK, 2019; pp. 15–82.

2. Canuto, E.; Novara, C.; Massotti, L.; Carlucci, D.; Montenegro, C.P. Chapter 9—Orbit and attitude actuators. In Spacecraft Dynamics and Control; Canuto, E., Novara, C., Massotti, L., Carlucci, D., Montenegro, C.P., Eds.; Aerospace Engineering, Butterworth-Heinemann: Oxford, UK, 2018; pp. 463–520.

3. Ciubotaru, B.; Staroswiecki, M.; Christophe, C. Fault tolerant control of the Boeing 747 short-period mode using the admissible model matching technique. In Fault Detection, Supervision and Safety of Technical Processes 2006; Zhang, H.Y., Ed.; Elsevier Science Ltd.: Oxford, UK, 2007; pp. 819–824.

4. Huang, H.; Fu, D.; Xiao, X.; Ning, Y.; Wang, H.; Jin, L.; Liao, S. Modified Newton integration neural algorithm for dynamic complex-valued matrix pseudoinversion applied to mobile object localization. IEEE Trans. Ind. Inform. 2020, 17, 2432–2442.

5. Noroozi, A.; Oveis, A.H.; Hosseini, S.M.; Sebt, M.A. Improved algebraic solution for source localization from TDOA and FDOA measurements. IEEE Wirel. Commun. Lett. 2018, 7, 352–355.

6. Dempster, A.G.; Cetin, E. Interference localization for satellite navigation systems. Proc. IEEE 2016, 104, 1318–1326.

7. Ben-Israel, A.; Greville, T.N.E. Generalized Inverses: Theory and Applications, 2nd ed.; CMS Books in Mathematics; Springer: New York, NY, USA, 2003.

8. Wang, G.; Wei, Y.; Qiao, S.; Lin, P.; Chen, Y. Generalized Inverses: Theory and Computations; Springer: Berlin, Germany, 2018; Volume 53.

9. Katsikis, V.N.; Pappas, D.; Petralias, A. An improved method for the computation of the Moore–Penrose inverse matrix. Appl. Math. Comput. 2011, 217, 9828–9834.

10. Stanimirović, P.S.; Roy, F.; Gupta, D.K.; Srivastava, S. Computing the Moore-Penrose inverse using its error bounds. Appl. Math. Comput. 2020, 371, 124957.

11. Zhang, Y.; Ge, S.S. Design and analysis of a general recurrent neural network model for time-varying matrix inversion. IEEE Trans. Neural Netw. 2005, 16, 1477–1490.

12. Chai, Y.; Li, H.; Qiao, D.; Qin, S.; Feng, J. A neural network for Moore-Penrose inverse of time-varying complex-valued matrices. Int. J. Comput. Intell. Syst. 2020, 13, 663–671.

13. Sun, Z.; Li, F.; Jin, L.; Shi, T.; Liu, K. Noise-tolerant neural algorithm for online solving time-varying full-rank matrix Moore-Penrose inverse problems: A control-theoretic approach. Neurocomputing 2020, 413, 158–172.

14. Wu, W.; Zheng, B. Improved recurrent neural networks for solving Moore-Penrose inverse of real-time full-rank matrix. Neurocomputing 2020, 418, 221–231.

15. Zhang, Y.; Yang, Y.; Tan, N.; Cai, B. Zhang neural network solving for time-varying full-rank matrix Moore-Penrose inverse. Computing 2011, 92, 97–121.

16. Katsikis, V.N.; Stanimirović, P.S.; Mourtas, S.D.; Xiao, L.; Karabasević, D.; Stanujkić, D. Zeroing neural network with fuzzy parameter for computing pseudoinverse of arbitrary matrix. IEEE Trans. Fuzzy Syst. 2022, 30, 3426–3435.

17. Kornilova, M.; Kovalnogov, V.; Fedorov, R.; Zamaleev, M.; Katsikis, V.N.; Mourtas, S.D.; Simos, T.E. Zeroing neural network for pseudoinversion of an arbitrary time-varying matrix based on singular value decomposition. Mathematics 2022, 10, 1208.

18. Qiao, S.; Wei, Y.; Zhang, X. Computing time-varying ML-weighted pseudoinverse by the Zhang neural networks. Numer. Funct. Anal. Optim. 2020, 41, 1672–1693.

19. Qiao, S.; Wang, X.Z.; Wei, Y. Two finite-time convergent Zhang neural network models for time-varying complex matrix Drazin inverse. Linear Algebra Its Appl. 2018, 542, 101–117.

20. Wang, X.; Stanimirovic, P.S.; Wei, Y. Complex ZFs for computing time-varying complex outer inverses. Neurocomputing 2018, 275, 983–1001.

21. Katsikis, V.N.; Mourtas, S.D.; Stanimirović, P.S.; Zhang, Y. Solving complex-valued time-varying linear matrix equations via QR decomposition with applications to robotic motion tracking and on angle-of-arrival localization. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 3415–3424.

22. Stanimirović, P.S.; Katsikis, V.N.; Li, S. Hybrid GNN-ZNN models for solving linear matrix equations. Neurocomputing 2018, 316, 124–134.

23. Dai, J.; Tan, P.; Yang, X.; Xiao, L.; Jia, L.; He, Y. A fuzzy adaptive zeroing neural network with superior finite-time convergence for solving time-variant linear matrix equations. Knowl.-Based Syst. 2022, 242, 108405.

24. Xiao, L.; Tan, H.; Dai, J.; Jia, L.; Tang, W. High-order error function designs to compute time-varying linear matrix equations. Inf. Sci. 2021, 576, 173–186.

25. Mourtas, S.D.; Katsikis, V.N. Exploiting the Black-Litterman framework through error-correction neural networks. Neurocomputing 2022, 498, 43–58.

26. Mourtas, S.D.; Kasimis, C. Exploiting mean-variance portfolio optimization problems through zeroing neural networks. Mathematics 2022, 10, 3079.

27. Katsikis, V.N.; Stanimirović, P.S.; Mourtas, S.D.; Li, S.; Cao, X. Generalized inverses: Algorithms and applications. In Mathematics Research Developments; Chapter Towards Higher Order Dynamical Systems; Nova Science Publishers, Inc.: New York, NY, USA, 2021; pp. 207–239.

28. Katsikis, V.N.; Mourtas, S.D.; Stanimirović, P.S.; Zhang, Y. Continuous-time varying complex QR decomposition via zeroing neural dynamics. Neural Process. Lett. 2021, 53, 3573–3590.

29. Kovalnogov, V.N.; Fedorov, R.V.; Generalov, D.A.; Chukalin, A.V.; Katsikis, V.N.; Mourtas, S.D.; Simos, T.E. Portfolio insurance through error-correction neural networks. Mathematics 2022, 10, 3335.

30. Stanimirović, P.S.; Cvetković-Ilić, D.S.; Miljković, S.; Miladinović, M. Full-rank representations of {2,4},{2,3}-inverses and successive matrix squaring algorithm. Appl. Math. Comput. 2011, 217, 9358–9367.

31. Stanimirović, P.S.; Katsikis, V.N.; Pappas, D. Computation of {2,4} and {2,3}-inverses based on rank-one updates. Linear Multilinear Algebra 2018, 66, 147–166.

32. Shaini, B. Computing {2,4} and {2,3}-inverses using SVD-like factorizations and QR factorization. Filomat 2016, 30, 403–418.

33. Liao, B.; Zhang, Y. From different ZFs to different ZNN models accelerated via Li activation functions to finite-time convergence for time-varying matrix pseudoinversion. Neurocomputing 2014, 133, 512–522.

34. Wang, X.Z.; Ma, H.; Stanimirović, P.S. Nonlinearly activated recurrent neural network for computing the Drazin inverse. Neural Process. Lett. 2017, 46, 195.

35. Xiao, L. A finite-time convergent neural dynamics for online solution of time-varying linear complex matrix equation. Neurocomputing 2015, 167, 254–259.

36. Xiao, L. A nonlinearly-activated neurodynamic model and its finite-time solution to equality-constrained quadratic optimization with nonstationary coefficients. Appl. Soft Comput. 2016, 40, 252–259.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).