Daily Peak-Electricity-Demand Forecasting Based on Residual Long Short-Term Network

Abstract

1. Introduction

- Because it forecasts accurately, the residual LSTM can help consumers reduce demand charges by spreading out concentrated electricity demand;

- The residual LSTM can help consumers in individual buildings spread out electricity demand during peak hours. This reduces demand concentration at the regional level and contributes to the stable operation of the national power system.

2. Literature Review

3. Methodology

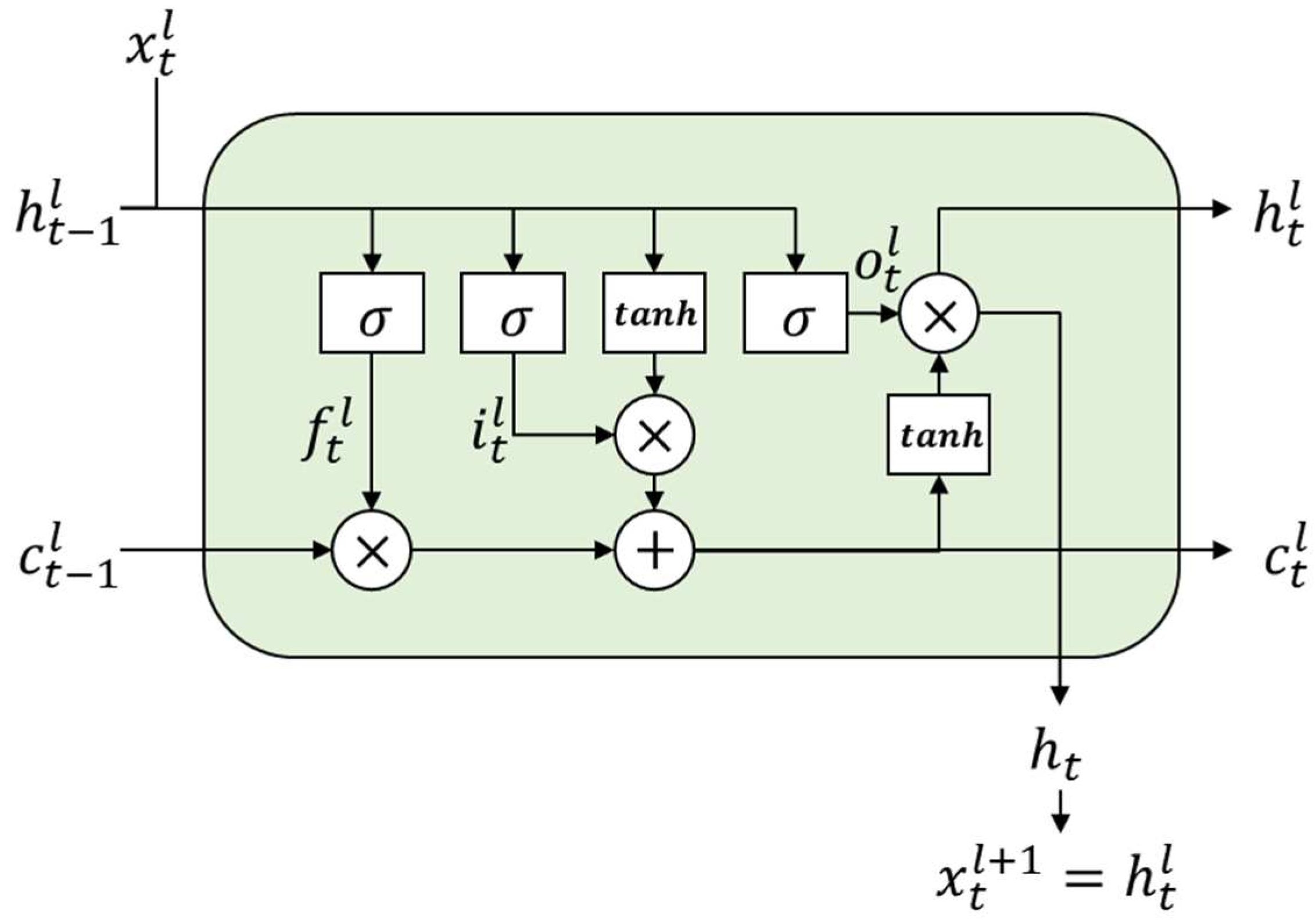

3.1. Long Short-Term Memory (LSTM)

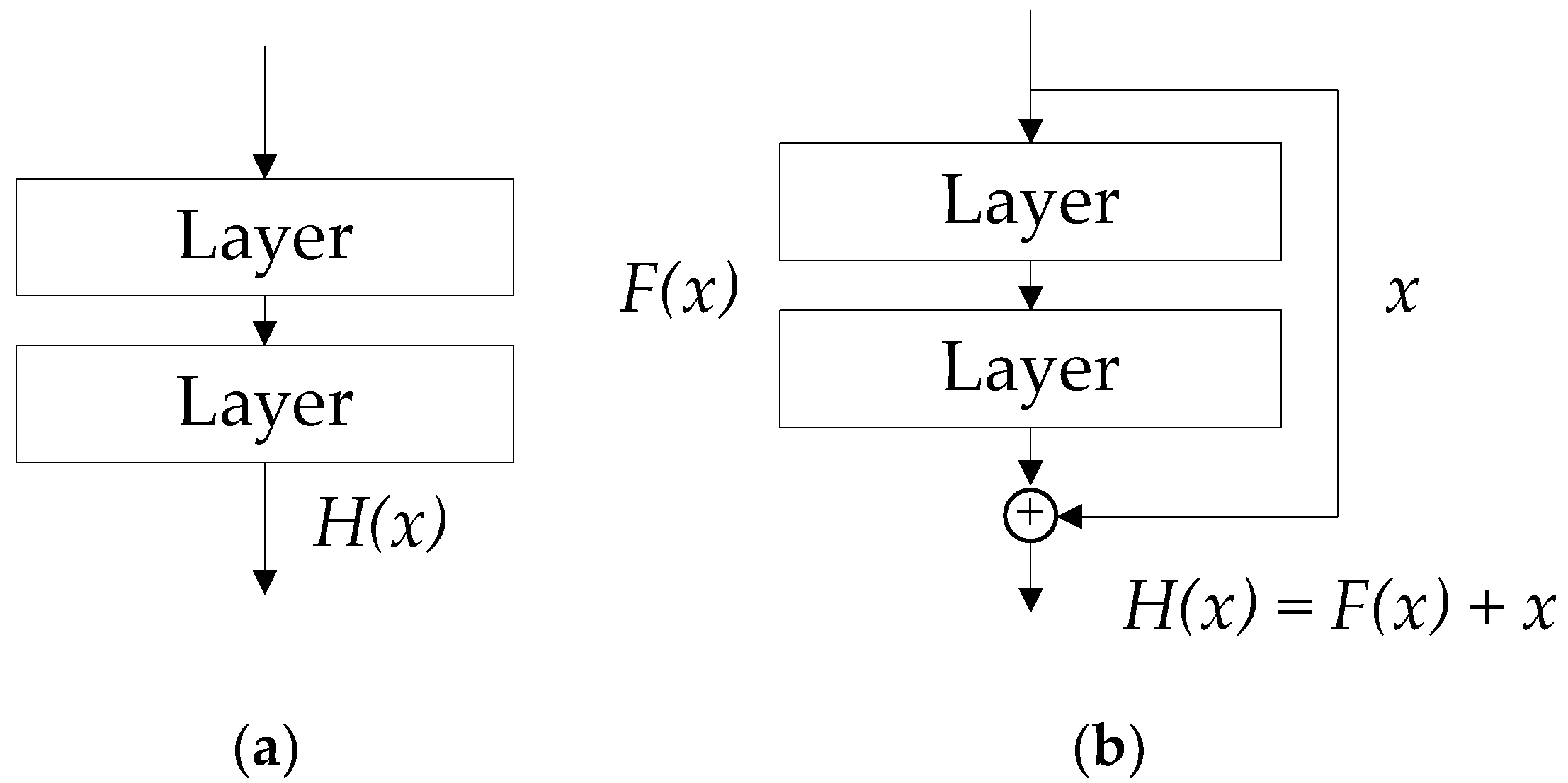

3.2. Residual Learning

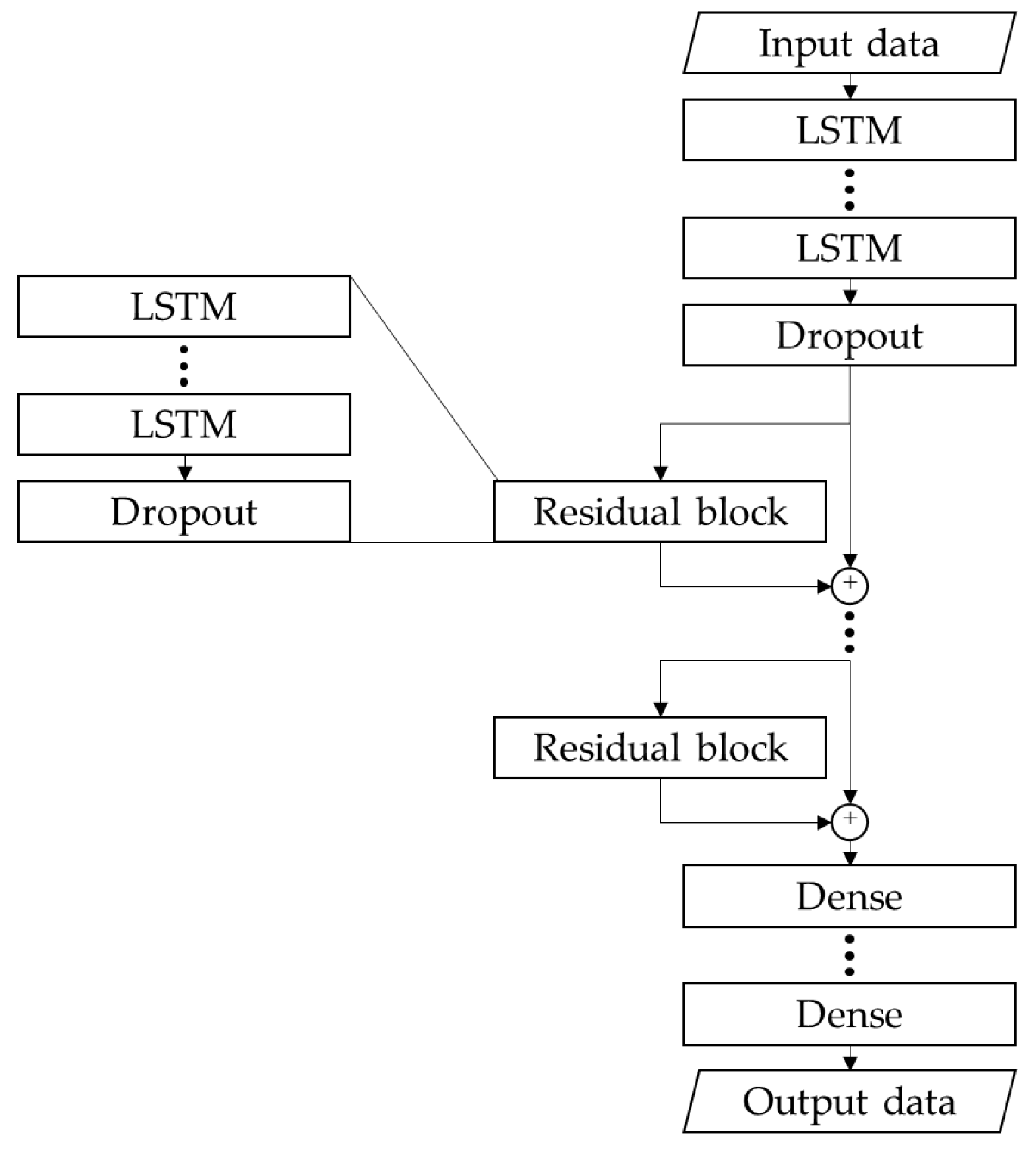

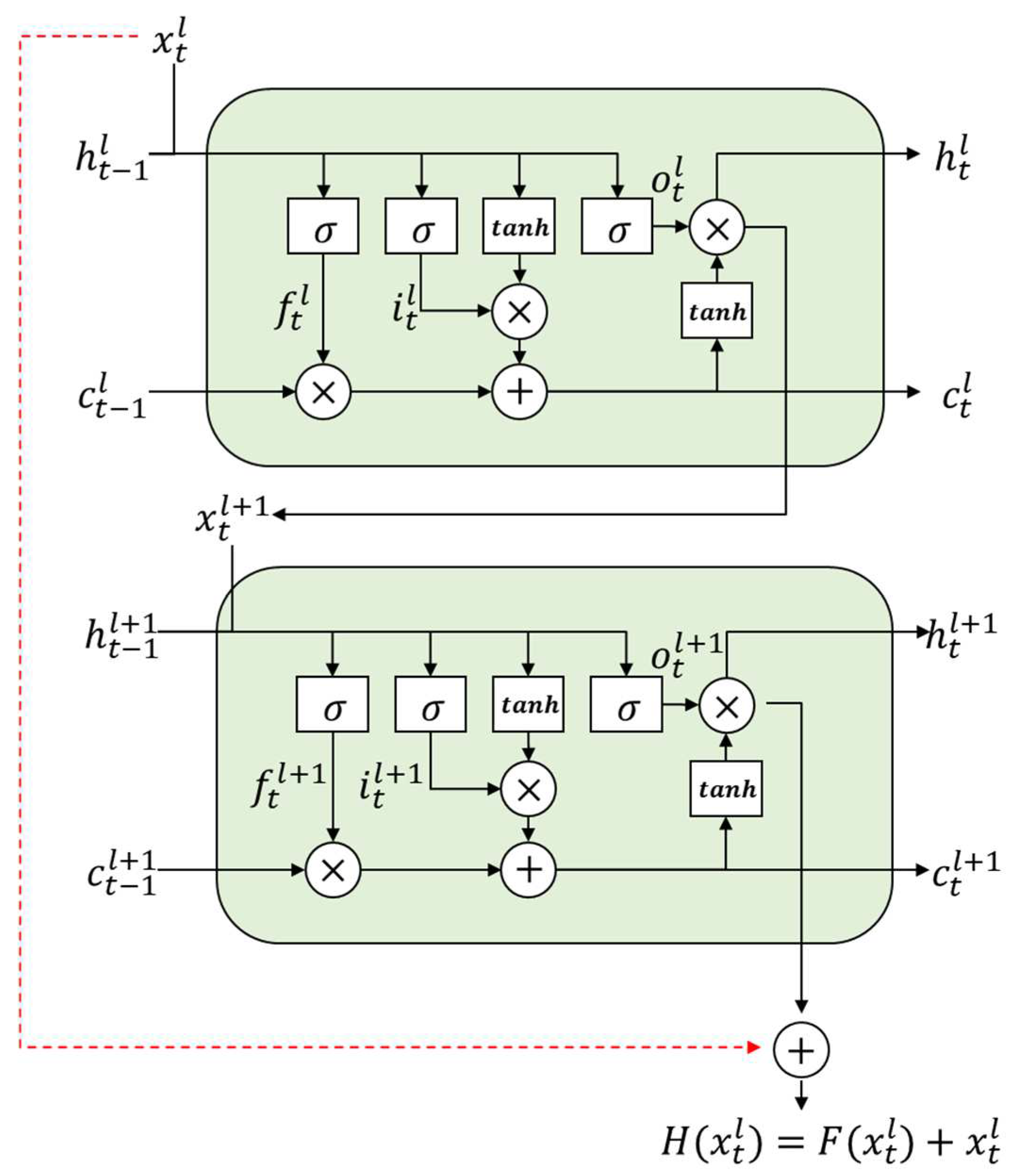

3.3. Residual LSTM

4. Experimental Procedure

4.1. Data Collection and Preprocessing

4.2. Benchmark Models

4.3. Hyperparameter Setting

4.4. Performance Measure

5. Results and Discussion

5.1. Peak-Demand Forecast Results

5.2. Overall and Hourly Forecast Results

5.3. Statistical Tests

- Null hypothesis (H0): The forecasting models have the same performance;

- Alternative hypothesis (H1): The performance of at least one model is statistically different from those of the other forecasting models.

6. Conclusions

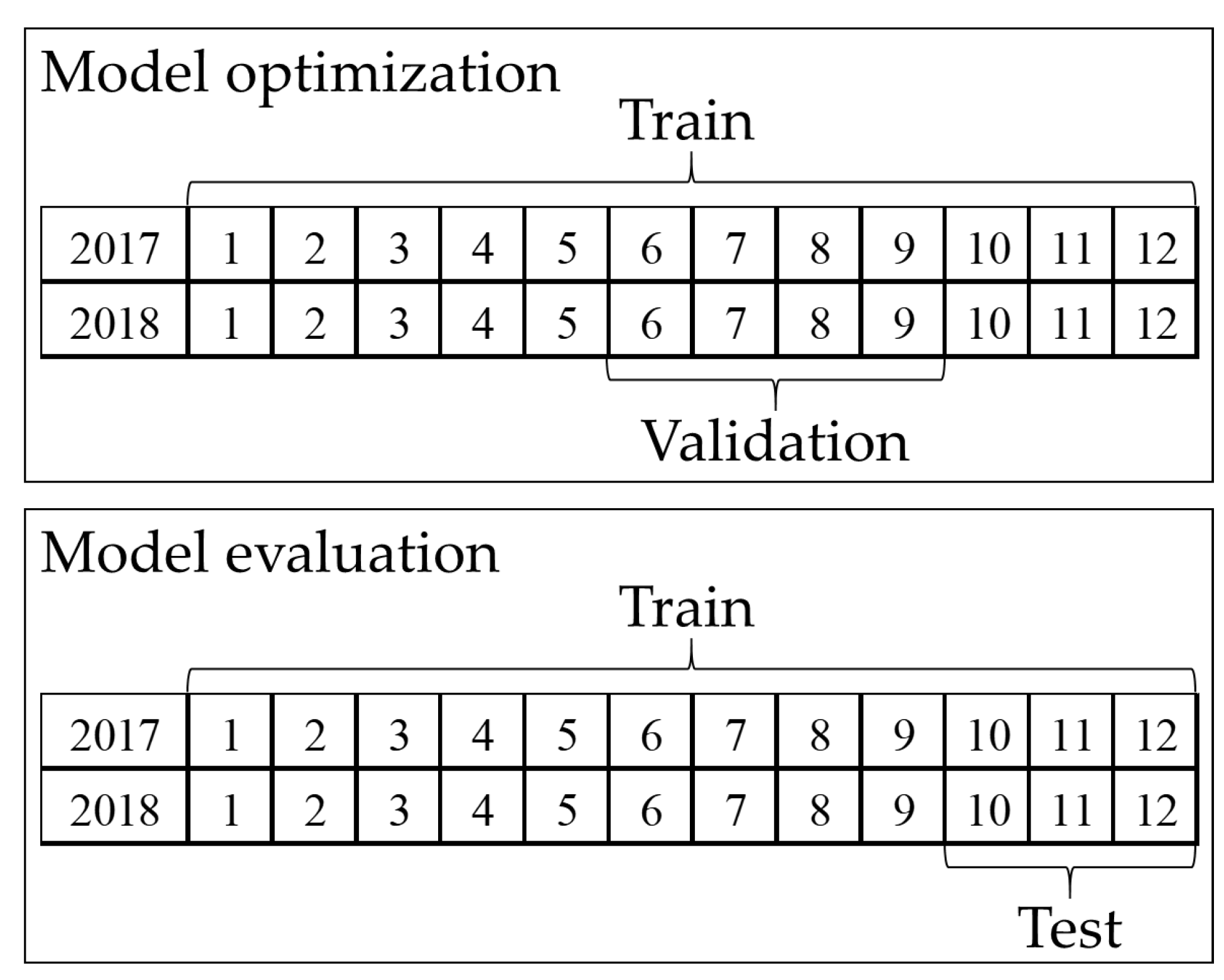

- The historical electricity demand and weather data between January 2017 and December 2018 were obtained for the area where the building used in the experiments was located;

- Three performance metrics, namely, MAPE, MAE, and RMSE, were used for assessing the performance of models when forecasting peak and next-day electricity demand;

- The RMSEs of the peak-demand forecasts by the MLP, LSTM, CNN LSTM, RICNN, and residual LSTM models were 11.85, 10.75, 11.13, 12.17, and 10.5 kW, respectively. Similarly, the RMSEs of the next-day electricity demand predicted by the models were 8.46, 7.7, 6.95, 7.73, and 6.91 kW, respectively;

- The performance evaluation of the models showed that the proposed residual LSTM was more accurate than MLP, LSTM, CNN LSTM, and RICNN in peak-demand forecasting;

- For next-day electricity demand forecasts, the proposed model performed better during on-peak time slots, when electricity demand is high;

- This study demonstrates an improvement in performance when applying residual LSTM for forecasting the electricity demand of buildings;

- The proposed model can help buildings spread out concentrated electricity demand and thereby support the stable operation of the national power system.
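The MAE, MAPE, and RMSE used above are not written out in this text. The following is a minimal sketch assuming the standard definitions. It also assumes the daily peak is the maximum of the 24 hourly values of each day; that reading of "peak demand" and the helper name `daily_peaks` are hypothetical, not taken from the paper.

```python
import math


def mae(actual, predicted):
    """Mean absolute error, in the units of the data (kW here)."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error (%); actual values must be non-zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error, in the units of the data."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def daily_peaks(hourly, hours_per_day=24):
    """Hypothetical helper: maximum demand of each complete day in an hourly series."""
    n_days = len(hourly) // hours_per_day
    return [max(hourly[d * hours_per_day:(d + 1) * hours_per_day]) for d in range(n_days)]


actual = [10.0, 20.0, 40.0]
predicted = [12.0, 18.0, 36.0]
print(mae(actual, predicted), mape(actual, predicted), rmse(actual, predicted))
```

For peak-demand scoring under this assumption, apply the metrics to `daily_peaks(actual_hourly)` and `daily_peaks(forecast_hourly)`. For next-day demand scoring, apply them to the hourly series directly.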

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Korea Electric Power Corporation. Korea Electricity Fee System. Available online: https://cyber.kepco.co.kr/ckepco/front/jsp/CY/H/C/CYHCHP00202.jsp (accessed on 4 September 2020).

2. Korea Electric Power Corporation. Korea Electricity Power Supply Terms. Available online: https://cyber.kepco.co.kr/ckepco/front/jsp/CY/D/C/CYDCHP00204.jsp (accessed on 4 September 2020).

3. Kavaklioglu, K.; Ceylan, H.; Ozturk, H.K.; Canyurt, O.E. Modeling and prediction of Turkey’s electricity consumption using artificial neural networks. Energy Convers. Manag. 2009, 50, 2719–2727.

4. Al-Musaylh, M.S.; Deo, R.C.; Adamowski, J.F.; Li, Y. Short-term electricity demand forecasting with MARS, SVR and ARIMA models using aggregated demand data in Queensland, Australia. Adv. Eng. Inform. 2018, 35, 1–16.

5. Kim, J. Statistics of Electric Power in Korea; Korea Electric Power Corporation: Naju, Republic of Korea, 2021; Volume 90.

6. Ministry of Trade, Industry and Energy (MOTIE). 9th Basic Plan for Electricity Supply and Demand; South Korean Ministry of Trade, Industry and Energy: Seoul, Republic of Korea, 2020.

7. Kim, C.; Lee, C.; Park, J.; Shin, D.; Kwon, Y. Development for Evaluation and Operation Program of Demand Response Resource; Korea Electrotechnology Research Institute (KERI): Changwon, Republic of Korea, 2014.

8. Oprea, S.-V.; Bâra, A. Machine learning algorithms for short-term load forecast in residential buildings using smart meters, sensors and big data solutions. IEEE Access 2019, 7, 177874–177889.

9. Vaghefi, A.; Jafari, M.A.; Bisse, E.; Lu, Y.; Brouwer, J. Modeling and forecasting of cooling and electricity load demand. Appl. Energy 2014, 136, 186–196.

10. Liu, D.; Chen, Q. Prediction of building lighting energy consumption based on support vector regression. In Proceedings of the 2013 9th Asian Control Conference (ASCC), Istanbul, Turkey, 23–26 June 2013; pp. 1–5.

11. Wang, X.; Fang, F.; Zhang, X.; Liu, Y.; Wei, L.; Shi, Y. LSTM-based short-term load forecasting for building electricity consumption. In Proceedings of the 2019 IEEE 28th International Symposium on Industrial Electronics (ISIE), Vancouver, BC, Canada, 12–14 June 2019; pp. 1418–1423.

12. Luo, X.; Oyedele, L.O. Forecasting building energy consumption: Adaptive long-short term memory neural networks driven by genetic algorithm. Adv. Eng. Inform. 2021, 50, 101357.

13. Jin, N.; Yang, F.; Mo, Y.; Zeng, Y.; Zhou, X.; Yan, K.; Ma, X. Highly accurate energy consumption forecasting model based on parallel LSTM neural networks. Adv. Eng. Inform. 2022, 51, 101442.

14. Fan, C.; Wang, J.; Gang, W.; Li, S. Assessment of deep recurrent neural network-based strategies for short-term building energy predictions. Appl. Energy 2019, 236, 700–710.

15. Fard, A.K.; Akbari-Zadeh, M.-R. A hybrid method based on wavelet, ANN and ARIMA model for short-term load forecasting. J. Exp. Theor. Artif. Intell. 2014, 26, 167–182.

16. Kim, T.-Y.; Cho, S.-B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

17. Kim, J.; Moon, J.; Hwang, E.; Kang, P. Recurrent inception convolution neural network for multi short-term load forecasting. Energy Build. 2019, 194, 328–341.

18. Kim, Y.; Son, H.-g.; Kim, S. Short term electricity load forecasting for institutional buildings. Energy Rep. 2019, 5, 1270–1280.

19. Cai, M.; Pipattanasomporn, M.; Rahman, S. Day-ahead building-level load forecasts using deep learning vs. traditional time-series techniques. Appl. Energy 2019, 236, 1078–1088.

20. Li, K.; Hu, C.; Liu, G.; Xue, W. Building’s electricity consumption prediction using optimized artificial neural networks and principal component analysis. Energy Build. 2015, 108, 106–113.

21. Fan, C.; Xiao, F.; Wang, S. Development of prediction models for next-day building energy consumption and peak power demand using data mining techniques. Appl. Energy 2014, 127, 1–10.

22. Fan, H.; MacGill, I.; Sproul, A. Statistical analysis of drivers of residential peak electricity demand. Energy Build. 2017, 141, 205–217.

23. Moon, J.; Kim, K.-H.; Kim, Y.; Hwang, E. A short-term electric load forecasting scheme using 2-stage predictive analytics. In Proceedings of the 2018 IEEE International Conference on Big Data and Smart Computing (BigComp), Shanghai, China, 15–18 January 2018; pp. 219–226.

24. Ke, X.; Jiang, A.; Lu, N. Load profile analysis and short-term building load forecast for a university campus. In Proceedings of the 2016 IEEE Power and Energy Society General Meeting (PESGM), Boston, MA, USA, 17–21 July 2016; pp. 1–5.

25. Rahman, A.; Srikumar, V.; Smith, A.D. Predicting electricity consumption for commercial and residential buildings using deep recurrent neural networks. Appl. Energy 2018, 212, 372–385.

26. Ullah, F.U.M.; Khan, N.; Hussain, T.; Lee, M.Y.; Baik, S. Diving deep into short-term electricity load forecasting: Comparative analysis and a novel framework. Mathematics 2021, 9, 611.

27. Long, W.; Lu, Z.; Cui, L. Deep learning-based feature engineering for stock price movement prediction. Knowl. Based Syst. 2019, 164, 163–173.

28. Wang, Q.; Li, S.; Li, R. Forecasting energy demand in China and India: Using single-linear, hybrid-linear, and non-linear time series forecast techniques. Energy 2018, 161, 821–831.

29. Reddy, S.; Akashdeep, S.; Harshvardhan, R.; Kamath, S. Stacking Deep learning and Machine learning models for short-term energy consumption forecasting. Adv. Eng. Inform. 2022, 52, 101542.

30. Bedi, J.; Toshniwal, D. Deep learning framework to forecast electricity demand. Appl. Energy 2019, 238, 1312–1326.

31. Amara, F.; Agbossou, K.; Dubé, Y.; Kelouwani, S.; Cardenas, A.; Hosseini, S. A residual load modeling approach for household short-term load forecasting application. Energy Build. 2019, 187, 132–143.

32. Hobby, J.D.; Tucci, G.H. Analysis of the residential, commercial and industrial electricity consumption. In Proceedings of the 2011 IEEE PES Innovative Smart Grid Technologies, Perth, WA, Australia, 13–16 November 2011; pp. 1–7.

33. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

35. Fu, S.; Zhang, Y.; Lin, L.; Zhao, M.; Zhong, S.-S. Deep residual LSTM with domain-invariance for remaining useful life prediction across domains. Reliab. Eng. Syst. Saf. 2021, 216, 108012.

36. Li, M.; Li, M.; Ren, Q.; Li, H.; Song, L. DRLSTM: A dual-stage deep learning approach driven by raw monitoring data for dam displacement prediction. Adv. Eng. Inform. 2022, 51, 101510.

37. Prakash, A.; Hasan, S.A.; Lee, K.; Datla, V.; Qadir, A.; Liu, J.; Farri, O. Neural paraphrase generation with stacked residual LSTM networks. ArXiv 2016, arXiv:1610.03098.

38. Alghazzawi, D.; Bamasag, O.; Albeshri, A.; Sana, I.; Ullah, H.; Asghar, M.Z. Efficient prediction of court judgments using an LSTM + CNN neural network model with an optimal feature set. Mathematics 2022, 10, 683.

39. Moon, J.; Park, J.; Hwang, E.; Jun, S. Forecasting power consumption for higher educational institutions based on machine learning. J. Supercomput. 2018, 74, 3778–3800.

40. Korea Electric Power Corporation. Korea Electric Power Data Open Portal System. Available online: https://www.kps.co.kr/infoopen/infoopen_03_02.do (accessed on 12 October 2020).

41. Korea Meteorological Agency. Weather Data Open Portal. Available online: https://data.kma.go.kr/data/grnd/selectAsosRltmList.do;jsessionid=w0E6ERBFNrhoVxhbDaIjpBYwiIPBZRq0koakrSIfQuCieXieb1EZZqtXC8ZJfEf9.was01_servlet_engine5?pgmNo=36 (accessed on 5 October 2020).

42. Li, K.; Su, H.; Chu, J. Forecasting building energy consumption using neural networks and hybrid neuro-fuzzy system: A comparative study. Energy Build. 2011, 43, 2893–2899.

43. Mughees, N.; Mohsin, S.A.; Mughees, A.; Mughees, A. Deep sequence to sequence Bi-LSTM neural networks for day-ahead peak load forecasting. Expert Syst. Appl. 2021, 175, 114844.

44. Koehn, D.; Lessmann, S.; Schaal, M. Predicting online shopping behaviour from clickstream data using deep learning. Expert Syst. Appl. 2020, 150, 113342.

45. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. ArXiv 2014, arXiv:1412.6980.

46. Verma, A.; Ranga, V. Machine learning based intrusion detection systems for IoT applications. Wirel. Pers. Commun. 2020, 111, 2287–2310.

47. Jeong, J.; Kim, C. Comparison of Machine Learning Approaches for Medium-to-Long-Term Financial Distress Predictions in the Construction Industry. Buildings 2022, 12, 1759.

| Category | Refs. | Input Variable(s) | Time Step | Method(s) | Prediction Objective |
|---|---|---|---|---|---|
| Statistical-based modeling | [22] | Historical lighting load, weather information, occupant information | 30 min | MLR | Peak demand |
| | [24] | Historical load, weather information, time information | 15 min | Polynomial regression, similar day approach, MLR | Load demand |
| Machine learning-based modeling | [10] | Historical lighting load, weather information, occupant information | Hourly | ANN, SVR | Lighting load demand, peak lighting demand |
| | [18] | Historical load, weather information | Hourly | ANN | Load demand, peak demand |
| | [21] | Historical load, weather information | Hourly | Voting ensemble | Load demand, peak demand |
| Deep learning-based modeling | [12] | Historical load, weather information | Hourly | LSTM | Load demand |
| | [13] | Historical load | 30 min | LSTM | Load demand |
| | [26] | Historical load, weather information | Hourly | LSTM | Load demand |
| | [16] | Historical load, weather information | Hourly | CNN-LSTM | Load demand |
| | [17] | Historical load, weather information, time information | 30 min | RICNN | Load demand |

| Category | Variable | Description | References |
|---|---|---|---|
| Weather variable | Wind speed | Wind speed (numeric) | [11,16] |
| | Temperature | Adjusted temperature (numeric) | [9,11,14,16,23,39] |
| | Humidity | Humidity (numeric) | [11,14,16,23,39] |
| Sequence variable | Month_x | Sine value of the month (numeric) | [15,16,21,23,39] |
| | Month_y | Cosine value of the month (numeric) | [15,16,21,23,39] |
| | Day_x | Sine value of the day (numeric) | [14,15,16,17,21,29] |
| | Day_y | Cosine value of the day (numeric) | [14,15,16,17,21,29] |
| | Hour_x | Sine value of the hour (numeric) | [11,14,15,16,23] |
| | Hour_y | Cosine value of the hour (numeric) | [11,14,15,16,23] |
| | Holiday | Weekday/holiday status (encoded vector) | [16,21,39] |
| | Monday | Monday (encoded vector) | [9,11,16,17,23,39] |
| | Tuesday | Tuesday (encoded vector) | [9,11,16,17,23,39] |
| | Wednesday | Wednesday (encoded vector) | [9,11,16,17,23,39] |
| | Thursday | Thursday (encoded vector) | [9,11,16,17,23,39] |
| | Friday | Friday (encoded vector) | [9,11,16,17,23,39] |
| | Saturday | Saturday (encoded vector) | [9,11,16,17,23,39] |
| | Sunday | Sunday (encoded vector) | [9,11,16,17,23,39] |
| Electricity rate variable | Off-peak | Off-peak status (encoded vector) | [17] |
| | Mid-peak | Mid-peak status (encoded vector) | [17] |
| | On-peak | On-peak status (encoded vector) | [17] |
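The table names the sequence variables but does not give their formulas. The following is a minimal sketch of one common way to build them. It assumes the usual sine/cosine cyclical encoding with periods of 12 months, the number of days in the current month, and 24 hours. It also assumes that weekends, plus a user-supplied set of public holidays, count as holidays. The function `sequence_features` and its `holidays` parameter are hypothetical.

```python
import calendar
import math
from datetime import date, datetime

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def cyclical(value, period):
    """Map a periodic value onto the unit circle so that the end of a cycle sits next to its start."""
    angle = 2.0 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


def sequence_features(ts, holidays=frozenset()):
    """Hypothetical encoder for the sequence variables of one hourly time stamp.

    Assumptions: periods of 12 months, the length of the current month, and 24 hours;
    `holidays` is a user-supplied set of `date` objects; weekends count as holidays.
    """
    days_in_month = calendar.monthrange(ts.year, ts.month)[1]
    feats = {}
    feats["Month_x"], feats["Month_y"] = cyclical(ts.month, 12)
    feats["Day_x"], feats["Day_y"] = cyclical(ts.day, days_in_month)
    feats["Hour_x"], feats["Hour_y"] = cyclical(ts.hour, 24)
    feats["Holiday"] = int(ts.date() in holidays or ts.weekday() >= 5)
    # One-hot day of week; datetime.weekday() returns 0 for Monday.
    for index, name in enumerate(WEEKDAYS):
        feats[name] = int(ts.weekday() == index)
    return feats


print(sequence_features(datetime(2018, 1, 1, 0), holidays={date(2018, 1, 1)}))
```

The cyclical encoding keeps 23:00 close to 00:00 and December close to January in feature space, which a plain integer hour or month would not. The weather variables would be appended as numeric columns, and the electricity-rate indicators as a one-hot vector.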

| Model | Hyperparameter | Parameter Grid | Best Parameters |
|---|---|---|---|
| MLP | Epochs | [100, 200, … 5000] | 500 |
| | Batch size | [16, 32, … 512] | 256 |
| | Filter size | [16, 32, … 512] | 512 |
| | Stacks of dense layers | [1, 2, 3, 4] | 4 |
| LSTM | Epochs | [100, 200, … 5000] | 100 |
| | Batch size | [16, 32, … 512] | 64 |
| | Filter size | [16, 32, … 512] | 256 |
| | Stacks of dense layers | [1, 2, 3, 4] | 1 |
| | Stacks of LSTM layers | [1, 2, 3, 4] | 2 |
| CNN LSTM | Epochs | [100, 200, … 5000] | 100 |
| | Batch size | [16, 32, … 512] | 128 |
| | Filter size | [16, 32, … 512] | 128 |
| | Stacks of dense layers | [1, 2, 3, 4] | 4 |
| | Stacks of LSTM layers | [1, 2, 3, 4] | 2 |
| | Stacks of CNN layers | [1, 2, 3, 4] | 2 |
| | Filter size of CNN | [16, 32, … 512] | 16 |
| | Kernel size of CNN | [1, 2, 3, 4, 5, 6, 7] | 1 |
| RICNN | Epochs | [100, 200, … 5000] | 200 |
| | Batch size | [16, 32, … 512] | 512 |
| | Filter size | [16, 32, … 512] | 128 |
| | Stacks of dense layers | [1, 2, 3, 4] | 4 |
| | Stacks of LSTM layers | [1, 2, 3, 4] | 2 |
| | Stacks of CNN layers | [1, 2, 3, 4] | 2 |
| | Filter size of CNN | [16, 32, … 512] | 16 |
| RLSTM | Epochs | [100, 200, … 5000] | 1000 |
| | Batch size | [16, 32, … 512] | 128 |
| | Filter size | [16, 32, … 512] | 128 |
| | Stacks of dense layers | [1, 2, 3, 4] | 2 |
| | Stacks of LSTM layers | [1, 2, 3, 4] | 2 |
| | Stacks of residual blocks | [1, 2, 3, 4] | 2 |
| | Stacks of LSTM layers in the block | [1, 2, 3] | 1 |
| | Dropout rate | [0.1, 0.2, … 0.9] | 0.8 |
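Sections 3.1–3.3 introduce the LSTM [33], residual learning [34], and the residual LSTM, but their text is not reproduced here. Purely as an illustration, here is a NumPy sketch of a forward pass sized like the best RLSTM setting above. It has two stacked LSTM layers, then two residual blocks that each wrap one LSTM layer with an identity shortcut (output = input + F(input)), then two dense layers. Several points are assumptions rather than the authors' design. "Filter size" is read as the number of hidden units (128). The output is taken to be 24 hourly values. The weights are random and untrained. The dropout layer (rate 0.8) is omitted because dropout is inactive at inference.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTMLayer:
    """One LSTM layer that returns the hidden state at every time step."""

    def __init__(self, n_in, n_hidden, rng):
        scale = 1.0 / np.sqrt(n_in + n_hidden)
        # Input, forget, candidate and output gate weights stacked column-wise.
        self.W = rng.normal(0.0, scale, size=(n_in + n_hidden, 4 * n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.n_hidden = n_hidden

    def __call__(self, x):  # x has shape (time_steps, n_in)
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        outputs = []
        for x_t in x:
            z = np.concatenate([x_t, h]) @ self.W + self.b
            i, f, g, o = np.split(z, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # forget old memory, add new candidate
            h = sigmoid(o) * np.tanh(c)
            outputs.append(h)
        return np.stack(outputs)


class ResidualLSTM:
    """Hypothetical forward pass: LSTM stack -> residual LSTM blocks -> dense head."""

    def __init__(self, n_features, units=128, n_lstm=2, n_blocks=2, n_dense=2, n_out=24, seed=0):
        rng = np.random.default_rng(seed)
        self.lstms = [LSTMLayer(n_features if k == 0 else units, units, rng) for k in range(n_lstm)]
        self.blocks = [LSTMLayer(units, units, rng) for _ in range(n_blocks)]
        sizes = [units] * n_dense + [n_out]
        self.dense = [(rng.normal(0.0, 1.0 / np.sqrt(units), size=(units, d)), np.zeros(d)) for d in sizes]

    def __call__(self, x):
        for layer in self.lstms:
            x = layer(x)
        for block in self.blocks:
            x = x + block(x)  # identity shortcut: H(x) = x + F(x)
        h = x[-1]  # hidden state after the last time step
        for W, b in self.dense[:-1]:
            h = np.maximum(0.0, h @ W + b)  # ReLU hidden layers
        W, b = self.dense[-1]
        return h @ W + b  # linear output, e.g. 24 hourly demand values


model = ResidualLSTM(n_features=5)
window = np.random.default_rng(1).normal(size=(24, 5))  # 24 time steps, 5 input features
print(model(window).shape)
```

The shortcut adds each block's input to its output. The block therefore only has to learn a correction to the identity map, which is the property He et al. [34] exploit to train deeper stacks.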

| Measure | MLP | LSTM | CNN LSTM | RICNN | RLSTM |
|---|---|---|---|---|---|
| MAE (kW) | 8.71 | 7.16 | 8.32 | 8.28 | 6.86 |
| MAPE (%) | 17.21 | 12.73 | 14.32 | 14.58 | 11.7 |
| RMSE (kW) | 11.85 | 10.75 | 11.13 | 12.17 | 10.5 |
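From the peak-demand RMSEs above, the relative RMSE reduction of RLSTM over each benchmark is 100 × (baseline − RLSTM) / baseline. The short sketch below does that arithmetic; the names are illustrative.

```python
# Peak-demand RMSE (kW) per model, copied from the table above.
PEAK_RMSE = {"MLP": 11.85, "LSTM": 10.75, "CNN LSTM": 11.13, "RICNN": 12.17, "RLSTM": 10.5}


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers the error of `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


reductions = {
    name: round(relative_reduction(rmse, PEAK_RMSE["RLSTM"]), 2)
    for name, rmse in PEAK_RMSE.items()
    if name != "RLSTM"
}
print(reductions)
```

This gives reductions of about 11.39% (MLP), 2.33% (LSTM), 5.66% (CNN LSTM), and 13.72% (RICNN). The same function applies to the MAE and MAPE rows.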

| Measure | MLP | LSTM | CNN LSTM | RICNN | RLSTM |
|---|---|---|---|---|---|
| MAE (kW) | 8.06 | 8.38 | 8.79 | 8.60 | 6.76 |
| MAPE (%) | 14.30 | 12.77 | 13.11 | 13.58 | 9.81 |
| RMSE (kW) | 10.54 | 10.79 | 10.70 | 11.41 | 9.48 |

| Measure | MLP | LSTM | CNN LSTM | RICNN | RLSTM |
|---|---|---|---|---|---|
| MAE (kW) | 5.17 | 4.48 | 3.98 | 4.48 | 3.99 |
| MAPE (%) | 15.71 | 13.41 | 11.76 | 13.85 | 12.57 |
| RMSE (kW) | 8.46 | 7.7 | 6.95 | 7.73 | 6.91 |

| Demand Category | March–September (months 3–9) | October–February (months 10, 11, 12, 1, 2) |
|---|---|---|
| Off-peak | 23:00–09:00 | 23:00–09:00 |
| Mid-peak | 09:00–10:00, 12:00–13:00, 17:00–23:00 | 09:00–10:00, 12:00–17:00, 20:00–22:00 |
| On-peak | 10:00–12:00, 13:00–17:00 | 10:00–12:00, 17:00–20:00, 22:00–23:00 |
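The schedule above can be encoded as a simple lookup. This is one way to derive the Off-peak/Mid-peak/On-peak indicator variables listed among the model inputs. The sketch below is illustrative: `tou_category` is a hypothetical name, and its `hour` argument denotes the one-hour slot that starts at that hour.

```python
def tou_category(month: int, hour: int) -> str:
    """Demand category of the one-hour slot starting at `hour` (0-23) in `month` (1-12)."""
    if not (1 <= month <= 12 and 0 <= hour <= 23):
        raise ValueError("month must be 1-12 and hour 0-23")
    if hour >= 23 or hour < 9:  # 23:00-09:00 is off-peak all year
        return "Off-peak"
    if 3 <= month <= 9:
        on_peak = {10, 11, 13, 14, 15, 16}  # 10:00-12:00, 13:00-17:00
    else:
        on_peak = {10, 11, 17, 18, 19, 22}  # 10:00-12:00, 17:00-20:00, 22:00-23:00
    return "On-peak" if hour in on_peak else "Mid-peak"


print([tou_category(11, h) for h in range(24)])
```

Every hour not listed as off-peak or on-peak falls through to mid-peak, so the function covers all 24 slots in both seasons. For October–February it reproduces the category labels used in the hourly results table.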

| Demand Category | Time (h) | MLP | LSTM | CNN LSTM | RICNN | RLSTM |
|---|---|---|---|---|---|---|
| Off-peak | 00–01 | 5.98 | 5.84 | 4.86 | 10.14 | 7.70 |
| Off-peak | 01–02 | 4.50 | 3.90 | 3.61 | 6.20 | 3.79 |
| Off-peak | 02–03 | 2.65 | 4.53 | 3.11 | 5.60 | 3.78 |
| Off-peak | 03–04 | 3.42 | 2.66 | 2.02 | 5.26 | 2.11 |
| Off-peak | 04–05 | 2.03 | 4.08 | 3.09 | 5.33 | 4.31 |
| Off-peak | 05–06 | 2.73 | 4.16 | 3.36 | 5.95 | 2.46 |
| Off-peak | 06–07 | 2.05 | 3.44 | 2.02 | 7.19 | 1.69 |
| Off-peak | 07–08 | 7.79 | 7.99 | 4.15 | 8.46 | 6.73 |
| Off-peak | 08–09 | 32.63 | 27.53 | 22.15 | 24.47 | 20.71 |
| Mid-peak | 09–10 | 86.66 | 73.59 | 52.01 | 64.13 | 63.88 |
| On-peak | 10–11 | 215.70 | 181.13 | 133.74 | 166.87 | 119.80 |
| On-peak | 11–12 | 218.89 | 171.39 | 128.67 | 154.80 | 124.27 |
| Mid-peak | 12–13 | 155.41 | 117.90 | 84.15 | 110.31 | 102.64 |
| Mid-peak | 13–14 | 180.79 | 146.21 | 111.94 | 124.99 | 112.55 |
| Mid-peak | 14–15 | 174.24 | 137.29 | 108.60 | 129.43 | 110.62 |
| Mid-peak | 15–16 | 170.17 | 125.94 | 112.62 | 140.29 | 112.44 |
| Mid-peak | 16–17 | 132.50 | 120.99 | 120.62 | 140.63 | 97.78 |
| On-peak | 17–18 | 110.24 | 101.95 | 94.28 | 116.46 | 82.56 |
| On-peak | 18–19 | 93.40 | 84.51 | 70.68 | 80.30 | 68.48 |
| On-peak | 19–20 | 54.67 | 41.24 | 44.01 | 43.16 | 39.77 |
| Mid-peak | 20–21 | 31.19 | 27.05 | 24.44 | 29.76 | 24.21 |
| Mid-peak | 21–22 | 14.20 | 11.98 | 10.81 | 18.19 | 12.67 |
| On-peak | 22–23 | 7.76 | 7.60 | 7.80 | 20.68 | 11.62 |
| Off-peak | 23–24 | 9.04 | 8.81 | 7.85 | 17.22 | 9.20 |
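The hourly table does not state which error measure it reports, so the values are treated here simply as per-hour errors. The sketch below averages them per demand category and counts the hours in which each model has the lowest error. This offers a quick check of the on-peak claim in the conclusions. The values are transcribed from the table, and the helper names are hypothetical.

```python
from statistics import mean

MODELS = ["MLP", "LSTM", "CNN LSTM", "RICNN", "RLSTM"]

# (demand category, hourly errors for MLP, LSTM, CNN LSTM, RICNN, RLSTM), one row per hour 00-24.
HOURLY = [
    ("Off-peak", [5.98, 5.84, 4.86, 10.14, 7.70]),
    ("Off-peak", [4.50, 3.90, 3.61, 6.20, 3.79]),
    ("Off-peak", [2.65, 4.53, 3.11, 5.60, 3.78]),
    ("Off-peak", [3.42, 2.66, 2.02, 5.26, 2.11]),
    ("Off-peak", [2.03, 4.08, 3.09, 5.33, 4.31]),
    ("Off-peak", [2.73, 4.16, 3.36, 5.95, 2.46]),
    ("Off-peak", [2.05, 3.44, 2.02, 7.19, 1.69]),
    ("Off-peak", [7.79, 7.99, 4.15, 8.46, 6.73]),
    ("Off-peak", [32.63, 27.53, 22.15, 24.47, 20.71]),
    ("Mid-peak", [86.66, 73.59, 52.01, 64.13, 63.88]),
    ("On-peak", [215.70, 181.13, 133.74, 166.87, 119.80]),
    ("On-peak", [218.89, 171.39, 128.67, 154.80, 124.27]),
    ("Mid-peak", [155.41, 117.90, 84.15, 110.31, 102.64]),
    ("Mid-peak", [180.79, 146.21, 111.94, 124.99, 112.55]),
    ("Mid-peak", [174.24, 137.29, 108.60, 129.43, 110.62]),
    ("Mid-peak", [170.17, 125.94, 112.62, 140.29, 112.44]),
    ("Mid-peak", [132.50, 120.99, 120.62, 140.63, 97.78]),
    ("On-peak", [110.24, 101.95, 94.28, 116.46, 82.56]),
    ("On-peak", [93.40, 84.51, 70.68, 80.30, 68.48]),
    ("On-peak", [54.67, 41.24, 44.01, 43.16, 39.77]),
    ("Mid-peak", [31.19, 27.05, 24.44, 29.76, 24.21]),
    ("Mid-peak", [14.20, 11.98, 10.81, 18.19, 12.67]),
    ("On-peak", [7.76, 7.60, 7.80, 20.68, 11.62]),
    ("Off-peak", [9.04, 8.81, 7.85, 17.22, 9.20]),
]


def category_means(rows, category):
    """Mean hourly error of each model over the hours in one demand category."""
    selected = [errors for cat, errors in rows if cat == category]
    return {model: mean(column) for model, column in zip(MODELS, zip(*selected))}


def best_model_counts(rows, category):
    """Number of hours in a category where each model has the lowest error."""
    counts = dict.fromkeys(MODELS, 0)
    for cat, errors in rows:
        if cat == category:
            counts[MODELS[errors.index(min(errors))]] += 1
    return counts


print(category_means(HOURLY, "On-peak"))
print(best_model_counts(HOURLY, "On-peak"))
```

Over the six on-peak hours, RLSTM has the lowest mean (about 74.42). It also has the lowest error in five of those hours, all except 22–23. Over the mid-peak hours, CNN LSTM has a slightly lower mean than RLSTM.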

| Compared Models | n | α | Friedman Test |
|---|---|---|---|
| RLSTM vs. MLP, LSTM, CNN LSTM, and RICNN | 2208 | 0.05 | F = 92.3, P = 0.000 (reject H0) |

| Compared Models | n | α | Friedman Test |
|---|---|---|---|
| RLSTM vs. MLP, LSTM, CNN LSTM, and RICNN | 92 | 0.05 | F = 10.82, P = 0.000 (reject H0) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.; Jeong, J.; Kim, C. Daily Peak-Electricity-Demand Forecasting Based on Residual Long Short-Term Network. Mathematics 2022, 10, 4486. https://doi.org/10.3390/math10234486
