Abstract

The group teaching optimization algorithm (GTOA) is a meta-heuristic optimization algorithm that simulates the group teaching mechanism. In GTOA, each student learns the knowledge obtained in the teacher phase, but each student’s autonomy is weak. This paper considers that students differ in learning motivation: elite students have strong self-learning ability, while ordinary students have only moderate self-learning motivation. To address this problem, this paper proposes a learning motivation strategy and adds random opposition-based learning and a restart strategy to enhance the global performance of the algorithm; the resulting algorithm is the modified GTOA (MGTOA). To verify its optimization performance, MGTOA is tested on 23 standard benchmark functions and on the 30 test functions of the IEEE Congress on Evolutionary Computation 2014 (CEC2014) suite. In addition, MGTOA is applied to six engineering problems for practical testing and achieves good results.

Keywords:

group teaching optimization algorithm; learning motivation strategy; random opposition-based learning; restart strategy; engineering problems

MSC:

49K35

1. Introduction

Meta-heuristic algorithms (MAs) are commonly used to solve global optimization problems. They search for the optimal solution mainly by simulating natural phenomena and human intelligence. To a certain extent, they can search globally and find an approximation of the optimal solution. The core of MAs is exploration and exploitation. Exploration means covering the entire search space as widely as possible, because the optimal solution may lie anywhere in it. Exploitation means using the information already gathered as effectively as possible. In most cases, good solutions are correlated with one another, and these correlations can be used to adjust the search gradually from the initial solution toward the optimal solution. In general, MAs aim to balance exploration and exploitation as well as possible.

In recent years, many scholars have studied MAs because of their advantages, such as simple and intuitive operation and fast running speed. So far, hundreds of meta-heuristic algorithms have been proposed. According to their design inspiration, MAs can be divided into four categories: swarm-based, evolutionary, physics-based, and human-based algorithms. Swarm-based algorithms include Particle Swarm Optimization (PSO) [1], Ant Lion Optimizer (ALO) [2], Bat Algorithm (BA) [3], Salp Swarm Algorithm (SSA) [4], Ant Colony Optimization (ACO) [5], Artificial Bee Colony (ABC) [6], Grey Wolf Optimizer (GWO) [7], Krill Herd (KH) [8], Whale Optimization Algorithm (WOA) [9], and Remora Optimization Algorithm (ROA) [10]. Evolutionary algorithms are represented by Genetic Algorithm (GA) [11], Evolution Strategy (ES) [12], Genetic Programming (GP) [13], Biogeography-Based Optimizer (BBO) [14], Evolutionary Programming (EP) [15], Differential Evolution (DE) [16], and Virulence Optimization Algorithm (VOA) [17]. Physics-based algorithms are represented by Simulated Annealing (SA) [18], Gravitational Search Algorithm (GSA) [19], Black Hole Algorithm (BH) [20], Multi-Verse Optimization (MVO) [21], Ray Optimization (RO) [22], and Thermal Exchange Optimization (TEO) [23]. Human-based algorithms are represented by Harmony Search (HS) [24], Teaching Learning-Based Optimization (TLBO) [25], Social Group Optimization (SGO) [26], and Exchange Market Algorithm (EMA) [27]. These algorithms perform well on many optimization problems.

The Group Teaching Optimization Algorithm (GTOA) is an MA that was proposed in 2020 [28] and is inspired by the group teaching mechanism. GTOA divides students into elite students and ordinary students for group teaching, and teachers use different teaching methods for the two groups. In essence, though, every student moves toward the teacher, which gives the algorithm good exploitation ability. Each student also consolidates what the teacher has taught during spare time, but this does not fully reflect the idea of group teaching. Therefore, Zhang et al. proposed a group teaching optimization algorithm with information sharing (ISGTOA) to distinguish the learning styles of different groups. ISGTOA establishes two teaching methods, one for elite students and one for ordinary students, through which the two groups can communicate fully. This increases communication between groups, strengthens students’ reliance on one another, and makes the algorithm converge faster [29]. However, ISGTOA still does not distinguish between the learning status and learning methods of different students in their spare time: every student uses the same learning method in the student phase. In practice, the learning motivation of elite students differs from that of ordinary students. Elite students have strong learning motivation and strong self-learning ability, and they are good at summarizing what they have learned. Ordinary students lack learning motivation and often need help from other students or teachers. Elite students therefore acquire knowledge through self-study driven by their learning motivation, while ordinary students learn through discussion with classmates. Accordingly, this paper proposes a modified group teaching optimization algorithm (MGTOA). With the added learning motivation, elite students learn independently according to their own learning motivation, and ordinary students communicate with each other to improve their overall abilities. At the same time, random opposition-based learning and a restart strategy are added to enhance the optimization performance of the proposed algorithm.

The main contributions of this paper can be summarized as follows:

- A modified GTOA is proposed based on three strategies: learning motivation (LM), random opposition-based learning (ROBL), and restart strategy (RS).

- The optimization performance of MGTOA on 23 standard benchmark functions is evaluated in different dimensions (dim = 30/500), and the search distribution of MGTOA on some benchmark functions is shown.

- The optimization performance of MGTOA is tested on CEC2014.

- MGTOA is compared with seven different optimization algorithms.

- Six engineering problems are used to verify the practical applicability of MGTOA.

The remainder of this paper is organized as follows. Section 2 gives a brief description of GTOA. Section 3 describes the three operators used, namely learning motivation (LM), random opposition-based learning (ROBL), and the restart strategy (RS), and gives the framework of the proposed algorithm. Section 4 and Section 5 present the experimental results on benchmark functions and on constrained engineering problems, respectively, and Section 6 summarizes the paper.

2. Group Teaching Optimization Algorithm (GTOA)

The idea of GTOA is to improve the knowledge level of the whole class by simulating the group teaching mechanism. Because every student has different knowledge, group teaching is very complicated in practice. To integrate the idea of group teaching into an optimization algorithm, the population, the decision variables, and the fitness values are taken to correspond to the students, the subjects offered to the students, and the students’ knowledge, respectively. The algorithm is divided into the following four phases.

2.1. Ability Grouping Phase

To better exploit the advantages of group teaching, all students are divided into two groups according to their knowledge level, and the teacher teaches the two groups differently. The stronger group is called the elite students, and the other group is called the ordinary students. Grouping makes it easier for teachers to prepare different teaching plans. After grouping, the gap between elite and ordinary students becomes larger as teaching proceeds. For better teaching, grouping in GTOA is therefore a dynamic process: the students are regrouped after each learning cycle.
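The grouping step can be illustrated with a minimal Python sketch. It assumes a minimization problem (a lower fitness value means more knowledge) and an even split into two halves; the names `group_students` and `sphere` are hypothetical and used only for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def group_students(
    students: Sequence[Vector],
    fitness: Callable[[Vector], float],
) -> Tuple[List[Vector], List[Vector]]:
    """Split students into (elite, ordinary) groups by ascending fitness."""
    ranked = sorted(students, key=fitness)  # lower fitness = more knowledge
    half = len(ranked) // 2                 # assumption: elite group is the best half
    return ranked[:half], ranked[half:]


def sphere(x: Vector) -> float:
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


elite, ordinary = group_students([[3.0], [0.5], [-2.0], [1.0]], sphere)
```

Because grouping is dynamic, a function like this would be called again at the start of every learning cycle.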

2.2. Teacher Phase

The teacher phase refers to students acquiring knowledge through their teachers. In GTOA, teachers make two different teaching plans for two groups of students.

Teacher phase Ⅰ: Elite students have a strong ability to accept knowledge, so teachers pay more attention to improving students’ overall average knowledge level. In addition, the differences in students’ acceptance of knowledge are also considered. Therefore, elite students will learn according to the teachers’ teaching and students’ overall knowledge level. This can effectively improve the overall knowledge level.

where N is the number of students, $x_i^t$ is the knowledge of the ith student at time t, $T^t$ is the teacher, $M^t$ is the average knowledge of the students, and $x_{teacher,i}^{t+1}$ is the solution obtained through the teacher phase. a, b, and c are random numbers in [0, 1]. F is a teaching factor equal to 1 or 2, as in Rao et al. [25].
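As a hedged illustration of teacher phase Ⅰ, the sketch below assumes the update rule usually given for the original GTOA [28], namely x_new = x + a × (T − F × (b × M + c × x)) with b + c = 1. The function name `teacher_phase_elite` and the use of Python's `random.Random` are choices made for this example, not part of the source.

```python
import random
from typing import List

Vector = List[float]


def teacher_phase_elite(x: Vector, teacher: Vector, mean: Vector,
                        rng: random.Random) -> Vector:
    """Candidate for one elite student after teacher phase I.

    Assumed rule: x_new = x + a * (T - F * (b * M + c * x)).
    """
    a = rng.random()            # random step size in [0, 1)
    b = rng.random()            # weight on the class mean M
    c = 1.0 - b                 # assumption: weights b and c sum to 1
    big_f = rng.choice((1, 2))  # teaching factor F, either 1 or 2
    return [xi + a * (ti - big_f * (b * mi + c * xi))
            for xi, ti, mi in zip(x, teacher, mean)]


rng = random.Random(0)
candidate = teacher_phase_elite([1.0, -2.0], [0.0, 0.0], [0.5, -1.0], rng)
```

The candidate mixes the pull toward the teacher with the student's own position and the class mean, which is why elite students are described as learning from both the teacher and the overall knowledge level.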

Teacher phase Ⅱ: Ordinary students have only an average ability to accept knowledge, so teachers pay more attention to them and tend to improve their knowledge level from an individual perspective. Therefore, ordinary students are more inclined to obtain knowledge directly from the teacher.

where d is a random number in [0, 1].

In addition, a student is not guaranteed to gain knowledge in the teacher phase, so the solution with the smaller fitness value is kept to represent the student’s knowledge level after the teacher phase.
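Teacher phase Ⅱ and the subsequent selection can be sketched as follows. The update x_new = x + 2 × d × (T − x) is the form usually given for the original GTOA [28] and is an assumption here; `teacher_phase_ordinary`, `keep_better`, and `sphere` are hypothetical names.

```python
import random
from typing import Callable, List

Vector = List[float]


def teacher_phase_ordinary(x: Vector, teacher: Vector,
                           rng: random.Random) -> Vector:
    """Candidate for one ordinary student (assumed rule: x + 2*d*(T - x))."""
    d = rng.random()  # random number in [0, 1)
    return [xi + 2.0 * d * (ti - xi) for xi, ti in zip(x, teacher)]


def keep_better(candidate: Vector, current: Vector,
                fitness: Callable[[Vector], float]) -> Vector:
    """Greedy selection: keep whichever solution has the smaller fitness."""
    return candidate if fitness(candidate) < fitness(current) else current


def sphere(x: Vector) -> float:
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


rng = random.Random(0)
student = [4.0, -3.0]
teacher = [0.0, 0.0]
taught = keep_better(teacher_phase_ordinary(student, teacher, rng), student, sphere)
```

The same `keep_better` selection applies after teacher phase Ⅰ, so a student never ends the teacher phase with a worse fitness value than before.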

2.3. Student Phase

Students can acquire knowledge through self-study or interaction with other students in their spare time in order to improve their knowledge level. In their spare time, students also summarize the knowledge they learned in the teacher phase through self-study.

where e and g are two random numbers in the range [0, 1], $x_{student,i}^{t+1}$ is the knowledge of student i after the student phase, and $x_{teacher,j}^{t+1}$ is the knowledge of student j after the teacher phase. Student j is selected at random from {1, 2, …, i − 1, i + 1, …, N}. In Equation (6), the second and third terms on the right-hand side represent learning from the other student and self-learning, respectively.

In addition, a student is not guaranteed to gain knowledge in the student phase, so the solution with the smaller fitness value is kept to represent the student’s knowledge level after the student phase.
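The student phase can be sketched in the same style. The rule below follows the form usually given for the original GTOA [28]: student i moves away from or toward a random classmate j depending on who is better, plus a self-learning term. This form, and the names `student_phase` and `sphere`, are assumptions made for illustration.

```python
import random
from typing import Callable, List

Vector = List[float]


def student_phase(i: int, old: List[Vector], taught: List[Vector],
                  fitness: Callable[[Vector], float],
                  rng: random.Random) -> Vector:
    """Student-phase update for student i, followed by greedy selection.

    old[i] is the position before the teacher phase, taught[i] the one after it.
    """
    j = rng.choice([k for k in range(len(taught)) if k != i])  # classmate j != i
    e, g = rng.random(), rng.random()
    # Step away from a worse classmate, toward a better one.
    sign = 1.0 if fitness(taught[i]) < fitness(taught[j]) else -1.0
    candidate = [ti + sign * e * (ti - tj) + g * (ti - oi)
                 for ti, tj, oi in zip(taught[i], taught[j], old[i])]
    return candidate if fitness(candidate) < fitness(taught[i]) else taught[i]


def sphere(x: Vector) -> float:
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


rng = random.Random(0)
old = [[2.0, 2.0], [1.0, -1.0], [0.5, 0.0]]
taught = [[1.5, 1.0], [0.5, -0.5], [0.2, 0.1]]
new_0 = student_phase(0, old, taught, sphere, rng)
```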

2.4. Teacher Allocation Phase

Selecting excellent teachers can improve students’ learning ability. Establishing a good teacher matching mechanism is important to improve students’ knowledge level. In the GWO algorithm, the average value is obtained by selecting the best three wolves and using them to guide all wolves to prey. Inspired by the hunting behavior of the GWO algorithm, the allocation of teachers is expressed by the following formula:

where $x_{first}^t$, $x_{second}^t$, and $x_{third}^t$ are the students with the best, second-best, and third-best fitness values, respectively. To accelerate the convergence of the algorithm, elite students and ordinary students share the same teacher.
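A minimal sketch of the teacher allocation step is shown below. It assumes the rule usually given for the original GTOA [28]: the teacher is the best student unless the average of the three best students has a smaller fitness value. The names `allocate_teacher` and `sphere` are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def allocate_teacher(students: Sequence[Vector],
                     fitness: Callable[[Vector], float]) -> Vector:
    """Return the best student or the mean of the three best, whichever is fitter."""
    first, second, third = sorted(students, key=fitness)[:3]
    mean = [(a + b + c) / 3.0 for a, b, c in zip(first, second, third)]
    return first if fitness(first) <= fitness(mean) else mean


def sphere(x: Vector) -> float:
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


# The three best students surround the optimum, so their mean is fitter.
teacher_a = allocate_teacher(
    [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [5.0, 5.0]], sphere)
# The best student already sits on the optimum, so it stays the teacher.
teacher_b = allocate_teacher([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]], sphere)
```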

2.5. The Proposed Approach

Step 1: Initialization.

(1.1) Initialization parameters.

These parameters include maximum evaluation times Tmax, current evaluation times t (t = 0), population size N, upper and lower bounds ub and lb of decision variables, dimension dim, and fitness function f(·).

(1.2) Initialize population.

Initialize solution x according to the test function. Each group of variables represents one student. It can be described as:

where k is a random number in the range [0, 1].
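Population initialization can be sketched as follows, assuming the common uniform rule x = lb + (ub − lb) × k applied independently to each decision variable. The function name `init_population` and the per-dimension bound lists are assumptions made for this example.

```python
import random
from typing import List

Vector = List[float]


def init_population(n: int, dim: int, lb: Vector, ub: Vector,
                    rng: random.Random) -> List[Vector]:
    """Draw n students uniformly inside the box [lb, ub]."""
    return [
        [lb[j] + (ub[j] - lb[j]) * rng.random() for j in range(dim)]
        for _ in range(n)
    ]


rng = random.Random(0)
population = init_population(n=5, dim=3, lb=[-1.0] * 3, ub=[2.0] * 3, rng=rng)
```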

Step 2: Calculate fitness value.

The fitness value of the individual is calculated, and the optimal solution G is selected.

Step 3: Termination conditions

If the current iteration number t is greater than the maximum iteration number Tmax, the algorithm is terminated, and the optimal solution G is output.

Step 4: Teacher allocation phase

The first three best individuals are selected. Then, the teacher can be calculated by Equation (8).

Step 5: Ability grouping phase.

The students are divided into two groups based on their current fitness values. The best half of the students form the elite group Xgood, and the remaining half form the ordinary group Xbad. The two groups share a teacher.

Step 6: Teacher phase and student phase

(6.1) For the group Xgood, the teacher phase is implemented based on Equations (1)–(3) and (5). Then, the student phase is conducted according to Equations (6) and (7). Finally, the updated elite group is obtained.

(6.2) For the group Xbad, the teacher phase is implemented based on Equations (4) and (5). Then, the student phase is conducted according to Equations (6) and (7). Finally, the updated ordinary group is obtained.

Step 7: Population evaluation.

Calculate the fitness value of students, select the optimal solution G, and update the current iteration number t.

Then, Step 3 is executed.

3. Proposed Algorithm

3.1. Learning Motivation

In the student phase of the original algorithm, every student can acquire knowledge through self-study or through communication with classmates. However, a single rule does not suit both elite and ordinary students. Elite students have stronger learning abilities and motivation than ordinary students and are more inclined to self-study, whereas ordinary students have weaker learning ability and tend to learn from their classmates.

Therefore, elite students obtain learning motivation D according to Equation (12) and find a new solution through Equation (13). Ordinary students can find a new solution according to Equation (14).

where r is a random number in [0, 1].

3.2. Random Opposition-Based Learning

Opposition-based learning (OBL) is a computational intelligence scheme [30]. In the past few years, OBL has been successfully applied to various population-based evolutionary algorithms [31,32,33,34,35]. Random opposition-based learning (ROBL) adds a random factor in [0, 1] to OBL, so that a random solution within the range of the opposite solution is obtained. This not only expands the search range but also strengthens the diversity of the population, which gives the algorithm stronger exploration and convergence abilities. The specific formula is as follows:

where the left-hand side of Equation (15) represents the solution obtained after random opposition-based learning.

The solution obtained by random opposition-based learning replaces the original solution only if it is better:
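The two steps just described can be sketched in Python. The opposite point is assumed to take the common ROBL form lb + ub − r × x; drawing r separately for each dimension and clipping the result to the bounds are additional assumptions of this sketch, and `robl_step` and `sphere` are hypothetical names.

```python
import random
from typing import Callable, List

Vector = List[float]


def robl_step(x: Vector, lb: Vector, ub: Vector,
              fitness: Callable[[Vector], float],
              rng: random.Random) -> Vector:
    """Random opposition-based learning followed by greedy selection."""
    opposite = []
    for xj, lo, hi in zip(x, lb, ub):
        r = rng.random()                          # random factor in [0, 1)
        value = lo + hi - r * xj                  # assumed ROBL form
        opposite.append(min(max(value, lo), hi))  # assumption: clip to bounds
    # Keep the opposite solution only if it has a smaller fitness value.
    return opposite if fitness(opposite) < fitness(x) else x


def sphere(x: Vector) -> float:
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


rng = random.Random(0)
x = [3.0, -4.0]
x_new = robl_step(x, lb=[-10.0, -10.0], ub=[10.0, 10.0], fitness=sphere, rng=rng)
```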

3.3. Restart Strategy (RS)

The restart strategy [36] reassigns solutions that have been trapped in a local optimum for a long time, helping poor solutions escape from it. Adding the restart strategy strengthens the exploration ability of the algorithm and improves its convergence.

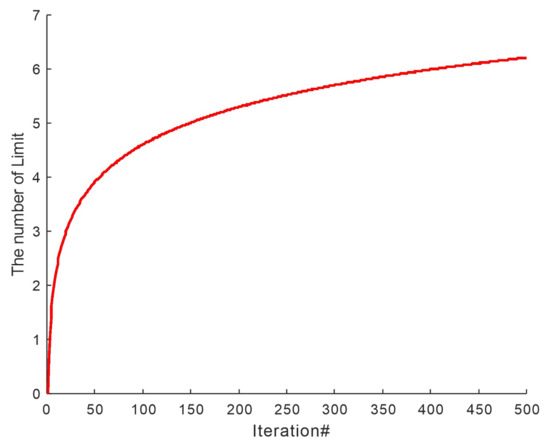

First, set the restart threshold Limit according to Equation (17). Then, create a counter vector trial with one entry per solution, initialized to 0, to record whether a better solution is obtained in each iteration. If a better solution is obtained, the corresponding entry of trial is reset to 0; otherwise, it is increased by 1. If an entry of trial is greater than Limit, the restart strategy is executed for that solution. The function image of Limit is shown in Figure 1.

Figure 1.

Limit schematic diagram.

The restart strategy generates two new solutions, T1 and T2, by Equations (18) and (19), respectively, and performs boundary processing by Equation (20). The better of the two then replaces the original solution, and the corresponding entry of trial is reset to 0. The formulas of the restart strategy are as follows:
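The bookkeeping around the trial counter can be sketched as follows. This is only an illustration of the control flow: the threshold is passed in as a plain argument rather than computed from Equation (17), and the two candidates are drawn uniformly inside the bounds as a stand-in for Equations (18)–(20), which this sketch does not reproduce. All function names are hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]


def update_trials(trial: List[int], improved: List[bool]) -> List[int]:
    """Reset the counter of improved solutions and increment the others."""
    return [0 if ok else count + 1 for count, ok in zip(trial, improved)]


def restart_stalled(population: List[Vector], trial: List[int], limit: float,
                    lb: Vector, ub: Vector,
                    fitness: Callable[[Vector], float],
                    rng: random.Random) -> None:
    """Replace, in place, every solution whose counter exceeds the limit."""
    for i, count in enumerate(trial):
        if count > limit:
            # Stand-in candidates: two uniform draws inside the bounds
            # (NOT the paper's Equations (18)-(20)).
            candidates = [
                [lo + (hi - lo) * rng.random() for lo, hi in zip(lb, ub)]
                for _ in range(2)
            ]
            population[i] = min(candidates, key=fitness)  # keep the better one
            trial[i] = 0


def sphere(x: Vector) -> float:
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


population = [[1.0], [2.0]]
trial = [0, 5]
restart_stalled(population, trial, limit=3.0, lb=[-1.0], ub=[1.0],
                fitness=sphere, rng=random.Random(0))
```

Only the second solution is restarted here, because only its counter exceeds the threshold.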

3.4. MGTOA Complexity Analysis

The time complexity depends on the number of students (N), the dimension of the given problem (dim), the number of iterations of the algorithm (T), and the evaluation cost (C). Therefore, the time complexity of MGTOA can be presented as:

where the time complexities of the components of Equation (22) can be defined as follows:

- Initialization of problem definition demands O(1) time.

- Initialization of population creation demands O(N × dim) time.

- Updating the population positions, which includes the teacher and student phases, requires O(2 × T × N × dim) time.

- Random opposition-based learning requires O(T × N × dim) time.

- The restart strategy requires O(2 × T × N × dim/Limit) time.

- The function evaluation cost includes the evaluations of the algorithm itself, of the random opposition-based learning strategy, and of the restart strategy. The evaluation cost of the algorithm itself is O(T × N × C), and that of the random opposition-based learning strategy is also O(T × N × C). The evaluation cost of the restart strategy depends on the value of Limit and is O(T × N × C/Limit). The total evaluation cost is therefore O(2 × T × N × C + T × N × C/Limit).

Therefore, the time complexity of MGTOA is expressed as:

Because 1 ≪ T × N × C, 1 ≪ T × N × dim, N × dim ≪ T × N × C, and N × dim ≪ T × N × dim, Equation (22) can be simplified to Equation (23).
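As a worked sum, the terms listed above can be collected as shown below. This is a sketch assembled only from the listed components and the stated simplification of dropping the O(1) and O(N × dim) terms; the grouping of terms is a choice made here and may differ from the way Equations (22) and (23) are written.

```latex
\begin{align}
O(\mathrm{MGTOA}) &= O(1) + O(N \cdot \mathit{dim}) + O(2\,T\,N\,\mathit{dim}) + O(T\,N\,\mathit{dim}) \\
&\quad + O\!\left(\frac{2\,T\,N\,\mathit{dim}}{\mathit{Limit}}\right)
       + O\!\left(2\,T\,N\,C + \frac{T\,N\,C}{\mathit{Limit}}\right) \\
&\approx O\!\left(T\,N\,\mathit{dim}\left(3 + \frac{2}{\mathit{Limit}}\right)
       + T\,N\,C\left(2 + \frac{1}{\mathit{Limit}}\right)\right)
\end{align}
```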

The above analysis shows that the time complexity of MGTOA is higher than that of GTOA, but the added strategies significantly improve the optimization performance of MGTOA. The following experiments also support the feasibility of MGTOA.

3.5. MGTOA Implementation

Combining GTOA with the three strategies described above yields the modified group teaching optimization algorithm (MGTOA). The three strategies strengthen the exploration ability of MGTOA and make the search more global. The pseudocode is shown in Algorithm 1.

| Algorithm 1 Pseudo-code of MGTOA |

| 1. Initialization parameters t, Tmax, ub, lb, N, dim. |

| 2. Initialize population x according to Equations (9) and (10). |

| 3. The fitness values of all individuals are calculated, and the optimal solution G is selected. |

| 4. While t < Tmax |

| 5. Define the teacher according to Equation (8). |

| 6. Students are divided into elite students (Xgood) and ordinary students (Xbad). The number of elite students is Ngood. |

| 7. for i = 1:N |

| 8. if i ≤ Ngood |

| 9. The teacher phase is achieved according to Equations (1)–(3), and (5). |

| 10. else |

| 11. The teacher phase is realized according to Equations (4) and (5). |

| 12. end |

| 13. Carry out boundary processing for the updated students. |

| 14. Calculate the average knowledge level of elite students (M) |

| 15. if i ≤ Ngood |

| 16. for j = 1:dim |

| 17. The elite students get the learning motivation D according to Equation (12) and carry out the student phase through Equations (13) and (7). |

| 18. end |

| 19. else |

| 20. Ordinary students carry out the student phase according to Equations (14) and (7). |

| 21. end |

| 22. end |

| 23. Carry out boundary processing for the updated students. |

| 24. An inverse solution is generated using a random opposition-based learning strategy by Equation (15), and the student position is updated according to Equation (16). |

| 25. Calculate the new fitness value of each student and check whether it is better. If it is better, replace the fitness value and set the corresponding trial = 0. Otherwise, increase the corresponding trial by 1. |

| 26. Define Limit according to Equation (17). |

| 27. for i = 1:N |

| 28. if trial(i) > Limit |

| 29. T1 and T2 are generated by Equations (18) and (19), and T2 is subjected to boundary processing using Equation (20). Assign the position with the smaller fitness value to xi. |

| 30. trial(i) = 0 |

| 31. end |

| 32. end |

| 33. t = t + 1 |

| 34. end |

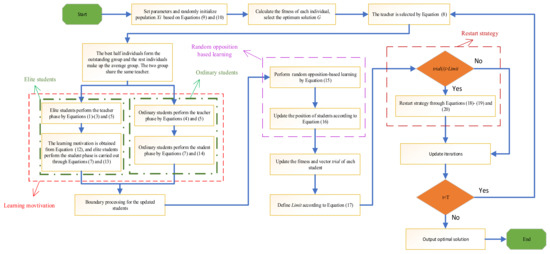

The MGTOA flow chart is shown in Figure 2.

Figure 2.

Flowchart for proposed MGTOA.

4. Experimental Results and Discussion

All experiments in this paper were run on a computer with an 11th Gen Intel(R) Core(TM) i7-11700 processor with a base frequency of 2.50 GHz, 16 GB of memory, and 64-bit Windows 11, using MATLAB R2021a.

In this section, MGTOA is evaluated on two different types of benchmark functions. First, its performance on simple optimization problems is evaluated through experiments on 23 standard benchmark functions. It is then verified on the CEC2014 test suite, which contains 30 test functions. Finally, to verify that the proposed MGTOA performs better, it is compared with GTOA [28], Genetic Algorithm (GA) [11], Sine Cosine Algorithm (SCA) [37], Bald Eagle Search (BES) [38], Remora Optimization Algorithm (ROA) [10], Arithmetic Optimization Algorithm (AOA) [39], Whale Optimization Algorithm (WOA) [9], Teaching Learning-Based Optimization Algorithm (TLBO) [25], and Balanced Teaching Learning-Based Optimization Algorithm (BTLBO) [40]. The parameter settings of these algorithms are shown in Table 1.

Table 1.

Parameter settings for the comparative algorithms.

4.1. Experiments on Standard Benchmark Functions

In this section, the performance of MGTOA is tested on 23 mathematical benchmark functions and compared with nine other algorithms. The benchmark contains seven unimodal, six multimodal, and ten fixed-dimension multimodal functions, as shown in Table 2, where F is the mathematical function, dim is the dimension, Range is the interval of the search space, and Fmin is the optimal value of the corresponding function. For the 23 standard benchmark functions, the population size is N = 30, the maximum number of iterations is T = 500, and the dimension is dim = 30/500. Each algorithm is run independently 30 times to obtain the best fitness value, the average fitness value, and the standard deviation.
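The three statistics reported for each algorithm and function can be computed from the best fitness values of the independent runs, for example as in the sketch below. The function name `summarize_runs` is hypothetical, and the use of the sample standard deviation (dividing by n − 1) is an assumption; the source does not state which variant was used.

```python
import statistics
from typing import Dict, Sequence


def summarize_runs(best_values: Sequence[float]) -> Dict[str, float]:
    """Return min, mean, and sample standard deviation over independent runs."""
    return {
        "min": min(best_values),
        "mean": statistics.mean(best_values),
        "std": statistics.stdev(best_values),  # assumption: sample std (n - 1)
    }


summary = summarize_runs([1.0, 2.0, 3.0])
```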

Table 2.

Details of 23 benchmark functions.

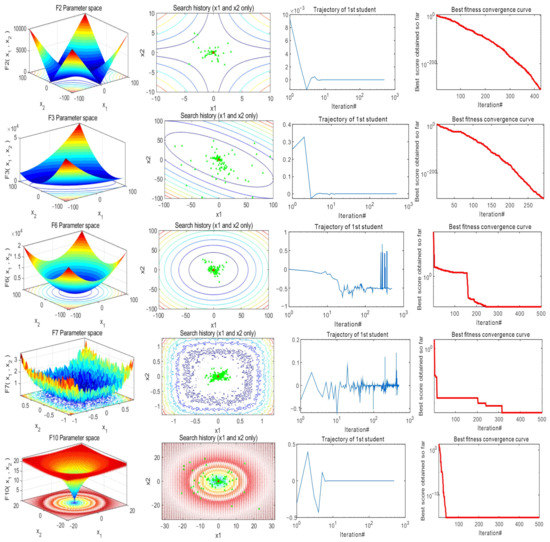

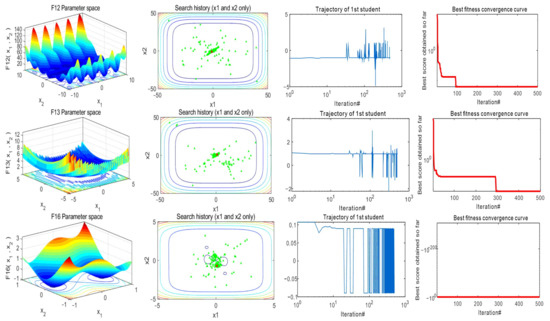

Figure 3 shows plots of some of the 23 benchmark functions, the historical distribution of MGTOA on each function, the historical trajectory of the first individual, and the convergence curve of MGTOA. The figure shows the results and the position distribution obtained by MGTOA during optimization.

Figure 3.

Results of MGTOA in 23 benchmark functions.

Table 3, Table 4 and Table 5 report the statistics of MGTOA and the nine comparison algorithms on the 23 benchmark functions, with the best values shown in bold. MGTOA improves markedly on the other algorithms and achieves good results on the 23 benchmark functions. On F1–F4, the statistics of MGTOA are significantly better than those of GTOA, and the fitness value reaches the theoretical optimum. BES achieves good results on F1, and AOA achieves good results on F2, while the other comparison algorithms fall short on F1–F4. In 30 dimensions, MGTOA does not obtain the minimum fitness value on F6 and F12, which indicates a weakness of MGTOA on these functions. In 500 dimensions, however, MGTOA achieves the minimum fitness value. This shows that the optimization performance of MGTOA is stable across dimensions, whereas TLBO and BTLBO cannot guarantee good results in high dimensions. On the remaining functions, MGTOA also achieves good results. BES and ROA perform well on F9–F11 but are less effective than MGTOA on other functions. In Table 5, because the functions are relatively simple, many algorithms obtain good fitness values. MGTOA obtains the best fitness value on most functions and shows good stability. ROA, WOA, BTLBO, and TLBO also achieve good results, whereas the other algorithms are relatively weak and perform well on only some functions. The above analysis verifies that MGTOA performs well on the 23 benchmark functions.

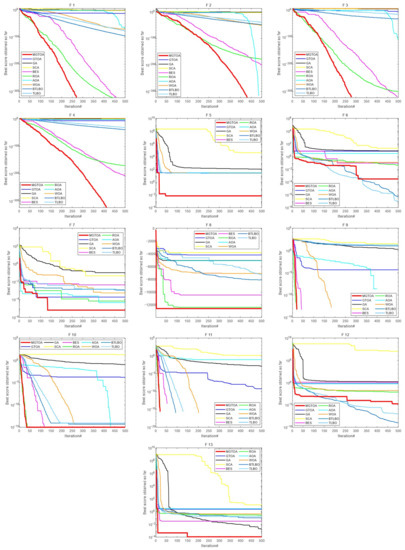

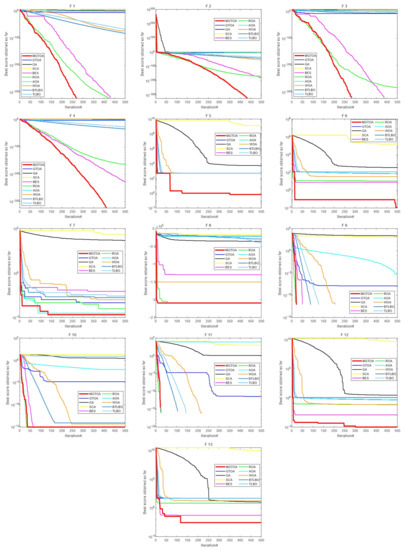

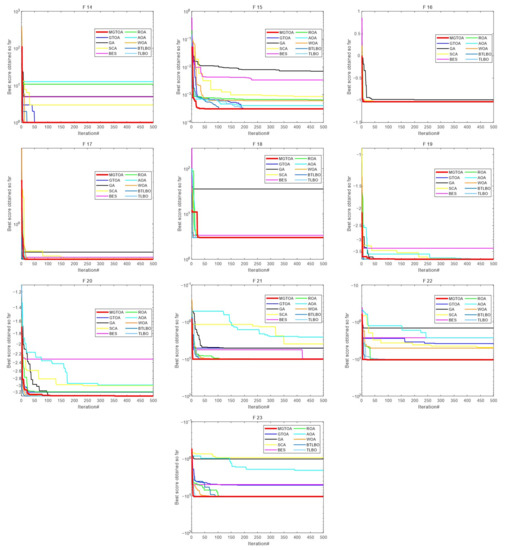

To show the optimization ability of each algorithm more intuitively, the convergence curves of all algorithms on the 23 mathematical benchmark functions are shown in Figure 4, Figure 5 and Figure 6. On F1–F4, the optimization performance of MGTOA is clearly superior to that of the other algorithms: MGTOA converges well in the early stage and quickly finds the optimal value. In Figure 4, MGTOA is inferior to TLBO and BTLBO on F6 and F12. In Figure 5, however, the value obtained by MGTOA is significantly smaller, and the other algorithms fail to converge, which shows that MGTOA performs better on high-dimensional problems. On the other functions, MGTOA converges quickly and reaches a good value. This further indicates that MGTOA has better optimization ability on the 23 benchmark functions.

Figure 4.

Convergence curves for the optimization algorithms for standard benchmark functions (F1–F13) with dim = 30.

Figure 5.

Convergence curves for the optimization algorithms for standard benchmark functions (F1–F13) with dim = 500.

Figure 6.

Convergence curves for the optimization algorithms for standard benchmark functions (F14–F23).

In addition, the Wilcoxon rank sum test is a nonparametric statistical test that can detect differences between more complex data distributions. The preceding analysis considers only the average value and standard deviation and does not compare the results of the individual runs of the algorithms. Therefore, the Wilcoxon rank sum test is used for further verification. Table 6 shows the test results for MGTOA against the nine other algorithms, each run 30 times on the 23 mathematical benchmark functions. The significance level is 5%: a p-value below 0.05 indicates a significant difference between the two algorithms, whereas a larger value indicates that the two algorithms perform similarly. The table shows that p is less than 0.05 for most functions but greater than 0.05 for some. Compared with BES, MGTOA has four functions with p = 1; compared with ROA, three functions with p = 1; and compared with AOA, three functions with p = 1 when dim = 30. This is because both these algorithms and MGTOA find the theoretical optimal fitness value on these functions. Compared with MGTOA, WOA, BTLBO and TLBO have many p-values greater than 0.05 on F9–F11, which shows that these algorithms perform similarly to MGTOA on these functions. In most cases, however, the p-values between MGTOA and the other algorithms are less than 0.05. In general, MGTOA achieves good results in the Wilcoxon rank sum test.
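To make the meaning of these p-values concrete, the following sketch computes a two-sided rank sum p-value with the normal approximation, using average ranks for ties and no tie or continuity correction. It is not necessarily the exact variant used to produce Table 6, and the function name `rank_sum_p_value` is hypothetical.

```python
import math
from typing import List, Sequence


def rank_sum_p_value(x: Sequence[float], y: Sequence[float]) -> float:
    """Two-sided Wilcoxon rank sum p-value (normal approximation)."""
    pooled = sorted((v, k) for k, v in enumerate(list(x) + list(y)))
    ranks: List[float] = [0.0] * len(pooled)
    start = 0
    while start < len(pooled):
        end = start
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[start][0]:
            end += 1
        average_rank = (start + end) / 2.0 + 1.0  # 1-based average rank of the tie group
        for pos in range(start, end + 1):
            ranks[pooled[pos][1]] = average_rank
        start = end + 1
    n1, n2 = len(x), len(y)
    w = sum(ranks[:n1])                            # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2.0                  # mean of w under the null hypothesis
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2.0))      # two-sided tail probability


# Two algorithms that both reach the optimum in all 30 runs.
p_same = rank_sum_p_value([0.0] * 30, [0.0] * 30)
# Two algorithms whose results do not overlap at all.
p_diff = rank_sum_p_value([float(v) for v in range(30)],
                          [float(v) for v in range(100, 130)])
```

When both samples consist of identical values, every observation receives the same average rank, the statistic equals its expected value, and the p-value is 1, which matches the p = 1 entries discussed above.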

Table 3.

Results of benchmark functions (F1–F13) under 30 dimensions.

| F | Metric | MGTOA | GTOA [28] | GA [11] | SCA [37] | BES [38] | ROA [10] | AOA [39] | WOA [9] | BTLBO [40] | TLBO [25] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | min | 0 | 1.13 × 10^−14 | 9.69 × 10^−3 | 8.36 × 10^−2 | 0 | 0 | 3.05 × 10^−193 | 6.03 × 10^−86 | 1.47 × 10^−97 | 5.3 × 10^−81 |
| | mean | 0 | 5.92 × 10^−6 | 2.37 × 10^−2 | 16.5 | 0 | 2.99 × 10^−319 | 2.56 × 10^−30 | 2.21 × 10^−68 | 9.71 × 10^−96 | 6.99 × 10^−79 |
| | std | 0 | 2.42 × 10^−5 | 6.57 × 10^−3 | 36.5 | 0 | 0 | 1.40 × 10^−29 | 1.21 × 10^−67 | 2.03 × 10^−95 | 1.76 × 10^−78 |
| F2 | min | 0 | 3.43 × 10^−7 | 3.64 × 10^−1 | 1.47 × 10^−6 | 2.38 × 10^−229 | 1.70 × 10^−182 | 0 | 1.7 × 10^−59 | 3.54 × 10^−49 | 1.24 × 10^−40 |
| | mean | 0 | 5.83 × 10^−4 | 4.91 × 10^−1 | 1.43 × 10^−2 | 2.43 × 10^−161 | 2.91 × 10^−162 | 0 | 1.92 × 10^−49 | 2.63 × 10^−48 | 5.07 × 10^−40 |
| | std | 0 | 1.67 × 10^−3 | 7.28 × 10^−2 | 2.31 × 10^−2 | 1.33 × 10^−160 | 1.56 × 10^−161 | 0 | 8.92 × 10^−49 | 2.63 × 10^−48 | 5.35 × 10^−40 |
| F3 | min | 0 | 3.58 × 10^−11 | 9.24 × 10^3 | 1.82 × 10^3 | 0 | 1.59 × 10^−321 | 1.96 × 10^−132 | 2.11 × 10^4 | 2.29 × 10^−37 | 1.15 × 10^−19 |
| | mean | 0 | 5.10 × 10^−4 | 2.16 × 10^4 | 8.87 × 10^3 | 1.76 × 10^−27 | 1.02 × 10^−284 | 2.9 × 10^−3 | 4.08 × 10^4 | 5.54 × 10^−33 | 1.3 × 10^−16 |
| | std | 0 | 2.63 × 10^−3 | 8.05 × 10^3 | 5.64 × 10^3 | 9.64 × 10^−27 | 0 | 5.97 × 10^−3 | 1.37 × 10^4 | 1.51 × 10^−32 | 5.06 × 10^−16 |
| F4 | min | 0 | 3.04 × 10^−7 | 2.15 × 10^−1 | 9.56 | 9.19 × 10^−237 | 7.35 × 10^−180 | 7.99 × 10^−51 | 8.24 | 3.52 × 10^−40 | 2.09 × 10^−33 |
| | mean | 0 | 4.8 × 10^−4 | 2.82 × 10^−1 | 36.4 | 9.34 × 10^−171 | 4.87 × 10^−152 | 2.25 × 10^−2 | 54.0 | 2.27 × 10^−39 | 1.43 × 10^−32 |
| | std | 0 | 8.52 × 10^−4 | 3.86 × 10^−2 | 13.1 | 0 | 2.63 × 10^−151 | 2.12 × 10^−2 | 24.3 | 3 × 10^−39 | 1.30 × 10^−32 |
| F5 | min | 5.39 × 10^−6 | 28.9 | 25.5 | 44.6 | 9.27 × 10^−4 | 26.6 | 27.8 | 2.73 × 10^−1 | 2.29 × 10^−1 | 23.4 |
| | mean | 8.90 × 10^−1 | 28.9 | 70.7 | 8.17 × 10^4 | 21.8 | 27.1 | 28.5 | 28.0 | 23.9 | 24.7 |
| | std | 4.7 | 2.79 × 10^−2 | 30.7 | 1.83 × 10^5 | 12 | 3.63 × 10^−1 | 3.08 × 10^−1 | 4.64 × 10^−1 | 6.67 × 10^−1 | 6.32 × 10^−1 |
| F6 | min | 1.4 × 10^−5 | 4.42 | 7.83 | 5.52 | 3.21 × 10^−4 | 1.69 × 10^−2 | 2.69 | 1.14 × 10^−1 | 4.84 × 10^−9 | 2.66 × 10^−8 |
| | mean | 1.11 × 10^−3 | 5.67 | 8.07 | 25.8 | 1.35 | 1.04 × 10^−1 | 3.24 | 4.47 × 10^−1 | 1.06 × 10^−6 | 1.17 × 10^−6 |
| | std | 1.18 × 10^−3 | 7.71 × 10^−1 | 1.38 × 10^−1 | 49 | 2.8 | 1.21 × 10^−1 | 2.74 × 10^−1 | 3.27 × 10^−1 | 2.64 × 10^−6 | 3.77 × 10^−6 |
| F7 | min | 4.97 × 10^−8 | 1.06 × 10^−4 | 8.88 × 10^−2 | 1.7 × 10^−2 | 2.03 × 10^−3 | 4.43 × 10^−6 | 2.47 × 10^−7 | 9.51 × 10^−5 | 3.60 × 10^−4 | 5.57 × 10^−4 |
| | mean | 3.79 × 10^−5 | 4.49 × 10^−4 | 1.98 × 10^−1 | 1.1 × 10^−1 | 6.19 × 10^−3 | 1.85 × 10^−4 | 8 × 10^−5 | 3.32 × 10^−3 | 8.10 × 10^−4 | 1.13 × 10^−3 |
| | std | 3.29 × 10^−5 | 2.6 × 10^−4 | 7.02 × 10^−2 | 1.08 × 10^−1 | 3.54 × 10^−3 | 1.71 × 10^−4 | 8.71 × 10^−5 | 3.65 × 10^−3 | 4.16 × 10^−4 | 5.20 × 10^−4 |
| F8 | min | −1.26 × 10^4 | −6.16 × 10^3 | −5.94 × 10^3 | −4.73 × 10^3 | −9.97 × 10^3 | −1.26 × 10^4 | −6.19 × 10^3 | −1.26 × 10^4 | −9.87 × 10^3 | −9.45 × 10^3 |
| | mean | −1.26 × 10^4 | −5.09 × 10^3 | −4.73 × 10^3 | −3.78 × 10^3 | −5.80 × 10^3 | −1.24 × 10^4 | −5.41 × 10^3 | −1.03 × 10^4 | −7.60 × 10^3 | −7.67 × 10^3 |
| | std | 6.00 × 10^−2 | 6.87 × 10^2 | 6.85 × 10^2 | 3.61 × 10^2 | 3.23 × 10^3 | 3.26 × 10^2 | 4.37 × 10^2 | 1.68 × 10^3 | 1.01 × 10^3 | 1.21 × 10^3 |
| F9 | min | 0 | 5.7 × 10^−11 | 1.37 | 1.25 × 10^−1 | 0 | 0 | 0 | 0 | 8.04 | 9.95 |
| | mean | 0 | 2.44 × 10^−5 | 2.74 | 34.7 | 0 | 0 | 0 | 7.58 × 10^−15 | 19.0 | 14.4 |
| | std | 0 | 8.93 × 10^−5 | 8.29 × 10^−1 | 30.5 | 0 | 0 | 0 | 2.47 × 10^−14 | 8.82 | 6.97 |
| F10 | min | 8.88 × 10^−16 | 2.55 × 10^−8 | 9.25 × 10^−2 | 4.78 × 10^−2 | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 4.44 × 10^−15 | 4.44 × 10^−15 |
| | mean | 8.88 × 10^−16 | 3.36 × 10^−4 | 1.33 × 10^−1 | 15.4 | 1.13 × 10^−15 | 8.88 × 10^−16 | 8.88 × 10^−16 | 4.8 × 10^−15 | 5.03 × 10^−15 | 6.34 × 10^−15 |
| | std | 0 | 7.52 × 10^−4 | 3.09 × 10^−2 | 7.96 | 9.01 × 10^−16 | 0 | 0 | 2.35 × 10^−15 | 1.35 × 10^−15 | 1.8 × 10^−15 |
| F11 | min | 0 | 1.06 × 10^−12 | 6.76 × 10^−4 | 1.83 × 10^−2 | 0 | 0 | 2.66 × 10^−2 | 0 | 0 | 0 |
| | mean | 0 | 1.11 × 10^−5 | 8.6 × 10^−2 | 9.91 × 10^−1 | 0 | 0 | 1.88 × 10^−1 | 1.18 × 10^−2 | 0 | 2.76 × 10^−6 |
| | std | 0 | 4.1 × 10^−5 | 2.7 × 10^−1 | 3.07 × 10^−1 | 0 | 0 | 1.59 × 10^−1 | 6.45 × 10^−2 | 0 | 1.51 × 10^−5 |
| F12 | min | 3.52 × 10^−7 | 3.44 × 10^−1 | 1.55 | 8.5 × 10^−1 | 1.48 × 10^−6 | 2.78 × 10^−3 | 3.98 × 10^−1 | 7.12 × 10^−3 | 2.49 × 10^−10 | 5.78 × 10^−11 |
| | mean | 2.15 × 10^−5 | 6.21 × 10^−1 | 1.72 | 5.51 × 10^4 | 1.33 × 10^−1 | 1.07 × 10^−2 | 5.24 × 10^−1 | 4.39 × 10^−2 | 1.02 × 10^−8 | 1.26 × 10^−8 |
| | std | 2.01 × 10^−5 | 1.88 × 10^−1 | 5.17 × 10^−2 | 1.74 × 10^5 | 3.28 × 10^−1 | 6.41 × 10^−3 | 4.53 × 10^−2 | 1 × 10^−1 | 2.68 × 10^−8 | 3.63 × 10^−8 |
| F13 | min | 1.17 × 10^−6 | 2.45 | 1.37 × 10^−3 | 4.82 | 4.66 × 10^−5 | 7.92 × 10^−2 | 2.65 | 1.72 × 10^−1 | 2.13 × 10^−7 | 1.59 × 10^−7 |
| | mean | 2.65 × 10^−4 | 2.87 | 4.46 × 10^−3 | 1.89 × 10^5 | 1.22 | 2.4 × 10^−1 | 2.82 | 5.69 × 10^−1 | 9.96 × 10^−2 | 8.4 × 10^−2 |
| | std | 3.56 × 10^−4 | 2.26 × 10^−1 | 3.29 × 10^−3 | 5.58 × 10^5 | 1.47 | 1.38 × 10^−1 | 9.65 × 10^2 | 2.44 × 10^−1 | 8.27 × 10^−2 | 1.23 × 10^−1 |

Table 4.

Results of benchmark functions (F1–F13) under 500 dimensions.

| F | Metric | MGTOA | GTOA [28] | GA [11] | SCA [37] | BES [38] | ROA [10] | AOA [39] | WOA [9] | BTLBO [40] | TLBO [25] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | min | 0 | 3.10 × 10^−13 | 67.2 | 9.04 × 10^4 | 0 | 0 | 5.60 × 10^−1 | 2.86 × 10^−81 | 3.95 × 10^−85 | 1.12 × 10^−68 |
| | mean | 0 | 1.37 × 10^−4 | 70.6 | 2.03 × 10^5 | 0 | 2.17 × 10^−318 | 6.43 × 10^−1 | 1.49 × 10^−67 | 9.97 × 10^−84 | 1.34 × 10^−67 |
| | std | 0 | 6.23 × 10^−4 | 2.63 | 8.08 × 10^4 | 0 | 0 | 4.45 × 10^−2 | 8.06 × 10^−67 | 1.03 × 10^−83 | 1.78 × 10^−67 |
| F2 | min | 0 | 2.76 × 10^−7 | 1.35 × 10^2 | 31.8 | 6.28 × 10^−225 | 9.78 × 10^−177 | 3.81 × 10^−12 | 9.26 × 10^−55 | 4.58 × 10^−43 | 7.22 × 10^−35 |
| | mean | 0 | 5.98 × 10^−3 | 1.40 × 10^2 | 1.07 × 10^2 | 2.93 × 10^−153 | 3.10 × 10^−151 | 1.82 × 10^−3 | 1.3 × 10^−47 | 2.06 × 10^−42 | 2.16 × 10^−34 |
| | std | 0 | 1.28 × 10^−2 | 3.03 | 57.5 | 1.6 × 10^−152 | 1.70 × 10^−150 | 1.7 × 10^−3 | 6.57 × 10^−47 | 1.53 × 10^−42 | 1.35 × 10^−34 |
| F3 | min | 0 | 9.38 × 10^−9 | 4.86 × 10^5 | 5.09 × 10^6 | 0 | 7.16 × 10^−299 | 13.7 | 1.88 × 10^7 | 2.77 × 10^−13 | 4.58 × 10^−3 |
| | mean | 0 | 1.23 × 10^−1 | 7.17 × 10^5 | 6.75 × 10^6 | 8.68 × 10^5 | 5.08 × 10^−261 | 3.42 × 10^3 | 3.32 × 10^7 | 5.59 × 10^−6 | 3.8 × 10^−1 |
| | std | 0 | 5.99 × 10^−1 | 1.39 × 10^5 | 1.42 × 10^6 | 4.43 × 10^6 | 0 | 1.85 × 10^4 | 1.23 × 10^7 | 2.41 × 10^−5 | 1.27 |
| F4 | min | 0 | 5.2 × 10^−8 | 9.52 × 10^−1 | 98.6 | 8.66 × 10^−217 | 2.23 × 10^−173 | 1.63 × 10^−1 | 55.1 | 2.13 × 10^−35 | 5.69 × 10^−28 |
| | mean | 0 | 2.8 × 10^−4 | 9.71 × 10^−1 | 99.0 | 1.3 × 10^−128 | 7.74 × 10^−152 | 1.81 × 10^−1 | 81.3 | 1.22 × 10^−34 | 1.42 × 10^−27 |
| | std | 0 | 4.51 × 10^−4 | 1.04 × 10^−2 | 3.42 × 10^−1 | 7.13 × 10^−128 | 4.13 × 10^−151 | 1.65 × 10^−2 | 22.1 | 8.42 × 10^−35 | 9.58 × 10^−28 |
| F5 | min | 4.12 × 10^−8 | 4.99 × 10^2 | 4.90 × 10^3 | 1.12 × 10^9 | 1.13 × 10^2 | 4.94 × 10^2 | 4.99 × 10^2 | 4.96 × 10^2 | 4.96 × 10^2 | 4.96 × 10^2 |
| | mean | 1.16 × 10^2 | 4.99 × 10^2 | 5.14 × 10^3 | 1.95 × 10^9 | 4.32 × 10^2 | 4.95 × 10^2 | 4.99 × 10^2 | 4.96 × 10^2 | 4.97 × 10^2 | 4.97 × 10^2 |
| | std | 2.14 × 10^2 | 3.20 × 10^−2 | 1.76 × 10^2 | 5.05 × 10^8 | 1.67 × 10^2 | 2.94 × 10^−1 | 1.03 × 10^−1 | 4.20 × 10^−1 | 6.30 × 10^−1 | 4 × 10^−1 |
| F6 | min | 9.08 × 10^−5 | 1.22 × 10^2 | 3.35 × 10^2 | 7.45 × 10^4 | 1.14 × 10^−2 | 7.29 | 1.14 × 10^2 | 20.3 | 70 | 71.6 |
| | mean | 16.8 | 1.23 × 10^2 | 3.45 × 10^2 | 2.42 × 10^5 | 30.6 | 15.3 | 1.16 × 10^2 | 32.6 | 75.4 | 75.5 |
| | std | 35.3 | 7.36 × 10^−1 | 5.84 | 9.01 × 10^4 | 53 | 6.51 | 1.38 | 9.53 | 2.39 | 2.11 |
| F7 | min | 2.6 × 10^−8 | 7.82 × 10^−5 | 4.30 × 10^3 | 9.38 × 10^3 | 8.2 × 10^−4 | 5.87 × 10^−6 | 1.21 × 10^−5 | 8.52 × 10^−5 | 7.16 × 10^−4 | 8.04 × 10^−4 |
| | mean | 3.43 × 10^−5 | 6.1 × 10^−4 | 4.56 × 10^3 | 1.44 × 10^4 | 5.75 × 10^−3 | 2.08 × 10^−4 | 1.06 × 10^−4 | 4.37 × 10^−3 | 1.3 × 10^−3 | 1.66 × 10^−3 |
| | std | 3.22 × 10^−5 | 6.31 × 10^−4 | 2.74 × 10^2 | 3.4 × 10^3 | 4.01 × 10^−3 | 1.66 × 10^−4 | 9.94 × 10^−5 | 5.6 × 10^−3 | 4.27 × 10^−4 | 5.37 × 10^−4 |
| F8 | min | −2.09 × 10^5 | −2.85 × 10^4 | −3.63 × 10^4 | −1.73 × 10^4 | −2.08 × 10^5 | −2.09 × 10^5 | −2.58 × 10^4 | −2.09 × 10^5 | −7.28 × 10^4 | −6.17 × 10^4 |
| | mean | −2.09 × 10^5 | −2.15 × 10^4 | −3.29 × 10^4 | −1.53 × 10^4 | −1.61 × 10^5 | −2.05 × 10^5 | −2.3 × 10^4 | −1.7 × 10^5 | −3.39 × 10^4 | −4.39 × 10^4 |
| | std | 1.18 | 3.12 × 10^3 | 1.91 × 10^3 | 1.23 × 10^3 | 2.49 × 10^4 | 1.02 × 10^4 | 1.58 × 10^3 | 3.1 × 10^4 | 1.10 × 10^4 | 1.21 × 10^4 |
| F9 | min | 0 | 0 | 2.26 × 10^3 | 4.19 × 10^2 | 0 | 0 | 0 | 0 | 0 | 0 |
| | mean | 0 | 9.27 × 10^−5 | 2.41 × 10^3 | 1.14 × 10^3 | 0 | 0 | 8.08 × 10^−6 | 9.09 × 10^−14 | 0 | 0 |
| | std | 0 | 3.52 × 10^−4 | 72.5 | 5.78 × 10^2 | 0 | 0 | 7.61 × 10^−6 | 3.66 × 10^−13 | 0 | 0 |
| F10 | min | 8.88 × 10^−16 | 2.26 × 10^−8 | 2.85 | 10.7 | 8.88 × 10^−16 | 8.88 × 10^−16 | 7.09 × 10^−3 | 8.88 × 10^−16 | 7.99 × 10^−15 | 7.99 × 10^−15 |
| | mean | 8.88 × 10^−16 | 2.84 × 10^−4 | 2.91 | 18.4 | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.02 × 10^−3 | 4.91 × 10^−15 | 7.99 × 10^−15 | 2.22 |
| | std | 0 | 5.35 × 10^−4 | 2.92 × 10^−2 | 4.11 | 0 | 0 | 4.3 × 10^−4 | 2.23 × 10^−15 | 0 | 4.17 |
| F11 | min | 0 | 2.10 × 10^−12 | 2.28 × 10^−1 | 1.13 × 10^3 | 0 | 0 | 6.52 × 10^3 | 0 | 0 | 0 |
| | mean | 0 | 4.72 × 10^−5 | 3.05 × 10^−1 | 2.08 × 10^3 | 0 | 0 | 9.99 × 10^3 | 3.7 × 10^−18 | 0 | 3.7 × 10^−18 |
| | std | 0 | 2.56 × 10^−4 | 2.66 × 10^−1 | 7.11 × 10^2 | 0 | 0 | 3.09 × 10^3 | 2.03 × 10^−17 | 0 | 2.03 × 10^−17 |
| F12 | min | 1.69 × 10^−8 | 1.09 | 2.73 | 4.03 × 10^9 | 1.24 × 10^−5 | 9.45 × 10^−3 | 1.07 | 3.97 × 10^−2 | 3.84 × 10^−1 | 3.67 × 10^−1 |
| | mean | 1.08 × 10^−5 | 1.14 | 2.80 | 6.28 × 10^9 | 2.42 × 10^−1 | 4.54 × 10^−2 | 1.08 | 1.01 × 10^−1 | 4.3 × 10^−1 | 4.27 × 10^−1 |
| | std | 2.10 × 10^−5 | 3.37 × 10^−2 | 4.75 × 10^−2 | 1.36 × 10^9 | 4.89 × 10^−1 | 2.79 × 10^−2 | 1.2 × 10^−2 | 5.11 × 10^−2 | 2.75 × 10^−2 | 2.96 × 10^−2 |
| F13 | min | 5.42 × 10^−11 | 50 | 10.2 | 6.62 × 10^9 | 3.46 × 10^−3 | 3.08 | 50.1 | 10.7 | 49.8 | 49.8 |
| | mean | 1.87 × 10^−3 | 50 | 10.8 | 1.06 × 10^10 | 12.2 | 8.69 | 50.2 | 19.3 | 49.8 | 49.8 |
| | std | 5.68 × 10^−3 | 4.52 × 10^−3 | 4.73 × 10^−1 | 2.11 × 10^9 | 21.1 | 3.88 | 4.54 × 10^−2 | 6.02 | 1.01 × 10^−2 | 8.82 × 10^−3 |

Table 5.

Results of benchmark functions (F14–F23).

| F | Metric | MGTOA | GTOA [28] | GA [11] | SCA [37] | BES [38] | ROA [10] | AOA [39] | WOA [9] | BTLBO [40] | TLBO [25] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F14 | min | 9.98 × 10^−1 | 9.98 × 10^−1 | 2.98 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 |
| | mean | 9.98 × 10^−1 | 1.16 | 9.43 | 1.83 | 3.06 | 5.75 | 10.2 | 3.68 | 9.98 × 10^−1 | 9.98 × 10^−1 |
| | std | 3.38 × 10^−11 | 5.27 × 10^−1 | 3.58 | 1.89 | 1.39 | 5.05 | 4.05 | 3.47 | 0 | 0 |
| F15 | min | 3.07 × 10^−4 | 3.07 × 10^−4 | 4.35 × 10^−4 | 3.94 × 10^−4 | 3.14 × 10^−4 | 3.08 × 10^−4 | 3.54 × 10^−4 | 3.19 × 10^−4 | 3.07 × 10^−4 | 3.07 × 10^−4 |
| | mean | 3.08 × 10^−4 | 1.89 × 10^−3 | 1.29 × 10^−2 | 9.83 × 10^−4 | 7.02 × 10^−3 | 4.32 × 10^−4 | 1.22 × 10^−2 | 7.49 × 10^−4 | 3.25 × 10^−4 | 3.50 × 10^−4 |
| | std | 2.54 × 10^−7 | 5.34 × 10^−3 | 2.75 × 10^−2 | 3.98 × 10^−4 | 8.72 × 10^−3 | 2.27 × 10^−4 | 2.66 × 10^−2 | 5.11 × 10^−4 | 7.35 × 10^−5 | 1.19 × 10^−4 |
| F16 | min | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 |
| | mean | −1.03 | −1.03 | −1.00 | −1.03 | −9.54 × 10^−1 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 |
| | std | 5.25 × 10^−16 | 6 × 10^−16 | 2.07 × 10^−2 | 8.02 × 10^−5 | 2.25 × 10^−1 | 1.25 × 10^−7 | 1.21 × 10^−7 | 2.04 × 10^−9 | 6.78 × 10^−16 | 6.65 × 10^−16 |
| F17 | min | 3.98 × 10^−1 | 3.98 × 10^−1 | 4.50 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 |
| | mean | 3.98 × 10^−1 | 3.98 × 10^−1 | 1.50 | 3.99 × 10^−1 | 5.81 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 |
| | std | 6.21 × 10^−7 | 0 | 1.48 | 1.6 × 10^−3 | 6.48 × 10^−1 | 6.34 × 10^−6 | 7.93 × 10^−8 | 1.48 × 10^−5 | 0 | 0 |
| F18 | min | 3 | 3 | 3.2 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| | mean | 3 | 3 | 29.5 | 3 | 4.39 | 3 | 9.3 | 3 | 3 | 3 |
| | std | 2.06 × 10^−5 | 8.4 × 10^−15 | 22.2 | 1.33 × 10^−4 | 1.51 | 1.61 × 10^−4 | 11.6 | 1.71 × 10^−4 | 1.31 × 10^−15 | 9.79 × 10^−16 |
| F19 | min | −3.86 | −3.86 | −3.86 | −3.86 | −3.85 | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 |
| | mean | −3.86 | −3.86 | −3.71 | −3.85 | −3.65 | −3.86 | −3.85 | −3.86 | −3.86 | −3.86 |
| | std | 9.49 × 10^−6 | 2.63 × 10^−15 | 5.48 × 10^−1 | 1.09 × 10^−2 | 1.89 × 10^−1 | 2.8 × 10^−3 | 5.9 × 10^−3 | 7.31 × 10^−3 | 2.71 × 10^−15 | 2.71 × 10^−15 |
| F20 | min | −3.32 | −3.32 | −3.32 | −3.11 | −3.19 | −3.32 | −3.17 | −3.32 | −3.32 | −3.32 |
| | mean | −3.29 | −3.26 | −3.28 | −2.70 | −2.91 | −3.22 | −3.04 | −3.22 | −3.3 | −3.31 |
| | std | 5.27 × 10^−2 | 7.66 × 10^−2 | 5.55 × 10^−2 | 5.27 × 10^−1 | 2.4 × 10^−1 | 1.24 × 10^−1 | 8.89 × 10^−2 | 1.71 × 10^−1 | 3.67 × 10^−2 | 3.71 × 10^−2 |
| F21 | min | −10.2 | −10.2 | −5.05 | −7.36 | −10.2 | −10.2 | −6.85 | −10.2 | −10.2 | −10.2 |
| | mean | −10.2 | −8 | −1.47 | −2.27 | −6.55 | −10.1 | −3.93 | −7.94 | −10.1 | −9.56 |
| | std | 2.90 × 10^−4 | 2.75 | 1.49 | 2.06 | 2.76 | 1.6 × 10^−2 | 1.72 | 2.79 | 7.74 × 10^−2 | 1.81 |
| F22 | min | −10.4 | −10.4 | −5.07 | −5.62 | −10.4 | −10.4 | −6.05 | −10.4 | −10.4 | −10.4 |
| | mean | −10.4 | −8.04 | −1.67 | −3.29 | −5.43 | −10.4 | −3.38 | −7.76 | −10.4 | −10 |
| | std | 1.29 × 10^−4 | 2.97 | 1.25 | 1.85 | 2.61 | 2.41 × 10^−2 | 1.49 | 2.91 | 7.38 × 10^−16 | 1.35 |
| F23 | min | −10.5 | −10.5 | −5.13 | −5.93 | −10.5 | −10.5 | −6.69 | −10.5 | −10.5 | −10.5 |
| | mean | −10.5 | −7.98 | −1.76 | −3.73 | −5.55 | −10.5 | −3.52 | −6.27 | −10.3 | −10.1 |
| | std | 1.28 × 10^−4 | 3.24 | 1.40 | 1.54 | 2.73 | 2.1 × 10^−2 | 1.26 | 3.26 | 1.21 | 1.74 |

Table 6.

Experimental results of Wilcoxon rank sum test on 23 standard benchmark functions.

| F | dim | MGTOA vs. GTOA [28] | MGTOA vs. GA [11] | MGTOA vs. SCA [37] | MGTOA vs. BES [38] | MGTOA vs. ROA [10] | MGTOA vs. AOA [39] | MGTOA vs. WOA [9] | MGTOA vs. BTLBO [40] | MGTOA vs. TLBO [25] |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1.25 × 10^−1 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 3.13 × 10^−2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F2 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F3 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.5 × 10^−1 | 3.79 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 3.1 × 10^−2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F4 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F5 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.13 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.38 × 10^−3 | 6.16 × 10^−4 | 1.73 × 10^−6 | 6.16 × 10^−4 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F6 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.6 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.89 × 10^−1 | 4.72 × 10^−2 | 1.73 × 10^−6 | 8.97 × 10^−2 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F7 | 30 | 3.18 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 6.64 × 10^−4 | 2.7 × 10^−2 | 2.13 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.36 × 10^−5 | 2.22 × 10^−4 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F8 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 3.59 × 10^−4 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 3.18 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F9 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 | 1 | 5 × 10^−1 | 1.73 × 10^−6 | 3.79 × 10^−6 |
| | 500 | 2.56 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 | 1.32 × 10^−4 | 1 | 1 | 1 |
| F10 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 | 1 | 2.29 × 10^−5 | 2.57 × 10^−7 | 7.86 × 10^−7 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 | 1.73 × 10^−6 | 2.04 × 10^−5 | 4.32 × 10^−8 | 1.11 × 10^−6 |
| F11 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 | 1.73 × 10^−6 | 6.25 × 10^−2 | 1 | 1 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 | 1.73 × 10^−6 | 1 | 1 | 1 |
| F12 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.92 × 10^−6 |
| | 500 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.48 × 10^−3 | 2.77 × 10^−3 | 1.73 × 10^−6 | 2.77 × 10^−3 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F13 | 30 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.6 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.11 × 10^−3 | 2.41 × 10^−4 |
| | 500 | 1.73 × 10^−6 | 2.8 × 10^−3 | 1.73 × 10^−6 | 2.26 × 10^−3 | 2.77 × 10^−3 | 1.73 × 10^−6 | 1.71 × 10^−3 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F14 | 2 | 4.07 × 10^−2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 4.07 × 10^−5 | 1.73 × 10^−6 | 2.13 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F15 | 4 | 2.41 × 10^−4 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 5.75 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 7.71 × 10^−4 | 1.41 × 10^−1 |
| F16 | 2 | 1 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1 | 1 |
| F17 | 2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 5.79 × 10^−5 | 4.53 × 10^−4 | 6.64 × 10^−4 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F18 | 2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 5.79 × 10^−5 | 1.73 × 10^−6 | 1.04 × 10^−3 | 9.75 × 10^−1 | 3.61 × 10^−3 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F19 | 3 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.84 × 10^−5 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 |
| F20 | 6 | 3.38 × 10^−3 | 3.68 × 10^−2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.48 × 10^−2 | 2.13 × 10^−6 | 3.85 × 10^−3 | 3.59 × 10^−4 | 1.48 × 10^−2 |
| F21 | 4 | 1.48 × 10^−2 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 2.77 × 10^−3 |
| F22 | 4 | 6.16 × 10^−4 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.48 × 10^−2 |
| F23 | 4 | 9.27 × 10^−3 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.73 × 10^−6 | 1.92 × 10^−6 | 1.73 × 10^−6 | 3.59 × 10^−4 |

4.2. Experiments on CEC2014 Test Suite

In this section, we use the CEC2014 test functions to verify the performance of MGTOA. The population size is set to N = 30, the maximum number of iterations to T = 500, and the dimension to dim = 10. The details of the CEC2014 functions are shown in Table 7.

Table 7.

Details of 30 CEC2014 benchmark functions.

As can be seen in Table 8, MGTOA achieves good results compared with GTOA, although some results are inferior to those of BTLBO and TLBO. On CEC1–CEC3, MGTOA ranks third, behind BTLBO and TLBO. On CEC4–CEC5, MGTOA ranks first. On CEC6–CEC9, the results of MGTOA are superior to those of GTOA. On CEC10–CEC16, MGTOA finds better values and is more stable. Among the remaining functions, MGTOA fails to achieve the best result only on CEC20–CEC22 and obtains better solutions on the others.

Table 8.

Results of algorithms on the CEC2014 test suite.

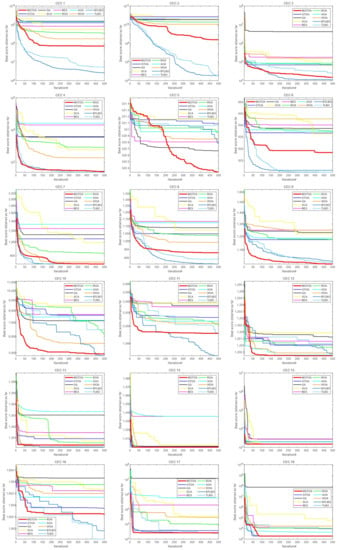

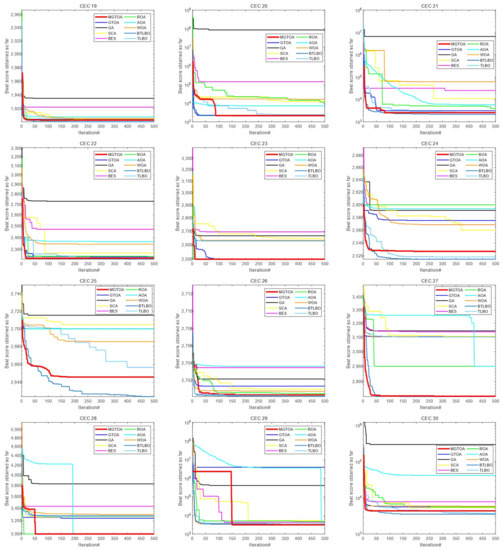

Figure 7 shows the convergence curves of MGTOA and the other nine algorithms. MGTOA achieves good results and is clearly superior to GTOA, although it has shortcomings on some functions. On the unimodal functions, MGTOA ranks third on CEC1 and CEC2. The figure shows that the convergence ability of MGTOA in the later iterations is insufficient, and that TLBO and BTLBO converge better than MGTOA on these functions. On CEC3, however, MGTOA achieves good results. On the simple multimodal functions, MGTOA has some shortcomings on CEC6 and CEC8. On the other functions, MGTOA converges quickly and obtains a good solution. Hybrid Function 1 and the composition functions test the overall ability of the algorithm, and MGTOA also achieves good results on them. On CEC25 and CEC27, MGTOA can jump out of the local optimum to find a better solution. Compared with GTOA, the overall performance of MGTOA is greatly improved. It can be concluded that MGTOA has a good optimization effect on CEC2014.

Figure 7.

Convergence curves for the optimization algorithms for test functions on CEC2014.

Table 9 shows the Wilcoxon rank sum test results obtained by running MGTOA and the other nine algorithms 30 times on CEC2014. Most p-values are less than 0.05, but many of the composition functions in CEC22–CEC30 have p-values greater than 0.05. This is because these functions are relatively simple, and many algorithms can find a good value. On CEC11 and CEC14, three p-values are greater than 0.05. On CEC16 and CEC18, one p-value is greater than 0.05 and the rest are less than 0.05, which shows that there is a significant difference between MGTOA and these algorithms. Moreover, only two p-values between MGTOA and GTOA are greater than 0.05, which indicates that MGTOA differs significantly from GTOA and performs well in the Wilcoxon rank sum test.
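To make the meaning of these p-values concrete, the following is a minimal sketch of a two-sided rank sum test on the results of two algorithms over repeated runs. It assumes the large-sample normal approximation with a tie correction and no continuity correction; the function name `rank_sum_p_value` is hypothetical, and the statistical routine actually used to produce the tables is not stated in the text, so the numbers it returns need not match the tabulated ones.

```python
import math


def rank_sum_p_value(x, y):
    """Two-sided rank sum p-value (normal approximation, tie-corrected).

    x and y hold the final objective values of two algorithms over
    independent runs. This is an illustrative sketch, not the routine
    used to produce the tables.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Pool both samples, remembering which sample each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])

    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        # Tied values share the average of ranks i+1 .. j.
        avg_rank = (i + 1 + j) / 2.0
        for k in range(i, j):
            ranks[k] = avg_rank
        t = j - i
        tie_term += t**3 - t
        i = j

    # Rank sum of the first sample and its null mean and variance.
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n + 1) / 2.0
    var_w = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0.0:
        # All observations identical: the samples cannot be distinguished.
        return 1.0
    z = (w - mean_w) / math.sqrt(var_w)
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    same = rank_sum_p_value([0.0] * 30, [0.0] * 30)
    apart = rank_sum_p_value([float(i) for i in range(30)],
                             [100.0 + i for i in range(30)])
    print(same, apart)
```

When both algorithms return identical values in every run (for example, both reach 0), the sketch returns p = 1, which is consistent with the entries equal to 1 in the tables; clearly separated samples give a p-value far below 0.05.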

Table 9.

Experimental results of Wilcoxon rank sum test on the CEC2014 test suite.

5. Constrained Engineering Design Problems

The previous experiments have shown the optimization effect of MGTOA. To assess the practical effect of MGTOA on engineering problems, six engineering problems are selected for our experiments, namely: welded beam design, pressure vessel design, tension/compression spring design, three-bar truss design, car crashworthiness design, and gear train design. The detailed experimental results are as follows.

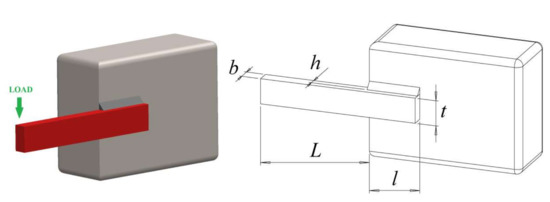

5.1. Welded Beam Design Problem

The welded beam design problem is to minimize the cost of the welded beam under four decision variables and seven constraints. The four variables to be optimized are the weld width h, the connecting beam thickness b, the connecting beam length l, and the beam height t. The specific model of this problem is taken from [7]. The structure of the welded beam is shown in Figure 8.

Figure 8.

The welded beam design.

The mathematical formulation of this problem is shown below:

Consider:

Minimize:

Subject to:

Variable Range:

The results obtained for the welded beam design are shown in Table 10. The optimal solution obtained by MGTOA is much smaller than that obtained by GTOA, and it is also the lowest among the compared algorithms. This indicates that MGTOA performs well on the welded beam design.
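As an illustration of the objective being minimized, the sketch below evaluates the fabrication cost of a welded beam. The coefficients are assumed from the form of this problem commonly stated in the literature rather than taken from this paper, the seven constraints are omitted, and the function name `welded_beam_cost` is hypothetical.

```python
def welded_beam_cost(h, l, t, b):
    """Fabrication cost of the welded beam (commonly stated form, assumed).

    h: weld width, l: connecting beam length,
    t: beam height, b: connecting beam thickness.
    The first term is the weld material cost and the second the bar cost.
    """
    return 1.10471 * h**2 * l + 0.04811 * t * b * (14.0 + l)


if __name__ == "__main__":
    # A design point frequently quoted for this benchmark (assumed here).
    print(welded_beam_cost(0.20573, 3.47049, 9.03662, 0.20573))
```

A complete evaluation would also check the shear stress, bending stress, buckling load, and deflection constraints, typically through a penalty term added to this cost.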

Table 10.

Experimental results of Welded Beam Design.

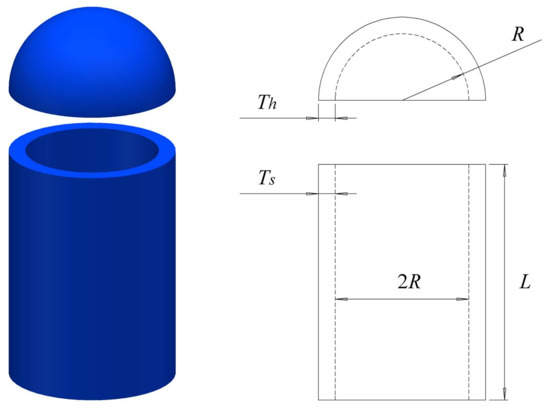

5.2. Pressure Vessel Design Problem

The purpose of the pressure vessel design problem is to meet production needs while reducing the total cost of the vessel. The four design variables are the shell thickness Ts, the head thickness Th, the inner radius R, and the length L of the cylindrical section excluding the head. Ts and Th are integer multiples of 0.0625, while R and L are continuous variables. The specific constraints are given in [43]. The schematic diagram of the structure is shown in Figure 9.

Figure 9.

The pressure vessel design.

The mathematical formulation of this problem is shown below:

Consider:

Minimize:

Subject to:

Variable Range:

The results of the pressure vessel design problem are shown in Table 11. MGTOA obtains Ts = 0.754364, Th = 0.366375, R = 40.42809, and L = 198.5652, whereas GTOA obtains Ts = 0.778169, Th = 0.38465, R = 40.3196, and L = 200. The final cost of MGTOA is 5752.402458. Compared with the other algorithms, the cost of MGTOA is greatly reduced, which shows that MGTOA performs well on this problem.
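The cost model and constraints of this benchmark can be sketched as follows. The coefficients are assumed from the form of the pressure vessel problem commonly stated in the literature, not taken from this paper, so the formulation used for Table 11 may differ in detail; the names `vessel_cost` and `vessel_constraints` are hypothetical.

```python
import math


def vessel_cost(ts, th, r, length):
    """Total cost (material, forming, welding) in the commonly stated form."""
    return (0.6224 * ts * r * length
            + 1.7781 * th * r**2
            + 3.1661 * ts**2 * length
            + 19.84 * ts**2 * r)


def vessel_constraints(ts, th, r, length):
    """Constraint values g_i; a design is feasible when every g_i <= 0."""
    g1 = -ts + 0.0193 * r                     # minimum shell thickness
    g2 = -th + 0.00954 * r                    # minimum head thickness
    g3 = (-math.pi * r**2 * length            # minimum enclosed volume
          - (4.0 / 3.0) * math.pi * r**3 + 1296000.0)
    g4 = length - 240.0                       # maximum length
    return [g1, g2, g3, g4]


if __name__ == "__main__":
    # A design with thicknesses on the 0.0625 grid, often quoted for this
    # benchmark (assumed here); its cost is approximately 6059.7.
    design = (0.8125, 0.4375, 42.0984, 176.6366)
    print(vessel_cost(*design), vessel_constraints(*design))
```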

Table 11.

Experimental results of Pressure Vessel Design.

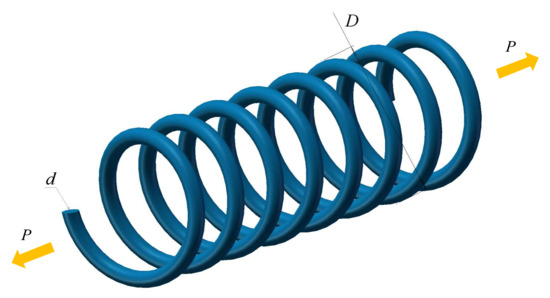

5.3. Tension/Compression Spring Design Problem

As shown in Figure 10, the purpose of the tension/compression spring design problem is to reduce the spring's weight under four constraints: the minimum deflection (g1), the shear stress (g2), the surge frequency (g3), and the outer diameter limit (g4). The specific constraints are given in [48]. The corresponding decision variables are the wire diameter d, the mean coil diameter D, and the number of active coils N. f(x) is the minimum spring mass.

Figure 10.

Tension/Compression Spring Design.

The mathematical formulation of this problem is shown below:

Consider:

Minimize:

Subject to:

Variable Range:

The results of the tension/compression spring design problem are shown in Table 12. The wire diameter d obtained by MGTOA is the best among the compared algorithms. Although the mean coil diameter D and the number of active coils N are not the best values, the difference is not significant, and MGTOA finally obtains the minimum weight, which indicates that MGTOA performs well on this problem.
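For reference, the spring weight and the four constraints g1–g4 can be sketched as below. The constants are assumed from the commonly stated form of this benchmark and are not taken from this paper; the names `spring_weight` and `spring_constraints` are hypothetical.

```python
def spring_weight(d, D, N):
    """Spring weight as a function of wire diameter d, mean coil
    diameter D, and number of active coils N (commonly stated form)."""
    return (N + 2.0) * D * d**2


def spring_constraints(d, D, N):
    """Constraint values g1..g4; a design is feasible when all are <= 0."""
    g1 = 1.0 - (D**3 * N) / (71785.0 * d**4)              # minimum deflection
    g2 = ((4.0 * D**2 - d * D) / (12566.0 * (D * d**3 - d**4))
          + 1.0 / (5108.0 * d**2) - 1.0)                  # shear stress
    g3 = 1.0 - 140.45 * d / (D**2 * N)                    # surge frequency
    g4 = (d + D) / 1.5 - 1.0                              # outer diameter
    return [g1, g2, g3, g4]


if __name__ == "__main__":
    # A design point frequently quoted for this benchmark (assumed here).
    design = (0.051689, 0.356718, 11.288966)
    print(spring_weight(*design), spring_constraints(*design))
```

At good designs the deflection and shear stress constraints are typically close to active, so small rounding of the variables can push g1 or g2 slightly above zero.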

Table 12.

Experimental results of Tension/Compression Spring Design.

5.4. Three-Bar Truss Design Problem

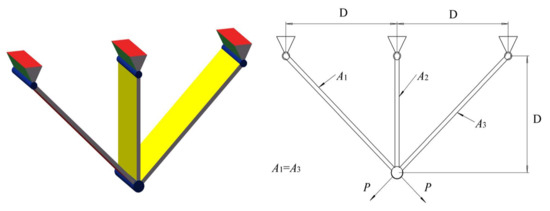

The main purpose of the three-bar truss design problem is to reduce the structure's weight under the action of the total supporting load P. The geometry of this problem is given in Figure 11, where the cross-sectional areas are the design variables. Owing to the symmetry of the system, only the cross-sectional areas A1 (= x1) and A2 (= x2) need to be determined. The constraints are given in [4].

Figure 11.

Three-Bar Truss Design.

The mathematical formulation of this problem is shown below:

Consider:

Minimize:

Subject to:

Variable Range:

The results of the three-bar truss design problem are shown in Table 13. The results obtained by the algorithms are very different, which also indicates that it is difficult to optimize this problem further. Nevertheless, MGTOA achieves the best result among these algorithms and shows a certain improvement over the others.
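The weight and stress constraints of the three-bar truss can be sketched as follows. The bar length (100 cm), the load P, and the allowable stress (both 2 kN/cm²) are assumed from the commonly stated form of this benchmark rather than taken from this paper; the names `truss_weight` and `truss_constraints` are hypothetical.

```python
import math

# Assumed constants of the commonly stated benchmark.
BAR_LENGTH = 100.0   # cm
LOAD = 2.0           # kN/cm^2
STRESS_LIMIT = 2.0   # kN/cm^2


def truss_weight(x1, x2):
    """Structure weight for cross-sectional areas x1 (= A1) and x2 (= A2)."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * BAR_LENGTH


def truss_constraints(x1, x2):
    """Stress constraints; a design is feasible when all values are <= 0."""
    denom = math.sqrt(2.0) * x1**2 + 2.0 * x1 * x2
    g1 = (math.sqrt(2.0) * x1 + x2) / denom * LOAD - STRESS_LIMIT
    g2 = x2 / denom * LOAD - STRESS_LIMIT
    g3 = 1.0 / (math.sqrt(2.0) * x2 + x1) * LOAD - STRESS_LIMIT
    return [g1, g2, g3]


if __name__ == "__main__":
    # A design point frequently quoted for this benchmark (assumed here).
    print(truss_weight(0.78867, 0.40825), truss_constraints(0.78867, 0.40825))
```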

Table 13.

Experimental results of Three-Bar Truss Design.

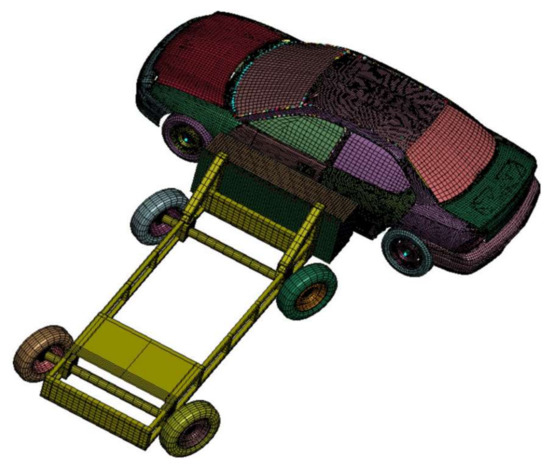

5.5. Car Crashworthiness Design Problem

The frequently used car crashworthiness design problem was first proposed by Gu et al. It is a minimization problem with eleven variables and ten constraints. Figure 12 shows the finite element model of this problem. The decision variables are, respectively, the internal thickness of the B-pillar, the thickness of the B-pillar reinforcement, the internal thickness of the floor, the thickness of the cross beam, the thickness of the door beam, the thickness of the door belt line reinforcement, the thickness of the roof longitudinal beam, the internal material of the B-pillar, the internal material of the floor, the height of the obstacle, and the impact position of the obstacle. The constraints are, respectively, the abdominal load, the upper, middle, and lower viscous criteria, the upper, middle, and lower rib deflections, the pubic symphysis force, the B-pillar midpoint speed, and the B-pillar front door speed. The constraints are given in [49].

Figure 12.

Car Crashworthiness Design.

The mathematical formulation of this problem is shown below:

Minimize:

Subject to:

Variable Range:

Table 14 shows the results of the car crashworthiness design problem. For MGTOA, the variables x1, x3, x4, and x7 all reach 0.5, and the final weight is the best among the compared algorithms.

Table 14.

Experimental results of Car Crashworthiness Design.

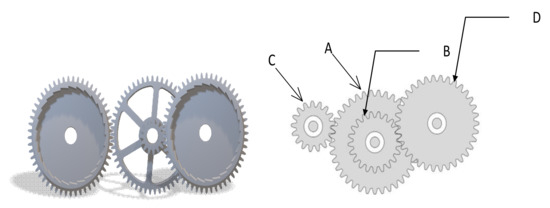

5.6. Gear Train Design Problem

The gear train design problem aims to minimize the gear ratio. This problem has four parameters. The gear transmission is as follows:

The parameters of this problem are discrete with an increment of one, because they are the numbers of teeth of the four gears (nA, nB, nC, nD). The constraints are given in [60]. The schematic diagram is shown in Figure 13.

Figure 13.

Gear train design problem.

The mathematical formulation of this problem is shown below:

Consider:

Minimize:

Variable Range:

Table 15 shows the results of MGTOA and the other comparison algorithms on the gear train design problem. MGTOA obtains the optimal solution for the gear train design, which is a great improvement over GTOA.
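As a small illustration, the objective of this benchmark is commonly stated as the squared deviation of the gear ratio from a target of 1/6.931, with each tooth count an integer between 12 and 60. Both the target value and the bounds are assumptions taken from that standard form, not from this paper, and the function name `gear_train_error` is hypothetical.

```python
def gear_train_error(n_a, n_b, n_c, n_d):
    """Squared deviation of the gear ratio (n_b * n_c) / (n_a * n_d)
    from the assumed target ratio 1/6.931."""
    return (1.0 / 6.931 - (n_b * n_c) / (n_a * n_d)) ** 2


if __name__ == "__main__":
    # A tooth combination frequently quoted for this benchmark (assumed here).
    print(gear_train_error(49, 19, 16, 43))
    # Equal tooth counts give a ratio of 1 and therefore a large error.
    print(gear_train_error(12, 12, 12, 12))
```

Because the variables are integers, candidate solutions produced by a continuous optimizer are usually rounded before this function is evaluated.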

Table 15.

Experimental results of gear train design.

6. Conclusions

This paper proposes a modified GTOA (MGTOA), which improves the student phase of the algorithm according to the different learning motivations of different students and adopts random opposition-based learning and a restart strategy to enhance the optimization performance of the algorithm. MGTOA was tested on 23 standard benchmark functions and the CEC2014 test functions and compared with nine other state-of-the-art algorithms. The results were further verified by the Wilcoxon rank sum test. The data analysis illustrates that MGTOA has an excellent optimization effect. Compared with GTOA, the optimization performance of MGTOA is greatly improved, with better optimization capability and lower error. However, the exploitation capability of MGTOA is weaker than that of BTLBO. In future work, we will strengthen the exploitation capability of MGTOA. After that, MGTOA will be applied to the three-dimensional path planning of UAVs, text clustering, feature selection, scheduling in cloud computing, parameter estimation, image segmentation, intrusion detection, and other problems.

Author Contributions

Conceptualization, H.R. and H.J.; methodology, H.R.; software, H.R. and H.J.; validation, H.J. and D.W.; formal analysis, H.R., H.J., and D.W.; investigation, C.W. and S.L.; resources, Q.L. and C.W.; data curation, H.R. and L.A.; writing—original draft preparation, H.R. and H.J.; writing—review and editing, D.W. and L.A.; visualization, H.R., H.J. and D.W.; supervision, H.J. and D.W.; funding acquisition, H.J. and L.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Sanming University National Natural Science Foundation Breeding Project (PYT2105), the Fujian Natural Science Foundation Project (2021J011128), and the Fujian University Students’ Innovation and Entrepreneurship Training Program. The authors would like to thank the Deanship of Scientific Research at Umm Al-Qura University for supporting this work by Grant Code: (22UQU4320277DSR09).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to acknowledge the support of the Fujian Key Lab of Agriculture IOT Application and the IOT Application Engineering Research Center of Fujian Province Colleges and Universities, and to thank the anonymous reviewers for helping us to improve the quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fearn, T. Particle swarm optimization. NIR News 2014, 25, 27.

- Assiri, A.S.; Hussien, A.G.; Amin, M. Ant lion optimization: Variants, hybrids, and applications. IEEE Access. 2020, 8, 77746–77764.

- Yang, X.; Hossein Gandomi, A. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483.

- Hussien, A.G. An enhanced opposition-based salp swarm algorithm for global optimization and engineering problems. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 129–150.

- Dorigo, M.; Birattari, M.; Stützle, T. Ant Colony Optimization. IEEE. Comput. Intell. M 2006, 1, 28–39.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Global Optim. 2007, 39, 459–471.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Gandomi, A.H.; Alavi, A.H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. 2012, 17, 4831–4845.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Jia, H.; Peng, X.; Lang, C. Remora optimization algorithm. Exp. Syst. Appl. 2021, 185, 115665.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Beyer, H.G.; Schwefel, H.P. Evolution strategies–A comprehensive introduction. Nat. Comput. 2002, 1, 3–52.

- Banzhaf, W.; Koza, J.R. Genetic programming. IEEE Intell. Syst. 2000, 15, 74–84.

- Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

- Sinha, N.; Chakrabarti, R.; Chattopadhyay, P. Evolutionary programming techniques for economic load dispatch. IEEE Trans. Evol. Comput. 2003, 7, 83–94.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for global Optimization over Continuous Spaces. J. Global Optim. 1997, 11, 341–359.

- Jaderyan, M.; Khotanlou, H. Virulence Optimization Algorithm. Appl. Soft. Comput. 2016, 43, 596–618.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Rashedi, E.; Nezamabadi-Pour, H.S. GSA: A Gravitational Search Algorithm. Inform. Sci. 2009, 179, 2232–2248.

- Hatamlou, A. Black hole: A new heuristic optimization approach for data clustering. Inform. Sci. 2013, 222, 175–184.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2015, 27, 495–513.

- Kaveh, A.; Khayatazad, M. A new meta-heuristic method: Ray optimization. Comput. Struct. 2012, 112, 283–294.

- Kaveh, A.; Dadras, A. A novel meta-heuristic optimization algorithm: Thermal exchange optimization. Adv. Eng. Softw. 2017, 110, 69–84.

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A New Heuristic Optimization Algorithm: Harmony Search. Simulation 2001, 2, 60–68.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching-Learning-Based Optimization: An optimization method for continuous non-linear large scale problems. Inform. Sci. 2012, 183, 1–15.

- Satapathy, S.; Naik, A. Social group optimization (SGO): A new population evolutionary optimization technique. Complex Intell Syst. 2016, 2, 173–203.

- Naser, G.; Ebrahim, B. Exchange market algorithm. Appl. Soft Comput. 2014, 19, 177–187.

- Zhang, Y.; Jin, Z. Group teaching optimization algorithm: A novel metaheuristic method for solving global optimization problems. Expert Syst. Appl. 2020, 148, 113246.

- Zhang, Y.; Chi, A. Group teaching optimization algorithm with information sharing for numerical optimization and engineering optimization. J. Intell. Manuf. 2021, 1–25.

- Ahandani, M.A.; Alavi-Rad, H. Opposition-based learning in the shuffled differential evolution algorithm. Soft Comput. 2012, 16, 1303–1337.

- Shang, J.; Sun, Y.; Li, J.; Zheng, C.; Zhang, J. An Improved Opposition-Based Learning Particle Swarm Optimization for the Detection of SNP-SNP Interactions. BioMed Res. Int. 2015, 12, 524821.

- Wang, H.; Wu, Z.; Rahnamayan, S.; Liu, Y.; Ventresca, M. Enhancing particle swarm optimization using generalized opposition-based learning. Inform. Sci. 2011, 181, 4699–4714.

- Liu, Q.; Li, N.; Jia, H.; Qi, Q.; Abualigah, L. Modified remora optimization algorithm for global optimization and multilevel thresholding image segmentation. Mathematics 2022, 10, 1014.

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M.M.A. Opposition-Based Differential Evolution. IEEE Trans. Evol. Comput. 2008, 12, 64–79.

- Zhou, Y.; Hao, J.K.; Duval, B. Opposition-based Memetic Search for the Maximum Diversity Problem. IEEE Trans. Evol. Comput. 2017, 21, 731–745.

- Zhang, H.; Wang, Z.; Chen, W.; Heidari, A.A.; Wang, M.; Zhao, X.; Liang, G.; Chen, H.; Zhang, X. Ensemble mutation-driven salp swarm algorithm with restart mechanism: Framework and fundamental analysis. Expert Syst. Appl. 2021, 165, 113897.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl. Based Syst. 2016, 96, 120–133.

- Alsattar, H.A.; Zaidan, A.A.; Zaidan, B.B. Novel meta-heuristic bald eagle search optimisation algorithm. Artif. Intell. Rev. 2020, 53, 2237–2264.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Elaziz, M.A.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Method Appl. Mech. Eng. 2021, 376, 113609.

- Ahmad, T.; Keyvan, R.Z.; Ravipudi, V.R. An efficient Balanced Teaching-Learning-Based optimization algorithm with Individual restarting strategy for solving global optimization problems. Inform. Sci. 2021, 576, 68–104.

- Babalik, A.; Cinar, A.C.; Kiran, M.S. A modification of tree-seed algorithm using Deb’s rules for constrained optimization. Appl. Soft Comput. 2018, 63, 289–305.

- Hussien, A.G.; Amin, M.; Abd El Aziz, M. A comprehensive review of moth-flame optimisation: Variants, hybrids, and applications. J. Exp. Theor. Artif. Intell. 2020, 32, 705–725.

- Wen, C.; Jia, H.; Wu, D.; Rao, H.; Li, S.; Liu, Q.; Abualigah, L. Modified Remora Optimization Algorithm with Multistrategies for Global Optimization Problem. Mathematics 2022, 10, 3604.

- He, Q.; Wang, L. An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intel. 2007, 20, 89–99.

- He, Q.; Wang, L. A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl. Math. Comput. 2007, 186, 1407–1422.

- Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Laith, A.; Dalia, Y.; Mohamed, A.E.; Ahmed, A.E.; Mohammed, A.A.A.; Amir, H.G. Aquila Optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Liu, Q.; Li, N.; Jia, H.; Qi, Q.; Abualigah, L.; Liu, Y. A hybrid arithmetic optimization and golden sine algorithm for solving industrial engineering design problems. Mathematics 2022, 10, 1567.

- Zheng, R.; Jia, H.; Abualigah, L.; Wang, S.; Wu, D. An improved remora optimization algorithm with autonomous foraging mechanism for global optimization problems. Math. Biosci. Eng. 2022, 19, 3994–4037.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Hui, L.; Cai, Z.; Yong, W. Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl. Soft Comput. 2010, 10, 629–640.

- Tsai, J.-F. Global optimization of nonlinear fractional programming problems in engineering design. Eng. Optimiz. 2005, 37, 399–409.

- Min, Z.; Luo, W.; Wang, X. Differential evolution with dynamic stochastic selection for constrained optimization. Inform. Sci. 2008, 178, 3043–3074.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper Optimisation Algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Abualigah, L.; Elaziz, M.A.; Sumari, P.; Zong, W.G.; Gandomi, A.H. Reptile search algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2021, 191, 116158.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine predators algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Houssein, E.H.; Neggaz, N.; Hosney, M.E.; Mohamed, W.M.; Hassaballah, M. Enhanced Harris hawks optimization with genetic operators for selection chemical descriptors and compounds activities. Neural Comput. Appl. 2021, 33, 13601–13618.

- Long, W.; Jiao, J.; Liang, X.; Cai, S.; Xu, M. A random opposition-based learning grey wolf optimizer. IEEE Access 2019, 7, 113810–113825.

- Wang, S.; Sun, K.; Zhang, W.; Jia, H. Multilevel thresholding using a modified ant lion optimizer with opposition-based learning for color image segmentation. Math. Biosci. Eng. MBE 2021, 18, 3092–3143.

- Absalom, E.E.; Jeffrey, O.A.; Laith, A.; Seyedali, M.; Amir, H.G. Prairie Dog Optimization Algorithm. Neural Comput. Appl. 2022, 1–49.

- Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. 2013, 13, 2592–2612.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).