Abstract

Aspect-based sentiment analysis (ABSA) is a fine-grained sentiment analysis task that greatly benefits real-world applications. Recently, methods that apply graph neural networks over dependency trees have become popular, but most of them only consider whether a dependency exists between words and ignore the types of these dependencies, which carry important information because dependencies of different types have different effects. In addition, they neglect the correlations between dependency types and part-of-speech (POS) labels, which are helpful for utilizing dependency information. To address these limitations and the insufficient mining of syntactic and semantic features, we propose a novel model containing three modules, which aims to leverage dependency trees more reasonably by distinguishing different dependencies and extracting beneficial syntactic and semantic features to further enhance model performance. To enrich word embeddings, we design a syntactic feature encoder (SynFE). In particular, we design a Dependency-POS Weighted Graph Convolutional Network (DPGCN) that weights different dependencies with a proposed graph attention mechanism. Additionally, to capture aspect-oriented semantic information, we design a semantic feature extractor (SemFE). Extensive experiments on five popular benchmark datasets validate that our model can better employ dependency information and effectively extract favorable syntactic and semantic features to achieve new state-of-the-art performance.

Keywords:

aspect-based sentiment analysis; graph neural networks; dependency trees; dependency types; graph attention mechanism; syntactic; semantic

MSC:

18C50

1. Introduction

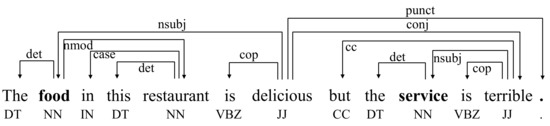

Aspect-based sentiment analysis (ABSA) is a popular topic in natural language processing with the purpose of identifying the sentiment polarities (i.e., positive, neutral and negative) toward the specific aspects in given sentences. Take this review “The food in this restaurant is delicious but the service is terrible” as an example. For aspect “food”, the polarity is positive, while it is negative for aspect “service”. An ABSA model aims to infer the sentiment polarities of the given aspects accurately on the fine-grained level.

The key to solving the ABSA task is to properly find the relations between aspects and their corresponding opinion words. Early works combined recurrent neural networks (RNNs) and the attention mechanism [1,2,3,4,5] to capture semantic information related to aspects and generate aspect-specific sentence representations. However, these methods are vulnerable to noise introduced by unrelated words. They also ignored the syntactic dependency information in the sentences, which makes it difficult for them to link aspects and corresponding opinion words when the two are far apart in the sentence. Most recent works [6,7,8,9,10] applied graph-based networks such as graph convolutional networks (GCNs) and graph attention networks (GATs) over dependency trees to explicitly exploit syntactic dependency information, which achieved better performance. However, the drawback of these methods is that all dependencies are treated equally without weighting them according to their types. Linguistically, dependencies of different types differ in significance: some dependencies among words benefit the ABSA task, while others introduce noise that hurts model performance. As shown in Figure 1, the aspect “food” has dependencies with two other words, “the” and “delicious”. Obviously, “delicious” as the opinion word is more important for sentiment analysis of aspect “food”, while “the” does not carry explicit sentiment information. Thus, the dependency type “nsubj”, which means that “food” is a nominal subject of “delicious”, should be assigned more attention weight than “det”, which only means that “The” is a determiner of “food”. It can be seen that modeling dependency types properly is necessary for advancing the ABSA task. Meanwhile, the previous GCN-based models omitted the correlations between dependency types and POS labels. For example, after a comprehensive investigation of the datasets, we find that the important dependency type “nsubj” is frequently connected with adjective and noun labels such as “JJ”, “NN”, “NNS”, etc. Normally, there are many words in a sentence, but only a few of them are valuable for sentiment analysis of the aspect. After the investigation, we conclude that the POS of opinion words is typically an adjective or verb, because words with these POS usually carry clear sentiment information. So, incorporating dependency information and POS information is a promising way to improve the ABSA task.

Figure 1.

An example sentence with its dependency tree and POS labels.

To tackle the above limitations and improve model performance, we propose a novel model including three effective modules: SynFE, DPGCN and SemFE. Particularly, the main module DPGCN incorporates dependency information and POS information to weight different dependencies. SynFE and SemFE supplement syntactic and semantic features for aspects to further improve model performance.

Our contributions are summarized as follows:

- We propose to weight dependencies with different types in dependency trees according to their contribution to ABSA task by capturing the correlations between dependency types and POS labels.

- We propose DPGCN to weight different dependencies with a graph attention mechanism which incorporates dependency information and POS information. SynFE and SemFE are developed to extract more feature information for elevating model performance.

- We conduct extensive experiments on five benchmark datasets, and the results prove the effectiveness of our model.

2. Related Work

With the rapid development of deep learning, neural models have been widely applied to this task. Many early attention-based neural network models [1,2,3,4,5] achieved promising performance and attracted considerable attention. Ref. [1] proposed an attention-based LSTM which focuses on the key part of sentences to obtain contextual representations. Refs. [2,3] introduced a memory network with an attention mechanism to extract sentiment information related to aspects. Ref. [4] utilized a multi-grained attention mechanism to capture word-level interactions between aspects and contexts. Ref. [5] exploited the Attention over Attention network to learn aspect representations and sentence representations together. In addition, the pre-trained language model BERT [11] has achieved remarkable performance in a number of NLP tasks, including ABSA. Ref. [12] transformed the ABSA task into sentence–aspect pair classification and achieved excellent performance by fine-tuning the BERT model.

Early works overlooked the usefulness of syntactic knowledge for the ABSA task. To explicitly exploit syntactic dependency information, ref. [13] proposed an attention model with syntactic dependency information to obtain attention weights, and ref. [14] introduced syntactic relative distance to reduce the negative effects of words that are weakly related to aspects. The Graph Convolutional Network (GCN) [15] has achieved strong performance in many NLP tasks, including ABSA. Applying GCN-based models over dependency trees became a new trend, which produced several outstanding models. Refs. [6,7] applied GCN over dependency trees to capture the syntactic dependency information for aspects. Ref. [8] noticed the word co-occurrence information, building a hierarchical syntactic graph and lexical graph for graph convolution. Ref. [16] proposed a relational graph attention network (R-GAT) to encode the new dependency trees for sentiment analysis. Ref. [17] designed DualGCN including SynGCN and SemGCN to extract syntactic and semantic information for aspects, respectively.

3. Method

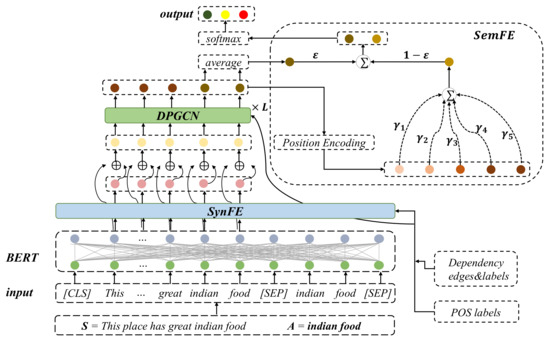

In this section, we elaborate the details of our proposed model. The overall structure of our model is shown in Figure 2, and the details of SynFE and DPGCN are depicted in Figure 3.

Figure 2.

The overall structure of our proposed model.

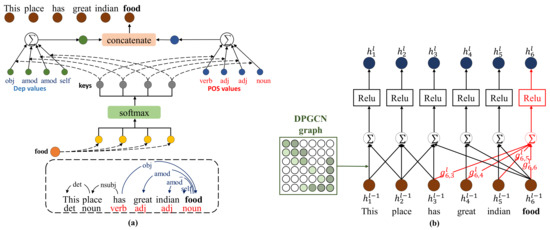

Figure 3.

The details of (a) SynFE and (b) DPGCN.

Our model mainly consists of three modules: (1) SynFE, which encodes the dependency information and POS information of the sentences to enrich word-level vector representations; (2) DPGCN, which captures the correlations between dependency types and POS labels to weight dependencies with different types; and (3) SemFE, which extracts semantic features from the overall sentence to supplement sentiment features for aspect representations. Each component will be presented in detail and analyzed for their contribution.

3.1. Problem Definition (ABSA)

Given an n-word review sentence with an m-word aspect in it, ABSA aims at identifying the sentiment polarity (i.e., positive, neutral or negative) of the given aspect in the sentence. If a sentence contains more than one aspect, our model processes the sentence several times, outputting the sentiment polarity of one aspect each time.
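The one-aspect-per-pass convention described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the `AbsaSample` container, the `expand_by_aspect` helper and the span convention (start inclusive, end exclusive) are hypothetical names and choices introduced only for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class AbsaSample:
    """One (sentence, aspect) pair; hypothetical container for illustration."""
    tokens: Tuple[str, ...]  # the n words of the sentence
    aspect_start: int        # index of the first aspect word
    aspect_end: int          # index one past the last aspect word (m = end - start)


def expand_by_aspect(tokens: List[str], spans: List[Tuple[int, int]]) -> List[AbsaSample]:
    """Create one sample per aspect so that each pass predicts one polarity."""
    return [AbsaSample(tuple(tokens), start, end) for start, end in spans]


sentence = "The food in this restaurant is delicious but the service is terrible".split()
samples = expand_by_aspect(sentence, [(1, 2), (9, 10)])
aspects = [" ".join(s.tokens[s.aspect_start:s.aspect_end]) for s in samples]
```

Here the two-aspect review from the Introduction yields two samples, one for “food” and one for “service”, each of which would be classified separately.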

3.2. Initial Embedding Module

The pre-trained language model BERT has the ability to provide word embeddings with rich feature information; thus, we construct a sentence–aspect pair as the input of BERT to initialize aspect-aware word vectors with the input form: “[CLS] sentence [SEP] aspect [SEP]”, where “[CLS]” is a symbol token for encoding the overall sentence-level representation, and “[SEP]” is a separator between the sentence and the aspect. The calculations in BERT are as follows:

where is the overall sentence-level representation, are the word-level representations of the sentence, where n is the number of words in one sentence and is the dimension of each word vector, and are the aspect representations. We only adopt , which contain aspect representations and contextual representations of the sentence.
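The input layout for the sentence–aspect pair can be sketched at the word level as follows. This is a simplified illustration under stated assumptions: it ignores WordPiece sub-word splitting, and `build_pair_input` and `sentence_slice` are hypothetical helper names, not part of any BERT library.

```python
from typing import List, Tuple


def build_pair_input(sentence: List[str], aspect: List[str]) -> Tuple[List[str], List[int]]:
    """Return tokens and segment ids for "[CLS] sentence [SEP] aspect [SEP]"."""
    tokens = ["[CLS]"] + sentence + ["[SEP]"] + aspect + ["[SEP]"]
    # Segment 0 covers [CLS], the sentence and the first [SEP]; segment 1 covers the rest.
    segment_ids = [0] * (len(sentence) + 2) + [1] * (len(aspect) + 1)
    return tokens, segment_ids


def sentence_slice(sentence_len: int) -> slice:
    """Positions of the n sentence words in the encoder output (skipping [CLS])."""
    return slice(1, 1 + sentence_len)


tokens, segments = build_pair_input("The food is delicious".split(), ["food"])
sentence_tokens = tokens[sentence_slice(4)]
```

Only the positions selected by `sentence_slice` correspond to the word-level sentence representations that the later modules consume; the position of “[CLS]” carries the sentence-level representation.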

3.3. Syntactic Feature Encoder (SynFE)

The quality of textual representations is critical to all NLP tasks. To enrich the features for word representations of aspects and contexts, we encode syntactic information (i.e., dependency information and POS information) and fuse them into word representations.

According to the structures of dependency trees, we construct a key–value network to learn syntax-aware representations. In detail, our module obtains dependency trees of the given sentences from an off-the-shelf NLP toolkit (i.e., StanfordCoreNLP). We map dependencies to key sets K, and dependency types and POS labels are mapped to dependency value sets and POS value set . As illustrated in Figure 1, each word has dependencies with other words. For (i.e., the i-th word in the sentence), we map the dependencies related to it and corresponding dependency types to a key set in K and a value set in , respectively. Each element in represents the weight for corresponding dependency; if there is no dependency between and . For , the element is the embedding vector for the corresponding dependency type. For example, in represents a -dimensional embedding vector for the dependency type between and . In particular, the type is denoted as “none” if there is no dependency between two words, while it is “self” between one word and itself. represent the embedding vectors of POS labels for words in the sentence. The embedding vectors in and are randomly initialized and trainable, but the weights in K are calculated by:

where T denotes vector transpose, if a dependency exists between and , and it is 0 otherwise, ∗ is element-wise multiplication, and and are the word representations of and from BERT. The syntactic feature representations for words are learnt by:

where and are the embedding vectors for the dependency type between and and the POS label of , and and are the dependency representation and POS representation for that provide beneficial syntactic features for word representation. Afterward, we incorporate them into the word representations from BERT by:

where refers to the output of the key-value network for , and ⊕ denotes vector concatenation. are updated word representations with syntactic features output by the key-value network.
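The key–value computation in SynFE can be sketched in plain Python as follows. This is a sketch under explicit assumptions, not the authors' exact formulation: the key weights are assumed to be a softmax over dot products of BERT vectors, masked to words that share a dependency (including the “self” relation), and the dependency and POS features are assumed to be weighted sums of the corresponding value embeddings. All function names and the toy numbers are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def key_weights(h: List[Vector], adj: List[List[int]], i: int) -> List[float]:
    """ASSUMED form: softmax over h_i . h_j, masked to words j linked to word i."""
    scores = [dot(h[i], h_j) for h_j in h]
    # Subtract the largest admissible score for numerical stability.
    peak = max(s for s, keep in zip(scores, adj[i]) if keep)
    exps = [math.exp(s - peak) if keep else 0.0 for s, keep in zip(scores, adj[i])]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: List[float], values: List[Vector]) -> Vector:
    """Combine value embeddings using the key weights."""
    return [sum(w * v[k] for w, v in zip(weights, values)) for k in range(len(values[0]))]


def enrich(h_i: Vector, dep_values: List[Vector], pos_values: List[Vector],
           weights: List[float]) -> Vector:
    """Concatenate the BERT vector with dependency and POS features."""
    return h_i + weighted_sum(weights, dep_values) + weighted_sum(weights, pos_values)


# Toy inputs: three words with 2-dimensional "BERT" vectors.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adj = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]  # includes self-loops for the "self" type
dep_values_0 = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.0, 0.0, 0.0]]  # "self", a real type, "none"
pos_values = [[1.0], [2.0], [3.0]]
w0 = key_weights(h, adj, 0)
x0 = enrich(h[0], dep_values_0, pos_values, w0)
```

The mask guarantees that a word with no dependency to word 0 receives a weight of exactly zero, so the “none” embedding never contributes to the enriched representation.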

3.4. Dependency-POS Weighted Graph Convolutional Network (DPGCN)

In a traditional GCN-based model, an adjacency matrix A is used to represent the structure of a dependency tree: A is a 0-1 matrix whose entry for a pair of words is 1 if there exists a dependency between them, and 0 otherwise. In order to make better use of syntactic dependency information, we propose a graph attention mechanism to construct DPGCN graph by combining dependency types and POS labels, where while . First, is denoted as the dependency type between and , and there is a trainable embedding vector for each type . Then, to alleviate noise introduced from POS, the POS labels of all words are divided into five categories (i.e., Nouns, Verbs, Adjectives, Adverbs and Others), where there is a POS mapping matrix corresponding to each POS category. DPGCN is an L-layer GCN-based module; each layer has a corresponding DPGCN graph , and all are calculated by:

where , and are hidden state representations for and output by the (l − 1)-th layer of DPGCN, l is the index of the current DPGCN layer, and denotes the dependency weight between and at the l-th layer.
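The grouping of POS labels into five categories can be sketched as follows. The exact tag-to-category assignment is not given in the text, so this mapping is an assumption based on Penn Treebank tag prefixes (the tag set produced by StanfordCoreNLP for English); `pos_category` is a hypothetical helper name.

```python
def pos_category(tag: str) -> str:
    """Map a Penn Treebank POS tag to one of five coarse categories (ASSUMED mapping)."""
    if tag.startswith("NN"):   # NN, NNS, NNP, NNPS
        return "Nouns"
    if tag.startswith("VB"):   # VB, VBD, VBG, VBN, VBP, VBZ
        return "Verbs"
    if tag.startswith("JJ"):   # JJ, JJR, JJS
        return "Adjectives"
    if tag.startswith("RB"):   # RB, RBR, RBS
        return "Adverbs"
    return "Others"            # determiners, prepositions, conjunctions, etc.


tags = ["DT", "NN", "VBZ", "JJ", "CC", "RB"]
categories = [pos_category(t) for t in tags]
```

Collapsing the fine-grained tags in this way keeps the number of POS-specific parameters small, which is consistent with the stated goal of reducing noise from POS labels.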

Based on DPGCN graph , DPGCN learns refined word representations (i.e., both aspect representations and contextual representations). First, from SynFE are fed into a feedforward network to obtain 768-dimensional hidden state representations as the input of DPGCN by:

where and are a trainable weight matrix and bias. Then, each DPGCN layer performs convolution as follows:

where and are the trainable weight matrix and bias in the l-th layer, is the ReLU activation function, and output by DPGCN represents the refined word representations for the aspect and contexts. In this way, DPGCN learns high-quality word representations by leveraging dependency trees more reasonably.
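A single weighted graph convolution of the kind described above can be sketched in plain Python. The precise layer equation is not recoverable from the text, so the form below is an assumption: each node sums its neighbors' linearly transformed states, scaled by real-valued dependency weights instead of 0-1 adjacency entries, then adds a bias and applies ReLU. The names and toy weights are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def matvec(w: Matrix, v: Vector) -> Vector:
    return [sum(w_rk * v_k for w_rk, v_k in zip(row, v)) for row in w]


def weighted_gcn_layer(h: List[Vector], a: Matrix, w: Matrix, b: Vector) -> List[Vector]:
    """ASSUMED form: h_i' = ReLU(sum_j a[i][j] * (W h_j) + b)."""
    transformed = [matvec(w, h_j) for h_j in h]
    out = []
    for i in range(len(h)):
        agg = [sum(a[i][j] * transformed[j][k] for j in range(len(h)))
               for k in range(len(b))]
        out.append([relu(x + b_k) for x, b_k in zip(agg, b)])
    return out


h0 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
a = [[0.7, 0.3, 0.0],   # row i holds the dependency weights of word i
     [0.2, 0.5, 0.3],
     [0.0, 0.4, 0.6]]
identity = [[1.0, 0.0], [0.0, 1.0]]
h1 = weighted_gcn_layer(h0, a, identity, [0.0, 0.0])
h2 = weighted_gcn_layer(h1, a, identity, [0.0, 0.0])  # stacking gives a two-layer module
```

With an identity weight matrix, each output row is simply the weighted average of the neighbors' inputs, which makes the effect of the dependency weights easy to inspect.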

3.5. Semantic Feature Extractor (SemFE)

To retrieve more sentiment features from the overall sentence for sentiment classification, we extract semantic features related to aspect upon contexts to generate aspect-oriented sentence representation.

Position Encoding: Linguistically, context words close to the aspect generally have a greater influence on the sentiment expressed toward the aspect, so position weights are defined as follows:

where dis denotes the distance from contexts to aspect; it is aspect itself when dis = 0, and we mask the word representations when dis > d to avoid introducing noises. Take this simple sentence “The served food is delicious” as an example; the position weights are set to when the aspect is “served food” and . The position-encoded word representations P are obtained by:

where is a word representation for from DPGCN. The final aspect-oriented sentence representation s containing abundant semantic information is generated by:

where is the aspect representation from DPGCN for the i-th aspect word, and m and n are the length of the aspect and sentence, respectively.
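The position encoding step can be sketched as follows. The distance computation and the masking rule (weights are zeroed when dis > d) follow the description above; the decay function itself is not recoverable from the text, so the linear decay `1 - dis / n` used here is purely an assumption, and both function names are hypothetical.

```python
from typing import List


def aspect_distances(n: int, start: int, end: int) -> List[int]:
    """Distance of each of the n words to the aspect span [start, end); 0 inside the span."""
    distances = []
    for i in range(n):
        if i < start:
            distances.append(start - i)
        elif i >= end:
            distances.append(i - end + 1)
        else:
            distances.append(0)
    return distances


def position_weights(n: int, start: int, end: int, d: int) -> List[float]:
    """ASSUMED linear decay 1 - dis / n for dis <= d; words with dis > d are masked to 0."""
    return [1.0 - dis / n if dis <= d else 0.0
            for dis in aspect_distances(n, start, end)]


words = "The served food is delicious".split()
dis = aspect_distances(len(words), 1, 3)          # aspect is "served food"
weights = position_weights(len(words), 1, 3, d=1)
```

For the example sentence, the aspect words get distance 0, their immediate neighbors distance 1, and “delicious” distance 2, so a threshold of d = 1 masks “delicious” while d = 2 would keep it.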

3.6. Model Training

We obtain the final aspect representation and incorporate it and aspect-oriented sentence representation to obtain final representation by:

where is a trainable coefficient. The final representation z is passed through a fully connected layer and a softmax activation function to obtain the probability distribution of sentiment polarity, and the label of highest probability is chosen as the sentiment polarity of the specific aspect by:

where u is the probability distribution, and and are the trainable weight matrix and bias.
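The classification step (a fully connected layer, a softmax, and an argmax over the three polarities) can be sketched in plain Python. The label order and the toy weights below are assumptions made for illustration; `classify` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

LABELS = ("positive", "neutral", "negative")  # label order is an assumption


def softmax(logits: List[float]) -> List[float]:
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(z: List[float], w: List[List[float]], b: List[float]) -> Tuple[str, List[float]]:
    """Apply a fully connected layer and softmax, then pick the most probable label."""
    logits = [sum(w_k * z_k for w_k, z_k in zip(row, z)) + b_c
              for row, b_c in zip(w, b)]
    u = softmax(logits)
    return LABELS[u.index(max(u))], u


label, u = classify([1.0, -1.0],
                    [[2.0, 0.0], [0.0, 0.0], [0.0, 2.0]],
                    [0.0, 0.0, 0.0])
```

In this toy call the logits are 2, 0 and −2, so the first label receives the highest probability.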

The model is trained by using the standard stochastic gradient descent to minimize the cross-entropy loss of sentiment classification. The loss function is formulated as:

where N is the number of training samples, is the prediction for the sentiment polarity of aspect, is the target label, represents all trainable parameters, and is the L2 regularization coefficient.
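The training objective (cross-entropy plus an L2 penalty on the trainable parameters) can be sketched as follows. Whether the cross-entropy is summed or averaged over the N samples is not recoverable from the text; the summed form below is an assumption, and `absa_loss` is a hypothetical name.

```python
import math
from typing import List


def absa_loss(probs: List[List[float]], targets: List[int],
              params: List[float], l2_coeff: float) -> float:
    """Summed cross-entropy over samples plus l2_coeff * ||params||^2 (ASSUMED form)."""
    cross_entropy = -sum(math.log(p[t]) for p, t in zip(probs, targets))
    l2_penalty = l2_coeff * sum(w * w for w in params)
    return cross_entropy + l2_penalty


# One sample whose gold label (index 0) is predicted with probability 0.5.
loss = absa_loss([[0.5, 0.25, 0.25]], [0], params=[1.0, 2.0], l2_coeff=0.001)
```

The penalty term grows with the squared magnitude of the parameters, so a larger coefficient trades training fit for smaller weights.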

4. Experiments

4.1. Datasets

For the experiments, five benchmark datasets are adopted for the ABSA task to evaluate our model, including the Twitter dataset constructed by [18] and another four datasets all from the SemEval tasks [19,20,21], which are user reviews related to computers and restaurants. The samples in these datasets contain a given sentence, a specific aspect and the sentiment polarity (i.e., positive, neutral or negative) toward the aspect. The information of these five datasets is shown in Table 1.

Table 1.

The statistics of datasets.

4.2. Experimental Settings

In our experiments, the syntactic dependency trees and POS labels of the given sentences are constructed using the StanfordCoreNLP toolkit (https://stanfordnlp.github.io/CoreNLP/, accessed on 1 August 2022). The given sentences are encoded by the pre-trained BERT model (obtained from https://github.com/huggingface/pytorch-pretrained-BERT, accessed on 1 August 2022) to obtain 768-dimensional initialized word-embedding vectors for each word. In addition, the dimensions of dependency-embedding vectors and POS-embedding vectors are set to 100 and 50, respectively. For the parameter settings, BERT is initialized with pre-trained parameters, while other trainable parameters are initialized by Xavier [22]. The loss function of the model training is the cross-entropy function, and the Adam optimizer is adopted. For key hyper-parameter settings, the batch size is 16, the learning rate is , the L2 regularization coefficient is 0.001, and the dropout rate is 0.1. Our model is evaluated by both accuracy and macro-averaged F1 score.
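The two evaluation metrics can be computed as in the following sketch. It is a generic illustration of accuracy and macro-averaged F1 with hypothetical function names and toy labels, not the authors' evaluation script; a class with no true or predicted instances is given an F1 of 0 here, which is one common convention.

```python
from typing import List, Sequence


def accuracy(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Fraction of samples whose predicted label equals the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold: Sequence[str], pred: Sequence[str], labels: Sequence[str]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    scores: List[float] = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


gold = ["positive", "positive", "negative", "neutral"]
pred = ["positive", "negative", "negative", "neutral"]
acc = accuracy(gold, pred)
f1 = macro_f1(gold, pred, ["positive", "neutral", "negative"])
```

Because macro-F1 averages over classes rather than samples, it penalizes poor performance on the less frequent neutral class more strongly than accuracy does.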

4.3. Baselines

To verify the effectiveness of our model, we compare it with the following state-of-the-art models:

IAN [23] proposes an interactive attention network based on LSTM and attention mechanism to generate representations for aspects and sentences.

MGAN [4] designs a novel multi-grained attention network model to capture interactions between the aspects and contexts.

ASGCN [6] first proposes leveraging GCN over dependency trees to learn aspect-specific representations for the ABSA task.

CDT [7] utilizes GCN over dependency trees to extract syntactic information for aspect representations.

BIGCN [8] constructs a syntactic graph and lexical graph for GCN to leverage word co-occurrence information and syntactic information.

BERT [11] is the vanilla BERT, which adopts “[CLS] sentence [SEP] aspect [SEP]” as input and uses the representation of [CLS] for predictions.

TD [24] implements a target-dependent BERT-based model with positioned output at the target terms and an optional sentence for the ABSA task.

R-GAT [16] employs a relational graph attention network to exploit syntactic structure information.

DGEDT [25] considers the flat representations and graph-based representations jointly to alleviate the noise and instability of dependency trees.

LCFS [14] models contextual and syntactical features for the ABSA task.

TGCN [26] encodes dependency types and integrates the representations from all GCN layers to learn aspect representations.

DualGCN [17] designs SynGCN and SemGCN to learn aspect representations by considering syntax structures and semantic correlations simultaneously.

4.4. Results and Analysis

Comparisons of all model performance are presented in Table 2. Our model outperforms the previous models on the four SemEval datasets, but its performance on the Twitter dataset is lower than that of DualGCN and DGEDT, because comments on Twitter are informal and colloquial and are therefore less sensitive to syntactic information. The comparisons show that our model surpasses many state-of-the-art GCN-based models, indicating that it can better utilize syntactic dependency information and that extracting sufficient syntactic and semantic features for sentiment analysis is helpful for model performance.

Table 2.

The results of comparisons.

Compared with the attention-based models IAN and MGAN, our model avoids the noise introduced by the ordinary attention mechanism. Compared with the GCN-based models utilizing dependency information, such as ASGCN, CDT, R-GAT, TGCN and DualGCN, our model combines dependency types and POS labels to weight dependencies with different types according to their contribution to the ABSA task, which better exploits syntactic dependency information.

4.5. Ablation Study

To explore the significance of each module, we conduct a series of ablation experiments, where the results are shown in Table 3. The findings are listed as follows:

Table 3.

The results of ablation study.

(1) The results decrease after removing the SynFE module, which indicates that the SynFE module can effectively encode the syntactic information in the sentences to enrich textual features. (2) If dependency information (D) or POS information (P) is removed from DPGCN separately, the results decrease slightly, but they decrease sharply when both are removed simultaneously. This shows that weighting dependencies of different types in DPGCN has a great impact on model performance, and that both (D) and (P) contribute to it. (3) For the SemFE module, similarly, the results demonstrate that position encoding helps to avoid introducing noise, and that the aspect-oriented sentence representation supplements semantic features for better performance. Overall, each component contributes to the model performance.

4.6. Case Study

To illustrate the effectiveness of our model, we randomly select sample data from the test set for a case analysis.

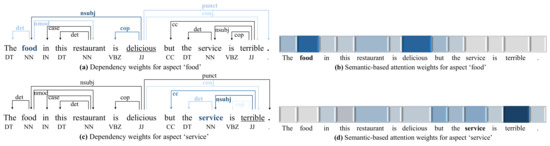

4.6.1. Weights Visualization

As can be seen in Figure 4a, for the aspect “food”, linguistically, the opinion word “delicious” leads to the sentiment polarity of “food”; our model has learnt to assign higher weight to dependency type “nsubj” between “food” and “delicious”. The dependency type “conj” between “delicious” and “terrible” may cause a negative effect for aspect “food”; our model reduces the dependency weight for “conj” denoting the relation between two elements connected by a coordinating conjunction. In addition, other secondary dependencies are assigned lower weights. In Figure 4b, under the guidance of DPGCN, aspect “food” has higher semantic-based attention weight with opinion word “delicious”.

Figure 4.

Visualization results of weights (darker color denotes higher weight).

Similarly, as seen in Figure 4c,d, vital dependencies are assigned higher weights; thus, aspect “service” aggregates feature information from important context words, which explains why “service” has higher semantic-based attention weights with them. In particular, our model notices the semantic reversal expressed by “but”, whose dependency type “cc” stands for a coordination relation.

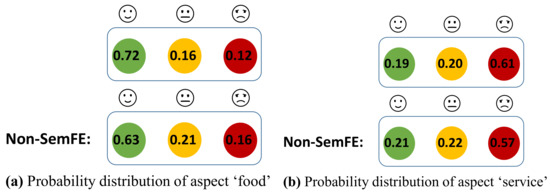

4.6.2. Probability Distribution Visualization

As shown in Figure 5, the SemFE module increases the probabilities of the true sentiment polarities for aspects “food” and “service”. Because BERT is pre-trained on large amounts of text, it can provide text embeddings containing rich semantic features. Thus, “delicious” is encoded with positive sentiment features and “terrible” with negative sentiment features. By generating the aspect-oriented sentence representation s, SemFE is able to supplement the corresponding sentiment features for aspects. For aspect “food”, the context words “service” and “terrible” carrying negative sentiment features are masked by position encoding, and the positive sentiment features from “delicious” are incorporated into s, which makes our model predict the sentiment polarity of “food” more accurately. Similarly, for “service”, although it is disturbed by “delicious”, “service” has a higher semantic weight with “terrible”, so s can also provide correct sentiment features for “service”.

Figure 5.

Visualization results of probability distributions.

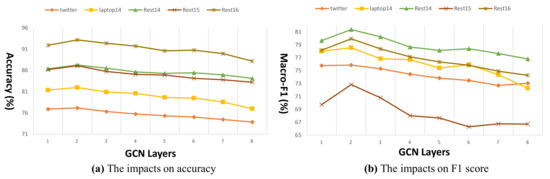

4.7. Impacts of DPGCN Layer Number

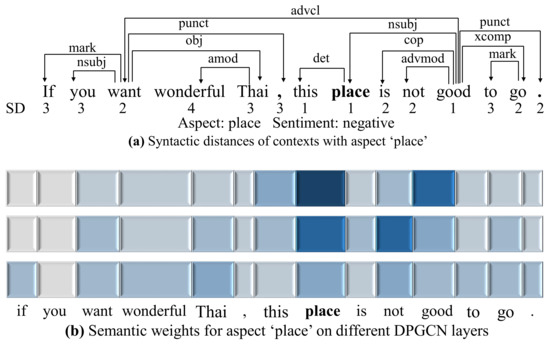

An appropriate layer number L for DPGCN is also beneficial to model performance, so the impacts of different L values are explored, and the results are shown in Figure 6. The accuracy and F1 score on all datasets are highest when L = 2, and we analyze this phenomenon as follows. As described in Figure 7a, aspect “place” has different syntactic distances (SD) to its context words; through one DPGCN layer, each word node aggregates feature information from neighboring word nodes (i.e., SD = 1), so aspect “place” only captures feature information from context words with SD = 1 when L = 1. Obviously, “good” misleads the sentiment analysis for “place”; a two-layer DPGCN makes “place” capture vital sentiment features from the context word “not”. However, if L = 3 or more, it will introduce irrelevant feature information such as “Thai” and “wonderful”, which carry positive sentiment features.

Figure 6.

Impacts of the layer number L.

Figure 7.

Syntactic distances and semantic weights for aspect ‘place’.

As described in Figure 7b, when L = 3, aspect “place” has high semantic weights (from SemFE) on itself and “good” after the 1st DPGCN layer. After the 2nd DPGCN layer, the semantic weight on “good” decreases while that on “not” increases. After the 3rd DPGCN layer, the distribution of semantic weights over the context words becomes dispersed, so it is difficult for aspect “place” to focus on vital information, and much irrelevant information is introduced.

In conclusion, our model is unable to capture sufficient contextual feature information for aspects when L = 1, but it may introduce much more irrelevant information that damages model performance when L = 3 or more. Therefore, L = 2 is favorable for our model.
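The link between the number of layers and syntactic distance can be sketched with a breadth-first search over the dependency graph: a stack of k graph-convolution layers lets a node aggregate information only from nodes within k hops. The edge list below is a hypothetical toy tree, not the parse shown in Figure 7, and the function names are illustrative.

```python
from collections import deque
from typing import Dict, List, Set, Tuple


def syntactic_distances(edges: List[Tuple[str, str]], source: str) -> Dict[str, int]:
    """Hop counts from `source` over the undirected dependency graph (breadth-first search)."""
    graph: Dict[str, List[str]] = {}
    for u, v in edges:
        graph.setdefault(u, []).append(v)
        graph.setdefault(v, []).append(u)
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def receptive_field(edges: List[Tuple[str, str]], source: str, num_layers: int) -> Set[str]:
    """Words whose features can reach `source` after `num_layers` graph convolutions."""
    dist = syntactic_distances(edges, source)
    return {word for word, d in dist.items() if d <= num_layers}


# Hypothetical toy dependency edges (head, dependent) with unique word forms.
toy_edges = [("delicious", "food"), ("food", "The"),
             ("delicious", "terrible"), ("terrible", "service")]
sd = syntactic_distances(toy_edges, "food")
one_layer = receptive_field(toy_edges, "food", 1)
two_layers = receptive_field(toy_edges, "food", 2)
```

In the toy tree, one layer exposes “food” to its direct neighbors only, while a second layer additionally brings in “terrible”, which illustrates how extra layers widen the context and can eventually admit irrelevant words.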

5. Conclusions

In this paper, we propose a novel model containing three effective modules (i.e., SynFE, DPGCN and SemFE) for the ABSA task. To address the limitations of previous works in utilizing syntactic dependency information, we propose to weight dependencies with different types according to their contribution to the ABSA task, and the DPGCN module is designed to combine dependency information and POS information to leverage syntactic dependency information more reasonably and comprehensively. To further improve the model performance, SynFE is designed to encode syntax-aware features to enrich word representations, and SemFE is designed to extract aspect-oriented semantic information that supplements sentiment features for sentiment classification.

Extensive experiments are conducted on five benchmark datasets to demonstrate the validity of our model, and the results show that our model achieves new state-of-the-art performance. Compared to other outstanding models, our model achieves a higher accuracy and F1 score. The ablation experiments show that each module in our model contributes to the model performance, and the case study demonstrates that DPGCN has learnt to assign appropriate weights to dependency types, which overcomes the shortcomings of previous models in utilizing dependency trees. In addition, we have analyzed how SemFE assists sentiment prediction and explored the effects of the number of DPGCN layers on the model performance.

Author Contributions

Conceptualization, J.Y. and Y.X.; methodology, J.Y.; formal analysis, J.Y. and A.D.; writing—original draft preparation, J.Y.; writing—review and editing, X.L. and B.Z.; supervision, Y.X. and B.Z.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Science and Technology Plan Project of Guangzhou under Grant Nos. 202102080258 and 202102020902.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Wang, Y.; Huang, M.; Zhu, X.; Zhao, L. Attention-based LSTM for aspect-level sentiment classification. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 606–615.

2. Tang, D.; Qin, B.; Liu, T. Aspect level sentiment classification with deep memory network. arXiv 2016, arXiv:1605.08900.

3. Chen, P.; Sun, Z.; Bing, L.; Yang, W. Recurrent attention network on memory for aspect sentiment analysis. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 452–461.

4. Fan, F.; Feng, Y.; Zhao, D. Multi-grained attention network for aspect-level sentiment classification. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3433–3442.

5. Huang, B.; Ou, Y.; Carley, K.M. Aspect level sentiment classification with attention-over-attention neural networks. In Proceedings of the International Conference on Social Computing, Behavioral-Cultural Modeling and Prediction and Behavior Representation in Modeling and Simulation, Washington, DC, USA, 10–13 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 197–206.

6. Zhang, C.; Li, Q.; Song, D. Aspect-based sentiment classification with aspect-specific graph convolutional networks. arXiv 2019, arXiv:1909.03477.

7. Sun, K.; Zhang, R.; Mensah, S.; Mao, Y.; Liu, X. Aspect-level sentiment analysis via convolution over dependency tree. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5679–5688.

8. Zhang, M.; Qian, T. Convolution over hierarchical syntactic and lexical graphs for aspect level sentiment analysis. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 3540–3549.

9. Chen, C.; Teng, Z.; Zhang, Y. Inducing target-specific latent structures for aspect sentiment classification. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 5596–5607.

10. Liang, B.; Yin, R.; Gui, L.; Du, J.; Xu, R. Jointly learning aspect-focused and inter-aspect relations with graph convolutional networks for aspect sentiment analysis. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 150–161.

11. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

12. Sun, C.; Huang, L.; Qiu, X. Utilizing BERT for aspect-based sentiment analysis via constructing auxiliary sentence. arXiv 2019, arXiv:1903.09588.

13. Nguyen, H.T.; Le Nguyen, M. Effective attention networks for aspect-level sentiment classification. In Proceedings of the 2018 10th International Conference on Knowledge and Systems Engineering (KSE), Ho Chi Minh City, Vietnam, 1–3 November 2018; pp. 25–30.

14. Phan, M.H.; Ogunbona, P.O. Modelling context and syntactical features for aspect-based sentiment analysis. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3211–3220.

15. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

16. Wang, K.; Shen, W.; Yang, Y.; Quan, X.; Wang, R. Relational graph attention network for aspect-based sentiment analysis. arXiv 2020, arXiv:2004.12362.

17. Li, R.; Chen, H.; Feng, F.; Ma, Z.; Wang, X.; Hovy, E. Dual graph convolutional networks for aspect-based sentiment analysis. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing; Volume 1: Long Papers; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 6319–6329.

18. Dong, L.; Wei, F.; Tan, C.; Tang, D.; Zhou, M.; Xu, K. Adaptive Recursive Neural Network for Target-dependent Twitter Sentiment Classification. In Proceedings of the ACL, Baltimore, MD, USA, 22–27 June 2014; pp. 49–54.

19. Pontiki, M.; Papageorgiou, H.; Galanis, D.; Androutsopoulos, I.; Pavlopoulos, J.; Manandhar, S. SemEval-2014 Task 4: Aspect Based Sentiment Analysis. SemEval 2014, 2014, 27.

20. Pontiki, M.; Galanis, D.; Papageorgiou, H.; Manandhar, S.; Androutsopoulos, I. Semeval-2015 task 12: Aspect based sentiment analysis. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Denver, CO, USA, 4–5 June 2015; pp. 486–495.

21. Pontiki, M.; Galanis, D.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S.; Al-Smadi, M.; Al-Ayyoub, M.; Zhao, Y.; Qin, B.; De Clercq, O.; et al. Semeval-2016 task 5: Aspect based sentiment analysis. In Proceedings of the International Workshop on Semantic Evaluation, San Diego, CA, USA, 16–17 June 2016; pp. 19–30.

22. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

23. Ma, D.; Li, S.; Zhang, X.; Wang, H. Interactive attention networks for aspect-level sentiment classification. arXiv 2017, arXiv:1709.00893.

24. Gao, Z.; Feng, A.; Song, X.; Wu, X. Target-dependent sentiment classification with BERT. IEEE Access 2019, 7, 154290–154299.

25. Tang, H.; Ji, D.; Li, C. Dependency graph enhanced dual-transformer structure for aspect-based sentiment classification. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6578–6588.

26. Tian, Y.; Chen, G.; Song, Y. Aspect-based Sentiment Analysis with Type-aware Graph Convolutional Networks and Layer Ensemble. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 2910–2922.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).