1. Introduction

Although there are varying definitions of mindfulness, there is near consensus that the capacity for non-distraction represents a key component [1,2]. Mindfulness training is often characterized as a way of systematically cultivating attentional control [3]. A large and growing body of evidence indicates that training attention through mindfulness can elicit a range of social, cognitive, affective, and physical benefits [4].

Based in part on this accumulating evidence base, scientists, educators, and policy makers have taken considerable interest in the role that mindfulness might play in educational settings. Research suggests that mindfulness training can benefit children as young as 5 years old, as well as elementary, middle, and high school students [5,6]. Some experts posit that mindfulness may be especially helpful for older students because of the stronger metacognitive and abstract thinking skills that develop during adolescence [7,8]. Consistent with this view, numerous studies show that mindfulness training can help to address the serious and escalating problems of distraction, stress, and mental illness among adolescents [6,9,10].

Mindfulness training programs for students typically involve in-person instruction offered by trained mindfulness teachers [11]. However, there are logistical and financial challenges involved in training fully qualified mindfulness teachers and ensuring the effective delivery of a standardized intervention [12]. These challenges make it difficult for schools to guarantee that their students are receiving evidence-based training [13].

In recent years, numerous digital mindfulness-based interventions (d-MBIs) have emerged, presenting an unprecedented opportunity to share mindfulness training at scale. In contrast to in-person approaches, d-MBIs leverage technology to deliver training through digital media such as websites and mobile apps. There is already preliminary evidence that d-MBIs can be feasibly implemented with younger grade levels and elicit meaningful benefits for young students [14]. However, the feasibility and effectiveness of d-MBIs in high school settings have received almost no attention [15]. Given the developmental and cultural differences between older and younger students, combined with the well-established challenges of delivering interventions to diverse students in school settings [16], research is needed to assess the viability of d-MBIs in high schools.

In principle, a digital approach to mindfulness training has at least four key advantages. First, d-MBIs can reduce geographical, logistical, and financial constraints that would otherwise prevent access to training. Second, a digital approach allows key elements of course content and presentation to be standardized, so that all students receive the same high-quality instruction [17,18]. Third, digital training can provide content that is personalized to the abilities, interests, and values of individual students, which can heighten both engagement and learning [19,20]. Fourth, although one might assume that in-person instruction would elicit better outcomes, research suggests that well-designed digital training can elicit equal or even greater benefits [19,21,22,23].

However, the advantages of d-MBIs depend on effective design and execution, which is challenging to achieve. Of the numerous mindfulness apps that now exist, most fail to apply best practices in digital learning and have received only modest ratings for engagement, functionality, and information quality [24]. These shortcomings are likely to be especially problematic in school settings, where students do not self-select into the intervention based on pre-existing interest and motivation. In one study in which students were assigned to complete a d-MBI, only 1 of 85 students completed the course (with completion defined as doing at least 40 of the 96 exercises) [15]. To be successful, d-MBIs must creatively apply best practices in digital learning, including: (i) tailoring instruction to a well-defined audience, (ii) applying best practices in educational psychology to ensure effective learning, (iii) addressing audience diversity through personalization of program content, (iv) designing content that maximizes student engagement, and (v) anticipating and addressing common challenges [25].

The primary research question (RQ1) of the present research was whether a digital mindfulness course designed specifically for implementation in high schools could be feasibly delivered in a school setting. Although the intervention is still under active development, the secondary research question (RQ2) was whether the intervention would have beneficial outcomes for students. Based on prior research showing the benefits of mindfulness training for adolescents [10], we predicted the intervention would elicit improvements in focus, emotion regulation, and stress management if it could be implemented with a reasonably high level of fidelity. As described below, we also predicted improvements in classroom focus specifically for students who self-assessed at pretest as not paying attention in class as much as they should.

2. Materials and Methods

Research Design. This feasibility study used a one-group pre–post design. The research was approved by the Human Subjects Committee at the University of California Santa Barbara, and informed consent was obtained from all students and their guardians.

Procedure. Students completed anonymous online surveys before and after the course. Intervention adherence—operationalized as completion rates for course lessons and exercises—was recorded by the digital learning platform.

Participants. The sample consisted of students at a public high school outside a large West Coast city in the United States. The school uses a lottery system for enrollment and operates as a magnet program with an emphasis on STEM education. A teacher at the school learned about the mindfulness-based attention training intervention at an academic conference and requested additional information. She then presented the course at a faculty meeting. Six teachers expressed interest in sharing the course with their students. In total, the course was shared with 346 students after spring break and before final exams. All freshmen completed the course during required Graphic Production and Entrepreneurism classes. Students in grades 10–12 completed the course in Computer Science, AP Physics, AP Psychology, and Special Education classes.

Teachers who were facilitating the course were encouraged to invite their students to participate in the research surveys. A total of 317 students completed the pretest. Teachers were repeatedly asked to share the post-test survey with every student who completed the pretest, regardless of course adherence. A total of 214 students completed the post-test. This attrition was largely driven by two teachers who chose not to administer the post-test to their combined 82 students (the post-test would have coincided with final exams). Because of time constraints, these teachers chose to stop facilitating the course shortly after beginning it. Adherence data from these students were still available and are included in relevant analyses.

Pretest and post-test surveys were linked using anonymous codes generated by students. Due to confusion or typos, these codes failed to match for multiple data entries. We only included entries that were clearly the correct match from pretest to post-test, leaving a final sample of 190 students. Two students did not provide demographic information. There were 114 freshmen, five sophomores, four juniors, and 65 seniors. Participants were asked what gender they identified with, and 106 said male, 78 said female, one said nonbinary, and three preferred not to say. The number of students identifying with specific races was as follows: African American/Black—3 students; Asian—94; Caucasian—71; American Indian/Alaskan Native—2; Native Hawaiian or Other Pacific Islander—1; Mix of two or more races—17. Six students identified as Hispanic.

Intervention. Students received mindfulness-based attention training via a 22-day digital course. The course was delivered through a custom digital learning platform developed at the University of California Santa Barbara that allowed students to access the course on computers, tablets, or phones. The entire course included 2.25 h of content, including four 12-min lessons and daily 4-min exercises. Teachers were encouraged to have students complete the lessons and at least some of the daily exercises during class.

A key objective of the course was to help students to train their ability to focus. Students also learned how to use attention to relate more effectively to thoughts, evaluations, and emotions.

The course lessons presented three fundamental skills: anchoring, focusing, and releasing. Anchoring was defined as deciding where you focus. Focusing was defined as directing your attention to a specific thing. Releasing was defined as letting something go by not giving it any more attention.

These three fundamental skills were trained through daily exercises. For example, students were invited to anchor their attention on a song, focus on the sounds, and release all distracting thoughts and perceptions.

Students also learned how to use these three skills in daily life by applying specific strategies like re-focusing (releasing a counterproductive thought and anchoring attention on something more worthwhile) and re-evaluating (releasing an unhelpful evaluation and focusing on a more empowering one).

The digital learning platform tailors content to the interests of individual users. For example, students indicated their preferred music genre and then received daily exercises in this genre. Each student completed the intervention independently using headphones on a computer, tablet, or phone. The lessons were completed during class time, and each teacher decided independently whether daily exercises were completed in class or on students’ own time. The platform also provided teachers with an interface to track student progress throughout the course.

Measures. Validated self-report instruments were used whenever possible. In cases where no validated instrument existed to address the specific research question of interest, researcher-developed measures were used. All of these measures were written to maximize face validity using vocabulary that is appropriate for adolescents. Qualitative data were also collected to assess (i) students’ initial reactions to the idea of completing the course and (ii) students’ perceived benefits after completing the course. The order of instruments was randomized.

Emotion Regulation. The Emotion Regulation Questionnaire for Children and Adolescents (ERQ-CA) is a version of the Emotion Regulation Questionnaire that is adapted to be more appropriate for ages 10–18 [26,27]. This scale consists of two subscales assessing cognitive reappraisal (“I control my feelings about things by changing the way I think about them”) and expressive suppression (“When I’m feeling bad (e.g., sad, angry, or worried), I’m careful not to show it”). Given ambiguity regarding the appropriateness of expressive suppression as a healthy strategy for emotion regulation, only the cognitive reappraisal subscale was included.

Life Satisfaction. The Brief Multidimensional Students’ Life Satisfaction Scale (BMSLSS) is a measure of student life satisfaction across five domains: family life, friendships, school experience, oneself, and where one lives [28]. Students indicated their level of satisfaction with each of these domains on a scale from 1 (very dissatisfied) to 5 (very satisfied).

Mind-Wandering in Daily Life. The Mind-Wandering Questionnaire (MWQ) is a 5-item instrument measuring trait levels of mind wandering (“I find myself listening with one ear, thinking about something else at the same time”). The MWQ has been validated with both adults and adolescents [29].

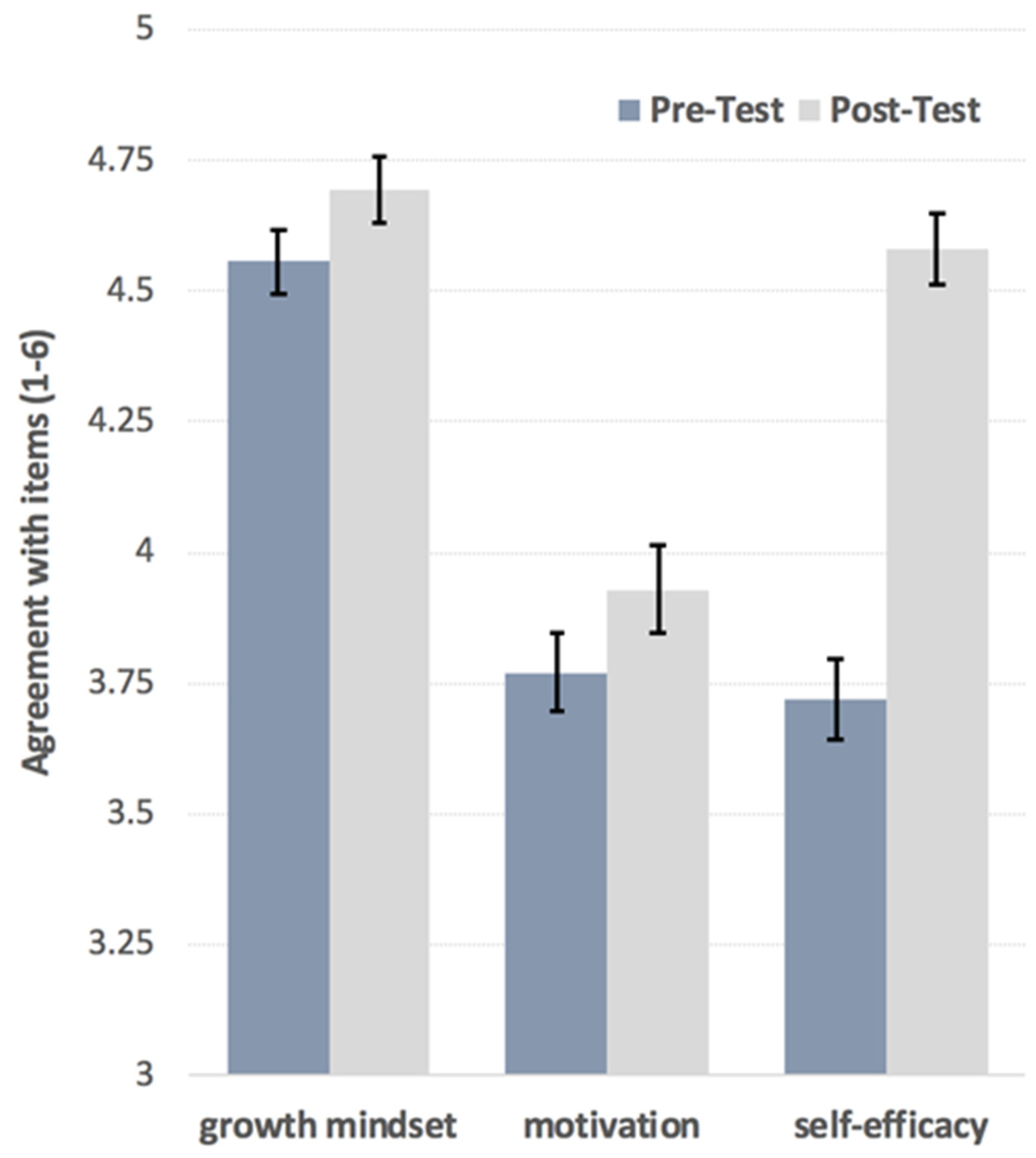

Mindsets about Focus. The Mindsets about Focus Scale is a 9-item measure developed in previous unpublished research by our team. The instrument evaluates whether an individual (i) believes their ability to focus can improve through training (growth mindset subscale), (ii) is motivated to train their ability to focus (motivation subscale), and (iii) is confident that they know how to train their attention (self-efficacy subscale).

Life Demands. Current demands were assessed by adapting an existing 1-item measure [30]. The item asked, “Over the last 7 days, how much have you had on your plate to deal with (e.g., homework, exams, managing relationships, extracurricular commitments, health challenges, etc.)?” on a five-point scale from 1 (way less than usual) to 5 (way more than usual).

Stress and Stress Management. Two questions were used to capture the distinction between experiencing stress and effectively managing that stress. Participants first responded to the question, “Over the last two weeks, how stressed have you been?” on a scale from 0–100 where higher values indicated more stress. Participants then responded to the question, “Over the last two weeks, how well have you managed your stress?” on a scale from 0–100 where higher values indicated better stress management.

Classroom Distraction. Relatively few students believe that they should always pay attention in class, so two questions were used to capture the distinction between how much a student reports paying attention and how much they feel they ideally should pay attention. The first question asked, “On average across all your classes, how often do you keep your undivided attention focused on class?” The second question was stated as follows: “This next question is NOT about what other people think you should do. It’s about what you believe is best for yourself. On average across all your classes, how often would you ideally keep your undivided attention focused on class?” Both questions were answered on a scale from 0% of the time to 100% of the time.

Initial Reactions and Perceived Benefits. Two open-ended questions were included at post-test. The first question assessed students’ initial reaction to the idea of completing the course before they had actually started it (“What was your initial reaction when your teacher told you that you would be doing this course?”). The second question examined perceived benefits of the course (“How did this course help you?”). Students were also asked to respond yes or no to the question: “Do you think taking this course improved your ability to focus?”

Data Analysis Overview. The feasibility of the intervention was evaluated as the percentage of course lessons and exercises that students completed. Student outcomes were assessed in a preliminary way using the remaining quantitative and qualitative measures. Paired t-tests were used to examine changes in quantitative data from pretest to post-test. Qualitative data were analyzed using a standard inductive coding approach. Three members of the research team first read through all responses to identify data-driven themes [31,32]. Following widely used recommendations, seven themes were identified and translated into a coding scheme [33,34,35]. Next, a separate team of three coders scored all qualitative responses on the identified themes. Interrater reliability, assessed with Cronbach’s alpha, was sufficient for all themes (all Cronbach’s alphas greater than 0.76). We also calculated Krippendorff’s alpha, which some consider a superior reliability statistic for content analysis [36]. Reliability was sufficient for the large majority of themes. However, 4 of 14 themes had only moderate reliability (Krippendorff’s alphas between 0.58 and 0.65), suggesting that findings for those themes should be interpreted as less definitive (Table 1). Finally, a frequency score for each theme was calculated by (1) counting all responses for which a majority of coders agreed that the theme was present and (2) dividing that count by the number of participants who answered the question.
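The two coding statistics described above can be sketched in Python. This is an illustration, not the authors’ analysis code: it assumes each answered response is stored as a list of 0/1 codes for one theme (one code per coder, no missing codes), and the function names are hypothetical. The alpha function implements the standard nominal form of Krippendorff’s alpha via a coincidence matrix; the software the study actually used is not stated.

```python
from collections import Counter
from itertools import permutations


def majority_frequency(codes_per_response):
    """Share of answered responses in which most coders marked the theme present.

    codes_per_response: one list of 0/1 codes (one per coder) for each
    participant who answered the question.
    """
    if not codes_per_response:
        raise ValueError("no responses to score")
    agreed = sum(1 for codes in codes_per_response if sum(codes) > len(codes) / 2)
    return agreed / len(codes_per_response)


def krippendorff_alpha_nominal(codes_per_response):
    """Nominal Krippendorff's alpha computed from a coincidence matrix.

    Assumes every response was coded by the same coders with no missing codes.
    """
    coincidences = Counter()
    for codes in codes_per_response:
        m = len(codes)
        if m < 2:
            continue  # a unit needs at least two codes to form pairs
        # Every ordered pair of codes from two different coders, weighted 1/(m-1).
        for a, b in permutations(codes, 2):
            coincidences[(a, b)] += 1 / (m - 1)
    # Marginal total n_c for each code value, and the grand total n.
    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    disagreements = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(totals[a] * totals[b] for a in totals for b in totals if a != b)
    if expected == 0:
        raise ValueError("alpha is undefined when every code is identical")
    return 1 - (n - 1) * disagreements / expected


# Hypothetical codes from three coders for one theme (not study data).
theme_codes = [[1, 1, 0], [0, 0, 0], [1, 1, 1], [0, 1, 0], [1, 1, 1]]
print(majority_frequency(theme_codes))  # 0.6
print(krippendorff_alpha_nominal(theme_codes))
```

Cronbach’s alpha, the other reliability statistic reported, is not shown here.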

3. Results

RQ1. Feasibility of the intervention.

Initial reactions to the idea of the course. Prior to beginning the course, students had varied reactions to the idea of completing it (Table 1). The majority of reactions were positive. However, a considerable number of students had negative reactions, suggesting that the initial presentation of the course is an important factor to consider.

Adherence. A total of 77% of students reported that their teacher set a clear expectation that they should complete the lessons and daily exercises. Only 47% of students said that they were given some sort of credit for completing the course.

The digital learning platform recorded completion of lessons and daily exercises for all students who created accounts regardless of whether they completed the pretest or post-test. Due to the anonymous nature of the survey data, adherence data could not be linked to survey data. On average, 80% of lessons were completed (91% for lesson 1; 82% for lesson 2; 76% for lesson 3; 69% for lesson 4). Students completed 77% of the daily exercises.
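The reported 80% average can be checked against the four per-lesson rates. The minimal Python sketch below assumes that every lesson rate shares the same denominator (all students with accounts), in which case the unweighted mean of the rates equals the overall share of lessons completed; if the denominators differed, a weighted mean would be needed. The function name is hypothetical.

```python
def mean_completion(rates_by_lesson):
    """Unweighted mean of per-lesson completion rates (percent)."""
    return sum(rates_by_lesson.values()) / len(rates_by_lesson)


# Per-lesson completion rates reported in the Results (percent).
lesson_completion = {1: 91, 2: 82, 3: 76, 4: 69}
average = mean_completion(lesson_completion)
print(f"Average lesson completion: {average:.1f}%")  # 79.5%, reported as 80%
```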

Table 2 presents completion rates by group. In some cases, multiple classrooms were facilitated as a single group within the digital learning platform, preventing breakdown of adherence data by classroom.

RQ2. Preliminary assessment of student outcomes.

Perceived benefits. Seven themes were identified in students’ descriptions of how the course helped them (Table 1). The most common benefits were greater relaxation and increased focus.

Demands and stress. From pretest to post-test, students reported substantially higher levels of demands and a marginal increase in stress (Table 3), which is consistent with prior research documenting increased demands and stress at the end of an academic term [30]. Changes in demands over time were positively correlated with changes in stress (r = 0.39, p < 0.001). Although students experienced greater demands and marginally more stress, they reported becoming significantly more effective at managing that stress (Table 3).
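Correlations between “changes” such as the one above are typically computed by taking each student’s post-test minus pretest difference on two measures and correlating those differences. The Python sketch below shows that approach under the assumption that paired per-student scores are available as lists; the function names and the example scores are hypothetical, not study data.

```python
from math import sqrt
from statistics import mean


def change_scores(pre, post):
    """Per-student post-test minus pretest differences."""
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired")
    return [b - a for a, b in zip(pre, post)]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for five students (not study data).
demands_change = change_scores([3, 2, 4, 3, 3], [4, 4, 4, 5, 3])
stress_change = change_scores([50, 40, 70, 55, 60], [60, 55, 72, 75, 58])
print(pearson_r(demands_change, stress_change))
```

The p-value attached to r would additionally require the t distribution (for example, from a statistics library), which this sketch omits.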

Emotion regulation. After the course, students reported higher levels of emotion regulation (Table 3). Although life satisfaction did not increase significantly, changes in emotion regulation were positively correlated with changes in life satisfaction (r = 0.27, p = 0.002).

Mindsets, focus, and mind-wandering.

Figure 1 displays changes in growth mindset, motivation, and self-efficacy to train one’s ability to focus. These preliminary results suggest that, after completing the course, students were more likely to adopt a growth mindset regarding their ability to focus (Cohen’s d = 0.15). They also felt more motivated to train this ability (d = 0.15) and were more confident that they knew how to do so (d = 0.86). When asked whether the course improved their ability to focus, 64% of students reported that completing the course had enhanced their focus.

We predicted that the students most likely to increase their focus during class were the ones who reported a discrepancy at pretest in how much they paid attention relative to how much they felt they should pay attention. At pretest, students felt they should ideally keep their undivided attention focused on class 78.71% of the time (SD = 20.39). They estimated that they actually kept their attention focused on class 62.25% of the time (SD = 23.45). A total of 81.62% of students reported focusing less often than they felt they should.

Across the entire sample, levels of actual classroom focus did not change significantly, t(188) = 0.93, p = 0.355. However, among the 81.62% of students with any discrepancy between ideal and actual focus at pretest, results suggest that actual focus improved significantly from pretest (M = 62.56, SD = 21.92) to post-test (M = 65.69, SD = 20.54), t(155) = 2.03, p = 0.044, d = 0.15.

The course did not significantly change how much students felt they should pay attention during class, t(188) = 0.46, p = 0.646. Levels of mind-wandering during daily life also did not change from pretest to post-test, t(188) = 0.75, p = 0.456.
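The subgroup analysis above can be sketched in Python as an illustration rather than the authors’ analysis code. It assumes per-student pretest and post-test focus ratings (0–100% scale) are available as paired lists; the function names are hypothetical. The text does not state which Cohen’s d convention was used. One common paired-design convention, d_av (mean change divided by the average of the two SDs), applied to the reported subgroup means and SDs gives about 0.15, which is consistent with the reported value.

```python
from math import sqrt
from statistics import mean, stdev


def discrepancy_subgroup(ideal_pre, actual_pre):
    """Indices of students whose pretest actual focus fell below their ideal focus."""
    return [i for i, (ideal, actual) in enumerate(zip(ideal_pre, actual_pre)) if actual < ideal]


def paired_t(pre, post):
    """Paired t statistic and degrees of freedom for the post - pre differences."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


def cohens_d_av(mean_pre, sd_pre, mean_post, sd_post):
    """Mean change divided by the average of the pretest and post-test SDs."""
    return (mean_post - mean_pre) / ((sd_pre + sd_post) / 2)


# Reported subgroup summary statistics for actual classroom focus.
d = cohens_d_av(62.56, 21.92, 65.69, 20.54)
print(round(d, 2))  # 0.15
```

Note that paired_t reports df = n - 1, which is why a paired t-test carries a single degrees-of-freedom value; converting t to a p-value requires the t distribution, which is omitted here.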

4. Discussion

Although there is a proliferation of d-MBIs and growing evidence that they can be effective, research has yet to establish the feasibility and effectiveness of d-MBIs for use in high schools. The primary research question (RQ1) for this study was whether a digital mindfulness course could be feasibly delivered in a high school setting. Although this was the first time the school had used the digital course, it was implemented with adequate fidelity in the majority of classrooms. Overall, students completed 80% of lessons and 77% of daily exercises. These results support the feasibility of a d-MBI in a high school setting.

The secondary research question (RQ2) was whether the d-MBI would influence student outcomes. Although the one-group pre–post design precludes definitive conclusions about how the intervention affected students, several positive outcomes were observed. After the course, students (i) were more likely to adopt a growth mindset regarding their ability to focus, (ii) felt more motivated to train that ability, and (iii) had greater confidence that they knew how to do so. Students also reported significant improvements in stress management and emotion regulation. Although these improvements could reflect the natural passage of time, we believe this is unlikely because students were facing more demands at post-test. Previous research suggests that high demands leave students feeling burned out and overwhelmed [37] and that stress associated with exam season is strongly negatively correlated with emotion regulation [38]. Among students who reported at pretest paying attention in class less than they felt they should, classroom focus also increased.

Corroborating these findings, students’ qualitative data suggested improvements in stress management and focus. Students also reported being able to more effectively manage difficult thoughts, evaluations, and emotions. However, 18.7% of students reported no benefits. Because survey data could not be linked to adherence data, it is unclear how much of the intervention these students completed. Nevertheless, this finding indicates that continued development and personalization of the course is likely needed to effectively reach all students.

This investigation also revealed several opportunities for improving fidelity of implementation. First, overall levels of adherence were substantially affected by two teachers who planned to complete the course but then decided that there was insufficient classroom time. This underscores the importance of selecting an appropriate class in which to share the course, as well as a time in the school year when the course will not unduly compete for limited academic time.

A second opportunity to improve fidelity of implementation relates to students’ initial reactions to the idea of completing the course. Qualitative data revealed that about 20% of students were indifferent to the idea of completing the intervention, and 30% had skeptical and/or adverse reactions. Given that first impressions of an intervention significantly predict participant adherence [39], researchers need to think strategically about how students are introduced to interventions and prepare teachers with the resources they need to optimize receptivity.

A third opportunity for enhancing implementation would be to formalize expectations and incentives for students. Twenty-three percent of students said that their teacher had not set a clear expectation that they needed to complete the course. Fewer than half of students were formally incentivized to complete the course, and 70% of students never downloaded the mobile app that was designed to facilitate completion of the daily exercises. This highlights the need to communicate better with teachers about creating the structure and incentives required for optimal fidelity of implementation.

Although the present research provides a promising report on the feasibility of d-MBIs in high school settings, it has several limitations. First, the study did not include a control group, which limits the conclusions that can be drawn about the observed improvements. As one example, the observed improvement in stress management could in principle be a function of natural fluctuations in stress management throughout the school year. As students’ demands and stress increase, they may naturally upregulate effective stress management strategies. Without a control group, this alternative explanation cannot be ruled out.

The attrition from pretest to post-test is a second limitation. Attrition can be particularly concerning if there is systematic dropout based on individual differences [40], such as the most stressed students being the ones to skip the post-test survey. Because most of the attrition was due to two specific teachers deciding not to share the post-test survey, the risk of systematic dropout based on individual differences is considerably lower. Future studies should collaborate more closely with each teacher to find an appropriate time for intervention delivery. A second factor that contributed to survey attrition was that 10% of post-test responses were discarded because of inaccurate linking codes. Future research should identify less fallible methods for anonymously linking pretest and post-test surveys.

A third limitation of the present research is the use of several researcher-generated survey items instead of validated self-report instruments. This was required to address the research questions of interest, and these measures were written to maximize face validity using vocabulary that is appropriate for adolescents. Nevertheless, findings from these researcher-generated items should be interpreted as less definitive. Future research should develop and validate instruments for adolescents to report more nuanced aspects of their focus and stress management than existing instruments allow.

Teenagers today are growing up in what is arguably the most distracting time in history amid alarming rates of stress and mental illness [41,42,43]. Research indicates that mindfulness training can be a useful tool for addressing these challenges [10], but it is far from trivial to deliver effective mindfulness training to the tens of millions of teenagers who matriculate into high school each year. This investigation provides support for the feasibility of digital mindfulness training for high schools, suggesting a promising path to sharing evidence-based mindfulness with teenagers at scale.