1. Introduction

Educational software is increasingly common in schools, and some of it is built around or includes pedagogical agents. A pedagogical agent is a computer character in a pedagogical role, often based on some type of artificial intelligence. Some pedagogical agents add much to the learning experience, while others are less beneficial. So, how should a pedagogical agent in educational software be designed to support student learning? The question is complex because there are many types of pedagogical agents, which can take different roles in the learning process. There are, for example, tutor agents from which the student can learn [1], agents that work together with the student as companions or peers [2,3,4], agents that take an expert role [5,6], and agents that act as mentors [7,8]. Yet another pedagogical role is that of a tutee, where the agent acts as the one being taught while the real student takes the teacher role. These agents are called teachable agents [9] or digital tutees. A digital tutee is based on the idea that by teaching someone else you learn for yourself. Indeed, learning by teaching has been shown to be an efficient way to learn [10,11,12].

The results of many studies involving pedagogical agents indicate that they can have positive effects on learning [13,14,15,16] and on self-efficacy [17,18,19,20]. For example, Kim, Baylor and Shen [21] showed that girls who interacted with a pedagogical agent in an educational math game developed a more positive attitude towards mathematics and increased their self-efficacy beliefs in the subject compared to girls who played the same game without an agent. Similarly, the study in [20] found that girls increased their self-efficacy beliefs in learning mathematics after working with an animated agent embedded in computer-based learning. The study in [19] found that third graders who taught a digital tutee for nine weeks showed a significantly larger gain in self-efficacy than a control group that had engaged in regular math classes.

However, the mere addition of a pedagogical agent to a learning environment does not automatically improve learning or provide other beneficial effects. Several aspects need to be carefully considered when designing a pedagogical agent: its visual appearance [22,23,24], how it behaves and interacts [8,21,25,26], and other characteristics such as whether it has high or low domain competence [3,4,8,17,27,28]. For example, the study in [4] explored the agent’s competence (high vs. low) in combination with different interaction styles (proactive vs. responsive). Students who interacted with a peer agent with high competence were better at applying what they had learned and showed a more positive attitude towards their agent. On the other hand, students interacting with a peer agent with low competence showed an increase in self-efficacy. An increase in self-efficacy was also found for students who worked with a more responsive agent. Hietala and Niemirepo [3] similarly found that students in general preferred to collaborate with a more competent digital peer rather than a weak one when presented with a choice. Uresti [27] presents opposite results, pointing towards a trend (although not significant) that students who interacted with a weak learning companion learned more than students who interacted with a more competent companion. Thus, even though the evidence is not conclusive as to whether an agent with high or low competence is more beneficial, we know that an agent’s level of competence can affect students’ self-efficacy and learning. In this paper, we explore these themes further, focusing on how the self-efficacy of both agent and student affects how students interact with the agent.

1.1. Conversational Teachable Agents

The type of pedagogical agent used in the study presented in this paper is a teachable conversational agent. It is teachable in the sense that it knows nothing about the topic from the beginning but then learns from the student, who acts as the teacher. By teaching the agent, the student at the same time learns for herself. This effect has been shown to be effective in human–human interaction, and it is also present in human–agent interaction [29,30]. Chase and colleagues [30] found that students who were asked to learn in order to teach a digital tutee put more effort into the learning task than students who were asked to learn in order to take a test themselves. This difference in effort and engagement is referred to as the protégé effect. Not only did the students put more effort into the task when they were later supposed to teach, they also learned more in the end. Having a protégé (such as a digital tutee) can thus increase the motivation for learning. In addition, Chase et al. [30] propose that teaching a digital tutee can offer what they term an ego-protective buffer: the digital tutee protects students from the experience of direct failure, since it is the tutee that fails at a task or a test, even though students are generally aware that the success or failure of the tutee reflects their own teaching of it. The failure can nevertheless be shared with the tutee, which shields the students from forming negative thoughts about themselves and their accomplishments.

Sjödén and colleagues [31] made another observation regarding the social relation a student can build with her digital tutee. They found that low-performing students improved dramatically on a posttest when they had their digital tutee present during testing compared to when they did not. This difference was not found for high-performing students. Even though the digital tutee contributed nothing but its mere presence, this had a positive effect for low-performing students.

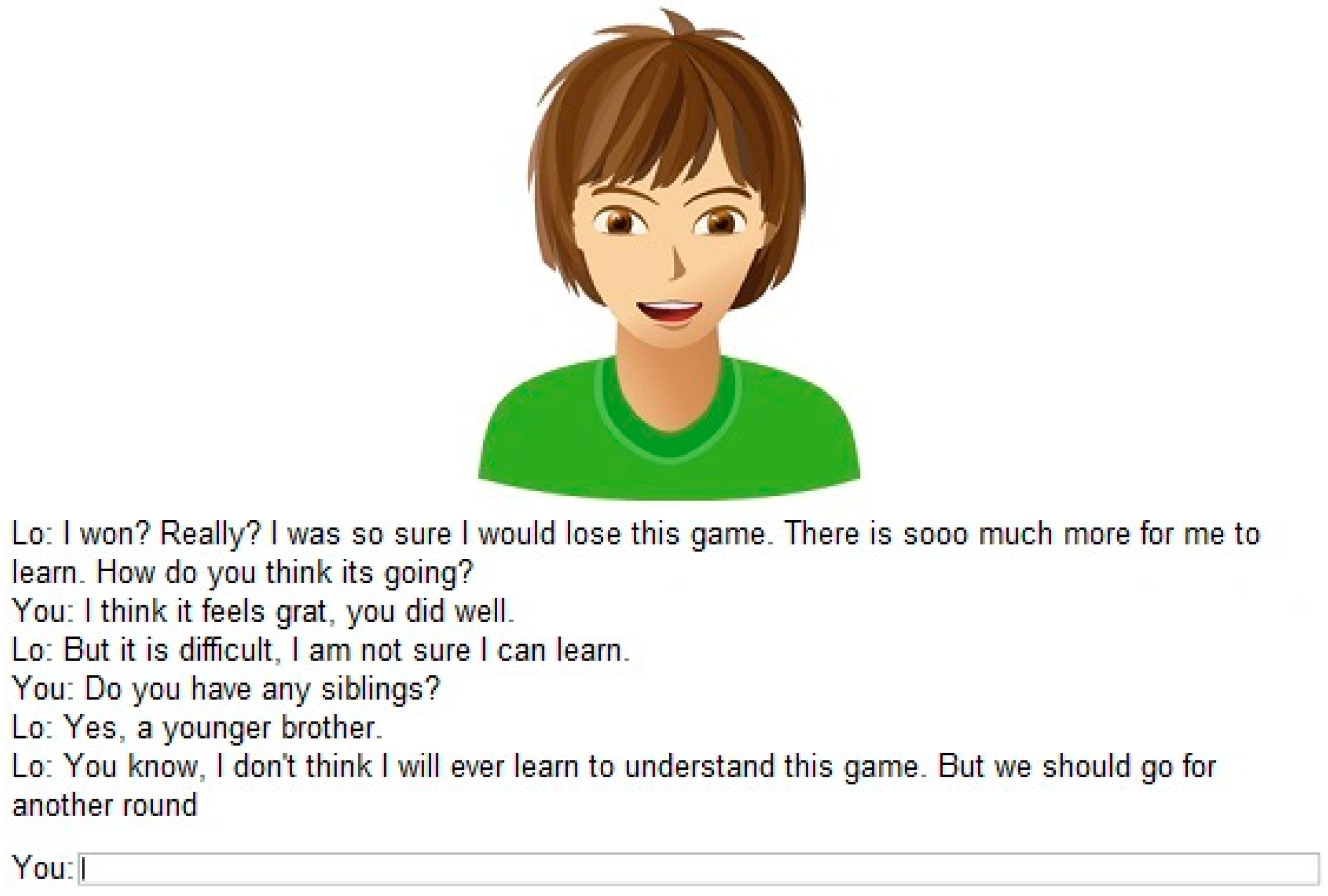

With respect to the learning-by-teaching paradigm, the kind of feedback students receive when using such software needs to be highlighted. In most studies on feedback and learning, feedback is provided to the student by the teacher and concerns the student’s performance. In the teachable agent paradigm, the direction of the feedback is different. Here, it is the teacher (i.e., the real student) who receives feedback on how well she has been teaching by observing how well her tutee (i.e., the digital tutee) performs. The digital tutee provides feedback to the student regarding its own ability to solve tasks without explicitly saying anything about the student’s teaching abilities. Implicitly, however, a student can use the feedback from the digital tutee, including information on how the tutee performs, to infer how much she herself knows or how well she has taught her tutee. This type of feedback is what the authors of [29] call recursive feedback, namely feedback that occurs when the tutor observes her students use what she has taught them. Recursive feedback is present in the math game used in this study. It appears when the digital tutee attempts to play independently using its knowledge of the rules and strategies of the game as learned from the student. However, it also appears in the chat dialogue when the digital tutee reflects upon its own learning and performance. These reflections make up our manipulation in that they are colored by the self-efficacy the digital tutee is assigned (high or low).

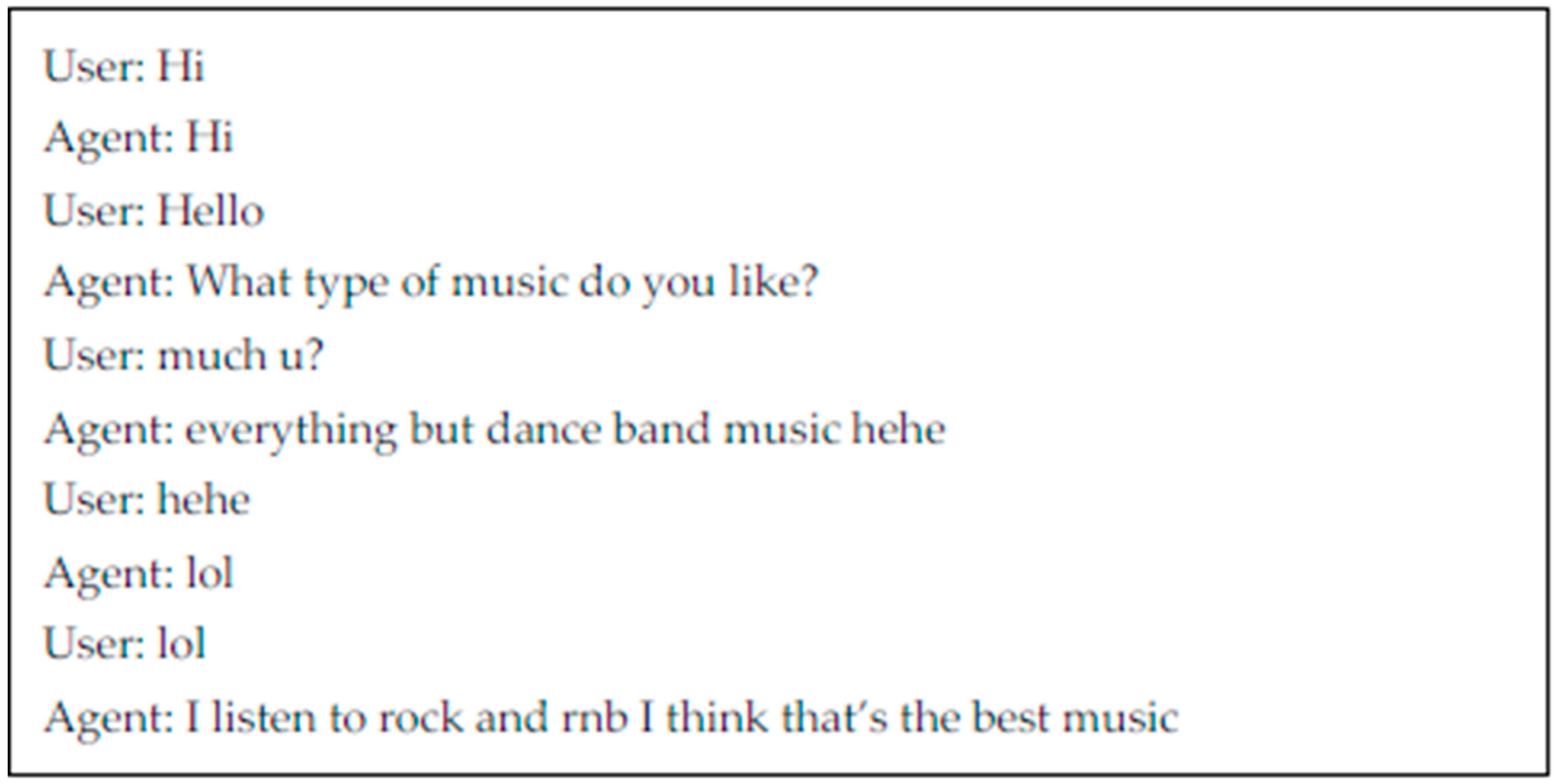

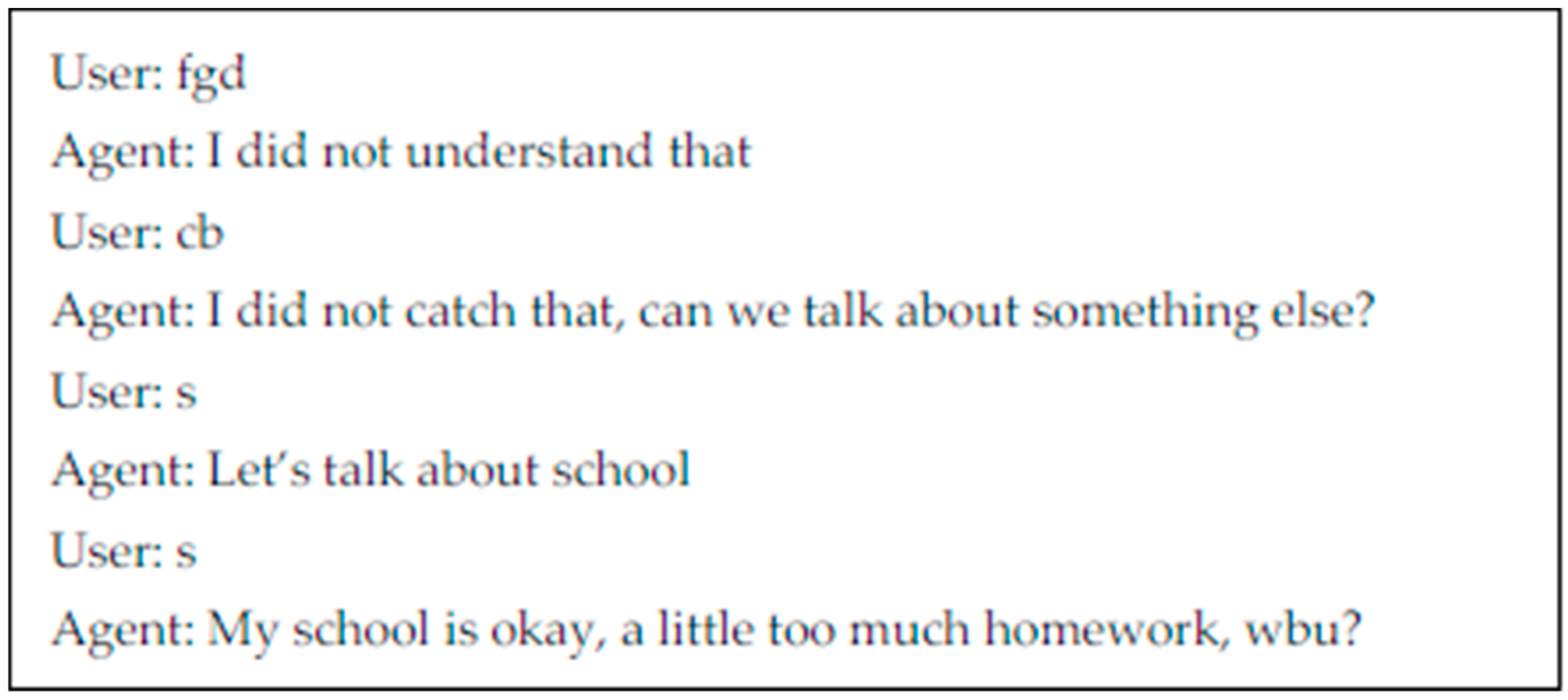

Our digital tutee also belongs to the pedagogical agent subgroup called conversational pedagogical agents. Conversational agents in the area of education are primarily able to carry out conversations relating to the learning topic at hand. However, some of them are also able to carry out so-called off-task conversation or “small talk”, not related to the learning topic as such. Off-task conversation can make a learning situation more relaxed and has been shown to promote trust and rapport-building [32,33]. Off-task conversation is also something that many students are familiar with from real-world learning experiences. Classroom interactions encompass a mix of on-task and off-task interactions: the teacher does not just go on about the topic to be learned; usually there is also an ongoing conversation with little (apparent) relation to that topic. It should be noted that not all students experience off-task conversation as something positive; some find it time-consuming and meaningless [34].

Previous research with the educational game used in this study investigated the effects of off-task conversation within the chat. The results showed that, overall, the students did not experience the off-task conversation as disturbing, and students who were allowed to engage in off-task conversation had a more positive game experience than students who did not have the opportunity [35]. The study also explored whether high-, mid-, and low-achievers would differ with respect to their experience of the off-task conversational module (the chat module). The outcome was that high- and mid-achievers liked the software more when the off-task conversation (the chat) was included, but they chose to chat less than the low-achievers. Conversely, low-achievers were more indifferent towards the chat, but they chatted more than high- and mid-achievers. A follow-up analysis of the material [36] found that engagement differed between these sets of students. High-achieving students showed greater engagement than low-achieving students when chatting, but in situations where they appeared unengaged in the chat, they tended to quit the chat and refrain from starting a new one. The low-achievers, on the other hand, were more inclined to continue a chat even when they appeared disengaged. The authors speculate that low-achievers do not take control over their learning situation to the same extent as high-achievers.

1.2. Designing a Teachable Agent with High or Low Self-Efficacy

From previous studies we know that pedagogical agents can have beneficial effects on, for example, students’ learning experience and self-efficacy. Manipulating the agent’s competence, for example, can make students more or less willing to work with it [3,4,27]. In the context of a teachable agent, the level of competence or expertise is not an easily manipulated variable, since the competence of the digital tutee reflects the real student’s teaching. Simply put, if the student teaches the digital tutee well, the tutee will learn and increase its knowledge and competence. On the other hand, if the student does not teach her digital tutee well, the tutee will not increase its knowledge and competence. In contrast, the characteristic of self-efficacy is possible to design and manipulate in a digital tutee, and this is what we have done.

In [

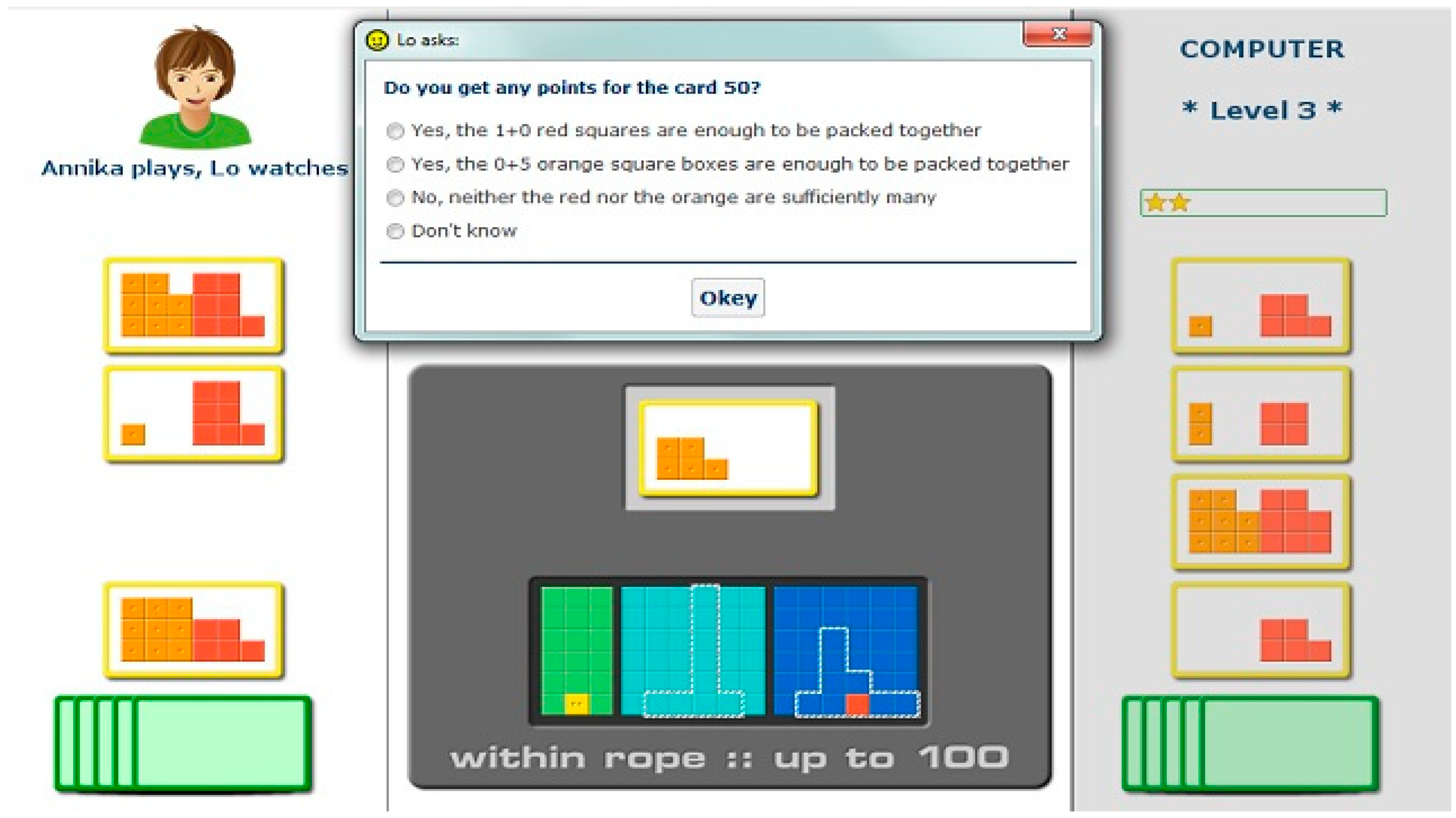

37], we studied whether the manipulation of self-efficacy in a digital tutee—in terms of low versus high self-efficacy—would affect any of the following for the (real) students who acted as teachers for the digital tutee: (i) their self-efficacy (ii) their in-game performance, (iii) their attitude towards the digital tutee. The study made use of an educational game targeting mathematics and the base ten concept [

38], further described in

Section 2.1. The digital tutee interacted with the student both via a scripted multiple-choice conversation and via a natural language chat conversation, see

Section 2.1.1. In the chat conversation in which the digital tutee commented on her performance, expectations and ability to perform and learn, the tutees’ self-efficacy was manipulated to be low or high. Following previous research focusing on matching/mis-matching effects of characteristics in students and pedagogical agents (e.g., [

3]), we investigated possible effects of the digital tutee and student having similar or dissimilar self-efficacy. A matching pair was when the digital tutee and the student both had low or high self-efficacy regarding mathematics and a mis-matching pair when the agent hade high self-efficacy while the student had low self-efficacy and vice versa.

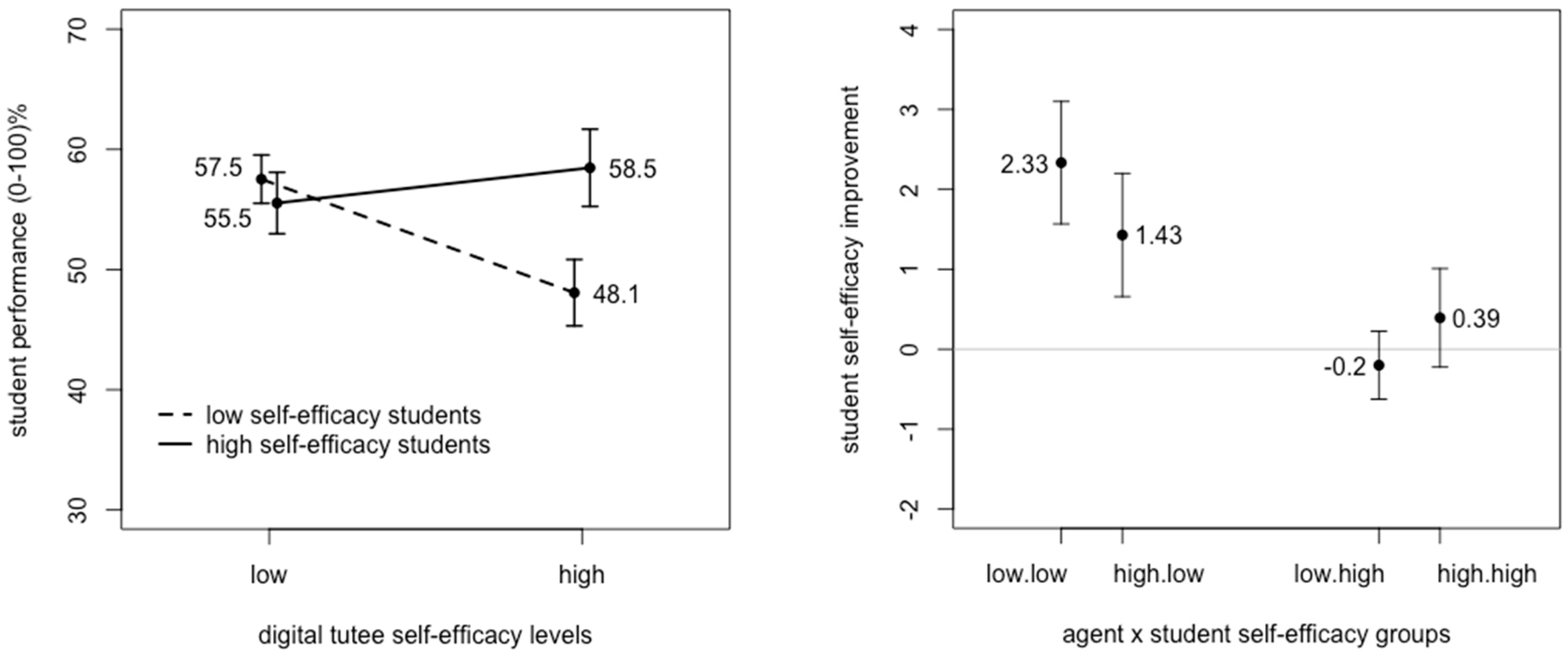

The analysis in [37] showed that interacting with a digital tutee with low self-efficacy was beneficial for students’ performance. This was especially apparent for students who themselves had reported low self-efficacy: when interacting with a digital tutee with low self-efficacy, they significantly increased their performance and performed as well as students with high self-efficacy, see Figure 1 (left side). Furthermore, the digital tutee with low self-efficacy also had another positive effect on students with low self-efficacy, who significantly increased their self-efficacy when interacting with it, see Figure 1 (right side).

Together, these results indicate that interacting with a teachable agent with low self-efficacy was beneficial for students overall and in particular for students with low self-efficacy. In [37] we speculated that this can be due to the protégé effect, in that students make a greater effort for an agent lacking in self-efficacy, which seems more in need of help than an agent with high self-efficacy. The ego-protective buffer can also be in play, in that students with low self-efficacy, who also tend to be low-performing, do not perceive the feedback as negatively, since it is recursive and aimed at the agent rather than at them.

To turn these results into guidelines on how to design pedagogical agents with low (or possibly high) self-efficacy, and to know the benefits and possible disadvantages of doing so, we need to know more about the possible causes of these effects and also look more carefully at differences between and within student groups. It is possible that some students do not respond well to the agent with low self-efficacy, or that the positive effect is due to different causes for different groups. To do this, we turn to the chat dialogues between students and their digital tutees that were collected during the study reported in [37]. We wanted to explore whether we could find any patterns in the chat interaction between tutee and student that could further explain the results in [37]. In this paper we explore potential differences in how the students responded to the feedback that expressed either high or low self-efficacy of the agent, and also whether the students on their own initiative commented on the agent’s intelligence and competence, or its attitude. We also compare matched and mis-matched cases, i.e., where students had similar or dissimilar self-efficacy (low or high) to that of their digital tutee. More specifically, the research questions are the following:

- Q1. What difference does the self-efficacy of digital tutees and students make, to what extent, and how:
  - (a) the students react and respond to the digital tutees’ feedback?
  - (b) the students comment on the digital tutees’ intelligence and competence?
  - (c) the students comment on the digital tutees’ attitude?
- Q2. Are there any relations between students’ chat behavior and students’ performance?

3. Results

The aim of this paper is to explore how a digital tutee’s self-efficacy, expressed as feedback given in a chat, affects students’ interaction with and behavior towards the agent. Our analysis of the chat logs focused on to what extent and how students react and respond to the digital tutees’ feedback, comment on the digital tutees’ intelligence and competence, and comment on the digital tutees’ attitude. We also wanted to see if there were any relations between students’ chat behavior and students’ in-game performance.

3.1. Responses to Feedback

Since the self-efficacy of the teachable agent was expressed through feedback delivered in a chat, we posed our first question, Q1a: “How do the students react and respond to the digital tutees’ feedback on what went on in the game?” The first step was to see whether the students acknowledged the feedback and questions from the tutee, such as “What do you think about the next round?”, or whether they ignored them (freqAFB). Overall, the students responded to 53% of the feedback.

A two-way ANOVA showed a small to medium sized significant main effect of the tutee’s self-efficacy on frequency of response (F(1,88) = 3.99, p < 0.05, η² = 0.045), where students responded more frequently to feedback from the digital tutee with low self-efficacy (M = 58.78, SD = 24.41) than to feedback from the digital tutee with high self-efficacy (M = 47.74, SD = 27.10). There was no main effect of student self-efficacy on frequency of response (F(1,88) = 1.39, p = 0.24), nor an interaction effect of student and tutee self-efficacy (F(1,88) = 0.245, p = 0.62); see Table 3 for means and standard deviations for these groups.

The next step was to look at the cases where the student had actually responded to the feedback and the digital tutee’s question, formulated as, for example, “How do you feel?” The student could respond in a positive way, such as “It feels very well, you did very well”, in a negative way, such as “It doesn’t go very well, you need to practice more”, or in a neutral way, writing for example “okay”. On average, 72% of the responses were positive (freqPosFB).
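The two chat measures introduced so far, freqAFB and freqPosFB, can be sketched as simple per-student percentages. The following is a minimal illustration only: the `FeedbackTurn` record, the function names, and the example log are hypothetical and not taken from the study's software, and it assumes that each response has already been manually coded as positive, neutral, or negative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FeedbackTurn:
    """One feedback utterance from the tutee (hypothetical record layout).

    response is None when the student ignored the feedback; otherwise it holds
    the manually coded valence: "positive", "neutral" or "negative".
    """
    response: Optional[str]


def freq_afb(turns: List[FeedbackTurn]) -> Optional[float]:
    """Percentage of feedback utterances that the student responded to."""
    if not turns:
        return None  # no feedback was given, so the measure is undefined
    answered = sum(1 for t in turns if t.response is not None)
    return 100.0 * answered / len(turns)


def freq_pos_fb(turns: List[FeedbackTurn]) -> Optional[float]:
    """Percentage of the student's responses that were coded as positive."""
    answered = [t for t in turns if t.response is not None]
    if not answered:
        return None  # the student never responded, so the measure is undefined
    positive = sum(1 for t in answered if t.response == "positive")
    return 100.0 * positive / len(answered)


# Made-up log for one student: four feedback turns, one of them ignored.
student_log = [
    FeedbackTurn("positive"),
    FeedbackTurn(None),
    FeedbackTurn("neutral"),
    FeedbackTurn("positive"),
]
print(freq_afb(student_log))     # 75.0
print(freq_pos_fb(student_log))  # two of three responses are positive
```

Note that in this sketch the second measure is only defined for students who responded at least once, so the two measures need not be available for the same number of students.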

A two-way ANOVA showed a significant small to medium sized main effect of student self-efficacy (F(1,87) = 4.87, p < 0.05, η² = 0.055): students with high self-efficacy responded positively more often (M = 78.55, SD = 24.46) than students with low self-efficacy (M = 65.34, SD = 30.85). There was no main effect of tutee self-efficacy (F(1,87) = 0.23, p = 0.63) nor any interaction effect (F(1,87) = 0.59, p = 0.30). See Table 4 for means and standard deviations for these groups.
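The two-way ANOVAs reported above split the variance in a chat measure into a main effect of tutee self-efficacy, a main effect of student self-efficacy, and their interaction. The sketch below illustrates that decomposition for the simplest case. It assumes a balanced design (equal group sizes), which is not necessarily true of the study, and it reports partial eta squared as one common effect-size measure; the function name and the toy scores are hypothetical.

```python
from itertools import product
from statistics import mean


def two_way_anova_balanced(cells):
    """Two-way ANOVA for a balanced design.

    cells maps (level_a, level_b) -> list of scores, with equal n in each cell.
    Returns F, degrees of freedom and partial eta squared for A, B and AxB.
    """
    levels_a = sorted({a for a, _ in cells})
    levels_b = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(v) != n for v in cells.values()):
        raise ValueError("this sketch only handles balanced designs")

    all_scores = [x for v in cells.values() for x in v]
    grand = mean(all_scores)

    def marginal_mean(index, level):
        # Mean over all scores whose cell key has `level` at position `index`.
        return mean([x for key, v in cells.items() if key[index] == level for x in v])

    ss_a = n * len(levels_b) * sum((marginal_mean(0, a) - grand) ** 2 for a in levels_a)
    ss_b = n * len(levels_a) * sum((marginal_mean(1, b) - grand) ** 2 for b in levels_b)
    ss_cells = n * sum((mean(cells[(a, b)]) - grand) ** 2
                       for a, b in product(levels_a, levels_b))
    ss_ab = ss_cells - ss_a - ss_b  # what the cell means add beyond both main effects
    ss_within = sum((x - mean(v)) ** 2 for v in cells.values() for x in v)

    df_a, df_b = len(levels_a) - 1, len(levels_b) - 1
    df_ab = df_a * df_b
    df_within = len(all_scores) - len(cells)
    ms_within = ss_within / df_within

    result = {}
    for name, ss, df in (("A", ss_a, df_a), ("B", ss_b, df_b), ("AxB", ss_ab, df_ab)):
        result[name] = {
            "F": (ss / df) / ms_within,
            "df": (df, df_within),
            "partial_eta_sq": ss / (ss + ss_within),
        }
    return result


# Toy data only: factor A = tutee self-efficacy, factor B = student self-efficacy.
toy = {
    ("low", "low"): [1, 3], ("low", "high"): [3, 5],
    ("high", "low"): [5, 7], ("high", "high"): [7, 9],
}
for effect, stats in two_way_anova_balanced(toy).items():
    print(effect, stats)
```

With unequal group sizes the sums of squares are no longer additive in this simple way, and a statistics package with explicit handling of unbalanced designs would be the safer choice.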

The most frequent type of positive answer from the students was simply a “good” or “well” when answering the digital tutee’s question about how well they thought things were going. These replies accounted for approximately one third of all positive answers. Some were more superlative, like “great” and “awesome”, but these were rather few. One out of six answers commented on the tutee’s intelligence or competence, the most frequent answers being of the type “You are good” or “You are learning”, or more seldom, “You are clever”. The neutral answers were usually a “don’t know”, “so-so” or “ok”. There were also occasions where the student instructed the digital tutee to observe more carefully or put more effort into the next round.

Frequent negative answers when the digital tutee asked, “How do you think it’s going?” were “badly” or “really badly”. Almost half of the negative answers were derogatory, or even abusive comments about the tutee’s intelligence or competence, like “You suck”, “You are dumb” or “You lost, idiot”.

To conclude question “Q1a: To what extent and how do the students react and respond to the digital tutees’ feedback on what went on in the game?”, we note that students responded more frequently to feedback from the digital tutee with low self-efficacy, and that the responses were mostly positive. Thus, the trait of having low self-efficacy in a digital tutee can lead the students to put more effort into the interaction and to respond more positively to comments about the tutee’s self-efficacy, learning and performance.

3.2. Comments on the Tutees’ Intelligence and Competence

Next, we looked at research question “Q1b: To what extent and how do the students comment on the digital tutees’ intelligence and competence?” The comments in question appeared in the free conversation following the feedback from the digital tutee. Some of them were a direct response to the feedback utterance and prompted by the tutee, but more than half of these comments came spontaneously later in the conversation. Almost all students made such comments: 89% of the students overall, 93% of the students interacting with a tutee with low self-efficacy, and 84% of the students interacting with a tutee with high self-efficacy. On average, each student gave 4.9 comments to their digital tutee (numCI): 5.7 for students interacting with a tutee with low self-efficacy and 4.0 for students interacting with a tutee with high self-efficacy.

Overall, the comments regarding the digital tutee’s intelligence or competence were negative: on average, 60% of the comments were negative and only 40% were positive (freqPosCI). A two-way ANOVA showed a significant medium sized main effect of the tutee’s self-efficacy (F(1,78) = 5.71, p < 0.05, η² = 0.071), where the digital tutee with low self-efficacy on average received more positive comments (M = 49.91, SD = 37.26) than the digital tutee with high self-efficacy (M = 28.97, SD = 38.13). There was no main effect of student self-efficacy (F(1,78) = 0.34, p = 0.56) nor any interaction effect (F(1,78) = 0.21, p = 0.65). See Table 5 for the means and standard deviations of these groups.

Most of the negative comments involved saying that the digital tutee was an idiot or that (s)he sucked. However, some of the comments referred to the tutee’s abilities to learn math and the game, for example, “You are not very good at math” or things like “How can you be so stupid” and “I mean, do you actually have a brain?”. The positive comments mostly concerned the tutee’s performance and ability to play, the student saying things like “You are super good” or “You did very well”. However, students also expressed happiness regarding their digital tutees’ performance saying things like “It feels very nice when you play as good as you do” or “Oh my God, you are really good, that is so fun to see!!!”

Thus, the results for “Q1b: To what extent and how do the students comment on the digital tutees’ intelligence and competence?” are in line with the results on Q1a. The digital tutee with low self-efficacy received more positive comments about its intelligence and competence than the digital tutee with high self-efficacy, from students with low as well as high self-efficacy.

3.3. Comments on the Tutees’ Attitude

Of special interest was whether the students commented on the digital tutee’s attitude towards its learning and performance, since this attitude relates to the self-efficacy that the tutee expressed. Thus, we explored “Q1c: To what extent and how do the students comment on the digital tutees’ attitude?” Our results show that only 21 out of 89 students made any comments regarding the digital tutee’s attitude. Of these, 17 directed the comments to the digital tutee with low self-efficacy. In other words, 17 out of 45 tutees with low self-efficacy received comments on their attitude, while only 4 out of 44 tutees with high self-efficacy did. About as many students with high self-efficacy (10) as students with low self-efficacy (11) provided these comments.

Of the four comments to the tutee with high self-efficacy, one was negative and expressed frustration with the mismatch between performance and the tutee’s attitude: “What do you mean, ‘you have learned a lot’, I lost”. Two were positive, “It’s good that you are confident, it will go well” and “It will go well, just believe in yourself”, and one was neutral, “Ok, but we need to continue working”.

The comments to the digital tutee with low self-efficacy are all listed in Table 6, with the exception of similar comments from the same student in the same chat session. It is noted whether a student with low or high self-efficacy gave the comment.

Out of the comments to the tutee with low self-efficacy, more than half state that the student and/or tutee is doing well. However, many of these comments express some frustration or sadness over the tutee’s negative attitude, for example “Yes, I think it goes well for both of us, why don’t you”. But there are also many that are encouraging, trying to boost the tutee, for example “Don’t worry you will do it”. Overall, many of the comments tell the tutee to be less negative and more positive (e.g., “I know, but you are rather good, just think positive”), to not be unsure (e.g., “Do not be unsure you will win”) and to believe in itself (e.g., “Tell yourself you can win”). Some students also tell the digital tutee to relax (e.g., “It’s cool, Lo”), to focus on the task (e.g., “You need to focus more, do what you should, don’t think of anything else!”), or that it is not kind to be so negative (e.g., “I don’t like it when you say so”). These comments vary in tone, with some being rather harsh (e.g., “You shouldn’t be so fucking negative, don’t be unsure you idiot”) and some very encouraging (e.g., “Don’t worry you will do it! Good if you believe in yourself, I think you can do it”).

The sparse data makes it hard to draw any definite conclusions, but it is important to note that while some students encourage the tutee when it expresses low self-efficacy, some students also get a bit frustrated with it, especially if they think that they or the tutee are performing well. Here, there are likely differences between students with high and low self-efficacy. Since students with high self-efficacy generally perform better and teach their tutees better, there will be a mismatch between the tutee’s low self-efficacy and high performance, which can lead to frustration. For students with low self-efficacy, many of whom will not teach their tutees equally well, the discrepancy between the self-efficacy and performance of the tutee will not be as obvious.

3.4. Relations between Chat and Performance Measures

Thus far, we have found differences in how students with high and low self-efficacy interact with digital tutees expressing high or low self-efficacy in the chat. Since our starting point was to explore the effect that students with low self-efficacy perform better as well as gain a higher self-efficacy belief when interacting with a digital tutee displayed as having low rather than high self-efficacy, our final analysis concerned Q2: Are there any relations between students’ chat behavior and students’ in-game performance?

Students’ performance was calculated in two ways (see Section 2.5.2 Performance measures): (i) the proportion of correct and incorrect answers given by the student in relation to the multiple-choice questions posed by the digital tutee regarding the game and its underlying mathematical model and (ii) the goodness of cards chosen by the student during gameplay. The first measure is more directly related to explicit teaching of the digital tutee, whereas the other is more of an indirect measure of how well the student performs during gameplay when the tutee is learning through observation.

We computed Pearson correlation coefficients (Table 7) for the two performance measures as well as for the two measures from the chat on students’ positive or negative attitudes towards the digital tutee and the feedback it provided: frequency of positive responses to feedback (freqPosAFB) and frequency of positive comments on the tutee’s intelligence and competence (freqPosCI). The comments on the tutee’s attitude had to be excluded due to the sparse data.

Overall, we found a significant correlation with large effect size (r(78) = 0.593, p < 0.01) between the frequency of students’ positive responses to the digital tutee’s feedback and the frequency of their positive comments on the digital tutee’s intelligence and competence. There was a significant correlation of medium effect size (r(88) = 0.388, p < 0.01) between the two performance measures: correctly answered multiple-choice questions and goodness (i.e., choosing the best card). We also found a significant correlation of small effect size between how well the student answered the multiple-choice questions and the frequency of providing positive comments on the digital tutee’s intelligence or competence (r(79) = 0.248, p < 0.05).
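As a minimal sketch of how such coefficients are obtained and labelled, the code below computes a Pearson correlation from paired per-student measures and maps its magnitude to an effect-size label. The thresholds are Cohen's conventional benchmarks (0.1 small, 0.3 medium, 0.5 large); that the labels in the text follow exactly these benchmarks is an assumption, although the reported values are consistent with them. The function names are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two sequences of equal length with at least 3 pairs")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def degrees_of_freedom(n_pairs):
    """The value in parentheses in r(df) is the number of pairs minus two."""
    return n_pairs - 2


def effect_size_label(r):
    """Label |r| using Cohen's conventional benchmarks (assumed here)."""
    size = abs(r)
    if size >= 0.5:
        return "large"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "small"
    return "negligible"


# Labels for the three overall coefficients reported in the text.
for r in (0.593, 0.388, 0.248):
    print(r, effect_size_label(r))  # large, medium, small
```

Read this way, r(78) corresponds to 80 students with data on both measures, which is fewer than for r(88) because not every student produced the relevant chat comments.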

When looking at the different student groups, we found no significant correlation between the performance measures for students with low self-efficacy. But we did find a significant correlation of medium effect size (r(39) = 0.359, p < 0.05) between their proportion of correct answers to multiple-choice questions and the frequency of positive comments they provided on the digital tutee’s intelligence or competence. The correlation between the frequency of positive comments they provided on the digital tutee’s intelligence or competence and the frequency of positive feedback responses was also significant and of large effect size (r(39) = 0.698, p < 0.01) (see Table 8).

For the students with high self-efficacy another pattern emerged, see Table 9. There was a significant correlation of medium effect size (r(44) = 0.368, p < 0.05) between the proportion of correctly answered questions and goodness, as well as a significant correlation between the frequency of positive responses to the feedback and the frequency of positive comments they gave on the digital tutee’s intelligence or competence (r(39) = 0.464, p < 0.01). However, no significant correlation was found between their proportion of correct answers to the multiple-choice questions and the frequency of positive comments they provided on the digital tutee’s intelligence or competence.

This can be interpreted as follows: students with low self-efficacy who express a more positive attitude towards their digital tutee, in the sense of providing more positive comments on the tutee’s intelligence and competence, also perform better when they answer the digital tutee’s multiple-choice questions. However, they do not play better with respect to how they choose cards (i.e., the goodness value). For students with high self-efficacy, competence seems to be the driving force in how they perform. In this group, students who play the game well, choosing good cards (with a high goodness value), also answer the multiple-choice questions better, regardless of their attitude towards their digital tutee as expressed through their chat comments on the tutee’s intelligence and competence.

However, the difference between students with high and low self-efficacy in the correlation between goodness and answers to multiple-choice questions was not significant (Z = −0.816, p = 0.42), and neither was the difference in the correlation between positive comments on competence and intelligence and answers to multiple-choice questions (Z = 1.11, p = 0.27). These results should therefore be taken as an indication of something that may be interesting to investigate further.
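A common way to compare a correlation across two independent groups is Fisher's r-to-z transformation. The text does not state which test produced the Z values above, so the sketch below shows that standard procedure as an assumption rather than the authors' exact method. The function names are hypothetical, and the first coefficient in the example call is made up for illustration; the group sizes follow from the reported degrees of freedom (r(39) and r(44), i.e., 41 and 46 students).

```python
from math import atanh, erfc, sqrt


def two_tailed_p(z):
    """Two-tailed p-value for a standard normal test statistic."""
    return erfc(abs(z) / sqrt(2))


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for the difference between two independent correlations.

    Each r is transformed with atanh, and the difference is divided by its
    standard error sqrt(1/(n1-3) + 1/(n2-3)).
    """
    if n1 <= 3 or n2 <= 3:
        raise ValueError("each group needs more than 3 observations")
    standard_error = sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (atanh(r1) - atanh(r2)) / standard_error
    return z, two_tailed_p(z)


# The reported Z = 1.11 corresponds to a two-tailed p of about 0.27.
print(round(two_tailed_p(1.11), 2))  # 0.27

# Illustrative call: 0.10 is a made-up coefficient for the low self-efficacy
# group, 0.368 is the reported coefficient for the high self-efficacy group.
z, p = compare_independent_correlations(0.10, 41, 0.368, 46)
print(z, p)
```

With groups of roughly 40 to 45 students, even fairly large differences between coefficients yield modest Z values, which is in line with the cautious reading of these comparisons.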

4. Discussion

In [37] we drew the tentative conclusion that designing a digital tutee with low self-efficacy would be a good choice since the results suggested that students with low self-efficacy benefitted from interacting with a digital tutee with low rather than high self-efficacy. At the same time, students with high self-efficacy were not negatively affected when interacting with a digital tutee with low self-efficacy; rather they performed equally well when interacting with both types of tutees.

With the follow-up analysis carried out in this paper, we hoped to get a deeper understanding of these results by analyzing the interaction between students and their tutees. By looking at the dialogue we hoped to find interaction patterns that could explain why a digital tutee with low self-efficacy would be a better choice when designing pedagogical agents for educational software. The analysis was based on the dialogues between student and tutee that took place in a chat after a finished game. It was in this chat that the digital tutee expressed its self-efficacy (either high or low) when conversing with the student.

Our findings can be summarized as follows:

- (i) Students responded more frequently to feedback from a digital tutee with low self-efficacy, and these responses were mostly positive.

- (ii) Students gave a digital tutee with low self-efficacy more positive comments about its intelligence and competence than they gave a digital tutee with high self-efficacy.

- (iii) Students’ comments about the tutee’s attitude were almost exclusively given to the tutee with low self-efficacy. Most comments were positive, either expressing that the tutee and/or the student was doing well or offering encouragement. There were, however, also some comments that expressed frustration regarding the tutee’s low opinion of itself.

- (iv) Students with low self-efficacy who expressed a more positive attitude towards their digital tutee, in the sense of providing more positive comments on the digital tutee’s intelligence and competence, also performed better when they answered the digital tutee’s multiple-choice questions. However, they did not play better in the sense of choosing more appropriate cards (i.e., goodness value). For students with high self-efficacy we found another pattern, namely a relation between how well they played and how well they answered the questions asked by the digital tutee.

Below, we discuss how these findings can be understood in the light of the following three constructs: the protégé effect, role modelling, and the importance of social relations. We know from previous studies that the protégé effect is one of the underlying factors that make students who teach someone else (for example a digital tutee) learn more and be more motivated compared to students who learn for themselves [30]. That is, having someone who depends on you in order to learn, and for whom you are responsible, seems to lead to increased effort. From our analysis, we see that students responded more frequently and more positively (finding (i)) to a tutee with low self-efficacy than to one with high self-efficacy. Many students tried to encourage a tutee with low self-efficacy when they, for example, commented on its attitude, saying things like “Tell yourself that you can win” or “Don’t worry, you can do it!” The tutee with low self-efficacy also received fewer negative comments on its intelligence and competence than the tutee with high self-efficacy (finding (ii)).

Possibly, students treat a digital tutee with low self-efficacy in a more positive manner, in that they respond more frequently and more positively to its comments, because such a tutee comes across as more in need of help and more subordinate than a digital tutee with high self-efficacy. The experience of having a protégé to care for and to support might be especially relevant for students with low self-efficacy, in this case low self-efficacy in mathematics. These students will, more often than students with high self-efficacy in math, lack the experience of teaching someone else. Students with high self-efficacy are more likely to have already taken a teacher role in regular classes and assisted or supported a less knowledgeable and/or less confident peer.

Based on the findings of [41], that a person’s self-efficacy may be influenced by observing someone else perform a particular task, one might have expected that a digital tutee with high self-efficacy would function as a role model and thus boost the students’ self-efficacy. Seeing someone else do something may prompt the thought: “if (s)he can do it, so can I”. But in our analyses, we found only three instances of comments where the students agreed with the digital tutee when it expressed high self-efficacy, saying for example “It’s good that you are confident, it will go well”, and two of these comments came from students who themselves had high self-efficacy. Instead, a kind of reversed role modelling may be going on, in which the student can be a role model for the tutee with low self-efficacy. In our analysis, we found that when the digital tutee expressed a very negative attitude, some of the students were positive and encouraged it with wordings such as “You shouldn’t be worried, you will make it” or “I know… but you are pretty good, you just have to think positive” (finding (iii)). We did not find any comments where the student agreed with the tutee with low self-efficacy and expressed his or her own low self-efficacy. One can speculate that this is a result of the reversed roles that come with teachable agents and learning by teaching, where the student takes the role of the teacher and the agent acts as the tutee.

Finally, we turn to the importance of the social relation with the tutee. Sjödén and colleagues [31] have previously shown that the presence of a digital tutee can have a positive impact on low-performing students. Students with low self-efficacy are not the same as low-performing students, but the two groups often overlap. Looking at our analysis, we found that for students with high self-efficacy, their performance (i.e., goodness value) correlated with how well they answered the digital tutee’s multiple-choice questions. This correlation is not surprising, since someone who answers the questions correctly is likely to be good at choosing good cards. What is interesting, however, is that we did not find this correlation for students with low self-efficacy. Instead, we found a correlation between how well they answered the digital tutee’s multiple-choice questions and the extent to which they gave positive comments regarding their tutee’s competence and intelligence. It is possible that when students formed a social relationship with their digital tutee in the chat by encouraging it, this affected the students’ engagement and performance in the interaction with the agent outside the chat. If students feel that they are the more knowledgeable party, this might fuel their will to invest more in the learning, since they act as the role model. For students with low self-efficacy this is presumably a situation they are less used to than students with high self-efficacy, and they may therefore react more strongly to it.

Limitations

Even though the digital tutee was able to talk about a wide array of topics, its abilities were limited. Some of the students appeared somewhat frustrated at points when the digital tutee could not answer the questions they asked. This may have led to more negative comments and more frustration than would otherwise have been the case.

The research questions focused on agents and students at the extreme ends of the self-efficacy scale. The digital tutee was designed to have either clearly high or clearly low self-efficacy, and the analysis was restricted to the students with the highest and lowest self-efficacy scores, with mid self-efficacy students excluded. Another way to perform the study would be to treat self-efficacy as a continuous metric and investigate whether there are linear relationships between the students’ self-efficacy and other variables. The choice not to do so was partly based on limited resources for coding the chat dialogues.
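The continuous alternative described above could be sketched as follows. This is a minimal illustration and not part of the study: the function `pearson_r`, the variable names, and all values are hypothetical and invented purely to show the computation.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Invented example values: one self-efficacy score and one outcome measure
# per student, with all students included rather than only the extremes.
self_efficacy = [1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
outcome = [0.40, 0.55, 0.50, 0.60, 0.65, 0.62, 0.80]
print(f"r = {pearson_r(self_efficacy, outcome):.2f}")
```

Such an analysis would use every student's score instead of only the highest and lowest groups, at the cost of coding the chat dialogues for all students.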