Abstract

Even though there is a plethora of research that educators can use to inform their practice, the deep implementation of evidence-based strategies remains unrealized in many schools and classrooms. The question we set out to answer was: What conditions help encourage educators to implement and adapt evidence from the Visible Learning synthesis when they encounter it? We examined two examples of the reception of the Visible Learning research in schools and identified six key conditions that helped foster the translation of the Visible Learning research into classroom practice in ways that demonstrated measurable impact on student learning: (1) the presence of a learning methodology; (2) clear examples of how to apply the strategies; (3) a ‘knowledgeable other’ to assist educators in processing the research; (4) a supportive organizational environment; (5) the recognition of educators as agents of influence; and (6) the monitoring and adjustment of implementation strategies.

1. Introduction

In 2009, Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Student Achievement (Hattie, 2009) [1] was published. In this book, Professor John Hattie synthesized major findings from over 800 meta-analyses that investigated factors that influence student achievement. Hattie demonstrated the magnitude and overall distribution of thousands of effect sizes so that practitioners could compare influences in a meaningful way and use evidence to build and defend a model for teaching and learning. His major purpose was to “generate a model of successful teaching and learning based on the many thousands of studies” [1] (p. 237).

While Hattie’s is the largest synthesis of factors that influence student achievement to date, it is not the first. Since Glass (1976) [2] introduced meta-analysis, meta-analyses and effect sizes have enabled researchers and educators to quantify, interpret, and compare the overall effects of various factors. An effect size expresses the magnitude of an influence on a common scale so that different approaches can be compared. The larger the effect size, the more powerful the influence. In education, an effect size of 0 indicates that the influence had no effect on student achievement. The Visible Learning synthesis identified factors that are likely to have a negative impact on student achievement (e.g., boredom, expulsion), factors that are likely to have a small positive impact (e.g., one-to-one laptops, background music), and factors that have the potential to accelerate (e.g., problem-based learning) or considerably accelerate (e.g., deliberate practice, feedback, teacher clarity) student achievement. Since the original synthesis was published in 2009, Hattie has continued to update the database, which now includes over 1600 meta-analyses, 95,000 studies, and 275,000+ effect sizes.
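The paragraph above describes effect sizes without giving a formula. One common effect size for comparing two groups is the standardized mean difference (Cohen's d): the difference between the treatment and control means divided by the pooled standard deviation. The sketch below assumes two independent groups of scores; the function name `cohens_d` and the sample scores are hypothetical, and because the meta-analyses aggregated in the Visible Learning synthesis computed effect sizes in a variety of ways, this illustrates the idea rather than the synthesis's own procedure.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference between two independent groups.

    Divides the difference in group means by the pooled sample standard
    deviation (each group's variance weighted by its degrees of freedom).
    """
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


if __name__ == "__main__":
    # Hypothetical test scores, for illustration only.
    treatment_scores = [2, 4, 6]
    control_scores = [1, 3, 5]
    print(cohens_d(treatment_scores, control_scores))  # prints 0.5
```

With these hypothetical scores, the treatment mean is one point higher and the pooled standard deviation is 2, giving d = 0.5. Identical groups give d = 0, which matches the interpretation of a zero effect size in the text, and a negative value means the treatment group did worse.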

Unfortunately, even though there is an abundance of research that teachers can use to inform their practice, achieving quality implementation of evidence-based strategies remains problematic in education. The issue is not improving the supply of research but rather identifying the key features of research, and the conditions in schools, that help teachers use research in meaningful ways to inform pedagogy. Reviews of the literature on educators’ use of research to improve professional practice show that such use is low and infrequent [3,4,5,6]. Teachers rarely use the results of meta-analyses to inform what goes on in their classrooms. It is therefore important to determine the conditions that facilitate teachers’ engagement with research in ways that translate into meaningful changes in their classroom practice. As Hattie (2009) [1] noted, “the practice of teaching has changed little over the past century” [1] (p. 5).

In this paper, we present two examples where school districts used evidence from the Visible Learning synthesis to inform their practice and examine the conditions that facilitated their progress throughout the process. The question we set out to answer was: What conditions help to encourage educators to implement and adapt evidence from the Visible Learning synthesis as they encounter it?

2. Misconceptions and Missed Opportunities

Visible Learning has received much attention since its publication and, like many other multifaceted concepts in education, there are times when it has been misinterpreted and/or some of the major messages misunderstood. For example, the influences were often thought of as a checklist and school districts claimed they were ‘doing’ Visible Learning if they had incorporated the top 10 influences into their strategic improvement plans. There were also examples where school districts self-imposed labels such as “We are a Visible Learning District” when they had merely participated in a book study or engaged their teachers in a one-shot professional development session. When the messages from the Visible Learning synthesis are oversimplified, the resulting application of the research becomes superficial.

Hattie (2009) [1] noted that his aim was to provide an “explanatory story, not a ‘what works’ recipe” (p. 3), and a major part of this “explanatory story” includes the provision of “a method to evaluate the relative efficacy of different influences that teachers use” (p. 6). What will make a difference is figuring out how to develop the influences with the greatest potential to positively impact student achievement so that they work in schools and classrooms. Unfortunately, in many cases, deep implementation of the factors outlined in the Visible Learning research remains unrealized. What is largely missing is a learning methodology that helps teachers reach deep levels of implementation and examine evidence about the successes, or otherwise, of their teaching.

This is a core message in Visible Learning that has received little attention so far. Classroom contexts are diverse, and what is needed is attention to the process through which evidence-based strategies are realized in practice (Donohoo & Katz, in press) [7]. As noted earlier, it is important to determine the conditions that facilitate teachers’ engagement with research in ways that translate into meaningful changes in their classroom practice and result in gains in student achievement. Hattie (2009) [1] noted that “a major theme is when teachers meet to discuss, evaluate, and plan their teaching in light of the feedback evidence” (p. 239). In this paper, we share two examples in which teams of teachers engaged in a process of trying and testing, in their own practice, influences from the Visible Learning research that were highly likely to improve student achievement. In both examples, the process resulted in critical reflection in light of evidence, deep levels of implementation, and gains in student achievement.

3. Schools of Implementation

We examined two examples of the reception of the Visible Learning research in practice and identified the key conditions that helped foster its translation into practice in ways that demonstrated measurable impact on student learning. The two sites were selected because they had attained deep implementation, had demonstrated measurable impact on student achievement, and were accessible to the authors of this paper. Below, we describe the two sites where implementation took place, the student learning focus, the professional learning methodology, and the resulting student outcomes.

4. Abu Dhabi, United Arab Emirates

One example took place in a school community in Abu Dhabi during the 2017–2018 school year. The school population is approximately 2000 students from 86 nationalities attending kindergarten through 12th grade. The school, founded in 2008, employs 169 teachers and 52 classroom assistants who are mainly recruited from the United Kingdom. A British curriculum is offered for students who range in age from 3 years to 18 years, and the school is organized into multiple classes per year group, from Foundation through Post-16 qualifications.

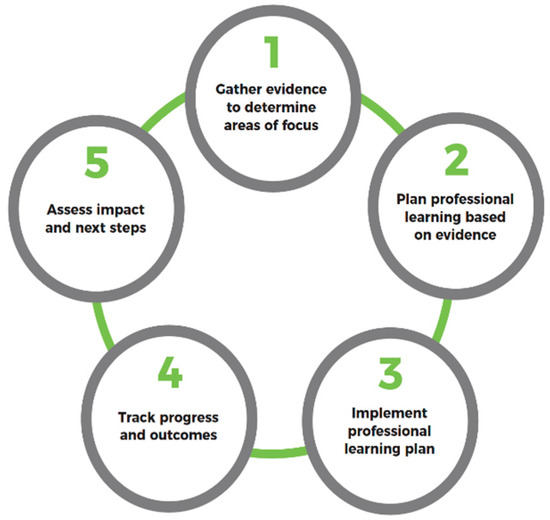

Prior to introducing the Visible Learning research to the entire faculty, a leadership team was identified. This team consisted of both administrators and teacher leaders and is subsequently referred to as the Visible Learning Leadership Team (VLLT). They engaged in professional learning in order to determine a process for evidence-informed decision-making for the entire faculty. The team decided that inquiry-based professional learning cycles (in the Visible Learning literature, this process is referred to as an Impact Cycle) were an approach they would use to help the faculty deliberately implement evidence-based strategies in their practice. Figure 1 illustrates the five stages of a Visible Learning Impact Cycle.

Figure 1. Five Stages of a Visible Learning Impact Cycle.

The school’s focus was on applying effective feedback across the secondary division and developing learner agency in the primary division. Professional resources included an Action Research Teaching and Learning Guide produced by teachers for teachers. Its purpose was to deepen educators’ understanding of effective methods for gathering evidence and evaluating their own classroom practice. Other resources included videos of students using learning intentions and success criteria to provide peer feedback, of students talking about learning dispositions and about the difference between achievement and progress, and of teacher-to-student and student-to-teacher feedback.

Professional learning included several full-day sessions for leaders and the entire school faculty delivered by an external educational consultant. In addition, school leaders led ongoing professional reading and teacher team learning. During different iterations of Impact Cycles, the focus areas from the Visible Learning research included:

- Using learning intentions and success criteria effectively;

- Students’ use of learning intentions and success criteria in setting challenge expectations and goals for themselves;

- Using assessments to track progress and student use of assessment data;

- Using effective feedback and engaging with the three feedback questions (Where am I going? How am I doing? Where to next?).

While teams had an overarching focus for their collaborative Impact Cycle, for example, ‘What does it mean to be in the Learning Pit?’ (Nottingham, 2017) [8], each teacher also selected an individual aspect for their own Impact Cycle. This allowed some autonomy within each area that was identified for deeper implementation. The emphasis was on teacher learning and risk taking.

Implementation of influences from the Visible Learning database by teachers in classrooms was observed and documented by the VLLT during the 2017–2018 school year. Additional sources of evidence of implementation included visual displays representing ‘the language of learning’ and student work samples in department areas and corridors. Artifacts of student learning demonstrated that students were continually referring to the success criteria and tracking their own progress toward the learning intentions. Every six to eight weeks, teacher teams gathered data regarding students’ progress and analyzed it to determine their impact and adapt their instruction accordingly.

As noted earlier, increasing student agency and providing effective feedback were two focus areas in the school in Abu Dhabi. Examples of increased student agency included samples of student-generated questions and investigations, along with concept maps that students used to track their new learning in science classrooms. Teachers noted that accessing the student voice was a significant factor related to implementation and described how they were partnering with students to design learning experiences and to find ways to increase active participation. An example in mathematics included explicitly teaching students how to use teacher-created rubrics and targets, which led to students co-constructing levels of progress on blank rubrics. Teachers collaborated to develop progress maps so that students could record and track their progress against expected outcomes. In the languages department, a ‘Tour De France’ map was created in which each stage represented a curriculum outcome written in student-friendly language. Students consistently used the map as a tool to engage in peer and self-assessment.

During interviews, students described how they participated in assessing and discussing their work and listed a variety of strategies they had been using to self-monitor their progress toward the learning intention. Students also noted how the shift from “final grades” and summative assessment toward more formative techniques empowered them to take more ownership of their own learning. One student in Year 6 noted, “Mistakes are part of learning. It’s okay. It kind of gives you opportunities and hope.” Another student in Year 5 noted, “You can’t always have success in everything you do. Visible learning helps you meet challenges and get through doubt. You get a real feeling of accomplishment.”

5. Ontario, Canada

A second example took place in a medium-sized school district in Ontario, Canada, over the course of three years (2015–2018). It involved approximately 125 classroom teachers (Grades 7–10, representing a variety of disciplines), approximately 20 school and/or system administrators, and 10 system support personnel (professional learning facilitators). Cross-panel teams were organized based on inclusion in a ‘family of schools’ (determined by geographic location). The student learning focus over the course of the project was topic development in writing, which included the skills of developing and organizing ideas and information.

Professional learning included a combination of whole group sessions and team sessions that were supported by the district’s professional learning facilitators (PLFs), who were assigned to particular schools. Whole group sessions were co-planned by the PLFs and a 3-person team (including two of the authors of this paper) who worked as Provincial Literacy Leads. The professional learning sessions involved supporting teachers, principals, and professional learning facilitators in building capacity to improve student achievement by learning about and implementing the following influences from the Visible Learning research:

- Using clear learning intentions and success criteria to support students in developing main ideas with sufficient supporting details and organizing information and ideas in a coherent manner (i.e., topic development);

- Using worked examples as a resource for learning for educators and for students;

- Using feedback strategically (e.g., using a variety of methods of feedback and targeting feedback to students’ instructional level).

Like the teachers in Abu Dhabi, the teachers in the school district in Ontario, Canada took part in Impact Cycles (in Ontario, this process is referred to as Collaborative Inquiry). During the course of the project, teachers engaged in a cyclical, iterative process, which involved gathering evidence of student learning; clarifying a focus area, goals, and learning needs; trying and testing specific influences from the Visible Learning research in classrooms; collaboratively analyzing student work by using a protocol; and evaluating the impact of the changes in practice on student achievement.

Implementation was not immediate; it developed gradually with each iterative cycle. Evidence of implementation of evidence-based strategies included PLFs’ observations in the field, samples of teachers’ feedback to students over time, teachers’ self-reports on exit cards, and samples of students’ early and later work (i.e., student writing evolving over time).

One consistent and regular part of the professional learning was analyzing student work to assess students’ strengths and needs in order to collaboratively plan next steps for learning. Each teacher focused on a small group of ‘marker students’: students whose topic development writing skills raised concerns for teachers, who performed below expectation on standardized tests, and/or who presented other challenges related to literacy learning. Teams then examined marker students’ work over time, comparing early samples with later samples to determine whether students were making progress toward the targeted learning intentions and success criteria. Teachers were able to identify specific areas for improvement and respond accordingly. For example, when teachers determined from their assessment that some students provided insufficient supporting details for a topic, they used a set of worked examples that ranged in proficiency (e.g., from little or no supporting detail to many connected and relevant supporting details) to help students self-assess their own writing against the worked examples. Once students had answered ‘How am I doing?’, teachers gave additional support through instruction and feedback (e.g., modeling how to add supporting details such as examples, statistics, analogies, and/or explanations). Results demonstrated that more than 70% of the marker students made significant gains in achievement.

In addition, over time, teachers’ descriptions of student work came to focus on the articulated learning intentions and success criteria, whereas their earlier descriptions had addressed aspects of writing unrelated to them (e.g., aspects not directly related to topic development, such as the number of sentences in a paragraph, spelling, and grammar).

Next, we present the key conditions from these two examples that helped foster the translation of the Visible Learning research into practice.

6. Six Supporting Conditions

In this section, we present six conditions that facilitated teachers’ engagement with research in ways that translated into meaningful changes in their classroom practice and increases in student achievement. We also share anecdotal evidence from the school districts (in the examples highlighted above) where we have worked. We are not suggesting that this is an empirical investigation or even a collective case study, but rather our interpretation of the enabling conditions in which measurable gains in student achievement were realized through deep implementation of some of the key influences in the Visible Learning database.

6.1. The Presence of a Learning Methodology

In these examples, teams engaged in a clearly laid out professional learning methodology. The Impact Cycle (also known as Collaborative Inquiry or Collaborative Action Research) enabled teams to carefully consider evidence-based strategies from the Visible Learning research, apply and refine them in their practice, and examine the impact of their changed actions on student learning.

The first step in the Impact Cycle is to gather evidence to determine areas of focus. This enabled the teams to conduct a deep diagnosis and gather baseline information. What is important to note is that, through this formal process (whether we refer to it as an Impact Cycle or Collaborative Inquiry), teachers’ reflections were grounded in evidence and not based on guesses, hunches, or insufficient proof. In our experience, when critical analysis of student work, grounded in a clear understanding of the learning intentions and success criteria, is not part of the process, educators often talk in generalities about student outcomes (e.g., “The students were much more engaged. I think it made a difference”). This happened early in both cases, but, over a short period of time, teachers’ reflections shifted in light of the evidence because they continued to collaboratively examine sources of student learning data as part of the professional learning cycle.

“Publicly seeking evidence of positive effects on student learning does not happen serendipitously or by accident” (Donohoo, Hattie, & Eells, 2018) [9] (p. 44). Teams needed a clearly laid out process in order to examine evidence of their impact on student achievement. Protocols (e.g., see Table A1 ‘Protocol for Analyzing Student Work’) that were incorporated into the process also provided safety for risk-taking. The process helped teachers to orient their work around outcomes and focused them on questions such as: “Did the students gain the essential skills and understandings? How do we know? How can we use evidence of student learning to improve classroom instruction?”

6.2. Teachers Needed Examples of How to Apply Strategies in Order to Integrate Them into Their Practice

After learning more about what makes a particular influence most impactful, teachers needed examples that were in line with the student learning intentions and success criteria in order to integrate them into their practice. This was instrumental in helping keep the work targeted and prevent the selection of superficial strategies in response to student learning needs. Teachers also needed multiple opportunities to see the strategies modeled before they were ready to apply the influences in their practice.

For example, in Ontario, when learning about the three feedback questions (i.e., Where am I going? How am I doing? and Where to next?) and the levels of feedback (i.e., task, process, and self-regulation), teachers were provided multiple opportunities to learn more about Hattie and Timperley’s (2007) [10] model of what makes feedback more effective. Through direct instruction, teachers were better able to understand the differences between task-, process-, and self-regulation-level feedback and self-level feedback (e.g., praise). Teachers were also provided with examples of feedback statements and coded the feedback according to the levels. In this gradual release of responsibility approach, the teachers eventually crafted feedback based on samples of student work they brought. By the end of the third year, many teachers indicated that their next step was to put additional strategies into place for peer-to-peer feedback. Teachers’ consistent use of learning intentions and success criteria helped them better understand how both could be used by students to self- and peer-assess and, therefore, teachers’ readiness to engage students in self- and peer-assessment increased.

In the Abu Dhabi example, the VLLT members were responsible for first implementing the strategies in their own classrooms and also provided evidence from other sources of how the process was implemented. Lesson plans and classroom observations were continually shared and made available to all staff. A virtual space was also set up for collaboration, and the original Action Research magazine included the initial Impact Cycles carried out by the team as a reference for others. These models were important in helping teachers integrate strategies into their own classrooms.

6.3. Teachers Needed a Conduit for the Educational Research

In both projects, teachers were introduced to the Visible Learning research via a conduit. An overview of the Visible Learning research was provided along with an explanation of effect sizes and meta-analyses. Particular factors were selected and used as opportunities to demonstrate the need to uncover the untold stories behind the effect sizes. Homework is a good example of an influence that needs to be ‘unpacked’ for an audience because it is affected by moderating variables. A moderator is a third variable that affects the strength of the relationship between the independent variable (e.g., homework) and the dependent variable (e.g., student achievement). The Visible Learning research demonstrated that homework has a higher effect size in high schools than it does in the primary grades. When the overall effect size of homework is taken at face value, this difference is hidden; only when the effect is disaggregated by grade level does it become clear that homework is more powerful at the high school level than the overall figure suggests. Teachers needed a knowledgeable other to help them understand this and to engage them in learning more about the “stories” behind the influences they selected to use in their practice.
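The homework example can be made concrete with a small, hypothetical moderator analysis: group effect sizes by a moderating variable (here, grade band) and compare each subgroup mean with the overall mean. The effect sizes below are invented for illustration and are not values from the Visible Learning database, and a simple unweighted mean stands in for the sample-size or inverse-variance weighting a real meta-analysis would use; the names `STUDIES`, `overall_effect`, and `effect_by_moderator` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (grade_band, effect_size) pairs for illustration only;
# these are NOT values from the Visible Learning database.
STUDIES = [
    ("primary", 0.10),
    ("primary", 0.20),
    ("high school", 0.60),
    ("high school", 0.70),
]


def overall_effect(records):
    """Unweighted mean effect size across all studies (moderator ignored)."""
    return mean(d for _, d in records)


def effect_by_moderator(records):
    """Unweighted mean effect size within each level of the moderator."""
    groups = defaultdict(list)
    for band, d in records:
        groups[band].append(d)
    return {band: mean(ds) for band, ds in groups.items()}


if __name__ == "__main__":
    print(f"overall: {overall_effect(STUDIES):.2f}")
    for band, d in effect_by_moderator(STUDIES).items():
        print(f"{band}: {d:.2f}")
```

With these invented values, the overall mean (about 0.40) sits between the primary (about 0.15) and high school (about 0.65) subgroup means, so reading the aggregate at face value understates the effect in one group and overstates it in the other.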

In both examples, relevant research was summarized for teachers and they were engaged in identifying and discussing key findings. The knowledgeable other consistently communicated information about the strength of the evidence for the influence in the Visible Learning database (e.g., feedback has an effect size of 0.70) to the teachers and helped them understand what that meant concerning various factors’ potential to accelerate student learning or not.

6.4. The Organizational Environments Were Supportive

The organizational environments supported learning. Not only were teachers learning, but administrators were learning alongside them as part of the supportive environment. Robinson, Hohepa, and Lloyd’s (2009) [11] meta-analysis examined the impact of school leadership on student outcomes and identified ‘promoting and participating in teacher learning and development’ as the most effective leadership practice, with an effect size of 0.84. Teams were invited to ‘lean on each other’ and to share responsibility for student outcomes as they pursued the work together. Opportunities for staff collaboration were deliberately scheduled.

In the Ontario example, although classroom teachers brought student work to the team and largely implemented the instruction to support students, they drew on the contributions of team members who co-analyzed and co-assessed the student work and then co-planned next steps to support students based on the needs identified. Teams included educators from a variety of roles, including classroom teachers, teachers in supporting roles outside the classroom (e.g., special education), administrators (principals and vice-principals), and PLFs from the central office who were assigned to each team. The professional learning was designed so that all team members built their capacity around common learning (i.e., using learning intentions and success criteria, using student assessment data to track progress, and using feedback strategically), but it was also designed to draw on the unique ways each role contributed to and influenced the work. In particular, school principals, in addition to learning alongside other members of the team (e.g., co-assessing student work), leveraged their role to support and influence shifts in individual and collaborative team practice. For example, principals helped to validate and support teachers within teams who were ‘slowing down their practice’ in order to spend more time giving feedback, even though a more deliberate feedback practice may have differed from other practices and the culture within the school.

In the Abu Dhabi example, there was an emphasis and expectation that each teacher would have an ‘impact partner’ and dedicated departmental time to ‘catch up on how it is going’ and discuss results. The Heads of the Department noted a shift in the professional discussions in the sense that they had “a much greater focus on learning rather than teaching” and, as a result, they reported “feeling more like a team.”

6.5. It Was Important for Educators to See Themselves as Agents of Influence

Educators saw themselves as agents of influence. This condition helped encourage educators to implement and adapt influences from the Visible Learning research through motivational mechanisms. Attribution theory plays a key role in understanding this relationship. When teams recognized small successes early during the process and attributed student progress to factors that were within their realm of influence (e.g., actions of the team and individual teachers’ changes in practice), they were motivated to continue. The recognition of mastery experiences unfolded as teachers were encouraged to share their learning and successes with peers through the Impact Cycle.

What was important to note in the Ontario example is that, early on, teachers frequently made external attributions for students’ successes and/or lack of progress (e.g., students did not attend classes regularly, parents did not hold their children accountable for completing their homework). Later in the project, when teachers were asked to account for students’ successes or otherwise, they overwhelmingly attributed results to their deliberate teaching practices, including the use of learning intentions, success criteria, and feedback, as opposed to factors outside their influence (e.g., student absenteeism, lack of classroom resources). One teacher noted, “I think the reasons for students’ improvement is that I went back to the success criteria and students used it twice to reassess work.” Most of the actions that teachers credited with making a difference in student learning by the third year had been a focus of the professional learning during this project. Even in the few cases where teachers observed a lack of student progress, attributions in the third year of the project were still based on teachers’ actions (e.g., removing scaffolds too soon, lack of teacher clarity).

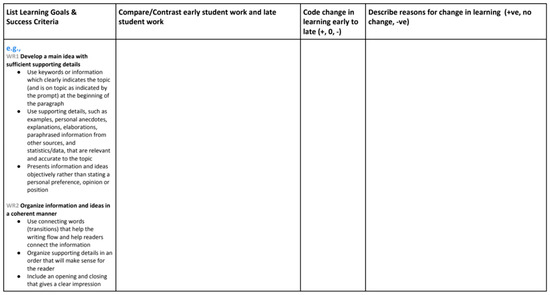

In addition to engagement in the Impact Cycle, what helped teachers make explicit links between their actions and student results was the documentation of their teaching practices. Documentation supported a deliberate focus on reflecting on learning in light of evidence, along with an expectation that this learning would be openly shared and celebrated, and it allowed teachers to make connections between what they did and the results they observed in their students. In Ontario, an organizer (see Figure A1 ‘Learning and Practice Documentation Chart’) was used to help make these connections more explicit.

In the school in Abu Dhabi, the faculty described their shift toward seeking student voice as evidence of impact and toward a much more collaborative approach to change. One team from the primary division described how they went through several versions of a self-assessment/peer feedback sheet as they discussed the effectiveness of the rubric provided. One staff member noted that students acknowledged their increased voice in decisions when a student asked, “Did you actually change this feedback sheet because of what I said?” During interviews, students described how they now felt “less pressured to get everything right, or get all ‘As’ as they now understood the process of learning.”

One member of the VLLT explained how there was a sense of collegial competition among the departments when the evidence of student voice and visual displays put pressure on each group “to not be seen as being left behind. Staff began to ask for more time to collaborate and see colleagues teach or share examples of new practices being implemented.” It is likely that this social persuasion and these opportunities for vicarious experiences led to an increase in teacher efficacy. The VLLT described the enthusiasm and change that had occurred over the previous 12 months.

“The biggest thing for me is this culture of learning… what has been made explicit is the whole ethos… we are now all openly talking about learning rather than getting through the day to day procedures… We are evaluating, adapting it and changing it and even though these things were happening, you were on your own. Now we are sitting in meetings talking about it and sharing it. I think the benefit to students is massive.” (Year 6 Teacher and member of VLLT)

6.6. The Schools Monitored and Adjusted Their Implementation Strategy

In both examples, teams monitored and adjusted their implementation strategy in light of implementation evidence collected throughout the projects. In addition, both the VLLP team in Abu Dhabi and the planning team in Ontario recognized the importance of celebrations and acknowledgments as part of their strategic implementation plan.

In the Ontario example, the planning team created a theory-of-action (Argyris & Schön, 1974) [12] in the first year in order to make their mental maps explicit. The team continuously revisited their theory-of-action when examining the implementation evidence to ensure that their theory-in-use aligned with the theory they espoused. In the second year, the team created a list of success criteria for the educator participants. Three of these criteria are listed below.

We know we are successful when:

- We can determine feedback that will help students close the gap between their current understanding and the learning intentions;
- Feedback is acted upon by students and teachers (e.g., students have received, understood, and used the feedback provided to improve their ability to develop main ideas with sufficient supporting details; teachers have used feedback from students to adjust their instruction);
- We can track student progress in relation to teacher practice in order to determine our impact on student outcomes.

After large group sessions, the planning team examined the evidence of whether each success criterion had been met and determined next steps accordingly.

In the Abu Dhabi example, additional resources were developed based on an assessment of how to strengthen implementation. The school’s ‘teacher researchers team’ self-published a Teaching and Learning Magazine to support staff and keep them up to date with current knowledge and effective practices. Two recent publications included an ‘Action Research Edition’ and ‘A Guide to the Impact Cycle Process.’ A second edition was published after the first round of Impact Cycles was completed, as a means of celebrating and sharing the achievements of teacher learning. This edition included Visible Learning Recaps, Visible Learning Impact Cycles, and action research undertaken in formal collaboration with a university.

7. Conclusions

Hattie’s (2009) [1] synthesis represents the largest collection of evidence-based research regarding what matters in schools for raising student achievement. As we approach the 10th anniversary of the original publication, deep implementation of the influences most likely to impact student achievement remains largely unrealized in many schools and districts. Knowing what works and making it work (so that increases in student achievement are realized) are two different things. The question we set out to answer, based on our long-term work in school districts, was: What conditions helped encourage educators to implement and adapt evidence from the Visible Learning synthesis as they encountered it?

In this paper, we shared two examples of the reception of the Visible Learning research in schools. In doing so, we identified six key conditions that helped foster the translation of the Visible Learning research into practice in ways that demonstrated a measurable impact on student learning. As Hattie (2009) [1] noted (citing Dewey), “Evidence does not provide us with rules for action but only with hypotheses for intelligent problem solving and for making inquiries about our ends of education” (p. 247).

One condition that was key to implementing evidence-based practices and realizing collective impact was engagement in a professional learning methodology (Donohoo & Katz, in press) [7]. By participating in Impact Cycles (a form of Collaborative Inquiry), educators in these two examples inquired into best practices related to an identified student learning need, applied new approaches, reflected on the impact in light of the evidence, and made adjustments accordingly. The initial deep diagnosis that occurred when teams gathered evidence to determine an area of focus (the first stage in the cycle) was an important precursor for determining whether or not changes impacted student learning.

In addition to the presence of a learning methodology, teachers needed examples of how to apply strategies in order to use them effectively in their classrooms. In both cases, multiple examples were provided throughout the course of the implementation strategy. Revisiting the examples kept the focus on the selected strategies, and this focus enabled educators to understand how to infuse them into their everyday instruction.

In these two cases, it was essential to help educators make the link between their collective actions and the resulting student outcomes. Teachers came to see themselves as agents of influence. The Impact Cycle helped teams make sense of their actions by examining evidence regarding students’ experiences in schools. In doing so, teachers were able to orient their work around student outcomes and gain a better understanding of the potential impact of their collective actions. Teachers also needed a knowledgeable other to act as a conduit for the research, helping them better understand meta-analyses, effect sizes, and the implications for teaching and learning.

Another enabling condition for implementation was a supportive organizational environment. Both locations promoted and supported teachers working together to solve the dilemmas of teaching and learning. The supportive environment enabled teachers to deliberate over the challenges they faced in relation to the identified student learning need, examine their current practices, and determine how to best implement the evidence-based practices in order to improve student outcomes. Common understandings regarding effective practice were built collaboratively as a result. Lastly, the schools monitored and adjusted their implementation strategy throughout the project. Monitoring the implementation plan allowed the leadership teams to adjust their course of action based on successes and challenges identified by the educators.

These six conditions were instrumental in helping educators implement and adapt evidence from the Visible Learning synthesis when they encountered it. They also helped teachers realize that, through their collective efforts, they were making a difference.

Author Contributions

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Analyzing student work protocol.


| Time | Stage | Lead |
|---|---|---|
| 2 min. | | Facilitator |
| 3 min. | Introduction | Teacher |
| 4 min. | Description | Facilitator |
| 6 min. | Interpretation | Facilitator |
| 5 min. | Implications/Next Steps | Facilitator |
| 15 min. | | Team |
| 35 min. + 35 min. | | Team |
| 10 min. | Reflection | |

Total time approximately 2 h

Adapted from the following two resources:

- The Learning Conversations Protocol: http://www.edu.gov.on.ca/eng/literacynumeracy/inspire/research/learning_conversations.pdf
- Pedagogical Documentation: http://www.edu.gov.on.ca/eng/literacynumeracy/inspire/research/CBS_PedagogicalDocument.pdf

Figure A1.

Learning and Practice Documentation Chart developed by Brian Weishar.

References

1. Hattie, J. Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement; Routledge: New York, NY, USA, 2009.
2. Glass, G. Primary, secondary, and meta-analysis of research. Educ. Res. 1976, 5, 3–8.
3. Brown, J.; Sharp, C. The Use of Research to Improve Professional Practice: A Systematic Review of the Literature. Oxford Rev. Educ. 2003, 29, 449–470.
4. Cousins, J.; Walker, C. Predictors of educators’ valuing of systematic inquiry in schools. Can. J. Program Eval. 2000, 25, 52.
5. Lysenko, L.; Abrami, P.; Bernard, R.; Dagenais, C.; Janosz, M. Educational Research in Educational Practice: Predictors of Use. Can. J. Educ. 2014, 37, 1–26.
6. Williams, D.; Coles, L. Teachers’ Approaches to Finding and Using Research Evidence: An Information Literacy Perspective. Educ. Res. 2007, 49, 185–206.
7. Donohoo, J.; Katz, S. Achieving Quality Implementation in Schools: The Role of Collective Efficacy; Corwin: Thousand Oaks, CA, USA, 2018; in press.
8. Nottingham, J. The Learning Challenge: How to Guide Your Students through the Learning Pit to Achieve Deep Understanding; Corwin: Thousand Oaks, CA, USA, 2017.
9. Donohoo, J.; Hattie, J.; Eells, R. The power of collective efficacy. Educ. Leadersh. 2018, 75, 40–44.
10. Hattie, J.; Timperley, H. The power of feedback. Rev. Educ. Res. 2007, 77, 81–112.
11. Robinson, V.; Hohepa, M.; Lloyd, C. School Leadership and Student Outcomes: Identifying What Works and Why; Best Evidence Synthesis Iteration [BES]; Ministry of Education: Auckland, New Zealand, 2009.
12. Argyris, C.; Schön, D.A. Theory in Practice: Increasing Professional Effectiveness; Jossey-Bass: San Francisco, CA, USA, 1974.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).