Abstract

This study investigates how student teachers engaged with data science and machine learning (ML) through a collaborative, scenario-based project in a graduate-level online course, AI in STEAM Education. The study focuses on the pilot implementation of the module Responsible AI & Data Science: Ethics, Society, and Citizenship, developed within the EU-funded DataSETUP project. This module introduced student teachers to core data science and AI/ML concepts, with an emphasis on ethical reflection and societal impact. Drawing on qualitative artifacts from the pilot, the analysis applies a five-dimensional framework to examine participants’ thinking across the following dimensions of data engagement: (1) asking questions with data, (2) collecting, cleaning, and manipulating data, (3) modeling and interpreting, (4) critiquing data-based claims, and (5) reasoning about data epistemology. Findings show that student teachers demonstrated growing technical and ethical awareness and, in several cases, made spontaneous pedagogical connections, even though they were not prompted to consider classroom applications. A supplementary coding lens examined four aspects of this emerging pedagogical reasoning: instructional intent, curricular relevance, learning opportunities, and the role of the teacher. These findings highlight the value of integrating critically reflective, practice-based data science education into teacher preparation, supporting not only technical fluency but also ethical, civic, and pedagogical engagement with AI technologies.

1. Introduction

Artificial Intelligence (AI) and data science are no longer peripheral to everyday life; they are deeply embedded in the fabric of modern society, transforming how knowledge is produced, how decisions are made, and how individuals engage with institutions and systems. From recommendation algorithms and content moderation on social media to facial recognition in smartphones, predictive policing, and AI-assisted hiring, algorithmic decision-making increasingly shapes the choices people encounter in daily life (Huang & Qiao, 2024). AI systems are not merely technological tools; they function as powerful sociopolitical forces whose design and deployment are entangled with questions of equity, transparency, and educational agency (Baidoo-Anu & Ansah, 2023; Gould, 2021; Zhai et al., 2021). As AI capabilities advance and data generation accelerates, AI’s impact spans multiple sectors, including commerce, healthcare, public administration, and education. In the educational domain, AI drives a range of emerging practices such as personalized learning, predictive analytics, and data-informed policy development. AI-powered tools such as adaptive learning platforms, intelligent tutoring systems, and generative applications like ChatGPT are beginning to reshape teaching and learning. These developments make clear that preparing teachers for an AI-mediated world cannot be reduced to technical upskilling alone; it requires a conceptual framework that integrates technical fluency, ethical reflection, and pedagogical imagination into a coherent vision of teacher readiness.

Data science, broadly defined as an interdisciplinary field focused on extracting value and actionable insights from data (Wing, 2019), is central to AI’s transformative societal impact and its educational implications. In a world increasingly shaped by data-driven AI decision-making, the ability to collect or generate data, and to interpret and visualize it, has become a foundational technical skill (Biehler et al., 2018; Tanaka et al., 2022). However, technical proficiency alone is not sufficient, as AI systems also raise pressing ethical, civic, and social concerns. Biases in datasets, opaque algorithmic decision-making, and the commodification of personal data can reinforce existing inequalities and undermine accountability. In educational contexts, for instance, algorithmic processes may shape student trajectories and access to learning opportunities in ways that are neither transparent nor equitable. These challenges underscore the need for critical data literacy: a reflective, ethically grounded capacity to evaluate how data is produced, whose perspectives it privileges or excludes, and how algorithmic systems influence social and institutional dynamics (Bobrowicz et al., 2022; Gould, 2017, 2021).

Realizing the vision of data science literacy as both a technical and civic competency is hindered by the fact that many young people—including those preparing to become teachers—remain underprepared to engage critically and responsibly with data. Research consistently highlights persistent gaps in school graduates’ ability to interpret statistical information, evaluate the credibility of digital content, and detect misinformation in online environments (Konold et al., 2015; Wineburg et al., 2016; Zucker et al., 2020). These are not simply shortcomings in statistical or digital skills; they represent missed opportunities to develop the ethical awareness and critical thinking essential for navigating a datafied society.

Consequently, there is an urgent need to embed data science and responsible AI education across formal education systems—and especially in teacher education programs, where the next generation of teachers must be equipped to cultivate these literacies in others (Dunlap & Piro, 2016; Mandinach & Gummer, 2016). As both consumers and facilitators of technology-enhanced learning, teachers are uniquely positioned to influence how AI is understood, applied, and critically examined in classrooms and schools.

1.1. Preparing Youth for Responsible AI: The Educational Imperative

As AI and data science increasingly shape knowledge production, identity formation, and access to opportunity, education systems face a critical imperative: to prepare young people not only for participation in an AI-driven economy, but also for ethical, reflective, and informed engagement in a society mediated by algorithms. As noted above, AI systems are not inherently neutral; they reflect the social assumptions, value judgments, and historical inequities embedded in their training data, and they can replicate and even amplify existing social and cultural biases (Adiguzel et al., 2023; Baidoo-Anu & Ansah, 2023). When adopted without critical oversight, these systems can reinforce discrimination, obscure accountability, and legitimize surveillance. In response to these risks, educators and policymakers have called for the integration of responsible AI principles, such as fairness, transparency, and ethical reflection, into curricula and pedagogical design (Miao & Holmes, 2021, 2023; Vargas-Murillo et al., 2023).

Several frameworks have sought to articulate what such integration might involve. The revised Guidelines for Assessment and Instruction in Statistics Education (GAISE II) (Bargagliotti et al., 2020) emphasize statistical reasoning as central to preparing learners to interpret and make decisions with data. By contrast, the Charles A. Dana Center’s data science course framework (Charles A. Dana Center at The University of Texas at Austin, 2024) advances a broader civic orientation, highlighting how data science education should prepare learners to participate in democratic life, reason about equity, and interrogate social systems. While GAISE II offers important progressions in statistical reasoning, its narrower focus tends to overlook the ethical and sociopolitical dimensions emphasized by the Dana Center. Together, these frameworks illustrate both the strengths and the gaps in current approaches to AI and data science education: one emphasizes technical progression, the other civic purpose, yet neither fully bridges the two. This tension is particularly consequential for teacher education, where readiness requires student teachers to integrate AI and data science with civic, ethical, and pedagogical perspectives in their practice.

Meeting this challenge requires an integrated approach that brings technical proficiency, ethical reflection, and civic responsibility into dialogue with pedagogical practice. Rather than being treated as separate strands, AI ethics, data science literacy, and pedagogy must be woven together into a single educational vision. Scholars increasingly advocate for an expansive approach to AI and data science education, in which learners not only gain practical skills such as analyzing and visualizing data, but also develop the critical capacity to interrogate how data is collected, structured, and used in algorithmic systems (Bobrowicz et al., 2022; Wing, 2019). In this framing, data science literacy emerges as a core civic competency, essential for responsible participation in a society where decisions are increasingly influenced by opaque algorithms (Dove, 2022).

STEAM education, with its integration of science, technology, engineering, arts, and mathematics, offers a compelling framework for cultivating responsible AI and data science literacy in ways that are interdisciplinary, creative, and socially relevant. Through hands-on projects, such as building image or sound classifiers with accessible tools like Teachable Machine, Machine Learning for Kids, or Scratch, learners are introduced to core machine learning (ML) concepts, including data collection, labeling, model training, and algorithmic bias (Carney et al., 2020; Yue et al., 2022). These experiential learning opportunities not only build technical understanding but also open space for ethical reflection, for example by questioning why certain images are misclassified or whose perspectives are missing from training datasets. In doing so, students begin to see algorithmic systems not as neutral technologies, but as sociotechnical artifacts shaped by human choices and values. Such activities foster critical awareness and position AI and data science literacy as key components of digital citizenship, encouraging students to examine how automated systems affect equity, representation, and civic life (Makar et al., 2023). Importantly, this humanistic and inquiry-driven approach helps students move beyond being passive users of AI to becoming active questioners, makers, and socially aware participants in an algorithmically mediated world (Aguayo et al., 2023; Aguilera & Ortiz-Revilla, 2021).

In sum, the educational imperative is not only to prepare students to navigate AI-infused environments but to engage with them critically, ethically, and imaginatively. Responsible AI and data science education must support students in becoming informed questioners and active participants—equipped to understand, critique, and even shape the technologies that structure contemporary life. Realizing this vision, however, depends on the readiness of teachers, who must themselves embody this integration in their practice.

1.2. Teacher Readiness and the Need for Targeted Training

Preparing students for ethical and informed engagement with AI requires teachers who are themselves literate in AI and data science—not only in technical terms, but also in ethical, civic, and pedagogical dimensions. Building on the integrated vision outlined above, teacher readiness must therefore be understood as the ability to weave together technical fluency, critical reflection, and pedagogical imagination. Teachers must be equipped to critically evaluate data sources, identify biases in algorithmic systems, and facilitate student inquiry into the social implications of AI technologies. Without this foundation, there is a risk that teachers may adopt AI tools uncritically, reinforcing deterministic or techno-solutionist narratives that obscure deeper issues of bias, accountability, and equity (Dunlap & Piro, 2016; Mandinach & Gummer, 2016). As Roll and Wylie (2016) emphasize, teacher readiness must extend beyond basic tool fluency to include an understanding of how AI shapes assessment, knowledge production, and learner experience—both inside and outside the classroom. This redefinition of readiness goes beyond the technical focus of frameworks such as GAISE II (Bargagliotti et al., 2020) and beyond the civic emphasis of the Charles A. Dana Center framework (Charles A. Dana Center at The University of Texas at Austin, 2024), requiring their integration into a more holistic orientation for teacher education.

Embedding these critical perspectives into teacher education is essential. When student teachers learn to engage with AI and data science in ways that are both pedagogically sound and ethically reflective, they are better prepared to cultivate AI and data science literacy among their students and to contribute to a broader culture of critical, inclusive, and socially responsive engagement with AI in society (Miao & Holmes, 2023; Ridgway et al., 2022; Xu & Ouyang, 2022).

Yet, despite the growing societal relevance of AI and data science literacy, most teacher education programs have yet to integrate these competencies meaningfully into their curricula. Research shows that initial teacher education rarely addresses AI or data science in a systematic way, particularly when it comes to their ethical, civic, and instructional dimensions (Henderson & Corry, 2021; Olari & Romeike, 2021). As a result, many teachers enter the profession without the necessary conceptual grounding or pedagogical strategies to engage critically with these technologies or to support students in doing so.

Addressing this gap requires a twofold shift in teacher education. First, responsible AI and data science education must be embedded across teacher preparation programs. Student teachers must receive interdisciplinary training that links data-driven AI to ethics, equity, and civic life. Such an approach encourages teachers to engage with questions about how data is generated, whose perspectives are represented, and what social assumptions underlie algorithmic systems (Aguayo et al., 2023; Aguilera & Ortiz-Revilla, 2021; Ridgway et al., 2022; Xu & Ouyang, 2022).

Second, teacher education must include practice-oriented, reflective learning experiences that help teachers connect technical understanding with pedagogical application. Student teachers should have hands-on opportunities to interact with AI systems and data workflows—such as labeling datasets, training models, or evaluating algorithmic outcomes—while engaging in structured reflection on the ethical, pedagogical, and social implications of their use. As Jiang et al. (2022) show, teachers who engage with AI workflows themselves are better equipped to scaffold similar experiences for students. When combined with structured ethical reflection, these engagements support pedagogical strategies that reflect core educational values—such as fairness, transparency, and democratic participation—thereby fostering not only digital skills but also ethical dispositions and civic sensibilities (Miao & Holmes, 2021).

Critically, these practices must extend across the full lifecycle of data science—from data collection and cleaning to model evaluation and interpretation (Olari & Romeike, 2021). Developing this holistic understanding enables teachers to foster critical data literacy in their students: the capacity to interrogate data sources, recognize algorithmic bias, and evaluate the broader implications of data-driven decision-making (Gould, 2017; Ridgway et al., 2022).

Ultimately, teacher readiness in the era of AI must be redefined. It is not enough to prepare teachers to use AI tools—they must be equipped to critique and reimagine them in ways that advance equity, justice, and the common good. Introducing data science in teacher education as more than a technical skill set—as a framework for ethical agency, critical thinking, and active citizenship—better prepares teachers to cultivate reflective and inclusive learning environments for all students.

1.3. Context and Purpose of the Present Study

The present study is situated within the broader context of the EU-funded Erasmus+ project DataSETUP (November 2023–October 2026), which seeks to integrate data science education into university-level teacher preparation programs across Europe. Implemented by a multinational consortium of universities in Germany, Cyprus, Greece, Ireland, and Turkey, the project aligns with European priorities on digital transformation, civic engagement, and inclusive education. Adopting a design-based research (DBR) methodology (Cobb et al., 2003), DataSETUP iteratively designs, tests, and refines educational modules, digital tools, and assessment frameworks that position data science as both a technical and civic competency. In addition to data analysis and visualization, the project emphasizes the ethical, societal, and civic dimensions of data, with the goal of preparing student teachers to engage learners in critical, justice-oriented education. In this sense, DataSETUP directly responds to the redefined vision of teacher readiness outlined above, by combining technical fluency with ethical and civic perspectives.

As part of this initiative, the module Responsible AI & Data Science: Ethics, Society, and Citizenship was developed and pilot tested in a graduate-level STEAM education course at European University Cyprus during the Spring 2025 semester. The module introduces student teachers to core concepts in data science and ML while emphasizing ethical reflection and the societal implications of AI. Through hands-on engagement with accessible educational tools such as Google Teachable Machine and CODAP, participants explore technical processes alongside critical issues of bias, fairness, and educational relevance.

The study reported in this article focuses on a collaborative, scenario-based learning activity within the Responsible AI & Data Science: Ethics, Society, and Citizenship module. Specifically, it investigates how the creation and evaluation of an image classification model supported student teachers’ development across five dimensions: asking questions with data; collecting, cleaning, and manipulating data; modeling and interpreting; critiquing data-based claims; and reasoning about data epistemology. The study is guided by the following research questions:

- Which technical and ethical aspects of AI and data science emerged through student teachers’ design and evaluation of image classification models?

- What elements of the data science task appeared to encourage student teachers to make connections to school practice, and how were these connections framed?

2. Materials and Methods

2.1. Research Design

The DataSETUP project employs a Design-Based Research (DBR) approach (Cobb et al., 2003) to guide the iterative development, implementation, and refinement of Data Science Education modules for teacher education. This methodology is well-suited for investigating and advancing educational innovation in authentic contexts, as it supports collaboration between researchers and practitioners, continuous adaptation based on empirical feedback, and grounding in pedagogical theory. Informed by a shared conceptual framework, the modules are co-developed across partner institutions and are designed to support student teachers in STEAM fields in developing the technical, ethical, and pedagogical competencies needed to integrate data science into school education.

The project is structured around two iterative implementation cycles. The initial cycle, conducted in Spring 2025, piloted early versions of the modules in diverse teacher education settings across partner institutions. Feedback and evaluation data from this first cycle are currently being used to revise and improve the modules. A second cycle will be carried out during the 2025–2026 academic year (Fall 2025 and Spring 2026), allowing for further testing and refinement. This iterative DBR process ensures that the resulting materials are not only theoretically robust but also contextually responsive and practically usable. The final modules will be disseminated through the DataSETUP platform, with adaptations and translations enabling broader use across European teacher education programs.

2.2. Participants and Context

This study was conducted during the Spring 2025 semester within a newly developed graduate-level course, ‘AI in STEAM Education’, offered as part of the fully online MA in Technologies of Learning & Communication and STEAM Education at European University Cyprus. Delivered via the Blackboard learning platform, the course comprises thirteen modules designed to explore intersections between technology, pedagogy, and STEAM disciplines. Two of these modules (Responsible AI & Data Science: Ethics, Society, and Citizenship; and Integrating AI/ML-Related Data Science in STEAM Education to Foster Responsible and Active Citizenship) were developed under the EU-funded DataSETUP project. These two modules address complementary aspects of AI/ML-related data science education. The first introduces foundational concepts of data-driven AI/ML alongside ethical and societal considerations. The second builds on this foundation, guiding student teachers in the design of classroom activities that integrate data science and AI tools in STEAM education, with a focus on fostering civic engagement, critical thinking, and social responsibility. This study examines the initial pilot implementation of the first module in Spring 2025.

The course enrolled 36 student teachers, all of whom voluntarily participated in the study. The majority were female (n = 32; 88.9%), and approximately two-thirds were over the age of 30, with nearly half falling within the 31–40 age range. Participants brought diverse academic and professional backgrounds, with prior degrees in fields such as Early Childhood Education, Primary Education, Special and Inclusive Education, Language and Literature, Computer Science, and Engineering. Their teaching experience also varied: while most had taught for at least 1–2 years, about one-third reported more than five years of experience. Participants were evenly split between pre-primary/primary and secondary education levels.

Designed for this diverse cohort, the Responsible AI & Data Science: Ethics, Society, and Citizenship module invited student teachers to explore how data and algorithms shape automated decisions in ways that raise significant pedagogical and civic questions. The module combined instructor-led input with experiential, inquiry-based learning. It began by situating AI in both real-life and educational contexts, introducing key concepts such as data-driven decision-making, probabilistic modeling, and algorithmic logic. Activities like ‘ChatGPT in Action’ prompted participants to analyze AI-generated responses for accuracy, bias, and transparency, fostering early engagement with the capabilities and limitations of large language models (LLMs) and the assumptions they encode.

The instructional approach integrated the statistical investigation cycle PPDAC (Problem, Plan, Data, Analysis, Conclusion) and connected it to AI-related problem-solving processes. It emphasized how data science builds on and extends traditional statistical thinking, particularly by working with complex, often pre-existing datasets and by prioritizing practices such as modeling, iterative refinement, and ethical implementation. Through this framework, student teachers were introduced to critical issues such as algorithmic bias, data quality, model fairness, and the broader societal consequences of AI. These concepts were reinforced through hands-on, inquiry-based activities using user-friendly educational tools such as Google’s Teachable Machine, the Common Online Data Analysis Platform (CODAP), Machine Learning for Kids, and Code.org’s AI for Oceans.

Through these tools, participants explored key ML processes while critically examining how technical design choices affect outcomes. For instance, in building gesture-recognition games or engaging with Code.org’s AI for Oceans, student teachers considered how training data, its diversity, and contextual variability influence the accuracy and reliability of AI systems, and how these systems can either reinforce or challenge existing inequities. These activities encouraged them to view AI not as a neutral technology but as a system with embedded values and potential impacts on equity and inclusion.

The module’s culminating activity was a collaborative project in which student teachers worked in eight groups of 4–5 to develop an image classification model using Google’s Teachable Machine. The activity was structured around detailed implementation guidelines designed to simulate a simplified ML modeling process. Participants were tasked with selecting a meaningful classification problem, clearly articulating its purpose and potential real-world application, and collecting 50–100 labeled images per class, ensuring diversity in aspects such as lighting, angle, and background to mitigate bias. They then trained and evaluated their models, conducting performance testing and robustness checks using new images and varied testing conditions.
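To make the evaluation step more concrete, the sketch below shows one way a group could summarize the results of testing its model on new images as an overall accuracy, a confusion matrix, and per-class recall. It is a minimal illustration under stated assumptions, not part of the module materials: it assumes each test outcome was recorded by hand as a (true label, predicted label) pair, and the class names and test records are hypothetical. Teachable Machine itself is not called here.

```python
# Minimal sketch (not part of the module materials): summarizing hand-recorded
# test results for an image classifier. Class names and records are hypothetical.

def accuracy(pairs):
    """Share of test images whose predicted label matches the true label."""
    if not pairs:
        raise ValueError("no test images recorded")
    correct = sum(1 for true_label, pred_label in pairs if true_label == pred_label)
    return correct / len(pairs)


def confusion_matrix(pairs, classes):
    """Nested dict m[true][pred]: how often each true class received each prediction."""
    matrix = {t: {p: 0 for p in classes} for t in classes}
    for true_label, pred_label in pairs:
        matrix[true_label][pred_label] += 1
    return matrix


def per_class_recall(matrix):
    """For each true class, the share of its test images classified correctly."""
    recall = {}
    for true_label, row in matrix.items():
        total = sum(row.values())
        recall[true_label] = row[true_label] / total if total else float("nan")
    return recall


# Hypothetical test log: (true class, class predicted by the trained model)
classes = ["car", "bus", "bicycle"]
test_pairs = [
    ("car", "car"), ("car", "bus"),
    ("bus", "bus"),
    ("bicycle", "bicycle"), ("bicycle", "bicycle"),
]

m = confusion_matrix(test_pairs, classes)
print(accuracy(test_pairs))   # 0.8
print(per_class_recall(m))    # {'car': 0.5, 'bus': 1.0, 'bicycle': 1.0}
```

Reading the confusion matrix row by row shows which classes are confused with one another (here, one car image was labeled as a bus), which is the kind of evidence groups could draw on when analyzing misclassifications.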

The collaborative project offered student teachers a hands-on, practice-oriented opportunity to engage deeply with core ML concepts, including data preparation (collection, cleaning, labeling), model training, validation, accuracy assessment, and bias detection. As part of this process, participants completed a structured workbook and responded to targeted reflection prompts that guided them in analyzing model misclassifications, identifying potential sources of algorithmic bias, and considering the ethical implications of ML systems—particularly issues related to fairness, privacy, and discrimination. They were also asked to evaluate the real-world impact of model decisions on individuals and groups, and to propose strategies for improving model accuracy and reducing bias.
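One way to connect robustness checks with bias detection is to disaggregate accuracy by testing condition or subgroup instead of reporting a single overall figure. The sketch below assumes, hypothetically, that a group logged each new test image with a condition tag (for example, the lighting under which it was taken); the tags, labels, and records are illustrative only. A large gap between the best- and worst-performing conditions is a cue to check whether some conditions are under-represented in the training data, not proof of bias in itself.

```python
from collections import defaultdict

# Hypothetical log of new test images: (condition tag, true class, predicted class)
records = [
    ("bright", "car", "car"), ("bright", "bus", "bus"),
    ("bright", "car", "car"), ("bright", "bicycle", "bicycle"),
    ("dim", "car", "bus"), ("dim", "bus", "bus"),
    ("dim", "bicycle", "car"), ("dim", "car", "car"),
]


def accuracy_by_condition(records):
    """Accuracy computed separately for each condition tag."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for condition, true_label, pred_label in records:
        totals[condition] += 1
        correct[condition] += int(true_label == pred_label)
    return {c: correct[c] / totals[c] for c in totals}


def accuracy_gap(by_condition):
    """Difference between the best- and worst-performing conditions."""
    return max(by_condition.values()) - min(by_condition.values())


def misclassified(records):
    """The individual errors, kept for qualitative discussion of why they occurred."""
    return [r for r in records if r[1] != r[2]]


by_cond = accuracy_by_condition(records)
print(by_cond)                # {'bright': 1.0, 'dim': 0.5}
print(accuracy_gap(by_cond))  # 0.5
print(misclassified(records))
```

Keeping the list of individual errors alongside the aggregate numbers supports the reflective part of the task: a group can look at the specific misclassified images and discuss why they failed.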

The final project deliverables included the structured group workbook, a 5-min group presentation, and a 1-page reflective report. Across these outputs, participants documented their technical processes, the challenges they faced, and key insights related to ethical considerations and societal implications. These structured yet open-ended artifacts offered rich qualitative data for examining how student teachers engaged with data-driven AI/ML in critical and meaningful ways.

Although the DataSETUP project as a whole follows a DBR methodology, structured around two iterative implementation cycles and supported by mixed-methods data collection, the present article reports a first exploratory analysis of student teacher products from the initial pilot of the Responsible AI & Data Science: Ethics, Society, and Citizenship module (Spring 2025). Our goal is to gain preliminary insights into how participants engaged with both the technical and ethical dimensions of AI, as reflected in their project work. Findings from this first cycle will inform the ongoing revision of the modules, while subsequent studies in the broader project will extend the analysis through additional data sources and the second implementation cycle.

2.3. Data Collection and Analysis

Data collection for the module as a whole followed a mixed-methods approach, drawing on both qualitative and quantitative sources (pre-/post-surveys, classroom observations, teacher educator reflection sheets, student workbooks, online discussions). While these instruments inform the overall iterative refinement of the module, they are not the focus of this article. In this first analysis, we concentrate specifically on qualitative data generated through the collaborative project—namely, the group workbooks, presentations, and reflective reports. This focus enables us to trace how student teachers articulated their emerging understandings of the technical and ethical dimensions of AI, while also highlighting the potential of the module to foster reflective engagement within teacher education.

All data were collected in accordance with the General Data Protection Regulation (GDPR) and the institutional ethics guidelines of European University Cyprus, and the study received ethical approval from the Cyprus National Bioethics Committee. Because the study was embedded in a graduate-level course, participation was entirely voluntary, and student teachers were assured that declining to participate, or withdrawing at any point, would have no impact on their course grade or academic standing. All participants were informed about the study’s purpose, their rights, and the measures in place to protect confidentiality. The group workbooks and other data sources were anonymized, securely stored, and analyzed in aggregate to protect participant privacy and uphold ethical research standards.

To examine how student teachers engaged with core aspects of data-driven AI/ML during the collaborative project, we developed a five-dimensional analytical framework that integrates perspectives from data science education, statistics education, computational thinking, and critical data literacy. The framework draws on established educational models—including the GAISE II framework (Bargagliotti et al., 2020), which emphasizes statistical reasoning, and the Data Science Course Framework developed by the Charles A. Dana Center (Charles A. Dana Center at The University of Texas at Austin, 2024), which foregrounds civic engagement and equity. Building on the complementary strengths but also the limitations of these models, our framework brings them into dialogue with recent efforts to define data science and computational thinking practices in education (e.g., Kazak et al., 2025; Lee et al., 2022). This integrative approach reflects the need, identified in the Introduction, to connect technical fluency with ethical, civic, and pedagogical dimensions of teacher readiness.

The framework was initially piloted on a subset of three group projects, which were coded by one member of the research team and subsequently discussed with the full team. Based on this discussion, the framework was refined and then applied systematically across the entire dataset by the research team working collaboratively. The coding decisions were further discussed and finalized in team meetings, and a final consistency check was conducted across all data to ensure stability of interpretations.

The final version of the framework comprises five core dimensions of data engagement:

Asking Questions with Data: how students frame their inquiry; whether their questions are contextually or educationally meaningful.

Collecting, Cleaning, and Manipulating Data: how data is selected, framed, and processed; whether students address representational bias or dataset limitations.

Modeling and Interpreting: how models are built and evaluated; whether students engage in interpretive reasoning beyond performance metrics.

Critiquing Data-Based Claims: whether students identify bias, discuss fairness, or reflect on the implications of algorithmic decision-making.

Reasoning about Data Epistemology: how students conceptualize the origin, construction, and underlying assumptions of data.

These dimensions provided a structured lens through which to analyze student artifacts and identify patterns of engagement, interpretation, and critical reasoning. The fifth dimension, data epistemology, was introduced inductively during early rounds of analysis, as student teachers occasionally questioned how data were defined, whose experiences were represented, and how labeling decisions shape meaning. This dimension draws on critical data studies, which treat data as sociocultural constructs rather than neutral facts (D’Ignazio & Klein, 2023; Philip et al., 2016).

The analytical framework and coding schema used in the analysis are summarized in Table 1.

Table 1.

Analysis Framework with Extensions.

In addition to the five analytical dimensions described above, we introduced a supplementary lens to capture how student teachers began to consider the educational value of their projects. Importantly, pedagogical reasoning was not part of the assignment prompt. Its emergence was therefore limited to some groups, yet significant: it offers initial insights into how the module may open possibilities for connecting data science with teaching and learning.

We coded student work along four dimensions: (1) instructional intent, (2) curricular relevance, (3) identification of learning opportunities, and (4) awareness of the teacher’s role. Each dimension was rated on a simple three-level scale: Explicit, Implied, or Absent. “Explicit” indicated direct statements about classroom use; “Implied” was used when pedagogical relevance could be inferred without being directly stated; and “Absent” denoted no reference to educational application (e.g., Groups 5 and 6, whose projects focused only on technical aspects). For example, one group explicitly stated that “This classification can be used in educational programs, helping students learn about the characteristics and differences between means of transport” (Group 8), which we coded as Explicit Instructional Intent, with additional explicit elements of Learning Opportunities. In contrast, another group noted that “Finally, we tested children’s drawings to check whether the model could be used in different environments” (Group 3), which we coded as Implied Curricular Relevance, since it pointed to a potential classroom connection without directly articulating an instructional use.

Coding decisions were discussed and finalized within the research team to ensure consistent application of these definitions. While only a subset of groups demonstrated pedagogical reasoning, we view these spontaneous references as valuable indicators of the potential of such modules to foster connections with school practice. Rather than constituting evidence of generalizable outcomes, these findings provide a first glimpse into how the module activities may open up avenues for linking technical learning with classroom realities.

3. Results

This section presents the main patterns that emerged from the analysis of student teacher assignments completed during the collaborative project. Guided by the five-dimensional analytical framework introduced in the methodology, we first examine how participants engaged with technical, interpretive, and ethical aspects of data-driven AI/ML. We then discuss an additional theme that surfaced inductively: early signs of pedagogical reasoning. Although pedagogical application was not part of the assignment prompt, some groups made incidental yet noteworthy connections to teaching and learning. Overall, the results provide an initial sense of how such modules can function within teacher education, illustrating both promising directions and areas where further scaffolding is needed.

3.1. Unfolding the Layers of Data Science Activity

This subsection presents findings related to the five core dimensions of the analytical framework. Each dimension highlights specific ways student teachers interacted with data—technically, interpretively, and ethically—during the collaborative project, offering insight into their evolving understanding of data-driven AI/ML within an educational context.

3.1.1. Asking Questions with Data

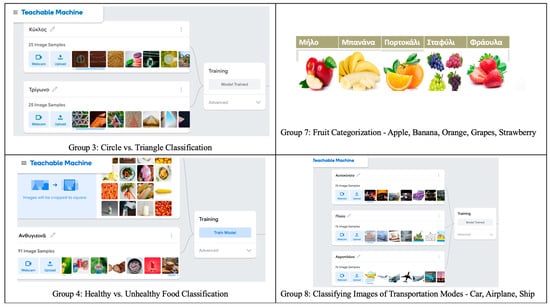

Across several projects, student teachers formulated technically clear classification tasks, such as distinguishing between shapes, types of fruit, types of food, or modes of transportation (see Figure 1).

Figure 1.

Examples of student image classification models.

Among the student projects, a small number of groups, most notably Groups 1, 7, and 8, formulated classification tasks that were clearly embedded in meaningful, context-rich, or socially relevant settings. These groups used data not just to distinguish between categories, but to explore how classification connects with real-world concerns and educational intentions.

Group 1, for example, designed a model to distinguish between facial expressions of joy and anger, writing:

“The goal is to develop a model for recognizing emotions that could support children in identifying and expressing how they feel.”

They further noted the potential for such a model to be used in educational interventions to foster emotional literacy, highlighting its value beyond mere technical experimentation.

Similarly, Group 7 framed their classification task around preschool education, stating:

“The activity was implemented for educational purposes for preschool children… categories included apple, banana, orange, grapes, and strawberry.”

Group 8 combined technical objectives with environmental awareness:

“This topic has educational applications in teaching about technology, engineering, history, and environmental issues… [and could support] understanding the impact of emissions on the planet.”

However, a broader analysis reveals a tendency among student teachers to treat data science as a mainly technical task, focused on achieving performance and robustness, with limited engagement in meaningful questioning. Group 6 is illustrative of this tendency. They described their task as follows: “The classification problem we selected is distinguishing ‘orange’ from ‘not orange’. The goal is to build a simple, efficient system for fruit sorting in production lines and warehouses.”

While technically precise, this framing did not extend to societal, educational, or ethical concerns.

In contrast to the groups that situated their models in classroom or social contexts, most teams treated the task primarily as an exercise in building a functioning classifier, valuing efficiency and accuracy over the formulation of meaningful questions. For example, whereas Groups 1, 7, and 8 connected their projects to emotional literacy, early childhood learning, or environmental awareness, Group 6’s “orange vs. not orange” project remained focused on producing a reliable sorting tool, without considering wider implications. This contrast highlights the range of orientations within the cohort, from projects explicitly tied to educational or social purposes to those framed mainly as technical problem-solving.

3.1.2. Collecting, Cleaning, and Manipulating Data

Across the eight groups, several demonstrated an awareness of the need for data diversity and framing. For instance, Group 1 deliberately varied camera angles and backgrounds in their dataset, but also acknowledged that “using only one face in the photos limited diversity”, a recognition coded as representational bias awareness. Group 3 likewise reflected on how their data choices shaped outcomes, noting that “the majority of photos showed dogs as larger and cats as smaller, creating a bias in size recognition,” which directly illustrates how labeling decisions can embed implicit bias.

One group went further by explicitly linking dataset design to broader social assumptions. Group 4 observed: “we trained the model with professional food photos… it showed bias since it could not adapt to or recognize anything different from its training data, and it also excluded foods from different cuisines”. This reflects representational bias awareness, while also hinting at an early recognition of the cultural dimensions of data.

In contrast, other groups engaged primarily at a technical level. Group 6, for example, reported: “we included images of different lighting, background, and angle… however, misclassification occurred with visually similar fruits like grapefruit and peach”. Such comments were coded as data diversity and framing, but did not extend to considering social or cultural implications. Group 7 similarly highlighted practical misclassifications (e.g., “a tennis ball being recognized as an apple”) without connecting them to dataset design or bias.

In sum, a few groups experimented with strategies to reduce bias, some recognized representational limitations, and one group moved toward questioning cultural assumptions embedded in data. Most, however, did not fully engage with the “messiness” of real-world data, underscoring the need for stronger scaffolding to help student teachers progress from noticing technical variation to critically interrogating data practices.

3.1.3. Modeling and Interpreting

All eight groups managed to build and test classification models using Teachable Machine, but their approaches to interpreting results differed.

Performance testing was the most common level of engagement. Groups 3, 6, and 7 reported accuracy rates and identified misclassifications. Group 3 presented a detailed table (see Table 2) showing that peaches and grapefruits were often misclassified as oranges, which they attributed to similarities in color and shape: “The grapefruit was always recognized as an orange due to their similar appearance.” Group 6 recognized that evaluation must extend beyond ideal conditions, noting: “The model showed a 46% error rate in images of oranges with multicolored background and low lighting”. Group 7 documented errors, such as a tennis ball being classified as an apple, though without further reflection on the underlying causes.

Table 2.

System Evaluation: Classification Accuracy by Object (%).
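Per-object accuracy figures such as those in Table 2 are the share of each object’s test images that the model labeled correctly, and the misclassification patterns the groups reported correspond to the off-diagonal cells of a confusion matrix. The Python sketch below illustrates this arithmetic. It assumes that the predicted label for each test image was recorded by hand from the Teachable Machine preview; the function names and the six test labels are hypothetical and illustrative, not the groups’ actual data.

```python
from collections import Counter


def per_class_accuracy(true_labels, predicted_labels):
    """Percentage of each true class's test images that the model labeled correctly."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    # A Counter returns 0 for classes that were never predicted correctly.
    return {cls: 100.0 * correct[cls] / n for cls, n in totals.items()}


def misclassifications(true_labels, predicted_labels):
    """Count (true, predicted) pairs where the prediction was wrong."""
    return Counter((t, p) for t, p in zip(true_labels, predicted_labels) if t != p)


# Hypothetical test images (illustrative only, not data from the study).
truth = ["orange", "orange", "grapefruit", "grapefruit", "peach", "peach"]
preds = ["orange", "orange", "orange", "orange", "peach", "orange"]

print(per_class_accuracy(truth, preds))
# → {'orange': 100.0, 'grapefruit': 0.0, 'peach': 50.0}
print(misclassifications(truth, preds))
# → Counter({('grapefruit', 'orange'): 2, ('peach', 'orange'): 1})
```

A per-class breakdown makes visible what a single overall figure hides. In this toy run, overall accuracy is 50% (3 of 6 images), yet one class is never recognized, and every error collapses into the “orange” class.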

A smaller set of groups engaged in reflective analysis. For instance, Group 4 emphasized how training data and model choices can reproduce bias, pointing to the value of fairness and interpretability techniques, such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), for enhancing transparency and trust.

Finally, in two cases, modeling decisions were clearly purpose-driven. Group 1 designed an emotion recognition model “to support children in identifying and expressing how they feel”, explicitly linking the activity to educational goals. Group 4 also connected their modeling to ethical concerns, situating technical design choices within a broader discussion of accountability.

In sum, the projects illustrate a spectrum: Groups 3, 6, and 7 engaged primarily in accuracy-based evaluation; Group 4 combined error analysis with ethical reflection; and Group 1 aligned modeling with pedagogical aims. This variation highlights both the accessibility of basic evaluation practices and the potential, albeit limited in this cohort, for connecting model interpretation to ethical and educational reasoning.

3.1.4. Critiquing Data-Based Claims

Engagement with fairness, validity, and the socio-ethical implications of algorithmic decision-making appeared across all groups, though with differing depth and focus.

In Groups 2, 5, 6, 7, and 8, bias detection was made explicit, with student teachers noting how dataset choices and conditions influenced fairness and reliability. For example, Group 2 emphasized bias mitigation strategies such as data balancing and fairness-aware loss functions, noting that “ethical design and explainability mechanisms strengthen trust and reduce algorithmic bias”. Group 5 similarly highlighted that imbalance and poor labeling could generate unfair outcomes, pointing to risks of discrimination if underrepresented groups were not included. Group 6 linked high error rates in low-light conditions (46%) to potential bias in training data, while Group 7 noted that variety in backgrounds and lighting was necessary to “avoid prejudice in the model’s outputs”. Group 8 warned that biased datasets risk reinforcing stereotypes in educational settings.
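Group 2 named data balancing and fairness-aware loss functions without detailing how they work. One common and simple form is inverse-frequency reweighting, in which training examples from under-represented groups contribute more to the loss. The Python sketch below illustrates that idea under stated assumptions; it is not the students’ method. It weights examples by a subgroup attribute (here a hypothetical lighting condition, echoing Group 6’s low-light errors) using the same n_samples / (n_groups × count) formula as scikit-learn’s “balanced” class-weight heuristic. The function names and data are hypothetical.

```python
import math
from collections import Counter


def inverse_frequency_weights(groups):
    """Weight each group by n_samples / (n_groups * group_count), so rare groups count more."""
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return {g: n_samples / (n_groups * c) for g, c in counts.items()}


def weighted_log_loss(prob_true_class, group, weights):
    """Log loss for one training example, scaled by the weight of its group."""
    return -weights[group] * math.log(prob_true_class)


# Hypothetical training set: lighting condition of 10 images (illustrative only).
lighting = ["bright"] * 8 + ["low_light"] * 2
weights = inverse_frequency_weights(lighting)
print(weights)  # → {'bright': 0.625, 'low_light': 2.5}

# When the model is equally unsure (p = 0.5) on both, the low-light error costs 4x more.
ratio = weighted_log_loss(0.5, "low_light", weights) / weighted_log_loss(0.5, "bright", weights)
print(ratio)  # → 4.0
```

Reweighting only rebalances groups that are already present in the dataset; it cannot compensate for groups that were never collected, which is the representational gap several groups identified.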

In Groups 2, 4, 5, and 8, socio-ethical discussion emerged more strongly than in the rest of the groups, linking technical design choices to broader questions of responsibility and social values. Group 4 questioned how relying on professional food photos could reproduce narrow cultural ideals of “healthy eating”, stressing that labeling practices are not neutral but reflect social values. Group 8 insisted that AI should be used “with social care and pedagogical purpose.” Group 2 connected technical fairness with accountability and human oversight, while Group 5 pointed to privacy and transparency as prerequisites for responsible AI.

In Groups 3 and 6, student teachers showed openness to limitations by acknowledging specific cases of model failure. Group 3 reported frequent misclassification of peaches and grapefruits due to visual similarity, while Group 6 explicitly acknowledged how poor lighting and multicolored backgrounds compromised reliability.

Overall, the projects reflected different ways of engaging with critique: some groups (e.g., 3, 6) focused mainly on identifying errors and practical limits; others (2, 5, 7, 8) foregrounded fairness and technical strategies for bias mitigation; and a smaller number (4, 8, partly 2 and 5) engaged in deeper socio-ethical reflection about representation, privacy, and accountability.

3.1.5. Data Epistemology

Across the eight groups, engagement with epistemological questions was rare and uneven. This category concerns reflections on where data come from, what assumptions they carry, and what kinds of knowledge are constructed through classification. In most cases, student teachers treated datasets as neutral inputs, focusing primarily on technical adequacy rather than on the social or cultural framings embedded in data.

Some groups nevertheless showed initial signs of questioning data origin and authorship. Group 2, for instance, remarked that bias “often exists in training data and leads to unfair decisions against specific social groups”, stressing the need for transparency and interpretability to counter the “black box” nature of AI. Group 5 raised concerns about privacy and accountability, linking dataset construction to ethical risks of discrimination. These reflections illustrate awareness that datasets are not value-free, even if the comments remained at a general level.

Group 4 provided the most developed example by explicitly interrogating the cultural assumptions embedded in data selection. They observed that “using only professional food photos could misrepresent everyday eating habits… cultural identity is shaped by what is and isn’t labeled healthy”. This comment highlights how dataset authorship (professional vs. everyday images) makes certain perspectives visible while excluding others, thereby shaping the model’s constructed knowledge. Here, student teachers implicitly recognized that data encode cultural values, not just objective reality.

By contrast, other groups (e.g., 3, 6, 7) confined their analysis to technical considerations, such as accuracy under varied conditions or the visual similarity of classes, without reflecting on how classification categories themselves frame knowledge. The absence of such epistemological engagement underscores both the novelty of this perspective for student teachers and the difficulty of reaching it without explicit scaffolding.

Taken together, these patterns suggest that reasoning about data epistemology was the least developed dimension in our analysis. Yet the few examples we observed (Groups 2, 4, 5) are important: they point to emerging recognition of data as authored, culturally situated, and perspectival. Helping student teachers to deepen this stance, moving from seeing data as neutral information to recognizing them as social artifacts, remains a key challenge for teacher education in data science.

3.2. Teaching and Learning with Data Science: Emergent Pedagogical Reasoning

Although the module was not designed to elicit pedagogical reflection, a number of student teacher groups made spontaneous references to educational applications. These ranged from brief, passing comments to more fully formed instructional ideas. The depth and clarity of these references varied across groups, revealing a spectrum of pedagogical reasoning, from none at all to implicit educational framing to explicit teaching proposals. Our coding captured this variation across four dimensions: instructional intent (Was teaching mentioned as a goal?), curricular relevance (Were school subjects or learner levels referenced?), identification of learning opportunities (Were student outcomes or skills implied or described?), and awareness of the teacher’s role (Was there awareness of how a teacher might mediate or facilitate the task?). The results of this coding process are summarized in Table 3.

Table 3.

Evidence of Pedagogical Reasoning in Student Teacher Group Projects Across Four Dimensions: Instructional Intent, Curricular Relevance, Learning Opportunities, Role of Teacher.

The distribution across groups shows that pedagogical reasoning was far from uniform. Groups 1 and 3 provided the most developed examples, explicitly describing how their emotion recognition models could be integrated into classroom activities. Group 1 designed its model to detect joy and anger in classroom contexts and proposed its use in “playful activities to support teachers’ socio-emotional awareness.” Their framing positioned the teacher as both interpreter of emotional data and designer of responsive actions, tying the model to emotional literacy and metacognitive dialogue.

Group 3 developed a three-tiered image classification model that distinguished among animals (dog, cat, other), their size (small or large), and their perceived emotion (e.g., playful, hostile). Although not prompted to do so, the student teachers suggested that such a system could support young learners in engaging with classification tasks, reflecting on how emotions are represented, and discussing ways in which models can fail or misinterpret visual input. In their reflection, they emphasized:

“The emotional classification task, despite its complexity, opens pathways for social-emotional learning and media literacy. Learners can discuss why the same animal face might be perceived differently, and what this says about emotion recognition in both humans and machines.”

This level of pedagogical interpretation was not required, yet it emerged organically. The group moved beyond technical reporting to suggest that the subjectivity inherent in emotional labeling could serve as a meaningful learning opportunity. Although they did not explicitly define the teacher’s role, their comments clearly envisioned classroom discussion as an integral part of how the model would be used in an educational context.

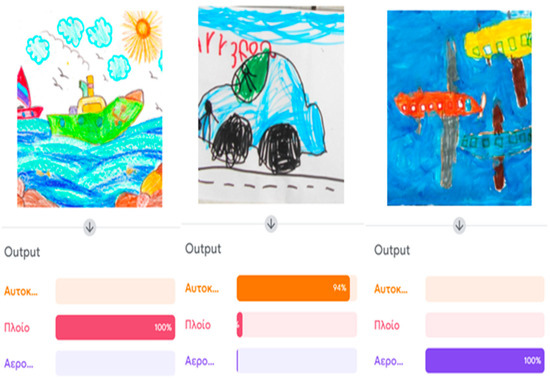

Other groups offered more partial or implied connections. Group 2 proposed using their model in early education, suggesting links to geometry and computing, and hinted that the teacher might support ethical discussion. Group 7 situated their project within STEAM and environmental education, but did not elaborate on the teacher’s role. Group 8 tested their model with children’s drawings, which implied classroom relevance even though the teacher’s mediation was only indirectly referenced (see Figure 2). Group 4, while less detailed, nevertheless connected their food classification project to health education and fairness, suggesting an ethical use of data in classroom contexts.

Figure 2.

Group 8: Recognizing transportation modes in children’s drawings (labels in Greek: Αυτοκίνητο = Car, Πλοίο = Ship, Αεροπλάνο = Airplane).

4. Discussion and Conclusions

This study set out to investigate how student teachers engaged with foundational data science and AI/ML concepts, including ethical reasoning, through a collaborative project in a graduate-level STEAM course. The findings reveal that when data-driven AI/ML is introduced through accessible, hands-on tools and accompanied by ethical reflection, student teachers can meaningfully engage with core technical and conceptual dimensions—even in the absence of prior training in AI, statistics, or programming. Guided by a five-dimensional analytical framework, we found that student teachers demonstrated increasing sophistication in posing contextually grounded data questions, designing and evaluating models, and critically reflecting on fairness, bias, and the societal implications of automated decisions. These outcomes align with calls in the literature to reconceptualize data science literacy as both a technical and civic competency (Wing, 2019; Bobrowicz et al., 2022; Dove, 2022), and to embed such literacy across teacher education programs in ways that emphasize ethics, equity, and inclusion (Miao & Holmes, 2021, 2023; Ridgway et al., 2022). By showing how student teachers engaged with these dimensions through project-based inquiry, our study contributes empirical evidence to ongoing debates on how data science literacy, AI ethics, and pedagogical reasoning can intersect in teacher preparation.

Across several of the framework’s dimensions, particularly those focused on questioning, data handling, and model interpretation, student teachers demonstrated increasing technical fluency and thoughtful reflection. Their projects reflected an appreciation for the importance of diverse and balanced datasets, and several groups analyzed model misclassifications with careful attention to the impact of context, lighting, or visual similarity. In doing so, they began to connect technical outcomes to underlying data characteristics, showing a growing understanding of the complexity and limitations of ML systems and an emerging capacity for data science literacy that combines interpretive reasoning with conceptual understanding of statistical and ML model-based processes. These findings are in accord with recent research advocating for experiential, inquiry-based approaches to AI and data science education (Carney et al., 2020; Aguilera & Ortiz-Revilla, 2021), particularly within interdisciplinary STEAM contexts that encourage exploration and creativity alongside critical analysis.

As student teachers moved into the more conceptual and reflective dimensions of the framework, their engagement became more uneven. In critiquing data-based claims, several groups recognized the ethical and social implications of biased or oversimplified models, particularly in relation to fairness, stereotyping, and cultural representation. However, these reflections often remained abstract or disconnected from real-world contexts and classroom realities, indicating a need for more structured support to help student teachers connect ethical awareness to concrete instructional practice. This echoes concerns in the literature that, while teachers are increasingly aware of the need to engage critically with AI, many still lack the tools or confidence to translate such awareness into pedagogical action (Dunlap & Piro, 2016; Henderson & Corry, 2021; Xu & Ouyang, 2022).

The most challenging dimension proved to be data epistemology, namely reasoning about where data come from, what assumptions they carry, and what knowledge is constructed through classification. Most groups treated data as objective inputs rather than socially constructed representations, and only a few questioned the origin, authorship, or cultural framing of the data they used. This limited engagement with the epistemological underpinnings of data science underscores an important gap identified in earlier work on critical data literacy (Gould, 2017, 2021; D’Ignazio & Klein, 2023). Without explicit scaffolding, student teachers may struggle to examine critically how categories are defined, whose experiences are included or excluded, and what values or assumptions are embedded in data collection and labeling practices. Our findings suggest that epistemological reasoning requires careful instructional design and benefits from authentic, socially relevant contexts. Identifying this gap offers a crucial starting point for developing deeper forms of critical data literacy in teacher education. Encouraging such reflection is essential if teachers are to prepare students for full participation in a datafied society, where algorithmic systems increasingly structure access to opportunity, identity, and representation.

A particularly noteworthy and unanticipated finding of this study was the emergence of pedagogical reasoning. Despite the module not requiring or scaffolding educational applications, several groups spontaneously made connections to teaching and learning. These ranged from linking classification tasks to topics in early childhood education and environmental studies to identifying learning goals such as emotion regulation, AI literacy, and critical thinking. In some cases, student teachers also began to conceptualize the teacher’s role as an interpreter, facilitator, or ethical guide—moving beyond a technocentric view of AI to a more relational, learner-centered perspective. This pattern accords with scholarship on teacher noticing, which highlights how teachers learn to attend to, interpret, and respond to features of classroom practice (Gamoran Sherin & van Es, 2008/2009; Bastian et al., 2025). It also intersects with work on Technological Pedagogical Content Knowledge (TPACK) (Mishra & Koehler, 2006), as student teachers moved toward integrating technical understanding with pedagogical considerations. Our findings extend this work by foregrounding the ethical and relational dimensions that also entered into their reasoning, aligning with emerging frameworks that emphasize ethics as central to AI-related teacher competence (Miao & Cukurova, 2024). Taken together, these insights suggest that pedagogical reasoning tends to arise when tasks intersect with themes that are already socially or educationally resonant (e.g., emotions, fairness, early childhood learning). Where tasks were framed narrowly as technical classification problems, pedagogical reasoning did not emerge. This reinforces the importance of task framing: contextual and reflective prompts can encourage student teachers to see beyond technical performance and to imagine classroom relevance. 
By showing that pedagogical reasoning can emerge even without prompting, our findings strengthen arguments for designing tasks that intentionally create openings for instructional imagination. These insights resonate with recent scholarship emphasizing the transformative potential of STEAM education to integrate AI and data science in ways that are culturally responsive, pedagogically meaningful, and socially just (Aguayo et al., 2023; Aguilera & Ortiz-Revilla, 2021; Makar et al., 2023).

In this regard, the second module in the course, Integrating AI/ML-Related Data Science in STEAM Education to Foster Responsible and Active Citizenship, provides a structured opportunity to develop these connections further. Building on the conceptual and ethical foundation laid in the first module, it guides student teachers in designing classroom-ready STEAM educational scenarios that integrate data-driven AI/ML and justice-oriented inquiry. This progression reflects a deliberate curricular sequence, from exploration to application, that supports student teachers in developing not only technical fluency and ethical awareness, but also pedagogical agency. The second module was also piloted during the Spring 2025 semester, with preliminary evidence suggesting a positive impact on participants’ confidence and creativity in applying data science in classroom contexts. While student feedback and assignments point to promising developments, systematic analysis of the collected data is still pending. Future analysis will offer a more robust understanding of how student teachers envision, plan, and reflect on the use of AI/ML-related data science in education when pedagogical application is explicitly scaffolded.

At the same time, several limitations of the present study must be acknowledged. The simplicity of the classification tasks, and the use of user-friendly platforms like Teachable Machine, likely constrained opportunities for deeper engagement with ambiguity, contestation, and the epistemological dimensions of data. Several groups approached the work as straightforward sorting exercises (e.g., “orange” versus “not orange”), without encountering the kinds of ambiguity or contested categories that arise in real-world datasets. Research on the pedagogical value of “productive struggle” (Warshauer, 2015; Kapur, 2008) suggests that engagement with messy, complex data can create important opportunities for reasoning about uncertainty, fairness, and representation. Future studies might therefore explore how working with authentic datasets—while carefully scaffolded—can provoke richer forms of technical, ethical, and pedagogical reflection. A further limitation is that pedagogical reasoning was not an explicit focus of the assignment; our analysis relied on incidental and retrospective evidence, which constrains the strength and generalizability of our conclusions. Future research should investigate how pedagogical reasoning about AI and data science evolves over time, how it can be purposefully supported through course design, and how it influences actual teaching practice in diverse educational settings.

Nonetheless, the study makes several contributions. It illustrates how student teachers, even with minimal prior training, can engage meaningfully with AI/ML and data science concepts when provided with structured, experiential learning opportunities. It shows that ethical and critical reflection can be introduced early, not as an add-on but as an integral part of technical exploration. Moreover, it reveals that, under the right conditions, teacher-learners begin to appropriate AI/ML-related data science not only as a technical domain, but as a pedagogical resource—imagining how it might be used to support inquiry, dialogue, and student empowerment.

In conclusion, preparing teachers for an AI-mediated world requires more than technical upskilling. It calls for a vision of teacher readiness that positions educators as interpreters of data, facilitators of ethical dialogue, and designers of inclusive, critically aware learning environments. Because AI systems are inseparable from the data on which they are built, teacher education must foreground data science literacy as central to professional readiness. One way this might be realized is through assignments that ask student teachers to engage with messy, real-world datasets—developing a functional model, interrogating issues of bias and representation, and designing classroom activities to help students grapple with these complexities. Ultimately, teacher preparation programs that integrate technical fluency, critical reflection, and pedagogical imagination can cultivate professionals who are ready not only to use AI but to help their students navigate and question a datafied world with agency and critical awareness.

Author Contributions

All five authors have contributed to the study conceptualization; methodology; investigation; data curation; writing—original draft preparation; writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been funded by the EU, under the Erasmus+ Key Action 2 program (DataSETUP—Promoting Data Science Education for Teacher Education at the University level (Ref. #: 2023-1-DE01-KA220-HED00160333)). Any opinions, findings, and conclusions or recommendations presented in this paper are those of the authors and do not necessarily reflect those of the EU.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Cyprus National Bioethics Committee (CNBC) (protocol code EEBK ΕΠ 2025.01.52 and date of approval: 2025-02-12).

Informed Consent Statement

Informed consent was obtained from all study participants.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors gratefully acknowledge the valuable contributions of the student teachers who participated in this study. No AI tools were used in conducting the research or in the initial drafting of this paper. During manuscript refinement, ChatGPT 5.0 was used for language editing where deemed beneficial. This assistance aimed to enhance the clarity, coherence, and style of the text while preserving the integrity of the original content.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Adiguzel, T., Kaya, M. H., & Cansu, F. K. (2023). Revolutionizing education with AI: Exploring the transformative potential of ChatGPT. Contemporary Educational Technology, 15(3), ep429.

- Aguayo, C., Videla, R., López-Cortés, F., Rossel, S., & Ibacache, C. (2023). Ethical enactivism for smart and inclusive STEAM learning design. Heliyon, 9(9), e19205.

- Aguilera, D., & Ortiz-Revilla, J. (2021). STEM vs. STEAM education and student creativity: A systematic literature review. Education Sciences, 11, 331.

- Baidoo-Anu, D., & Ansah, L. O. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. SSRN Electronic Journal, 7(1), 52–62.

- Bargagliotti, A. E., Franklin, C., Arnold, P., Gould, R., & Miller, D. (2020). Pre-K–12 guidelines for assessment and instruction in statistics education II (GAISE II): A framework for statistics and data science education. American Statistical Association. Available online: https://www.amstat.org/asa/files/pdfs/GAISE/GAISEIIPreK-12_Full.pdf (accessed on 30 August 2025).

- Bastian, A., Buchholtz, N., & Kaiser, G. (2025). Using AI chatbots to facilitate preservice teachers’ noticing skills. ResearchGate.

- Biehler, R., Frischemeier, D., Reading, C., & Shaughnessy, J. M. (2018). Reasoning about data. In D. Ben-Zvi, K. Makar, & J. Garfield (Eds.), International handbook of research in statistics education (pp. 139–192). Springer.

- Bobrowicz, K., Han, A., Hausen, J., & Greiff, S. (2022). Aiding reflective navigation in a dynamic information landscape: A challenge for educational psychology. Frontiers in Psychology, 13, 881539.

- Carney, M., Webster, B., Alvarado, I., Phillips, K., Howell, N., Griffith, J., Jongejan, J., Pitaru, A., & Chen, A. (2020, April 25–30). Teachable machine: Approachable web-based tool for exploring machine learning classification. Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–8), Honolulu, HI, USA.

- Charles A. Dana Center at The University of Texas at Austin. (2024). Data science course framework. Available online: https://www.utdanacenter.org/frameworks (accessed on 3 August 2025).

- Cobb, P., Confrey, J., diSessa, A., Lehrer, R., & Schauble, L. (2003). Design experiments in educational research. Educational Researcher, 32(1), 9–13.

- D’Ignazio, C., & Klein, L. F. (2023). Data feminism. MIT Press.

- Dove, G. (2022, January 12). Learning data science through civic engagement with open data. Paderborn Colloquium on Data Science and Artificial Intelligence in School, Paderborn, Germany. Available online: https://www.prodabi.de/wp-content/uploads/Slides_Graham_Dove_Learning_Data_Science_through_Civic_Engagement_with_Open_Data.pdf (accessed on 3 August 2025).

- Dunlap, K., & Piro, J. S. (2016). Diving into data: Developing the capacity for data literacy in teacher education. Cogent Education, 3(1), 1132526.

- Gamoran Sherin, M., & van Es, E. A. (2009). Effects of video club participation on teachers’ professional vision. Journal of Teacher Education, 60(1), 20–37. (Original work published 2008)

- Gould, R. (2017). Data literacy is statistical literacy. Statistics Education Research Journal, 16(1), 22–25.

- Gould, R. (2021). Toward data-scientific thinking. Teaching Statistics, 43(S1), S11–S22.

- Henderson, J., & Corry, M. (2021). Data literacy training and use for educational professionals. Journal of Research in Innovative Teaching & Learning, 14(2), 232–244.

- Huang, X., & Qiao, C. (2024). Enhancing computational thinking skills through artificial intelligence education at a STEAM high school. Science & Education, 33, 383–403.

- Jiang, S., Lee, V. R., & Rosenberg, J. M. (2022). Data science education across the disciplines: Underexamined opportunities for K-12 innovation. British Journal of Educational Technology, 53, 1073–1079.

- Kapur, M. (2008). Productive failure. Cognition and Instruction, 26(3), 379–424.

- Kazak, S., Leavy, A., & Podworny, S. (2025, February 4–8). Framework for developing data science education modules for teacher education in STEAM subjects [Poster presentation]. 14th Congress of the European Society for Research in Mathematics Education (CERME14), Bolzano, Italy.

- Konold, C., Higgins, T., Russell, S. J., & Khalil, K. (2015). Data seen through different lenses. Educational Studies in Mathematics, 88(3), 305–325.

- Lee, H., Mojica, G., Thrasher, E., & Baumgartner, P. (2022). Investigating data like a data scientist: Key practices and processes. Statistics Education Research Journal, 21(2), 3.

- Makar, K., Fry, K., & English, L. (2023). Primary students’ learning about citizenship through data science. ZDM—Mathematics Education, 55, 967–979.

- Mandinach, E. B., & Gummer, E. S. (2016). Every teacher should succeed with data literacy. Phi Delta Kappan, 97(8), 43–46.

- Miao, F., & Cukurova, M. (2024). AI competency framework for teachers. UNESCO Publishing.

- Miao, F., & Holmes, W. (2021). Artificial intelligence and education: Guidance for policy-makers. UNESCO. Available online: https://discovery.ucl.ac.uk/id/eprint/10130180/ (accessed on 3 August 2025).

- Miao, F., & Holmes, W. (2023). Guidance for generative AI in education and research. UNESCO. Available online: https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research (accessed on 3 August 2025).

- Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

- Olari, V., & Romeike, R. (2021, October 18–20). Addressing AI and data literacy in teacher education: A review of existing educational frameworks. WiPSCE ’21: The 16th Workshop in Primary and Secondary Computing Education (pp. 1–2), Erlangen, Germany.

- Philip, T. M., Olivares-Pasillas, M. C., & Rocha, J. (2016). Becoming racially literate about data and data-literate about race: Data visualizations in the classroom as a site of racial-ideological micro-contestations. Cognition and Instruction, 34(4), 361–388.

- Ridgway, J., Campos, P., & Biehler, R. (2022). Data science, statistics, and civic statistics: Education for a fast changing world. In J. Ridgway (Ed.), Statistics for empowerment and social engagement: Teaching civic statistics to develop informed citizens (pp. 563–580). Springer.

- Roll, I., & Wylie, R. (2016). Evolution and revolution in artificial intelligence in education. International Journal of Artificial Intelligence in Education, 26(2), 582–599.

- Tanaka, T., Himeno, T., & Fueda, K. (2022). University’s endeavor to promote human resources development for data science in Japan. Japanese Journal of Statistics and Data Science, 5, 747–755.

- Vargas-Murillo, A., Pari-Bedoya, I., & Guevara-Soto, F. (2023, June 9–12). The ethics of AI assisted learning: A systematic literature review on the impacts of ChatGPT usage in education. 2023 8th International Conference on Distance Education and Learning (pp. 8–13), Beijing, China.

- Warshauer, H. K. (2015). Productive struggle in middle school mathematics classrooms. Journal of Mathematics Teacher Education, 18(4), 375–400.

- Wineburg, S., McGrew, S., Breakstone, J., & Ortega, T. (2016). Evaluating information: The cornerstone of civic online reasoning. Stanford Digital Repository. Available online: http://purl.stanford.edu/fv751yt5934 (accessed on 3 August 2025).

- Wing, J. M. (2019). The data life cycle. Harvard Data Science Review, 1(1), 6.

- Xu, W., & Ouyang, F. (2022). The application of AI technologies in STEM education: A systematic review from 2011 to 2021. International Journal of STEM Education, 9(1), 59.

- Yue, M., Jong, M. S.-Y., & Dai, Y. (2022). Pedagogical design of K-12 artificial intelligence education: A systematic review. Sustainability, 14(23), 15620.

- Zhai, X., Chu, X., Chai, C. S., Jong, M. S. Y., & Li, Y. (2021). A review of artificial intelligence (AI) in education from 2010 to 2020. Complexity, 2021, 8812542.

- Zucker, A., Noyce, P., & McCullough, A. (2020). JUST SAY NO! Teaching students to resist scientific misinformation. The Science Teacher, 87(5), 24–29.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).