Characteristics of Effective Mathematics Teaching in Greek Pre-Primary Classrooms

Abstract

1. Introduction

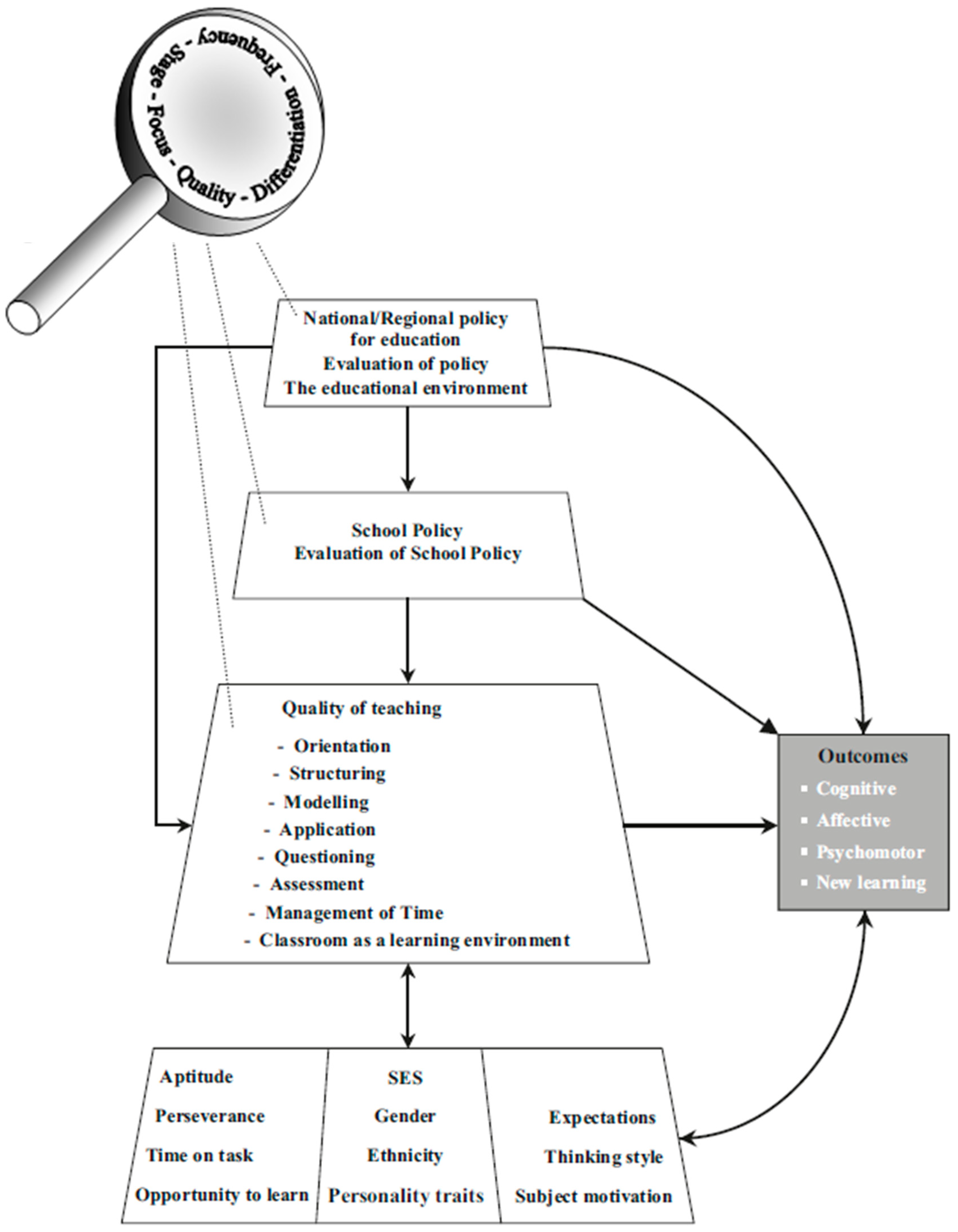

2. The Theoretical Framework of the Study: An Overview of the Dynamic Model of Educational Effectiveness at the Teacher Level

2.1. Teacher-Level Factors

- (1) Orientation. This factor refers to teacher behavior aimed at presenting the objectives of a specific task, lesson, or series of lessons, and/or encouraging students to identify the reasons behind a given activity. Such practices are intended to enhance the perceived relevance and meaning of tasks, thereby promoting students’ active engagement in the learning process (e.g., De Corte, 2000; Paris & Paris, 2001). For this reason, orientation tasks should occur at various points in a lesson or sequence of lessons (such as the introduction, core, and conclusion) and across lessons aimed at achieving different types of objectives. Teachers are also expected to support their students in developing positive attitudes towards learning. Effective orientation tasks are therefore those that are clear to students and encourage them to identify the goals of the activity. Teachers should also consider all students’ perspectives on why a specific task, lesson, or series of lessons is conducted.

- (2) Structuring. Rosenshine and Stevens (1986) argued that student achievement is enhanced when teachers present instructional content actively and structure it using the following essential strategies: (a) beginning with overviews and/or reviewing objectives, (b) outlining the content to be covered and signaling transitions between lesson segments, (c) emphasizing key ideas, and (d) summarizing main points at the lesson’s conclusion. Summary reviews also contribute to student achievement, as they help integrate and reinforce the learning of essential concepts (Brophy & Good, 1986). These structuring strategies do more than aid memorization; they enable students to understand information as a coherent whole by recognizing the connections between its parts. In addition, student achievement tends to be higher when information is presented repeatedly and key concepts are reviewed (Leinhardt, 1989). The structuring factor also encompasses teachers’ ability to increase lesson difficulty gradually (Creemers & Kyriakides, 2006; Krepf & König, 2022). By scaffolding learning in this way, teachers allow students to begin with tasks that are accessible and manageable, which fosters their confidence and motivation. As students build their understanding and skills, the tasks become more complex, sustaining their interest and encouraging deeper cognitive engagement and the development of advanced skills (L. W. Anderson & Krathwohl, 2001; Zeitlhofer et al., 2024).

- (3) Questioning. D. Muijs and Reynolds (2001) assert that effective teaching involves frequent questioning and engaging students in discussion. Although research on question difficulty has yielded inconsistent findings (Redfield & Rousseau, 1981), the appropriate complexity of questions depends on students’ developmental level. Studies indicate that about 75% of questions should elicit correct answers (L. M. Anderson et al., 1979), with the remainder eliciting substantive responses rather than no response at all (L. M. Anderson et al., 1979; Brophy & Good, 1986). Recent studies and meta-analyses emphasize the importance of balancing factual questions with higher-order prompts that promote reasoning and exploration in mathematics (Kokkinou & Kyriakides, 2022; Kyriakides et al., 2013; Orr & Bieda, 2025). Many classrooms rely mainly on questions that have only one correct answer, yet research indicates that incorporating 30–40% process questions, such as those that invite explanations or predictions, can foster deeper mathematical thinking (Björklund et al., 2020). In addition, higher-order questions have been found to be more effective in promoting students’ critical thinking than lower-order questions, which primarily target basic knowledge and comprehension (Barnett & Francis, 2011; Kyriakides et al., 2020; Pollarolo et al., 2023). The type of questions posed should align with the instructional context: basic skills instruction benefits from quick, accurate responses, whereas complex content calls for more challenging questions that may not have a single correct answer. Brophy (1986) highlights that evaluating question quality should take into account frequency, teaching objectives, and timing. Moreover, a mix of product questions (requiring specific responses) and process questions (demanding explanations) is essential, with effective teachers leaning towards more process questions (Evertson et al., 1980; D. Muijs & Reynolds, 2001).
Drawing on studies examining teachers’ questioning skills and their link to student achievement, the DMEE defines this factor through five elements: the type of question asked, the wait time allowed for student responses, the clarity of the question, the appropriateness of its difficulty level, and the way the teacher deals with student responses, whether the question is answered or remains unanswered (Creemers & Kyriakides, 2006). First, teachers are expected to use a combination of product questions, which typically elicit a single correct response, and process questions, which require students to give more elaborate explanations (Askew & Wiliam, 1995; Scheerens, 2013). Second, the length of the pause after a question is considered, with the expectation that it varies according to the question’s level of difficulty. Third, question clarity is assessed by examining the extent to which students understand what is expected of them, that is, what the teacher wants them to do or discover. Fourth, the appropriateness of the question’s difficulty level is evaluated. Optimal question difficulty varies with the context. For example, basic skills instruction requires a great deal of drill and practice and thus calls for frequent, fast-paced review in which most questions are answered rapidly and correctly. However, when teaching complex cognitive content or trying to get students to generalize, evaluate, or apply their learning, effective teachers usually raise questions that may have no single correct answer at all (R. D. Muijs et al., 2014). In general, most questions should elicit correct answers, while the remaining questions should encourage substantive, overt responses (even if incorrect or incomplete) rather than no response at all (Alexander et al., 2022; Brophy & Good, 1986; Kyriakides et al., 2021b). Fifth, the way teachers handle student responses is analyzed: correct answers should be acknowledged as such (not necessarily by the teacher).
When students provide partially correct or incorrect answers, effective teachers acknowledge the accurate parts and, where there is potential for improvement, try to elicit more accurate responses (Rosenshine & Stevens, 1986). These teachers stay engaged with the original respondent by rephrasing the question or providing clues, rather than ending the interaction by supplying the answer themselves or asking another student to respond (Alexander et al., 2022; R. D. Muijs et al., 2014; Kyriakides et al., 2018b).

- (4) Modeling. Although research on teaching higher-order thinking skills and problem solving has a long history, these teaching and learning activities have received unprecedented attention over the past two decades because of policy priorities emphasizing new educational goals. As a result, researchers have investigated the extent to which the modeling factor is linked to student learning outcomes (R. D. Muijs et al., 2014). Numerous studies on teacher effectiveness indicate that effective teachers help students use specific strategies or develop their own approaches to solving various types of problems (Grieve, 2010). Consequently, students are encouraged to cultivate skills that help them organize their own learning, such as self-regulation and active learning (Kraiger et al., 1993). In defining this factor, the DMEE considers not only the characteristics of the modeling tasks provided to students but also the teacher’s role in helping students devise problem-solving strategies. Teachers can either demonstrate a straightforward method for solving a problem or encourage students to articulate their own approaches, using these student-generated strategies as a foundation for modeling. Research suggests that the latter approach may be more effective in promoting students’ ability to apply and refine their own problem-solving strategies (Aparicio & Moneo, 2005; Gijbels et al., 2006; Kyriakides et al., 2020; Polymeropoulou & Lazaridou, 2022).

- (5) Application. Cognitive load theory holds that working memory has limited capacity and can retain only a certain amount of information at a time (Kirschner, 2002; Paas et al., 2003). Drawing on this theory, each lesson should include application activities that give students the opportunity to practice and consolidate newly presented content. Effective teachers facilitate student learning by providing opportunities for practice and application through seatwork or small-group tasks (Borich, 1996; R. D. Muijs et al., 2014). Beyond simply counting the number of application tasks, the application factor examines whether students are merely asked to repeat previously covered material or whether the tasks require engagement at a more complex level than the lesson itself. It also considers whether application tasks serve as starting points for subsequent teaching and learning steps. In addition, this factor covers teacher behaviors related to monitoring, supervising, and providing corrective feedback during application activities: when students work independently or in groups, effective teachers circulate to track progress and offer assistance and constructive feedback (Hattie & Timperley, 2007; Yang et al., 2021).

- (6) Classroom as a learning environment. This factor encompasses five key elements that enable teachers to establish a supportive and task-focused learning environment: teacher-student interaction, student-student interaction, the way the teacher treats students, the level of competition among students, and the degree of classroom disorder. Research on classroom environment highlights the first two elements, teacher-student and student-student interaction, as central components in assessing classroom climate (e.g., Cazden, 1986; Den Brok et al., 2004; Harjunen, 2012). However, the DMEE emphasizes examining the types of interactions present in the classroom rather than students’ perceptions of their teacher’s interpersonal behavior. Specifically, the DMEE focuses on the immediate effects of teacher actions in fostering relevant interactions, since learning occurs through these interactions (Kyriakides et al., 2021b). The model therefore aims to assess how effectively teachers promote on-task behavior by encouraging such interactions. The remaining three elements (the treatment of students, competition, and classroom disorder) concern teachers’ efforts to establish an efficient and supportive learning environment (R. D. Muijs et al., 2014; Walberg, 1986). These are evaluated by observing teacher behaviors in setting rules, encouraging students to respect and follow them, and maintaining these rules to sustain an effective classroom environment.

- (7) Assessment. Assessment is considered an essential component of teaching. In particular, formative assessment has been identified as one of the most significant factors linked to effectiveness at all levels, especially the classroom level (e.g., Christoforidou & Kyriakides, 2021; De Jong et al., 2004; Hopfenbeck, 2018; Panadero et al., 2019; Shepard, 1989). Accordingly, the information collected through assessment is expected to help teachers identify students’ learning needs and evaluate their own instructional practices. Beyond the quality of assessment data (specifically their reliability and validity), the DMEE focuses on the extent to which the formative, rather than summative, purpose of assessment is realized. This factor also encompasses teachers’ skills across all key phases of the assessment process (planning and constructing tools, administering assessments, recording results, and reporting) and emphasizes the dynamic interplay among these phases (Black & Wiliam, 2009; Christoforidou et al., 2014; Christoforidou & Kyriakides, 2021; Kyriakides et al., 2024a).

- (8) Management of time. Building on a critical analysis of earlier models of educational effectiveness research (EER), the DMEE emphasizes that efficient time management is a key indicator of effective classroom management. Carroll’s model (Carroll, 1963), a foundational framework in EER, defined student achievement, or the degree of learning, as a function of the time actually spent on learning (i.e., the quantity of instruction) relative to the time needed to learn. However, Carroll’s model does not address how learning occurs. More recent frameworks, such as Creemers’ comprehensive model (Creemers, 1994), highlight ‘opportunity to learn’ and ‘time on task’ as crucial factors influencing educational outcomes. These factors, which operate at various levels, are strongly connected to student engagement. Thus, effective teachers are expected to create and maintain an environment that maximizes engagement and optimizes learning time (e.g., Creemers & Reezigt, 1996; Scheerens, 2013; Wilks, 1996). Recognizing the limitations of earlier EER models, the DMEE argues that concepts such as time/opportunity and quality are somewhat vague but can be clarified by examining other instructional characteristics linked to learning outcomes. For example, the DMEE argues that effective teachers should organize and manage the classroom so that it functions as an efficient learning environment, thereby maximizing student engagement. To this end, the amount of instructional time used per lesson is considered, as well as how well that time fits within the overall schedule. This factor also examines whether students remain on task or become distracted, and whether teachers address classroom disruptions effectively without wasting teaching time. It further assesses how time is allocated across lesson phases according to their importance, and how it is distributed among different student groups.
Consequently, time management is considered a critical indicator of a teacher’s ability to manage the classroom effectively.
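Carroll’s (1963) model is commonly summarized by the ratio below. In the usual reading, time actually spent reflects opportunity to learn and perseverance, while time needed reflects aptitude, quality of instruction, and the ability to understand instruction. The expression is given only as a compact restatement of the relationship described above; the function f is left unspecified, as in common summaries of Carroll’s model.

```latex
\text{degree of learning} = f\!\left(\frac{\text{time actually spent}}{\text{time needed}}\right)
```

Under this formulation, a student who spends only half the time he or she needs would be expected to reach a lower degree of learning than one who spends all of it. The ratio says nothing about how that time is used, which is the gap the DMEE addresses by linking time management to other instructional characteristics.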

2.2. Using a Multidimensional Approach to Measure the Functioning of Teacher Factors

- (1) Frequency. Frequency is a quantitative way of assessing the functioning of each effectiveness factor by determining how often an activity related to that factor occurs within a system, school, or classroom. Measuring frequency is assumed to indicate the importance teachers attach to each factor (Creemers & Kyriakides, 2008). To date, most effectiveness studies have focused solely on this dimension, probably because it is the simplest way to measure a factor’s functioning. For example, to assess the frequency dimension of the structuring factor, researchers might count the number of structuring tasks in a lesson or the total time spent on such tasks. However, the DMEE contends that a purely quantitative approach is insufficient, especially since the relationship between the frequency of a factor and student achievement may be nonlinear. For instance, a curvilinear relationship is expected between the frequency of teacher assessment and student learning outcomes: excessive emphasis on assessment may reduce the time available for teaching and learning, whereas the absence of any assessment prevents teachers from adapting instruction to students’ needs (Creemers & Kyriakides, 2006; van Geel et al., 2023; Wolterinck et al., 2022). Therefore, the four additional dimensions, which explore qualitative aspects of each factor’s functioning, should also be taken into account.

- (2) Focus. The focus dimension considers both the specificity of the activities linked to a factor’s functioning and the number of objectives each activity is intended to achieve. The DMEE assumes that tasks associated with a factor’s functioning are purposeful and do not occur by chance. For example, when teachers ask students to complete an application task, they presumably aim to achieve specific goals through that activity, such as practicing a particular algorithm to solve a quadratic equation, recognizing its use in various problem types, or identifying different representations of a concept. Researchers measuring the qualitative aspects of a factor’s functioning should therefore try to identify the intended purposes of each activity, acknowledging that an activity may aim to fulfill one or several objectives. The importance of the focus dimension stems from research showing that when all activities target a single purpose, the likelihood of achieving that goal is high, but the overall effect of the factor may be limited because other potential purposes and synergies remain unaddressed (Kyriakides et al., 2021b; Schoenfeld, 1998). Conversely, if all activities aim to achieve multiple purposes, there is a risk that specific objectives will not be addressed thoroughly enough to be achieved (R. D. Muijs et al., 2014; Pellegrino, 2004). The second aspect of the focus dimension is the specificity of activities, which can range from very specific to more general. For example, in relation to the orientation factor, a task may prompt students to reflect on the reasons behind a single task (a specific focus) or behind a whole lesson or sequence of lessons (a more general focus). The DMEE assumes that assessing the focus dimension, in terms of both activity specificity and the number of goals a teacher aims to achieve, helps determine whether an appropriate balance is struck.
In the case of orientation, effective teachers are expected to provide not only specific orientation tasks (e.g., why we are estimating the perimeter of this shape) but also more general ones (e.g., why we study geometry) to support student learning outcomes and foster positive attitudes toward learning (Kyriakides et al., 2021b).

- (3) Stage. This dimension concerns the stage at which tasks associated with a factor take place. Factors need to be sustained over an extended period so that they exert a continuous, direct or indirect, influence on student learning (Creemers, 1994). This assumption is partly grounded in evaluations of programs aimed at improving educational practice, which show that the impact of such interventions depends in part on how long they are implemented within a school (Gray et al., 1999; Kyriakides et al., 2021a, 2024b; Slater & Teddlie, 1992). For instance, structuring tasks are not expected to occur only at the beginning or the end of a lesson but should be distributed across its various stages. Similarly, orientation tasks should not be confined to a single lesson but should extend throughout the school year to sustain student motivation and active engagement in learning. Although the stage dimension captures the continuity of a factor’s presence, the specific activities associated with the factor may vary over time (Creemers, 1994). Measuring the stage dimension therefore makes it possible to determine both the degree of consistency at each level and the flexibility with which the factor is applied over the period studied.

- (4) Quality. This dimension refers to the intrinsic properties of a specific factor, as described in the literature. It matters for measuring a factor’s functioning because research has shown that only particular activities linked to a factor have a positive influence on student learning outcomes. For example, for orientation, the quality dimension refers to the properties of the orientation task, specifically whether it is clear to students and whether it has any impact on their learning. Some teachers may present the reasons for performing a task simply because it is part of their teaching routine, with little effect on student participation. Others may encourage students to identify the purposes that can be achieved by performing a task and thereby increase their motivation towards a specific task, lesson, or series of lessons. Similarly, the quality of application tasks is measured by the extent to which students are simply asked to repeat what they have already covered with their teacher, or whether the task is more complex than the content covered in the lesson, perhaps even serving as a starting point for the next step in teaching and learning. The extent to which application tasks are used as starting points for learning can also be seen as an indication of their impact on students.

- (5)

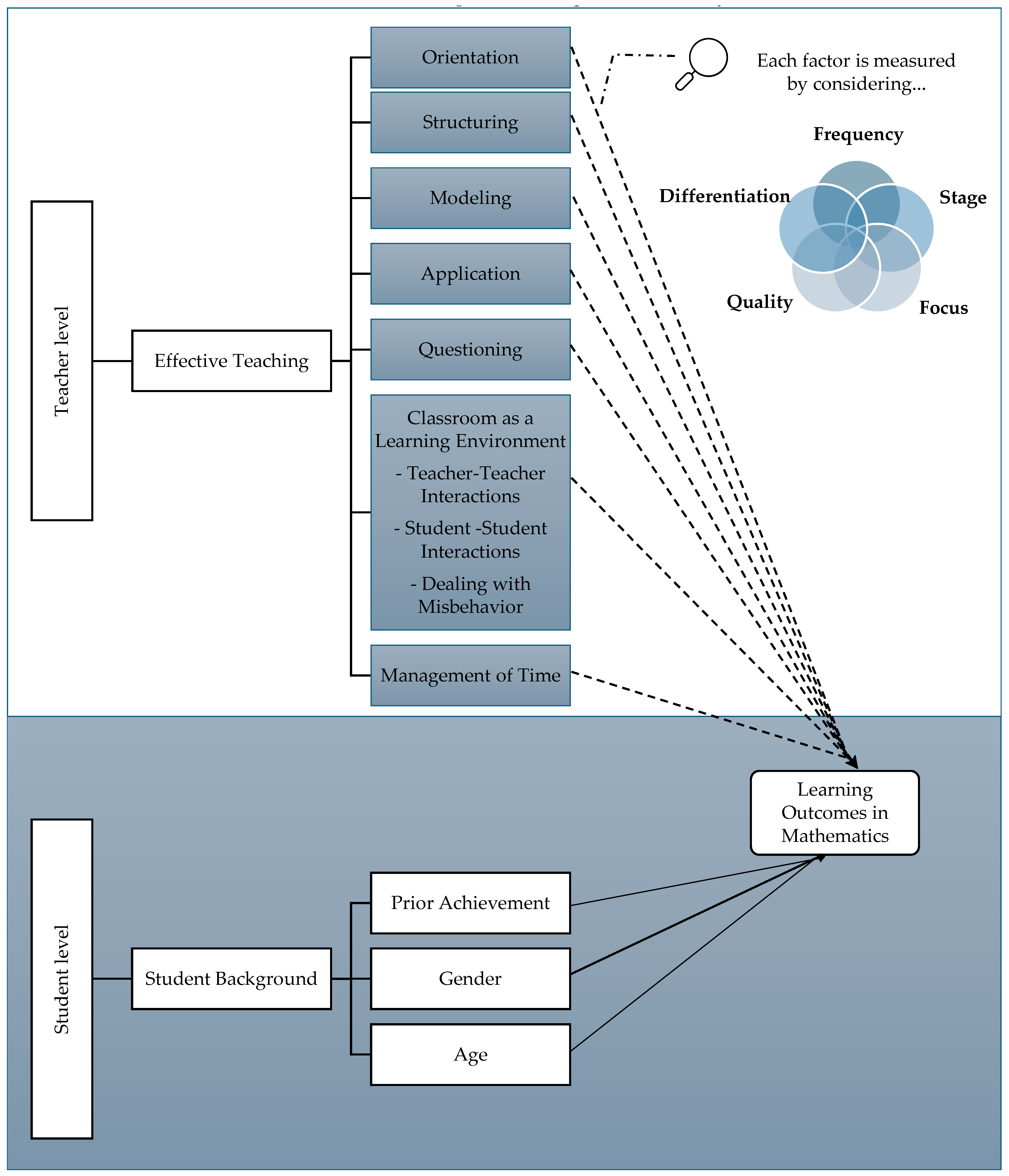

- Differentiation. Differentiation refers to the extent to which activities associated with a factor are carried out consistently for all individuals involved (e.g., students, teachers, and schools). Adapting instruction and related activities to the specific needs of each individual or group is expected to improve the effective implementation of the factor and ultimately maximize its impact on student learning outcomes. While differentiation might be considered a characteristic of an effectiveness factor, it has been intentionally treated as a distinct dimension for measuring each effectiveness factor, rather than being included within the quality dimension (see Kyriakides et al., 2021b). This distinction highlights the importance of recognizing and addressing the unique needs of each student or group. The DMEE asserts that it is difficult to deny that individuals of all ages learn, think, and process information differently. One approach to differentiation involves teachers tailoring their instruction to meet the individual learning needs of students, which are influenced by factors such as gender, socioeconomic status (SES), ability, thinking style, and personality type (Goyibova et al., 2025; Jiang & Yang, 2022; Kyriakides, 2007; Zajda, 2024). For example, effective teachers tend to provide low-achieving students with more active instruction and feedback, greater redundancy, and smaller instructional steps that lead to higher success rates (Brophy, 1986; Creemers & Kyriakides, 2006; Zeng, 2025). Importantly, the differentiation dimension does not suggest that different students are expected to reach different goals. Instead, adopting policies to address the unique needs of various groups of schools, teachers, or students is intended to ensure that all can achieve the same educational objectives. This perspective is supported by research that focuses on advancing both quality and equity in education (e.g., Kelly, 2014; Kyriakides et al., 2018a). 
Therefore, by treating differentiation as a distinct measurement dimension, the DMEE aims to support schools where teaching practices are maladaptive and assist teachers in implementing differentiation that does not hold back lower achievers or increase individual differences (Anastasou & Kyriakides, 2024; Caro et al., 2016; Kokkinou & Kyriakides, 2022; Kyriakides et al., 2019; Perry et al., 2022; Scherer & Nilsen, 2019; Tan et al., 2023). In conclusion, we argue for adopting a multidimensional perspective when examining the contribution of specific teaching practices. Additionally, an integrated approach to defining teaching quality is employed, drawing upon prior studies that have utilized the DMEE framework. To address the research questions presented in the next section, this study is grounded in the theoretical framework depicted in Figure 2, which incorporates both student-level and teacher-level effectiveness factors. Teaching quality is defined through seven of the eight teacher-level factors outlined in the DMEE, except of assessment, which was not measured in the present study. For practical reasons, it was only possible to use the observation instruments developed by Creemers and Kyriakides (2012) to measure the teacher factors of the DMEE and not the student and teacher questionnaires, which were designed in order to generate data on the assessment factor (see Christoforidou et al., 2014; Christoforidou & Kyriakides, 2021; Kyriakides et al., 2024a). It is important to note that these factors of DMEE are considered as multidimensional constructs assessed across the five dimensions previously described, aiming to determine their impact on students’ learning outcomes in mathematics. Furthermore, selected student background variables—namely prior achievement in mathematics, age, and gender—were included to examine their predictive validity in accounting for variations in student achievement (see Figure 2, which presents the study’s theoretical framework).
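The curvilinear relationship expected for the frequency dimension (for example, between the frequency of teacher assessment and student outcomes) is often examined by adding a squared term to a regression model. The specification below is a generic, single-level illustration under that assumption, not the model estimated in this study; $y_{ij}$ stands for the achievement of student $i$ in classroom $j$ and $F_j$ for the frequency of a factor in that classroom.

```latex
\begin{aligned}
y_{ij} &= \beta_0 + \beta_1 F_j + \beta_2 F_j^{2} + \varepsilon_{ij}, \qquad \beta_1 > 0,\ \beta_2 < 0,\\
\frac{\partial\, \mathbb{E}[y_{ij}]}{\partial F_j} &= \beta_1 + 2\beta_2 F_j = 0
\;\Longrightarrow\; F^{*} = -\frac{\beta_1}{2\beta_2}.
\end{aligned}
```

With $\beta_2 < 0$, predicted achievement rises with frequency up to $F^{*}$ and falls beyond it. This matches the argument that too much assessment reduces teaching time while no assessment at all prevents teachers from adapting instruction.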
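The frequency and stage dimensions can both be derived from the same low-inference record of a lesson. The sketch below is illustrative only. It assumes a hypothetical coding format in which each observed task is logged with its factor and its start and end minute. It also assumes a hypothetical rule that splits the lesson into equal thirds (introduction, core, conclusion). It does not reproduce the observation instruments of Creemers and Kyriakides (2012), and the example lesson is invented.

```python
from collections import Counter
from dataclasses import dataclass

PHASES = ("introduction", "core", "conclusion")


@dataclass
class ObservedTask:
    """One coded activity from a lesson observation (hypothetical coding format)."""
    factor: str       # e.g. "structuring", "orientation"
    start_min: float  # minute of the lesson at which the task starts
    end_min: float    # minute at which the task ends


def frequency(tasks, factor):
    """Frequency dimension: number of tasks and total minutes spent on one factor."""
    selected = [t for t in tasks if t.factor == factor]
    minutes = sum(t.end_min - t.start_min for t in selected)
    return len(selected), minutes


def lesson_phase(start_min, lesson_length_min):
    """Assign a task to a phase by splitting the lesson into equal thirds (assumed rule)."""
    fraction = start_min / lesson_length_min
    if fraction < 1 / 3:
        return "introduction"
    if fraction < 2 / 3:
        return "core"
    return "conclusion"


def stage_profile(tasks, factor, lesson_length_min):
    """Stage dimension: tasks per phase and the share of phases in which the factor appears."""
    counts = Counter(
        lesson_phase(t.start_min, lesson_length_min)
        for t in tasks
        if t.factor == factor
    )
    per_phase = {phase: counts[phase] for phase in PHASES}
    coverage = sum(1 for phase in PHASES if per_phase[phase] > 0) / len(PHASES)
    return per_phase, coverage


if __name__ == "__main__":
    # Hypothetical 40-minute lesson, invented purely for illustration.
    lesson = [
        ObservedTask("structuring", 0.0, 2.5),
        ObservedTask("orientation", 2.5, 4.0),
        ObservedTask("structuring", 15.0, 16.0),
        ObservedTask("structuring", 38.0, 40.0),
    ]
    print(frequency(lesson, "structuring"))          # (3, 5.5)
    print(stage_profile(lesson, "structuring", 40))  # ({'introduction': 1, 'core': 1, 'conclusion': 1}, 1.0)
```

A coverage of 1.0 means that structuring tasks occurred in every phase of the lesson, as the stage dimension expects. Applying the same functions across several observed lessons would give a rough indication of continuity over time. Quality, focus, and differentiation, by contrast, require judgments about the content of each task and cannot be reduced to counts in this way.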

3. Research Aim

- To what extent can the five dimensions proposed by the DMEE be used to measure each teacher factor of the model validly and reliably?

- Which teacher factors of the DMEE (if any) explain variation in student achievement in mathematics?

- To what extent does using all five dimensions to measure each factor help explain a larger proportion of the variance in student achievement in mathematics?

4. Methods

4.1. Measuring Students’ Achievement in Mathematics

4.2. Measuring Quality of Teaching

5. Results

5.1. Using Five Dimensions to Measure Each Teacher Factor: Testing the Validity of the Proposed Measurement Framework

5.2. Searching for Teacher Factors Associated with Student Achievement in Mathematics

6. Discussion

7. Research Limitations and Suggestions for Further Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| DMEE | Dynamic Model of Educational Effectiveness |
| ECE | Early Childhood Education |
| CFA | Confirmatory Factor Analysis |
| MTMM | Multitrait Multimethod Matrix |
| TPD | Teacher Professional Development |

Note 1: The Greek Interdisciplinary Integrated Curriculum Framework for Kindergarten can be retrieved from: https://users.sch.gr/simeonidis/paidagogika/27deppsaps_Nipiagogiou.pdf (accessed on 1 May 2020).

References

- Alexander, K., Gonzalez, C. H., Vermette, P. J., & Di Marco, S. (2022). Questions in secondary classrooms: Toward a theory of questioning. Theory and Research in Education, 20(1), 5–25.

- Anastasou, M., & Kyriakides, L. (2024). Academically resilient students: Searching for differential teacher effects in mathematics. School Effectiveness and School Improvement, 35, 48–72.

- Anderson, L. M., Evertson, C. M., & Brophy, J. E. (1979). An experimental study of effective teaching in first-grade reading groups. The Elementary School Journal, 79, 193–223.

- Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching and assessing: A revision of Bloom’s taxonomy of educational objectives: Complete edition. Longman.

- Andrich, D. (1988). Rasch models for measurement. Sage Publications, Inc.

- Aparicio, J. J., & Moneo, M. R. (2005). Constructivism, the so-called semantic learning theories, and situated cognition versus the psychological learning theories. Spanish Journal of Psychology, 8(2), 180–198.

- Askew, M., & Wiliam, D. (1995). Recent research in mathematics education 5–16. HMSO.

- Azigwe, J. B., Kyriakides, L., Panayiotou, A., & Creemers, B. P. M. (2016). The impact of effective teaching characteristics in promoting student achievement in Ghana. International Journal of Educational Development, 51, 51–61.

- Balladares, J., & Kankaraš, M. (2020). Attendance in early childhood education and care programmes and academic proficiencies at age 15 (OECD education working papers, No. 214). OECD Publishing.

- Barnett, J. E., & Francis, A. L. (2011). Using higher order thinking questions to foster critical thinking: A classroom study. Educational Psychology, 32(2), 201–211.

- Berber, N., Langelaan, L., Gaikhorst, L., Smets, W., & Oostdam, R. J. (2024). Differentiating instruction: Understanding the key elements for successful teacher preparation and development. Teaching and Teacher Education, 140, 104464.

- Björklund, C., van den Heuvel-Panhuizen, M., & Kullberg, A. (2020). Research on early childhood mathematics teaching and learning. ZDM, 52, 607–619.

- Black, P., & Wiliam, D. (2009). Developing a theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21(1), 5–31.

- Borich, G. D. (1996). Effective teaching methods (3rd ed.). Macmillan.

- Brophy, J. (1986). Teacher influences on student achievement. American Psychologist, 41(10), 1069–1077.

- Brophy, J., & Good, T. L. (1986). Teacher behavior and student achievement. In M. C. Wittrock (Ed.), Handbook of research on teaching (3rd ed., pp. 328–375). Macmillan.

- Caro, D. H., Lenkeit, J., & Kyriakides, L. (2016). Teaching strategies and differential effectiveness across learning contexts: Evidence from PISA 2012. Studies in Educational Evaluation, 49, 30–41.

- Carroll, J. B. (1963). A model of school learning. Teachers College Record, 64(8), 723–733.

- Cazden, C. B. (1986). Classroom discourse. In M. C. Wittrock (Ed.), Handbook of research on teaching (pp. 432–463). Macmillan.

- Chapman, C., Muijs, D., Reynolds, D., Sammons, P., & Teddlie, C. (2016). The Routledge international handbook of educational effectiveness and improvement. Routledge.

- Christoforidou, M., & Kyriakides, L. (2021). Developing teacher assessment skills: The impact of the dynamic approach to teacher professional development. Studies in Educational Evaluation, 70, 101051.

- Christoforidou, M., Kyriakides, L., Antoniou, P., & Creemers, B. P. M. (2014). Searching for stages of teacher’s skills in assessment. Studies in Educational Evaluation, 40, 1–11.

- Creemers, B. P. M. (1994). The effective classroom. Cassell.

- Creemers, B. P. M., & Kyriakides, L. (2006). Critical analysis of the current approaches to modelling educational effectiveness: The importance of establishing a dynamic model. School Effectiveness and School Improvement, 17(3), 347–366.

- Creemers, B. P. M., & Kyriakides, L. (2008). The dynamics of educational effectiveness: A contribution to policy, practice and theory in contemporary schools. Routledge.

- Creemers, B. P. M., & Kyriakides, L. (2012). Improving quality in education: Dynamic approaches to school improvement. Routledge.

- Creemers, B. P. M., Kyriakides, L., & Antoniou, P. (2013). Teacher professional development for improving quality of teaching. Springer.

- Creemers, B. P. M., Kyriakides, L., & Sammons, P. (2010). Methodological advances in educational effectiveness research. Routledge.

- Creemers, B. P. M., & Reezigt, G. J. (1996). School level conditions affecting the effectiveness of instruction. School Effectiveness and School Improvement, 7(3), 197–228.

- De Corte, E. (2000). Marrying theory building and the improvement of school practice: A permanent challenge for instructional psychology. Learning and Instruction, 10(3), 249–266.

- De Jong, R., Westerhof, K. J., & Kruiter, J. H. (2004). Empirical evidence of a comprehensive model of school effectiveness: A multilevel study in mathematics in the first year of junior general education in the Netherlands. School Effectiveness and School Improvement, 15(1), 3–31.

- Demetriou, D., & Kyriakides, L. (2012). The impact of school self-evaluation upon student achievement: A group randomisation study. Oxford Review of Education, 38(2), 149–170.

- Den Brok, P., Brekelmans, M., & Wubbels, T. (2004). Interpersonal teacher behaviour and student outcomes. School Effectiveness and School Improvement, 15(3/4), 407–442.

- Dierendonck, C. (2023). Measuring the classroom level of the Dynamic Model of Educational Effectiveness through teacher self-report: Development and validation of a new instrument. Frontiers in Education, 8, 1281431.

- Dimosthenous, A., Kyriakides, L., & Panayiotou, A. (2020). Short- and long-term effects of the home learning environment and teachers on student achievement in mathematics: A longitudinal study. School Effectiveness and School Improvement, 31(1), 50–79.

- Doyle, W. (1986). Classroom organization and management. In M. C. Wittrock (Ed.), Handbook of research on teaching (3rd ed., pp. 392–431). Macmillan.

- Eikeland, I., & Ohna, S. E. (2022). Differentiation in education: A configurative review. Nordic Journal of Studies in Educational Policy, 8(3), 157–170.

- Eliassen, E., Brandlistuen, R. E., & Wang, M. V. (2023). The effect of ECEC process quality on school performance and the mediating role of early social skills. European Early Childhood Education Research Journal, 32(2), 325–338.

- Evertson, C. M., Anderson, C. W., Anderson, L. M., & Brophy, J. E. (1980). Relationships between classroom behaviors and student outcomes in junior high mathematics and English classes. American Educational Research Journal, 17, 43–60.

- Fuller, B., Bein, E., Bridges, M., Kim, Y., & Rabe-Hesketh, S. (2017). Do academic preschools yield stronger benefits? Cognitive emphasis, dosage, and early learning. Journal of Applied Developmental Psychology, 52, 1–11. [Google Scholar] [CrossRef]

- Getenet, S. T., & Beswick, K. (2023). The influence of students’ prior numeracy achievement on later numeracy achievement as a function of gender and year levels. Mathematics Education Research Journal, 35(2), 123–140. [Google Scholar] [CrossRef]

- Gijbels, D., Van de Watering, G., Dochy, F., & Van den Bossche, P. (2006). New learning environments and constructivism: The students’ perspective. Instructional Science, 34(3), 213–226. [Google Scholar] [CrossRef]

- Goyibova, N., Muslimov, N., Sabirova, G., Kadirova, N., & Samatova, B. (2025). Differentiation approach in education: Tailoring instruction for diverse learner needs. MethodsX, 14, 103163. [Google Scholar] [CrossRef]

- Gray, J., Hopkins, D., Reynolds, D., Wilcox, B., Farrell, S., & Jesson, D. (1999). Improving school: Performance and potential. Open University Press. [Google Scholar]

- Grieve, A. M. (2010). Exploring the characteristics of “teachers for excellence”: Teachers’ own perceptions. European Journal of Teacher Education, 33(3), 265–277. [Google Scholar] [CrossRef]

- Hachey, A. C. (2013). The early childhood mathematics education revolution. Early Education and Development, 24(4), 419–430. [Google Scholar] [CrossRef]

- Harjunen, E. (2012). Patterns of control over the teaching–studying–learning process and classrooms as complex dynamic environments: A theoretical framework. European Journal of Teacher Education, 34(2), 139–161. [Google Scholar] [CrossRef]

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

- Heck, R. H., & Moriyama, K. (2010). Examining relationships among elementary schools’ contexts, leadership, instructional practices, and added-year outcomes: A regression discontinuity approach. School Effectiveness and School Improvement, 21(4), 377–408.

- Hopfenbeck, T. N. (2018). Classroom assessment, pedagogy and learning–twenty years after Black and Wiliam 1998. Assessment in Education: Principles, Policy & Practice, 25(6), 545–550.

- Jiang, Y., & Yang, X. (2022). Individual and contextual determinants of students’ learning and performance. Educational Psychology, 42(4), 397–400.

- Katsantonis, I. G. (2025). Typologies of teaching strategies in classrooms and students’ metacognition and motivation: A latent profile analysis of the Greek PISA 2018 data. Metacognition and Learning, 20(4), 86–108.

- Keeves, J. P., & Alagumalai, S. (1999). New approaches to measurement. In G. N. Masters, & J. P. Keeves (Eds.), Advances in measurement in educational research and assessment (pp. 23–42). Pergamon.

- Kelly, A. (2014). Measuring equity in educational effectiveness research: The properties and possibilities of quantitative indicators. International Journal of Research & Method in Education, 38(2), 115–136.

- Kirschner, P. A. (2002). Cognitive load theory: Implications of cognitive load theory on the design of learning. Learning and Instruction, 12(1), 1–10.

- Knaus, M. (2017). Supporting early mathematics learning in early childhood settings. Australasian Journal of Early Childhood, 42(3), 4–13.

- Kokkinou, E., & Kyriakides, L. (2022). Investigating differential teacher effectiveness: Searching for the impact of classroom context factors. School Effectiveness and School Improvement, 33(3), 403–430.

- Konstantopoulos, S. (2011). Teacher effects in early grades: Evidence from a randomized study. Teachers College Record, 113(7), 1541–1565.

- Kraiger, K., Ford, J. K., & Salas, E. (1993). Application of cognitive, skill-based, and affective theories of learning outcomes to new methods of training evaluation. Journal of Applied Psychology, 78(2), 311–328.

- Krepf, M., & König, J. (2022). Structuring the lesson: An empirical investigation of pre-service teacher decision-making during the planning of a demonstration lesson. Journal of Education for Teaching, 49(5), 911–926.

- Kyriakides, L. (2002). A research-based model for the development of policy on baseline assessment. British Educational Research Journal, 28(6), 805–826.

- Kyriakides, L. (2007). Generic and differentiated models of educational effectiveness: Implications for the improvement of educational practice. In T. Townsend (Ed.), International handbook of school effectiveness and improvement (pp. 41–56). Springer.

- Kyriakides, L., Anthimou, M., & Panayiotou, A. (2020). Searching for the impact of teacher behavior on promoting students’ cognitive and metacognitive skills. Studies in Educational Evaluation, 64, 100810.

- Kyriakides, L., Antoniou, P., & Dimosthenous, A. (2021a). Does the duration of school interventions matter? The effectiveness and sustainability of using the dynamic approach to promote quality and equity. School Effectiveness and School Improvement, 32, 607–630.

- Kyriakides, L., Charalambous, E., Christoforidou, M., Antoniou, P., & Ioannou, I. (2024a). Searching for differential effects of the dynamic approach to teacher professional development: A study on promoting formative assessment. European Journal of Teacher Education, 47, 1–20.

- Kyriakides, L., Christoforou, C., & Charalambous, C. Y. (2013). What matters for student learning outcomes: A meta-analysis of studies exploring factors of effective teaching. Teaching and Teacher Education, 36, 143–152.

- Kyriakides, L., & Creemers, B. P. M. (2008). Using a multidimensional approach to measure the impact of classroom-level factors upon student achievement: A study testing the validity of the dynamic model. School Effectiveness and School Improvement, 19(2), 183–205.

- Kyriakides, L., & Creemers, B. P. M. (2009). The effects of teacher factors on different outcomes: Two studies testing the validity of the dynamic model. Effective Education, 1(1), 61–86.

- Kyriakides, L., Creemers, B. P. M., & Charalambous, E. (2018a). Equity and quality dimensions in educational effectiveness. Springer.

- Kyriakides, L., Creemers, B. P. M., & Charalambous, E. (2019). Searching for differential teacher and school effectiveness in terms of student socioeconomic status and gender: Implications for promoting equity. School Effectiveness and School Improvement, 30(3), 286–308.

- Kyriakides, L., Creemers, B. P. M., & Panayiotou, A. (2018b). Using educational effectiveness research to promote quality of teaching: The contribution of the dynamic model. ZDM – Mathematics Education, 50, 381–393.

- Kyriakides, L., Creemers, B. P. M., Panayiotou, A., & Charalambous, E. (2021b). Quality and equity in education: Revisiting theory and research on educational effectiveness and improvement (1st ed.). Routledge.

- Kyriakides, L., Ioannou, I., Charalambous, E., & Michaelidou, V. (2024b). The dynamic approach to school improvement: Investigating duration and sustainability effects on student achievement in mathematics. School Effectiveness and School Improvement, 35, 342–364.

- Leinhardt, G. (1989). Math lessons: A contrast of novice and expert competence. Journal for Research in Mathematics Education, 20(1), 52–75.

- Linder, S. M., Powers-Costello, B., & Stegelin, D. A. (2011). Mathematics in early childhood: Research-based rationale and practical strategies. Early Childhood Education Journal, 39, 29–37.

- Lindorff, A., Sammons, P., & Hall, J. (2020). International perspectives in educational effectiveness research: A historical overview. In J. Hall, A. Lindorff, & P. Sammons (Eds.), International perspectives in educational effectiveness research (pp. 23–44). Springer.

- Mejía-Rodríguez, A. M., & Kyriakides, L. (2022). What matters for student learning outcomes? A systematic review of studies exploring system-level factors of educational effectiveness. Review of Education, 34, e3374.

- Michaelidou, V. (2025). Searching for effective teacher practices during play to promote student learning outcomes. Studies in Educational Evaluation, 86, 101473.

- Ministry of Education, Religious Affairs and Sports. (2003). Greek interdisciplinary integrated curriculum framework for kindergarten (Διαθεματικό Ενιαίο Πλαίσιο Προγραμμάτων Σπουδών (ΔΕΠΠΣ) για το Νηπιαγωγείο). The Institute of Educational Policy. Available online: http://www.pi-schools.gr/content/index.php?lesson_id=300&ep=367 (accessed on 1 May 2020).

- Muijs, D., & Reynolds, D. (2001). Effective teaching: Evidence and practice. Sage.

- Muijs, R. D., Kyriakides, L., van der Werf, G., Creemers, B. P. M., Timperley, H., & Earl, L. (2014). State of the art – teacher effectiveness and professional learning. School Effectiveness and School Improvement, 25(2), 231–256.

- Orr, S., & Bieda, K. (2025). Learning to elicit student thinking: The role of planning to support academically rigorous questioning sequences during instruction. Journal of Mathematics Teacher Education, 28(3), 523–544.

- Paas, F., Renkl, A., & Sweller, J. (2003). Cognitive load theory and instructional design: Recent developments. Educational Psychologist, 38(1), 1–4.

- Panadero, E., Broadbent, J., Boud, D., & Lodge, J. M. (2019). Using formative assessment to influence self- and co-regulated learning: The role of evaluative judgement. European Journal of Psychology of Education, 34, 535–557.

- Panayiotou, A., Kyriakides, L., & Creemers, B. P. M. (2016). Testing the validity of the dynamic model at school level: A European study. School Leadership and Management, 36(1), 1–20.

- Panayiotou, A., Kyriakides, L., Creemers, B. P. M., McMahon, L., Vanlaar, G., Pfeifer, M., Rekalidou, G., & Bren, M. (2014). Teacher behavior and student outcomes: Results of a European study. Educational Assessment, Evaluation and Accountability, 26, 73–93.

- Paris, S. G., & Paris, A. H. (2001). Classroom applications of research on self-regulated learning. Educational Psychologist, 36(2), 89–101.

- Pellegrino, J. W. (2004). Complex learning environments: Connecting learning theory, instructional design, and technology. In N. M. Seel, & S. Dijkstra (Eds.), Curriculum, plans, and processes in instructional design (pp. 25–49). Lawrence Erlbaum Associates.

- Perry, L. B., Saatcioglu, A., & Mickelson, R. A. (2022). Does school SES matter less for high-performing students than for their lower-performing peers? A quantile regression analysis of PISA 2018 Australia. Large-Scale Assessments in Education, 10, 17.

- Pollarolo, E., Skarstein, T. H., Størksen, I., & Kucirkova, N. (2023). Mathematics and higher-order thinking in early childhood education and care (ECEC). Nordisk Barnehageforskning, 20(2), 70–88.

- Polymeropoulou, V., & Lazaridou, A. (2022). Quality teaching: Finding the factors that foster student performance in junior high school classrooms. Education Sciences, 12(5), 327.

- Pramling, N. (2022). Educating early childhood education teachers for play-responsive early childhood education and care (PRECEC). In E. Loizou, & J. Trawick-Smith (Eds.), Teacher education and play pedagogy: International perspectives (1st ed., pp. 67–81). Routledge.

- Redfield, D. L., & Rousseau, E. W. (1981). A meta-analysis of experimental research on teacher questioning behavior. Review of Educational Research, 51(2), 237–245.

- Reezigt, G. J., Guldemond, H., & Creemers, B. P. M. (1999). Empirical validity for a comprehensive model on educational effectiveness. School Effectiveness and School Improvement, 10(2), 193–217.

- Rosenshine, B., & Stevens, R. (1986). Teaching functions. In M. C. Wittrock (Ed.), Handbook of research on teaching (3rd ed., pp. 376–391). Macmillan.

- Sammons, P. (2009). The dynamics of educational effectiveness: A contribution to policy, practice and theory in contemporary schools. School Effectiveness and School Improvement, 20(1), 123–129.

- Sammons, P., Sylva, K., Hall, J., Siraj, I., Melhuish, E., Taggart, B., & Mathers, S. (2017). Establishing the effects of quality in early childhood: Comparing evidence from England. Early Education, 1–10.

- Sammons, P., Sylva, K., Melhuish, E., Siraj-Blatchford, I., Taggart, B., & Hunt, S. (2008a). Effective pre-school and primary education 3-11 Project (EPPE 3-11): Influences on children’s attainment and progress in key stage 2: Cognitive outcomes in year 6. Research report No. DCSF-RR048. DCSF Publications.

- Sammons, P., Sylva, K., Siraj-Blatchford, I., Taggart, B., Smees, R., & Melhuish, E. (2008b). Effective pre-school and primary education 3-11 project (EPPE 3-11) influences on pupils’ self-perceptions in primary school: Enjoyment of school, anxiety and isolation, and self-image in year 5. Institute of Education, University of London.

- Scheerens, J. (1992). Effective schooling: Research, theory and practice. Cassell.

- Scheerens, J. (2013). The use of theory in school effectiveness research revisited. School Effectiveness and School Improvement, 24(1), 1–38.

- Scheerens, J. (2016). Educational effectiveness and ineffectiveness: A critical review of the knowledge base. Springer.

- Scheerens, J., & Bosker, R. J. (1997). The foundations of educational effectiveness. Pergamon.

- Scherer, R., & Nilsen, T. (2019). Closing the gaps? Differential effectiveness and accountability as a road to school improvement. School Effectiveness and School Improvement, 30(3), 255–260.

- Schoenfeld, A. H. (1998). Toward a theory of teaching-in-context. Issues in Education, 4(1), 1–94.

- Seidel, T., & Shavelson, R. J. (2007). Teaching effectiveness research in the past decade: The role of theory and research design in disentangling meta-analysis results. Review of Educational Research, 77(4), 454–499.

- Shepard, L. A. (1989). Why we need better assessment. Educational Leadership, 46(2), 4–8.

- Shuey, E., & Kankaraš, M. (2018). The power and promise of early learning (OECD education working papers, No. 186). OECD Publishing.

- Slater, R. O., & Teddlie, C. (1992). Toward a theory of school effectiveness and leadership. School Effectiveness and School Improvement, 3(4), 247–257.

- Stringfield, S. C., & Slavin, R. E. (1992). A hierarchical longitudinal model for elementary school effects. In B. P. M. Creemers, & G. J. Reezigt (Eds.), Evaluation of educational effectiveness (pp. 35–69). ICO.

- Tan, C. Y., Hong, X., Gao, L., & Song, Q. (2023). Meta-analytical insights on school SES effects. Educational Review, 77(1), 274–302.

- Teddlie, C., & Reynolds, D. (2000). The international handbook of school effectiveness research. Falmer Press.

- ten Braak, D., Lenes, R., Purpura, D. J., Schmitt, S. A., & Størksen, I. (2022). Why do early mathematics skills predict later mathematics and reading achievement? The role of executive function. Journal of Experimental Child Psychology, 214, 105306.

- Townsend, T. (2007). International handbook of school effectiveness and improvement. Springer.

- van Geel, M., Voeten, M., Jansen, L., Helms-Lorenz, M., Maulana, R., & Visscher, A. (2023). Adapting teaching to students’ needs: What does it require from teachers? In R. Maulana, M. Helms-Lorenz, & R. M. Klassen (Eds.), Effective teaching around the world (pp. 723–736). Springer.

- Vogel, S. E., Grabner, R. H., & Schneider, M. (2023). Foundations for future math achievement: Early numeracy, home learning environment, and the absence of math anxiety. Trends in Neuroscience and Education, 33, 100217.

- Walberg, H. J. (1986). Syntheses of research on teaching. In M. C. Wittrock (Ed.), Handbook of research on teaching (pp. 214–229). Macmillan.

- Watts, T. W., Duncan, G. J., Siegler, R. S., & Davis-Kean, P. E. (2024). It matters how you start: Early numeracy mastery predicts high school math course-taking and college attendance. Developmental Psychology, 60(1), 123–135.

- Wilks, R. (1996). Classroom management in primary schools: A review of the literature. Behaviour Change, 13, 20–32.

- Wolterinck, C., Poortman, C., Schildkamp, K., & Visscher, A. (2022). Assessment for learning: Developing the required teacher competencies. European Journal of Teacher Education, 47(4), 711–729.

- Wright, B. D. (1985). Additivity in psychological measurement. In E. E. Roskam (Ed.), Measurement and personality assessment (Vol. 8, pp. 101–111). Elsevier.

- Yang, L., Chiu, M. M., & Yan, Z. (2021). The power of teacher feedback in affecting student learning and achievement: Insights from students’ perspective. Educational Psychology, 41(7), 821–824.

- Zajda, J. (2024). Social and cultural factors and their influences on engagement in the classroom. In Engagement, motivation, and students’ achievement (Vol. 48, pp. 324–342). Globalisation, comparative education and policy research. Springer.

- Zeitlhofer, I., Zumbach, J., & Schweppe, J. (2024). Complexity affects performance, cognitive load, and awareness. Learning and Instruction, 94, 102001.

- Zeng, Y. (2025). Research on the strategies of satisfying students’ individualized learning needs in curriculum education. Journal of International Education and Development, 9(1), 48–53.

| SEM model | χ² | d.f. | CFI | RMSEA | χ²/d.f. |
|---|---|---|---|---|---|
| Orientation | | | | | |
| (1) 5 correlated traits, 2 correlated methods | 118.7 | 69 | 0.945 | 0.03 | 1.72 |
| Questioning | | | | | |
| (1) 5 correlated traits, 2 correlated methods | 126.3 | 69 | 0.948 | 0.04 | 1.83 |
| Application | | | | | |
| (1) 5 correlated traits, 2 correlated methods | 116.6 | 69 | 0.952 | 0.03 | 1.69 |
| Modeling | | | | | |
| (1) 4 correlated traits, 2 correlated methods | 119.4 | 73 | 0.949 | 0.03 | 1.63 |
| Management of Time | | | | | |
| (1) 5 correlated traits, 2 correlated methods | 117.3 | 69 | 0.953 | 0.02 | 1.70 |
| Structuring | | | | | |
| (1) 5 correlated traits, 2 correlated methods | 120.8 | 69 | 0.956 | 0.02 | 1.75 |
| Classroom as a Learning Environment (teacher-student relations, student-student relations) | | | | | |
| (1) 2 correlated second-order factors, 2 correlated methods | 118.0 | 69 | 0.955 | 0.02 | 1.71 |
| Dealing with Misbehavior | | | | | |
| (1) 5 correlated traits, 2 correlated methods | 127.0 | 69 | 0.951 | 0.04 | 1.84 |
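The χ²/d.f. column is the normed chi-square, i.e. χ² divided by the degrees of freedom. The short Python sketch below (standard library only) recomputes it from the reported values and checks each model against commonly cited rule-of-thumb cutoffs. The cutoff constants `MAX_RATIO`, `MIN_CFI` and `MAX_RMSEA`, and the helper names, are illustrative assumptions, not criteria stated in this excerpt.

```python
# Fit statistics as printed in the SEM table (one MTMM model per factor).
# Tuple layout: (chi_square, degrees_of_freedom, cfi, rmsea, reported_ratio)
SEM_FITS = {
    "Orientation": (118.7, 69, 0.945, 0.03, 1.72),
    "Questioning": (126.3, 69, 0.948, 0.04, 1.83),
    "Application": (116.6, 69, 0.952, 0.03, 1.69),
    "Modeling": (119.4, 73, 0.949, 0.03, 1.63),
    "Management of Time": (117.3, 69, 0.953, 0.02, 1.70),
    "Structuring": (120.8, 69, 0.956, 0.02, 1.75),
    "Classroom as a Learning Environment": (118.0, 69, 0.955, 0.02, 1.71),
    "Dealing with Misbehavior": (127.0, 69, 0.951, 0.04, 1.84),
}

# ASSUMPTION: generic rule-of-thumb cutoffs chosen for illustration only;
# they are not taken from the article.
MAX_RATIO = 3.0
MIN_CFI = 0.90
MAX_RMSEA = 0.05


def chi_square_ratio(chi_square: float, df: int) -> float:
    """Return the normed chi-square (chi-square divided by degrees of freedom)."""
    return chi_square / df


def acceptable_fit(chi_square: float, df: int, cfi: float, rmsea: float) -> bool:
    """Check one model against the assumed cutoffs above."""
    return (
        chi_square_ratio(chi_square, df) < MAX_RATIO
        and cfi >= MIN_CFI
        and rmsea <= MAX_RMSEA
    )


if __name__ == "__main__":
    for factor, (chi2, df, cfi, rmsea, reported) in SEM_FITS.items():
        ratio = chi_square_ratio(chi2, df)
        ok = acceptable_fit(chi2, df, cfi, rmsea)
        print(f"{factor:<38} chi2/df={ratio:.3f} (reported {reported:.2f}) acceptable={ok}")
```

The recomputed ratios agree with the printed ones to within 0.01. For Modeling, 119.4/73 ≈ 1.636, so whether it appears as 1.63 or 1.64 depends on how the unrounded χ² value was rounded.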

Final mathematics achievement (dependent variable)

| Factors | Model 0 | Model 1 | Model 2a | Model 2b | Model 2c | Model 2d | Model 2e |
|---|---|---|---|---|---|---|---|
| Fixed part (intercept) | 17.41 (1.02) *** | 11.21 (1.01) *** | 9.85 (1.03) *** | 9.87 (1.02) *** | 10.24 (1.04) *** | 9.56 (1.03) *** | 10.21 (1.02) *** |
| Student level | | | | | | | |
| Prior achievement | | 0.61 (0.02) *** | 0.59 (0.03) *** | 0.58 (0.03) *** | 0.57 (0.03) *** | 0.57 (0.03) *** | 0.58 (0.03) *** |
| Sex (boys = 0, girls = 1) | | 0.15 (0.13) | 0.16 (0.14) | 0.14 (0.12) | 0.10 (0.11) | 0.17 (0.15) | 0.19 (0.16) |
| Age (in years) | | −0.08 (0.24) | −0.07 (0.25) | −0.08 (0.28) | −0.08 (0.26) | −0.09 (0.26) | −0.07 (0.27) |
| Teacher level | | | | | | | |
| Frequency: Structuring | | | 0.38 (0.16) ** | | | | |
| Frequency: Orientation | | | 0.32 (0.14) * | | | | |
| Frequency: Questioning | | | 0.27 (0.13) * | | | | |
| Frequency: Application | | | 0.14 (0.14) | | | | |
| Frequency: Modeling | | | 0.18 (0.15) | | | | |
| Frequency: Management of time | | | 0.31 (0.14) * | | | | |
| Frequency: Teacher-student relations | | | 0.36 (0.15) ** | | | | |
| Frequency: Student-student relations | | | 0.34 (0.14) ** | | | | |
| Frequency: Misbehavior | | | 0.35 (0.16) * | | | | |
| Stage: Structuring | | | | 0.24 (0.09) ** | | | |
| Stage: Orientation | | | | 0.25 (0.11) * | | | |
| Stage: Questioning | | | | 0.19 (0.09) * | | | |
| Stage: Application | | | | 0.17 (0.08) * | | | |
| Stage: Modeling | | | | 0.20 (0.09) * | | | |
| Stage: Management of time | | | | 0.25 (0.11) * | | | |
| Stage: Teacher-student relations | | | | 0.13 (0.11) | | | |
| Stage: Student-student relations | | | | 0.24 (0.11) * | | | |
| Stage: Misbehavior | | | | 0.22 (0.10) * | | | |
| Focus: Structuring | | | | | 0.29 (0.12) ** | | |
| Focus: Orientation | | | | | 0.14 (0.13) | | |
| Focus: Questioning | | | | | 0.24 (0.11) * | | |
| Focus: Application | | | | | 0.19 (0.08) ** | | |
| Focus: Modeling | | | | | 0.17 (0.08) * | | |
| Focus: Management of time | | | | | 0.14 (0.09) | | |
| Focus: Teacher-student relations | | | | | 0.16 (0.07) * | | |
| Focus: Student-student relations | | | | | 0.18 (0.09) * | | |
| Focus: Misbehavior | | | | | 0.09 (0.08) | | |
| Quality: Structuring | | | | | | 0.29 (0.11) ** | |
| Quality: Orientation | | | | | | 0.26 (0.10) ** | |
| Quality: Questioning | | | | | | 0.34 (0.12) ** | |
| Quality: Application | | | | | | 0.28 (0.13) * | |
| Quality: Management of time | | | | | | 0.33 (0.14) ** | |
| Quality: Teacher-student relations | | | | | | 0.29 (0.12) ** | |
| Quality: Student-student relations | | | | | | 0.28 (0.11) ** | |
| Quality: Misbehavior | | | | | | 0.28 (0.12) * | |
| Differentiation: Structuring | | | | | | | 0.12 (0.08) |
| Differentiation: Orientation | | | | | | | 0.21 (0.10) * |
| Differentiation: Questioning | | | | | | | 0.19 (0.09) * |
| Differentiation: Application | | | | | | | 0.21 (0.09) * |
| Differentiation: Management of time | | | | | | | 0.13 (0.11) |
| Differentiation: Teacher-student relations | | | | | | | 0.20 (0.09) * |
| Differentiation: Student-student relations | | | | | | | 0.15 (0.09) |
| Differentiation: Misbehavior | | | | | | | 0.16 (0.09) |
| Quality: Modeling (including differentiation) | | | | | | | 0.17 (0.08) * |
| Variance components | | | | | | | |
| Class | 20.20% | 17.31% | 13.25% | 12.09% | 12.58% | 10.80% | 12.19% |
| Student | 79.80% | 32.99% | 32.65% | 32.61% | 32.62% | 32.60% | 32.61% |
| Explained | | 49.70% | 54.10% | 55.30% | 54.80% | 56.60% | 55.20% |
| Significance test | | | | | | | |
| χ² | 2654.53 | 2165.82 | 1918.22 | 1868.62 | 1936.92 | 1853.52 | 1962.52 |
| Reduction | | 488.71 | 247.6 | 297.2 | 228.9 | 312.3 | 203.3 |
| Degrees of freedom | | 1 | 7 | 8 | 6 | 8 | 5 |
| p-value | | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |
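The significance-test rows follow from the deviance (χ²) row. The reduction is the difference in deviance between two nested models, and under a likelihood-ratio test it is referred to a χ² distribution whose degrees of freedom equal the number of added parameters. The reported reductions imply that Model 1 is compared with Model 0 and each Model 2 variant with Model 1 (e.g., 2165.82 − 1918.22 = 247.6), and the explained-variance row equals 100% minus the class- and student-level variance that remains. The Python sketch below (standard library only) re-derives these rows under that assumed comparison structure; `chi2_sf`, `explained_variance` and `likelihood_ratio_test` are hypothetical helper names, and `chi2_sf` uses the closed-form χ² tail for integer degrees of freedom rather than any routine from the study's software.

```python
import math

# Deviance values from the "Significance test" rows of the multilevel table.
DEVIANCE = {
    "Model 0": 2654.53, "Model 1": 2165.82, "Model 2a": 1918.22,
    "Model 2b": 1868.62, "Model 2c": 1936.92, "Model 2d": 1853.52,
    "Model 2e": 1962.52,
}

# Remaining variance (class %, student %) as printed, relative to Model 0.
VARIANCE = {
    "Model 0": (20.20, 79.80), "Model 1": (17.31, 32.99),
    "Model 2a": (13.25, 32.65), "Model 2b": (12.09, 32.61),
    "Model 2c": (12.58, 32.62), "Model 2d": (10.80, 32.60),
    "Model 2e": (12.19, 32.61),
}

# ASSUMPTION (inferred from the printed reductions): Model 1 is tested
# against Model 0 and every Model 2 variant against Model 1.
# Values are (reference model, reported degrees of freedom).
COMPARISONS = {
    "Model 1": ("Model 0", 1), "Model 2a": ("Model 1", 7),
    "Model 2b": ("Model 1", 8), "Model 2c": ("Model 1", 6),
    "Model 2d": ("Model 1", 8), "Model 2e": ("Model 1", 5),
}


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        half = x / 2.0
        term = 1.0
        total = 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: 2*Q(c) + 2*phi(c) * sum_{r=1}^{(df-1)/2} c^(2r-1) / (1*3*...*(2r-1)),
    # where c = sqrt(x), Q is the standard normal upper tail and phi its density.
    c = math.sqrt(x)
    two_tail_normal = math.erfc(c / math.sqrt(2.0))  # equals 2 * Q(c)
    density = math.exp(-x / 2.0) / math.sqrt(2.0 * math.pi)  # phi(c)
    series = 0.0
    term = c  # r = 1 term: c / 1
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)  # multiply by c^2 / (2r - 1)
        series += term
    return two_tail_normal + 2.0 * density * series


def explained_variance(model: str) -> float:
    """Percentage of the Model 0 variance no longer left at class or student level."""
    class_pct, student_pct = VARIANCE[model]
    return 100.0 - (class_pct + student_pct)


def likelihood_ratio_test(model: str) -> tuple[float, int, float]:
    """Return (deviance reduction, df, p-value) for a model against its reference."""
    reference, df = COMPARISONS[model]
    reduction = DEVIANCE[reference] - DEVIANCE[model]
    return reduction, df, chi2_sf(reduction, df)


if __name__ == "__main__":
    for name in COMPARISONS:
        reduction, df, p = likelihood_ratio_test(name)
        print(f"{name}: reduction={reduction:.2f}, df={df}, p={p:.3g}, "
              f"explained={explained_variance(name):.2f}%")
```

Because every reduction is large relative to its degrees of freedom, the recomputed p-values fall far below 0.001, consistent with the table's p-value row.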

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Michaelidou, V.; Kyriakides, L.; Sakellariou, M.; Strati, P.; Mitsi, P.; Banou, M. Characteristics of Effective Mathematics Teaching in Greek Pre-Primary Classrooms. Educ. Sci. 2025, 15, 1140. https://doi.org/10.3390/educsci15091140

Michaelidou V, Kyriakides L, Sakellariou M, Strati P, Mitsi P, Banou M. Characteristics of Effective Mathematics Teaching in Greek Pre-Primary Classrooms. Education Sciences. 2025; 15(9):1140. https://doi.org/10.3390/educsci15091140

Chicago/Turabian style: Michaelidou, Victoria, Leonidas Kyriakides, Maria Sakellariou, Panagiota Strati, Polyxeni Mitsi, and Maria Banou. 2025. "Characteristics of Effective Mathematics Teaching in Greek Pre-Primary Classrooms" Education Sciences 15, no. 9: 1140. https://doi.org/10.3390/educsci15091140

APA style: Michaelidou, V., Kyriakides, L., Sakellariou, M., Strati, P., Mitsi, P., & Banou, M. (2025). Characteristics of Effective Mathematics Teaching in Greek Pre-Primary Classrooms. Education Sciences, 15(9), 1140. https://doi.org/10.3390/educsci15091140