Integrating Artificial Intelligence and Extended Reality in Language Education: A Systematic Literature Review (2017–2024)

Abstract

1. Introduction

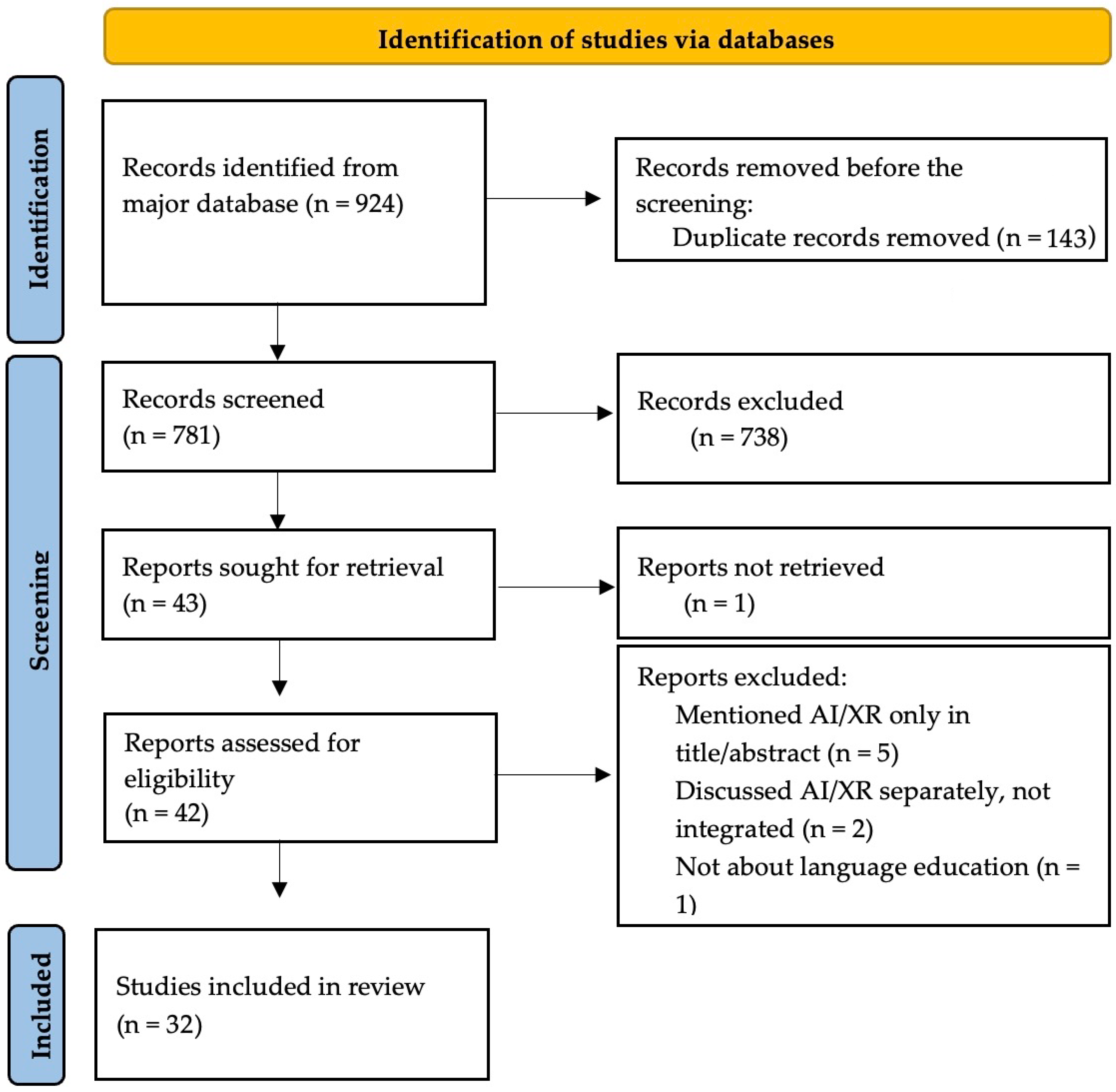

2. Methodology

2.1. Search Strategy (Identification)

2.2. Inclusion and Exclusion Criteria (Eligibility)

2.2.1. Inclusion Criteria

2.2.2. Exclusion Criteria

2.3. Screening and Selection

3. Results

3.1. Demographic Information of Selected Studies

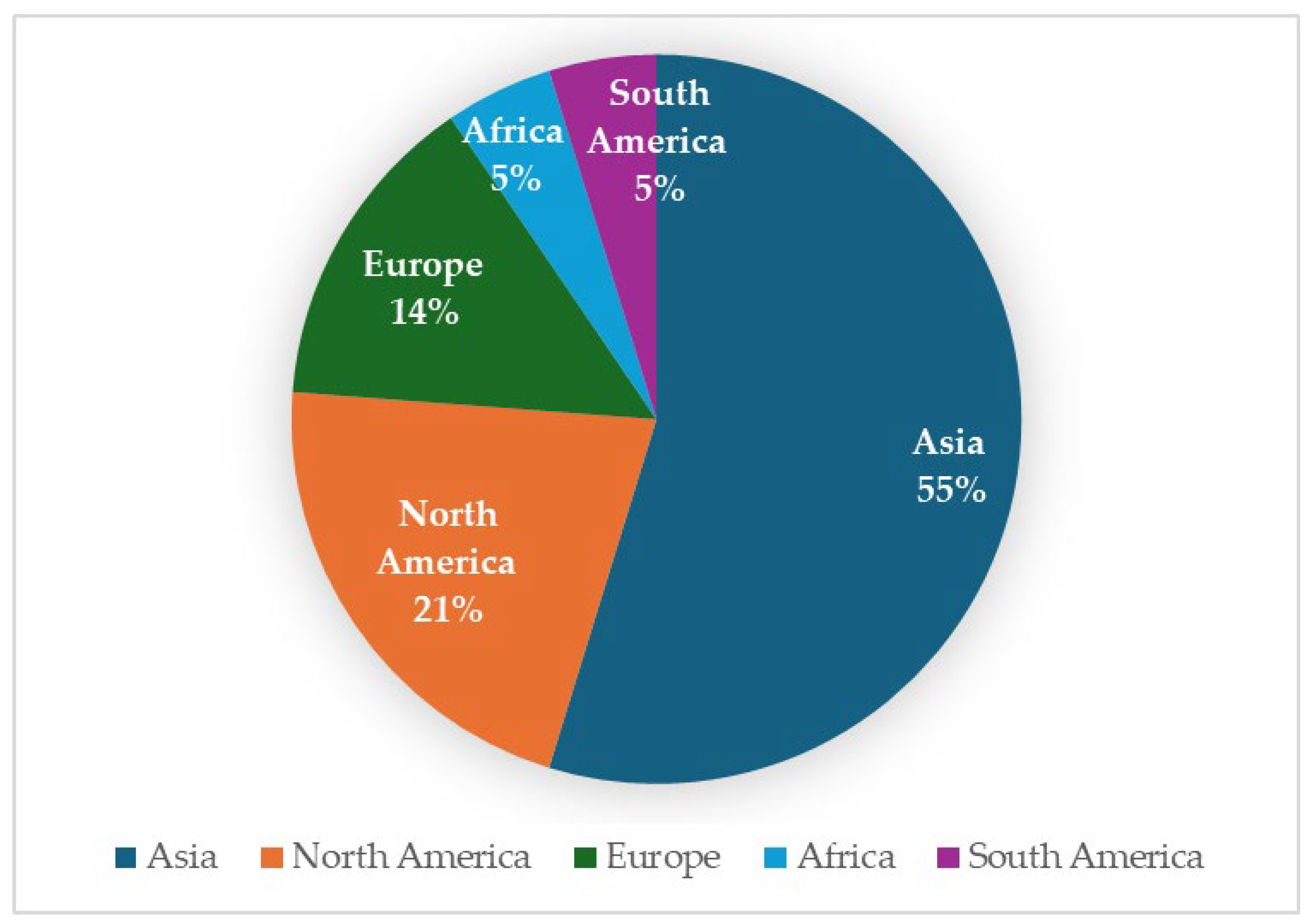

3.1.1. Geographic Locations

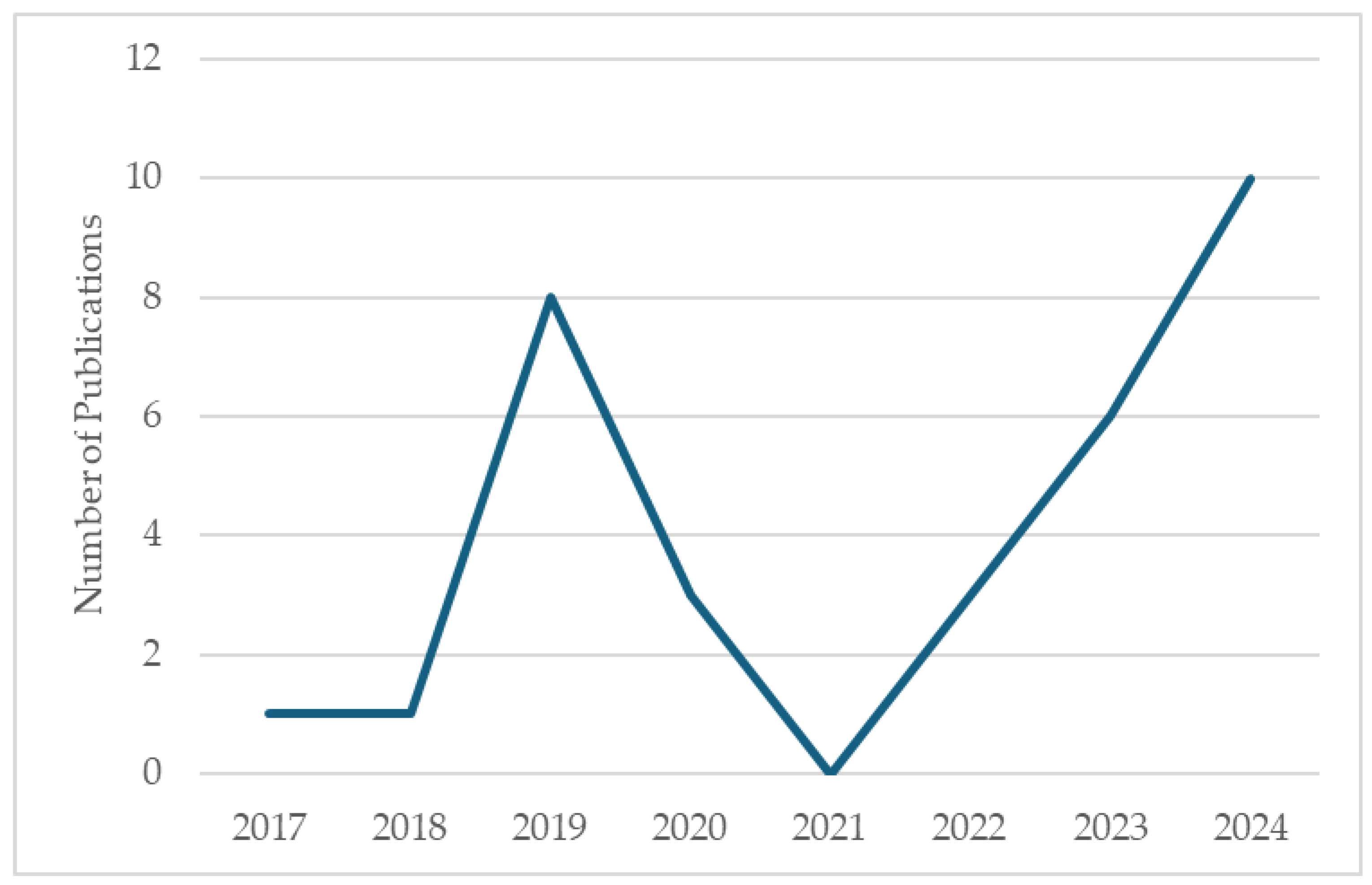

3.1.2. Publication Trend

3.1.3. Scholarly Sources by Type

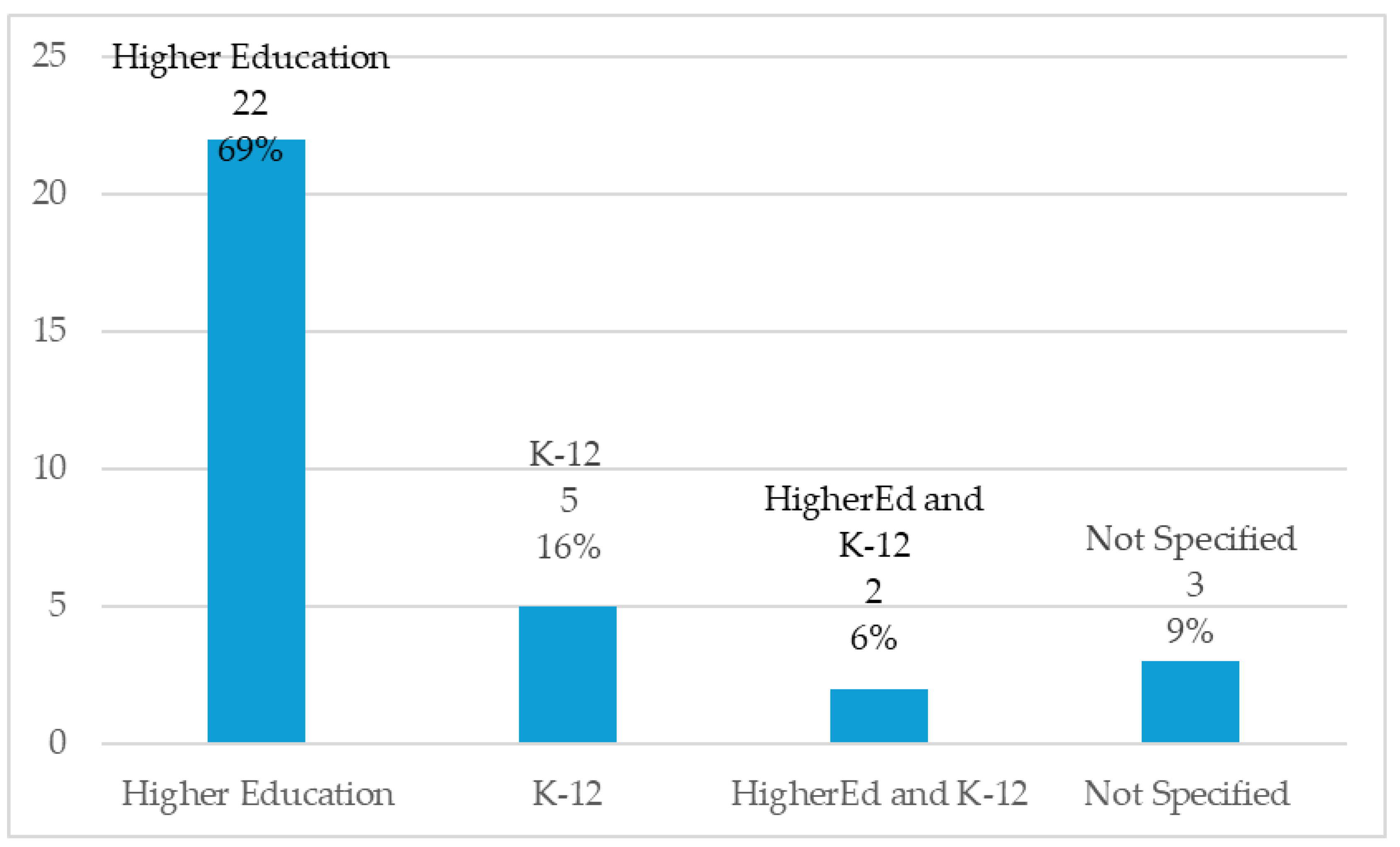

3.1.4. Educational Levels

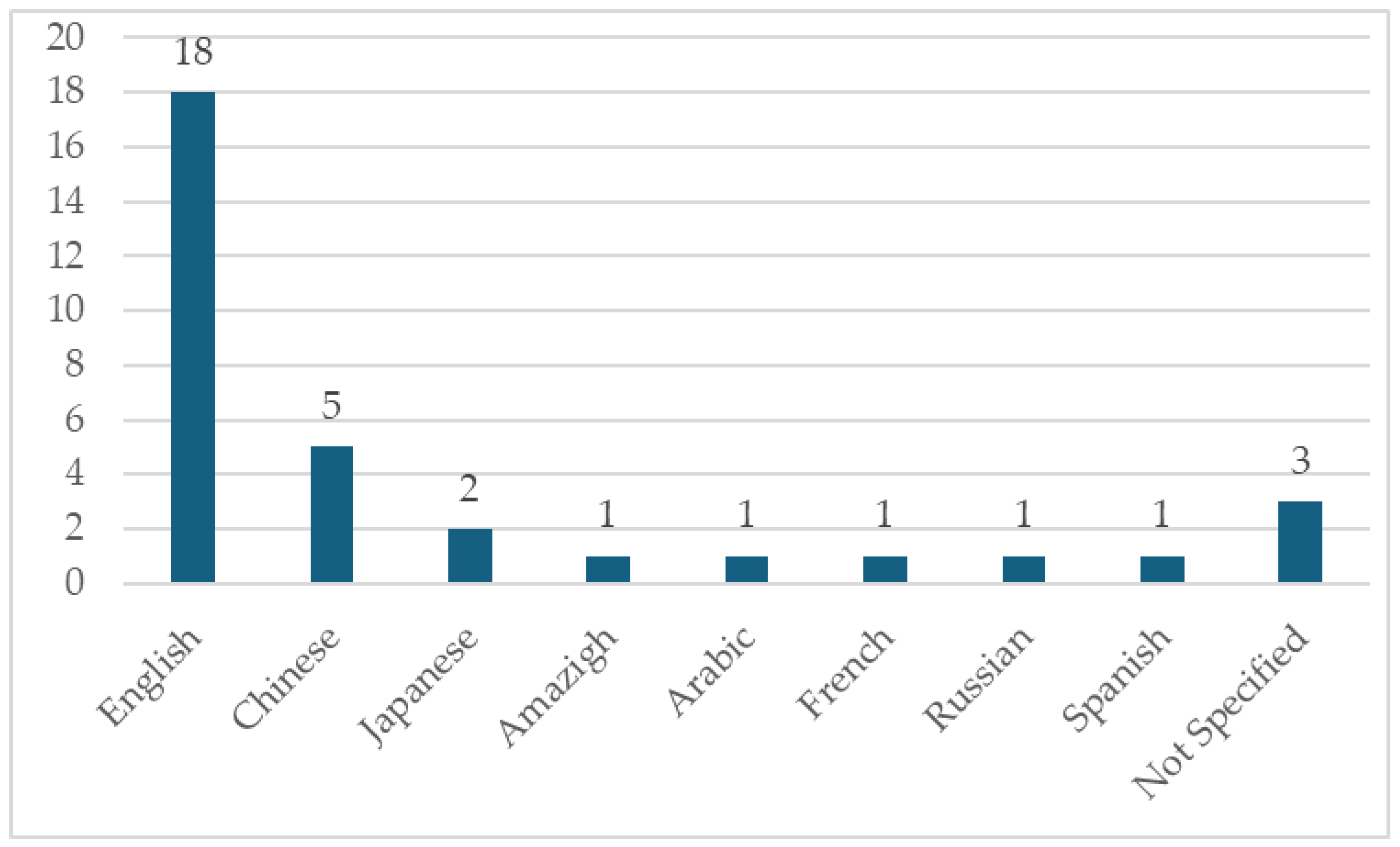

3.1.5. Target Languages

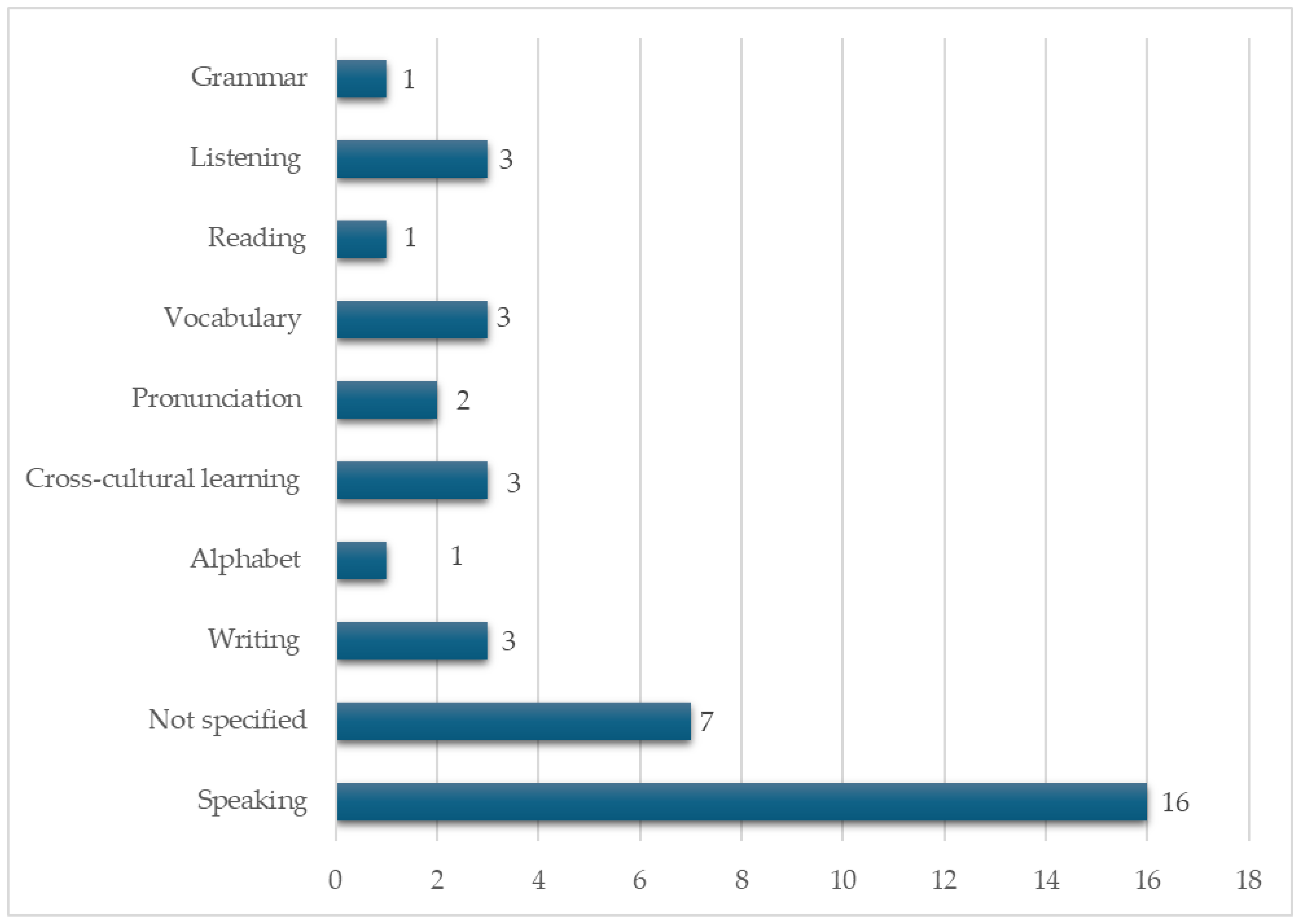

3.1.6. Language Foci

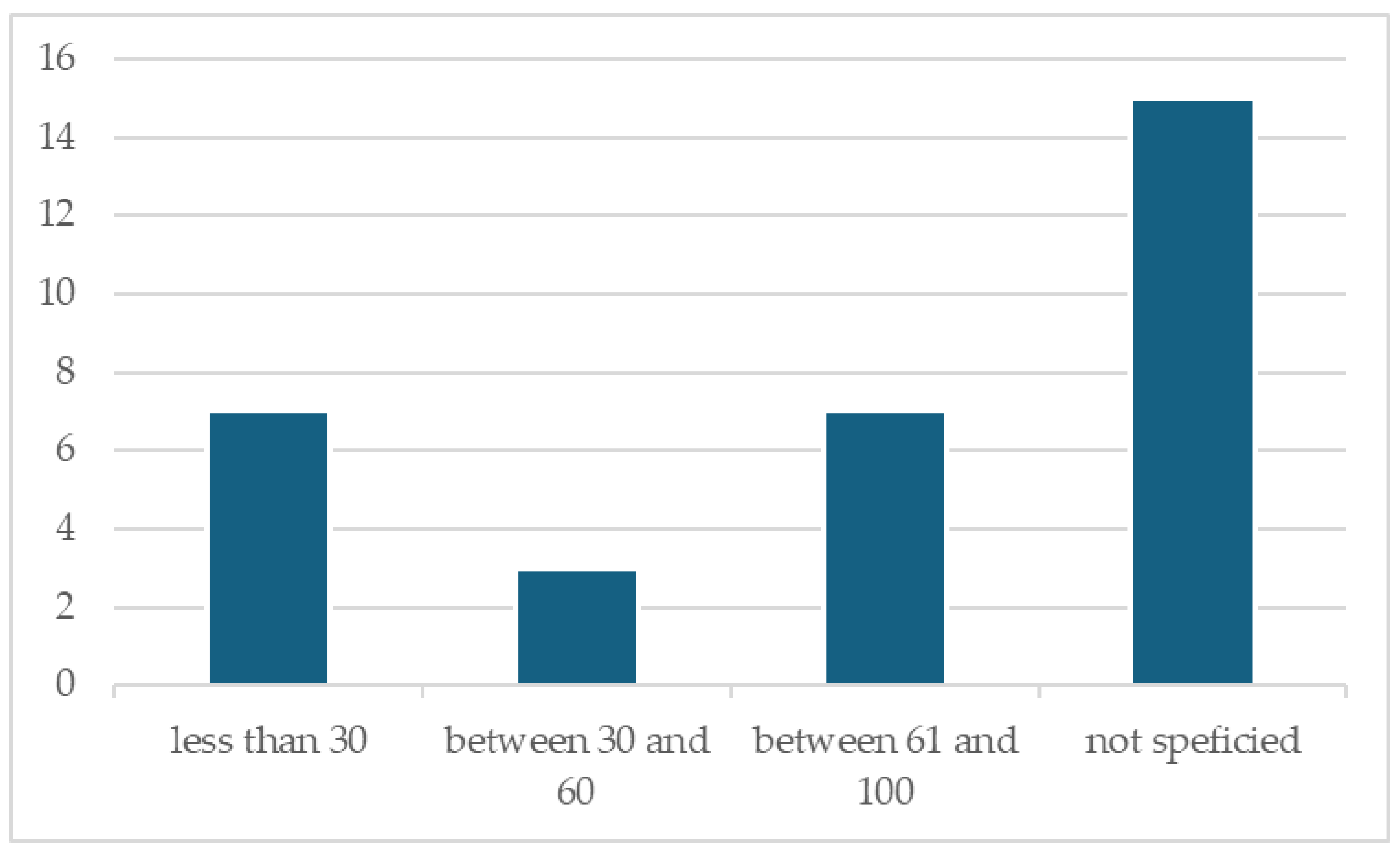

3.1.7. Number of Participants

3.1.8. Length of Intervention

3.1.9. Learning Outcomes

3.1.10. XR Applications

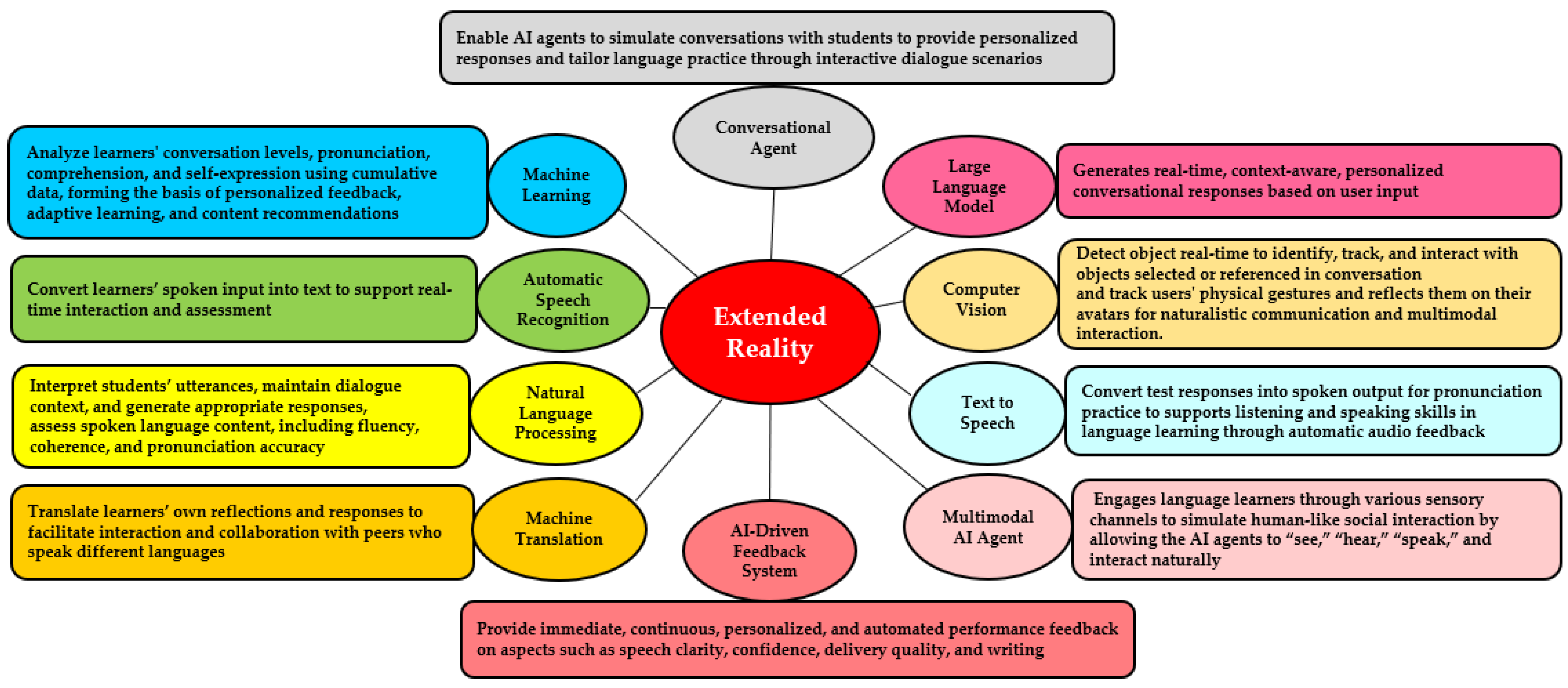

3.1.11. AI Applications

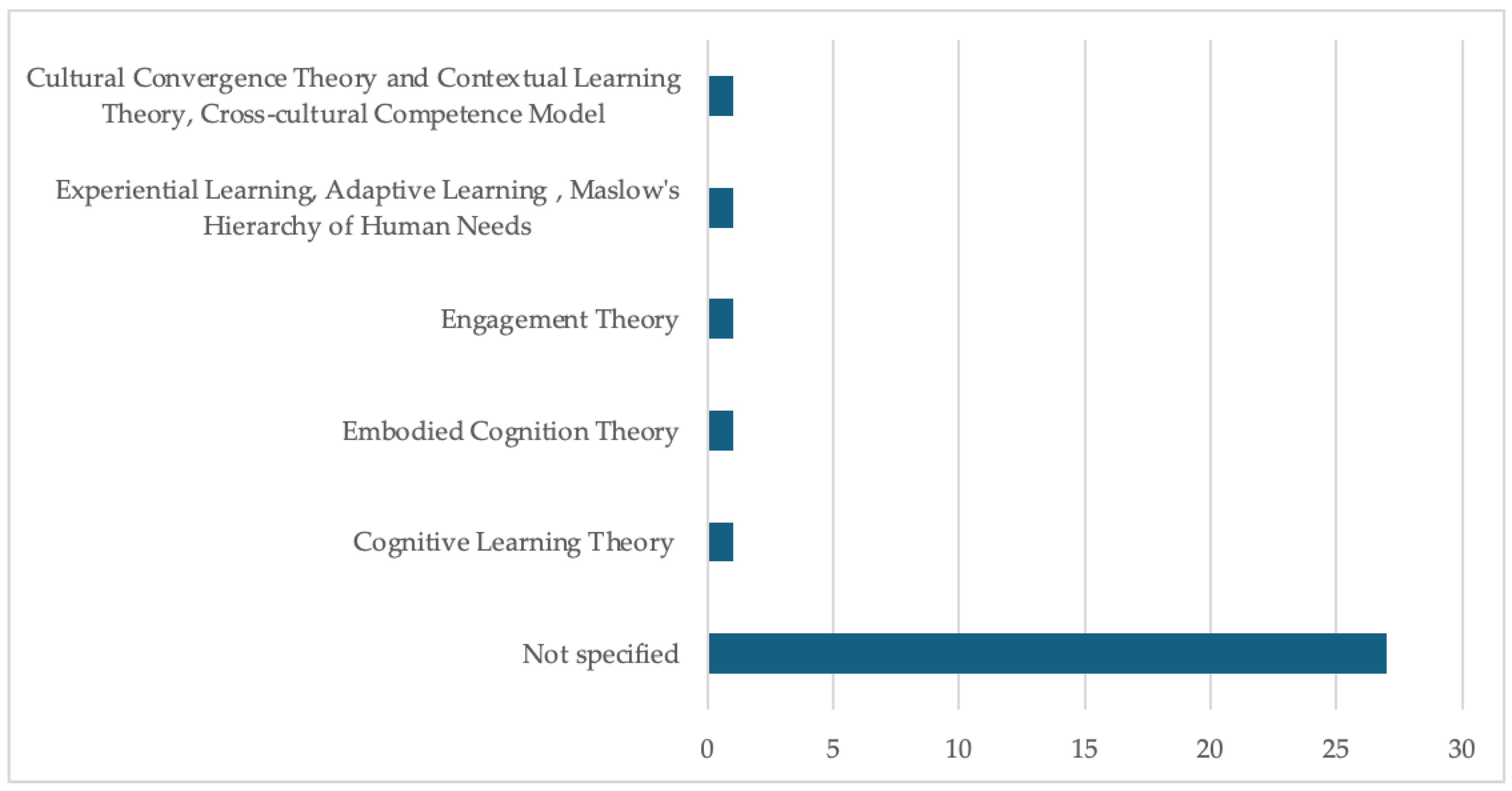

3.1.12. Theoretical Framework

3.2. Integration Strategies and Integration Affordances

3.2.1. Integration Strategies

AI–XR Integration Strategies in Speaking

AI–XR Integration Strategies in Listening

AI–XR Integration Strategies in Writing

AI–XR Integration Strategies in Vocabulary

AI–XR Integration Strategies in Cross-Cultural Learning

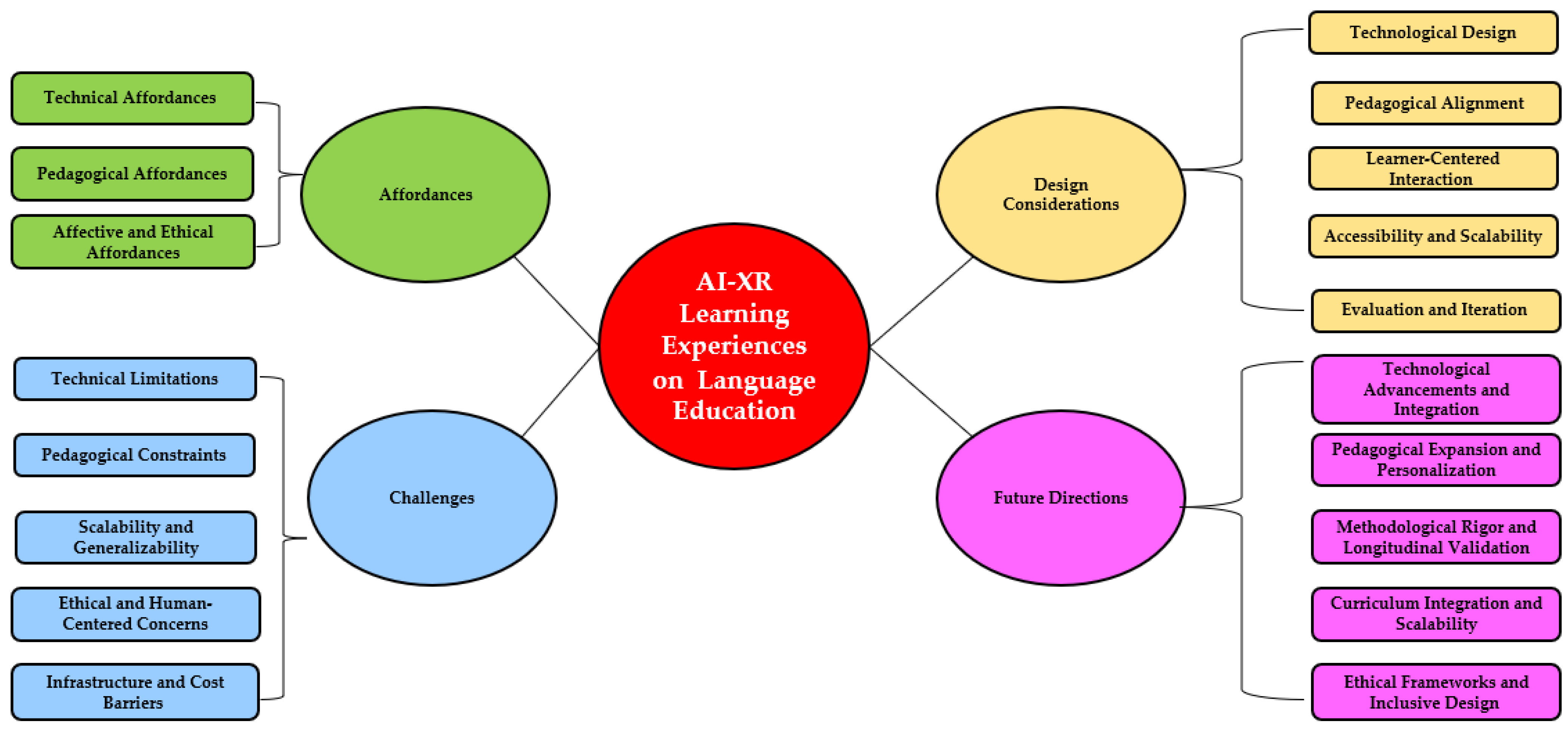

3.2.2. Affordances

Technical Affordances

Pedagogical Affordances

Affective Affordances

3.3. Challenges, Design Considerations and Future Directions

3.3.1. Challenges

Technical Limitations

Pedagogical Constraints

Scalability and Generalizability

Ethical and Human-Centered Concerns

Infrastructure and Cost Barriers

3.3.2. Design Considerations

Technological Design

Pedagogical Alignment

Learner-Centered Interaction

Accessibility and Scalability

Evaluation and Iteration

3.3.3. Future Directions

Technological Advancements and Integration

Pedagogical Expansion and Personalization

Methodological Rigor and Longitudinal Validation

Curriculum Integration and Scalability

Ethical Frameworks and Inclusive Design

4. Discussion

4.1. Synthesis and Interpretation of Findings

4.1.1. The Current Landscape of AI–XR in Language Education (RQ1)

4.1.2. Pedagogical Integration and Affordances (RQ2)

4.1.3. Challenges, Design Considerations, and Research Gaps (RQ3)

4.2. Theoretical and Conceptual Insights

4.3. Contextual Nuances and Emerging Patterns

4.4. Critical Reflections on the Review Process

4.5. Positioning the Review Within the Broader Discourse

4.5.1. Bridging Fragmented Research Silos

4.5.2. Advancing Pedagogical and Design Frameworks

4.5.3. Setting a Research Agenda

4.5.4. Reframing the Role of AI and XR in Language Education

4.5.5. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AR | Augmented Reality |
| ASR | Automatic Speech Recognition |
| CA | Conversational Agents |
| CV | Computer Vision |
| DBR | Design-Based Research |
| ERIC | Education Resources Information Center |
| LLMs | Large Language Models |
| ML | Machine Learning |
| MMAA | Multimodal AI Agents |
| MR | Mixed Reality |
| MT | Machine Translation |
| NLP | Natural Language Processing |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RTFS | Real-Time Feedback Systems |
| TTS | Text-to-Speech |
| TBLT | Task-Based Language Teaching |
| VR | Virtual Reality |
| XR | Extended Reality |

Appendix A

| No. | Author | Title | Journal or Conference Proceedings |
|---|---|---|---|
| 1 | (Allen et al., 2019) | The Rensselaer Mandarin Project—A Cognitive and Immersive Language Learning Environment | Proceedings of the AAAI Conference on Artificial Intelligence |
| 2 | (Bottega et al., 2023) | Jubileo: An Immersive Simulation Framework for Social Robot Design | Journal of Intelligent & Robotic Systems |
| 3 | (Chabot et al., 2020) | A Collaborative, Immersive Language Learning Environment Using Augmented Panoramic Imagery | 2020 6th International Conference of the Immersive Learning Research Network (iLRN) |
| 4 | (Y.-C. Chen, 2024) | Effects of technology-enhanced language learning on reducing EFL learners’ public speaking anxiety | Computer Assisted Language Learning |
| 5 | (Y.-L. Chen et al., 2022) | Robot-Assisted Language Learning: Integrating Artificial Intelligence and Virtual Reality into English Tour Guide Practice | Education Sciences |
| 6 | (Divekar et al., 2022) | Foreign language acquisition via artificial intelligence and extended reality: Design and evaluation | Computer Assisted Language Learning |
| 7 | (Gorham et al., 2019) | Assessing the efficacy of VR for foreign language learning using multimodal learning analytics | Professional development in CALL: a selection of papers |
| 8 | (Guo et al., 2017) | SeLL: Second language learning paired with VR and AI | SIGGRAPH Asia 2017 Symposium on Education |
| 9 | (Hajahmadi et al., 2024) | ARELE-bot: Inclusive Learning of Spanish as a Foreign Language Through a Mobile App Integrating Augmented Reality and ChatGPT | 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) |
| 10 | (Hollingworth & Willett, 2023) | FluencyAR: Augmented Reality Language Immersion | Adjunct Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology |
| 11 | (Hwang et al., 2024) | Integrating AI chatbots into the metaverse: Pre-service English teachers’ design works and perceptions | Education and Information Technologies |
| 12 | (Kizilkaya et al., 2019) | Design Prompts for Virtual Reality in Education | Artificial Intelligence in Education |
| 13 | (H. Lee et al., 2023) | VisionARy: Exploratory research on Contextual Language Learning using AR glasses with ChatGPT | Proceedings of the 15th Biannual Conference of the Italian SIGCHI Chapter |
| 14 | (S. Lee et al., 2025) | Enhancing Pre-Service Teachers’ Global Englishes Awareness with Technology: A Focus on AI Chatbots in 3D Metaverse Environments | TESOL Quarterly |
| 15 | (Mirzaei et al., 2018) | Language learning through conversation envisioning in virtual reality: A sociocultural approach | Future-proof CALL: language learning as exploration and encounters—short papers from EUROCALL 2018 |
| 16 | (Nakamura et al., 2024) | LingoAI: Language Learning System Integrating Generative AI with 3D Virtual Character | Proceedings of the 2024 International Conference on Advanced Visual Interfaces |
| 17 | (Obari et al., 2020) | The Impact of Using AI and VR with Blended Learning on English as a Foreign Language Teaching | CALL for widening participation: short papers from EUROCALL 2020 |
| 18 | (Park et al., 2019) | Interactive AI for Linguistic Education Built on VR Environment Using User Generated Contents | 2019 21st International Conference on Advanced Communication Technology (ICACT) |
| 19 | (Seow, 2023) | LingoLand: An AI-Assisted Immersive Game for Language Learning | Adjunct Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology |
| 20 | (Shadiev et al., 2021) | Cross-cultural learning in virtual reality environment: Facilitating cross-cultural understanding, trait emotional intelligence, and sense of presence | Educational Technology Research and Development |
| 21 | (Shukla et al., 2019) | iLeap: A Human-AI Teaming Based Mobile Language Learning Solution for Dual Language Learners in Early and Special Educations | International Association for Development of the Information Society (IADIS) International Conference on Mobile Learning |
| 22 | (Smuts et al., 2019) | Towards Dynamically Adaptable Immersive Spaces for Learning | 2019 11th Computer Science and Electronic Engineering (CEEC) |
| 23 | (Song et al., 2023) | Developing a ‘Virtual Go mode’ on a mobile app to enhance primary students’ vocabulary learning engagement: An exploratory study | Innovation in Language Learning and Teaching |
| 24 | (Tazouti et al., 2019) | ImALeG: A Serious Game for Amazigh Language Learning | International Journal of Emerging Technologies in Learning (iJET) |
| 25 | (Tolba et al., 2024) | Interactive Augmented Reality System for Learning Phonetics Using Artificial Intelligence | IEEE Access |
| 26 | (J.-H. Wang et al., 2020) | Digital Learning Theater with Automatic Instant Assessment of Body Language and Oral Language Learning | 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT) |
| 27 | (Y. Wang et al., 2022) | An Integrated Automatic Writing Evaluation and SVVR Approach to Improve Students’ EFL Writing Performance | Sustainability |
| 28 | (Xin & Shi, 2024) | Application of Hybrid Image Processing Based on Artificial Intelligence in Interactive English Teaching | ACM Transactions on Asian and Low-Resource Language Information Processing |
| 29 | (Xu et al., 2019) | Design and Implementation of an English Lesson Based on Handwriting Recognition and Augmented Reality in Primary School | International Association for Development of the Information Society |
| 30 | (Yang & Wu, 2024) | Design and Implementation of Chinese Language Teaching System Based on Virtual Reality Technology | Scalable Computing: Practice and Experience |
| 31 | (Yu, 2023) | AI-Empowered Metaverse Learning Simulation Technology Application | 2023 International Conference on Intelligent Metaverse Technologies & Applications (iMETA) |
| 32 | (Yun et al., 2024) | Interactive Learning Tutor Service Platform Based on Artificial Intelligence in a Virtual Reality Environment | Intelligent Human Computer Interaction |

References

- Allen, D., Divekar, R. R., Drozdal, J., Balagyozyan, L., Zheng, S., Song, Z., Zou, H., Tyler, J., Mou, X., Zhao, R., Zhou, H., Yue, J., Kephart, J. O., & Su, H. (2019). The Rensselaer Mandarin Project—A cognitive and immersive language learning environment. Proceedings of the AAAI Conference on Artificial Intelligence, 33(1), 9845–9846.

- Almelhes, S. A. (2023). A review of artificial intelligence adoption in second-language learning. Theory and Practice in Language Studies, 13(5), 1259–1269.

- Aslan, S., Alyuz, N., Li, B., Durham, L. M., Shi, M., Sharma, S., & Nachman, L. (2025). An early investigation of collaborative problem solving in conversational AI-mediated learning environments. Computers and Education: Artificial Intelligence, 8, 100393.

- Bottega, J. A., Kich, V. A., Jesus, J. C. D., Steinmetz, R., Kolling, A. H., Grando, R. B., Guerra, R. D. S., & Gamarra, D. F. T. (2023). Jubileo: An immersive simulation framework for social robot design. Journal of Intelligent & Robotic Systems, 109(4), 91.

- Bozkir, E., Özdel, S., Lau, K. H. C., Wang, M., Gao, H., & Kasneci, E. (2024, July 8–10). Embedding large language models into extended reality: Opportunities and challenges for inclusion, engagement, and privacy. 6th ACM Conference on Conversational User Interfaces (Vol. 38, pp. 1–7), Luxembourg.

- Chabot, S., Drozdal, J., Peveler, M., Zhou, Y., Su, H., & Braasch, J. (2020, June 21–25). A collaborative, immersive language learning environment using augmented panoramic imagery. 2020 6th International Conference of the Immersive Learning Research Network (iLRN) (pp. 225–229), San Luis Obispo, CA, USA.

- Chen, C., Hung, H., & Yeh, H. (2021). Virtual reality in problem-based learning contexts: Effects on the problem-solving performance, vocabulary acquisition and motivation of English language learners. Journal of Computer Assisted Learning, 37(3), 851–860.

- Chen, J., Dai, J., Zhu, K., & Xu, L. (2022). Effects of extended reality on language learning: A meta-analysis. Frontiers in Psychology, 13, 1016519.

- Chen, Y.-C. (2024). Effects of technology-enhanced language learning on reducing EFL learners’ public speaking anxiety. Computer Assisted Language Learning, 37(4), 789–813.

- Chen, Y.-L., Hsu, C.-C., Lin, C.-Y., & Hsu, H.-H. (2022). Robot-assisted language learning: Integrating artificial intelligence and virtual reality into English tour guide practice. Education Sciences, 12(7), 437.

- Corbin, J., & Strauss, A. (2014). Basics of qualitative research: Techniques and procedures for developing grounded theory. Sage Publications.

- Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20, 22.

- Crum, S., Li, B., & Kou, X. (2024). Generative artificial intelligence and interactive learning platforms: Second language vocabulary acquisition. In C. Stephanidis, M. Antona, S. Ntoa, & G. Salvendy (Eds.), HCI international 2024 posters. HCII 2024. Communications in computer and information science (Vol. 2117). Springer.

- Divekar, R. R., Drozdal, J., Chabot, S., Zhou, Y., Su, H., Chen, Y., Zhu, H., Hendler, J. A., & Braasch, J. (2022). Foreign language acquisition via artificial intelligence and extended reality: Design and evaluation. Computer Assisted Language Learning, 35(9), 2332–2360.

- Escalante, J., Pack, A., & Barrett, A. (2023). AI-generated feedback on writing: Insights into efficacy and ENL student preference. International Journal of Educational Technology in Higher Education, 20, 57.

- Godwin-Jones, R. (2023). Presence and agency in real and virtual spaces: The promise of extended reality for language learning. Language Learning & Technology, 27(3), 6–26.

- Gorham, T., Jubaed, S., Sanyal, T., & Starr, E. L. (2019). Assessing the efficacy of VR for foreign language learning using multimodal learning analytics. In C. N. Giannikas, E. Kakoulli Constantinou, & S. Papadima-Sophocleous (Eds.), Professional development in CALL: A selection of papers (pp. 101–116). Research-Publishing.net.

- Guo, J., Chen, Y., Pei, Q., Ren, H., Huang, N., Tian, H., Zhang, M., Liu, Y., Fu, G., Hu, H., & Zhang, X. (2017, November 27–30). SeLL: Second language learning paired with VR and AI. SA '17: SIGGRAPH Asia 2017 Symposium on Education (pp. 1–2), Bangkok, Thailand.

- Hajahmadi, S., Clementi, L., Jiménez López, M. D., & Marfia, G. (2024, March 16–21). ARELE-bot: Inclusive learning of Spanish as a foreign language through a mobile app integrating augmented reality and ChatGPT. 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 335–340), Orlando, FL, USA.

- Hamilton, D. E., McKechnie, J., Edgerton, E., & Wilson, C. (2020). Immersive virtual reality as a pedagogical tool in education: A systematic literature review of quantitative learning outcomes and experimental design. Journal of Computers in Education, 8(1), 1–32.

- Hollingworth, S. L. C., & Willett, W. (2023, October 29–November 1). FluencyAR: Augmented reality language immersion. Adjunct Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (pp. 1–3), San Francisco, CA, USA.

- Huang, X., Zou, D., Cheng, G., & Xie, H. (2021). A systematic review of AR and VR enhanced language learning. Sustainability, 13(9), 4639.

- Hwang, Y., & Lee, J. (2024). Exploring pre-service English teachers’ perceptions and technological acceptance of metaverse language classroom design. Sage Open, 14(4), 21582440241300543.

- Hwang, Y., Lee, S., & Jeon, J. (2024). Integrating AI chatbots into the metaverse: Pre-service English teachers’ design works and perceptions. Education and Information Technologies, 30(4), 4099–4130.

- Karacan, C. G., & Akoğlu, K. (2021). Educational augmented reality technology for language learning and teaching: A comprehensive review. Shanlax International Journal of Education, 9(2), 68–79.

- Kizilkaya, L., Vince, D., & Holmes, W. (2019). Design prompts for virtual reality in education. In S. Isotani, E. Millán, A. Ogan, P. Hastings, B. McLaren, & R. Luckin (Eds.), Artificial intelligence in education (Vol. 11626, pp. 133–137). Springer International Publishing.

- Kolb, D. A. (2014). Experiential learning: Experience as the source of learning and development. FT Press.

- Lee, H., Hsia, C.-C., Tsoy, A., Choi, S., Hou, H., & Ni, S. (2023, September 20–22). VisionARy: Exploratory research on contextual language learning using AR glasses with ChatGPT. 15th Biannual Conference of the Italian SIGCHI Chapter (p. 22), Torino, Italy.

- Lee, S., Jeon, J., & Choe, H. (2025). Enhancing pre-service teachers’ Global Englishes awareness with technology: A focus on AI chatbots in 3D metaverse environments. TESOL Quarterly, 59(1), 49–74.

- Li, B., Bonk, C. J., Wang, C., & Kou, X. (2024a). Reconceptualizing self-directed learning in the era of generative AI: An exploratory analysis of language learning. IEEE Transactions on Learning Technologies, 17(3), 1515–1529.

- Li, B., Lowell, V., Watson, & Wang, C. (2024b). A systematic review of the first year of publications on ChatGPT and language education: Examining research on ChatGPT’s use in language learning and teaching. Computers and Education: Artificial Intelligence, 100, 100266.

- Li, B., Wang, C., Bonk, C. J., & Kou, X. (2024c). Exploring inventions in self-directed language learning with generative AI: Implementations and perspectives of YouTube content creators. TechTrends, 68, 803–819.

- Liu, M. (2023). Exploring the application of artificial intelligence in foreign language teaching: Challenges and future development. SHS Web of Conferences, 168, 03025.

- Lowell, V. L., & Yan, W. (2023). Facilitating foreign language conversation simulations in virtual reality for authentic learning. In T. Cherner, & A. Fegely (Eds.), Bridging the XR technology-to-practice gap: Methods and strategies for blending extended realities into classroom instruction (Vol. I, pp. 119–133). Association for the Advancement of Computing in Education and Society for Information Technology and Teacher Education. Available online: https://www.learntechlib.org/p/222242/ (accessed on 18 December 2023).

- Lowell, V. L., & Yan, W. (2024). Applying systems thinking for designing immersive virtual reality learning experiences in education. TechTrends, 68(1), 149–160.

- Makeleni, S., Mutongoza, B. H., & Linake, M. A. (2023). Language education and artificial intelligence: An exploration of challenges confronting academics in global south universities. Journal of Culture and Values in Education, 6(2), 158–171.

- Makhenyane, L. E. (2024). The use of augmented reality in the teaching and learning of isiXhosa poetry. Journal of the Digital Humanities Association of Southern Africa (DHASA), 5(1).

- Mirzaei, M. S., Zhang, Q., Van der Struijk, S., & Nishida, T. (2018). Language learning through conversation envisioning in virtual reality: A sociocultural approach. In P. Taalas, J. Jalkanen, L. Bradley, & S. Thouësny (Eds.), Future-proof CALL: Language learning as exploration and encounters—Short papers from EUROCALL 2018 (pp. 207–213). Research-Publishing.net.

- Nakamura, H., Nakazato, H., & Tobita, H. (2024, June 3–7). LingoAI: Language learning system integrating generative AI with 3D virtual character. 2024 International Conference on Advanced Visual Interfaces (pp. 1–2), Arenzano, Italy.

- Nazeer, I., Jamshaid, S., & Khan, N. M. (2024). Linguistic impact of augmented reality (AR) on English language use. Journal of Asian Development Studies, 13(1), 350–362.

- Obari, H., Lambacher, S., & Kikuchi, H. (2020). The impact of using AI and VR with blended learning on English as a foreign language teaching. In K.-M. Frederiksen, S. Larsen, L. Bradley, & S. Thouësny (Eds.), CALL for widening participation: Short papers from EUROCALL 2020 (pp. 253–258). Research-Publishing.net.

- Ouzzani, M., Hammady, H., Fedorowicz, Z., & Elmagarmid, A. (2016). Rayyan—A web and mobile app for systematic reviews. Systematic Reviews, 5, 210.

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ, 372, n71.

- Park, W., Park, D., Ahn, B., Kang, S., Kim, H., Kim, R., & Na, J. (2019, February 17–20). Interactive AI for linguistic education built on VR environment using user generated contents. 2019 21st International Conference on Advanced Communication Technology (ICACT) (pp. 385–389), PyeongChang, Republic of Korea.

- Parmaxi, A., & Demetriou, A. A. (2020). Augmented reality in language learning: A state-of-the-art review of 2014–2019. Journal of Computer Assisted Learning, 36(6), 861–875.

- PRISMA. (2015). Transparent reporting of systematic reviews and meta-analyses. Available online: http://www.prisma-statement.org/ (accessed on 18 December 2023).

- Rangel-de Lazaro, G., & Duart, J. M. (2023). You can handle, you can teach it: Systematic review on the use of extended reality and artificial intelligence technologies for online higher education. Sustainability, 15(4), 3507.

- Schorr, I., Plecher, D. A., Eichhorn, C., & Klinker, G. (2024). Foreign language learning using augmented reality environments: A systematic review. Frontiers in Virtual Reality, 5, 1288824.

- Seow, O. (2023, October 29–November 1). LingoLand: An AI-assisted immersive game for language learning. UIST’23 Adjunct Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (p. 120), San Francisco, CA, USA.

- Shadiev, R., Wang, X., & Huang, Y.-M. (2021). Cross-cultural learning in virtual reality environment: Facilitating cross-cultural understanding, trait emotional intelligence, and sense of presence. Educational Technology Research and Development, 69(5), 2917–2936.

- Shukla, S., Shivakumar, A., Vasoya, M., Pei, Y., & Lyon, A. F. (2019, April 11–19). iLeap: A human-AI teaming based mobile language learning solution for dual language learners in early and special educations. International Association for Development of the Information Society (IADIS) International Conference on Mobile Learning (pp. 57–64), Utrecht, The Netherlands.

- Smuts, M. G., Callaghan, V., & Gutierrez, A. G. (2019, September 18–20). Towards dynamically adaptable immersive spaces for learning. 2019 11th Computer Science and Electronic Engineering (CEEC) (pp. 113–117), Colchester, UK.

- Song, Y., Wen, Y., Yang, Y., & Cao, J. (2023). Developing a ‘Virtual Go mode’ on a mobile app to enhance primary students’ vocabulary learning engagement: An exploratory study. Innovation in Language Learning and Teaching, 17(2), 354–363.

- Tafazoli, D. (2024). From virtual reality to cultural reality: Integration of virtual reality into teaching culture in foreign language education. Journal for Multicultural Education, 18(1/2), 6–24.

- Tazouti, Y., Boulaknadel, S., & Fakhri, Y. (2019). ImALeG: A serious game for Amazigh language learning. International Journal of Emerging Technologies in Learning (iJET), 14(18), 28–38.

- Tolba, R. M., Elarif, T., Taha, Z., & Hammady, R. (2024). Interactive augmented reality system for learning phonetics using artificial intelligence. IEEE Access, 12, 78219–78231.

- Vall, R. R. F. d. l., & Araya, F. G. (2023). Exploring the benefits and challenges of AI-language learning tools. International Journal of Social Sciences and Humanities Invention, 10(01), 7569–7576.

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes (Vol. 86). Harvard University Press.

- Wang, J.-H., Chen, Y.-H., Yu, S.-Y., Huang, Y.-L., & Chen, G.-D. (2020, July 6–9). Digital learning theater with automatic instant assessment of body language and oral language learning. 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT) (pp. 218–222), Tartu, Estonia.

- Wang, Y., Luo, X., Liu, C.-C., Tu, Y.-F., & Wang, N. (2022). An integrated automatic writing evaluation and SVVR approach to improve students’ EFL writing performance. Sustainability, 14(18), 11586.

- Xin, D., & Shi, C. (2024). Application of hybrid image processing based on artificial intelligence in interactive English teaching. ACM Transactions on Asian and Low-Resource Language Information Processing, 3626822.

- Xu, J., He, S., Jiang, H., Yang, Y., & Cai, S. (2019). Design and implementation of an English lesson based on handwriting recognition and augmented reality in primary school (pp. 171–178). International Association for Development of the Information Society.

- Yan, W., & Lowell, V. L. (2024). Design and evaluation of task-based role-play speaking activities in a VR environment for authentic learning: A design-based research approach. The Journal of Applied Instructional Design, 13(4), 14.

- Yan, W., & Lowell, V. L. (2025). The evolution of virtual reality in foreign language education: From text-based MUDs to AI-enhanced immersive environments. TechTrends, 69, 853–858.

- Yan, W., Lowell, V. L., & Yang, L. (2024). Developing English language learners’ speaking skills through applying a situated learning approach in VR-enhanced learning experiences. Virtual Reality, 28, 167.

- Yang, T., & Wu, J. (2024). Design and implementation of Chinese language teaching system based on virtual reality technology. Scalable Computing: Practice and Experience, 25(3), 1564–1577.

- Yu, D. (2023, September 18–20). AI-empowered metaverse learning simulation technology application. 2023 International Conference on Intelligent Metaverse Technologies & Applications (iMETA) (pp. 1–6), Tartu, Estonia.

- Yun, C.-O., Jung, S.-J., & Yun, T.-S. (2024). Interactive learning tutor service platform based on artificial intelligence in a virtual reality environment. In B. J. Choi, D. Singh, U. S. Tiwary, & W. Y. Chung (Eds.), Intelligent human computer interaction. IHCI 2023. Lecture notes in computer science (Vol. 14531, pp. 367–373). Springer.

| Category | Description |
|---|---|
| Bibliographic Details | Author(s), publication year, location, included scholarly sources by type (e.g., peer-reviewed journal articles, peer-reviewed conference proceedings, peer-reviewed book chapters) |
| Study Aims and Context | Purpose of the study and the educational setting (e.g., K-12 classroom, university language course, informal learning environment), target language, and language focus |
| Participants and Sample Size | Number of participants |
| Length of Intervention | Duration of instructional implementation |
| Learning Outcomes | Cognitive, linguistic, affective, and cultural learning outcomes assessed (such as knowledge retention, speaking proficiency, learner engagement, cultural competence) |
| AI Technology Used | Type of AI used (e.g., automatic speech recognition, text-to-speech, natural language processing, conversational agent) |
| XR Technology Used | Type of XR used (VR, AR, MR, Metaverse) and platform/hardware details |
| Theoretical Frameworks | Structured lens through which researchers interpret, analyze, and connect key concepts, guiding the design, implementation, and evaluation of a study (e.g., embodied cognition, experiential learning, engagement theory) |
| Integration Strategies | Description of how AI and XR components interacted or were integrated (e.g., VR-Automatic Speech Recognition integration, AR-Text-to-Speech integration) |
| Affordances | Any pedagogical, affective, or technological advantages (e.g., enhanced learner engagement, increased language immersion, personalized feedback through AI, real-time pronunciation correction, or improved motivation) that show how the integration of AI and XR supports or extends language learning processes |
| Challenges | Any problems, limitations, or drawbacks noted (e.g., technical issues, small sample sizes, usability challenges, or pedagogical limitations) that could inform future research |
| Design Considerations | Important factors or guidelines that need to be considered when designing an AI–XR learning experience in language learning (e.g., pedagogical alignment, learner-centered interaction, accessibility, and scalability) |
| Future Directions | Suggestions for what researchers could explore or investigate next based on the study’s findings and limitations |

| XR Technology | Design Software | Number of Studies | Articles |
|---|---|---|---|
| VR | 3D Images | 3 | (Nakamura et al., 2024; Seow, 2023; Song et al., 2023) |
| VR | Commercial Software | 2 | (Y.-C. Chen, 2024; Gorham et al., 2019) |
| VR | 360 Video | 2 | (Shadiev et al., 2021; Y. Wang et al., 2022) |
| VR | Unity 3D | 4 | (Bottega et al., 2023; Park et al., 2019; Smuts et al., 2019; Tazouti et al., 2019) |
| VR | Unity 3D and 3D Images | 2 | (Y.-L. Chen et al., 2022; Yang & Wu, 2024) |
| VR | Not Specified | 6 | (Guo et al., 2017; Kizilkaya et al., 2019; Mirzaei et al., 2018; Obari et al., 2020; J.-H. Wang et al., 2020; Yun et al., 2024) |
| AR | 3D Images | 1 | (Tolba et al., 2024) |
| AR | Unity 3D | 1 | (Hollingworth & Willett, 2023) |
| AR | Unity 3D, 3D Max, Vuforia | 1 | (Xin & Shi, 2024) |
| AR | Not Specified | 4 | (Hajahmadi et al., 2024; H. Lee et al., 2023; Shukla et al., 2019; Xu et al., 2019) |
| MR | 360° Panoramic Displays | 3 | (Allen et al., 2019; Chabot et al., 2020; Divekar et al., 2022) |
| Metaverse | 3D Images | 1 | (S. Lee et al., 2025) |
| Metaverse | Commercial Platform | 1 | (Hwang et al., 2024) |
| Metaverse | Unity 3D | 1 | (Yu, 2023) |
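
As a quick consistency check on the table above, the study counts can be summed per XR technology and compared with the 32 studies listed in Appendix A. The following is a minimal sketch only: the dictionary simply transcribes the "Number of Studies" column, and the names `counts` and `totals_by_technology` are illustrative assumptions, not part of the review's method.

```python
# Study counts per XR technology and design software,
# transcribed from the "Number of Studies" column of the table above.
counts = {
    "VR": {
        "3D Images": 3,
        "Commercial Software": 2,
        "360 Video": 2,
        "Unity 3D": 4,
        "Unity 3D and 3D Images": 2,
        "Not Specified": 6,
    },
    "AR": {
        "3D Images": 1,
        "Unity 3D": 1,
        "Unity 3D, 3D Max, Vuforia": 1,
        "Not Specified": 4,
    },
    "MR": {"360° Panoramic Displays": 3},
    "Metaverse": {"3D Images": 1, "Commercial Platform": 1, "Unity 3D": 1},
}


def totals_by_technology(table):
    """Sum the study counts of every design-software row for each XR technology."""
    return {tech: sum(rows.values()) for tech, rows in table.items()}


per_tech = totals_by_technology(counts)
grand_total = sum(per_tech.values())

for tech, total in per_tech.items():
    print(f"{tech}: {total}")
print(f"Total: {grand_total}")
```

Under this transcription the totals are 19 (VR), 7 (AR), 3 (MR), and 3 (Metaverse), which sum to 32 and match the number of entries in Appendix A.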

| Language Focus | Number of Articles | Integration Strategies | Example Studies |
|---|---|---|---|
| Speaking | 15 | Extended Reality with Automatic Speech Recognition; Extended Reality with Text-to-Speech; Extended Reality with Conversational Agents; Extended Reality with Computer Vision | (Bottega et al., 2023; Y.-L. Chen et al., 2022; Y.-C. Chen, 2024; Divekar et al., 2022; Guo et al., 2017; Hollingworth & Willett, 2023; Kizilkaya et al., 2019; H. Lee et al., 2023; Mirzaei et al., 2018; Nakamura et al., 2024; Park et al., 2019; Seow, 2023; Shukla et al., 2019; J.-H. Wang et al., 2020; Yun et al., 2024) |
| Listening | 3 | Extended Reality with Automatic Speech Recognition; Extended Reality with Natural Language Processing | (Allen et al., 2019; Chabot et al., 2020; Guo et al., 2017) |
| Writing | 3 | Extended Reality with Real-Time Feedback System; Extended Reality with Text-to-Speech; Extended Reality with Machine Learning | (Gorham et al., 2019; Y. Wang et al., 2022; Xu et al., 2019) |
| Vocabulary | 3 | Extended Reality with Automatic Speech Recognition; Extended Reality with Computer Vision | (Allen et al., 2019; Chabot et al., 2020; Song et al., 2023) |
| Cross-Cultural Learning | 3 | Extended Reality with Automatic Speech Recognition; Extended Reality with Conversational Agents | (Mirzaei et al., 2018; Shadiev et al., 2021; Yang & Wu, 2024) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yan, W.; Li, B.; Lowell, V.L. Integrating Artificial Intelligence and Extended Reality in Language Education: A Systematic Literature Review (2017–2024). Educ. Sci. 2025, 15, 1066. https://doi.org/10.3390/educsci15081066
