1. Introduction

The integration of artificial intelligence (AI) in education offers promising avenues for fostering critical thinking, but it also raises concerns about balancing technological reliance with autonomous cognitive development. Recent studies suggest that AI can enhance learning by promoting cognitive flexibility and personalizing instruction (Panit, 2025; Sako, 2024). Nonetheless, excessive dependence on AI may impede the development of critical thinking skills (Hading et al., 2024; Çela et al., 2024). In response to these concerns, Human-Centered Artificial Intelligence (HCAI) in education emphasizes collaborative inquiry, creativity, and social responsibility, with educators serving as crucial mediators between technology and the learning experience. Through this human-centered lens, education can develop essential 21st-century skills, such as digital literacy, problem-solving, and critical analysis, thereby transforming AI from a mere technological tool into a key element of immersive and meaningful learning within a holistic pedagogical framework (Anastasiades et al., 2024).

Grounded in the above approaches, the study was conducted during the winter semester of the 2024–2025 academic year within the Pedagogical and Teaching Sufficiency Program offered by the Audio and Visual Arts Department, Ionian University. It draws on empirical data collected through classroom observations, students’ midterm assignments, and a final reflective questionnaire. More specifically, after attending lectures on the theory of the social construction of reality as developed by Berger and Luckmann in The Social Construction of Reality: A Treatise in the Sociology of Knowledge (Berger & Luckmann, 1966), students were asked to design a lesson plan (Papadopoulou, 2018a, 2019) that would encapsulate the concept of social construction and the multiple manifestations of reality (Papadopoulou, 2018b) through an artistic medium, such as a visual artwork, a short play, or a choreographed performance. A key element of the assignment, and a central focus of our research, was the explicit instruction for students to adopt a dialogical approach as the core methodological framework for their engagement with AI. This approach was informed both by theoretical underpinnings and by applied insights from ongoing research conducted at the Institute for Game Research (Institute for Game Research, n.d.). It builds on the work of two co-authors of this study, who are also members of the Institute and who have been employing dialogical strategies in training language models while developing an educational and ethically grounded protocol for the responsible integration of AI-driven virtual agents into learning environments.

2. Conceptual Framework

Theoretical frameworks are essential in research, not only as scaffolds for organizing thought but also as interpretive instruments that shape how we ask questions and make sense of data. From an epistemological perspective, they also serve as conceptual tools for grasping how knowledge about social reality is constructed and becomes institutionalized (Mills, 1959; Berger & Luckmann, 1966; Giddens, 1984). In this study, rather than adopting a fixed theoretical model from the outset, we mobilize a set of evolving concepts to illuminate the emerging dynamics of human–AI interaction. Drawing on insights from posthumanist theory, psychology, and anthropology, we use the concepts of “non-human agency”, “co-agency”, “flow”, and “play”. While non-human agency and co-agency informed the original research design, flow and play emerged inductively from participants’ lived experiences. Synthesizing these diverse concepts enables a multilayered understanding of dialogic human–AI interaction, in which meaning, emotion, and cognition are co-constructed. This approach reveals how learning and creativity are not solely human-centered processes but also unfold through entanglements with intelligent technologies.

2.1. AI as a Form of Non-Human Agency in Posthumanist Thought

In the context of this study, which investigates how students interact with AI within dialogical learning environments where meaning is co-constructed through mediated engagement, the posthumanist framework offers a novel analytical perspective. Rather than centering human intentionality and rational subjectivity, as traditional sociological paradigms do, posthumanist thought shifts attention to the agency of non-human actors (Haraway, 1985; Schatzki, 2002; Latour, 2005; Hayles, 1999; Braidotti, 2013). In Reassembling the Social (2005), Latour conceptualizes “agency” as the capacity of an entity, human or non-human, to make a difference in a given state of affairs. Applied to educational practice, this conceptualization implies a paradigm shift: learning is no longer viewed as a purely individual cognitive process but as a relational and situated achievement, co-constructed through ongoing entanglements with material, social, and technological actors (Lin & Li, 2021). From this perspective, AI can be viewed not as a neutral tool but as an agentic actant whose algorithmic interventions reshape the discursive possibilities available to learners.

2.2. Co-Agency in Human–AI Interaction

A growing body of scholarship treats artificial intelligence not as a neutral tool but as a co-agent: AI systems such as ChatGPT participate in shaping the learning process by proposing pathways and engaging in meaning-making alongside the user. This transforms learning from a solitary intellectual endeavor into a collaborative, hybrid undertaking grounded in relational dynamics, in which human and non-human agents operate in complementarity (Haraway, 1985; Schatzki, 2002; Latour, 2005; Hayles, 1999; Braidotti, 2013). Sociologically, this lens reveals how algorithms do not merely implement instructions but actively participate in configuring social and pedagogical practices (Kuijer & Giaccardi, 2018). Within this framework, everyday interactions, such as co-authoring with AI or solving problems collaboratively, emerge through processes of mutual adaptation. Human–AI interaction can therefore be seen as a mode of reciprocal shaping. More broadly, when approached intentionally, the combined capabilities of humans and AI can foster a form of hybrid competence: an emergent intelligence that surpasses what either could accomplish alone (Dezső-Dinnyés, 2019). In this light, co-agency reframes AI-supported learning as a reflective and dialogic engagement rather than merely a technical skill. Ultimately, co-agency provides a framework for understanding human–AI interaction as an evolving, interdependent dynamic: a jointly navigated space of shared inquiry and meaning-making. This perspective invites educators to approach AI not as a replacement for human agency but as a generative partner in the learning process, provided it is guided by ethical awareness and pedagogical intentionality.

The idea of co-agency also holds significant value for the development of AI-driven educational tools. According to Osmanoglu (2023), safeguarding human agency is essential when designing such systems; rather than substituting for human input, AI should be designed to stimulate originality and critical thought. This view is consistent with the aims of AI literacy, which seek to prepare learners to interact with intelligent systems in reflective and responsible ways. As a conceptual lens, co-agency helps illuminate how humans and AI can relate in educational and creative processes. It highlights the importance of mutual involvement and co-creation, promoting an educational culture in which critical reflection and creative participation are central. Rather than treating AI as an autonomous force that shapes human behavior, this approach advocates seeing it as a collaborative entity that, when designed with ethical sensitivity, can strengthen human potential and enrich learning experiences.

2.3. Flow States in Human–AI Interaction

The theory of flow, as developed by Mihaly Csikszentmihalyi (1990), describes an optimal psychological state of deep immersion and focused engagement in an activity. In such states, individuals often lose their sense of time and self-consciousness while experiencing high levels of performance and satisfaction. Flow emerges when the challenge of a task is balanced against the individual’s skill level and when the activity offers clear goals and immediate feedback. Across domains such as learning, work, play, and creative expression, flow is considered a vital condition for enhancing autonomy, creativity, and experiential learning.

As AI becomes increasingly embedded in both everyday and professional life, researchers across diverse fields are turning their attention to the intersection between AI and flow experiences. Recent studies investigate how AI can both induce and sustain flow across different domains. In adaptive learning environments and games, AI systems are designed to support flow by responding dynamically to user behavior (Juvina et al., 2022). Weekes and Eskridge (2022) introduce the Flow Choice Architecture (FCA), an AI system designed to enhance the experience of flow among knowledge workers. FCA combines biosignal data with AI techniques to offer personalized nudges, that is, gentle, context-aware suggestions or interventions (such as auditory cues, visual prompts, or short reminders) that help workers maintain focus, avoid interruptions, and sustain high levels of cognitive performance. Rooted in Thaler and Sunstein’s Nudge Theory, these recommendations are not forced or intrusive; they are designed to subtly steer behavior in ways that promote well-being and immersive engagement during cognitively demanding tasks.

2.4. From Homo Ludens to AI Ludens: Toward a Culture of Playful Intelligence

While Csikszentmihalyi examines flow primarily from a psychological perspective, its immersive qualities can also be discerned in Huizinga’s anthropological understanding of play. For Huizinga, play is a generative cultural force: a ritual space where alternative forms of being and relating can emerge. In his seminal work Homo Ludens, Huizinga (1938/1950) defines play as a voluntary, bounded, and rule-governed activity that takes place outside the sphere of ordinary life. Its essential feature, he argues, is its immersive nature: play absorbs the individual so fully that one steps out of daily reality and into a separate symbolic order. It is an experience that demands intense focus and emotional investment, not for external gain but for the value of the experience itself. Seen through this lens, flow becomes the experiential and affective core of play and corresponds to one of the most fundamental human needs. From Huizinga’s perspective, the desire to play is not merely a choice but a deep human impulse: a need for immersive experience so compelling that it draws the individual back into the play space again and again. Like a current of water, this urge finds expression wherever it can, seeking renewal, resonance, and release.

Zhu et al. (2021) propose, based on their research on neural network-based gameplay environments, that human–AI interaction can take the form of play. They argue that designers of AI systems should account for the concept of flow, as such interactions can foster immersion, exploration, and co-creation.

3. Central Hypothesis and Research Questions

The central hypothesis of this research is as follows:

«Dialogic engagement with generative AI constitutes a transformative educational process in which human agency and co-agency are dynamically co-constructed. When AI is approached not as a passive tool but as a dialogic partner, it can foster critical thinking, conceptual reframing, and creative expression».

To explore this hypothesis, the study addresses the following research questions:

- RQ1: In what ways does dialogic interaction with AI influence students’ expression of agency and the emergence of co-agency throughout the learning process?
- RQ2: What role does dialogic structure play in fostering critical reflection and creative experimentation during human–AI interaction?
- RQ3: How do students perceive and evaluate AI when it is integrated as a dialogic partner in their learning process?

4. Related Work

Grounded in a collaborative perspective, dialogical approaches to AI-assisted education have advanced the concept of co-agency, highlighting the mutual shaping of learning processes by humans and AI. Rather than positioning AI as a passive tool, this framework emphasizes mutual responsiveness and shared intentionality between human and artificial agents. A dialogical approach functions not merely as a channel for interaction but as a pedagogical strategy that fosters critical thinking, intellectual autonomy, and creative exploration through the ongoing negotiation of meaning. In such settings, learners become active co-constructors of knowledge, shaping the learning experience in partnership with the AI. Recent evidence supports this claim. Research shows that AI-supported collaborative environments enable students to test ideas, challenge assumptions, and refine their understanding through iterative dialog (Sako, 2024), while studies of AI-based language instruction reveal that dialogic co-agency nurtures trust, self-confidence, and openness to alternative perspectives (Muthmainnah et al., 2022). Complementing these qualitative insights, a 2024 mixed-methods study of 121 undergraduates by Ruiz-Rojas, Salvador-Ullauri, and Acosta-Vargas found that occasional use of generative AI tools, particularly Canva and ChatPDF, was linked to a clear self-reported rise in critical-thinking skills (64%) and a modest yet positive boost in collaboration (~60%) (Ruiz-Rojas et al., 2024). Likewise, experimental work on design-based learning demonstrates that integrating AI can significantly enhance creative self-efficacy and reflective thinking (Saritepeci & Yildiz Durak, 2024), lending further empirical weight to AI’s transformative potential.

However, cultivating critical thinking with AI still demands a careful balance between technological and traditional pedagogies (Panit, 2025; Çela et al., 2024). Educators therefore play a pivotal role, guiding students through dialogical strategies (participatory exploration, field visits, workshops) that ensure AI enhances rather than replaces analytical and reflective capacities (Chaparro-Banegas et al., 2024). Responsible, critical integration can thus strengthen reasoning and argumentation skills (Susanto et al., 2023).

A prime example of this perspective is the prototype of a virtual agent used to investigate educators’ perspectives on AI through open-ended discussions (Katsenou et al., 2024). The defining feature of this virtual agent was its agency: it was trained to depart from ChatGPT’s typical function of providing direct answers to preset queries. Instead, the assistant’s chief objective was to enhance creativity, critical thinking, and socio-emotional development, mainly by generating its own questions. The “Froufrou prototype,” as the assistant was called, was developed on the basis of Gianni Rodari’s principles and the constructivist approach, with the aim of weaving children’s personal experiences into narrative contexts through targeted questions. Remarkably, within just one hour of interaction, the virtual agent reduced kindergarten teachers’ initial resistance to introducing AI in the classroom. The Froufrou prototype exemplifies how an AI developed with a focus on dialogical interaction and a human-centered perspective can turn doubts and concerns into a positive stance. Through this experiment, educators came to recognize that AI could serve as a creative partner that deepens and enriches the learning experience.

5. Method of Research: A Hybrid Ethnography

This study employed a hybrid ethnographic approach, conducted within a blended learning environment that combined in-person classroom sessions with online participation. The course was attended both by officially enrolled students, who were physically present, and by recent graduates attending the teacher certification program, who joined remotely.

As part of the course, we provided students with a practical guide titled “Artificial Intelligence as a Learning Partner through Dialogic Learning”, which was developed through ongoing experiments conducted at the Institute for Game Research. The aim of these experiments is to formulate a methodological workflow for educators working with digital assistants and to contribute to the development of an ethical and pedagogical framework for AI-supported education. The guide was grounded in sociocultural and dialogic learning theories, particularly those of Vygotsky (1978) and Bruner (1990), emphasizing the role of language as a tool for thinking and of learning through social interaction. It introduced techniques for active and reflective engagement with AI, such as providing contextual input (e.g., uploading conceptual content such as “wild pedagogy” so that the AI can respond more precisely), assigning roles (e.g., asking the AI to act as an ethnographer or educator), breaking complex tasks down into smaller steps (e.g., identifying main themes, then generating subcategories), and encouraging critical questioning (e.g., prompting students to challenge responses and formulate deeper inquiries). Through this approach, AI was presented as a learning mediator that supports meaning-making through dialog, with the aim of fostering students’ autonomy and active participation. The guide was offered as an optional resource, and students were invited to apply it voluntarily in their own learning processes.

5.1. Participants of the Research

A total of 56 participants took part in the study, including students and graduates of the Department of Audio & Visual Arts, Ionian University, as well as graduates from art departments of other Greek universities who sought to obtain certification of Pedagogical and Teaching Sufficiency. Their diverse backgrounds provided valuable insights into the integration of AI in creative learning environments. Hereafter, the term “students” refers to all participants in the Pedagogical and Teaching Sufficiency Program. To ensure anonymity, all names referenced in this study are pseudonyms, and any identifying information has been altered or omitted to protect the privacy of the individuals involved.

5.2. Data Collection

The empirical material was drawn from three main sources: (1) structured field notes compiled during students’ live presentations, (2) midterm creative projects involving the use of AI tools, and (3) responses to a final reflective questionnaire. This multidimensional methodology, which integrates the analysis of synchronous and asynchronous interactions, AI-generated content, and self-reflective data, offers a nuanced understanding of the complex social dynamics that characterize contemporary hybrid educational settings (Liu, 2022). The research was conducted over the course of the academic semester, beginning in September 2024 and concluding at the end of the January 2025 examination period.

5.2.1. Field Notes from Student Presentations

The first corpus for our analysis was derived from classroom observation notes taken during student presentations. These notes focused on two primary axes: (a) the extent to which students adopted the dialogic methodology introduced in class, and (b) the depth of their conceptual engagement with theory and their perceived interaction with AI. Notably, many initial submissions lacked concrete examples or traces of reasoning showing how students had used dialogic strategies with AI to develop their ideas. In response, we explained the conceptual gap this omission created and offered all students the opportunity to revise and resubmit their work. Almost all of them took this opportunity. The revised versions included not only improved conceptual articulation but also concrete documentation of their dialogic processes, such as transcripts of their exchanges with AI and visualizations of the key turning points in their interaction. These additions allowed for a richer understanding of how students navigated, adapted to, or struggled through the co-constructive learning process.

During the final presentations, we observed that prior classroom practices, such as annotated reflections, modeling of AI dialog, and peer discussions, had a tangible impact on student outcomes. Those who had embraced dialogic techniques (e.g., iterative prompting, follow-up questions, or treating AI as a sparring partner to test ideas) produced more thoughtful, layered results. In contrast, students who relied on simple, one-shot prompts often produced technically competent but conceptually shallow outputs.

Some unexpected findings also emerged. One student (Rania) became so deeply immersed in playful engagement with AI that she produced two distinct projects: one based on a single playful prompt, a linguistic game she referred to as “mine”, and another, more formal academic version. Notably, she did not initially regard the playful piece as a “real” academic submission. Themes of personalization and adaptability also surfaced. One student asked the AI to explain a complex theoretical concept using football metaphors, thereby creating a familiar frame of reference. Another student, who has dyslexia, reported that AI-supported reading and synthesis made processing the course material more accessible and less stressful. These examples highlight the potential of dialogic AI to personalize learning, foster inclusion, and challenge normative expectations in academic work. A summary of these findings is presented in Table 1.

5.2.2. Data Collected Through Student Assessments and Post-Activity Questionnaire

The second corpus consisted of students’ submitted deliverables, which we evaluated with particular attention to the visible chain of reasoning in how they structured their interactions with AI. We examined whether students had shaped the dialog purposefully, framing their inquiries in ways that revealed critical intent and conceptual clarity. Key questions guiding our analysis included: Did the student attempt to guide the AI’s responses? Was there evidence of intentional framing and iterative refinement? Did moments of tension, contradiction, or misunderstanding emerge during the dialogic process, and if so, how did the student respond?

These questions were further explored through a reflective questionnaire administered at the end of the course. The questionnaire was not built around predefined categories; rather, it was constructed inductively from themes observed during the teaching process and student presentations. Questions probed how students’ perceptions of AI had evolved: whether they saw it as a collaborator or merely a tool, how it compared to human interaction, and whether the experience enhanced or hindered their creativity and critical thinking. The questionnaire also invited students to reflect on their emotional engagement with AI, their learning challenges, and the extent to which dialogic interaction contributed to clearer conceptual understanding.

5.3. Method of Analysis

Within this framework, we employed techniques from discourse analysis (Fairclough, 1992) to investigate shifts in language, reasoning, and meaning-making over time. Our analysis focused on four interpretive dimensions: linguistic markers, dialogic reasoning, interpretive shifts, and evolving cognitive patterns. These dimensions allowed us to identify not only how students expressed agency in their interaction with AI but also how their perspectives changed over the course. They are defined and illustrated as follows.

(1) Linguistic markers. These included specific phrases, pronouns, or verbs that signaled how students positioned themselves in relation to AI. For instance, statements such as “We tried a few prompts together, and then I adjusted based on what it gave me” used inclusive pronouns to indicate a collaborative framing. In contrast, expressions such as “It gave me a result. I just used it” suggested a passive stance and a more instrumental view of the technology. Such markers provided insight into the degree of perceived agency and dialogic engagement.

(2) Dialogic reasoning. This referred to instances in which students engaged in reflective back-and-forth with the AI, often using its responses as prompts for deeper inquiry. One student noted: “When I saw what the AI gave me, I realized it didn’t make sense in the context I had imagined. So I asked it again, but in a different way. Then I realized I hadn’t been clear myself.” This kind of reasoning reflects cognitive flexibility, meta-reflection, and a willingness to revisit one’s own assumptions.

(3) Interpretive shifts. These were changes over time in the way students conceptualized AI, their topic, or the theoretical framework. A typical example involved a student who initially claimed that “AI is just a neutral tool—like Photoshop but with text” and later concluded: “I realized that the AI reflects the data it was trained on. It’s not neutral—it actually replicates dominant views.” This shift from a technical to a sociocultural interpretation demonstrated a deepening of conceptual understanding.

(4) Evolving cognitive patterns. These were evident in the transition from descriptive reflections to analytical or theoretically grounded ones. One student began by asking the AI for an image of a futuristic city and simply accepted the output. Later, he wrote: “I asked the AI to visualize a class-divided city. I then analyzed how its visual choices reflected capitalist aesthetics, referencing Lefebvre’s notion of abstract space.” This example illustrates how dialogic interaction can scaffold higher-order thinking and theoretical integration.

Taken together, these dimensions allowed us to trace not only what students said but also how their ways of thinking developed through interaction with AI. Building on this initial categorization, we developed broader thematic groupings that reflected the pedagogical function of our teaching approach (see Table 2).

Based on this framework, we sought to construct a narrative that would reflect, as accurately as possible, the patterns and insights emerging from our empirical findings. A consistent pattern across the data set was that students who actively engaged in classroom dialog (through lectures, discussions, and experimentation) underwent a noticeable shift in how they perceived and used AI. Their initial assumptions, often grounded in a purely instrumental understanding of AI, gave way to more nuanced interpretations that emphasized dialogic potential, critical reflexivity, and co-agency. This transformation was especially visible among students who treated AI as a conversational partner, which prompted them to rethink their own ideas and frameworks. In contrast, most of the assignments that showed no such interpretive or cognitive transformation were produced by students who had not participated in the dialogic aspects of the course, such as attending lectures or engaging in feedback sessions. This observation underscores the centrality of dialogic engagement, not only as a pedagogical method but also as a catalyst for critical development in AI-supported creative outputs and reflective responses. Whether AI was approached as a constrained tool, a cognitive partner, or a site of negotiation, the quality of interaction strongly shaped both the form and the depth of learning.

Together, classroom observations, assessment of deliverables, and students’ reflective responses provided a multi-layered view of their evolving relationship with AI, a relationship shaped by both cognitive and affective dimensions. Integrating these distinct sources served as a form of methodological triangulation, allowing us to trace shifts in learner agency, dialogic sophistication, and theoretical integration.

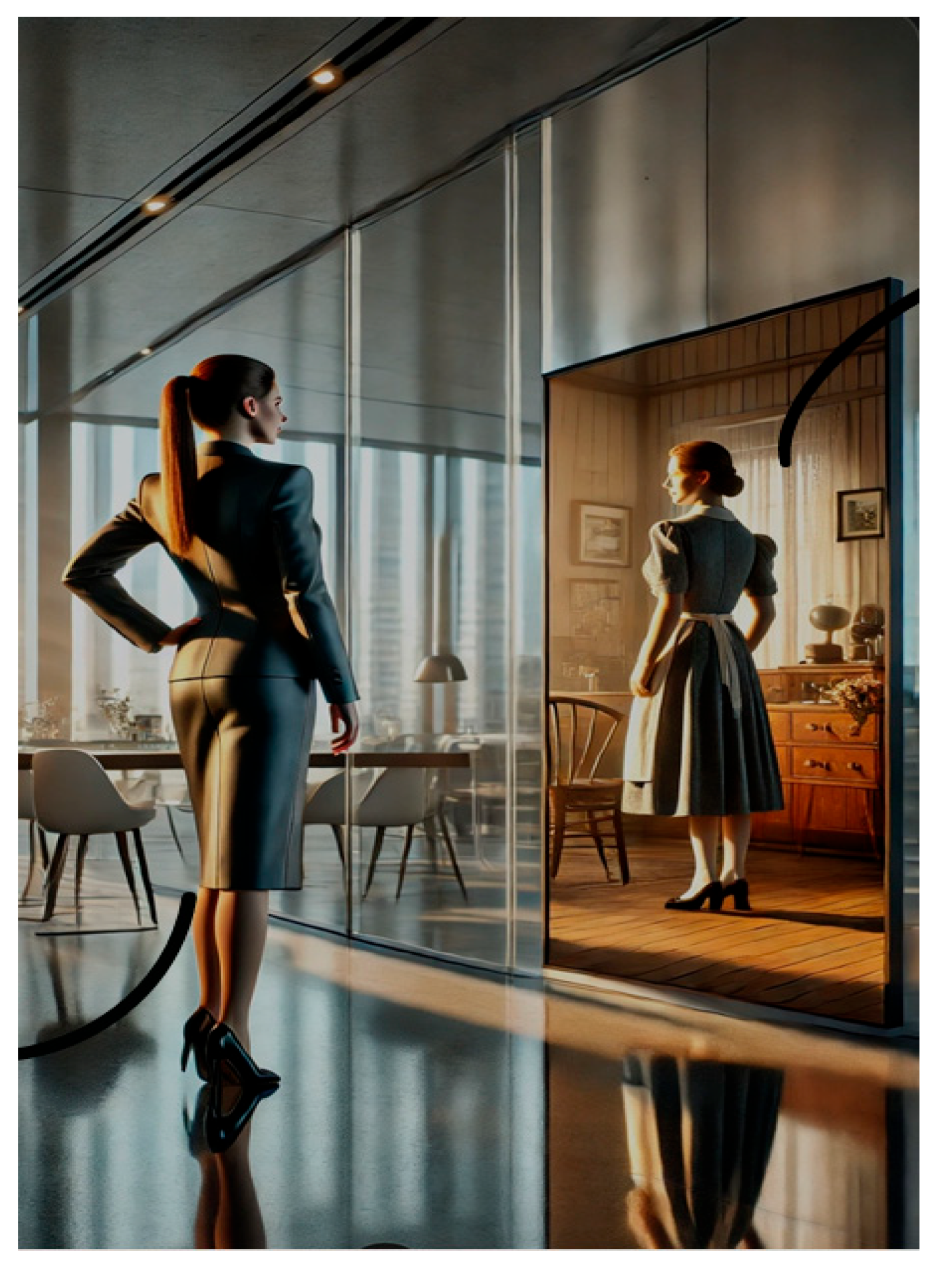

Finally, as part of the data analysis process, we experimented with using ChatGPT to detect and trace students’ reasoning chains within their submitted work. A particularly illustrative case involved a student who designed a visual representation of the theory of social change, focusing on the evolving roles of women. The student’s process was iterative and dialogical. Initially, she prompted the AI with a general question about how it perceives social change in relation to women, and the AI generated an image of a traditional housewife. She then refined her prompt, requesting “both a traditional and a modern woman,” but the next image included only the latter. Recognizing the difficulty of merging opposites, she provided detailed instructions regarding “setting, body language, clothing, and symbolic contrast.” After several iterations, the final image depicted the two figures in clearly distinct roles, conveying the intended narrative of social transformation (Figure 1). When asked to analyze the student’s process, ChatGPT successfully identified the progression of thought. It noted, for example: “The student begins with an exploratory prompt, testing the AI’s spontaneous interpretation. As the responses fail to meet her conceptual goal, she engages in iterative correction, demonstrating critical reflection and increased control over the output.” And further: “Through successive refinements, the student transitions from open-ended to strategically framed interaction, gradually constructing a visual metaphor aligned with sociological theory.”

Encouraged by such insights, we extended the use of ChatGPT to several other student submissions, prompting it to detect logical sequences and dialogic evolution. In the early stages, the model offered promising contributions. In one case, for instance, it remarked: “The student’s initial query is descriptive—‘What is AI?’—but through follow-up prompts such as ‘Can AI replace human creativity?’ and ‘How does emotion affect educational dialogues with AI?’, the reasoning shifts toward a speculative and critical mode.” However, as the process unfolded, its limitations became evident. The AI began producing fictional reasoning chains, attributing to students transitions or logical structures that were absent from their actual texts. As the analytical quality diminished, we observed a dilution of content and increasing detachment from the authentic student voice. Consequently, we discontinued this approach in its current form.

5.4. Limitations of the Research

Within the framework of an ethnographic approach, certain limitations are inevitable and must be considered. As Clifford Geertz reminds us, ethnographic accounts are inherently interpretive, “something made,” “something fashioned” (Geertz, 1973), and they therefore always bear the imprint of the researcher’s subjectivity. In this project, the principal investigator functioned as the primary research “instrument,” and their prior experiences inevitably shaped the reading of the data. To mitigate bias, the initial coding and cross-checking were carried out collaboratively by the two instructor-authors who taught the course, Katsenou and Papadopoulou, ensuring that multiple insider perspectives informed the analysis. Deliyannis was present during the initial student presentations and joined the interpretation process alongside the students themselves, adding a further vantage point grounded in live classroom interaction. Finally, Kotsidis and Anastasiadis reviewed the findings as external readers, offering the critical distance necessary for triangulation and helping to limit interpretive drift.

As is typical in ethnographic research, the aim was not to produce statistical generalizations, but rather to develop a nuanced understanding of the phenomenon under investigation. Ultimately, the ambition of ethnographic inquiry is not to yield sweeping, law-like claims (Geertz, 1973; Hammersley & Atkinson, 2019). Instead, it purposefully privileges depth over breadth: by closely examining how students constructed dialogic interactions with AI systems, this study aims to shed light on emerging forms of learning engagement and to contribute to the broader scholarly conversation on the educational uses of artificial intelligence.

6. Results

The following section offers a narrative-based account of the empirical material collected during the course, drawing on observations during student presentations, student assignments, interactions with AI tools, and reflective questionnaires. The main narrative unfolds around the techniques students employed to actively guide AI through dialog, prompting it to generate more meaningful and higher-quality content. The analysis shows that when human–AI interaction is framed through a dialogical lens, both parties shape each other. The quality of AI-generated content improves substantially, while students develop a deeper understanding of the material they are working with and gain insight into both the potential and the limitations of AI systems.

6.1. The Dual Role of Artificial Intelligence—Support or Substitution?

Although most students reflected positively on their interactions with AI, particularly praising its ability to simplify complex concepts and help them grasp abstract ideas, they all acknowledged a deeper concern: the gradual erosion of human initiative and creative control. This concern became especially pronounced when AI was used to generate content without thoughtful guidance, transforming the tool from a support mechanism into an automated response engine. Several students reported a growing sense of diminished human agency, noting that prolonged use of AI tools occasionally led to a more passive stance in their creative and cognitive processes. While they recognized the efficiency and convenience that AI offers, students also reflected on how its generative ease often bypasses essential human cognitive processes, such as reflective thinking, experiential learning, and step-by-step reasoning, which involve time, effort, and uncertainty. In contrast, AI systems tended to deliver immediate outputs, reducing the need for sustained mental engagement and the gradual construction of knowledge. Panos captured this tension clearly:

Students should not use AI excessively without the supervision of a teacher, as this can lead to misuse—such as using it to automatically solve exercises without understanding the theory. AI should serve as a supportive tool for students, helping them understand the material better, and not as a provider of ready-made answers.

(Panos)

Panos’s statement articulates a pedagogical position. For AI to hold educational value, it must operate within a framework of guided learning in which the teacher’s presence is essential rather than optional. The teacher assumes a catalytic and mediating role. This role involves not only guiding students in how to use AI meaningfully but also setting the pedagogical boundaries within which AI can operate as a learning companion rather than as a shortcut into passive consumption. In this sense, the teacher becomes a sense-maker, a human anchor who ensures that AI supports critical engagement and conceptual understanding rather than bypassing them. Nikolas offers a complementary insight that shifts the focus away from the tool itself and toward the educational system that shapes its deployment. In his view, the problem is not only how students use AI, but why they use it and what broader pedagogical values this use reflects. Nikolas implicitly advocates integrating AI into meaningful learning activities, that is, contexts in which students are invited to engage with complexity, uncertainty, and intellectual risk. In such a vision, AI is not an add-on to the competitive pursuit of better grades, but a catalyst for reflection and dialog within a culture of learning that values process over performance.

6.2. Turning Points in AI Dialog: From Semantic Deadlocks to Co-Agency

Having identified the tension between creative autonomy and AI’s generative ease, we now turn to a critical shift. After recognizing the problems associated with instrumental AI use, most students actively sought ways to reclaim creative control through dialogic strategies. Rather than abandoning the tool, they transformed their mode of engagement and experimented with techniques that promoted collaboration, iteration, and reflective dialog. Some students, like Marina and Konstantinos, initially perceived AI as a constraint that either interrupted their creative flow or provided pre-formed answers. Many others, however, reported turning points in their relationship with the tool. These turning points often emerged through semantic deadlocks: moments when AI misunderstood a prompt, produced vague or contradictory responses, or failed to align with the student’s intent. Remarkably, students did not treat these breakdowns as failures. On the contrary, many reframed them as creative thresholds, that is, as opportunities to pause, rethink, and renegotiate the interaction. Rather than passively accepting AI’s output, they responded with greater precision, adapted their prompts, and initiated deeper cycles of exchange. Through this process, AI shifted from a static content generator to a dialogic partner in a co-creative process. This transition illustrates a significant pedagogical insight: when AI is situated within a reflective and iterative learning environment, even its limitations can provoke creative agency.

Students did not merely learn to “use” the tool better—they learned to converse with it, to challenge it. Emilia, for example, described her interaction with AI as cognitively demanding, highlighting the need for continuous rephrasing and negotiation to reach a satisfactory result. For her, semantic deadlocks became catalysts for more meaningful dialog, resembling the productive role of disagreement in human conversations, where conflict often leads to the redefinition of boundaries and the elevation of communicative quality. The empirical material indicates that the quality of AI-mediated learning interactions is shaped less by the technical affordances of the system and more by the student’s dialogic orientation and interactional strategies. Analysis of student assignments and reflective questionnaires suggests two dominant modes of engagement.

The Structured Approach: Students in this group approached AI as a disciplined and dialogic partner, carefully setting the stage for meaningful exchange. Rather than posing isolated prompts, they offered rich context, defined clear goals, and made their intentions transparent. Through this orchestration, the AI was reimagined not as a passive tool but as a responsive interlocutor, one capable of posing questions, supporting critical thought, and participating in the iterative clarification of ideas. This relational strategy not only enhanced the coherence of the AI’s output but also sharpened the students’ reflective awareness of their own thinking processes. Mina offers a particularly compelling example of the structured approach. She deliberately engaged with ChatGPT in a way that ensured meaningful dialog, elevating AI from a simple answer-generating tool to an intellectual companion. She emphasized that a crucial factor in this productive collaboration was her decision to provide structured input, which shaped an interactive space for targeted and substantive discussion. What distinguished her experience was AI’s ability to sustain the conversation by posing new questions, helping her structure her thoughts, and deepening her analysis. The continuous exchange of ideas served not merely as a means of acquiring information but as a mechanism that reinforced dialogic learning and critical thinking.

Similarly, Katerina developed a methodology to structure her communication with AI. She began by instructing the system to generate an image reflecting the social construction of reality. Throughout this process, she meticulously articulated her expectations for each generated image and refined AI’s output iteratively. “I kept pushing it until it made sense to me—not just visually, but conceptually,” she noted. Once she was satisfied with the final image, she uploaded her notes, which had previously been shared on Open class, and requested a summarized explanation. She then prompted AI to connect these insights to the image. She also instructed AI to integrate references from Berger and Luckmann’s (1966) seminal work, The Social Construction of Reality, for further elaboration. These accounts reveal how a thoughtful structuring of dialog opened space for genuine co-construction of meaning.

The Critical Approach: Other students adopted a more interrogative stance, approaching AI as a site of contestation. They challenged the system’s assumptions, probed its limits, and foregrounded epistemological tensions. These engagements were marked by iterative questioning and reflective skepticism, fostering awareness of AI’s biases and constraints while simultaneously using it as a springboard for academic critique. The critical approach is exemplified in the interaction of Nikos, who persistently questioned AI’s claims, cross-referenced them with academic sources, and demanded argumentative justification. When presented with a general statement about the social construction of reality, he responded: “Can you provide a specific example from Berger and Luckmann’s work that supports this claim?” This prompted a more nuanced reply, shifting the interaction into a space of deliberative scrutiny. When AI offered deterministic or culturally narrow explanations, Nikos interrogated their theoretical underpinnings: “This interpretation seems biased toward a Western framework—what alternative sociological perspectives exist on this issue?” This iterative questioning process transformed his interaction into a critical engagement, fostering a deeper reflection on both AI’s limitations and its potential as a tool for academic inquiry.

Katerina’s AI-assisted image (Figure 2) vividly illustrates this idea of active dialog with AI and co-agency. It visually represents cooperation: two individuals collaboratively constructing a cube. Just as these two figures work together to build the structure, AI can serve as a collaborative partner in dialogic learning. It can facilitate exploration, the continual revision of knowledge, and meaning-making through dialog, while offering new perspectives and stimulating critical thinking. In this sense, AI and dialogic learning can coexist on the same intellectual trajectory, converging toward the expansion of knowledge and the construction of meaning.

6.3. AI as Co-Player: From Dialogic Play to Flow and Meaning-Making

A salient finding of this research was that the lectures played a pivotal role in changing students’ perceptions by stimulating their curiosity, even among those initially skeptical about AI. In this context, students did not use AI merely as a tool for completing a semester project. Instead, it served as a domain for experimentation through which they sought to comprehend not only AI itself but also their own identities and the world around them. Once this intrinsic interest was activated, students set aside their initial reservations, even when they had held negative predispositions. Rania provides an illustrative example. She initially voiced strong criticism of AI, arguing that it tends to mimic the aesthetic choices of the most prevalent patterns and thereby undermines creative originality. She described her experience as follows:

In general, I found this project interesting, even though I had my reservations about the use of AI at the beginning. This is something I will analyze further. Nevertheless, I found it engaging. The topic appealed to me greatly, and I began forming my first idea immediately after leaving the class. (…). The notion that reality is not objective but socially constructed was a provocative thought that captured my interest and made me feel as if I was decoding the world around me. The topic led me to reflect on questions such as: ‘Which beliefs are truly my own?’ and ‘Which have been imposed on me by society?’ I find these inquiries highly stimulating as they touch upon existential issues and provoke intellectual exploration, offering a sense of self-awareness and self-criticism. Additionally, I found the challenge of translating a theoretical concept into an image particularly exciting.

(Rania)

Rania’s experience shows how the course’s thematic content functioned not solely as a subject of study but also as a stimulus for critical thinking and philosophical inquiry. Phrases such as “captured my interest,” “provocative thought,” and “particularly exciting,” as well as her reference to “decoding the world,” align directly with Csikszentmihalyi’s (1990) concept of flow. This concept refers to a state of complete focus, immersion in experience, and heightened creativity, in which participants experience total absorption and a loss of temporal awareness. These attributes are associated with the notion of optimal experience. The experience intensified further when Rania began interacting with AI, and the process became a form of playful exploration.

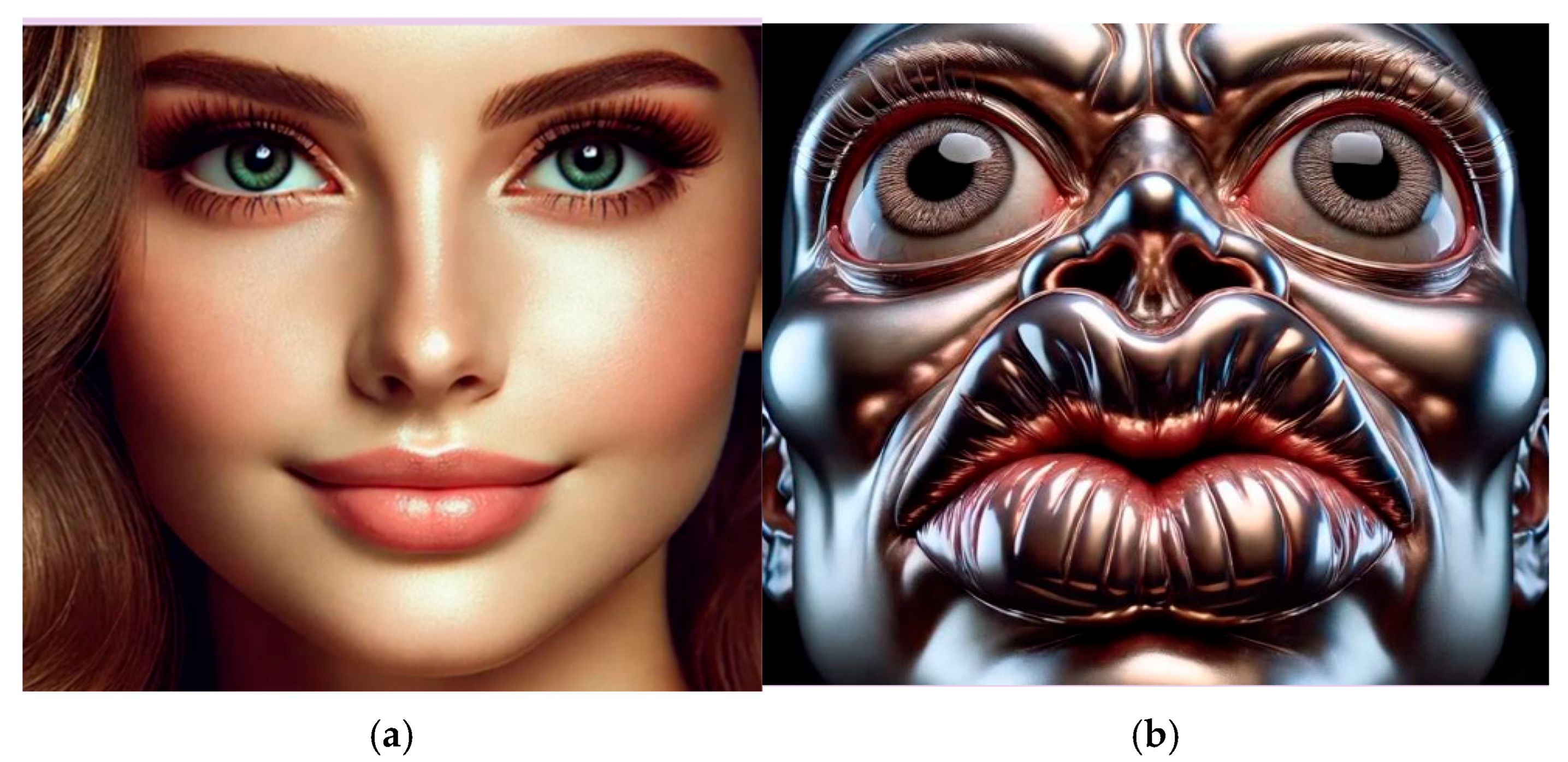

My initial idea was to explore the concept of beauty. I asked AI to generate an image of a beautiful woman based on standard beauty ideals. Every time it provided an output, I asked for another: ‘I want an even more beautiful woman,’ without specifying details on how she should look. At one point, the AI displayed a message indicating that we had surpassed the boundaries of realism and that continuing the process would result in unnatural, distorted features. Nevertheless, I persisted: ‘I want an even more beautiful woman,’ until I had essentially reached the system’s limit. Then, I asked AI to compare the last generated woman with the original and to determine which it found more attractive (Figure 3).

(Rania)

This interaction extended beyond simple technological experimentation and evolved into a critique of the very aesthetic and societal standards that shape perceptions of beauty. Through the ‘game’ of continually requesting ‘greater beauty’, Rania reached the limits of artificial creation and directly experienced the distortions that arise from an obsession with idealized aesthetics. Her own reflections made clear that the process had taken on a ludic character. It disrupted the assignment’s frame, not out of disregard, but through intense, immersive exploration. What started as a project on beauty became a performance of questioning: of societal norms, of the AI’s embedded values, and of her own complicity in the pursuit of the ideal. Yet, despite the depth of the experience, Rania felt uneasy about presenting it in class. She prepared two versions of her work: one that aligned with conventional expectations and theoretical analysis, and another that exposed the raw and paradoxical findings of her ‘game’ with the algorithm. Other students echoed this attitude of play. Several described the task as an “opportunity to play with the tool.” Although they framed this exploration as experimentation, it unintentionally became a form of critical engagement. In attempting to ‘play,’ they had unknowingly charted their own learning pathways, revealing both the affordances and constraints of AI systems. Their trajectories offered more than content. They became traces of cognitive negotiation, in which meaning emerged not from predetermined rubrics but from situated interaction and reflection.

Figure 3.

The playful experiment that emerged from Rania’s interaction with AI regarding beauty stereotypes. (a) The initial depiction of beauty; (b) the final depiction of beauty.

6.4. AI as a Safe Place for Risk, Error, and Learning

Students frequently described AI as a supportive space, a kind of emotionally neutral companion that fosters psychological safety and encourages intellectual risk-taking. One student insightfully noted:

AI can create a low-pressure environment where learners interact without the fear of being judged. Personalized learning platforms allow students to progress at their own pace, strengthening their self-confidence and reducing the anxiety of performing in front of peers. However, there is a risk of isolation if AI replaces human interaction, which is crucial for cultivating social skills.

(Leuteris)

This comment encapsulates a subtle yet powerful function of AI: its role as a non-judgmental interlocutor. In emotionally charged contexts, where fear of failure, peer comparison, or performance anxiety might inhibit learning, AI provides a space of emotional openness. Because it lacks the implicit gaze and social norms of human evaluation, it enables students to express themselves more freely, experiment with ideas, and engage in creative processes without fear of judgment. Through this lens, students construct AI not simply as a machine but as an emotional buffer: a space where error is permitted and risk is decoupled from shame. AI does not “understand” emotions in a human sense, but its neutrality functions as a protective shell. It does not interrupt, ridicule, or expose. It waits.

However, students acknowledged that while AI created a psychologically safe space, free from judgment and performance anxiety, it also led at times to a retreat from genuine human interaction. Leuteris addresses this tension directly, suggesting that AI’s soothing presence must not replace the developmental frictions of human dialog. Students also identified critical limitations in AI’s ability to offer true emotional support. A recurring concern was its lack of lived experience and empathy. While AI may reduce performance anxiety, it cannot replicate the relational depth that characterizes human interaction. As Spiros noted:

A mentor does not just create knowledge but also emotion. AI cannot replace human interaction, the tone of voice, or the touch. Discussing with an expert allows for a deeper analysis of a topic and offers insights enriched with personal experience and practical knowledge—something AI cannot replace.

(Spiros)

These reflections point to an important distinction: feeling safe is not only about the absence of judgment, but also about the presence of authentic connection. Students described mentorship not merely as knowledge transfer, but as an experience of being seen, supported, and challenged by someone who brings their own narrative, values, and sensitivity into the learning process. In this regard, while AI can offer a low-pressure environment, it still lacks the affective texture and interpersonal resonance that human educators provide. Based on students’ reflections, the question is not whether AI can support learning, but how educators can curate this support without allowing it to become a shelter from growth. Their comments point toward a pedagogy that positions AI as a starting point—a tool for building initial confidence—rather than an endpoint. What they call for is not dependency on artificial guidance, but a structure that ultimately reconnects learners with the social, collaborative, and dialogic dimensions of education.

A prevailing thread running through the students’ reflections is the value of a hybrid approach, one that intertwines human mentorship with AI support. As Marina expressed:

If I had the choice, I would start with guidance from an expert and then continue my exploration with artificial intelligence. The experience of discussion with an expert is richer, but AI worked collaboratively, helping me organize my thoughts.

(Marina)

Her account gestures toward a learning process that is not linear, but layered and relational, where human insight and technological responsiveness co-shape the learning trajectory. Rather than presenting human and artificial intelligence as opposing forces, the students’ narratives suggest a mutually constitutive relationship—one in which algorithmic precision supports, rather than supplants, lived experience and intuitive understanding.

An additional finding concerns the substantial support that AI can offer to students facing learning difficulties, such as dyslexia. These students may find in AI a “safe space” where they can practice without the anxiety of criticism that often accompanies learning in a traditional classroom. Semeli is a notable example. She used AI to create an audiovisual work that artistically captures the feelings and challenges she experiences as a dyslexic student (Figure 4). Both her choice of theme and her approach demonstrate how AI can enhance creativity and motivate students to explore their personal experiences, transforming them into material for learning and expression.

At the center of the scene, a solitary figure wandering in the darkness symbolizes the loneliness and internal struggle of those who are forced to forge their own path in a society that is not designed for them. On the horizon, skyscrapers in the shape of labyrinths highlight the presence of these challenges at every stage of life. The dark, dreamlike atmosphere intensifies the emotional impact, while the epic element adds a sense of adventure—emphasizing that these obstacles are not merely difficulties, but also opportunities.

(Semeli)

Semeli’s creative process exemplifies a cohesive integration of AI into her artistic practice, bringing together the human need for expressive communication and the structured, adaptive logic of AI. As described in her assignment, she began by engaging in extensive discussions with AI. She treated it as an inexhaustible reservoir of information and symbolism, which she experienced as free from human bias or criticism. This “research and ideas” phase enabled her to explore diverse symbols and metaphors that authentically captured her experiences. As her ideas evolved, she moved into a phase of experimentation and adaptation, gradually infusing her work with personal emotions and insights. Together, these processes illustrate how AI functions not only as a tool for technical execution but also as a collaborative partner, enriching creative expression through the dynamic interplay of human intuition and algorithmic capability. Building on this dynamic, Semeli strategically employed a suite of AI tools to give “flesh and blood” to her feelings and artistic expression. For the visual component, she used DALL·E¹ to generate evocative imagery that captured the abstract challenges of learning difficulties, refining the aesthetics through iterative feedback. Concurrently, she collaborated with ChatGPT² to explore and articulate creative ideas for a musical composition, drawing on its suggestions for approaches that resonated with her artistic intent. She then rendered these ideas as sound using SOUNDRAW³, which generated a musical piece that balanced emotional depth with rhythmic precision. Finally, by integrating the visual and auditory elements in Adobe After Effects⁴, she crafted a cohesive multimedia narrative.

Semeli’s approach shows how AI can extend beyond creative expression and act as a liberating force for individuals with dyslexia. AI can ease challenges that often impede creativity and foster dependency on others, and in doing so it can enable students with dyslexia to express themselves more freely. For instance, one student with dyslexia noted, “For the first time, I feel that I don’t have to ask my mother or others for help to correct a text,” underscoring the transformative potential of this technology.

7. Discussion

The empirical data directly address three intertwined research questions (see

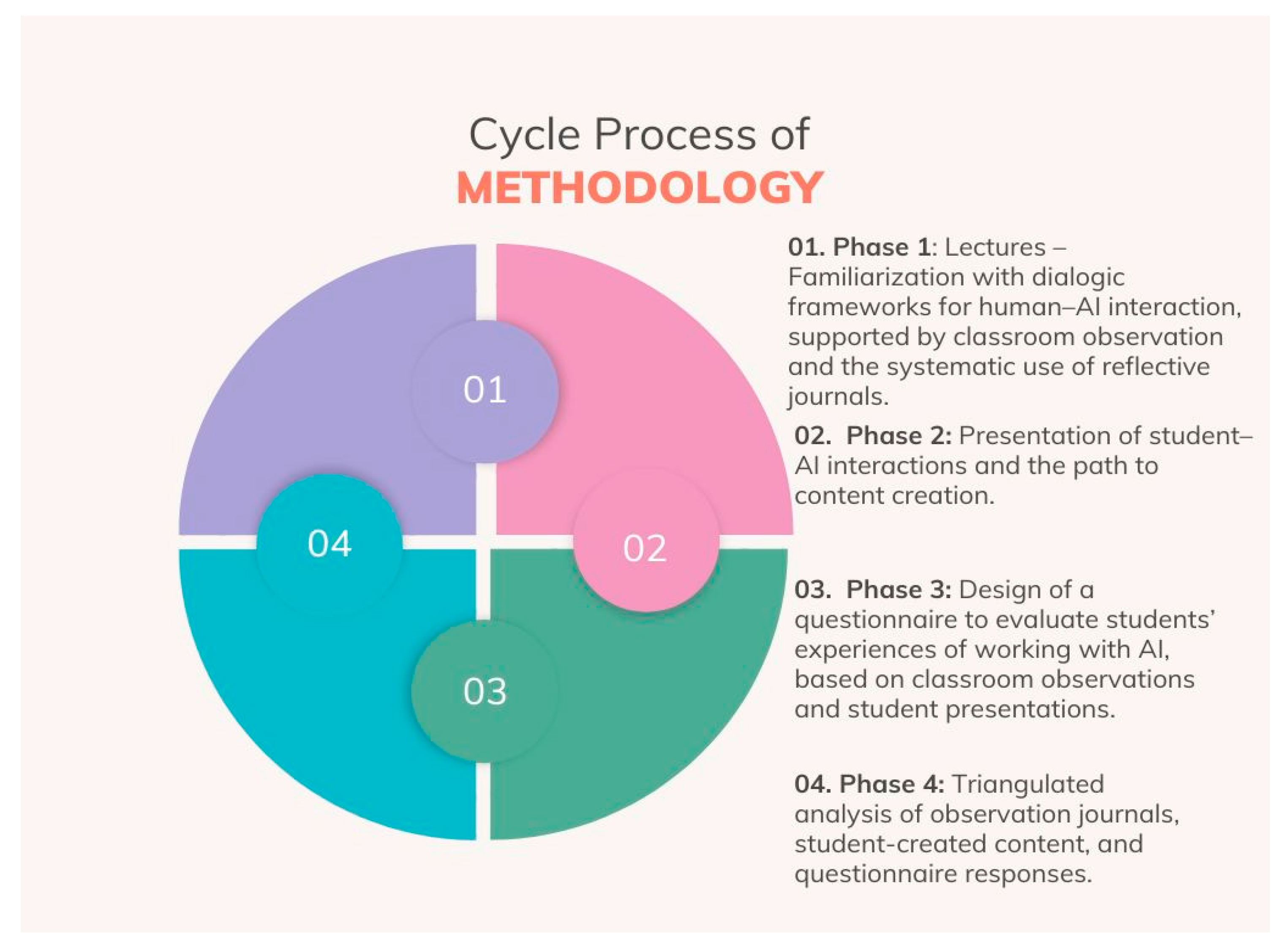

Table 3). First (RQ1), we explore how dialogic interaction with AI shapes students’ agency and sparks the emergence of co-agency throughout the learning process. The research findings directly address the research question by demonstrating how participants cultivated their own agency as they experimented with different methodologies to overcome the difficulties they encountered when the AI led them into semantic deadlocks. Το illustrate these dynamics, we present the interaction in the diagram “Two Pillars of Learning: Human–AI Dialogical Interaction.” In this visual schema, the left pillar represents the human learner and the right pillar the AI partner; between them unfold four successive stages—Question → Response/Suggestion → Reflection/Reformulation → Refined Output—which together weave a feedback-rich dialogical loop. With each rotation of this loop, students refine the methodology and wording of their questions, while the AI reframes and enriches the content, activating a relation of mutual influence. Through this continuous, self-reinforcing exchange, human and algorithmic agency are reshaped together, co- constructing a collaborative framework that embodies the essence of human–machine co -agency and fosters deeper critical engagement in student work. Secondly, in response to Research Question 2 (RQ2), we examine the role that dialogic structure plays in cultivating critical reflection and creative experimentation during human–AI interaction. This approach fosters an iterative mindset, placing learners in a state of continuous metacognitive vigilance. They continually evaluate their stance and the evolving logic of the algorithm, thereby elevating their work to a higher level of thought. Thirdly (RQ3), we investigate how students perceive and evaluate AI when it is integrated as a dialogic partner in their learning process. 
Our findings show that students experience AI as a hybrid partner that blends cognitive scaffolding with the psychological safety of judgment-free interaction. The model’s ability to offer emotional support and knowledge is acknowledged, but it is also stressed that its strengths are fully realized when combined with human capacities for empathy and the integration of diverse perspectives.

The sequential, multilayered methodological strategy we employed surfaced our findings and rigorously traced the dynamics of dialogic human–AI interaction. We captured the evolving ways students think and act in relation to AI across four interconnected phases: introducing dialogic frameworks (Phase 1), observing student-led presentations (Phase 2), co-creating a targeted questionnaire through ethnographic observation (Phase 3), and triangulating interview transcripts, reflective journals, and classroom artifacts (Phase 4). Each phase built directly on its predecessor, deepening analytical insight while keeping the study tightly aligned with the original research questions. This integrative design ultimately produced a theoretically grounded, reflexively interpreted account of the findings (see also Scheme 1 for a visual representation).

To summarize, the results of this study highlight a key tension in the educational integration of AI. Generative AI provides unparalleled opportunities for creative experimentation, but these opportunities are unlocked only when interaction is deliberately designed as a dialogic and co-constructive process. The findings indicate that the educational value of AI lies not in its technical capabilities per se, but in the relational and situated ways in which learners engage with it. From a posthumanist standpoint, agency is not a static property of isolated entities, but an emergent phenomenon arising through interaction (Nordström et al., 2023). In dialogic educational settings, this co-agency manifests through continuous intra-action between students and AI systems, in which both human thought and machine output are shaped through mutual responsiveness. This framework allows us to consider AI not merely as a neutral assistant, but as a semi-autonomous interlocutor whose suggestions, limitations, and generative capacities help shape the trajectory of learning (Skågeby, 2018; Uricchio & Cizek, 2023). Some students adopted a structured, dialogical approach: they posed follow-up questions, negotiated semantic ambiguities, and framed AI as a collaborative partner. These students exhibited greater conceptual clarity, metacognitive awareness, and critical engagement. Their forms of interaction gave rise to a dynamic learning space in which co-agency became visible and productive. Conversely, when students approached AI as a shortcut to quick answers, they often reported diminished cognitive depth. These observations echo the findings of Lee et al. (2025), who showed that uncritical reliance on AI-generated outputs correlates with a decline in critical thinking and reflective analysis.

However, the integration of AI into educational contexts is not without challenges and limitations. As Nikolas insightfully observed, students often use AI not to deepen understanding but to obtain ready-made information: “It provides ready-made information, often incorrect, but since it ‘gets you the grade, who cares, right?’” His words express a deep disillusionment with the grade-oriented logic of formal education. For Nikolas, the real failure lies not in AI itself, but in an institutional framework that prioritizes performance over meaning and results over reflection. Within this framework, AI becomes merely another shortcut, valued not for its pedagogical affordances but for its capacity to deliver immediate, measurable outcomes. Such a framework privileges speed over depth, correct answers over inquiry, and grades over understanding. Efficiency becomes the dominant virtue, and AI the perfect tool to serve it.

This tension reveals the need for intentional pedagogical framing of AI use—one that resists instrumentalism and instead nurtures processes of dialog, experimentation, and reflective meaning-making. Without such framing, the risk is not simply misuse of the tool, but the reinforcement of pre-existing educational logics that marginalize critical thinking and imagination. In this new reality, the role of the teacher remains invaluable and multifaceted. A hybrid teaching approach, combining human guidance with AI capabilities, can maximize the learning experience: the teacher defines pedagogical objectives, selects suitable AI applications, and acts as a “bridge” between the machine and the student. Through this mediation, the human-centered dimension of learning is maintained, as teachers encourage the development of critical thinking, emotional intelligence, and ethical perspectives on technology.

8. Conclusions

The study concludes that the effective and equitable implementation of AI in education requires a balanced and targeted integration of the technology. AI can function as a valuable tool and a collaborative partner in learning, provided that the promotion of autonomy is combined with collaborative learning and that curiosity and creativity are fostered. Preserving this delicate equilibrium between technological advancement and fundamental pedagogical values offers a promising outlook for a future of learning characterized by meaningfulness, inclusivity, and collaboration.

The integration of artificial intelligence (AI) in education demands not merely technical deployment, but the deliberate cultivation of pedagogical frameworks that prioritize meaningful interaction, critical reflection, and relational learning. The findings of this study emphasize that without a dialogically grounded learning framework, AI risks becoming a mere instrument of efficiency—a shortcut to content production—rather than a partner in thought. To avoid this instrumental drift, it is essential to embed AI within a dialogic paradigm from the outset. This involves training educators and students to approach AI not as an answer machine, but as an interlocutor capable of supporting conceptual exploration and reflective reasoning. Within such a framework, AI serves not to replace human cognition, but to amplify it—through co-agency, iterative dialog, and shared meaning-making. As students’ interactions showed, this transformation does not occur automatically: it must be scaffolded through tools, protocols, and pedagogical intentionality that foreground process over product.

Meaningful learning occurs when students engage in activities that are contextually rich, personally relevant, and socially mediated. AI should therefore be integrated into authentic, meaningful tasks: projects that challenge students to construct, reflect on, and reframe their understanding through active engagement. As the present research illustrates, when AI-supported interaction is embedded within such practices, it can stimulate not only cognitive development but also philosophical inquiry, identity reflection, and creative experimentation. Rania’s case in particular demonstrated how play, flow, and personal relevance merged to produce a learning experience that was emotionally resonant and intellectually generative. This invites a broader educational imperative: to design discovery-based learning environments. Existing research on flow and AI remains at an early stage and is often confined to gamified or lab-based settings. This study contributes to the field by situating AI within a longitudinal, real-world educational context, highlighting the importance of examining AI’s role in naturalistic, hybrid learning environments over time.

To fully understand and responsibly harness AI’s educational potential, more long-term, situated studies are urgently needed—studies that go beyond controlled experiments and explore how AI reshapes learning dynamics in the messy, social, emotionally charged environments of real classrooms. Such research must also attend to equity, student voice, and cultural variability, ensuring that AI is integrated in ways that are inclusive, context-sensitive, and pedagogically meaningful. In conclusion, a dialogical framework is a necessity for the ethical and effective integration of AI in education. Without it, AI risks reinforcing surface-level engagement and passive consumption. With it, AI becomes a co-thinker, a reflective partner, and a medium through which students construct knowledge with depth, relevance, and agency. Designing such environments requires intentionality, critical literacy, and long-term commitment—but the reward is a vision of AI not as a threat to education, but as an extension of its most humanizing possibilities.

Author Contributions

Conceptualization: R.K., K.K., A.P., P.A. and I.D.; Methodology: R.K., A.P., K.K., P.A. and I.D.; Validation: R.K., K.K., A.P., P.A. and I.D.; Formal Analysis: R.K., A.P., K.K. and I.D.; Investigation: R.K., P.A., A.P., K.K. and I.D.; Data Curation: R.K., K.K., A.P., P.A. and I.D.; Writing—Original Draft Preparation: R.K.; Writing—Review and Editing: R.K., K.K., A.P., P.A. and I.D.; Supervision: I.D. (AI methodology) and A.P. (pedagogical framework). All authors actively participated in the dialogic process of knowledge co-construction and approved the final version of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the use of anonymized student assignments and classroom presentations, which were originally created for educational purposes during the academic semester. The data used were non-identifiable and were collected in the context of regular university coursework, in line with ethical guidelines for educational research.

Informed Consent Statement

Informed consent was waived due to the use of anonymized secondary data derived from student assignments, which were originally created solely for educational purposes within the context of a university course. All data were analyzed in a non-identifiable form and in accordance with the ethical guidelines for educational research. Additionally, written informed consent was obtained from the students whose visual materials (e.g., drawings or images) are included and published in this paper.

Data Availability Statement

The data supporting the findings of this study are not publicly available due to ethical restrictions. Specifically, the data consist of student assignments and educational artifacts produced during the course, which are subject to privacy and academic confidentiality agreements. Sharing these materials would compromise the anonymity and intellectual ownership of the student participants.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Anastasiades, P., Kotsidis, K., Stratikopoulos, K., & Pananakakis, N. (2024). Human–Centered artificial intelligence in education. The critical role of the educational community and the necessity of building a holistic pedagogical framework for the use of HCAI in education sector. Open Education—The Journal for Open and Distance Education and Educational Technology, 20(1), 29–51.

- Berger, P. L., & Luckmann, T. (1966). The social construction of reality: A treatise in the sociology of knowledge. Doubleday. Available online: https://archive.org/details/socialconstructi0000berg (accessed on 26 June 2025).

- Braidotti, R. (2013). The posthuman. Polity Press. Available online: https://archive.org/details/posthuman0000brai/page/n7/mode/2up (accessed on 26 June 2025).

- Bruner, J. (1990). Acts of meaning. Harvard University Press.

- Chaparro-Banegas, N., Mas-Tur, A., & Roig-Tierno, N. (2024). Challenging critical thinking in education: New paradigms of artificial intelligence. Cogent Education, 11(1), 2437899.

- Csikszentmihalyi, M. (1990). Flow: The psychology of optimal experience. Harper & Row.

- Çela, E., Fonkam, M., & Potluri, R. M. (2024). Risks of AI-assisted learning on student critical thinking: A case study of Albania. International Journal of Risk and Contingency Management, 12(1), 1–9.

- Dezső-Dinnyés, R. (2019, June 2–4). Co-ability practices. Nordic Design Research Conference (NORDES) 2019: Who Cares? Espoo, Finland. Available online: https://www.nordes.org (accessed on 3 July 2025).

- Fairclough, N. (1992). Discourse and social change. Polity Press.

- Geertz, C. (1973). The interpretation of cultures: Selected essays. Basic Books.

- Giddens, A. (1984). The constitution of society: Outline of the theory of structuration. University of California Press.

- Hading, E. F., Rustan, D. R. H. P., & Ruing, F. H. (2024). EFL students’ perceptions on the integration of AI in fostering critical thinking skills. Global English Insights Journal, 2(1), 1–10.

- Hammersley, M., & Atkinson, P. (2019). Ethnography: Principles in practice (4th ed.). Routledge.

- Haraway, D. J. (1985). A manifesto for cyborgs: Science, technology, and socialist feminism in the 1980s. Socialist Review, 80, 65–108. Available online: https://archive.org/details/1985.-haraway-a-cyborg-manifesto.-science-technology-and-socialist-feminism (accessed on 26 June 2025).

- Hayles, N. K. (1999). How we became posthuman: Virtual bodies in cybernetics, literature, and informatics. University of Chicago Press.

- Huizinga, J. (1950). Homo ludens: A study of the play element in culture. Beacon Press. (Original work published 1938).

- Institute for Game Research. (n.d.). Institute of game research. Available online: https://gameresearch.gr/ (accessed on 25 May 2025).

- Juvina, I., O’Neill, K., Carson, J., Menke, P., Wong, C. H., McNett, H., & Holsinger, G. (2022, October 6–7). Human-AI coordination to induce flow in adaptive learning systems. Romanian Conference on Human-Computer Interaction, Craiova, Romania.

- Katsenou, R., Deliyiannis, I., & Honorato, D. (2024, November 11–13). Kindergarten teachers’ conversations with Froufrou: Human/AI interaction as a new anthropological field. 17th Annual International Conference on Education, Research, and Innovation (ICERI 2024) (pp. 7175–7183), Seville, Spain.

- Kuijer, L., & Giaccardi, E. (2018, April 21–26). Co-performance: Conceptualizing the role of artificial agency in the design of everyday life. CHI Conference on Human Factors in Computing Systems (CHI ’18), Montréal, QC, Canada.

- Latour, B. (2005). Reassembling the social: An introduction to actor-network-theory. Oxford University Press.

- Lee, H.-P., Sarkar, A., Tankelevitch, L., Drosos, I., Rintel, S., Banks, R., & Wilson, N. (2025, April 26–May 1). The impact of generative AI on critical thinking: Self-reported reductions in cognitive effort and confidence effects from a survey of knowledge workers. CHI Conference on Human Factors in Computing Systems (CHI ’25), Yokohama, Japan.

- Lin, Z., & Li, G. (2021). A post human perspective on early literacy: A literature review. Journal of Childhood Education & Society, 2(1), 69–86.

- Liu, R.-F. (2022). Hybrid ethnography: Access, positioning, and data assembly. Ethnography, 1–18.

- Mills, C. W. (1959). The sociological imagination. Oxford University Press.

- Muthmainnah, Seraj, P. M. I., & Oteir, I. (2022). Playing with AI to investigate human-computer interaction technology and improving critical thinking skills to pursue 21st-century age. Education Research International, 2022, 17.

- Nordström, P., Lundman, R., & Hautala, J. (2023). Evolving coagency between artists and AI in the spatial cocreative process of artmaking. Annals of the American Association of Geographers, 113(9), 2203–2218.

- Osmanoglu, B. (2023). Forms of alliances between humans and technology: The role of human agency to design and setting up artificial intelligence-based learning tools. In Training, education, and learning sciences. AHFE International.

- Panit, N. M. (2025). Can critical thinking and AI work together? Observations of science, mathematics, and language instructors. Environment and Social Psychology, 9(11), 1–16.

- Papadopoulou, A. (2018a). Art didactics and creative technologies: No borders to reform and transform education. In L. Daniela (Ed.), Didactics of smart pedagogy (pp. 159–178). Springer. ISBN 978-3-030-01551-0.

- Papadopoulou, A. (2018b, August 4–10). Visual education in the light of neurobiological functions and reviewing the question of sense perception and action of the mind, according to Aristotle. Proceedings of the XXIII World Congress of Philosophy (Vol. 58), Athens, Greece.

- Papadopoulou, A. (2019). Art, technology, education: Synergy of modes, means, tools of communication. Education Sciences, 9(3), 237.

- Ruiz-Rojas, L. I., Salvador-Ullauri, L., & Acosta-Vargas, P. (2024). Collaborative working and critical thinking: Adoption of generative artificial intelligence tools in higher education. Sustainability, 16(13), 5367.

- Sako, T. (2024). Enhancing critical thinking through AI-assisted collaborative task-based learning: A case study of prospective teachers in Japan. Journal of English Language Teaching and Linguistics, 9(2), 157–170.

- Saritepeci, M., & Yildiz Durak, H. (2024). Effectiveness of artificial intelligence integration in design-based learning on design thinking mindset, creative and reflective thinking skills: An experimental study. Education and Information Technologies, 29, 25175–25209.

- Schatzki, T. R. (2002). The site of the social: A philosophical account of the constitution of social life and change. Penn State University Press.

- Skågeby, J. (2018). “Well-behaved robots rarely make history”: Coactive technologies and partner relations. Design and Culture, 10(2), 187–207.

- Susanto, A., Andrianingsih, A., Sutawan, K., Marwintaria, V. A., & Astika, R. (2023). Transformation of learner learning: Improving reasoning skills through artificial intelligence (AI). Journal of Education, Religious, and Instructions, 1(2), 37–46.

- Uricchio, W., & Cizek, K. (2023). Co-creating with AI. Minnesota Review, 100, 118–131.

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes (M. Cole, V. John-Steiner, S. Scribner, & E. Souberman, Eds. & Trans.). Harvard University Press.