1. Introduction

Personalised Learning (PL) has gained increasing prominence as a student-centred pedagogical approach that seeks to adapt instruction and learning activities to the unique needs, preferences, and progress of individual learners (Nandigam et al., 2014; Prain et al., 2013). Rooted in cognitive and constructivist learning theories, PL aims to promote learner autonomy and deeper engagement, and improve academic outcomes by enabling flexible pacing, customisable content, and goal-oriented progression (Bernacki et al., 2021). In higher education, especially within Science, Technology, Engineering, and Mathematics (STEM) disciplines, the demand for adaptive and responsive teaching strategies has intensified due to increasing student diversity, growing class sizes, and the imperative to enhance student engagement and attainment. However, despite its conceptual appeal and pedagogical advantages, the practical integration of PL into structured university curricula, particularly in STEM disciplines, remains a significant challenge (Gao et al., 2020; Krahenbuhl, 2016). These challenges encompass but are not limited to the difficulties educators face in understanding each student’s needs, monitoring their progress (Krahenbuhl, 2016), and assessing interdisciplinary learning in STEM (Gao et al., 2020). Some students may also struggle with self-directed learning, identifying their own knowledge gaps, and maintaining motivation without structured support from educators (Mascolo, 2009). These barriers are especially pronounced in courses that involve complex conceptual frameworks and time-sensitive practical deliverables.

To address these systemic and pedagogical challenges, we developed the Guided Personalised Learning (GPL) model, a structured and scalable approach that embeds personalisation within the boundaries of existing university course structures. Unlike fully automated PL systems, GPL retains the educator’s role in facilitating and refining learning through data-informed decisions. The model integrates three interconnected components: a three-dimensional knowledge and skill grid that visualises learning objectives and conceptual relationships; interactive learning progress assessments (ILPAs) that promote diagnostic feedback and self-evaluation; and an adaptive learning resource pool that recommends targeted support materials based on learner profiles.

This study builds upon our previous conceptual work (Chen et al., 2024) by evaluating the GPL model’s implementation in two undergraduate engineering modules, Network Engineering and Software Engineering. Through an analysis of student attainment data collected across two academic years, we assess the model’s effectiveness in improving academic outcomes. In particular, we examine whether engagement with ILPA correlates with improved performance and higher proportions of students reaching advanced attainment levels. By systematically analysing both individual and cohort-level outcomes, this study provides empirical evidence of the GPL model’s potential to bridge the gap between personalised instruction and institutional pedagogical practice. The findings aim to inform broader efforts to scale learner-centred pedagogies within STEM education.

2. Literature Review

2.1. Foundations of PL in Higher Education

The concept of personalised learning (PL) has evolved significantly in higher education, driven by the need to accommodate increasingly diverse student profiles and to promote more inclusive and effective learning environments. Rooted in cognitive and constructivist learning theories, PL shifts the focus from uniform instructional delivery to learner-centred approaches that account for prior knowledge, interests, and pace. Central to this shift is the principle of learner agency, enabling students to take ownership of their learning through adaptive pathways, self-monitoring, and goal setting (Bernacki et al., 2021; Sampson & Karagiannidis, 2002).

PL has been associated with improved learning outcomes and increased learner satisfaction, particularly when implemented through customised learning resources and adaptive pathways (Fariani et al., 2023). As learning environments continue to evolve to meet the needs of diverse student populations, PL has gained recognition as an effective approach to enhancing engagement and academic performance. Nonetheless, its implementation remains inconsistent and complex, with significant pedagogical, technological, and institutional barriers still hindering its full potential (González-Patiño & Esteban-Guitart, 2023).

2.2. Technological Approaches to PL: Opportunities and Gaps

The rapid advancement of technology has been a catalyst for the development of personalised learning, but it also presents challenges of integration with traditional classroom structures and curriculum alignment. Technologies including Intelligent Tutoring Systems (ITSs), adaptive learning platforms, and data-driven dashboards are increasingly used to deliver tailored feedback, monitor progress, and recommend targeted resources (Apoki et al., 2022; Fariani et al., 2023). When properly aligned with curriculum objectives, these tools can enhance student engagement and knowledge retention, and they are particularly valuable in large, heterogeneous classrooms where individualised instructor attention is limited (Abbas et al., 2023). Despite their potential, many of these solutions remain technologically driven rather than pedagogically integrated (González-Patiño & Esteban-Guitart, 2023; Joseph & Uzondu, 2024b). They often operate in parallel to formal instruction, lack alignment with curriculum goals, and frequently bypass meaningful educator involvement. This risks reducing PL to a transactional process, disconnected from the deeper cognitive and metacognitive scaffolding that students require to engage with complex content and problem-solving tasks (González-Patiño & Esteban-Guitart, 2023).

2.3. PL and Student-Centred Pedagogy in Engineering Education

The aforementioned limitations are particularly pronounced in STEM disciplines, where course structures are often rigid, content-dense, and sequenced in ways that make flexibility difficult to implement without compromising coherence (Bathgate et al., 2019; Saldívar-Almorejo et al., 2024). In engineering education specifically, the development of critical thinking and problem-solving capabilities is not only a pedagogical aim but a professional imperative. These competencies cannot be cultivated through automation alone; they demand sustained interaction with disciplinary ways of thinking, frequent formative feedback, and opportunities to apply knowledge in unfamiliar or ill-structured contexts. In this regard, the educator’s role is indispensable, not merely as a content expert, but as a learning architect who scaffolds the development of students’ reasoning, troubleshooting, and design-thinking abilities (Joseph & Uzondu, 2024a; Keiler, 2018).

The shift toward student-centred pedagogy reinforces the need for active educator engagement. Student-centred approaches prioritise active, inquiry-led learning and reframe the educator as a facilitator who supports students in constructing meaning, collaborating with peers, and engaging critically with content (Confrey, 2000; Davidson et al., 2014). These approaches have shown promise in improving engagement and fostering higher-order thinking, and empirical studies highlight the benefits of collaborative and inquiry-based learning for enhancing critical thinking, creativity, and conceptual understanding (Guo et al., 2020; Preeti et al., 2013). Their adoption at scale nevertheless remains limited. In STEM contexts in particular, they often face resistance due to entrenched traditions of lecture-based delivery and summative assessment, while institutional constraints, workload pressures, and a lack of integrated pedagogical models have hindered widespread implementation.

Recent scholarship has increasingly advocated for hybrid frameworks that integrate the strengths of PL with sustained educator oversight. These models seek to preserve the flexibility and customisation offered by adaptive technologies while embedding them within the core structure of teaching and learning, ensuring alignment with intended learning outcomes and professional competencies (Hashim et al., 2022; Zhao, 2024). Despite growing interest in these hybrid personalised learning models, there remains a paucity of empirical studies that evaluate their impact in high-stakes, credit-bearing modules in engineering education, particularly those that integrate personalised learning within formal curricula while maintaining strong educator involvement. This study addresses this gap by implementing and assessing the Guided Personalised Learning (GPL) model, which offers a pragmatic and pedagogically coherent framework for embedding personalised learning into engineering courses without displacing educator agency or institutional coherence. The GPL model seeks to support both learner autonomy and instructor-led scaffolding, blending learner-centred and instructor-guided approaches to provide a balanced solution to the challenges of scalability and sustainability in PL. Crucially, it enables educators to guide students in diagnosing their own learning needs, making informed use of feedback, and engaging critically with course material. This study contributes to the growing body of evidence on how structured, data-informed, and educator-mediated PL models can be effectively deployed in engineering education to promote deep learning and improved academic outcomes.

3. The Guided Personalised Learning (GPL) Model

The GPL model was developed to operationalise PL and student-centred pedagogy in a scalable, curriculum-embedded manner within STEM higher education. While many existing personalised learning tools rely heavily on automation or algorithmic adaptation, the GPL model adopts a hybrid design. It combines adaptive learning technologies with educator guidance to ensure alignment with learning outcomes and institutional practices.

As shown in Figure 1, the GPL model comprises three integrated components:

A three-dimensional knowledge and skill grid,

Interactive Learning Progress Assessments (ILPA), and

An adaptive learning resource pool.

These components function as an interconnected system, enabling learners to assess their own progress, receive feedback, and access targeted support, while allowing educators to monitor trends and adjust instruction accordingly. These components are embedded into the university Moodle-based virtual learning environment, allowing for seamless integration and accessibility.

3.1. Three-Dimensional Knowledge and Skill Grid

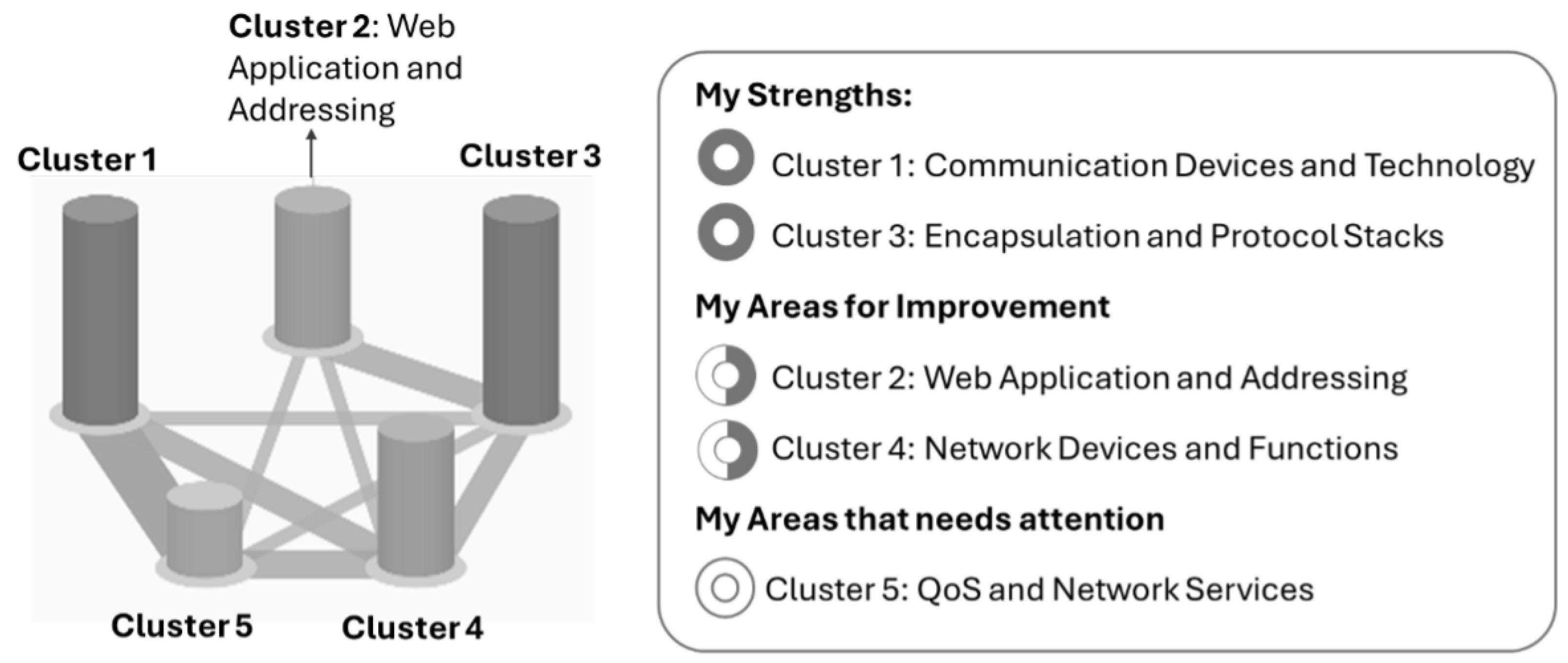

The knowledge and skill grid provides a visual representation of the conceptual structure of a module. Organised into thematic clusters, the grid maps course content against expected levels of understanding and inter-topic dependencies. Each cluster is represented as a cylinder, with its height indicating a learner’s demonstrated proficiency (based on ILPA results) and the connections between clusters indicating conceptual interrelationships.

Unlike traditional syllabi or flat lists of learning objectives, this three-dimensional model presents a dynamic, learner-facing view of academic progression. It functions both as a roadmap and a reflective tool, helping students identify their strengths and gaps, and guiding them toward intentional learning. For educators, aggregated cohort data enables the identification of common areas of difficulty, supporting targeted instructional responses.
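To make the grid more concrete, the following is a minimal sketch of how it might be represented as a data structure. It is not the authors' implementation: the `Cluster` class, the 0 to 1 proficiency scale, the 0.5 threshold, and the sample cluster names and scores are all assumptions introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """One thematic cluster of the grid (drawn as a cylinder)."""
    name: str
    proficiency: float = 0.0  # cylinder height; assumed 0.0-1.0 scale from ILPA results
    related: set[str] = field(default_factory=set)  # names of connected clusters


def build_grid(scores: dict[str, float], links: list[tuple[str, str]]) -> dict[str, Cluster]:
    """Build a grid from per-cluster scores and undirected inter-cluster links."""
    # Clamp scores so a cylinder height never leaves the assumed 0-1 range.
    grid = {name: Cluster(name, max(0.0, min(1.0, s))) for name, s in scores.items()}
    for a, b in links:
        grid[a].related.add(b)
        grid[b].related.add(a)
    return grid


def weakest_clusters(grid: dict[str, Cluster], threshold: float = 0.5) -> list[str]:
    """Return names of clusters below the threshold, lowest proficiency first."""
    low = [c for c in grid.values() if c.proficiency < threshold]
    return [c.name for c in sorted(low, key=lambda c: c.proficiency)]


# Hypothetical learner with three illustrative clusters.
grid = build_grid(
    {"topologies": 0.9, "protocol design": 0.4, "troubleshooting": 0.2},
    [("topologies", "protocol design"), ("protocol design", "troubleshooting")],
)
gaps = weakest_clusters(grid)
```

The same structure could serve both views described above: a single learner's grid for self-reflection, and an average over many learners' grids for the educator-facing cohort view.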

3.2. Interactive Learning Progress Assessment (ILPA)

ILPAs form the diagnostic and formative core of the GPL model. These assessments are designed not only to test knowledge, but also to guide learners in evaluating their understanding and learning needs. Embedded directly within the university’s virtual learning platform, ILPAs are tailored to the content and pedagogical objectives of each course.

The design of ILPAs is informed by constructivist and situated learning theories. Learners build knowledge through authentic tasks and real-time feedback, encouraging continuous development and engagement with course content.

3.3. Adaptive Learning Resource Pool

The third component of the GPL model is a curated pool of resources aligned to specific knowledge clusters and learner profiles. Informed by the ILPA outcomes, the system recommends targeted materials for reinforcement or enrichment. Resources include short videos, interactive exercises, guided problem sets, and readings, each mapped to the relevant areas of the knowledge and skill grid.

This approach enables just-in-time, need-based learning support. A student identified as underperforming in a particular domain of a module, such as quality of service (QoS) in network systems, would be guided to resources that specifically address those gaps. The goal is not merely to provide more content, but also to streamline access to the most relevant materials.

Educators maintain oversight of the resource pool, adapting its content based on cohort performance trends, student feedback, and course developments. This ensures that the materials remain pedagogically aligned and contextually appropriate.
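The recommendation step can be illustrated with a simple rule-based sketch. This is an assumption about one plausible mechanism, not a description of the system the authors built: the `recommend` function, the 0.6 threshold, the cap of two resources per cluster, and the sample pool entries are hypothetical.

```python
def recommend(scores, pool, threshold=0.6, per_cluster=2):
    """Map each cluster scoring below `threshold` to up to `per_cluster` resources."""
    plan = {}
    # Visit the weakest clusters first so the report leads with the largest gaps.
    for cluster, score in sorted(scores.items(), key=lambda kv: kv[1]):
        if score < threshold and cluster in pool:
            plan[cluster] = pool[cluster][:per_cluster]
    return plan


# Hypothetical educator-curated pool, keyed by knowledge cluster.
pool = {
    "qos": ["video: QoS basics", "exercise: queueing", "reading: traffic shaping"],
    "routing": ["video: routing tables"],
}
# Hypothetical per-cluster ILPA scores on a 0-1 scale.
scores = {"qos": 0.35, "routing": 0.8}
plan = recommend(scores, pool)
```

Because the pool is an ordinary mapping that educators curate, reordering or replacing entries changes what learners are pointed to without touching the selection logic.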

3.4. Integration and Function

Together, these components support a feedback-rich, data-informed learning environment. The knowledge and skill grid structures the curriculum; ILPA diagnoses learning needs and promotes reflection; and the resource pool supports targeted improvement. This cycle empowers learners to become active participants in their learning while allowing educators to scaffold support based on evidence. An example illustrating how the three components work together to provide learners with personalised learning guidance is presented in Section 6.

The GPL model is designed for modular integration into existing courses, requiring no departure from institutional systems or curriculum frameworks. Its emphasis on structured personalisation makes it particularly suitable for STEM contexts, where clarity of progression, timely feedback, and scalable design are essential.

4. GPL Implementation and Evaluation

This section outlines the implementation of the GPL model within two undergraduate engineering modules and describes the methodology used to evaluate its effectiveness.

4.1. Implementation Context

The GPL model was implemented and evaluated in two undergraduate modules within the School of Electronic Engineering and Computer Science. These included Network Engineering, a first-year module introducing the fundamental principles of data communication and the protocols that govern computer networks, and Software Engineering, a second-year module focusing on software development methodologies.

In both modules, the GPL model was fully integrated into the university Moodle-based virtual learning environment. The implementation process involved constructing a three-dimensional knowledge and skill grid aligned with the expected learning outcomes, the UK Quality Assurance Agency (QAA) Engineering Benchmark Statement (Quality Assurance Agency (QAA), 2023), and the Accreditation of Higher Education Programmes (AHEP) framework (Engineering Council, 2021) defined by the UK Engineering Council. Additionally, the process included designing ILPAs and generating personalised learning progress reports based on the ILPA results, incorporating individualised three-dimensional grids, and providing tailored recommendations linked to the most appropriate resources from the curated learning resource pool to support student development throughout the module.

4.2. ILPA Design

The design of ILPAs plays a pivotal role in the implementation of the GPL model. ILPAs enable formative assessments, personalised feedback, and resource recommendation, supporting learners’ self-regulated and continuous development.

In the Network Engineering module, the ILPA took the form of a scenario-based interactive assessment designed to contextualise foundational concepts of data communication and network protocols within an applied narrative. Learners engaged with a storyline involving two students working collaboratively on a networked project, making decisions and answering structured questions aligned with five key knowledge clusters derived from the module’s learning outcomes. These clusters covered areas such as network topologies, communication models, protocol design, transmission mechanisms, and troubleshooting techniques. The assessment interface was custom-developed using HyperText Markup Language (HTML) and Cascading Style Sheets (CSS) and incorporated a visual dashboard that tracked learner progress across a three-dimensional knowledge and skills grid.

Upon completion, students received a personalised learning report that mapped their performance against the expected competencies and provided recommendations for targeted resources within the module’s resource pool. The pedagogical underpinning of the ILPA design in this module draws on constructivist learning theory (Bada & Olusegun, 2015), which asserts that learners construct knowledge actively through engagement with real-world scenarios and reflective problem-solving. The narrative format and interactive tasks encouraged learners to internalise core networking principles by applying them to realistic, problem-based contexts. Furthermore, the ILPA design reflected principles of cognitive load theory (Plass et al., 2010), with the scenario structured to manage intrinsic complexity while enhancing germane cognitive processing. Through guided discovery and immediate feedback, the activity supported deeper conceptual understanding and long-term retention of networking fundamentals.

In the Software Engineering module, ILPAs were delivered through eight interactive assessment sets created using the H5P platform. These assessments were integrated directly into the university’s virtual learning platform and consisted of a variety of question types, including multiple-choice, fill-in-the-blank, drag-and-drop, and open-ended items. Multimedia elements such as instructional videos and illustrative diagrams were incorporated to support diverse learning styles and to enhance learner engagement. The questions were randomised and stratified by difficulty, ensuring varied levels of challenge tailored to individual learner progression. Upon completion, students received detailed, immediate feedback that guided them towards appropriate follow-up resources to reinforce their understanding. Educators had access to embedded analytics, which allowed for the monitoring of both individual performance and cohort-level trends, thereby informing responsive teaching interventions.
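The idea of drawing questions that are randomised and stratified by difficulty can be sketched as follows. This does not use or reproduce any H5P interface; the `draw_questions` function, the question-bank layout, and the difficulty labels are hypothetical and serve only to illustrate the sampling idea.

```python
import random
from collections import defaultdict


def draw_questions(bank, per_level, seed=None):
    """Pick a fixed number of questions per difficulty level, in random order."""
    rng = random.Random(seed)
    by_level = defaultdict(list)
    for question in bank:
        by_level[question["difficulty"]].append(question)

    selected = []
    for level, count in per_level.items():
        candidates = by_level.get(level, [])
        # Sample without replacement; take fewer if the stratum is too small.
        selected.extend(rng.sample(candidates, min(count, len(candidates))))
    rng.shuffle(selected)  # mix strata so difficulty order is not predictable
    return selected


# Hypothetical bank: 4 easy, 4 medium, 2 hard questions.
levels = ["easy"] * 4 + ["medium"] * 4 + ["hard"] * 2
bank = [{"id": i, "difficulty": d} for i, d in enumerate(levels)]
quiz = draw_questions(bank, {"easy": 2, "medium": 2, "hard": 1}, seed=7)
```

Each attempt with a different seed would yield a different set of questions while keeping the same mix of difficulty, which is what makes repeat attempts useful for reinforcement.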

The ILPA design in Software Engineering was informed by several complementary pedagogical theories. Constructivist learning theory (Bada & Olusegun, 2015) guided the inclusion of real-world software development scenarios and reflective questions, enabling students to build knowledge through experiential engagement. Situated learning theory (Goel et al., 2010) shaped the authenticity of assessment tasks by embedding them within contexts that simulated professional software engineering environments. This approach emphasised the acquisition of practical knowledge and decision-making skills within realistic constraints. Furthermore, the structure of progressive ILPA release followed the principles of formative assessment and feedback theory (Nicol & Macfarlane-Dick, 2006; Woods, 2015), allowing learners to identify knowledge gaps incrementally, act on feedback in real time, and consolidate their learning over the duration of the module.

In both modules, ILPA activities were optional and designed to complement, rather than replace, existing summative assessments. Students could engage with these tasks at their own pace and revisit them throughout the term. This flexible and student-centred approach supported autonomy, encouraged iterative learning, and cultivated metacognitive awareness, core principles of effective self-regulated learning.

4.3. Research Design and Participants

The evaluation employed a comparative research design incorporating both within-cohort and inter-cohort analyses to evaluate the impact of the GPL model. The within-cohort analysis compared the academic performance of students who engaged with ILPA tasks to those who did not, within the same academic year. The inter-cohort analysis examined overall module performance before and after the introduction of the GPL model to assess changes in academic outcomes over time.

In the 2023/24 academic year, 97 students were enrolled in the Network Engineering module, with 66 students (70% of the 94 who did not drop out) completing the ILPA activity. In the Software Engineering module, 647 students were enrolled, and 202 students (38.8%) engaged with the ILPA activities. Attainment data consisted of final module marks and relevant assessment scores. All data were anonymised prior to analysis, and the study was conducted in accordance with ethical guidelines, having received approval from the University Ethics Committee. Informed consent was obtained from all participants.

5. Methodology and Results

We applied both quantitative and qualitative analyses in this work. Quantitative analysis aimed to determine whether engagement with the GPL model, specifically participation in ILPA activities, was statistically associated with improved academic performance. Statistical tests included F-tests to examine differences in performance variances between participant and non-participant groups, and Welch’s t-tests to compare mean scores in cases where variances were unequal. In addition, grade distributions across academic years were analysed to identify any broader trends in student achievement following the implementation of GPL.

Qualitative data were collected through standard module evaluation questionnaires, providing insights into students’ perceptions of the GPL approach and its perceived impact on their learning experience.

5.1. Comparative Analysis of GPL Across Both Courses

To evaluate the effectiveness of GPL, we compared the module results of students who participated in ILPA with those who did not in both the Network Engineering and Software Engineering courses.

5.1.1. Network Engineering Course

The Network Engineering course is a first-year undergraduate module with 97 enrolled students. For this analysis, 94 students were included, excluding three who dropped out. Among them, 66 students (70%) attempted the ILPA.

We first conducted a two-sample F-test to determine whether the variances of the two groups (ILPA and non-ILPA participants) were equal. The results of the F-test are shown in Table 1.

The p-value from the F-Test, P(F ≤ f) = 0.0062, is significantly lower than the standard significance level 0.05, providing strong evidence to reject the null hypothesis of equal variances. Additionally, the calculated F-statistic (0.465) is less than the critical F-value (0.6032), confirming that the variances of the two groups are significantly different.
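As a rough illustration of the quantity being tested, the sketch below computes a variance-ratio F statistic and its degrees of freedom using only the Python standard library. The marks are invented for the example and are not the study's data; the function name `f_ratio` is likewise an assumption.

```python
from statistics import variance


def f_ratio(group_a, group_b):
    """Return (F, df_a, df_b), where F = sample variance of A / sample variance of B."""
    f_stat = variance(group_a) / variance(group_b)
    return f_stat, len(group_a) - 1, len(group_b) - 1


# Invented marks: the first group is tightly clustered, the second widely spread.
ilpa = [62, 65, 68, 70, 72, 75]
non_ilpa = [30, 42, 50, 55, 63, 78]
f_stat, df_a, df_b = f_ratio(ilpa, non_ilpa)
```

An F statistic below 1, as reported above, means the first group's marks are less spread out than the second's. Converting the statistic into a p-value requires the cumulative distribution function of the F distribution, which the standard library does not provide, so a statistics package would be needed for that step.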

Given the evidence of unequal variances from the F-test, we employed Welch’s t-test (unequal variances t-test) to compare the means of the ILPA participants and non-participants with Equation (1):

$$t = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}} \tag{1}$$

where $\bar{X}_1$ and $\bar{X}_2$ are the sample means of the ILPA and non-ILPA groups, respectively; $s_1^2$ and $s_2^2$ are the sample variances of the ILPA and non-ILPA groups, respectively; and $n_1$ and $n_2$ are the sample sizes of the ILPA and non-ILPA groups, respectively.

The p-value resulting from Welch’s t-test was well below 0.05. This indicates that the difference in the means of the ILPA and non-ILPA groups is highly significant.

The statistical analysis shows that the students who participated in the ILPA achieved significantly higher module results (mean = 67.92) than those who did not (mean = 50.98). The results confirm that the ILPA positively impacts student module performance and indicate a positive impact of GPL on student learning outcomes in the Network Engineering course.
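The Welch comparison can be sketched from summary statistics alone. In the example below, the two means and the group sizes (66 participants and 28 non-participants out of 94) come from the text, but the two variances are placeholders because the table values are not reproduced here; the degrees of freedom use the standard Welch–Satterthwaite approximation, which the text does not state explicitly.

```python
from math import sqrt


def welch_t(mean1, var1, n1, mean2, var2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    se1, se2 = var1 / n1, var2 / n2  # squared standard errors of each mean
    t = (mean1 - mean2) / sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Means and sizes are from the text; 150.0 and 320.0 are placeholder variances.
t, df = welch_t(67.92, 150.0, 66, 50.98, 320.0, 28)
```

With raw marks rather than summary statistics, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test and also returns the p-value.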

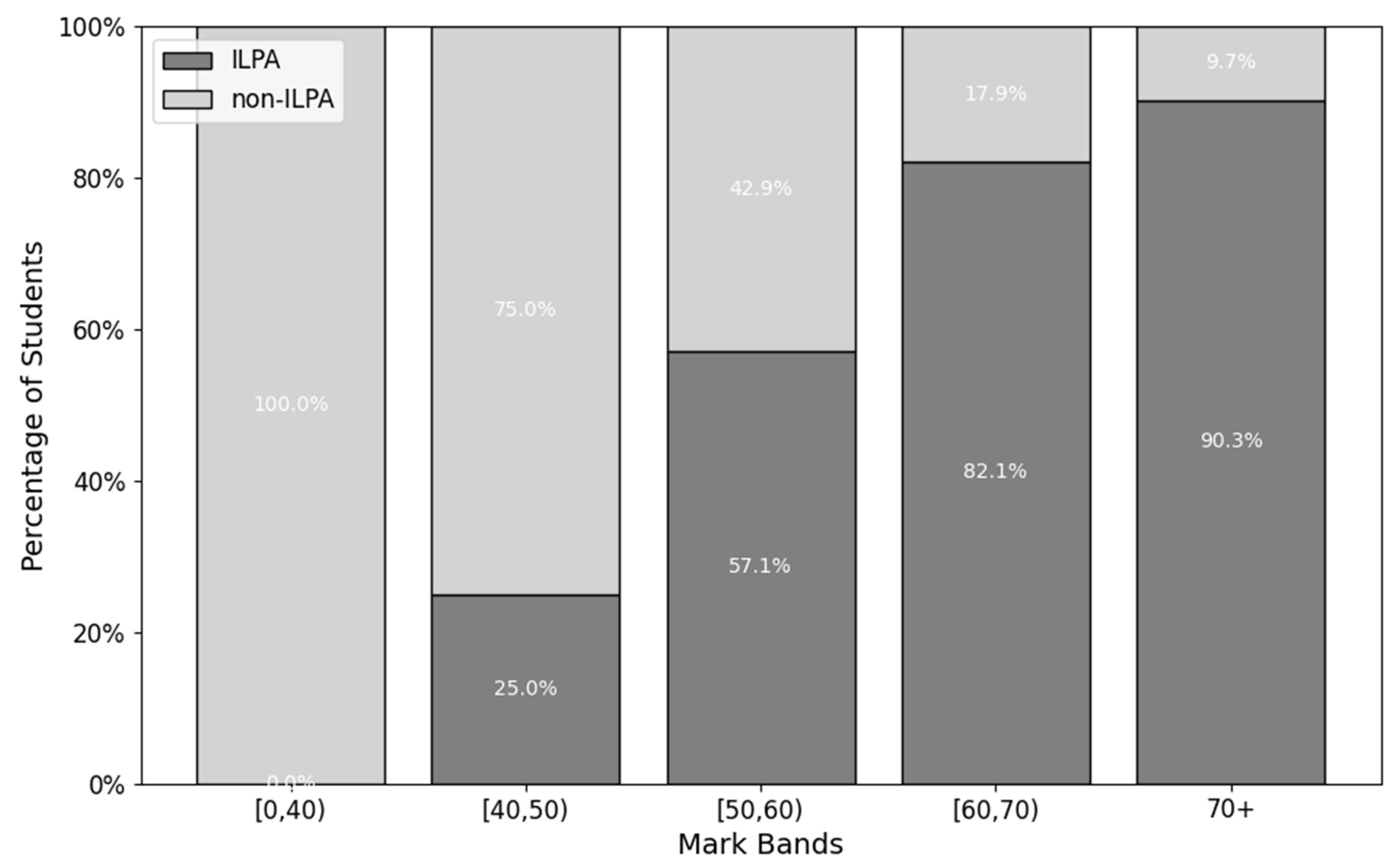

Figure 2 shows the percentage distribution of students across different mark bands based on their participation in ILPA. The dark grey bars represent students who participated, while the light grey bars represent those who did not participate. The data reveals a clear trend: ILPA participation is significantly higher in the upper mark bands. Notably, in the 70+ mark band, 90.3% of students are ILPA participants, compared to just 9.7% non-participants. In contrast, the lower mark bands (e.g., [0–40)) consist entirely of non-participants (100.0%), with no ILPA participants present.
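The within-band percentages reported for the figure can be computed as in the sketch below. The records are invented, and the band edges are assumptions: only [0–40), [50–60), [60–70), and 70+ are named in the text, so [40–50) is inferred.

```python
from collections import Counter

# (lower bound, upper bound, label); upper bound is exclusive.
BANDS = [
    (0, 40, "[0-40)"),
    (40, 50, "[40-50)"),
    (50, 60, "[50-60)"),
    (60, 70, "[60-70)"),
    (70, 101, "70+"),
]


def band_of(mark):
    """Return the label of the mark band containing `mark` (0-100)."""
    for low, high, label in BANDS:
        if low <= mark < high:
            return label
    raise ValueError(f"mark out of range: {mark}")


def participation_share(records):
    """Given (mark, participated) pairs, return {band: % of students who participated}."""
    totals, joined = Counter(), Counter()
    for mark, participated in records:
        band = band_of(mark)
        totals[band] += 1
        joined[band] += participated  # True counts as 1, False as 0
    return {band: round(100 * joined[band] / totals[band], 1) for band in totals}


# Invented records for illustration only.
records = [(35, False), (38, False), (55, True), (58, False),
           (72, True), (75, True), (81, True), (90, False)]
shares = participation_share(records)
```

Note that each percentage is the share of participants within a band, so the participant and non-participant figures for any one band sum to 100%.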

5.1.2. Software Engineering Course

The Software Engineering course is a second-year undergraduate module focused on software development methodologies. A total of 647 students were included in the analysis. As with the Network Engineering course, we compared the module results of students who participated in ILPA (202, 38.8%) with those of students who did not.

Table 2 shows the results of the F-Test.

The p-value from the F-Test, P(F≤ f) = 1.3035 , is significantly lower than the standard significance level 0.05, providing strong evidence to reject the null hypothesis of equal variances. Additionally, the calculated F-statistic (0.5904) is less than the critical F-value (0.8168), confirming that the variances of the two groups are significantly different.

The p-value resulting from Welch’s t-test was P = 2.5823 × 10⁻¹⁷, which is significantly less than 0.05. This indicates that the difference in the means of the ILPA and non-ILPA groups is highly significant. Similar to the Network Engineering course, this indicates that the GPL model has a positive impact on the Software Engineering course as well.

Figure 3 shows the percentage distribution of students across different mark bands based on their participation in ILPA. The data demonstrate that the proportion of ILPA participants increases significantly in the higher grade bands. Specifically, in the 70+ mark band, 47.9% of students are ILPA participants compared to 52.1% who are non-participants. Conversely, in the lowest mark band ([0–40)), only 5.0% of students are ILPA participants, compared to 95.0% who are non-participants.

5.2. Comparison of Student Performance Across Academic Years

To further evaluate the impact of GPL and student engagement with ILPA, we compared the course results from the academic year 2023/24 (when ILPA was introduced) with the previous academic year 2022/23 (when ILPA was not available) for both courses, using an F-test for variance comparison and a t-test for mean comparison.

Table 3 shows the results of the F-Test for the Network Engineering course.

Since the p-value from the F-test P(F ≤ f) = 0.2938 > 0.05, we fail to reject the null hypothesis, indicating no significant difference in variance between the two years. This suggests that the spread of student scores remains consistent across both cohorts, allowing us to use a t-test assuming equal variances.

The t-test p-value (0.0081) is below 0.05, confirming a statistically significant increase in student performance in 2023/24 compared to 2022/23 for the Network Engineering course.
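For reference, the equal-variance t-test used here relies on a pooled variance estimate. The standard textbook form is given below; the notation is an assumption, with subscripts 1 and 2 denoting the 2023/24 and 2022/23 cohorts, $\bar{X}$ the sample mean, $s^2$ the sample variance, and $n$ the cohort size.

```latex
t = \frac{\bar{X}_1 - \bar{X}_2}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}},
\qquad
s_p^2 = \frac{(n_1 - 1)\, s_1^2 + (n_2 - 1)\, s_2^2}{n_1 + n_2 - 2}
```

The statistic is compared against a t distribution with $n_1 + n_2 - 2$ degrees of freedom, which is appropriate only because the preceding F-test gave no evidence of unequal variances.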

Table 4 shows the results of the F-Test for the Software Engineering course.

The p-value of the F-test is smaller than the standard significance level of 0.05, and the F-statistic (0.8577) is less than the critical F-value (0.8784). We therefore reject the null hypothesis of equal variances and apply Welch’s t-test to compare the means of the two academic years. The resulting p-value is far smaller than 0.05, providing strong evidence to reject the null hypothesis and confirming a significant difference between the mean module results of the two academic years for the Software Engineering course. These findings, again, underline the effectiveness of the GPL model as a pedagogical approach to enhancing student attainment.

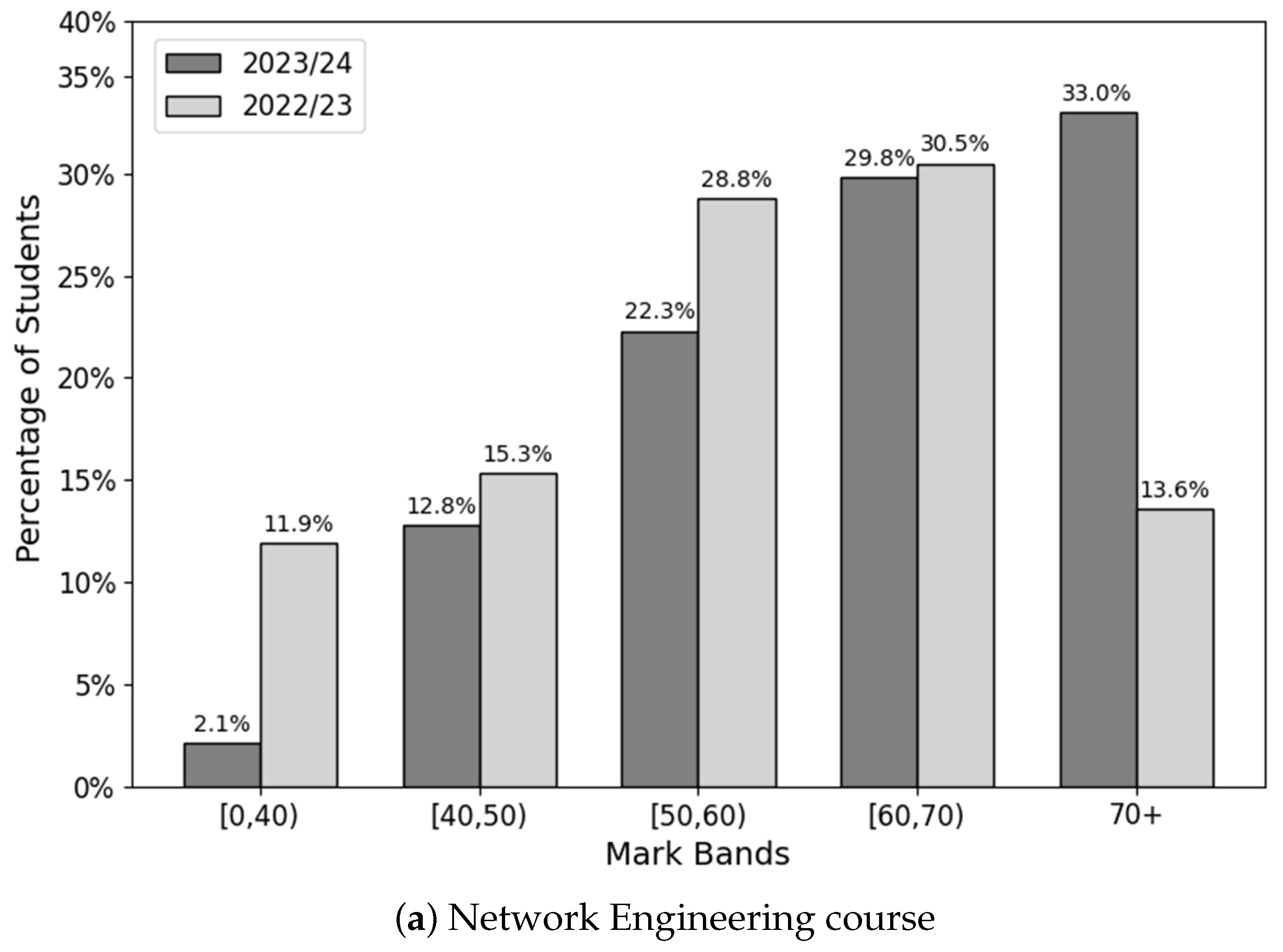

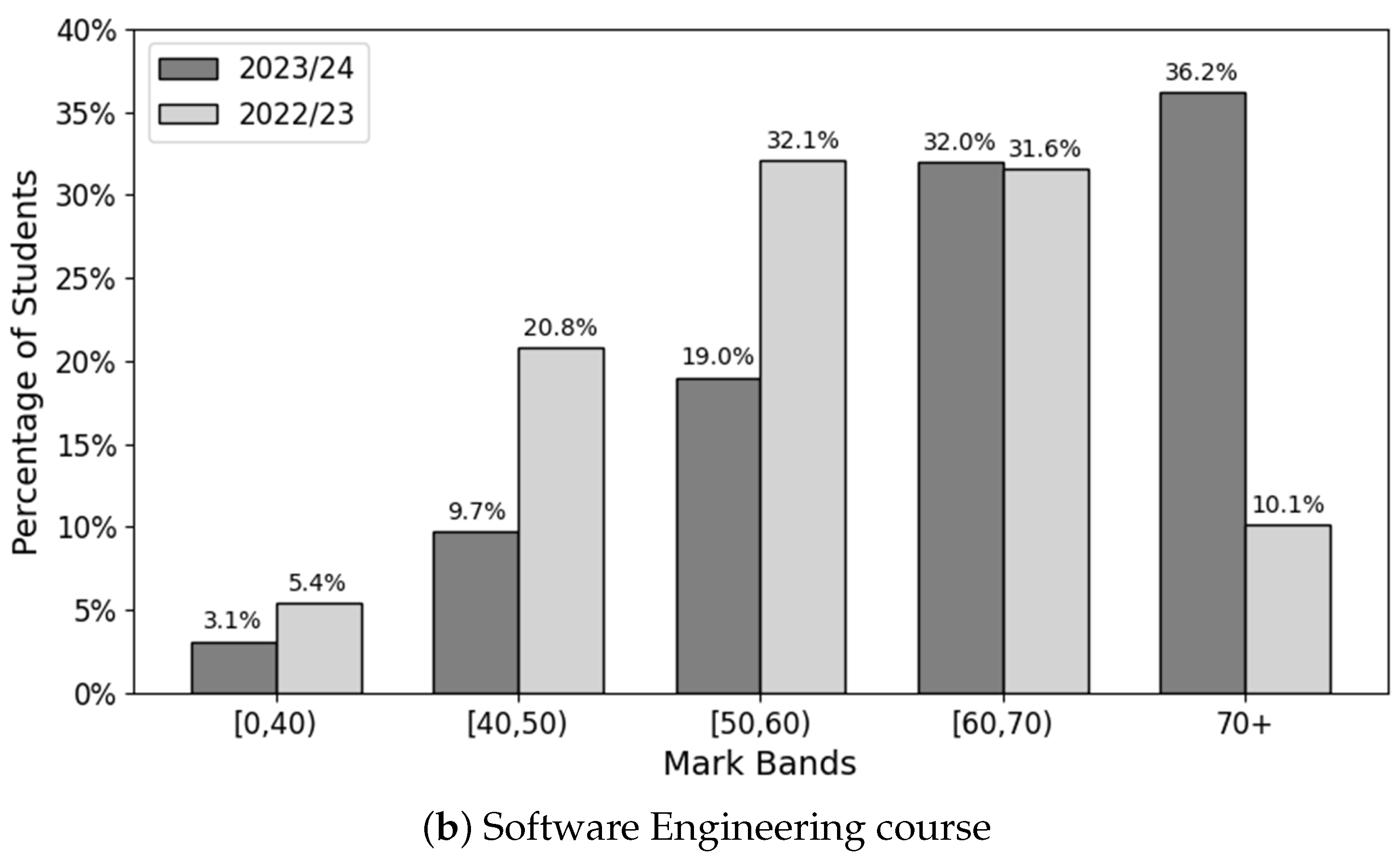

Figure 4 compares the percentage distribution of students across mark bands between the academic years 2022/23 and 2023/24. The results indicate a significant improvement in student performance for the academic year 2023/24 for both courses.

For the Network Engineering course, the proportion of students in the lowest mark band [0–40) decreased significantly from 11.9% in 2022/23 to 2.1% in 2023/24. Similarly, in the Software Engineering course, this proportion declined from 5.4% to 3.1%, indicating fewer students in the failing category.

Additionally, both courses saw a notable increase in the percentage of students achieving marks in the highest band (70+), rising from 13.6% to 33.0% in Network Engineering and from 10.1% to 36.2% in Software Engineering.

The distribution in mid-range bands [50–60) and [60–70) remained relatively stable between the two years, with a slight increase in 2023/24. These results suggest that the implementation of GPL and students’ engagement with ILPA had a positive impact on student attainment, leading to a greater proportion of students achieving higher grades in 2023/24 compared to 2022/23.

5.3. Qualitative Data from Students

To supplement the quantitative findings, qualitative data were collected through end-of-module evaluation questionnaires, which included open-ended questions aimed at capturing student perceptions of the GPL model and their experiences with ILPAs. A thematic analysis of the responses revealed several recurring themes, offering valuable insight into the student experience and the perceived impact of GPL on learning.

A prominent theme was enhanced clarity and direction in learning. Many students noted that the ILPA activities and associated feedback helped them better understand the expectations of the module and identify specific areas requiring further development. Several comments highlighted the usefulness of the personalised progress reports in breaking down complex subject matter into manageable components and offering targeted learning pathways.

Students also emphasised the value of immediate, formative feedback. The real-time responses provided after each ILPA task were seen as instrumental in correcting misconceptions early and reinforcing understanding. This aligns with established principles of self-regulated learning and underscores the importance of timely feedback in promoting student confidence and competence. As one respondent stated, “I appreciated being able to get feedback straight away, it helped me fix mistakes before moving on to new material.”

Another recurring theme was increased engagement and motivation. Students described the interactive format and scenario-based design of the ILPAs as more engaging than traditional assessments. This was particularly evident in the Network Engineering module, where the narrative-driven approach appeared to resonate strongly with learners. One student wrote, “The scenario made it feel less like a test and more like solving a real problem. It was actually enjoyable.”

Some students also reported a heightened sense of personalisation and support, noting that the GPL model made them feel that their individual learning needs were recognised. This perception of tailored support appeared to strengthen student satisfaction and engagement, particularly among those who previously struggled with large-cohort teaching formats.

Students identified several features of the ILPAs as particularly helpful. The most valued aspect was the provision of immediate results, which gave students quick feedback on their performance. The variety of question types also contributed to a more engaging and comprehensive learning experience. Additionally, the randomised questions and the option to repeat assessments were seen as valuable for reinforcing knowledge and maintaining interest. While the scoring feature was mentioned less frequently, it nonetheless played a role in supporting self-monitoring and progress tracking.

In terms of perceived benefits, students most often reported that the ILPAs helped them deepen their understanding of the subject matter. Many also noted that the ILPAs supported the development of connections between concepts, suggesting that they facilitated more integrated learning. Increased motivation and engagement were frequently mentioned, along with the convenience and flexibility of the format. The availability of immediate feedback further enhanced the learning experience by allowing students to promptly identify areas for improvement.

However, a small subset of students expressed challenges with the optional nature of the ILPAs. A few participants noted that they might have benefited more from the activities had they been more explicitly integrated into the summative assessment structure. Others mentioned that balancing ILPAs with other coursework deadlines was difficult, suggesting the need for clearer guidance on time management and the role of ILPAs within the broader learning journey.

Overall, the qualitative feedback underscores the pedagogical potential of GPL in enhancing learner autonomy, engagement, and satisfaction. These insights affirm the model’s capacity to foster not only improved academic outcomes, as shown in the quantitative analysis, but also more meaningful and personally resonant learning experiences.

6. Discussion

This study provides strong empirical evidence that the GPL model enhances academic outcomes in undergraduate engineering education. In particular, students who engaged with Interactive Learning Progress Assessments (ILPAs), the model’s central formative component, achieved significantly higher scores and were more likely to attain top-grade classifications. These within-cohort gains were complemented by inter-cohort improvements, with modules implementing GPL exhibiting overall rises in attainment and reduced failure rates. Together, these findings affirm the pedagogical value of structured, data-informed personalisation in STEM learning environments.

The effectiveness of the GPL model lies in its ability to address a core challenge in higher education: how to offer timely, tailored learning support at scale. By embedding diagnostic assessments within existing course frameworks and aligning them with adaptive resources and visualised progress tracking, GPL fosters a learning ecosystem in which feedback is immediate, relevant, and actionable. ILPAs encouraged metacognitive engagement by helping learners identify knowledge gaps, reflect on their development, and access curated resources in response to diagnostic results. These mechanisms support self-regulated learning, which is a critical factor for academic success.

Notably, the model’s benefits extended beyond self-selecting, highly motivated learners. The observed improvement in overall cohort performance suggests that GPL can raise outcomes for a broad spectrum of students, including those who might struggle with abstract or cumulative content typical of STEM disciplines. By integrating personalisation into the course infrastructure rather than treating it as an ancillary enrichment activity, the model promoted inclusivity and equity. This systemic embedding ensured that personalised support was not dependent on individual proactivity but available to all learners as a core part of the curriculum.

Another key strength of GPL is its hybrid design, which balances digital automation with educator expertise. While data analytics and progress dashboards enhance scalability and responsiveness, human judgment remains central to the design of ILPAs, curation of learning materials, and interpretation of student data. This approach mitigates the risks often associated with purely algorithmic personalised systems, such as reduced transparency, misalignment with pedagogical intent, and marginalisation of educator agency. Instead, educators used learner insights not only to guide individuals but also to inform broader instructional strategies and curriculum refinement.

The use of a three-dimensional knowledge and skill grid further contributed to the model’s impact by making learning progression visible and actionable. Figure 5 gives an example of a personalised learning progress report for a student on the Network Engineering course. The report shows the learner’s knowledge and skill grid based on the results of the ILPA, highlighting strengths and pinpointing specific areas for improvement. As described in Section 3, within the three-dimensional grid, each cylinder symbolises a cluster of knowledge and skills, with the height reflecting the depth of the learner’s understanding, determined by their proficiency in answering related questions. The thickness of the links between cylinders/clusters indicates the correlation between knowledge and skills among clusters. This visual progress report serves as a valuable tool for the learner to build their personalised learning plan. In this specific example, the learner is advised to focus on or revisit course content related to clusters 2, 4, and 5, especially cluster 5 on QoS and Network Services. The student can then access learning materials or activities for those clusters in the adaptive learning resource pool embedded in the virtual learning environment.
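The mapping from ILPA results to cylinder heights and study recommendations can be illustrated with a minimal Python sketch. It is not the authors’ implementation: it assumes that a cluster’s height is simply the fraction of its related questions answered correctly, and that a cluster is flagged for revision when that fraction falls below a fixed cut-off. The function names, the 0.6 threshold, and the sample responses are all hypothetical, with the responses chosen only to mirror the example above.

```python
from collections import defaultdict


def cluster_proficiency(responses):
    """Return {cluster_id: fraction of that cluster's questions answered correctly}.

    `responses` is an iterable of (cluster_id, is_correct) pairs, one per
    ILPA question. Using the plain fraction correct as the cylinder height
    is an assumption made for this sketch.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for cluster_id, is_correct in responses:
        totals[cluster_id] += 1
        if is_correct:
            correct[cluster_id] += 1
    return {c: correct[c] / totals[c] for c in totals}


def clusters_to_revisit(proficiency, threshold=0.6):
    """List clusters whose proficiency is below `threshold`, weakest first.

    The threshold value is hypothetical; ties are broken by cluster id.
    """
    weak = [c for c, p in proficiency.items() if p < threshold]
    return sorted(weak, key=lambda c: (proficiency[c], c))


# Hypothetical ILPA responses for one learner: (cluster id, answered correctly?)
responses = [
    (1, True), (1, True),
    (2, True), (2, False),
    (3, True), (3, True),
    (4, False), (4, True),
    (5, False), (5, False),
]

heights = cluster_proficiency(responses)
revisit = clusters_to_revisit(heights)
print(heights)
print(revisit)
```

With this invented data, clusters 2, 4, and 5 fall below the cut-off and cluster 5 is ranked weakest, so the returned list could be used to look up materials for those clusters in a resource pool. Link thickness between clusters is not modelled here, since the text does not specify how that correlation is computed.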

In fields such as engineering, where learning is both conceptual and cumulative, such transparency supports both student agency and educator planning. The visualisation clarifies expectations, promotes self-monitoring, and helps students navigate complex content in a structured way.

Nevertheless, several limitations must be acknowledged. ILPA participation was voluntary, introducing potential self-selection bias. Although the inter-cohort comparison partially offsets this concern, future studies should explore controlled or mandatory implementation scenarios to strengthen causal inferences. Additionally, while the present analysis focused on attainment data supplemented by end-of-module student questionnaires, richer insights would be gained from more in-depth qualitative feedback from both students and staff to explore experiences, attitudes, and behavioural changes resulting from engagement with GPL.

The successful implementation of GPL across two modules with distinct content, pedagogies, and learner profiles underscores its adaptability and potential for broader application. However, such expansion requires thoughtful curriculum alignment, staff development, and institutional support. Embedding diagnostic assessment and personalised learning into the core fabric of teaching demands collaboration, resources, and a commitment to continuous improvement.

7. Conclusions and Implications

This study assessed the GPL model’s impact in two undergraduate engineering modules, showing its ability to enhance academic performance and support personalised learning through interactive assessments, adaptive feedback, and progress visualisation within existing systems. Participation in GPL, especially via ILPAs, was linked to higher academic attainment and improved cohort performance, demonstrating its potential to support diverse learners. The model’s success relied on existing infrastructure, making it scalable, sustainable, and applicable across STEM disciplines and institutions.

7.1. Implications for Practice

For educators and curriculum designers, GPL offers a practical framework for embedding personalisation into core teaching and learning processes. Its modular architecture allows for incremental adoption, while its emphasis on formative assessment ensures feedback remains timely and targeted. Staff development should prioritise training in diagnostic assessment design, resource curation, and data-informed pedagogy.

For institutions, the study demonstrates how existing digital ecosystems, such as virtual learning environments and analytics dashboards, can be mobilised to support student-centred education. Strategic investment should focus on enabling interdisciplinary collaboration, technical integration, and continuous feedback mechanisms. Policy frameworks that promote co-creation with students and iterative design processes will further enhance institutional readiness for scalable innovation.

7.2. Directions for Future Research

Future studies should investigate the application of GPL in other disciplinary domains and at different academic levels. Longitudinal research could explore the sustained impact of GPL on progression, retention, and academic identity formation. Additionally, the integration of AI-driven analytics with human-centred design represents a promising area for future inquiry, offering new possibilities for precision personalisation while safeguarding pedagogical transparency and educator agency.

Author Contributions

Conceptualization, Y.C. and K.K.C.; Methodology, Y.C., L.M. and K.K.C.; Software, L.M. and P.P.; Validation, L.M. and P.P.; Formal analysis, Y.C., L.M. and P.P.; Investigation, Y.C. and L.M.; Data curation, Y.C., L.M. and P.P.; Writing–original draft, Y.C., L.M. and P.P.; Writing–review & editing, Y.C., L.M., P.P. and K.K.C.; Visualization, P.P.; Supervision, Y.C.; Project administration, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Queen Mary University of London Research Ethics Committee (reference QMRECQMERC20.565.DSEECS25.012) on 27 February 2025.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available only on request from the corresponding author, in order to protect data privacy and restrict unauthorised use.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| GPL | Guided Personalised Learning |
| PL | Personalised Learning |
| STEM | Science, Technology, Engineering, and Mathematics |
| ILPA | Interactive Learning Progress Assessment |
| HTML | HyperText Markup Language |
| CSS | Cascading Style Sheets |

References

- Abbas, N., Ali, I., Manzoor, R., Hussain, T., & Hussaini, M. (2023). Role of artificial intelligence tools in enhancing students’ educational performance at higher levels. Journal of Artificial Intelligence, Machine Learning and Neural Network (JAIMLNN), 3(5), 36–49.

- Apoki, U. C., Hussein, A. M. A., Al-Chalabi, H. K. M., Badica, C., & Mocanu, M. L. (2022). The role of pedagogical agents in personalised adaptive learning: A review. Sustainability, 14(11), 6442.

- Bada, S. O., & Olusegun, S. (2015). Constructivism learning theory: A paradigm for teaching and learning. Journal of Research & Method in Education, 5(6), 66–70.

- Bathgate, M. E., Aragón, O. R., Cavanagh, A. J., Frederick, J., & Graham, M. J. (2019). Supports: A key factor in faculty implementation of evidence-based teaching. CBE—Life Sciences Education, 18(2), ar22.

- Bernacki, M. L., Greene, M. J., & Lobczowski, N. G. (2021). A systematic review of research on personalized learning: Personalized by whom, to what, how, and for what purpose(s)? Educational Psychology Review, 33(4), 1675–1715.

- Chen, Y., Chai, K. K., Ma, L., Liu, C., & Zhang, T. (2024, May 8–11). Empowering university students with a guided personalised learning model. 2024 IEEE Global Engineering Education Conference (EDUCON) (pp. 1–4), Kos Island, Greece.

- Confrey, J. (2000). Leveraging constructivism to apply to systemic reform. Nordic Studies in Mathematics Education, 8(3), 7–30.

- Davidson, N., Major, C. H., & Michaelsen, L. K. (2014). Small-group learning in higher education-cooperative, collaborative, problem-based, and team-based learning: An introduction by the guest editors. Journal on Excellence in College Teaching, 25(3&4), 1–6.

- Engineering Council. (2021). Accreditation of higher education programmes (AHEP) framework. Available online: https://www.engc.org.uk/standards-guidance/standards/accreditation-of-higher-education-programmes-ahep/fourth-edition-implemented-by-31-december-2021/ (accessed on 19 May 2025).

- Fariani, R. I., Junus, K., & Santoso, H. B. (2023). A systematic literature review on personalised learning in the higher education context. Technology, Knowledge and Learning, 28(2), 449–476.

- Gao, X., Li, P., Shen, J., & Sun, H. (2020). Reviewing assessment of student learning in interdisciplinary STEM education. International Journal of STEM Education, 7, 1–14.

- Goel, L., Johnson, N., Junglas, I., & Ives, B. (2010). Situated learning: Conceptualization and measurement. Decision Sciences Journal of Innovative Education, 8(1), 215–240.

- González-Patiño, J., & Esteban-Guitart, M. (2023). Changing to personalized education at the university: Study case of a connected learning experience. Preprints.

- Guo, P., Saab, N., Post, L. S., & Admiraal, W. (2020). A review of project-based learning in higher education: Student outcomes and measures. International Journal of Educational Research, 102, 101586.

- Hashim, S., Omar, M. K., Ab Jalil, H., & Sharef, N. M. (2022). Trends on technologies and artificial intelligence in education for personalized learning: Systematic literature. Journal of Academic Research in Progressive Education and Development, 12(1), 884–903.

- Joseph, O. B., & Uzondu, N. C. (2024a). Curriculums development for interdisciplinary STEM education: A review of models and approaches. International Journal of Applied Research in Social Sciences, 6(8), 1575–1592.

- Joseph, O. B., & Uzondu, N. C. (2024b). Professional development for STEM Educators: Enhancing teaching effectiveness through continuous learning. International Journal of Applied Research in Social Sciences, 6(8), 1557–1574.

- Keiler, L. S. (2018). Teachers’ roles and identities in student-centered classrooms. International Journal of STEM Education, 5, 1–20.

- Krahenbuhl, K. S. (2016). Student-centered education and constructivism: Challenges, concerns, and clarity for teachers. The Clearing House: A Journal of Educational Strategies, Issues and Ideas, 89(3), 97–105.

- Mascolo, M. F. (2009). Beyond student-centered and teacher-centered pedagogy: Teaching and learning as guided participation. Pedagogy and the Human Sciences, 1(1), 3–27.

- Nandigam, D., Tirumala, S. S., & Baghaei, N. (2014, December 10–12). Personalized learning: Current status and potential. 2014 IEEE Conference on e-Learning, e-Management and e-Services (IC3e) (pp. 111–116), Hawthorne, VIC, Australia.

- Nicol, D. J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

- Plass, J. L., Moreno, R., & Brünken, R. (2010). Cognitive load theory. Cambridge University Press.

- Prain, V., Cox, P., Deed, C., Dorman, J., Edwards, D., Farrelly, C., Keeffe, M., Lovejoy, V., Mow, L., Sellings, P., Waldrip, B., & Yager, Z. (2013). Personalised learning: Lessons to be learnt. British Educational Research Journal, 39(4), 654–676.

- Preeti, B., Ashish, A., & Shriram, G. (2013). Problem based learning (PBL)—An effective approach to improve learning outcomes in medical teaching. Journal of Clinical and Diagnostic Research: JCDR, 7(12), 2896.

- Quality Assurance Agency (QAA). (2023). Engineering benchmark statement. Available online: https://www.qaa.ac.uk/the-quality-code/subject-benchmark-statements/subject-benchmark-statement-engineering (accessed on 19 May 2025).

- Saldívar-Almorejo, M. M., Flores-Herrera, L. A., Rivera-Blas, R., Niño-Suárez, P. A., Rivera-Blas, E. Z., & Rodríguez-Contreras, N. (2024). e-Learning challenges in STEM education. Education Sciences, 14(12), 1370.

- Sampson, D., & Karagiannidis, C. (2002). Personalised learning: Educational, technological and standarisation perspective. Digital Education Review, 4(4), 24–39.

- Woods, N. (2015). Formative assessment and self-regulated learning. The Journal of Education. Available online: https://thejournalofeducation.wordpress.com/2015/05/20/formative-assessment-and-self-regulated-learning/ (accessed on 19 May 2025).

- Zhao, C. (2024). Application and prospect of artificial intelligence in personalized learning. Journal of Innovation and Development, 8, 24–27.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).