1. Introduction

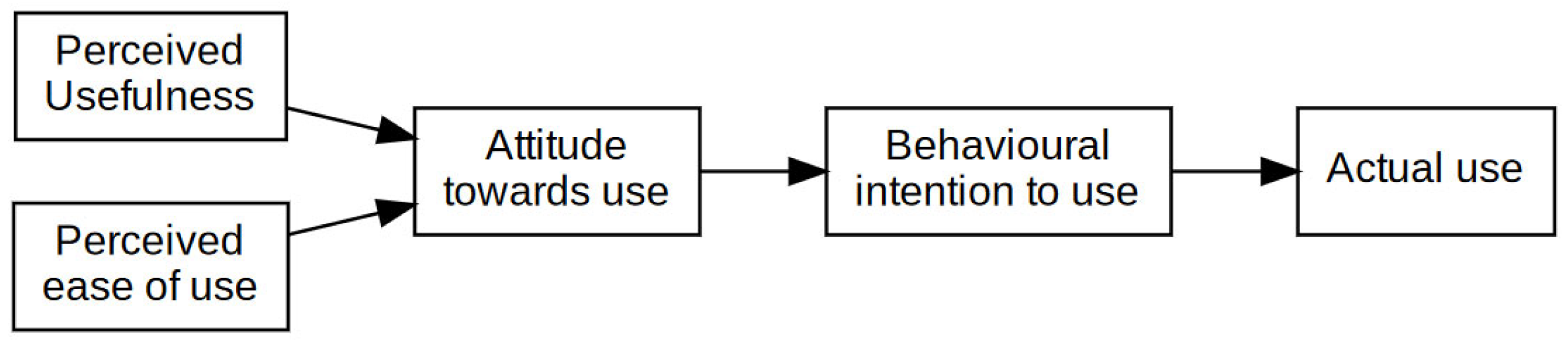

The digital transformation of schools is a multifaceted and far-reaching process that encompasses more than the adoption of new technologies. It also entails profound pedagogical, organizational, and cultural changes. This transformation is an ongoing and dynamic process that goes beyond technical infrastructure and requires the redesign of teaching methods, learning environments, and institutional structures. In this context, the integration of generative Artificial Intelligence (AI) such as ChatGPT (GPT-4) into daily school life has gained increasing relevance. On one hand, the use of such technologies offers potential for new instructional approaches, while on the other hand, it presents challenges for existing educational practices and demands a critical examination of its impact on learners, teachers, and institutional frameworks (

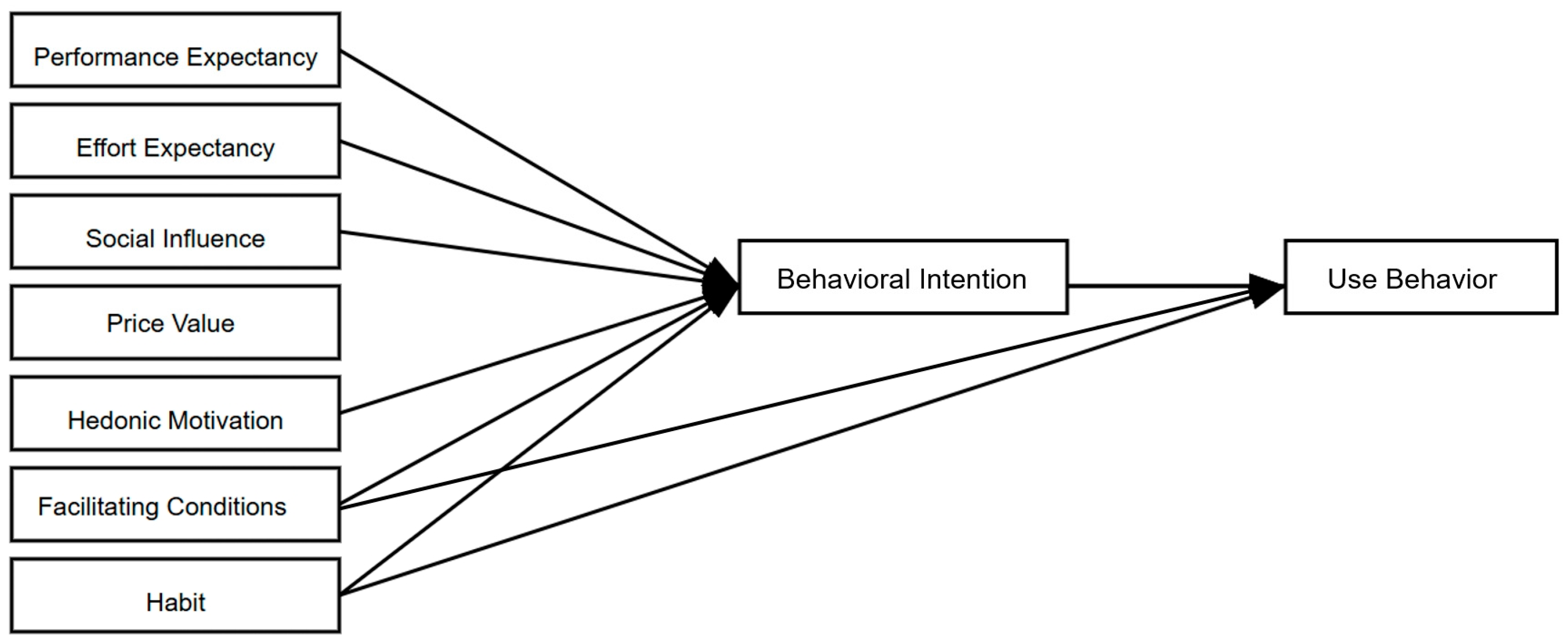

Venkatesh et al., 2003).

Particularly with regard to the integration of generative AI such as ChatGPT in school and classroom contexts, learners’ acceptance has become a central research topic. Acceptance is a crucial prerequisite for the sustainable and effective use of AI applications in educational settings. To realize the potential of technologies like ChatGPT, it is essential to understand the conditions under which learners are willing to adopt these technologies and integrate them into their learning processes. Acceptance here concerns not only students’ attitudes toward the technology but also their perception of its usefulness and of the learning benefits it offers. Given ChatGPT’s remarkable capabilities compared to other AI-based language models, its use has the potential to fundamentally change how learners acquire information and knowledge (Rudolph et al., 2023). However, this potential must also be critically examined, particularly regarding the reliability of AI-generated content and the risks associated with inaccurate or incomplete information (Lund et al., 2023).

The acceptance of ChatGPT in school contexts has so far remained largely unexplored. This is remarkable given that research in higher education has already produced numerous studies on the factors influencing the acceptance and usage patterns of ChatGPT and similar AI applications (Strzelecki, 2023a, 2023b; Foroughi et al., 2023). These studies show that factors such as habit, performance expectancy, and hedonic motivation are significant predictors of ChatGPT acceptance and use (Strzelecki, 2023a, 2023b). While valuable, these findings focus primarily on higher education and therefore offer limited answers regarding the specific challenges and opportunities of using generative AI in schools.

In addition to individual attitudes, school-level conditions such as teacher support are also likely to play an important role. These conditions may be shaped by organizational structures, the availability of professional development opportunities, and a supportive technological infrastructure. It is therefore necessary to understand how technological, institutional, and individual factors interact in order to effectively promote the acceptance of ChatGPT and similar AI systems in schools. Technology acceptance models are of particular relevance here. They provide theoretical foundations for investigating the factors that influence technology acceptance and take into account both individual and contextual dimensions, allowing for a nuanced analysis of the relationships between perception, motivation, and behavior.

Building on this, the present study focuses on learners’ perspectives and investigates their acceptance of ChatGPT in school contexts. The goal is to generate empirically grounded insights into the factors that influence students’ willingness to engage with this specific form of generative AI in educational processes. By focusing on a specific technological application, this study contributes to empirical educational research by systematically examining the underexplored student perspective within the digital transformation of schools.

4. Method

A quantitative research design based on a standardized online questionnaire was chosen to examine students’ acceptance of ChatGPT. The questionnaire consisted of 57 items.

Sociodemographic characteristics were assessed with three items capturing the learners’ age, gender, and type of school attended. At the beginning of the survey, students were also asked about their usage behavior and areas of ChatGPT application in the school context. Usage behavior was measured with one item asking how often students use ChatGPT for school-related purposes, rated on a seven-point frequency scale: 1 = Never, 2 = Once a month, 3 = Several times a month, 4 = Once a week, 5 = Several times a week, 6 = Once a day, and 7 = Several times a day.
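As an illustration of how the frequency item could be coded for the descriptive analyses, the following minimal Python sketch maps the seven response categories listed above to the codes 1 to 7 and summarizes a set of responses. The function names and the example responses are hypothetical; the study itself used SPSS. The helper `scale_midpoint` makes explicit that a 1-to-7 scale has its midpoint at 4, whereas a five-point agreement scale has its midpoint at 3.

```python
from statistics import mean

# Response codes of the seven-point usage-frequency item, as listed in the text
FREQUENCY_CODES = {
    "Never": 1,
    "Once a month": 2,
    "Several times a month": 3,
    "Once a week": 4,
    "Several times a week": 5,
    "Once a day": 6,
    "Several times a day": 7,
}

def scale_midpoint(n_points: int) -> float:
    """Midpoint of a rating scale coded 1..n_points."""
    return (1 + n_points) / 2

def summarize_frequency(responses: list[str]) -> dict:
    """Mean code and percentage share of each response category."""
    codes = [FREQUENCY_CODES[r] for r in responses]
    n = len(codes)
    shares = {
        label: 100 * sum(1 for r in responses if r == label) / n
        for label in FREQUENCY_CODES
    }
    return {"n": n, "mean": mean(codes), "shares": shares}

# Hypothetical responses from four students
example = ["Never", "Once a month", "Once a week", "Several times a week"]
print(summarize_frequency(example), scale_midpoint(7))
```

For these hypothetical responses, the mean code is 3 and "Never" accounts for a 25% share; the same coding makes it straightforward to compare a mean against the midpoint of the scale on which it was measured.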

The application areas of ChatGPT when used by students were assessed with two items. One item captured the purposes for which ChatGPT is used for school-related tasks. Respondents could select multiple options from the following: homework, writing texts, information seeking, preparing a presentation, preparing for an exam, and during exams. The second item assessed the school subjects in which ChatGPT is used by students.
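Because respondents could select several purposes, each option is best summarized as the share of all respondents who selected it, so the shares across options can sum to more than 100%. The sketch below illustrates this under the assumption that each respondent’s answer is stored as a set of selected options; the option labels come from the text, while the function name and example data are hypothetical. Respondents who selected nothing (for example, non-users) still count in the denominator, which matches reporting shares in relation to the whole sample.

```python
from collections import Counter

# Options of the multiple-response purpose item, as listed in the text
PURPOSES = [
    "homework",
    "writing texts",
    "information seeking",
    "preparing a presentation",
    "preparing for an exam",
    "during exams",
]

def option_shares(selections: list[set[str]]) -> dict[str, float]:
    """Percentage of respondents who selected each option.

    Each respondent may select several options, so every share is computed
    against the total number of respondents and the shares can exceed 100% in sum.
    """
    n = len(selections)
    if n == 0:
        raise ValueError("no responses")
    counts = Counter(option for chosen in selections for option in chosen)
    return {option: 100 * counts[option] / n for option in PURPOSES}

# Hypothetical answers from four respondents (the last one selected nothing)
answers = [
    {"homework", "writing texts"},
    {"information seeking"},
    {"information seeking", "homework"},
    set(),
]
shares = option_shares(answers)
print(shares["homework"], sum(shares.values()))
```

Here "homework" reaches 50% while the shares add up to 125%, which is expected for a multiple-response item.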

Later in the questionnaire, the constructs of UTAUT2 according to Venkatesh et al. (2012) were assessed. Performance expectancy, effort expectancy, facilitating conditions, and habit were each measured using four items, while social influence, hedonic motivation, price value, and behavioral intention to use were each assessed with three items. All items were adapted from the original formulations by Venkatesh et al. (2003, 2012) to suit the technological context of ChatGPT use in schools (e.g., “I believe that using ChatGPT for school is helpful.”).

Additionally, the questionnaire included measures for personal innovativeness, conscientiousness, and challenge preference, representing an extension of the UTAUT2 model in this study. Personal innovativeness was assessed using three items following Agarwal and Prasad (1998), conscientiousness was measured with four items based on the short version of the Big Five Inventory (BFI-K) by Rammstedt and John (2005), and challenge preference was assessed with three items adapted from the short version of the Achievement Motivation Inventory (LMI-K) by Schuler and Prochaska (2001).

The areas and constructs of the questionnaire are presented in Table 1. All construct items were rated on a five-point Likert scale with the following response options: 1 = strongly disagree, 2 = somewhat disagree, 3 = neutral, 4 = somewhat agree, and 5 = strongly agree.
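Assuming that each construct was scored as the mean of its items (the text does not state the scoring rule explicitly), a respondent’s construct scores can be computed as sketched below. The item identifiers (`PE1` to `PE4`, `BI1` to `BI3`) are hypothetical, since the actual item labels are not reported here, and only two of the constructs are shown.

```python
from statistics import mean

# Hypothetical item identifiers per construct; only two constructs are shown
SCALES = {
    "performance_expectancy": ["PE1", "PE2", "PE3", "PE4"],
    "behavioral_intention": ["BI1", "BI2", "BI3"],
}

def scale_scores(respondent: dict[str, int]) -> dict[str, float]:
    """Average one respondent's 1-5 agreement ratings within each construct."""
    scores = {}
    for construct, items in SCALES.items():
        ratings = [respondent[item] for item in items]
        # Reject values outside the five-point response range
        if any(not 1 <= r <= 5 for r in ratings):
            raise ValueError(f"rating outside 1-5 in {construct}")
        scores[construct] = mean(ratings)
    return scores

example = {"PE1": 4, "PE2": 5, "PE3": 4, "PE4": 3, "BI1": 2, "BI2": 3, "BI3": 4}
print(scale_scores(example))
```

Averaging rather than summing keeps every construct score on the original 1 to 5 range, so scales with three and four items remain directly comparable.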

Within the scope of this study, the UTAUT2 model was operationalized almost completely and without modifications. To assess reliability, Cronbach’s alpha (α) was calculated; in addition, a confirmatory factor analysis was conducted. One item of the facilitating conditions scale was excluded, which increased the reliability of that scale from α = 0.733 to α = 0.808. As a result, the reliability coefficients of all examined constructs fall within the good to very good range. The reliability of the scales used in the study is presented in Table 2.
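Cronbach’s alpha for a scale of k items is α = k/(k − 1) · (1 − Σ s_i² / s_X²), where s_i² are the item variances and s_X² is the variance of the sum score. The Python sketch below implements this formula together with an "alpha if item deleted" helper, which mirrors the kind of check that can motivate dropping an item, as was done for the facilitating conditions scale. It is an illustration with hypothetical function names and toy data, not a reproduction of the SPSS output used in the study. Sample variances (n − 1) are used throughout; the ratio is unaffected by this choice as long as it is applied consistently.

```python
from statistics import variance

def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[j][i] is respondent i's rating on item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Sum score of each respondent across all items
    totals = [sum(ratings) for ratings in zip(*items)]
    sum_item_var = sum(variance(ratings) for ratings in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))

def alpha_if_item_deleted(items: list[list[float]]) -> list[float]:
    """Alpha recomputed with each item left out in turn."""
    return [cronbach_alpha(items[:j] + items[j + 1:]) for j in range(len(items))]

# Toy data: five respondents, three items; the third item does not track the others
toy = [
    [1, 2, 3, 4, 5],
    [1, 2, 3, 4, 5],
    [5, 1, 4, 2, 3],
]
print(round(cronbach_alpha(toy), 3))
print([round(a, 3) for a in alpha_if_item_deleted(toy)])
```

For the toy data, alpha is about 0.32 with all three items and rises to 1.0 when the third item is removed, flagging it as the item that weakens internal consistency.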

4.1. Sample

Data were collected through a standardized online questionnaire targeting students of legal age in final-year classes at vocational schools and general upper secondary schools (Gymnasium) in Germany. The data collection took place between April and May 2024 with official approval from the relevant Ministry of Education.

A total of 568 responses were collected. Of these, 62 cases were excluded due to incomplete responses, resulting in a final sample of N = 506 upper secondary students from four school types. Of the surveyed students, 52.6% were female, 44.7% were male, and 2.7% identified as non-binary. The respondents’ ages ranged from 18 to 30 years, with a mean age of 18.5 years. Regarding the type of school attended, 58.9% of the participants were enrolled in a vocational upper secondary school, 19.4% attended a vocational college, 11.3% were in vocational training and attended a vocational school, and 10.4% attended a general upper secondary school. The composition of the sample is shown in Table 3.

While the sample is clearly defined in terms of school type, age, and gender, it is important to note that the data were collected exclusively in the federal state of Baden-Württemberg. This regional focus, combined with the predominance of students from vocational upper secondary schools, may limit the generalizability of the findings to other regions or school types. In addition, socioeconomic background was not assessed. These limitations should be taken into account when analyzing and interpreting the data.

4.2. Analysis of the Data

The statistical software SPSS (version 30) was used to analyze the data and answer the research questions. Prior to the analysis, the data were checked for completeness and plausibility. Descriptive analyses were carried out to examine the extent to which, and in which areas, students use ChatGPT in the school context. These analyses were based on the mean values of behavioral intention to use and actual usage behavior, as well as on the frequencies and distributions of students’ responses regarding usage behavior and areas of application. This provided insights into how extensively and in what ways students use ChatGPT for school-related purposes.

Subsequently, regression analyses were conducted based on the UTAUT2 model and on the extended version of UTAUT2 developed in this study. The coefficient of determination (R²) of the regression models was used to assess the explanatory power of UTAUT2 and its extension with regard to students’ acceptance of ChatGPT in the school setting. Furthermore, the standardized regression coefficients (β) of the independent variables indicated which UTAUT2 acceptance factors and which constructs of the extended model predict students’ behavioral intention to use and actual usage behavior, and thus their overall acceptance of ChatGPT in the educational context.
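To make these two statistics concrete, the following Python sketch (assuming NumPy is available) estimates a multiple linear regression on z-standardized variables, so that the slopes are standardized coefficients (β) and R² is the share of outcome variance explained. It is a generic illustration on simulated data, not the SPSS procedure used in the study; the function name and the simulated effect sizes are hypothetical.

```python
import numpy as np

def standardized_ols(X: np.ndarray, y: np.ndarray) -> tuple[np.ndarray, float]:
    """Standardized coefficients (beta) and R^2 for an OLS regression of y on X.

    X has shape (n, p) and y has shape (n,). After z-standardizing every
    variable the intercept is zero, so it can be omitted from the fit.
    """
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    residuals = yz - Xz @ beta
    # R^2 = 1 - residual sum of squares / total sum of squares (yz has mean 0)
    r2 = 1.0 - (residuals @ residuals) / (yz @ yz)
    return beta, float(r2)

# Simulated data: three predictors, the third unrelated to the outcome
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 0.5 * X[:, 0] + 0.3 * X[:, 1] + rng.normal(scale=0.5, size=500)
beta, r2 = standardized_ols(X, y)
print(np.round(beta, 3), round(r2, 3))
```

Because all variables share the same unit (standard deviations), the β values can be compared directly to rank predictors, which is how the regression results below are read.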

In addition, the regression analyses aimed to examine whether, and to what extent, the constructs added in the extended UTAUT2 model, namely personal innovativeness, conscientiousness, and challenge preference, are related to students’ acceptance of ChatGPT in school.

The regression analyses aimed to examine the direct and indirect effects of key independent variables derived from the underlying theoretical models. Potential moderating effects (e.g., of age or gender), however, were not included in the analyses.

5. Results

5.1. Use of ChatGPT by Students

Table 4 presents the descriptive analysis of students’ use of ChatGPT. In this context, the mean values and standard deviations of behavioral intention to use and actual usage behavior were examined.

The students indicated a moderately positive attitude toward the use of ChatGPT in the school context. The mean value for behavioral intention was 3.25 (SD = 1.16), slightly above the midpoint of the five-point Likert scale (3.00). These values are based on responses from 457 students in the sample. The mean value for actual usage behavior was 3.33 (SD = 1.73). Because usage behavior was measured on the seven-point frequency scale, this value lies below that scale’s midpoint (4.00) and corresponds to use between several times a month and once a week.

Table 5 presents the students’ responses regarding the frequency of their ChatGPT use in the school context. The data include both the absolute frequency and the relative percentage in relation to the overall sample.

Regarding the frequency of ChatGPT use by students in the school context, the results show that 14% of respondents stated that they never use ChatGPT for school purposes. Roughly one quarter turn to it about once per month, one fifth engage with it several times per month, and a comparable share do so several times per week. Fewer than ten percent use it daily, suggesting that occasional to moderate usage is typical in the school context.

The descriptive analysis of ChatGPT use focuses on the purposes for which students apply the tool and the subjects in which it is most frequently used.

Table 6 presents students’ responses regarding the purposes for which they use ChatGPT for school. The data include both absolute frequencies and their proportional share in relation to the sample.

Regarding the purposes for which students use ChatGPT, the tool is primarily employed for information seeking, writing texts, and completing homework. It is also frequently used in preparation for presentations, with 42.5% of respondents indicating such use. In contrast, ChatGPT is used less often for studying for exams, and its use during actual exam situations is negligible, with only 3.2% of students reporting this.

Table 7 presents students’ responses about the school subjects in which they use ChatGPT. The data include both absolute frequencies and their proportional share in relation to the sample.

Regarding the subjects in which students use ChatGPT, the tool appears particularly relevant in language and social science subjects. Students use ChatGPT especially in History and Social Studies (53.2%), German (52.0%), and English or other languages (50.2%). In Economics and the Natural Sciences, its use is less common: 39.3% of students reported using ChatGPT in Economics, and 38.7% in Biology, Chemistry, and Physics. In Mathematics or Computer Science, usage is noticeably lower, with only 13.8% of students indicating that they use ChatGPT in these subjects.

5.2. Regression Analysis to Examine Students’ Acceptance of ChatGPT

In this study, several linear regression models were developed based on the UTAUT2 model by Venkatesh et al. (2012) and its extension. The results depict the relationships within UTAUT2 and its extension as applied in this study. The model fit measure R² indicates the explanatory power of UTAUT2 and its extension regarding students’ acceptance of ChatGPT in the school context. The regression coefficients (β) show which acceptance factors from UTAUT2 or constructs from its extension predict students’ acceptance of ChatGPT in the school context. Furthermore, the analysis shows to what extent the extension constructs (personal innovativeness, conscientiousness, and challenge preference) are related to students’ acceptance of ChatGPT.

Initially, two regression models were created based on the original UTAUT2. The first regression model, presented in Table 8, aims to explain the behavioral intention to use ChatGPT in the school context, which serves as the dependent variable. The independent variables are the UTAUT2 constructs that the model specifies as predictors of behavioral intention to use.

The model shows a very high explanatory power, with an R² of 0.645, and is significant (p = 0.001). Performance expectancy, hedonic motivation, and habit have a significant influence on behavioral intention to use (p = 0.001) and thus represent the predictors of behavioral intention to use. Performance expectancy has the strongest effect, with a β of 0.388, followed by habit (β = 0.319) and hedonic motivation (β = 0.179). Performance expectancy and habit show medium to large effect sizes, while the effect of hedonic motivation is weak to moderate. Effort expectancy, social influence, facilitating conditions, and price value, however, do not significantly affect behavioral intention to use ChatGPT in school contexts.

The second regression model, presented in Table 9, aims to explain learners’ usage behavior regarding ChatGPT in the school context. Usage behavior is the dependent variable in this regression model. The independent variables are those constructs from the original UTAUT2 that have a direct effect on the actual usage behavior of a specific technology.

The model has a high explanatory power, with an R² of 0.431, and is significant (p = 0.001). Behavioral intention to use (p = 0.001), facilitating conditions (p = 0.002), and habit (p = 0.001) have a significant influence on usage behavior. Thus, all independent variables in the model have a significant impact and serve as predictors of usage behavior. Behavioral intention to use has the strongest effect (β = 0.390), followed by habit (β = 0.290) with a medium effect size, and facilitating conditions (β = 0.118) with a weak effect.
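The labels "very high" and "high" explanatory power can be related to a conventional effect-size metric. Cohen’s f² for a whole model is R²/(1 − R²), with 0.02, 0.15, and 0.35 commonly read as small, medium, and large effects. The study does not report f²; the Python sketch below simply applies the formula to the two R² values given above (the function name is hypothetical).

```python
def cohens_f2(r2: float) -> float:
    """Cohen's f^2 for an entire regression model: R^2 / (1 - R^2)."""
    if not 0.0 <= r2 < 1.0:
        raise ValueError("R^2 must lie in [0, 1)")
    return r2 / (1.0 - r2)

# R^2 values of the two models based on the original UTAUT2
reported = {"behavioral intention to use": 0.645, "usage behavior": 0.431}
for outcome, r2 in reported.items():
    print(f"{outcome}: f2 = {cohens_f2(r2):.2f}")
```

This yields f² ≈ 1.82 for behavioral intention to use and f² ≈ 0.76 for usage behavior, both well above the 0.35 benchmark for a large effect.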

5.3. Extension of the UTAUT2 Model

Regarding the extension of the UTAUT2 in this study, two additional regression models were developed. These models include constructs that are not part of the original UTAUT2. The extended regression models are intended to show whether these extensions increase the explanatory power concerning learners’ acceptance of ChatGPT in the school context, and how the added constructs influence learners’ technology acceptance.

The third regression model is presented in Table 10. It aims to explain learners’ behavioral intention to use ChatGPT in the school context. Behavioral intention to use is the dependent variable in this regression model. The independent variables integrated into the model are those from the original UTAUT2 that influence behavioral intention to use. Additionally, personal innovativeness, conscientiousness, and challenge preference were included as independent variables representing the extension of the UTAUT2 in this study.

The model has a very high explanatory power, with an R² of 0.647, and is significant (p = 0.001). Compared to the first regression model (see Table 8), which includes only constructs from the original UTAUT2 and reached an R² of 0.645, the explanatory power increases only marginally. Thus, the increase in R² is 0.002.
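Whether an increase such as this matters can be expressed with Cohen’s f² for the increment, ΔR²/(1 − R²_full), and tested with the usual F-change statistic for nested regression models, F = (ΔR²/m) / ((1 − R²_full)/(n − k − 1)), where m is the number of added predictors and k the number of predictors in the full model. The Python sketch below implements both; the function name is hypothetical, and because the analytic sample size of each regression is not stated in this section, n is an input and the value used below is only a placeholder. For the intention model, k = 10 (the seven UTAUT2 predictors plus the three added constructs) and m = 3.

```python
def r2_change(r2_reduced: float, r2_full: float, n: int, k_full: int, m_added: int) -> dict:
    """Compare a reduced and a full (nested) OLS model.

    r2_reduced: R^2 without the added predictors; r2_full: R^2 with them.
    n: sample size; k_full: predictors in the full model; m_added: predictors added.
    Returns the R^2 increase, Cohen's f^2 for that increase, and the
    F-change statistic with its degrees of freedom (m_added, n - k_full - 1).
    """
    df_resid = n - k_full - 1
    if df_resid <= 0 or m_added <= 0:
        raise ValueError("need n > k_full + 1 and at least one added predictor")
    delta = r2_full - r2_reduced
    f2 = delta / (1.0 - r2_full)
    f_change = (delta / m_added) / ((1.0 - r2_full) / df_resid)
    return {"delta_r2": delta, "f2": f2, "F": f_change, "df": (m_added, df_resid)}

# Reported R^2 values for behavioral intention; n=400 is a placeholder, not the study's value
result = r2_change(0.645, 0.647, n=400, k_full=10, m_added=3)
print(round(result["f2"], 4))
```

With the reported values, f² ≈ 0.006 for the intention model, well below the 0.02 benchmark for a small effect; applying the same function to the usage-behavior models (R² from 0.431 to 0.436, k = 6, m = 3) gives f² ≈ 0.009. Note that f² does not depend on n, whereas the F statistic does. If SciPy is available, `scipy.stats.f.sf(F, m_added, df_resid)` gives the corresponding p-value.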

Performance expectancy, hedonic motivation, and habit have a significant effect on behavioral intention to use (p = 0.001) and therefore serve as predictors of this construct. Performance expectancy exhibits the strongest effect (β = 0.379), followed by habit (β = 0.316) with a medium effect size, and hedonic motivation (β = 0.167) with a weak effect. The constructs from the UTAUT2 extension (personal innovativeness, conscientiousness, and challenge preference) have no significant influence on behavioral intention to use.

The fourth regression model is presented in Table 11. It aims to explain the usage behavior of learners regarding the use of ChatGPT in the school context. Usage behavior is the dependent variable of this regression model. As independent variables, the constructs that influence usage behavior in the original UTAUT2 were integrated into the regression model. Additionally, personal innovativeness, conscientiousness, and challenge preference were included as independent variables representing the extension of the UTAUT2 within this study.

The model shows a high explanatory power, with an R² of 0.436, and is significant (p = 0.001). Compared to the second regression model (see Table 9), which includes only constructs from the original UTAUT2 and reached an R² of 0.431, the explanatory power increases only marginally. Thus, the increase in R² is 0.005.

Behavioral intention to use (p = 0.001), facilitating conditions (p = 0.010), and habit (p = 0.001) have a significant influence on usage behavior. Behavioral intention to use ChatGPT has the strongest effect, with a β of 0.383, followed by habit (β = 0.286) and facilitating conditions (β = 0.102). Behavioral intention to use and habit show medium to high effect sizes, as in the second regression model, while the effect of facilitating conditions is again weak. The additional constructs from the UTAUT2 extension (personal innovativeness, conscientiousness, and challenge preference) again do not have a significant influence on usage behavior.

6. Discussion

The present study examined the use and acceptance of ChatGPT among students in upper secondary education. The findings reveal how often students use ChatGPT, for what purposes, in which subjects it is most common, and which psychological and contextual factors shape acceptance and use. The study also tests whether the established UTAUT2 model and its proposed extensions account for students’ acceptance of ChatGPT. The discussion is organized around six guiding research questions.

In relation to research question 1 (RQ1), which addressed the extent to which students use ChatGPT in the school context, the descriptive analyses revealed that a large majority of students already use the tool: 86% of the respondents reported using ChatGPT for school-related tasks, while only 14% indicated that they had never done so. ChatGPT has thus already become a widely established tool among upper secondary students. These results are consistent with findings from Strzelecki (2023b), who reported similar usage patterns among university students. Although the present study focused on a younger population, the usage rates suggest that ChatGPT is already deeply integrated into students’ academic routines. Given this reality, an outright ban on ChatGPT in schools appears both unrealistic and educationally counterproductive. Rather, schools are called upon to adapt both instruction and assessment practices. Traditional assignments, such as purely text-based writing tasks, are becoming less effective for assessing student performance, as AI tools can easily generate coherent and formally correct responses. Accordingly, a pedagogical shift is needed away from reproduction-focused assessments toward formats that emphasize critical thinking, reflection, and evaluation. In line with the demands articulated by Foroughi et al. (2023), it seems increasingly relevant to design learning tasks in which students engage with ChatGPT outputs, for example by evaluating their quality, identifying errors, or revising AI-generated texts as part of classwork or exams.

With regard to research question 2 (RQ2), which examined the purposes and subject areas in which students use ChatGPT, the findings show that the tool is primarily used for information seeking (64.2%), writing texts (52.2%), and homework (48.8%). Less frequent purposes include preparing presentations (42.5%) and studying for exams (22.5%). Very few students (3.2%) reported using ChatGPT during actual examinations. This usage pattern reflects the perceived utility of ChatGPT in open-ended or text-based learning tasks, while more formal or controlled assessment settings appear less influenced by its presence. In terms of subjects, the tool is used most frequently in History or Social Studies (53.2%), German (52.0%), and English or other foreign languages (50.2%). Its use is less common in Economics (39.3%) and the Natural Sciences, such as Biology, Chemistry, or Physics (38.7%). The lowest usage rate was found in Mathematics or Computer Science (13.8%). These results highlight the role of ChatGPT as a predominantly language- and content-processing tool. In practice, this suggests that AI integration is already progressing unevenly across subjects. Teachers in language and social science subjects can draw on existing student practices and design lessons that incorporate ChatGPT meaningfully, for instance by comparing versions of a text written with and without AI assistance. For STEM subjects, however, new instructional approaches are needed that clarify how ChatGPT and similar tools can support analytical or conceptual thinking in those domains.

Turning to research question 3 (RQ3), which asked whether the UTAUT2 model can explain students’ acceptance of ChatGPT in the school context, the regression analyses showed that the model offers strong explanatory power. Specifically, the regression models based on the original UTAUT2 yielded R² = 0.645 for behavioral intention to use and R² = 0.431 for actual usage behavior. These results indicate that the UTAUT2 model is well suited for analyzing technology acceptance in secondary education. The values are comparable to those reported by Strzelecki (2023b), although his models, based on structural equation modeling, achieved slightly higher R² values. This supports the general robustness of the model when applied to younger learners.

Regarding research question 4 (RQ4), which examined the specific factors that promote or hinder students’ acceptance of ChatGPT, the findings show that performance expectancy (β = 0.388), habit (β = 0.319), and hedonic motivation (β = 0.179) significantly predicted students’ behavioral intention to use. These results are in line with those of Foroughi et al. (2023), who also identified these constructs as central predictors of technology acceptance. The particularly strong effect of performance expectancy suggests that students are most likely to adopt ChatGPT when they believe it will enhance their academic performance. This implies that instruction should show students how ChatGPT can be used strategically and effectively. Integrating AI literacy into the curriculum, for example by explaining how language models work and where their limitations lie, can help foster informed use. The significance of habit as a predictor highlights the role of routine and familiarity. Many students already use ChatGPT as part of their regular study habits, which reinforces the need to raise awareness of the limitations of AI-generated content. Errors in training data, misinformation, and overconfidence in AI outputs must be explicitly addressed in instruction. Prior studies, such as Lund et al. (2023) and Ahmed et al. (2023), have emphasized these risks, which should be explored through targeted classroom activities. The influence of hedonic motivation suggests that students are also more inclined to use ChatGPT when the experience is enjoyable. This finding supports pedagogical designs that make learning with AI engaging and motivating.

In addressing research question 5 (RQ5), which examined whether the extended UTAUT2 model improves the explanatory power of the original model, the results show only a marginal increase in R², from 0.645 to 0.647 for behavioral intention and from 0.431 to 0.436 for actual usage behavior. Thus, adding the constructs personal innovativeness, conscientiousness, and challenge preference did not meaningfully improve the model. This suggests that these personality-related traits play only a limited role in students’ acceptance of ChatGPT in school settings.

This interpretation is supported by the findings related to research question 6 (RQ6), which investigated which of the additional constructs in the extended model are related to students’ technology acceptance. The analysis showed that none of the three added constructs had a significant effect on either behavioral intention or actual usage behavior. From an educational perspective, this is a highly relevant finding. It suggests that AI-based tools like ChatGPT are not used only by particularly curious, innovative, or challenge-seeking students; rather, their use is widespread across personality types. As a result, didactic strategies should be designed to support all learners, regardless of individual disposition. Instruction should offer all students the opportunity to explore the benefits and risks of AI systems and to develop competencies for their responsible use. Differentiated instructional approaches and reflective activities can help ensure that every learner is able to navigate the digital learning environment safely and effectively.

While the findings of this study are robust and largely consistent with prior research, several limitations must be considered. First, the study was based on a convenience sample of 506 students and thus cannot claim to be representative. The sample size is comparable to those in Strzelecki’s studies (534 and 503 participants) and exceeds that of Foroughi et al. (2023) (406 participants), yet the generalizability of the findings remains limited. In addition, data collection was conducted exclusively in one German federal state, which further restricts the transferability of the results to other regional or institutional contexts. Furthermore, all data were collected via self-report questionnaires, which may be biased by social desirability effects. In terms of methodology, it is important to note that the study used multiple regression analysis rather than structural equation modeling. This limits its ability to capture complex mediating and moderating relationships between constructs. While Strzelecki (2023a, 2023b) and Foroughi et al. (2023) applied structural models to assess causal pathways more holistically, the present study offers a partial yet focused investigation of key acceptance factors. To deepen the understanding of the mechanisms underlying students’ acceptance and use of AI tools, future studies should apply more advanced multivariate methods, particularly structural equation modeling. This would enable a more precise analysis of mediated effects and interdependencies within the UTAUT2 framework.

In addition to methodological refinement, future research should also consider relevant contextual conditions. These include access to digital infrastructure, institutional expectations, curricular frameworks, and available support structures. Such factors may significantly influence how students adopt and use generative AI technologies in everyday school settings and should therefore be more systematically integrated into future explanatory models.

Despite these limitations, the findings indicate that ChatGPT is already widely used and accepted by upper secondary students and that this use is shaped primarily by practical benefits, habit, and the enjoyment of using the tool. For educational institutions, these results highlight the urgent need to rethink how learning is organized, how digital tools are integrated, and how AI-related competencies are fostered. Instructional formats and assessment methods must be adapted to reflect the realities of AI-enhanced learning. Schools are thus challenged to actively shape digital transformation processes and to prepare students for a future in which artificial intelligence will be an integral part of their learning and working environments.

To translate these insights into practice, it is essential that educators and school leaders take an active role in supporting students’ responsible and informed engagement with AI tools. Teachers can incorporate activities that require students to critically analyze, compare, and revise AI-generated texts, helping them recognize both the potential and limitations of such tools. At the same time, school leaders can support this process by promoting AI-related professional development and fostering a culture of reflective technology use across the school. These efforts can strengthen students’ digital literacy and ensure that AI is integrated into classroom settings in pedagogically meaningful ways.

Beyond individual factors, contextual conditions such as teacher support, school policy frameworks, and access to digital infrastructure may also influence students’ use of AI tools in classroom settings. These factors could shape how frequently and in what ways students engage with ChatGPT, and they may moderate or mediate the relationship between individual acceptance and actual usage. Considering such school-level variables can help to better understand differences in AI integration across educational contexts.

Building on the findings of this study, it would also be valuable to explore instructional formats that align with students’ current use patterns and motivational factors. For example, future research could examine how structured learning activities involving the critical evaluation or revision of AI-generated content affect learning outcomes and student engagement. This may help develop pedagogical approaches that foster both critical thinking and the competent use of generative AI for school purposes.

7. Conclusions

The present study examined how upper secondary students perceive the use of ChatGPT in the school context and to what extent they are willing to integrate this AI-based tool into their everyday learning. The goal was to gain a differentiated understanding of students’ technology acceptance and to systematically capture their attitudes toward ChatGPT using a theoretically grounded model. The study thereby contributes to the current discourse on how digital technologies can be meaningfully integrated into school-based learning processes.

The findings indicate that ChatGPT is already used routinely by a majority of learners, particularly for tasks such as homework, information seeking, and text production. This use takes place largely independently of formal instructional integration, pointing to the coexistence of informal, technology-supported learning alongside traditional classroom structures. This development underscores the need for schools to reflect on and respond to students’ evolving learning practices.

The analysis of influencing factors was based on the UTAUT2 model. The results show that students’ behavioral intention to use ChatGPT is significantly predicted by performance expectancy, habit, and hedonic motivation. These findings indicate that students tend to use ChatGPT when they perceive it as useful for academic achievement, when its use is already established in their daily routines, and when interacting with the tool is experienced as enjoyable. The model demonstrated high explanatory power.
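As a schematic summary, the baseline analysis corresponds to a multiple linear regression of behavioral intention on the UTAUT2 predictors. The sketch below is illustrative rather than the exact specification of the study: the symbols are illustrative labels, only the three significant predictors are written out, and any further UTAUT2 constructs included in the model are collapsed into a generic sum.

$$
\mathrm{BI}_i = \beta_0 + \beta_1\,\mathrm{PE}_i + \beta_2\,\mathrm{HT}_i + \beta_3\,\mathrm{HM}_i + \sum_{k=1}^{K} \gamma_k\,X_{k,i} + \varepsilon_i
$$

Here $\mathrm{BI}_i$ is the behavioral intention of student $i$, $\mathrm{PE}_i$ performance expectancy, $\mathrm{HT}_i$ habit, $\mathrm{HM}_i$ hedonic motivation, $X_{k,i}$ any further predictors in the model, and $\varepsilon_i$ the error term. The reported pattern corresponds to $\beta_1$, $\beta_2$, and $\beta_3$ being significantly different from zero, and "high explanatory power" refers to a high coefficient of determination $R^2$ for this model.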

In contrast, the additional constructs included in the extended model (personal innovativeness, conscientiousness, challenge preference) were not significant predictors and increased the explanatory power only marginally. This suggests that stable personality traits and value orientations are not primary determinants of ChatGPT usage in the school context. Students’ willingness to use ChatGPT appears to be shaped predominantly by practical, experience-based factors rather than by individual dispositions.
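The increment contributed by the extended model can be expressed as the change in $R^2$ between the two models, together with the conventional $F$-test for that change. This is a sketch under the assumption that the baseline and extended models were compared as nested regressions; the section itself does not state which test was used.

$$
\Delta R^2 = R^2_{\mathrm{ext}} - R^2_{\mathrm{base}}, \qquad
F_{\mathrm{change}} = \frac{\Delta R^2 / q}{\left(1 - R^2_{\mathrm{ext}}\right) / \left(n - p - 1\right)}
$$

Here $q$ is the number of added predictors (three: personal innovativeness, conscientiousness, and challenge preference), $n$ is the sample size, and $p$ is the total number of predictors in the extended model; $F_{\mathrm{change}}$ is evaluated against an $F$ distribution with $q$ and $n - p - 1$ degrees of freedom. An increase in explanatory power that is "only marginal" corresponds to a $\Delta R^2$ close to zero.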

These findings point to concrete implications for school development. Since acceptance is linked to perceived usefulness and motivational factors, pedagogical strategies should focus on enabling students to use ChatGPT purposefully and reflectively. Instructional formats should include exercises that promote the critical examination of AI-generated content and foster awareness of the limitations of such tools. The integration of AI literacy into curricula could support these aims.

At the same time, several limitations of the study must be acknowledged. The data are based on a convenience sample and rely entirely on self-reports, which may be subject to social desirability bias. Moreover, data collection was limited to a single federal state in Germany, which restricts the generalizability of the findings to other regional contexts. The statistical analyses were conducted using multiple regression. While this approach is suitable for estimating direct effects, the models as specified did not capture indirect (mediating) or moderating relationships. In addition to methodological refinement, future research should also examine how school-specific conditions, such as access to digital infrastructure, institutional norms, curricular constraints, or support structures, influence the acceptance and use of AI tools in educational settings.

In summary, the study shows that students already use ChatGPT in diverse and autonomous ways. Their acceptance is not driven by avoidance tendencies but by perceived learning benefits, motivation, and habitual integration into everyday learning. Schools are therefore called upon to develop teaching concepts that respond to these realities and promote the competent, goal-oriented, and reflective use of AI-based technologies.