Examining Undergraduates’ Intentions to Pursue a Science Career: A Longitudinal Study of a National Biomedical Training Initiative

Abstract

1. Introduction

- Does participation in the BUILD program impact undergraduate students’ intentions to pursue a science-related research career over time?

- Do research experience and mentorship, two key components of the BUILD initiative, contribute to any observed differences in intentions to pursue a science-related research career between BUILD and non-BUILD students?

1.1. The BUilding Infrastructure Leading to Diversity (BUILD) Initiative

1.2. Undergraduate Research Experiences

1.3. Mentorship in STEMM

2. Materials and Methods

2.1. Data Source and Sample

2.2. Outcome Variable: Intentions to Pursue a Science Career

2.3. BUILD Participation Variable

2.4. Background Characteristics Variables

2.5. Additional Explanatory Variables

2.5.1. Research Experience

2.5.2. Mentoring

2.5.3. Scholarships

2.6. Analyses

3. Results

3.1. Sample Characteristics

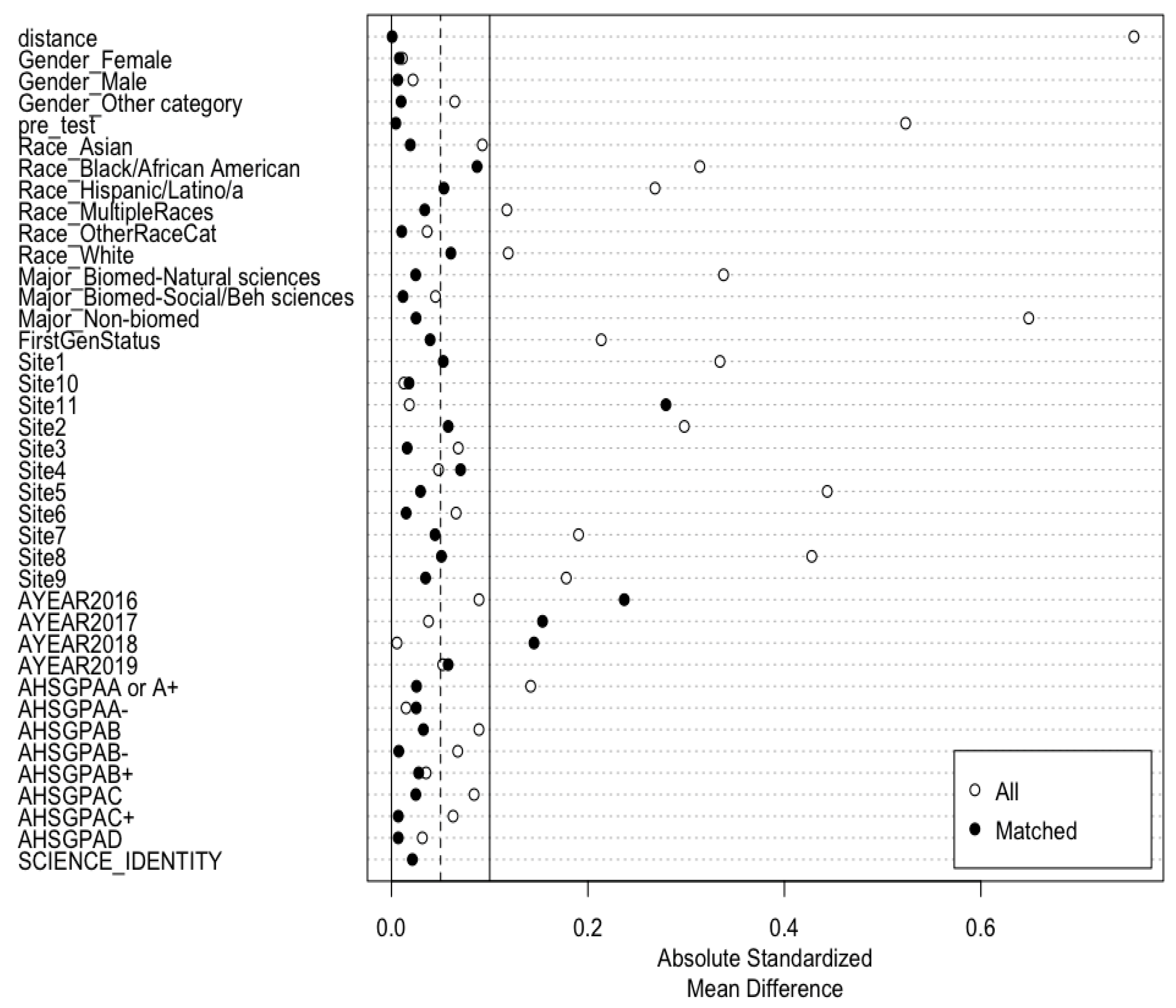

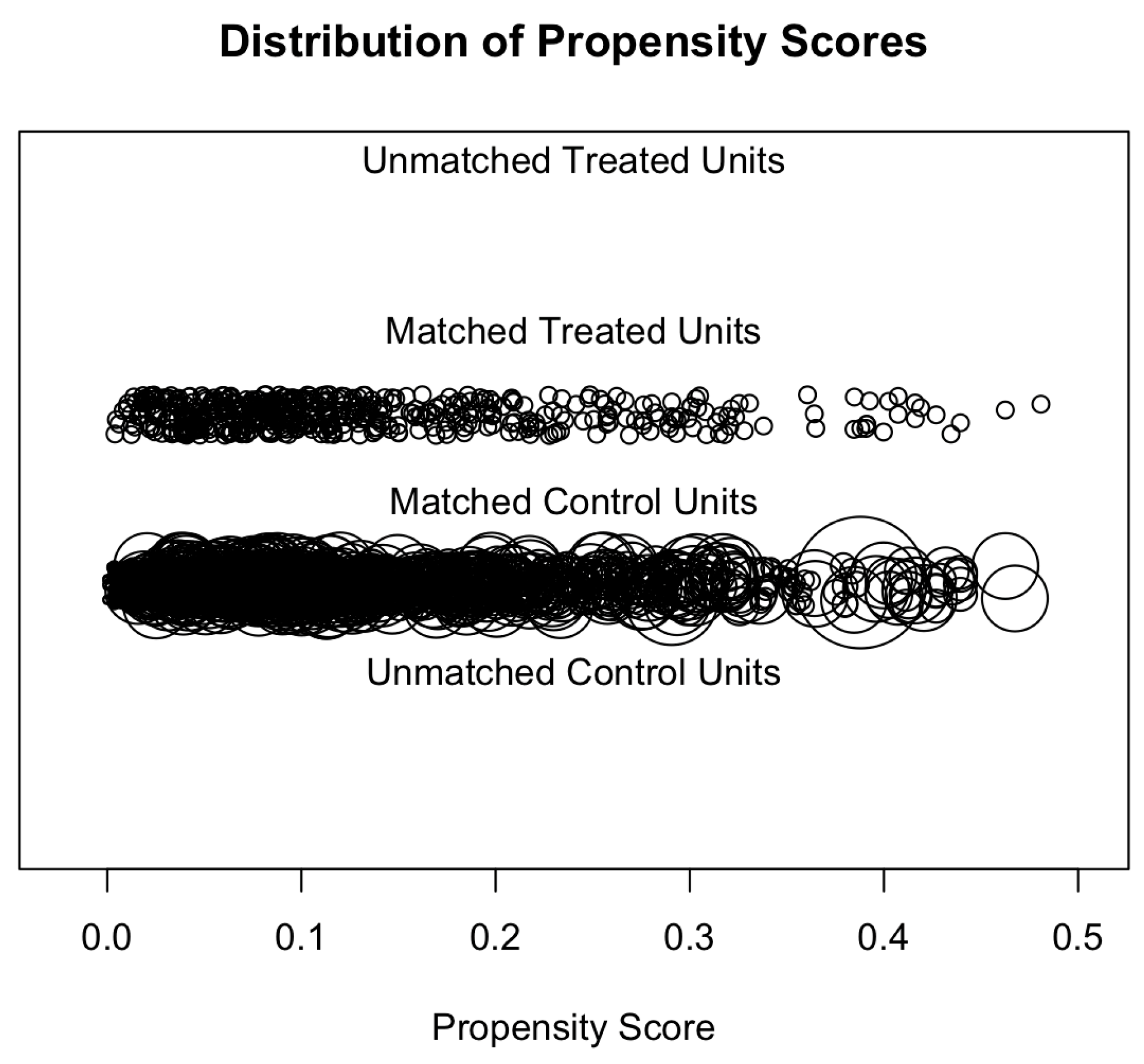

3.2. Propensity Score Estimation and Outcome Modeling
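The subsections that follow measure BUILD effects with propensity-score weights targeting two estimands: the average treatment effect (ATE) and the average treatment effect on the treated (ATT). The Python sketch below shows only the standard weight definitions described by Austin (2011). It assumes propensity scores have already been estimated. The function names (`ipw_weights`, `weighted_difference`) and the toy data are hypothetical, and the weighted difference in means stands in for illustration only. It is not the authors' weighted regression models, which the paper reports as odds ratios and which cite R and MatchIt rather than Python.

```python
from typing import List, Sequence


def ipw_weights(treated: Sequence[int], ps: Sequence[float], estimand: str) -> List[float]:
    """Inverse-probability-of-treatment weights for a binary treatment.

    treated  -- 1 for a BUILD participant, 0 otherwise (hypothetical coding)
    ps       -- estimated propensity score P(BUILD = 1 | covariates), in (0, 1)
    estimand -- "ATE" or "ATT"
    """
    weights = []
    for z, e in zip(treated, ps):
        if not 0.0 < e < 1.0:
            raise ValueError("propensity scores must lie strictly between 0 and 1")
        if estimand == "ATE":
            # ATE: treated units get 1/e, comparison units get 1/(1 - e).
            weights.append(1.0 / e if z == 1 else 1.0 / (1.0 - e))
        elif estimand == "ATT":
            # ATT: treated units keep weight 1; comparison units get e/(1 - e)
            # so that they resemble the treated group on the covariates.
            weights.append(1.0 if z == 1 else e / (1.0 - e))
        else:
            raise ValueError("estimand must be 'ATE' or 'ATT'")
    return weights


def weighted_difference(outcome: Sequence[float], treated: Sequence[int],
                        weights: Sequence[float]) -> float:
    """Weighted mean outcome of treated units minus that of comparison units."""
    def group_mean(group: int) -> float:
        num = sum(y * w for y, z, w in zip(outcome, treated, weights) if z == group)
        den = sum(w for z, w in zip(treated, weights) if z == group)
        return num / den
    return group_mean(1) - group_mean(0)


# Toy, made-up data (NOT from the study); intent = 1 means "intends a science career".
treated = [1, 1, 0, 0, 0, 0]
ps = [0.6, 0.3, 0.4, 0.2, 0.1, 0.5]
intent = [1, 1, 1, 0, 0, 1]

for est in ("ATE", "ATT"):
    w = ipw_weights(treated, ps, est)
    print(est, [round(x, 3) for x in w], round(weighted_difference(intent, treated, w), 3))
```

The two weighting schemes answer different questions. ATE weights re-create the whole sample as if everyone had been equally likely to join BUILD. ATT weights keep BUILD students as they are and reshape only the comparison group.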

3.2.1. Measuring BUILD Effects Using ATE Weights

3.2.2. Measuring BUILD Effects Using the ATT Weights

3.3. Limitations

4. Discussion

4.1. Summary of Results

4.2. Lessons Learned: Implications Related to STEMM Policy and Practice

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

1. The science identity scale is operationalized using four agreement items: "I have a strong sense of belonging to a community of scientists"; "I derive great personal satisfaction from working on a team that is doing important research"; "I think of myself as a scientist"; and "I feel like I belong in the field of science" (Estrada et al., 2011).

References

- Aikens, M. L., Robertson, M. M., Sadselia, S., Watkins, K., Evans, M., Runyon, C. R., Eby, L. T., & Dolan, E. L. (2017). Race and gender differences in undergraduate research mentoring structures and research outcomes. CBE Life Sciences Education, 16(2), ar34.

- American Association of Colleges & Universities (AAC&U). (n.d.). High-impact practices. AAC&U. Available online: https://www.aacu.org/trending-topics/high-impact (accessed on 15 January 2025).

- Atkins, K., Dougan, B. M., Dromgold-Sermen, M. S., Potter, H., Sathy, V., & Panter, A. T. (2020). "Looking at myself in the future": How mentoring shapes scientific identity for STEM students from underrepresented groups. International Journal of STEM Education, 7(1), 42.

- Austin, P. C. (2011). An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivariate Behavioral Research, 46(3), 399–424.

- Byars-Winston, A., & Dahlberg, M. L. (Eds.). (2019). Introduction: Why does mentoring matter? In The science of effective mentorship in STEMM. National Academies Press.

- Byars-Winston, A. M., Branchaw, J., Pfund, C., Leverett, P., & Newton, J. (2015). Culturally diverse undergraduate researchers' academic outcomes and perceptions of their research mentoring relationships. International Journal of Science Education, 37(15), 2533–2554.

- Carpi, A., Ronan, D. M., Falconer, H. M., & Lents, N. H. (2017). Cultivating minority scientists: Undergraduate research increases self-efficacy and career ambitions for underrepresented students in STEM. Journal of Research in Science Teaching, 54(2), 169–194.

- Carter, F. D., Mandell, M., & Maton, K. I. (2009). The influence of on-campus, academic year undergraduate research on STEM Ph.D. outcomes: Evidence from the Meyerhoff Scholarship Program. Educational Evaluation and Policy Analysis, 31(4), 441–462.

- Chang, M. J., Eagan, M. K., Lin, M. H., & Hurtado, S. (2011). Considering the impact of racial stigmas and science identity: Persistence among biomedical and behavioral science aspirants. The Journal of Higher Education, 82(5), 564–596.

- Chen, C. Y., Kahanamoku, S. S., Tripati, A., Alegado, R. A., Morris, V. R., Andrade, K., & Hosbey, J. (2022). Systemic racial disparities in funding rates at the National Science Foundation. eLife, 11, e83071.

- Cobian, K. P., Hurtado, S., Romero, A. L., & Gutzwa, J. A. (2024). Enacting inclusive science: Culturally responsive higher education practices in science, technology, engineering, mathematics, and medicine (STEMM). PLoS ONE, 19(1), e0293953.

- Davidson, P. L., Maccalla, N. M. G., Afifi, A. A., Guerrero, L., Nakazono, T. T., Zhong, S., & Wallace, S. P. (2017). A participatory approach to evaluating a national training and institutional change initiative: The BUILD longitudinal evaluation. BMC Proceedings, 11(12), 15.

- Eagan, M. K., Hurtado, S., Chang, M. J., Garcia, G. A., Herrera, F. A., & Garibay, J. C. (2013). Making a difference in science education: The impact of undergraduate research programs. American Educational Research Journal, 50(4), 683–713.

- Eagan, M. K., Romero, A. L., & Zhong, S. (2023). BUILDing an early advantage: An examination of the role of strategic interventions in developing first-year undergraduate students' science identity. Research in Higher Education, 65, 181–207.

- Estrada, M., Burnett, M., Campbell, A. G., Campbell, P. B., Denetclaw, W. F., Gutiérrez, C. G., Hurtado, S., John, G. H., Matsui, J., McGee, R., Okpodu, C. M., Robinson, T. J., Summers, M. F., Werner-Washburne, M., & Zavala, M. (2016). Improving underrepresented minority student persistence in STEM. CBE—Life Sciences Education, 15(3), es5.

- Estrada, M., Hernandez, P. R., & Schultz, P. W. (2018). A longitudinal study of how quality mentorship and research experience integrate underrepresented minorities into STEM careers. CBE Life Sciences Education, 17(1), ar9.

- Estrada, M., Woodcock, A., Hernandez, P. R., & Schultz, P. W. (2011). Toward a model of social influence that explains minority student integration into the scientific community. Journal of Educational Psychology, 103(1), 206–222.

- Fregoso, J., & Lopez, D. D. (2020). 2019 College senior survey. Higher Education Research Institute, UCLA. Available online: https://www.heri.ucla.edu/briefs/CSS/CSS-2019-Brief.pdf (accessed on 20 June 2025).

- Fry, R., Kennedy, B., & Funk, C. (2021). STEM jobs see uneven progress in increasing gender, racial and ethnic diversity. Pew Research Center. Available online: https://www.pewresearch.org/social-trends/2021/04/01/stem-jobs-see-uneven-progress-in-increasing-gender-racial-and-ethnic-diversity/ (accessed on 15 January 2025).

- Gibbs, K. D., Jr., Reynolds, C., Epou, S., & Gammie, A. (2022). The funders' perspective: Lessons learned from the National Institutes of Health Diversity Program Consortium evaluation. New Directions for Evaluation, 2022(174), 105–117.

- Gilmore, J., Vieyra, M., Timmerman, B., Feldon, D., & Maher, M. (2015). The relationship between undergraduate research participation and subsequent research performance of early career STEM graduate students. The Journal of Higher Education, 86(6), 834–863.

- Greifer, N. (2022). Assessing balance. Sist Oppdatert, 7, 2022.

- Guerrero, L. R., Seeman, T., McCreath, H., Maccalla, N. M., & Norris, K. C. (2022). Understanding the context and appreciating the complexity of evaluating the Diversity Program Consortium. New Directions for Evaluation, 2022(174), 11–20.

- Guo, S., & Fraser, M. W. (2014). Propensity score analysis: Statistical methods and applications (Vol. 11). SAGE Publications.

- Haeger, H., & Fresquez, C. (2016). Mentoring for inclusion: The impact of mentoring on undergraduate researchers in the sciences. CBE—Life Sciences Education, 15(3), ar36.

- Hathaway, R. S., Nagda, B. A., & Gregerman, S. R. (2002). The relationship of undergraduate research participation to graduate and professional education pursuit: An empirical study. Journal of College Student Development, 43(5), 614–631.

- Ho, D., Imai, K., King, G., & Stuart, E. A. (2011). MatchIt: Nonparametric preprocessing for parametric causal inference. Journal of Statistical Software, 42, 1–28.

- Hurtado, S., Cabrera, N. L., Lin, M. H., Arellano, L., & Espinosa, L. L. (2009). Diversifying science: Underrepresented student experiences in structured research programs. Research in Higher Education, 50, 189–214.

- Hurtado, S., White-Lewis, D., & Norris, K. (2017). Advancing inclusive science and systemic change: The convergence of national aims and institutional goals in implementing and assessing biomedical science training. BMC Proceedings, 11(Suppl. S12), 17.

- James, S. M., & Singer, S. R. (2016). From the NSF: The National Science Foundation's investments in broadening participation in science, technology, engineering, and mathematics education through research and capacity building. CBE—Life Sciences Education, 15(3), fe7.

- Joffe, M. M., Ten Have, T. R., Feldman, H. I., & Kimmel, S. E. (2004). Model selection, confounder control, and marginal structural models: Review and new applications. The American Statistician, 58(4), 272–279.

- Kenward, M. G., & Roger, J. H. (1997). Small sample inference for fixed effects from restricted maximum likelihood. Biometrics, 983–997.

- Kuh, G., & Kinzie, J. (2018, May 1). What really makes a 'high-impact' practice high impact? Inside Higher Ed. Available online: https://www.siue.edu/provost/honors/contact/pdf/HIP_Editorial (accessed on 15 January 2025).

- Linn, M. C., Palmer, E., Baranger, A., Gerard, E., & Stone, E. (2015). Undergraduate research experiences: Impacts and opportunities. Science, 347(6222), 1261757.

- Maccalla, N. M. G., Purnell, D., McCreath, H. E., Dennis, R. A., & Seeman, T. (2022). Gauging treatment impact: The development of exposure variables in a large-scale evaluation study. New Directions for Evaluation, 2022(174), 57–68.

- Maton, K. I., Domingo, M. R. S., Stolle-McAllister, K. E., Zimmerman, J. L., & Hrabowski, F. A., III. (2009). Enhancing the number of African-Americans who pursue STEM PhDs: Meyerhoff Scholarship Program outcomes, processes, and individual predictors. Journal of Women and Minorities in Science and Engineering, 15(1), 15–37.

- McCreath, H. E., Norris, K. C., Calderón, N. E., Purnell, D. L., Maccalla, N. M. G., & Seeman, T. E. (2017). Evaluating efforts to diversify the biomedical workforce: The role and function of the Coordination and Evaluation Center of the Diversity Program Consortium. BMC Proceedings, 11(12), 27.

- McNeish, D., & Stapleton, L. (2016). The effect of small sample size on two-level model estimates: A review and illustration. Educational Psychology Review, 28(2), 295–314.

- Merolla, D. M., & Serpe, R. T. (2013). STEM enrichment programs and graduate school matriculation: The role of science identity salience. Social Psychology of Education: An International Journal, 16(4), 575–597.

- National Science Board, National Science Foundation. (2021). The STEM labor force of today: Scientists, engineers and skilled technical workers. Science and Engineering Indicators 2022. NSB-2021-2. Alexandria, VA. Available online: https://ncses.nsf.gov/pubs/nsb20212 (accessed on 15 January 2025).

- Norris, K. C., McCreath, H. E., Hueffer, K., Aley, S. B., Chavira, G., Christie, C. A., Crespi, C. M., Crespo, C., D'Amour, G., Eagan, K., Echegoyen, L. E., Feig, A., Foroozesh, M., Guerrero, L. R., Johnson, K., Kamangar, F., Kingsford, L., LaCourse, W., Maccalla, N. M.-G., … Seeman, T. (2020). Baseline characteristics of the 2015–2019 first year student cohorts of the NIH Building Infrastructure Leading to Diversity (BUILD) program. Ethnicity & Disease, 30(4), 4.

- Ramirez, K. D., Joseph, C. J., & Oh, H. (2022). Describing engagement practices for the Enhance Diversity Study using principles of tailored panel management. New Directions for Evaluation, 2022(174), 33–45.

- Ramos, H. V., Cobian, K. P., Srinivasan, J., Christie, C. A., Crespi, C. M., & Seeman, T. (2023). Investigating the relationship between participation in the Building Infrastructure Leading to Diversity (BUILD) initiative and intent to pursue a science career: A cross-sectional analysis. Evaluation and Program Planning, 102380.

- R Core Team. (2022). R: A language and environment for statistical computing. Vienna, Austria. Available online: https://www.R-project.org/ (accessed on 1 March 2024).

- Robinson, K. A., Perez, T., Nuttall, A. K., Roseth, C. J., & Linnenbrink-Garcia, L. (2018). From science student to scientist: Predictors and outcomes of heterogeneous science identity trajectories in college. Developmental Psychology, 54(10), 1977–1992.

- Rosenbaum, P. R., & Rubin, D. B. (1983). The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1), 41–55.

- Russell, L. (2007). Mentoring is not for you!: Mentee voices on managing their mentoring experience. Improving Schools, 10(1), 41–52.

- Russell, S. H., Hancock, M. P., & McCullough, J. (2007). Benefits of undergraduate research experiences. Science, 316(5824), 548–549.

- Saw, G., Chang, C. N., & Chan, H. Y. (2018). Cross-sectional and longitudinal disparities in STEM career aspirations at the intersection of gender, race/ethnicity, and socioeconomic status. Educational Researcher, 47(8), 525–531.

- Schultz, P. W., Hernandez, P. R., Woodcock, A., Estrada, M., Chance, R. C., Aguilar, M., & Serpe, R. T. (2011). Patching the pipeline: Reducing educational disparities in the sciences through minority training programs. Educational Evaluation and Policy Analysis, 33(1), 95–114.

- Stets, J. E., Brenner, P. S., Burke, P. J., & Serpe, R. T. (2017). The science identity and entering a science occupation. Social Science Research, 64, 1–14.

- Stolzenberg, E. B., Aragon, M. C., Romo, E., Couch, V., McLennan, D., Eagan, M. K., & Kang, N. (2020). The American freshman: National norms fall 2019. Higher Education Research Institute, UCLA. Available online: https://www.heri.ucla.edu/monographs/TheAmericanFreshman2019.pdf (accessed on 20 June 2025).

- Stuart, E. A. (2010). Matching methods for causal inference: A review and a look forward. Statistical Science: A Review Journal of the Institute of Mathematical Statistics, 25(1), 1.

- Stuart, E. A., & Green, K. M. (2008). Using full matching to estimate causal effects in nonexperimental studies: Examining the relationship between adolescent marijuana use and adult outcomes. Developmental Psychology, 44(2), 395–406.

- Tsui, L. (2007). Effective strategies to increase diversity in STEM fields: A review of the research literature. The Journal of Negro Education, 76(4), 555–581.

- Valantine, H. A., Lund, P. K., & Gammie, A. E. (2016). From the NIH: A systems approach to increasing the diversity of the biomedical research workforce. CBE—Life Sciences Education, 15(3), fe4.

- Winterer, E. R., Froyd, J. E., Borrego, M., Martin, J. P., & Foster, M. (2020). Factors influencing the academic success of Latinx students matriculating at 2-year and transferring to 4-year US institutions—Implications for STEM majors: A systematic review of the literature. International Journal of STEM Education, 7(1), 34.

| Variable | Non-BUILD students (N = 9097), n | Non-BUILD students, % | BUILD students (N = 551), n | BUILD students, % | Test |
|---|---|---|---|---|---|
| Major | | | | | |
| Biomedical natural science field | 5863 | 68.7 | 435 | 82.5 | χ²(2, 9648) = 75.34, p < 0.001 |
| Biomedical social science field | 933 | 10.9 | 66 | 12.5 | |
| Non-biomedical field | 1735 | 20.3 | 26 | 4.9 | |
| Financial Worry | | | | | |
| Major (not sure I will have enough funds to complete college) | 1610 | 19.2 | 97 | 18.4 | χ²(2, 9648) = 10.344, p = 0.0056 |
| Some (but I probably will have enough funds) | 5133 | 61.1 | 295 | 56.1 | |
| None (I am confident that I will have sufficient funds) | 1657 | 19.7 | 134 | 25.5 | |
| Gender | | | | | |
| Female | 6152 | 67.7 | 371 | 67.3 | χ²(2, 9648) = 1.4937, p = 0.474 |
| Male | 2844 | 31.3 | 177 | 32.1 | |
| Non-binary/Other | 97 | 1.1 | 3 | 0.5 | |
| Race/Ethnicity | | | | | |
| Asian | 1875 | 20.8 | 92 | 16.7 | χ²(5, 9648) = 87.63, p < 0.001 |
| Black/African American | 1522 | 16.9 | 168 | 30.6 | |
| Latine | 2573 | 28.5 | 111 | 20.2 | |
| Multiple races | 651 | 7.2 | 60 | 10.9 | |
| Other race category | 188 | 2.1 | 13 | 2.4 | |
| White | 2216 | 24.6 | 106 | 19.3 | |
| First-Generation Status | | | | | |
| Non-first-generation students | 6033 | 74.7 | 423 | 82.8 | χ²(1, 9648) = 16.696, p < 0.001 |
| First-generation students | 2040 | 25.3 | 88 | 17.2 | |
| High School GPA | | | | | |
| A or A+ | 2625 | 29.0 | 198 | 36.1 | χ²(7, 9648) = 21.866, p = 0.003 |
| A− | 2624 | 29.0 | 166 | 30.3 | |
| B+ | 1986 | 21.9 | 108 | 19.7 | |
| B | 1305 | 14.4 | 59 | 10.8 | |
| B− | 328 | 3.6 | 12 | 2.2 | |
| C+ | 122 | 1.3 | 3 | 0.6 | |
| C | 58 | 0.6 | 2 | 0.4 | |
| D | 7 | 0.1 | 3 | 0.5 | |
| | n | Mean (SD) | n | Mean (SD) | t-test |
| Science Identity | 7855 | 54.3 (8.2) | 508 | 59.3 (7.7) | t(584.06) = 13.136, p < 0.001 |
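The chi-square tests in the sample-characteristics table above compare the distribution of each background variable across non-BUILD and BUILD students. The Python sketch below recomputes two of them from the printed counts: the gender rows (3 × 2 table) and the first-generation rows (2 × 2 table). It assumes the reported statistic is the ordinary Pearson chi-square. The helper names are hypothetical. The p-value helper uses the closed forms that exist only for 1 and 2 degrees of freedom, so it is not a general chi-square distribution function.

```python
import math
from typing import Sequence


def pearson_chi_square(table: Sequence[Sequence[float]]) -> float:
    """Uncorrected Pearson chi-square statistic for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of row and column.
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    return stat


def chi_square_p_value(stat: float, df: int) -> float:
    """Upper-tail p-value; this sketch only covers df = 1 and df = 2."""
    if df == 1:
        # P(chi2_1 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
        return math.erfc(math.sqrt(stat / 2.0))
    if df == 2:
        # The chi-square distribution with 2 df is exponential with mean 2.
        return math.exp(-stat / 2.0)
    raise ValueError("this sketch only handles df = 1 or df = 2")


# Rows are categories; columns are (non-BUILD, BUILD) counts copied from the table.
TABLES = {
    "Gender": [[6152, 371], [2844, 177], [97, 3]],
    "First-generation status": [[6033, 423], [2040, 88]],
}

for name, counts in TABLES.items():
    stat = pearson_chi_square(counts)
    df = (len(counts) - 1) * (len(counts[0]) - 1)
    print(f"{name}: chi2({df}) = {stat:.4f}, p = {chi_square_p_value(stat, df):.3g}")
```

Both recomputed statistics agree with the table (about 1.4937 for gender and 16.696 for first-generation status). The 2 × 2 value matches the statistic computed without Yates' continuity correction.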

| Time | Non-BUILD students intending to pursue a science-related career, N (%) | BUILD students intending to pursue a science-related career, N (%) | Chi-square test of outcome, non-BUILD vs. BUILD |
|---|---|---|---|
| 0 | 4266 (53.9) | 391 (76.5) | χ²(1, 9655) = 100.38, p < 0.001 |
| 1 | 3039 (58.4) | 291 (79.1) | χ²(1, 6068) = 61.36, p < 0.001 |
| 2 | 2346 (52.8) | 313 (81.3) | χ²(1, 4967) = 116.38, p < 0.001 |
| 3 | 1969 (47.4) | 313 (74.9) | χ²(1, 4705) = 114.43, p < 0.001 |
| 4 | 1075 (42.2) | 203 (71.5) | χ²(1, 2993) = 88.30, p < 0.001 |
| 5 | 495 (38.1) | 120 (75.5) | χ²(1, 1509) = 81.39, p < 0.001 |
| 6 | 168 (37.4) | 38 (62.3) | χ²(1, 528) = 13.81, p < 0.001 |
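The table above gives, at each time point, the percentage of each group intending to pursue a science-related career. The regression tables, by contrast, report odds ratios. To read the two on a common scale, the Python sketch below converts each pair of percentages into a percentage-point gap and a crude (unadjusted) odds ratio. These crude values are descriptive only. They ignore baseline intent, site, and the propensity-score weights, so they are not comparable to the adjusted estimates. The function names are hypothetical.

```python
# (non-BUILD %, BUILD %) intending a science-related career, copied from the table.
PCT = {
    0: (53.9, 76.5),
    1: (58.4, 79.1),
    2: (52.8, 81.3),
    3: (47.4, 74.9),
    4: (42.2, 71.5),
    5: (38.1, 75.5),
    6: (37.4, 62.3),
}


def odds(pct: float) -> float:
    """Convert a percentage into odds p / (1 - p)."""
    p = pct / 100.0
    return p / (1.0 - p)


def crude_odds_ratio(pct_non_build: float, pct_build: float) -> float:
    """Unadjusted odds ratio, BUILD relative to non-BUILD."""
    return odds(pct_build) / odds(pct_non_build)


for time, (non_build, build) in PCT.items():
    gap = build - non_build  # percentage-point difference
    print(f"Time {time}: gap = {gap:.1f} points, crude OR = {crude_odds_ratio(non_build, build):.2f}")
```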

| Variables in the Model | Model 1, Odds Ratio (Standard Error) | Model 2, Odds Ratio (Standard Error) | Model 3, Odds Ratio (Standard Error) | Model 4, Odds Ratio (Standard Error) |
|---|---|---|---|---|
| (Intercept) | 0.42 (1.09) *** | 0.41 (1.09) *** | 0.38 (1.09) *** | 0.42 (1.10) *** |
| BUILD | 8.44 (1.18) *** | 5.59 (1.19) *** | 5.09 (1.19) *** | 4.44 (1.21) *** |
| Intent to Pursue at Baseline | 9.48 (1.08) *** | 8.65 (1.08) *** | 8.52 (1.08) *** | 8.51 (1.08) *** |
| Time (Ref: Time 1) | | | | |
| Time 2 | 0.67 (1.06) *** | 0.66 (1.07) *** | 0.66 (1.07) *** | 0.66 (1.07) *** |
| Time 3 | 0.44 (1.07) *** | 0.41 (1.07) *** | 0.40 (1.07) *** | 0.41 (1.08) *** |
| Time 4 | 0.30 (1.08) *** | 0.26 (1.08) *** | 0.26 (1.09) *** | 0.30 (1.11) *** |
| Time 5 | 0.22 (1.10) *** | 0.21 (1.11) *** | 0.21 (1.11) *** | 0.22 (1.13) *** |
| Time 6 | 0.13 (1.16) *** | 0.13 (1.17) *** | 0.12 (1.17) *** | 0.11 (1.19) *** |
| Site (Ref: Site 1) | | | | |
| Site 2 | 1.30 (1.14) | 1.26 (1.14) | 1.25 (1.14) | 1.21 (1.15) |
| Site 3 | 2.29 (1.18) *** | 2.00 (1.19) *** | 2.10 (1.19) *** | 2.14 (1.19) *** |
| Site 4 | 0.97 (1.13) | 0.94 (1.13) | 0.90 (1.13) | 0.88 (1.13) |
| Site 5 | 1.87 (1.15) *** | 1.74 (1.15) *** | 1.74 (1.15) *** | 1.70 (1.15) *** |
| Site 6 | 1.26 (1.17) | 1.22 (1.17) | 1.19 (1.17) | 1.19 (1.17) |
| Site 7 | 1.14 (1.16) | 0.99 (1.17) | 0.94 (1.17) | 0.92 (1.18) |
| Site 8 | 1.42 (1.10) *** | 1.27 (1.10) * | 1.24 (1.10) * | 1.16 (1.10) |
| Site 9 | 2.30 (1.14) *** | 2.07 (1.14) *** | 2.10 (1.14) *** | 2.05 (1.14) *** |
| Site 10 | 1.74 (1.16) *** | 1.58 (1.17) ** | 1.60 (1.17) ** | 1.56 (1.17) ** |
| Site 11 | 2.70 (1.14) *** | 2.28 (1.14) *** | 2.19 (1.14) *** | 2.35 (1.15) *** |
| Research Experience | | 2.29 (1.07) *** | 2.16 (1.07) *** | 2.38 (1.08) *** |
| Have a Mentor | | | 1.36 (1.06) *** | 1.32 (1.06) *** |
| Scholarship Received | | | | 0.90 (1.06) |
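The odds-ratio tables report each estimate with a "standard error" close to 1 (for example, BUILD in Model 1 of the first table: 8.44 (1.18)). This pattern suggests the standard error of the log-odds coefficient was exponentiated along with the coefficient. That reading is an assumption, not something the source states. Under it, the Python sketch below recovers an approximate Wald 95% confidence interval, z statistic, and two-sided p-value from each printed pair. The function name is hypothetical. Because the inputs are rounded to two decimals, the results are approximate. The authors' models may also use a different reference distribution (the reference list includes Kenward & Roger, 1997, on small-sample inference), so these p-values need not match the table's significance stars exactly.

```python
import math


def or_wald_summary(odds_ratio: float, exp_se: float, z_crit: float = 1.96):
    """Approximate Wald CI, z statistic and two-sided p-value for an odds ratio.

    ASSUMPTION: exp_se is the standard error of the log-odds coefficient reported
    on the exponentiated scale, so log(exp_se) recovers the SE of log(OR).
    """
    beta = math.log(odds_ratio)   # coefficient on the log-odds scale
    se = math.log(exp_se)         # its standard error (under the assumption above)
    lower = math.exp(beta - z_crit * se)
    upper = math.exp(beta + z_crit * se)
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided standard-normal p-value
    return lower, upper, z, p


# BUILD odds ratios (SE) for Models 1-4 of the first odds-ratio table.
BUILD_ROW = [(8.44, 1.18), (5.59, 1.19), (5.09, 1.19), (4.44, 1.21)]

for model, (or_value, exp_se) in enumerate(BUILD_ROW, start=1):
    lo, hi, z, p = or_wald_summary(or_value, exp_se)
    print(f"Model {model}: OR = {or_value:.2f}, 95% CI [{lo:.2f}, {hi:.2f}], z = {z:.1f}, p = {p:.2g}")
```

The same function applies unchanged to the second odds-ratio table.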

| Variables in the Model | Model 1, Odds Ratio (Standard Error) | Model 2, Odds Ratio (Standard Error) | Model 3, Odds Ratio (Standard Error) | Model 4, Odds Ratio (Standard Error) |
|---|---|---|---|---|
| (Intercept) | 1.65 (1.21) ** | 1.60 (1.21) * | 1.38 (1.22) *** | 1.63 (1.25) * |
| BUILD | 2.36 (1.14) *** | 1.80 (1.14) *** | 1.65 (1.14) *** | 1.56 (1.16) ** |
| Intent to Pursue at Baseline | 7.66 (1.13) *** | 7.17 (1.13) *** | 6.94 (1.13) *** | 7.56 (1.15) *** |
| Time (Ref: Time 1) | | | | |
| Time 2 | 0.49 (1.09) *** | 0.45 (1.10) *** | 0.42 (1.10) *** | 0.39 (1.11) *** |
| Time 3 | 0.25 (1.10) *** | 0.20 (1.10) *** | 0.19 (1.11) *** | 0.17 (1.12) *** |
| Time 4 | 0.15 (1.11) *** | 0.11 (1.12) *** | 0.10 (1.12) *** | 0.13 (1.16) *** |
| Time 5 | 0.09 (1.14) *** | 0.08 (1.14) *** | 0.07 (1.15) *** | 0.08 (1.17) *** |
| Time 6 | 0.05 (1.19) *** | 0.04 (1.20) *** | 0.04 (1.20) * | 0.04 (1.24) *** |
| Site (Ref: Site 1) | | | | |
| Site 2 | 3.60 (1.56) ** | 3.36 (1.57) ** | 3.18 (1.59) | 3.13 (1.70) * |
| Site 3 | 1.83 (1.37) | 1.56 (1.38) | 1.69 (1.38) | 2.18 (1.44) * |
| Site 4 | 0.97 (1.24) | 1.03 (1.25) | 0.99 (1.25) | 1.08 (1.28) |
| Site 5 | 3.06 (1.67) * | 2.47 (1.71) | 2.75 (1.75) | 3.06 (1.83) |
| Site 6 | 1.53 (1.35) | 1.72 (1.36) | 1.66 (1.36) ** | 1.64 (1.40) |
| Site 7 | 0.61 (1.23) * | 0.57 (1.23) ** | 0.54 (1.24) | 0.48 (1.26) ** |
| Site 8 | 0.88 (1.18) | 0.85 (1.18) | 0.85 (1.19) ** | 0.78 (1.20) |
| Site 9 | 2.41 (1.32) ** | 2.41 (1.33) ** | 2.43 (1.33) | 2.46 (1.38) ** |
| Site 10 | 1.44 (1.28) | 1.38 (1.29) | 1.44 (1.29) ** | 1.57 (1.32) |
| Site 11 | 2.44 (1.23) *** | 2.06 (1.24) *** | 2.04 (1.24) *** | 2.00 (1.27) ** |
| Research Experience | | 2.54 (1.08) *** | 2.26 (1.09) *** | 2.94 (1.1) *** |
| Have a Mentor | | | 1.79 (1.08) | 1.77 (1.09) *** |
| Scholarship Received | | | | 0.75 (1.10) ** |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Srinivasan, J.; Cobian, K.P.; Ramos, H.V.; Christie, C.A.; Crespi, C.M.; Seeman, T. Examining Undergraduates’ Intentions to Pursue a Science Career: A Longitudinal Study of a National Biomedical Training Initiative. Educ. Sci. 2025, 15, 825. https://doi.org/10.3390/educsci15070825
