The Development and Validation of a K-12 STEM Engagement Participant Outcome Instrument

Abstract

1. Introduction

1.1. Importance of STEM Out-of-School Time Programming in Middle School

1.2. Examining Student STEM Outcomes

2. Conceptual Framework

2.1. STEM Interest

2.2. STEM Identity

2.3. STEM Self-Efficacy

2.4. 21st Century Skills

3. Methodological Frameworks

4. Methods

4.1. Instrumentation

4.2. Data Collection and Analysis

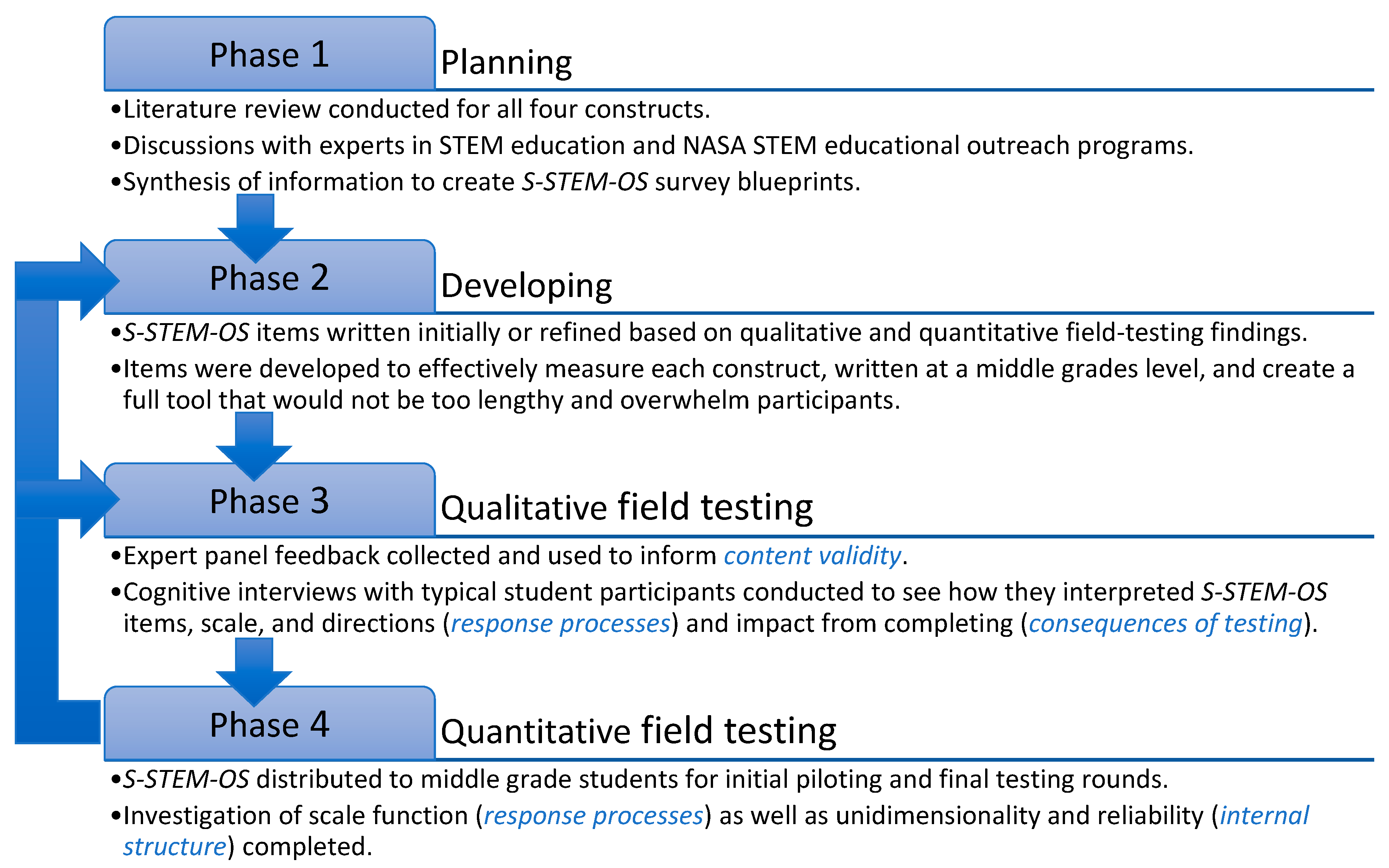

4.2.1. Phase 1: Planning for Survey Development

4.2.2. Phase 2: Developing Survey Items

4.2.3. Phase 3: Qualitative Field Testing

Content Validity Evidence

Response Processes Validity Evidence

Consequences of Testing Validity Evidence

4.2.4. Phase 4: Quantitative Field Testing

Response Processes Validity Evidence

- A minimum of 10 observations per category for stable rating scale structure estimates.

- Average category measures advance monotonically, suggesting that students choosing higher rating scale categories possess higher amounts of the latent trait being studied.

- An outfit mean-square (MNSQ) < 2.00 for rating scale categories signifies the level of randomness in data is not excessive, nor does it threaten the measurement system.

- Appropriate advancements in step calibrations between categories imply that participants are using each rating scale category uniquely and that each category is needed. The criteria are as follows: steps advance by at least 1.00 logit for a 5-point scale or 1.40 logits for a 4-point scale, and by less than 5.00 logits regardless of scale.
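The four guidelines above can be expressed as a simple checklist. The following is a minimal sketch, not the authors' analysis code: the `Category` container, the `check_rating_scale` function, and every number in the example scales are hypothetical, and in practice the category statistics would come from the output of Rasch software.

```python
from dataclasses import dataclass
from typing import List, Optional

# Minimum advance between adjacent step calibrations (logits), keyed by the
# number of rating scale categories, as stated in the guidelines above.
MIN_STEP_ADVANCE = {4: 1.40, 5: 1.00}
MAX_STEP_ADVANCE = 5.00


@dataclass
class Category:
    label: str
    count: int              # number of observations in this category
    avg_measure: float      # average person measure in logits
    outfit_mnsq: float      # category outfit mean-square
    step: Optional[float]   # step calibration into this category (None for the lowest)


def check_rating_scale(categories: List[Category]) -> dict:
    """Return a pass/fail flag for each of the four rating scale guidelines."""
    min_advance = MIN_STEP_ADVANCE[len(categories)]
    steps = [c.step for c in categories if c.step is not None]
    advances = [hi - lo for lo, hi in zip(steps, steps[1:])]
    return {
        "min_10_observations": all(c.count >= 10 for c in categories),
        "measures_advance": all(
            lo.avg_measure < hi.avg_measure
            for lo, hi in zip(categories, categories[1:])
        ),
        "outfit_below_2": all(c.outfit_mnsq < 2.00 for c in categories),
        "steps_acceptable": all(
            min_advance <= a < MAX_STEP_ADVANCE for a in advances
        ),
    }


# Hypothetical 5-point scale: too few SD responses and a narrow D-to-N step.
five_point = [
    Category("SD", 9, -1.20, 1.10, None),
    Category("D", 24, -0.40, 0.95, -2.00),
    Category("N", 40, 0.10, 0.90, -0.50),
    Category("A", 88, 0.90, 1.00, -0.33),
    Category("SA", 60, 2.10, 1.05, 2.83),
]

# Hypothetical 4-point scale (neutral category removed) that meets all guidelines.
four_point = [
    Category("SD", 14, -1.50, 1.20, None),
    Category("D", 40, -0.30, 0.90, -2.10),
    Category("A", 70, 1.00, 0.95, 0.00),
    Category("SA", 34, 2.40, 1.10, 2.10),
]

five_point_result = check_rating_scale(five_point)
four_point_result = check_rating_scale(four_point)
```

In this sketch the 5-point example fails the observation-count and step-calibration checks, while the 4-point example passes all four, which mirrors the kind of pattern that can motivate collapsing or removing a rating scale category.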

Internal Structure Validity Evidence

5. Results

5.1. Qualitative Field Testing

5.1.1. Content Validity Evidence

5.1.2. Response Processes Validity Evidence

5.1.3. Consequences of Testing Validity Evidence

5.2. Quantitative Field Testing

5.2.1. Response Processes Validity Evidence

5.2.2. Internal Structure Validity Evidence

6. Discussion

6.1. Using the S-STEM-OS

6.2. Limitations and Future Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ainley, M., Hidi, S., & Berndorff, D. (2002). Interest, learning, and the psychological processes that mediate their relationship. Journal of Educational Psychology, 94(3), 545.

- Alfred, M. V., Ray, S. M., & Johnson, M. A. (2019). Advancing women of color in STEM: An imperative for U.S. global competitiveness. Advances in Developing Human Resources, 21(1), 114–132.

- Allen, S., & Peterman, K. (2019). Evaluating informal STEM education: Issues and challenges in context. New Directions for Evaluation, 2019(161), 17–33.

- American Educational Research Association (AERA), American Psychological Association (APA) & National Council on Measurement in Education (NCME). (2014). Standards for educational and psychological testing. American Educational Research Association.

- American Museum of Natural History. (n.d.). Learn and teach. Available online: https://www.amnh.org/learn-teach (accessed on 22 October 2023).

- Andrich, D. (1978). A rating formulation for ordered response categories. Psychometrika, 43, 561–573.

- Archer, L., DeWitt, J., Osborne, J., Dillon, J., Willis, B., & Wong, B. (2010). “Doing” science versus “being” a scientist: Examining 10/11-year-old schoolchildren’s constructions of science through the lens of identity. Science Education, 94(4), 617–639.

- Archer, L., DeWitt, J., Osborne, J., Dillon, J., Willis, B., & Wong, B. (2013). ‘Not girly, not sexy, not glamorous’: Primary school girls’ and parents’ constructions of science aspirations. Pedagogy, Culture & Society, 21(1), 171–194.

- Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84(2), 191–215.

- Bandura, A., & Locke, E. A. (2003). Negative self-efficacy and goal effects revisited. Journal of Applied Psychology, 88(1), 87–99.

- Barron, B. (2006). Interest and self-sustained learning as catalysts of development: A learning ecology perspective. Human Development, 49(4), 193–224.

- Battelle for Kids. (2019). Battelle for Kids Framework for 21st century learning. Available online: https://www.battelleforkids.org/networks/p21/frameworks-resources (accessed on 22 October 2023).

- Blackmore, C., Vitali, J., Ainscough, L., Langfield, T., & Colthorpe, K. (2021). A review of self-regulated learning and self-efficacy: The key to tertiary transition in science, technology, engineering and mathematics (STEM). International Journal of Higher Education, 10(3), 169.

- Blotnicky, K. A., Franz-Odendaal, T., French, F., & Joy, P. (2018). A study of the correlation between STEM career knowledge, mathematics self-efficacy, career interests, and career activities on the likelihood of pursuing a STEM career among middle school students. International Journal of STEM Education, 5(1), 22.

- Boone, W. J., Townsend, J. S., & Staver, J. (2010). Using Rasch theory to guide the practice of survey development and survey data analysis in science education and to inform science reform efforts: An exemplar utilizing STEBI self-efficacy data. Science Education, 95(2), 258–280.

- Boss, S. (2019). It’s 2019. So why do 21st-century skills still matter? EdSurge. Available online: https://www.edsurge.com/news/2019-01-22-its-2019-so-why-do-21st-century-skills-still-matter (accessed on 22 October 2023).

- Boy Scouts of America. (n.d.). STEM merit badges. Available online: https://www.scouting.org/merit-badge-tips-guide/stem-merit-badges/ (accessed on 22 October 2023).

- Boyacı, Ş. D. B., & Atalay, N. (2016). A scale development for 21st century skills of primary school students: A validity and reliability study. International Journal of Instruction, 9(1), 133–148.

- Carlone, H. B., & Johnson, A. (2007). Understanding the science experiences of successful women of color: Science identity as an analytic lens. Journal of Research in Science Teaching, 44(8), 1187–1218.

- Carlone, H. B., Scott, C. M., & Lowder, C. (2014). Becoming (less) scientific: A longitudinal study of students’ identity work from elementary to middle school science. Journal of Research in Science Teaching, 51(7), 836–869.

- Dou, R., & Cian, H. (2022). Constructing STEM identity: An expanded structural model for STEM identity research. Journal of Research in Science Teaching, 59(3), 458–490.

- Dou, R., Hazari, Z., Dabney, K., Sonnert, G., & Sadler, P. (2019). Early informal STEM experiences and STEM identity: The importance of talking science. Science Education, 103, 623–637.

- Duncan, P. W., Bode, R. K., Lai, S. M., & Perera, S. (2003). Rasch analysis of a new stroke-specific outcome scale: The stroke impact scale. Archives of Physical Medicine and Rehabilitation, 84, 950–963.

- Godwin, A., Cribbs, J., & Kayumova, S. (2020). Perspectives of identity as an analytic framework in STEM education. In C. C. Johnson, M. Mohr-Schroeder, T. Moore, & L. English (Eds.), Handbook of research on STEM education (pp. 267–277). Routledge.

- Grimmon, A. S., Cramer, J., Yazilitas, D., Smeets, I., & De Bruyckere, P. (2020). Interest in STEM among children with a low socio-economic status: Further support for the STEM-CIS-instrument through the adapted Dutch STEM-LIT measuring instrument. Cogent Education, 7(1), 1745541.

- Habig, B., & Gupta, P. (2021). Authentic STEM research, practices of science, and interest development in an informal science education program. International Journal of STEM Education, 8(1), 1–18.

- Han, J., Kelley, T., & Knowles, J. G. (2021). Factors influencing student STEM learning: Self-efficacy and outcome expectancy, 21st century skills, and career awareness. Journal for STEM Education Research, 4(2), 117–137.

- Hava, K., & Koyunlu Ünlü, Z. (2021). Investigation of the relationship between middle school students’ computational thinking skills and their STEM career interest and attitudes toward inquiry. Journal of Science Education and Technology, 30(4), 484–495.

- Kang, H., Calabrese Barton, A., Tan, E., Simpkins, S., Rhee, H., & Turner, C. (2019). How do middle school girls of color develop STEM identities? Middle school girls’ participation in science activities and identification with STEM careers. Science Education, 103(2), 418–439.

- Kelley, T., Knowles, J. G., Han, J., & Sung, E. (2019). Creating a 21st century skills survey instrument for high school students. American Journal of Educational Research, 7(8), 583–590.

- Kim, A. Y., Sinatra, G. M., & Seyranian, V. (2018). Developing a STEM identity among young women: A social identity perspective. Review of Educational Research, 88(4), 589–625.

- Komarraju, M., & Nadler, D. (2013). Self-efficacy and academic achievement: Why do implicit beliefs, goals, and effort regulation matter? Learning and Individual Differences, 25, 67–72.

- Köller, O., Baumert, J., & Schnabel, K. (2001). Does interest matter? The relationship between academic interest and achievement in mathematics. Journal for Research in Mathematics Education, 32(5), 448–470.

- Krapp, A., & Lewalter, D. (2001). Development of interests and interest-based motivational orientations: A longitudinal study in school and work settings. In S. Volet, & S. Järvelä (Eds.), Motivation in learning contexts: Theoretical advances and methodological implications (pp. 201–232). Elsevier.

- Krishnamurthi, A., Bevan, B., Rinehart, J., & Coulon, V. R. (2013). What afterschool STEM does best. Afterschool Matters, 18, 42–49. Available online: https://files.eric.ed.gov/fulltext/EJ1016823.pdf (accessed on 22 October 2023).

- Krupa, E. E., Bostic, J. D., & Shih, J. C. (2019). Validation in mathematics education: An introduction to quantitative measures of mathematical knowledge: Researching instruments and perspectives. In J. Bostic, E. Krupa, & J. Shih (Eds.), Quantitative measures of mathematical knowledge. Routledge.

- Lavi, R., Tal, M., & Dori, Y. D. (2021). Perceptions of STEM alumni and students on developing 21st century skills through methods of teaching and learning. Studies in Educational Evaluation, 70, 101002.

- Linacre, J. M. (1994). Sample size and item calibration stability. Rasch Measurement Transactions, 7(4), 328.

- Linacre, J. M. (2002a). Optimizing rating scale category effectiveness. Journal of Applied Measurement, 3(1), 85–106.

- Linacre, J. M. (2002b). What do infit and outfit, mean-square and standardized mean? Rasch Measurement Transactions, 16(2), 878.

- Linacre, J. M. (2022). Dimensionality: PCAR contrasts and variances. Available online: https://www.winsteps.com/winman/principalcomponents.htm (accessed on 22 October 2023).

- Liu, X. (2010). Using and developing measurement instruments in science education: A Rasch modeling approach. Information Age.

- Luo, T., So, W. W. M., Wan, Z. H., & Li, W. C. (2021). STEM stereotypes predict students’ STEM career interest via self-efficacy and outcome expectations. International Journal of STEM Education, 8(1), 36.

- Maiorca, C., Roberts, T., Jackson, C., Bush, S., Delaney, A., Mohr-Schroeder, M. J., & Soledad, S. Y. (2021). Informal learning environments and impact on interest in STEM careers. International Journal of Science and Mathematics Education, 19(1), 45–64.

- Master, A., Cheryan, S., & Meltzoff, A. N. (2017). Social group membership increases STEM engagement among preschoolers. Developmental Psychology, 53(2), 201.

- May, T. A., Bright, D., Fan, Y., Fornaro, C., Koskey, K. L., & Heverin, T. (2023). Development of a college student validation survey: A design-based research approach. Journal of College Student Development, 64(3), 370–377.

- McCombs, J., Whitaker, A., & Yoon, P. (2017). The value of out-of-school time programs. Rand Corporation report PE-267-WF. Rand Corporation.

- McDonald, M. M., Zeigler-Hill, V., Vrabel, J. K., & Escobar, M. (2019). A single-item measure for assessing STEM identity. Frontiers in Education, 4, 78.

- Merriam, S. B. (2009). Qualitative research: A guide to design and implementation. Jossey-Bass.

- Milner, D. I., Horan, J. J., & Tracey, T. J. (2014). Development and evaluation of STEM interest and self-efficacy tests. Journal of Career Assessment, 22(4), 642–653.

- Mitsopoulou, A. G., & Pavlatou, E. A. (2021). Factors associated with the development of secondary school students’ interest towards STEM studies. Education Sciences, 11(11), 746.

- NASA. (2020). NASA strategy for STEM engagement. Available online: https://www.nasa.gov/sites/default/files/atoms/files/nasa-strategy-for-stem-2020-23-508.pdf (accessed on 22 October 2023).

- National Academies of Sciences, National Academy of Engineering, and Medicine [NASEM]. (2007). Rising above the gathering storm: Energizing and employing America for a brighter economic future. The National Academies Press.

- National Academies of Sciences, National Academy of Engineering, and Medicine [NASEM]. (2021). Call to action for science education: Building opportunity for the future. The National Academies Press.

- National Assessment of Educational Progress [NAEP]. (2013). Trends in academic progress: Reading 1971–2012, mathematics 1973–2012. Available online: https://nces.ed.gov/nationsreportcard/subject/publications/main2012/pdf/2013456.pdf (accessed on 22 October 2023).

- National Assessment of Educational Progress [NAEP]. (2015). 2015 mathematics & reading assessments. The Nation’s Report Card. Available online: https://www.nationsreportcard.gov/reading_math_2015/#?grade=4 (accessed on 22 October 2023).

- National Assessment of Educational Progress [NAEP]. (2020). NAEP long-term trend assessment results: Reading and mathematics. The Nation’s Report Card. Available online: https://www.nationsreportcard.gov/ltt/?age=9 (accessed on 22 October 2023).

- National Assessment of Educational Progress [NAEP]. (2023). NAEP long-term trend assessment results: Reading and mathematics. The Nation’s Report Card. Available online: https://www.nationsreportcard.gov/highlights/ltt/2023/ (accessed on 22 October 2023).

- National Center for Education Statistics [NCES]. (2019). 2019 NAEP mathematics and reading assessments: Highlighted results at grades 4 and 8 for the nation, states, and districts. Available online: https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2020012 (accessed on 22 October 2023).

- National Research Council (NRC). (2010). Assessing 21st century skills. (D. H. Hill Jr. Library). National Academies Press. Available online: https://catalog.lib.ncsu.edu/catalog/NCSU2499658 (accessed on 22 October 2023).

- National Research Council (NRC). (2015). Identifying and supporting productive STEM programs in out-of-school settings. The National Academies Press.

- National Science and Technology Council Committee on STEM Education [NSTC]. (2018). Charting a course for success: America’s strategy for STEM education. National Science and Technology Council. Available online: https://trumpwhitehouse.archives.gov/wp-content/uploads/2018/12/STEM-Education-Strategic-Plan-2018.pdf (accessed on 22 October 2023).

- National Science Foundation. (2022). The state of US science and engineering 2022. Available online: https://ncses.nsf.gov/pubs/nsb20221/u-s-and-global-stem-education-and-labor-force (accessed on 22 October 2023).

- Nelson, A. G., Goeke, M., Auster, R., Peterman, K., & Lussenhop, A. (2019). Shared Measures for evaluating common outcomes of informal STEM education experiences. New Directions for Evaluation, 161, 59–86.

- Nugent, G., Barker, B., Grandgenett, N., & Adamchuk, V. I. (2010). Impact of robotics and geospatial technology interventions on youth STEM learning and attitudes. Journal of Research on Technology in Education, 42(4), 391–408.

- Ok, G., & Kaya, D. (2021). The relationship between middle school students’ levels of 21st century learning skills and their interest in STEM career. Acta Didactica Napocensia, 14(2), 333–345.

- Paul, K. M., Maltese, A. V., & Svetina Valdivia, D. (2020). Development and validation of the role identity surveys in engineering (RIS-E) and STEM (RIS-STEM) for elementary students. International Journal of STEM Education, 7, 1–17.

- Pearson, J., Giacumo, L. A., Farid, A., & Sadegh, M. (2022). A systematic multiple studies review of low-income, first-generation, and underrepresented, STEM-degree support programs: Emerging evidence-based models and recommendations. Education Sciences, 12(5), 333.

- Porter, S. R., Whitcomb, M. E., & Weitzer, W. H. (2004). Multiple surveys of students and survey fatigue. New Directions for Institutional Research, 121, 63–73.

- Pressick-Kilborn, K., & Walker, R. (2002). The social construction of interest in a learning community. Research on Sociocultural Influences on Motivation and Learning, 2, 153–182.

- Rasch, G. (1980). Probabilistic models for some intelligence and attainment tests. (Copenhagen, Danish Institute for Educational Research), with foreword and afterword by B.D. Wright. The University of Chicago Press.

- Renninger, K. A., Ewen, L., & Lasher, A. K. (2002). Individual interest as context in expository text and mathematical word problems. Learning and Instruction, 12(4), 467–490.

- Robinson, K. A., Lee, Y.-K., Bovee, E. A., Perez, T., Walton, S. P., Briedis, D., & Linnenbrink-Garcia, L. (2019). Motivation in transition: Development and roles of expectancy, task values, and costs in early college engineering. Journal of Educational Psychology, 111(6), 1081–1102.

- Sakellariou, C., & Fang, Z. (2021). Self-efficacy and interest in STEM subjects as predictors of the STEM gender gap in the US: The role of unobserved heterogeneity. International Journal of Educational Research, 109, 101821.

- Science Club for Girls. (2022). Transforming the face of STEM. Available online: https://www.scienceclubforgirls.org/ (accessed on 22 October 2023).

- Scott, E. E., Wenderoth, M. P., & Doherty, J. H. (2020). Design-based research: A methodology to extend and enrich biology education research. Life Sciences Education, 19(3), 1–12.

- SETI Institute. (2023). Education and outreach. Available online: https://www.seti.org/seti-educators (accessed on 22 October 2023).

- Severino, L., DeCarlo, M. J., Sondergeld, T. A., Ammar, A., & Izzetoglu, M. (2018). A validation study of an eighth grade reading comprehension assessment. Research in Middle Level Education, 41(10), 1–16.

- Sharp, L. M., & Frankel, J. (1983). Respondent burden: A test of some common assumptions. Public Opinion Quarterly, 47(1), 36–53.

- Singer, A., Montgomery, G., & Schmoll, S. (2020). How to foster the formation of STEM identity: Studying diversity in an authentic learning environment. International Journal of STEM Education, 7(1), 1–12.

- Smith, E. V. (2002). Understanding Rasch measurement: Detecting and evaluating the impact of multidimensionality using item fit statistics and principal components analysis of residuals. Journal of Applied Measurement, 3, 205–231.

- Smithsonian Science Education Center. (2023). Transforming K-12 education through science in collaboration with communities across the globe. Available online: https://ssec.si.edu/ (accessed on 22 October 2023).

- Society for Science. (2023). Creating access and opportunities for students and teachers. Available online: https://www.societyforscience.org/ (accessed on 22 October 2023).

- Sondergeld, T. A. (2020). Shifting sights on STEM education instrumentation development: The importance of moving validity evidence to the forefront rather than a footnote. School Science and Mathematics Journal, 120(5), 259–261.

- Sondergeld, T. A., & Johnson, C. C. (2014). Using Rasch measurement for the development and use of affective assessments in science education research. Science Education, 98(4), 581–613.

- Sondergeld, T. A., & Johnson, C. C. (2019). Development and validation of a 21st Century Skills assessment: Using an iterative multi-method approach. School Science and Mathematics Journal, 119(6), 312–326.

- Staus, N. L., Lesseig, K., Lamb, R., Falk, J., & Dierking, L. (2020). Validation of a measure of STEM interest for adolescents. International Journal of Science and Mathematics Education, 18(2), 279–293.

- Stewart, J., Henderson, R., Michaluk, L., Deshler, J., Fuller, E., & Rambo-Hernandez, K. (2020). Using the social cognitive theory framework to chart gender differences in the developmental trajectory of STEM self-efficacy in science and engineering students. Journal of Science Education and Technology, 29(6), 758–773.

- Su, R., Rounds, J., & Armstrong, P. I. (2009). Men and things, women and people: A meta-analysis of sex differences in interests. Psychological Bulletin, 135(6), 859–884.

- Teasdale, R. M. (2022). How do you define success? Evaluative criteria for informal STEM education. Visitor Studies, 25(2), 163–184.

- van Aalderen-Smeets, S. I., Walma van der Molen, J. H., & Xenidou-Dervou, I. (2018). Implicit STEM ability beliefs predict secondary school students’ STEM self-efficacy beliefs and their intention to opt for a STEM field career. Journal of Research in Science Teaching, 56(4), 465–485.

- van Laar, E., van Deursen, A. J. A. M., van Dijk, J. A. G. M., & de Haan, J. (2017). The relation between 21st Century skills and digital skills: A systematic literature review. Computers in Human Behavior, 72, 577–588.

- Voogt, J., Erstad, O., Dede, C., & Mishra, P. (2013). Challenges to learning and schooling in the digital networked world of the 21st Century. Journal of Computer Assisted Learning, 29, 403–413.

- Wang, X. (2013). Why students choose STEM majors: Motivation, high school learning, and postsecondary context of support. American Educational Research Journal, 50(5), 1081–1121.

- Willis, G. B., & Artino, A. R., Jr. (2013). What do our respondents think we’re asking? Using cognitive interviewing to improve medical education surveys. Journal of Graduate Medical Education, 5(3), 353–356.

- Wright, B. D. (1992). Point-biserial correlations and item fits. Rasch Measurement Transactions, 5(4), 174.

- Wu, F., Fan, W., Arbona, C., & de la Rosa-Pohl, D. (2020). Self-efficacy and subjective task values in relation to choice, effort, persistence, and continuation in engineering: An expectancy-value theory perspective. European Journal of Engineering Education, 45(1), 151–163.

| Construct | Operational Definition |
|---|---|
| STEM Identity | An individual’s perception of themselves as a STEM-capable person and their perception of their potential in STEM educational pursuits and STEM careers (Godwin et al., 2020). In other words, how individuals “see” themselves (or not) as a person in STEM. |
| STEM Self-Efficacy | An individual’s belief in their own capabilities to achieve certain outcomes (Bandura, 1977). For STEM self-efficacy specifically, a relationship exists between the level of self-efficacy and an individual’s motivation to learn (Luo et al., 2021), likelihood to choose a STEM major in college (e.g., Wang, 2013), and decision to choose a STEM career path (e.g., Blotnicky et al., 2018). In other words, self-efficacy is related to the confidence a person has in their ability to do STEM. |
| STEM Interest | A person’s interest in STEM discipline(s) overall is key to their motivation to learn, as well as a predictor of the likelihood of individuals pursuing STEM careers (e.g., Blotnicky et al., 2018). Key components of STEM interest include not only the “spark” but also the process of sustained engagement with STEM over time. |
| 21st Century Skills | Essential skills for engaging in STEM learning and careers. Examples of these skills include collaboration, creativity, problem-solving, critical thinking, communication, technological literacy, innovation, leadership, productivity, adaptability, and accountability. |

| Phase / Validity Evidence | Instrumentation | Sample | Analysis |
|---|---|---|---|
| Phase 1: Planning | | | |
| No Validity Evidence | NA | NA | NA |
| Phase 2: Developing | | | |
| No Validity Evidence | NA | NA | NA |
| Phase 3: Qualitative Field Testing | | | |
| Content: Do survey items align with the construct (theoretical trait)? | Expert Panel Review Open-Ended Survey | 4 Experts | Content Analysis |
| Response Processes: Do participants interpret the survey as intended? | Cognitive Interview Protocols | 7 Middle School Students | Content Analysis |
| Consequences of Testing: How are participants impacted by completing the survey? | | | |
| Phase 4: Quantitative Field Testing | | | |
| Response Processes: Do participants interpret the survey as intended? | S-STEM-OS | 51 Initial Pilot; 158 Final Testing | Rasch Polytomous Rating Scale Analysis |
| Internal Structure: Are measures unidimensional? Do the measures produce replicable outcomes? | S-STEM-OS | 51 Initial Pilot; 158 Final Testing | Rasch Psychometric Analysis |

| Type of Issue | Construct | Original Item | Summary of Student Feedback and Researcher Actions | Revised Item(s) |
|---|---|---|---|---|
| Wording Clarity Needed | STEM Self-Efficacy | I am confident to try out new ideas on my own in STEM. | Students struggled with independence in this item. The notion of doing STEM alone was confusing as they suggested most STEM activities were led or supervised by a teacher or other adult. The item was revised. | I am confident about trying out new STEM ideas. |
| Multiple Components in Item | STEM Interest | I like to read or watch videos about STEM when I am not in school. | Students noted a distinct difference between reading and watching STEM content. Thus, this double-barreled item was broken into two distinct items. | I like to read about STEM when I am not in school. I like to watch videos about STEM when I am not in school. |
| Confusion Interferes with Ability to Answer | STEM Identity | Other students in my class think I do well in STEM. | Students were adamant that they did not know what other students thought about them in terms of their STEM abilities. As such, this item was removed. | NA (item eliminated) |

| Construct | Run (# Items), Scale Used | Rating Scale Guidelines | | | |
|---|---|---|---|---|---|
| | | 10+ Observations per Category | Measures Advance | Outfit MNSQ < 2.0 | Step Calibrations Acceptable |
| STEM Identity | Initial Pilot (5 items), 5-point (SD, D, N, A, SA) | No (SD = 9) | Met | Met | No (D→N = 0.17) |
| STEM Identity | Final Field Testing (5 items), 4-point (SD, D, A, SA) | Met | Met | Met | Met |
| STEM Self-Efficacy | Initial Pilot (8 items), 5-point (SD, D, N, A, SA) | No (SD = 7) | Met | Met | Met |
| STEM Self-Efficacy | Final Field Testing (5 items), 4-point (SD, D, A, SA) | Met | Met | Met | Met |
| STEM Interest | Initial Pilot (10 items), 5-point (SD, D, N, A, SA) | Met | Met | Met | No (D→N = 0.86) (N→A = 0.39) (A→SA = 0.56) |
| STEM Interest | Final Field Testing (6 items), 4-point (SD, D, A, SA) | Met | Met | Met | Met |
| 21st Century Skills | Initial Pilot (8 items), 5-point (SD, D, N, A, SA) | No (SD = 7) | Met | Met | No (D→N = 0.86) |
| 21st Century Skills | Final Field Testing (7 items), 4-point (SD, D, A, SA) | Met | Met | Met | Met |

| Psychometric Indices (Guidelines) | STEM Identity | STEM Interest | STEM Self-Efficacy | 21st Century Skills |
|---|---|---|---|---|
| Reliability (<0.70 = Poor; 0.70 = Acceptable; 0.80 = Good; 0.90 = Excellent) | | | | |
| Person | | | | |
| Initial Pilot | 0.59 | 0.78 | 0.75 | 0.76 |
| Final Field Testing | 0.82 | 0.83 | 0.81 | 0.82 |
| Item | | | | |
| Initial Pilot | 0.95 | 0.92 | 0.95 | 0.87 |
| Final Field Testing | 0.99 | 0.99 | 0.99 | 0.96 |
| Separation (<1.50 = Poor; 1.50 = Acceptable; 2.00 = Good; 3.00 = Excellent) | | | | |
| Person | | | | |
| Initial Pilot | 1.20 | 1.88 | 1.74 | 1.77 |
| Final Field Testing | 2.12 | 2.21 | 2.09 | 2.15 |
| Item | | | | |
| Initial Pilot | 4.44 | 3.40 | 4.21 | 2.54 |
| Final Field Testing | 9.54 | 9.23 | 9.58 | 5.03 |
| Item Point-Biserial (Negative value = Unacceptable; Positive value = Acceptable) | | | | |
| Initial Pilot | All Positive | All Positive | All Positive | All Positive |
| Final Field Testing | All Positive | All Positive | All Positive | All Positive |
| Item Fit (MNSQ >2.00 = Degrades measure; <0.50 or >1.50 = Less productive, not degrading; 0.50 to 1.50 = Productive for measure) | | | | |
| Initial Pilot | All Productive | Item 9 (Infit = 2.56, Outfit = 2.13) | Item 4 (Outfit = 2.22) | All Productive |
| Final Field Testing | All Productive | Item 2 (Infit = 1.51) | All Productive | All Productive |
| Unidimensionality (RPCA <50% = Examine further; ≥50% = Good) | | | | |
| Initial Pilot | 54.9% | 52.0% | 53.9% | 47.4% |
| Final Field Testing | 61.4% | 62.5% | 64.5% | 50.8% |
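The reliability and separation rows in the table above are two views of the same quantity. The sketch below assumes the standard Rasch relation between a separation index G and reliability R, namely R = G² / (1 + G²). The source does not state this formula, and the function and constant names are hypothetical. The cutoffs are taken from the guideline labels in the table, and the separation values are the person separation values reported for final field testing.

```python
def reliability_from_separation(separation: float) -> float:
    """Reliability implied by a Rasch separation index G: R = G^2 / (1 + G^2)."""
    return separation ** 2 / (1 + separation ** 2)


# Guideline cutoffs as labeled in the table, listed from highest to lowest.
RELIABILITY_CUTOFFS = [(0.90, "Excellent"), (0.80, "Good"), (0.70, "Acceptable")]
SEPARATION_CUTOFFS = [(3.00, "Excellent"), (2.00, "Good"), (1.50, "Acceptable")]


def rate(value: float, cutoffs) -> str:
    """Return the label of the highest cutoff that the value meets, else 'Poor'."""
    for floor, name in cutoffs:
        if value >= floor:
            return name
    return "Poor"


# Person separation values reported for final field testing.
person_separation = {
    "STEM Identity": 2.12,
    "STEM Interest": 2.21,
    "STEM Self-Efficacy": 2.09,
    "21st Century Skills": 2.15,
}

for construct, g in person_separation.items():
    r = reliability_from_separation(g)
    print(f"{construct}: separation {g:.2f} ({rate(g, SEPARATION_CUTOFFS)}), "
          f"implied reliability {r:.2f} ({rate(r, RELIABILITY_CUTOFFS)})")
```

Under this assumed relation, the implied reliabilities round to the person reliabilities reported in the table (0.82, 0.83, 0.81, and 0.82), so the two sets of guideline labels agree for these values.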

| Construct Item Number and Stem | Measure in Logits (SE) | Measure Overlap | Keep/Remove Decision | Item Removal Explanations |
|---|---|---|---|---|
| STEM Identity (5 items) | | | | |
| 4. I see myself working in a STEM job someday. | 1.35 (0.16) | | Keep | While some items overlapped in measure, all items were deemed different enough in conceptual meaning to maintain all in this construct. |
| 3. My friends think I do well in STEM. | 0.36 (0.17) | | Keep | |
| 5. I think I will do well in high school STEM classes. | 0.23 (0.18) | | Keep | |
| 2. My teachers think I do well in STEM. | −0.38 (0.21) | | Keep | |
| 1. My parents think I do well in STEM. | −1.56 (0.30) | | Keep | |
| STEM Interest (10 items) | | | | |
| 4. I like to watch videos about STEM when I am not in school. | 0.88 (0.12) | | Keep | Item 9 was removed due to misfit and student confusion reported in cognitive interviews. Items 3 and 4 focused on engagement with STEM media and were statistically similar in measure. Thus, item 3 was removed because more students from the cognitive interviews suggested “reading” about STEM outside of school seemed abnormal to them. Three items related to “STEM problem-solving” were statistically similar in measure (2, 5, 8). Only the more general item (8) was maintained for a more parsimonious construct. |
| 3. I like to read about STEM when I am not in school. | 0.70 (0.12) | | Remove | |
| 7. I discuss STEM with friends and/or family. | 0.51 (0.13) | | Keep | |
| 10. I often think of STEM activities to try out. | 0.41 (0.13) | | Keep | |
| 9. I have done a STEM camp, club, or competition. | 0.00 (0.14) | | Remove | |
| 8. I enjoy solving STEM problems. | −0.23 (0.16) | | Keep | |
| 6. I want to increase my STEM knowledge as much as possible. | −0.39 (0.17) | | Keep | |
| 2. I enjoy figuring out how things work. | −0.45 (0.17) | | Remove | |
| 5. I enjoy the challenge of doing STEM activities. | −0.61 (0.19) | | Remove | |
| 1. I enjoy learning about STEM. | −0.81 (0.21) | | Keep | |
| STEM Self-Efficacy (8 items) | | | | |
| 5. Other students ask me for help with STEM activities. | 2.22 (0.21) | | Keep | Items 1, 6, 7, and 8 were all considered to address similar content of student perception of their “STEM ability”, and all of these items overlapped in terms of item measure. Thus, items 1, 6, and 8 were removed to produce a more parsimonious construct. Item 7 was maintained to keep a more general ability item focused on “school”. |
| 3. I am confident about trying out new STEM ideas. | 0.12 (0.19) | | Keep | |
| 4. I understand how STEM is used in jobs. | 0.11 (0.19) | | Keep | |
| 6. I receive good grades on STEM activities in school. | −0.29 (0.20) | | Remove | |
| 7. I understand STEM concepts we discuss in school. | −0.33 (0.20) | | Keep | |
| 2. I get excited about doing STEM projects. | −0.46 (0.21) | | Keep | |
| 1. I am good at STEM. | −0.64 (0.22) | | Remove | |
| 8. I do well in school STEM activities. | −0.73 (0.22) | | Remove | |
| 21st Century Skills (8 items) | | | | |
| 7. When a STEM project or task does not go as planned, I can think of other ways to accomplish it. | 0.56 (0.22) | | Keep | While many items in this construct were statistically similar in measure, as shown by overlap, only one item was removed (2). This item focused on “problem-solving”, a component of 21st century skills, but also addressed in other constructs of the survey. |
| 1. I can think of creative STEM ideas. | 0.51 (0.22) | | Keep | |
| 3. I am able to talk about my STEM ideas. | 0.51 (0.22) | | Keep | |
| 2. I am able to solve STEM problems. | 0.31 (0.23) | | Remove | |
| 8. I am able to be the leader of a team working on a STEM activity. | 0.25 (0.23) | | Keep | |
| 6. I am able to complete a STEM project or task by its due date. | 0.20 (0.24) | | Keep | |
| 5. I am a good team member when I work on STEM activities in a group. | −1.08 (0.31) | | Keep | |
| 4. I can use the internet to get the information I need for a STEM project. | −1.28 (0.33) | | Keep | |
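The table above reports item measures with standard errors and notes where items overlapped in measure, but it does not state the rule used to judge overlap. As a hedged illustration only, the sketch below assumes one common convention: two items overlap when their intervals of measure ± k·SE intersect. The multiplier `k`, the function names `intervals_overlap` and `overlapping_pairs`, and the choice of k = 1 are all assumptions, not the authors' documented procedure. The data are the initial pilot STEM Identity measures from the table.

```python
from itertools import combinations


def intervals_overlap(m1: float, se1: float, m2: float, se2: float,
                      k: float = 1.0) -> bool:
    """Return True if [m1 - k*se1, m1 + k*se1] intersects [m2 - k*se2, m2 + k*se2].

    Assumption: overlap is judged on measure +/- k standard errors; the
    source does not specify its criterion.
    """
    lo1, hi1 = m1 - k * se1, m1 + k * se1
    lo2, hi2 = m2 - k * se2, m2 + k * se2
    return lo1 <= hi2 and lo2 <= hi1


def overlapping_pairs(items: dict[int, tuple[float, float]],
                      k: float = 1.0) -> list[tuple[int, int]]:
    """List every pair of item numbers whose intervals intersect."""
    return [
        (a, b)
        for (a, (ma, sa)), (b, (mb, sb)) in combinations(items.items(), 2)
        if intervals_overlap(ma, sa, mb, sb, k)
    ]


# Initial pilot STEM Identity items: item number -> (measure in logits, SE).
stem_identity = {
    4: (1.35, 0.16),
    3: (0.36, 0.17),
    5: (0.23, 0.18),
    2: (-0.38, 0.21),
    1: (-1.56, 0.30),
}

print(overlapping_pairs(stem_identity, k=1.0))
```

A wider multiplier (for example k = 2) flags more pairs, so the choice of k should be fixed before item removal decisions are made. Conceptual distinctness, as described in the explanations column, remains a separate judgment.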

Item Statistics

| Construct Item Number and Stem | Measure in Logits (SE) | Infit (MNSQ) | Outfit (MNSQ) | Point-Biserial |
|---|---|---|---|---|
| STEM Identity (5 items) | | | | |
| 4. I see myself working in a STEM job someday. | 2.92 (0.17) | 1.13 | 1.01 | 0.75 |
| 5. I think I will do well in high school STEM classes. | −0.12 (0.15) | 1.06 | 1.05 | 0.71 |
| 3. My friends think I do well in STEM. | −0.46 (0.15) | 1.13 | 1.12 | 0.69 |
| 2. My teachers think I do well in STEM. | −0.89 (0.16) | 0.83 | 0.81 | 0.73 |
| 1. My parents think I do well in STEM. | −1.44 (0.16) | 0.88 | 0.84 | 0.70 |
| STEM Interest (6 items) | | | | |
| 2. I like to watch videos about STEM when I am not in school. | 1.87 (0.14) | 1.51 | 1.37 | 0.55 |
| 4. I discuss STEM with friends and/or family. | 1.12 (0.13) | 1.23 | 1.21 | 0.66 |
| 6. I often think of STEM activities to try out. | 0.15 (0.12) | 1.00 | 1.06 | 0.72 |
| 5. I enjoy solving STEM problems. | −0.46 (0.13) | 0.73 | 0.69 | 0.82 |
| 3. I want to increase my STEM knowledge as much as possible. | −0.99 (0.13) | 0.85 | 0.82 | 0.80 |
| 1. I enjoy learning about STEM. | −1.69 (0.14) | 0.77 | 0.75 | 0.81 |
| STEM Self-Efficacy (5 items) | | | | |
| 4. Other students ask me for help with STEM activities. | 3.13 (0.18) | 1.39 | 1.23 | 0.65 |
| 3. I understand how STEM is used in jobs. | −0.35 (0.16) | 1.08 | 1.04 | 0.71 |
| 2. I am confident about trying out new ideas on my own in STEM. | −0.54 (0.16) | 0.91 | 0.94 | 0.73 |
| 5. I understand STEM concepts we discuss in school. | −0.82 (0.16) | 0.68 | 0.66 | 0.76 |
| 1. I get excited about doing STEM projects. | −1.41 (0.17) | 1.04 | 1.00 | 0.66 |
| 21st Century Skills (7 items) | | | | |
| 2. I am able to talk about my STEM ideas. | 1.04 (0.13) | 1.14 | 1.14 | 0.64 |
| 1. I can think of creative STEM ideas. | 0.37 (0.13) | 0.93 | 0.92 | 0.68 |
| 5. I am able to complete a STEM project or task by its due date. | 0.30 (0.13) | 1.01 | 1.01 | 0.70 |
| 7. I am able to be the leader of a team working on a STEM activity. | 0.30 (0.13) | 1.14 | 1.12 | 0.71 |
| 6. When a STEM project or task does not go as planned, I can think of other ways to accomplish it. | −0.20 (0.14) | 0.94 | 0.89 | 0.67 |
| 4. I am a good team member when I work on STEM activities in a group. | −0.47 (0.14) | 0.90 | 0.85 | 0.72 |
| 3. I can use the internet to get the information I need for a STEM project. | −1.36 (0.16) | 0.89 | 0.83 | 0.58 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

May, T.A.; Johnson, C.C.; Harold, S.; Walton, J.B. The Development and Validation of a K-12 STEM Engagement Participant Outcome Instrument. Educ. Sci. 2025, 15, 377. https://doi.org/10.3390/educsci15030377

May TA, Johnson CC, Harold S, Walton JB. The Development and Validation of a K-12 STEM Engagement Participant Outcome Instrument. Education Sciences. 2025; 15(3):377. https://doi.org/10.3390/educsci15030377

Chicago/Turabian StyleMay, Toni A., Carla C. Johnson, Sera Harold, and Janet B. Walton. 2025. "The Development and Validation of a K-12 STEM Engagement Participant Outcome Instrument" Education Sciences 15, no. 3: 377. https://doi.org/10.3390/educsci15030377

APA StyleMay, T. A., Johnson, C. C., Harold, S., & Walton, J. B. (2025). The Development and Validation of a K-12 STEM Engagement Participant Outcome Instrument. Education Sciences, 15(3), 377. https://doi.org/10.3390/educsci15030377