1. Introduction

During the past 100 years, simulators have come to play an increasing role in learning and instruction in professional training. Beginning in fields such as aviation and healthcare, simulators have been used in diverse settings, including, for example, vocational training (Braunstein et al., 2022), business education (Beranič & Heričko, 2022) and the training of mariners (Sellberg et al., 2022). In recent years, simulations have also made their way into the regular educational system through the use of virtual labs, virtual microscopes and similar resources (Lantz-Andersson et al., 2019).

In the present study, we examine the work of operators and facilitators when orchestrating learning and instruction in the context of full-scale simulators in healthcare training. Such environments are often well resourced and have access to qualified technical and healthcare staff with extensive and varied experience of simulations. Successful execution of simulations requires continuous interventions by professionals, who monitor and evaluate the actions participants take as the simulation evolves (Wiig & Säljö, 2024). Our empirical focus is on the roles that operators and facilitators play in promoting meaningful and challenging learning opportunities in critical exercises in healthcare. More specifically, the analytical focus is on the joint agentic elements of participants and technologies in the co-creation, monitoring and development of a dynamic space for learning healthcare practices.

In research on healthcare simulations, the role of operators and facilitators is considered essential (see, e.g., Dieckmann et al., 2008; Husebø et al., 2024; Nordenström et al., 2023; Sawyer et al., 2016). In the literature, the terminology used for defining the roles and responsibilities of those who run simulations varies (cf. The Healthcare Simulation Standards of Best Practice™ (HSSOBP™) Operations, Decker et al., 2021). Usually, there is a division of labour in simulations, which implies that the operator is primarily responsible for “running the computer” (Seropian, 2003, p. 1698), while the facilitator is responsible for the exercises and for the instructional relevance of the debriefing. In practice, however, the roles are often not as clear-cut as this. A simulation is a dynamically evolving process in which technology, pedagogy and healthcare work are intertwined and difficult to separate. This means that operators, in addition to their responsibilities for the technology, often contribute by playing different roles in the scenario (doctor, patient and relatives), and facilitators intervene with technologies by supplementing decisions on how to use technology with healthcare-relevant input.

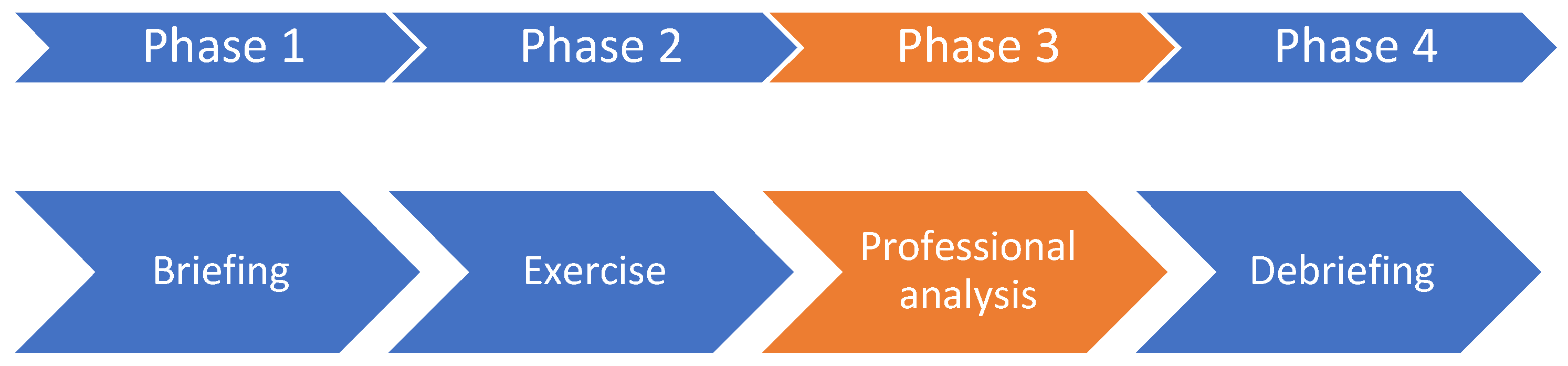

While simulator research most often describes the simulation process as a method organized in three stages (Briefing, Exercise and Debriefing), our interest lies in how operators and facilitators contribute through what is often thought of as the “backstage” work of professional evaluation and analysis in the learning environment. In addition to the three stages conventionally focused on, there is a critical phase (Phase 3 in Figure 1), during which teams of operators and facilitators analyse the simulation that has just taken place and prepare for the debrief with the students. The work they do here is both retrospective and prospective. During this phase, the operators and facilitators analyse and evaluate the simulation and, among other things, decide whether student performance lives up to expected standards.

Our research builds on the assumption that the teamwork of operators and facilitators, as well as the activities they engage in during simulations, should not be perceived as passive backstage activities of little relevance for the success of simulations as professionally relevant contexts for learning. On the contrary, their analytical gaze and their contributions to building and enacting know-how during simulation activities are decisive for the outcomes and for the learning experiences of students during all phases. An interesting feature of simulations is precisely that they provide rich opportunities for following a range of significant learner activities, including how learners physically engage in healthcare practices, how they document their conceptual understanding when diagnosing patients, and how they communicate with patients and amongst themselves. Thus, operators and facilitators are in a position where they can both follow the delivery of care in detail in real time and consider the solutions students suggest and the difficulties they run into. Consequently, over time, the team of operators and facilitators develops first-hand insights into how to guide and evaluate student activities during simulations.

Thus, this study aims to use video documentation of the concrete work of operators and facilitators during ongoing simulation training sessions to analyse the nature of their work and their contributions to the progress of simulations as environments for learning (Phase 3, Figure 1). Put differently, the novelty of this article lies in our focus on the analytical gaze that operators and facilitators develop as a team in order to support learning and the evolution of the simulation exercises. By examining the activities of these actors, our contribution to the field of educational sciences is to begin to better understand how professional know-how in healthcare is enacted and evaluated during the entire practice of simulations, and not only during debriefings. Analytically, we can investigate the agency that the different elements of the activity (the students, the instructors, i.e., operators and facilitators, and the technologies) exert in the co-construction and development of learning experiences.

In addition, one interesting element of simulations as contexts for professional learning that should not be overlooked is that they increase our capacity to understand the details of how students/learners appropriate and exercise their know-how in a performative sense. What students do and say in situ provides clues to their know-how in response to challenges in healthcare practices (Wiig & Säljö, 2024). Thus, from the perspective of evaluating learning, and in comparison with either paper-and-pencil tests or oral testing, simulators offer an environment for studying how participants enact their know-how in challenging situations, and this enactment can be documented, analysed and assessed in real time as well as retrospectively through video documentation (Chue et al., 2022; Sellberg et al., 2022). By analysing simulations, operators and facilitators (and researchers) gain access to the conceptual (explanations, diagnostic reasoning, etc.), physical (use of equipment, handling of patients, etc.) and collaborative actions that students engage in as they handle the challenges of the exercise.

Another significant feature of this mode of organizing learning and instruction is that it relies on close, continuous coordination between human agents and technologies, digital as well as physical. The assumption of this analysis is that the technologies exert agency in the sense that they build on the use of representations (documentation) and physical artifacts (manikins, medical equipment for interventions, etc.) that guide the work performed. These resources have a long history and have been designed on the basis of institutional experiences of providing care. Through their design and use, these resources contribute to the progression of the simulation and to the agency of all those involved. From a sociomaterial perspective, the agentic elements of technologies in social practices must be recognized as essential for understanding the nature of professional practices and for learning such practices (Fenwick, 2014; Hawley, 2021; Hutchins, 1995; Mäkitalo, 2016).

In the following, three questions will be addressed. How do operators and facilitators (a) describe, analyse and assess features of the students’ delivery of care, (b) monitor the progress of the scenario, including the function of the technology in these activities, and (c) comment on their own contributions during simulations? Thus, our focus is on the gaze of operators and facilitators as professional teams during simulations, the ways in which they build knowledge about simulations, and how they decide on ways to make simulations more relevant as learning events. The multilayered nature of this professional expertise implies that both the operators/facilitators (humans) and the technological resources serve as agents that co-determine the outcomes of simulations as contexts for learning.

3. Method and Data

The design of this study is inspired by interactional ethnography (Skukauskaite & Green, 2023), using video to document professional practices and discourses in healthcare. For this study, we observed two operator rooms in an advanced full-scale Nordic simulator laboratory to capture how the participants, operators (n = 6) and facilitators (n = 10), articulated their expertise as part of the simulator instruction. The data were generated in a compulsory simulation course in Basic Nursing. The recordings were made before the students’ first clinical placement in medical units at hospitals; in terms of clinical experience, the students were thus novices. Students were divided into groups of 6–10 participants, and they engaged in a total of six different scenarios over a two-day period. The scenarios focused on patients with deteriorating health conditions, such as hypoglycaemia, cardiac arrest, postoperative bleeding and angina pectoris. In each scenario, two or three students acted as nurses, while the other students in the group acted as family members or observers. The learning outcomes included assessing how students prioritized relevant nursing actions for clinical assessment, leadership and decision-making. The students were required to implement care procedures such as the airway, breathing, circulation, disability and exposure approach (ABCDE) to determine basic physiological values of the patient in pain, to exercise clear leadership by using Close Loop for effective communication, and to manage the healthcare intervention using Identification, Situation, Background, Analysis and Advice (ISBAR) to ensure quality and patient safety. Thus, what they were intended to learn were the mediational means used by professionals in specific care situations. The data presented in this article come from two scenarios, Heart Stop and Postoperative Bleeding.

The simulation follows the pattern described in Figure 1. Each simulation lasted about 2 h and started with a briefing session in which the facilitator introduced the patient case, the equipment necessary for handling the exercise and the learning goals. The simulated scenario lasted 15 min. After watching the video of their own performance (15 min), the student group engaged in a collective debriefing that lasted 50 min. This session was led by the facilitator following the PEARLS script.

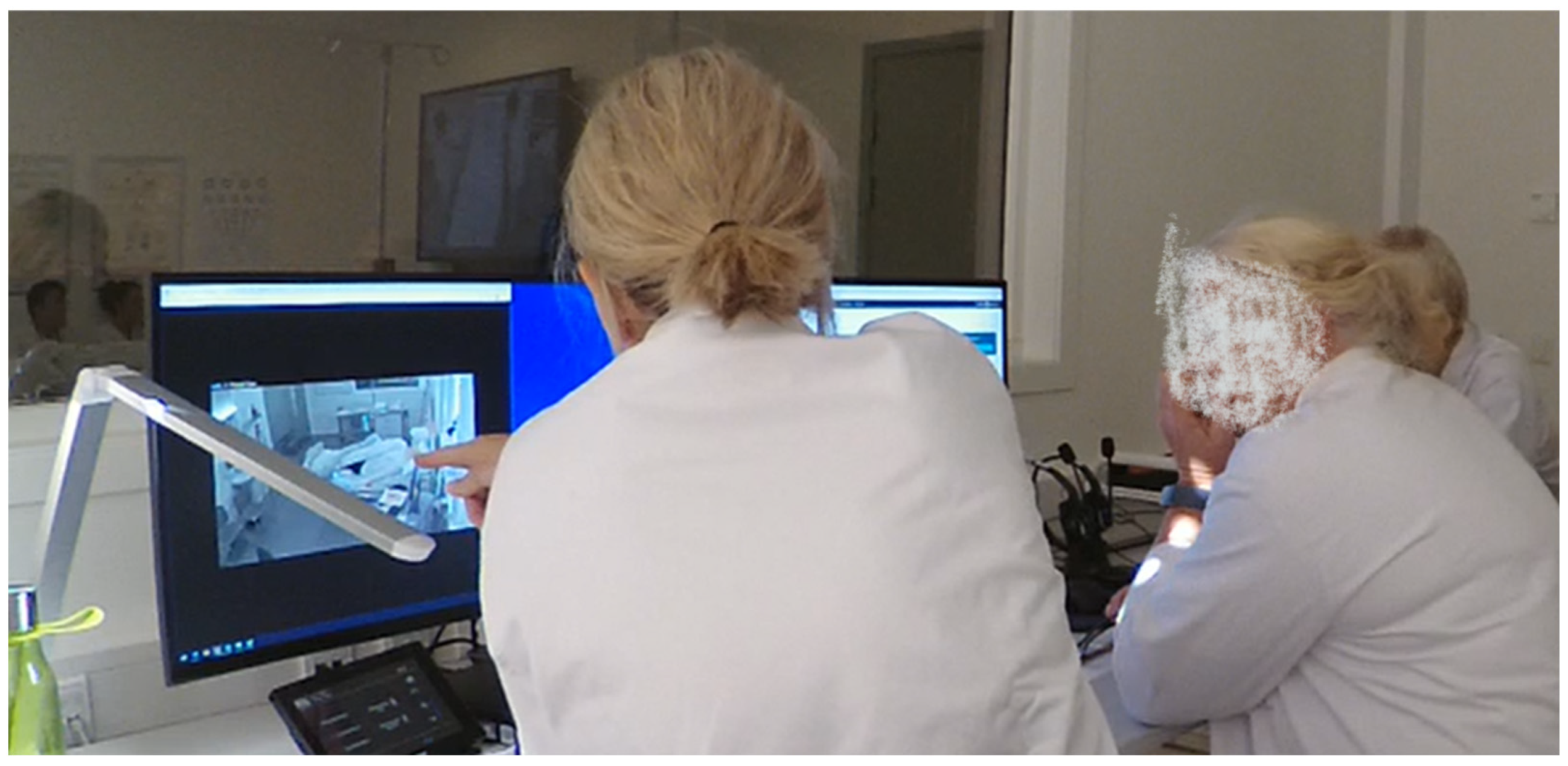

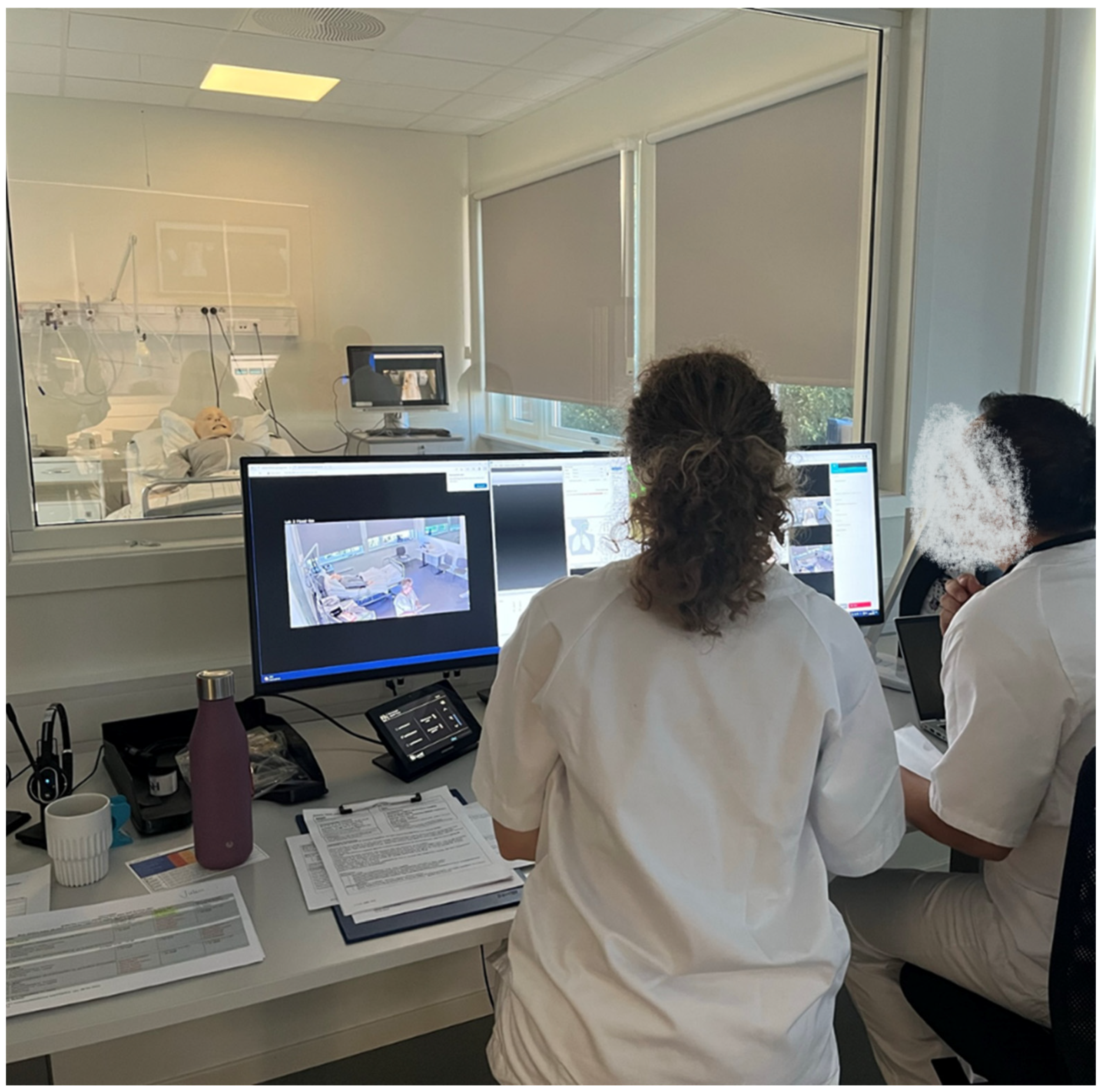

The data for this study capture the reflections and analyses by operators and facilitators (Phase 3) as they prepared for the debriefing (Phase 4). The object of inquiry in our research is the activity of these professional teams as they reflected on what they saw during Phase 2. The recordings were made in the control room and lasted approximately 15–30 min each.

In total, 30 conversations, comprising approximately 15 h of video recordings, were generated. In addition, observation logs, photos and other artifacts, such as assignments and students’ observation schemes, were collected. One camera with a wide-angle lens was placed in the corner of the operator’s control room to capture the digital equipment, such as screens and touch panels for steering the manikins, and the interactions between the operators and facilitators during their conversations. A separate microphone at the desk ensured high-quality sound recordings. See Figure 2.

It should also be mentioned that each simulator suite is equipped with three wide-angle video cameras and one separate microphone that document student work. One of the cameras captured the whole room, another camera was placed over the hospital bed to capture the detailed interactions between manikins and students, and one wide-angle camera was turned towards the observing students. The operators and facilitators used the 360-degree camera installed in the simulator suites to follow student activities. These recordings were the video documentation that operators and facilitators accessed as they analysed the simulator sessions. We should also point out that the operators and facilitators in this centre had professional backgrounds as nurses and extensive experience of care and simulations.

The project was approved by the National Department of Ethics. All participants volunteered and signed an informed consent form. All personal information has been anonymized.

4. Results

4.1. Analytical Procedures

The material has been analysed through the lens of a sociocultural conceptual framework and by using interaction analysis (Jordan & Henderson, 1995). For this analysis, all the conversations among operators and facilitators were transcribed and subjected to collaborative analysis. Data were analysed in several data sessions involving the authors, as well as in data sessions involving a larger research group, a network of researchers from different disciplines working with interaction analysis of video-recorded data (cf. Heath et al., 2010). Preliminary findings were also presented at the 2024 meeting of the American Educational Research Association, where we received valuable recommendations to further elaborate and fine-tune the analyses (Wiig & Säljö, 2024). The three episodes selected for this article were transcribed at an intermediate level of detail to allow researchers in professional learning and instruction environments in healthcare to follow the conversations and activities (Linell, 2011).

The video recordings, which documented the post-simulation analyses by operators and facilitators, were analysed, and three particularly interesting areas of participant concern emerged. These areas indicate that the teams of operators and facilitators were:

- (a) calibrating observations and interpretations made during the simulation exercises.

Here, the operators and facilitators describe and analyse central features of the students’ delivery of care in order to calibrate their individual observations and interpretations. The work done here serves to reach agreement on what has evolved during the simulation and to decide what needs to be communicated to students during the debrief. Assessing student performance and what has been demonstrated in terms of professional know-how are central features of this work.

- (b) monitoring the progress of the scenario as an instructional event.

This involves considering (1) the use of the technological tools available during the simulation exercise, (2) student activities and (3) the work of the operator in the operator room when monitoring and guiding the ongoing simulation. Evaluative statements are part of this activity as well.

- (c) commenting on their own contributions.

Operators discuss their experiences of acting as patients, doctors, etc., during the simulation exercise. This discussion includes the normative ambitions of operators and facilitators to improve the simulations as contexts for learning and reflections on how well they succeed in supporting student learning.

The nature of these three types of professional analyses during Phase 3 will now be described in some detail.

4.2. Calibrating Observations and Interpretations Made During the Simulation Exercises

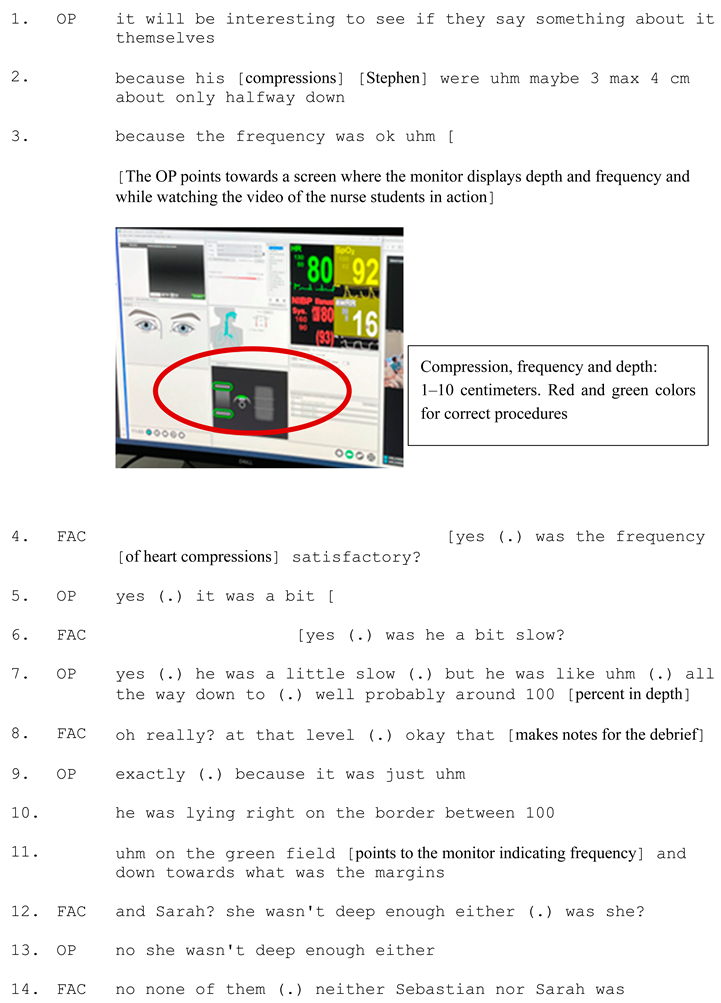

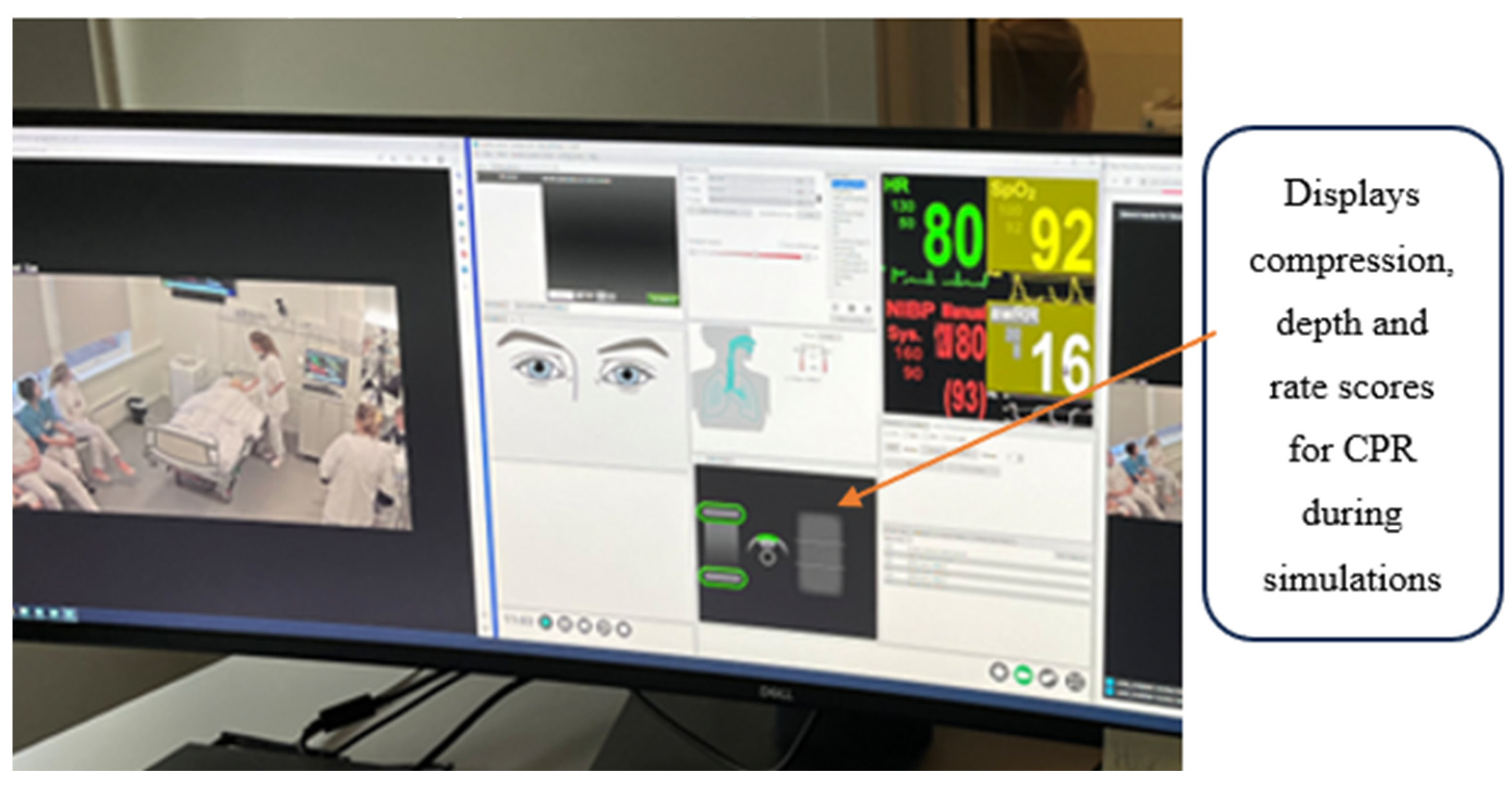

Delivery of care is a complex undertaking, and students engage in analytical, communicative and physical activities that operators and facilitators have to analyse and evaluate. The following excerpts have been collected from conversations between two experienced participants, one operator and one facilitator, during the Heart Stop scenario, a common simulation exercise. This is a stressful exercise for the students, in which they have to save the manikin’s life, while the operator and facilitator follow student work and look at the digital monitors providing data on the medical condition of the manikin/patient. One of the central issues of providing care in this emergency situation is the depth and intensity of the pressure exerted on the manikin’s chest. The operator can monitor the frequency (1–100%) and depth (1–10 cm) of the chest compressions through digital monitors of vital measures while simultaneously following the video documentation of student performance. On the monitors, compressions performed correctly with respect to pace and depth are displayed in green, and incorrect ones in red. In Excerpt 1, we see how students struggle during this exercise, and we also see the detailed observations that the operator and facilitator make as they review student performance. See Figure 3:

The nursing students struggled in different ways to perform correct cardiopulmonary resuscitation (CPR) on the manikin. In the first line, the operator says, “it will be interesting to see if they say something about it themselves”, thus anticipating the upcoming debriefing session and wondering whether the students are aware of their difficulties in performing CPR correctly. According to the operator’s in situ observations of the monitor for vital measures, “Stephen was maybe 3 max 4 cm, or only halfway down, but the frequency was ok” (lines 2 and 3).

Figure 4:

The facilitator asks two questions to address concerns based on her observations during the exercise: “Was the frequency satisfactory?” (line 4) and, more precisely, “was he a bit slow?” (line 6). Noticing differences, the operator confirms her observation that Stephen “was a little slow” but underlines that the compressions scored almost 100 percent in depth, as was shown by the monitor (line 7). Somewhat surprised, the facilitator replies, “Oh really! At that level!” (line 8), and she makes notes for the upcoming debriefing. The operator further explicates, by referring to the monitor: “he was right on the border between 100, uhm on the green field and down towards what was the margins” (lines 10 and 11). The analysis continues in lines 12–14, when they highlight how the other students acted: “and Sarah? She wasn’t deep enough either, was she?” “No, none of them.” These utterances demonstrate the ways in which the operator and the facilitator jointly negotiate their interpretations of the observations made. Together they calibrate and fill in missing information in their analyses of what is going on, drawing on the technical mediation of the activities provided by the medical monitors. Thus, the situated calibration is tightly connected with their professional experience in nursing, their professional vision (Goodwin, 1994), and the simultaneous consultation of technical devices. In this sense, the assemblage of operator/facilitator and technologies exerts agency in the situation, resulting in a detailed analysis of the progression of the provision of healthcare.

4.3. Monitoring the Progress of the Scenario as an Instructional Event

A simulation is a dynamic event. Even though there is a scenario based on a well-considered script, the activities of students are decisive for the progress and the relevance of the exercise. In response to the students’ initiatives and performance, operators and facilitators have to be creative to keep the exercise on track towards the intended learning goals. Excerpt 2 is from a conversation between a trainee operator (OPT), an experienced operator, and the facilitator in a scenario referred to as Postoperative Bleeding. The learning goals include engaging in systematic patient observation (ABCDE-procedure) to assess a deteriorating/critically ill patient with a bleeding wound, and to keep the patient alive.

The operators and the facilitator monitor the progression of the scenario as an instructional event and jointly reflect on its instructional relevance for the students. They discuss how to trigger students to perform expected procedures, such as checking blood pressure, in a postoperative scenario setting. They are well acquainted with the design of the scenario and the learning goals central to the exercise, but they are also continuously responsive to the nature and quality of the activities that the students engage in. In the following excerpt, the operators and the facilitator discuss how they could manipulate the technical features of the manikin with a bleeding wound and intervene through triggers to support students who showed a lack of understanding of what to do in a critical postoperative care situation (cf. Figure 5).

![Education 15 00347 i002]()

In this excerpt, the operators and the facilitator were concerned, even perplexed, that the students did not seem to notice the significance of blood pressure in a postoperative bleeding setting. Observing the students’ evident lack of knowledge regarding the importance of examining the manikin’s circulation (to check for possible blood loss), they jointly reflect on why the students failed to attend to this. One explanation is lack of knowledge on the part of the students; the alternative they consider is whether they should have manipulated the digital medical technologies to provide students with sharper clues to check vital measurements during the exercise. Thus, they turn their attention to the interrelationship between the simulation and their own contributions. The operator trainee calibrates her observation with the team: “you adjusted the pulse down to emphasize the thing about painkillers” (lines 2 and 4). The operator confirms, and the trainee explicates her reasoning by pointing to the monitor while saying, “then I thought, if one were to somehow highlight the issue of post-operative bleeding so maybe the heart rate could have been high and the blood pressure low to follow that track a bit more” (lines 5, 7, and 9). Suggesting how to highlight the instructional goal of the scenario, the team members discuss how to fine-tune the technical indicators to steer students towards understanding how to perform the correct procedures by checking the circulation of a bleeding patient. They realize that the students did not understand the point of the simulation script, underscoring that “we can make slightly more visible triggers on that” (line 12). Consequently, the professional team highlighted that, if the operator provides more obvious technical triggers on vital measures, the facilitator can bring this up in the post-simulation reflections; “this would be something to elaborate on during the debriefing” (line 13) in order to reach the learning goals. Commenting on the fact that students do not follow the to-do list of the ABCDE procedure, the operator admitted that the scenario and the instructional design do not properly support students’ performance because “they simply do not relate to C” (line 14), i.e., circulation.

The practical instructional problem for the operators and facilitator is how they can make the significant details of the scenario salient for the students through technical as well as human interventions. Their suggestion for providing a more relevant instructional event in the simulator suite is to redesign the situation by (a) having the operator provide clearer triggers, and (b) changing the technical parameters visible on the monitors in the simulation suites. This kind of “backstage” work thus involves using experience and know-how from current simulations, the technical equipment and professional clinical expertise to improve the instructional relevance. In this sense, the team members recognize problems with the agency of the various elements in the simulation: the scenario was not clear enough, and neither the technology nor their own interventions provided sufficient triggers. The team’s discussion amounts to a comprehensive and detailed analysis of their work in an instructional situation and of its apparent failure to promote professional learning. Furthermore, this analysis considered the many levels involved (scenario, technology and human intervention).

4.4. Commenting on Their Own Contributions as Participants When Acting as Patients

The final transcript is also from the Postoperative Bleeding scenario, but a new student group was involved. During the scenario, the students noticed a bleeding wound, but they did not respond by performing the correct clinical procedures in the care situation (similar to what happened in Excerpt 2). By externalizing their observations, the operators and the facilitator shared their reflections on their own contributions when acting as the patient in pain, as doctors, etc., during the simulation exercise, and they evaluated whether the students’ actions were satisfactory when responding to their prompts. We enter the conversation while the trainee operator, who acted as the doctor in the scenario, shares her observations of her own contributions and reflects on how to improve the scenario and the instruction. She suggests allowing the doctor to use some additional prompts related to the bleeding wound earlier in the script/scenario to make it more obvious to students that they have to check the blood-soaked bandage and perform the expected clinical assessment in the care situation. This opens a normative evaluation of the instructional relevance of the entire scenario, discussing how to provide triggers, changes in their role-play and how to fine-tune their own work to improve the simulation as a context for learning (see Figure 6):

Here, the operator trainee suggests that, in that doctor’s consultation, the operator, acting as the doctor, could have asked the students whether they should have examined the patient’s body a little earlier (line 1) during the simulation. The idea is to get students “on to that track” (line 3) of checking a surgical wound and starting efficient treatment when blood soaked through the bandage, which was a central part of the scenario (line 5). The instructors realize that the students did not understand the point of the script/scenario/exercise; after all, “they didn’t even think about it” (line 7). The facilitator, who observed the students during the exercise, confirms the evaluation and responds with a rhetorical question: “they didn’t think of bleeding at all, did they” (line 9).

This led the team into discussions about their ambitions to improve the simulation as a context for learning these clinical skills. Consequently, they agreed that, to improve the scenario, “we make a couple of such changes” (line 10) to explore “if we get some response on that (.) it will be interesting” (line 11). They argue that moving back and forth to improve the simulation is part of the continuous professional process of fine-tuning the simulation in relation to various student groups: “that’s how learning is” (line 12). Such revision is also necessary in order to prepare students for situations they will encounter in their future working lives. Creating connections to “a normal situation” (line 15) in a hectic, intensive hospital department, the trainee operator suggests several activities to revise the scenario so as to create realistic tasks and “to trigger them a little more to intervene”, since they should realize that “they have to give some liquid, take another blood pressure and call the doctor a little sooner” (line 15). The goal is to make the students aware of the urgency of this kind of situation. The facilitator agrees and underlines that, in the daily life of nurses, it is important to recognize that “if they are concerned that there is bleeding (.) they [should] call [the doctor] promptly” (line 16). Together, the team members agree on the necessity of making students realize the significance of immediate action in that type of situation. The professional agenda behind this argument is that the operators and facilitator are concerned that the students are acting “as if nothing is very urgent” (line 17) in the situation. The message they wanted to send is that, in situations of this kind, immediate action is expected.

The practical problem for the operators and the facilitator in this situation is similar to what we saw in Excerpt 2 above; here, however, they are commenting on their own contributions when acting as doctor, patient, etc., and considering whether these interventions provided enough information for the students to act on. Thus, the transcript documents how the experts evaluate and calibrate their professional understanding of how their interventions co-determine several aspects of students’ performance. This concern matters for how they, as operators/facilitator, initiate actions, notice variations in how individual students respond to them, and monitor the students’ actions to conclude whether they are too slow or inadequate. Thus, what they realize is that students’ performance of their know-how is, to some extent, relative to the operators’ and facilitators’ own contributions during simulations.

The simulator technology allows a simulation to be continuously monitored by experts who watch how students engage in meaning making while confronted with challenges. When they realized that the students did not perform the core elements of the scenarios in the expected manner, they discussed possible interventions to remedy the problems preventing students from understanding the situation. Thus, the simulator team used their clinical experience and know-how from emergency hospital wards, the technological equipment available and their expertise in simulations to improve students’ understanding/awareness of the nature of the situation, i.e., they want to get the students “on to that track” (line 3). In this sense, the team members recognize the problem of the agency of their own contributions when they performed the roles of doctor, relatives and patient in pain. They reflect on the point that students might know the correct procedures, but that the problem may lie in the relevance, or lack thereof, of the operators’ interventions. Put differently, the members of the team are uncertain whether they appropriately triggered students’ understanding of how to make use of their experiences and possible know-how in the simulated situation.

Taken together, the empirical analyses show the significance of the role that operators and facilitators played in how simulations unfolded. They were active at several levels: as evaluators of student performance, as analysts of how the scenario unfolded, as those responsible for the technology, and as evaluators of their own contributions to the learning event. The outcomes of this kind of work are integral to what the simulation is as a learning environment.

5. Discussion

In this study, we have scrutinized the contributions by operators and facilitators as professional teams as they engage in and develop simulations. We have shown how operators and facilitators reflect on the progress of the scenario, its instructional relevance and their own contributions to further develop simulations to create even more powerful learning settings. The goal has been to show that the “backstage” team activities are significant instances of professional learning while simultaneously improving the simulations’ instructional value.

The operators and facilitators reflected upon how the simulation, including the scenario, technology, student work and their own contributions, functions as a coherent context for student learning of professional know-how. The problem they encountered was that the agentic elements of the scenario, the technology and their own contributions, all of which are intended to put the students on track, were not working as expected. One of the issues they struggled with was that the technologies and care situations they exposed students to sometimes seemed to lack clarity or transparency for the students. When attempting to understand the roots of these problems, two contrasting interpretations emerged among operators and facilitators. One interpretation, offered when the simulation did not unfold according to expectations, was that the students did not understand what to do in the scenario and that this could lead to the difficulties observed. In that case, the conclusion was that students were not familiar with the expected care procedures. At the same time, when facing cases in which the simulation did not work according to expectations, they suggested that the simulation technology was not transparent for the students; that is, they considered whether the problem lay in the simulation and their own contributions rather than in student knowledge. Consequently, they considered the problems that they observed from two perspectives: either the students’ knowledge was below expectations, or the simulation did not work as intended because of the way in which the care situations were rendered by technology. These reflections on the nature of the problems they observed take the form of a series of considerations regarding the dilemma of how clear the technology must be when presenting challenges, what the operators and facilitators have to do when they intervene, and what students have to know in order to respond with relevant care procedures.

The ways in which the expert teams reflect on student learning illuminate the disparities in perception between a professional and a nursing student faced with a specific care situation. For instance, a bloody and wet bandage serves as a stimulus to which a professional, equipped with patient experience, clinical expertise and know-how, would instinctively respond in a predictable manner. However, a stimulus does not necessarily prompt one specific action; rather, the response and the resulting action are contingent upon what one is looking for and what one is able to see in the situation. Consequently, the operators and facilitators realized that the bloody bandage, although a potent stimulus for a professional, failed to trigger the anticipated responses from the novice students. These students de facto observed the bloody bandage but failed to grasp its significance within the scenario. In other words, their perception, understanding, reaction and enacted response differed markedly from those of experienced professionals confronted with a situation of this kind. Through their analyses, the experts considered how to modify the situation and the technology in ways that could recalibrate how students perceived it. They sought to design the simulation so that students would see the bloody bandage through a new lens, as a sign of a severe situation requiring an immediate response. This process underlines the profound transformative potential of simulations: reshaping not only what students know about healthcare in a general sense, but also how they are able to see what they are facing through a professional lens. In other words, the concern is how to improve students’ professional vision (Goodwin, 1994).

Operators and facilitators continuously discussed the effectiveness of scenarios and their relevance for professional learning, analysing student performance, their own contributions and the affordances of the technology in the learning situation. However, students’ agency in a situation depends on being trained to see and to understand the implications of what they see. Therefore, procedures and methodologies such as ISBAR, ABCDE or Close Loop must be trained not merely as instrumental techniques, but as ways of seeing something from a professional perspective. When reflecting on how to make students relate to the C (Circulation) of the ABCDE procedure (Excerpt 2), they wanted students to understand how and why systematically determining the basic physiological values of a patient in pain is vital and urgent. However, the simulation did not make students notice the significance of blood pressure in post-operative bleeding, and in response, the experts reflected on their own contributions and contemplated various strategies for improving the scenario to get students on track. Thus, our findings suggest that professional judgement and learning accumulate as teams of operators and facilitators continuously attend to a range of issues, including clinical, pedagogical, technological and simulation-specific ones.