Factors Influencing the Reported Intention of Higher Vocational Computer Science Students in China to Use AI After Ethical Training: A Study in Guangdong Province

Abstract

1. Introduction

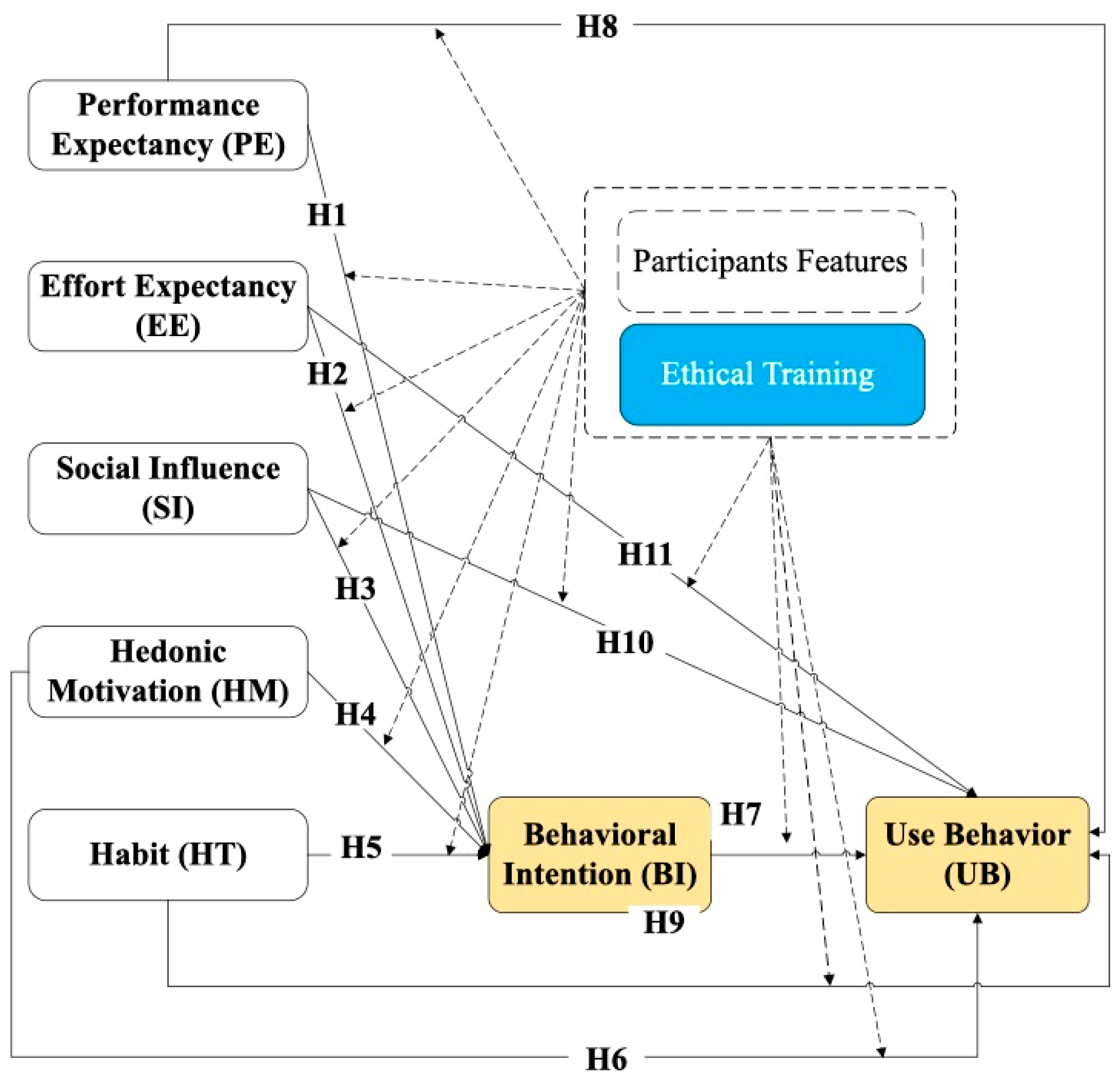

- RQ1: What are the factors that influence computer science students’ reported intention to use AI in college after ethical training?

- RQ2: What are the characteristics of participants’ attitudes towards ethical principles and responsibility?

2. Background and Literature Review

3. Research Design

3.1. Behavioral Intention

3.2. Use Behavior

3.3. Data Collection

3.3.1. Group Interview and Questionnaire

3.3.2. Project Assignment

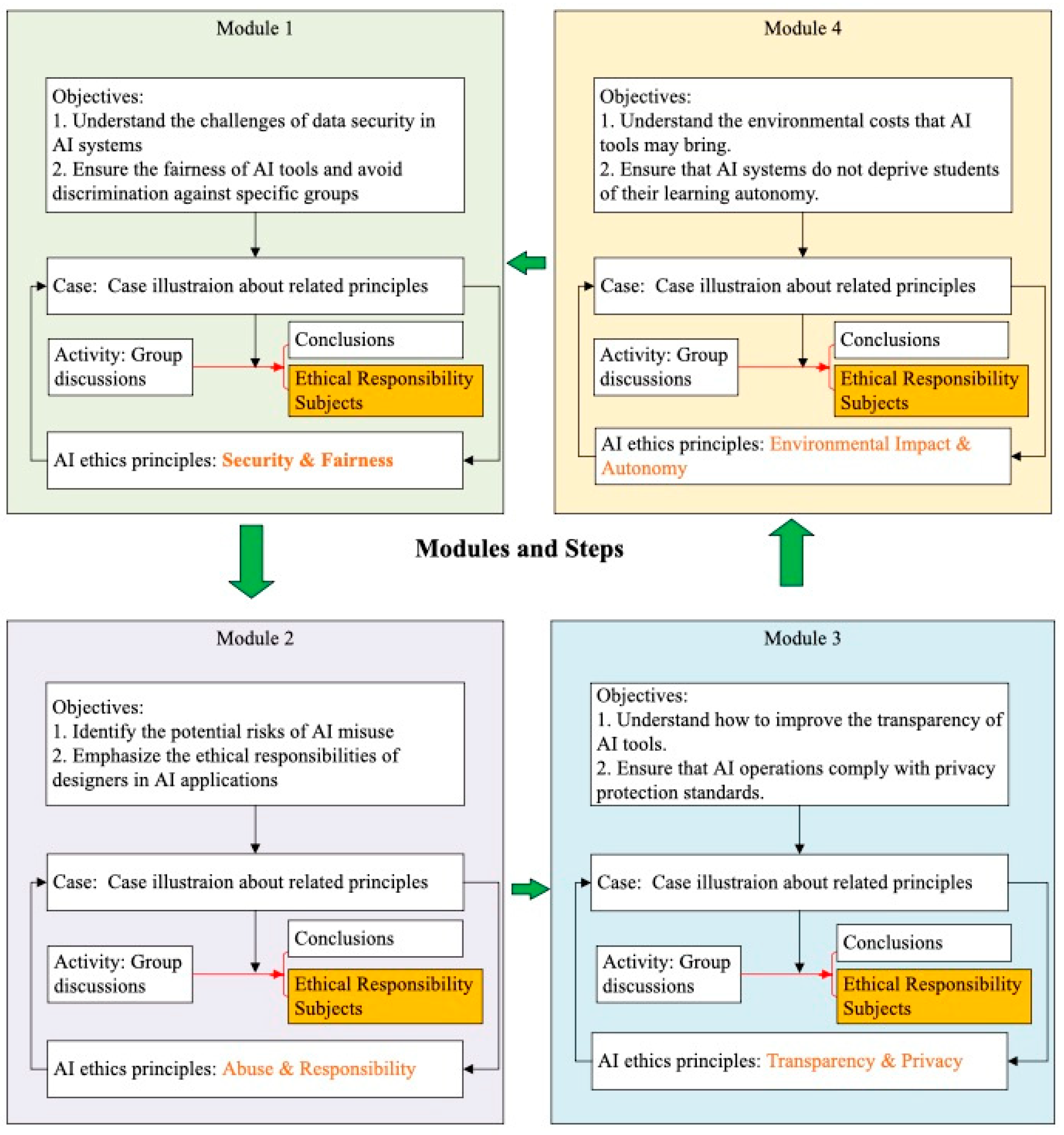

3.3.3. Ethics Training

4. Results

4.1. Characteristics of the Samples

4.2. Reliability and Validity of Constructs

4.3. Impact of Key Constructs

4.4. Discriminant Validity

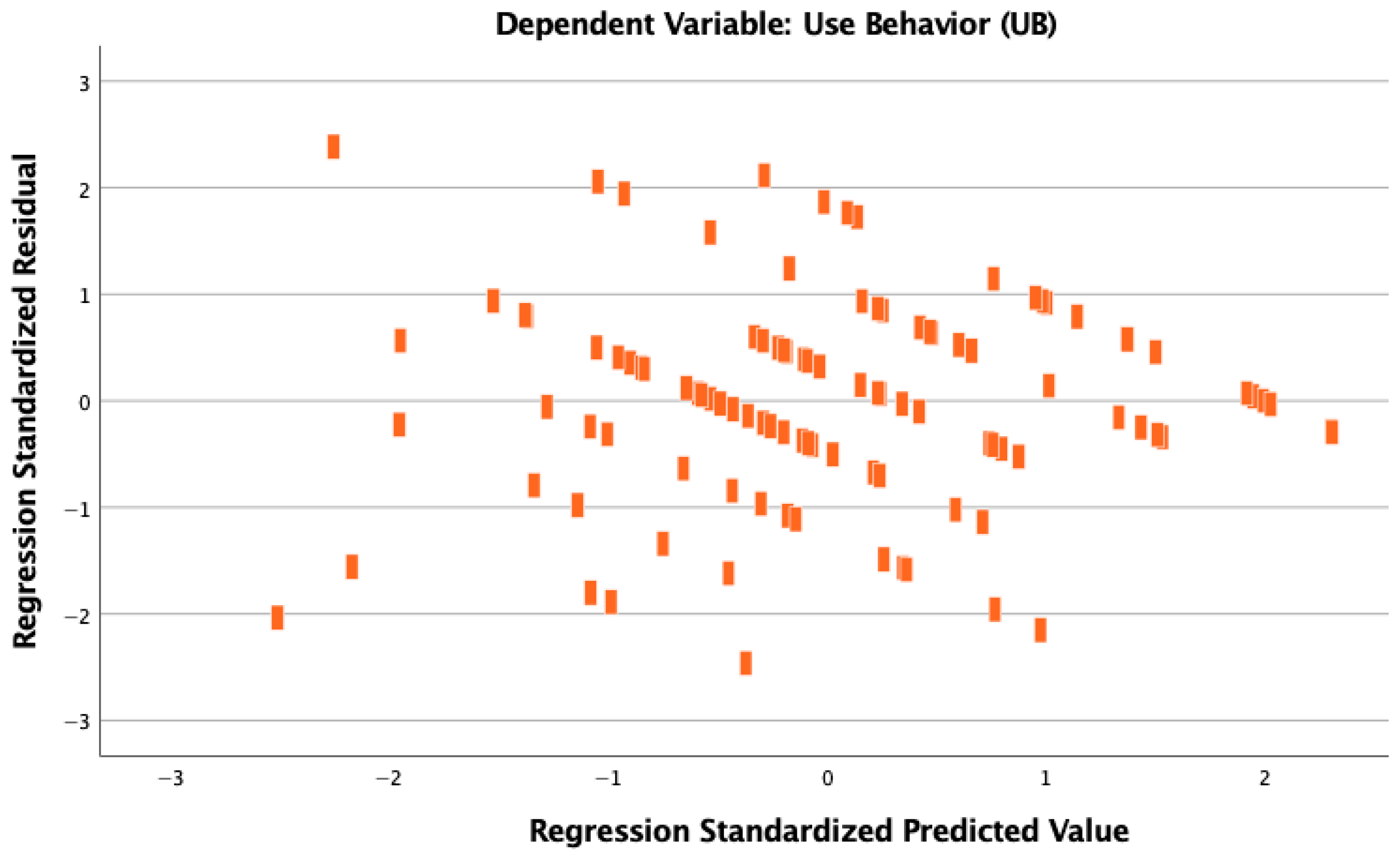

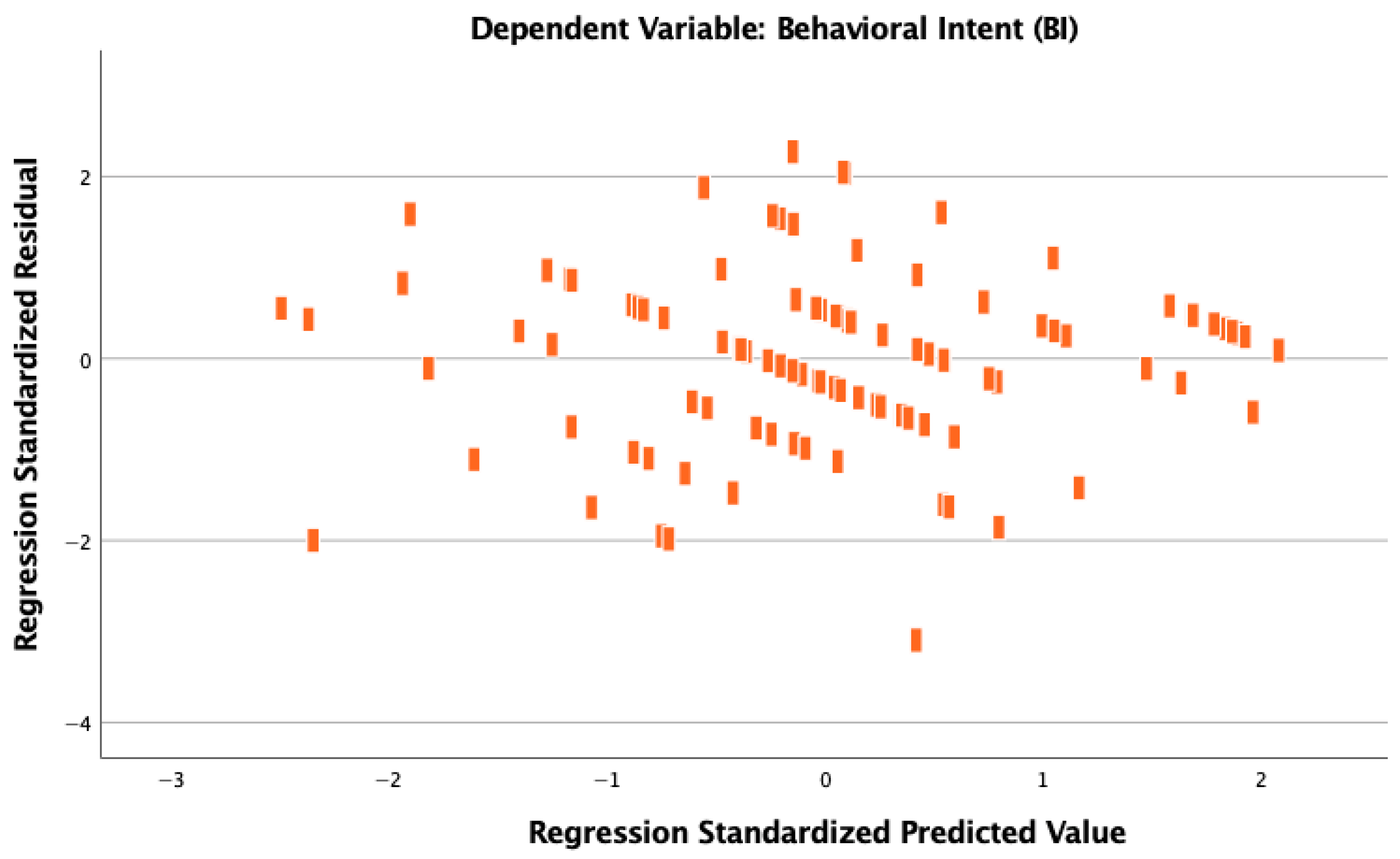

4.5. Regression Analyses

5. Discussion

5.1. Habit as a Predictor of Behavioral Intentions and Use Behavior

5.2. Role of Hedonic Motivation

5.3. Ethical Considerations and Lack of Influence from Traditional Predictors

5.4. Social Influence and Contextual Factors

6. Limitations and Future Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| PE | Performance Expectancy |
| EE | Effort Expectancy |
| SI | Social Influence |
| FC | Facilitating Conditions |
| HM | Hedonic Motivation |
| PV | Price Value |
| HT | Habit |
| BI | Behavioral Intention |
| UB | Use Behavior |
| LoC | Lines of Code |
| CC | Cyclomatic Complexity |
| CR | Composite Reliability |
| AVE | Average Variance Extracted |
| √AVE | Square Root of the AVE |
| UTAUT2 | Extended Unified Theory of Acceptance and Use of Technology |

Appendix A. Pre-Intervention Questionnaire

| Items |


Appendix B. Group Interview Questions

| Items |


Appendix C. Post-Intervention Questionnaire-1

| Items | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Performance Expectancy | | | | | |

Appendix D. Post-Intervention Questionnaire-2

| Items | 1 | 2 | 3 | 4 | 5 |

Who do you think should bear the main responsibility for ensuring that AI is used ethically?



| Variable | N | % |
|---|---|---|
| Gender | | |
| Female | 15 | 14.29% |
| Male | 90 | 85.71% |
| Age | | |
| 20 | 42 | 41.18% |
| 21 | 42 | 41.18% |
| 22 | 13 | 12.75% |
| 23 | 3 | 2.94% |
| 24 | 2 | 1.96% |
| Time Using AI | | |
| ≤1 month | 27 | 25.71% |
| >1 and ≤6 months | 34 | 32.38% |
| >6 and ≤12 months | 16 | 15.24% |
| >12 and ≤24 months | 23 | 21.90% |
| >24 months | 5 | 4.76% |
| Time Knowing of AI | | |
| ≤1 month | 4 | 3.81% |
| >1 and ≤6 months | 25 | 23.81% |
| >6 and ≤12 months | 25 | 23.81% |
| >12 and ≤24 months | 35 | 33.33% |
| >24 months | 16 | 15.24% |

| Construct | Item | Factor Loading | Cronbach’s Alpha | AVE | CR |
|---|---|---|---|---|---|
| Performance expectancy | PE1 | 0.664 | 0.708 | 0.45 | 0.71 |
| | PE2 | 0.684 | | | |
| | PE3 | 0.664 | | | |
| Effort expectancy | EE1 | 0.566 | 0.648 | 0.389 | 0.654 |
| | EE2 | 0.716 | | | |
| | EE3 | 0.578 | | | |
| Social influence | SI1 | 0.629 | 0.741 | 0.508 | 0.753 |
| | SI2 | 0.672 | | | |
| | SI3 | 0.822 | | | |
| Hedonic motivation | HM1 | 0.709 | 0.788 | 0.557 | 0.79 |
| | HM2 | 0.78 | | | |
| | HM3 | 0.748 | | | |
| Habit | HT1 | 0.758 | 0.84 | 0.644 | 0.844 |
| | HT2 | 0.842 | | | |
| | HT3 | 0.805 | | | |
| Behavioral intention | BI1 | 0.747 | 0.457 | 0.456 | 0.714 |
| | BI2 | 0.670 | | | |
| | BI3 | 0.602 | | | |
| Use behavior | UB1 | 0.821 | 0.724 | 0.512 | 0.744 |
| | UB2 | 0.830 | | | |
| | UB3 | 0.415 | | | |
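The AVE and CR columns in the table above can be reproduced from the factor loadings. The sketch below is illustrative only: it assumes the reported loadings are standardized and that the conventional formulas apply (AVE as the mean of the squared loadings, CR as the squared sum of loadings divided by that quantity plus the summed error variances). The source does not state how the values were computed, and the function names are hypothetical.

```python
from math import fsum

# Standardized factor loadings copied from the table above (two constructs shown).
LOADINGS = {
    "PE": [0.664, 0.684, 0.664],
    "HT": [0.758, 0.842, 0.805],
}


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings (assumed formula)."""
    return fsum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = fsum(loadings) ** 2
    # Error variance of a standardized indicator is taken as 1 - loading^2.
    error_variance = fsum(1 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


for name, loadings in LOADINGS.items():
    ave = average_variance_extracted(loadings)
    cr = composite_reliability(loadings)
    print(f"{name}: AVE={ave:.3f}, CR={cr:.3f}")
```

Under these assumptions, the computed values for PE and HT round to the figures reported in the table (0.45 and 0.71 for PE, 0.644 and 0.844 for HT).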

| Variable | PE | EE | SI | HM | HT | BI | UB |
|---|---|---|---|---|---|---|---|
| PE | 0.671 | | | | | | |
| EE | 0.283 * | 0.624 | | | | | |
| SI | 0.142 | 0.397 ** | 0.712 | | | | |
| HM | 0.248 * | 0.387 ** | 0.183 | 0.746 | | | |
| HT | 0.221 * | 0.346 ** | 0.463 ** | 0.377 ** | 0.802 | | |
| BI | 0.250 * | 0.418 ** | 0.358 ** | 0.488 ** | 0.639 ** | 0.675 | |
| UB | 0.057 | 0.374 ** | 0.484 ** | 0.375 ** | 0.589 ** | 0.550 ** | 0.716 |
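One common way to read a matrix like the one above is the Fornell-Larcker criterion: each diagonal entry (the square root of a construct's AVE) should exceed that construct's correlations with every other construct. The following is a minimal sketch of that check, assuming the diagonal holds the square roots of the AVE and the off-diagonal cells are correlations. The function name and data layout are hypothetical, and the significance stars are dropped.

```python
CONSTRUCTS = ["PE", "EE", "SI", "HM", "HT", "BI", "UB"]

# Lower-triangular matrix from the table above: diagonal = square root of AVE,
# off-diagonal = inter-construct correlations (significance stars omitted).
LOWER = [
    [0.671],
    [0.283, 0.624],
    [0.142, 0.397, 0.712],
    [0.248, 0.387, 0.183, 0.746],
    [0.221, 0.346, 0.463, 0.377, 0.802],
    [0.250, 0.418, 0.358, 0.488, 0.639, 0.675],
    [0.057, 0.374, 0.484, 0.375, 0.589, 0.550, 0.716],
]


def fornell_larcker_violations(names, lower):
    """Return (construct, other, correlation) triples where |r| >= sqrt(AVE)."""
    violations = []
    for i, row in enumerate(lower):
        for j in range(i):
            r = abs(row[j])
            # The correlation must stay below the diagonal of BOTH constructs.
            if r >= lower[i][i]:
                violations.append((names[i], names[j], row[j]))
            if r >= lower[j][j]:
                violations.append((names[j], names[i], row[j]))
    return violations


violations = fornell_larcker_violations(CONSTRUCTS, LOWER)
print("violations:", violations)
```

With the values in the table, the list of violations is empty. The largest correlation (0.639, between HT and BI) stays below both corresponding diagonal entries (0.802 and 0.675).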

| Hypotheses | Independent Constructs | Dependent Constructs | R² | β | t | p-Value | Results |
|---|---|---|---|---|---|---|---|
| H1 | Performance expectancy | Behavioral intention | 0.501 | 0.042 | 0.561 | 0.576 | Not supported |
| H2 | Effort expectancy | Behavioral intention | | 0.135 | 1.602 | 0.112 | Not supported |
| H3 | Social influence | Behavioral intention | | 0.033 | 0.399 | 0.691 | Not supported |
| H4 | Hedonic motivation | Behavioral intention | | 0.239 | 2.951 | 0.004 | Supported |
| H5 | Habit | Behavioral intention | | 0.478 | 5.569 | 0.000 | Supported |
| H6 | Habit | Use behavior | 0.477 | 0.299 | 2.96 | 0.004 | Supported |
| H7 | Behavioral intention | Use behavior | | 0.222 | 2.141 | 0.035 | Supported |
| H8 | Performance expectancy | Use behavior | | −0.149 | −1.925 | 0.057 | Supported |
| H9 | Hedonic motivation | Use behavior | | 0.116 | 1.333 | 0.186 | Not supported |
| H10 | Social influence | Use behavior | | 0.234 | 2.705 | 0.008 | Supported |
| H11 | Effort expectancy | Use behavior | | 0.082 | 0.938 | 0.351 | Not supported |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zou, H.; Chan, K.I.; Pang, P.C.-I.; Manditereza, B.; Shih, Y.-H. Factors Influencing the Reported Intention of Higher Vocational Computer Science Students in China to Use AI After Ethical Training: A Study in Guangdong Province. Educ. Sci. 2025, 15, 1431. https://doi.org/10.3390/educsci15111431
